TaCOS: Task-Specific Camera Optimization with Simulation

Abstract

The performance of perception tasks is heavily influenced by imaging systems. However, designing cameras with high task performance is costly, requiring extensive camera knowledge and experimentation with physical hardware. Additionally, cameras and perception tasks are mostly designed in isolation, whereas recent methods that jointly design cameras and tasks have shown improved performance. Therefore, we present a novel end-to-end optimization approach that co-designs cameras with specific vision tasks. This method combines derivative-free and gradient-based optimizers to support both continuous and discrete camera parameters within manufacturing constraints. We leverage recent computer graphics techniques and physical camera characteristics to simulate the cameras in virtual environments, making the design process cost-effective. We validate our simulations against physical cameras and provide a procedurally generated virtual environment. Our experiments demonstrate that our method designs cameras that outperform common off-the-shelf options and does so more efficiently than the state-of-the-art approach, requiring only 2 minutes to design a camera in an example experiment compared with 67 minutes for the competing method. Designed to support the development of cameras under manufacturing constraints, multiple cameras, and unconventional cameras, we believe this approach can advance the fully automated design of cameras. Code is available on our project page at https://roboticimaging.org/Projects/TaCOS/.

1 Introduction

The quality of camera captures directly affects perception tasks. An analogy in nature is how animals’ visual perceptions impact their daily activities. It is believed that evolution designs distinct visual systems for different species to suit their habitats [30], suggesting a need for a sophisticated and application-based camera design approach.

Currently, designing cameras is cumbersome, typically requiring professionals to devise designs based on their experiences, followed by extensive experiments with various design decisions. Moreover, the camera hardware is often designed in isolation from perception tasks, despite literature showing the benefits of joint design.

Existing methods optimize imaging systems for tasks through software, often using ray tracing renderers to simulate the physical image formation process. Ray tracing renderers have been widely used for designing and evaluating cameras for autonomous driving [5, 33, 32, 58, 34]. However, manual tuning of camera parameters for optimization is still required, and joint optimization of the cameras and the tasks is not addressed in these methods.

Other works propose automatic co-design of imaging systems and perception tasks using differentiable ray tracing or proxy neural networks [48, 50, 59, 7, 25, 2, 53, 52, 46, 9, 39, 36, 6, 11, 62, 60, 40]. Nevertheless, these methods focus primarily on optics design: their design space is restricted by the use of captured image datasets, which prevents them from generalizing to more complex camera design problems involving field-of-view (FOV), resolution, multiple cameras, or unconventional cameras.

Game engines like Unreal Engine (UE) [13] are repurposed for simulating cameras due to their ray tracing support and ability to produce video sequences and interact with virtual environments. Klinghoffer et al. [29] propose a reinforcement learning (RL) method for camera design evaluated with a UE-based simulator. While this method achieves high performance, it optimizes a complex neural network to design cameras, making it less efficient than directly optimizing camera parameters.

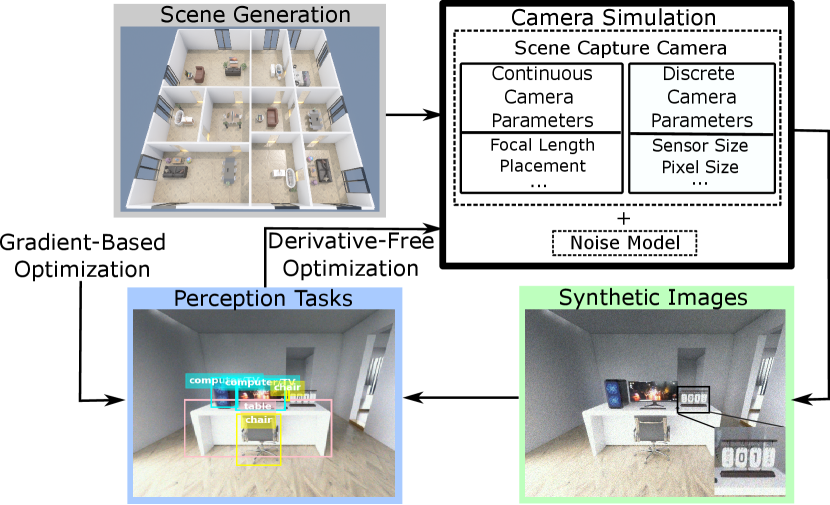

As illustrated in Fig. 1, we introduce an end-to-end camera design method that directly optimizes camera parameters for perception tasks. We propose a camera simulator that allows tuning various parameters and addresses image signal-to-noise ratio (SNR) with a physics-based noise model [18]. Additionally, we use a procedural generation algorithm to create indoor virtual environments in UE 5 with machine learning labels for our method.

Inspired by evolution, we employ a genetic algorithm [22], a derivative-free optimizer, to optimize discrete, continuous, and categorical variables, which supports manufacturing constraints and selecting parts (optics, image sensors, etc.) from catalogs where custom manufacture is infeasible. Finally, we adopt a quantized continuous approach [9] for discrete variables, considering their interdependencies.

We implement our approach on two design problems, demonstrating our method’s efficiency compared to the state-of-the-art (SOTA) design method, and cameras designed by our approach achieve compelling performance compared to high-quality robotic/machine vision cameras. We also validate our simulation’s accuracy by comparing it with physical cameras. In summary, our contributions are:

- We introduce an end-to-end camera design method that combines derivative-free and gradient-based optimization to automatically co-design cameras with perception tasks, allowing continuous, discrete, and categorical camera variables.
- We develop a camera simulation with a physics-based noise model and a virtual environment, and provide a procedurally generated virtual environment.
- We validate our simulator by establishing equivalence in both low-level image statistics and high-level task performance between synthetic and captured imagery.
- We validate our method with improved performance compared to other methods and off-the-shelf options.

This work is a key step in simplifying the process of designing cameras for autonomous systems like robots, emphasizing task performance and manufacturability constraints.

Limitations Our work focuses exclusively on camera parameters set during the manufacturing stage. Parameters that can be dynamically adjusted during camera operation, such as exposure settings, will be addressed in future work. Nevertheless, our method can still select the algorithms that control these parameters during operation.

2 Related Work

Task-Specific Simulation-Based Camera Design Designing cameras tailored for vision tasks using simulation has gained popularity. Blasinski et al. [5] propose optimizing a camera design to detect vehicles. They used synthetic data generated with ISET [56, 55]. The method optimizes cameras by experimentally analyzing the impact of image postprocessing pipelines and auto-exposure algorithms on object detection tasks. This work continues in [33, 32, 58, 34] with larger datasets and an optimization framework for high dynamic range (HDR) imaging. Nevertheless, these methods require manual tuning and testing of camera parameters, whereas our method is an automatic end-to-end approach.

Other works have proposed end-to-end methods to optimize the imaging system based on tasks. Many use gradient-based optimization with differentiable camera simulators, including differentiable ray tracing and proxy neural networks for non-differentiable image formation processes. In these works, prerecorded images are used as input scenes to their pipeline, and their simulators convert the input images to images formed by their proposed cameras. Applications include extended depth of field (DOF) [48, 50, 59], depth estimation [20, 7, 25, 2], object detection [53, 52, 46, 9], HDR imaging [39], [36], image classification [6, 11, 62, 60], and motion deblurring [40]. However, these methods use precaptured images so that their camera simulation is restricted to the domains of the data. Key camera design decisions such as the FOV, resolution, use of multiple cameras, and the design of unconventional cameras (light field, etc.), are not addressed. Our method establishes a virtual environment to support the simulation and optimization of a much broader range of camera designs.

RL has also been explored for end-to-end optimization of imaging systems. Klinghoffer et al. [29] use RL to train a camera designer, encompassing various camera parameters using the CARLA Simulator [12]. Hou et al. [24] introduce another RL-based approach for pedestrian detection. Although RL demonstrates impressive results in camera design, it involves optimizing complex neural networks that learn to design cameras, demanding more training data and time since the neural network contains a larger number of parameters that need to be optimized. In contrast, our approach directly optimizes the camera’s parameters, yielding competitive results with much less computation.

Genetic Algorithms for Camera Design Genetic algorithms [22] are widely applied across many domains thanks to their flexibility, robustness, and ability to explore complex search spaces including continuous, discrete, and categorical variables. For imaging systems design, existing works have applied genetic algorithms for optimizing camera placements in a network of cameras [41, 1, 21], and for optimizing optics design to improve image quality [4, 54, 42, 15, 14, 51, 61]; a review can be found in [23]. To our knowledge, none of these existing methods combines genetic algorithms with gradient-based optimizers to co-design the camera hardware and perception tasks for automatic camera design and improved performance, which is the primary contribution of our work.

3 Method

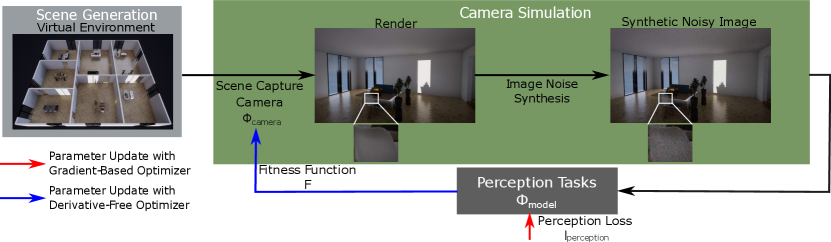

Our method (Fig. 2) uses a simulated camera to capture a virtual environment and apply a physics-based noise model. We then evaluate the resulting images in perception tasks and use this data to optimize the camera design. Our method outputs the designed camera parameters and trained models for perception tasks if the task is trainable.

3.1 Simulation Environment

The simulation environment should be photorealistic to minimize the sim-to-real gap. It should support rendering with various camera parameters and illuminations to maximize the design space, as well as the deployment of multiple cameras for unconventional imaging systems.

Considering the desired features of the simulation environment, we chose to use UE for this work. UE utilizes real-time hardware ray tracing combined with software ray tracing for global illumination and reflection, aligning with the physics of light and providing realistic shadowing, ambient occlusion, illumination, reflections, etc. [13]. UE supports the alteration of various camera parameters, detailed in Sec. 3.2, and the application of multiple cameras. Nevertheless, our method is not limited to UE: other simulators with the desired features or suited to the downstream tasks can also be used to build the simulation environment.

We deploy cameras on an auto-agent simulating the platform that uses the camera. The agent navigates the virtual environment autonomously, enabling a fully automated design process. The cameras capture scene renders as the agent moves, which are then used in downstream processes.

To demonstrate our method, we implemented the procedural generation of random indoor virtual environments and their associated semantic labels with UE 5, with support for application-specific objects. The implementation details of the environment are further explained in Sec. 4.3.

3.2 Camera Simulation

Our camera simulation comprises a scene capture component from the simulation environment and an image noise synthesis component that augments the scene captures to make the simulation more realistic.

Scene Capture The scene capture component captures scene irradiance from the virtual environment. We employ the camera in UE that captures the scene renders, which allows the configuration of parameters associated with camera placement (location, orientation), optics (focal length and aperture), the image sensor (width, height, and pixel count), exposure settings (shutter speed and ISO), and multi-camera designs (number of cameras and their poses). It also allows the configuration of algorithms in the image processing pipeline (auto-exposure, white balancing, tone mapping, color correction, gamma correction, and compression) and includes image effects like motion blur.

We experimentally validate our method using parameters supported by UE. Additional parameters like geometric distortion and defocus blur could be added by augmenting the renderer. The noise synthesis model described below serves as an example of such augmentation.

Noise Synthesis Image noise is a fundamental limiting factor for many vision tasks that is tightly coupled to camera parameters such as pixel size and exposure settings. As UE lacks a realistic noise model, we incorporate a post-render image augmentation that introduces noise. We employ thermal and signal-dependent Poisson noise following the affine noise model [18]. The noise model is calibrated with a physical camera following established methods [43, 31, 57] and then generalized for different exposure time and gain settings. The generalized form of the noise model defines the variation of intensity at a pixel as

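$$\sigma^2 = \frac{g_n}{g_c}\,\sigma_p^2\,I + \left(\frac{g_n}{g_c}\right)^2 \sigma_t^2 \tag{1}$$

where $\sigma_p^2$ and $\sigma_t^2$ are photon and thermal noise respectively, $g_n$ and $g_c$ are the new gain and calibrated gain, and $I$ is the measured intensity. We detail the noise model calibration and generalization method in the supplementary material.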

To generalize the noise model to different image sensors, we consider the ratio of their pixel sizes. For the same illumination, larger pixel sizes capture more photons, resulting in a higher measured intensity level, which is readily reflected by adjusting the gain in Eq. 1 inversely proportional to the pixel area. While we employ these observations to generalize noise characterizations, it is also possible to characterize multiple sensors individually and use those characterizations directly for more accurate noise characteristics.
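The gain and pixel-size generalization described above can be sketched in a few lines of Python. This is a minimal illustration rather than the calibrated model: it assumes an affine form in which the photon term scales linearly with the gain ratio and the thermal term scales with its square, it approximates the signal-dependent Poisson component with zero-mean Gaussian noise, and it treats gain as a linear factor rather than a value in dB. The function names and the numeric values in the usage lines are hypothetical.

```python
import random


def noise_variance(intensity, var_photon, var_thermal, gain_new, gain_cal):
    """Variance of the measured intensity under an assumed affine noise model.

    The photon term scales with the gain ratio and the intensity; the thermal
    term scales with the squared gain ratio (an assumption of this sketch).
    """
    ratio = gain_new / gain_cal
    return ratio * var_photon * intensity + ratio ** 2 * var_thermal


def gain_for_pixel_size(gain_cal, pixel_um_cal, pixel_um_new):
    """Scale the gain inversely with pixel area when switching sensors."""
    return gain_cal * (pixel_um_cal / pixel_um_new) ** 2


def add_noise(pixels, var_photon, var_thermal, gain_new, gain_cal, rng):
    """Add Gaussian noise with per-pixel variance, then clip to [0, 1]."""
    noisy = []
    for value in pixels:
        variance = noise_variance(value, var_photon, var_thermal, gain_new, gain_cal)
        sample = value + rng.gauss(0.0, variance ** 0.5)
        noisy.append(min(1.0, max(0.0, sample)))
    return noisy


# Hypothetical calibration values, for illustration only.
rng = random.Random(0)
gain = gain_for_pixel_size(gain_cal=4.0, pixel_um_cal=1.55, pixel_um_new=3.1)
noisy_row = add_noise([0.1, 0.5, 0.9], 0.01, 0.0001, gain, 4.0, rng)
```

Doubling the pixel pitch quarters the gain needed for the same measured intensity, which in turn lowers both noise terms. This is the mechanism by which larger pixels yield higher SNR in the simulation.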

3.3 Optimization

Optimizers We employ a derivative-free optimizer to optimize the camera parameters because many simulators and tasks used for designing cameras are non-differentiable, and numerous camera parameters are discrete or categorical. This makes gradient-based optimizers, such as gradient descent, and gradient-estimation methods, such as surrogate gradients and finite differences, inapplicable. Hence, we use a genetic algorithm [22] as the derivative-free optimizer, although other derivative-free optimizers can also be integrated into the proposed pipeline.

To enhance the performance of perception tasks, we jointly optimize the tasks (if applicable) along with the design of the camera hardware. The applicable tasks are the ones that involve machine learning models and are optimized using their corresponding optimizers. Given that neural networks are commonly used in SOTA methods for these tasks, gradient-based optimizers such as Stochastic Gradient Descent [45] or Adam [28] are typically employed. Therefore, our method simultaneously optimizes the camera hardware with a derivative-free optimizer and the trainable perception tasks with gradient-based optimizers. However, perception tasks that are not trainable, such as extracting Oriented FAST and Rotated BRIEF (ORB) features [47], are not jointly optimized.

Camera Parameters Our optimizer can handle the optimization of all parameters captured in the camera simulation, e.g. those outlined in Sec. 3.2, which can be both continuous and discrete. For instance, parameters related to optics and image sensors can be optimized as continuous variables if there are no manufacturing constraints on new optics/sensors. Alternatively, they can be selected from existing lens/sensor catalogs, allowing manufacturing and availability to be considered.

Discrete Variable Optimization Our approach offers two schemes to optimize the discrete camera variables: fully discrete and quantized continuous. In the fully discrete scheme, we optimize the discrete parameter directly over its available values by constraining the mutation stage of the genetic algorithm so that only available values of this variable are used.

In the quantized continuous scheme, we adopt the “quantized continuous variables” method introduced in [9]. Here, in each iteration, the discrete parameter can freely change as a continuous variable from its current best value obtained in the previous iteration. However, it is then replaced with the closest value from its available range:

$$\hat{p} = \underset{p_i}{\arg\min}\; \lvert p - p_i \rvert \tag{2}$$

where $\hat{p}$ is the parameter retained from its available range, $p$ is the variable obtained from the optimization process, and $p_i$ represents the $i$-th parameter in its available values.

A limitation of the fully discrete scheme arises when a variable encompasses multiple interconnected parameters. For instance, a variable representing the available image sensors includes parameters such as width, height, and pixel size. Selecting a sensor categorically does not leverage the relationships between these parameters. The quantized continuous scheme addresses this by optimizing all parameters within the variable freely and then replacing the result with the closest categorical entry. Therefore, the fully discrete scheme is suitable for independent parameters like the number of cameras, while the quantized continuous scheme is beneficial for parameters with interdependencies like image sensors.
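A minimal sketch of the quantized continuous step for a multi-parameter variable such as an image sensor is given below. The distance metric is an assumption of this sketch: each dimension is normalized by its range across the catalog so that millimetres and micrometres contribute comparably. The catalog entries and the function name `snap_to_catalog` are illustrative and do not reproduce the sensor catalog used in the experiments.

```python
def snap_to_catalog(candidate, catalog):
    """Return the catalog entry closest to a continuous candidate.

    Distances are squared Euclidean after normalizing every dimension by its
    range across the catalog (a choice made for this sketch).
    """
    dims = range(len(candidate))
    spans = []
    for d in dims:
        values = [entry[d] for entry in catalog]
        spans.append((max(values) - min(values)) or 1.0)

    def distance(entry):
        return sum(((entry[d] - candidate[d]) / spans[d]) ** 2 for d in dims)

    return min(catalog, key=distance)


# Illustrative catalog: (width in mm, height in mm, pixel size in um).
CATALOG = [
    (4.8, 3.6, 3.75),
    (6.2, 4.65, 1.55),
    (7.2, 5.4, 4.5),
    (8.45, 6.76, 6.6),
]

# The optimizer proposes a continuous sensor; it is replaced by a real one.
snapped = snap_to_catalog((7.0, 5.0, 4.0), CATALOG)
```

Because the candidate moves continuously through the joint (width, height, pixel size) space before snapping, neighbouring catalog entries are sensors with similar properties. A purely categorical index does not guarantee this.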

Fitness Function The fitness function is constructed based on the performance of tasks, which is used to optimize the camera parameters using the derivative-free optimizer. We demonstrate the construction of the fitness function incorporating various perception tasks in our experiments.

4 Experiments

We apply our proposed method to two design problems for demonstration: designing a stereo camera with two components for depth estimation on a vehicle and designing a monocular camera for multiple tasks on a Mixed Reality (MR) device. Additionally, we validate our camera simulation approach by comparing it with physical cameras. Additional details and results are provided in the supplement.

Assumptions (1) Objects’ distances to the camera exceed the camera’s hyperfocal distance so that the DOF is safely neglected. (2) The lens of our camera is free of geometric distortion and chromatic aberration.

Noise Synthesis We apply the affine noise model calibrated using a FLIR Flea3 Camera [17] with a Sony IMX172 sensor to all synthetic images, except the simulations of off-the-shelf cameras used in Sec. 4.1 and 4.3, which use noise models calibrated for each corresponding camera.

4.1 Simulator Validation

To validate our simulator, we compare synthetic images from our simulator with those captured by physical cameras in terms of image statistics and task performance.

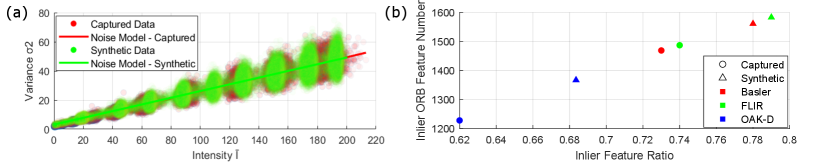

Image Statistics We use a test target with linear greyscale colorbars in a controlled illumination environment, capturing images with the FLIR Flea3 Camera [17]. The same test target is then recreated in our simulator, maintaining consistent illumination, camera position, and parameters. Fig. 3(a) shows intensity variances versus pixel intensities for both captured and synthetic images, with good alignment validating the accuracy of our noise model. The slight differences in mean intensity for each greyscale bar are caused by printer variations during manufacturing.

Perception Task Performance We further validate the cameras’ performance on perception tasks in our simulation, which serves as the evaluation method in Sec. 4.3. In this experiment, we focus on a feature extraction task using the widely applied ORB feature extractor [47].

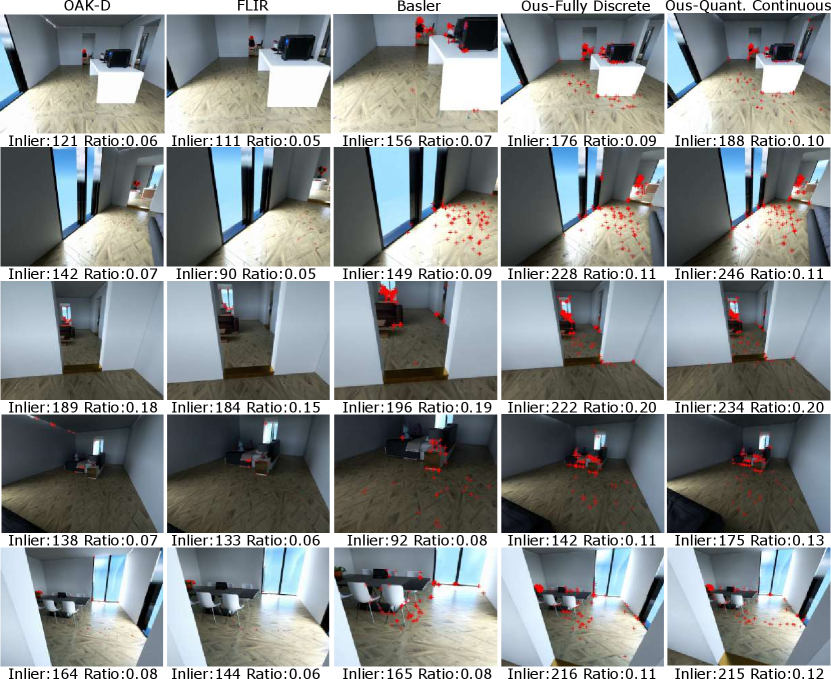

We use a test target from [10] with features of varying scales and depths, set against a texture-less background. Ten frames are captured with constant translational motion, ensuring consistent illumination, camera parameters, and relative positioning between real and virtual experiments. Fig. 3 (b) shows the number of inlier features (correctly matched points across consecutive frames) along with the ratio of inliers to total features (inliers plus outliers).

The comparison includes three robotic/machine vision cameras: the RGB camera of the Luxonis OAK-D Pro Wide [35], the FLIR Flea3 [17], and the Basler Dart DaA1280-54uc [3]. Specific noise models for these cameras, calibrated as described in Sec. 3.2, are applied in our simulator. The feature extraction task serves as a reliable measure of performance consistency between physical and simulated environments.

Fig. 3 (b) shows that synthetic images from our simulator yield more inlier features and a higher inlier ratio due to quality reduction in the physical test target from the manufacturing process. However, the relative performance of the cameras, crucial for optimization, remains consistent between simulated and physical settings. This validates that our simulations accurately reflect real-world camera performance and effectively evaluate camera designs.

4.2 Stereo Camera on Autonomous Vehicle

We apply our method to design a stereo camera on an autonomous vehicle for the task of depth estimation.

Environment and Data This experiment is conducted in CARLA [12], based on UE 4. The stereo camera is mounted to a car that moves automatically. The environment is configured with constant illumination, and motion blur enabled due to the moving platform. Images are captured during both training and testing. In training, each camera configuration captures one image per step, totalling 1000 images over 1000 steps. For testing, each camera design captures 1500 images to evaluate performance. Training and testing are performed on different urban outdoor maps.

Design Space We optimize the baseline and horizontal FOV as continuous variables. We use two cameras with fixed sensor sizes of 1.536 mm × 0.768 mm, a pixel size of 1.55 µm, and a mounting height of 2 m, positioned forward-facing with no slant.

Stereo Matching Network We use PSMNet [8] to predict the disparity map. The network is pretrained on the KITTI Stereo 2015 dataset [37, 38] and subsequently fine-tuned alongside the optimization of camera parameters using its loss function (Smooth L1 loss). The maximum disparity for this model is capped at 192 following [8]. We use an Adam optimizer [28] with a batch size of 4 and a learning rate of to train the network.

Fitness Function We use the inverse of the log error between the predicted and ground-truth disparity maps (normalized by baselines), which emphasizes the depth prediction accuracy in both large and small distances.

Derivative-Free Optimization The genetic algorithm uses 5 solutions per generation, retaining the top 2 for the next iteration. The top 3 generate offspring via uniform crossover, followed by mutation with a random multiplicative factor (0.8 to 1.2) and a random additive offset (-5 to 5 for FOV, -0.2 to 0.2 for baseline). Hyperparameters are empirically chosen, and optimization parameters are randomly initialized. See the supplement for comparisons of hyperparameters and initialization.
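The generation loop described above can be sketched as follows. This is a simplified illustration under stated assumptions, not the released implementation: the fitness is a toy function with a made-up optimum standing in for the rendered-image depth evaluation, the search bounds in `BOUNDS` are hypothetical, and the gradient-based fine-tuning of the stereo network that accompanies each evaluation is omitted.

```python
import random

# Hypothetical search bounds (FOV in degrees, baseline in metres).
BOUNDS = ((10.0, 120.0), (0.05, 3.0))
# Additive mutation ranges quoted in the text (FOV, baseline).
OFFSETS = (5.0, 0.2)


def toy_fitness(camera):
    """Stand-in for the task-based fitness; peaks at a made-up optimum."""
    fov, baseline = camera
    return -(((fov - 50.0) / 50.0) ** 2 + (baseline - 0.8) ** 2)


def mutate(camera, rng):
    """Scale each gene by a random factor, add a random offset, then clamp."""
    genes = []
    for gene, offset, (low, high) in zip(camera, OFFSETS, BOUNDS):
        gene = gene * rng.uniform(0.8, 1.2) + rng.uniform(-offset, offset)
        genes.append(min(high, max(low, gene)))
    return tuple(genes)


def evolve(fitness, population, generations, rng, n_elite=2, n_parents=3):
    """Run the genetic algorithm and return the best camera of each generation."""
    best_per_generation = []
    for _ in range(generations):
        ranked = sorted(population, key=fitness, reverse=True)
        best_per_generation.append(ranked[0])
        elite, parents = ranked[:n_elite], ranked[:n_parents]
        children = []
        while len(elite) + len(children) < len(ranked):
            mother, father = rng.sample(parents, 2)
            # Uniform crossover: each gene comes from either parent with equal chance.
            child = tuple(rng.choice(pair) for pair in zip(mother, father))
            children.append(mutate(child, rng))
        population = elite + children
    return best_per_generation


rng = random.Random(0)
start = [tuple(rng.uniform(low, high) for low, high in BOUNDS) for _ in range(5)]
history = evolve(toy_fitness, start, generations=30, rng=rng)
```

Because the top solutions are carried over unchanged and the toy fitness is deterministic, the best fitness in this sketch never decreases from one generation to the next. In the real pipeline the fitness is measured on freshly rendered images, so this guarantee would not hold exactly.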

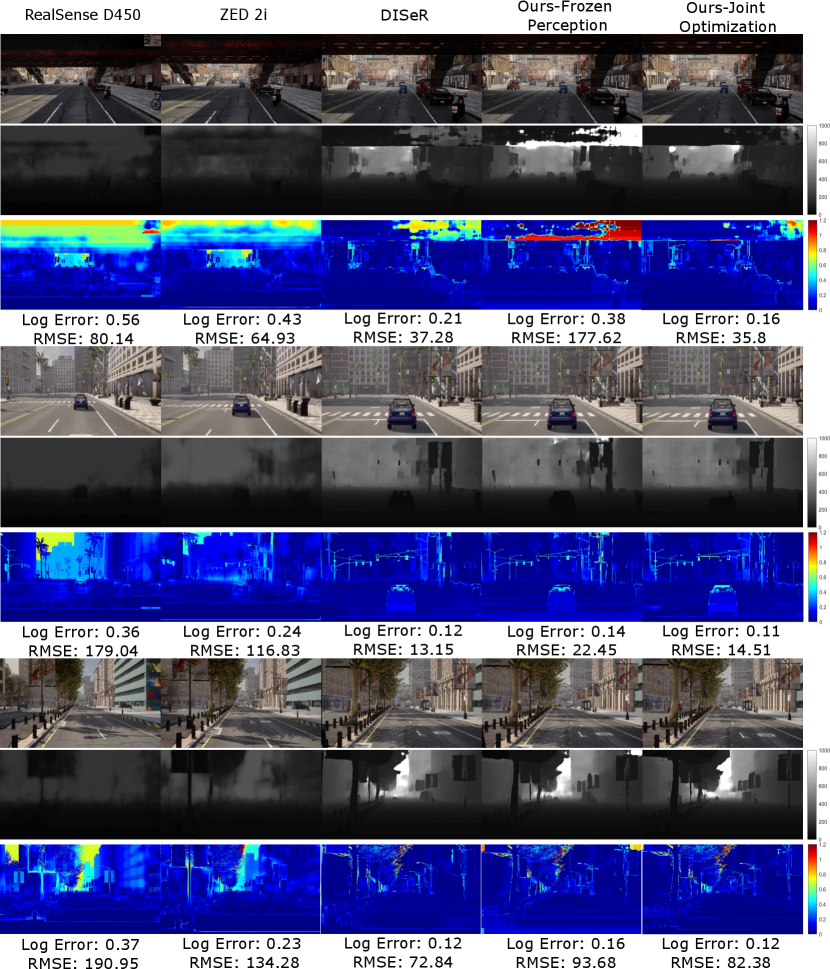

Results Tab. 1 details the performance of stereo cameras designed by our method, compared with two off-the-shelf models, the Intel RealSense D450 [26] and ZED 2i [49], as well as a camera designed with the RL method DISeR [29]. We also compare results from jointly optimizing camera design and perception tasks against optimizing camera design alone while fixing perception model parameters (pretrained with 2500 images from CARLA using 50 camera configurations). indicates optimized parameters and indicates fixed parameters. Performance is evaluated using Average Log Error and Root Mean Square Error (RMSE) between estimated and ground-truth depths in meters.

Our camera design and DISeR perform best in terms of log error, which evaluates depth estimation accuracy across both short and long distances, and RMSE, which favors larger baselines for more accurate long-distance estimation as long-distance has a greater impact on this value. However, larger baselines struggle with short-distance accuracy due to limited overlapping FOVs and the perception model’s maximum disparity setting. While increasing FOVs can improve overlap, it reduces accuracy by lowering disparity values. As a result, both our method and DISeR converge on small FOVs and moderate baselines to balance accuracy across all distances. See the supplementary material for qualitative results and analysis.
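The trade-off between baseline, FOV, and the maximum disparity can be made concrete with the standard pinhole stereo relation, disparity = focal length in pixels × baseline / depth. The sketch below is an illustration under simplifying assumptions: it assumes rectified, distortion-free cameras, assumes the network operates on full-resolution images, and derives the image width from the sensor width and pixel size given in the design space. The function names and the example FOV and baseline values are hypothetical.

```python
import math

# Image width from the fixed sensor: 1.536 mm wide with 1.55 um pixels.
WIDTH_PX = round(1.536e-3 / 1.55e-6)


def focal_length_px(width_px, hfov_deg):
    """Focal length in pixels for a pinhole camera with the given horizontal FOV."""
    return (width_px / 2.0) / math.tan(math.radians(hfov_deg) / 2.0)


def disparity_px(depth_m, baseline_m, f_px):
    """Disparity of a point at the given depth for a rectified stereo pair."""
    return f_px * baseline_m / depth_m


def min_depth_m(baseline_m, f_px, max_disparity=192):
    """Closest depth whose disparity still fits under the disparity cap."""
    return f_px * baseline_m / max_disparity


# Hypothetical designs: a narrow and a wide FOV with the same 0.5 m baseline.
f_narrow = focal_length_px(WIDTH_PX, 40.0)
f_wide = focal_length_px(WIDTH_PX, 90.0)
near_limit_narrow = min_depth_m(0.5, f_narrow)
near_limit_wide = min_depth_m(0.5, f_wide)
```

A wider FOV shortens the focal length in pixels, so the same scene point produces a smaller disparity and depth resolution drops. A larger baseline raises disparity everywhere, which helps at long range but pushes the closest measurable depth farther from the camera.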

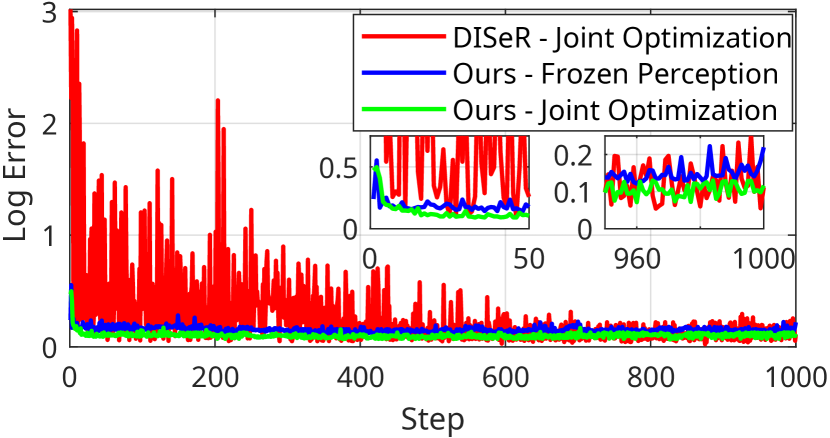

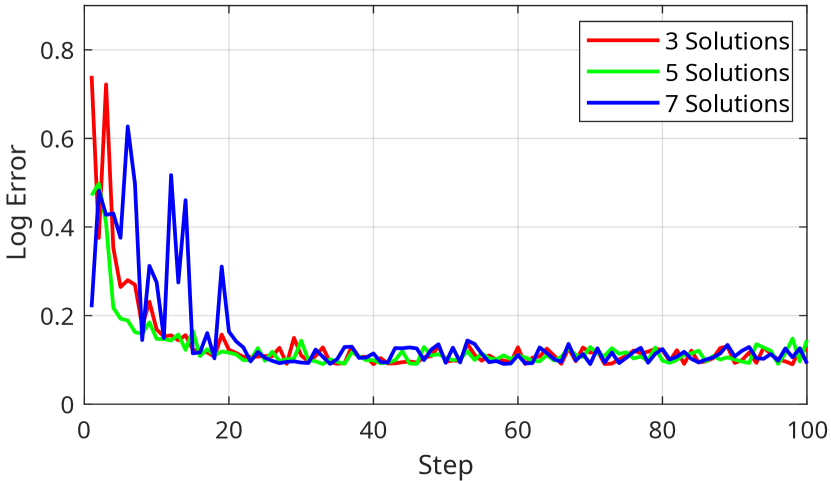

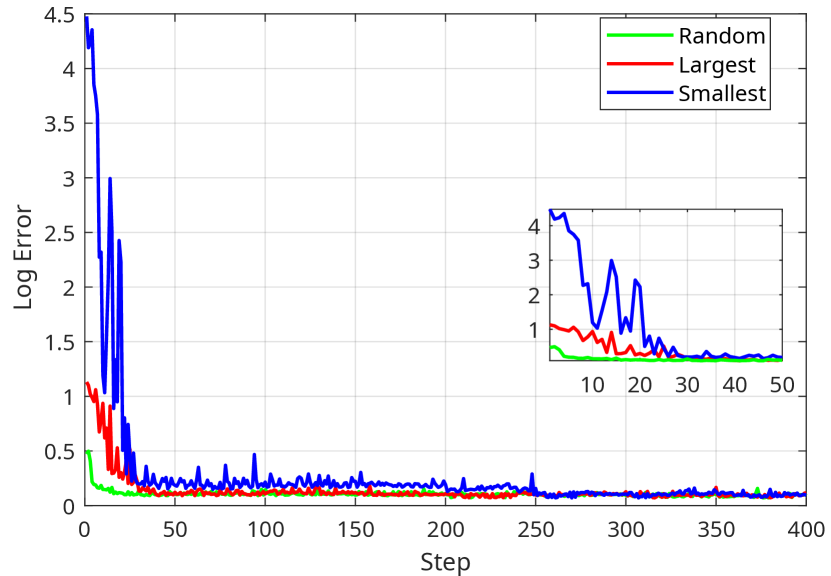

Fig. 4 compares the training curves for our joint optimization method, our method with fixed perception parameters, and the RL method. Our method converges faster than DISeR by directly optimizing camera parameters, whereas DISeR optimizes a policy network with many parameters to predict them. The RL method shows abrupt changes during training, as all network parameters are updated in each iteration, leading to significant fluctuations in camera parameters and performance. In contrast, the genetic algorithm makes smaller parameter adjustments, resulting in smoother performance changes. Our method converges within 45 steps (2 minutes) and completes 1000 steps in 38 minutes on an NVIDIA RTX 4070 GPU, while DISeR takes 700 steps (67 minutes) to converge and 97 minutes to complete 1000 steps.

| Scenario | Camera | Pitch Angle (°) | Focal Length (mm) | Sensor Size (mm) | Pixel Size (µm) | Obstacle Detect. Accuracy | Object Detect. AP | Inlier Number | Inlier Ratio |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Day | OAK-D [35] | -20.04 | 2.75 | 6.29 × 4.71 | 1.55 | 1 | 0.37 | 115 | 0.07 |
| | FLIR [17] | -23.28 | 3.6 | 6.2 × 4.65 | 1.55 | 1 | 0.37 | 77 | 0.05 |
| | Basler [3] | -25.90 | 3.6 | 4.8 × 3.6 | 3.75 | 1 | 0.23 | 131 | 0.08 |
| | Ours - Fully Discrete | -27.87 | 4.01 | 8.45 × 6.76 | 6.6 | 1 | 0.43 | 191 | 0.13 |
| | | -24.69 | 3.77 | 8.45 × 6.76 | 6.6 | 1 | 0.51 | 189 | 0.12 |
| | Ours - Quantized Continuous | -21.86 | 2.99 | 8.45 × 6.76 | 6.6 | 1 | 0.46 | 230 | 0.13 |
| | | -26.34 | 2.88 | 7.31 × 5.58 | 4.5 | 1 | 0.64 | 224 | 0.13 |
| Night | OAK-D [35] | -19.72 | 2.75 | 6.29 × 4.71 | 1.55 | 1 | 0.34 | 117 | 0.06 |
| | FLIR [17] | -22.71 | 3.6 | 6.2 × 4.65 | 1.55 | 1 | 0.36 | 69 | 0.03 |
| | Basler [3] | -25.83 | 3.04 | 4.8 × 3.6 | 3.75 | 1 | 0.16 | 122 | 0.07 |
| | Ours - Fully Discrete | -23.65 | 2.61 | 7.2 × 5.4 | 4.5 | 1 | 0.37 | 179 | 0.11 |
| | | -23.66 | 3.10 | 7.2 × 5.4 | 4.5 | 1 | 0.42 | 170 | 0.11 |
| | Ours - Quantized Continuous | -29.86 | 3.33 | 8.45 × 6.76 | 6.6 | 1 | 0.37 | 165 | 0.11 |
| | | -22.58 | 3.49 | 14.48 × 9.94 | 9 | 1 | 0.57 | 172 | 0.11 |

4.3 Monocular Camera on Mixed Reality Headset

In our second experiment, we apply our method to design a monocular RGB camera for an MR headset. Object detection, obstacle avoidance, and feature extraction for 3D reconstruction are selected as examples of tasks as they are essential for most MR devices. However, other tasks can be added depending on the target application.

Environment and Data We establish an indoor environment in UE 5 with 10 object classes. Floorplans and object locations are randomly generated to introduce variability and the camera is mounted on an auto-agent acting as a user. During training, each camera design captures 500 images, indicating 10000 images in total as we optimize for 20 generations, 10 solutions per generation. Testing is conducted with 1000 images using different scene configurations.

Design Space We focus on designing geometric parameters that determine the camera’s FOV and photometric parameters affecting resolution, crucial for user mobility and objects’ effective resolution. For FOV, we optimize the mounting angle in the pitch direction, the focal length, and the image sensor dimensions (width and height). The number of pixels is determined by the sensor’s pixel size and its dimensions. The camera’s height varies randomly between 1 m and 2 m per training step to ensure robustness across different user heights.
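The way these parameters determine FOV and resolution can be illustrated with the pinhole relation FOV = 2·arctan(d / 2f), where d is a sensor dimension and f is the focal length. The sketch below assumes a distortion-free lens, consistent with the assumptions stated at the start of Sec. 4. The function names are hypothetical, and the example uses the OAK-D values listed in Tab. 2.

```python
import math


def fov_deg(sensor_dim_mm, focal_length_mm):
    """Angular field of view along one sensor dimension for a pinhole camera."""
    return math.degrees(2.0 * math.atan(sensor_dim_mm / (2.0 * focal_length_mm)))


def pixel_count(sensor_dim_mm, pixel_size_um):
    """Number of whole pixels that fit along one sensor dimension."""
    return int(sensor_dim_mm * 1000.0 / pixel_size_um)


# OAK-D row of Tab. 2: 2.75 mm focal length, 6.29 mm x 4.71 mm sensor, 1.55 um pixels.
horizontal_fov = fov_deg(6.29, 2.75)
vertical_fov = fov_deg(4.71, 2.75)
columns = pixel_count(6.29, 1.55)
rows = pixel_count(4.71, 1.55)
```

For a fixed sensor, a shorter focal length widens the FOV but spreads the same number of pixels over a larger angle, lowering the effective resolution of each object. This coupling is why focal length, sensor dimensions, and pixel size are optimized together.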

We restrict our design to readily available sensors by optimizing the image sensor as a categorical variable, selecting from a catalog of 43 commercial CMOS image sensors from five manufacturers. Each sensor comprises a set of sensor-related parameters (width, height, and pixel size). We compare two optimization techniques for discrete variables: treating the sensor as fully discrete and using the quantized continuous approach. Our catalog predominantly features Sony sensors (28 of the 43), reflecting their widespread use in machine vision cameras. Because our noise model is more likely to generalize well across sensors from the same manufacturer, we also calibrate it with a Sony sensor.

Obstacle Avoidance To assess the camera’s ability to detect obstacles affecting user mobility, we place thresholds at room entrances in our virtual environment. These low-height obstacles highlight the importance of an appropriate FOV and mounting angle. We focus on low-height obstacles because taller ones are constrained by object and feature detection tasks. Visibility to the camera is determined by whether the obstacle appears in rendered images. Additionally, the auto-agent is programmed to react when stepping on a threshold, regardless of its visual rendering.

Feature Extraction We extract ORB features [47] and employ the Brute-Force Matcher in OpenCV [27] to match features across consecutive frames. We then apply RANSAC [16] to find inlier features while estimating the transformation matrix between frames.

Object Detector We utilize a Faster R-CNN [44] object detector with a ResNet-50 [19] backbone, pretrained on 2000 images generated by our simulator and then fine-tuned alongside the camera parameters. Training employs an Adam optimizer [28] with a batch size of 8 and a learning rate of , which decays by 0.5 every 5 steps during both pre-training and fine-tuning.

Fitness Function The fitness function combines three perception tasks. For obstacle avoidance, it calculates the ratio of the number of obstacles seen by the camera () to the total number of obstacles () in the user’s path. The object detector is trained using its loss function (), and we take the Average Precision (AP) with a 0.5 Intersection-over-Union (IoU) threshold as a term in the fitness function. Feature extraction contributes through the number of inlier features () and the ratio of inlier features to total features (), emphasizing both the quantity and accuracy of detected features. Thus, the total fitness function is

| (3) | |||

where we balance the weights of all terms by setting to 0.0025 (inlier in an image is typically 100-250), to 0.5, , , and to 1 as we consider these tasks equally significant.

Derivative-Free Optimization We optimize the camera parameters using the genetic algorithm over 20 generations with 10 solutions per generation. A uniform crossover is applied using the top 5 solutions to produce offspring, while the top 3 solutions are reused. The mutation process remains consistent with the previous experiment, except the addition value is randomly selected between -3 and 3. These hyperparameters are chosen empirically. Each solution involves collecting 500 images to evaluate both the feature extraction and obstacle (threshold) avoidance tasks.

Results We present the optimized set of camera parameters obtained through our approach and evaluate the camera’s performance in Tab. 2, considering obstacle detection accuracy, AP score, average number of inlier ORB features across consecutive frames, and ratio of average inlier features to total features extracted. Additionally, we report optimized parameters under two application scenarios: daytime operation in a well-illuminated simulation environment (20 lux) with a lower baseline camera gain (5 dB), and nighttime operation in low-light conditions (2 lux) with a higher baseline camera gain (15 dB). We observed that different application scenarios led to distinct camera designs. Our approach designed a camera with a larger pixel size for nighttime applications, which is expected: larger pixels gather more light and therefore deliver higher SNR. Hence, sensors with larger pixels require lower gain to capture images with measured intensity comparable to that of sensors with smaller pixels.

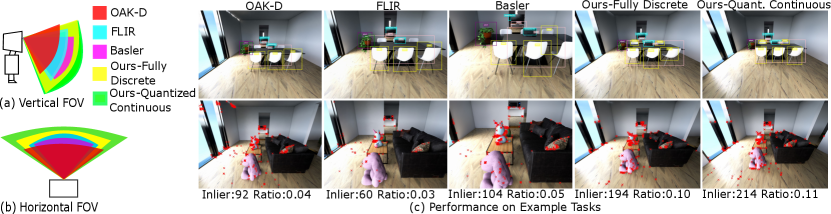

We compare our optimized camera in our simulation with three human-designed robotic/machine vision cameras used in previous experiments: OAK-D, FLIR, and Basler. Camera-specific noise models are applied to these cameras and their mounting angles are optimized, while their other parameters remain fixed because they are not configurable. Additionally, we report performance without joint optimization, where the parameters of the object detection network are frozen. Fig. 5 illustrates the FOVs and example evaluations of our proposed cameras with joint optimization alongside the robotic/machine vision cameras. Please refer to the supplementary material for more visualizations.

The results show performance improvements across all tasks with our method, while lower performance is observed under night scenarios due to reduced SNR. Fully discrete optimization schemes show lower performance, indicating the importance of considering parameter interdependencies. Similarly, freezing parameters of the perception model results in reduced performance. The performance of obstacle avoidance is always perfect because we optimized the pitch angle of all the cameras, including the off-the-shelf ones.

We note that the RL method was developed for and demonstrated on single-frame tasks [29]. Both feature matching and obstacle avoidance are episodic, multi-frame tasks that require consecutive images for precise performance evaluation, and collecting this data is time-consuming. Because the RL method demands significantly more training steps and image data than ours, applying it to episodic tasks requires impractically long optimization times, which prevents a direct comparison. Hence, it is not compared in this experiment.

5 Conclusion

We presented a novel end-to-end approach that combines derivative-free and gradient-based optimizers to co-design cameras with perception tasks efficiently. Using UE and an affine noise model, we constructed a camera simulator and validated its accuracy against physical cameras. Our method handles continuous, discrete, and categorical camera parameters, and introduces a quantized continuous approach for discrete variables that considers their interdependencies. We believe this work can be generalized easily and is an important step toward principled and automated camera design for autonomous systems that accounts for the interdependency between cameras and the algorithms that interpret them. For future work, we aim to develop a task-driven control algorithm that dynamically adjusts camera parameters, such as exposure settings, in an online manner.

Acknowledgements We would like to thank both ARIA Research Pty Ltd and the Australian government for their funding support via a CRC Projects Round 11 grant.

References

- [1] Azeddine Aissaoui, Abdelkrim Ouafi, Philippe Pudlo, Christophe Gillet, Zine-Eddine Baarir, and Abdelmalik Taleb-Ahmed. Designing a camera placement assistance system for human motion capture based on a guided genetic algorithm. Virtual reality, 22:13–23, 2018.

- [2] Seung-Hwan Baek and Felix Heide. Polka lines: Learning structured illumination and reconstruction for active stereo. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 5757–5767, 2021.

- [3] Basler AG. Basler dart daA1280-54uc, 3 2024. Rev. 85.

- [4] Ellis I Betensky. Postmodern lens design. Optical Engineering, 32(8):1750–1756, 1993.

- [5] Henryk Blasinski, Joyce Farrell, Trisha Lian, Zhenyi Liu, and Brian Wandell. Optimizing image acquisition systems for autonomous driving. Electronic Imaging, 2018(5):161–1, 2018.

- [6] Julie Chang, Vincent Sitzmann, Xiong Dun, Wolfgang Heidrich, and Gordon Wetzstein. Hybrid optical-electronic convolutional neural networks with optimized diffractive optics for image classification. Scientific reports, 8(1):12324, 2018.

- [7] Julie Chang and Gordon Wetzstein. Deep optics for monocular depth estimation and 3d object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 10193–10202, 2019.

- [8] Jia-Ren Chang and Yong-Sheng Chen. Pyramid stereo matching network. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 5410–5418, 2018.

- [9] Geoffroi Côté, Fahim Mannan, Simon Thibault, Jean-François Lalonde, and Felix Heide. The differentiable lens: Compound lens search over glass surfaces and materials for object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 20803–20812, 2023.

- [10] Donald G Dansereau, Bernd Girod, and Gordon Wetzstein. LiFF: Light field features in scale and depth. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 8042–8051, 2019.

- [11] Steven Diamond, Vincent Sitzmann, Frank Julca-Aguilar, Stephen Boyd, Gordon Wetzstein, and Felix Heide. Dirty pixels: Towards end-to-end image processing and perception. ACM Trans. Graph., 40(3):1–15, 2021.

- [12] Alexey Dosovitskiy, German Ros, Felipe Codevilla, Antonio Lopez, and Vladlen Koltun. CARLA: An open urban driving simulator. In Conference on robot learning, pages 1–16. PMLR, 2017.

- [13] Epic Games. Unreal engine.

- [14] Yi Chin Fang, Tung-Kuan Liu, Cheng-Mu Tsai, Jyh-Horng Chou, Han-Ching Lin, and Wei Teng Lin. Extended optimization of chromatic aberrations via a hybrid taguchi–genetic algorithm for zoom optics with a diffractive optical element. Journal of Optics A: Pure and Applied Optics, 11(4):045706, 2009.

- [15] Yi-Chin Fang, Chen-Mu Tsai, John MacDonald, and Yang-Chieh Pai. Eliminating chromatic aberration in gauss-type lens design using a novel genetic algorithm. Applied Optics, 46(13):2401–2410, 2007.

- [16] Martin A Fischler and Robert C Bolles. Random sample consensus: a paradigm for model fitting with applications to image analysis and automated cartography. Communications of the ACM, 24(6):381–395, 1981.

- [17] FLIR Integrated Imaging Solutions Inc. FLIR FLEA®3 USB3 Vision, 1 2017. Rev. 8.1.

- [18] Alessandro Foi. Clipped noisy images: Heteroskedastic modeling and practical denoising. Signal Processing, 89(12):2609–2629, 2009.

- [19] Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 770–778, 2016.

- [20] Lei He, Guanghui Wang, and Zhanyi Hu. Learning depth from single images with deep neural network embedding focal length. IEEE Transactions on Image Processing, 27(9):4676–4689, 2018.

- [21] Andries M Heyns. Optimisation of surveillance camera site locations and viewing angles using a novel multi-attribute, multi-objective genetic algorithm: A day/night anti-poaching application. Computers, Environment and Urban Systems, 88:101638, 2021.

- [22] John H Holland. Adaptation in natural and artificial systems: an introductory analysis with applications to biology, control, and artificial intelligence. MIT press, 1992.

- [23] Kaspar Höschel and Vasudevan Lakshminarayanan. Genetic algorithms for lens design: a review. Journal of Optics, 48(1):134–144, 2019.

- [24] Yunzhong Hou, Xingjian Leng, Tom Gedeon, and Liang Zheng. Optimizing camera configurations for multi-view pedestrian detection. arXiv preprint arXiv:2312.02144, 2023.

- [25] Hayato Ikoma, Cindy M Nguyen, Christopher A Metzler, Yifan Peng, and Gordon Wetzstein. Depth from defocus with learned optics for imaging and occlusion-aware depth estimation. In 2021 IEEE International Conference on Computational Photography, pages 1–12. IEEE, 2021.

- [26] Intel. Intel RealSense Product Family D400 Series, 3 2024. Rev. 018.

- [27] Itseez. Open source computer vision library. https://github.com/itseez/opencv, 2015.

- [28] Diederik P Kingma and Jimmy Ba. Adam: A method for stochastic optimization. In Proceedings of the 3rd International Conference on Learning Representations, 2015.

- [29] Tzofi Klinghoffer, Kushagra Tiwary, Nikhil Behari, Bhavya Agrawalla, and Ramesh Raskar. DISeR: Designing imaging systems with reinforcement learning. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 23632–23642, 2023.

- [30] Michael F Land and Dan-Eric Nilsson. Animal eyes. OUP Oxford, 2012.

- [31] Ce Liu, William T Freeman, Richard Szeliski, and Sing Bing Kang. Noise estimation from a single image. In 2006 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, volume 1, pages 901–908. IEEE, 2006.

- [32] Zhenyi Liu, Trisha Lian, Joyce Farrell, and Brian Wandell. Soft prototyping camera designs for car detection based on a convolutional neural network. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops, pages 0–0, 2019.

- [33] Zhenyi Liu, Minghao Shen, Jiaqi Zhang, Shuangting Liu, Henryk Blasinski, Trisha Lian, and Brian Wandell. A system for generating complex physically accurate sensor images for automotive applications. Electronic Imaging, 2019:53–1, 01 2019.

- [34] Zhenyi Liu, Devesh Shah, Alireza Rahimpour, Devesh Upadhyay, Joyce Farrell, and Brian A Wandell. Using simulation to quantify the performance of automotive perception systems. arXiv preprint arXiv:2303.00983, 2023.

- [35] Luxonis. OAK-D Pro (USB boot with 256Mbit NOR flash), 12 2021.

- [36] Julien NP Martel, Lorenz K Mueller, Stephen J Carey, Piotr Dudek, and Gordon Wetzstein. Neural sensors: Learning pixel exposures for hdr imaging and video compressive sensing with programmable sensors. IEEE transactions on pattern analysis and machine intelligence, 42(7):1642–1653, 2020.

- [37] Moritz Menze, Christian Heipke, and Andreas Geiger. Joint 3d estimation of vehicles and scene flow. In ISPRS Workshop on Image Sequence Analysis, 2015.

- [38] Moritz Menze, Christian Heipke, and Andreas Geiger. Object scene flow. ISPRS Journal of Photogrammetry and Remote Sensing, 2018.

- [39] Christopher A Metzler, Hayato Ikoma, Yifan Peng, and Gordon Wetzstein. Deep optics for single-shot high-dynamic-range imaging. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 1375–1385, 2020.

- [40] Cindy M Nguyen, Julien NP Martel, and Gordon Wetzstein. Learning spatially varying pixel exposures for motion deblurring. In 2022 IEEE International Conference on Computational Photography, pages 1–11. IEEE, 2022.

- [41] Gustavo Olague and Roger Mohr. Optimal camera placement for accurate reconstruction. Pattern recognition, 35(4):927–944, 2002.

- [42] Isao Ono, Shigenobu Kobayashi, and Koji Yoshida. Optimal lens design by real-coded genetic algorithms using undx. Computer methods in applied mechanics and engineering, 186(2-4):483–497, 2000.

- [43] Netanel Ratner and Yoav Y Schechner. Illumination multiplexing within fundamental limits. In 2007 IEEE Conference on Computer Vision and Pattern Recognition, pages 1–8. IEEE, 2007.

- [44] Shaoqing Ren, Kaiming He, Ross Girshick, and Jian Sun. Faster R-CNN: Towards real-time object detection with region proposal networks. Advances in neural information processing systems, 28, 2015.

- [45] Herbert Robbins and Sutton Monro. A stochastic approximation method. The annals of mathematical statistics, pages 400–407, 1951.

- [46] Nicolas Robidoux, Luis E Garcia Capel, Dong-eun Seo, Avinash Sharma, Federico Ariza, and Felix Heide. End-to-end high dynamic range camera pipeline optimization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 6297–6307, 2021.

- [47] Ethan Rublee, Vincent Rabaud, Kurt Konolige, and Gary Bradski. ORB: An efficient alternative to SIFT or SURF. In 2011 International Conference on Computer Vision, pages 2564–2571. IEEE, 2011.

- [48] Vincent Sitzmann, Steven Diamond, Yifan Peng, Xiong Dun, Stephen Boyd, Wolfgang Heidrich, Felix Heide, and Gordon Wetzstein. End-to-end optimization of optics and image processing for achromatic extended depth of field and super-resolution imaging. ACM Trans. Graph, 37(4):1–13, 2018.

- [49] StereoLabs. ZED 2i Camera Overview & Datasheet. Rev. 1.2.

- [50] Qilin Sun, Congli Wang, Qiang Fu, Xiong Dun, and Wolfgang Heidrich. End-to-end complex lens design with differentiable ray tracing. ACM Trans. Graph., 40(4):1–13, 2021.

- [51] Cheng-Mu Tsai and Yi-Chin Fang. Improvement of field curvature aberration in a projector lens by using hybrid genetic algorithm with damped least square optimization. Journal of Display Technology, 11(12):1023–1030, 2015.

- [52] Ethan Tseng, Ali Mosleh, Fahim Mannan, Karl St-Arnaud, Avinash Sharma, Yifan Peng, Alexander Braun, Derek Nowrouzezahrai, Jean-Francois Lalonde, and Felix Heide. Differentiable compound optics and processing pipeline optimization for end-to-end camera design. ACM Trans. Graph., 40(2):1–19, 2021.

- [53] Ethan Tseng, Felix Yu, Yuting Yang, Fahim Mannan, Karl St-Arnaud, Derek Nowrouzezahrai, Jean-François Lalonde, and Felix Heide. Hyperparameter optimization in black-box image processing using differentiable proxies. ACM Trans. Graph., 38(4):27–1, 2019.

- [54] Derek C Van Leijenhorst, Carlos B Lucasius, and Jos M Thijssen. Optical design with the aid of a genetic algorithm. BioSystems, 37(3):177–187, 1996.

- [55] Brian Wandell, David Cardinal, David Brainard, Zheng Lyu, Zhenyi Liu, Aaron Webster, Joyce Farrell, Trisha Lian, Henryk Blasinski, and Kaijun Feng. Iset/isetcam, 2 2024.

- [56] Brian Wandell, David Cardinal, Trisha Lian, Zhenyi Liu, Zheng Lyu, David Brainard, Henryk Blasinski, Amy Ni, Joelle Dowling, Max Furth, Thomas Goossens, Anqi Ji, and Jennifer Maxwell. Iset/iset3d, 2 2024.

- [57] Taihua Wang and Donald G Dansereau. Multiplexed illumination for classifying visually similar objects. Applied Optics, 60(10):B23–B31, 2021.

- [58] Korbinian Weikl, Damien Schroeder, Daniel Blau, Zhenyi Liu, and Walter Stechele. End-to-end imaging system optimization for computer vision in driving automation. In Proceedings of IS&T Int’l. Symp. on Electronic Imaging: Autonomous Vehicles and Machines, 2021.

- [59] Xinge Yang, Qiang Fu, and Wolfgang Heidrich. Curriculum learning for ab initio deep learned refractive optics. Nature communications, 15(1):6572, 2024.

- [60] Xinge Yang, Qiang Fu, Yunfeng Nie, and Wolfgang Heidrich. Image quality is not all you want: Task-driven lens design for image classification. arXiv preprint arXiv:2305.17185, 2023.

- [61] Chih-Ta Yen and Jhe-Wen Ye. Aspherical lens design using hybrid neural-genetic algorithm of contact lenses. Applied optics, 54(28):E88–E93, 2015.

- [62] Yuxuan Zhang, Bo Dong, and Felix Heide. All you need is raw: Defending against adversarial attacks with camera image pipelines. In European Conference on Computer Vision, pages 323–343. Springer, 2022.

Appendix A Code

Our code is available on our project page at https://roboticimaging.org/Projects/TaCOS/. We include the implementation of our camera design method for both the stereo camera and monocular camera design experiments, the source code and guidance for creating the indoor virtual environment used in the monocular camera design experiment, and the catalog of commonly available image sensors that we collected.

Appendix B Additional Details on Noise Synthesis

We provide additional details on our image noise calibration and generalization method.

B.1 Noise Model Calibration

We adopt the affine noise model [18] in this work. The noise model describes a linear relationship between the variance ($\sigma^2$) of image pixel intensities and the mean intensity ($I$), in terms of constant thermal noise ($\sigma_t^2$) and intensity-varying photon noise ($\sigma_p^2$):

$$\sigma^2 = \sigma_p^2 I + \sigma_t^2 \qquad (4)$$

Calibrating the noise model follows established methods [43, 31, 57]. In this work, we use a greyscale test target with colorbars containing uniformly distributed grey levels from fully white to fully black. With captured images of the test target, we determine mean intensities and variances for each pixel, using these values to fit the affine noise model defined in Eq. 4.
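The fitting step can be sketched with an ordinary least-squares line fit. This is a minimal illustration, not the calibration code released with the paper: the function names are hypothetical, and it assumes that the per-pixel statistics are computed over a stack of repeated captures of the static target.

```python
import numpy as np

def pixel_statistics(frames):
    """Per-pixel mean and variance over a stack of frames shaped (N, H, W).

    Assumes N repeated captures of a static scene (an assumption of this sketch).
    """
    frames = np.asarray(frames, dtype=float)
    means = frames.mean(axis=0).ravel()
    variances = frames.var(axis=0, ddof=1).ravel()  # unbiased sample variance
    return means, variances

def fit_affine_noise_model(means, variances):
    """Least-squares fit of variance = slope * mean + offset.

    The slope plays the role of the photon-noise term and the offset the
    constant thermal-noise term of the affine model.
    """
    means = np.asarray(means, dtype=float)
    variances = np.asarray(variances, dtype=float)
    slope, offset = np.polyfit(means, variances, deg=1)
    return slope, offset
```

A target with grey levels spread from black to white gives the fit a wide range of mean intensities, which constrains both the slope and the offset.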

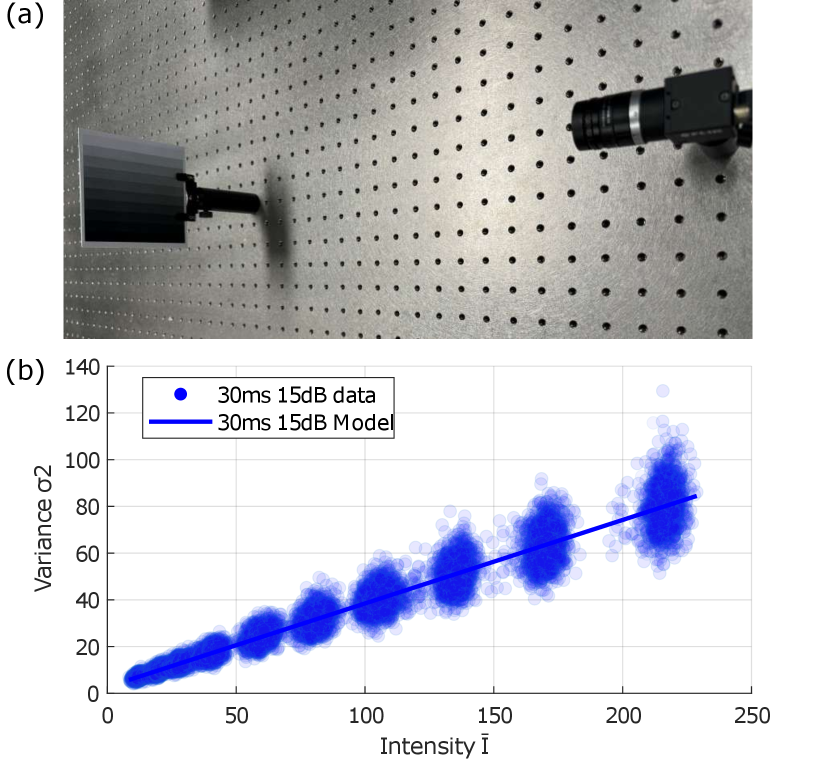

We calibrate the noise model with a FLIR Flea3 camera [17] with a Sony IMX172 image sensor. The exposure time is set to 30 ms and the gain to 15 dB. Dark current noise is neglected in this work because the exposure time used (30 ms) is relatively short. The calibration setup is shown in Fig. 6 (a), and the obtained noise model is plotted in Fig. 6 (b).

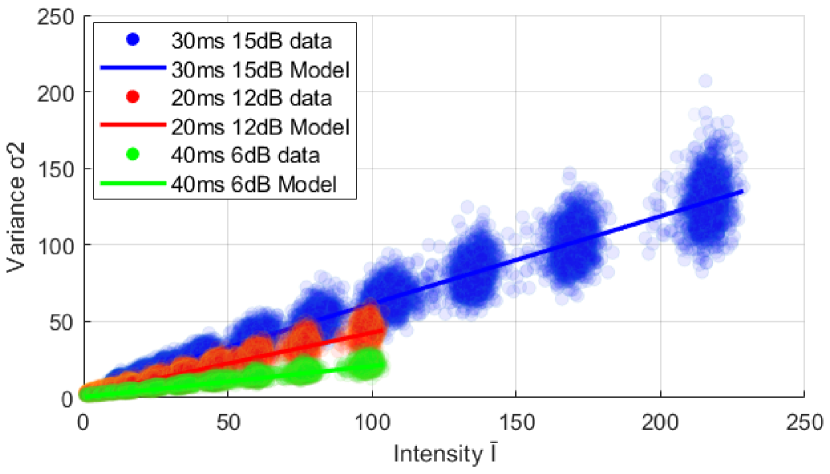

B.2 Noise Model Generalization

The noise model in Eq. 4 is calibrated using a specific image sensor and exposure setting. We expand the equation so that it can be generalized to different exposure settings and image sensors.

Consider the intensity in Eq. 4 as the pixel intensity measured by the camera, which depends on the exposure time, the gain, and the scene radiance. Intensity changes due to the exposure time setting are already reflected in the measured intensity value. We therefore generalize the noise model to other exposure and gain settings using the ratio of the new gain to the calibrated gain used in the noise calibration stage. For the photon noise term, we replace the intensity in Eq. 4 with the newly observed intensity and multiply the term by the gain ratio. For the thermal noise term, we scale it by the square of the gain ratio. The resulting noise model is Eq. 2 of the main paper.
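The scaling described above can be sketched as follows. This is an illustration under stated assumptions rather than a transcription of Eq. 2 of the main paper: the function and parameter names are hypothetical, and the conversion of gains from dB to a linear factor using the 20·log10 amplitude convention is an assumption of this sketch.

```python
def db_to_linear(gain_db):
    """Convert a gain in dB to a linear factor (assumes the 20*log10 convention)."""
    return 10.0 ** (gain_db / 20.0)

def generalized_variance(intensity_new, photon_coeff, thermal_var,
                         gain_new_db, gain_cal_db):
    """Noise variance at a new gain setting, from a model calibrated at gain_cal_db.

    photon_coeff and thermal_var are the slope and offset of the calibrated
    affine model. The photon term scales with the gain ratio and the thermal
    term with its square, as described in the text.
    """
    ratio = db_to_linear(gain_new_db) / db_to_linear(gain_cal_db)
    return ratio * photon_coeff * intensity_new + ratio ** 2 * thermal_var
```

When the new gain equals the calibrated gain, the ratio is 1 and the function reduces to the calibrated affine model evaluated at the new intensity.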

In Fig. 7, we show the generalization of the noise model calibrated at 30 ms exposure time and 15 dB gain to two other exposure settings with the same camera: 20 ms exposure time with 12 dB gain, and 40 ms exposure time with 6 dB gain. The data points in the plot are captured with the camera, while the noise models are obtained with our derived generalization equation, which is Eq. 2 of the main paper. This experiment validates both the noise calibration and the generalization of the noise model.

Appendix C Additional Details on Simulator Validation

We provide implementation details for the simulator validation experiments. The results of the experiments are illustrated in Fig. 3 of the main paper.

| Initialization | Baseline (m) | Horizontal FOV (°) | Log Error | RMSE |
|---|---|---|---|---|
| Smallest | 1.67 | 50 | 0.14 | 80.7 |
| Largest | 1.64 | 50 | 0.14 | 78.05 |
| Random | 1.6 | 50 | 0.14 | 79.81 |

C.1 Image Statistics

This experiment reuses the test target and the FLIR camera from the noise calibration experiment shown in Fig. 6 (a). We strictly control the distance between the test target and the camera to 50 cm, and the illumination level at the test target to 2000 lux (measured with a light meter) using an LED panel light. The light is placed above the camera at a distance of 80 cm and an angle of approximately 25°, facing downward toward the test target.

The same setup is then duplicated in Unreal Engine (UE), the simulator used in our experiments. In UE, the scene capture camera is set to have the same focal length, sensor size, pixel number, exposure time, and aperture size as the physical camera, while we manually tune the ISO setting in the simulator to achieve the same brightness level as the physical camera. The noise model calibrated with the physical camera is then applied to the rendered images.

C.2 Perception Task Performance

To validate how well our simulator reproduces the extraction of Oriented FAST and Rotated BRIEF (ORB) [47] features, we adopt a test target used in [10], displayed in Fig. 8 (a), that is suitable for feature extraction. We use the same illumination setup as described in Sec. C.1. The experiment was conducted with the RGB camera of the Luxonis OAK-D Pro Wide camera, the FLIR Flea3 camera, and the Basler Dart DaA1280-54uc camera, shown in Fig. 8 (b), (c), and (d), respectively. The background is textureless to avoid additional features, and the test target is moved and captured at 10 locations along the same horizontal line to simulate translational motion for feature matching and for determining the number of inlier features. Motion blur is not considered in this experiment because the exposure time is short.

In our UE simulator, we duplicate the setup of the physical experiment. As before, we configure the scene capture camera to have the same focal lengths, sensor sizes, pixel numbers, exposure times, and aperture sizes as the three physical cameras, and we manually calibrate the ISO settings to match their gain values. We calibrate the noise model of each of the three cameras individually, following the method in Sec. B.1, and apply it to the corresponding renders.

Appendix D Genetic Algorithm Implementation

D.1 Hyperparameter Selection

Population Size The number of solutions per generation is selected empirically based on the number of parameters to optimize. For example, we optimize only 2 camera parameters in the stereo camera design example, so we choose a relatively small population (5 solutions per generation), while for a more complex problem such as the monocular camera design example we use a larger population (10 solutions per generation). More complex design problems generally require more diverse solutions to cover a larger search space. However, a larger population takes longer to converge since a larger search space is explored. Conversely, a smaller population gives a less diverse search and is more prone to local optima. Hence, we select the number of solutions empirically, as illustrated in Fig. 9, in which we compare the training curves of the depth estimation experiment with 3 different numbers of solutions per generation.

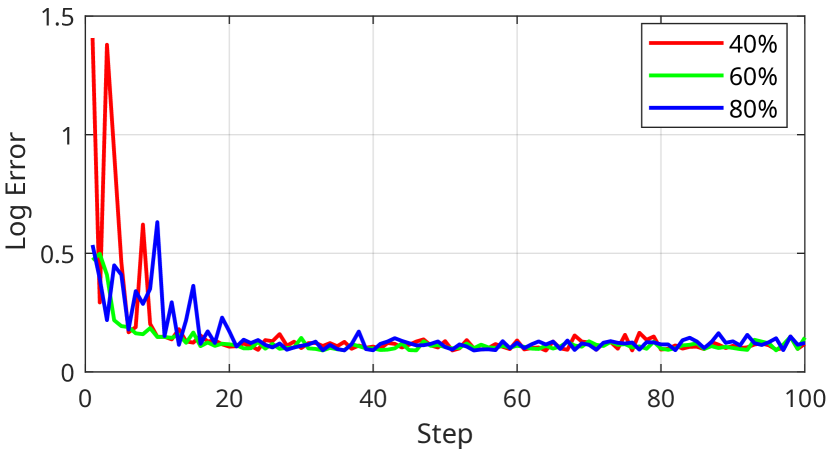

Offspring Generation The offspring generation process contains three steps: parent selection, crossover, and mutation. We select the top solutions from the current generation as parents for offspring generation. The number of parents is chosen as 50% of the population size for an even number of solutions and 60% for an odd number of solutions. For example, we use the top 3 of 5 solutions per generation for the stereo camera design problem and the top 5 of 10 solutions per generation for the monocular design problem. We compare the optimization curves for 3 different fractions of the population used for offspring generation (40%, 60%, 80%) in the depth estimation task. The results, displayed in Fig. 10, indicate that although the final performance is not affected when a large number of training steps is used, using 60% of the population to generate offspring yields the fastest and smoothest convergence. Since using 40% of the population as parents emphasizes exploitation over exploration, the optimizer may get stuck at local optima when fewer training steps are used. On the other hand, using 80% of the population as parents creates more diverse offspring, which emphasizes exploration over exploitation and leads to slower convergence. Hence, we choose an intermediate percentage for offspring generation that is roughly half of the number of solutions per generation.

We then apply a uniform crossover scheme using the selected parents to produce the offspring for the next generation, meaning that each parameter of an offspring is randomly selected from the parents. For mutation, we apply a multiplication factor, which is a random number between 0.8 and 1.2 drawn from the same range for all parameters in our experiments, and an additive value whose range is customized for each parameter depending on its available design space. All hyperparameters used to implement the genetic algorithm are selected empirically.
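The three steps can be sketched as a single generation update. This is a minimal illustration, not the released implementation: the function name and arguments are hypothetical, the top solutions are assumed to be carried over unchanged, mutation is assumed to be applied to every parameter of every offspring, and clamping to the design space is an added assumption.

```python
import random

def next_generation(population, fitnesses, bounds, n_parents, n_elite,
                    add_ranges, rng=random):
    """Build the next generation from scored solutions (higher fitness is better).

    population: list of parameter lists, one per solution.
    bounds:     (low, high) design-space limits per parameter.
    add_ranges: half-width of the additive mutation per parameter.
    """
    # Parent selection: rank by fitness and keep the top solutions.
    ranked = [sol for _, sol in sorted(zip(fitnesses, population),
                                       key=lambda pair: pair[0], reverse=True)]
    parents = ranked[:n_parents]
    children = [list(sol) for sol in ranked[:n_elite]]  # reused unchanged (assumed)

    while len(children) < len(population):
        child = []
        for i, (low, high) in enumerate(bounds):
            gene = rng.choice(parents)[i]                        # uniform crossover
            gene *= rng.uniform(0.8, 1.2)                        # multiplicative mutation
            gene += rng.uniform(-add_ranges[i], add_ranges[i])   # additive mutation
            child.append(min(max(gene, low), high))              # clamp (assumption)
        children.append(child)
    return children
```

For the stereo design problem described above, this would be called with a population of 5 and `n_parents=3`; the additive ranges would be chosen per parameter according to its design space.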

D.2 Parameter Initialization

In our experiments, all camera parameters optimized by the genetic algorithm are initialized with random values within their design space. To assess the sensitivity to initialization, we also compare the optimization results of the proposed method when the camera parameters in the depth estimation task are initialized at their smallest values (baseline: 0.01 m, FOV: 50°) and at their largest values in the design space (baseline: 3 m, FOV: 120°).

The results are displayed in Tab. 3. The experiment uses the same hyperparameters for the genetic algorithm as described in Sec. D.1 to optimize the camera parameters. The perception network is jointly trained during optimization. The results indicate that the initialization of camera parameters does not have a significant impact on the final camera parameters and the downstream task performance.

In addition, we illustrate the training curves of the above-mentioned 3 camera parameter initialization schemes in Fig. 11, which shows that random generation results in faster convergence compared to initialising from extreme values. This is because the optimal solution is usually not the extreme values, and starting from random values between the extremes gives values that are relatively closer to the optimal solution. It is observed that starting from the smallest parameter values results in the slowest convergence. This is because the offspring at every iteration is generated from the top solutions of the previous generation with a mutation process, where the parent solutions are multiplied by a random factor as the first mutation step. Therefore, smaller parameters change on a smaller scale compared to larger values, which slows down the exploration process and leads to slower convergence.
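As a rough worked example of this scale effect, consider only the multiplicative mutation step with a factor $m \in [0.8, 1.2]$. The additive step is ignored here as a simplifying assumption, and $x$ and $\Delta x$ are notation introduced for this illustration. The largest change a single multiplication can produce is

$$|\Delta x| = |m - 1|\,x \le 0.2\,x,$$

so a baseline initialized at the smallest value changes by at most $0.2 \times 0.01\ \text{m} = 0.002\ \text{m}$, whereas one initialized at the largest value can change by up to $0.2 \times 3\ \text{m} = 0.6\ \text{m}$. Relative to the 2.99 m width of the baseline design space, these are steps of roughly 0.07% and 20%, respectively.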

Appendix E Additional Results on Stereo Camera Design

We provide additional qualitative results for our stereo camera design experiment in Fig. 12. The figure displays the captured left images, the estimated depths, and the log error between the estimated maps and the ground-truth maps. We compare the images and results obtained with the stereo cameras designed by the proposed method with and without joint optimization, the Reinforcement Learning (RL) method (DISeR) [29], and two off-the-shelf cameras, the Intel RealSense D450 and the ZED 2i. The configurations of these cameras are listed in Tab. 1 of the main paper. The metrics displayed in this figure are the same as in Tab. 1 of the main paper: log error and RMSE in meters.

The results show that in our outdoor application scenario, the off-the-shelf cameras perform poorly because their baselines are relatively short (0.095 m and 0.12 m), while many objects, such as the buildings and the footbridge, are far from the stereo camera. However, these off-the-shelf cameras perform well at short distances, indicating that they can be suitable for applications that do not involve distant objects. In contrast, the cameras designed by our method and DISeR perform well across both long and short distances.

Appendix F Additional Details on Monocular Camera Design

F.1 Environment

We construct the indoor virtual environment in UE 5 with a procedural generation technique, which generates random floorplans and object locations. The environment is configured to be 15 m in width and length and 3 m in height for our experiment, and adjusting it to different dimensions is trivial. However, each room in the environment needs a minimum length of 5 m to fit the furniture. The environment contains objects sourced from the UE marketplace, encompassing 10 classes: sofa, bed, table, chair, bathtub, bathroom basin, computer/TV, plant, lamp, and toy.

We illustrate some example renders from our virtual environment in Fig. 13, including a comparison of the day and night design scenarios used to validate our method.

F.2 Image Sensor Catalog

The image sensor catalog we collected contains 43 image sensors, 28 of which are from Sony, 10 from Onsemi, 3 from Luxima, and 2 from CMOSIS. The pixel sizes of these sensors vary from 1.12 µm to 9 µm. The smallest sensor has a dimension of 3.07 mm × 2.3 mm, while the largest has a dimension of 16.13 mm × 12.04 mm.

F.3 Obstacle Placement

To restrict the camera’s Field-of-View (FOV), we place low-height gold-colored thresholds at the entrance of all the rooms in our virtual environment. An example of the threshold is shown in Fig. 14. The thresholds act as obstacles that may put the users at risk. They are configured to be interactive actors in UE, making the auto-agent aware of them even though they are not captured within the FOV of the scene capture camera.

F.4 Qualitative Results

We visualise the performance of object detection, as well as the feature extraction and matching task, with images captured by the cameras designed by the proposed method and the off-the-shelf machine/robotic cameras in Fig. 15 and Fig. 16 respectively. The off-the-shelf cameras are the same cameras used to validate our simulator in Sec. C.2, which are the OAK-D Pro Wide camera, FLIR Flea3 camera, and Basler Dart camera.