Temporal Aggregation and Propagation Graph Neural Networks for Dynamic Representation

Abstract

Temporal graphs exhibit dynamic interactions between nodes over continuous time, whose topologies evolve with time elapsing. The whole temporal neighborhood of nodes reveals the varying preferences of nodes. However, previous works usually generate dynamic representation with limited neighbors for simplicity, which results in both inferior performance and high latency of online inference. Therefore, in this paper, we propose a novel method of temporal graph convolution with the whole neighborhood, namely Temporal Aggregation and Propagation Graph Neural Networks (TAP-GNN). Specifically, we firstly analyze the computational complexity of the dynamic representation problem by unfolding the temporal graph in a message-passing paradigm. The expensive complexity motivates us to design the AP (aggregation and propagation) block, which significantly reduces the repeated computation of historical neighbors. The final TAP-GNN supports online inference in the graph stream scenario, which incorporates the temporal information into node embeddings with a temporal activation function and a projection layer besides several AP blocks. Experimental results on various real-life temporal networks show that our proposed TAP-GNN outperforms existing temporal graph methods by a large margin in terms of both predictive performance and online inference latency. Our code is available at https://github.com/doujiang-zheng/TAP-GNN.

Index Terms:

Graph Embedding, Graph Neural Networks, Temporal Graph, Dynamic Graph, Link Prediction1 Introduction

Temporal interactions construct numerous dynamically variable temporal graphs in many domains, such as bitcoin transaction network, e-commerce purchasing network, movie rating network, social network, etc [1, 2, 3, 4]. As depicted in Fig. 1(a), the temporal graph keeps evolving with node occurrences and interactions, with each interaction marked with a timestamp. In real-life networks, it is crucial to capture node dynamics in temporal graphs to provide accurate predictions on customer preferences and anomalous users [5, 6, 7, 8, 9, 10]. On the one hand, recommending items for customers requires taking into account both temporal interests and long-range user preferences [8, 11, 12]. On the other hand, predicting if a user is malicious in online payment systems defends the system security [10], and detecting the surge of network traffic guarantees the network service [13], which relies on fast responses to suspicious behaviors.

Learning low-dimensional embeddings for nodes in graphs is a promising method for downstream tasks like node classification (classifying if a user is malicious), link prediction (predicting whether two users will be friends in the future), etc. The majority of graph embeddings researches [14, 15, 16, 17, 18] like graph neural networks (GNNs) focus on static graphs, while are not yet capable of generating dynamic node representation on temporal graphs. Also, existing industrial practices may suffer from the loss of evolutionary patterns, which mainly involve static graph embedding techniques [11, 12].

Previous works on temporal graphs can be roughly divided into two categories: discrete-time models as shown in Fig. 1(c) and sampling models as shown in Fig. 1(d), which essentially differ in how to model the varying temporal neighborhood of nodes. Discrete-time models, such as TNODE [7] and EvolveGCN [19], usually slice the whole temporal graph into a sequence of graph snapshots, where the temporal information inside a snapshot is lost. In contrast, sampling models [9, 10] construct the k-hop temporal subgraphs for each dynamic node (i.e., a node with a query timestamp) and conduct message-passing on the subgraphs. The neighborhood of sampling models is restricted by the predefined window despite its flexibility.

In summary, two challenges remain unsolved for existing methods of temporal graphs. The first challenge is how to capture the whole temporal neighborhood of nodes with time elapsing. If considering the whole temporal neighborhood, discrete-time models will turn to static graph models; sampling models will suffer from huge complexity (hundreds of times of complexity compared to the original temporal graph) even with 1-hop neighbors. The second challenge is how to perform online inference when the interactions between nodes arrive at the finest temporal granularity (e.g., every second or millisecond). The inference latency of most methods is positively related to the neighborhood size. Notably, the neighborhood of sampling models increases exponentially with the number of layers. It requires a trade-off between the representation quality and the inference latency.

Present work. Therefore, in this paper, we present Temporal Aggregation and Propagation Graph Neural Networks (TAP-GNN), which generates dynamic node embeddings with the whole temporal neighborhood and supports online inference in the graph-stream scenario.

Firstly, the temporal graph is unfolded into a message-passing graph as shown in Figure 1(b) without the propagation links, where the size of temporal nodes is linear with the size of edges. The temporal nodes are densely connected with their temporal neighbors following the message-passing paradigm, resulting in huge complexity. Further, a decomposition block, namely aggregation and propagation (AP), is proposed to reduce the repetitive computation in the proposed message-passing graph, as illustrated in Fig. 1(b). The additional propagation links send the aggregated neighbor information in the past to nodes in the future, which is mathematically equal to existing graph convolution kernels. The AP block both inherits the merits of GNNs and shows linear complexity with the number of temporal edges.

The final learning framework, Temporal Aggregation and Propagation Graph Neural Networks (TAP-GNN) aims at generating node embeddings at any time with various temporal information. It incorporates the time point encodings with a temporal activation function and a projection layer.

Moreover, TAP-GNN supports online inference due to the decomposition property of the AP block, whose complexity is only related to the size of upcoming new interactions.

To evaluate our framework, we conduct experiments over various real-life temporal graphs on two prediction tasks: future link prediction and dynamic node classification. In the experiments, our proposed TAP-GNN demonstrates robust and better performance than the state-of-the-art temporal graph methods. It implies that the TAP-GNN benefits significantly from the whole temporal neighborhood of nodes. Meanwhile, the inference latency of TAP-GNN is only linear with the number of layers, compared with the exponential increasing time cost of TGAT [9].

Our contributions can be summarized as follows:

-

•

The dynamic node representation problem on temporal graphs is formulated in a message-passing paradigm, and its computational complexity is analyzed theoretically and empirically.

-

•

An efficient AP block is proposed to perform graph convolutions on the whole temporal neighborhood of nodes, whose computational complexity is only linearly dependent on the number of temporal edges.

-

•

The TAP-GNN framework provides dynamic node representation and supports online inference in the graph stream scenario built on top of the proposed AP block.

-

•

Experimental results show that our proposed TAP-GNN outperforms existing temporal graph methods in terms of both predictive performance and online inference latency.

The rest of our paper is organized as follows. Section 2 reviews the related works. In Section 3, we introduce the message-passing temporal graphs, the proposed AP block, and the TAP-GNN framework. The computational complexities of each proposed module are discussed separately. The experimental results and ablation studies are discussed in Section 4. Section 5 concludes the paper.

2 Related Works

2.1 Static Graph Embedding

Decades ago, the emerging big graph data on the website had already attracted much concern of researchers [20, 21, 22, 1, 23]. The learned low-dimensional embeddings for nodes, edges, and subgraphs can be easily integrated with the machine learning algorithms like Logistic Regression, Random Forest, and Gradient Boosting Decision Trees to perform risk monitoring [13], link prediction [23], item recommendation [1], etc. Recently, researchers interested in graph mining have been motivated by the great success of deep learning methods in computer vision [24] and natural language processing [25]. The deep graph embedding methods can also be categorized into two kinds: graph convolutional networks [26] and skip-gram models [18].

On the one hand, graph neural networks [27] are initially defined in the spectral space, which is inspired by the convolution operations defined on the image grid. GCN [16] simplifies the learning framework of [27] and shows a successful application on semi-supervised node classification tasks. GraphSAGE [17] proposes an inductive learning paradigm on large-scale graphs, which samples subgraphs for each node. Meanwhile, GAT [28] introduces a learnable attention mechanism to impose different importances over neighbors. The attention mechanism not only achieves better performance on several benchmarks but also presents an explainable result by the neighbor importances.

On the other hand, DeepWalk [14] is the pioneering work to introduce the skip-gram models [25] into graph representation learning with the sampled node sequences by random walks. Node2Vec [15] design two hyper-parameters to control the random walk preferences, while LINE [29] explores the high-order relations in the graph topology. Their shortcomings are obvious: the generated embeddings are not task-oriented, and a new model is required to deal with the additional side information. The interested readers can refer to the survey papers [18, 26] for an overview of graph embedding methods in general.

Moreover, these methods have successful applications in industrial scenarios such as commodity recommendation and risk monitoring [11, 12, 30, 31, 8]. However, these static graph embedding methods are not designed to capture node dynamics with the continuously emerging node interactions. Hence, an additional model is needed to perform real-time predictions, which may lose the evolutionary pattern in temporal graphs.

2.2 Discrete-Time Dynamic Graph Embedding

As illustrated in Fig. 1(c), discrete-time dynamic graph (DTDG) embedding methods slice the whole temporal graph into a sequence of graph snapshots. The predefined time window choice is a crucial part of DTDG methods, which causes high costs for hyper-parameter search. Intuitively, the smaller time window models the real scenarios more precisely with the increasing computation cost. As a result, DTDG methods are designed for scenarios that only need to update embeddings periodically.

DTDG methods mainly contain two parts: the first part is to capture the evolutionary patterns; the second part is to fuse the historical information with current embeddings. For the first part, DynamicTriad [32] models the probability that open triads evolve into closed triads; SPNN [33] studies high-order dependencies of the induced subgraphs; EPNE [34] incorporates both periodic and non-periodic patterns into the learned embeddings. For the second part, Know-Evolve [35], E-LSTM-D [36], and TNODE [7] use an LSTM to fuse the historical information and current information. In addition, EvolveGCN [19] learns to generate the transformation matrix for each separate graph snapshot in a recurrent paradigm. Nevertheless, DTDG methods cannot generate dynamic node embeddings to meet the requirements of continuous-time dynamic graphs.

2.3 Continuous-Time Dynamic Graph Embedding

Continuous-time dynamic graph (CTDG), which we use with temporal graph exchangeably, keeps evolving with the appearance, disappearance, and changing of edges and nodes [5]. Recent advances for dynamic node representation on temporal graphs have been summarized in [37]. While they focus on the encoder-decoder techniques, we mainly review existing solutions to the aforementioned challenges in this paper.

Methods tackling the varying neighborhood challenge are mostly recurrent models. HTNE [6] adopts the Hawkes process and an attention mechanism to model the influence of temporal neighbors; DyRep [38] discriminates the topological evolution from node interactions and introduces a two-time scale temporal point process model; JODIE [8] uses two mutually recursive RNNs for users and items separately and proposes a projection operation to predict the node trajectory; TigeCMN [39] adopts a key-value memory network to encode the network dynamics with sequentially updated operations. Differently, CTDNE [5] incorporates temporal random walks into the static skip-gram models but can only obtain the final state for each node. The aforementioned CTDG methods mainly rely on the local node embedding update with the upcoming interactions. Consequently, the influence of historical neighbors in these models will gradually vanish, and high-order neighbors are neglected by these models, which have been proved useful in static GNNs [11, 31]. Our proposed TAP-GNN treats the historical neighbors according to their contributions to the generated embeddings and can be easily extended to obtain the high-order temporal neighbors.

In contrast, CTDG methods based on GNNs are naturally designed for the high-order neighborhood on temporal graphs but cannot capture the whole neighborhood due to the neighbor dropout technique. Hence, previous works on GNNs can only partially inject temporal information and perform online inference. TGAT [9] and APAN [10], which are the most related works to our paper, construct message-passing subgraphs for each dynamic node inspired by GraphSAGE [17]. Detailedly, TGAT [9] proposes a novel temporal encoding kernel and implements an attention-based graph convolution model. However, TGAT suffers from the exponentially expanding neighborhood and the information loss of the unseen neighbors. APAN makes substantial improvements over TGAT in consideration of online inference latency but also inherits the restricted neighbor size. Therefore, they both are unable to access the information from the whole temporal neighbors.

3 Methods

In this section, we present the message-passing paradigm on temporal graphs for dynamic node representation. An alternative message-passing temporal graph (MPTG) is constructed upon the definitions of temporal nodes and neighbors. However, further analysis shows the cumbersome computational complexity of the MPTG. An efficient Aggregation-Propagation (AP) block is proposed to reduce the repetitive computations in the MPTG, which is mathematically equivalent to the message-passing graph convolutions. The final learning framework, Temporal Aggregation-Propagation Graph Neural Networks (TAP-GNN) consists of a sequence of AP blocks and a projection layer, aiming at generating dynamic node embeddings. Moreover, the TAP-GNN can be easily extended to support online inference, whose time complexity is linearly dependent on the size of upcoming new interactions.

3.1 Dynamic Node Representation Formulation

A temporal graph can be defined as , where is extended as a set of temporal edges, and is a function that maps each edge to a corresponding timestamp[5]. A common assumption is that edges in temporal graphs are formed according to some underlying distribution [14, 5, 40]. The neighbors of node at time can be drawn from in temporal graphs. In general, our target of graph embedding is to learn an embedding function revealing the underlying data distribution. Let be the dynamic node embedding function, and the dot product be the similarity function. The dynamic node representation problem can be written as

| (1) |

where is an observed interaction, is the time point, and iterates all nodes of . In practice, the negative sampling loss [25, 40] is usually adopted instead as Eq. 1 to accelerate the training speed.

3.2 Preliminary of Graph Neural Networks

Existing Graph Neural Networks (GNNs) mainly follow the message-passing paradigm that aggregates neighborhood embeddings recursively to obtain high-order neighborhood information. Formally, the graph convolution operation is defined as

| (2) |

in [16, 17], where is an embedding function, is the neighborhood function, is the graph convolution kernel. The detailed kernels can be listed as follows:

| (3) |

where is written as , denotes the degree of nodes, is a non-linear activation function, is a transformation matrix, mean and max are reduction operations. The attention kernel is provided in Appendix .2.

3.3 Message-Passing Temporal Graph

Although requires dynamic representation for node with the varying , the representation should be related to the limited node interactions. The observation motivates us to define the temporal nodes restricted to the observed time points. Representation for the infinite time dimension can be solved with an additional projection layer. Let denotes the set of observed time points. For simplicity, we model the node dynamics with the observed as:

Definition 3.1.

Temporal nodes associates each node with its occurrences in temporal edges.

Definition 3.2.

Temporal neighbors is defined with a dynamic query as , where . Specifically, the temporal neighbors of is defined as , which is abbreviated as for simplicity.

For temporal nodes, it’s worth noting that the set of temporal nodes is not the cartesian product of nodes and since if there are no interactions of at . Nodes are only connected with their active time points, as shown in Fig. 1(b). Since temporal graphs may have parallel and dynamic edges between nodes, the spectral graph convolution way [16] is not feasible in our settings.

Generally speaking, the definition of temporal neighbors is suitable for any dynamic node even if never appears in the observed . It means that we can generate dynamic node representation for any as long as node embeddings on are obtained. Moreover, the defined temporal neighbors are associated with each other via directional links, i.e. doesn’t imply except . A simple corollary is that temporal neighbors of one node are also temporal neighbors of it in the future, i.e., when .

So far, we can build the message-passing temporal graph (MPTG) as , where is the set of temporal nodes, and is the set of directional links between temporal nodes. The messages of the temporal neighbors can thus be sent to the destination nodes on the , i.e., message passing operations on temporal graphs. High-order temporal neighbors can be accessed by stacking layers of the as described in [16, 17, 41]. For instance, if we focus on V and its one-hop neighbor {A,F} in Fig. 1(d), we will get three temporal nodes . At the time , the edge set contains one temporal edge . At the time , the edge set contains two temporal edges , where shares a same edge with at . The overall is the union set of the edge set at each time point, namely .

| Temporal Graph | Ratio | ||

|---|---|---|---|

| fb-forum | 33K | 9.9M | 296 |

| soc-sign-bitcoinotc | 35K | 4.7M | 134 |

| ia-escorts-dynamic | 50K | 2.6M | 51 |

| ia-movielens-10m | 95K | 64M | 671 |

| soc-wiki-elec | 107K | 17M | 164 |

| ia-slashdot-reply | 140K | 13M | 94 |

| Wikipedia | 167K | 39M | 253 |

| 672K | 5.7B | 8537 |

However, the alternative MPTG based on the temporal graph may incur the computation complexity problem because of the repeated links with temporal neighbors. We provide an analysis of the cardinality of temporal nodes and directional links as follows.

Claim 3.3.

The size of temporal nodes is , where represents the edges in the original temporal graph.

Claim 3.4.

The size of directional links defined in is , where is the original node set and denotes the degree of node .

Claim 3.3 implies that the size of temporal nodes increases linearly with the dynamic interactions on temporal graphs. A new temporal node is created when each new interaction is marked with a unique timestamp.

Claim 3.4 indicates that directional links expand rapidly with the increasing dynamic interactions. Table I also shows the surprising result on experimental datasets, where ratios of small graphs still exceed , while the largest expansion ratio even reaches . The resulting message-passing graph may not fit the main memory for medium graphs. Therefore, we have to design a lightweight mechanism to replace the burdensome .

3.4 Aggregation-Propagation Block

The proposed block comes from an observation that historical node embeddings already contain the information of historical neighbors. Let’s take a single node and its consecutive active time points into account. Here and only represent the relative sequential order. Referring to the Definition 3.2 , is a subset of . Hence, the difference set of the two sets is , which is only related with . Let denote the difference set, then and . It indicates that the aforementioned temporal graph convolution can be decomposed sequentially as long as it doesn’t involve commutative computations between and . Fortunately, we find that the commonly adopted graph convolution kernels on static graphs fit this property.

Thus, the Aggregation-Propagation (AP) block, as depicted in Fig. 2, is designed to save the repeated computation in . We illustrate the overall workflow of the proposed AP block before going into the technical decomposition details of several graph convolution methods. The AP block consists of two sequential layers, termed aggregation layer and propagation layer, which refer to AGG and PROP used in Algo. 1, respectively. The aggregation layer is a lightweight message-passing graph and written as , where connects the temporal nodes only at the same time point. It works in a message-passing way that sends the neighbor information to the temporal nodes like . The propagation layer reuses the historical node embeddings to compute the temporal node embeddings and works with a linked list , which organizes the temporal nodes referring to the same origin node into a sorted list. It accumulates the node embeddings chronologically as .

Let be the embeddings of temporal nodes. Formally, we combine AGG and PROP for a node to obtain the same embeddings as graph kernels in Eq. 3 regardless of time points, written as

| (4) |

where AGG aggregates neighborhood embeddings and PROP summarizes historical embeddings. Let be the consecutive active time points of node , the AGG operation is written as.

| (5) |

where is the node embedding of the previous layer, and refers to the neighbor set of the current time point. The PROP operation is then written as

| (6) |

where is ’s embedding of PROP, and PROP performs on sequentially.

Suppose that , the decomposition methods are listed as follows, where the attention kernel is provided in Appendix B.

GCN. Aggregation: Let . Propagation: , where denotes the indegree of nodes.

MEAN. Aggregation: . Propagation: .

POOL. Aggregation: . Propagation: .

Complexity analysis. Let be the size of nodes, and be the size of temporal edges. The AP block shares the same node set with and preserves the links between nodes at the same time point, whose size is . Suppose each node has -dimension embeddings, the space complexity of the AP block is since the size of is . The time complexity of the aggregation layer is because the size of the preserved links is . The time complexity of the propagation layer is also because it propagates embeddings over temporal nodes. But it requires more time than the aggregation layer in practice since the propagation cannot be fully paralleled. Overall, the time complexity of the AP block is , where the feature transformation operations are omitted here.

Input: Graph ; Input features ;

A batch of queries ;

Temporal activation functions ;

Aggregation functions ;

Propagation functions ;

Projection layer ;

Output: Dynamic node embeddings .

3.5 Temporal Aggregation and Propagation Graph Neural Networks

The whole process is listed in Algorithm 1. The input includes a temporal graph , features for all nodes , and a batch of queries asking for dynamic node representation.

The additional edge features are concatenated with node features for input, as illustrated in Fig. 3. The learning backbone consists of -layer AP blocks and a final projection layer, where denotes the iterations of temporal graph aggregation-propagation. Inspired by [8, 9], a temporal activation function is applied before each AP block to inject the temporal information into the node embeddings as

| (7) |

where is the hidden representation of node , is the corresponding time point, encodes the temporal information, and may capture the repeated patterns of interactions. The temporal activation function provides distinct embeddings even though some node has few interactions. The AP block described in Fig. 2 works with the help of the proposed temporal nodes , a new set of temporal edges , and a propagation linked list . splits nodes at different time points as different temporal nodes, links the temporal nodes at the same time point with respect to the original edges, and organizes the temporal nodes referring to the same node into sorted lists. The temporal node features are initialized from the given node features . At each inner loop of the iterations, temporal activation function injects the temporal information into node features from the previous layer . The function then aggregates neighbor features into the intermediate node embeddings following the message-passing paradigm [41]. Further, the function propagates the along the sorted node list for each node independently. We can obtain the high-order temporal node embeddings after iterations. However, the required dynamic node representation is usually defined upon a future time point on tasks like future link prediction. Let be , which is the latest node embedding for a query . A projection layer is then used to provide dynamic node representation, which works as

| (8) |

where MLP denotes a multilayer perceptron, and is the temporal activation function.

Node grouping. The training speed can be boosted much faster with GPUs since [24]. Thanks to [42], the computation speed of graphs can be largely accelerated by grouping nodes with the same degree into a computation bucket. Detailed description is provided in Appendix .3.

Model optimization. We perform future link prediction for the TAP-GNN learning framework in a self-supervised paradigm. The training objective is defined as

| (9) |

where the loss iterates over the temporal edges, is the sigmoid function, denotes the number of negative samples, and denotes the negative sampling distribution on nodes.

Complexity analysis. The dimensions for all input features and hidden features are assumed for simplicity. Let be the size of nodes, and be the size of temporal edges. Each AP block contains a temporal activation function and an aggregation function, so it has parameters. Besides, the projection layer has parameters, where the first part refers to the temporal activation function, the second part refers to the multilayer perceptron, and denotes the number of layers. The total parameters of the TAP-GNN is , where means the number of AP blocks. However, the training of the TAP-GNN requires computing the whole temporal neighborhood. As a result, the space complexity of the TAP-GNN is , where denotes the number of temporal nodes . The time complexity of each AP block is , where the first part refers to the aggregation-propagation process and the second part refers to the feature transformation process. Overall, the time complexity of the TAP-GNN is . The complexity comparison between TAP-GNN and TGAT [9] is discussed in Appendix .4.

Input: Stream graph ; Input features ;

Temporal activation functions ;

Aggregation functions ;

Propagation functions ;

Historical node embeddings ;

Output: Updated node embeddings .

3.6 Online Inference in Graph-Stream Scenario

Temporal graphs usually evolve with continuously occurring edges, called graph-stream. It is essential to adapt the node embedding model for the graph-stream scenario to capture the node dynamics as soon as possible. Given a batch of edges occurring simultaneously, our proposed TAP-GNN can be naturally extended to update the embeddings of the affected nodes efficiently. As illustrated in Section 3.4, the affected nodes only exist in the upcoming batch of edges, and they don’t influence the historical neighbors due to the temporal order. Then, the affected nodes update their embeddings by fusing the historical embeddings with current embeddings.

Let be the affected nodes, be the batch edges, be the time point, and current node embeddings can be easily computed using message-passing GNNs as illustrated in Algorithm 2. Only and are involved in the computation of current embeddings. In the graph-stream scenario, each of the propagation link lists contains two elements: the latest node embeddings and current node embeddings, which can be implemented efficiently. To compute the updated node embeddings, the historical embeddings of each layer have to be saved and fused with the propagation functions. In general, each batch of new interactions updates the embeddings of the paired nodes accordingly.

Complexity analysis. The online TAP-GNN shares the same parameters with the original TAP-GNN, which is also . Let denote the size of , and denote the size of . The online TAP-GNN only needs the latest node embeddings so that its space complexity is , where is the number of AP blocks, is the size of total nodes, and is the dimension of embeddings. The time complexity of computing current embeddings (line 1 to line 4 in Algorithm 2) is , where the first part is the message-passing process and the second part is the feature transformation process. The time complexity of fusing historical embeddings and current embeddings is .

Overall, the time complexity of online inference of TAP-GNN is .

3.7 Discussions with TDGNN [43] and TGAT [9]

Existing temporal GNNs [43, 9] design specific aggregation operations for neighbors, injecting temporal information into node embeddings. Firstly, their aggregation operations work on a much smaller neighborhood than that of our TAP-GNN, which implies that TDGNN [43] and TGAT [9] lose neighborhood information before their GNNs. Secondly, the scalability of TDGNN [43] and TGAT [9] is restricted since the computation complexity increases exponentially with the number of layers. In contrast, TAP-GNN could flexibly scale to large-scale temporal graphs due to its linear growth of computation complexity with the number of layers.

| Temporal Graph | Bipartite | Edge Type | Repetition | Density | Timespan (days) | ||||

| Future Link Prediction | |||||||||

| fb-forum | False | Post | 20.8% | 899 | 33.7K | 0.08 | 1.8K | 37.51 | 164.49 |

| soc-sign-bitcoinotc | False | Trans | 0.0% | 5.8K | 35.5K | 0.002 | 1.2K | 6.05 | 1903.27 |

| ia-escorts-dynamic | True | Rating | 10.9% | 10K | 50.6K | 0.009 | 616 | 5.01 | 2232.00 |

| ia-movielens-user2tags-10m | True | Assignment | 19.9% | 17K | 95.5K | 0.0007 | 6.0K | 5.8 | 1108.97 |

| soc-wiki-elec | False | Election | 0.2% | 7.1K | 107.0K | 0.004 | 1.3K | 15.04 | 1378.84 |

| ia-slashdot-reply-dir | False | Post | 4.2% | 51K | 140.7K | 0.0001 | 3.3K | 2.76 | 977.36 |

| Dynamic Node Classification | |||||||||

| Wikipedia | True | Edit | 79.1% | 9.2K | 157.4K | 0.0036 | 1.9K | 57.64 | 29.77 |

| True | Post | 61.4% | 10.9K | 672.4K | 0.011 | 58.7K | 61.22 | 31.00 | |

4 Experiment

We conduct the experiments over a wide range of temporal dynamic graphs to investigate the effectiveness of our proposed TAP-GNN framework. The statistics summarization of temporal graphs is listed in Table II. The configurations of the competing baselines and our method are described in detail. Two experiment tasks, future link prediction and dynamic node classification, are designed to demonstrate the effectiveness of our proposed TAP-GNN framework. Discussions on the experimental results and ablation studies are provided in the following.

4.1 Datasets and Evaluation

4.1.1 Datasets

The datasets listed in Table II are used for two evaluation tasks. Datasets for future link prediction are collected from Network Repository [4]111http://networkrepository.com/dynamic.php. Datasets for dynamic node classification are obtained from SNAP [8]222http://snap.stanford.edu/jodie/. They are divided into two categories because of their different repetition ratios, which means that a node interacted with the same node consecutively. Temporal graphs with high repetition ratios usually follow regular behavior patterns and are not suitable for the future link prediction task. In addition, datasets for future link prediction also vary greatly in their graph properties, which is challenging for model robustness.

Future link prediction. These temporal graphs are provided without node or edge features from various fields, from Facebook posts (e.g., fb-forum) to Wikipedia elections (e.g. soc-wiki-elec). They differ significantly in many fields: the smallest node size is 899, and the largest one is over 51 thousand; two of the datasets are bipartite, and the others are homogeneous; the timespan of them starts from 164 days to 2232 days when setting the time unit as one day, etc.

-

•

fb-forum: The network shows user activities on the Facebook-like forum network with 899 user nodes and 33 thousand posts.

-

•

soc-sign-bitcoinotc: This is a signed trust network on a platform called Bitcoin OTC333https://www.bitcoin-otc.com/, where people trade using Bitcoin. It is noteworthy that no repeated interactions happen in this network. The network contains 5.8 thousand users and 35.5 thousand trust ratings.

-

•

ia-escorts-dynamic: The network is a bipartite rating network, containing more than 10 thousand nodes and 50 thousand ratings.

-

•

ia-movielens-user2tags-10m: This is another bipartite network from the collected MovieLens dataset444https://movielens.org/, where edges connect users with the tags they gave to the movies. The sparse network has over 17 thousand nodes and 95 thousand edges.

-

•

soc-wiki-elec: The network presents the Wikipedia administrator election and voting history, which contains more than 7 thousand users and 107 thousand interactions.

-

•

ia-slashdot-reply-dir: This is a reply network collected from the technology website Slashdot over 977 days. The network has the largest nodes and edges for future link prediction, consisting of 51 thousand users and over 140 thousand replies.

Dynamic node classification. The additional two datasets are used for dynamic node classification with the extremely imbalanced class ratio. The features of user edits in Wikipedia and user posts on Reddit are both converted into the 172-dimensional vectors under the linguistic inquiry and word count (LIWC) categories [44].

-

•

Wikipedia: The network consists of 8,227 users and 1,000 most edited pages, with 157,474 interactions in total. The timestamp of interaction tells when the user edits the page. There are 217 positive labels (0.41%) among all interactions, which tells whether the user is banned from editing the Wikipedia page.

-

•

Reddit: The network contains 10,000 active users and 1,000 active subreddits, resulting in 672,447 temporal edges. Each edge with a timestamp means a user made a Reddit post at some time. There are 366 positive labels (0.05%) among all posts, which indicates the user is banned from posting under the subreddit.

Overall, the variety of datasets could fully demonstrate the difference and robustness of compared methods.

4.1.2 Evaluation Tasks

Evaluation metrics. We adopt the Accuracy metric and the area under the receiver operating characteristic curve (AUC-ROC) as the evaluation metrics for different tasks.

Future link prediction. For methods comparison, we perform future link prediction on a hold-out evaluation dataset and run each experiment five times to ensure performance robustness. We select the hyperparameters of the best average performance on the validation dataset for each method and report the corresponding performance on the test dataset. The dataset is sorted by the timestamp ascendingly, and the first 70% of edges are taken for training, and the next 15% are used for validation. Since the datasets don’t have a node or edge features, we remove edges with unseen nodes in the test dataset for correctness. The left edges in the test dataset are used as positive samples. We sample an equal number of edges as negative samples by substituting the target node of the positive edges with a randomly sampled node. For each dataset, we generate a labeled test set for future link prediction. We use the training set to generate embeddings for each node for models that are not directly designed for future link prediction. Then for each prediction edge, the embeddings of two nodes are concatenated as its features. We apply the same strategy to construct a labeled training set and use logistic regression (LR) to perform future link prediction. For end-to-end models, we measure the reported performance of their output probabilities. The evaluation metric is AUC-ROC and Accuracy.

Dynamic node classification. Besides the future link prediction task, we introduce the dynamic node classification task as described in [9]. The task is challenging due to the extremely imbalanced labels: Wikipedia has 217 positive labels with 157,474 interactions (=0.14%), while Reddit has 366 true labels among 672,447 interactions (=0.05%).

The temporal graphs are split chronologically into 70%-15%-15% for training, validation, and testing. Since removing unseen nodes from the test set will largely reduce the positive samples, we only compare against methods that can generalize to unseen nodes. We train the self-supervised future link prediction task firstly and use an MLP classifier to predict the dynamic node label with the temporal node embeddings. Notably, we adopt the same concatenation function on the two interaction node embeddings for prediction as TGAT [9]. The evaluation metric is only AUC-ROC due to the highly imbalanced ratio.

4.2 Experiment Setting

| Temporal Graph | fb-forum | soc-sign-bitcoinotc | ia-escorts-dynamic | |||

|---|---|---|---|---|---|---|

| Metrics | AUC | Accuracy | AUC | Accuracy | AUC | Accuracy |

| Node2Vec[15] | ||||||

| GraphSAGE [17] | ||||||

| [17] | ||||||

| CTDNE[5] | ||||||

| HTNE[6] | ||||||

| TNODE[7] | ||||||

| TGAT[9] | ||||||

| TDGNN[43] | ||||||

| JODIE[8] | ||||||

| TGN[45] | ||||||

| TAP-GNN | ||||||

| TAP-GNN* | ||||||

| Temporal Graph | ia-movielens-user2tags-10m | soc-wiki-elec | ia-slashdot-reply-dir | |||

| Metrics | AUC | Accuracy | AUC | Accuracy | AUC | Accuracy |

| Node2Vec[15] | ||||||

| GraphSAGE [17] | ||||||

| [17] | ||||||

| CTDNE[5] | ||||||

| HTNE[6] | ||||||

| TNODE[7] | ||||||

| TGAT[9] | ||||||

| TDGNN[43] | ||||||

| JODIE[8] | ||||||

| TGN[45] | ||||||

| TAP-GNN | ||||||

| TAP-GNN* | ||||||

4.2.1 Baseline Methods

We evaluate the TAP-GNN framework for learning temporal node embeddings against the following baseline methods. We set the embedding dimension as for all compared methods for a fair comparison. Additionally, all methods use the one-hot encoding features and generate temporal embeddings according to the method settings.

- •

-

•

GraphSAGE [17] adopts several aggregators for neighbor messages and introduces an inductive learning paradigm for large-scale graphs, which is a strong baseline in static GNNs.

- •

-

•

CTDNE [5] generates the final state for each node in temporal graphs with the temporal random walks. However, it is challenging to deploy CTDNE for an online inference scenario.

-

•

HTNE [6] fuses the historical neighbor information with the Hawkes process and an attention mechanism, which is implemented in a recurrent paradigm. Despite its efficiency, the neighbor window of HTNE limits its receptive field.

- •

-

•

TGAT [9] incorporates a time encoding kernel and an attention mechanism into dynamic node embeddings based on the sampled temporal subgraph. TGAT may fail in some cases due to the lack of the whole neighborhood, as shown in our experiments.

- •

4.2.2 Experiment Setup

Static Methods Configuration. Static graph methods use the training data both for training and test. For Node2Vec, we grid search over and set the other hyperparameters as default in [15]. For GraphSAGE, a trainable node embedding matrix is employed instead of node features due to the lack of node features. The training adjacency matrix is used in the test phase to avoid information leakage. The other hyper-parameters of GraphSAGE are set as default in [17].

Temporal Methods Configuration. For temporal graph methods based on graph snapshots, we run methods over different graph snapshot divisions, namely snapshots, and report the best test performance over these three kinds of division strategies. For CTDNE, we use the learned node embeddings during training for the test. For HTNE, we grid search the history length over as suggested in [6] and report the test performance. tNodeEmbed [7] is an end-to-end framework that utilizes RNN over embeddings for various snapshots so that we run this method over three different division strategies. TGAT [9] is capable of generating dynamic node representations and is well suitable for future link prediction. The input features are one-hot encodings instead of all-zero vectors, and the sampling strategies are evaluated under uniform sampling and inverse temporal sampling. Other hyper-parameters of TGAT are set as default in [9]. Moreover, we search hyper-parameters of JODIE [8], TDGNN [43], and TGN [45] according to their papers.

TAP-GNN Configuration. The input features are one-hot encoding features, and the hidden dimension is all set as 128 for a fair comparison. If edge features are given, node features, edge features, and time encodings are concatenated for the next layer, including the AP block and the projection layer. The whole framework is described as shown in Fig. 3, where AP blocks are stacked two times, and the projection layer is a two-layer MLP. Moreover, we implement TAP-GNN* in Table III by aggregating directly from the whole neighborhood and reusing the trained weights of TAP-GNN to validate whether the intermediate propagation affects the prediction performance.

Implementation. Our proposed framework is implemented using PyTorch [46] and DGL (Deep Graph Library) [42]. One-hot encodings are set for temporal graphs without node features, where the embedding matrix is initialized with Glorot initialization. We adopt the Adam optimizer, and select the learning rate as 0.01 from . The default batch size is set at 256, the default dropout ratio is set at 0.2, and the negative samples are set at 1 for each positive sample. The early-stopping strategy is applied during training until the validation AUC score does not improve over five epochs. Our experimental machine is a 24-core Linux server with 125GB memory and a Quadro P6000 GPU.

Downstream node classification. We use the same three-layer MLP [9] as a classifier. Since the banned user is reported with the corresponding wiki page/subreddit, the two-node embeddings are concatenated as input features for dynamic node classification. The MLP classifier is trained with the Adam optimizer and the Glorot initialization, and the early-stopping strategy with ten epochs. Due to the data imbalance, the positive labels are oversampled to achieve the balance label ratio in each batch.

4.3 Effectiveness of TAP-GNN

4.3.1 Future Link Prediction

Table III presents the results of the future link prediction of our TAP-GNN, two static graph methods, and five temporal graph methods. The proposed TAP-GNN outperforms other state-of-the-art baselines on all experimental temporal graphs, which achieves at most 12.9% improvement of AUC and 16.2% improvement of Accuracy on ia-slashdot-reply-dir. These datasets can be divided into two categories according to their repetition ratios and experimental performance of compared methods: high-repetition datasets (i.e., fb-forum, ia-escorts-dynamic and ia-movielens-user2tags-10m) and low-repetition datasets (i.e., soc-sign-bitcoinotc, soc-wiki-elec and ia-slashdot-reply-dir). TAP-GNN shows much better improvements on low-repetition datasets than on high-repetition datasets because of its ability to handle the whole neighborhood. Meanwhile, most compared methods can hardly handle the varying neighborhood and graph stream simultaneously. Overall, the TAP-GNN framework demonstrates both superior and robust performance. It indicates that the varying neighborhood plays an essential role in the task of predicting future link existence. TAP-GNN* in Table III performs on par with TAP-GNN, implying that differences between direct aggregation and TAP-GNN are probably caused by calculation errors.

For static graph methods, Node2Vec performs better on three datasets, while GraphSAGE beats Node2Vec on the left three datasets. It implies that it is essential to balance the structure information and local neighbor information since Node2Vec embeds the former, and GraphSAGE encodes the latter. It is interesting to see that outperforms GraphSAGE by a large margin on five datasets but fail greatly on ia-slashdot-reply-dir. The former tells that node activity is positively related to their recent interactions on most temporal graphs, designed by the temporal negative sampling loss. However, the assumption may fail in cases where long-range dependencies exist among nodes.

For temporal graph methods, TGAT and TGN consistently outperform other baseline methods on most datasets. Nevertheless, these two methods both degrades on two sparse networks soc-wiki-elec and ia-slashdot-reply-dir. JODIE [8] presents much better performance than TGAT and TGN on two sparse networks, indicating the importance of neighborhood for future link prediction. Meanwhile, temporal graph methods including CTDNE, HTNE, TNODE, and TDGNN obtain comparable performance to GraphSAGE*, implying the superiority of GNNs.

4.3.2 Dynamic Node Classification

Table IV presents the AUC-ROC scores of our TAP-GNN and five compared temporal graph methods. Since the node labels in Wikipedia and Reddit are extremely imbalanced, only methods that can generalize to unseen nodes are shown here. Also, the Accuracy metric is omitted here because of the imbalanced label ratio. The positive labels in both datasets denote that users are banned from the destination wiki pages/subreddits. Intuitively, this task reflects the flexibility of experimental models because users exhibit frequent interactions with low banned times. Table IV shows that both TGN and TAP-GNN outperform other baselines, and TGN performs better than TAP-GNN. We conjecture that the frequent update of node memory contributes to the superiority of TGN, which motivates us to design a more flexible graph kernel in future work.

| Methods | Batch-size | Layers | ||||||

| 100 | 200 | 300 | 400 | 500 | 1 | 2 | 3 | |

| TGAT[9] | 32.6 | 49.7 | 68.7 | 110.2 | 113.9 | 5.6 | 49.7 | 934.7 |

| TAP-GNN | 11.0 | 12.5 | 13.8 | 15.1 | 16.3 | 5.6 | 12.5 | 13.8 |

| Speedup | ||||||||

4.4 Efficiency of TAP-GNN

The proposed TAP-GNN consists of many specific modules: AP blocks, the temporal activation function, and the projection layer, each with its own hyper-parameters. Experiments in this section aim to validate the efficiency of different components of TAP-GNN by varying the hyper-parameters. Without specific settings, the batch size is 256, the number of AP blocks is 2, the embedding dimensionality is set to 128, and the dropout ratio is 0.2. Also, the names of the datasets are abbreviated without confusion for simplicity in the figures.

4.4.1 Online Inference Efficiency

Since other approaches such as CTDNE [5], HTNE [6], and TNODE [7] are not designed for graph representation in the graph stream scenario, we only compare against TGAT [9] to show the online inference efficiency.

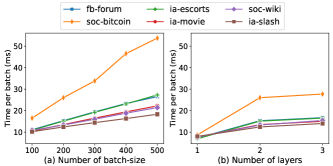

For a fair comparison, we implement the attention kernel with TAP-GNN since TGAT costs much time in the attention kernel, as shown in Table V and Fig. 4. The task predicts the probability that the edge exists between two nodes when given a future time point. The graph topology keeps evolving with a batch of new interactions. Specifically, the original implementation of TGAT sampling is extremely slow and can achieve the speedup nearly 50 times with the Numba555http://numba.pydata.org/ library, which dramatically reduces the time cost of graph convolutions of TGAT. As reported in Table V, the consumed time of TAP-GNN remains nearly constant while TGAT presents the linearly increasing time cost when varying the batch size of the upcoming new interactions. When changing the number of layers, TGAT demonstrates the exponential growth of inference time, which inherits the disadvantages of GraphSAGE [17]. While our proposed TAP-GNN demonstrates the linear growing rate as shown both in Table V and Fig. 4. It is also crucial that the online inference time of TAP-GNN is almost irrelevant to the graph size, which indicates the scalability of TAP-GNN on large industrial temporal graphs.

4.4.2 Ablation Studies

Graph convolution kernels. We implement four graph convolution kernels ATTENTION, GCN, MEAN, and POOL according to Eq. 3 and Appendix .2.

As depicted in Fig. 5, GCN performs the most robust among all datasets, while ATTENTION, MEAN and POOL exhibit performance degradation over several datasets. Further, MEAN shows inferior to GCN on large and sparse graphs, and ATTENTION and POOL perform worse than GCN on small and dense graphs. Hence, we select the GCN as the default graph convolution kernels for the following experiments. Overall, these three kernels demonstrate comparable performance on most datasets, which implies the robustness of TAP-GNN.

Temporal activation function. The temporal activation function encodes the time point of each interaction to generate time-respecting node embeddings. It is useful to capture the periodic information inside the dynamic interactions. As described in Fig. 6, TAP-GNN, which is equipped with the temporal activation function, shows significant improvements on the baseline without the function.

The projection layer. The obtained node embeddings are further projected to the future time point with the projection layer. TAP-GNN without the projection layer predicts the probability of the edge’s existence by a fully-connected layer. As plotted in Fig. 6, it is crucial to perform an additional projection on the learned embeddings.

4.4.3 Parameter Sensitivity

Batch-size. Deep learning models update the parameters once a mini-batch using the SGD strategy. Usually, a smaller batch size accelerates the training speed, and a larger batch size gives a more robust model. As shown in Fig. 7(a), TAP-GNN exhibits slight changes in performance on most datasets under the varying batch size. On the one hand, it indicates the robustness of our proposed TAP-GNN. On the other hand, temporal graphs used in our experiments also demonstrate stable interactions between nodes so that it is effective in generating embeddings from the whole temporal neighborhood.

Number of layers. The number of layers plays an essential role in graph neural networks [27, 16, 17, 28]. But GNNs also suffer from the over-smoothing and overfitting problem when the number of layers is deep. As shown in Fig. 7(b), the AUC scores of TAP-GNN on most datasets are very stable regardless of the number of layers. Nonetheless, TAP-GNN shows performance degradation on the dataset ia-slash when the number of layers is 1. Also, baseline methods like GraphSAGE, HTNE, and TGAT show poor performance, as shown in Table III, which only access the local k-hop neighbors. We conjecture it may be related to the dataset property as ia-slash is a directional reply dataset from the technology website. Hence, TAP-GNN can benefit from the 2-hop neighbors largely compared to the 1-hop neighbors.

Embedding dimensionality. Node embeddings aim to preserve the topology proximity in the graph with the dense vectors for each node [14]. The small embedding dimensionality [47] may hurt the learning ability if the graph is very complicated. The performance of TAP-GNN on fb-forum in Fig. 8(a) exhibits vast improvements when the embedding dimensionality keeps increasing. Referring to other datasets, TAP-GNN performs almost invariantly, as shown in Fig. 8(a).

Dropout ratio. The dropout strategy is a successful technique to avoid overfitting for neural networks [48], which dropouts the neurons randomly and adjusts the hidden outputs accordingly. Our TAP-GNN also benefits from the dropout strategy slightly on most datasets, as depicted in Fig. 8(b). However, it requires grid-search over the hyper-parameters to find the best dropout ratio.

4.4.4 Visualization

Visualization of node embeddings can assess the quality of our proposed TAP-GNN qualitatively. We select one thousand destination nodes among all temporal edges of three datasets, obtain the dynamic node embeddings of 128-dimensions, and project them into 2D vectors using t-SNE [49] as reported in Fig. 9. For simplicity, one thousand nodes are selected around five quantiles of the timespan, i.e., , where each quantile contributes two hundred nodes. The nodes are thus labeled with their corresponding quantiles and plotted with color ramps. As shown in Fig. 9, the time evolves forward with the color from dark blue to dark red. It is clear that dynamic node embeddings also demonstrate distinct boundaries among different time points. Especially, the continuous-time points exhibit proximity on the 2D plane, while the discontinuous time points like 0.0 and 0.8 exhibit clear separations visually. In terms of the repetition ratios, the selected datasets have fewer repeated neighbors, leading to the evolving node embeddings and interaction patterns. Otherwise, temporal graphs with high repeated ratios are similar to the static graph, which doesn’t show the evolving nature as depicted in Fig. 9. Overall, TAP-GNN generates the dynamic node representation effectively with time evolving.

5 Conclusion

In this paper, we study the problem of performing message-passing on temporal graphs with respect to the varying temporal neighborhood of nodes. A message-passing temporal graph (MPTG) is introduced to model the dynamic message-passing on temporal graphs, which also causes heavy computational complexity. Further, we propose the temporal graph learning framework, called Temporal Aggregation and Propagation Graph Neural Networks (TAP-GNN), which updates node embeddings with their whole temporal neighbors efficiently. Our proposed TAP-GNN outperforms other state-of-the-art baselines on temporal graphs over a variety of temporal graphs. Specifically, TAP-GNN demonstrates robust and stable performance on extremely sparse temporal graphs. The additional experiments on the parameter sensitivity reveal the effectiveness of different components of the proposed framework.

Nevertheless, there are still limitations of existing approaches of temporal graphs. Node dynamics on temporal graphs may be caused by permanent reasons (e.g., job changes) or transient reasons (e.g., festivals), which lead to different influences on their future interactions. For example, the job change may cause persistent influence on the email networks, while the festivals usually bring a surge in sales on e-commerce networks. Our future direction is to develop an adaptive model to infer the latent reasons for node dynamics.

6 Acknowledgment

This work is partially supported by National Key R&D Program of China under Grant No. 2022YFB2703100, the Starry Night Science Fund of Zhejiang University Shanghai Institute for Advanced Study (Grant No. SN-ZJU-SIAS-001), and the National Research Foundation Singapore under its AI Singapore Programme(Award Number:AISG2-RP-2021-023).

References

- [1] J. Bennett, S. Lanning et al., “The netflix prize,” in Proceedings of KDD Cup and Workshop, 2007, pp. 35–35.

- [2] J. Kunegis, “Konect: the koblenz network collection,” in Proceedings of the 22nd International Conference on World Wide Web, 2013, pp. 1343–1350.

- [3] J. Leskovec and A. Krevl, “SNAP Datasets: Stanford large network dataset collection,” http://snap.stanford.edu/data, Jun. 2014.

- [4] R. A. Rossi and N. K. Ahmed, “The network data repository with interactive graph analytics and visualization,” in National Conference on Artificial Intelligence, 2015, pp. 4292–4293.

- [5] G. H. Nguyen, J. B. Lee, R. A. Rossi, N. K. Ahmed, E. Koh, and S. Kim, “Continuous-time dynamic network embeddings,” in Companion Proceedings of the The Web Conference 2018, 2018, pp. 969–976.

- [6] Y. Zuo, G. Liu, H. Lin, J. Guo, X. Hu, and J. Wu, “Embedding temporal network via neighborhood formation,” in Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, 2018, pp. 2857–2866.

- [7] U. Singer, I. Guy, and K. Radinsky, “Node embedding over temporal graphs,” in Proceedings of the 28th International Joint Conference on Artificial Intelligence, 2019, pp. 4605–4612.

- [8] S. Kumar, X. Zhang, and J. Leskovec, “Predicting dynamic embedding trajectory in temporal interaction networks,” in Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, 2019, pp. 1269–1278.

- [9] D. Xu, C. Ruan, E. Korpeoglu, S. Kumar, and K. Achan, “Inductive representation learning on temporal graphs,” in International Conference on Learning Representations, 2020.

- [10] X. Wang, D. Lyu, M. Li, Y. Xia, Q. Yang, X. Wang, X. Wang, P. Cui, Y. Yang, B. Sun et al., “Apan: Asynchronous propagate attention network for real-time temporal graph embedding,” in Proceedings of the 2020 ACM SIGMOD International Conference on Management of Data, 2021.

- [11] R. Ying, R. He, K. Chen, P. Eksombatchai, W. L. Hamilton, and J. Leskovec, “Graph convolutional neural networks for web-scale recommender systems,” in Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, 2018, pp. 974–983.

- [12] J. Wang, P. Huang, H. Zhao, Z. Zhang, B. Zhao, and D. L. Lee, “Billion-scale commodity embedding for e-commerce recommendation in alibaba,” in Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, 2018, pp. 839–848.

- [13] J. Zhang, C. Chen, Y. Xiang, W. Zhou, and A. V. Vasilakos, “An effective network traffic classification method with unknown flow detection,” IEEE Transactions on Network and Service Management, vol. 10, no. 2, pp. 133–147, 2013.

- [14] B. Perozzi, R. Al-Rfou, and S. Skiena, “Deepwalk: Online learning of social representations,” in Proceedings of the 20th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, 2014, pp. 701–710.

- [15] A. Grover and J. Leskovec, “node2vec: Scalable feature learning for networks,” in Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, 2016, pp. 855–864.

- [16] T. N. Kipf and M. Welling, “Semi-supervised classification with graph convolutional networks,” in International Conference on Learning Representations, 2017.

- [17] W. Hamilton, Z. Ying, and J. Leskovec, “Inductive representation learning on large graphs,” in Proceddings of the 31st International Conference on Neural Information Processing Systems, 2017, pp. 1024–1034.

- [18] P. Goyal and E. Ferrara, “Graph embedding techniques, applications, and performance: A survey,” Knowledge Based Systems, vol. 151, pp. 78–94, 2018.

- [19] A. Pareja, G. Domeniconi, J. Chen, T. Ma, T. Suzumura, H. Kanezashi, T. Kaler, T. B. Schardl, and C. E. Leiserson, “Evolvegcn: Evolving graph convolutional networks for dynamic graphs.” in Proceedings of the 34th AAAI Conference on Artificial Intelligence, 2020, pp. 5363–5370.

- [20] L. Page, S. Brin, R. Motwani, and T. Winograd, “The pagerank citation ranking: Bringing order to the web.” Stanford InfoLab, Tech. Rep., 1999.

- [21] S. T. Roweis and L. K. Saul, “Nonlinear dimensionality reduction by locally linear embedding,” Science, vol. 290, no. 5500, pp. 2323–2326, 2000.

- [22] M. Belkin and P. Niyogi, “Laplacian eigenmaps and spectral techniques for embedding and clustering,” in Proceedings of the 16th International Conference on Neural Information Processing Systems, 2002, pp. 585–591.

- [23] Y. Sun, J. Han, X. Yan, P. S. Yu, and T. Wu, “Pathsim: Meta path-based top-k similarity search in heterogeneous information networks,” Proceedings of the VLDB Endowment, vol. 4, no. 11, pp. 992–1003, 2011.

- [24] A. Krizhevsky, I. Sutskever, and G. E. Hinton, “Imagenet classification with deep convolutional neural networks,” in Proceedings of the 26th International Conference on Neural Information Processing Systems, 2012, pp. 1097–1105.

- [25] T. Mikolov, I. Sutskever, K. Chen, G. S. Corrado, and J. Dean, “Distributed representations of words and phrases and their compositionality,” in Proceedings of the 27th International Conference on Neural Information Processing Systems, 2013, pp. 3111–3119.

- [26] Z. Wu, S. Pan, F. Chen, G. Long, C. Zhang, and S. Y. Philip, “A comprehensive survey on graph neural networks,” IEEE Transactions on Neural Networks and Learning Systems, 2020.

- [27] M. Defferrard, X. Bresson, and P. Vandergheynst, “Convolutional neural networks on graphs with fast localized spectral filtering,” in Proceedings of the 30th International Conference on Neural Information Processing Systems, 2016, pp. 3844–3852.

- [28] P. Veličković, G. Cucurull, A. Casanova, A. Romero, P. Lio, and Y. Bengio, “Graph attention networks,” in International Conference on Learning Representations, 2018.

- [29] J. Tang, M. Qu, M. Wang, M. Zhang, J. Yan, and Q. Mei, “Line: Large-scale information network embedding,” in Proceedings of the 24th International Conference on World Wide Web, 2015, pp. 1067–1077.

- [30] G. Zhou, N. Mou, Y. Fan, Q. Pi, W. Bian, C. Zhou, X. Zhu, and K. Gai, “Deep interest evolution network for click-through rate prediction,” in Proceedings of the 33th AAAI Conference on Artificial Intelligence, 2019, pp. 5941–5948.

- [31] X. Wang, X. He, M. Wang, F. Feng, and T.-S. Chua, “Neural graph collaborative filtering,” in Proceedings of the 42nd international ACM SIGIR conference on Research and development in Information Retrieval, 2019, pp. 165–174.

- [32] L. Zhou, Y. Yang, X. Ren, F. Wu, and Y. Zhuang, “Dynamic network embedding by modeling triadic closure process,” in Proceddings of the 32nd AAAI Conference on Artificial Intelligence, 2018, pp. 571–578.

- [33] C. Meng, S. C. Mouli, B. Ribeiro, and J. Neville, “Subgraph pattern neural networks for high-order graph evolution prediction.” in AAAI, 2018, pp. 3778–3787.

- [34] J. Wang, Y. Jin, G. Song, and X. Ma, “Epne: Evolutionary pattern preserving network embedding.” in ECAI, 2020, pp. 1603–1610.

- [35] R. Trivedi, H. Dai, Y. Wang, and L. Song, “Know-evolve: Deep temporal reasoning for dynamic knowledge graphs,” in Proceedings of the 34th International Conference on Machine Learning, 2017, pp. 3462–3471.

- [36] J. Chen, J. Zhang, X. Xu, C. Fu, D. Zhang, Q. Zhang, and Q. Xuan, “E-lstm-d: A deep learning framework for dynamic network link prediction,” IEEE Transactions on Systems, Man, and Cybernetics, pp. 1–14, 2019.

- [37] S. M. Kazemi, R. Goel, K. Jain, I. Kobyzev, A. Sethi, P. Forsyth, and P. Poupart, “Representation learning for dynamic graphs: A survey.” Journal of Machine Learning Research, vol. 21, no. 70, pp. 1–73, 2020.

- [38] R. Trivedi, M. Farajtabar, P. Biswal, and H. Zha, “Dyrep: Learning representations over dynamic graphs,” in International Conference on Learning Representations, 2019.

- [39] Z. Zhang, J. Bu, M. Ester, J. Zhang, C. Yao, Z. Li, and C. Wang, “Learning temporal interaction graph embedding via coupled memory networks,” in Proceedings of The Web Conference 2020, 2020, pp. 3049–3055.

- [40] Z. Yang, M. Ding, C. Zhou, H. Yang, J. Zhou, and J. Tang, “Understanding negative sampling in graph representation learning,” in Proceedings of the 26th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, 2020.

- [41] J. Gilmer, S. S. Schoenholz, P. F. Riley, O. Vinyals, and G. E. Dahl, “Neural message passing for quantum chemistry,” in Proceedings of the 34th International Conference on Machine Learning, vol. 70, 2017, pp. 1263–1272.

- [42] M. Wang, L. Yu, D. Zheng, Q. Gan, Y. Gai, Z. Ye, M. Li, J. Zhou, Q. Huang, C. Ma, Z. Huang, Q. Guo, H. Zhang, H. Lin, J. Zhao, J. Li, A. J. Smola, and Z. Zhang, “Deep graph library: Towards efficient and scalable deep learning on graphs,” ICLR Workshop on Representation Learning on Graphs and Manifolds, 2019.

- [43] L. Qu, H. Zhu, Q. Duan, and Y. Shi, “Continuous-time link prediction via temporal dependent graph neural network,” in Proceedings of The Web Conference 2020, ser. WWW ’20, New York, NY, USA, 2020, p. 3026–3032. [Online]. Available: https://doi.org/10.1145/3366423.3380073

- [44] J. W. Pennebaker, M. E. Francis, and R. J. Booth, “Linguistic inquiry and word count: Liwc 2001,” Mahway: Lawrence Erlbaum Associates, vol. 71, no. 2001, p. 2001, 2001.

- [45] E. Rossi, B. Chamberlain, F. Frasca, D. Eynard, F. Monti, and M. Bronstein, “Temporal graph networks for deep learning on dynamic graphs,” arXiv preprint arXiv:2006.10637, 2020.

- [46] A. Paszke, S. Gross, F. Massa, A. Lerer, J. Bradbury, G. Chanan, T. Killeen, Z. Lin, N. Gimelshein, L. Antiga et al., “Pytorch: An imperative style, high-performance deep learning library,” in Proceedings of the 33th International Conference on Neural Information Processing Systems, 2019, pp. 8026–8037.

- [47] Z. Yin and Y. Shen, “On the dimensionality of word embedding,” in Proceedings of the 32nd International Conference on Neural Information Processing Systems, 2018, pp. 887–898.

- [48] G. E. Hinton, N. Srivastava, A. Krizhevsky, I. Sutskever, and R. R. Salakhutdinov, “Improving neural networks by preventing co-adaptation of feature detectors,” arXiv preprint arXiv:1207.0580, 2012.

- [49] L. v. d. Maaten and G. Hinton, “Visualizing data using t-sne,” Journal of Machine Learning Research, vol. 9, pp. 2579–2605, 2008.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/6e3419ee-3bab-4ca7-a81a-692432dfc9c0/x12.png) |

Tongya Zheng is currently pursuing the Ph.D. degree with the College of Computer Science, Zhejiang University. He received his B.Eng. Degree from Nanjing University of Science and Technology. His research interests include graph neural networks, temporal graphs, and explanation for artificial intelligence. He has authored and co-authored many scientific articles at top venues including IEEE TNNLS and AAAI. He has served with international conferences including AAAI and ECML-PKDD, and international journals including Information Sciences. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/6e3419ee-3bab-4ca7-a81a-692432dfc9c0/x13.png) |

Xinchao Wang is currently an assistant professor in the Department of Electrical and Computer Engineering, National University of Singapore (NUS). Before joining NUS, he was a tenure-track assistant professor of Computer Science at Stevens Institute of Technology, and a SwissNSF postdoctoral fellow at University of Illinois Urbana-Champaign with Prof. Thomas S. Huang. He received a PhD from Ecole Polytechnique Federale de Lausanne, and a first-class honorable degree from Hong Kong Polytechnic University. His research interests include artificial intelligence, computer vision, machine learning, medical image analysis, and multimedia. His articles have been published in major venues including CVPR, ICCV, ECCV, NeurIPS, ICLR, AAAI, IJCAI, ACL, MICCAI, TPAMI, IJCV, TIP, TKDE, and TMI. He has been or is currently serving as an associate editor of IEEE Transactions on Circuits and Systems for Video Technology, Journal of Visual Communication and Image Representation, and Patter Recognition. He regularly serves as an area chair of CVPR, ICCV, ECCV, NeurIPS, ICPR, ICIP, ICME, and as a senior program committee member of AAAI and IJCAI. His team has been winner of several international challenging, including NTIRE Super-resolution Challenge’18 and AI City Challenge’17. He received the best editor award of JVCI for 2020. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/6e3419ee-3bab-4ca7-a81a-692432dfc9c0/x14.png) |

Zunlei Feng is an associate professor fellow in College of Software Technology, Zhejiang University. He received his Ph.D degree in Computer Science and Technology from College of Computer Science, Zhejiang University, and B. Eng. Degree from Soochow University. His research interests mainly include computer vision, image information processing, representation learning, medical image analysis. He has authored and co-authored many scientific articles at top venues including IJCV, NeurIPS, AAAI, TVCG, ACM TOMM, and ECCV. He has served with international conferences including AAAI and PKDD, and international journals including IEEE Transactions on Circuits and Systems for Video Technology, Information Sciences, Neurocomputing, Journal of Visual Communication and Image Representation and Neural Processing Letters. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/6e3419ee-3bab-4ca7-a81a-692432dfc9c0/x15.png) |

Jie Song is an assistant research fellow in College of Software Technology, Zhejiang University. He received his Ph.D degree in Computer Science and Technology from College of Computer Science, Zhejiang University, and B. Eng. Degree from Sichuan University. His research interests mainly include transfer learning, few-shot learning, model compression and interpretable machine learning. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/6e3419ee-3bab-4ca7-a81a-692432dfc9c0/x16.png) |

Yunzhi Hao is currently pursuing the Ph.D. degree with the College of Computer Science, Zhejiang University. Her research interests include graph inference and network embedding. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/6e3419ee-3bab-4ca7-a81a-692432dfc9c0/x17.png) |

Mingli Song received the Ph.D. degree in computer science from Zhejiang University, China, in 2006. He is currently a Professor with the Microsoft Visual Perception Lab- oratory, Zhejiang University. His research interests include face modeling and facial expression analysis. He received the Microsoft Research Fellowship in 2004. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/6e3419ee-3bab-4ca7-a81a-692432dfc9c0/x18.png) |

Xingen Wang received the graduate and PhD degrees in computer science from Zhejiang University of China, in 2005 and 2013, respectively. He is currently a research assistant in the College of Computer Science, Zhejiang University. His research interests include distributed computing and software performance. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/6e3419ee-3bab-4ca7-a81a-692432dfc9c0/x19.png) |

Xinyu Wang received the graduate and PhD degrees in computer science from Zhejiang University of China, in 2002 and 2007, respectively. He was a research assistant at the Zhejiang University, from 2002 to 2007. He is currently a professor in the College of Computer Science, Zhejiang University. His research interests include streaming data analysis, formal methods, very large information systems, and software engineering. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/6e3419ee-3bab-4ca7-a81a-692432dfc9c0/x20.png) |

Chun Chen is currently a Professor with the College of Computer Science, Zhejiang University. His research interests include computer vision, computer graphics, and embedded technology. |

.1 Proofs

Claim 3.3.

The size of temporal nodes is , where represents the edges in the original temporal graph.

Proof.

Let denote the degree of node , and we have on graphs. . ∎

Claim 3.4.

The size of directional links defined in is , where is the original node set and denotes the degree of node .

Proof.

Also, let denote the degree of node . We take a single node into account at first. Let be the set of temporal nodes and be the set of directional links . Since if there are no interactions of at , then . Further, as the indegree of each temporal node , we have . Summing over all nodes, we have . ∎

.2 The attention kernel and its decomposition

The attention mechanism is extended as a graph attention kernel by GAT [28] to impose adaptive neighborhood weights for GNNs. Given a node and its neighbor set , the attention kernel of GAT [28] can be written as

| (10) |

where encodes the neighbor importances. In temporal graphs, temporal neighbors also impose different importances for dynamic representation. Let be the consecutive active time points of node , is a neighbor emerging at , be the embeddings of AGG, and be the embeddings of PROP. We also need to record the cumulative attention scores to normalize the node embeddings. The decomposed Aggregation step is written as

| (11) |

where shows the importance of temporal neighbors. The decomposed Propagation step is written as

| (12) |

where records the cumulative attention scores of last timestamp to normalize the node embeddings.

.3 Node grouping algorithm

The node grouping [42] enables the automatic differential of computing multiple ragged lists parallelly, boosting the training speed of the PROP operation. Firstly, we briefly review the workflow of the AP Block as shown in Algo. 3. As shown in Algo. 1, TAP-GNN performs iterative AGG and PROP operations to obtain the high-order node embeddings for dynamic representation. AGG works with a temporal edge set , which connects the temporal nodes only at the same time point. The cardinality of is the same as the original edge set , which motivates us to send the temporal messages with directly. The lined list organizes the temporal nodes referring to the same origin node into a sorted list. The PROP operation iterates over to obtain the cumulative temporal node embeddings according to Section 3.4. Secondly, we introduce the usage of the node grouping in the PROP operation. As shown in Algo 3, the node grouping algorithm of DGL [42] works from line 6 to line 13, which parallelizes the outer loop of the PROP operation with the optimized CUDA kernels. The inner loop from line 7 to line 12 performs a cumulative addition along with the sorted temporal nodes.

Input: Input node embeddings ; The temporal edge set and the linked list ;

Output: Output node embeddings .

.4 Complexity of TAP-GNN and TGAT

We simplify the attention mechanism as a constant operation to avoid the relation of graph kernels. The dimensions for all input features and hidden features are assumed for simplicity. Let be the size of nodes, be the size of temporal edges, and be the number of TAP-GNN layers. The time complexity of the TAP-GNN is , where is the number of MLP layers. Furthermore, the online time complexity of TAP-GNN is , where is the edge number of the stream graph, and is the node number of the stream graph. The theoretical time complexity of TGAT is reported as , where is the number of sampled neighbors, is the number of TGAT layers, and is the transformation of node embeddings.

Compared with TAP-GNN, TGAT will suffer from the exponential expansion of sampled neighbors when increasing the number of layers. Particularly, TAP-GNN shows much faster inference latency in the theoretical analysis.

We firstly speedup the TGAT sampler 50 times faster with the Numba666http://numba.pydata.org/ library, where the original implementation is very slow. In Table V, we compare TAP-GNN against TGAT with the attention kernel in Table V, showing that TAP-GNN could achieve 3-7 times speedup with two layers and up to 68 times speedup when extended to three layers.

.5 Discussions of The Direct Aggregation Method

Although TAP-GNN could reduce the computation complexity, the effect of the additional propagation step has not been well studied in our experiments. We present the training progress and the AUC trend on the test set in Fig. 10. The direct aggregation method in Fig. 10 refers to a temporal GCN as defined in Eq. 3, which costs ten times of GPU memory as our TAP-GNN. The TAP-GNN shown in Fig. 10 is implemented with GCN kernel for fairness. Fig. 10(a) illustrates that TAP-GNN achieves a slightly larger training loss than Direct Aggregation, indicating that the propagation step may hinder the optimization of TAP-GNN. Nonetheless, Fig. 10(b) illustrates that TAP-GNN achieves the close AUC scores more stably and faster than Direct Aggregation, indicating that the propagation step could promote the generalization of TAP-GNN on the test set.

.6 Discussions of Discrete-Time Models

Discrete-time models [32, 35, 7, 19] rely on a sequence of graph snapshots to capture graph dynamics in temporal graphs. The similarities of TAP-GNN and discrete-time models probably refer to the constructed message-passing graph, namely Aggregation-Propagation Block (AP Block). However, similar message-passing graphs lead to different computation mechanisms and prediction performance.