Tetrationally Compact Entanglement Purification

Abstract

This paper shows that entanglement can be purified using very little storage, assuming the only source of noise is in the quantum channel being used to share the entanglement. Entangled pairs with a target infidelity of can be created in time using storage space, where is the iterated logarithm. This is achieved by using multiple stages of error detection, with boosting within each stage. For example, the paper shows that 11 qubits of noiseless storage is enough to turn entanglement with an infidelity of into entanglement with an infidelity of .

Data availability: All code written for this paper is included in its attached ancillary files.

1 Introduction

If Alice and Bob are linked by a noisy quantum channel, they can use error correction to reduce the noise of the channel. For example, Alice can encode a qubit she wants to send into the perfect code [Laf+96], before transmitting over the channel to Bob. This increases the amount of data transmitted, but allows the message to be recovered if one error happens. However, directly encoding the data to be sent is suboptimal. A more effective technique is to use entanglement purification [Ben+96].

In an entanglement purification protocol, Alice never exposes important data to the noisy quantum channel. The channel is only used to share EPR pairs (copies of the state ). Once an EPR pair has been successfully shared, the message is moved from Alice to Bob using quantum teleportation [Ben+93]. The benefit of doing this is that, if a transmission error happens when sharing the EPR pair, nothing important has been damaged. EPR pairs are fungible; broken ones can be discarded and replaced with new ones. This allows error correction to be done with error detection, which is more efficient and more flexible. For example, discarding and retransmitting allows a distance three code to correct two errors instead of one.

The time complexity of entanglement purification is simple. Starting from a channel with infidelity, creating at least one EPR pair with a target infidelity of requires uses of the noisy channel. This time complexity is achievable with a variety of techniques, like encoding the entanglement into a quantum error correcting code with linear distance [PK21]. This time complexity is optimal because, if fewer than noisy pairs are shared, the chance of every single physical pair being corrupted would be larger than .

Surprisingly, the space complexity of entanglement purification is not so simple. My own initial intuition, which I think is shared by some other researchers based on a few conversions I’ve had, is that the space complexity would also be . If storage was noisy then this would be the case, since otherwise the chance of every single storage qubit failing simultaneously would be greater than the desired error rate. However, if you assume the only source of noise is the noisy channel (meaning storage is noiseless, local operations are noiseless, and classical communication is noiseless), then the space complexity drops dramatically even if you still require the time complexity to be .

This paper explains a construction that improves entanglement fidelity tetrationally versus storage space, achieving a space complexity of . It relies heavily on the assumption of perfect storage, which is unrealistic, but the consequences are surprising enough to merit a short paper. Also, some of the underlying ideas are applicable to practical scenarios.

2 Construction

2.1 Noise Model

I’ll be using a digitized noise model of shared EPR pairs. Errors are modelled by assuming that, when an EPR pair is shared, an unwanted Pauli may have been applied to one of its qubits. There are four Paulis that may be applied: the identity Pauli resulting in the correct shared state , the bit flip resulting in the state , the phase flip resulting in the state , and the combined bit phase flip resulting in the state .

I will represent noisy EPR pairs using a four dimensional vector of odds. The vector

describes a noisy EPR pair where the odds of the applied Pauli being , , , or are respectively. Note that this is a degenerate representation: there are multiple ways to represent the same noisy state. The exact density matrix of a noisy state described by an odds vector is:

| (1) |

Note that this noise model can’t represent coherent errors, like an unwanted gate being applied to one of the qubits of an EPR pair. Some readers may worry that this means my analysis won’t apply to coherent noise. This isn’t actually a problem, because the purification process will digitize the noise. But, for the truly paranoid, coherent noise can be forcibly transformed into incoherent noise by Pauli twirling. If Alice and Bob have an EPR pair that has undergone coherent noise, they can pick a Pauli uniformly at random then apply to both qubits and forget . The density matrix describing the state after applying the random , not conditioned on which was used, is expressible in the digitized noise model I’m using. Discarding coherence information in this way is suboptimal, but sufficient for correctness.

Often, the exact odds will be inconveniently complicated and it will be beneficial to simplify them even if that makes the state worse. For that purpose, I define to mean "a state with odds vector can be turned into a state with odds vector via the addition of artificial noise". A sufficient condition for this relationship to hold is for the identity term to not grow and for the error terms to not shrink:

| (2) |

2.2 Distilling with Rep Codes

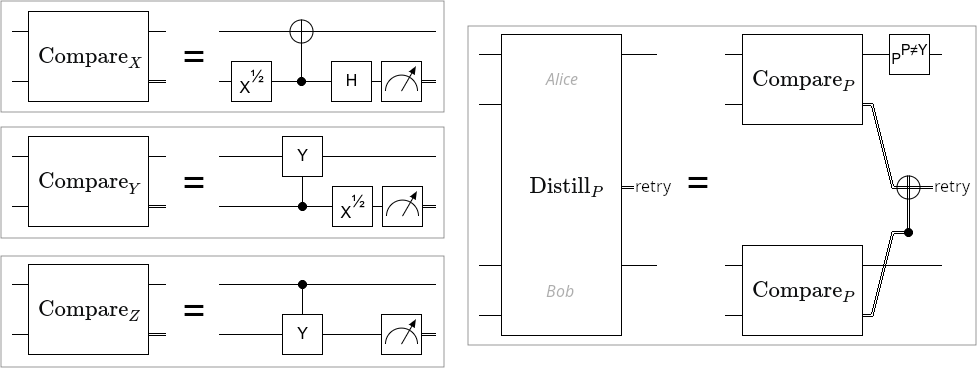

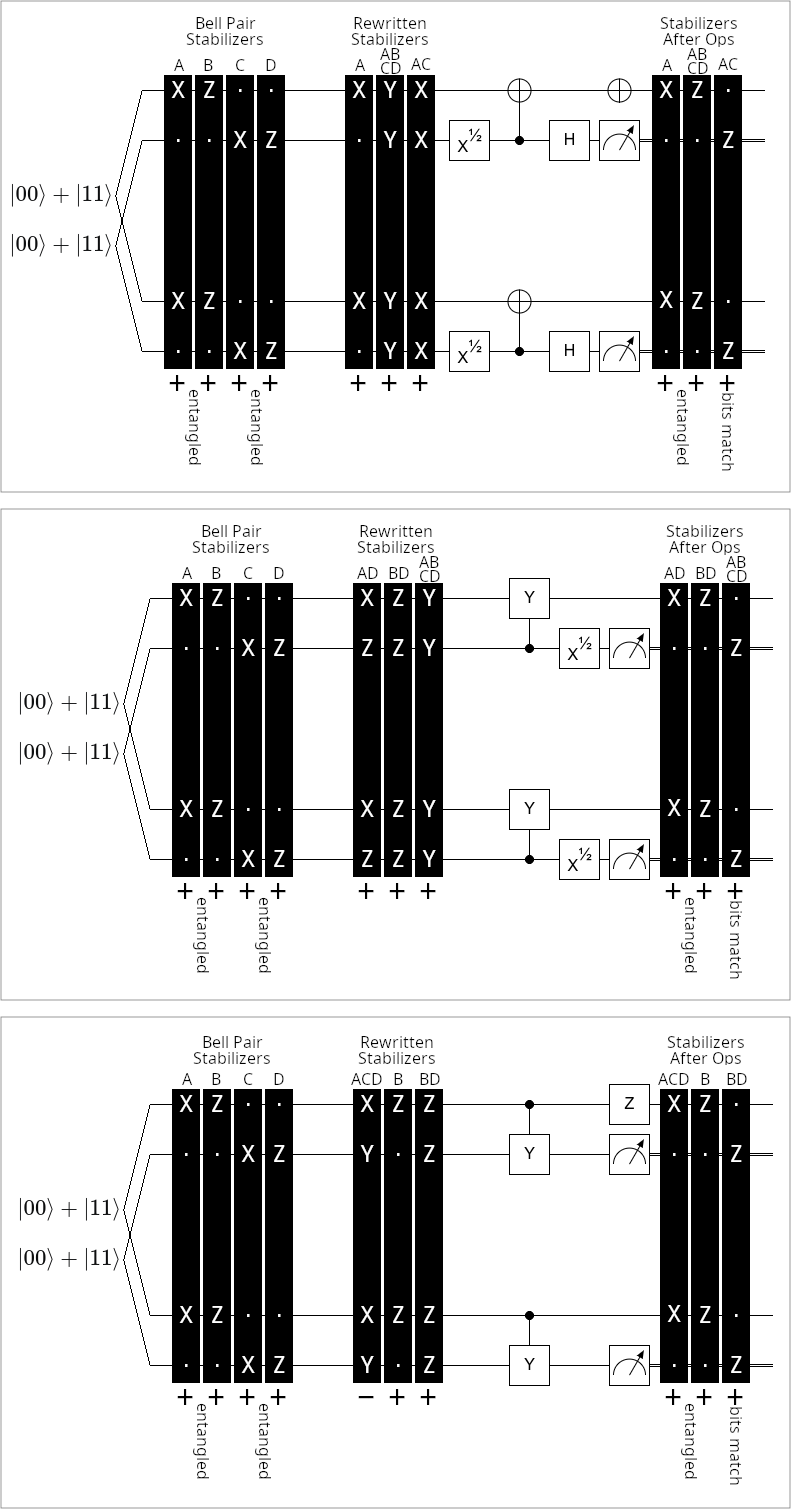

The basic building block used by this paper is distillation with distance 2 rep codes. There are three relevant rep codes: X basis, Y basis, and Z basis. The X basis rep code has the stabilizer , the logical X observable , and the logical Z observable . The Y basis rep code has the stabilizer , the logical X observable , and the logical Z observable . The Z basis rep code has the stabilizer , the logical X observable , and the logical Z observable . Beware that the choice of observables is slightly non-standard, and that their exact definition matters as it determines how errors propagate through the distillation process. See Figure 1 for circuits implementing these details correctly.

A rep code distillation is performed by Alice and Bob each measuring the stabilizer of the rep code, and comparing their results by using classical communication. If the results differ from the result predicted by assuming they have shared copies of , an error has been detected. Equivalently, an error is detected if the stabilizer of the rep code anticommutes with the combination of the noisy Paulis applied to the input EPR pairs. For example, an X basis rep code will raise a detection event if given a correct EPR pair and a phase flipped EPR pair. Conversely, it won’t detect a problem if given a bit flipped EPR pair or if given two phase flipped EPR pairs.

Stepping back, a rep code distillation takes two noisy EPR pairs as input. It has some chance of detecting an error and discarding. Otherwise it succeeds and produces one output. See Figure 2 for diagrams showing how each rep code catches errors.

To analyze rep code distillation, it’s necessary to be able to compute the chance of discarding and the noise model describing the state output upon success. I’ll use to represent the probability of a -basis rep code distillation detecting an error. I used sympy to enumerate the cases detected by each rep code and symbolically accumulate the probability of a failure. The probabilities of a distillation failure for each basis are:

| (3) |

| (4) |

| (5) |

I’ll use to represent the state output by distilling a state against a state with a basis- rep code, given that no error was detected. I used sympy to enumerate the cases not detected by each rep code, and symbolically accumulate the weight of each output case. When distillation succeeds, the output states for each basis are:

| (6) |

| (7) |

| (8) |

Note that is left-associative. Also note that all these operators behave as you would expect with respect to decaying: if , and , and all states have less than 50% infidelity, then and . This allows bounds on actual distilled states to be proved via bounds on decayed distilled states.

2.3 Staging

Purifying with one enormous error detecting code doesn’t work very well. The issue is that, with large codes, the chances of seeing no detection events is extremely small. This can result in over-discarding, where the purification process becomes inefficient because nothing is ever good enough. It’s more efficient to purify with a series of smaller stages, as shown in Figure 3, where each stage applies a small code and only successful outputs are collected to be used as inputs for the next stage.

For example, consider a process where input EPR pairs are distilled by an X basis rep code, and then survivor states are distilled by a Z basis rep code to produce final states . I describe this distillation chain using the notation . The Shor code [Sho95] is also defined by concatenating a distance two X rep code with a distance two Z rep code, so you may expect a single stage distillation with the [[4,1,2]] code to behave identically. The distillation chain does produce the same output as , but it succeeds at a slightly slower rate. The issue is that when the distillation detects a Z error, it costs 4 input pairs because the code takes all 4 data qubits in one group. The two stage distillation detects Z errors during the first stage, so a Z error will only costs 2 input pairs.

A simple staging construction that purifies entanglement reasonably well is to alternate between stages that distill using the X basis rep code and stages that distill using the Z basis rep code:

The error suppression of an X stage followed by a Z stage is quadratic, because any single error will be caught by one stage or the other. Furthermore, each stage only needs one qubit of storage because, as soon as two qubits are ready within one stage, they are distilled into one qubit in the next stage. additional stages cost additional space to square the infidelity. Therefore, given a target , alternating between X rep code and Z rep code stages can reach that infidelity using storage.

2.4 Boosting

In the previous subsection, each stage distilled copies of the output from the previous stage. Distillation was always combining two of the best-so-far state. “Boosting” is an alternative technique, where a good state is improved by repeatedly merging mediocre states into it. (Note: in the literature, “boosting” is more commonly called “pumping” [DB03]). In particular, staging as described in the previous subsection results in states being combined like this:

| (9) |

That kind of merging requires an amount of storage that increases with the amount of nesting. You can instead combine states like this:

| (10) |

Here the result can be built up in a streaming fashion, with identical “booster states” arriving one by one to be folded into a single gradually improving “boosted state”.

The upside of boosting is that it squeezes more benefit out of a state that you are able to repeatedly make. The downside of boosting is that it can only be repeated a finite number of times. The boosted state doesn’t get arbitrarily good under the limit of infinite boosting. It approaches a floor set by the booster state’s error rates. A second downside is that, because each booster state has a fixed chance of failing, the chance of detecting an error and having to restart the boosting process limits to 100% as more boosts are performed. The infidelity floor and the growing restart chance force you to eventually stop boosting and start a new stage.

2.5 Bias Boosting Stage

Suppose you have access to a booster state where the , , and errors of are all equal. You are boosting a state where the and terms are equal. The following relationships hold:

| (11) |

| (12) |

In other words: boosting with using a basis rep code additively increases the chance of a error and the chance of discarding, but multiplicatively suppresses and errors.

If this boosting process is repeated times, the output is:

| (13) |

If the state being boosted is itself, then the result after all these boosts has exponentially smaller and error rates (as long as the infidelity is small enough to begin with, e.g. less than 0.1%):

| (14) |

In this sequence of boosts, the first one is the most likely to discard. The chance of any of the boosts discarding can be upper bounded by multiplying this probability by the number of boosts:

| (15) |

2.6 Bias Busting Stage

The bias boosting stage creates an enormous disparity between the error rate and the other error rates. The bias busting stage fixes this; bringing the error rate down to match the others without increasing them by much.

This stage boosts using the biased state output from the previous stage. This state has equal and terms that are much smaller than its term. The boosts alternate between distilling with the X basis rep code and the Z basis rep code:

| (16) |

Assuming the initial infidelity is small enough (e.g. less than 0.1%), the chance of this pair of boosts failing is at most:

| (17) |

The simplification to is done by knowing that, immediately after a bias boosting stage, will be the largest term and the and terms will be orders of magnitude smaller.

Repeating this pair of boosts times, starting from , reduces all of the error terms below :

| (18) |

Since bias busting is always applied immediately after bias boosting, it will be the case that and for some . So the number of repetitions is at most . The chance of discarding across all the bias busting boosts is therefore at most

| (19) |

Ensuring that is less than 50% requires starting from an below . You can start boosting from higher error rates , without causing a disastrous discard rate, if you do fewer boosts in the bias boosting and bias busting stages.

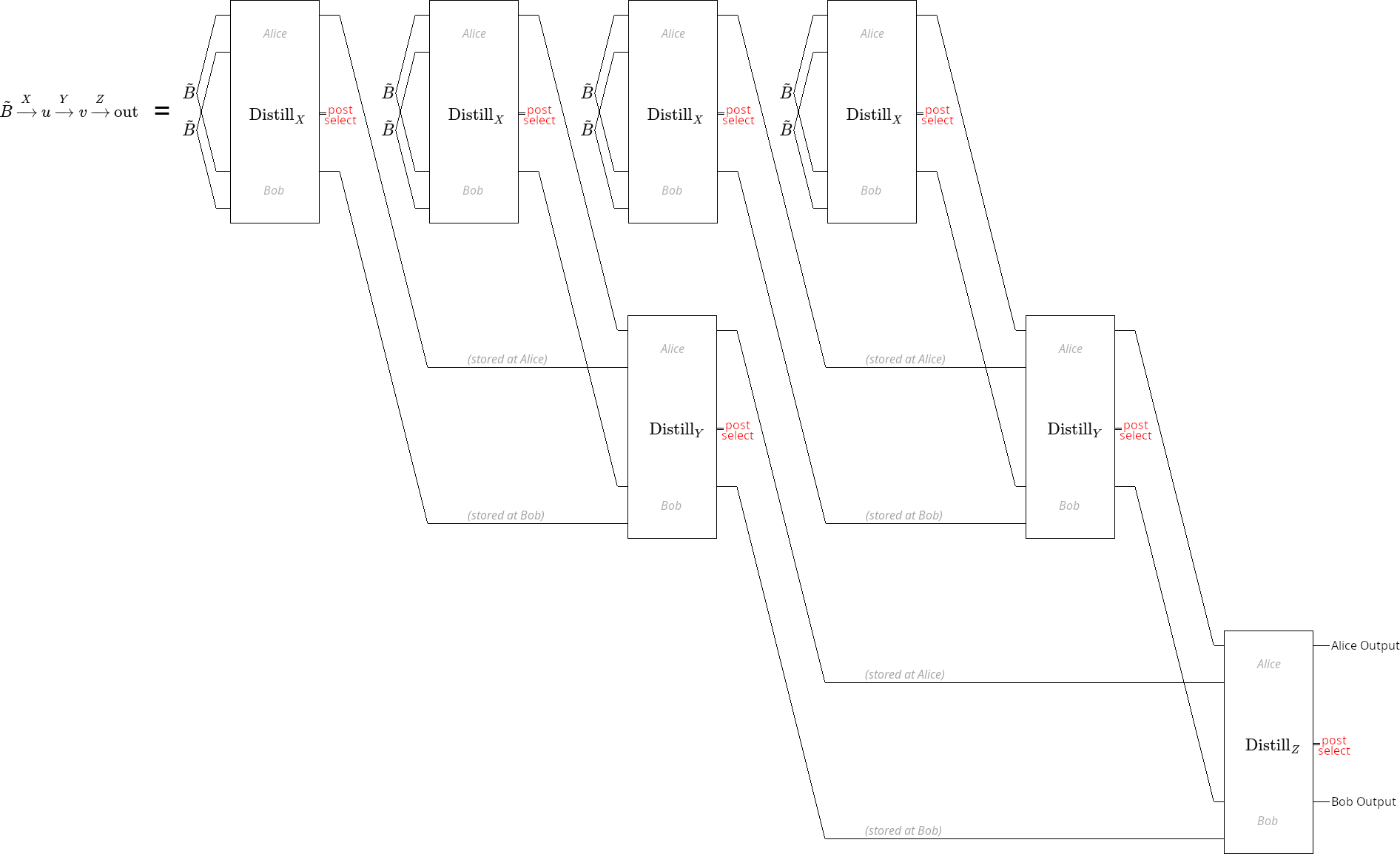

2.7 Full Construction

An example of a full construction is shown in Figure 4. The first step is to bootstrap from the initial infidelity to an infidelity low enough for the bias boosting and bias busting stages to start working. Bootstrapping is done with unboosted rep code stages. The bases of the rep codes are chosen by brute forcing a sequence that gets all error rates below 0.1% in the fewest steps.

Once the error rate is bootstrapped, the construction begins alternating between bias boosting stages and bias busting stages. The number of repetitions is customized during the first alternation, instead of using the loose bounds specified in previous sections, to get a faster takeoff. Later alternations turn an infidelity of at most into an infidelity of at most . This is not quite an exponential decrease, due to the cube root, but doing two alternations fixes this and guarantees a reduction to an infidelity below .

Because each stage uses 1 qubit of storage, and a constant number of stages exponentiates the error rate, each additional exponentiation has space cost. It takes exponentiations to reach an infidelity of , and therefore the storage needed to reach is .

The last detail to discuss is the time complexity. Because the discard rate at each stage is proportional to the infidelity at that stage, and the infidelity is decreasing so much from stage to stage, the sum of the discard rates across all stages converges to a constant as the number of stages limits to infinity. This avoids one way that time costs can become non-linear versus number of stages. That said, the input-to-output conversion efficiency is not quite asymptotically optimal. Each stage is individually achieving a complexity of , with a constant factor of input-to-output waste. As the number of stages increases, these waste factors compound. As a result, the time complexity is instead of the optimal .

3 Conclusion

In this paper, I showed that surprisingly little storage is needed to purify entanglement, assuming storage and local operations and classical communication are noiseless. An infidelity of can be reached using storage and time. Although I focused on an asymptotic limit more interesting to theory than practice, I’m hopeful that the presented ideas will inspire practical constructions.

References

- [Ben+93] Charles H. Bennett, Gilles Brassard, Claude Crépeau, Richard Jozsa, Asher Peres and William K. Wootters “Teleporting an unknown quantum state via dual classical and Einstein-Podolsky-Rosen channels” In Physical Review Letters 70.13 American Physical Society (APS), 1993, pp. 1895–1899 DOI: 10.1103/physrevlett.70.1895

- [Ben+96] Charles H. Bennett, Gilles Brassard, Sandu Popescu, Benjamin Schumacher, John A. Smolin and William K. Wootters “Purification of Noisy Entanglement and Faithful Teleportation via Noisy Channels” In Physical Review Letters 76.5 American Physical Society (APS), 1996, pp. 722–725 DOI: 10.1103/physrevlett.76.722

- [DB03] W. Dür and H.-J. Briegel “Entanglement Purification for Quantum Computation” In Physical Review Letters 90.6 American Physical Society (APS), 2003 DOI: 10.1103/physrevlett.90.067901

- [Laf+96] Raymond Laflamme, Cesar Miquel, Juan Pablo Paz and Wojciech Hubert Zurek “Perfect Quantum Error Correction Code” arXiv, 1996 DOI: 10.48550/ARXIV.QUANT-PH/9602019

- [PK21] Pavel Panteleev and Gleb Kalachev “Asymptotically Good Quantum and Locally Testable Classical LDPC Codes” arXiv, 2021 DOI: 10.48550/ARXIV.2111.03654

- [Sho95] Peter W. Shor “Scheme for reducing decoherence in quantum computer memory” In Physical Review A 52.4 American Physical Society (APS), 1995, pp. R2493–R2496 DOI: 10.1103/physreva.52.r2493