TeX-Graph: Coupled tensor-matrix knowledge-graph embedding for COVID-19 drug repurposing

Abstract

Knowledge graphs (KGs) are powerful tools that codify relational behaviour between entities in knowledge bases. KGs can simultaneously model many different types of subject-predicate-object and higher-order relations. As such, they offer a flexible modeling framework that has been applied to many areas, including biology and pharmacology – most recently, in the fight against COVID-19. The flexibility of KG modeling is both a blessing and a challenge from the learning point of view. In this paper we propose a novel coupled tensor-matrix framework for KG embedding. We leverage tensor factorization tools to learn concise representations of entities and relations in knowledge bases and employ these representations to perform drug repurposing for COVID-19. Our proposed framework is principled, elegant, and achieves improvement over the best baseline in the COVID-19 drug repurposing task using a recently developed biological KG.

Keywords— knowledge graphs, tensor, drug repurposing, COVID-19, embedding, network

1 Introduction

How does COVID-19 relate to better-studied viral infections and biological mechanisms? Can we use existing drugs to effectively treat COVID-19 symptoms? Since the COVID-19 pandemic has disrupted our lives, there is a pressing need to answer such questions, and COVID-19 research has swiftly risen to the top of the scientific agenda, worldwide. While these questions will ultimately be answered by medical experts, data-driven methods can help to cut-down the immense search space, thus helping to accelerate progress and optimize the allocation of precious research resources. In this paper, our goal is to derive such a method by using network science and multi-view analysis tools.

Networks are powerful abstractions that model interactions between the entities of a system [3]. Networks and network science offer concise and informative modeling, analysis and processing for various biological, engineering and social systems, to name a few [11, 22]. Networks are usually represented by graphs, that are defined by a set of nodes and a set of edges connecting pairs of nodes. The entities of a system are usually represented by the nodes of the graph, and the interactions by the edges.

A knowledge graph (KG) models the relational behavior of various entities in knowledge bases. A KG is heterogeneous in the sense that it models interactions between entities of different type, e.g., drugs and diseases, and is also a multidimensional network (edge-labeled multi-graph) [4], since the edges (interactions) that connect the nodes (entities) can be multiple and also of different type. Knowledge graphs (KGs) have recently attracted significant attention due to their applicability to various science and engineering tasks. For instance, popular knowledge graphs are YAGO [32], DBpedia [1], NELL [8], Freebase [5], and the Google KG [29]. A recent trend codifies knowledge bases of biomedical components and processes, such as genes, diseases and drugs into KGs e.g., [14, 15, 17]. KGs can model any relations of the form subject-predicate-object, as well as higher-order generalizations. However, this broad modeling freedom can sometimes be a challenge, as the entities can be very diverse and the dimensions of the KG can turn prohibitively large.

A common way to exploit KGs for data mining and machine learning applications is via knowledge graph embedding. KG embedding aims to extract meaningful low dimensional representations of the entities and the relations present in a KG. A plethora of methods have been proposed to perform KG embedding [20, 12, 25, 18, 26, 6, 21, 34, 23, 33, 30, 2]. The most popular among them adopt a single-layer perceptron or neural network approach e.g., [6, 21, 34, 33, 30]. Various tensor factorization models have also been proposed, e.g., [20, 12, 25, 23, 2]. Matrix factorization is also a tool that has been utilized for KG embedding, e.g., [18, 26].

In this paper we propose TeX-Graph, a novel coupled tensor-matrix framework to perform KG embedding. The proposed KG coupled tensor-matrix modeling extracts meaningful information from a set of diverse entities with multi-modal interactions in a principled and concise manner. TeX-Graph avoids modeling inefficiencies in previously proposed tensor models, and relative to neural network approaches it offers a principled and effective way to produce unique KG representations. The proposed framework is used for drug repurposing, a pivotal tool in the fight against COVID-19 and other diseases. Learning concise representations for drug compounds, diseases, and the relations between them, our approach allows for link prediction between drug compounds and COVID-19 or other diseases. The impact is critical. First, compound repurposing enables drug design that drastically reduces the design exploration cycle and the failure rate. Second, it markedly reduces drug development cost, as developing new therapeutic drugs is tremendously expensive.

The contributions of our work can be summarized as follows:

-

•

Novel KG modeling: We propose a principled coupled tensor-matrix model tailored to KG needs for efficient and parsimonious representations.

-

•

Analysis: The TeX-Graph embeddings are unique and permutation invariant, a property which is important for consistency and necessary for interpretability.

-

•

Algorithm: We design a scalable algorithmic framework with lightweight updates, that can effectively handle very large KGs.

-

•

Application: The proposed framework is developed to perform drug repurposing, a pivotal task in the fight against COVID-19.

-

•

Performance: TeX-Graph achieves performance improvement compared to the best available baseline for COVID-19 drug repurposing using a recently developed COVID-19 KG.

Reproducibility: The DRKG dataset used in the experiments is publicly available; we will also release our code upon publication of the paper.

Notation: Our notation is summarized in Table 1.

| scalars | ||

| , | ordered tuple | |

| vectors | ||

| matrices | ||

| tensors | ||

| sets | ||

| -th column of matrix | ||

| -th row of matrix | ||

| -th frontal slab of tensor | ||

| transpose of matrix | ||

| Frobenius norm of matrix | ||

| Khatri-Rao (columnwise Kronecker) product | ||

| outer product | ||

| Hadamard product | ||

| diag | diagonal matrix of vector | |

| largest integer that is less than or equal to | ||

| nnz | number of non-zeros |

2 Preliminaries

In order to facilitate the upcoming discussion we now discuss some tensor algebra preliminaries. For more background on tensors the reader is referred to [28, 19].

A third-order tensor is a three-way array indexed by with elements . It has three mode types – columns ( is used to denote all relevant index values for that mode, i.e., from beginning to end), rows , and fibers – see Fig. 1. A third order tensor can also be described by a set of matrices (slabs), i.e., horizontal , vertical and frontal slabs – see Fig. 2. A rank-one third order tensor is defined as the outer product of three vectors. Recall that a rank one matrix is the outer product of two vectors. Any third order tensor can be decomposed into a sum of three-way outer products (rank one tensors) as:

| (2.1) |

where are matrices collecting the respective mode factors, i.e., hold in their columns the vectors involved in the three-way outer products. The above expression is known as the polyadic decomposition (PD) of a third-order tensor. If is the minimum number of outer products required to synthesize , then is the rank of tensor and the decomposition is known as the canonical polyadic decomposition (CPD) or parallel factor analysis (PARAFAC) [13]. For the rest of the paper we use the notation to denote the CPD of the tensor.

A striking property of the CPD is that it is essentially unique under mild conditions, even if the rank is higher than the dimensions– see [9] for a generic result.

A tensor can be represented in a matrix form by employing the matricization operation. There are three common ways to matricize (or unfold) a third-order tensor, by stacking columns, rows, or fibers of the tensor to form a matrix. To be more precise let:

| (2.2) |

where are the frontal slabs of tensor and in the context of this paper they model adjacency matrices. Then the mode-, mode- and mode- unfoldings of are:

| (2.3) |

| (2.4) |

| (2.5) |

Another important tensor model is the coupled CPD. In coupled CPD we are interested in decomposing an array of tensors that share at least one common latent factor. In particular, consider a collection of tensors . The rank- coupled CPD of can be expressed as:

| (2.6) |

where is the common factor and are unshared factors. The coupled CPD is also unique under certain conditions, even if individual CPDs of are not unique. In this work we will use the following uniqueness theorem for coupled CPD:

Theorem 1

where is defined as:

| (2.7) | ||||

and is the second compound matrix of – see [10, p. 861-862]. In the context of coupled CPD, essential uniqueness corresponds to being unique and being identifiable up to column scaling and counter-scaling.

3 Problem Statement

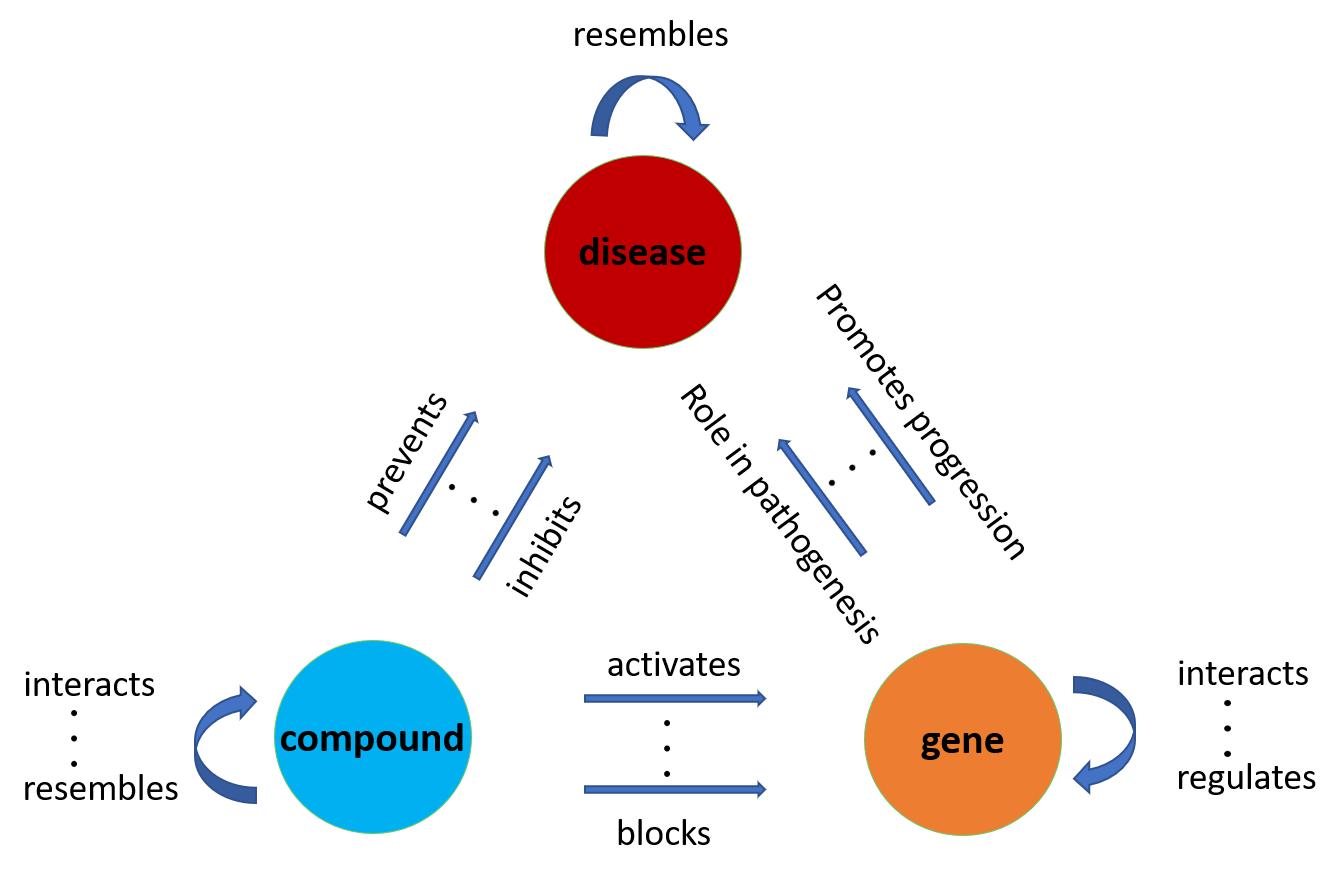

As mentioned in the introduction knowledge graphs (KGs) have attracted significant attention over the past decade due to their tremendous modeling capabilities. In particular, KGs model triplets of subject-predicate-object, denoted as (head, relation, tail) or (h, r, t). Subjects (heads) and objects (tails) are entities that are represented as graph nodes and predicates (relations) define the type of edge according to which the subject is connected to the object. A schematic representation of a KG, which models relations between genes, compounds and diseases is presented in Fig. 3.

In this paper, we focus our attention on a biological KG that models relational triplets between biological entities. For example, (compound 1, interacts with, compound 2), (compound 1, activates, gene 1), (gene 1, regulates, gene 2), (compound 1, prevents, disease 1), (gene 2, is linked with, disease 2) are common triplets in numerous recently developed knowledge bases [14, 15, 17]. Modeling these types of relations as a KG enables embedding entities and relations in a Euclidean space which can further facilitate any type of processing and analysis. For instance, obtaining a low dimensional representation of compounds, diseases and the ‘prevents’ relation allows measuring similarity, and thus predicting and testing hypotheses regarding (compound, prevents, disease) interactions. Drug repurposing can be performed by predicting candidate compounds for new and existing target diseases.

Note that the proposed framework to be introduced shortly is not limited to biological KGs – it can be applied to a wide variety of interesting KGs.

3.1 Prior Art

Several methods have been proposed to learn low dimensional representations of KGs. To properly describe them we need to define the score function and the loss function .

Let be an available triplet and and be the low dimensional embeddings we aim to learn. Note that entity and relation embeddings need not be of the same dimension. The score function determines the relation model between the head (subject) and the tail (object). In simple words, high values of the score function are desirable for existing triplets and low values of for non-existing ones.

In order to produce the entity and relational embeddings we define the following forward model for each triplet :

| (3.8a) | |||

| (3.8b) | |||

| (3.8c) |

where are one-hot input vectors corresponding to the head, tail and relation index of the triplet respectively, with being the total number of entities (nodes) and types of relations; and are element-wise functions and are matrices that contain the model parameters to be learned.

Popular choices for and are the identity function and hyperbolic tangent. If or are identity functions then the rows of or are the learned embeddings for entities and relations respectively. For TransE, DistMult and RotatE , whereas for TransR and RESCAL . In the TransR model is a projection matrix associated with relation r and in RESCAL .

| Model | score function |

|---|---|

| TransE [6] | or |

| TransR [21] | |

| DistMult[34] | diag |

| RESCAL [23] | |

| RotatE [33] |

In order to learn the embeddings, state-of-the-art methods attempt to minimize the following risk:

| (3.9) |

where is the number of data points (triplets or non-triplets), if the triplet exists, else . Typical loss functions include the logistic loss, square loss, pairwise ranking loss, margin-based ranking loss and variants of them. In order to tackle the problem in (3.9) the most popular approach is stochastic gradient descent (SGD) or batch SGD [7].

3.2 The 3-way model

Modeling a KG using a third order tensor has been considered in [20, 12, 25, 23, 2]. In these works, the first and second mode of the tensor is the concatenation of all the available entities, regardless of their type, whereas the third mode represents the different type of relations – i.e., each frontal slab of the third order tensor represents a certain interaction type between the entities of the KG. The methods in [25, 2] work with incomplete tensors, whereas [20, 12, 23] model each frontal slab as an adjacency matrix. To be more precise, let be the third order tensor in [20, 12, 23]. Then if entity interacts with entity through relation and if there is no interaction between entities and via the relation.

An important observation is that although the first and second mode of tensor represent the same entities, each frontal slab is not necessarily symmetric. The reason is that subject-predicate-object does not necessarily imply object-predicate-subject. The works in [20, 12] compute the CPD of (or scaled versions of ) and produce two embeddings for each entity, one as a subject and another as an object. Although this is not always a drawback, it can result in an overparametrized model because in many applications entities usually act either as a subject or as an object, but not both. Furthermore, a single unified representation is usually preferable. In order to overcome this issue, RESCAL [23] proposed the following model for each frontal slab:

| (3.10) |

where is square matrix holding the relation embeddings associated with relation . Note that the RESCAL model is different than the traditional CPD (symmetric in mode and ) in the sense that is not constrained to be diagonal. Relaxing the diagonal constraints allows matrix to absorb in the relation embedding the direction in which different entities interact. On the downside, this type of relaxation forfeits the parsimony and uniqueness properties of the CPD. This is an important point, since uniqueness is a prerequisite for model interpretability when we are interested in exploratory / explanatory analysis (and not simply in making ‘black box’ predictions).

Another important drawback of the tree-way model is that it models unnecessary interactions. To see this, consider a KG that describes interactions between genes and diseases. Suppose that the observed interactions are of gene-gene and gene-disease type but there are no available data for disease-disease interactions. The tree-way model involves disease-disease interactions in the learning process (as non-edges), even though there are no data to justify it. As we will see in the upcoming section our proposed coupled tensor-matrix modeling addresses all the aforementioned challenges.

4 The TeX-Graph model

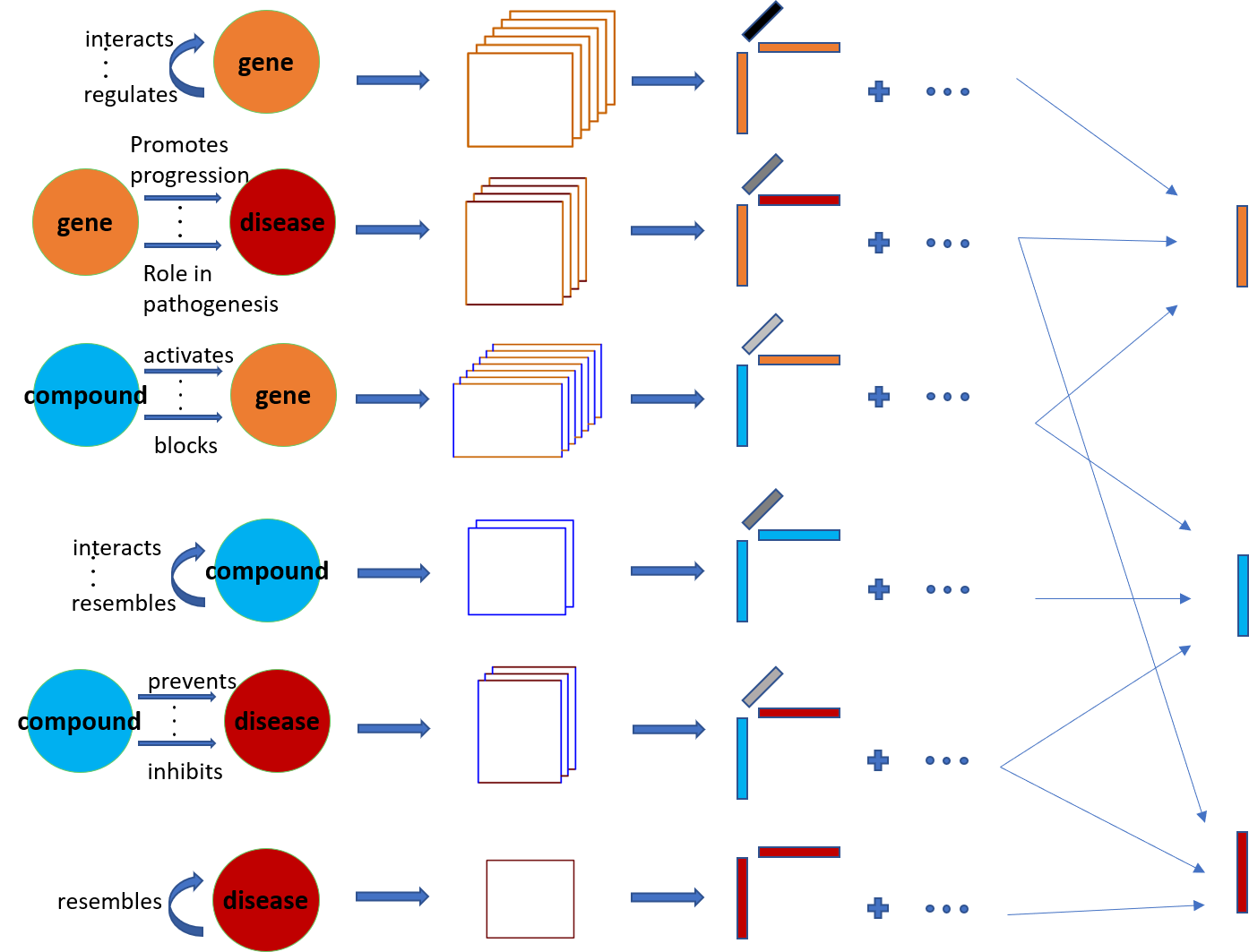

In this paper we leverage coupled tensor-matrix factorization to extract low dimensional representations of entities (head, tail) as well as representations for the interactions (relation). KGs can be naturally represented by a collection of tensors and matrices, as shown in Fig. 4. To see this, consider the previous example of gene, compound and disease entities. Gene-compound interactions, of a certain type, can be represented by an adjacency matrix. Since there are multiple types of interactions, multiple adjacency matrices are necessary to model every interaction, resulting in a tensor , where are the number of genes and compounds respectively, and is the number of different interactions between genes and compounds. The same idea can be applied to any (entity,interaction,entity) triplet.

To facilitate the discussion let be the tensor of interactions between entity of type- and type-, e.g., codifies genes and codifies compounds. Also let be the total number of different entity types, then . if the -th entity of type- interacts with the -th entity of type- via relation and if there is no type- interaction between the -th entity of type- and the -th entity of type-. The KG is represented by a collection of tensors as:

| (4.11) | |||

where and . Note that tensors and contain the same information since . Therefore we only consider tuples where .

Each of the tensors in the array admits a CPD and the overall model is cast as:

| (4.12) |

where . The -th row of represents the -dimensional embedding of the -th type- entity and the -th row of represents the -dimensional embedding of the -th type relation between type- and type- entities. Note that in the case where entities of type- interact with entities of type- via only one type of relation, is a matrix and can be factored as:

| (4.13) |

The model in (4.12) is a coupled CPD as the factors appear in multiple tensors. For instance, type-1-type-1 interactions (gene-gene), type-1-type-2 interactions (gene-compound), type-1-type-3 interactions (gene-disease), result in the factor appearing in tensors and .

The proposed TeX-Graph exhibits several favorable properties. First, the produced embeddings are unique, provided that they appear in more than one adjacency matrices.

Proposition 1

(Uniqueness of the embeddings) If the coupled tensor model in (4.12) is indeed low-rank, , there exist entity and relation embedding vectors in dimensional space that generate the given knowledge base. Then the dimensional TeX-Graph embeddings for type- entities and type- relations are unique and permutation invariant provided that and respectively, where are defined in (4.16).

The proof of Proposition 1 utilizes the uniqueness results of Theorem 1 and is relegated to the journal version due to space limitations. In the case where and type- entities appear in multiple tensors but type- entities only in one, the TeX-Graph model identifies and diag, since there is rotational freedom between and .

Another important property of the proposed TeX-Graph is that it avoids modeling of spurious ‘cross-product’ relations that can never be observed. The coupled tensor-matrix model allows for a concise KG representation that eliminates such spurious relations from the start, contrary to the three-way model. To see this, consider the previous example of gene-disease KG that observes relational triplets between gene-gene and gene-disease type but not for disease-disease type. The proposed TeX-Graph does not model disease-disease interactions, whereas the three-way model treats them as non-edges.

It is worth noticing that TeX-Graph makes the implicit assumption that are symmetric in the first and second mode. This is not always the case, since interactions between some entity types might be directed. To overcome this issue we assume that (h,r,t) implies (t,r,h) for (h,t) of the same type. Although this assumption ignores the direction in this type of interactions, it results in a more parsimonious model for the entity embeddings.

4.1 Algorithmic framework

In order to learn the -dimensional embeddings of all entities and relations we formulate the KG embedding problem as:

| (4.14) |

The problem in (4.14) is non-convex and NP-hard in general. In order to tackle it we propose to fix all variables but one and update the remaining variable. This procedure is repeated in an alternating fashion. The update for is a system of linear equations and takes the form:

| (4.15) |

where

| (4.16) |

The update for is the solution to the following system of linear equations:

| (4.17) |

The derivations for these updates as well as implementation details are presented in Appendix A.

The proposed TeX-Graph is presented in Algorithm 1. TeX-Graph is an iterative algorithm that tackles a non-convex problem and NP-hard in general. As a result different initial points might produce different results. Although we have observed that random initialization is sufficient most of the times we propose an alternative initialization procedure that yields consistent and reproducible results. To be more specific we form a symmetric version of tensor as:

| (4.18) |

Then we compute the semi-symmetric CPD of using sparse eigenvalue decomposition (EVD) [27]. The proposed initialization procedure is presented in Algorithm 2.

4.2 Computational complexity analysis

In terms of memory requirements and computational complexity, the main bottleneck of TeX-Graph lies in instantiating and computing the matricized tensor times Khatri-Rao product (MTTKRP) in the left hand side (LHS) of (4.15) and (4.17). The number of flops needed to compute the LHS of (4.15) and (4.17) is and respectively. For small values of which is usually the case in practice the complexity is linear in the number of triplets participating in each update. Furthermore the Khatri-Rao products in the (LHS) of (4.15) and (4.17) are not being instantiated as shown in Appendix A.

5 Drug Repurposing for COVID-19

In this section we apply TeKGraph to a recently developed KG [17] in order to perform drug repurposing for COVID-19 disease. All algorithms were implemented in Matlab or Python, and executed on a Linux server comprising 32 cores at 2GHz and 128GB RAM.

5.1 Data

The dataset used in the experiments is the Drug Repurposing Knowledge Graph (DRKG)111github.com/gnn4dr/DRKG [17]. It codifies triplets of biological interactions between 97,238 different entities of 13 types, namely, genes, compounds, diseases, anatomy, tax, biological process, cellular component, pathway, molecular function, anatomical therapeutic chemical (Atc), side effect, pharmacological class, and symptom. The total number of triplets is 5,874,258 and there are 107 different types of interactions. The KG is organised in 6 adjacency tensors and 11 adjacency matrices. Detailed description of the dataset and the modeling can be found in Table 3. Each row denotes a different adjacency tensor or matrix and type- entities, type- entities, relation types correspond to the dimension of mode 1, mode 2, and mode 3 respectively. The last column (sparsity) denotes the sparsity of each tensor, i.e.,

| entity type-m | entity type-n | # type-m entities 1 | # type-n entities 2 | # relation types | tensor | sparsity |

| Gene | Gene | 39,220 | 39,220 | 32 | ||

| Compound | 39,220 | 24,313 | 34 | |||

| Disease | 39,220 | 5,103 | 15 | |||

| Anatomy | 39,220 | 400 | 3 | 0.0154 | ||

| Tax | 39,220 | 215 | 1 | 0.0017 | ||

| Biological Process | 39,220 | 11,381 | 1 | 0.0013 | ||

| Cellular Component | 39,220 | 1,391 | 1 | 0.0013 | ||

| Pathway | 39,220 | 1,822 | 1 | 0.0012 | ||

| Molecular Function | 39,220 | 2,884 | 1 | |||

| Compound | Compound | 24,313 | 24,313 | 2 | 0.0023 | |

| Disease | 24,313 | 5,103 | 10 | |||

| Atc | 24,313 | 4,048 | 1 | |||

| Side Effect | 24,313 | 5,701 | 1 | 0.0010 | ||

| Pharmacological Class | 24,313 | 345 | 1 | |||

| Disease | Disease | 5,103 | 5,103 | 1 | ||

| Anatomy | 5,103 | 400 | 1 | 0.0018 | ||

| Symptom | 5,103 | 415 | 1 | 0.0016 |

5.2 Procedure

Drug repurposing refers to the task of discovering existing drugs that can effectively manage certain diseases– COVID-19 in our study. DRKG codifies relational triplets of (compound,treats,disease) and (compound,inhibits,disease). Therefore drug repurposing in the context of DRKG boils down to predicting new ‘treats’ and ‘inhibits’ edges (links) between compounds and diseases of interest.

We follow the evaluation procedure proposed in [17]. In the training phase we learn low dimensional representations for the entities and relations, using all the edges in DRKG. In the testing phase, we assign a score to (compound,treats,disease) and (compound,inhibits,disease) triplets according to the scoring function used for training. For the proposed TeX-Graph, the scores assigned to the triplet (hyper-edge) (compound ,treats,disease ) and (compound ,inhibits,disease ) are:

since ‘treats’ and ‘inhibits’ relations correspond to the second and ninth frontal slab of , respectively. The testing set consists of 34 corona-virus related diseases, including SARS, MERS and SARS-COV2 and FDA-approved drugs in Drugbank. Drugs with molecule weight less than 250 daltons are excluded from testing. Ribavirin was also excluded from the testing set, since there exist a ‘treat’ edge in the training set between Ribavirin and a target disease. In order to evaluate the performance of the proposed TeX-Graph and the alternatives we retrieve the top-100 ranked drugs that appear in the highest testing scoring (hyper-)edges. These are the proposed candidate drugs for COVID-19. Then we assess how many of the 32 clinical trial drugs 222www.covid19-trials.com (Ribavirin is excluded) appear in the proposed candidate top-100 drugs.

5.3 Methods

The methods used in the experiments are:

-

•

TeX-Graph. The proposed TeKGraph algorithm initialized with Algorithm 2. The embedding dimension is set to and the algorithm runs for 10 iterations.

-

•

TransE-DRKG [6, 17]. TransE learns low dimensional KG embeddings using the score function shown in Table 2. For the the task of drug repurposing we use the specifications proposed in [17]. The norm is chosen in the score function and training is performed using the deep graph library for knowledge graphs [35]. To evaluate the performance of TransE-DRKG on the drug repurposing task we used the dimensional pretrained embeddings in [17], with which the drug repurposing results were better than the stand-alone code without pretraining.

-

•

3-way KG embeddings (3-way KGE). We add as a baseline the embeddings produced by computing the CPD of tensor in (4.18). Recall that we use an algebraic CPD of to initialize TeX-Graph. In 3-way KGE we initialize using the same procedure and also run 10 alternating least-squares iterations to compute the CPD of . 3-way KGE is tested with .

5.4 Results

Table 4 shows the clinical trial drugs that appear in the top-100 recommendations along with their [rank-order]. The proposed approach retrieves 10 clinical trial drugs in the top-100 positions, and 7 in the top-50. Compared to TransE-DRKG that was the first proposed algorithm to perform drug-repurposing for COVID-19, TeX-Graph achieves and improvement in precision in the top- and top- respectively.

It is worth emphasizing that the proposed Tex-Graph retrieves approximately of the COVID-19 clinical trial drugs, in the top-100, among a testing set of drugs. This result is pretty remarkable and can essentially help cutting down the immense search space of medical research. For instance, consider the case of Dexamethasone, which is retrieved by Tex-Graph in the top ranked position (it admitted the highest score among all drugs). At the onset of the pandemic, the initial guidance for Dexamethasone and other corticosteroids was indecisive. Guidelines from different sources issued either a weak recommendation to use Dexamethasone (with an asterisk that further evidence was required) or a weak recommendation against corticosteroids and Dexamethasone [24]. However, recent results indicate that treatment with Dexamethasone reduces mortality in patients with COVID-19 [16]. The results of Tex-Graph coalign with the latest evidence and rank Dexamethasone as the top recommended drug. This suggests that our proposed data-driven approach could have essentially contributed in overturning the initial hesitancy to administrate Dexamethasone as a first line treatment.

| TeX-Graph | TransE-DRKG | 3-way KGE |

|---|---|---|

| F=50 | F=400 | F=50 |

| Dexamethasone [1] | Dexamethasone [4] | Oseltamivir [89] |

| Methylprednisolone [6] | Colchine [8] | |

| Azithromycin [13] | Methylprednisolone [16] | |

| Thalidomide [18] | Oseltamivir [49] | |

| Losartan [41] | Deferoxamine [87] | |

| Hydroxychloroquine [47] | ||

| Colchine [48] | ||

| Oseltamivir[60] | ||

| Chloroquine[68] | ||

| Deferoxamine [88] |

6 Conclusion

In this paper we proposed a novel coupled tensor-matrix framework for knowledge graph embedding. The proposed model is principled and enjoys several favorable properties, including parsimony and uniqueness. The developed algorithmic framework admits lightweight updates and can handle very large graphs. Finally the proposed TeX-Graph showed very promising results in a timely application to drug repurposing, a task of paramount importance in the fight against COVID-19.

7 Acknowledgements

The authors would like to acknowledge Ioanna Papadatou, M.D., Ph.D, for contributing in the medical assessment of the produced results.

A Appendix: TeX-Graph updates

TeX-Graph solves the following problem

| (A.1) |

Then the update for is the solution of:

| (A.2) |

where are defined in (4.16). Problem (A) can be written as:

| (A.3) |

Taking the gradient of (A) with respect to and setting it to zero yields the equation in (4.15). The main bottleneck of (4.15) in terms of memory requirements and computational complexity is instantiating the Khatri-Rao products and computing the MTTKRP . We focus on the computation of:

| (A.4) |

Equation (A.4) can be equivalently written as:

| (A.5) |

It is clear from equation (A) that need not be instantiated. Furthermore, the number of flops to compute (A) is . Note that computing is only different in the fact that the frontal slabs are not transposed, and is thus omitted.

The update for is the solution of:

| (A.6) |

or equivalently:

| (A.7) |

Taking the gradient of (A.7) with respect to and setting it to zero yields the equation in (4.17). The main memory and computation bottleneck of equation (4.17) is computing the MTTKRP. The formula in (A) can be utilized if is replaced by , is replaced by and the transposed frontal slabs are replaced by vertical slabs.

References

- [1] Sören Auer, Christian Bizer, Georgi Kobilarov, Jens Lehmann, Richard Cyganiak, and Zachary Ives. Dbpedia: A nucleus for a web of open data. In The semantic web, pages 722–735. Springer, 2007.

- [2] Ivana Balazevic, Carl Allen, and Timothy Hospedales. Tucker: Tensor factorization for knowledge graph completion. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), pages 5188–5197, 2019.

- [3] Albert-László Barabási et al. Network science. Cambridge university press, 2016.

- [4] Michele Berlingerio, Michele Coscia, Fosca Giannotti, Anna Monreale, and Dino Pedreschi. Foundations of multidimensional network analysis. In 2011 international conference on advances in social networks analysis and mining, pages 485–489. IEEE, 2011.

- [5] Kurt Bollacker, Colin Evans, Praveen Paritosh, Tim Sturge, and Jamie Taylor. Freebase: a collaboratively created graph database for structuring human knowledge. In Proceedings of the 2008 ACM SIGMOD international conference on Management of data, pages 1247–1250, 2008.

- [6] Antoine Bordes, Nicolas Usunier, Alberto Garcia-Duran, Jason Weston, and Oksana Yakhnenko. Translating embeddings for modeling multi-relational data. In Advances in neural information processing systems, pages 2787–2795, 2013.

- [7] Léon Bottou. Large-scale machine learning with stochastic gradient descent. In Proceedings of COMPSTAT’2010, pages 177–186. Springer, 2010.

- [8] Andrew Carlson, Justin Betteridge, Bryan Kisiel, Burr Settles, Estevam R Hruschka, and Tom M Mitchell. Toward an architecture for never-ending language learning. In Twenty-Fourth AAAI Conference on Artificial Intelligence, 2010.

- [9] Luca Chiantini and Giorgio Ottaviani. On generic identifiability of 3-tensors of small rank. SIAM Journal on Matrix Analysis and Applications, 33(3):1018–1037, 2012.

- [10] Ignat Domanov and Lieven De Lathauwer. On the uniqueness of the canonical polyadic decomposition of third-order tensors — part ii: Uniqueness of the overall decomposition. SIAM Journal on Matrix Analysis and Applications (SIMAX), 34(3):876–903, 2013.

- [11] David Easley, Jon Kleinberg, et al. Networks, crowds, and markets, volume 8. Cambridge university press Cambridge, 2010.

- [12] Thomas Franz, Antje Schultz, Sergej Sizov, and Steffen Staab. Triplerank: Ranking semantic web data by tensor decomposition. In International semantic web conference, pages 213–228. Springer, 2009.

- [13] Richard A Harshman, Margaret E Lundy, et al. Parafac: Parallel factor analysis. Computational Statistics and Data Analysis, 18(1):39–72, 1994.

- [14] Daniel S Himmelstein and Sergio E Baranzini. Heterogeneous network edge prediction: a data integration approach to prioritize disease-associated genes. PLoS computational biology, 11(7), 2015.

- [15] Daniel Scott Himmelstein, Antoine Lizee, Christine Hessler, Leo Brueggeman, Sabrina L Chen, Dexter Hadley, Ari Green, Pouya Khankhanian, and Sergio E Baranzini. Systematic integration of biomedical knowledge prioritizes drugs for repurposing. Elife, 6:e26726, 2017.

- [16] Peter Horby, Wei Shen Lim, Jonathan R Emberson, Marion Mafham, Jennifer L Bell, Louise Linsell, Natalie Staplin, Christopher Brightling, Andrew Ustianowski, Einas Elmahi, et al. Dexamethasone in hospitalized patients with covid-19-preliminary report. The New England journal of medicine, 2020.

- [17] Vassilis N. Ioannidis, Xiang Song, Saurav Manchanda, Mufei Li, Xiaoqin Pan, Da Zheng, Xia Ning, Xiangxiang Zeng, and George Karypis. Drkg - drug repurposing knowledge graph for covid-19. https://github.com/gnn4dr/DRKG/, 2020.

- [18] Xueyan Jiang, Volker Tresp, Yi Huang, and Maximilian Nickel. Link prediction in multi-relational graphs using additive models. SeRSy, 919:1–12, 2012.

- [19] Tamara G Kolda and Brett W Bader. Tensor decompositions and applications. SIAM review, 51(3):455–500, 2009.

- [20] Tamara G Kolda, Brett W Bader, and Joseph P Kenny. Higher-order web link analysis using multilinear algebra. In Proceedings of Fifth IEEE International Conference on Data Mining, pages 8–pp. IEEE, 2005.

- [21] Hailun Lin, Yong Liu, Weiping Wang, Yinliang Yue, and Zheng Lin. Learning entity and relation embeddings for knowledge resolution. Procedia Computer Science, 108:345–354, 2017.

- [22] Mark Newman. Networks. Oxford university press, 2018.

- [23] Maximilian Nickel, Volker Tresp, and Hans-Peter Kriegel. A three-way model for collective learning on multi-relational data. In Icml, volume 11, pages 809–816, 2011.

- [24] Hallie C Prescott and Todd W Rice. Corticosteroids in covid-19 ards: evidence and hope during the pandemic. Jama, 324(13):1292–1295, 2020.

- [25] Steffen Rendle and Lars Schmidt-Thieme. Pairwise interaction tensor factorization for personalized tag recommendation. In Proceedings of the third ACM international conference on Web search and data mining, pages 81–90, 2010.

- [26] Sebastian Riedel, Limin Yao, Andrew McCallum, and Benjamin M Marlin. Relation extraction with matrix factorization and universal schemas. In Proceedings of the 2013 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, pages 74–84, 2013.

- [27] Eugenio Sanchez and Bruce R Kowalski. Tensorial resolution: a direct trilinear decomposition. Journal of Chemometrics, 4(1):29–45, 1990.

- [28] Nicholas D Sidiropoulos, Lieven De Lathauwer, Xiao Fu, Kejun Huang, Evangelos E Papalexakis, and Christos Faloutsos. Tensor decomposition for signal processing and machine learning. IEEE Transactions on Signal Processing, 65(13):3551–3582, 2017.

- [29] Amit Singhal. Introducing the knowledge graph: things, not strings. Official google blog, 16, 2012.

- [30] Richard Socher, Danqi Chen, Christopher D Manning, and Andrew Ng. Reasoning with neural tensor networks for knowledge base completion. In Advances in neural information processing systems, pages 926–934, 2013.

- [31] MIKAEL Sørensen, Ignat Domanov, and L De Lathauwer. Coupled canonical polyadic decompositions and (coupled) decompositions in multilinear rank-(lr, n, lr, n, 1) terms—part ii: Algorithms. SIAM Journal on Matrix Analysis and Applications, 36:1015–1045, 2015.

- [32] Fabian M Suchanek, Gjergji Kasneci, and Gerhard Weikum. Yago: a core of semantic knowledge. In Proceedings of the 16th international conference on World Wide Web, pages 697–706, 2007.

- [33] Zhiqing Sun, Zhi-Hong Deng, Jian-Yun Nie, and Jian Tang. Rotate: Knowledge graph embedding by relational rotation in complex space. In International Conference on Learning Representations, 2018.

- [34] Bishan Yang, Wen-tau Yih, Xiaodong He, Jianfeng Gao, and Li Deng. Embedding entities and relations for learning and inference in knowledge bases. arXiv preprint arXiv:1412.6575, 2014.

- [35] Da Zheng, Xiang Song, Chao Ma, Zeyuan Tan, Zihao Ye, Jin Dong, Hao Xiong, Zheng Zhang, and George Karypis. Dgl-ke: Training knowledge graph embeddings at scale. arXiv preprint arXiv:2004.08532, 2020.