The Dependence of Parallel Imaging with Linear Predictability on the Undersampling Direction

Abstract

Parallel imaging with linear predictability takes advantage of information present in multiple receive coils to accurately reconstruct the image with fewer samples. Commonly used algorithms based on linear predictability include GRAPPA and SPIRiT. We present a sufficient condition for reconstruction based on the direction of undersampling and the arrangement of the sensing coils. This condition is justified theoretically and examples are shown using real data. We also propose a metric based on the fully-sampled auto-calibration region which can show which direction(s) of undersampling will allow for a good quality image reconstruction.

Keywords Parallel Imaging Linear Predictability Fourier Shift Theorem SMASH GRAPPA SPIRiT

1 Introduction

Magnetic resonance imaging (MRI) is a ubiquitously used and highly versatile gross medical imaging modality with abundant natural contrast; it is relatively slow due to the length of time required for longitudinal magnetization recovery, parameterized by . Producing images of diagnostic quality requires patients to remain still for a long time. For a given sequence and contrast, scan time is directly proportional to the amount of data collected—therefore, it is advantageous to reconstruct good quality images with fewer samples.

Several methods to accelerate MRI have been developed, including parallel imaging [1], compressed sensing (CS) [2, 3, 4, 5], partial Fourier acquisition [6], and, more recently, the use of convolutional neural networks and other deep learning solutions [7, 8, 9].

Parallel imaging uses multiple sensing coils to reduce the amount of data required for a high-quality image. There are two distinct approaches to parallel imaging: model based reconstruction (which requires estimates of the sensitivity maps), and linear predictability (which uses a calibration region to estimate linear interpolation coefficients). The quintessential algorithms for parallel imaging with linear predictability are AUTO-SMASH [10], GRAPPA [11], and SPIRiT [12]. In [13], Haldar et al. show that a sufficient condition for parallel imaging with linear predictability is that the support of the imaged object is a strict subset of the field-of-view of the image. That is, if there are regions of the field-of-view that do not image the subject and just image air, then parallel imaging with linear predictability is possible. Notably, though this is a sufficient condition for parallel imaging with linear predictability generally, it may not be sufficient for a specific instance of linear predictability based on user specified parameters. Thus, even when this condition is met, parallel imaging algorithms may fail to yield a high-quality image, as we will show.

In this manuscript, we will present a separate sufficient condition for parallel imaging with linear predictability. We will show that parallel imaging reconstruction algorithms based on linear predictability, such as GRAPPA [11] and SPIRiT [12], have an innate dependence on the direction of undersampling, which is based on the arrangement of the sensing coils. We explore this dependence by showing that GRAPPA (and by extension, SPIRiT) is based on similar assumptions as those required by AUTO-SMASH [10]. We show results where the quality of the reconstruction differs dramatically when the direction of undersampling is changed. We also show cases where the quality is impervious to the direction of undersampling and explain why this is the case. Lastly, we propose a metric based on the fully sampled auto-calibration region; this metric identifies which undersampling direction (horizontal or vertical) will lead to a low-quality image.

2 Theory

In this section, we start by reviewing the main points of AUTO-SMASH [10] and show that the generalization of its theoretical basis justifies GRAPPA [11] and SPIRiT [12]. Note that a complete review of the theory of AUTO-SMASH using the notation of this manuscript can be found in Appendix A. For the purposes of this discussion, we will assume that imaging is performed in two-dimensions. These sampling patterns may be generated with a spin-warp trajectory using two dimensions of phase encodes and one dimension of readout; after inverse Fourier transforming along the readout direction, the data is placed in a hybrid space and the reconstruction of each slice may be considered independent of every other slice [17].

For this discussion, the MRI signal with spin density for coil is

| (1) |

where , , is the gyromagnetic ratio, and are the x and y gradient waveforms, and are the lengths of time that the respective gradient fields are turned on, and denotes the Fourier transform of evaluated at . For simplicity, we are ignoring the effects of relaxation and recovery.

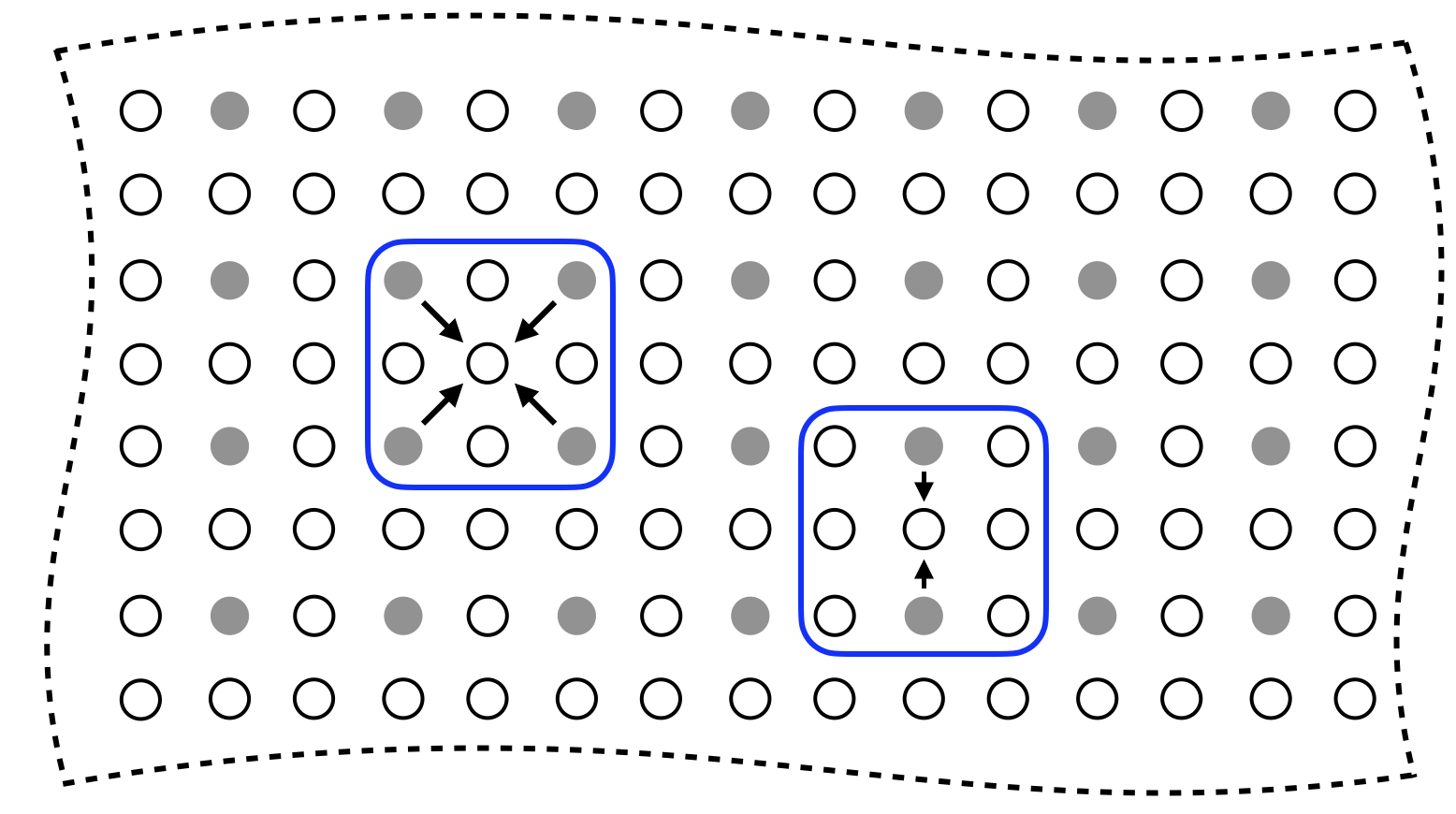

Parallel imaging with linear predictability is depicted in Fig. 1. In this example, the data is undersampled by a factor of in both the horizontal and vertical directions (for a total undersampling factor of ). Note that with coils, each sampled point consists of complex values, one for each coil.

The user supplies a metric and (at least one) threshold, perhaps one per direction. Points that were not collected are synthesized as linear combinations of those values that lie within the threshold. For the example provided in Fig. 1, the user has specified the as the metric and a single threshold of . Thus, for any uncollected Fourier location, linear coefficient are found to interpolate from all collected points that lie within a threshold’s distance.

2.1 AUTO-SMASH

AUTO-SMASH is a seminal work of parallel imaging with linear predictability; the algorithm uses a two-dimensional spin-warp trajectory [18] (e.g., phase encode and readout in and dimensions, respectively). Whereas the Nyquist sampling theorem would dictate that the phase encoding lines be separated by , AUTO-SMASH separates lines by for some . Consider the case where there are coils, each with sensitivity map . AUTO-SMASH defines a composite sensitivity map, , created by a linear combination of the individual coil sensitivity maps with linear coefficients . That is, . AUTO-SMASH further assumes that for each ; there exist unknown linear coefficients such that . Then, by the Fourier shift theorem (as detailed in Appendix A), . In [18], the coils were designed to satisfy the above requirements.

This illustrates that the linear coefficients permit interpolation from known values to unknown values located distance away in the direction of the Fourier domain. It is assumed that these same coefficients are valid across the entire Fourier domain. With AUTO-SMASH, the coils were designed so that , for some constant , across the field-of-view of the image. To determine the linear coefficients , a set of lines in the Fourier domain (called the auto-calibration signal – ACS) are collected and a linear system is numerically solved, where is comprised of the values of the ACS. (See Appendix A for details.)

2.2 GRAPPA

GRAPPA was developed heuristically; and yet, we have found that it can be explained with a theoretical basis very similar to that of AUTO-SMASH, as we will now do.

GRAPPA eliminates the assumption of known and the existence of an approximately constant composite sensitivity map. Moreover, instead of searching for coefficients that correspond to a single predetermined displacement, GRAPPA attempts to find linear coefficients for multiple displacements. The combination of distance threshold and undersampling rate gives rise to different patterns of collected and uncollected points used for interpolation, as depicted in Fig. 1. Each unique pattern of collected points surrounding an uncollected point is called a kernel; The blue contours in Fig. 1 shows two different kernels.

For a given kernel, as with AUTO-SMASH, the linear interpolation coefficients can be determined by solving a linear system. Here, we present a least-squares problem that simultaneously identifies the interpolation coefficients for all coils:

| (2) |

where is comprised of the appropriate values from the auto-calibration region, is the matrix of weights we solve for, and is a matrix of points from the auto-calibration region, a region which has rectangular size . For a specific uncollected Fourier location , let be the number of nonzero points of the relevant kernel. The matrix will be of size , is a matrix of size , is a matrix of size , and and are the number of times that the kernel fits inside the auto-calibration region in the and directions, respectively.

We will now explicitly relate problem (2) to the assumption of Eq. (5). An equivalent form of problem (2) is

| (3) |

where is an individual location inside the auto-calibration region, is the row of that corresponds to location , and is the row of . We recognize further that we can write as

| (4) |

where is the signal collected at point from coil , is the appropriate weight, the inner sum is over the coils, and the outer sum is over the collected sample points that lie within the kernel .

Similarly to AUTO-SMASH, for a given displacement and coil , GRAPPA seeks a set of linear coefficients such that

| (5) |

The notable difference is that the linear combination yields a complex exponential weighted by an individual coil’s sensitivity rather than a composite sensitivity. Using analogous mathematics as presented in App. A, performing a linear combination of the collected points from all coils linearly interpolates missing values of a specific coil’s data. After the missing data of all coils is interpolated, the data from multiple coils can be combined into a single image [19].

Here, we describe a sufficient condition for estimation without error when the approximation of 5 is perfectly satisfied and without any noise. With the formulation described above, the sufficient condition becomes evident. This formulation shows that each point of the GRAPPA kernel corresponds to a set of weights (one for each coil). Each interpolated point can be thought of as a linear combination of sensitivity maps approximating a complex exponential of a specific frequency in accordance with Eq. (5). For a given location and kernel, if at least one displacement vector to a collected point within the kernel satisfies Eq. (5), then the interpolation will be accurate. If more than one displacement vector satisfies Eq. (5), then GRAPPA finds the set of linear coefficients that interpolate from multiple points in a least-squares optimal sense.

2.3 SPIRiT

SPIRiT [12] is an extension of GRAPPA. With a fixed kernel size, rather than just interpolating from points that were collected, SPIRiT will use every point in the kernel, regardless of whether or not it was collected.

SPIRiT interpolates all values (even those that were collected) by solving the following constrained least-squares problem:

| (6) |

where denotes the norm, represents linear interpolation from all values that lie within the kernel (even those that were not collected), is the linear transformation that isolates the sample points that were collected and, is the values of the collected data, and is a bound on the noise power. In general, problem (6) can be solved with the Fast Iterative Shrinkage-Thresholding algorithm (FISTA) [20]. In [12], Lustig et al. set and only solve for the values of the uncollected data, which somewhat simplifies the implementation of the optimization algorithm, but this is not necessary.

Here, again, we describe a sufficient condition for estimation without error when the approximation of Eq. 5 is perfectly satisfied and without any noise. The sufficient condition for an accurate SPIRiT interpolation is similar to that which was developed for GRAPPA, but more general. Consider the set of collected data as a directed graph where the location of each Fourier value is a node and the displacement vectors from each point in the kernel to that location are the directed edges. Accurate interpolation at a location is possible when there is a path from a collected data point to such that all edges of that path satisfy the approximation of Eq. (5) well. Practically, any error in the approximation and noise in the values are amplified with each edge of the path; so shorter paths lead to more accurate interpolations.

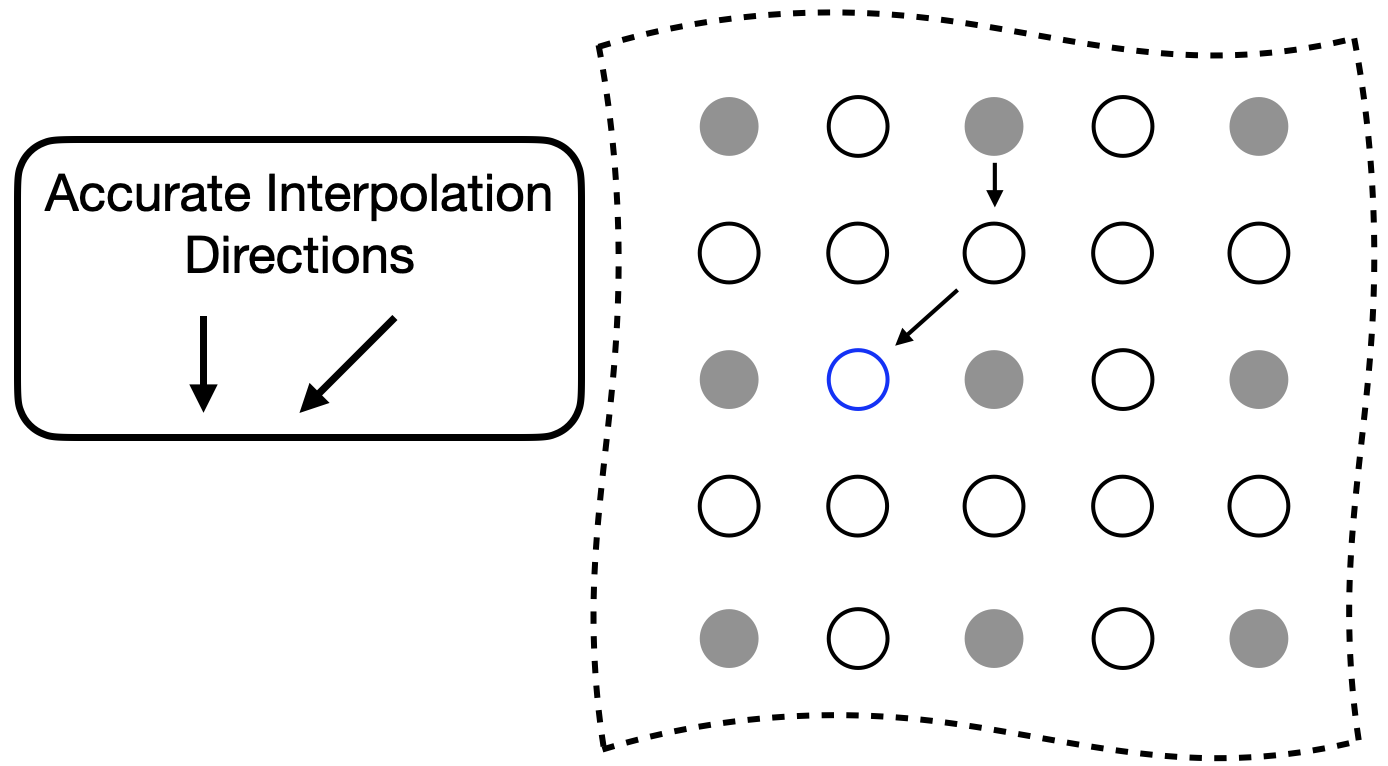

Figure 2 depicts an example that explains this sufficient condition. In this example, we would like to interpolate the value of the blue circle. The directions where condition Eq. 5 is satisfied is down and downward-left. We could interpolate the value of the blue circle in two steps: 1) estimate all values below all sampled values, and 2) estimating all values downward and left of all known or estimated values. This would perfectly estimate the value of the blue circle. If this process were iterated until all unknown values were estimated, then it would solve problem Eq. 6 with an objective function value of 0.

2.4 Accuracy Metric

In the results section, we will provide examples where the quality of the reconstruction is significantly degraded when the sufficient conditions identified are not met. Here, we present a metric that can be calculated from the ACR that identifies any undersampling directions that would not meet the sufficient conditions for accurate interpolation. Given the fully sampled ACR and a kernel size, we solve for the interpolation coefficients using two kernels—one solely in the horizontal direction and one in the vertical direction—of the same size as the kernel used for reconstruction. The kernel has a in the center and all other values are . E.g., if the desired kernel is , the test kernels look like

| (7) |

Denote the solutions to problem (2) with each of these kernels as and . The metric for accuracy is the relative norm error of the linear system:

| (8) |

If the value of the relative error is high, then there is not a consistent set of interpolation coefficients for the auto-calibration region in the direction specified by the kernel. If the value of the relative error is low, then there is and the data can be undersampled in that direction while retaining a high-quality reconstruction.

3 Experimental Setup

In this manuscript, we first use Biot-Savart simulations to examine an 8-element birdcage coil. We then analyze three different datasets: a knee, a brain, and an ankle. All datasets were fully-sampled three-dimensional Cartesian data with two dimensions of phase encodes and one dimension of readout. The data of the knee was taken from the publicly available MRIData [21]. Data of the brain and ankle were collected with a 3 Tesla General Electric MR750 clinical scanner (GE Healthcare, Waukesha, WI). All procedures performed in studies involving human participants were in accordance with the ethical standards of the institutional and/or national research committee and with the 1964 Helsinki Declaration and its later amendments or comparable ethical standards. MR data of humans were gathered with institutional review board (IRB) approval and Health Insurance Portability and Accountability Act (HIPAA) compliance. Informed consent was obtained from all individual participants included in the study.

Data was retrospectively downsampled for processing. The data was inverse transformed along the readout direction and placed into a hybrid space of . Then, individual slices at specific locations were isolated for further processing.

4 Results

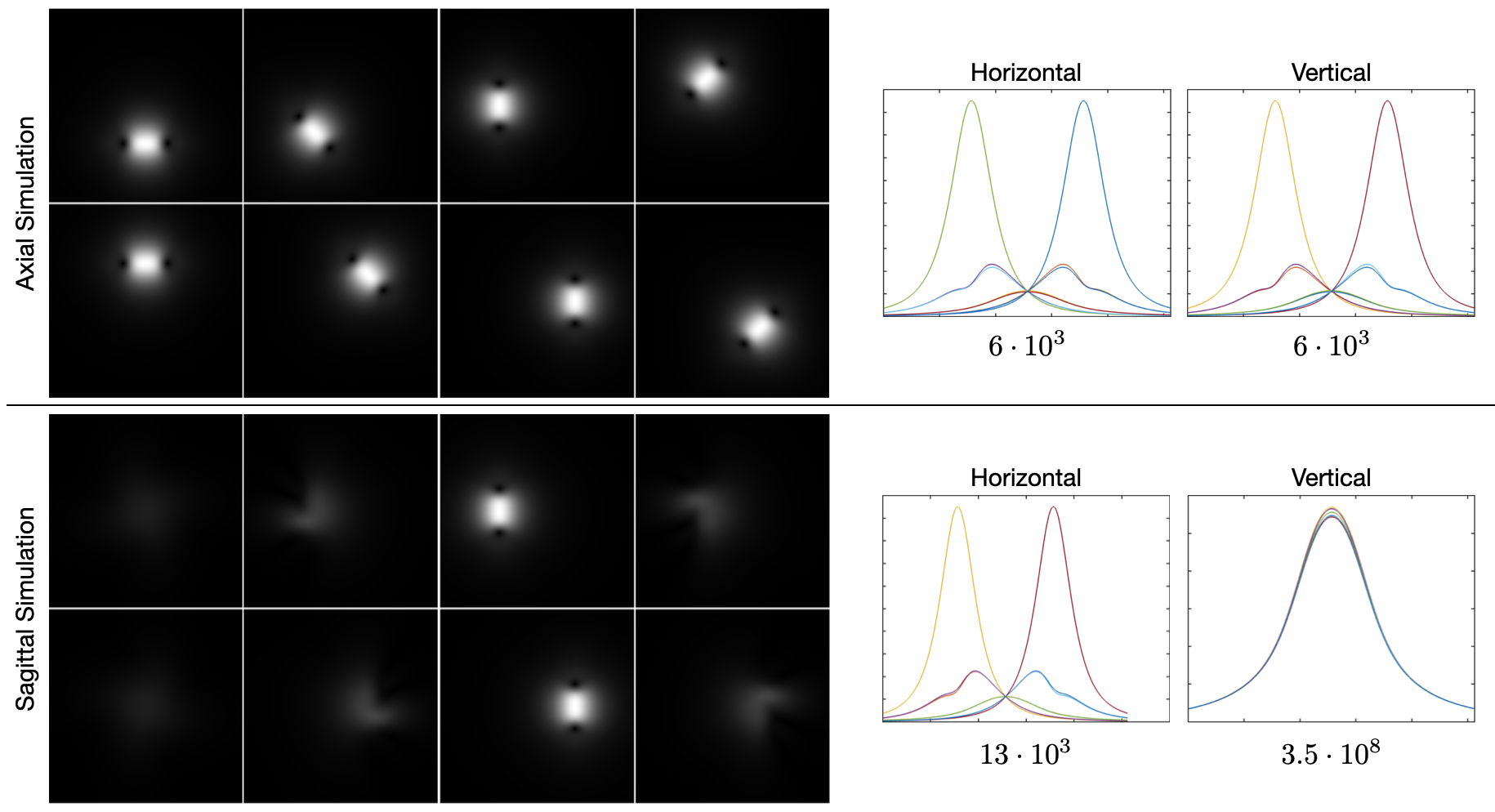

Figure 3 shows results from Biot-Savart simulations for two perpendicular orientations of an 8-element birdcage coil according to [22]. The top/bottom of the figure shows simulations for an axial/sagittal plane that lies at the birdcage coil, respectively. The plots isolate single horizontal and vertical lines that lie at the center of the simulations; the number below each plot is the condition number of the matrix made by concatenating the vectors of the plots above it. This condition number is a metric that indicates how much variation there is between the sensitivity maps across space. For both the horizontal and vertical lines of the axial simulations, the condition number is on the order of . While this remains the case for the horizontal line of the sagittal simulation, the condition number for the vertical line is much higher: on the order of . By looking at the corresponding plot, it becomes obvious that the sensitivities are approximately scaled versions of each other, and that the problem of finding coefficients to linearly combine the sensitivity maps so that they approximate a complex exponential is ill-conditioned.

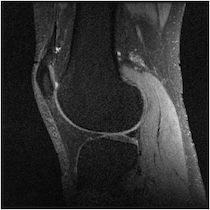

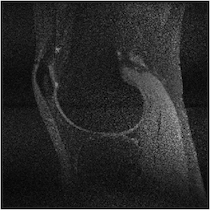

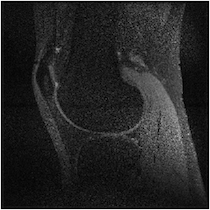

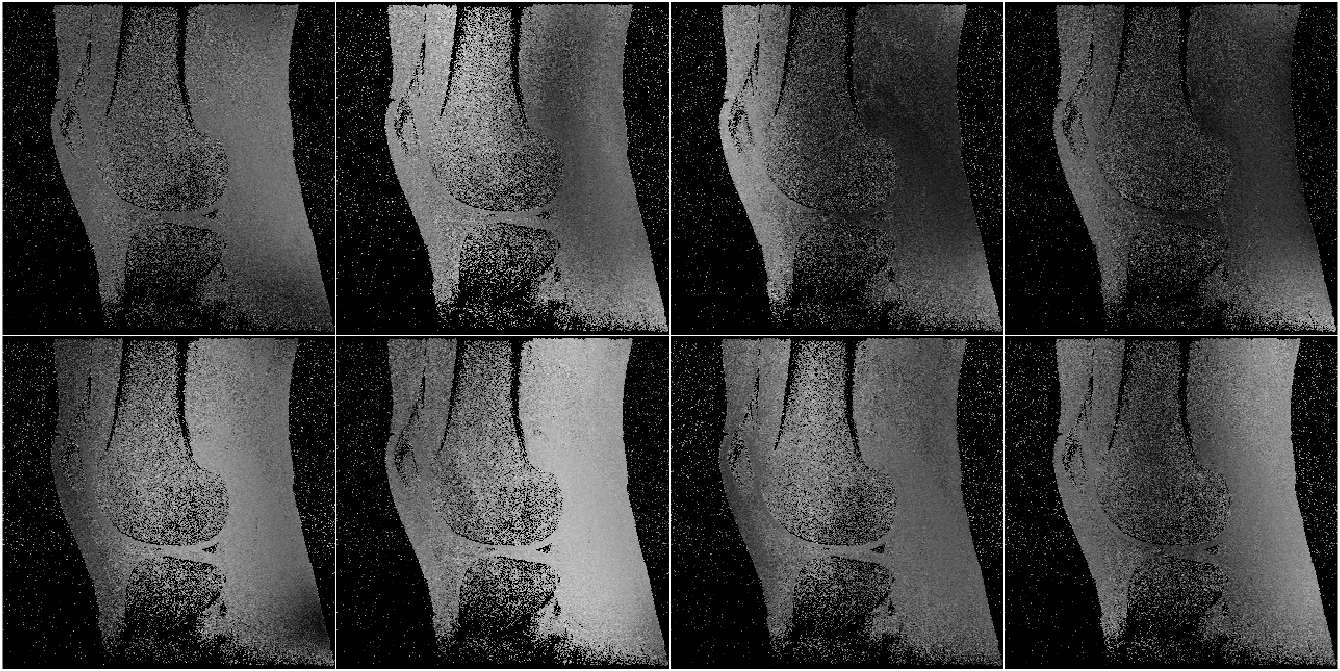

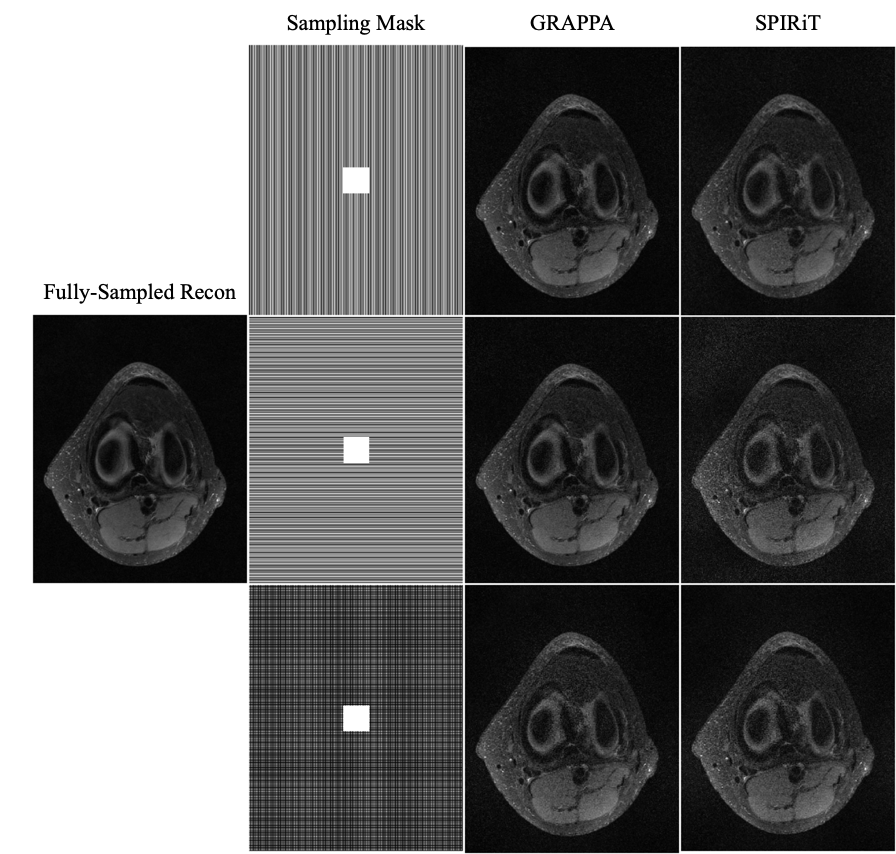

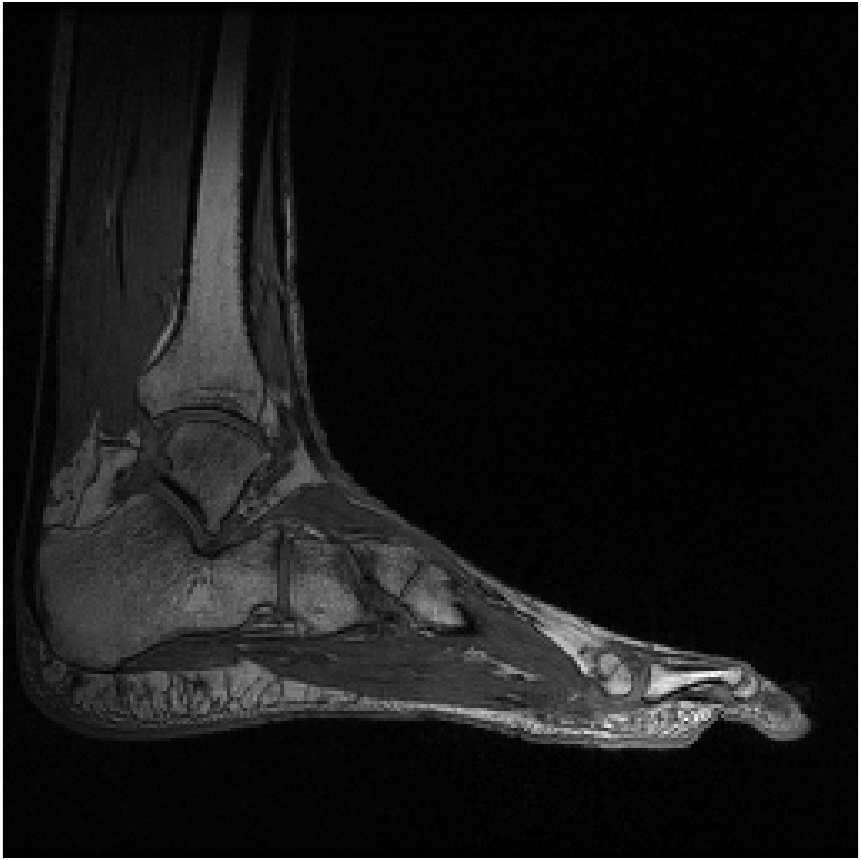

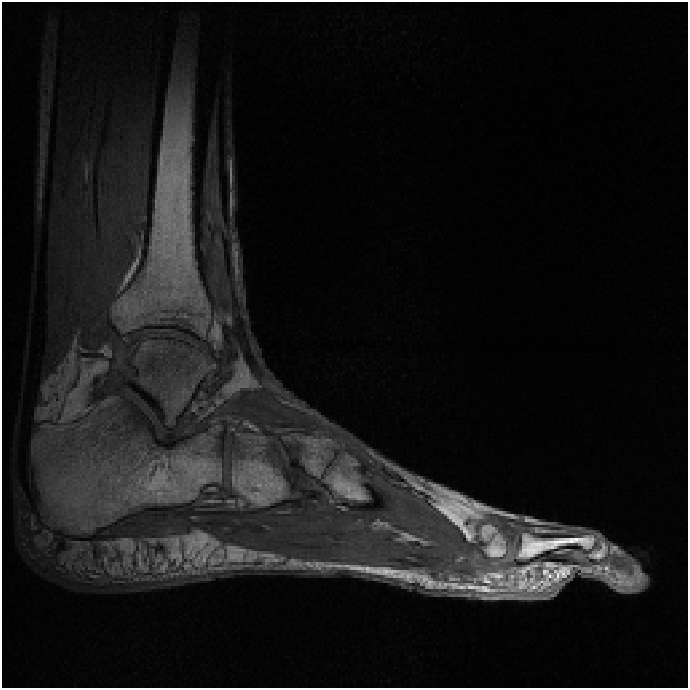

Figure 4 shows reconstructions with GRAPPA and SPIRiT using two separate sampling patterns. Both data were retrospectively undersampled at the same reduction factor of 2; the only difference is the direction of undersampling. This dependence of quality on undersampling direction is present with both GRAPPA [11] and SPIRiT [12].

Figure 5 illuminates why this happens based on the understanding presented in Sec. 2—for any specific horizontal location, the coil sensitivity functions as a function of vertical location are approximately scaled versions of each other. When undersampled by every other row, GRAPPA and SPIRiT will attempt to interpolate unknown values from collected data that lies above and below in the Fourier domain. With relatively little spatial variation in the coil sensitivities in those directions, there does not exist a linear combination of coil sensitivities such that they approximate a complex exponential and the interpolation coefficients do not yield an accurate estimate.

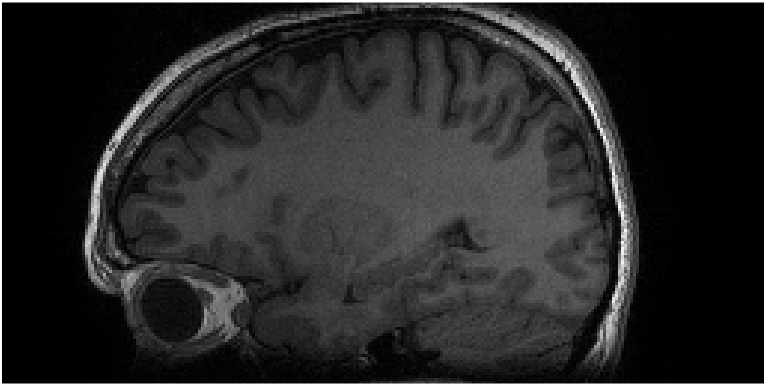

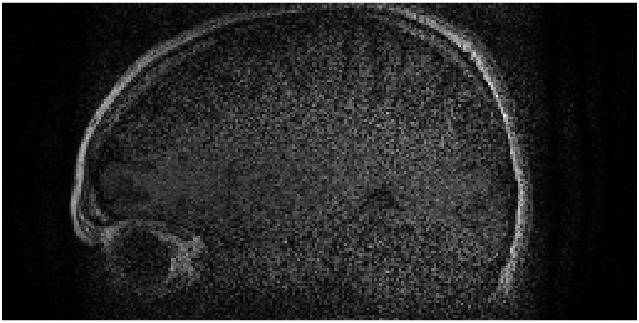

This data was collected with an 8-channel birdcage coil. Therefore, each coil extends from the most inferior to the most superior portions of the image. Thus, in the SI (vertical) direction, there is not enough variation to approximate a complex exponential well. This same phenomenon happens when imaging other anatomy with a similar coil arrangement; e.g., imaging the brain with a birdcage coil, as shown in Fig. 6.

Figure 7 shows MR images of an axial slice of the knee; this is the same dataset as Fig. 4. In this case, the quality of the reconstruction is independent of the undersampling direction. Moreover, when simultaneously undersampling in both directions, the quality remains high; though the signal-to-noise ratio has been reduced due to the reduced scan time [23, 24]. Good quality reconstructions in both directions indicates that the corresponding optimization problems to find the interpolation coefficients for GRAPPA and SPIRiT found good solutions. Owing to our understanding that the optimization problem is attempting to find linear coefficients for the coil sensitivities such that they linearly combine to a weighted complex exponential, the high quality indicates that this is true for at least one direction identified with the GRAPPA and SPIRiT kernels.

Figure 8 shows reconstructions of a sagittal slice of an ankle from data collected with an 8-channel dedicated ankle coil arrangement. The SPIRiT reconstructions of retrospectively downsampled data results in a high-quality reconstruction independent of the direction of undersampling. Fig. 9 shows the sensitivities of each coil. In contrast to the sensitivity maps of Fig. 5, each coil shows good spatial variation in both directions.

We varied reconstruction parameters for GRAPPA and SPIRiT reconstructions of the knee and brain to include kernel sizes of and and to include an undersampling factor of approximately . In all cases, the same trend was observed: the quality of the reconstruction is highly dependent on the undersampling direction. (Results are not shown.)

Table 1 shows the horizontal and vertical accuracy metrics for the data studied in this manuscript. The relative errors for those undersampling directions that yielded a poor quality image are larger than those that yielded a high-quality image. The axial slice of the knee, which yielded a high-quality reconstruction independent of the undersampling direction, has a small relative error in both directions. For this dataset, a threshold on the relative error of would identify directions that would yield a poor-quality image. This, however, is too small a dataset for us to make that a conclusion. Instead, we present this as a preliminary result and hope to pursue it further in the future.

| Data | Kernel | Vertical Error | Horizontal Error |

|---|---|---|---|

| Knee—sagittal slice | 3x3 | 55.0% (large) | 17.1% (small) |

| Knee—axial slice | 3x3 | 25.2% (small) | 26.6% (small) |

| Ankle—sagittal slice | 3x3 | 8.1% (small) | 5.0% (small) |

| Brain—sagittal slice | 3x3 | 61.7% (large) | 5.0% (small) |

| Brain—sagittal slice | 5x5 | 52.6% (large) | 3.9% (small) |

| Brain—sagittal slice | 7x7 | 47.3% (large) | 3.6% (small) |

5 Discussion

We have presented a rigorous physical reasoning that explains why the direction of undersampling can impact reconstruction quality.

Having the requisite spatial variation in the coil sensitivities is a sufficient condition for quality reconstruction based on linear predictability. Other methods of parallel imaging (e.g. SENSE [25] and model based reconstruction [26]), which are not based on linear predictability of the coil sensitivities, may not display such a dependence on the undersampling pattern. There are other sufficient conditions that may be satisfied for linear predictability which may preclude this difficulty of GRAPPA and SPIRiT. One such sufficient condition is that the support of the imaged object be less than the field-of-view of the image [13]. Note further that this condition of support is met by the data studied in this manuscript; however, the quality remains dependent on the direction of undersampling. This indicates that, for the data studied, the more important consideration is the direction in which the coil sensitivities can be linearly combined to approximate a complex exponential.

Furthermore, as detailed in Eq. 12 of Appendix A, the linear combination of the sensitivities only needs to approximate a complex exponential over the support of the image. Generally, the support of the image is a strict subset of the field of view. Consider the reconstruction of the ankle; there is little coil sensitivity in the superior-anterior quadrant of the image. However, there is plenty of coil variation over the other quadrants, which is where the ankle and leg are located. This is why the reconstruction of the ankle is robust regardless of the direction of undersampling. The coils are placed along the ankle and leg and oriented in similar directions (the normal vectors of the coils are all approximately parallel), allowing for local approximations of the sensitivities to complex exponentials.

Results indicate that the accuracy metric can be used to determine undersampling directions that will yield a poor-quality reconstruction, so that those undersampling directions can be avoided during the scan. In the future, we hope to test this metric on a much larger dataset to ensure that it is reliable. If so, we hope to create an adaptive algorithm that uses the auto-calibration region to identify good undersampling directions prior to collecting the outer portion of the Fourier domain. By doing so, parallel imaging with linear predictability would become robust to an inappropriately selected undersampling direction.

Existing methods for combining parallel imaging with compressed sensing often utilize a variable density poisson disc sampling pattern [27] based on the intuition that samples should not be placed too close together since parallel imaging permits interpolation between samples [28]. Based on the work of this manuscript, parallel imaging with linear predictability may not be able to accurate interpolate between samples that are spaced in a given direction. If the parameter that governs variable density for the sampling pattern is radially symmetric, this indicates that the reconstruction relies more heavily on the sparsity assumptions of compressed sensing in one direction over another and suggests that there may be a sampling pattern that yields a higher quality when combining parallel imaging with compressed sensing.

6 Conclusion

We showed that the quality of parallel imaging reconstruction algorithms based on linear predictability, such as GRAPPA and SPIRiT, has a dependence on the direction of undersampling. This directional dependence is related to the amount of spatial variance in the individual coil sensitivities. The impact to the quality of reconstructions is the difference between having a diagnostic image or not. We note that this happens for specific coil configurations and body slices, whereas other configurations are robust to this problem.

Appendix A AUTO-SMASH

In a coil array with elements, the coil has a distinct sensitivity function . A composite sensitivity is generated as a linear combination of individual coil sensitivities with linear coefficients as follows:

| (9) |

The composite two-dimensional MR signal takes the form,

| (10) |

the Fourier transformation of .

Suppose that there is another set of complex weights such that the linear combination of coil sensitivities yields the following composite sensitivity:

| (11) |

Importantly, this approximation only needs to be valid over the support of the image. With these linear coefficients, the composite MR signal becomes

| (12) | ||||

where is the support of . The coefficients serve to interpolate Fourier values at a distance of . Note that for the special case where , the Fourier coefficients are those of .

The innovation of AUTO-SMASH is to use collected auto-calibration signal (ACS) lines to estimate the weights for Eq. 11. These ACS data, are exactly shifted by the amount . The composite signal generated using weights according to Eq. A yields

| (13) |

Alternatively, following Eq. 12, we can write

| (14) |

Write the right-hand side of Eq. 15 simply as to reinforce that is the final, combined image produced using the original weights. For each this is a (complex) scalar, and the left-hand side is a linear combination of the collected MR signals. We are attempting to solve for .

To write this as a linear system, denote , and as follows:

To find the interpolation coefficients , one can minimize .

Once the weights are determined, the matrix-vector multiplication for constructed for a specific will estimate the composite Fourier value at .

References

- [1] Anagha Deshmane, Vikas Gulani, Mark A Griswold, and Nicole Seiberlich. Parallel MR imaging. Journal of Magnetic Resonance Imaging, 36(1):55–72, 2012.

- [2] Michael Lustig, David Donoho, and John M Pauly. Sparse MRI: The application of compressed sensing for rapid mr imaging. Magnetic Resonance in Medicine, 58(6):1182–1195, 2007.

- [3] Corey A Baron, Nicholas Dwork, John M Pauly, and Dwight G Nishimura. Rapid compressed sensing reconstruction of 3D non-cartesian MRI. Magnetic resonance in medicine, 79(5):2685–2692, 2018.

- [4] Nicholas Dwork, Daniel O’Connor, Corey A. Baron, Ethan M.I. Johnson, Adam B Kerr, John M. Pauly, and Peder E.Z. Larson. Utilizing the wavelet transform’s structure in compressed sensing. Signal, Image and Video Processing, 15(7):1407–1414, 2021.

- [5] Nicholas Dwork and Peder EZ Larson. Utilizing the structure of a redundant dictionary comprised of wavelets and curvelets with compressed sensing. Journal of Electronic Imaging, 31(6):063043, 2022.

- [6] Douglas C Noll, Dwight G Nishimura, and Albert Macovski. Homodyne detection in magnetic resonance imaging. Transactions on Medical Imaging, 10(2):154–163, 1991.

- [7] Christopher M Sandino, Joseph Y Cheng, Feiyu Chen, Morteza Mardani, John M Pauly, and Shreyas S Vasanawala. Compressed sensing: From research to clinical practice with deep neural networks: Shortening scan times for magnetic resonance imaging. Signal Processing Magazine, 37(1):117–127, 2020.

- [8] Kerstin Hammernik, Jo Schlemper, Chen Qin, Jinming Duan, Ronald M Summers, and Daniel Rueckert. -net: Systematic evaluation of iterative deep neural networks for fast parallel mr image reconstruction. arXiv, 1912.09278, 2019.

- [9] Florian Knoll, Tullie Murrell, Anuroop Sriram, Nafissa Yakubova, Jure Zbontar, Michael Rabbat, Aaron Defazio, Matthew J Muckley, Daniel K Sodickson, C Lawrence Zitnick, et al. Advancing machine learning for MR image reconstruction with an open competition: Overview of the 2019 fastMRI challenge. Magnetic resonance in medicine, 84(6):3054–3070, 2020.

- [10] Peter M Jakob, Mark A Grisowld, Robert R Edelman, and Daniel K Sodickson. AUTO-SMASH: a self-calibrating technique for SMASH imaging. Magnetic Resonance Materials in Physics, Biology and Medicine, 7(1):42–54, 1998.

- [11] Mark A Griswold, Peter M Jakob, Robin M Heidemann, Mathias Nittka, Vladimir Jellus, Jianmin Wang, Berthold Kiefer, and Axel Haase. Generalized autocalibrating partially parallel acquisitions (GRAPPA). Magnetic Resonance in Medicine, 47(6):1202–1210, 2002.

- [12] Michael Lustig and John M. Pauly. SPIRiT: Iterative self-consistent parallel imaging reconstruction from arbitrary k-space. Magnetic Resonance in Medicine, 64(2):457–471, 2010.

- [13] Justin P. Haldar and Kawin Setsompop. Linear predictability in magnetic resonance imaging reconstruction: Leveraging shift-invariant fourier structure for faster and better imaging. Signal Processing Magazine, 37(1):69–82, 2020.

- [14] Nicole Seiberlich, Felix A Breuer, Martin Blaimer, Kestutis Barkauskas, Peter M Jakob, and Mark A Griswold. Non-cartesian data reconstruction using GRAPPA operator gridding (GROG). Magnetic Resonance in Medicine: An Official Journal of the International Society for Magnetic Resonance in Medicine, 58(6):1257–1265, 2007.

- [15] Katherine L Wright, Jesse I Hamilton, Mark A Griswold, Vikas Gulani, and Nicole Seiberlich. Non-Cartesian parallel imaging reconstruction. Journal of Magnetic Resonance Imaging, 40(5):1022–1040, 2014.

- [16] Tianrui Luo, Douglas C Noll, Jeffrey A Fessler, and Jon-Fredrik Nielsen. A GRAPPA algorithm for arbitrary 2D/3D non-cartesian sampling trajectories with rapid calibration. Magnetic resonance in medicine, 82(3):1101–1112, 2019.

- [17] PJ Beatty, AC Brau, S Chang, S Joshi, CR Michelich, E Bayram, TE Nelson, RJ Herfkens, and JH Brittain. A method for autocalibrating 2D accelerated volumetric parallel imaging with clinically practical reconstruction times. In Proceedings of the International Society for Magnetic Resonance in Medicine, volume 1749, 2007.

- [18] Charles L Epstein. Introduction to the mathematics of medical imaging. SIAM, 2007.

- [19] Peter B Roemer, William A Edelstein, Cecil E Hayes, Steven P Souza, and Otward M Mueller. The NMR phased array. Magnetic resonance in medicine, 16(2):192–225, 1990.

- [20] Amir Beck and Marc Teboulle. A fast iterative shrinkage-thresholding algorithm for linear inverse problems. Journal on imaging sciences, 2(1):183–202, 2009.

- [21] Frank Ong, Shahab Amin, Shreyas Vasanawala, and Michael Lustig. Mridata.org: An open archive for sharing MRI raw data. In Proceedings of the International Society of Magnetic Resonance in Medicine, volume 26, 2018.

- [22] Giulio Giovannetti, Luigi Landini, Maria Filomena Santarelli, and Vincenzo Positano. A fast and accurate simulator for the design of birdcage coils in MRI. Magnetic Resonance Materials in Physics, Biology and Medicine, 15(1):36–44, 2002.

- [23] Albert Macovski. Noise in MRI. Magnetic resonance in medicine, 36(3):494–497, 1996.

- [24] Dwight G Nishimura. Principles of magnetic resonance imaging. Standford Univ., 2010.

- [25] Klaas P. Pruessmann, Markus Weiger, Markus B. Scheidegger, and Peter Boesiger. Sense: Sensitivity encoding for fast MRI. Magnetic Resonance in Medicine, 42(5):952–962, Nov 1999.

- [26] Jeffrey A Fessler. Model-based image reconstruction for MRI. Signal processing magazine, 27(4):81–89, 2010.

- [27] Nicholas Dwork, Corey A Baron, Ethan MI Johnson, Daniel O’Connor, John M Pauly, and Peder EZ Larson. Fast variable density poisson-disc sample generation with directional variation for compressed sensing in MRI. Magnetic Resonance Imaging, 77:186–193, 2021.

- [28] SS Vasanawala, MJ Murphy, Marcus T Alley, P Lai, Kurt Keutzer, John M Pauly, and Michael Lustig. Practical parallel imaging compressed sensing MRI: Summary of two years of experience in accelerating body MRI of pediatric patients. In international symposium on biomedical imaging: From nano to macro, pages 1039–1043. IEEE, 2011.