The Emergence of Heterogeneous Scaling in Research Institutions

Research institutions provide the infrastructure for scientific discovery, yet their role in the production of knowledge is not well characterized. To address this gap, we analyze interactions of researchers within and between institutions from millions of scientific papers. Our analysis reveals that the number of collaborations scales superlinearly with institution size, though at different rates (heterogeneous densification). We also find that the number of institutions scales with the number of researchers as a power law (Heaps’ law) and institution sizes approximate Zipf’s law. These patterns can be reproduced by a simple model with three mechanisms: (i) researchers collaborate with friends-of-friends, (ii) new institutions trigger more potential institutions, and (iii) researchers are preferentially hired by large institutions. This model reveals an economy of scale in research: larger institutions grow faster and amplify collaborations. Our work provides a new understanding of emergent behavior in research institutions and how they facilitate innovation.

Scientific innovation and training require efficient and robust infrastructure, which is provided by research institutions, a category that includes universities, government labs, industrial labs, and national academies (?, ?). Despite the long tradition of bibliometric and science of science research (?), the focus has only recently shifted from individual scientists (?, ?) and teams (?, ?, ?) to how institutions affect researcher productivity and impact (?, ?). Many gaps remain in our understanding of the role of institutions in the production of scientific knowledge, and specifically, how they form, grow, and facilitate scientific collaborations. These questions are important, because collaborations are increasingly prevalent in scientific research (?, ?, ?) and produce more impactful and transformative work (?, ?). Collaboration allows scientists to cope with the increasing complexity of knowledge (?) by leveraging the diversity of expertise (?) and perspectives offered by collaborators from different institutions (?) and disciplines (?).

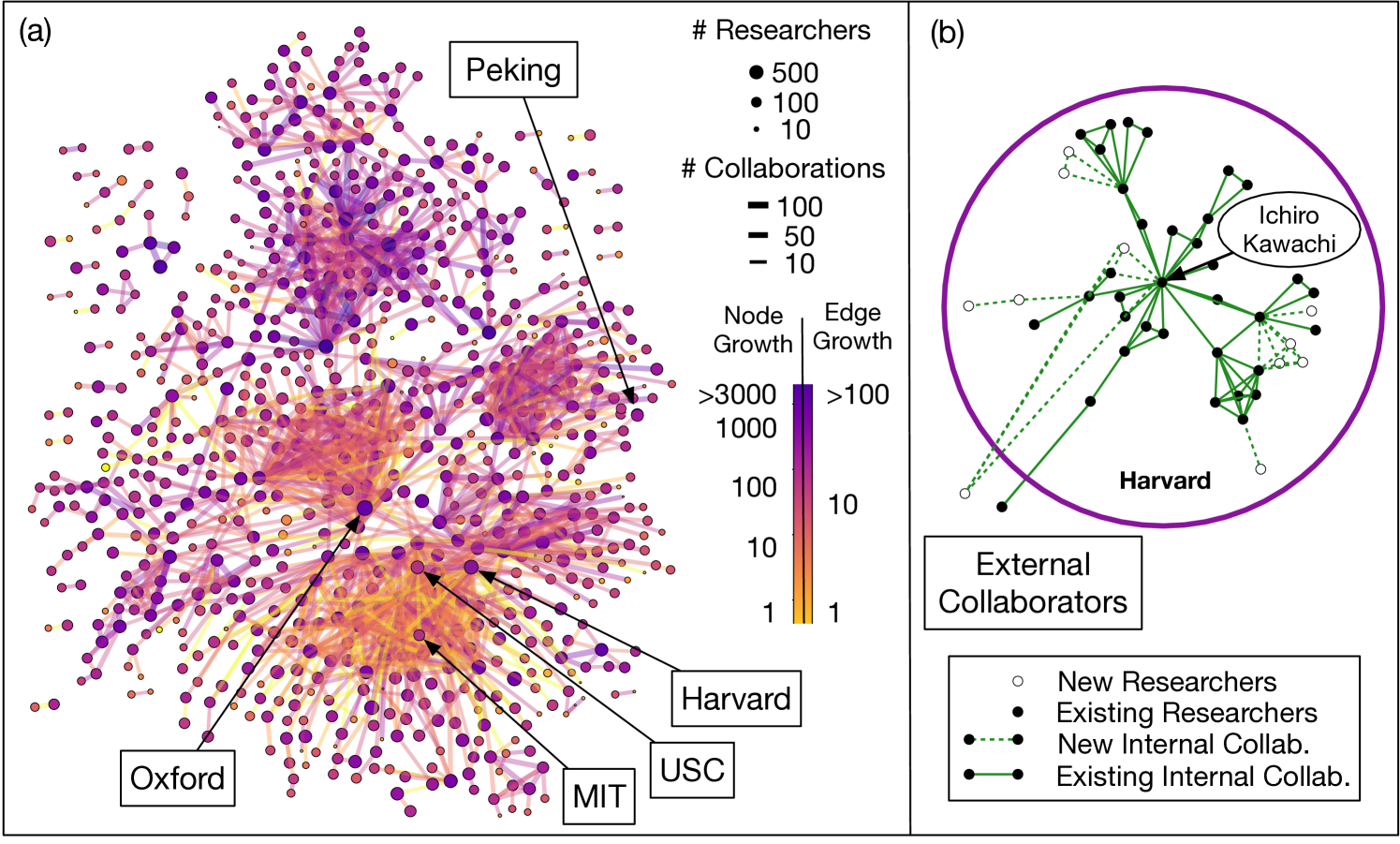

To understand the evolution of research institutions and collaborations, we analyze a large bibliographic database spanning many decades and multiple scientific disciplines. The database contains millions of publications from which the names of authors (collaborators) and their affiliations (research institutions) have been extracted for each paper. Figure 1 shows collaboration network between sociology researchers at different institutions, as well as size of institutions, as of 2017. We see a remarkable diversity of institution size and growth, both in terms of the number of researchers (node growth) and collaborations between institutions (edge growth). Collaborations are clustered, with clear groups of interacting institutions. Research collaborations within an institution are equally complex. Figure 1b highlights the largest connected component of the collaboration network within Harvard. Individual researchers vary widely in the number of collaborators, with new collaborations appearing in clusters.

Analysis of these data reveal strong statistical regularities. We find that collaborations scale superlinearly with institution size, i.e., faster than institutions grow, consistent with densification of growing networks (?, ?, ?). However, the scaling law is different for each institution, and as a result, different parts of collaboration network densify at different rates. The scaling laws cannot be explained by growing output (papers), as researcher productivity is roughly constant at each institution. We also find that institutions vary in size by many orders of magnitude, with distribution approximated by Zipf’s law (?), while the number of institutions scales sub-linearly with the number of researchers, in agreement with Heaps’ law (?, ?). The sublinear scaling implies that, even as more institutions appear, each institution gets larger on average, but this average belies an enormous variance.

We propose a stochastic model that explains how institutions and research collaborations form and grow. In this model, a researcher appears at each time step and is preferentially hired by larger institutions (e.g., due to their prestige or funding), which leads to the rich-get-richer effect creating Zipf’s law. With a small probability, however, a researcher joins a newly appearing institution. The arrival of this new institution then triggers yet more new institutions to form in the future (?). Finally, once hired, researchers make connections to other researchers and their collaborators with an independent probability. Despite its simplicity, the model reproduces a range of empirical observations, including the number and size of research institutions, and how pockets of increasingly dense structures form in collaboration networks. Although the first and second step of this model has individually has been studied before, this model is unique by combining these mechanisms to form a cohesive model of collaborations.

These empirical results demonstrate universal emergent patterns in the formation and growth of research institutions and collaborations. Our model demonstrates that new institutions are critical to innovation by providing the triggering mechanism that makes more institutions possible. At the same time, large institutions offer an economy of scale: they grow faster and provide more collaboration opportunities compared to smaller institutions.

Results

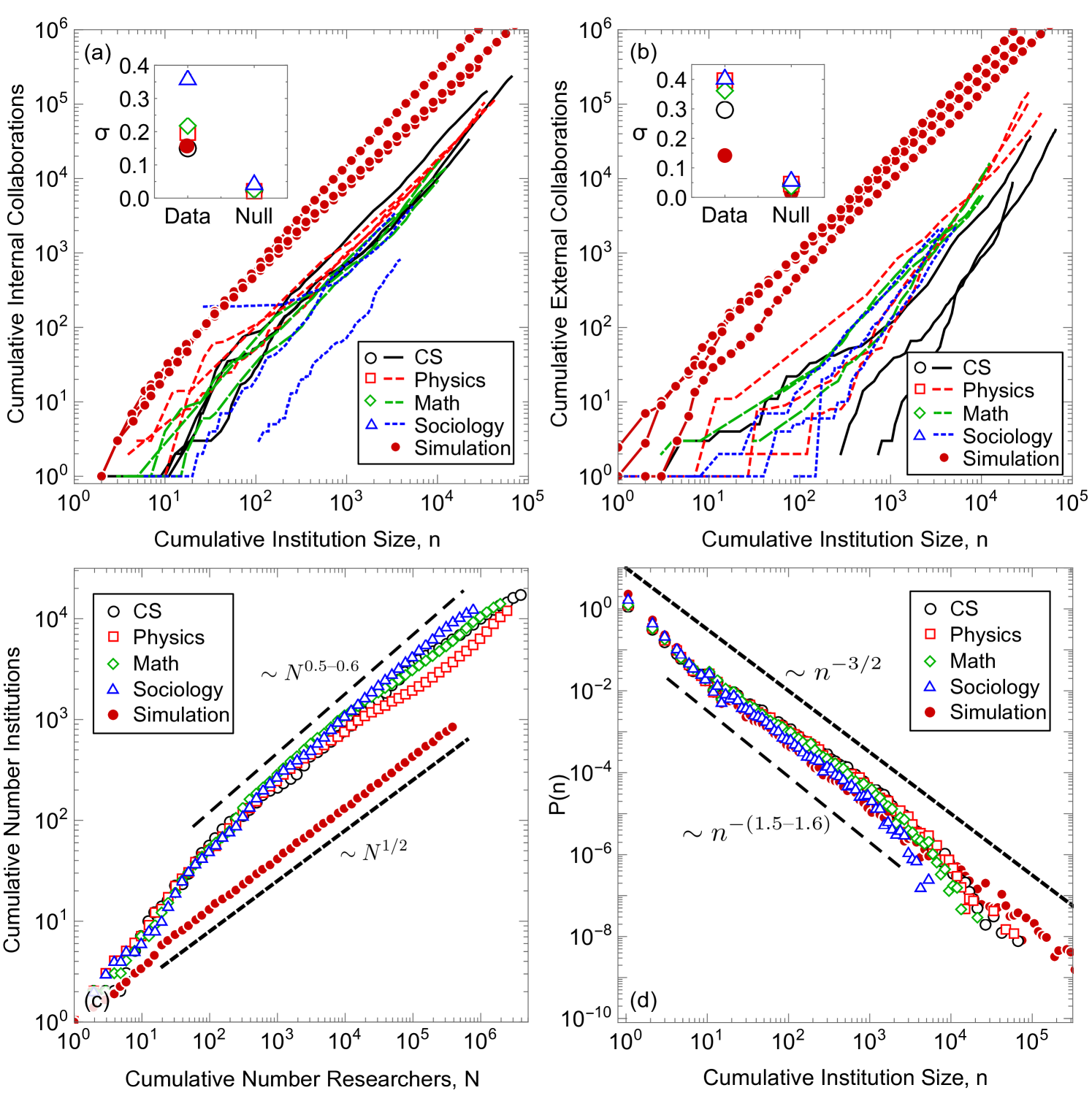

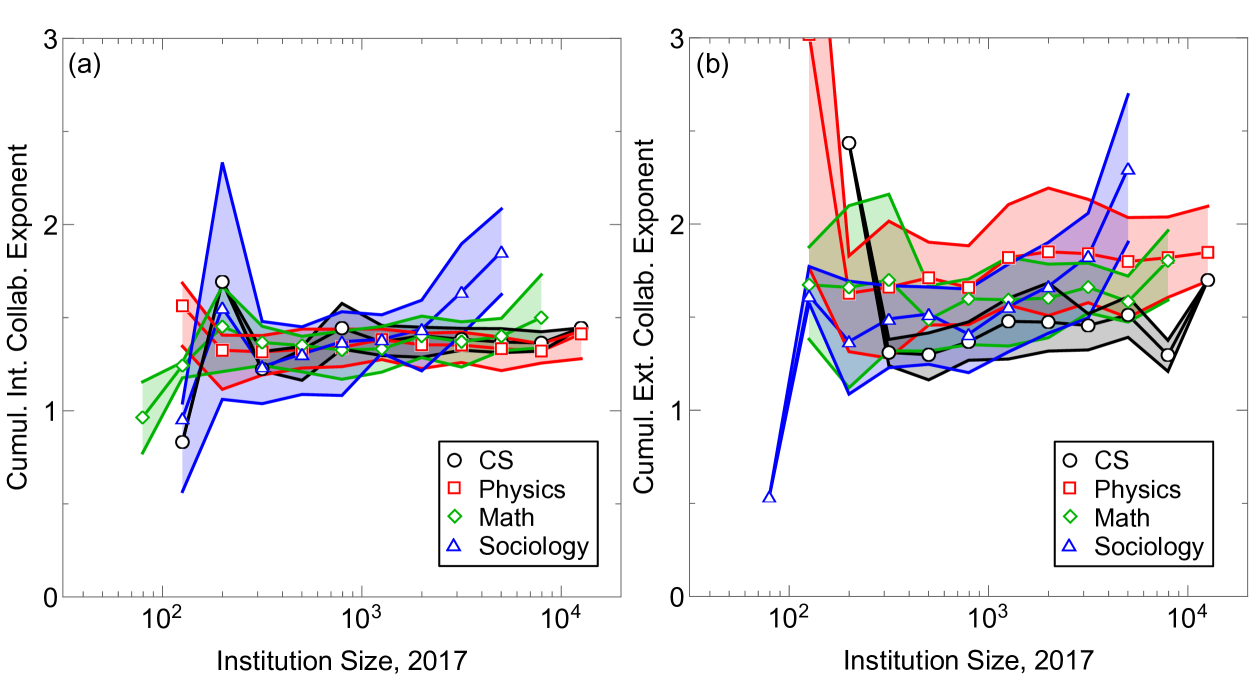

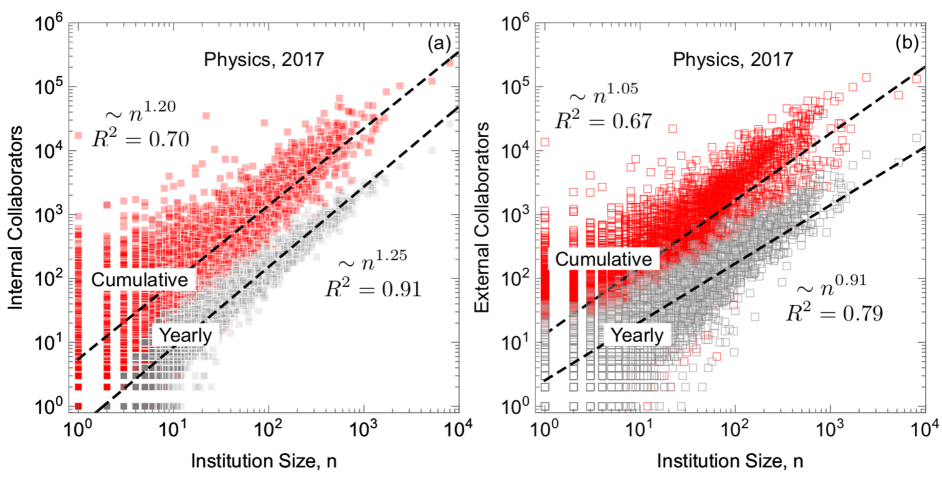

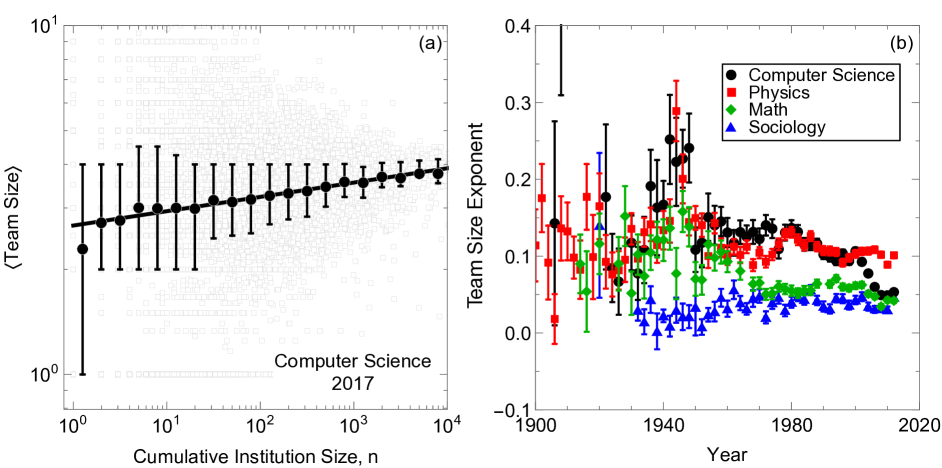

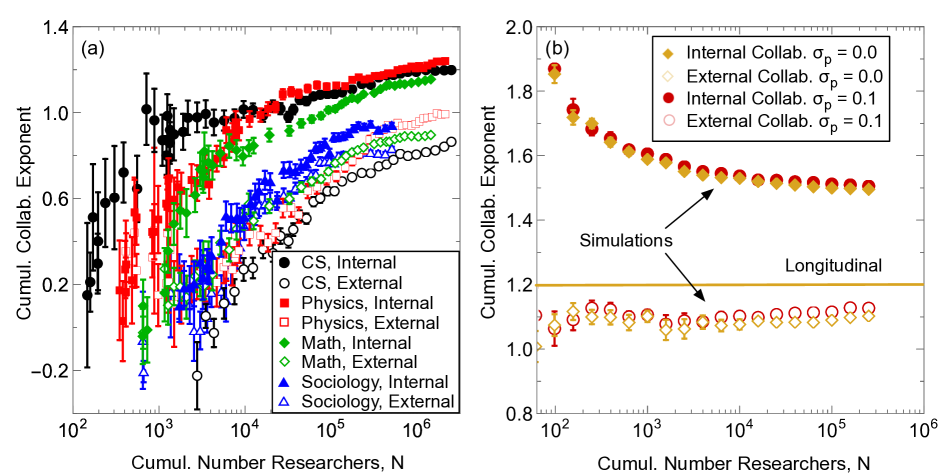

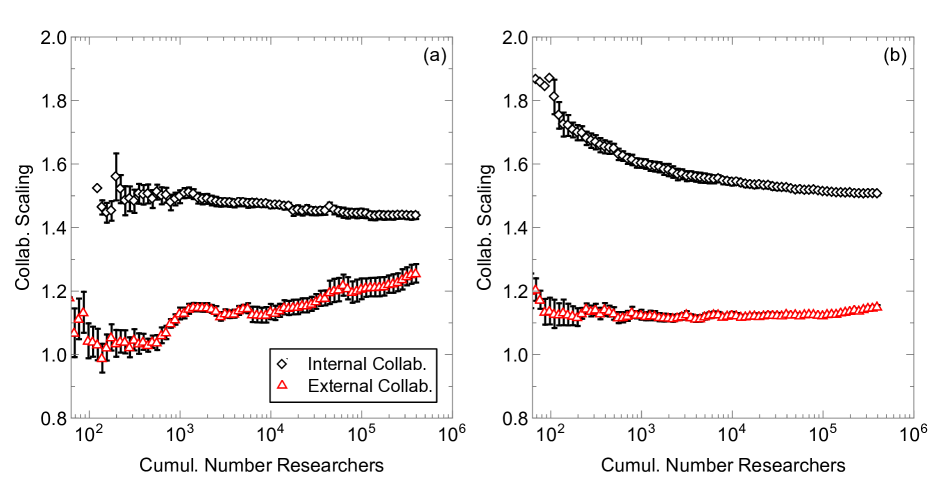

As the first step towards characterizing the complexity of institution scaling, we use large longitudinal data to measure how collaborations scale with institution size . Figure 2a–b shows how the number of internal and external collaborations changes over time for several institutions from different disciplines. While each institution follows a scaling law ( is consistently around 1, see Supplementary Note 6: Comparison Between Data and Simulations), the exponents differ substantially between institutions.

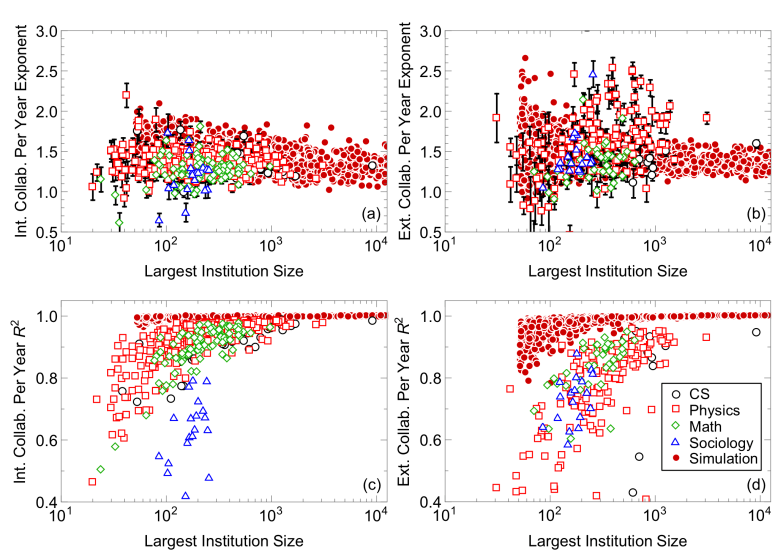

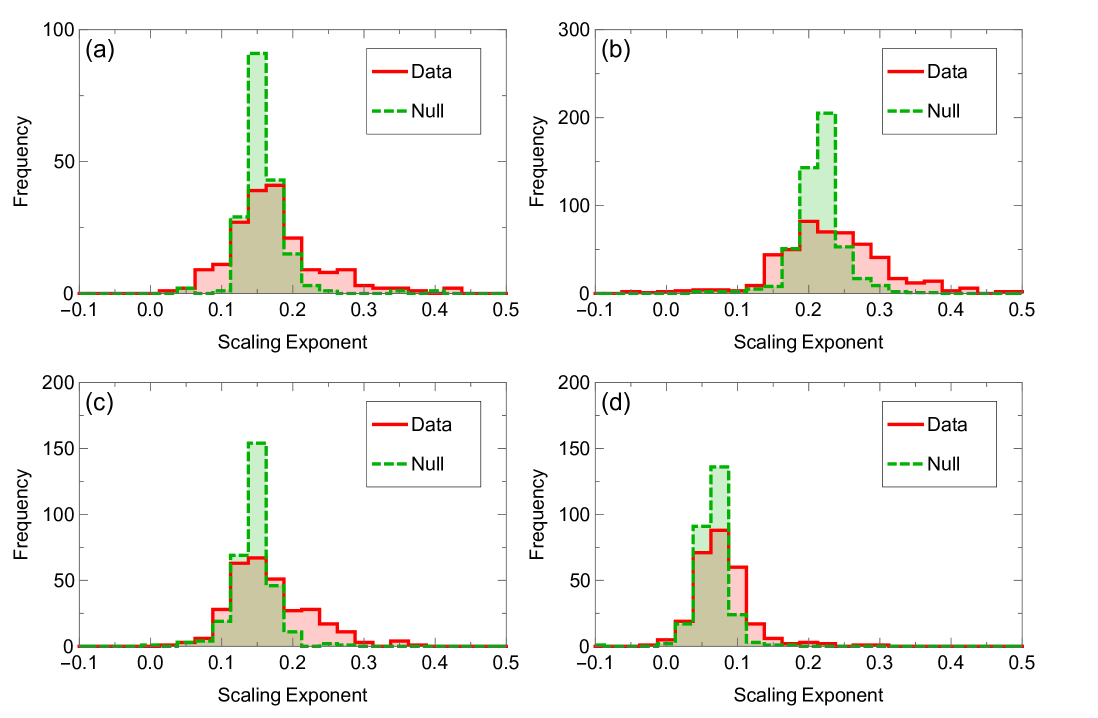

To show that the scaling exponents of all institutions are different, we create a null model (see SI Supplementary Note 3) in which all institutions follow the same scaling law. In this null model, residuals of each institution’s fitted scaling relation are reshuffled and added as noise onto a single scaling relation. Differences between fitted exponents in this model are due to statistical noise rather than different scaling laws. We find that the variance of the scaling laws across all institutions is much higher than this null model (insets of Fig. 2a–b). We therefore reject the hypothesis that all the exponents within a field are the same within statistical error. We explore the dependence of scaling on institution size, , in Supplementary Note 6: Comparison Between Data and Simulations, and find the scaling exponents are superlinear (approximately 1.2 on average) and independent of . This implies that parts of the collaboration network densify at different rates, which are intrinsic to each institution.

We find weak evidence that higher scaling exponents correspond to institutions with greater success. In physics, the Spearman rank correlation, , between mean paper impact after five years and internal collaboration scaling exponents is 0.09 (borderline significant, p-value) and for external collaboration is 0.27 (p-value ). Similarly, in sociology, the correlation is 0.19 (p-value) between impact and internal collaboration exponents, and the same correlation value is found for external collaboration exponents. For all other fields, however, the correlations are not statistically significant (p-value). Impact, a proxy of institution research quality, cannot fully explain why collaborations grow faster in some institutions and not others, but can give some insight into reasons for this diversity.

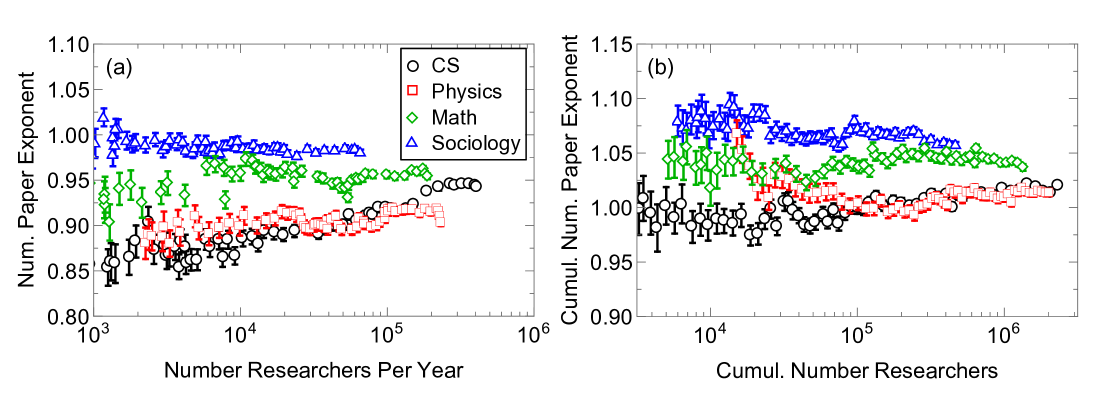

The superlinear scaling of collaborations cannot be explained by the higher productivity of researchers at larger institutions. When we look at institution output, i.e., the cumulative number of papers published by researchers affiliated with that institution at a given year, the scaling exponents of output are centered around 1.0 (see SI Supplementary Note 4: Scaling of Output). This suggests that paper output per researcher is approximately independent of institution size. Instead, we find that average team size per institution increases with institution size (see SI Supplementary Note 5: Scaling of Team Size), which explains the scaling of collaborations.

| Discipline | Heaps’ Law Exponent | Zipf’s Law Exponent |

|---|---|---|

| Comp. Sci. | ||

| Physics | ||

| Math | ||

| Sociology | ||

| Simulation |

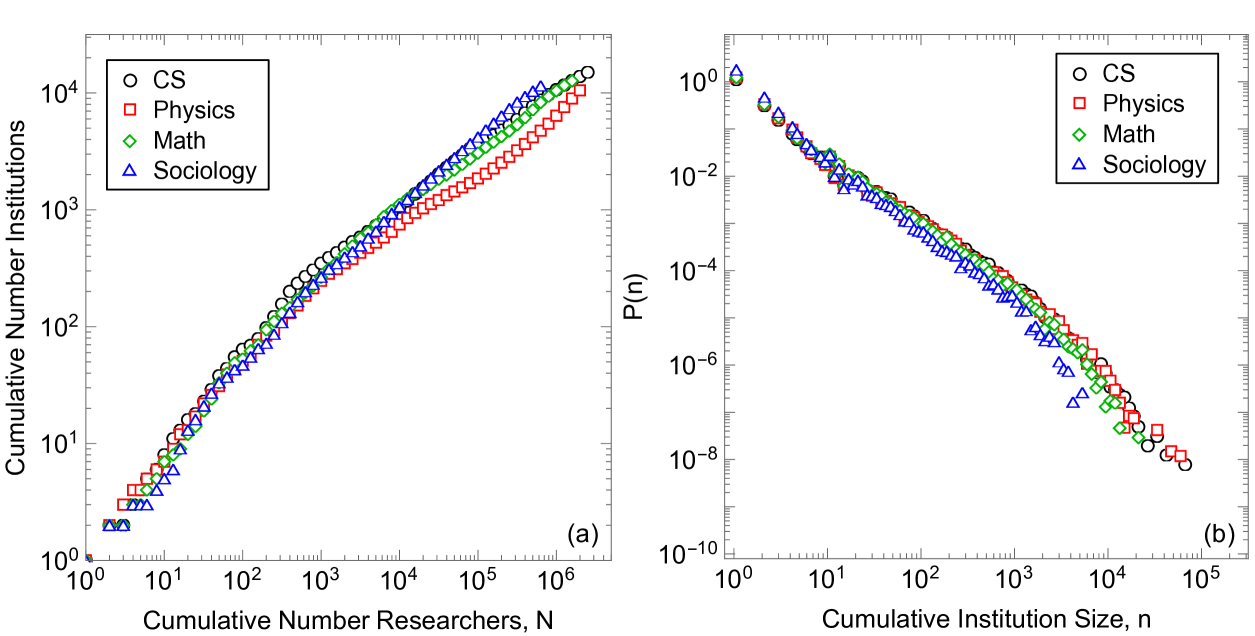

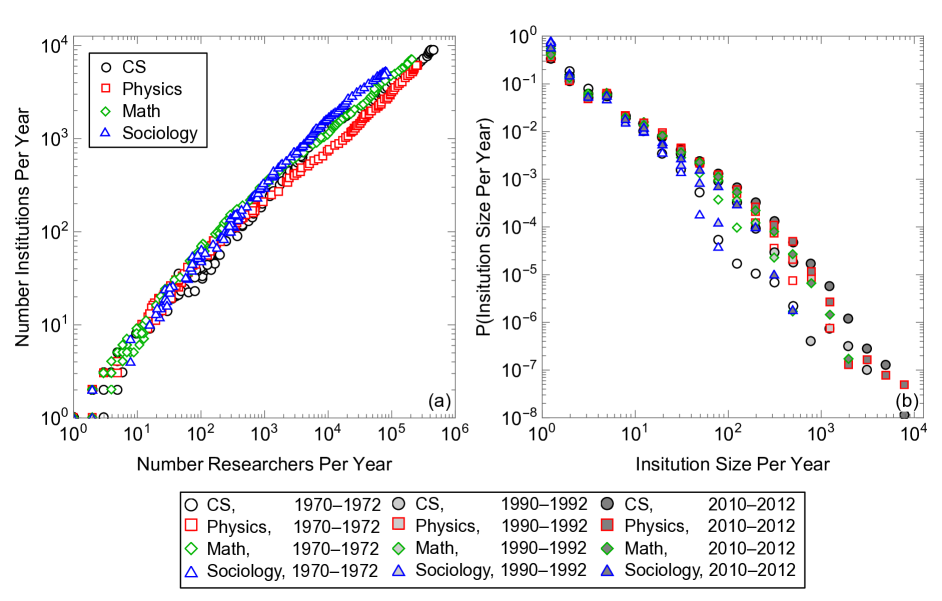

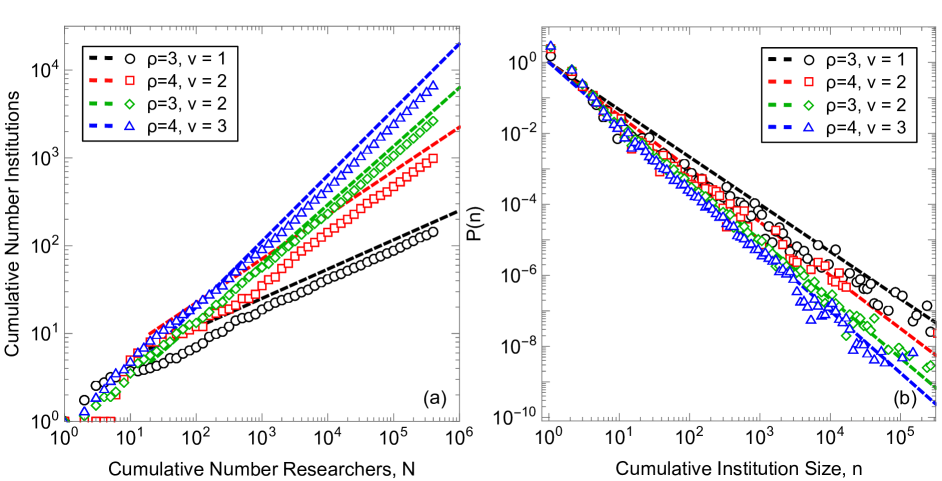

We also find that the number of institutions grows sublinearly with the number of researchers (Fig. 2c): as new researchers start their careers, new institutions eventually form. The number of institutions follows Heaps’ law (?). The distribution of institution sizes (as of 2017), on the other hand, follows Zipf’s law (Fig. 2d), similar to the observed heavy-tailed distribution of city sizes (?, ?). Exact scaling law values for each field can be found in Table 1, where scaling laws are calculated for the number of researchers, , greater than twenty and institution size, , greater than ten.

A Model of Institution Growth

We now describe a stochastic growth model of institution formation that elucidates how institutions and collaborations jointly grow. We model institution formation and growth with a Pólya’s urn-like set of mechanisms described in (?), and we model the growth of collaborations with a network densification mechanism (?, ?). Unlike existing models of network densification (?, ?, ?), however, our model reproduces the heterogeneous densification of internal and external collaborations, and the non-trivial growth structure on institutions.

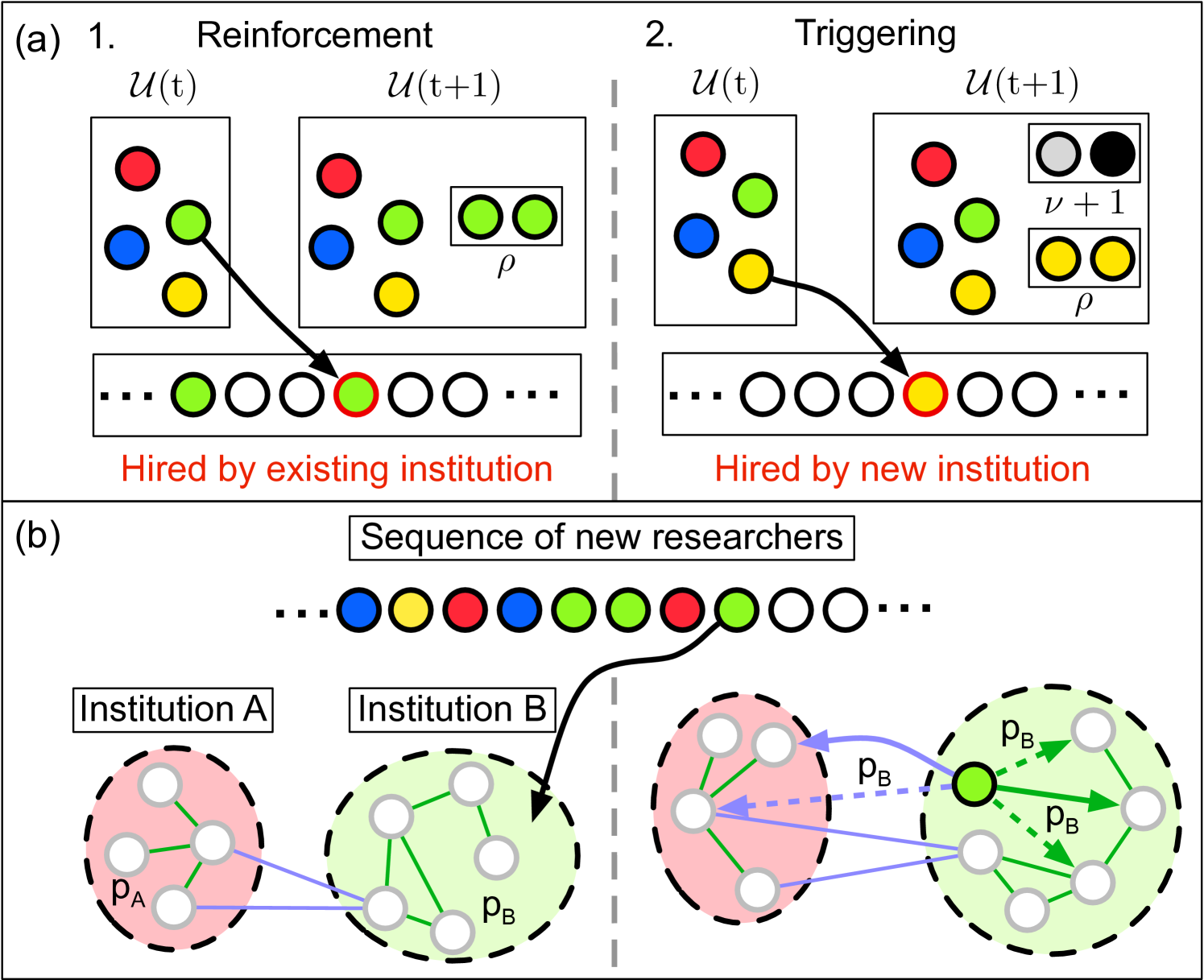

We imagine an urn containing balls of different colors, with each color representing a different institution, as shown in Fig. 3a. Balls are picked with replacement, each pick representing a newly hired researcher, and the ball color is recorded in a sequence to represent what institution hires the researcher. Afterwards balls of the same color are added to the urn to represent the additional resources and prestige given to a larger institution, known as “reinforcement” (left panel of Fig. 3a) (?). If a previously not seen color is chosen, then uniquely-colored balls are placed into the urn, a step known as “triggering” (right panel of Fig. 3a) (?). The new colors represent institutions that are able to form because of the existence of a new institution. For example, UC Davis was spun out of UC Berkeley, and USC Institute for Creative Technology was spun out of USC Information Sciences Institute, which itself was founded by researchers from the Rand Corporation. This model predicts Heaps’ law with scaling relation and Zipf’s law with scaling relation (?). In our simulations, we chose and , which agrees well with the data shown in Fig. 2.

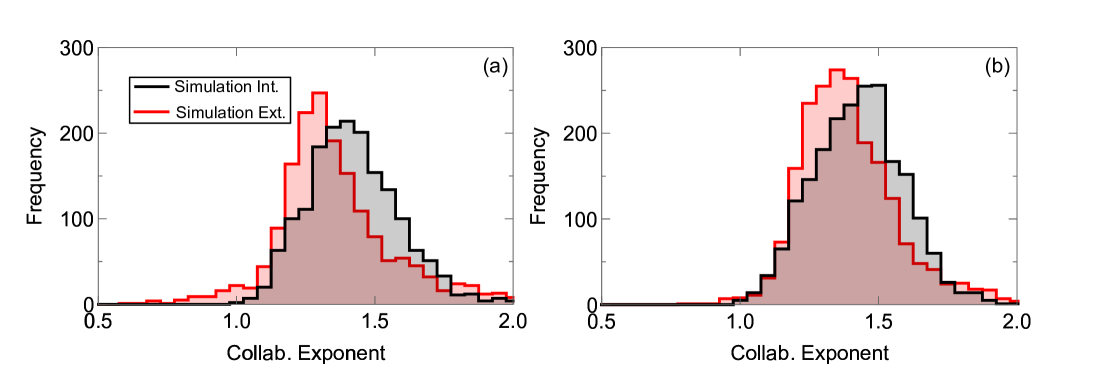

Next, we explain heterogeneous and superlinear scaling of collaborations through a model of network densification. Building on the work of (?, ?), we have each new researcher, represented as a node, connect to a random researcher within the same institution, as well as an external researcher picked uniformly at random (left panel of Fig. 3b). New collaborators are then chosen independently from neighbors of neighbors with probability , where is unique to each researcher’s institution (right panel of Fig. 3b). We let be a Gaussian distributed random variable with mean, , and standard deviation, and truncated between 0 and 1. We show separately that directly controls the heterogeneity we observe in internal collaboration scaling, but the heterogeneity in external collaboration scaling is an emergent outcome of this model (?).

To summarize, our model has four parameters: , , , and . This model reproduces Heaps’ and Zipf’s laws (Fig. 2c–d and Table 1) and the heterogeneous scaling of internal and external collaborations shown in Fig. 2a–b. While other plausible mechanisms for Zipf’s law (?, ?, ?), Heaps’ law (?), or densification (?) exist, the current model describes these patterns in a cohesive framework and explains the heterogeneous scaling we discover in the data. While this heterogeneity is built into our internal scaling laws, the external scaling heterogeneity is a uniquely emergent property within the model (?).

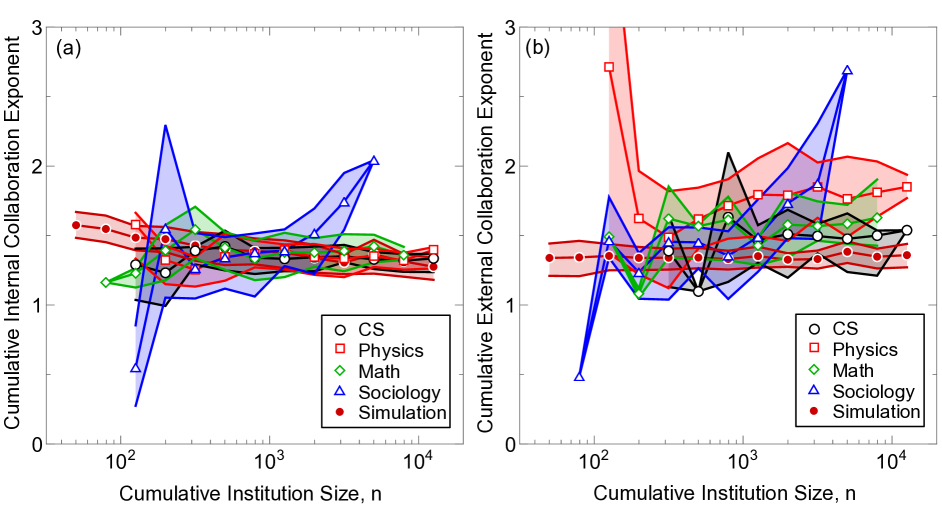

The model also reproduces qualitative trends of cross-sectional analysis. Specifically, the scaling exponents of internal collaborations produced by the model when measured at a specific point in time, i.e., in cross-sectional setting, vary in time and are larger than scaling exponents of external collaborations and decrease over time (SI Supplementary Note 6: Comparison between Data and Simulations), unlike what we see in data (SI Supplementary Figure 3). These results are robust to stochastic variations of the densification mechanism (SI Supplementary Note 7: Simulations of Alternative Mechanisms). As a final comparison with data, we compared the growth of institutions and the ways links form to the model mechanisms and found broad agreement (?).

Discussion

We identify strong statistical regularities in the growth of research institutions. The number of collaborations increases superlinearly with institution size, i.e., faster than institutions grow in size, though the scaling is heterogeneous, with a different exponent for each institution. The superscaling is not explained by the increased productivity of researchers at larger institutions—the number of papers per researcher is roughly independent of institution size. Instead, the growing collaborations are associated with bigger teams at larger institutions. The diversity in collaboration scaling exponents is partly explained by variations in institution impact. Institutions with higher impact papers also tend to have a larger scaling exponent. This provides evidence that a higher collaboration scaling exponent allows for collaborations to form more easily, and that in turn creates higher-impact papers. Further analysis is needed to test this hypothesis in the future.

When these observations are incorporated into a minimal stochastic model of institution growth, we are able to reproduce the surprising regularity of research institution formation, growth and the heterogenous densification of collaboration networks. These findings support the idea that academic environments differ in their ability to bolster researcher productivity and prominence (?), and also demonstrate that institution size and ability to facilitate collaborations as a potential factor explaining differences in academic environments. Additional research is also needed to identify other factors that contribute to an institution’s success.

Methods & Materials

Data

We use bibliographic data from Microsoft Academic Graph (MAG), from which researcher names (authors), their institutional affiliation, and references made to other papers have been extracted (?, ?). MAG data has disambiguated institutions and authors for each paper, allowing us to consider all authors with the same unique identifier to be the same researcher, and similarly for each institution. The MAG data only records one affiliation per researcher per paper, even when the researcher may have multiple affiliations in a given paper. Such cases are rare, especially among older papers (?), and thus unlikely to affect our results. We focus on four fields of study: computer science, physics, math and sociology. After data cleaning, we have almost ten million papers published between 1800 and 2018 (see SI Supplementary Note 1). Our computer science data includes early research in topics relating to computers, including electrical engineering, and therefore stretches back to before 1900.

We define institution size in a given year as the number of authors who have been ever been affiliated with that institution up until that year. Collaborations are defined as two researchers who have co-authored a paper up until that year. We distinguish between internal collaborations (co-authors at the same institution) and external collaborations (co-authors affiliated with different institutions). Finally, to understand the relation between collaborations and institution size, we define output as the cumulative number of papers from researchers affiliated with an institution in a particular year.

Analysis

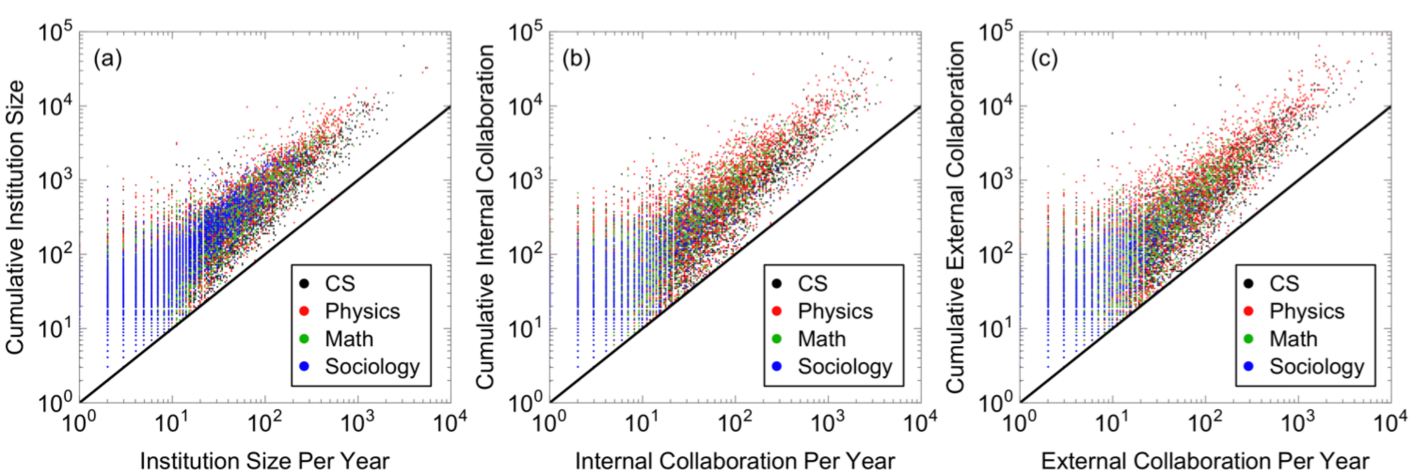

We use cumulative statistics to reduce statistical variations and to better compare to a stochastic growth model of institution formation. To check the robustness of results, we compare to an alternate yearly definition of institution size and collaborations (see SI Supplementary Note 2: Cumulative versus Yearly Measures). We find all qualitative results are the same, in part because both definitions are highly correlated.

We present scaling results for longitudinal analysis, which tracks how collaborations evolve as individual institutions grow (?, ?, ?). This contrasts to cross-sectional analysis applied in previous work on city scaling (?, ?) and institution scaling (?), which measures collaborations as a function of size of all institutions at a given point in time. We find that cross-sectional analysis identifies scaling laws that are not representative of the growth of most institutions (see Supplementary Note 7: Robustness Check of Simulations), and while simulations and empirical data give scaling exponents that are fairly constant in time for each institution, cross-sectional scaling exponents vary in time for both data and simulation. For these reasons, we focus on longitudinal scaling analysis in this paper, although scaling laws derived by either analysis method strongly relate to each other (?, ?).

1 Supporting Information

Supplementary Note 1: Data

We use bibliographic data from Microsoft Academic Graph (MAG)111https://www.microsoft.com/en-us/research/project/microsoft-academic-graph/, from which researcher names (authors), their institutional affiliations, and references made to other papers have been extracted (?, ?). MAG data has disambiguated institutions and authors for each paper, allowing us to consider all authors with the same unique identifier to be the same researcher, and similarly for each institution. The data only records one affiliation per researcher per paper, even when researchers may have multiple affiliations within a given paper. However, multiple affiliations are rare (?) and can be safely ignored.

The MAG data enables us to measure institution size (the number of published authors affiliated with the institution), productivity (number of papers written), and collaborations (co-authors of the same paper), both within and among institutions. We gather data from papers published in four fields of study between 1800 and 2018: computer science (14,666,855 papers), physics (8,428,923 papers), math (6,192,706 papers), and sociology (4,407,288 papers). Because the metadata for MAG are extracted automatically, many papers have some missing values among extracted names, references, institution, or year published. As part of the data cleaning process, we remove papers with missing fields, and also papers with more than 25 authors. These many-authored papers only represent of all physics papers, and of papers in other fields but are removed because they may be too large to constitute a meaningful collaboration between any individuals. This leaves 3,916,332 computer science papers, 2,494,000 physics papers, 2,370,712 math papers, and 1,115,841 sociology papers.

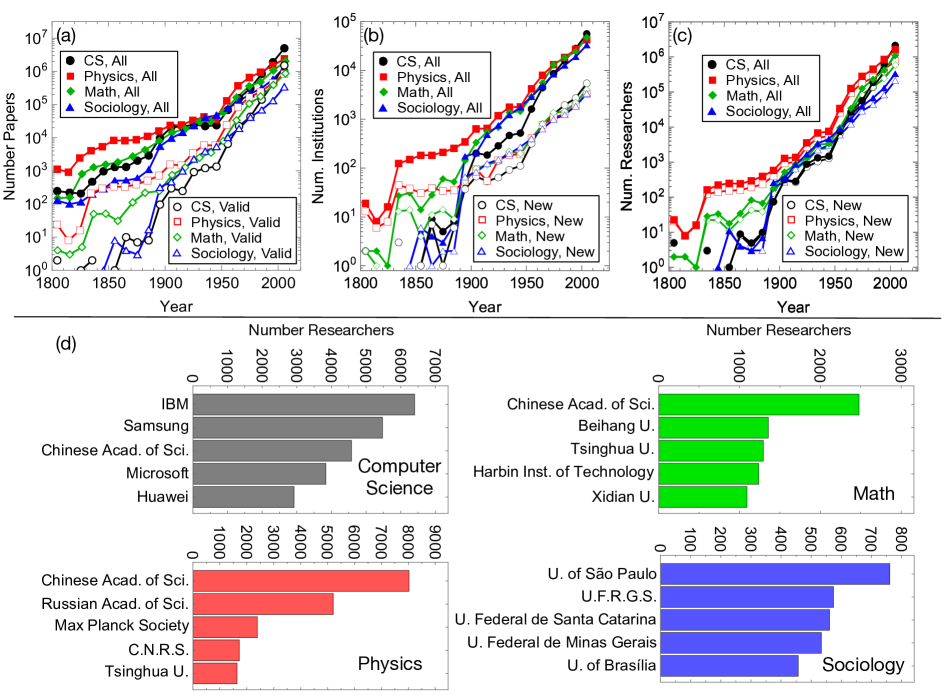

Supplementary Figure 4 shows the descriptive statistics of the data, including the growth of the number of researchers, institutions, and papers published in the four disciplines, and the five largest institutions in each field. Notably, while the largest Physics, Sociology, and Math institutions are universities, the largest computer science institutions are often companies. Figure 2 in the main text demonstrates that institution sizes are broadly distributed with many smaller than 10 researchers, and some larger than . While Supplementary Figure 4 shows that the largest institutions are intuitive, such as Harvard, we separately check the quality of the data for small institutions. We randomly sampled 44 institutions in each field with fewer than 10 researchers as of 2017. We observe that they tend to be for-profit colleges, community colleges, and institutions without a formal department in the field of interest (e.g., an engineering school with papers in sociology). That said, we see the journals they publish in tend to be well-aligned with the field, therefore the small institutions were not associated with a particular field by mistake. While these are qualitative checks, they nonetheless show that the data and institutions found are reasonable.

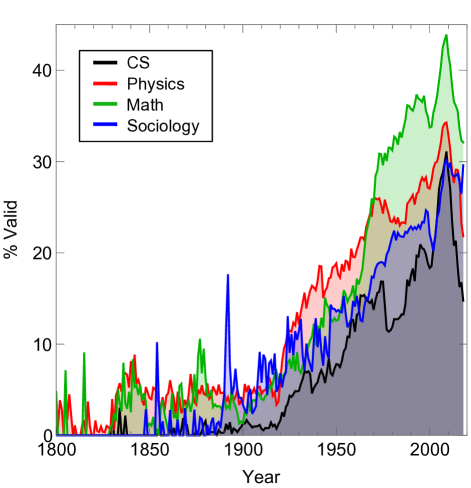

We also analyze the quality of MAG’s data over time in Fig. 5. This figure shows the percentage of papers considered valid (containing year, author, and affiliation). We find surprisingly few papers are considered valid before 1900, while even when the data quality is highest around 2000, the minority of papers are considered valid. This demonstrates bias in data sampling, and is a potential limitation of our work. Nonetheless, we show in Supplementary Figure 13 & 14 that the number of papers per author is roughly independent with institution size. If the sampling bias had an increasing preference towards, e.g., large institutions, then this finding would not have held. Furthermore, Supplementary Figure 6 shows that removing half of all data, or removing data pre-1950 (where the percent of valid data is low), does not substantially affect the scaling relations. In that plot, we show the scaling relations versus institution size in 2017 for all institutions studied. Data is qualitatively and quantitatively the same despite the significant drop in the number of papers studied. These robustness tests suggest that undersampling does not affect our overall conclusions.

Finally, because we include all research output, including journal papers and patents, we check the robustness of our findings when just including the standards of academic research: journals and conference proceedings. In Table 2, we find that between 57%-90% of all documents are in these two categories, with 90% in sociology and only 57% in computer science, presumably because research output in that field is often patents. In Supplementary Figures 7 & 8, however, we show that our major findings are qualitatively unchanged. We see a strong Heaps’ law and Zipf’s law that looks very similar to the main text (Fig. 7). Next, we find in Fig. 8, that collaboration scaling laws are virtually unchanged from the main text figures, thus our results are robust to different forms of data cleaning.

| Data | Journal & Conference | Other | Total | % J&C |

|---|---|---|---|---|

| CS | 1571485 | 1172424 | 2743909 | 57% |

| Physics | 1953468 | 287740 | 2241208 | 87% |

| Math | 1567928 | 401392 | 1969320 | 80% |

| Sociology | 913549 | 101146 | 1014695 | 90% |

Supplementary Note 2: Cumulative versus Yearly Measures

While the main text measured the cumulative size of institutions and collaborations, the findings are qualitatively the same if the growth of research institutions was measured on a year-to-year basis. Institution size is therefore the number of active authors affiliated with that institution who published in that particular year. Collaborations were similarly based on papers published that year, etc.

Figure 4 shows the growth of four academic disciplines, including (a) the number of published papers, (b) the number of institutions and (b) the number of researchers each year all increase exponentially, regardless of whether these are measured on the cumulative (all) or year-to-year basis.

| Data | Size | Internal Collab. | External Collab. |

|---|---|---|---|

| CS | 0.85 | 0.83 | 0.83 |

| Physics | 0.85 | 0.84 | 0.84 |

| Math | 0.84 | 0.82 | 0.82 |

| Sociology | 0.81 | 0.71 | 0.71 |

Figure 9 further demonstrates the robustness of our results, regardless of how they are measured. This figure shows that the cumulative institution size, cumulative number of internal and external collaborations are well correlated with their year-to-year values. The correlations are in Table 3, where Spearman correlations are 0.7–0.8 or higher. Comparison between yearly and cumulative results can also be seen in Fig. 10 where we show cross-sectional collaboration scaling for researchers active in 2017 as well as cumulative collaboration scaling for cumulative institution size.

Figure 11 reproduces Fig. 4 in the main text, except we calculate the yearly number of researchers, institutions, and institution size. Figure 11a shows the number of institutions in a given year versus the number of researchers in a given year. We see, much like in the main text, a sub-linear scaling between the number of institutions and researchers. Figure 11b shows the institution size distribution. Importantly, the institution size distribution might change over time, therefore we plotted the institution size per year for 1970–1972, 1990–1992, and 2010–2012, and found the distribution was extremely stable in time and across fields.

Figure 12 shows the year-to-year collaboration scaling exponents versus the largest institution size, as well as the quality of the linear model fits (). We see, much like the main text, a large variance in the exponent values, but that they do not significantly change with institution size. That said, the scaling law is higher for large institutions in agreement with the expectation that the scaling law works best in the large- limit of institution sizes. This is also similar to what was found in the main text for cumulative sizes.

Supplementary Note 3: Homogeneous Densification (Null) Model

To understand whether the observed heterogeneity in longitudinal scaling laws is due to statistical noise, we create a null model that assumes a homogenous scaling exponent for all institutions. To create the null model, we fit a scaling law for each institution, keeping the residual values, , along with their positions, creating a set of pairs . The null model homogeneous scaling law, , is the average scaling laws, , across all institutions, weighted by the inverse of the standard error squared, . For each institution, we randomly permute the residuals to create new data: . Because of random permutation of residuals, we assume the data are homoscedastic, but make no other assumptions, not even whether the residuals are normally distributed. After refitting each new set of points for each institution, we expect the new null model coefficient for each institution to fluctuate around due to noise. To see whether the distribution of null model coefficients differs from the empirically derived coefficients, we use the Kolmogorov-Smirnov test on these two distributions (?). We find almost invariably that the two distributions differ with p-value .

Supplementary Note 4: Scaling of Output

We define institution output as the cumulative number of papers written by researchers affiliated with that institution. Cross-sectional scaling laws are surprisingly stable in time, with a value of almost exactly 1.0, as shown in Fig. 13. This means that the output per person is independent of institution size. This holds also for different disciplines, regardless of whether we look at annual output or cumulative output. We explore the scaling laws in longitudinal data as well in Fig. 14. These results also show approximately linear scaling relationships.

Figure 14 shows the scaling exponents of institution output versus institution size. The scaling exponents are centered around 1.0, although these scaling laws differ between institutions. This suggests, surprisingly, that paper output per researcher is approximately independent of institution size. That being said, when we compare the longitudinal data to a homogeneous scaling null model, we find that institutions have a greater variance in their scaling laws than the null model predicts. This means that some institutions create slightly more papers per person as the institution grows, while others show a reduction in output. The overall effect, however, appears to be subtle. Overall, institution size appears to affect collaborations much more than output.

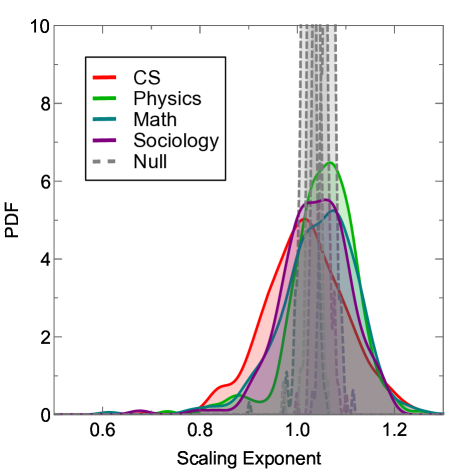

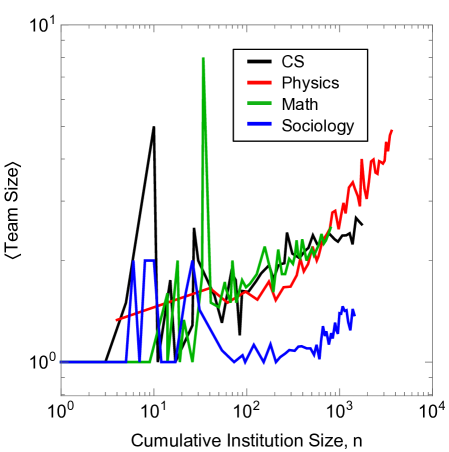

Supplementary Note 5: Scaling of Team Size

Team size measures the number of co-authors on a single paper. Prior work has shown that team size has grown over time, with papers produced by larger teams getting more citations compared to papers written by smaller teams (?). We analyze whether institution size benefits team size via both cross-sectional and longitudinal analysis. As shown in Fig. 15, we find that team size would seem to scale positively with institution size but the scaling relations can vary significantly in time (Fig. 15b). While the scaling laws are not universal, Fig. 15a shows that the fit to a line is remarkably strong.

When we analyze data longitudinally, we see a more complete picture. Namely, Fig. 16 shows that team size scales positively, but the scaling laws differ significantly between institutions. We explore this more thoroughly in Fig. 17 where we plot a histogram of scaling exponents, whose distribution is wider than the null model (KS-test p-value ). We see that there is both significant heterogeneity and generally larger scaling exponents than cross-sectional analysis would predict. For example, while cross-sectional analysis shows Physics has a scaling law of about 0.1, longitudinal analysis instead shows that each institution scales with an exponent of roughly 0.2.

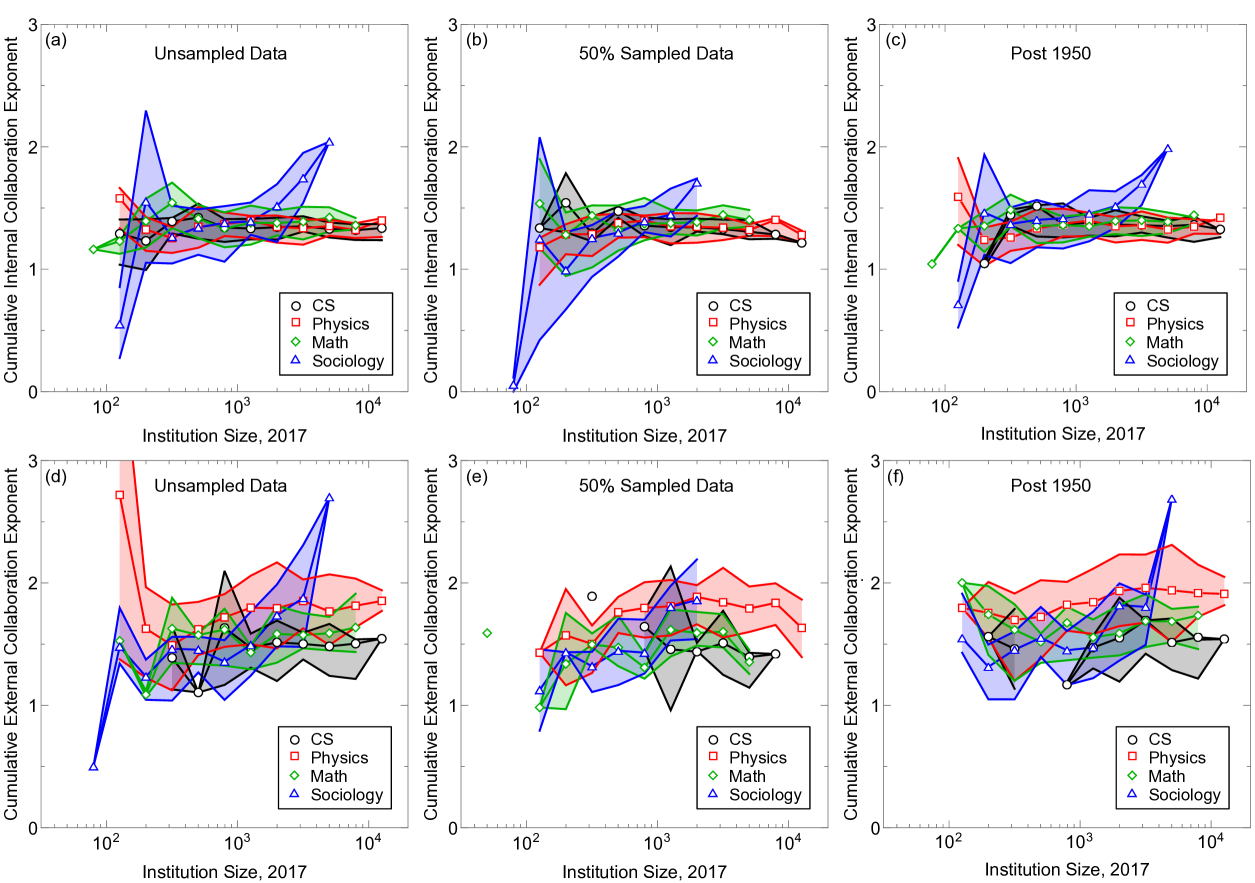

Supplementary Note 6: Comparison Between Data and Simulations

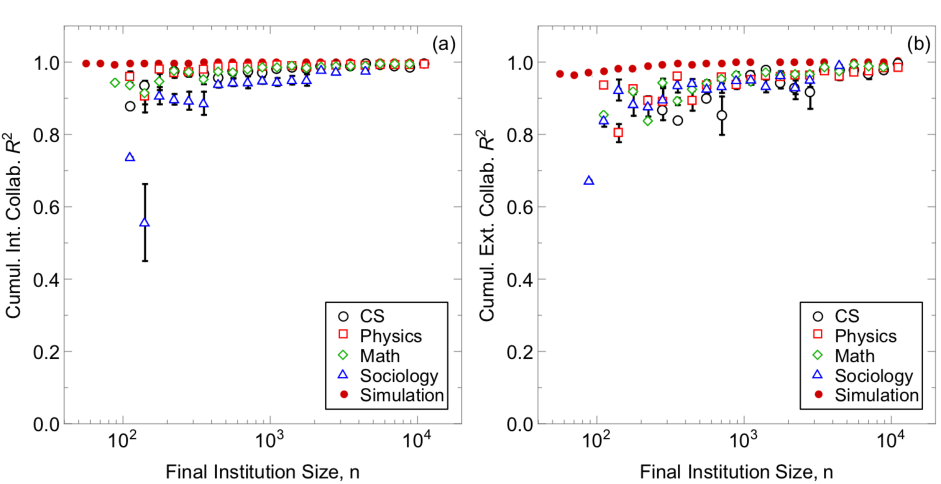

For the rest of the SI, we will just discuss cumulative results. Figure 19a is similar to the main text by showing that cumulative cross-scaling exponents vary, but here the x-axis is the total number of researchers who have authored a paper up until that date. This slightly unusual x-axis allows us to compare these results to cross-sectional scaling in simulations shown in Fig. 19b. Parameters in some of these simulations are the same as the main text, with , , , and . For these parameters, we discover that, much like in the data, internal scaling laws are higher than external scaling laws, even though, for each institution, both should be centered around the horizontal lines labeled “longitudinal” (which corresponds to the mean values in the longitudinal analysis. We also notice that, like the data, the exponents vary as a function of the total number of researchers. These simulations do not just allow us to reproduce results, however, but we can make contrapositive hypotheses. For example, what would the statistics look like if there was no statistical variation in the longitudinal scaling laws? To better understand this, we let in Fig. 19b, and discover that the results are quantitatively almost exactly the same. If institutions had the exact same scaling laws and the exact same constant coefficients, then the cross-sectional and longitudinal scaling laws would be the same. These discrepancies point to either finite size effects or different constant coefficients are dominant factors in explaining why cross-sectional scaling and longitudinal scaling laws differ and vary in time, at least in simulations. We hypothesize similar effects in empirical data as well, although there are qualitative differences in data, such as scaling laws increasing rather than decreasing which point to limitations of the simulations. We also show in Fig. 18 that internal and external scaling laws sare intrinsic, and do not vary significantly with institution size. Moreover, there is significant variance in these scaling laws that our simulation can capture.

Focusing on longitudinal scaling, however, we observe in Fig. 20 that the data and simulations are both well-characterized by linear relations in log-log space. Namely, the figure shows that a linear fit of log(collaborations) versus log(institution size) have an value of nearly 1 for each institute. If their size as of 2017 is large, then is even closer to 1, in agreement with what we should expect in the thermodynamic limit (where finite size effects are negligible). The data is therefore well-characterized as a power law, but these power law values vary between institutions, as shown in Fig. 3 of the main text.

Theory surrounding the Polya’s urn portion of our model is discussed in detail in previous work (?). Nonetheless, we check the robustness of this theory in Fig. 21. We find excellent agreement between the theory and simulation, demonstrating that, even for finite sizes, the theory they developed accurately explains the simulation patterns.

Supplementary Note 7: Robustness Check of Simulations

We might wonder whether our model is sensitive to stochastic variations in how the model behaves. For example, we might ask whether changing the number of initial collaborators from 1 to a range of values will affect results. To this end, we made an additional model in which the number of initial internal and external collaborators was Poisson distributed, with (i.e., on average one internal and one external collaborator). This will not affect the institution formation, but it might affect the institution growth, e.g., the longitudinal collaboration scaling exponents. Importantly, Bhat et al. and Lambiotte et al. shows that number of links over time are not self-averaging (?, ?), therefore initial conditions greatly affect the final number of links. Figures 22 & 23 show our results. In Fig. 22, we find that, while there are slightly more outliers in the scaling exponent distribution, results are quantitatively very similar. In Fig. 23a we find that external collaboration cross-sectional scaling exponents increase with the cumulative number of researchers, more alike to what we see in empirical data (Fig. 2 main text), and the internal collaboration exponents are stationary. In Fig. 23b, however, we find that the external collaboration exponents are mostly stationary in the original form of the model, while internal collaboration exponents decrease with the cumulative number of researchers.

References

- 1. D. Hicks, J. S. Katz, Science and public policy 23, 39 (1996).

- 2. R. C. Taylor, et al., ArXiv Preprint: arXiv:1910.05470 (2019).

- 3. S. Fortunato, et al., Science 359, eaao0185 (2018).

- 4. R. Sinatra, D. Wang, P. Deville, C. Song, A.-L. Barabási, Science 354 (2016).

- 5. D. Wang, C. Song, A.-L. Barabási, Science 342, 127 (2013).

- 6. R. Guimera, B. Uzzi, J. Spiro, L. A. N. Amaral, Science 308, 697 (2005).

- 7. S. Wuchty, B. F. Jones, B. Uzzi, Science 316, 1036 (2007).

- 8. S. Milojević, Proceedings of the National Academy of Sciences 111, 3984 (2014).

- 9. S. F. Way, A. C. Morgan, D. B. Larremore, A. Clauset, Proceedings of the National Academy of Sciences 116, 10729 (2019).

- 10. P. Deville, et al., Scientific Reports 4, 4770 EP (2014).

- 11. L. Wu, D. Wang, J. A. Evans, Nature 566, 378 (2019).

- 12. B. F. Jones, The Review of Economic Studies 76, 283 (2009).

- 13. S. E. Page, The diversity bonus: How great teams pay off in the knowledge economy, vol. 5 (Princeton University Press, 2019).

- 14. Y. Dong, H. Ma, J. Tang, K. Wang, arXiv preprint: 1806.03694 (2018).

- 15. A. Yegros-Yegros, I. Rafols, P. D’Este, PloS one 10, e0135095 (2015).

- 16. J. Leskovec, J. Kleinberg, C. Faloutsos, ACM Trans. Knowl. Discov. Data 1 (2007).

- 17. U. Bhat, P. L. Krapivsky, R. Lambiotte, S. Redner, Phys. Rev. E 94, 062302 (2016).

- 18. R. Lambiotte, P. L. Krapivsky, U. Bhat, S. Redner, Phys. Rev. Lett. 117, 218301 (2016).

- 19. G. K. Zipf, Human Behavior and the Principle of Least Effort: An Introduction to Human Ecology (Addison-Wesley Press, Inc., Cambridge, MA, 1949).

- 20. L. Lü, Z.-K. Zhang, T. Zhou, PLOS ONE 5, 1 (2010).

- 21. F. Simini, C. James, EPJ Data Science 8, 24 (2019).

- 22. F. Tria, V. Loreto, V. D. P. Servedio, S. H. Strogatz, Scientific Reports 4, 5890 EP (2014).

- 23. M. Batty, Nature 444, 592 (2006).

- 24. K. Burghardt, A. Percus, Z. He, K. Lerman, arXiv preprint:arXiv:XXXX (2020).

- 25. R. Gibrat, Les inegalites economiques; applications: aux inegalites des richesses, a la concentration des entreprises, aux populations des villes, aux statistiques des familles, etc., da une loi nouvelle, la loi de la effet proportionnel (Librairie du Recueil Sirey, Paris, 1931).

- 26. J. Eeckhout, American Economic Review 94, 1429 (2004).

- 27. R. L. Axtell, Science 293, 1818 (2001).

- 28. A. Sinha, et al., Proceedings of the 24th international conference on world wide web (ACM, 2015), pp. 243–246.

- 29. D. Herrmannova, P. Knoth, D-Lib Magazine 22 (2016).

- 30. H. Hottenrott, C. Lawson, Scientometrics 111, 285 (2017).

- 31. J. Depersin, M. Barthelemy, Proceedings of the National Academy of Sciences 115, 2317 (2018).

- 32. M. Keuschnigg, Proceedings of the National Academy of Sciences 116, 13759 (2019).

- 33. F. L. Ribeiro, J. Meirelles, V. M. Netto, C. R. Neto, A. Baronchelli, PLOS ONE 15, 1 (2020).

- 34. L. M. A. Bettencourt, J. Lobo, D. Helbing, C. Kühnert, G. B. West, Proceedings of the National Academy of Sciences 104, 7301 (2007).

- 35. L. M. A. Bettencourt, Science 340, 1438 (2013).

- 36. L. M. A. Bettencourt, et al., J. R. Soc. Interface 17, 20190846 (2020).

- 37. F. J. Massey, Jr., Journal of the American Statistical Association 46, 68 (1951).

Acknowledgments

Research was funded by in part by DARPA under contract #W911NF1920271 and by the USC Annenberg Fellowship. Data, code used for data analysis, code for simulations is available in the following repository: https://github.com/ZagHe568/institution_scaling.