The Geometry of Nonlinear Embeddings in Kernel Discriminant Analysis

Abstract

Fisher’s linear discriminant analysis is a classical method for classification, yet it is limited to capturing linear features only. Kernel discriminant analysis, as an extension, is known to successfully alleviate this limitation through a nonlinear feature mapping. We study the geometry of nonlinear embeddings in discriminant analysis with polynomial kernels and the Gaussian kernel by identifying the population-level discriminant function, which depends on the data distribution and the kernel. To obtain the discriminant function, we solve a generalized eigenvalue problem with between-class and within-class covariance operators. The polynomial discriminants are shown to capture the class difference explicitly through the population moments. To approximate the Gaussian discriminant, we use a particular representation of the Gaussian kernel based on the exponential generating function for Hermite polynomials. We also show that the Gaussian discriminant can be approximated using randomized projections of the data.

Our results illuminate how the data distribution and the kernel interact in determining the nonlinear embedding for discrimination, and they provide a guideline for the choice of the kernel and its parameters.

Keywords: Discriminant analysis, Feature map, Gaussian kernel, Polynomial kernel, Rayleigh quotient, Spectral analysis

1 Introduction

Kernel methods have been widely used in statistics and machine learning for pattern recognition and analysis (Shawe-Taylor and Cristianini 2004, Schölkopf and Smola 2002, Hofmann et al. 2008). They can be described in a unified framework with a special class of functions called kernels encoding pairwise similarities between data points. Such kernels enable nonlinear extensions of linear methods seamlessly and allow us to deal with general types of data such as vectors, text documents, graphs, and images. Combined with problem-specific evaluation criteria typically in the form of a loss function or a spectral norm of a kernel matrix, this kernel-based framework can produce a variety of learning algorithms for regression, classification, ranking, clustering, and dimension reduction. Popular kernel methods include smoothing splines (Wahba 1990), support vector machines (Vapnik 1995), kernel Fisher discriminant analysis (Mika et al. 1999, Baudat and Anouar 2000), ranking SVM (Joachims 2002), spectral clustering (Scott and Longuet-Higgins 1990, von Luxburg 2007), and kernel principal component analysis (Schölkopf et al. 1998).

This paper concerns the geometry of kernel discriminant analysis (KDA). KDA is a nonlinear generalization of Fisher’s linear discriminant analysis (LDA), a standard multivariate technique for classification. Being intrinsically a dimension reduction method, KDA looks for discriminants that embed multivariate data into the real line so that decisions can be made easily in a low-dimensional space. For simplicity of exposition, we focus on the case of two classes. Fisher’s linear discriminant projects data along the direction that maximizes separation between classes. Extending this geometric idea, kernel discriminant analysis finds a data embedding that maximizes the ratio of the between-class variation to the within-class variation measured in the feature space specified by a kernel. To determine the embedding as a discriminant, we solve a generalized eigenvalue problem involving kernel-dependent covariance matrices.

We examine the kernel discriminant at the population level to illuminate the interplay between the kernel and the probability distribution for data. Of particular interest is how the kernel discriminant captures the difference between two classes geometrically, and how the choice of a kernel and associated kernel parameters affect the discriminant in connection with salient features of the underlying distribution. As a continuous analogue of the kernel-dependent covariance matrices, we define the between-class and within-class covariance operators first and state the population version of the eigenvalue problem using those operators which depend on both the data distribution and the kernel. For some kernels, we can obtain explicit solutions and determine the corresponding population kernel discriminants.

Similar population-level analyses have been done for kernel PCA and spectral clustering (Zhu et al. 1998, Shi et al. 2009, Liang and Lee 2013) to gain insights into the interplay between the kernel and distributional features on low dimensional embeddings for data visualization and clustering. The population analyses of kernel PCA, spectral clustering, and KDA require a spectral analysis of kernel operators of different forms depending on the method. They help us examine the dependence of eigenfunctions and eigenvalues of the kernel operators on the data distribution, which can guide applications of those methods in practice.

The population discriminants with polynomial kernels admit a closed-form expression due to their finite dimensional feature map. Analogous to the geometric interpretation of Fisher’s linear discriminant that it projects data along the mean difference direction after whitening the within-class covariance, the polynomial discriminants are characterized by the difference in the population moments between classes. By contrast, the Gaussian kernel does not allow a simple closed-form expression for the discriminant because its feature map and associated function space are infinite-dimensional. We provide approximations to the Gaussian discriminant instead using two representations of the kernel. These approximations shed some light on the workings of KDA with the Gaussian kernel. By using a deterministic representation of the Gaussian kernel with the Hermite polynomial generating function, we approximate the population Gaussian discriminant with polynomial discriminants of degree as high as desired for the accuracy of approximation. This implies that the Gaussian discriminant captures the difference between classes through the entirety of the moments. Alternatively, using a stochastic representation of the Gaussian kernel through Fourier features of random projections (Rahimi and Recht 2008a), we can also view the Gaussian discriminant as an embedding that combines the expected differences in sinusoidal features of randomly projected data from two classes.

How are the forms of these population kernel discriminants related to the task of minimizing classification error? To attain the least possible error rate, the optimal decision rule assigns a data point to the most probable class by comparing the probability of one class, say $\Pr(Y=1 \mid X=x)$, with that of the other, $\Pr(Y=2 \mid X=x)$, given $X=x$. In other words, the ideal data embedding for discrimination of two classes should be based on the likelihood ratio $p_1(x)/p_2(x)$ or its logarithm $\log\{p_1(x)/p_2(x)\}$, where $p_k$ denotes the density of class $k$. As a simple example, when the population distribution for each class is multivariate normal with a common covariance matrix, $\log\{p_1(x)/p_2(x)\}$ is linear in $x$, and it coincides with the population version of Fisher’s linear discriminant. A difference in the covariances brings additional quadratic terms to the log likelihood ratio, requiring a quadratic discriminant for the lowest error. As the distributions deviate further from the elliptical scatter patterns exemplified by normal distributions, the ideal data embedding according to $\log\{p_1(x)/p_2(x)\}$ will involve nonlinear terms beyond quadratic. The basic fact that each distribution can be identified with its moment-generating function or characteristic function, i.e., its Fourier transform, implies that any difference between two distributions can, in general, be described in terms of the moments or expected Fourier features. Our population analysis of kernel discriminants indicates that the Gaussian kernel treats the distributional difference as a whole, including both global and local (or low and high frequency) characteristics, while the polynomial kernels focus on differences in more global characteristics represented by low-order moments. The ideal choice of a kernel in KDA will inevitably depend on the mode of class difference mathematically expressed through the log likelihood ratio, $\log\{p_1(x)/p_2(x)\}$.
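As a quick numerical check of the common-covariance case, the sketch below (Python with NumPy) evaluates $\log\{p_1(x)/p_2(x)\}$ for two normal densities sharing a covariance matrix $\Sigma$ and confirms that it is an affine function $a^\top x + b$ with $a = \Sigma^{-1}(\mu_1 - \mu_2)$, the population Fisher direction. The means and covariance are hypothetical values chosen only for illustration.

```python
import numpy as np

def gaussian_logpdf(x, mean, cov):
    """Log density of N(mean, cov) evaluated at each row of x."""
    d = x - mean
    cov_inv = np.linalg.inv(cov)
    _, logdet = np.linalg.slogdet(cov)
    quad = np.einsum("ij,jk,ik->i", d, cov_inv, d)   # row-wise d^T cov^{-1} d
    return -0.5 * (quad + logdet + x.shape[1] * np.log(2 * np.pi))

# Hypothetical parameters: two classes sharing one covariance matrix.
mu1 = np.array([1.0, 0.5])
mu2 = np.array([-1.0, 0.0])
cov = np.array([[1.0, 0.3], [0.3, 2.0]])

rng = np.random.default_rng(0)
x = rng.normal(size=(5, 2))
llr = gaussian_logpdf(x, mu1, cov) - gaussian_logpdf(x, mu2, cov)

# Affine form predicted by theory: a^T x + b with a = cov^{-1} (mu1 - mu2).
a = np.linalg.solve(cov, mu1 - mu2)
b = -0.5 * (mu1 @ np.linalg.solve(cov, mu1) - mu2 @ np.linalg.solve(cov, mu2))
print(np.allclose(llr, x @ a + b))
```

With unequal class proportions, the posterior log-odds differ from this log likelihood ratio only by the constant $\log(\pi_1/\pi_2)$, which shifts the threshold but not the direction.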

The rest of the paper is organized as follows. Section 2 provides a brief review of kernel discriminant analysis and describes its population version by introducing two kernel covariance operators for measuring the between-class variation and within-class variation in the feature space. Section 3 presents a population-level discriminant analysis using two types of polynomial kernels and the Gaussian kernel, and provides explicit forms of the population kernel discriminants. Numerical examples are given in Section 4 to illustrate the geometry of kernel discriminants in relation to the data distribution. Section 5 concludes the paper with discussions.

2 Preliminaries

This section provides technical background for kernel discriminant analysis. After reviewing kernel functions, corresponding function spaces, and feature mappings in Section 2.1, we briefly describe Fisher’s linear discriminant analysis and its extension using kernels in Section 2.2, and further extend the sample-based description of kernel discriminant analysis to its population version in Section 2.3.

2.1 Kernel

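Let the input domain for data be denoted by $\mathcal{X}$. A kernel $K$ is defined as a positive semi-definite function from $\mathcal{X} \times \mathcal{X}$ to $\mathbb{R}$. As a positive semi-definite function, $K$ is symmetric: $K(x,y) = K(y,x)$ for all $x, y \in \mathcal{X}$, and for each $n$ and for all choices of $x_1, \dots, x_n \in \mathcal{X}$, $[K(x_i, x_j)]_{i,j=1}^{n}$ as an $n \times n$ matrix is positive semi-definite.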

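Given $K$, there is a unique function space $\mathcal{H}_K$ with inner product $\langle \cdot, \cdot \rangle_{\mathcal{H}_K}$ corresponding to the kernel such that for every $x \in \mathcal{X}$ and $f \in \mathcal{H}_K$, (i) $K(x, \cdot) \in \mathcal{H}_K$, and (ii) $f(x) = \langle f, K(x, \cdot) \rangle_{\mathcal{H}_K}$. The second property is called the reproducing property of $K$, and it entails the following identity: $\langle K(x, \cdot), K(y, \cdot) \rangle_{\mathcal{H}_K} = K(x,y)$. Such a function space with reproducing kernel $K$ is called a reproducing kernel Hilbert space (RKHS). See Aronszajn (1950), Wahba (1990) and Gu (2002) for reference.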

Alternatively, kernels can be characterized as those functions that arise as the dot product of feature vectors. This is a common viewpoint in machine learning, where kernels are used for nonlinear generalization of linear methods. To capture nonlinear features often desired for data analysis, consider a mapping from the input space to a feature space $\mathcal{F}$, $\Phi: \mathcal{X} \to \mathcal{F}$, which is called a feature map. For expressiveness of the features, the dimension of the feature space, that is, the number of features in $\Phi(x)$, is often much higher than the input dimension, and possibly infinite. Through the dot product of feature vectors, we can define a valid kernel on $\mathcal{X}$ as $K(x,y) = \Phi(x)^\top \Phi(y)$. When $\dim(\mathcal{F}) = \infty$, the dot product is to be interpreted as an inner product. A more general treatment of kernels with a general inner product for the feature space is feasible, but for brevity, we confine our description to the dot product only. Using a feature map, we can generalize a linear method by applying it in the feature space, which amounts to replacing the dot product of the original features, $x^\top y$, in the linear method with a kernel, $K(x,y)$. This substitution is called the “kernel trick” in machine learning. For a general description of kernel methods, an explicit form of a feature map is not needed, nor is the feature map for a given kernel unique. See Schölkopf and Smola (2002) for general properties of kernels.

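In this paper, we focus on the following kernels that are commonly used in practice with $\mathcal{X} = \mathbb{R}^p$:

- Homogeneous polynomial kernel of degree $d$: $K(x,y) = (x^\top y)^d$
- Inhomogeneous polynomial kernel of degree $d$: $K(x,y) = (1 + x^\top y)^d$
- Gaussian kernel with bandwidth parameter $\sigma$: $K(x,y) = \exp\left(-\|x - y\|^2/(2\sigma^2)\right)$.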
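The three kernels can be written down directly as Gram-matrix functions. The following is a minimal NumPy sketch (function names such as `homogeneous_poly` and `is_psd` are hypothetical, chosen for illustration) that builds each Gram matrix on a random sample and checks the two defining properties from Section 2.1: symmetry and positive semi-definiteness, the latter only up to floating-point round-off.

```python
import numpy as np

def homogeneous_poly(x, y, d):
    """Gram matrix of K(x, y) = (x^T y)^d between rows of x and rows of y."""
    return (x @ y.T) ** d

def inhomogeneous_poly(x, y, d):
    """Gram matrix of K(x, y) = (1 + x^T y)^d."""
    return (1.0 + x @ y.T) ** d

def gaussian(x, y, sigma):
    """Gram matrix of K(x, y) = exp(-||x - y||^2 / (2 sigma^2))."""
    sq = (x**2).sum(axis=1)[:, None] + (y**2).sum(axis=1)[None, :] - 2.0 * x @ y.T
    return np.exp(-np.maximum(sq, 0.0) / (2.0 * sigma**2))

def is_psd(k, tol=1e-8):
    """Symmetric, with no eigenvalue below -tol * max|k| (round-off allowance)."""
    return np.allclose(k, k.T) and np.linalg.eigvalsh(k).min() >= -tol * np.abs(k).max()

rng = np.random.default_rng(1)
x = rng.normal(size=(30, 3))
grams = {
    "homogeneous": homogeneous_poly(x, x, 3),
    "inhomogeneous": inhomogeneous_poly(x, x, 3),
    "gaussian": gaussian(x, x, 1.5),
}
for name, k in grams.items():
    print(name, is_psd(k))
```

The polynomial Gram matrices here are rank-deficient (their feature spaces have fewer than 30 dimensions), which is why the check allows a small negative tolerance rather than requiring strictly nonnegative computed eigenvalues.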

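Consideration of their explicit feature maps will be useful for the analyses presented in Section 3. For instance, the homogeneous polynomial kernel of degree $d$ on $\mathbb{R}^2$, $K(x,y) = (x^\top y)^d$, can be described with a feature map $\Phi(x) = \big(\sqrt{\tbinom{d}{j}}\, x_1^{d-j} x_2^{j}\big)_{j=0}^{d}$. The Gaussian kernel on $\mathbb{R}$ with bandwidth parameter 1 admits $\ell^2$ with inner product $\langle u, v \rangle = \sum_{k \geq 0} u_k v_k$ as a feature space and a feature map of $\Phi(x) = e^{-x^2/2}\big(1, x, x^2/\sqrt{2!}, x^3/\sqrt{3!}, \dots\big)$, since $e^{-(x-y)^2/2} = e^{-x^2/2} e^{-y^2/2} \sum_{k \geq 0} (xy)^k/k!$.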

2.2 Kernel Discriminant Analysis

Kernel discriminant analysis (KDA) is a nonlinear extension of Fisher’s linear discriminant analysis using kernels. To describe KDA, we start with a classification problem. Suppose we have data from two classes labeled 1 and 2: $\{(x_i, y_i)\}_{i=1}^{n}$ with $x_i \in \mathcal{X}$ and $y_i \in \{1, 2\}$. For simplicity, assume that the data points are ordered so that the first $n_1$ observations are from class 1 and the rest ($n_2 = n - n_1$) are from class 2.

2.2.1 Fisher’s Linear Discriminant Analysis

As a classical approach to classification, Fisher’s linear discriminant analysis (LDA) looks for linear combinations of the original variables called linear discriminants that can separate observations from different classes effectively. It can be viewed as a dimension reduction technique for classification.

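When $\mathcal{X} = \mathbb{R}^p$, a linear discriminant is of the form $a^\top x$ with a coefficient vector $a \in \mathbb{R}^p$. For the discriminant as a univariate measurement, we define the between-class variation as

$$(a^\top \bar{x}_1 - a^\top \bar{x}_2)^2$$

and the within-class variation as its pooled sample variance:

$$\frac{(n_1 - 1)\, a^\top S_1 a + (n_2 - 1)\, a^\top S_2 a}{n - 2},$$

where $\bar{x}_k$ and $S_k$ are the sample mean vector and sample covariance matrix of $x$ for class $k$. Letting $S_B = (\bar{x}_1 - \bar{x}_2)(\bar{x}_1 - \bar{x}_2)^\top$ and $S_W = \{(n_1 - 1) S_1 + (n_2 - 1) S_2\}/(n - 2)$ (the pooled covariance matrix), we can express the two variations succinctly as the quadratic forms $a^\top S_B a$ and $a^\top S_W a$, respectively. Note that both forms are shift-invariant.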

To find the best direction, which gives the maximum separation between two classes measured relative to the within-class variance in LDA, we maximize the ratio of the between-class variation to the within-class variation with respect to $a$:

$$J(a) = \frac{a^\top S_B a}{a^\top S_W a}.$$

This ratio is also known as the Rayleigh quotient and is taken as a measure of the signal-to-noise ratio in classification along the direction $a$. This maximization problem leads to the following generalized eigenvalue problem:

$$S_B a = \lambda S_W a,$$

and the solution is given by the leading eigenvector. More explicitly, $\hat{a} \propto S_W^{-1}(\bar{x}_1 - \bar{x}_2)$, defined only up to a normalization constant, and $\lambda = (\bar{x}_1 - \bar{x}_2)^\top S_W^{-1} (\bar{x}_1 - \bar{x}_2)$ is the corresponding eigenvalue. Since $S_B$ has rank 1, $\lambda$ is the only positive eigenvalue. The resulting linear discriminant, $\hat{a}^\top x$, together with an appropriately chosen threshold $c$ yields a classification boundary of the form $\hat{a}^\top x = c$, which is linear in the input space. When $S_W$ is a multiple of the identity matrix, $\bar{x}_1 - \bar{x}_2$ (mean difference) provides the best direction for projection. Re-expression of the linear discriminant as $\hat{a}^\top x = \{S_W^{-1/2}(\bar{x}_1 - \bar{x}_2)\}^\top (S_W^{-1/2} x)$ further reveals that LDA projects data onto the mean difference direction after whitening the variables via $S_W^{-1/2}$. This interpretation also implies the invariance of $\hat{a}^\top x$ under variable scaling.
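The closed-form solution and its whitening interpretation can be checked on a simulated sample. This NumPy sketch (the data-generating parameters are hypothetical) computes $\hat a = S_W^{-1}(\bar x_1 - \bar x_2)$, recovers the same direction by solving $S_B a = \lambda S_W a$ after whitening with a Cholesky factor of $S_W$ (one valid whitening; the symmetric $S_W^{-1/2}$ in the text is another), and confirms that rescaling the variables leaves the discriminant values unchanged.

```python
import numpy as np

rng = np.random.default_rng(2)
cov = np.array([[2.0, 0.8], [0.8, 1.0]])           # hypothetical shared covariance
x1 = rng.multivariate_normal([1.0, 1.0], cov, size=200)
x2 = rng.multivariate_normal([-1.0, 0.0], cov, size=150)
n1, n2 = len(x1), len(x2)

diff = x1.mean(axis=0) - x2.mean(axis=0)
S_B = np.outer(diff, diff)                                                   # rank-one between-class matrix
S_W = ((n1 - 1) * np.cov(x1.T) + (n2 - 1) * np.cov(x2.T)) / (n1 + n2 - 2)    # pooled covariance

# Closed form: a_hat proportional to S_W^{-1}(xbar_1 - xbar_2), eigenvalue diff^T S_W^{-1} diff.
a_hat = np.linalg.solve(S_W, diff)
lam = diff @ a_hat

# Same answer from S_B a = lam S_W a, solved after whitening with S_W = L L^T.
L_inv = np.linalg.inv(np.linalg.cholesky(S_W))
vals, vecs = np.linalg.eigh(L_inv @ S_B @ L_inv.T)  # eigenvalues in ascending order
lead = L_inv.T @ vecs[:, -1]                        # map back to original coordinates
cosine = lead @ a_hat / (np.linalg.norm(lead) * np.linalg.norm(a_hat))
print(np.isclose(vals[-1], lam), np.isclose(abs(cosine), 1.0))

# Scale invariance: rescaling the variables leaves discriminant values unchanged.
D = np.diag([10.0, 0.1])
a_scaled = np.linalg.solve(D @ S_W @ D, D @ diff)
print(np.allclose((x1 @ D) @ a_scaled, x1 @ a_hat))
```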

2.2.2 Nonlinear Generalization

Using the aforementioned kernel trick, Mika et al. (1999) proposed a nonlinear extension of linear discriminant analysis, which can be useful when the optimal classification boundary is not linear. Conceptually, by mapping the data into a feature space using a kernel, kernel discriminant analysis finds the best direction for discrimination and corresponding linear discriminant in the feature space, which then defines a nonlinear discriminant function in the input space.

Given kernel $K$, let $\Phi$ be a feature map. Then using the feature vectors $\Phi(x_i)$, we can define the sample means and between-class and within-class covariance matrices in the feature space analogously. These matrices are denoted by $S_B^{\Phi}$ and $S_W^{\Phi}$. KDA aims to find $w$ in the feature space that maximizes

$$J(w) = \frac{w^\top S_B^{\Phi} w}{w^\top S_W^{\Phi} w}. \tag{1}$$

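When $w$ is in the span of all training feature vectors $\Phi(x_1), \dots, \Phi(x_n)$, it can be expressed as $w = \sum_{i=1}^{n} \alpha_i \Phi(x_i)$ for some $\alpha = (\alpha_1, \dots, \alpha_n)^\top \in \mathbb{R}^n$. When we plug $w$ of this form into the numerator and denominator of the ratio in (1) and expand both in terms of $\alpha$ using the kernel identity $\Phi(x_i)^\top \Phi(x_j) = K(x_i, x_j)$, we have, up to a constant factor that does not depend on $\alpha$,

$$J(w) \propto \frac{\alpha^\top M \alpha}{\alpha^\top N \alpha},$$

where $M$ and $N$ are $n \times n$ matrices defined through the kernel that reflect between-class variation and within-class variation, respectively. To describe $M$ and $N$ precisely, start with the kernel matrix $\mathbf{K} = [K(x_i, x_j)]_{i,j=1}^{n}$. It can be partitioned into $\mathbf{K} = [\mathbf{K}_1 \; \mathbf{K}_2]$ with the $n \times n_1$ matrix $\mathbf{K}_1$ of $K(x_i, x_j)$ for $j \leq n_1$ and the $n \times n_2$ matrix $\mathbf{K}_2$ of $K(x_i, x_j)$ for $j > n_1$, according to the class label of $x_j$. Using this partition of $\mathbf{K}$, we can show that $M = (\mathbf{m}_1 - \mathbf{m}_2)(\mathbf{m}_1 - \mathbf{m}_2)^\top$ with $\mathbf{m}_k = \frac{1}{n_k} \mathbf{K}_k \mathbf{1}_{n_k}$ and

$$N = \sum_{k=1}^{2} \mathbf{K}_k \left(I_{n_k} - \frac{1}{n_k} J_{n_k}\right) \mathbf{K}_k^\top,$$

where $\mathbf{1}_{n_k}$ is the $n_k$-vector of ones, and $J_{n_k} = \mathbf{1}_{n_k} \mathbf{1}_{n_k}^\top$ ($n_k \times n_k$ matrix of ones).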

In order to find the best discriminant direction $w$, we maximize $\alpha^\top M \alpha / \alpha^\top N \alpha$ with respect to $\alpha$ instead. The solution is again given by the leading eigenvector of the generalized eigenvalue problem:

$$M \alpha = \lambda N \alpha. \tag{2}$$

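Further, the estimated direction $\hat{w} = \sum_{i=1}^{n} \hat{\alpha}_i \Phi(x_i)$, where $\hat{\alpha}$ is the leading eigenvector, results in the discriminant function of the form:

$$\hat{f}(x) = \hat{w}^\top \Phi(x) = \sum_{i=1}^{n} \hat{\alpha}_i K(x_i, x). \tag{3}$$

Obviously $\hat{f}$ is in the span of $K(x_i, \cdot)$, $i = 1, \dots, n$, and belongs to the reproducing kernel Hilbert space $\mathcal{H}_K$. As with Fisher’s linear discriminant, the kernel discriminant function is determined only up to a normalization constant. To specify a decision rule completely, we need to choose an appropriate threshold for the discriminant function.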
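A compact sketch of the sample procedure with a Gaussian kernel follows, assuming the matrices $M$ and $N$ described above. Because $M$ has rank one, the leading solution of (2) is proportional to $N^{-1}(\mathbf m_1 - \mathbf m_2)$; since $N$ is singular in general, the sketch adds a small ridge term `reg * I`, which is an assumption of this illustration (in the spirit of the regularization commonly used with KDA) and not part of (2). The function names and the annulus data are hypothetical.

```python
import numpy as np

def gaussian_gram(a, b, sigma):
    """Gaussian kernel matrix [K(a_i, b_j)] with bandwidth sigma."""
    sq = (a**2).sum(axis=1)[:, None] + (b**2).sum(axis=1)[None, :] - 2.0 * a @ b.T
    return np.exp(-np.maximum(sq, 0.0) / (2.0 * sigma**2))

def kda_fit(x, y, sigma=1.0, reg=1e-3):
    """Coefficients alpha of the leading solution of M alpha = lam (N + reg I) alpha."""
    K = gaussian_gram(x, x, sigma)
    n = len(x)
    means, N = [], np.zeros((n, n))
    for k in (1, 2):
        Kk = K[:, y == k]                                   # n x n_k block K_k
        nk = Kk.shape[1]
        means.append(Kk.mean(axis=1))                       # m_k = K_k 1 / n_k
        N += Kk @ (np.eye(nk) - np.full((nk, nk), 1.0 / nk)) @ Kk.T
    diff = means[0] - means[1]
    # M = diff diff^T has rank one, so the leading eigenvector is
    # proportional to (N + reg I)^{-1} (m_1 - m_2).
    return np.linalg.solve(N + reg * np.eye(n), diff)

def kda_score(alpha, x_train, x_new, sigma=1.0):
    """Discriminant values f(x) = sum_i alpha_i K(x_i, x), as in (3)."""
    return gaussian_gram(x_new, x_train, sigma) @ alpha

# Hypothetical data: class 1 inside the unit disk, class 2 on an annulus.
rng = np.random.default_rng(3)
radius = np.r_[rng.uniform(0.0, 1.0, 100), rng.uniform(2.0, 3.0, 100)]
theta = rng.uniform(0.0, 2 * np.pi, 200)
x = np.c_[radius * np.cos(theta), radius * np.sin(theta)]
y = np.r_[np.ones(100, dtype=int), np.full(100, 2)]

alpha = kda_fit(x, y)
f = kda_score(alpha, x, x)
print(f[y == 1].mean(), f[y == 2].mean())
```

The class-1 training scores average higher than the class-2 scores by construction: the difference of the two averages equals $(\mathbf m_1 - \mathbf m_2)^\top (N + \text{reg}\, I)^{-1} (\mathbf m_1 - \mathbf m_2) > 0$. A threshold still has to be chosen separately.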

2.3 Population Version of Kernel Discriminant Analysis

To understand how the data distribution (in particular, the geometric difference between the two classes) and the kernel affect the resulting discriminant function, we consider a population version of KDA. For a proper description of the population version, we first assume that $\{(x_i, y_i)\}_{i=1}^{n}$ in the dataset is a random sample of $(X, Y)$ from a mixture of two distributions $P_1$ and $P_2$ with population proportions $\pi_1$ and $\pi_2$ for the two classes, that is, $\Pr(Y = k) = \pi_k$ and $X \mid Y = k \sim P_k$, so that $X \sim P = \pi_1 P_1 + \pi_2 P_2$.

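To illustrate how the sample version of KDA extends to the population version under this assumption, we begin with the eigenvalue problem in (2). Suppose $\hat{\lambda}$ and $\hat{\alpha}$ are a pair of eigenvalue and eigenvector satisfying (2). After dividing both sides of (2) by the sample size $n$ and regrouping the factors of $n$, we have, for $i = 1, \dots, n$,

$$\frac{1}{n} \sum_{j=1}^{n} M_{ij} \hat{\alpha}_j = (n \hat{\lambda})\, \frac{1}{n} \sum_{j=1}^{n} \frac{N_{ij}}{n}\, \hat{\alpha}_j. \tag{4}$$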

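As a continuous population analogue of $M$ and $N/n$, we can define the following bivariate functions on $\mathcal{X} \times \mathcal{X}$:

$$\kappa_B(x,y) = \{E_1 K(x,X) - E_2 K(x,X)\}\{E_1 K(y,X) - E_2 K(y,X)\}, \tag{5}$$

$$\kappa_W(x,y) = \pi_1 \mathrm{Cov}_1(K(x,X), K(y,X)) + \pi_2 \mathrm{Cov}_2(K(x,X), K(y,X)), \tag{6}$$

where $E_k$ and $\mathrm{Cov}_k$ indicate that the expectation and covariance are taken with respect to $X \sim P_k$. The matrices $M$ and $N/n$ can be viewed as sample versions of $\kappa_B$ and $\kappa_W$ evaluated at all pairs of data points $(x_i, x_j)$, with $\pi_k$ replaced by $n_k/n$ in $N/n$.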
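The correspondence between $N/n$ and the pooled covariance function can be verified directly on a small sample. The sketch below assumes the form of $N$ given in Section 2.2.2 and checks that each entry of $N/n$ equals $\sum_k (n_k/n)\,\widehat{\mathrm{Cov}}_k(K(x_i,X), K(x_j,X))$, where $\widehat{\mathrm{Cov}}_k$ is the plug-in (divide-by-$n_k$) covariance over class $k$. The sample and the choice of kernel are hypothetical; any kernel would do.

```python
import numpy as np

rng = np.random.default_rng(4)
x = rng.normal(size=(12, 2))                       # hypothetical sample, n = 12
y = np.r_[np.ones(5, dtype=int), np.full(7, 2)]    # first n_1 = 5 points in class 1
n = len(x)
K = (1.0 + x @ x.T) ** 2                           # degree-2 inhomogeneous kernel

N = np.zeros((n, n))
pooled_cov = np.zeros((n, n))
class_means = []
for k in (1, 2):
    Kk = K[:, y == k]                              # column l holds K(x_i, x_l) for x_l in class k
    nk = Kk.shape[1]
    N += Kk @ (np.eye(nk) - np.full((nk, nk), 1.0 / nk)) @ Kk.T
    # Plug-in covariance of the variables K(x_i, X) over the class-k points.
    pooled_cov += (nk / n) * np.cov(Kk, bias=True)
    class_means.append(Kk.mean(axis=1))            # estimates E_k K(x_i, X)

diff = class_means[0] - class_means[1]
M = np.outer(diff, diff)                           # sample kappa_B(x_i, x_j)
print(np.allclose(N / n, pooled_cov))
```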

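Further treating $\hat{\alpha}$ as a discrete version of a function $\alpha$ at the data points, i.e., $\hat{\alpha}_j = \alpha(x_j)$, taking the sample class proportion $n_k/n$ as an estimate of the population proportion $\pi_k$, and $n \hat{\lambda}$ as a sample version of the population eigenvalue $\lambda$, we arrive at the following integral counterpart of (4):

$$\int_{\mathcal{X}} \kappa_B(x,y)\, \alpha(y)\, dP(y) = \lambda \int_{\mathcal{X}} \kappa_W(x,y)\, \alpha(y)\, dP(y) \quad \text{for all } x \in \mathcal{X}. \tag{7}$$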

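This eigenvalue problem involves two integral operators: (i) the between-class covariance operator $\mathcal{K}_B$ defined as

$$(\mathcal{K}_B \alpha)(x) = \int_{\mathcal{X}} \kappa_B(x,y)\, \alpha(y)\, dP(y),$$

and (ii) the within-class covariance operator $\mathcal{K}_W$ defined as

$$(\mathcal{K}_W \alpha)(x) = \int_{\mathcal{X}} \kappa_W(x,y)\, \alpha(y)\, dP(y),$$

so that (7) reads $\mathcal{K}_B \alpha = \lambda \mathcal{K}_W \alpha$. The form of the sample discriminant function in (3) with scaling of $1/n$ suggests that, using the solution $\alpha$ to equation (7), we define the population discriminant function as

$$f(x) = \int_{\mathcal{X}} K(x,y)\, \alpha(y)\, dP(y). \tag{8}$$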

Clearly, the eigenfunction $\alpha$ depends on the kernel $K$ and the probability distribution $P$, and so does the kernel discriminant function $f$ with $\alpha$ as a coefficient function. Hence, identifying the solution to the generalized eigenvalue problem in (7) will give us a better understanding of kernel discriminants in relation to the data distribution and the choice of the kernel. The correspondence between the pattern of class difference and the nature of the resulting discriminant is of particular interest.

3 Kernel Discriminant Analysis with Covariance Operators

In this section, we carry out a population-level discriminant analysis with two types of polynomial kernels and Gaussian kernel and derive an explicit form of population discriminant functions. Section 3.1 covers the case with polynomial kernels in . Section 3.2 extends it to the Gaussian kernel using two types of kernel representations.

3.1 Polynomial Discriminant

Starting with $\mathbb{R}^2$, we lay out the steps necessary for a population version of discriminant analysis with the homogeneous polynomial kernel and derive the population kernel discriminant function in Section 3.1.1. We then extend the results to a multi-dimensional setting with the homogeneous polynomial kernel in Section 3.1.2 and the inhomogeneous polynomial kernel in Section 3.1.3.

3.1.1 Homogeneous Polynomial Kernel in Two-Dimensional Setting

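The homogeneous polynomial kernel of degree $d$ in $\mathbb{R}^2$ is

$$K(x,y) = (x_1 y_1 + x_2 y_2)^d = \sum_{j=0}^{d} \binom{d}{j} x_1^{d-j} x_2^{j}\, y_1^{d-j} y_2^{j}. \tag{9}$$

The simple form of $K$ allows us to obtain the between-class variation function $\kappa_B$ in (5) and the within-class variation function $\kappa_W$ in (6) explicitly in terms of the population parameters.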

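For $\kappa_B$, we begin with

$$E_1 K(x,X) - E_2 K(x,X) = \sum_{j=0}^{d} \binom{d}{j} x_1^{d-j} x_2^{j} \left\{E_1(X_1^{d-j} X_2^{j}) - E_2(X_1^{d-j} X_2^{j})\right\},$$

which depends on the difference in the moments of total degree $d$ between two classes. Letting $\delta_j = E_1(X_1^{d-j} X_2^{j}) - E_2(X_1^{d-j} X_2^{j})$, the difference in moments, we can express $\kappa_B$ as

$$\kappa_B(x,y) = \sum_{j=0}^{d} \sum_{l=0}^{d} \binom{d}{j} \binom{d}{l}\, \delta_j \delta_l\, x_1^{d-j} x_2^{j}\, y_1^{d-l} y_2^{l}.$$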

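Similarly, for $\kappa_W$, using the form of $K$, we first derive the covariance for each class ($k = 1, 2$):

$$\mathrm{Cov}_k(K(x,X), K(y,X)) = \sum_{j=0}^{d} \sum_{l=0}^{d} \binom{d}{j} \binom{d}{l}\, x_1^{d-j} x_2^{j}\, y_1^{d-l} y_2^{l}\, \mathrm{Cov}_k(X_1^{d-j} X_2^{j}, X_1^{d-l} X_2^{l}).$$

Letting $\sigma_{jl} = \pi_1 \mathrm{Cov}_1(X_1^{d-j} X_2^{j}, X_1^{d-l} X_2^{l}) + \pi_2 \mathrm{Cov}_2(X_1^{d-j} X_2^{j}, X_1^{d-l} X_2^{l})$, the within-class covariance of a pair of polynomial features of degree $d$, we can express the within-class variation function as

$$\kappa_W(x,y) = \sum_{j=0}^{d} \sum_{l=0}^{d} \binom{d}{j} \binom{d}{l}\, \sigma_{jl}\, x_1^{d-j} x_2^{j}\, y_1^{d-l} y_2^{l}.$$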

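Using these two functions for $K$, we obtain the between-class covariance operator as

$$(\mathcal{K}_B \alpha)(x) = \sum_{j=0}^{d} \sum_{l=0}^{d} \binom{d}{j} \binom{d}{l}\, \delta_j \delta_l\, x_1^{d-j} x_2^{j} \int y_1^{d-l} y_2^{l}\, \alpha(y)\, dP(y)$$

and the within-class covariance operator as

$$(\mathcal{K}_W \alpha)(x) = \sum_{j=0}^{d} \sum_{l=0}^{d} \binom{d}{j} \binom{d}{l}\, \sigma_{jl}\, x_1^{d-j} x_2^{j} \int y_1^{d-l} y_2^{l}\, \alpha(y)\, dP(y).$$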

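Given $\alpha$, $\int y_1^{d-l} y_2^{l}\, \alpha(y)\, dP(y)$ is a constant. Thus, letting $\beta_l = \binom{d}{l} \int y_1^{d-l} y_2^{l}\, \alpha(y)\, dP(y)$, we arrive at the following eigenvalue problem from (7) for identification of $\alpha$:

$$\sum_{j=0}^{d} \sum_{l=0}^{d} \binom{d}{j}\, \delta_j \delta_l \beta_l\, x_1^{d-j} x_2^{j} = \lambda \sum_{j=0}^{d} \sum_{l=0}^{d} \binom{d}{j}\, \sigma_{jl} \beta_l\, x_1^{d-j} x_2^{j},$$

which should hold for all $x \in \mathbb{R}^2$. Rearranging the terms in the polynomial equation, we have

$$\sum_{j=0}^{d} \binom{d}{j}\, x_1^{d-j} x_2^{j} \left( \delta_j \sum_{l=0}^{d} \delta_l \beta_l - \lambda \sum_{l=0}^{d} \sigma_{jl} \beta_l \right) = 0.$$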

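Since the monomials $x_1^{d-j} x_2^{j}$ are linearly independent, their coefficients must all vanish, which leads to the following system of linear equations for $\boldsymbol{\beta} = (\beta_0, \dots, \beta_d)^\top$:

$$\boldsymbol{\delta} \boldsymbol{\delta}^\top \boldsymbol{\beta} = \lambda \Sigma \boldsymbol{\beta}, \tag{10}$$

where $\boldsymbol{\delta} = (\delta_0, \dots, \delta_d)^\top$ is a vector of the mean differences of $X_1^{d-j} X_2^{j}$ for $j = 0, \dots, d$, and $\Sigma = (\sigma_{jl})$ is a weighted average of their covariance matrices.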

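When $d = 1$, $K$ becomes a linear kernel, and the features are just $x_1$ and $x_2$. Thus, $\boldsymbol{\delta} = E_1(X) - E_2(X)$ (population mean difference) and $\Sigma = \pi_1 \mathrm{Cov}_1(X) + \pi_2 \mathrm{Cov}_2(X)$ (pooled population covariance matrix). Clearly, the eigenvalue problem in (10) reduces to that for the population version of Fisher’s LDA when $d = 1$.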

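Assuming that $\Sigma^{-1}$ exists, we can show that the largest eigenvalue satisfying equation (10) is $\lambda = \boldsymbol{\delta}^\top \Sigma^{-1} \boldsymbol{\delta}$ with eigenvector $\boldsymbol{\beta} = \Sigma^{-1} \boldsymbol{\delta}$: because $\boldsymbol{\delta} \boldsymbol{\delta}^\top$ has rank one, any solution with $\lambda \neq 0$ must be proportional to $\Sigma^{-1} \boldsymbol{\delta}$. Given the best direction $\boldsymbol{\beta}$, the population discriminant function in (8) with the homogeneous polynomial kernel of degree $d$ is

$$f(x) = \sum_{j=0}^{d} \binom{d}{j} x_1^{d-j} x_2^{j} \int y_1^{d-j} y_2^{j}\, \alpha(y)\, dP(y) = \sum_{j=0}^{d} \beta_j\, x_1^{d-j} x_2^{j}.$$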

We see that this polynomial discriminant is expressed as a linear combination of the corresponding polynomial features and their coefficients are determined through the mean differences and variances of the features.
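To see the population quantities at work, the sketch below approximates the moments by large Monte Carlo samples from two hypothetical bivariate normal classes with a common mean and different covariances, forms $\boldsymbol\delta$ and $\Sigma$ for the degree-2 features $x_1^2, x_1x_2, x_2^2$, and checks that $\lambda = \boldsymbol\delta^\top\Sigma^{-1}\boldsymbol\delta$ is the only nonzero eigenvalue of (10). Sample moments stand in for population moments, so this is an approximation, not an exact computation.

```python
import numpy as np

def monomials_2d(x, d):
    """Columns x1^(d-j) x2^j, j = 0..d, for the rows of x (shape n x 2)."""
    return np.stack([x[:, 0] ** (d - j) * x[:, 1] ** j for j in range(d + 1)], axis=1)

rng = np.random.default_rng(5)
pi1, pi2, d = 0.5, 0.5, 2
# Hypothetical classes with equal means; large samples stand in for the population.
x1 = rng.multivariate_normal([0.0, 0.0], [[1.0, 0.0], [0.0, 1.0]], size=200_000)
x2 = rng.multivariate_normal([0.0, 0.0], [[2.0, 0.5], [0.5, 1.0]], size=200_000)
f1, f2 = monomials_2d(x1, d), monomials_2d(x2, d)

delta = f1.mean(axis=0) - f2.mean(axis=0)                 # moment differences
Sigma = pi1 * np.cov(f1.T) + pi2 * np.cov(f2.T)           # pooled covariances

beta = np.linalg.solve(Sigma, delta)                      # beta = Sigma^{-1} delta
lam = delta @ beta                                        # largest eigenvalue

# Cross-check with the generalized eigenproblem delta delta^T b = lam Sigma b.
L_inv = np.linalg.inv(np.linalg.cholesky(Sigma))
vals = np.linalg.eigvalsh(L_inv @ np.outer(delta, delta) @ L_inv.T)
print(np.isclose(vals[-1], lam), beta / np.linalg.norm(beta))
```

With $d = 1$ in this setting, $\boldsymbol\delta$ would be close to zero, since the classes share a mean; the degree-2 features pick up the covariance difference.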

3.1.2 Homogeneous Polynomial Kernel in Multi-Dimensional Setting

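We extend the result in $\mathbb{R}^2$ to general $\mathbb{R}^p$. The homogeneous polynomial kernel of degree $d$ in $\mathbb{R}^p$ is given as

$$K(x,y) = (x^\top y)^d = (x_1 y_1 + \cdots + x_p y_p)^d.$$

As a function of $x$, it involves polynomials in $p$ variables of total degree $d$. To facilitate derivations similar to those in $\mathbb{R}^2$, we will use a multi-index for the polynomial features.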

Let denote a -tuple multi-index with non-negative integer entries that sum up to . That is, with cardinality of . We will omit the subscript from for brevity whenever it is clear from the context. For , we abbreviate the multinomial coefficient to , and let and . For and , let , and for , means . For convenience, we will use and interchangeably.

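Using this multi-index, we rewrite the homogeneous polynomial kernel in $\mathbb{R}^p$ simply as

$$K(x,y) = \sum_{\mathbf{j} \in \mathcal{J}_d} \binom{d}{\mathbf{j}} x^{\mathbf{j}} y^{\mathbf{j}}, \tag{11}$$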

which can be viewed as a multi-dimensional extension of the expression in (9). Further, we can derive the between-class and within-class variation functions similarly:

with and for . As an example, when the degree is 2 in , . For and , and , and thus we have and . Due to the same structure, we can easily extend the between-class and within-class covariance operators.
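The multinomial expansion behind (11) is easy to check by brute force. The sketch below enumerates the index set of $p$-tuples of nonnegative integers summing to $d$, sums $\binom{d}{\mathbf j} x^{\mathbf j} y^{\mathbf j}$ over it, and compares the result with $(x^\top y)^d$; it also confirms the count $\binom{d+p-1}{p-1}$ of such multi-indices. All helper names are hypothetical.

```python
import numpy as np
from itertools import product
from math import comb, factorial, prod

def multi_indices(p, d):
    """All p-tuples of nonnegative integers summing to d."""
    return [j for j in product(range(d + 1), repeat=p) if sum(j) == d]

def multinomial(d, j):
    """Multinomial coefficient d! / (j_1! ... j_p!)."""
    return factorial(d) // prod(factorial(t) for t in j)

def monomial(x, j):
    """x^j = x_1^{j_1} ... x_p^{j_p}."""
    return prod(xi ** ji for xi, ji in zip(x, j))

def expanded_kernel(x, y, d):
    """Multinomial expansion of (x^T y)^d over all multi-indices of total degree d."""
    return sum(multinomial(d, j) * monomial(x, j) * monomial(y, j)
               for j in multi_indices(len(x), d))

rng = np.random.default_rng(4)
x, y = rng.normal(size=3), rng.normal(size=3)
print(np.isclose(expanded_kernel(x, y, 4), (x @ y) ** 4))
print(len(multi_indices(3, 4)) == comb(4 + 3 - 1, 3 - 1))
```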

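To identify the population discriminant function in this setting, we define $\boldsymbol{\delta}$, $\Sigma$, and $\boldsymbol{\beta}$ analogously. Letting $\beta_{\mathbf{j}} = \binom{d}{\mathbf{j}} \int y^{\mathbf{j}}\, \alpha(y)\, dP(y)$ given a kernel coefficient function $\alpha$, we solve the generalized eigenvalue problem in (10) for $\boldsymbol{\beta}$, and determine the population-level discriminant function as

$$f(x) = \sum_{\mathbf{j} \in \mathcal{J}_d} \beta_{\mathbf{j}}\, x^{\mathbf{j}}.$$

Note that $\boldsymbol{\delta}$ has $|\mathcal{J}_d| = \binom{d+p-1}{p-1}$ entries and $\Sigma$ is $|\mathcal{J}_d| \times |\mathcal{J}_d|$, and while the ordering of the indices in $\mathcal{J}_d$ does not matter, the elements in $\boldsymbol{\delta}$ and $\Sigma$ should be consistently indexed for specification of the eigenvalue problem. The following theorem summarizes the results so far.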

Theorem 3.1.

Suppose that for each class, the distribution of has finite moments, and for all . For the homogeneous polynomial kernel of degree , ,

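(i) The kernel discriminant function maximizing the ratio of between-class variation relative to within-class variation is of the form

$$f(x) = \sum_{\mathbf{j} \in \mathcal{J}_d} \beta_{\mathbf{j}}\, x^{\mathbf{j}}. \tag{12}$$

(ii) The coefficients, $\boldsymbol{\beta} = (\beta_{\mathbf{j}})_{\mathbf{j} \in \mathcal{J}_d}$, for the discriminant function satisfy the eigen-equation with $\lambda = \boldsymbol{\delta}^\top \Sigma^{-1} \boldsymbol{\delta}$:

$$\boldsymbol{\delta} \boldsymbol{\delta}^\top \boldsymbol{\beta} = \lambda \Sigma \boldsymbol{\beta}, \tag{13}$$

where $\boldsymbol{\delta}$ is a vector of moment differences, $\delta_{\mathbf{j}} = E_1(X^{\mathbf{j}}) - E_2(X^{\mathbf{j}})$, and $\Sigma$ is a matrix of pooled covariances, $\Sigma_{\mathbf{j}\mathbf{l}} = \pi_1 \mathrm{Cov}_1(X^{\mathbf{j}}, X^{\mathbf{l}}) + \pi_2 \mathrm{Cov}_2(X^{\mathbf{j}}, X^{\mathbf{l}})$.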

Alternatively, the discriminant function can be derived using an explicit feature map for the kernel. The expression of $K$ in (11) suggests $\Phi(x) = \big(\sqrt{\tbinom{d}{\mathbf{j}}}\, x^{\mathbf{j}}\big)_{\mathbf{j} \in \mathcal{J}_d}$ as a feature vector, and it can be shown that a direct application of LDA to the between-class and within-class variance matrices of $\Phi(X)$ leads to the same kernel discriminant. This result indicates that employing homogeneous polynomial kernels in discriminant analysis has the same effect as using the polynomial features of the given degree in LDA.
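The equivalence rests on a simple fact: rescaling the features by the fixed factors $\sqrt{\binom{d}{\mathbf j}}$ does not change the Fisher discriminant values. The sketch below (hypothetical data in $\mathbb R^2$ with $d = 3$; moments estimated from samples) evaluates $\boldsymbol\delta^\top\Sigma^{-1}f$ once on the raw monomials and once on the scaled features $\Phi(x)$, and confirms that the two discriminants agree at new points.

```python
import numpy as np
from math import comb

rng = np.random.default_rng(6)
d, pi1, pi2 = 3, 0.5, 0.5
# Hypothetical two-class sample in R^2.
x1 = rng.normal(size=(5000, 2))
x2 = rng.normal(size=(5000, 2)) * np.array([1.5, 0.7]) + 0.3

def monomials(x):
    """Degree-d monomials x1^(d-j) x2^j, j = 0..d."""
    return np.stack([x[:, 0] ** (d - j) * x[:, 1] ** j for j in range(d + 1)], axis=1)

def lda_scores(f1, f2, f_new):
    """Fisher discriminant delta^T Sigma^{-1} f evaluated at the rows of f_new."""
    delta = f1.mean(axis=0) - f2.mean(axis=0)
    Sigma = pi1 * np.cov(f1.T) + pi2 * np.cov(f2.T)
    return f_new @ np.linalg.solve(Sigma, delta)

scale = np.sqrt([comb(d, j) for j in range(d + 1)])   # Phi(x) = scale * monomials(x)
grid = rng.normal(size=(10, 2))
plain = lda_scores(monomials(x1), monomials(x2), monomials(grid))
via_phi = lda_scores(monomials(x1) * scale, monomials(x2) * scale, monomials(grid) * scale)
print(np.allclose(plain, via_phi))
```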

3.1.3 Inhomogeneous Polynomial Kernel

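The inhomogeneous polynomial kernel of degree $d$ in $\mathbb{R}^p$ can be expanded as

$$K(x,y) = (1 + x^\top y)^d = \sum_{m=0}^{d} \binom{d}{m} (x^\top y)^m,$$

which is a weighted sum of all homogeneous polynomial kernels of degree up to $d$. Since $(x^\top y)^m = \sum_{\mathbf{j} \in \mathcal{J}_m} \binom{m}{\mathbf{j}} x^{\mathbf{j}} y^{\mathbf{j}}$ for $m \geq 1$, and the term with $m = 0$ is 1, we can rewrite the kernel as

$$K(x,y) = 1 + \sum_{m=1}^{d} \sum_{\mathbf{j} \in \mathcal{J}_m} \binom{d}{m} \binom{m}{\mathbf{j}} x^{\mathbf{j}} y^{\mathbf{j}}.$$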

Note that is abbreviated to . This kernel has the same structure as the homogenous polynomial kernel. Using the relation, we can find the population kernel discriminant function similarly. Recognizing that involves expanded polynomial features in variables of total degree 0 to : , we define a vector of the mean differences of those features (excluding the constant ) and a block matrix of their pooled covariances as follows:

where and for all . That is, contains all the difference of the moments of degree 1 to , and has the covariances between all the monomials of degree 1 to . Thus, the size of the eigenvalue problem to solve becomes . The following theorem states similar results for the discriminant function with inhomogeneous polynomial kernel.

Theorem 3.2.

Suppose that for each class, the distribution of has finite moments, and for all , and . For the inhomogeneous polynomial kernel of degree , ,

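(i) The kernel discriminant function maximizing the ratio of between-class variation relative to within-class variation is of the form

$$f(x) = \sum_{m=1}^{d} \sum_{\mathbf{j} \in \mathcal{J}_m} \gamma_{\mathbf{j}}\, x^{\mathbf{j}}, \tag{14}$$

up to an additive constant that can be absorbed into the threshold.

(ii) The coefficients, $\boldsymbol{\gamma} = (\gamma_{\mathbf{j}})$, for the discriminant function satisfy the eigen-equation with $\lambda = \tilde{\boldsymbol{\delta}}^\top \tilde{\Sigma}^{-1} \tilde{\boldsymbol{\delta}}$:

$$\tilde{\boldsymbol{\delta}} \tilde{\boldsymbol{\delta}}^\top \boldsymbol{\gamma} = \lambda \tilde{\Sigma} \boldsymbol{\gamma}, \tag{15}$$

where $\tilde{\boldsymbol{\delta}}$ is the vector of moment differences of degree 1 to $d$ and $\tilde{\Sigma}$ is the block matrix of their pooled covariances.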

3.2 Gaussian Discriminant

We extend the discriminant analysis with polynomial kernels in the previous section to the Gaussian kernel. For the extension, we use two representations for the Gaussian kernel: a deterministic representation in Section 3.2.1 and a randomized feature representation in Section 3.2.2.

3.2.1 Deterministic Representation of Gaussian Kernel

We have seen so far that derivation of the population discriminant function with polynomial kernels is aided by their expansion, or equivalently, their explicit feature maps. Taking a similar approach to the Gaussian kernel, we could use the Maclaurin series of $\exp(x^\top y/\sigma^2)$ to express it as

$$K(x,y) = \psi(x)\, \psi(y) \sum_{m=0}^{\infty} \frac{(x^\top y)^m}{\sigma^{2m}\, m!}$$

with $\psi(x) = \exp\{-\|x\|^2/(2\sigma^2)\}$. While the structure of $K$ in this representation would permit derivations of the discriminant function similar to those before, the result will depend on the expectations and covariances of $\psi(X) X^{\mathbf{j}}$, which may not be easy to obtain analytically in general.

Alternatively, we consider a representation of the kernel in the form that allows a direct use of polynomial features in much the same way as polynomial kernels. We start with a one-dimensional case and then extend it to a multi-dimensional case. The one-dimensional Gaussian kernel with bandwidth can be written as

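$$K(x,y) = \exp\left\{-\frac{(x - y)^2}{2\sigma^2}\right\} = \exp\left(-\frac{x^2}{2\sigma^2}\right) \sum_{k=0}^{\infty} \frac{1}{k!}\, He_k\!\left(\frac{x}{\sigma}\right) \left(\frac{y}{\sigma}\right)^{k}, \tag{16}$$

where $He_k$, $k = 0, 1, 2, \dots$, are referred to as the probabilists’ Hermite polynomials and defined as

$$He_k(x) = (-1)^k \frac{1}{\phi(x)} \frac{d^k}{dx^k} \phi(x),$$

where $\phi$ is the density function of the standard normal distribution. The representation of $K$ in (16) comes from the Hermite polynomial generating function

$$\exp\left(xt - \frac{t^2}{2}\right) = \sum_{k=0}^{\infty} He_k(x)\, \frac{t^k}{k!}, \tag{17}$$

applied with $x/\sigma$ in place of $x$ and $t = y/\sigma$.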
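The one-dimensional representation can be checked numerically with NumPy's probabilists' Hermite module, `numpy.polynomial.hermite_e`. The sketch below, under the assumption of the form $\exp(-x^2/(2\sigma^2))\sum_k He_k(x/\sigma)(y/\sigma)^k/k!$, truncates the series at 40 terms (an arbitrary choice, ample for the inputs used) and compares it with the Gaussian kernel; note that the two arguments play asymmetric roles.

```python
import numpy as np
from math import factorial
from numpy.polynomial.hermite_e import hermeval

def hermite_e(k, x):
    """Probabilists' Hermite polynomial He_k evaluated at x."""
    c = np.zeros(k + 1)
    c[k] = 1.0
    return hermeval(x, c)

def gaussian_kernel_hermite(x, y, sigma, n_terms=40):
    """Truncated series exp(-x^2/(2 sigma^2)) * sum_k He_k(x/sigma) (y/sigma)^k / k!."""
    s = sum(hermite_e(k, x / sigma) * (y / sigma) ** k / factorial(k)
            for k in range(n_terms))
    return np.exp(-x**2 / (2 * sigma**2)) * s

x, y, sigma = 0.9, -0.4, 1.3
print(np.isclose(gaussian_kernel_hermite(x, y, sigma),
                 np.exp(-(x - y) ** 2 / (2 * sigma**2))))
```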

It can be extended to a multivariate case using the vector-valued Hermite polynomials introduced in Holmquist (1996).

For and , the -variate vector-valued Hermite polynomial of order is defined as

where (-times) is a Kronecker product of the differential operator and is the product of univariate standard normal densities. Thus the components of are a product of univariate Hermite polynomials whose total degree is : , where for each .

Using this notation, a multivariate version of the generating function (17) can be written as

where (-times) and

Using the generating function for and letting with bandwidth , we get the following expansion for the multivariate Gaussian kernel:

Further with the definition of , the kernel is represented as

| (18) |

Although this representation is asymmetric in $x$ and $y$, it facilitates derivations of the generalized eigenvalue problem and the population kernel discriminant similar to those for polynomial kernels, but using the entirety of polynomial features.

With this representation, it is easy to show that

which involves the moments of the distribution rather than the expectations of . Note that the last equality is due to for . Thus the between-class variation function is given as

Similarly the within-class variation function is given as

Therefore, the eigenvalue problem in (7) with the Gaussian kernel is given by

| (19) |

where .

To find satisfying (19) for every , the coefficients of on both sides must equal for all , . This entails the following system of an infinite number of linear equations for :

| (20) |

and the resulting discriminant function of the form: .

For a finite dimensional approximation of the population discriminant function, we may consider truncation of the kernel representation in (18) at :

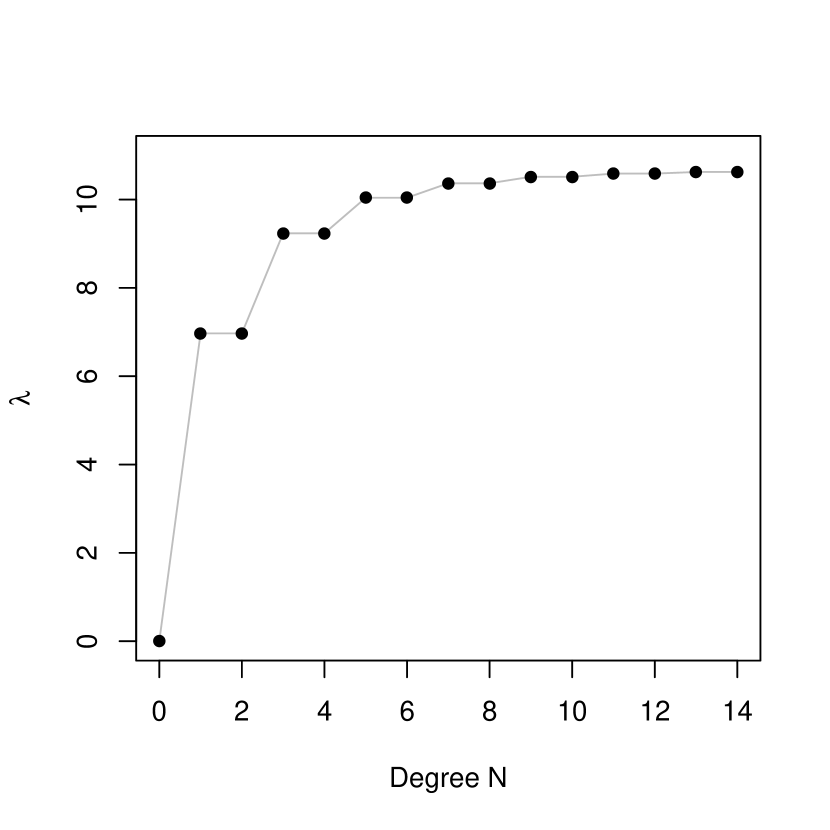

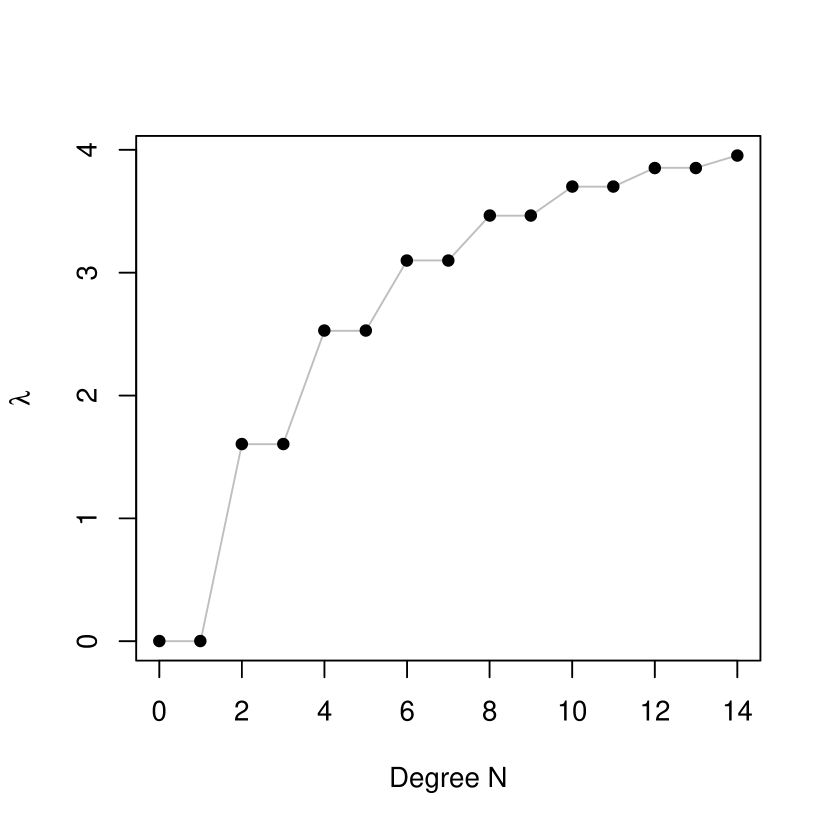

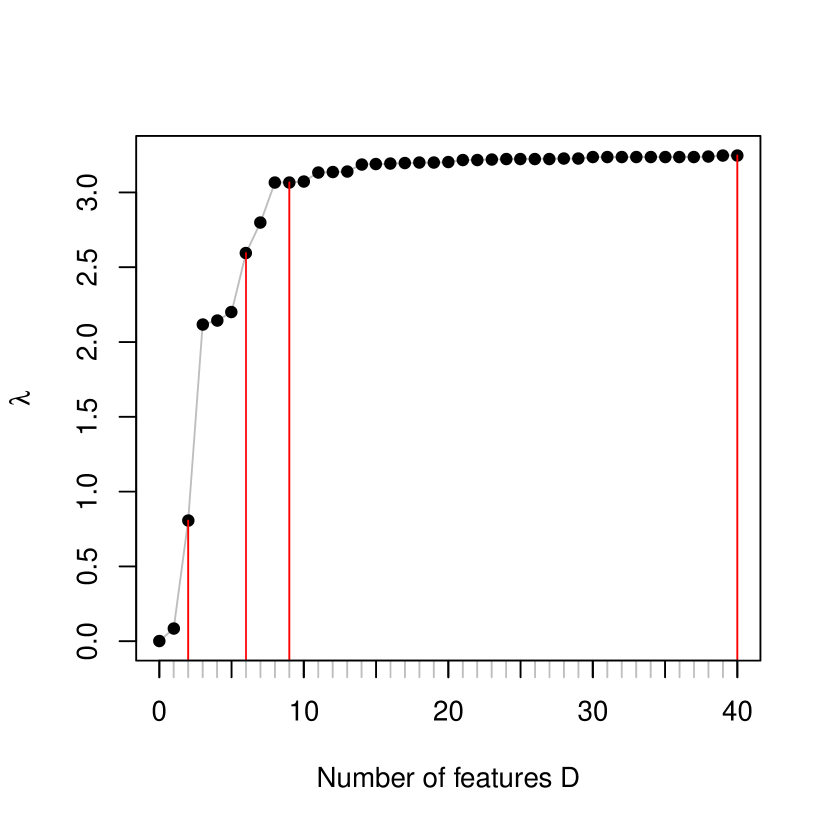

This approximation brings about the corresponding truncation of the system of linear equations for the generalized eigenvalue problem in (20). As a result, the eigenvalue equation coincides with that for the inhomogeneous polynomial kernel of degree $d$ in Theorem 3.2, and so does the truncated discriminant function. As more polynomial features are added, that is, as $d$ increases, the largest eigenvalue satisfying equation (15) does not decrease. Adding the subscript $d$ to $\lambda$ and to the moment difference vector and within-class covariance matrix to indicate the degree clearly, let $\lambda_d$ denote the largest eigenvalue of (15) for degree $d$. The moment difference vector and the within-class covariance matrix expand with $d$, including all the elements up to degree $d$. This nesting structure produces a nondecreasing sequence of $\lambda_d$. This is because maximizing the ratio for degree $d - 1$ amounts to maximizing it for degree $d$ with the coefficients of the degree-$d$ terms constrained to zero. In Section 4.1, we will study the relation between polynomial and Gaussian discriminants numerically under various scenarios and discuss the effect of $d$ on the quality of the discriminant function.
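The nesting argument can be illustrated in one dimension, where the features of degree 1 to $d$ are simply $x, x^2, \dots, x^d$. In the sketch below, two hypothetical classes share mean and variance (normal versus Laplace), so they differ mainly in the fourth moment. Sample moments replace population moments, and because the sample $\Sigma_{d-1}$ is a leading block of $\Sigma_d$, the computed $\lambda_d = \boldsymbol\delta_d^\top\Sigma_d^{-1}\boldsymbol\delta_d$ is nondecreasing in $d$ up to round-off.

```python
import numpy as np

rng = np.random.default_rng(7)
pi1 = pi2 = 0.5
# Hypothetical 1-D classes with equal mean and variance but different tails.
x1 = rng.normal(0.0, 1.0, size=50_000)
x2 = rng.laplace(0.0, 1.0 / np.sqrt(2.0), size=50_000)

def lam(d):
    """delta_d^T Sigma_d^{-1} delta_d for the features x, x^2, ..., x^d."""
    f1 = np.stack([x1**m for m in range(1, d + 1)], axis=1)
    f2 = np.stack([x2**m for m in range(1, d + 1)], axis=1)
    delta = f1.mean(axis=0) - f2.mean(axis=0)
    Sigma = pi1 * np.atleast_2d(np.cov(f1.T)) + pi2 * np.atleast_2d(np.cov(f2.T))
    return float(delta @ np.linalg.solve(Sigma, delta))

lams = [lam(d) for d in range(1, 5)]
print(lams)
```

The jump typically appears at $d = 4$, where the first moment that genuinely differs between the two classes enters the feature set.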

3.2.2 Fourier Feature Representation of Gaussian kernel

In addition to the polynomial approximation presented in the previous section, a stochastic approximation to the Gaussian kernel can be used for population analysis. Rahimi and Recht (2008a) examined approximation of shift-invariant kernels in general using random Fourier features for fast large-scale optimization with kernels. They proposed the following representation for the Gaussian kernel using random features of the form $(\cos(\omega^\top x), \sin(\omega^\top x))$:

$$K(x,y) = E_{\omega}\left[\cos(\omega^\top x)\cos(\omega^\top y) + \sin(\omega^\top x)\sin(\omega^\top y)\right] = E_{\omega}\left[\cos\{\omega^\top (x - y)\}\right], \tag{21}$$

where $\omega$ is a random vector from a multivariate normal distribution with mean zero and covariance matrix $\sigma^{-2} I_p$. This representation comes from Bochner’s theorem (Rudin 2017), which describes the correspondence between a positive definite shift-invariant kernel and the Fourier transform of a nonnegative measure. The feature map first projects $x$ onto a random direction $\omega$ and then takes sinusoidal transforms, whose frequency depends on the norm of $\omega$. A large bandwidth $\sigma$ for the Gaussian kernel implies realizations of $\omega$ with a small norm on average, which generally entail low frequencies for the sinusoids.

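The representation in (21) suggests a Monte Carlo approximation of the kernel. Suppose that $\omega_r$, $r = 1, \dots, D$, are randomly generated from $N(0, \sigma^{-2} I_p)$. Defining random Fourier features $z_r(x) = (\cos(\omega_r^\top x), \sin(\omega_r^\top x))^\top$ for $r = 1, \dots, D$, we can approximate the Gaussian kernel using a sample average as follows:

$$K(x,y) \approx \frac{1}{D} \sum_{r=1}^{D} z_r(x)^\top z_r(y).$$

This average can be taken as an unbiased estimate of the kernel, and its precision is controlled by $D$. Concatenating these random components $z_r(x)$, we can also see that the stochastic approximation above amounts to defining

$$z(x) = \frac{1}{\sqrt{D}} \left(z_1(x)^\top, \dots, z_D(x)^\top\right)^\top \in \mathbb{R}^{2D}$$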

as a randomized feature map for the kernel.
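The Monte Carlo approximation can be sketched in a few lines of NumPy. The code below draws $\omega_r \sim N(0, \sigma^{-2} I_p)$, builds the concatenated feature vector (all cosines first, then all sines, a permutation of the ordering above that leaves dot products unchanged), and compares $z(x)^\top z(y)$ with the exact Gaussian kernel. The values of $p$, $\sigma$ and $D$ are illustrative choices; the error shrinks roughly like $1/\sqrt{D}$.

```python
import numpy as np

def rff_map(x, omegas):
    """z(x) = D^{-1/2} (cos(w_r^T x), sin(w_r^T x))_{r=1..D}, cosines first, then sines."""
    proj = x @ omegas.T                        # n x D random projections
    return np.hstack([np.cos(proj), np.sin(proj)]) / np.sqrt(omegas.shape[0])

rng = np.random.default_rng(8)
p, sigma, D = 3, 1.5, 20_000
omegas = rng.normal(scale=1.0 / sigma, size=(D, p))   # omega ~ N(0, sigma^{-2} I)

x = rng.normal(size=(5, p))
approx = rff_map(x, omegas) @ rff_map(x, omegas).T
sq = ((x[:, None, :] - x[None, :, :]) ** 2).sum(axis=-1)
exact = np.exp(-sq / (2 * sigma**2))
print(np.abs(approx - exact).max())
```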

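Using the random Fourier features, we approximate the between-class variation function and within-class variation function as follows:

$$\tilde{\kappa}_B(x,y) = z(x)^\top \boldsymbol{\delta}_z \boldsymbol{\delta}_z^\top z(y), \qquad \tilde{\kappa}_W(x,y) = z(x)^\top \Sigma_z\, z(y),$$

where $\boldsymbol{\delta}_z = E_1 z(X) - E_2 z(X)$ and $\Sigma_z = \pi_1 \mathrm{Cov}_1(z(X)) + \pi_2 \mathrm{Cov}_2(z(X))$. Then we can define a randomized version of the eigenvalue problem in (7) with these approximations. Let $\tilde{\alpha}$ denote the solution to the problem with $\tilde{\kappa}_B$ and $\tilde{\kappa}_W$, and define $\boldsymbol{\gamma}_z = \int z(y)\, \tilde{\alpha}(y)\, dP(y)$. Arguments similar to those before lead to the following generalized eigenvalue problem to determine $\boldsymbol{\gamma}_z$:

$$\boldsymbol{\delta}_z \boldsymbol{\delta}_z^\top \boldsymbol{\gamma}_z = \lambda \Sigma_z \boldsymbol{\gamma}_z.$$

Given $\boldsymbol{\gamma}_z$, the approximate Gaussian discriminant obtained via random Fourier features is

$$\tilde{f}(x) = \boldsymbol{\gamma}_z^\top z(x). \tag{22}$$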
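A sample-based sketch of the approximate Gaussian discriminant follows the same recipe as the polynomial case: estimate the mean difference and pooled covariance of the random features, take the direction $\Sigma_z^{-1}\boldsymbol\delta_z$, and score new points by its dot product with $z(x)$. The two classes (common mean, different covariances), the number of features $D$, and the small ridge added to $\Sigma_z$ are all assumptions of this illustration; the ridge only guards against near-singular feature covariances.

```python
import numpy as np

def rff_map(x, omegas):
    """Random Fourier features, cosines first, then sines, scaled by D^{-1/2}."""
    proj = x @ omegas.T
    return np.hstack([np.cos(proj), np.sin(proj)]) / np.sqrt(omegas.shape[0])

rng = np.random.default_rng(9)
p, sigma, D, pi1, pi2 = 2, 1.0, 200, 0.5, 0.5
omegas = rng.normal(scale=1.0 / sigma, size=(D, p))

# Hypothetical classes: common mean, different covariances.
x1 = rng.multivariate_normal([0.0, 0.0], np.eye(2), size=5000)
x2 = rng.multivariate_normal([0.0, 0.0], 4.0 * np.eye(2), size=5000)
z1, z2 = rff_map(x1, omegas), rff_map(x2, omegas)

delta_z = z1.mean(axis=0) - z2.mean(axis=0)
Sigma_z = pi1 * np.cov(z1.T) + pi2 * np.cov(z2.T)
ridge = 1e-3 * np.trace(Sigma_z) / len(delta_z)        # assumption: stabilizes the solve
gamma_z = np.linalg.solve(Sigma_z + ridge * np.eye(len(delta_z)), delta_z)

def f_tilde(x):
    """Approximate Gaussian discriminant gamma_z^T z(x)."""
    return rff_map(x, omegas) @ gamma_z

print(f_tilde(np.array([[0.0, 0.0], [3.0, 3.0]])))
```

By construction, the average score over the class-1 sample exceeds that over the class-2 sample, since their difference equals $\boldsymbol\delta_z^\top(\Sigma_z + \text{ridge}\, I)^{-1}\boldsymbol\delta_z > 0$.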

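Rather than sine and cosine pairs, we could also use phase-shifted cosine features only to approximate the Gaussian kernel, as suggested in Rahimi and Recht (2008a) and Rahimi and Recht (2008b). Let $\tilde{z}(x) = \sqrt{2}\cos(\omega^\top x + b)$ with an additional phase parameter $b$, which is independent of $\omega$ and distributed uniformly on $[0, 2\pi]$. Then using the trigonometric identity $2\cos A \cos B = \cos(A - B) + \cos(A + B)$, we can verify that

$$E_{\omega, b}\left[\tilde{z}(x)\, \tilde{z}(y)\right] = E_{\omega}\left[\cos\{\omega^\top (x - y)\}\right] = K(x,y).$$

Given $\omega$ and $b$, if $X$ is distributed as $N(\mu, \Sigma)$, we can show that

$$E\left[\cos(\omega^\top X + b)\right] = \exp\left(-\frac{1}{2}\omega^\top \Sigma\, \omega\right) \cos(\omega^\top \mu + b).$$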

Thus in the classical LDA setting of for , this Fourier feature lets us focus on the difference in rather than .
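The two facts about phase-shifted cosine features can be checked numerically: averaging over the phase removes the $\cos(u+v+2b)$ term, and the mean of the feature under a normal distribution has the closed form above. This is a sketch with hypothetical values of $\omega$, $b$, $\mu_1$, $\mu_2$, and $\Sigma$; the helper `cos_feature_mean` is an illustrative name, not from the paper.

```python
import numpy as np


def cos_feature_mean(omega, b, mu, Sigma):
    """Closed form of E[sqrt(2) cos(omega'X + b)] for X ~ N(mu, Sigma), omega and b fixed."""
    return np.sqrt(2.0) * np.exp(-0.5 * omega @ Sigma @ omega) * np.cos(omega @ mu + b)


# (i) Averaging over equally spaced phases b removes the cos(u + v + 2b) term,
#     so 2 cos(u + b) cos(v + b) averages to cos(u - v).
u, v = 0.7, -1.1
bs = np.linspace(0.0, 2.0 * np.pi, 64, endpoint=False)
phase_avg = np.mean(2.0 * np.cos(u + bs) * np.cos(v + bs))

# (ii) Monte Carlo check of the closed form (hypothetical omega, b, mu, Sigma).
rng = np.random.default_rng(2)
omega = np.array([0.8, -0.5])
b = rng.uniform(0.0, 2.0 * np.pi)
mu1, mu2 = np.array([1.0, 1.3]), np.zeros(2)
Sigma = np.array([[1.0, 0.3], [0.3, 1.0]])
X = rng.multivariate_normal(mu1, Sigma, size=200_000)
mc_mean = np.mean(np.sqrt(2.0) * np.cos(X @ omega + b))
closed = cos_feature_mean(omega, b, mu1, Sigma)

# (iii) Class mean difference: Sigma enters only through a shared damping factor.
damping = np.exp(-0.5 * omega @ Sigma @ omega)
diff = cos_feature_mean(omega, b, mu1, Sigma) - cos_feature_mean(omega, b, mu2, Sigma)
print(phase_avg, np.cos(u - v), mc_mean, closed, diff)
```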

4 Numerical Studies

This section illustrates the relation between the data distribution and kernel discriminants discussed so far through simulation studies and an application to real data.

4.1 Simulation Study

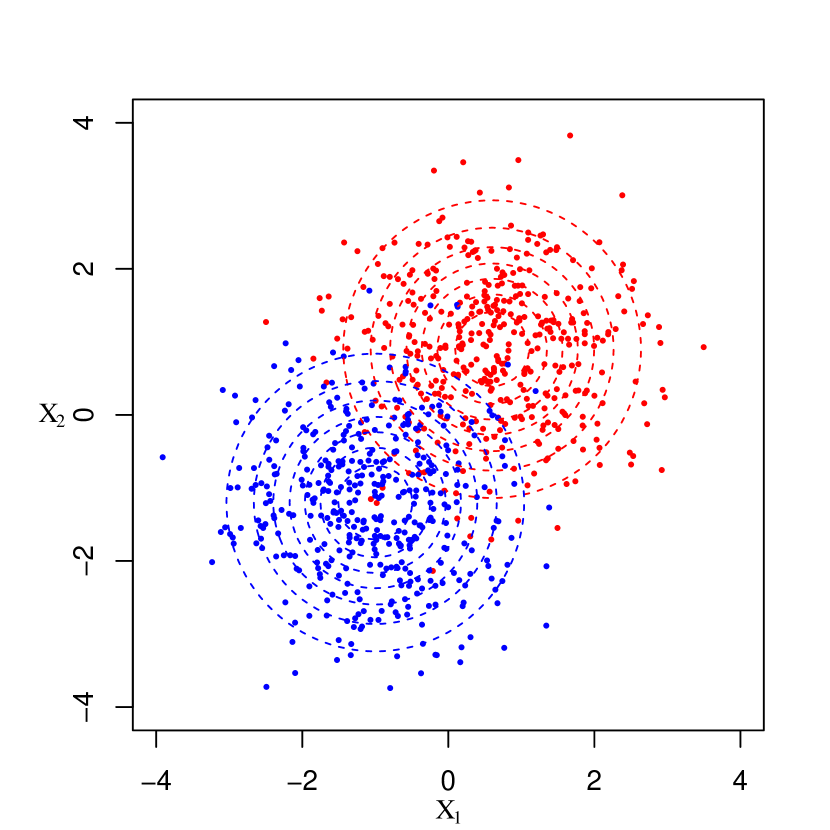

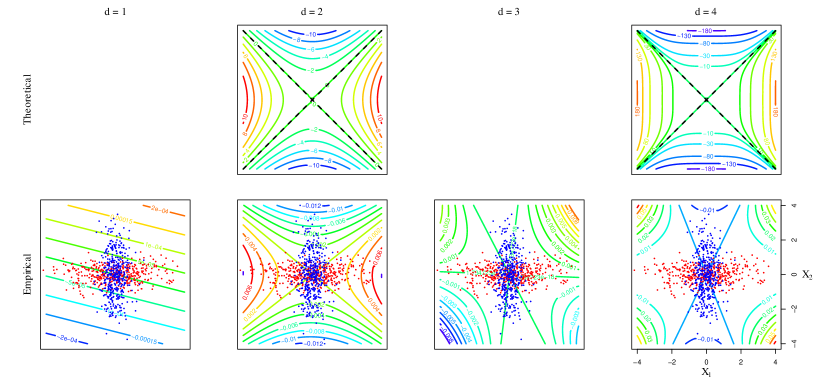

We numerically study the population discriminant functions in (12), (14), and (22) with both polynomial and Gaussian kernels, and examine their relationship with the underlying data distributions for two classes. For illustration, we consider two scenarios where each class follows a bivariate normal distribution. In Scenario 1, two classes have different means ( and ) but the same covariance (), and in Scenario 2, they have the same mean () but different covariances ( and ). Figure 1 shows the scatter plots of samples generated from each scenario with 400 data points in each class (red: class 1 and blue: class 2) under the assumption that two classes are equally likely.

4.1.1 Polynomial Kernel

Under each scenario, we find the population discriminant functions in (12) and (14) with polynomial kernels of degree 1 to 4 and examine the effect of the degree on the discriminants. To determine the homogeneous polynomial discriminant, we first obtain the population moment differences and covariances explicitly and then solve the eigenvalue problem in (13). The inhomogeneous polynomial discriminant is determined similarly. Tables 1 and 2 present the coefficients of the homogeneous and inhomogeneous polynomial discriminants in each scenario, that is, the eigenvectors normalized to unit length.
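A sample analogue of this computation is sketched below. It is a minimal illustration under stated assumptions, not the population calculation used for the tables: moment differences and covariances are estimated from simulated data, the between-class matrix is taken to be rank one so the eigenvector is $W^{-1}d$, coefficients refer to the plain monomial basis (which may be scaled differently from the paper's feature map), the constant feature is dropped because it has zero variance and no mean difference, and the Scenario 1-like means are hypothetical.

```python
import numpy as np


def monomials(X, degrees):
    """Monomial features of bivariate data: x1^i * x2^(k-i), i = k, ..., 0, for each k in degrees."""
    cols = [X[:, 0] ** i * X[:, 1] ** (k - i) for k in degrees for i in range(k, -1, -1)]
    return np.column_stack(cols)


def poly_discriminant(X1, X2, degrees, ridge=1e-10):
    """Unit-length coefficients of the two-class polynomial discriminant in the monomial basis.

    With a rank-one between-class matrix, the leading eigenvector is W^{-1} d, where d is the
    class difference in feature means (moment differences) and W the pooled within-class covariance.
    """
    F1, F2 = monomials(X1, degrees), monomials(X2, degrees)
    d = F1.mean(axis=0) - F2.mean(axis=0)
    W = 0.5 * (np.cov(F1, rowvar=False) + np.cov(F2, rowvar=False))
    beta = np.linalg.solve(W + ridge * np.eye(len(d)), d)
    return beta / np.linalg.norm(beta)


rng = np.random.default_rng(3)
n = 20_000
delta = np.array([1.0, 1.3])  # hypothetical mean difference
X1 = rng.normal(size=(n, 2)) + delta  # class 1: N(delta, I)
X2 = rng.normal(size=(n, 2))  # class 2: N(0, I)

beta_lin = poly_discriminant(X1, X2, degrees=[1])  # homogeneous, degree 1
beta_inh3 = poly_discriminant(X1, X2, degrees=range(1, 4))  # inhomogeneous, degree 3
print(np.round(beta_lin, 4), np.round(beta_inh3, 4))
```

With a common identity covariance, the degree-1 coefficients come out close to the normalized mean difference, mirroring the first column of Table 1.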

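Table 1: Coefficients (normalized to unit length) of the population polynomial discriminants in Scenario 1 with homogeneous and inhomogeneous polynomial kernels of degree 1 to 4; "-" marks terms not included in the feature map.

| Term | Homogeneous, degree 1 | Homogeneous, degree 2 | Homogeneous, degree 3 | Homogeneous, degree 4 | Inhomogeneous, degree 1 | Inhomogeneous, degree 2 | Inhomogeneous, degree 3 | Inhomogeneous, degree 4 |
|---|---|---|---|---|---|---|---|---|
| $x_1$ | 0.6060 | - | - | - | 0.6060 | 0.6060 | 0.6033 | 0.6033 |
| $x_2$ | 0.7954 | - | - | - | 0.7954 | 0.7954 | 0.7919 | 0.7919 |
| $x_1^2$ | - | -0.4461 | - | - | - | 0.0000 | -0.0141 | -0.0141 |
| $x_1x_2$ | - | -0.8376 | - | - | - | 0.0000 | -0.0369 | -0.0369 |
| $x_2^2$ | - | -0.3154 | - | - | - | 0.0000 | -0.0242 | -0.0242 |
| $x_1^3$ | - | - | 0.6412 | - | - | - | -0.0118 | -0.0118 |
| $x_1^2x_2$ | - | - | 0.3105 | - | - | - | -0.0465 | -0.0465 |
| $x_1x_2^2$ | - | - | -0.2277 | - | - | - | -0.0610 | -0.0610 |
| $x_2^3$ | - | - | 0.6637 | - | - | - | -0.0267 | -0.0267 |
| $x_1^4$ | - | - | - | -0.2575 | - | - | - | 0.0000 |
| $x_1^3x_2$ | - | - | - | -0.6186 | - | - | - | 0.0000 |
| $x_1^2x_2^2$ | - | - | - | 0.3860 | - | - | - | 0.0000 |
| $x_1x_2^3$ | - | - | - | -0.6146 | - | - | - | 0.0000 |
| $x_2^4$ | - | - | - | -0.1563 | - | - | - | 0.0000 |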

Scenario 1: Fisher’s linear discriminant analysis is optimal in this scenario. Since the common covariance matrix is a multiple of the identity matrix, the linear discriminant is determined simply by the direction of the mean difference. This gives the projection onto the mean difference as an optimal linear discriminant, defined up to a multiplicative constant. From Table 1, we first notice that the coefficient vector of the population linear discriminant, $(0.6060, 0.7954)^\top$, is the normalized mean difference. Furthermore, the coefficients of the discriminants with inhomogeneous polynomial kernels of degrees 1 and 2 are also proportional to the mean difference.

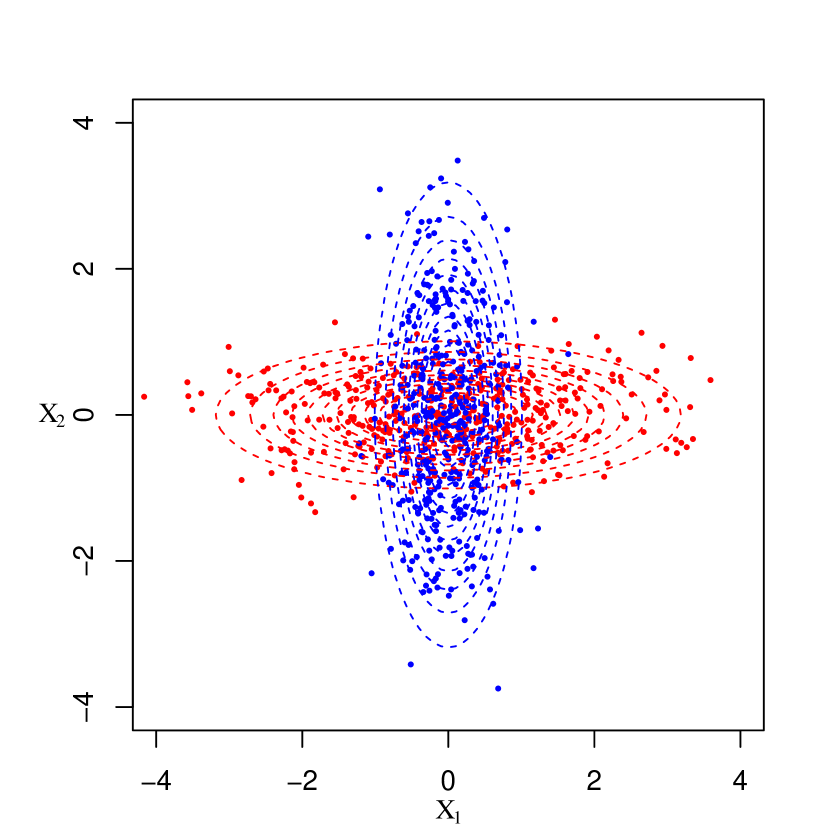

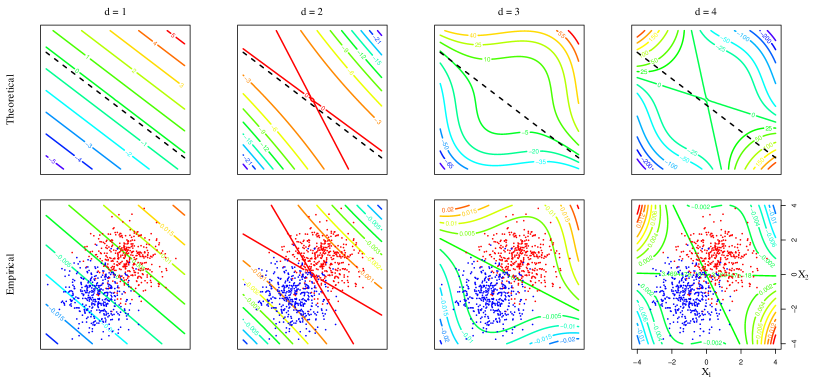

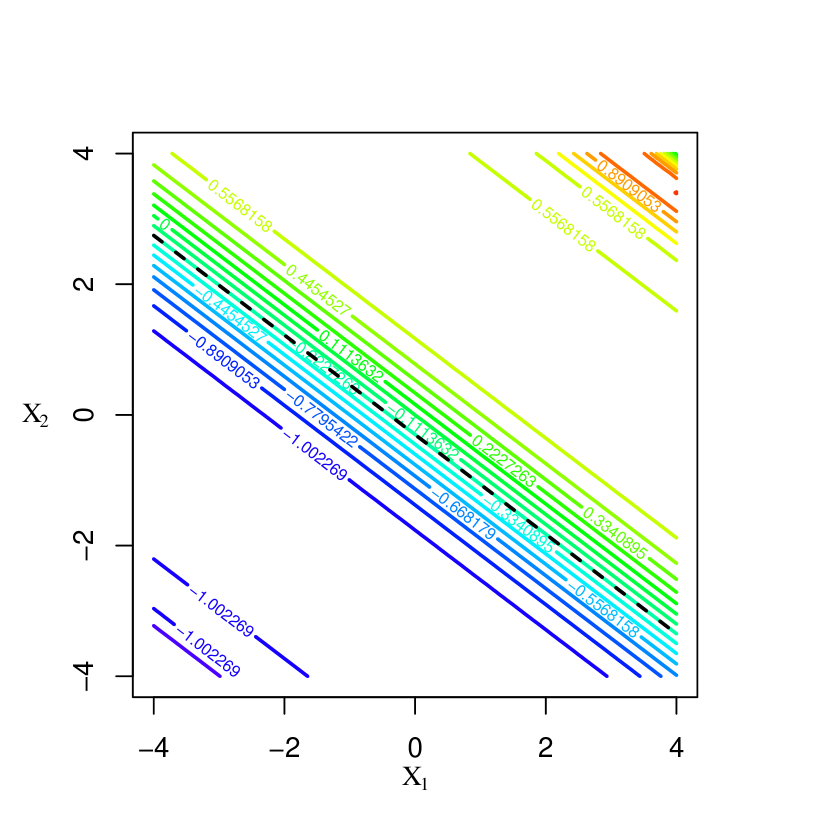

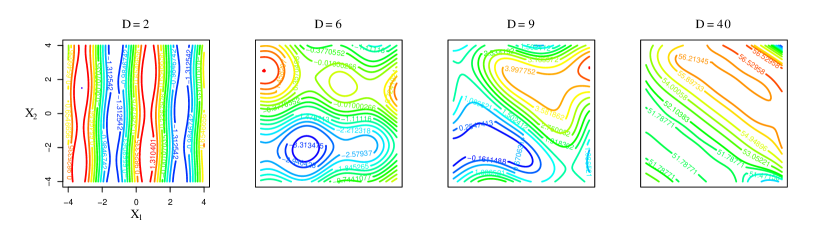

Figures 2 and 3 display the polynomial discriminants identified in Table 1. The first row of Figure 2 shows contours of the population discriminants with homogeneous polynomial kernels; high to low discriminant scores correspond to red to blue contours. The black dashed line is the classification boundary from Fisher’s linear discriminant analysis. The second row of Figure 2 presents the corresponding sample embeddings obtained by performing kernel discriminant analysis on the given samples. Figure 3 shows contours of both versions with inhomogeneous polynomial kernels of degree 2 to 4, omitting degree 1 because it is identical to the linear kernel in Figure 2.

The population discriminants and their sample versions are similar in the shape and direction of change of their contours. With odd-degree homogeneous polynomial kernels, the contours change in the direction of the mean difference, indicating that odd degrees are effective in this setting. The even-degree discriminants, however, have a hyperbolic paraboloid shape and vary in a way that completely masks the class difference. By contrast, the degree does not affect the major direction of change in the population discriminants with inhomogeneous polynomial kernels; their variation appears to occur only in the direction of the mean difference. Table 1 confirms that the resulting discriminants are identical for degrees $2k-1$ and $2k$, $k=1,2$.

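Table 2: Coefficients (normalized to unit length) of the population polynomial discriminants in Scenario 2 with homogeneous and inhomogeneous polynomial kernels of degree 1 to 4; "-" marks terms not included in the feature map.

| Term | Homogeneous, degree 1 | Homogeneous, degree 2 | Homogeneous, degree 3 | Homogeneous, degree 4 | Inhomogeneous, degree 1 | Inhomogeneous, degree 2 | Inhomogeneous, degree 3 | Inhomogeneous, degree 4 |
|---|---|---|---|---|---|---|---|---|
| $x_1$ | 0.00 | - | - | - | 0.00 | 0.0000 | 0.0000 | 0.0000 |
| $x_2$ | 0.00 | - | - | - | 0.00 | 0.0000 | 0.0000 | 0.0000 |
| $x_1^2$ | - | 0.7071 | - | - | - | 0.7071 | 0.7071 | 0.7063 |
| $x_1x_2$ | - | 0.0000 | - | - | - | 0.0000 | 0.0000 | 0.0000 |
| $x_2^2$ | - | -0.7071 | - | - | - | -0.7071 | -0.7071 | -0.7063 |
| $x_1^3$ | - | - | 0.0000 | - | - | - | 0.0000 | 0.0000 |
| $x_1^2x_2$ | - | - | 0.0000 | - | - | - | 0.0000 | 0.0000 |
| $x_1x_2^2$ | - | - | 0.0000 | - | - | - | 0.0000 | 0.0000 |
| $x_2^3$ | - | - | 0.0000 | - | - | - | 0.0000 | 0.0000 |
| $x_1^4$ | - | - | - | 0.7071 | - | - | - | -0.0335 |
| $x_1^3x_2$ | - | - | - | 0.0000 | - | - | - | 0.0000 |
| $x_1^2x_2^2$ | - | - | - | 0.0000 | - | - | - | 0.0000 |
| $x_1x_2^3$ | - | - | - | 0.0000 | - | - | - | 0.0000 |
| $x_2^4$ | - | - | - | -0.7071 | - | - | - | 0.0335 |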

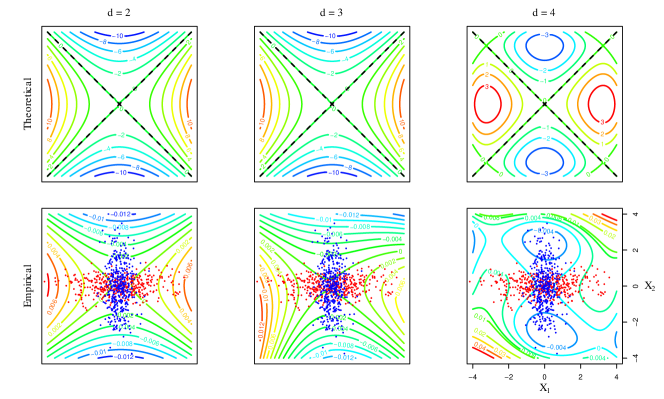

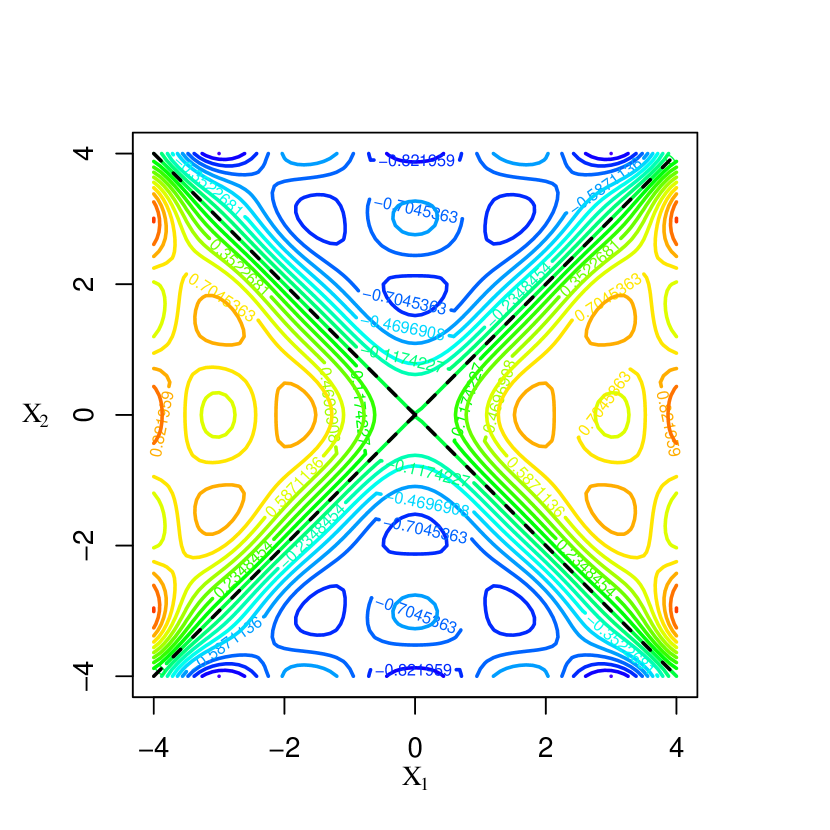

Scenario 2: In this scenario, the true densities yield the optimal decision boundary $x_1^2 = x_2^2$, and the optimal discriminant function is proportional to $x_1^2 - x_2^2$, a homogeneous polynomial of degree 2. In contrast with Scenario 1, even-degree features are discriminative in this setting. Note that the coefficients of the homogeneous quadratic discriminant and of the inhomogeneous discriminants of degrees 2 and 3 in Table 2 are proportional to those of the optimal discriminant. Odd-degree homogeneous polynomials produce a degenerate discriminant in this setting. The homogeneous quadratic discriminant, $0.7071(x_1^2 - x_2^2)$, is a normalized version of the optimal discriminant. With the degree 4 homogeneous polynomial kernel, we obtain $0.7071(x_1^4 - x_2^4) = 0.7071(x_1^2 - x_2^2)(x_1^2 + x_2^2)$, which has the optimal discriminant as a factor. Contours of these polynomial discriminants are displayed in the first row of Figure 4. The black dashed lines are the optimal decision boundaries. The second row of Figure 4 presents the corresponding nonlinear kernel embeddings of degree 1 to 4 induced by the samples. Figure 5 shows contours of both versions (theoretical in the first row and empirical in the second row) with inhomogeneous polynomial kernels of degree 2 to 4, omitting the degenerate linear case in Table 2.
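Why the optimal discriminant is a homogeneous quadratic can be checked for one concrete, hypothetical choice of covariances: two zero-mean normals whose variances are swapped between the axes, so the covariance determinants are equal and the log-determinant term cancels from the log-likelihood ratio. The values `a` and `b` below are assumptions, since the scenario's covariance matrices are not restated in this section.

```python
import numpy as np


def log_normal_density(X, Sigma):
    """Log density of N(0, Sigma) at each row of X."""
    p = Sigma.shape[0]
    _, logdet = np.linalg.slogdet(Sigma)
    quad = np.einsum("ij,jk,ik->i", X, np.linalg.inv(Sigma), X)
    return -0.5 * (quad + logdet + p * np.log(2.0 * np.pi))


a, b = 1.0, 4.0  # hypothetical variances; class 2 swaps the axes of class 1
S1, S2 = np.diag([a, b]), np.diag([b, a])
X = np.random.default_rng(7).normal(size=(10, 2))

# Bayes discriminant with equal priors: the log-likelihood ratio.
llr = log_normal_density(X, S1) - log_normal_density(X, S2)
q = X[:, 0] ** 2 - X[:, 1] ** 2
c = 0.5 * (1.0 / b - 1.0 / a)  # llr = c * (x1^2 - x2^2), so the boundary is x1^2 = x2^2
# The quartic x1^4 - x2^4 contains the optimal discriminant as a factor.
quartic = X[:, 0] ** 4 - X[:, 1] ** 4
print(np.round(llr / q, 6), c)
```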

As in Scenario 1, the population discriminant functions and their sample counterparts in Figures 4 and 5 are similar in the shape and direction of change of their contours. The contours of the population quadratic and quartic discriminants in Figure 4 are symmetric about each coordinate axis. Quadratic features contain all the information necessary for discrimination in this scenario. As shown in Figure 4, even-degree features successfully discriminate the two classes, while odd-degree features fail completely. Nonlinear inhomogeneous polynomial kernels with even-degree features enable proper classification, as illustrated in Figure 5. Inhomogeneous polynomial kernels of degrees $2k$ and $2k+1$ produce identical discriminants in this setting.

4.1.2 Gaussian Kernel

We examine Gaussian discriminant functions under each scenario using the two types of approximation to the Gaussian kernel discussed earlier.

Deterministic representation: Truncating the deterministic representation of the Gaussian kernel at a certain degree leads to the population polynomial discriminant with the inhomogeneous polynomial kernel of the same degree. Thus, to approximate the population Gaussian discriminant, we need to choose an appropriate truncation degree. As the truncation degree increases, the largest (and only nonzero) eigenvalue, a measure of class separation, cannot decrease, because the feature space only grows. We may stop at the degree where the increment in this eigenvalue becomes negligible.
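The stopping rule can be sketched on simulated data. Following the text, truncation at degree $d$ corresponds to inhomogeneous polynomial features up to degree $d$, so the sketch monitors the sample eigenvalue $d^\top W^{-1} d$ of those features as the degree grows. The relative tolerance `rel_tol`, the maximum degree, the Scenario 1-like data, and the feature standardization (added only for numerical conditioning) are all assumptions for illustration.

```python
import numpy as np


def monomials(X, degrees):
    """Monomial features x1^i * x2^(k-i), i = k, ..., 0, for each total degree k in degrees."""
    cols = [X[:, 0] ** i * X[:, 1] ** (k - i) for k in degrees for i in range(k, -1, -1)]
    return np.column_stack(cols)


def separation(X1, X2, degree, ridge=1e-10):
    """Leading eigenvalue d' W^{-1} d for inhomogeneous polynomial features up to `degree`."""
    F1, F2 = monomials(X1, range(1, degree + 1)), monomials(X2, range(1, degree + 1))
    scale = np.vstack([F1, F2]).std(axis=0)  # per-feature scaling for conditioning
    F1, F2 = F1 / scale, F2 / scale
    d = F1.mean(axis=0) - F2.mean(axis=0)
    W = 0.5 * (np.cov(F1, rowvar=False) + np.cov(F2, rowvar=False))
    return float(d @ np.linalg.solve(W + ridge * np.eye(len(d)), d))


def choose_degree(X1, X2, max_degree=5, rel_tol=0.01):
    """Stop at the first degree whose next increment in the eigenvalue is below rel_tol (relative)."""
    lams = [separation(X1, X2, 1)]
    for deg in range(2, max_degree + 1):
        lams.append(separation(X1, X2, deg))
        if lams[-1] - lams[-2] < rel_tol * lams[-2]:
            return deg - 1, lams
    return max_degree, lams


rng = np.random.default_rng(4)
X1 = rng.normal(size=(2000, 2)) + np.array([1.0, 1.3])  # hypothetical class 1
X2 = rng.normal(size=(2000, 2))  # hypothetical class 2
degree, lams = choose_degree(X1, X2)
print(degree, np.round(lams, 4))
```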

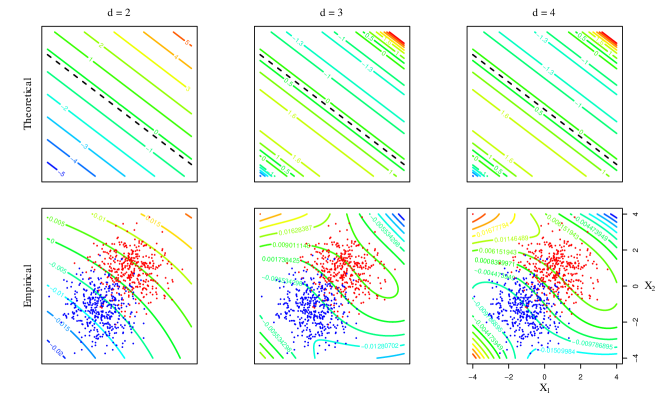

Figure 6 shows how this eigenvalue changes with the degree in each scenario. In Scenario 1, since a linear component is essential, the eigenvalue increases sharply at degree 1 and then gradually as odd-degree features are added. By contrast, in Scenario 2, the eigenvalue increases steadily as even-degree features are added. Overall, the magnitude of the maximum ratio of between-class to within-class variation indicates that Scenario 1 is an inherently easier problem than Scenario 2. Figure 7 displays contours of the approximate Gaussian discriminants for each scenario, which suggest that the Gaussian kernel can capture the difference between the classes effectively in both scenarios.

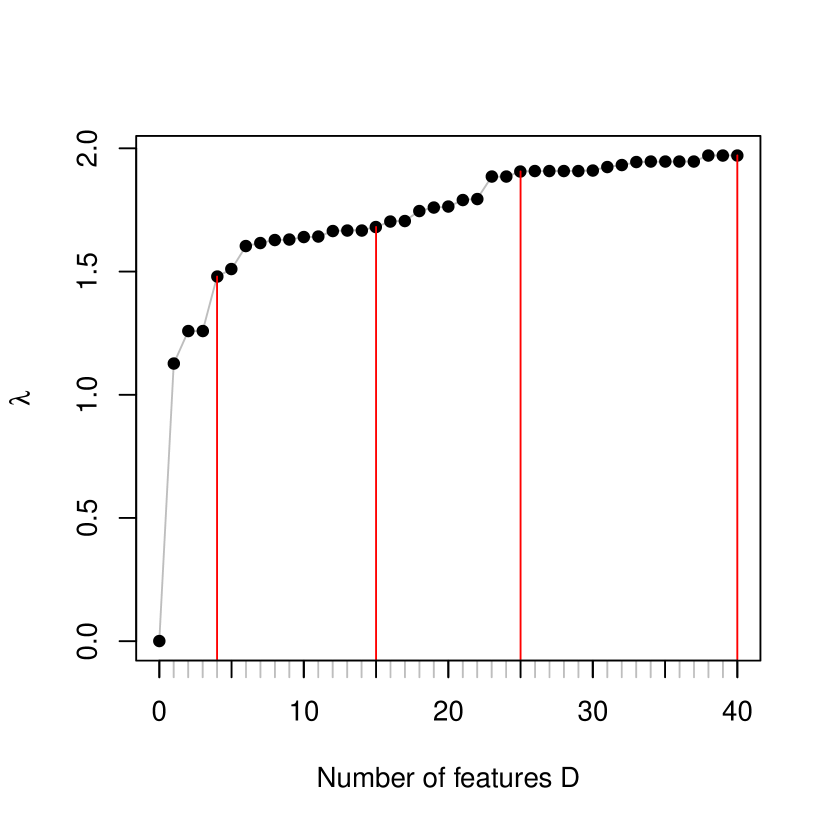

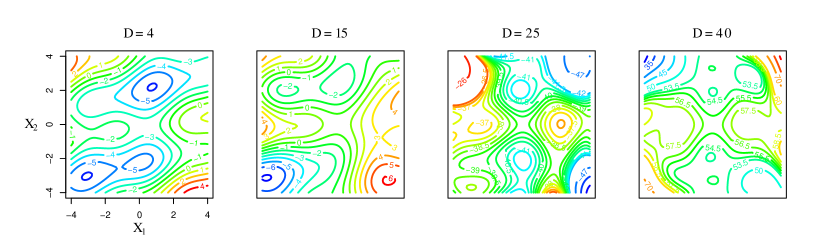

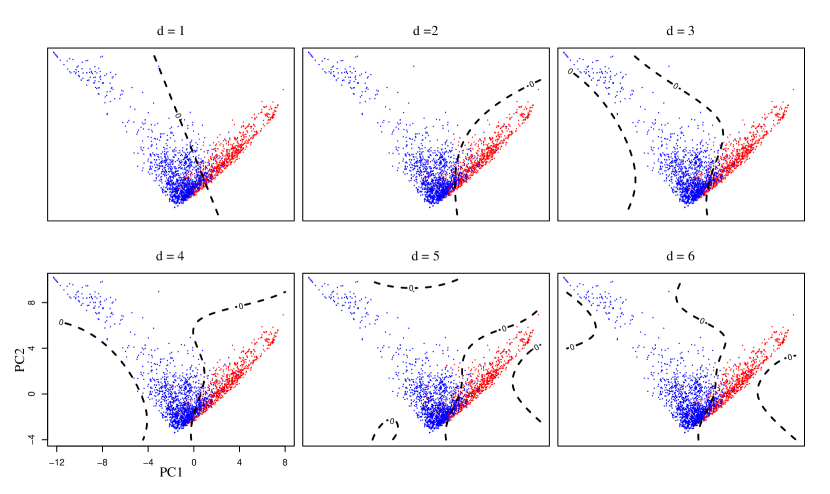

Random Fourier feature representation: While polynomial features in the deterministic representation are naturally ordered by degree, random Fourier features have no natural order. As with the degree for deterministic features, however, the Rayleigh quotient as a measure of class separation, or equivalently the corresponding eigenvalue, does not decrease as we add more random features. We numerically examine the effect of the number of random features on the eigenvalue and monitor its increment.

For both scenarios, we randomly generated 40 frequency vectors from the normal distribution in (21) and 40 phase parameters from Uniform$[0, 2\pi]$, and defined the corresponding phase-shifted cosine features. Figure 8 shows how the eigenvalue changes with the number of random features in each scenario. Figure 9 shows how the approximate Gaussian discriminant in (22) changes as the number of random features increases from 2 to 40 under Scenario 1, and Figure 10 shows the corresponding change under Scenario 2. The snapshots in Figures 9 and 10 were chosen by monitoring the increment in the eigenvalue as more features were added; the numbers of features used are marked by red vertical lines in Figure 8 for reference. As the number of features increases, the approximate Gaussian discriminants tend to better approximate the optimal classification boundaries. Compared with the polynomial approximation, the eigenvalues level off quickly as random features are added, and their maximum values are far smaller than their counterparts with polynomial features in both scenarios. This is partly due to the randomness in the choice of frequencies and phases, and partly because the class difference is not harmonic in nature. In summary, Fourier features are not as effective as polynomial features in these two settings.
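Monitoring the eigenvalue as random features are added can be sketched as follows. The features are generated once and the eigenvalue is recomputed from the first $m$ of them, so the feature sets are nested and the path is nondecreasing. The sketch uses sample estimates, a rank-one between-class matrix, a pooled within-class covariance with a tiny ridge, and hypothetical values of $\sigma$, the sample size, and the class distributions; only the count of 40 features comes from the text.

```python
import numpy as np


def cos_features(X, omegas, phases):
    """Phase-shifted cosine features sqrt(2) cos(w_j'x + b_j), one column per (w_j, b_j)."""
    return np.sqrt(2.0) * np.cos(X @ omegas.T + phases)


def eigenvalue_path(F1, F2, ridge=1e-8):
    """Leading eigenvalue d' W^{-1} d using the first m features, for m = 1, ..., M."""
    d = F1.mean(axis=0) - F2.mean(axis=0)
    W = 0.5 * (np.cov(F1, rowvar=False) + np.cov(F2, rowvar=False)) + ridge * np.eye(len(d))
    return np.array([d[:m] @ np.linalg.solve(W[:m, :m], d[:m]) for m in range(1, len(d) + 1)])


rng = np.random.default_rng(5)
M, sigma, n = 40, 1.0, 400  # 40 features as in the text; sigma and n hypothetical
omegas = rng.normal(scale=1.0 / sigma, size=(M, 2))
phases = rng.uniform(0.0, 2.0 * np.pi, size=M)
X1 = rng.normal(size=(n, 2)) + np.array([1.0, 1.3])  # hypothetical class 1
X2 = rng.normal(size=(n, 2))  # hypothetical class 2
lams = eigenvalue_path(cos_features(X1, omegas, phases), cos_features(X2, omegas, phases))
gains = np.diff(lams, prepend=0.0)  # increment contributed by each added feature
print(np.round(lams, 3))
```

Plotting `lams` against $m$ gives a curve of the kind described for Figure 8, and small entries of `gains` indicate where adding features stops paying off.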

4.2 Real Data Example

In this section, we carry out a kernel discriminant analysis on the spam email data set from the UCI Machine Learning Repository (Dua and Graff 2017). We examine the geometry of sample kernel discriminants with various kernels as in the simulation study, and test the performance of the induced classifiers to see the impact of the kernel choice and kernel parameters.

The data set contains 4601 email messages, of which 60.6% are regular email and 39.4% are spam. The task is to detect whether a given email is spam, in order to filter it out, using 57 available predictors: 48 predictors give the percentage of words in the email that match a given word (e.g., credit, you, free), 6 give the percentage of characters in the email that match a given character (e.g., !, $), and the remaining three are the longest, average, and total length of uninterrupted sequences of capital letters in the message.

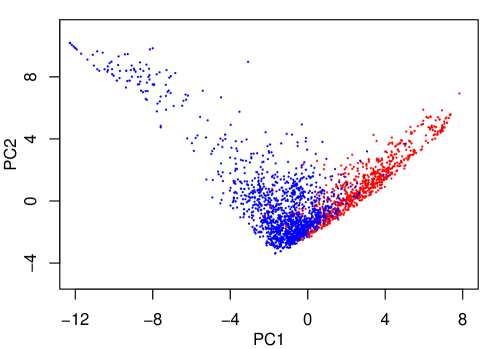

For ease of illustration, we start with a low-dimensional representation of the data using principal components and construct kernel discriminants with those components rather than with the individual predictors. We observed that the predictors measuring relative frequencies of words are strongly skewed. To alleviate the skewness, we applied a logit transformation before computing the principal components. Many predictors also contain a large number of zeros, since a given word does not necessarily appear in every email message. To handle this issue, we replaced the zeros in each predictor with half of its smallest nonzero value before taking the logit transformation, and carried out a principal component analysis on the transformed data using their correlation matrix. We then split the principal component scores into training and test sets of about 60% and 40%, respectively, and evaluated the performance of the trained classifiers on the test set.
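The preprocessing steps can be sketched in NumPy. This is an illustrative reconstruction under assumptions, not the authors' pipeline: the input is taken to be a matrix of percentage-valued predictors in $[0, 100)$ with at least one nonzero entry per column (the capital-run-length predictors are not percentages and are left out here), percentages are converted to proportions before the logit, and simulated data stand in for the spam predictors. The function names are hypothetical.

```python
import numpy as np


def fill_zeros_and_logit(P):
    """P: (n, q) array of percentages in [0, 100), each column having some nonzero entry.

    Zeros in each column are replaced by half of that column's smallest nonzero value;
    the percentages are then converted to proportions and logit-transformed.
    """
    Q = np.asarray(P, dtype=float) / 100.0
    out = np.empty_like(Q)
    for j in range(Q.shape[1]):
        col = Q[:, j].copy()
        col[col == 0] = 0.5 * col[col > 0].min()
        out[:, j] = np.log(col / (1.0 - col))
    return out


def pca_correlation(Z, k):
    """Scores on the first k principal components of the correlation matrix, and their variance share."""
    Zs = (Z - Z.mean(axis=0)) / Z.std(axis=0, ddof=1)
    evals, evecs = np.linalg.eigh(np.corrcoef(Z, rowvar=False))
    order = np.argsort(evals)[::-1]  # eigh returns eigenvalues in ascending order
    evals, evecs = evals[order], evecs[:, order]
    return Zs @ evecs[:, :k], evals[:k].sum() / evals.sum()


rng = np.random.default_rng(6)
P = rng.uniform(0.0, 5.0, size=(200, 6))
P[rng.random(P.shape) < 0.4] = 0.0  # hypothetical sparse percentages with many zeros
scores, share = pca_correlation(fill_zeros_and_logit(P), k=2)

# About 60% training / 40% test split of the scores.
idx = rng.permutation(len(scores))
n_train = int(0.6 * len(scores))
train, test = scores[idx[:n_train]], scores[idx[n_train:]]
```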

Figure 11 shows the scores on the first two principal components for the training data. Together, these two components explain 26% of the variation in the data. The score distributions for the two types of email are skewed and overlap substantially, with very different covariances, suggesting that a nonlinear boundary is needed for classification.

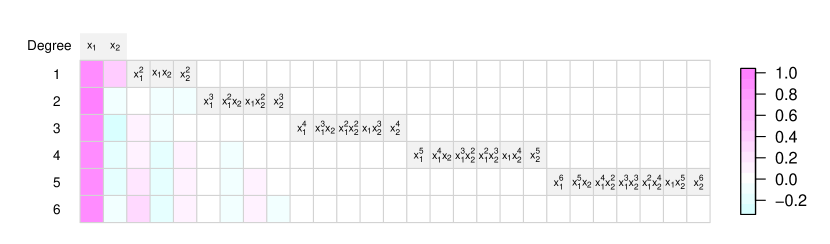

We performed a kernel discriminant analysis on the training data using inhomogeneous polynomial kernels of degree 1 to 6 and obtained the corresponding polynomial discriminants. For computational efficiency, we estimated the moment differences and covariance matrix directly from the training data and solved a sample version of (15) instead of (2). Figure 12 displays the estimated discriminant coefficients, normalized to unit length, as a color map. Higher-order terms, especially those beyond cubic, have negligible coefficients. To use the discriminant scores for spam filtering, we need a threshold, which we chose by minimizing the training error. Figure 13 displays the decision boundaries of the final discriminant functions with the chosen thresholds. All nonlinear polynomial discriminants in the figure have similar boundaries, at least in the region where the data density is high. Table 3 presents their test error rates, along with the rates of misclassifying spam as regular email and vice versa. The fifth- and sixth-order polynomial discriminants have the lowest test error rate in this case. However, the reduction in the test error rate beyond the third order is marginal, as we may expect from Figure 12 and from the diminishing increase in the ratio with degree shown in Table 3. We may well consider the cubic discriminant sufficient for this application: it provides a good compromise between the two kinds of errors while maintaining simplicity.
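Choosing the threshold by minimizing the training error can be sketched as a scan over candidate cut points. This sketch assumes that higher discriminant scores indicate spam (the sign of a discriminant is arbitrary, so the orientation may need flipping), uses midpoints between consecutive distinct scores as candidates, and runs on a tiny hypothetical example; `best_threshold` and `class_error_rates` are illustrative names.

```python
import numpy as np


def best_threshold(scores, y):
    """Threshold minimizing training error when scores above it are labeled spam (y = 1).

    Candidates are midpoints between consecutive distinct scores plus one value
    below the minimum and one above the maximum.
    """
    s = np.unique(scores)  # sorted distinct scores
    cands = np.concatenate(([s[0] - 1.0], (s[:-1] + s[1:]) / 2.0, [s[-1] + 1.0]))
    errors = np.array([np.mean((scores > t).astype(int) != y) for t in cands])
    i = int(np.argmin(errors))
    return cands[i], errors[i]


def class_error_rates(scores, y, t):
    """Rates of misclassified spam (spam called regular) and misclassified regular email."""
    pred = (scores > t).astype(int)
    return np.mean(pred[y == 1] == 0), np.mean(pred[y == 0] == 1)


scores = np.array([0.1, 0.4, 0.35, 0.8, 0.9])  # hypothetical discriminant scores
y = np.array([0, 0, 0, 1, 1])  # 1 = spam, 0 = regular
t, err = best_threshold(scores, y)
print(t, err, class_error_rates(scores, y, t))
```

On real data the chosen threshold trades off the two class-specific error rates reported in Table 3, since only the overall training error is minimized.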

Table 3: Ratio, training error, and test error rates of the inhomogeneous polynomial discriminants of degree 1 to 6 for the spam data.

| Degree | Ratio | Training error | Test error: misclassified spam | Test error: misclassified regular | Test error: overall |
|---|---|---|---|---|---|
| 1 | 4.3154 | 0.1381 | 0.2218 | 0.0744 | 0.1325 |
| 2 | 6.4226 | 0.1163 | 0.1887 | 0.0645 | 0.1135 |
| 3 | 7.2928 | 0.1116 | 0.1736 | 0.0645 | 0.1075 |
| 4 | 7.7043 | 0.1095 | 0.1377 | 0.0959 | 0.1124 |
| 5 | 8.0941 | 0.1058 | 0.1612 | 0.0672 | 0.1042 |
| 6 | 8.3192 | 0.1033 | 0.1543 | 0.0717 | 0.1042 |
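As a consistency check on Table 3, the overall test error should be the class-proportion-weighted average of the two class-specific error rates. The sketch below assumes that the test set roughly preserves the 39.4% spam share of the full data set, since the exact test-set composition is not reported here; under that assumption it recovers the overall column to within about 0.0001.

```python
import numpy as np

# Test error rates from Table 3 (degrees 1 to 6).
spam_err = np.array([0.2218, 0.1887, 0.1736, 0.1377, 0.1612, 0.1543])
regular_err = np.array([0.0744, 0.0645, 0.0645, 0.0959, 0.0672, 0.0717])
overall = np.array([0.1325, 0.1135, 0.1075, 0.1124, 0.1042, 0.1042])

# Assumption: the test set has roughly the spam share of the full data set (39.4%).
pi_spam = 0.394
implied = pi_spam * spam_err + (1.0 - pi_spam) * regular_err
print(np.round(implied, 4))
```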

5 Discussion

We have examined the population version of kernel discriminant analysis and the generalized eigenvalue problem with between-class and within-class kernel covariance operators to shed light on the relation between the data distribution and the resulting kernel discriminant. Our analysis shows that polynomial discriminants capture the difference between two distributions through their moments of the orders specified by the polynomial kernel. On the other hand, depending on the representation of the Gaussian kernel, Gaussian discriminants encode the class difference using either all polynomial features or Fourier features of random projections.

Whenever the data contain some discriminative predictors by design, as is typically the case, kernels of a simple form aligned with those predictors will work well. For instance, if we use polynomial kernels in such a setting, we expect the Rayleigh quotient, as a measure of class separation, to saturate quickly with degree and low-order polynomial features to prevail. The geometric perspective of kernel discriminant analysis presented in this paper suggests that the ideal kernel for discrimination retains only those features necessary for describing the difference between the two distributions. This promotes a compositional view of kernels and further points to the potential benefits of selecting kernel components relevant to discrimination, similar to the way feature selection is incorporated into linear discriminant analysis through sparsity-inducing penalties (Clemmensen et al. 2011, Cai and Liu 2011). For instance, Kim et al. (2006) formulated a convex optimization problem for kernel selection in KDA. It is also of interest to compare this kernel selection approach with other approaches to the numerical approximation of kernel matrices themselves, such as the Nyström approximation (Williams and Seeger 2001, Drineas and Mahoney 2005) or random projections (Ye et al. 2017).

As a related issue, how the Rayleigh quotient maximized in kernel discriminant analysis relates to the error rate of the induced classifier has not been formally examined, except in a few special cases. It is of particular interest how this relation changes with the form of the kernel and the associated features, given the difference between two distributions.

While our analysis has focused on the case of two classes, it can be generalized to multiple classes, where more than one kernel discriminant needs to be considered and properly combined to make a decision. We leave this extension for future research.

Acknowledgements

This research was supported in part by the National Science Foundation under grant DMS-15-13566. We thank Professor Mikyoung Lim at KAIST for helpful conversations on linear operators.

References


- Aronszajn (1950) Aronszajn, N. (1950). Theory of reproducing kernels, Transactions of the American Mathematical Society 68(3): 337–404.

- Baudat and Anouar (2000) Baudat, G. and Anouar, F. (2000). Generalized discriminant analysis using a kernel approach, Neural Computation 12(10): 2385–2404.

- Cai and Liu (2011) Cai, T. and Liu, W. (2011). A direct estimation approach to sparse linear discriminant analysis, Journal of the American Statistical Association 106(496): 1566–1577.

- Clemmensen et al. (2011) Clemmensen, L., Hastie, T., Witten, D. and Ersboll, B. (2011). Sparse discriminant analysis, Technometrics 53(4): 406–413.

- Drineas and Mahoney (2005) Drineas, P. and Mahoney, M. W. (2005). On the Nyström method for approximating a Gram matrix for improved kernel-based learning, J. Mach. Learn. Res. 6: 2153–2175.

- Dua and Graff (2017) Dua, D. and Graff, C. (2017). UCI machine learning repository. http://archive.ics.uci.edu/ml

- Gu (2002) Gu, C. (2002). Smoothing Spline ANOVA Models, New York: Springer.

- Hofmann et al. (2008) Hofmann, T., Schölkopf, B. and Smola, A. J. (2008). Kernel methods in machine learning, The Annals of Statistics 36(3): 1171–1220.

- Holmquist (1996) Holmquist, B. (1996). The $d$-variate vector Hermite polynomial of order $k$, Linear Algebra and its Applications 237: 155–190.

- Joachims (2002) Joachims, T. (2002). Optimizing search engines using clickthrough data, Proceedings of the Eighth ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, KDD ’02, Association for Computing Machinery, New York, NY, USA, pp. 133–142.

- Kim et al. (2006) Kim, S., Magnani, A. and Boyd, S. (2006). Optimal kernel selection in kernel Fisher discriminant analysis, Proceedings of the 23rd international conference on Machine learning, ACM, pp. 465–472.

- Liang and Lee (2013) Liang, Z. and Lee, Y. (2013). Eigen-analysis of nonlinear PCA with polynomial kernels, Statistical Analysis and Data Mining 6: 529–544.

- Mika et al. (1999) Mika, S., Rätsch, G., Weston, J., Schölkopf, B. and Müller, K.-R. (1999). Fisher discriminant analysis with kernels, Neural networks for signal processing IX: Proceedings of the 1999 IEEE signal processing society workshop, IEEE, pp. 41–48.

- Rahimi and Recht (2008a) Rahimi, A. and Recht, B. (2008a). Random features for large-scale kernel machines, Advances in neural information processing systems, pp. 1177–1184.

- Rahimi and Recht (2008b) Rahimi, A. and Recht, B. (2008b). Uniform approximation of functions with random bases, 2008 46th Annual Allerton Conference on Communication, Control, and Computing, pp. 555–561.

- Rudin (2017) Rudin, W. (2017). Fourier analysis on groups, Courier Dover Publications.

- Schölkopf and Smola (2002) Schölkopf, B. and Smola, A. J. (2002). Learning with Kernels, MIT Press, Cambridge, MA.

- Schölkopf et al. (1998) Schölkopf, B., Smola, A. J. and Müller, K. R. (1998). Nonlinear component analysis as a kernel eigenvalue problem, Neural Computation 10: 1299–1319.

- Scott and Longuet-Higgins (1990) Scott, G. L. and Longuet-Higgins, H. C. (1990). Feature grouping by relocalisation of eigenvectors of proximity matrix, Proceedings of British Machine Vision Conference, pp. 103–108.

- Shawe-Taylor and Cristianini (2004) Shawe-Taylor, J. and Cristianini, N. (2004). Kernel Methods for Pattern Analysis, Cambridge University Press, USA.

- Shi et al. (2009) Shi, T., Belkin, M. and Yu, B. (2009). Data spectroscopy: eigenspaces of convolution operators and clustering, The Annals of Statistics 37(6B): 3960–3984.

- Vapnik (1995) Vapnik, V. N. (1995). The Nature of Statistical Learning Theory, Springer, New York.

- von Luxburg (2007) von Luxburg, U. (2007). A tutorial on spectral clustering, Statistics and Computing 17(4): 395–416.

- Wahba (1990) Wahba, G. (1990). Spline models for observational data, Philadelphia, PA: Society for Industrial and Applied Mathematics.

- Williams and Seeger (2001) Williams, C. K. I. and Seeger, M. (2001). Using the Nyström method to speed up kernel machines, in T. K. Leen, T. G. Dietterich and V. Tresp (eds), Advances in Neural Information Processing Systems 13, MIT Press, pp. 682–688.

- Ye et al. (2017) Ye, H., Li, Y., Chen, C. and Zhang, Z. (2017). Fast Fisher discriminant analysis with randomized algorithms, Pattern Recognition 72: 82–92.

- Zhu et al. (1998) Zhu, H., Williams, C. K., Rohwer, R. and Morciniec, M. (1998). Gaussian regression and optimal finite dimensional linear models, in C. Bishop (ed.), Neural Networks and Machine Learning, Springer, Berlin, pp. 167–184.