This work has been submitted to the IEEE for possible publication. Copyright may be transferred without notice, after which this version may no longer be accessible

This work was supported by Petróleo Brasileiro S/A - Petrobras (nº 0050.0124520.23.9), Fundação de Apoio A Física e A Química (FAFQ), Universidade de São Paulo (USP), Coordenação de Aperfeiçoamento de Pessoal de Nível Superior (CAPES), grant nº 88887.992906/2024-00, and National Council for Scientific and Technological Development (CNPq), grants nº 309201/2021-7 and 406949/2021-2.

Corresponding author: João Manoel Herrera Pinheiro (e-mail: joao.manoel.pinheiro@usp.br).

The Impact of Feature Scaling In Machine Learning: Effects on Regression and Classification Tasks

Abstract

This research addresses the critical lack of comprehensive studies on feature scaling by systematically evaluating 12 scaling techniques - including several less common transformations - across 14 different Machine Learning algorithms and 16 datasets for classification and regression tasks. We meticulously analyzed impacts on predictive performance (using metrics such as accuracy, MAE, MSE, and R²) and computational costs (training time, inference time, and memory usage). Key findings reveal that while ensemble methods (such as Random Forest and gradient boosting models like XGBoost, CatBoost and LightGBM) demonstrate robust performance largely independent of scaling, other widely used models such as Logistic Regression, SVMs, TabNet, and MLPs show significant performance variations highly dependent on the chosen scaler. This extensive empirical analysis, with all source code, experimental results, and model parameters made publicly available to ensure complete transparency and reproducibility, offers model-specific crucial guidance to practitioners on the need for an optimal selection of feature scaling techniques.

Index Terms:

Data preprocessing, feature scaling, machine learning algorithms, normalization, standardization.=-21pt

I Introduction

Machine Learning progress has been notorious in several domains of knowledge engineering, notably driven by the rise of big data [1, 2], its applications in healthcare [3, 4, 5, 6], forecasting [7, 8, 9, 10], precision agriculture [11], wireless sensor networks [12], language tasks [13, 14, 15] and many other domains [16, 17, 18, 19, 20, 21]. All different applications compose the field of Machine Learning [14], which has become a major subarea of computer science and statistics due to its crucial role in the modern world [22]. Although these methods hold immense potential for the advancement of predictive modeling, their improper application has introduced significant obstacles [23, 24, 25].

One such obstacle is the indiscriminate use of preprocessing techniques, particularly feature scaling [26]. Feature scaling is a mapping technique in a preprocessing stage by which the user tries to give all attributes the same weight [27, 28]. In some applications, this data transformation can improve the performance of Machine Learning models [29].

Consequently, applying a scaling method without a careful evaluation of its suitability for the specific problem and model may be inadvisable and could negatively impact results. This practice risks undermining the validity of the claims regarding model performance and may lead to a feedback loop of overconfidence in the results, known as overfitting [30].

Reproducibility is a critical problem in Machine Learning [31, 32, 33, 34]. It is often undermined by factors such as missing data or code, inconsistent standards, and sensitivity to training conditions [35]. Feature scaling, in particular, if not documented or applied correctly, can significantly affect model performance and hinder the replication of results. The absence of rigorous evaluation not only hampers reproducibility, but can also lead to the adoption of practices with poor generalizability across different datasets or domains.

As Machine Learning methods continue to shape research by their use in a wide range of applications, it is essential to critically assess and justify each step of the modeling pipeline, including feature scaling, to ensure robust and replicable findings [36].

The primary objective of this study is to evaluate the impact of different data scaling methods on the training process and performance metrics of various Machine Learning algorithms across multiple datasets. We employ 14 widely used Machine Learning models for tabular data, including Linear Regression, Logistic Regression, Support Vector Machines (SVM), K-Nearest Neighbors, Multilayer Perceptron, Random Forest, TabNet, Naive Bayes, Classification and Regression Trees (CART), Gradient Boosting Trees, AdaBoost, LightGBM, CatBoost, and XGBoost. These models were evaluated using 12 different data scaling techniques, in addition to a baseline without scaling, across 16 datasets covering both classification and regression tasks. The selected models represent the state of the art in tabular data analysis, offering a favorable balance between predictive performance and computational efficiency, often outperforming deep learning techniques in this context [37, 38, 39, 40].

In Section II we cover some related work in similar studies while Section III explains more about each algorithm, each feature scaling technique, the study diagram, evaluation metrics, and how the models were trained. In Section IV we represent the final results of this study and some discussion. The limitations of our study are discussed in Section V. Lastly, in Section VI we give a final conclusion of the current experiments and future works.

II Related Work

Despite its fundamental role in Machine Learning pipelines, the impact of feature scaling remains an underexplored area in the literature. Most existing studies examine only a limited number of algorithms [41] or datasets, and often provide minimal analysis of the specific effects of different scaling techniques [42] on each particular algorithm’s performance. Studies that comprehensively evaluate various scaling methods across a broad range of models and datasets, such as the approach taken in this work, are scarce. In many Machine Learning cases, preprocessing is briefly mentioned, with scaling treated as a routine step rather than a variable worthy of in-depth investigation.

For some Machine Learning models, feature scaling is extremely necessary, such as K-Nearest Neighbors [43], Neural Networks [44, 45] [46], and SVM [47] [48]. In object detection, data scaling has a crucial impact [49].

In [50], the authors compared six normalization methods in an SVM classifier to improve intrusion data, and the Min-Max Normalization showed the best performance. In [51], the authors evaluated eleven Machine Learning algorithms, including Logistic Regression, Linear Discriminant Analysis, K-Nearest Neighbors, Classification and Regression Trees, Naive Bayes, Support Vector Machine, XGBoost, Random Forest (RF), Gradient Boosting, AdaBoost, and Extra Tree, across six different data scaling methods: Normalization, Z-score Normalization, Min-Max Normalization, Max Normalization, Robust Scaler, and Quantile Transformer. However, they focused on only one dataset, the UCI - Heart Disease [52]. Despite that, their results are interesting; models based on Decision Trees showed the best performance without any scaling method, while K-Nearest Neighbors and Support Vector Machine achieved the lowest performance.

In a work on diabetes diagnosis using models such as Random Forest, Naive Bayes, K-Nearest Neighbors, Logistic Regression and Support Vector Machine [53] the researchers compared only three preprocessing scenarios: Normalization, Z-score Normalization, and no feature scaling. Their findings suggested that Random Forest, Naive Bayes, and Logistic Regression showed little sensitivity to these specific scaling approaches, and in some cases, their performance even worsened post-scaling.

Another problem is data leakage and the reproducibility of Machine Learning models. As shown in [36], many studies used preprocessing steps, such as feature scaling, on the entire dataset before splitting the data into training and test. Some studies also applied a scaling technique without knowing if that specific Machine Learning algorithm would benefit from it.

In [54] the authors demonstrate how feature scaling methods can impact the final model performance. Rather than relying on traditional scaling techniques, they propose a Generalized Logistic algorithm. This method showed particularly strong performance on datasets with a small number of samples, consistently outperforming models that used features scaled with Min-Max Normalization or Z-score Normalization.

The convergence of stochastic gradient descent is highly sensitive to the scale of input features, with studies such as [55] demonstrating that normalization is an effective method for improving convergence.

For leaf classification, a Neural Network called the Probabilistic Neural Network was used as a classifier and Min-Max normalization was applied [56]. For approval, Min-Max normalization was again used with a K-Nearest Neighbors classifier [57].

The impact of scaling and normalization techniques on NMR spectroscopic metabonomic datasets was examined in [58]. In a related study, [59] explored eight different normalization methods to enhance the biological interpretation of metabolomics data.

An in-depth study on the impact of data normalization on classification performance was presented in [41]. The authors evaluated fourteen normalization methods but employed only the K-Nearest Neighbor Classifier. Their findings indicate that normalization as a data preprocessing technique is affected by various data characteristics, such as features with differing statistical properties, the dominance of certain features, and the presence of outliers.

In an unsupervised task, [44] investigates the impact of the scaling of features on K-Means, highlighting its importance for datasets with features measured in different units. The study compares five scaling methods: Z-score Normalization, Min-Max Normalization, Percentile transformation, Maximum absolute scaling, and Robust Scaler. For cluster analysis, normalization helps prevent unwanted biases in external validation indices, such as the Jaccard, Fowlkes-Mallows, and Adjusted Rand Index, that may arise due to variations in the number of clusters or imbalances in class size distributions [60].

In the context of glaucoma detection based on a combination of texture and higher-order spectral features [61], the authors demonstrate that Z-score normalization, when paired with a Random Forest classifier, achieves superior performance compared to a Support Vector Machine.

A most recent study [62] evaluates five Machine Learning models, but focuses exclusively on classification problems and applies only two feature scaling techniques. However, most of these studies do not explain the rationale for choosing these techniques. In addition, some apply normalization to the entire dataset before splitting it into training and testing sets, leading to data leakage.

III Methodology

Our primary focus in this study is to ensure reproducibility. To that end, we use a well-known dataset for classification and regression tasks, sourced from the University of California, Irvine (UCI) Machine Learning Repository, due to its well-recognized and diverse collection of real-world datasets with standardized formats, that can easily be used for benchmarking and comparison between the different models chosen in this work.

III-A Dataset

Tables I and II provide detailed information about the datasets used in this study, including the number of features, instances, and classes. All features are numeric, represented as either int64 or float64 types. The classification tasks are either binary or multi-class.

| Dataset | Instances | Features | Classes |

|---|---|---|---|

| Breast Cancer Wisconsin (Diagnostic) | 569 | 30 | 2 |

| Dry Bean Dataset | 13611 | 16 | 7 |

| Glass Identification | 214 | 9 | 6 |

| Heart Disease | 303 | 13 | 2 |

| Iris | 150 | 4 | 3 |

| Letter Recognition | 20000 | 16 | 26 |

| MAGIC Gamma Telescope | 19020 | 10 | 2 |

| Rice (Cammeo and Osmancik) | 3810 | 7 | 2 |

| Wine | 178 | 13 | 3 |

| Dataset | Instances | Features |

|---|---|---|

| Abalone | 4177 | 8 |

| Air Quality | 9358 | 15 |

| Appliances Energy Prediction | 19735 | 28 |

| Concrete Compressive Strength | 1030 | 8 |

| Forest Fires | 517 | 12 |

| Real Estate Valuation | 414 | 6 |

| Wine Quality | 4898 | 11 |

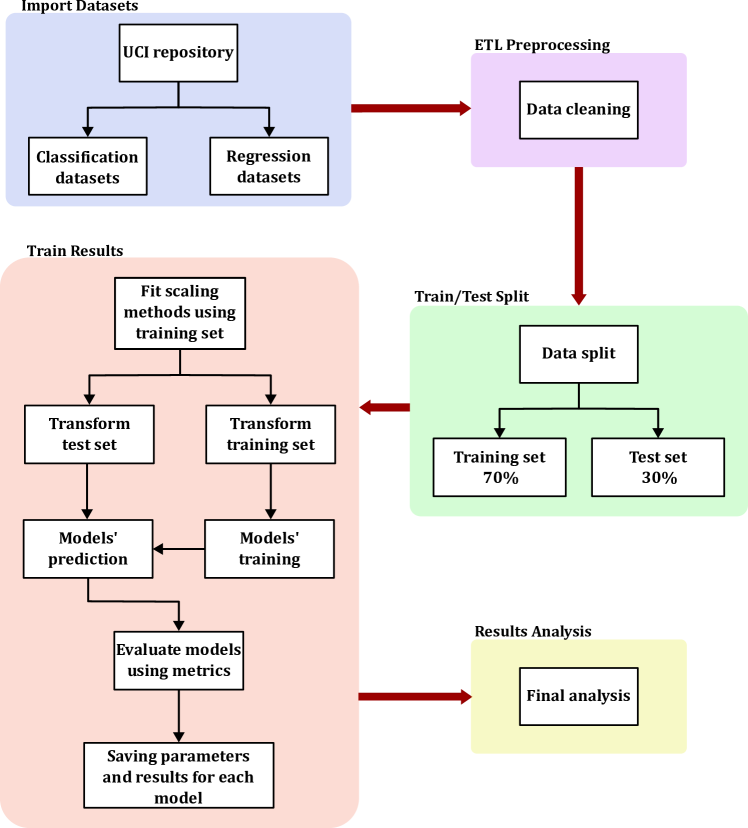

III-B Train and Test set

To preserve the integrity of our analysis and avoid data leakage, we split the dataset into training and test sets prior to applying any preprocessing steps, such as feature scaling. Following standard practice in machine learning [78, 79], 70% of the data is allocated to the training set and 30% to the test set. While determining the optimal train-test split is inherently challenging in machine learning [80], our choice reflects a balance between reproducibility and the practical constraints posed by the relatively small size of some datasets. This ratio allows for both effective model training and reliable performance evaluation.

Data leakage occurs when information from the test set inadvertently influences the training process, often leading to overly optimistic performance estimates. For instance, performing oversampling or other transformations before splitting the data can introduce overlap between the training and test sets, thereby compromising their independence. By strictly separating the data prior to any preprocessing, we maintain a clear boundary between the two sets, ensuring a robust and unbiased evaluation of the model performance [36].

III-C Feature Scaling Techniques

Several feature scaling techniques were investigated. For a subset of these, we leveraged the built-in implementations available in the scikit-learn library. However, other specialized or less common scaling methods required custom implementation, which we developed as classes within our Python experimental framework.

III-C1 Min-Max Normalization (MM)

III-C2 Max Normalization (MA)

III-C3 Z-score Normalization (ZSN)

Z-score normalization (also known as Standardization) transforms data to have a mean of 0 and a unit variance [27, 83]. The formula is given by:

| (3) |

where is the original feature value, is the mean of the feature, is the standard deviation of the feature, and is the scaled value.

III-C4 Variable Stability Scaling (VAST)

Variable stability scaling adjusts the data based on the stability of each feature. It is particularly useful for high-dimensional datasets and can be seen as a variation of standardization that incorporates the Coefficient of Variation (CV), , as a scaling factor [59]:

| (4) |

III-C5 Pareto Scaling (PS)

Pareto scaling is a normalization technique in which each feature is centered, by subtracting the mean, and then divided by the square root of its standard deviation [84]. It’s similar to Z-score normalization, but instead of dividing by the full standard deviation, it uses its square root as the scaling factor. Pareto scaling is particularly useful when the goal is to preserve relative differences between features while reducing the impact of large variances [85, 86].

| (5) |

III-C6 Mean Centered (MC)

Mean centering subtracts the mean of each feature from the data. This method is often used as a preprocessing step in Principal Component Analysis (PCA) [87].

| (6) |

III-C7 Robust Scaler (RS)

The robust scaler uses the median and interquartile range (IQR) to scale the data:

This method is robust to outliers [88].

III-C8 Quantile Transformation (QT)

Quantile transformation maps the data to a uniform or normal distribution. It is useful for non-linear data [88].

III-C9 Decimal Scaling Normalization (DS)

III-C10 Tanh Transformation (TT)

A variant of tanh normalization is used, in which the Hampel estimators are replaced by the mean and standard deviation of each feature [89]:

| (8) |

III-C11 Logistic Sigmoid Transformation (LS)

III-C12 Hyperbolic Tangent Transformation (HT)

III-D Machine Learning Algorithms

The following models were used:

-

•

Logistic Regression (LR): A simple and effective statistical model for binary classification that estimates class probabilities using the logistic function [92].

- •

- •

- •

- •

-

•

Naive Bayes (NB): Based on Bayes’ Theorem with the assumption of conditional independence between features, only works for classification [104].

- •

-

•

LightGBM (LGBM): A gradient boosting framework that uses histogram-based learning and leaf-wise tree growth, works for classification and regression [107].

- •

-

•

CatBoost: A gradient boosting model with native support for categorical features, works for regression and classification [110].

-

•

XGBoost: An efficient implementation of gradient boosting with regularization and optimized parallel computing, works for regression and classification [111].

- •

-

•

Attentive Interpretable Tabular Learning (TabNet): A deep learning model for tabular data that employs sequential attention to select relevant features at each decision step, works for classification and regression [115].

III-E Metrics

III-E1 Classification Metrics

-

•

Accuracy: one of the most widely used metrics to evaluate classification tasks. Measures the proportion of correctly predicted instances relative to the total number of predictions:

(11) where , , , and represent true positives, true negatives, false positives, and false negatives, respectively.

Despite its popularity, accuracy can be misleading when dealing with imbalanced datasets, as highlighted by [116]. However, given the characteristics of our datasets, comprising both binary and multiclass classification problems, we chose to include accuracy in our analysis, acknowledging its limitations in the presence of class imbalance.

III-E2 Regression Metrics

-

•

Mean Absolute Error (MAE): measures the average absolute difference between predicted and actual values, offering an intuitive sense of the magnitude of the error.

(12) -

•

Mean Squared Error (MSE): calculates the average squared differences between actual and predicted values, penalizing larger errors more heavily:

(13) -

•

Coefficient of Determination (): indicates the proportion of variance in the dependent variable that is predictable from the independent variables. A higher value indicates a better fit of the model to the data [117].

These regression metrics are standard for evaluating continuous output predictions [118].

III-E3 Computational Metrics

To complement the evaluation of predictive performance, we also assess:

-

•

Memory Usage: measure memory usage during the scaling step.

-

•

Training Time: the time taken to train each model on a given dataset.

-

•

Inference Time: the time required for the trained model to make predictions on unseen data.

These metrics are essential when evaluating models for real-time or resource-constrained environments.

III-F Experiment Workflow

The objective of this subsection is to document every step of the experimentation process to ensure full transparency and reproducibility. All datasets used in this work are publicly available and can be downloaded along with their respective train/test splits. All Machine Learning algorithms were applied using their default hyperparameters, as implemented in scikit-learn or the corresponding official libraries. For algorithms that support the random_state parameter, a fixed seed was used to ensure consistent results across runs. During each training session, a configuration file is generated to record the parameters used by each model, enabling complete traceability of the experimental setup.

The experiment begins with the import and cleaning of each dataset. Categorical target variables are encoded numerically, and column names are standardized using regular expressions. After that, each dataset is partitioned into training and testing subsets, which are saved both as .csv files and as Python dictionaries. Subsequently, for every dataset and Machine Learning model combination, various scaling techniques are applied. Each model is trained on the training set and evaluated on the test set, with performance metrics computed accordingly. In addition to the results, the model configuration and metadata - including training and inference times - are stored to ensure reproducibility and to facilitate further analysis.

III-G Python Script Descriptions

The following scripts were developed to automate and manage the experimental pipeline:

-

•

import_dataset.py: Imports datasets from the UCI repository and maps the appropriate target variable for each dataset.

-

•

etl_cleaning.py: Convert categorical and numerical variables as needed and cleans column names using regular expressions.

-

•

train_test_split.py: Splits the datasets into training and testing sets and saves them in both .csv and dictionary formats.

-

•

train_results.py: Train each Machine Learning model on every dataset using different scaling techniques. Calculate validation metrics and save both performance results and model configuration.

-

•

main.py: Serves as the main execution script that orchestrates all stages of the experiment.

III-H Source Code of this Experiments

For complete transparency and reproducibility, the source code, all experimental results, and detailed model parameters are publicly available in GitHub

The experiments were conducted on a system running a 64-bit Linux distribution, equipped with an AMD Ryzen™ 9 7900 processor (capable of boosting to 5.4 GHz) and 64 GB of RAM.

IV Experiments

This section presents the empirical results of our extensive experiments. We selected five representative models and three scaling techniques, along with a baseline without scaling, as this subset already allows us to draw meaningful conclusions and observe distinctions among models. The complete results are available in the Appendix A.

IV-A Impact on Validation Metrics

| Dataset | Model | NO | MA | ZSN | RS |

|---|---|---|---|---|---|

| Breast Cancer Wisconsin Diagnostic | KNN | 0.9591 | 0.9766 | 0.9591 | 0.9649 |

| LGBM | 0.9474 | 0.9474 | 0.9591 | 0.9591 | |

| MLP | 0.9649 | 0.9766 | 0.9766 | 0.9708 | |

| RF | 0.9708 | 0.9708 | 0.9708 | 0.9708 | |

| SVM | 0.9240 | 0.9825 | 0.9766 | 0.9825 | |

| Dry Bean | KNN | 0.7113 | 0.9141 | 0.9216 | 0.9190 |

| LGBM | 0.9275 | 0.9275 | 0.9263 | 0.9275 | |

| MLP | 0.2980 | 0.9101 | 0.9327 | 0.9314 | |

| RF | 0.9238 | 0.9226 | 0.9226 | 0.9226 | |

| SVM | 0.5803 | 0.9109 | 0.9263 | 0.9268 | |

| Glass Identification | KNN | 0.5846 | 0.6615 | 0.6308 | 0.6308 |

| LGBM | 0.8154 | 0.8154 | 0.8000 | 0.8308 | |

| MLP | 0.7077 | 0.7231 | 0.6923 | 0.6769 | |

| RF | 0.7538 | 0.7538 | 0.7692 | 0.7846 | |

| SVM | 0.6769 | 0.5077 | 0.6615 | 0.6462 | |

| Heart Disease | KNN | 0.4945 | 0.5495 | 0.5714 | 0.5275 |

| LGBM | 0.5275 | 0.5275 | 0.5275 | 0.5385 | |

| MLP | 0.3516 | 0.5495 | 0.5055 | 0.5385 | |

| RF | 0.5604 | 0.5604 | 0.5604 | 0.5604 | |

| SVM | 0.5055 | 0.5934 | 0.6154 | 0.5934 | |

| Iris | KNN | 1.0000 | 1.0000 | 1.0000 | 0.9556 |

| LGBM | 1.0000 | 1.0000 | 1.0000 | 1.0000 | |

| MLP | 1.0000 | 1.0000 | 1.0000 | 1.0000 | |

| RF | 1.0000 | 1.0000 | 1.0000 | 1.0000 | |

| SVM | 1.0000 | 1.0000 | 0.9778 | 0.9778 | |

| Letter Recognition | KNN | 0.9493 | 0.9480 | 0.9405 | 0.9158 |

| LGBM | 0.9640 | 0.9640 | 0.9637 | 0.9637 | |

| MLP | 0.9367 | 0.9280 | 0.9502 | 0.9545 | |

| RF | 0.9577 | 0.9577 | 0.9570 | 0.9580 | |

| SVM | 0.8135 | 0.8208 | 0.8488 | 0.8488 | |

| Magic Gamma Telescope | KNN | 0.8098 | 0.8254 | 0.8340 | 0.8340 |

| LGBM | 0.8803 | 0.8803 | 0.8792 | 0.8810 | |

| MLP | 0.8170 | 0.8677 | 0.8717 | 0.8777 | |

| RF | 0.8808 | 0.8808 | 0.8808 | 0.8808 | |

| SVM | 0.2976 | 0.5158 | 0.4341 | 0.3212 | |

| Rice Cammeo And Osmancik | KNN | 0.8775 | 0.9204 | 0.9143 | 0.9064 |

| LGBM | 0.9213 | 0.9213 | 0.9178 | 0.9160 | |

| MLP | 0.5468 | 0.9335 | 0.9318 | 0.9309 | |

| RF | 0.9265 | 0.9265 | 0.9265 | 0.9265 | |

| SVM | 0.9248 | 0.9309 | 0.9309 | 0.9274 | |

| Wine | KNN | 0.7407 | 0.9444 | 0.9630 | 0.9444 |

| LGBM | 0.9815 | 0.9815 | 0.9815 | 0.9815 | |

| MLP | 0.9815 | 1.0000 | 0.9815 | 0.9815 | |

| RF | 1.0000 | 1.0000 | 1.0000 | 1.0000 | |

| SVM | 0.5926 | 1.0000 | 0.9815 | 0.9815 |

| Dataset | Model | NO | MA | ZSN | RS |

|---|---|---|---|---|---|

| Abalone | KNN | 0.5164 | 0.4955 | 0.4662 | 0.4552 |

| LGBM | 0.5260 | 0.5260 | 0.5256 | 0.5190 | |

| MLP | 0.5245 | 0.5265 | 0.5578 | 0.5632 | |

| RF | 0.5244 | 0.5249 | 0.5234 | 0.5241 | |

| SVR | 0.5293 | 0.5257 | 0.5421 | 0.5398 | |

| Air Quality | KNN | 0.9995 | 0.9993 | 0.9994 | 0.9986 |

| LGBM | 0.9999 | 0.9999 | 0.9999 | 0.9999 | |

| MLP | 0.9985 | 1.0000 | 1.0000 | 0.9999 | |

| RF | 1.0000 | 1.0000 | 1.0000 | 1.0000 | |

| SVR | 0.9966 | 0.9619 | 0.9269 | 0.9188 | |

| Appliances Energy Prediction | KNN | 0.1681 | 0.2049 | 0.3279 | 0.2929 |

| LGBM | 0.4318 | 0.4318 | 0.4334 | 0.4192 | |

| MLP | 0.1598 | 0.1581 | 0.3144 | 0.2970 | |

| RF | 0.5122 | 0.5120 | 0.5122 | 0.5125 | |

| SVR | -0.1056 | -0.0275 | 0.0154 | -0.0096 | |

| Concrete Compressive Strength | KNN | 0.6770 | 0.6631 | 0.6714 | 0.7446 |

| LGBM | 0.9229 | 0.9229 | 0.9217 | 0.9226 | |

| MLP | 0.8030 | 0.7468 | 0.8725 | 0.8662 | |

| RF | 0.8896 | 0.8895 | 0.8891 | 0.8894 | |

| SVR | 0.2259 | 0.5394 | 0.6093 | 0.6987 | |

| Forest Fires | KNN | -0.0115 | -0.0470 | -0.0447 | -0.0345 |

| LGBM | -0.0246 | -0.0246 | -0.0188 | -0.0135 | |

| MLP | -0.0067 | 0.0057 | 0.0013 | 0.0121 | |

| RF | -0.1060 | -0.1101 | -0.1093 | -0.1058 | |

| SVR | -0.0257 | -0.0246 | -0.0244 | -0.0245 | |

| Real Estate Valuation | KNN | 0.6232 | 0.6232 | 0.6153 | 0.6348 |

| LGBM | 0.7001 | 0.7001 | 0.7120 | 0.7075 | |

| MLP | 0.6199 | 0.5608 | 0.6436 | 0.6894 | |

| RF | 0.7444 | 0.7449 | 0.7444 | 0.7444 | |

| SVR | 0.4897 | 0.5327 | 0.5788 | 0.5872 | |

| Wine Quality | KNN | 0.1221 | 0.3283 | 0.3475 | 0.3234 |

| LGBM | 0.4557 | 0.4557 | 0.4578 | 0.4602 | |

| MLP | 0.2500 | 0.3243 | 0.3872 | 0.3879 | |

| RF | 0.4985 | 0.4991 | 0.4982 | 0.4992 | |

| SVR | 0.1573 | 0.3185 | 0.3842 | 0.3799 |

| Dataset | Model | NO | MA | ZSN | RS |

|---|---|---|---|---|---|

| Abalone | KNN | 4.9107 | 5.1229 | 5.4200 | 5.5324 |

| LGBM | 4.8137 | 4.8137 | 4.8170 | 4.8846 | |

| MLP | 4.8285 | 4.8087 | 4.4900 | 4.4356 | |

| RF | 4.8295 | 4.8244 | 4.8392 | 4.8328 | |

| SVR | 4.7801 | 4.8158 | 4.6502 | 4.6734 | |

| Air Quality | KNN | 0.9109 | 1.2492 | 1.0231 | 2.3434 |

| LGBM | 0.1129 | 0.1129 | 0.1298 | 0.1291 | |

| MLP | 2.6090 | 0.0278 | 0.0110 | 0.1181 | |

| RF | 0.0131 | 0.0131 | 0.0131 | 0.0131 | |

| SVR | 5.7798 | 65.6306 | 125.8177 | 139.7765 | |

| Appliances Energy Prediction | KNN | 8570.5671 | 8192.0770 | 6924.2331 | 7285.3653 |

| LGBM | 5854.3508 | 5854.3508 | 5837.8269 | 5983.4385 | |

| MLP | 8656.1894 | 8673.7742 | 7063.7610 | 7243.1268 | |

| RF | 5025.6175 | 5028.0530 | 5025.4432 | 5022.6680 | |

| SVR | 11390.9162 | 10585.9003 | 10144.3973 | 10401.7762 | |

| Concrete Compressive Strength | KNN | 87.4024 | 91.1487 | 88.9093 | 69.1034 |

| LGBM | 20.8520 | 20.8520 | 21.1791 | 20.9335 | |

| MLP | 53.3124 | 68.5215 | 34.5083 | 36.1983 | |

| RF | 29.8643 | 29.8945 | 30.0114 | 29.9231 | |

| SVR | 209.4406 | 124.6258 | 105.7187 | 81.5215 | |

| Forest Fires | KNN | 8049.5193 | 8331.5751 | 8314.0348 | 8232.2422 |

| LGBM | 8153.9240 | 8153.9240 | 8107.4363 | 8065.4234 | |

| MLP | 8010.9478 | 7912.5614 | 7947.6005 | 7861.6344 | |

| RF | 8801.7409 | 8833.7029 | 8827.5833 | 8799.6126 | |

| SVR | 8162.5768 | 8154.0176 | 8152.2006 | 8152.5913 | |

| Real Estate Valuation | KNN | 63.0027 | 63.0182 | 64.3252 | 61.0770 |

| LGBM | 50.1547 | 50.1547 | 48.1590 | 48.9211 | |

| MLP | 63.5585 | 73.4388 | 59.6005 | 51.9328 | |

| RF | 42.7505 | 42.6657 | 42.7479 | 42.7405 | |

| SVR | 85.3424 | 78.1453 | 70.4354 | 69.0369 | |

| Wine Quality | KNN | 0.6406 | 0.4901 | 0.4761 | 0.4937 |

| LGBM | 0.3971 | 0.3971 | 0.3956 | 0.3939 | |

| MLP | 0.5472 | 0.4930 | 0.4471 | 0.4466 | |

| RF | 0.3659 | 0.3655 | 0.3661 | 0.3654 | |

| SVR | 0.6149 | 0.4973 | 0.4493 | 0.4525 |

| Dataset | Model | NO | MA | ZSN | RS |

|---|---|---|---|---|---|

| Abalone | KNN | 1.5673 | 1.6102 | 1.6555 | 1.6654 |

| LGBM | 1.5476 | 1.5476 | 1.5500 | 1.5632 | |

| MLP | 1.6159 | 1.5748 | 1.5318 | 1.4958 | |

| RF | 1.5590 | 1.5584 | 1.5617 | 1.5619 | |

| SVR | 1.5048 | 1.5111 | 1.4964 | 1.4990 | |

| Air Quality | KNN | 0.5506 | 0.6755 | 0.5862 | 0.8379 |

| LGBM | 0.0677 | 0.0677 | 0.0728 | 0.0731 | |

| MLP | 1.2505 | 0.0909 | 0.0698 | 0.1910 | |

| RF | 0.0167 | 0.0167 | 0.0166 | 0.0167 | |

| SVR | 0.8970 | 1.4802 | 1.9293 | 5.4424 | |

| Appliances Energy Prediction | KNN | 47.7696 | 45.5977 | 39.8189 | 41.5828 |

| LGBM | 39.0633 | 39.0633 | 39.0969 | 39.3198 | |

| MLP | 53.8080 | 54.1215 | 47.7318 | 47.7236 | |

| RF | 34.2701 | 34.2923 | 34.2876 | 34.2838 | |

| SVR | 48.9182 | 45.5442 | 43.4164 | 45.1344 | |

| Concrete Compressive Strength | KNN | 7.2301 | 7.0405 | 7.3319 | 6.4237 |

| LGBM | 3.0480 | 3.0480 | 3.0473 | 3.0197 | |

| MLP | 5.9685 | 6.4045 | 4.4758 | 4.5392 | |

| RF | 3.7512 | 3.7503 | 3.7608 | 3.7550 | |

| SVR | 11.6674 | 8.9978 | 8.1314 | 7.0217 | |

| Forest Fires | KNN | 21.5065 | 20.7837 | 21.0229 | 19.7818 |

| LGBM | 24.2821 | 24.2821 | 24.1922 | 23.9371 | |

| MLP | 21.2084 | 20.6192 | 24.5951 | 23.9833 | |

| RF | 24.2812 | 24.3738 | 24.3496 | 24.3443 | |

| SVR | 14.9474 | 14.9755 | 14.9655 | 14.9590 | |

| Real Estate Valuation | KNN | 5.4626 | 5.4435 | 5.6979 | 5.5819 |

| LGBM | 4.8106 | 4.8106 | 4.7284 | 4.8254 | |

| MLP | 5.3601 | 6.1126 | 5.4400 | 5.0138 | |

| RF | 4.3971 | 4.3951 | 4.4002 | 4.3916 | |

| SVR | 6.8824 | 6.2845 | 5.9805 | 5.8563 | |

| Wine Quality | KNN | 0.6243 | 0.5231 | 0.5259 | 0.5349 |

| LGBM | 0.4832 | 0.4832 | 0.4829 | 0.4833 | |

| MLP | 0.5692 | 0.5499 | 0.5187 | 0.5196 | |

| RF | 0.4366 | 0.4368 | 0.4370 | 0.4362 | |

| SVR | 0.6076 | 0.5453 | 0.5111 | 0.5142 |

As anticipated, one of the key findings from our classification experiments is the differential impact of feature scaling on model performance. Table III shows the accuracy results. Ensemble methods, including Random Forest and the gradient boosting family (LightGBM, CatBoost, XGBoost), demonstrated strong robustness by consistently achieving high validation performance irrespective of the preprocessing strategy or dataset. This inherent robustness offers a significant practical advantage, particularly in resource-constrained environments, since omitting the scaling step eliminates the associated memory and computational overhead. The Naive Bayes model showed similar scaling resistance, although its overall accuracy was not competitive with these top-tier ensembles. In stark contrast, the performance of Logistic Regression (LR), Support Vector Machines (SVM), K-Nearest Neighbor (KNN), TabNet, and Multi-Layer Perceptrons (MLP) was highly dependent on the choice of scaler, revealing their pronounced sensitivity to data preprocessing through significant fluctuations in performance.

A similar pattern of scaling sensitivity was observed in regression tasks when employing the regression counterparts of these classification models, as shows Tables IV, V and VI. This suggests that the underlying mathematical principles governing these model families lead to consistent behavior regarding data scaling, whether applied to classification or regression problems.

In general, our findings affirm the superior performance of the ensemble methods, Random Forest, LightGBM, CatBoost, and XGBoost, which is consistent with their established reputation as state-of-the-art models for tabular data. Their ability to achieve high accuracy regardless of the scaling technique applied explains why feature scaling is often considered an optional preprocessing step for these particular models in many Machine Learning projects. This practical consideration, combined with their predictive power, underscores their utility in a wide range of applications.

IV-B Impact on Training and Inference Times

| Dataset | Model | NO | MA | ZSN | RS |

|---|---|---|---|---|---|

| Breast Cancer Wisconsin Diagnostic | KNN | 0.0002 | 0.0002 | 0.0002 | 0.0002 |

| LGBM | 0.0213 | 0.0219 | 0.0275 | 0.0256 | |

| MLP | 0.1708 | 0.2929 | 0.1683 | 0.1824 | |

| RF | 0.0706 | 0.0707 | 0.0709 | 0.0706 | |

| SVM | 0.0016 | 0.0007 | 0.0009 | 0.0009 | |

| Dry Bean | KNN | 0.0008 | 0.0008 | 0.0008 | 0.0008 |

| LGBM | 0.2732 | 0.2759 | 0.2988 | 0.3025 | |

| MLP | 0.3396 | 3.2012 | 2.4228 | 3.8822 | |

| RF | 1.8703 | 1.8860 | 1.8872 | 1.8734 | |

| SVM | 0.1096 | 0.1932 | 0.1560 | 0.1470 | |

| Glass Identification | KNN | 0.0002 | 0.0002 | 0.0002 | 0.0004 |

| LGBM | 0.0422 | 0.0427 | 0.0345 | 0.0410 | |

| MLP | 0.2307 | 0.2416 | 0.2376 | 0.2372 | |

| RF | 0.0453 | 0.0456 | 0.0453 | 0.0453 | |

| SVM | 0.0010 | 0.0007 | 0.0008 | 0.0009 | |

| Heart Disease | KNN | 0.0003 | 0.0003 | 0.0003 | 0.0003 |

| LGBM | 0.0466 | 0.0450 | 0.0412 | 0.0439 | |

| MLP | 0.0198 | 0.1105 | 0.3423 | 0.3942 | |

| RF | 0.0449 | 0.0452 | 0.0451 | 0.0451 | |

| SVM | 0.0029 | 0.0009 | 0.0017 | 0.0012 | |

| Iris | KNN | 0.0004 | 0.0002 | 0.0002 | 0.0003 |

| LGBM | 0.0116 | 0.0119 | 0.0130 | 0.0118 | |

| MLP | 0.1074 | 0.1444 | 0.0947 | 0.1164 | |

| RF | 0.0382 | 0.0387 | 0.0386 | 0.0385 | |

| SVM | 0.0003 | 0.0004 | 0.0003 | 0.0003 | |

| Letter Recognition | KNN | 0.0010 | 0.0009 | 0.0009 | 0.0010 |

| LGBM | 1.1319 | 1.0970 | 1.1101 | 1.1380 | |

| MLP | 11.1298 | 22.0963 | 11.5231 | 13.5357 | |

| RF | 0.8526 | 0.8582 | 0.8632 | 0.8535 | |

| SVM | 0.8484 | 0.7841 | 0.7588 | 0.7936 | |

| Magic Gamma Telescope | KNN | 0.0065 | 0.0065 | 0.0065 | 0.0071 |

| LGBM | 0.0469 | 0.0460 | 0.0461 | 0.0468 | |

| MLP | 0.5592 | 4.8919 | 4.0988 | 3.4037 | |

| RF | 2.6338 | 2.6361 | 2.6471 | 2.6319 | |

| SVM | 0.0697 | 0.2742 | 0.2290 | 0.2240 | |

| Rice Cammeo And Osmancik | KNN | 0.0009 | 0.0010 | 0.0010 | 0.0010 |

| LGBM | 0.0294 | 0.0291 | 0.0309 | 0.0315 | |

| MLP | 0.0464 | 0.6241 | 0.1954 | 0.2864 | |

| RF | 0.2023 | 0.2032 | 0.2026 | 0.2034 | |

| SVM | 0.0093 | 0.0223 | 0.0201 | 0.0199 | |

| Wine | KNN | 0.0003 | 0.0003 | 0.0002 | 0.0003 |

| LGBM | 0.0164 | 0.0151 | 0.0167 | 0.0154 | |

| MLP | 0.2105 | 0.1658 | 0.0603 | 0.0696 | |

| RF | 0.0406 | 0.0408 | 0.0411 | 0.0406 | |

| SVM | 0.0008 | 0.0004 | 0.0005 | 0.0005 |

| Dataset | Model | NO | MA | ZSN | RS |

|---|---|---|---|---|---|

| Breast Cancer Wisconsin Diagnostic | KNN | 0.0025 | 0.0025 | 0.0025 | 0.0030 |

| LGBM | 0.0004 | 0.0004 | 0.0007 | 0.0007 | |

| MLP | 0.0001 | 0.0001 | 0.0001 | 0.0001 | |

| RF | 0.0013 | 0.0013 | 0.0013 | 0.0013 | |

| SVM | 0.0002 | 0.0002 | 0.0001 | 0.0001 | |

| Dry Bean | KNN | 0.0529 | 0.0536 | 0.0536 | 0.0594 |

| LGBM | 0.0101 | 0.0099 | 0.0099 | 0.0100 | |

| MLP | 0.0011 | 0.0012 | 0.0012 | 0.0012 | |

| RF | 0.0180 | 0.0181 | 0.0184 | 0.0179 | |

| SVM | 0.0255 | 0.1723 | 0.0892 | 0.0907 | |

| Glass Identification | KNN | 0.0012 | 0.0012 | 0.0012 | 0.0014 |

| LGBM | 0.0005 | 0.0006 | 0.0006 | 0.0006 | |

| MLP | 0.0001 | 0.0001 | 0.0001 | 0.0001 | |

| RF | 0.0013 | 0.0013 | 0.0013 | 0.0013 | |

| SVM | 0.0002 | 0.0002 | 0.0002 | 0.0002 | |

| Heart Disease | KNN | 0.0016 | 0.0016 | 0.0016 | 0.0020 |

| LGBM | 0.0007 | 0.0006 | 0.0006 | 0.0006 | |

| MLP | 0.0001 | 0.0001 | 0.0001 | 0.0001 | |

| RF | 0.0014 | 0.0014 | 0.0014 | 0.0015 | |

| SVM | 0.0003 | 0.0002 | 0.0002 | 0.0002 | |

| Iris | KNN | 0.0012 | 0.0010 | 0.0009 | 0.0012 |

| LGBM | 0.0004 | 0.0005 | 0.0004 | 0.0004 | |

| MLP | 0.0001 | 0.0001 | 0.0001 | 0.0001 | |

| RF | 0.0011 | 0.0011 | 0.0011 | 0.0011 | |

| SVM | 0.0001 | 0.0001 | 0.0001 | 0.0001 | |

| Letter Recognition | KNN | 0.8666 | 0.0819 | 0.0821 | 0.0870 |

| LGBM | 0.0611 | 0.0606 | 0.0610 | 0.0601 | |

| MLP | 0.0022 | 0.0022 | 0.0021 | 0.0021 | |

| RF | 0.0471 | 0.0473 | 0.0472 | 0.0479 | |

| SVM | 0.8372 | 1.3598 | 0.8540 | 0.8722 | |

| Magic Gamma Telescope | KNN | 0.1088 | 0.1550 | 0.1659 | 0.1635 |

| LGBM | 0.0024 | 0.0023 | 0.0024 | 0.0023 | |

| MLP | 0.0012 | 0.0012 | 0.0012 | 0.0012 | |

| RF | 0.0347 | 0.0349 | 0.0349 | 0.0341 | |

| SVM | 0.0214 | 0.1027 | 0.0838 | 0.0856 | |

| Rice Cammeo And Osmancik | KNN | 0.0139 | 0.0148 | 0.0149 | 0.0152 |

| LGBM | 0.0007 | 0.0007 | 0.0007 | 0.0008 | |

| MLP | 0.0003 | 0.0003 | 0.0002 | 0.0003 | |

| RF | 0.0045 | 0.0044 | 0.0045 | 0.0044 | |

| SVM | 0.0012 | 0.0083 | 0.0055 | 0.0056 | |

| Wine | KNN | 0.0011 | 0.0011 | 0.0011 | 0.0011 |

| LGBM | 0.0004 | 0.0004 | 0.0004 | 0.0004 | |

| MLP | 0.0001 | 0.0001 | 0.0001 | 0.0001 | |

| RF | 0.0011 | 0.0011 | 0.0011 | 0.0011 | |

| SVM | 0.0001 | 0.0001 | 0.0001 | 0.0001 |

| Dataset | Model | NO | MA | ZSN | RS |

|---|---|---|---|---|---|

| Abalone | KNN | 0.0010 | 0.0010 | 0.0010 | 0.0010 |

| LGBM | 0.0286 | 0.0288 | 0.0301 | 0.0307 | |

| MLP | 0.8414 | 1.1404 | 0.8932 | 1.2343 | |

| RF | 0.5945 | 0.6035 | 0.6003 | 0.5977 | |

| SVR | 0.1382 | 0.1383 | 0.1392 | 0.1440 | |

| Air Quality | KNN | 0.0032 | 0.0032 | 0.0031 | 0.0031 |

| LGBM | 0.0391 | 0.0395 | 0.0392 | 0.0396 | |

| MLP | 0.5701 | 3.3321 | 2.1864 | 1.8880 | |

| RF | 1.8482 | 1.8664 | 1.8547 | 1.8659 | |

| SVR | 0.6315 | 0.5742 | 0.4535 | 0.7643 | |

| Appliances Energy Prediction | KNN | 0.0004 | 0.0004 | 0.0004 | 0.0004 |

| LGBM | 0.0522 | 0.0532 | 0.0552 | 0.0545 | |

| MLP | 3.3408 | 12.6484 | 17.8381 | 16.1881 | |

| RF | 18.2580 | 18.3987 | 18.3023 | 18.2253 | |

| SVR | 3.6868 | 3.6063 | 3.6767 | 3.7207 | |

| Concrete Compressive Strength | KNN | 0.0004 | 0.0004 | 0.0004 | 0.0004 |

| LGBM | 0.0221 | 0.0232 | 0.0231 | 0.0237 | |

| MLP | 0.1335 | 0.7281 | 0.8502 | 0.8323 | |

| RF | 0.1397 | 0.1396 | 0.1390 | 0.1438 | |

| SVR | 0.0086 | 0.0093 | 0.0087 | 0.0091 | |

| Forest Fires | KNN | 0.0003 | 0.0003 | 0.0003 | 0.0003 |

| LGBM | 0.0115 | 0.0121 | 0.0119 | 0.0118 | |

| MLP | 0.2457 | 0.3816 | 0.4316 | 0.4330 | |

| RF | 0.0997 | 0.1006 | 0.1002 | 0.1063 | |

| SVR | 0.0025 | 0.0028 | 0.0027 | 0.0025 | |

| Real Estate Valuation | KNN | 0.0002 | 0.0002 | 0.0002 | 0.0002 |

| LGBM | 0.0090 | 0.0094 | 0.0094 | 0.0098 | |

| MLP | 0.1022 | 0.3386 | 0.3599 | 0.3778 | |

| RF | 0.0664 | 0.0666 | 0.0663 | 0.0689 | |

| SVR | 0.0019 | 0.0018 | 0.0023 | 0.0017 | |

| Wine Quality | KNN | 0.0022 | 0.0022 | 0.0023 | 0.0022 |

| LGBM | 0.0314 | 0.0313 | 0.0322 | 0.0334 | |

| MLP | 0.4713 | 1.3455 | 2.3311 | 1.6345 | |

| RF | 1.2844 | 1.2891 | 1.2968 | 1.3414 | |

| SVR | 0.3166 | 0.3181 | 0.3239 | 0.3213 |

| Dataset | Model | NO | MA | ZSN | RS |

|---|---|---|---|---|---|

| Abalone | KNN | 0.0034 | 0.0048 | 0.0042 | 0.0041 |

| LGBM | 0.0006 | 0.0007 | 0.0007 | 0.0007 | |

| MLP | 0.0003 | 0.0003 | 0.0003 | 0.0003 | |

| RF | 0.0110 | 0.0111 | 0.0110 | 0.0109 | |

| SVR | 0.0652 | 0.0652 | 0.0656 | 0.0666 | |

| Air Quality | KNN | 0.0171 | 0.0317 | 0.0338 | 0.0381 |

| LGBM | 0.0010 | 0.0009 | 0.0009 | 0.0009 | |

| MLP | 0.0005 | 0.0006 | 0.0006 | 0.0006 | |

| RF | 0.0169 | 0.0171 | 0.0170 | 0.0171 | |

| SVR | 0.2986 | 0.2676 | 0.1981 | 0.3599 | |

| Appliances Energy Prediction | KNN | 0.0222 | 0.0215 | 0.0225 | 0.0216 |

| LGBM | 0.0017 | 0.0017 | 0.0017 | 0.0017 | |

| MLP | 0.0012 | 0.0012 | 0.0012 | 0.0012 | |

| RF | 0.0694 | 0.0697 | 0.0700 | 0.0692 | |

| SVR | 1.8656 | 1.8341 | 1.7995 | 1.8159 | |

| Concrete Compressive Strength | KNN | 0.0009 | 0.0010 | 0.0010 | 0.0010 |

| LGBM | 0.0004 | 0.0004 | 0.0004 | 0.0004 | |

| MLP | 0.0001 | 0.0002 | 0.0001 | 0.0001 | |

| RF | 0.0033 | 0.0034 | 0.0032 | 0.0034 | |

| SVR | 0.0044 | 0.0043 | 0.0041 | 0.0041 | |

| Forest Fires | KNN | 0.0003 | 0.0006 | 0.0006 | 0.0005 |

| LGBM | 0.0003 | 0.0004 | 0.0003 | 0.0003 | |

| MLP | 0.0001 | 0.0001 | 0.0001 | 0.0001 | |

| RF | 0.0018 | 0.0018 | 0.0018 | 0.0018 | |

| SVR | 0.0011 | 0.0012 | 0.0011 | 0.0013 | |

| Real Estate Valuation | KNN | 0.0003 | 0.0003 | 0.0003 | 0.0003 |

| LGBM | 0.0003 | 0.0003 | 0.0003 | 0.0003 | |

| MLP | 0.0001 | 0.0001 | 0.0001 | 0.0001 | |

| RF | 0.0017 | 0.0017 | 0.0017 | 0.0017 | |

| SVR | 0.0007 | 0.0009 | 0.0007 | 0.0007 | |

| Wine Quality | KNN | 0.0048 | 0.0257 | 0.0369 | 0.0329 |

| LGBM | 0.0008 | 0.0008 | 0.0008 | 0.0009 | |

| MLP | 0.0004 | 0.0004 | 0.0004 | 0.0005 | |

| RF | 0.0143 | 0.0147 | 0.0145 | 0.0151 | |

| SVR | 0.1501 | 0.1527 | 0.1419 | 0.1422 |

The application of different scaling techniques had a variable impact on inference times across the evaluated models. Notably, Classification and Regression Trees (CART) exhibited exceptionally robust behavior, remaining unaffected across all scaling methods and datasets. While certain Machine Learning algorithms — such as K-Nearest Neighbors (KNN), Random Forest, Support Vector Machine (SVM), and Support Vector Regressor (SVR) — showed more evident sensitivity to the choice of scaling technique, this was not the norm. For the majority of models, the preprocessing step introduced only a small, non-uniform computational overhead — as illustrated in Tables VIII and X — which may become more significant in the context of large-scale datasets or time-sensitive applications requiring real-time inference.

IV-C Results of Memory Usage (kB)

The analysis revealed a clear distinction in memory consumption between scaling techniques. As expected, applying no scaling resulted in zero additional memory consumption, only the consumption to load the data. Among the actual scaling methods, the RobustScaler, StandardScaler, Tanh Transformer, and Hyperbolic Tangent were found to be the most memory-intensive. In contrast, the MaxAbsScaler, MinMaxScaler, and Decimal Scaler consistently registered the lowest memory usage as shown in Table XI.

| Dataset | NO | MA | ZSN | RS | |||

|---|---|---|---|---|---|---|---|

|

0.1875 | 175.7594 | 176.1266 | 384.9979 | |||

| Dry Bean | 1704.2750 | 2448.3812 | 2599.3156 | 2388.1666 | |||

|

0.1875 | 23.1984 | 26.1799 | 51.8070 | |||

| Heart Disease | 33.6609 | 67.2016 | 71.7602 | 122.0010 | |||

| Iris | 0.1875 | 8.7828 | 10.5523 | 20.2666 | |||

|

0.1875 | 3566.3000 | 3787.1906 | 2568.4697 | |||

|

0.1875 | 1552.0641 | 1552.2750 | 1552.7039 | |||

|

211.2688 | 358.2641 | 378.0189 | 297.6197 | |||

| Wine | 21.0234 | 40.3781 | 43.8195 | 73.9416 | |||

| Abalone | 0.1875 | 294.4004 | 294.5879 | 294.9893 | |||

| Air Quality | 880.1270 | 1294.5430 | 1373.1387 | 1233.9355 | |||

|

4164.2959 | 5894.4902 | 6261.5137 | 5832.6855 | |||

|

65.6523 | 137.7617 | 144.9668 | 94.3340 | |||

| Forest Fires | 43.2832 | 87.1992 | 92.4219 | 157.8330 | |||

|

22.2676 | 43.1992 | 46.3398 | 79.0088 | |||

| Wine Quality | 0.1875 | 624.3691 | 624.5879 | 625.0762 |

V Limitation

While this study provides a broad empirical analysis of feature scaling across various models and datasets, certain limitations should be acknowledged, which also open avenues for future research.

-

•

Hyperparameter Optimization: The Machine Learning models analyzed in this study were evaluated using their default hyperparameters, as outlined in the methodology. A comprehensive hyperparameter tuning process for each model–scaler–dataset combination was beyond the current scope; however, such optimization could potentially uncover different optimal pairings or further improve model performance.

-

•

Scope and Diversity of Datasets: Although 16 datasets were used for both classification and regression tasks, the findings could be further enhanced by incorporating an even wider array of datasets, particularly those with very high dimensionality, different types of underlying data distributions, or from more specialized domains.

-

•

Evaluation Metrics for Classification: The primary metric for classification tasks was accuracy. Although acknowledged as potentially misleading for imbalanced datasets, future work could incorporate a broader suite of metrics, such as F1 score, precision, recall AUC, or balanced precision, to provide a more nuanced understanding of performance, especially on datasets with skewed class distributions.

-

•

Dataset Size and Synthetic Data: For some of the smaller datasets utilized, the exploration of techniques such as synthetic data generation or data augmentation was not performed. Such methods could potentially improve the robustness and performance of certain models, representing a promising direction for further research.

-

•

Focus on Default Algorithm Implementations: The study relied on standard implementations of algorithms mainly from well-known libraries. Investigating variations or more recent advancements within these algorithm families could offer additional insight.

These limitations are common in empirical studies of this nature and primarily highlight areas where this already extensive work could be expanded in the future.

VI Conclusion

This comprehensive empirical study investigated the impact of 12 feature scaling techniques on 14 Machine Learning algorithms in 16 classification and regression datasets. Key findings reaffirmed the robustness of ensemble methods (e.g., Random Forest, gradient boosting family), which, along with Naive Bayes, largely maintained high performance irrespective of scaling. This offers efficiency gains by potentially avoiding preprocessing overhead. In stark contrast, models such as Logistic Regression, SVMs, MLPs, K-Nearest Neighbor, and TabNet demonstrated high sensitivity, with their performance critically dependent on scaler choice — a pattern consistent across both task types. Computational analysis also indicated that scaling choices can influence training/inference times and memory usage, with certain scalers being notably more resource-intensive.

This study contributes with one of the first systematic evaluations of such an extensive array of models, some less common models like TabNet, and scaling techniques — including transformations, e.g Tanh (TT) and Hyperbolic Tangent (HT), which are less commonly benchmarked as general-purpose scalers —, all within a unified Python framework. This is particularly relevant given that feature scaling is often applied in the literature without clear rationale, sometimes incorrectly before data splitting, which can lead to data leakage, or without verifying algorithm-specific benefits. By providing broad empirical evidence, our work offers clear guidance on how to mitigate these common issues, promoting informed scaling selection and more rigorous experimental design.

Future research could extend these insights by exploring extensive hyperparameter optimization, incorporating more diverse datasets, and utilizing a broader suite of evaluation metrics. Nevertheless, this study significantly contributes to a deeper, practical understanding of feature scaling’s role in Machine Learning.

References

- [1] L. Zhou, S. Pan, J. Wang, and A. V. Vasilakos, “Machine learning on big data: Opportunities and challenges,” Neurocomputing, vol. 237, pp. 350–361, 2017. [Online]. Available: https://www.sciencedirect.com/science/article/pii/S0925231217300577

- [2] X. Wu, V. Kumar, J. Ross Quinlan, J. Ghosh, Q. Yang, H. Motoda, G. J. McLachlan, A. Ng, B. Liu, P. S. Yu, Z.-H. Zhou, M. Steinbach, D. J. Hand, and D. Steinberg, “Top 10 algorithms in data mining,” Knowl. Inf. Syst., vol. 14, no. 1, p. 1–37, dec 2007. [Online]. Available: https://doi.org/10.1007/s10115-007-0114-2

- [3] K. Shailaja, B. Seetharamulu, and M. A. Jabbar, “Machine learning in healthcare: A review,” in 2018 Second International Conference on Electronics, Communication and Aerospace Technology (ICECA), 2018, pp. 910–914.

- [4] C. J. Haug and J. M. Drazen, “Artificial intelligence and machine learning in clinical medicine, 2023,” New England Journal of Medicine, vol. 388, no. 13, pp. 1201–1208, 2023. [Online]. Available: https://www.nejm.org/doi/full/10.1056/NEJMra2302038

- [5] Z. Obermeyer and E. J. Emanuel, “Predicting the future — big data, machine learning, and clinical medicine,” New England Journal of Medicine, vol. 375, no. 13, pp. 1216–1219, 2016. [Online]. Available: https://www.nejm.org/doi/full/10.1056/NEJMp1606181

- [6] Y. Wang, Y. Fan, P. Bhatt, and C. Davatzikos, “High-dimensional pattern regression using machine learning: From medical images to continuous clinical variables,” NeuroImage, vol. 50, no. 4, pp. 1519–1535, 2010. [Online]. Available: https://www.sciencedirect.com/science/article/pii/S1053811909013810

- [7] N. K. Ahmed, A. F. Atiya, N. E. Gayar, and H. E.-S. and, “An empirical comparison of machine learning models for time series forecasting,” Econometric Reviews, vol. 29, no. 5-6, pp. 594–621, 2010. [Online]. Available: https://doi.org/10.1080/07474938.2010.481556

- [8] R. P. Masini, M. C. Medeiros, and E. F. Mendes, “Machine learning advances for time series forecasting,” Journal of Economic Surveys, vol. 37, no. 1, pp. 76–111, 2023. [Online]. Available: https://onlinelibrary.wiley.com/doi/abs/10.1111/joes.12429

- [9] G. Bontempi, S. Ben Taieb, and Y.-A. Le Borgne, Machine Learning Strategies for Time Series Forecasting. Springer Berlin Heidelberg, 01 2013, vol. 138, pp. 62–77.

- [10] F. Sun, X. Meng, Y. Zhang, Y. Wang, H. Jiang, and P. Liu, “Agricultural product price forecasting methods: A review,” Agriculture, vol. 13, no. 9, 2023. [Online]. Available: https://www.mdpi.com/2077-0472/13/9/1671

- [11] A. Sharma, A. Jain, P. Gupta, and V. Chowdary, “Machine learning applications for precision agriculture: A comprehensive review,” IEEE Access, vol. 9, pp. 4843–4873, 2021.

- [12] M. A. Alsheikh, S. Lin, D. Niyato, and H.-P. Tan, “Machine learning in wireless sensor networks: Algorithms, strategies, and applications,” IEEE Communications Surveys & Tutorials, vol. 16, no. 4, pp. 1996–2018, 2014.

- [13] S. Wang, J. Huang, Z. Chen, Y. Song, W. Tang, H. Mao, W. Fan, H. Liu, X. Liu, D. Yin, and Q. Li, “Graph machine learning in the era of large language models (llms),” ACM Trans. Intell. Syst. Technol., May 2025, just Accepted. [Online]. Available: https://doi.org/10.1145/3732786

- [14] A. Vaswani, N. Shazeer, N. Parmar, J. Uszkoreit, L. Jones, A. N. Gomez, L. Kaiser, and I. Polosukhin, “Attention is all you need,” 2023. [Online]. Available: https://arxiv.org/abs/1706.03762

- [15] A. Conneau, H. Schwenk, L. Barrault, and Y. Lecun, “Very deep convolutional networks for text classification,” 2017. [Online]. Available: https://arxiv.org/abs/1606.01781

- [16] P. P. Shinde and S. Shah, “A review of machine learning and deep learning applications,” in 2018 Fourth International Conference on Computing Communication Control and Automation (ICCUBEA), 2018, pp. 1–6.

- [17] A. P. Singh, V. K. Mishra, and S. Akhter, “Investigating machine learning applications for fdsoi mos-based computer-aided design,” in 2023 9th International Conference on Signal Processing and Communication (ICSC), 2023, pp. 708–713.

- [18] E. R. Hruschka, R. J. G. B. Campello, A. A. Freitas, and A. C. Ponce Leon F. de Carvalho, “A survey of evolutionary algorithms for clustering,” IEEE Transactions on Systems, Man, and Cybernetics, Part C (Applications and Reviews), vol. 39, no. 2, pp. 133–155, 2009.

- [19] W. Liu, Z. Wang, X. Liu, N. Zeng, Y. Liu, and F. Alsaadi, “A survey of deep neural network architectures and their applications,” Neurocomputing, vol. 234, 12 2016.

- [20] G. Hinton, L. Deng, D. Yu, G. E. Dahl, A.-r. Mohamed, N. Jaitly, A. Senior, V. Vanhoucke, P. Nguyen, T. N. Sainath, and B. Kingsbury, “Deep neural networks for acoustic modeling in speech recognition: The shared views of four research groups,” IEEE Signal Processing Magazine, vol. 29, no. 6, pp. 82–97, 2012.

- [21] M. Little, Machine Learning for Signal Processing: Data Science, Algorithms, and Computational Statistics. Oxford University Press, 2019. [Online]. Available: https://books.google.com.br/books?id=mDGoDwAAQBAJ

- [22] D. D. and, “50 years of data science,” Journal of Computational and Graphical Statistics, vol. 26, no. 4, pp. 745–766, 2017. [Online]. Available: https://doi.org/10.1080/10618600.2017.1384734

- [23] D. Sculley, G. Holt, D. Golovin, E. Davydov, T. Phillips, D. Ebner, V. Chaudhary, M. Young, J.-F. Crespo, and D. Dennison, “Hidden technical debt in machine learning systems,” Advances in neural information processing systems, vol. 28, 2015.

- [24] A. Holzinger, P. Kieseberg, E. Weippl, and A. M. Tjoa, “Current advances, trends and challenges of machine learning and knowledge extraction: From machine learning to explainable ai,” in Machine Learning and Knowledge Extraction, A. Holzinger, P. Kieseberg, A. M. Tjoa, and E. Weippl, Eds. Cham: Springer International Publishing, 2018, pp. 1–8.

- [25] S. Kaufman, S. Rosset, and C. Perlich, “Leakage in data mining: formulation, detection, and avoidance,” in Proceedings of the 17th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, ser. KDD ’11. New York, NY, USA: Association for Computing Machinery, 2011, p. 556–563. [Online]. Available: https://doi.org/10.1145/2020408.2020496

- [26] S. García, J. Luengo, and F. Herrera, Data Preprocessing in Data Mining, ser. Intelligent Systems Reference Library. Cham: Springer International Publishing, 2015, vol. 72. [Online]. Available: https://doi.org/10.1007/978-3-319-10247-4

- [27] J. Han, M. Kamber, and J. Pei, Data Mining: Concepts and Techniques, ser. The Morgan Kaufmann Series in Data Management Systems. Elsevier Science, 2011. [Online]. Available: https://shop.elsevier.com/books/data-mining-concepts-and-techniques/han/978-0-12-381479-1

- [28] T. Hastie, R. Tibshirani, and J. Friedman, The Elements of Statistical Learning, 2nd ed. Springer New York, NY, 2009.

- [29] L. A. Shalabi, Z. Shaaban, and B. Kasasbeh, “Data mining: A preprocessing engine,” Journal of Computer Science, vol. 2, no. 9, pp. 735–739, Sep 2006. [Online]. Available: https://thescipub.com/abstract/jcssp.2006.735.739

- [30] D. M. Hawkins, “The problem of overfitting,” Journal of Chemical Information and Computer Sciences, vol. 44, no. 1, pp. 1–12, 2004, pMID: 14741005. [Online]. Available: https://doi.org/10.1021/ci0342472

- [31] O. E. Gundersen, K. Coakley, C. Kirkpatrick, and Y. Gil, “Sources of irreproducibility in machine learning: A review,” 2023. [Online]. Available: https://arxiv.org/abs/2204.07610

- [32] M. B. A. McDermott, S. Wang, N. Marinsek, R. Ranganath, M. Ghassemi, and L. Foschini, “Reproducibility in machine learning for health,” 2019. [Online]. Available: https://arxiv.org/abs/1907.01463

- [33] H. Semmelrock, S. Kopeinik, D. Theiler, T. Ross-Hellauer, and D. Kowald, “Reproducibility in machine learning-driven research,” 2023. [Online]. Available: https://arxiv.org/abs/2307.10320

- [34] B. Haibe-Kains, G. A. Adam, A. Hosny, F. Khodakarami, T. Shraddha, R. Kusko, S.-A. Sansone, W. Tong, R. D. Wolfinger, C. E. Mason, W. Jones, J. Dopazo, C. Furlanello, L. Waldron, B. Wang, C. McIntosh, A. Goldenberg, A. Kundaje, C. S. Greene, T. Broderick, M. M. Hoffman, J. T. Leek, K. Korthauer, W. Huber, A. Brazma, J. Pineau, R. Tibshirani, T. Hastie, J. P. A. Ioannidis, J. Quackenbush, and H. J. W. L. Aerts, “Transparency and reproducibility in artificial intelligence,” Nature, vol. 586, no. 7829, p. E14–E16, Oct. 2020. [Online]. Available: http://dx.doi.org/10.1038/s41586-020-2766-y

- [35] H. Semmelrock, T. Ross-Hellauer, S. Kopeinik, D. Theiler, A. Haberl, S. Thalmann, and D. Kowald, “Reproducibility in machine-learning-based research: Overview, barriers, and drivers,” AI Magazine, vol. 46, no. 2, p. e70002, 2025. [Online]. Available: https://onlinelibrary.wiley.com/doi/abs/10.1002/aaai.70002

- [36] S. Kapoor and A. Narayanan, “Leakage and the reproducibility crisis in machine-learning-based science,” Patterns, vol. 4, no. 9, p. 100804, 2023. [Online]. Available: https://www.sciencedirect.com/science/article/pii/S2666389923001599

- [37] R. Shwartz-Ziv and A. Armon, “Tabular data: Deep learning is not all you need,” Information Fusion, vol. 81, pp. 84–90, 2022. [Online]. Available: https://www.sciencedirect.com/science/article/pii/S1566253521002360

- [38] Y. Gorishniy, I. Rubachev, V. Khrulkov, and A. Babenko, “Revisiting deep learning models for tabular data,” in Proceedings of the 35th International Conference on Neural Information Processing Systems, ser. NIPS ’21. Red Hook, NY, USA: Curran Associates Inc., 2021.

- [39] R. Levin, V. Cherepanova, A. Schwarzschild, A. Bansal, C. B. Bruss, T. Goldstein, A. G. Wilson, and M. Goldblum, “Transfer learning with deep tabular models,” 2023. [Online]. Available: https://arxiv.org/abs/2206.15306

- [40] H.-J. Ye, S.-Y. Liu, H.-R. Cai, Q.-L. Zhou, and D.-C. Zhan, “A closer look at deep learning methods on tabular datasets,” 2025. [Online]. Available: https://arxiv.org/abs/2407.00956

- [41] D. Singh and B. Singh, “Investigating the impact of data normalization on classification performance,” Applied Soft Computing, vol. 97, p. 105524, 2020. [Online]. Available: https://www.sciencedirect.com/science/article/pii/S1568494619302947

- [42] K. Maharana, S. Mondal, and B. Nemade, “A review: Data pre-processing and data augmentation techniques,” Global Transitions Proceedings, vol. 3, no. 1, pp. 91–99, 2022, international Conference on Intelligent Engineering Approach(ICIEA-2022). [Online]. Available: https://www.sciencedirect.com/science/article/pii/S2666285X22000565

- [43] S. Aksoy and R. M. Haralick, “Feature normalization and likelihood-based similarity measures for image retrieval,” Pattern Recognition Letters, vol. 22, no. 5, pp. 563–582, 2001, image/Video Indexing and Retrieval. [Online]. Available: https://www.sciencedirect.com/science/article/pii/S0167865500001124

- [44] C. Wongoutong, “The impact of neglecting feature scaling in k-means clustering,” PLOS ONE, vol. 19, 12 2024.

- [45] R. F. de Mello and M. A. Ponti, Machine Learning: A Practical Approach on the Statistical Learning Theory. Cham: Springer International Publishing, 2018. [Online]. Available: https://doi.org/10.1007/978-3-319-94989-5

- [46] T. Jayalakshmi and A. Santhakumaran, “Statistical normalization and back propagation for classification,” International Journal of Computer Theory and Engineering, vol. 3, no. 1, pp. 1793–8201, 2011.

- [47] C.-W. Hsu, C.-C. Chang, C.-J. Lin et al., “A practical guide to support vector classification,” 2003.

- [48] J. Pan, Y. Zhuang, and S. Fong, “The impact of data normalization on stock market prediction: Using svm and technical indicators,” in Soft Computing in Data Science, M. W. Berry, A. Hj. Mohamed, and B. W. Yap, Eds. Singapore: Springer Singapore, 2016, pp. 72–88.

- [49] X. Wen, L. Shao, W. Fang, and Y. Xue, “Efficient feature selection and classification for vehicle detection,” IEEE Transactions on Circuits and Systems for Video Technology, vol. 25, no. 3, pp. 508–517, 2015.

- [50] W. li and Z. Liu, “A method of svm with normalization in intrusion detection,” Procedia Environmental Sciences, vol. 11, pp. 256–262, 2011, 2011 2nd International Conference on Challenges in Environmental Science and Computer Engineering (CESCE 2011). [Online]. Available: https://www.sciencedirect.com/science/article/pii/S1878029611008632

- [51] M. M. Ahsan, M. A. P. Mahmud, P. K. Saha, K. D. Gupta, and Z. Siddique, “Effect of data scaling methods on machine learning algorithms and model performance,” Technologies, vol. 9, no. 3, 2021. [Online]. Available: https://www.mdpi.com/2227-7080/9/3/52

- [52] A. Janosi, M. Steinbrunn, William ad Pfisterer, and R. Detrano, “Heart Disease,” UCI Machine Learning Repository, 1988, DOI: https://doi.org/10.24432/C52P4X.

- [53] D. U. Ozsahin, M. Taiwo Mustapha, A. S. Mubarak, Z. Said Ameen, and B. Uzun, “Impact of feature scaling on machine learning models for the diagnosis of diabetes,” in 2022 International Conference on Artificial Intelligence in Everything (AIE), 2022, pp. 87–94.

- [54] X. H. Cao, I. Stojkovic, and Z. Obradovic, “A robust data scaling algorithm to improve classification accuracies in biomedical data,” BMC Bioinformatics, vol. 17, no. 1, Sep 2016.

- [55] X. Wan, “Influence of feature scaling on convergence of gradient iterative algorithm,” Journal of Physics: Conference Series, vol. 1213, no. 3, p. 032021, jun 2019. [Online]. Available: https://dx.doi.org/10.1088/1742-6596/1213/3/032021

- [56] A. Kadir, L. E. Nugroho, A. Susanto, and P. I. Santosa, “Leaf classification using shape, color, and texture features,” CoRR, vol. abs/1401.4447, 2014. [Online]. Available: http://arxiv.org/abs/1401.4447

- [57] C.-M. Wang and Y.-F. Huang, “Evolutionary-based feature selection approaches with new criteria for data mining: A case study of credit approval data,” Expert Systems with Applications, vol. 36, pp. 5900–5908, 04 2009.

- [58] A. Craig, O. Cloarec, E. Holmes, J. Nicholson, and J. Lindon, “Scaling and normalization effects in nmr spectroscopic metabonomic data sets,” Analytical chemistry, vol. 78, pp. 2262–7, 05 2006.

- [59] R. van den Berg, H. Hoefsloot, J. Westerhuis, A. Smilde, and M. van der Werf, “Van den berg ra, hoefsloot hcj, westerhuis ja, smilde ak, van der werf mj.. centering, scaling, and transformations: improving the biological information content of metabolomics data. bmc genomics 7: 142-157,” BMC genomics, vol. 7, p. 142, 02 2006.

- [60] M. Z. Rodriguez, C. H. Comin, D. Casanova, O. M. Bruno, D. R. Amancio, L. d. F. Costa, and F. A. Rodrigues, “Clustering algorithms: A comparative approach,” PLOS ONE, vol. 14, no. 1, pp. 1–34, 01 2019. [Online]. Available: https://doi.org/10.1371/journal.pone.0210236

- [61] U. R. Acharya, S. Dua, X. Du, V. Sree S, and C. K. Chua, “Automated diagnosis of glaucoma using texture and higher order spectra features,” IEEE Transactions on Information Technology in Biomedicine, vol. 15, no. 3, pp. 449–455, 2011.

- [62] K. Mahmud Sujon, R. Binti Hassan, Z. Tusnia Towshi, M. A. Othman, M. Abdus Samad, and K. Choi, “When to use standardization and normalization: Empirical evidence from machine learning models and xai,” IEEE Access, vol. 12, pp. 135 300–135 314, 2024.

- [63] W. Wolberg, O. Mangasarian, N. Street, and W. Street, “Breast Cancer Wisconsin (Diagnostic),” UCI Machine Learning Repository, 1995, DOI: https://doi.org/10.24432/C5DW2B.

- [64] “Dry Bean Dataset,” UCI Machine Learning Repository, 2020, DOI: https://doi.org/10.24432/C50S4B.

- [65] B. German, “Glass Identification,” UCI Machine Learning Repository, 1987, DOI: https://doi.org/10.24432/C5WW2P.

- [66] R. A. Fisher, “Iris,” UCI Machine Learning Repository, 1988, DOI: https://doi.org/10.24432/C56C76.

- [67] D. Slate, “Letter Recognition,” UCI Machine Learning Repository, 1991, DOI: https://doi.org/10.24432/C5ZP40.

- [68] R. Bock, “MAGIC Gamma Telescope,” UCI Machine Learning Repository, 2007, DOI: https://doi.org/10.24432/C52C8B.

- [69] “Rice (Cammeo and Osmancik),” UCI Machine Learning Repository, 2019, DOI: https://doi.org/10.24432/C5MW4Z.

- [70] S. Aeberhard and M. Forina, “Wine,” UCI Machine Learning Repository, 1991, DOI: https://doi.org/10.24432/C5PC7J.

- [71] S. Vito, “Air Quality,” UCI Machine Learning Repository, 2008, DOI: https://doi.org/10.24432/C59K5F.

- [72] Warwick Nash, Tracy Sellers, Simon Talbot, Andrew Cawthorn, and Wes Ford, “Abalone,” UCI Machine Learning Repository, 1994, DOI: https://doi.org/10.24432/C55C7W.

- [73] L. Candanedo, “Appliances Energy Prediction,” UCI Machine Learning Repository, 2017, DOI: https://doi.org/10.24432/C5VC8G.

- [74] I.-C. Yeh, “Concrete Compressive Strength,” UCI Machine Learning Repository, 1998, DOI: https://doi.org/10.24432/C5PK67.

- [75] P. Cortez and A. Morais, “Forest Fires,” UCI Machine Learning Repository, 2007, DOI: https://doi.org/10.24432/C5D88D.

- [76] I.-C. Yeh, “Real Estate Valuation,” UCI Machine Learning Repository, 2018, DOI: https://doi.org/10.24432/C5J30W.

- [77] Paulo Cortez, A. Cerdeira, F. Almeida, T. Matos, and J. Reis, “Wine Quality,” UCI Machine Learning Repository, 2009, DOI: https://doi.org/10.24432/C56S3T.

- [78] S. Raschka, “Model evaluation, model selection, and algorithm selection in machine learning,” 2020. [Online]. Available: https://arxiv.org/abs/1811.12808

- [79] D. Wilimitis and C. G. Walsh, “Practical considerations and applied examples of cross-validation for model development and evaluation in health care: Tutorial,” JMIR AI, vol. 2, p. e49023, Dec 2023. [Online]. Available: https://ai.jmir.org/2023/1/e49023

- [80] V. R. Joseph, “Optimal ratio for data splitting,” Statistical Analysis and Data Mining: An ASA Data Science Journal, vol. 15, no. 4, pp. 531–538, 2022. [Online]. Available: https://onlinelibrary.wiley.com/doi/abs/10.1002/sam.11583

- [81] A. Jain, K. Nandakumar, and A. Ross, “Score normalization in multimodal biometric systems,” Pattern Recognition, vol. 38, no. 12, pp. 2270–2285, 2005. [Online]. Available: https://www.sciencedirect.com/science/article/pii/S0031320305000592

- [82] K. Cabello-Solorzano, I. Ortigosa de Araujo, M. Peña, L. Correia, and A. J. Tallón-Ballesteros, “The impact of data normalization on the accuracy of machine learning algorithms: A comparative analysis,” in 18th International Conference on Soft Computing Models in Industrial and Environmental Applications (SOCO 2023), P. García Bringas, H. Pérez García, F. J. Martínez de Pisón, F. Martínez Álvarez, A. Troncoso Lora, Á. Herrero, J. L. Calvo Rolle, H. Quintián, and E. Corchado, Eds. Cham: Springer Nature Switzerland, 2023, pp. 344–353.

- [83] A. Reverter, W. Barris, S. McWilliam, K. A. Byrne, Y. H. Wang, S. H. Tan, N. Hudson, and B. P. Dalrymple, “Validation of alternative methods of data normalization in gene co-expression studies,” Bioinformatics, vol. 21, no. 7, pp. 1112–1120, 11 2004. [Online]. Available: https://doi.org/10.1093/bioinformatics/bti124

- [84] I. Noda, “Scaling techniques to enhance two-dimensional correlation spectra,” Journal of Molecular Structure, vol. 883-884, pp. 216–227, 2008, progress in two-dimensional correlation spectroscopy. [Online]. Available: https://www.sciencedirect.com/science/article/pii/S0022286007008411

- [85] L. Eriksson, E. Johansson, S. Kettapeh-Wold, and S. Wold, Introduction to Multi- and Megavariate Data Analysis Using Projection Methods (PCA & PLS). Umetrics AB, 1999. [Online]. Available: https://books.google.com.br/books?id=3aW8GwAACAAJ

- [86] H. Kubinyi, G. Folkers, and Y. Martin, 3D QSAR in Drug Design: Recent Advances, ser. Three-Dimensional Quantitative Structure Activity Relationships. Springer Netherlands, 2006. [Online]. Available: https://books.google.com.br/books?id=8GnrBwAAQBAJ

- [87] D. Kim and K. You, “Pca, svd, and centering of data,” 2024. [Online]. Available: https://arxiv.org/abs/2307.15213

- [88] V. N. G. Raju, K. P. Lakshmi, V. M. Jain, A. Kalidindi, and V. Padma, “Study the influence of normalization/transformation process on the accuracy of supervised classification,” in 2020 Third International Conference on Smart Systems and Inventive Technology (ICSSIT), 2020, pp. 729–735.

- [89] R. Snelick, U. Uludag, A. Mink, M. Indovina, and A. Jain, “Large-scale evaluation of multimodal biometric authentication using state-of-the-art systems.” IEEE transactions on pattern analysis and machine intelligence, vol. 27, pp. 450–5, 04 2005.

- [90] S. Theodoridis and K. Koutroumbas, Pattern Recognition, Fourth Edition, 4th ed. USA: Academic Press, Inc., 2008.

- [91] K. Priddy and P. Keller, Artificial Neural Networks: An Introduction, ser. SPIE tutorial texts. SPIE Press, 2005. [Online]. Available: https://books.google.com.br/books?id=BrnHR7esWmkC

- [92] D. W. Hosmer, S. Lemeshow, and R. X. Sturdivant, Applied Logistic Regression, 3rd ed. Wiley, 2013.

- [93] G. James, D. Witten, T. Hastie, and R. Tibshirani, An Introduction to Statistical Learning: With Applications in R. Springer, 2013.

- [94] X. Su, X. Yan, and C.-L. Tsai, “Linear regression,” WIREs Computational Statistics, vol. 4, no. 3, pp. 275–294, 2012. [Online]. Available: https://wires.onlinelibrary.wiley.com/doi/abs/10.1002/wics.1198

- [95] C. Cortes and V. Vapnik, “Support-vector networks,” Machine learning, vol. 20, no. 3, pp. 273–297, 1995.

- [96] A. Ben-Hur and J. Weston, “A user’s guide to support vector machines,” Methods in molecular biology (Clifton, N.J.), vol. 609, pp. 223–39, 01 2010.

- [97] H. Drucker, C. J. C. Burges, L. Kaufman, A. Smola, and V. Vapnik, “Support vector regression machines,” in Advances in Neural Information Processing Systems, M. Mozer, M. Jordan, and T. Petsche, Eds., vol. 9. MIT Press, 1996. [Online]. Available: https://proceedings.neurips.cc/paper_files/paper/1996/file/d38901788c533e8286cb6400b40b386d-Paper.pdf

- [98] Y. LeCun, Y. Bengio, and G. Hinton, “Deep learning,” Nature, vol. 521, pp. 436–44, 05 2015.

- [99] K. He, X. Zhang, S. Ren, and J. Sun, “Delving deep into rectifiers: Surpassing human-level performance on imagenet classification,” 2015. [Online]. Available: https://arxiv.org/abs/1502.01852

- [100] D. P. Kingma and J. Ba, “Adam: A method for stochastic optimization,” 2017. [Online]. Available: https://arxiv.org/abs/1412.6980

- [101] L. Breiman, “Random forests,” Machine Learning, vol. 45, pp. 5–32, 10 2001.

- [102] A. Parmar, R. Katariya, and V. Patel, “A review on random forest: An ensemble classifier,” in International Conference on Intelligent Data Communication Technologies and Internet of Things (ICICI) 2018, J. Hemanth, X. Fernando, P. Lafata, and Z. Baig, Eds. Cham: Springer International Publishing, 2019, pp. 758–763.

- [103] C. Zhang and Y. Ma, Ensemble machine learning: Methods and applications. Springer New York, 01 2012.

- [104] I. Rish, “An empirical study of the naïve bayes classifier,” IJCAI 2001 Work Empir Methods Artif Intell, vol. 3, 01 2001.

- [105] L. Breiman, J. Friedman, C. Stone, and R. Olshen, Classification and Regression Trees. Taylor & Francis, 1984.

- [106] J. Singh Kushwah, A. Kumar, S. Patel, R. Soni, A. Gawande, and S. Gupta, “Comparative study of regressor and classifier with decision tree using modern tools,” Materials Today: Proceedings, vol. 56, pp. 3571–3576, 2022, first International Conference on Design and Materials. [Online]. Available: https://www.sciencedirect.com/science/article/pii/S2214785321076574

- [107] G. Ke, Q. Meng, T. Finley, T. Wang, W. Chen, W. Ma, Q. Ye, and T.-Y. Liu, “Lightgbm: A highly efficient gradient boosting decision tree,” in Advances in Neural Information Processing Systems, I. Guyon, U. V. Luxburg, S. Bengio, H. Wallach, R. Fergus, S. Vishwanathan, and R. Garnett, Eds., vol. 30. Curran Associates, Inc., 2017. [Online]. Available: https://proceedings.neurips.cc/paper/2017/file/6449f44a102fde848669bdd9eb6b76fa-Paper.pdf

- [108] Y. Freund and R. E. Schapire, “A decision-theoretic generalization of on-line learning and an application to boosting,” Journal of Computer and System Sciences, vol. 55, no. 1, pp. 119–139, 1997. [Online]. Available: https://www.sciencedirect.com/science/article/pii/S002200009791504X

- [109] R. Schapire, “The boosting approach to machine learning: An overview,” Nonlin. Estimat. Classif. Lect. Notes Stat, vol. 171, pp. 149–171, 01 2002.

- [110] A. V. Dorogush, A. Gulin, G. Gusev, N. Kazeev, L. O. Prokhorenkova, and A. Vorobev, “Fighting biases with dynamic boosting,” CoRR, vol. abs/1706.09516, 2017. [Online]. Available: http://arxiv.org/abs/1706.09516

- [111] T. Chen and C. Guestrin, “Xgboost: A scalable tree boosting system,” in Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, ser. KDD ’16. ACM, Aug. 2016, p. 785–794. [Online]. Available: http://dx.doi.org/10.1145/2939672.2939785

- [112] T. Cover and P. Hart, “Nearest neighbor pattern classification,” IEEE Transactions on Information Theory, vol. 13, no. 1, pp. 21–27, 1967.

- [113] S. Zhang, X. Li, M. Zong, X. Zhu, and R. Wang, “Efficient knn classification with different numbers of nearest neighbors,” IEEE Transactions on Neural Networks and Learning Systems, vol. 29, no. 5, pp. 1774–1785, 2018.

- [114] S. Zhang, X. Li, M. Zong, X. Zhu, and D. Cheng, “Learning k for knn classification,” ACM Trans. Intell. Syst. Technol., vol. 8, no. 3, Jan. 2017. [Online]. Available: https://doi.org/10.1145/2990508

- [115] S. O. Arik and T. Pfister, “Tabnet: Attentive interpretable tabular learning,” 2020. [Online]. Available: https://arxiv.org/abs/1908.07442

- [116] D. M. Powers, “Evaluation: from precision, recall and f-measure to roc, informedness, markedness and correlation,” Journal of Machine Learning Technologies, vol. 2, no. 1, pp. 37–63, 2011.

- [117] N. J. D. NAGELKERKE, “A note on a general definition of the coefficient of determination,” Biometrika, vol. 78, no. 3, pp. 691–692, 09 1991. [Online]. Available: https://doi.org/10.1093/biomet/78.3.691

- [118] D. Chicco and G. Jurman, “The coefficient of determination r-squared is more informative than smape, mae, mape, mse and rmse in regression analysis evaluation,” PeerJ Computer Science, vol. 7, p. e623, 2021.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/eb440438-e706-4b4f-bcb9-196c22bd7e2a/joao.png) |

João Manoel Herrera Pinheiro Received the B.Sc. degree in Mechatronics Engineering. Currently pursuing an M.Sc. degree at the University of São Paulo, with a focus on Computer Vision and Machine Learning. Currently enrolled in two specialization programs: Didactic-Pedagogical Processes for Distance Learning at UNIVESP and Software Engineering at USP. Serves as a reviewer for international journals such as Nature Scientific Data, Artificial Intelligence (IBERAMIA) and the Journal of the Brazilian Computer Society. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/eb440438-e706-4b4f-bcb9-196c22bd7e2a/suzana.jpg) |

Suzana Vilas Boas de Oliveira Suzana Vilas Boas is currently pursuing a Ph.D. degree in the Signal Processing and Instrumentation Program at the University of São Paulo (EESC - USP) with a research focus on the development of a non-invasive motor imagery-based Brain-Computer Interface (MI-BCI) for the interaction with 3D images and automatic wheelchairs. She received her B.Sc. degree in Electrical Engineering, emphasis on Electronics and special studies in Biomedical Engineering, from the same institution. Her research interests include neuroscience, neuroplasticity, brain-computer interfaces, and artificial intelligence. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/eb440438-e706-4b4f-bcb9-196c22bd7e2a/thiago.png) |

Thiago Henrique Segreto Silva, Thiago H. Segreto received the B.S. degree in Mechatronics Engineering from the University of São Paulo, São Carlos, Brazil, in 2021, and the M.Sc. degree in Robotics with a specialization in Computer Vision from the same institution in 2025. He is currently pursuing a Ph.D. degree in Robotics, focusing on perception systems integrated with reinforcement learning. His research interests include robotic perception, deep reinforcement learning, computer vision, and autonomous systems, aiming at the development of intelligent, adaptive robots capable of operating effectively in complex, dynamic environments. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/eb440438-e706-4b4f-bcb9-196c22bd7e2a/pedro.jpeg) |

Pedro Saraiva is currently pursuing a Bachelor of Engineering degree in Mechatronics Engineering at the University of São Paulo (USP). He is an undergraduate researcher within USP’s Mobile Robotics Group, where he contributes to advancements in the field of mobile robotics, with a focus on applications for oil platforms. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/eb440438-e706-4b4f-bcb9-196c22bd7e2a/enzo.jpeg) |

Enzo Ferreira de Souza is currently pursuing a Bachelor of Engineering degree in Mechatronics Engineering at the University of São Paulo (USP). He is an undergraduate researcher within USP’s Mobile Robotics Group, where he contributes to advancements in the field of mobile robotics, with a focus on applications for oil platforms. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/eb440438-e706-4b4f-bcb9-196c22bd7e2a/x2.jpg) |