The menu complexity of “one-and-a-half-dimensional” mechanism design

Abstract

We study the menu complexity of optimal and approximately-optimal auctions in the context of the “FedEx” problem, a so-called “one-and-a-half-dimensional” setting where a single bidder has both a value and a deadline for receiving an item [FGKK16]. The menu complexity of an auction is equal to the number of distinct (allocation, price) pairs that a bidder might receive [HN13]. We show the following when the bidder has $n$ possible deadlines:

• Exponential menu complexity is necessary to be exactly optimal: There exist instances where the optimal mechanism has menu complexity $2^n - 1$. This exactly matches the upper bound provided by Fiat et al.’s algorithm, and resolves one of their open questions [FGKK16].

• Fully polynomial menu complexity is necessary and sufficient for approximation: For all instances, there exists a mechanism guaranteeing a multiplicative $(1-\varepsilon)$-approximation to the optimal revenue with menu complexity $O(n^{3/2}\sqrt{\min\{n/\varepsilon, \ln(v_{\max})\}/\varepsilon}) = O(n^2/\varepsilon)$, where $v_{\max}$ denotes the largest value in the support of integral distributions.

• There exist instances where any mechanism guaranteeing a multiplicative $(1 - O(1/n^2))$-approximation to the optimal revenue requires menu complexity $\Omega(n^2)$.

Our main technique is the polygon approximation of concave functions [Rot92], and our results here should be of independent interest. We further show how our techniques can be used to resolve an open question of [DW17] on the menu complexity of optimal auctions for a budget-constrained buyer.

1 Introduction

It is by now quite well understood that optimal mechanisms are far from simple: they may be randomized [Tha04, BCKW10, HN13], behave non-monotonically [HR15, RW15], and be computationally hard to find [CDW13, DDT14, CDP+14, Rub16]. To cope with this, much recent attention has shifted to the design of simple, but approximately optimal mechanisms (e.g. [CHK07, CHMS10, HN12, BILW14]). However, the majority of these works take a binary view on simplicity, developing simple mechanisms that guarantee constant-factor approximations. Only recently have researchers started to explore the tradeoff space between simplicity and optimality through the lens of menu complexity.

Hart and Nisan first proposed the menu complexity as one quantitative measure of simplicity, which captures the number of different outcomes that a buyer might see when participating in a mechanism [HN13]. For example, the mechanism that offers only the grand bundle of all items at price $p$ (or nothing at price $0$) has menu complexity $1$. The mechanism that offers any single item at price $p$ (or nothing at price $0$) has menu complexity $n$, the number of items, and randomized mechanisms could have infinite menu complexity.

Still, all results to date regarding menu complexity have really been more qualitative than quantitative. For example, only recently has the state of the art been able to show that for a single additive bidder with independent values for multiple items and all $\varepsilon > 0$, the menu complexity required for a $(1-\varepsilon)$-approximation is finite [BGN17] (and even reaching this point was quite non-trivial). On the quantitative side, the best known positive results for a single additive or unit-demand bidder with independent item values require menu complexity exp(n) for a $(1-\varepsilon)$-approximation, but the best known lower bounds have yet to rule out that poly(n) menu complexity suffices for a $(1-\varepsilon)$-approximation in either case. In this context, our work provides the first nearly-tight quantitative bounds on menu complexity in any multi-dimensional setting.

1.1 One-and-a-half dimensional mechanism design

The setting we consider is the so-called “FedEx Problem,” first studied in [FGKK16]. Here, there is a single bidder with a value $v$ for the item and a deadline $d$ for receiving it, and the pair $(v, d)$ is drawn from an arbitrarily correlated distribution where the number of possible deadlines is finite ($n$). The buyer’s value for receiving the item by her deadline is $v$, and her value for receiving the item after her deadline (or not at all) is $0$. While technically a two-dimensional problem, optimal mechanisms for the FedEx problem don’t suffer the same undesirable properties as “truly” two-dimensional problems. Still, the space of optimal mechanisms is considerably richer than in single-dimensional problems (hence the colloquial term “one-and-a-half dimensional”). More specifically, while the optimal mechanism might be randomized, it has menu complexity at most $2^n - 1$, and there is an inductive closed-form solution describing it. Additionally, there is a natural condition on each $F_i$ (the marginal distribution of $v$ conditioned on $d = i$) guaranteeing that the optimal mechanism is deterministic (and therefore has menu complexity at most $n$).¹

¹ This condition is called “decreasing marginal revenues,” and is satisfied by distributions with CDF $F$ and PDF $f$ such that $v f(v) - (1 - F(v))$ is monotone non-decreasing.

A number of recent (and not-so-recent) works examine similar settings such as when the buyer has a value and budget [LR96, CG00, CMM11, DW17], or a value and a capacity [DHP17], and observe similar structure on the optimal mechanism. Such settings are quickly gaining interest within the algorithmic mechanism design community as they are rich enough for optimal mechanisms to be highly non-trivial, but not quite so chaotic as truly multi-dimensional settings.

1.2 Our results

We study the menu complexity of optimal and approximately optimal mechanisms for the FedEx problem. Our first result proves that the upper bound on the menu complexity of the optimal mechanism provided by Fiat et al.’s algorithm is exactly tight:

Theorem 1.1.

For all $n$, there exist instances of the FedEx problem on $n$ days where the menu complexity of the optimal mechanism is $2^n - 1$.

From here, we turn to approximation and prove our main results. First, we show that fully polynomial menu complexity suffices for a $(1-\varepsilon)$-approximation. The guarantee below is always $O(n^2/\varepsilon)$, but is often improved for specific instances. Below, if the FedEx instance happens to have integral support and the largest value is $v_{\max}$, we can get an improved bound (but if the support is continuous or otherwise non-integral, we can just take the $n/\varepsilon$ term instead).²

² Actually, our bounds can be improved to replace $v_{\max}$ with many other quantities that are always , and will still be well-defined for continuous distributions; more on this in Section 4.

Theorem 1.2.

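For all instances of the FedEx problem on $n$ days, there exists a mechanism of menu complexity $O(n^{3/2}\sqrt{\min\{n/\varepsilon, \ln(v_{\max})\}/\varepsilon}) = O(n^2/\varepsilon)$ guaranteeing a $(1-\varepsilon)$-approximation to the optimal revenue.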

In Theorem 1.2, observe that for any fixed instance, as $\varepsilon \to 0$, our bound grows like $O(n^{3/2}\sqrt{\ln(v_{\max})/\varepsilon})$ (because eventually $n/\varepsilon$ will exceed $\ln(v_{\max})$). Similarly, our bound is always $O(n^2/\varepsilon)$ for any $v_{\max}$. Both of these dependencies are provably tight for our approach (discussed shortly in Section 1.3), and in general tight up to a factor of $n$.³

³ The gap of $n$ comes as our upper bound approach requires that we lose at most $\varepsilon/n$ “per day,” while our lower bound approach shows that any mechanism with lower menu complexity loses at least $\varepsilon$ on some day.
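As a rough numerical illustration of these two regimes, the sketch below evaluates the expression $n^{3/2}\sqrt{\min\{n/\varepsilon, \ln(v_{\max})\}/\varepsilon}$ with all big-O constants dropped (so only relative sizes are meaningful) and compares it with $n^2/\varepsilon$. The function names are hypothetical and serve only this illustration.

```python
import math


def theorem_1_2_bound(n: int, eps: float, v_max: float) -> float:
    """n^{3/2} * sqrt(min(n/eps, ln v_max) / eps), with big-O constants dropped."""
    return n ** 1.5 * math.sqrt(min(n / eps, math.log(v_max)) / eps)


def v_max_free_bound(n: int, eps: float) -> float:
    """n^2 / eps: the value of the expression when the n/eps branch of the min is active."""
    return n ** 2 / eps


if __name__ == "__main__":
    n, v_max = 10, 1e6
    for eps in (0.5, 0.1, 0.01, 0.001):
        ln_branch = n ** 1.5 * math.sqrt(math.log(v_max) / eps)
        print(f"eps={eps}: bound={theorem_1_2_bound(n, eps, v_max):.1f}, "
              f"ln-branch={ln_branch:.1f}, n^2/eps={v_max_free_bound(n, eps):.1f}")
```

Because the minimum never exceeds $n/\varepsilon$, the first function is never larger than the second. Once $n/\varepsilon$ passes $\ln(v_{\max})$, the logarithmic branch takes over.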

Theorem 1.3.

For all , there exists an instance of the FedEx problem on days with , such that the menu complexity of every -optimal mechanism is .

1.3 Our techniques

We’ll provide an intuitive proof overview for each result in the corresponding technical section, but we briefly want to highlight one aspect of our approach that should be of independent interest.

It turns out that the problem of revenue maximization with bounded menu complexity really boils down to a question of how well piece-wise linear functions with bounded number of segments can approximate concave functions (we won’t get into details of why this is the case until Section 4). This is a quite well-studied problem called polygon approximation (e.g. [Rot92, YG97, BHR91]). Questions asked here are typically of the form “for a concave function and interval such that , , what is the minimum number of segments a piece-wise linear function must have to guarantee for all ?”

The answer to the above question is [Rot92, YG97]. This bound certainly suffices for our purposes to get some bound on the menu complexity of $(1-\varepsilon)$-approximate auctions, but it would be much weaker than what Theorem 1.2 provides (we’d have linear instead of logarithmic dependence on $v_{\max}$, and no option to remove $v_{\max}$ from the picture completely). Interestingly though, for our application absolute additive error doesn’t tightly characterize what we need (again, we won’t get into why this is the case until Section 4). Instead, we are really looking for the following kind of guarantee, which is a bit of a hybrid between additive and multiplicative: for a concave function and interval such that , , what is the minimum number of segments a piece-wise linear function must have to guarantee ?
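To make the additive-versus-hybrid distinction concrete, here is a minimal sketch of a greedy polygon approximation on a finite grid of points. It is not the construction behind Theorem 1.4. It accepts an arbitrary pointwise tolerance `tol(x)`, so the same routine covers an additive requirement (`tol(x) = eps`) and a hybrid one. The particular hybrid tolerance used in the usage example, `eps * max(f(x), 1)`, is an illustrative assumption and not the exact guarantee studied here. The names `chord_ok` and `greedy_breakpoints` are hypothetical.

```python
import math
from typing import Callable, List, Sequence


def chord_ok(f: Callable[[float], float], xs: Sequence[float], i: int, j: int,
             tol: Callable[[float], float]) -> bool:
    """True if the chord from (xs[i], f(xs[i])) to (xs[j], f(xs[j])) stays within tol(x)
    below f at every grid point strictly between them (for concave f the chord never lies above f)."""
    x0, x1 = xs[i], xs[j]
    y0, y1 = f(x0), f(x1)
    for k in range(i + 1, j):
        x = xs[k]
        chord = y0 + (y1 - y0) * (x - x0) / (x1 - x0)
        if f(x) - chord > tol(x):
            return False
    return True


def greedy_breakpoints(f, xs, tol) -> List[float]:
    """Greedy polygon approximation on a sorted grid xs: from each breakpoint, extend the
    chord as far right as the tolerance allows, then start a new segment there."""
    points = [xs[0]]
    i = 0
    while i < len(xs) - 1:
        j = i + 1  # a chord between neighbouring grid points has no interior points to check
        while j + 1 < len(xs) and chord_ok(f, xs, i, j + 1, tol):
            j += 1
        points.append(xs[j])
        i = j
    return points


if __name__ == "__main__":
    grid = [1 + 0.5 * k for k in range(199)]  # 1.0, 1.5, ..., 100.0
    eps = 0.05
    additive = greedy_breakpoints(math.sqrt, grid, lambda x: eps)
    hybrid = greedy_breakpoints(math.sqrt, grid, lambda x: eps * max(math.sqrt(x), 1.0))
    print(len(additive) - 1, "segments (additive),", len(hybrid) - 1, "segments (hybrid)")
```

For concave $f$, shrinking a chord can only shrink its gap. As a result, this greedy uses the fewest breakpoints among all polygon approximations whose vertices are grid points, for any fixed pointwise tolerance.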

At first glance it seems like this really shouldn’t change the problem at all: why don’t we just redefine and plug into upper bounds of Rote for ? This is indeed completely valid, and we could again chase through and obtain some weaker version of Theorem 1.2 that also references additional parameters in unintuitive ways. But it turns out that for all examples in which this dependence is tight, there is actually quite a large gap between and , and a greatly improved bound is possible (which replaces the linear dependence on with logarithmic dependence, and provides an option to remove from the picture completely at the cost of worse dependence on ).

Theorem 1.4.

For any concave function and any such that , , there exists a piece-wise linear function such that with segments, and this is tight.

If one wishes to remove the dependence on , then one can replace the bound with , which is also tight (among bounds that don’t depend on ).

The proof of Theorem 1.4 is self-contained and appears in Section 4. We expect both the statement of Theorem 1.4 and our proof to be useful for future work on menu complexity, and possibly outside of mechanism design as well; to the best of our knowledge, these kinds of hybrid guarantees haven’t been previously considered.⁴

⁴ Interestingly (and completely unrelated to this work), hybrid additive-multiplicative approximations for core problems in online learning have also found use in other recent directions in AGT [DJF16, SBN17].

1.4 Related work

Menu complexity. Initial results on menu complexity prove that for a single additive or unit-demand bidder with arbitrarily correlated item values over just two items, there exist instances where the optimal (randomized, with infinite menu complexity) mechanism achieves infinite revenue, while any mechanism of menu complexity achieves revenue (so no finite approximation is possible with bounded menu complexity) [BCKW10, HN13]. This motivated follow-up work subject to assumptions on the distributions, such as a generalized hazard rate condition [WT14], or independence across item values [DDT13, BGN17]. Even for a single bidder with independent values for two items, the optimal mechanism could have uncountable menu complexity [DDT13], motivating the study of approximately optimal mechanisms subject to these assumptions. Only recently did we learn that the menu complexity required for a $(1-\varepsilon)$-approximation is indeed finite in this setting [BGN17].

It is also worth noting that other notions of simplicity have been previously considered as well, such as the sample complexity (how many samples from a distribution are required to learn an approximately optimal auction?). Here, quantitative bounds are known for the single-item setting (where the menu complexity question is trivial: optimal mechanisms have menu complexity $1$) [CR14, HMR15, DHP16, GN17], but again only binary bounds are known for the multi-item setting: few samples suffice for a constant-factor approximation if values are independent [MR15, MR16], while exponentially many samples are required when values are arbitrarily correlated [DHN14]. In comparison to the works discussed in the previous paragraphs, we are the first to nail down “the right” quantitative menu complexity bounds in any multi-dimensional setting.

One-and-a-half dimensional mechanism design. One-and-a-half dimensional settings have been studied for decades by economists, the most notable example possibly being that of a single buyer with a value and a budget [LR96, CG00]. Recently, such problems have become popular within the AGT community as optimal auctions are more involved than in single-dimensional settings, but not quite so chaotic as in truly multidimensional settings [FGKK16, DW17, DHP17]. Each of these works focuses exclusively on exactly optimal mechanisms (and exclusively on positive results). In comparison, our work is both the first to prove lower bounds on the complexity of (approximately) optimal mechanisms in these settings, and the first to provide nearly-optimal mechanisms that are considerably less complex.

Polygon approximation. Prior work on polygon approximation is vast, and includes, for instance, core results on univariate concave functions [Rot92, BHR91, YG97], the study of multi-variate functions [Bro08, GG09, DDY16], and even applications in robotics [BGO07]. The more recent work has mostly been pushing toward better guarantees for higher dimensional functions. To the best of our knowledge, the kinds of guarantees we target via Theorem 1.4 haven’t been previously considered, and could prove more useful than absolute additive guarantees for some applications.

1.5 Organization

In Section 2, we formally describe the FedEx problem and recap the main result of [FGKK16]. In Section 3 we present an instance of the FedEx problem whose menu complexity for optimal auctions is exponential, the worst possible. In Section 4 we present a mechanism that guarantees a $(1-\varepsilon)$ fraction of the optimal revenue with menu complexity $O(n^{3/2}\sqrt{\min\{n/\varepsilon, \ln(v_{\max})\}/\varepsilon})$. We also explain the connection between approximate auctions and polygon approximation. In Section 5 we present an instance of the FedEx problem that requires menu complexity $\Omega(n^2)$ in order to approximate the revenue within a factor of $1 - O(1/n^2)$. In Section 7 we use techniques similar to those of Section 3 to construct an example resolving an open question of [DW17].⁵

⁵ Specifically, [DW17] ask whether the optimal mechanism for a single buyer with a private budget and a regular value distribution conditioned on each possible budget is deterministic. The answer is yes if we replace “regular” with “decreasing marginal revenues,” or “private budget” with “public budget.” We show that the answer is no in general: the optimal mechanism, even subject to regularity, could be randomized.

2 Preliminaries

We consider a single bidder whose type depends on two parameters: a value $v$ and a deadline $d \in \{1, \ldots, n\}$. Deterministic outcomes that the seller can award are just a day to ship the item, or to not ship the item at all (and the seller may also randomize over these outcomes). A buyer receives value $v$ if the item is shipped by her deadline, and $0$ if it is shipped after her deadline (or not at all).

The types are drawn from a known (possibly correlated) distribution $F$. Let $q_i$ denote the probability that the bidder’s deadline is $i$ and $F_i$ the marginal distribution of $v$ conditioned on a deadline of $i$. For simplicity of exposition, in several parts of this paper we’ll assume that each $F_i$ is supported on $\{1, \ldots, v_{\max}\}$. This assumption is w.l.o.g., and all results extend to continuous distributions, or distributions with arbitrary discrete support if desired [CDW16].

In Appendix A, we provide the standard linear program whose solution yields the revenue-optimal auction for the FedEx problem. We only note here the relevant incentive compatibility constraints (observed in [FGKK16]). First, note that w.l.o.g. whenever the buyer has deadline $i$, the optimal mechanism can ship her the item (if at all) exactly on day $i$. Shipping the item earlier doesn’t make her any happier, but might make the buyer interested in misreporting and claiming a deadline of $i$ if her deadline is in fact earlier. Next note that, subject to this, the buyer never has an incentive to overreport her deadline, but she still might have incentive to underreport her deadline (or misreport her value).
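A minimal sketch of these incentive considerations follows. For illustration, it makes a simplifying assumption of its own: a menu with one option per reported deadline, shipped exactly on the reported day. `utility` and `best_report` are hypothetical helper names. Overreporting delivers the item too late, so it only costs money. Underreporting can pay off if an earlier day is cheaper.

```python
from typing import Dict, Optional, Tuple


def utility(value: float, deadline: int, ship_day: int, prob: float, price: float) -> float:
    """Expected utility of an option that ships on ship_day with probability prob for payment price.
    The item is worth `value` only if it arrives by the buyer's true deadline."""
    on_time = 1.0 if ship_day <= deadline else 0.0
    return prob * value * on_time - price


def best_report(value: float, deadline: int,
                menu: Dict[int, Tuple[float, float]]) -> Tuple[Optional[int], float]:
    """Buyer's favourite report when reporting deadline d yields menu[d] = (prob, price),
    shipped exactly on day d. Returns (report, utility); None means opting out."""
    best: Tuple[Optional[int], float] = (None, 0.0)  # receive nothing, pay nothing
    for day, (prob, price) in sorted(menu.items()):
        u = utility(value, deadline, day, prob, price)
        if u > best[1]:
            best = (day, u)
    return best


if __name__ == "__main__":
    truthful_menu = {1: (1.0, 5.0), 2: (1.0, 3.0), 3: (1.0, 2.0)}  # cheaper for later days
    tempting_menu = {1: (1.0, 2.0), 2: (1.0, 3.0)}                  # day 1 is cheaper
    print([best_report(10.0, d, truthful_menu) for d in (1, 2, 3)])
    print(best_report(10.0, 2, tempting_menu))  # a deadline-2 buyer picks day 1: (1, 8.0)
```

With the first menu (prices non-increasing in the shipping day), every buyer reports truthfully. With the second, a deadline-2 buyer prefers to underreport.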

We will be interested in understanding the menu complexity of auctions, which is the number of different outcomes that, depending on the buyer’s type, are ever selected. If $x(v, i)$ denotes the probability that a buyer with value $v$ and deadline $i$ receives the item, then we define the $i$-deadline menu complexity to be the number of distinct options on deadline $i$, i.e., the number of distinct (allocation, price) pairs selected by types $(v, i)$ as $v$ varies. The menu complexity then just sums the $i$-deadline menu complexities, and we will sometimes refer also to the “deadline menu complexity” as the maximum of the $i$-deadline menu complexities.
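A small sketch of how these counts can be computed from an allocation rule follows. It relies on one observation: within a fixed deadline, incentive compatibility forces two types with the same allocation probability to pay the same price, since otherwise the one paying more would mimic the other. Counting distinct allocation probabilities therefore counts distinct options. We assume the convention that the null option (no item, no payment) is not counted. The dictionary input format and the name `menu_complexities` are hypothetical.

```python
from collections import defaultdict
from fractions import Fraction
from typing import Dict, Tuple


def menu_complexities(alloc: Dict[Tuple[int, int], Fraction]):
    """alloc[(v, i)] = probability that a buyer with value v and deadline i receives the item.
    Returns (per-deadline counts, total menu complexity, deadline menu complexity)."""
    options = defaultdict(set)
    for (v, i), x in alloc.items():
        if x > 0:  # convention assumed here: the null option is not counted
            options[i].add(x)
    per_deadline = {i: len(probs) for i, probs in sorted(options.items())}
    total = sum(per_deadline.values())
    worst = max(per_deadline.values(), default=0)
    return per_deadline, total, worst
```

For instance, a rule that sells deterministically to deadline-1 types with $v \ge 2$, and offers deadline-2 types allocations $1/2$ and $1$, has per-deadline counts $1$ and $2$, menu complexity $3$, and deadline menu complexity $2$.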

2.1 Optimal auctions for the FedEx problem

Here, we recall some tools from [FGKK16] regarding optimal mechanisms for the FedEx problem. The first tool they use is the notion of a revenue curve.⁶

⁶ For those familiar with revenue curves, note that this revenue curve is intentionally drawn in value space, and not quantile space.

Definition 2.1 (Revenue curves).

For a given deadline $i$, define the revenue curve $R_i$ so that $R_i(p) = p \cdot \Pr[v \ge p,\ d = i]$.

Intuitively, $R_i(p)$ captures the achievable revenue by setting price $p$ exclusively for consumers on deadline $i$. It is also necessary to consider the ironed revenue curve, defined below.

Definition 2.2 (Ironed revenue curves).

For any revenue curve $R$, define $\bar{R}$ to be its upper concave envelope.⁷ We say $R$ is ironed at $p$ if $\bar{R}(p) > R(p)$, and we call $[a, b]$ an ironed interval of $R$ if $R$ is not ironed at $a$ or $b$, but is ironed at $p$ for all $p \in (a, b)$.

⁷ That is, $\bar{R}$ is the smallest concave function such that $\bar{R}(p) \ge R(p)$ for all $p$.
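The envelope and the ironed intervals of Definition 2.2 can be computed on a finite grid of prices with a standard monotone-chain upper hull. This is a sketch for curves given at integer prices, and the helper names are hypothetical.

```python
from fractions import Fraction
from typing import Dict, List, Tuple


def upper_concave_envelope(curve: Dict[int, Fraction]) -> Dict[int, Fraction]:
    """Smallest concave majorant of a curve given at integer points, evaluated at those points."""
    pts = sorted(curve.items())
    hull: List[Tuple[int, Fraction]] = []
    for x, y in pts:
        # Drop the last hull vertex while it lies on or below the segment from hull[-2] to (x, y).
        while len(hull) >= 2:
            (x1, y1), (x2, y2) = hull[-2], hull[-1]
            if (y2 - y1) * (x - x1) <= (y - y1) * (x2 - x1):
                hull.pop()
            else:
                break
        hull.append((x, y))
    env: Dict[int, Fraction] = {}
    for x, y in pts:
        for (xa, ya), (xb, yb) in zip(hull, hull[1:]):
            if xa <= x <= xb:
                env[x] = ya + (yb - ya) * Fraction(x - xa, xb - xa)
                break
        else:
            env[x] = y  # only reached when the curve consists of a single point
    return env


def ironed_intervals(curve: Dict[int, Fraction]) -> List[Tuple[int, int]]:
    """Maximal [a, b] with R not ironed at a or b but ironed (envelope > R) strictly inside."""
    env = upper_concave_envelope(curve)
    xs = sorted(curve)
    intervals: List[Tuple[int, int]] = []
    k = 0
    while k < len(xs):
        if env[xs[k]] > curve[xs[k]]:
            a = xs[k - 1]  # the endpoints of the domain always lie on the hull, so k >= 1 here
            while env[xs[k]] > curve[xs[k]]:
                k += 1
            intervals.append((a, xs[k]))
        k += 1
    return intervals
```

On the curve $1, 1, 3/4, 1$ at prices $1$ through $4$, the envelope is identically $1$ and the only ironed interval is $[2, 4]$. The curve $0, 2, 1, 3, 2, 4$ at prices $1$ through $6$ has two adjacent ironed intervals, $[2, 4]$ and $[4, 6]$, lying on the same line.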

Of course, it is not sufficient to consider each possible deadline of the buyer in isolation. In particular, offering certain options on day $i$ constrains what can be offered on later days, subject to incentive compatibility. For instance, if some pair $(v, i)$ receives the item with probability $1$ on day $i$ for price $p$, no bidder with a deadline $j \ge i$ will ever choose to pay more than $p$ to receive the item with probability $1$. So we would also like a revenue curve that captures the optimal revenue we can make from days $i, \ldots, n$ conditioned on selling the item deterministically at price $p$ on day $i$. It’s not obvious how to construct such a curve, but this is one of the main contributions of [FGKK16], stated below.

Definition 2.3.

Let and Define for to :

Lemma 2.1 ([FGKK16]).

is the optimal revenue of any mechanism that satisfies the following:

• The buyer can either receive the item on day $i$ and pay $p$, or receive nothing/pay nothing.

• The buyer cannot receive the item on any day $j < i$.

Moreover, for any , and such that , is the optimal revenue of any mechanism that satisfies the following:

• The buyer can receive the item on day $i$ with probability and pay , for any (or not receive the item on day $i$ and pay nothing).

• The buyer cannot receive the item on any day $j < i$.

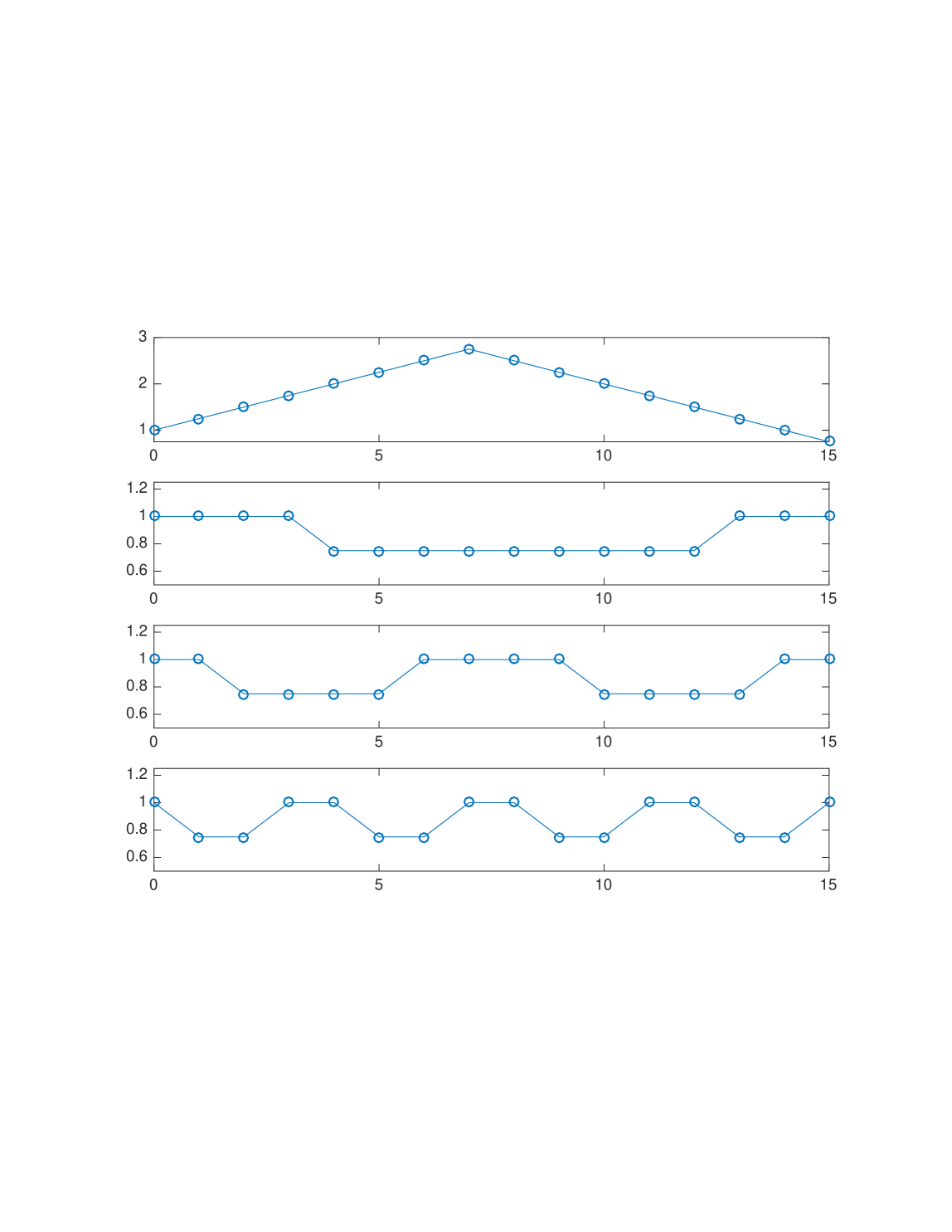

Finally, we describe the optimal mechanism provided by [FGKK16], which essentially places mass optimally upon each day’s revenue curve, subject to constraints imposed by the decisions of previous days (a more detailed description appears in Appendix A, but the description below will suffice for our paper). First, simply set any price maximizing to receive the item on day $1$ (as day $1$ is unconstrained by previous days). Now inductively, assume that the options for day $i-1$ have been set and we’re deciding what to do for day $i$. If the menu options offered on day $i-1$ are (interpret the option as “charge to ship the item on day $i-1$ with probability ”), think of this instead as a distribution over prices, where price has mass .⁸ For each such price , it will undergo one of the following three operations to become an option for day $i$.

• If , move all mass from to .

• If is not ironed at , and , keep all mass at .

• If is ironed at , and , let denote the ironed interval containing , and let . Move a fraction of the mass at to , and a fraction of the mass at to .

⁸ This is the standard transformation between “lotteries” and “distributions over prices” (e.g. [RZ83]).

Once the mass is resettled, if there is mass on price for , the buyer will have the option to receive the item on day $i$ with probability for price for any (or not at all). Note that due to case three in the transformation above, there could be up to twice as many menu options on day $i$ as on day $i-1$.
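The transformation between lotteries and distributions over prices used above can be sketched as follows. This covers only the standard lottery-to-price map and does not implement the three operations. We assume the options have strictly increasing allocation probabilities and that each option is chosen by some value, i.e., the switching values increase. `lotteries_to_prices` and `outcome` are hypothetical names.

```python
from fractions import Fraction
from typing import List, Tuple

Option = Tuple[Fraction, Fraction]  # (allocation probability q, price p)


def lotteries_to_prices(menu: List[Option]) -> List[Tuple[Fraction, Fraction]]:
    """Map a lottery menu to a distribution over posted prices: the j-th option (sorted by q)
    becomes price (p_j - p_{j-1}) / (q_j - q_{j-1}) with mass q_j - q_{j-1}."""
    dist: List[Tuple[Fraction, Fraction]] = []
    q_prev, p_prev = Fraction(0), Fraction(0)
    for q, p in sorted(menu):
        price = (p - p_prev) / (q - q_prev)  # value at which the buyer switches to this option
        if dist and price <= dist[-1][0]:
            raise ValueError("some option is never chosen; prune dominated options first")
        dist.append((price, q - q_prev))
        q_prev, p_prev = q, p
    return dist


def outcome(dist: List[Tuple[Fraction, Fraction]], value: Fraction) -> Tuple[Fraction, Fraction]:
    """Allocation probability and expected payment of a buyer with this value facing a random
    posted price drawn from dist (she buys whenever price <= value)."""
    prob = sum((m for price, m in dist if price <= value), Fraction(0))
    pay = sum((price * m for price, m in dist if price <= value), Fraction(0))
    return prob, pay
```

For instance, the menu $\{(1/2, 1), (1, 3)\}$ becomes prices $2$ and $4$ with mass $1/2$ each. A buyer with value $3$ then receives the item with probability $1/2$ and pays $1$ in expectation, exactly as if she had chosen the option $(1/2, 1)$ directly.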

Theorem 2.2 ([FGKK16]).

The allocation rule described above is the revenue-optimal auction.

3 Optimal Mechanisms Require Exponential Menu Complexity

In this section we overview our construction for an instance of the FedEx problem with integral values for each day and $n$ days where the $j$-deadline menu complexity of the optimal mechanism is $2^{j-1}$ for all $j$ (and this is the maximum possible [FGKK16]), implying that the menu complexity is $2^n - 1$. Note that the deadline menu complexity is always upper bounded by $v_{\max}$, so $v_{\max}$ must be at least $2^{n-1}$.

At a high level, constructing the example appears straightforward, once one understands Fiat et al.’s algorithm (end of Section 2). Every menu option from day $i-1$ is either “shifted” (case one), “copied” (case two), or “split” (case three). If the option is shifted or copied, it spawns only a single menu option on day $i$, while if split it spawns two (hence the upper bound of $2^{j-1}$ options on day $j$, and $2^n - 1$ in total). So the goal is just to construct an instance where every option is split on every day.
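The counting behind this upper bound is a geometric sum. If every option splits on every day, day $j$ carries $2^{j-1}$ options and the total is $1 + 2 + \cdots + 2^{n-1} = 2^n - 1$. A trivial sketch (the helper name is hypothetical):

```python
from typing import List


def max_options_per_day(n: int) -> List[int]:
    """Upper bound on the j-deadline menu complexity when every option is split on every day:
    one option on day 1, and each option spawns at most two on the next day."""
    counts = [1]
    for _ in range(n - 1):
        counts.append(2 * counts[-1])
    return counts


if __name__ == "__main__":
    for n in (1, 2, 5, 10):
        counts = max_options_per_day(n)
        print(n, counts[-1], sum(counts))  # last day 2^(n-1), total 2^n - 1
```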

Unfortunately, this is not quite so straightforward: whether or not an option is split depends on whether it lies inside an ironed interval of the relevant curve from Definition 2.3, which is itself the sum of revenue curves (some ironed and some not), and going back and forth between distributions and sums of revenue curves is somewhat of a mess. So really what we’d like to do is construct the curves directly, and be able to claim that there exists a FedEx input inducing them. While not every profile of curves is valid, we do provide a broad class of curves for which it is somewhat clean to show that there exists a FedEx input inducing them.

From here, it is then a matter of ensuring that we can find the revenue curve profiles we want (where for every day , every menu option is split, because it is inside an ironed interval in ) within our class. We’ll highlight parts of our construction below, but most details are in Appendix B.

Lemma 3.1.

For any and , there exists an input to the FedEx problem such that:

• is maximized at (that is, ) and has no ironed intervals.

• For all , has a maximizer at price and has ironed intervals for .

• (the ironed revenue curve) is a constant function for all .⁹

• has the same ironed intervals as . In fact, , for some constant .

⁹ Note that it is possible for two disjoint ironed intervals to have the same slope.

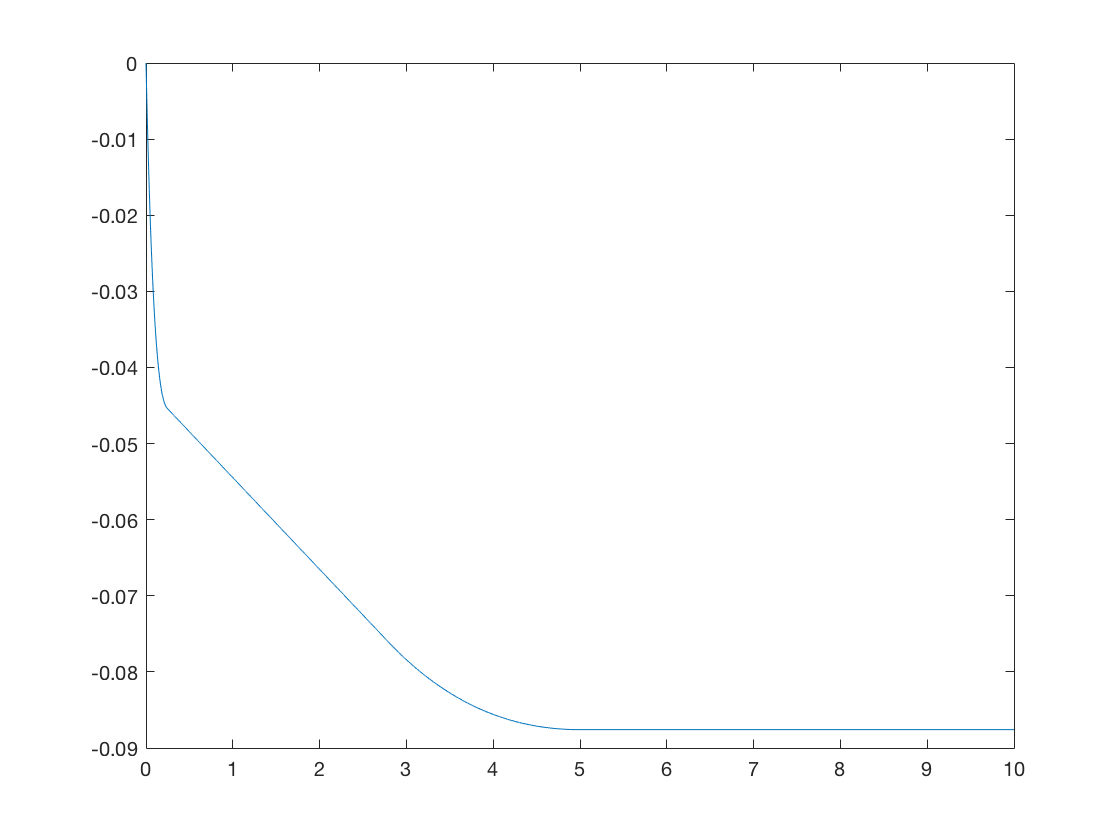

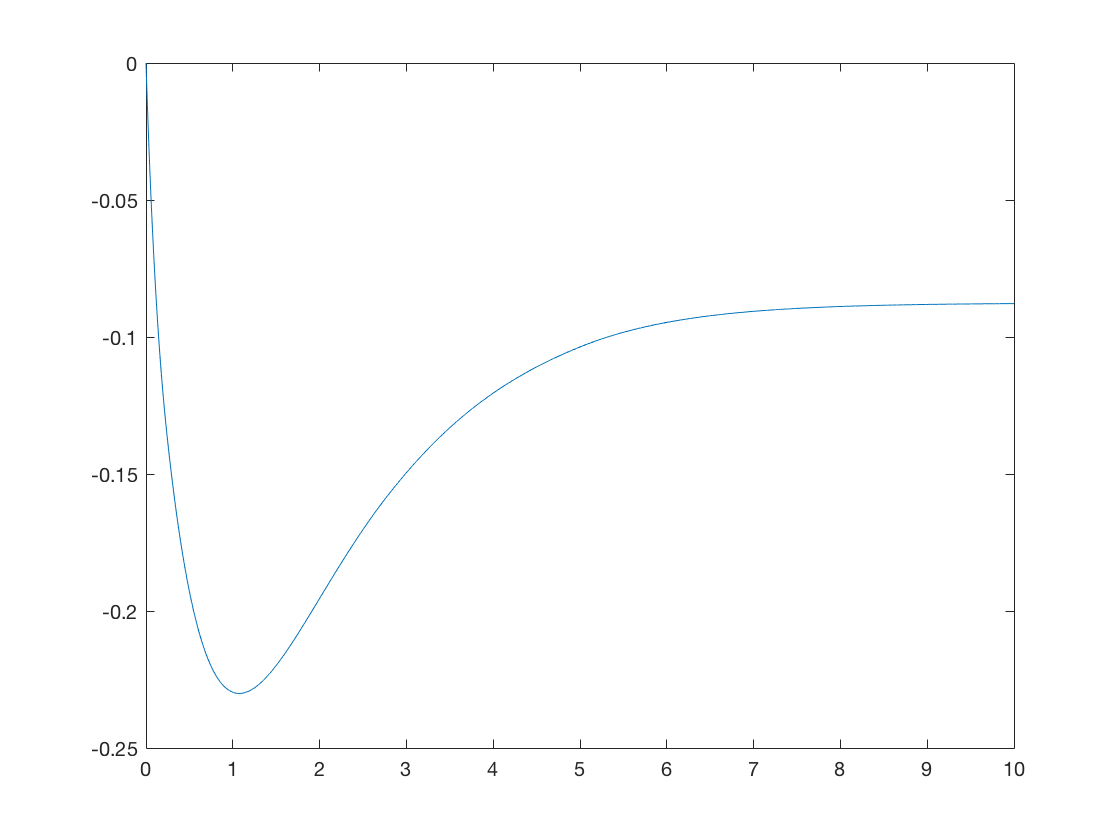

We include in Figure 2 a picture of the generated revenue curves for . As a result of this construction, we see that has ironed intervals, whose endpoints themselves lie in ironed intervals of . This guarantees that all menu options from day (which are guaranteed to be endpoints of ironed intervals) are split into two options on day . The proof of Theorem 3.2 (which implies Theorem 1.1) formalizes this.

Theorem 3.2.

The optimal mechanism for any instance satisfying the conditions of Lemma 3.1 has $j$-deadline menu complexity $2^{j-1}$ for all $j$, and menu complexity $2^n - 1$.

4 Approximately Optimal Mechanisms with Small Menus

In this section, we describe a mechanism that attains at least a $(1-\varepsilon)$ fraction of the optimal revenue for any FedEx instance with menu complexity $O(n^{3/2}\sqrt{\min\{n/\varepsilon, \ln(v_{\max})\}/\varepsilon})$, which proves Theorem 1.2. Most proofs appear in Appendix C, but we overview our approach here.

Our main approach is to use the polygon approximation of concave functions applied to revenue curves. For a sequence of points $x_1 < x_2 < \cdots < x_k$ in the domain of a function $f$, the polygon approximation of $f$ with respect to $(x_1, \ldots, x_k)$ is the piecewise linear function $g$ formed by connecting the points $(x_j, f(x_j))$ for $j = 1, \ldots, k$ by line segments. Thus, if the sequence has $k$ points, the function $g$ will have $k - 1$ segments. For a concave function $f$, the line segment joining $(x, f(x))$ and $(y, f(y))$, for any two points $x$ and $y$, lies entirely below the graph of $f$. Thus, for concave functions $f$ and any sequence $x_1 < \cdots < x_k$, we have $g(x) \le f(x)$ for all $x \in [x_1, x_k]$. Typically, for a ‘good’ polygon approximation, one requires, for all $x \in [x_1, x_k]$, that $f(x) - g(x) \le \varepsilon$.
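A minimal sketch of the polygon approximation just defined follows, for an arbitrary function `f` and sorted breakpoints `xs`. `max_gap` only estimates the maximum on a uniform sample grid, so it is a numerical check rather than an exact computation. Both names are hypothetical.

```python
import math
from bisect import bisect_right
from typing import Callable, Sequence


def polygon_approximation(f: Callable[[float], float],
                          xs: Sequence[float]) -> Callable[[float], float]:
    """Piecewise-linear g through (x_j, f(x_j)) for sorted xs (k points give k - 1 segments)."""
    if len(xs) < 2:
        raise ValueError("need at least two points")
    ys = [f(x) for x in xs]

    def g(x: float) -> float:
        if not (xs[0] <= x <= xs[-1]):
            raise ValueError("x lies outside [x_1, x_k]")
        j = min(bisect_right(xs, x), len(xs) - 1)  # segment with xs[j-1] <= x <= xs[j]
        x0, x1 = xs[j - 1], xs[j]
        return ys[j - 1] + (ys[j] - ys[j - 1]) * (x - x0) / (x1 - x0)

    return g


def max_gap(f, g, a: float, b: float, samples: int = 10_000) -> float:
    """Estimate the maximum of f(x) - g(x) over [a, b] on a uniform sample grid (>= 0 if f is concave)."""
    grid = [min(b, a + (b - a) * s / samples) for s in range(samples + 1)]
    return max(f(x) - g(x) for x in grid)
```

For $f(x) = \sqrt{x}$ on $[1, 16]$, a single chord leaves a gap of about $0.45$ (at $x = 6.25$), while the breakpoints $1, 4, 9, 16$ reduce it to about $0.083$.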

It turns out that the question of approximating revenue with low menu complexity boils down to a question of approximating revenue curves with piecewise-linear functions of few segments. The connection isn’t quite obvious, but isn’t overly complicated. Without getting into formal details, here is a rough sketch of what’s going on:

• Recall the Fiat et al. procedure to build the optimal mechanism: menu options from deadline $i-1$ might be “split” into two options for deadline $i$ if they lie inside an ironed interval of the day-$i$ curve from Definition 2.3. This might cause the menu complexity to double from one deadline to the next.

• Instead, we want to create at most $k$ “anchoring points” on each revenue curve. For a menu option from deadline $i-1$, instead of distributing it to the endpoints of its ironed interval, we distribute it to the two nearest anchor points.

• By Lemma 2.1, we know exactly how to evaluate the revenue lost by this change, and it turns out this is captured by the maximum gap between each curve from Definition 2.3 and its polygon approximation through the anchoring points (this isn’t obvious, but it isn’t hard; see Appendix C).

• Finally, it turns out that the $i$-deadline menu complexity with at most $k$ anchoring points is at most (also not quite obvious, but also not hard). So the game is to find few anchoring points that obtain a good polygon approximation to each revenue curve. Corollary 4.1 (see subsection C.4) describes the reduction formally, and all related proofs are in Appendix C.

Corollary 4.1.

Consider a FedEx instance with $n$ deadlines. For all $i$, let be the function defined in Definition 2.3, and let be a sequence of points in such that for all , we have . Then there exists a mechanism with $i$-deadline menu complexity (and menu complexity ) whose revenue is at least .

Here, $\mathrm{OPT}$ denotes the optimal revenue of the FedEx instance.

At this point, it seems like the right approach is to just set each and plug into the best existing bounds on polygon approximation. In some sense this is correct, but the menu complexity bounds one would obtain are far from optimal. The main insight is that we know something about the curves we wish to approximate: for all , and we want to leverage this fact if it can give us better guarantees. Additionally, if all values are integral in the range , we wish to leverage this fact as well, as it implies that an additive loss is also OK, as . It turns out that both facts can indeed be leveraged to obtain much stronger approximation guarantees than what are already known (essentially replacing with in previous bounds), stated in Theorem 4.2 below.

Theorem 4.2.

For any and concave function such that , ,¹⁰ there exists a sequence of at most points such that for all

¹⁰ We use to denote the right hand derivative and to denote the left hand derivative.

The proof of Theorem 1.2 follows from Corollary 4.1 and Theorem 4.2 together with a little bit of algebra, and is deferred to Appendix C.

Finally, we remark on some alternative terms that can replace $v_{\max}$ in Theorem 1.2. It will become clear why these replacements are valid after reading the proof of Theorem 1.2, but we will not further justify the validity of these replacements here.

• First, for instances with integral valuations, we may replace everywhere with . This is essentially because we don’t actually need to approximate on the entire interval , but only the interval .

• We may further define for any (not necessarily integral, possibly continuous) instance, and replace everywhere with , even for non-integral instances. This is essentially because we only used the integrality assumption to guarantee that .

• Finally, if denotes the probability that the buyer has value at least and deadline at least , observe that . So if the probability of sale at each is at least , we may observe that (where is defined as in the previous bullet) and replace with everywhere.

The bullets above suggest that the “hard” instances (where some instance-specific parameter shows up in order to maintain optimal dependence on ) are those where most of the revenue comes from very infrequent events where the buyer has an unusually high value. Due to the intricate interaction between different deadlines, these parameters can’t be circumvented with simple discretization arguments, or by improved polygon approximations (provably, see Section 4.1), but it is certainly interesting to see if other arguments might allow one to replace with (for example) something like .

4.1 A tight example for polygon approximation

It turns out that the guarantees provided by Theorem 4.2 are tight. Specifically, if no dependence on is desired, then is the best bound achievable. Also, if it’s acceptable to depend on both and , then the bound of in Theorem 4.2 is tight. Taken together, this means that lies at the Pareto frontier of the dependences achievable as a function of both and . The examples proving tightness of these bounds are actually quite simple, and provably the worst possible examples (the proof of the claim below appears in Appendix C).

Proposition 4.3.

Let be a concave function on , and let there be no polygon approximation of using segments for additive error . Then there exists a concave function over satisfying:

• There is no polygon approximation of using segments for additive error .

• , , , .

• is piecewise-linear with segments.

5 Tightness of the approximation scheme

Finally, we construct an instance of the FedEx problem that is hard to approximate with small menu complexity. We try to reason similarly to the example constructed in Section 3, but things are trickier here. In particular, the challenge in Section 3 was in mapping between distributions and revenue curves. But once we had the revenue curves, it was relatively straightforward to plug through Fiat et al.’s algorithm [FGKK16] and ensure that the optimal auction had high menu complexity.

Already nailing down the behavior of an optimal auction was tricky enough, but we now have to consider every approximately optimal auction (most of which do not arise from Fiat et al.’s algorithm; see, e.g., Section 4). Indeed, one can imagine doing all sorts of strange things on any day that are suboptimal, but might somehow avoid the gradual buildup in the $i$-deadline menu complexity.¹¹

¹¹ For example, an -approximate menu could set price or with probability for shipment on any day, or something much more chaotic.

To cope with this, our approach has two phases: first, we characterize a restricted class of auctions that we call clean. At a very high level, clean auctions never make “bizarre” choices on day $i$ that both decrease the revenue gained on day $i$ and strictly increase constraints on choices available for future days. To have an example in mind: if the revenue on day $i$ is maximized by setting a price of $p$, it might make sense to set some price $p' > p$ to receive the item on day $i$ instead, as this relaxes constraints on future days, and maybe this somehow helps when also constrained by menu complexity. But it makes no sense to instead set a price $p'' < p$: this only decreases the revenue achieved on day $i$, and imposes stricter constraints on future days (as now the buyer has the option to get the item on day $i$ at a cheaper price).

For our example, we first show that all clean auctions that maintain a good approximation ratio must have high menu complexity. We then follow up by making the claims in the previous paragraph formal: any arbitrary auction of low menu complexity can be derived by “muddling” a clean auction, a process which never increases the revenue. A little more specifically, cleaning the menu for deadline $i$ can only increase the revenue and allow more options on later deadlines, without increasing the menu complexity. Formal definitions and claims related to this appear in Appendix D. We conclude with a formal statement of our lower bound, which proves Theorem 1.3.

Theorem 5.1.

Any mechanism for the FedEx instance described in subsection D.1 that has at most menu options on a day has revenue at most .

6 Conclusions and Future Work

We provide the first nearly-tight quantitative results on menu complexity in a multi-dimensional setting. Along the way, we design new polygon approximations for a hybrid additive-multiplicative guarantee that turns out to be just right for our application (as evidenced by the nearly-matching lower bounds obtained from the same ideas).

Much future work remains in the direction of menu complexity, most notably the push for tighter quantitative bounds in “truly” multi-dimensional settings, where the gaps between the upper (exponential) and lower (polynomial) bounds are vast. We believe that continuing a polygon approximation approach is likely to be fruitful. After all, there is a known connection between concave functions and any mechanism design setting via utility curves, and low menu complexity corresponds exactly to piecewise-linear utility curves with few segments. Still, there are two serious barriers to overcome. First, these utility curves are now multi-dimensional instead of single-dimensional revenue curves. Second, the relationship between utility curves and revenue is somewhat odd (expected revenue is equal to an integral over the support of ), whereas the relationship between revenue curves and revenue is more direct. There are also intriguing directions for future work in one-and-a-half-dimensional mechanism design, the most pressing of which is understanding multi-bidder instances (all existing work, including ours, is still limited to the single-bidder setting).

7 Instances with regular distributions may require randomness

For single-dimensional settings, it’s well-understood that “the right” technical condition on value distributions to guarantee a simple optimal mechanism is regularity. This guarantees that “virtual values” are non-decreasing and removes the need for ironing, even for multi-bidder settings. Interestingly, “the right” technical condition on value distributions to guarantee a simple optimal mechanism for 1.5 dimensional settings is no longer regularity, but decreasing marginal values. For example, if all marginals satisfy decreasing marginal values, the optimal mechanism is deterministic for the FedEx problem [FGKK16], selling a single item to a budget-constrained buyer [CG00, DW17], and a capacity-constrained buyer [DHP17].

Still, regularity seems to buy something in these problems. For instance, Fiat et al. show that when there are only two possible deadlines, regularity suffices to guarantee that the optimal mechanism is deterministic. It has also been known since early work of Laffont and Robert that regularity suffices to guarantee that the optimal mechanism is deterministic when selling to a budget-constrained buyer with only one possible budget [LR96]. But the extent to which regularity guarantees simplicity remained open (and was explicitly stated as such in [DW17]). In this section, we show that regularity guarantees nothing beyond what was already known. In particular, there exists an instance of the FedEx problem with three possible deadlines where all marginals are regular but the optimal mechanism is randomized. This immediately implies an example for a budget-constrained buyer and three possible budgets as well (for instance, just set all three budgets larger than so they will never bind).

We now describe our instance of the FedEx problem where the optimal auction is randomized, despite all marginals being regular and there being only possible deadlines (recall that Fiat et al. show that the optimal auction remains deterministic for regular marginals and deadlines). Throughout this section, instead of using revenue curves , we use , in accordance with [FGKK16].

7.1 The setting

Consider bidders with types distributed as on day , on day , and on day .

The distribution over days is

Note that the three distributions are regular but don’t have decreasing marginal revenues.

7.2 Analysis

We use the iterative procedure described in [FGKK16] to find the optimal auction.

All real values written are approximate and end with “”. The case in boldface denotes the ironed interval. The optimal price for day is which is in that interval. Hence the optimal auction has to be randomized.

Appendix A Additional preliminaries

In this appendix, we summarize the approach in [FGKK16] to obtain the optimal mechanism for an arbitrary “FedEx” instance. We begin with the linear program that encodes this optimization problem.

maximize   (1)

subject to   (Leftwards IC)

(Rightwards IC)

(Downwards IC)

(Feasibility)

(Individual Rationality)

Note that we have not included the constraints where the bidder misreports a higher deadline: no rational bidder would consider these deviations, since they would always yield non-positive utility. We now formally present the allocation curves described in Section 2. Combined with the definition of optimal revenue curves, they provide a clean characterization of optimal auctions for any instance of the FedEx problem.
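The constraint formulas of LP (1) did not survive extraction, so the sketch below checks the same families of constraints by brute force instead of writing them out. It assumes a hypothetical representation of our own, not the paper's: `alloc[i][v]` and `pay[i][v]`, with 0-based day indices and integer values 1, ..., H. It checks every deviation to any value on the same day or on an earlier deadline, which covers the leftwards, rightwards, and downwards families. Deviations to later deadlines are skipped for the reason given above.

```python
from typing import List

Table = List[List[float]]  # hypothetical layout: table[day][value_index]


def is_feasible_fedex_mechanism(alloc: Table, pay: Table, tol: float = 1e-9) -> bool:
    """Brute-force feasibility, IR and IC check for a single-bidder FedEx mechanism.

    alloc[i][v]: probability that a bidder reporting deadline i and value v + 1
    receives the item by day i; pay[i][v]: the matching payment. Days and value
    indices are 0-based (index v stands for value v + 1).
    """
    n, H = len(alloc), len(alloc[0])

    def utility(true_v: int, rep_day: int, rep_v: int) -> float:
        # Only meaningful when rep_day <= true deadline, so the item arrives in time.
        return (true_v + 1) * alloc[rep_day][rep_v] - pay[rep_day][rep_v]

    for i in range(n):
        for v in range(H):
            if not -tol <= alloc[i][v] <= 1 + tol:  # feasibility
                return False
            truthful = utility(v, i, v)
            if truthful < -tol:  # individual rationality
                return False
            # Same-day deviations (leftwards/rightwards IC) and deviations to
            # earlier deadlines (downwards IC); later deadlines are not checked.
            for j in range(i + 1):
                for w in range(H):
                    if utility(v, j, w) > truthful + tol:
                        return False
    return True
```

For example, posting price 3 on both days passes the check. Posting price 2 on the earlier day and price 3 on the later day fails, because a bidder with the later deadline and value 3 would rather report the earlier deadline.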

Definition A.1 (Optimal allocation curves [FGKK16]).

Let be the largest such that . For any , consider two cases:

• is not ironed at . Then

• is ironed at . Let be the largest such that is not ironed at and be the smallest such that is not ironed at . Let be such that

Define

Then set as follows

where is the probability of allocating the item with value on day .

Lemma A.1.

Remark A.1.

Every solution to LP 1 is an optimal mechanism for the FedEx problem.

We also state a simple claim, unrelated to the FedEx problem itself, that will be useful in later sections.

Claim A.1.

Let be a distribution over , and let be the corresponding virtual value and revenue curve, respectively. Then, for all

Proof.

This follows from some simple calculations:

∎
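The precise statement of Claim A.1 was lost in extraction. To illustrate the kind of identity involved, the sketch below assumes the common discrete conventions R(v) = v·Pr[X ≥ v] and φ(v) = v - (1 - F(v))/f(v) on the support {1, ..., H}. These conventions are our assumption and not necessarily the paper's normalization. Under them, R(v) - R(v+1) = v·f(v) - Pr[X ≥ v+1] = f(v)·φ(v), and the code checks this identity with exact rational arithmetic.

```python
from fractions import Fraction
from typing import Dict


def revenue_curve(pmf: Dict[int, Fraction]) -> Dict[int, Fraction]:
    """R(v) = v * Pr[X >= v] on {1, ..., H}, with R(H + 1) = 0 (assumed convention)."""
    H = max(pmf)
    tail = Fraction(0)
    R = {H + 1: Fraction(0)}
    for v in range(H, 0, -1):
        tail += pmf.get(v, Fraction(0))  # tail == Pr[X >= v]
        R[v] = v * tail
    return R


def virtual_value(pmf: Dict[int, Fraction], v: int) -> Fraction:
    """phi(v) = v - (1 - F(v)) / f(v), where F(v) = Pr[X <= v]; requires f(v) > 0."""
    F_v = sum((p for w, p in pmf.items() if w <= v), Fraction(0))
    return v - (1 - F_v) / pmf[v]
```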

Appendix B Omitted proofs and perturbed example of Section 3

B.1 Omitted proofs

Lemma B.1.

Fix . Let and let be a sequence of real numbers such that . If , there exists a distribution such that .

Proof.

By our choice of , all elements in the sequence are greater than . Let and define

We will show that indeed corresponds to a valid distribution function by showing that it is non-negative and non-decreasing. Once we have shown this, it becomes clear that , proving the Lemma.

Claim B.1.

is non-negative, non-decreasing.

Proof.

For , . Suppose . Since , it follows that . To show it is non-decreasing, consider the difference between two consecutive terms. For all ,

For ,

∎

∎
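Lemma B.1's parameters did not survive extraction. The idea of its proof can still be illustrated: recover a distribution from a prescribed revenue curve, then check that it is valid. The sketch assumes the convention R(v) = v·Pr[X ≥ v] on {1, ..., H} with R(1) = 1 (our normalization) and prescribes the increments R(v+1) - R(v). It returns None when the implied tail probabilities fail to be non-negative and non-increasing, which is the analogue of Claim B.1 for tails. `distribution_from_increments` is a hypothetical name.

```python
from fractions import Fraction
from typing import List, Optional


def distribution_from_increments(deltas: List[Fraction]) -> Optional[List[Fraction]]:
    """pmf [f(1), ..., f(H)] whose revenue curve R(v) = v * Pr[X >= v] has
    R(1) = 1 and R(v + 1) - R(v) = deltas[v - 1]; None if no such pmf exists."""
    R = [Fraction(1)]
    for d in deltas:
        R.append(R[-1] + d)
    # Tail probabilities S(v) = Pr[X >= v] = R(v) / v, followed by S(H + 1) = 0.
    tails = [r / (k + 1) for k, r in enumerate(R)] + [Fraction(0)]
    # A valid distribution needs S non-negative and non-increasing.
    if any(s < 0 for s in tails):
        return None
    if any(a < b for a, b in zip(tails, tails[1:])):
        return None
    return [a - b for a, b in zip(tails, tails[1:])]
```

For instance, the increments 1/2, 0, -1/2 recover the uniform distribution on {1, 2, 3, 4}. A single increment of 2 is rejected, because it would force Pr[X ≥ 2] = 3/2.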

We are now ready to explicitly construct an example that achieves deadline menu complexity of where . Fix and construct sequences of length , then

where denotes the -th least significant bit in the binary expansion of , . Let as . Lemma B.1 implies that there exist distributions such that . In our construction, the type distribution of day is . Let the distribution over days be uniform (i.e. ). To show that this construction achieves a menu complexity of , we first characterize the revenue curves for all days, and then show that there is an optimal auction whose prices create a large menu. The intuition is that the ironed intervals of these revenue curves are nested: prices at the endpoints of ironed intervals on day are the midpoints of new ironed intervals on day . In addition, after ironing, the curves are constant. Therefore, the optimal revenue curves have the same ironed intervals as their original counterparts. We design the revenue curve of the first day to be maximized at the median value. On the next day this price lies in an ironed interval, so any optimal auction must offer a lottery over two prices that are not ironed for day 2. The size of this lottery directly translates into the minimum number of options a menu for that day must have. By the nested construction, the number of options offered on day doubles with respect to day .

We can now use the above construction, together with Claim A.1 and Lemma B.1, to show a more general result, Lemma 3.

Proof of Lemma 3.

By simple examination, the revenue curve for day is a line that increases until it reaches and then decreases, so it is maximized at . Consider day . The sequence that generates its revenue curve is non-zero iff has remainder . Since there are values in the sequence, there will be non-zero values. These values alternate between , and each alternation creates one ironed interval: the revenue decreases by , stays at that value for a while, and then increases by again. This gives ironed intervals for the revenue curve of day . Moreover, the last price where the revenue increases is at , making it a valid maximizer. The revenue remains constant from there on, so any higher value is also a maximizer.

For the third point, note that takes values in , alternating between them for intervals whose lengths depend on . , the upper concave hull of , is then a constant function with value everywhere.

We prove the last point by induction, starting from . This holds because . Note that, from our previous claim, is a constant function. Suppose that for some . For , . For , . In either case, the term added to is a constant (and it is the same constant) by the inductive hypothesis, so the claim follows. ∎
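Ironing, as used throughout this appendix, replaces a revenue curve by its upper concave envelope (hull). The ironed intervals are the maximal ranges where the curve lies strictly below that envelope. Below is a minimal sketch for a revenue curve given on consecutive integer prices, computing the envelope with a monotone-chain upper hull. The function name `iron` and the dictionary representation are our own.

```python
from fractions import Fraction
from typing import Dict, List, Optional, Tuple


def iron(R: Dict[int, Fraction]) -> Tuple[Dict[int, Fraction], List[Tuple[int, int]]]:
    """Upper concave envelope of a revenue curve given on consecutive integer
    prices, and its ironed intervals (a, b): maximal runs of prices strictly
    between a and b at which R lies strictly below the envelope."""
    xs = sorted(R)
    hull: List[int] = []
    for x in xs:
        # Monotone-chain upper hull: drop the last vertex unless it lies
        # strictly above the chord from the previous vertex to x.
        while len(hull) >= 2:
            o, a = hull[-2], hull[-1]
            cross = (a - o) * (R[x] - R[o]) - (R[a] - R[o]) * (x - o)
            if cross >= 0:
                hull.pop()
            else:
                break
        hull.append(x)
    env: Dict[int, Fraction] = {hull[-1]: Fraction(R[hull[-1]])}
    for left, right in zip(hull, hull[1:]):
        slope = Fraction(R[right] - R[left], right - left)
        for v in range(left, right):
            env[v] = R[left] + slope * (v - left)
    intervals: List[Tuple[int, int]] = []
    run_start: Optional[int] = None
    for v in xs:
        below = R[v] < env[v]
        if below and run_start is None:
            run_start = v - 1  # the previous price lies on the envelope
        elif not below and run_start is not None:
            intervals.append((run_start, v))
            run_start = None
    return env, intervals
```

On the toy curve 1, 2, 1, 2, 1 over prices 1 to 5, the dip at price 3 is ironed away and the interval (2, 4) is reported. This mirrors the "decrease, then increase back" pattern described in the proof above.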

Lemma B.2.

The series of optimal revenue curves induced by the distributions is such that on any day , an optimal allocation curve as constructed by [FGKK16] is a step function with jumps and takes the following form:

where . Moreover, the prices at which the function jumps on day belong to ironed intervals of the optimal revenue curve of the following day, for all .

Proof.

We again prove this by induction, going from the first day to the last. As stated before, is maximized at and has no ironed intervals, so the optimal allocation curve is a step function at . By construction, this price belongs to an ironed interval of . Thus the base case holds. Suppose that the statement is true for day . We want to understand the optimal allocation curve for day .

First note that the places where the function jumps on day , , belong to ironed intervals of (which are the same intervals as ). This is because at these prices the function takes a value of . The nearest places where the function is non-zero are exactly at and , where it takes values and , meaning we are inside an ironed interval (the revenue decreases and then increases by ). Thus, by the optimal allocation curves of [FGKK16], if on day we offered a price of with positive probability , we must also allocate at prices and on day with positive probability.

The probability for allocating the item with price depends on the parity of . If is odd then the values in this range correspond to a non-ironed interval on day , meaning they preserve the probability of allocation from day . The probability of allocating on the interval with endpoints and , which contains our new interval of interest, is . If is even then we belong to an ironed interval on day , meaning that the probability of allocation is going to be the average of allocating on the two intervals on day that intersect this one. These intervals are and . Thus the probability of allocating at on day is just . ∎

B.2 Perturbed case

In this appendix, we show how to tweak the example in Section 3 so that no optimal auction has menu complexity less than . The problem with the example in Section 3 is that an optimal auction need not follow the allocation suggested by [FGKK16]: we could simply choose larger ironed intervals on every day, spanning the whole range of prices, and (because of the simplicity of the construction) still recover all the revenue. To rule this out, we add a small non-linear term to the revenue curve of each day, while still preserving the “nested” structure of ironed intervals. Consider now the sequences

where and , the weight of the non-linear term, is . The distribution for the first day is the same as in the previous case. Note that the non-linear term is maximized at . Again, Lemma B.1 and Claim A.1 allow us to conclude that there are revenue curves and valid distributions whose changes are dictated by the sequence . We restate the results of Lemma 3 with the appropriate adjustments.

Lemma B.3.

The series of revenue curves induced by the distributions satisfy the following properties:

• is maximized at and has no ironed intervals.

• for has a unique maximizer at price and has ironed intervals.

• has the same ironed intervals as .

Proof.

The first point remains true since we have not changed . Since was a maximizer of the function before adding the non-linear term, and it also maximizes the non-linear term, it remains a maximizer; in fact, it is now the unique maximizer. This implies that . The last point follows from an inductive proof similar to the one in the original case. It is easy to see that is now maximized at , for all . Then, by the characterization from [FGKK16], we have that for any , . ∎

The key property of Lemma B.2 is that the ironed intervals for day are nested in those of day . This property survives the small adjustment of the function, so the statement of the lemma remains valid and the proof of the main theorem of Section 3 goes through. After the adjustment the ironed intervals become unique, and no clever algorithm can take large ironed intervals to avoid a large menu complexity.

Appendix C Omitted proofs of Section 4

C.1 Polygon approximation of concave functions

Polygon approximation of functions is a classic problem in approximation theory, whose central question is how well a class of ‘simple’ functions can approximate an arbitrary function. Usually, the ‘simple’ functions have a property that makes them easy to study, and the approximation scheme ensures that results carry over to arbitrary functions. In polygon approximation, the class of ‘simple’ functions is the set of all continuous piecewise-linear functions. Different error metrics (an error metric defines what counts as a good approximation) are studied, the most common being an additive error defined by a parameter . We define polygon approximation formally and state a celebrated result for additive error.

Definition C.1 (Polygon approximation).

Given a function and a sequence of points , define , the -approximation of , to be the continuous piecewise linear function that passes through .

Before continuing, we briefly state the notation that we are going to use throughout this section. For a concave function , we use to denote the right-derivative and to denote the left derivative. We remind the reader that for a concave function over an interval, both and are well defined at every point (and there are only countably many points for which is not differentiable). The following theorem has been taken from [Rot92] and has been reworded to fit our notation.

Theorem C.1 ([Rot92, BHR91]).

For any and concave such that and , there exists a sequence of at most points such that .

Theorem C.1, as stated above, deals with arbitrary concave functions and is known to be tight [Rot92]. In the FedEx problem, the concave functions that we wish to approximate are monotone revenue curves defined over (for the rest of this section, we use instead of to refer to the right endpoint of the interval, to keep the notation non-specific to the FedEx problem). Being monotone revenue curves, they satisfy many other properties, e.g., , , and . Also, the error that we are interested in is not the additive error of the theorem. Lemma C.3 formalizes the exact guarantee we desire. Note that the error metric we use is an additive-multiplicative hybrid that increases with . To prove this lemma, we apply Theorem C.1 separately on each of the intervals . We show that when the approximating sequence is large on these intervals, must be large. In turn, this gives us more wiggle room in our error, which we exploit to improve the dependence on from polynomial, as in Theorem C.1, to logarithmic.
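To make Definition C.1 concrete, the sketch below greedily builds an additive polygon approximation of a concave function on a finite grid. From the current breakpoint, it extends the segment as far as the chord stays within ε of f at every grid point it spans. This is a simple heuristic of our own for illustration, not the construction behind Theorem C.1, and `greedy_polygon` and `interpolate` are hypothetical names. For a concave f, extending a chord from a fixed left endpoint only lowers it, so the error grows monotonically as the segment is extended.

```python
import bisect
from typing import Callable, List


def greedy_polygon(f: Callable[[float], float], grid: List[float], eps: float) -> List[float]:
    """Breakpoints (a subset of the sorted `grid`) such that the piecewise-linear
    interpolant of f through them is within additive eps of f at every grid point."""
    vals = [f(x) for x in grid]

    def chord_ok(i: int, j: int) -> bool:
        slope = (vals[j] - vals[i]) / (grid[j] - grid[i])
        return all(abs(vals[k] - (vals[i] + slope * (grid[k] - grid[i]))) <= eps
                   for k in range(i + 1, j))

    points = [grid[0]]
    i = 0
    while i < len(grid) - 1:
        j = i + 1  # a chord between adjacent grid points has no interior grid points
        while j + 1 < len(grid) and chord_ok(i, j + 1):
            j += 1
        points.append(grid[j])
        i = j
    return points


def interpolate(f: Callable[[float], float], points: List[float], x: float) -> float:
    """Evaluate the polygon approximation of f through `points` at x in [points[0], points[-1]]."""
    k = min(bisect.bisect_right(points, x) - 1, len(points) - 2)
    x0, x1 = points[k], points[k + 1]
    return f(x0) + (f(x1) - f(x0)) * (x - x0) / (x1 - x0)
```

For example, `greedy_polygon(math.sqrt, [k / 1000 for k in range(1001)], 0.01)` uses only a handful of breakpoints. Most of them cluster near 0, where the slope of the square root changes fastest.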

C.2 Proof of Theorem 4.2

We begin with an easy lemma: Lemma C.2 shows how to lower bound using the derivatives at various points along the way.

Lemma C.2.

For any concave such that , we have

Proof.

Since the function is concave, we get that for all ,

Adding these inequalities for , we get

∎

We now show how to improve the dependence on from linear to logarithmic for our relaxed hybrid guarantee.

Lemma C.3.

For any and concave function such that , , , there exists a sequence of at most points such that for all

Proof.

We divide the domain of the function into the intervals for and the interval . For each interval , we define a sequence that approximates well over this interval. The final sequence is obtained by combining all the sequences .

We start with the interval . The length of is . Since , we can apply Theorem C.1 with and conclude that to approximate up to an additive error on this interval, we need a sequence of at most points.

We now argue for the rest of the domain of . The length of the interval is . Let . Applying Theorem C.1 on with the error parameter gives a sequence at most points such that approximates on up to an additive error.

Combine all the sequences for to get a single sequence such that approximates up to an additive throughout its domain . The number of points in is at most

(Cauchy-Schwarz)

(Definition of )

(Lemma C.2)

∎

It is noteworthy that Lemma C.3 preserves the quadratic dependence on in Theorem C.1 and simply replaces with . The main idea is that we wish to target additive error in the domain of “large ” (as targeting an additive in this range requires linear dependence on , which can be avoided if is big) but additive error in the domain of “small ” (as targeting an additive requires linear dependence on , which is small). So, to get the improved guarantee, our bound needs the flexibility to take either the additive or the multiplicative approximation in the different ranges.

To complete the proof of Theorem 4.2, we additionally provide a much simpler argument showing that the dependence on can be removed at the cost of suboptimal dependence on .

Lemma C.4.

For any and any increasing concave function such that , there exists a sequence of at most points such that for all ,

Proof.

We divide the range of the function into intervals and take one point from each interval. Formally, let , for all . Note that since is continuous (it is concave), .

We define the sequence to be the points followed by . We prove that is the required polygon approximation. For any point , let be such that . Since is increasing, we have . This gives .

Since is a piecewise linear function, we have . Again, we get .

Combining the two inequalities, we get . Since is concave, any piecewise linear segment joining two points on the function lies below the function and we have:

∎
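The construction in the proof of Lemma C.4 can be sketched numerically. The exact thresholds were lost in extraction. We assume, as an illustration, that the breakpoints sit where f crosses the geometric levels f(lo)·(1+ε)^k, and that f(lo) > 0. Bisection locates each crossing, and the resulting interpolant P should satisfy f(x)/(1+ε) ≤ P(x) ≤ f(x), up to floating-point error. `level_set_polygon` and `interpolate` are hypothetical names.

```python
import bisect
from typing import Callable, List


def level_set_polygon(f: Callable[[float], float], lo: float, hi: float,
                      eps: float, iters: int = 200) -> List[float]:
    """Breakpoints lo = x_0 < x_1 < ... < x_m = hi with f(x_k) ~ f(lo) * (1 + eps)**k
    at interior points. Assumes (our assumption) f is continuous, increasing and
    concave on [lo, hi] with f(lo) > 0."""
    points = [lo]
    level = f(lo) * (1 + eps)
    f_hi = f(hi)
    while level < f_hi:
        a, b = points[-1], hi  # invariant: f(a) <= level < f(b)
        for _ in range(iters):  # bisection for the crossing of this level
            mid = (a + b) / 2
            if f(mid) <= level:
                a = mid
            else:
                b = mid
        points.append(a)
        level *= 1 + eps
    points.append(hi)
    return points


def interpolate(f: Callable[[float], float], points: List[float], x: float) -> float:
    """Evaluate the polygon approximation of f through `points` at x in [points[0], points[-1]]."""
    k = min(bisect.bisect_right(points, x) - 1, len(points) - 2)
    x0, x1 = points[k], points[k + 1]
    return f(x0) + (f(x1) - f(x0)) * (x - x0) / (x1 - x0)
```

With f(x) = 1 + ln(1 + x) on [0, 100] and ε = 0.1, this yields 20 breakpoints. The count is governed by the ratio f(hi)/f(lo) (here roughly log base 1.1 of 5.6, plus the two endpoints) rather than by the width of the interval.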

Given any function , we can use either of the two polygon approximation schemes defined by Lemma C.3 and Lemma C.4. Taking the better of the two gives a proof of Theorem 4.2.

Proof of Theorem 4.2.

Let and be the sequences given by Lemma C.3 and Lemma C.4, respectively. Suppose . In this case, the size of is at most . Now suppose . In this case, the size of is at most . Thus, the smaller of the two sequences has size at most . ∎

C.3 Proof of Proposition 4.1

Proof of Proposition 4.1.

Consider any increasing concave function and a polygon approximation of . Let . Since is concave, the function lies ‘below’ the function . Consider now the tangents to the function drawn at points in . Since is concave, these tangents are always ‘above’ the function . The tangents can be stitched together to define a function that is always above the function . Formally, define . Observe that , , and for all , giving us the second bullet. Observe also that has at most different slopes (because and each only contribute one possibility, while the rest contribute two possibilities), so it is piecewise-linear with at most segments.

It remains to show that there exists a polygon approximation we can start with so that there is no polygon approximation of using segments for additive error . Let be points so as to minimize , and call this value (by hypothesis, ). This guarantees us the following property on :

If not, then there is some where . could then be moved a teeny bit to the left (making the approximation within the interval a teeny bit worse) so that remains , but becomes as well (as it also makes the approximation within the interval a teeny bit better, and it was to begin with). Iterating this procedure would then let us make for all . And a similar argument for moving a teeny bit to the right allows us to claim the same for . At the end, we have now made strictly less than , a contradiction.

Now that we have for all , we can immediately see that for any function with for all , and for all , any such that is an -additive polygon approximation of requires at least one point . So also has no polygon approximation using segments for additive error . ∎
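The tangent-stitching step at the start of this proof can be sketched as follows. For simplicity, and unlike the proof, we assume a differentiable concave f, so that left and right derivatives coincide and each breakpoint contributes a single tangent. The resulting g is the minimum of finitely many lines, so it is piecewise linear. It lies above f and touches f at every breakpoint. `tangent_envelope` is a hypothetical name.

```python
from typing import Callable, List


def tangent_envelope(f: Callable[[float], float], df: Callable[[float], float],
                     points: List[float]) -> Callable[[float], float]:
    """g(x) = min_k [f(p_k) + df(p_k) * (x - p_k)]: the minimum of the tangent
    lines to a concave, differentiable f at the given breakpoints."""
    lines = [(df(p), f(p) - df(p) * p) for p in points]  # (slope, intercept)
    return lambda x: min(s * x + c for s, c in lines)
```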

With this in mind, we’ll now restrict attention to piecewise-linear functions with not too many segments. To this end, first consider a piecewise-linear function with just two segments. The first segment is of length with slope . Similarly, the second segment is of length and slope . If we wish to approximate this function using just one segment, the segment should have length and slope . The error in this approximation will be . This calculation says that the error roughly depends on the product of the slope difference and the lengths. For our lower bound, we would wish to have many such segments combined together so that the error in all of the segments is the same. For a concave function, the slope keeps decreasing as grows. Thus, if the error has to be the same in every segment, the lengths of each segment have to keep increasing so that the product is the same. Observe that the function satisfies this property.
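The two-segment calculation can be written out as follows. The labels ℓ_1, ℓ_2 (lengths) and s_1 > s_2 (slopes) are ours, since the original symbols did not survive, and we place the left endpoint of the first segment at the origin without loss of generality.

```latex
h(x) =
\begin{cases}
  s_1 x, & 0 \le x \le \ell_1,\\
  s_1 \ell_1 + s_2 (x - \ell_1), & \ell_1 \le x \le \ell_1 + \ell_2,
\end{cases}
\qquad
\bar{s} = \frac{s_1 \ell_1 + s_2 \ell_2}{\ell_1 + \ell_2},

\max_{0 \le x \le \ell_1 + \ell_2} \bigl( h(x) - \bar{s} x \bigr)
  = h(\ell_1) - \bar{s} \ell_1
  = \ell_1 (s_1 - \bar{s})
  = \frac{\ell_1 \ell_2 \, (s_1 - s_2)}{\ell_1 + \ell_2}.
```

Since ℓ_1ℓ_2/(ℓ_1 + ℓ_2) lies between min(ℓ_1, ℓ_2)/2 and min(ℓ_1, ℓ_2), the error is within a factor of two of (s_1 - s_2)·min(ℓ_1, ℓ_2). This is the sense in which it depends on the product of the slope difference and the lengths.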

With this direction in mind, we now demonstrate a function that we call (for logarithmically-piecewise-linear) that cannot be approximated using fewer than segments. We chose this name because the function has derivative in the range , which is (roughly) a constant factor away from the derivative of the logarithmic function . In fact, our construction can be seen as stitching together appropriate tangents to the logarithmic curve. For a value of , let . Consider the following function defined on :

The function satisfies , and that , . We prove that any good polygon approximation for must have at least segments. Since , this proves that a dependence of is unavoidable if we want to be independent of . Since in this example, , this also shows that the bound in Theorem 4.2 is tight. The idea of our proof is to identify disjoint intervals such that any sequence that defines a good polygon approximation should have at least one point in every such interval. We will identify such intervals for .
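Here is a quick numerical check of the intuition above. It uses hypothetical parameters rather than the exact LPL function, whose definition was lost in extraction. Suppose consecutive segments have lengths r^k and slopes r^(-k), mimicking tangents to a logarithm. Then merging any two consecutive segments into one chord incurs the same error, (r - 1)/(r + 1), for every k. The helper names are ours.

```python
from fractions import Fraction
from typing import List


def two_segment_error(l1: Fraction, s1: Fraction, l2: Fraction, s2: Fraction) -> Fraction:
    """Max gap between a concave two-piece linear function (lengths l1, l2,
    slopes s1 > s2) and the single chord joining its endpoints (at the kink)."""
    return l1 * l2 * (s1 - s2) / (l1 + l2)


def geometric_errors(r: int, n: int) -> List[Fraction]:
    """Merge errors for consecutive segment pairs with length r**k and slope r**-k."""
    lengths = [Fraction(r) ** k for k in range(n)]
    slopes = [1 / length for length in lengths]
    return [two_segment_error(lengths[k], slopes[k], lengths[k + 1], slopes[k + 1])
            for k in range(n - 1)]
```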

Lemma C.5.

Suppose there exists a sequence of points such that for all . The sequence has at least one point in the range for all .

Proof of Lemma C.5.

Suppose for the sake of contradiction there is an for which . We prove that the approximation doesn’t hold at the midpoint of (call this ). Since no point of is in and is concave, is at most . Thus, ∎

The following corollary follows immediately from Lemma C.5.

Corollary C.6.

An additive polygon approximation of above requires at least segments.

Corollary C.6 shows that the bounds of Theorem 4.2 are tight, both in the case that there is no dependence on and in the case that we allow dependence . Moreover, Proposition 4.1 shows that “LPL-like” functions exhibit the worst-case gaps for whatever kinds of guarantees are desired.

C.4 From Polygon Approximation to Approximate Auctions

In this subsection we prove Proposition C.7 and Corollary 4, establishing the connection between “small” polygon approximations and approximate auctions with low menu complexity. The high-level idea of the proof of Proposition C.7 is that the revenue generated by a mechanism on days through is equal to a weighted sum of the function evaluated at points where the allocation curve changes (see subsection 2.1). If a good polygon approximation of exists, then every point where the allocation curve changes can be replaced by the endpoints of the segment of the polygon approximation that contains it. The error in the polygon approximation becomes the loss in revenue after this transformation, and the number of segments (or endpoints) in the polygon approximation becomes the menu complexity of the transformed mechanism. Thus, a small and accurate polygon approximation yields an almost optimal mechanism with low menu complexity. The proof of Corollary 4 applies Proposition C.7 iteratively from the first day to the last while keeping track of the total revenue lost compared to the optimum over all days.

Proposition C.7.

Consider a FedEx instance with deadlines. For , let be the function defined in 2.3, and let be a sequence of points in such that for all , we have . Then for any mechanism , there exists a mechanism with the following properties:

• The menu offered on days is the same as .

• The -deadline menu complexity is at most .

• The revenue is at least .

Here, denotes the optimal revenue for any mechanism that offers the same menu as on deadlines .

Intuitively, every segment of corresponds to a different menu option in the approximate mechanism, and the fact that and are close for all implies that the revenue of the mechanism based on isn’t far from optimal (by subsection 2.1). The additional factor of two comes from the fact that is ironed, so to “set price ” in , we might need to randomize over two prices in .

Proof of Proposition C.7.

We assume without loss of generality that the mechanism prices optimally on days through (given the prices it sets on days through ). Thus, the revenue generated by is . The menu offered by any such mechanism on day can be seen as a distribution over prices . The prices are all at most , as behaves optimally on day (see A.1). Since the mechanism behaves optimally from day onwards, the revenue generated by on days through is by subsection 2.1.

Let be the sequence of points such that for all . Since is the upper concave envelope of , for every point , there exist points and in such that , , and for any , we have . For all points , we add the points and to and remove the point . Adding the points and can only improve the quality of the polygon approximation. Further, since is linear on the interval that contains , removing does not affect the quality of the polygon approximation. Thus, the new set satisfies

(2)

Further, by construction all elements satisfy

(3)

The set now has at most points. For any , we let (resp. ) denote the largest (resp. smallest) value in that is at most (resp. at least) . With this notation, if , then we have that

(4)

We are now ready to define a mechanism with high revenue and low -deadline menu complexity. The mechanism is defined as follows:

• Mimic on days .

• On day , if has mass , add mass to and mass to . This generates a new distribution on which defines our menu for day .

• Price optimally from day onwards, given the prices on days through .

Since the menu offered on day is defined by a distribution over , its size is at most , the size of . We finish the proof by showing that is feasible and has high revenue.

Claim C.1.

The mechanism defined above is feasible.

Proof.

We only need to check the intra-day incentive compatibility constraints. For days through , mimics , so these constraints are satisfied. From day onwards, prices optimally, so it satisfies the constraints. It remains to argue about the constraints for day . We wish to prove that the utility of any bidder with type in mechanism is at least that of the bidder with type . We know that the utility of any bidder with type in mechanism is at least that of the bidder with type . Since the mechanisms and are the same on days through , it suffices to prove that the utility of any bidder with type in mechanism is at least that of the bidder with type in mechanism . This follows directly from the expression for utility . We have

(Definition of )

(Definition of )

(Since )

∎

Claim C.2.

The revenue generated by is at least .

Proof.

First, we note and are identical for the first days. Thus, the revenue generated on the first days is the same for both and . Since prices optimally day onwards, the revenue generated on day through is . We have

(Equation 3)

(Definition of )

(Equation 4)

(Equation 2)

This analysis proves that the total revenue generated by is at most less than that generated by . In other words, it is at least .

∎

These two claims combined suffice to prove the proposition. ∎
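The day-i rounding step used to define the mechanism above can be sketched as follows. The exact weights were lost in extraction. We assume the mass on each price is split between its neighbouring breakpoints by linear-interpolation weights. This split preserves total mass, the expected price, and the expected value of any function that is linear between consecutive breakpoints, such as a polygon approximation. The function name and the dictionary representation of a lottery are hypothetical.

```python
import bisect
from fractions import Fraction
from typing import Dict, List


def round_lottery(lottery: Dict[Fraction, Fraction],
                  S: List[Fraction]) -> Dict[Fraction, Fraction]:
    """Move the mass on each price p to its neighbours p_minus <= p <= p_plus in
    the sorted breakpoint list S, split by (assumed) linear-interpolation weights."""
    new: Dict[Fraction, Fraction] = {}
    for p, mass in lottery.items():
        if not S[0] <= p <= S[-1]:
            raise ValueError("every price must lie between the first and last breakpoint")
        k = bisect.bisect_left(S, p)
        if S[k] == p:  # p is already a breakpoint: keep its mass in place
            new[p] = new.get(p, Fraction(0)) + mass
            continue
        lo, hi = S[k - 1], S[k]
        w_hi = (p - lo) / (hi - lo)  # chosen so that (1 - w_hi) * lo + w_hi * hi == p
        new[lo] = new.get(lo, Fraction(0)) + mass * (1 - w_hi)
        new[hi] = new.get(hi, Fraction(0)) + mass * w_hi
    return new
```

For example, with breakpoints 0, 2, 5 and a lottery putting mass 1/2 on each of the prices 1 and 4, the rounded lottery puts masses 1/4, 5/12, and 1/3 on 0, 2, and 5. The expected price stays 5/2.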

Having described the construction of mechanisms with low -deadline menu complexity, we repeat this construction times (once for each deadline) to get our approximately optimal mechanism. Below, we show how to use Proposition C.7 to go from the optimal mechanism to an entire menu of low menu complexity. Thus, the total revenue loss on days through is at most . Using the above result, we prove Corollary 4.

Proof of Corollary 4.

We prove that for all , there exists a mechanism with -deadline menu complexity of for all whose revenue is at least . For , this is the same as the statement of the corollary. This proof will proceed via induction on . For , the statement is trivial. We assume the statement for and prove it for .

Let be the mechanism that is promised by the induction hypothesis and let be its revenue.

We invoke Proposition C.7 on and to get a mechanism with the following properties:

• The menu offered by on days is the same as .

• The -deadline menu complexity is at most .

• The revenue is at least .

Property and imply that the -deadline menu complexity of is at most for all . The revenue of is at least . This completes the proof.

∎

Finally, we may complete the proof of Theorem 1.2.

Proof of Theorem 1.2.

For any FedEx instance where the bidder’s types have integral support , observe that the optimal revenue is at least . This is because is the revenue generated by the auction that offers the price on all deadlines. Further, for all deadlines , .

Note that all revenue curves, when restricted to , satisfy the requirements of Theorem 4.2. This implies that for all , there exist sequences of at most points such that for all

We set and use Corollary 4 to get that there exists a mechanism with menu complexity at most whose revenue is at least .

∎

Appendix D Analysis omitted in Section 5

D.1 The instance

In this section, we describe our instance of the FedEx problem that is hard to approximate using small menus. Before delving into the details of our parameters, we give a brief high level intuition.

In Section 4.1, we described a function that is hard to approximate by polygons. The function provided a lower bound for Theorem 4.2 that was tight (modulo constant factors). The idea behind this example was to have a piecewise-linear function whose constituent segments have slopes that decrease gradually in a controlled way. Because of the intimate connection between polygon approximation and menu complexity (Proposition C.7), similar ideas should also help in constructing a FedEx instance that is hard to approximate using small menus.

We expect the construction of the hard FedEx instance to involve many additional complications. They arise from the fact that each revenue curve in a FedEx instance is formed by appropriately adding revenue curves of multiple distributions (see Definition 2.3) and is obtained by ‘ironing’ . Since the size of the optimal menu only increases when prices ‘split’ across ironed intervals, it is necessary to have multiple (polynomially many, in our case) ironed intervals in the function .

The presence of multiple ironed intervals in is, however, not sufficient, and it is easy to see why. Imagine an example where all the revenue curves are generated from the same underlying distribution. In this case, all the curves might have the same ironed intervals. (This is not always true, since whether a particular interval is ironed for a given deadline depends on many other factors, e.g. the distribution across the various days.) If the ironed intervals in the curves for all correspond, then even the revenue-optimal auction would require only price on each day. This is because only prices that lie inside an ironed interval ‘split’. If all the ironed intervals correspond, then no price can ever lie inside a interval. The solution is to have ironed intervals ‘nested’ so that the ends of the interval on day lie inside the intervals on day .

The two points raised above concern splitting in general and also held for our worst-case example described in Section 3. Since we want a lower bound against all approximately optimal auctions, we also want the slopes of consecutive segments to differ substantially (as in ). This ensures that a good approximation needs at least one menu option for each segment.

With these ideas in mind, we describe our hard instance. We assume that the bidder’s type is drawn from a distribution supported on . The value of in our example is and the distribution over days is uniform. We explicitly do not include the in our calculations: since they only scale all revenue curves by , they do not affect the quality of a approximation. The marginal distribution on day is designed so that the revenue curve has segments. The slope of the first segments is geometrically decreasing with common ratio . This ratio is large enough that a approximation requires one menu option for each segment. It also satisfies the following claim.

Claim D.1.

For , .

Proof.

∎

As we define the slopes to be in geometric progression, the geometric sum appears multiple times in our description and its analysis. To avoid writing out all the terms everywhere, we define and for all .
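To make the geometric progression concrete, the Python sketch below builds a concave piecewise-linear curve whose consecutive unit-length segments have slopes 1, q, q^2, ..., for a hypothetical ratio 0 < q < 1, and checks the standard closed form S_k = 1 + q + ... + q^(k-1) = (1 - q^k)/(1 - q) for the partial sums. The names `q`, `k`, and `S_k`, the unit segment lengths, and the first slope of 1 are illustrative assumptions rather than the exact parameters of our instance.

```python
from fractions import Fraction

def partial_sum(q, k):
    """S_k = 1 + q + ... + q**(k-1), summed term by term."""
    return sum(q ** j for j in range(k))

def closed_form(q, k):
    """The standard closed form (1 - q**k) / (1 - q), valid for q != 1."""
    return (1 - q ** k) / (1 - q)

def geometric_curve(q, k):
    """Breakpoints of a curve on [0, k] whose j-th unit segment has slope q**j."""
    points = [(0, Fraction(0))]
    for j in range(k):
        x, y = points[-1]
        points.append((x + 1, y + q ** j))
    return points

q, k = Fraction(1, 2), 4
curve = geometric_curve(q, k)
slopes = [y2 - y1 for (_, y1), (_, y2) in zip(curve, curve[1:])]
print(slopes)  # each slope is q times the previous one
print(curve[-1][1], partial_sum(q, k), closed_form(q, k))  # 15/8 15/8 15/8
```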

The segment of will have slope where . This last segment will split into two segments on day . The first segment will have slope , continuing the geometric progression. The last segment will have slope , allowing it to be split similarly in the future. In order to ensure this behavior of , we need the slope of to be for this segment, where

| (5) |

This value of satisfies the following two properties:

Claim D.2.

is an increasing sequence for .

Claim D.3.

For , .

We now define the marginal distribution on the deadline. We first write an expression for the distribution and then calculate the revenue curve of the distribution . We omit the proof that is a valid distribution; it uses and (Claim D.1 and Claim D.3 help prove the latter) and is straightforward for the interested reader. The distribution over types on the day is given by :

| (6) |

The revenue curve for this distribution is given by :

| (7) |

Using Definition 2.3, we now calculate the combined revenue curve for days through . We prove by induction that has the desired form.

Theorem D.1.

| (8) |

Proof.

Proof by backwards induction. The base case is easily verified. For the inductive step, we first calculate . This is done by ironing the curve defined in Equation 8. We provide an expression for and prove that it is correct in Lemma D.2 in Subsection D.2 of this Appendix. The expression is:

| (9) |

Note that this function is maximized at . We now apply Definition 2.3, which states:

Using Equation 7 and Equation 9 in this gives:

which is of the form required by the induction hypothesis. ∎

It is relatively easy to find the optimal auction in our instance using Fiat et al.’s algorithm [FGKK16]. The optimal auction closely follows the behavior of the curves . It has one menu option on day . On each subsequent day, the last menu option splits into two options, while all the other options are carried over as is. For example, the price splits into two prices of and on day . The price is pushed forward to all of the days, while the price is split into and . The price is pushed through, while the price is again split into two on day . This process continues until the day. The menu offered on day has options and .
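The splitting pattern described above can be traced with a small simulation. The Python sketch below tracks only the combinatorial shape of the menus, not the prices, which are determined by the instance. Each option is labelled by a hypothetical pair (day on which it first appears, position). On every day after the first, the last option is replaced by two new ones while all others are carried over. Under this reading, the menu on day i has exactly i options.

```python
def menus_by_day(n_days):
    """Shape of the optimal menus in the hard instance (labels are hypothetical).

    Day 1 offers a single option. On each later day the last option splits into
    two new options, and every other option is carried over unchanged."""
    menus = [[(1, 0)]]
    for day in range(2, n_days + 1):
        carried = menus[-1][:-1]          # all options except the last one
        split = [(day, 0), (day, 1)]      # the last option splits in two
        menus.append(carried + split)
    return menus

for day, menu in enumerate(menus_by_day(4), start=1):
    print(day, len(menu), menu)
```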

Remark D.1.

The optimal revenue in this setting is at most , which is between and . We denote it by throughout.

D.2 Omitted details from the proof of Theorem D.1

In this subsection we show that is the upper concave envelope of , where both functions are as defined in Appendix D. The proof consists of showing that the two functions agree on the points where changes, and that is greater than on the other points. This, combined with the fact that is linear in between the points where it changes, suffices to show the claim.
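The proof strategy above can be checked mechanically on small examples: a concave candidate that agrees with the curve at its own breakpoints (including both endpoints) and dominates the curve everywhere must be its upper concave envelope. The Python sketch below computes the upper concave envelope, i.e. the ironed curve, of a curve given by its values on consecutive integers, using the upper-hull pass of Andrew's monotone chain algorithm. It also reports the ironed intervals and verifies the three conditions for a candidate. All function names are hypothetical, and the example curve is arbitrary rather than the curves of Equation 8 and Equation 9.

```python
from fractions import Fraction

def upper_concave_envelope(points):
    """Vertices of the upper concave envelope of points sorted by x."""
    hull = []
    for px, py in points:
        while len(hull) >= 2:
            (x1, y1), (x2, y2) = hull[-2], hull[-1]
            # Drop hull[-1] if it lies on or below the segment hull[-2] -> (px, py).
            if (y2 - y1) * (px - x1) <= (py - y1) * (x2 - x1):
                hull.pop()
            else:
                break
        hull.append((px, py))
    return hull

def evaluate(vertices, x):
    """Value at x of the piecewise-linear function through `vertices`."""
    for (x1, y1), (x2, y2) in zip(vertices, vertices[1:]):
        if x1 <= x <= x2:
            return y1 + (y2 - y1) * Fraction(x - x1, x2 - x1)
    raise ValueError("x outside the domain")

def ironed_intervals(points, vertices):
    """Envelope segments under which the curve lies strictly below somewhere."""
    curve = dict(points)
    return [(x1, x2) for (x1, _), (x2, _) in zip(vertices, vertices[1:])
            if any(curve[x] < evaluate(vertices, x) for x in range(x1 + 1, x2))]

def certifies_envelope(points, vertices):
    """The three conditions used in the proof of Lemma D.4."""
    curve = dict(points)
    slopes = [(y2 - y1) / (x2 - x1)
              for (x1, y1), (x2, y2) in zip(vertices, vertices[1:])]
    concave = all(s >= t for s, t in zip(slopes, slopes[1:]))
    agrees = all(x in curve and curve[x] == y for x, y in vertices)
    dominates = all(evaluate(vertices, x) >= y for x, y in points)
    return concave and agrees and dominates

points = [(x, Fraction(y)) for x, y in enumerate([0, 3, 3, 5, 5])]
hull = upper_concave_envelope(points)
print(hull, ironed_intervals(points, hull))
```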

Lemma D.2.

For all , we have .

Proof.

We prove this lemma using four claims, all of which establish for different domains of .

Claim D.4.

For all , we have .

Proof.

By verification: . ∎

Claim D.5.

For all , we have .

Proof.

By verification:

∎

Claim D.6.

.

Proof.

| (Equation 5) | ||||

∎

Claim D.7.

.

Proof.

This is easily verifiable from the description of the functions. ∎

∎

Lemma D.3.

For all , we have .

Proof.

Note that both functions are piecewise linear. The function changes form at points . The function changes form at points in . To prove this lemma, it is sufficient to show that for all . If , this follows from subsection D.2. It remains to prove it for .

If , we get

| (Equation 5) | ||||

| (D.3) | ||||

If , we get

as the other term in the definition of is positive.

∎

Lemma D.4.

The upper concave envelope of the function defined in Equation 8 is the function defined in Equation 9.

Proof.

By definition, is a continuous piecewise linear function whose successive segments have decreasing slopes. Thus, it is concave. subsection D.2 shows that the function is always larger than . Note that the function is piecewise linear and changes form at points , and . subsection D.2 shows that at all these points, the value of is the same as that of . This finishes the proof. ∎

D.3 Cleaning

We start by fixing the notation used in this subsection. The letters , , , and are reserved for the revenue curves and the type distribution of our FedEx instance. These are described in Equation 8, Equation 9, and Equation 6 respectively ( is assumed to be uniform and is omitted throughout). The letters and denote the allocation curves of mechanisms, i.e., the allocation curve on day is denoted by or . Thus, for , a bidder that reports type gets the item with probability . We assume without loss of generality that and . In line with the notation used for probability distributions, we reserve the lower case for the ‘allocation density’, i.e., . With this notation, the menu complexity of a mechanism is the number of such that . Unless specified otherwise, all statements about clean mechanisms pertain to the particular instance of the FedEx problem defined in subsection D.1.
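With this notation, computing the menu complexity of a single day's menu amounts to counting the positive jumps of the allocation curve, since each such jump is a distinct (allocation, price) option. The Python sketch below assumes, purely for illustration, that a day's allocation curve is given as a list `F` with `F[v]` the allocation probability of type `v = 0, 1, ..., H`, and that `F(-1) = 0`. The function names are hypothetical.

```python
from fractions import Fraction

def allocation_density(F):
    """f(v) = F(v) - F(v - 1), taking F(-1) = 0."""
    return [F[v] - (F[v - 1] if v > 0 else 0) for v in range(len(F))]

def menu_complexity(F):
    """Number of types v at which the allocation density is positive."""
    return sum(1 for mass in allocation_density(F) if mass > 0)

# Types 0..4: allocation jumps to 1/2 at type 2 and to 1 at type 4.
F = [0, 0, Fraction(1, 2), Fraction(1, 2), 1]
print(allocation_density(F), menu_complexity(F))
```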

In the FedEx problem, menus on day are constrained by the menu offered on day . These constraints are represented by the downwards IC constraints in Equation 1. In this sense, some menus on day are strictly more constraining than others. For example, offering a price of on day is strictly more constraining than offering a price of , because the bidder’s utility is strictly higher under the former. Similarly, a distribution over two prices of and with probabilities and respectively is strictly more constraining than offering the single price . This reasoning also applies when the price has a small mass in a larger distribution. If a feasible, less constraining menu generates higher revenue on a particular day than a more constraining one, the latter can be replaced by the former without violating any of the feasibility constraints. This change can only increase the revenue generated.
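The notion of one menu being more constraining than another can be phrased through the utilities it gives to every type. The Python sketch below treats a menu as a lottery over prices (a hypothetical `dict` from price to probability) and computes the expected utility E[(v - P)^+] of a bidder with value v facing the random price P; giving every type at least as much utility means being at least as constraining. The specific prices in the example above are not reproduced here, so the sketch assumes that the single price equals the lottery's mean. In that case the lottery is at least as constraining by Jensen's inequality, because (v - p)^+ is convex in p.

```python
from fractions import Fraction

def utility(price_lottery, v):
    """Expected utility E[(v - P)^+] of a bidder with value v facing price P."""
    return sum(prob * max(v - p, 0) for p, prob in price_lottery.items())

def at_least_as_constraining(menu_a, menu_b, values):
    """True if menu_a gives every type in `values` at least the utility of menu_b."""
    return all(utility(menu_a, v) >= utility(menu_b, v) for v in values)

single = {5: Fraction(1)}
lower = {4: Fraction(1)}
lottery = {3: Fraction(1, 2), 7: Fraction(1, 2)}   # mean price 5 (assumed example)
values = range(0, 11)
print(at_least_as_constraining(lower, single, values))    # True
print(at_least_as_constraining(lottery, single, values))  # True
print(at_least_as_constraining(single, lottery, values))  # False
```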

In the problem instance we consider, the revenue curve for a given day is increasing and touches the upper concave envelope at all points in . Thus, if a price in this range is offered with probability on day , the least constraining and revenue maximizing option on day is to offer the same price with the same probability. We define ‘clean’ mechanisms to be mechanisms that satisfy this property. Formally,

Definition D.1 (Clean mechanisms).

Let be the allocation density for day of a mechanism . The mechanism is said to be clean if for all days and any point , we have

We do not require equality in the expression above because the (allocation) mass from points higher than may be moved to lower points, increasing the value of there. We prove in Theorem D.8 that an arbitrary mechanism can be converted to a clean mechanism that is ‘intimately’ connected to the original mechanism. We state a direct corollary here that is obtained by setting and for all in the statement of the theorem.

Corollary D.5 (Cleaning).

Consider any mechanism for the FedEx instance described in subsection D.1. There exists a mechanism such that:

• mimics on day .
• is clean.
• For all days , we have .
• For all days , .

Here, denotes second-order stochastic dominance.

D.4 The statement and proof of Theorem D.8

The results in this subsection are auxiliary and can be skipped without loss of comprehension.

Consider any mechanism . Let denote the allocation function of mechanism on day . We know from the IC constraints that is monotone non-decreasing for all . Without loss of generality, assume that . We interpret as the cumulative distribution function of some random variable. The leftwards IC constraints say that for all , we have,

| (10) |

We now define second-order stochastic dominance and state a well-known characterization of it.

Definition D.2.

Consider two discrete random variables and supported on the non-negative integers. (, are the cdfs of the random variables). We say has (second-order) stochastic dominance over if and only if for all ,

We denote this by .
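The Python sketch below checks second-order stochastic dominance for distributions on {0, ..., n}. It uses the standard criterion that every partial sum of the dominating cdf is at most the corresponding partial sum of the dominated cdf; we assume this is the inequality meant in Definition D.2. Distributions are given as hypothetical probability lists with `pmf[k] = Pr[X = k]`, and the function name is illustrative.

```python
from fractions import Fraction
from itertools import accumulate

def ssd_dominates(pmf_x, pmf_y):
    """True if X second-order stochastically dominates Y, i.e. for every k,
    F_X(0) + ... + F_X(k) <= F_Y(0) + ... + F_Y(k)."""
    n = max(len(pmf_x), len(pmf_y))
    pmf_x = list(pmf_x) + [0] * (n - len(pmf_x))
    pmf_y = list(pmf_y) + [0] * (n - len(pmf_y))
    # Beyond the supports both cdfs equal 1, so checking k < n suffices.
    sums_x = accumulate(accumulate(pmf_x))
    sums_y = accumulate(accumulate(pmf_y))
    return all(a <= b for a, b in zip(sums_x, sums_y))

point_mass = [0, 0, Fraction(1)]                  # X = 2
spread = [0, Fraction(1, 2), 0, Fraction(1, 2)]   # Y = 1 or 3, same mean
print(ssd_dominates(point_mass, spread))  # True
print(ssd_dominates(spread, point_mass))  # False
```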

We have the following well-known result on necessary and sufficient conditions for second-order stochastic dominance:

Theorem D.6.

Let and be two random variables with distributions and respectively. The following statements are equivalent:

• .
• There exist random variables and such that , with always at most and such that .
• For any concave and increasing function , it holds that .

Here, denotes equivalence in distribution.
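As a numerical sanity check of the third condition of Theorem D.6, and not a proof, the Python sketch below compares expectations of a few concave increasing functions under a point mass and a mean-preserving spread of it, a pair in which the point mass dominates. It also shows that the comparison can fail for a convex function, which is why concavity is required. The example distributions and the set of test functions are arbitrary choices for illustration.

```python
import math
from fractions import Fraction

def expectation(pmf, u):
    """E[u(X)] for X with Pr[X = k] = pmf[k]."""
    return sum(p * u(k) for k, p in enumerate(pmf))

point_mass = [0, 0, Fraction(1)]                  # X = 2
spread = [0, Fraction(1, 2), 0, Fraction(1, 2)]   # Y = 1 or 3, same mean

concave_increasing = {
    "min(k, 2)": lambda k: min(k, 2),
    "sqrt(k)": math.sqrt,
    "k": lambda k: k,
    "1 - 2^-k": lambda k: 1 - 2.0 ** (-k),
}
for name, u in concave_increasing.items():
    print(name, float(expectation(point_mass, u)), float(expectation(spread, u)))

# A convex function can reverse the inequality: E[X^2] = 4 < E[Y^2] = 5.
print(expectation(point_mass, lambda k: k * k), expectation(spread, lambda k: k * k))
```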

We proceed to prove our main technical lemma.

Lemma D.7.

If any two random variables and defined on satisfy , then for any , there exists a such that and for all , we have .

Furthermore, if is a function that is increasing on such that for all , then .

Proof.

Theorem D.6 promises random variables and such that , where and . Our goal is to construct a random variable such that . We establish both stochastic dominance relations via Theorem D.6. To this end, we first construct random variables and and use these to define . Let

| (11) |

Note that . Next, define

| (12) |

We prove that in Claim D.9, paving the way for an application of Theorem D.6. For that, we need another result.

Claim D.8.

For any , the event happens if and only if .

Proof.

First, assume . This means that and hence, by Equation 11, . However, implying that .

Second, assume that . Again, we have and hence, by Equation 11, . ∎

Claim D.9.

Proof.

Fix where is arbitrary. If , then and the claim follows.

If, on the other hand, , then Claim D.8 applies. Thus, by Equation 12, we have

∎

Define . Then, Claim D.9 means that . Now, we wish to prove that . Our strategy is to again employ Theorem D.6. For this, we define and .

| (13) |

Note that

Claim D.10.

.

Proof.

We prove that . If , then and this is trivial. Otherwise, . ∎

We next define as

| (14) |

Claim D.11.

.

Proof.

Fix where is arbitrary. Since , this is equivalent to saying that .

We prove that by arguing and . We first deal with the former. If , then (Equation 12) and hence and we are done.

Now, suppose . In this case, (Equation 12) and hence if and only if .

Note that is the same as which happens if and only if (D.8). Thus,

∎

Since by Equation 13 and Equation 14, we have that by Claims D.10 and D.11.

Consider the event for . Since , both and are . Thus, . Hence, .

Finally, consider a function such that for all . We wish to prove that . We break the proof into cases, each handled by one of the following claims.

Claim D.12.

Let be the event . We have

Proof.

By Claim D.8, can equivalently be described as . We use these two forms interchangeably in this proof. If occurs, and (Equation 11 and Equation 12). Thus, and the claim follows. ∎

Claim D.13.

Let be the event . We have

Proof.