The Nature of Temporal Difference Errors in

Multi-step Distributional Reinforcement Learning

Abstract

We study the multi-step off-policy learning approach to distributional RL. Despite the apparent similarity between value-based RL and distributional RL, our study reveals intriguing and fundamental differences between the two cases in the multi-step setting. We identify a novel notion of path-dependent distributional TD error, which is indispensable for principled multi-step distributional RL. The distinction from the value-based case bears important implications on concepts such as backward-view algorithms. Our work provides the first theoretical guarantees on multi-step off-policy distributional RL algorithms, including results that apply to the small number of existing approaches to multi-step distributional RL. In addition, we derive a novel algorithm, Quantile Regression-Retrace, which leads to a deep RL agent QR-DQN-Retrace that shows empirical improvements over QR-DQN on the Atari-57 benchmark. Collectively, we shed light on how unique challenges in multi-step distributional RL can be addressed both in theory and practice.

1 Introduction

The return is a fundamental concept in reinforcement learning (RL). In general, the return is a random variable, whose distribution captures important information such as the stochasticity in future events. While the classic view of value-based RL typically focuses on the expected return (bertsekas1996neuro, ; sutton1998, ; szepesvari2010algorithms, ), learning the full return distribution is of both theoretical and practical importance (morimura2010nonparametric, ; morimura2012parametric, ; bellemare2017distributional, ; yang2019fully, ; bodnar2019quantile, ; wurman2022outracing, ; bdr2022, ).

To design efficient algorithms for learning return distributions, a natural idea is to construct distributional equivalents of existing multi-step off-policy value-based algorithms. In value-based RL, multi-step learning tends to propagate useful information more efficiently and off-policy learning is ubiquitous in modern RL systems. Meanwhile, the return distribution shares inherent commonalities with the expected return, thanks to the close connection between the distributional Bellman equation (morimura2010nonparametric, ; morimura2012parametric, ; bellemare2017distributional, ; bdr2022, ) and the celebrated value-based Bellman equation (sutton1998, ). The Bellman equation is foundational to value-based RL algorithms, including many multi-step off-policy methods (precup2001off, ; harutyunyan2016q, ; munos2016safe, ; mahmood2017multi, ). Due to the apparent similarity between distributional and value-based Bellman equations, should we expect key value-based concepts and algorithms to seamlessly transfer to distributional learning?

Our study indicates that the answer is no. There are critical differences between distributional and value-based RL, which requires a distinct treatment of multi-step learning. Indeed, thanks to the focus on expected returns, the value-based setup offers many unique conceptual and computational simplifications in algorithmic design. However, we find that such simplifications do not hold for distributional learning. Multi-step distributional RL requires a deeper look at the connections between fundamental concepts such as -step returns, TD errors and importance weights for off-policy learning. To this end, we make the following conceptual, theoretical and algorithmic contributions:

Distributional TD error.

We demonstrate the emergence of a novel notion of path-dependent distributional TD error (Section 4). Intriguingly, as the name suggests, path-dependent distributional TD errors are path-dependent, i.e., distributional TD errors at time depend on the sequence of immediate rewards . This differs from value-based TD errors, which are path-independent. We will show that the path-dependency property is not an artifact, but rather a fundamental property of distributional learning. We show numerically that naively constructing certain path-independent distributional TD errors does not produce convergent algorithms. The path-dependency property also has conceptual and computational impacts on forward-view estimates and backward-view algorithms.

Theory of multi-step distributional RL.

We derive distributional Retrace, a novel and generic multi-step off-policy operator for distributional learning. We prove that distributional Retrace is contractive and has the target return distribution as its fixed point. Distributional Retrace interpolates between the one-step distributional Bellman operator (bellemare2017distributional, ) and Monte-Carlo (MC) estimation with importance weighting (chandak2021universal, ), trading-off the strengths from the two extremes.

Approximate multi-step distributional RL.

Finally, we derive Quantile Regression-Retrace, a novel algorithm combining distributional Retrace with quantile representations of distributions (dabney2018distributional, ) (Section 5). One major technical challenge is to define the quantile regression (QR) loss against signed measures, which are unavoidable in sample-based settings. We bypass the issue of ill-defined QR loss and derive unbiased stochastic estimates to the QR loss gradient. This leads up to QR-DQN-Retrace, a deep RL agent with performance improvements over QR-DQN on Atari-57 games.

In Figure 1, we illustrate how the back-up target is computed for multi-step distributional RL. In summary, we take our findings to demonstrate how the set of unique challenges presented by multi-step distributional RL can be addressed both theoretically and empirically. Our study also opens up many exciting research pathways in this domain, paving the way for future investigations.

2 Background

Consider a Markov decision process (MDP) represented as the tuple where is the state space, the action space, the reward kernel (with a finite set of possible rewards), the transition kernel and the discount factor. In general, we use denote a distribution over set . We assume the reward to take a finite set of values mainly because it is notationally simpler to present results; it is straightforward to extend our results to the general case. Let be a fixed policy. We use to denote a random trajectory sampled from , such that . Define as the random return, obtained by following starting from . The Q-function is defined as the expected return under policy . For convenience, we also adopt the vector notation . Define the one-step value-based Bellman operator such that where . The Q-function satisfies and is also the unique fixed point of .

2.1 Distributional reinforcement learning

In general, the return is a random variable and we define its distribution as . The return distribution satisfies the distributional Bellman equation (morimura2010nonparametric, ; morimura2012parametric, ; bellemare2017distributional, ; rowland2018analysis, ; bdr2022, ),

| (1) |

where is the pushforward operation defined through the function (rowland2018analysis, ). For convenience, we adopt the notation . Throughout the paper, we focus on the space of distributions with bounded support . Let be any distribution vector, we define the distributional Bellman operator as follows (rowland2018analysis, ; bdr2022, ),

| (2) |

Let be the collection of return distributions under ; the distributional Bellman equation can then be rewritten as . The distributional Bellman operator is -contractive under the supremum -Wasserstein distance (dabney2018distributional, ; bdr2022, ), so that is the unique fixed point of . See Appendix B for details of the distance metrics.

2.2 Multi-step off-policy value-based learning

We provide a brief background on the value-based multi-step off-policy setting as a reference for the distributional case discussed below. In off-policy learning, the data is generated under a behavior policy , which potentially differs from target policy . The aim is to evaluate the target Q-function . As a standard assumption, we require . Let be the step-wise importance sampling (IS) ratio at time step . Step-wise IS ratios are critical in correcting for the off-policy discrepancy between and .

Let be a time-dependent trace coefficient. We denote and define by convention. Consider a generic form of the return-based off-policy operator as in (munos2016safe, ),

| (3) |

In the above and below, we omit the notation conditioning on for conciseness. The general form of encompasses many important special cases: when on-policy and , it recovers the Q-function variant of TD() (sutton1998, ; harutyunyan2016q, ); when , it recovers a specific form of Retrace (munos2016safe, ); when , it recovers the importance sampling (IS) operator. The back-up target is computed as a mixture over TD errors , each calculated from the one-step transition data. We also define the discounted TD error , which can be interpreted as the difference between -step returns from two time steps and , as we discuss in Section 4. As we will detail, the property of marks a significant difference from the distributional RL setting.

By design, has as the unique fixed point. Multi-step updates make use of rewards from multiple time steps, propagating learning signals more efficiently. This is reflected by the fact that is -contractive with (munos2016safe, ) and often contracts to faster than the one-step Bellman operator . Our goal is to design distributional equivalents of multi-step off-policy operators, which can lead to concrete algorithms with sample-based learning.

3 Multi-step off-policy distributional reinforcement learning

We now present the core theoretical results relating to multi-step distributional operators. In general, the aim is to evaluate the target distribution with access to off-policy data generated under .

Below, we use to denote the partial sum of discounted rewards between two time steps . We define the generic form of multi-step off-policy distributional operator such that for any , its back-up target is computed as

| (4) |

As an effort to simplify the naming, we call the distributional Retrace operator. Distributional Retrace only requires and represents a large family of distributional operators. Throughout, we will heavily adopt the pushforward notations. This is mainly because instead of directly working with the random variable , we find it much more convenient to express various important multi-step operations with pushfoward notations.

The back-up target is written as a weighted sum of the path-dependent distributional TD errors , which we extensively discuss in Section 4. Though the form of seems to bear certain similarities to the value-based operator in Equation (3), the critical differences lie in subtle definitions of the distributional TD errors and where to place the traces for off-policy corrections. We resume to unpack the insights entailed by the design of the operator in Section 4.

Below, we first present theoretical properties of the distributional Retrace operator. We start with a key property which underlies many ensuing theoretical results. Given a fixed -step reward sequence and a fixed state-action pair , we call pushfoward distributions of the form the -step target distributions. Our result shows that the back-up target of Retrace is a convex combination of -step target distributions with varying values of .

Lemma 3.1.

(Convex combination) The Retrace back-up target is a convex combination of -step target distributions. Formally, there exists an index set such that where , and are -return target distributions.

Since , we can measure the contraction of under probability metrics.

Proposition 3.2.

(Contraction) is -contractive under supremum -Wasserstein distance, where .

The contraction rate of the distributional Retrace operator is determined by its effective horizon. At one extreme, when , the effective horizon is and , in which case Retrace recovers the one-step operator. At the other extreme, when , the effective horizon is infinite which gives . This latter case can be understood as correcting for all the off-policy discrepancies with IS, which is very efficient in expectation but incurs high variance under sample-based approximations. Proposition 3.2 also implies that the distributional Retrace operator has a unique fixed point.

Proposition 3.3.

(Unique fixed point) has as the unique fixed point in .

The above result suggests that starting with , the recursion produces iterates which converge to in at a rate of .

4 Understanding multi-step distributional reinforcement learning

Now, we pause and take a closer look at the construction of the distributional Retrace operator. We present a number of insights that distinguish distributional learning from value-based learning.

4.1 Path-dependent TD error

The value-based Retrace back-up target can be written as a mixture of value-based TD errors. To better parse the distributional Retrace operator and draw comparison to the value-based setting, we seek to rewrite the distributional back-up target into a weighted sum of some notion of distributional TD errors. To this end, we start with a natural analogy to the value-based TD error.

Definition 4.1.

(Distributional TD error) Given a transition , define the associated distributional TD error as .

When the context is clear, we also adopt the concise notation . By construction, distributional TD errors are signed measures with zero total mass (bdr2022, ). The distributional TD error is a natural counterpart to the value-based TD error, because they both stem directly from the corresponding one-step Bellman operators. However, unlike in value-based RL, where TD errors alone suffice to specify the multi-step learning operator (Equation (3)), in distributional RL this is not enough. We introduce the path-dependent distributional TD error, which serves as the building block to distributional Retrace.

Definition 4.2.

(Path-dependent distributional TD error) Given a trajectory , define the path-dependent distributional TD error at time as follows,

| (5) |

Path-dependent distributional TD errors are defined as a pushforward measures from , where the pushforward operations depend on . This equips with an intriguing property, path-dependency. Concretely, this means that the path-dependent distributional TD error depends on the sequence of rewards leading up to step . With the above definitions, we can finally rewrite the back-up target of distributional Retrace as a weighted sum of path-dependent distributional TD errors . We now illustrate the difference between value-based and distributional TD errors.

Comparison with value-based TD equivalents.

The value-based equivalent to the path-dependent distributional TD error is the discounted value-based TD error which we briefly mentioned in Section 2. To see why, note that discounted value-based TD errors allow us to rewrite the value-based Retrace back-up target as . For direct comparison between the two settings, we rewrite both and as the difference between two -step predictions evaluated at two time steps and ,

| (Distributional) | |||

| (Value-based) |

The above rewriting attributes the path-dependency to the fact that the -step distributional prediction is non-linear in . Indeed, in the value-based setting, because the partial sum of rewards cancels out as a common term. This leaves the discounted TD error path-independent. In other words, the computation of does not depend on past rewards . In contrast, in the distributional setting, the pushforward operations are non-linear in the partial sum of rewards . As a result, does not cancel out in the definition of , making the path-dependent TD error depend on the past rewards .

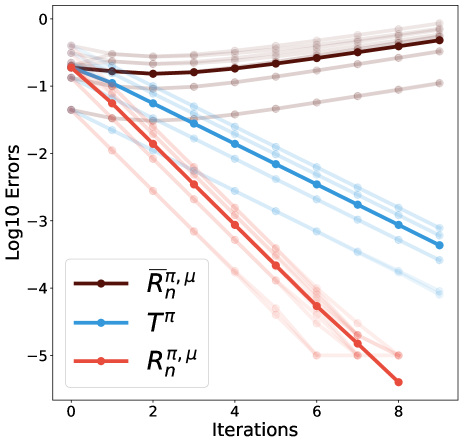

The path-dependent property is not an artifact of the distributional Retrace operator ; instead, it is an indispensable element for convergent multi-step distributional learning in general. We show this by empirically verifying that multi-step learning operators based on alternative definitions of path-independent distributional TD errors are non-convergent even for simple problems.

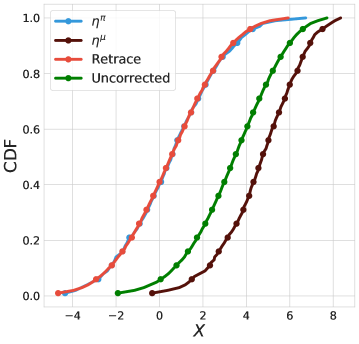

Numerically non-convergent path-independent operators.

Consider the path-independent distributional TD error . We arrived at this definition by dropping the path-dependent term in the pushforward of . Such a definition seems appealing because when , the error is zero in expectation . This implies that we can construct a multi-step operator by a weighted sum of the alternative path-independent TD error . By construction, has as one fixed point.

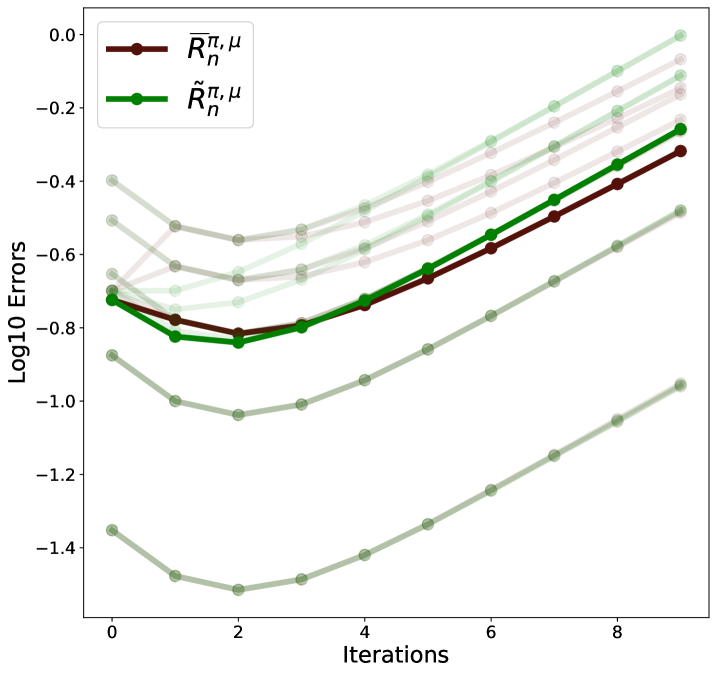

We provide a very simple counterexample on which is not contractive: consider an MDP with one state and one action. The state transitions back to itself with a deterministic reward . When the discount factor is , is a Dirac distribution centered at . We consider the simple case and . We use the distance to measure the convergence of the distribution iterates (bdr2022, ). Figure 2 shows that does not converge to , while the one-step Bellman operator and distributional Retrace are convergent.

In Appendix C, we discuss yet another alternative to designed to be path-independent . Though the resulting multi-step operator still has as one fixed point, we show numerically that it is not contractive on the same simple example. These results demonstrate that naively removing the path-dependency might lead to non-convergent multi-step operators.

4.2 Backward-view of distributional multi-step learning

To highlight the difference between distributional and value-based multi-step learning, we discuss the impact that path-dependent distributional TD errors have on the backward-view distributional algorithm. Thus far, distributional back-up targets are expressed in the forward-view, i.e., the back-up target at time is calculated as a function of future transition tuples . The forward-view algorithms, unless truncated, wait until the episode finishes to carry out the update, which might be undesirable when the problem is non-episodic or has a very long horizon.

In the backward-view, when encountering a distributional TD error , the algorithm carries out updates for all predictions at time (sutton1998, ). To this end, the algorithm needs to maintain additional partial return traces, i.e., the partial sum of rewards , in order to calculate the path-dependent TD error . Unlike the value-based state-dependent eligibility traces (sutton1998, ; van2020expected, ), partial return traces are time-dependent. This implies that in an episode of steps, value-based backward-view algorithms require memory of size while the distributional algorithms requires .

In addition to the added memory complexity, the incremental updates of distributional algorithms are also much more complicated due to the path-dependent TD errors. We remark that the path-independent nature of value-based TD errors greatly simplify the value-based backward-view algorithm. For a more detailed discussion, see Appendix D.

4.3 Importance sampling for multi-step distributional RL

In our initial derivation, we arrived at through the application of importance sampling (IS) in a different way from the value-based setting. We now highlight the subtle differences and caveats.

For a fixed , consider the trace coefficient . The back-up target of the resulting Retrace operator reduces to . This can be seen as applying IS to the -step prediction . As a caveat, note that an appealing alternative approach is to apply IS to , producing the estimate . This latter estimate does not properly correct for the off-policy discrepancy between and . To see why, note that applying the IS ratio to , instead of to the probability of its occurrence, is an artifact of value-based RL because the expected return is linear in (precup2001off, ). In general for distributional RL, one should importance weigh the measures instead of sum of rewards.

5 Approximate multi-step distributional reinforcement learning algorithm

We now discuss how the distributional Retrace operator combines with parametric distributions, using the construction of the novel Quantile Regression-Retrace algorithm as a practical example. We focus on the quantile representation because it entails the best empirical performance of large-scale distributional RL (dabney2018distributional, ; dabney2018implicit, ). Speficially, we present an application of quantile regression with signed measures, which is interesting in its own right. Below, we start with a brief background on quantile representations (dabney2018distributional, ), followed by details on the proposed algorithm.

Consider parametric distributions of the form: for a fixed , where are a set of parameters indicating the support of the distribution. Let denote the family of distribution . We define the projection as , which projects any distribution onto the space of representable distributions in the parametric class under the distance. With an abuse of notation, we also let denote the component-wise projection when applied to vectors. See (dabney2018distributional, ; bdr2022, ) for more details.

Gradient-based learning via quantile regression.

We can use quantile regression koenker1978regression ; koenker2005quantile ; koenker2017handbook to calculate the projection . Let denote the CDF of a given distribution . Let be the generalized CDF inverse, we define the -th quantile as for . The projection is equivalent to computing for (dabney2018distributional, ). To learn the -th quantile for any , it suffices to solve the quantile regression problem whose optimal solution is : where . In practice, we carry out the gradient update to find the optimal solution and learn the quantile .

5.1 Distributional Retrace with quantile representations

Given an input distribution vector , we use the distributional Retrace operator to construct the back-up target . Then, we use the quantile projection to map the back-up target onto the space of representations . Overall, we are interested in the recursive update: start with any , consider the sequence of distributions generated via . A direct application of Proposition 3.2 allows us to characterize the convergence of the sequence, following the approach of bdr2022 .

Theorem 5.1.

(Convergence of quantile distributions) The projected distributional Retrace operator is -contractive under distance in . As a result, the above converges to a limiting distribution in , such that . Further, the quality of the fixed point is characterized as .

Thanks to the faster contraction rate , the advantage of the projected operator is two-fold: (1) the operator often contracts faster to the limiting distribution than the one-step operator contracts to its own limiting distribution (dabney2018distributional, ); (2) the limiting distribution also enjoys a better approximation bound to the target distribution. We verify such results in Section 7.

5.2 Quantile Regression-Retrace: distributional Retrace with quantile regression

Below, we use to represent the -th quantile of the distribution at . Overall, we have a tabular quantile representation , where we use the notation to stress the distribution’s dependency on parameter . For any given bootstrapping distribution vector , in order to approximate the projected back-up target with the parameterized quantile distribution , we solve the set of quantile regression problems for all ,

For any fixed , to solve the quantile regression problem, we apply gradient descent on . In practice, with one sampled trajectory , the aim is to construct an unbiased stochastic gradient estimate of the QR loss . Below, let for simplicity. We start with a stochastic estimate for the QR loss,

Since is differentiable with , we use as the stochastic gradient estimate. This gradient estimate is unbiased under mild conditions.

Lemma 5.2.

(Unbiased stochastic QR loss gradient estimate) Assume that the trajectory terminates within steps almost surely, then we have and .

The above stochastic estimate bypasses the challenge that the QR loss is only defined against distributions, whereas sampled back-up targets are signed measures in general. In Quantile Regression-Retrace, we use itself as the bootstrapping distribution, such that the algorithm approximates the fixed point iteration . Concretely, we carry out the following sample-based update

5.3 Deep reinforcement learning: QR-DQN-Retrace

We introduce a deep RL implementation of the Quantile Regression-Retrace: QR-DQN-Retrace, where the parametric representation is combined with function approximations (bellemare2017cramer, ; dabney2018distributional, ; dabney2018implicit, ). The base agent QR-DQN (bellemare2017cramer, ) parameterizes the quantile locations with the output of a neural network with weights . Let denote the parameterized distribution. QR-DQN-Retrace updates its parameters by stochastic gradient descent on the estimated QR loss, averaged across all quantile levels . In practice, the update is further averaged over state-action pairs sampled from a replay buffer.

6 Discussions

Categorical representations.

The categorical representation is another commonly used class of parameterized distributions in prior literature (bellemare2017cramer, ; rowland2018analysis, ; rowland2019statistics, ; bdr2022, ). We obtain contractive guarantees for the categorical representation similar to Theorem 5.1. As with QR, this leads both to improved fixed-point approximations and faster convergence. Further, this leads to a deep RL algorithm C51-Retrace. The actor-critic Reactor agent (gruslys2017reactor, ) uses C51-Retrace as a critic training algorithm, although without explicit consideration or analysis of the associated distributional operator. See Appendix E for details. We empirically evaluate the stand-alone improvements of C51-Retrace over C51 in Section 7.

Uncorrected methods.

The uncorrected methods do not correct for the off-policyness and hence obtain a biased fixed point (hessel2018rainbow, ; kapturowski2018recurrent, ; kozuno2021revisiting, ). The Rainbow agent (hessel2018rainbow, ) combined -step uncorrected learning with C51, effectively implementing a distributional operator whose fixed point differs from .

On-policy distributional TD().

Nam et al. nam2021gmac propose SR(), a distributional version of on-policy TD) (sutton1988learning, ). In operator form, this can be viewed as a special case of Equation (4) with , ; nam2021gmac also introduce a sample-replacement technique for more efficient implementation.

7 Experiments

We carry out a number of experiments to validate the theoretical insights and empirical improvements.

7.1 Illustration of distributional Retrace properties on tabular MDPs

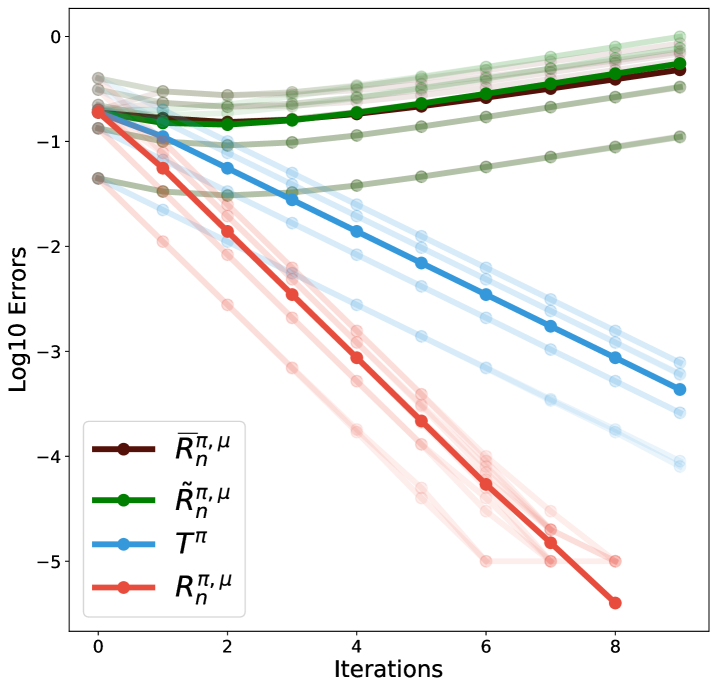

We verify a few important properties of the distributional Retrace operator on a tabular MDP. The results corroborate the theoretical results from previous sections. Throughout, we use quantile representations with atoms; we obtain similar results for categorical representations. See Appendix F for details on the experiment setup. Let be the initial distribution, we carry out dynamic programming with and denote as the th distribution iterate.

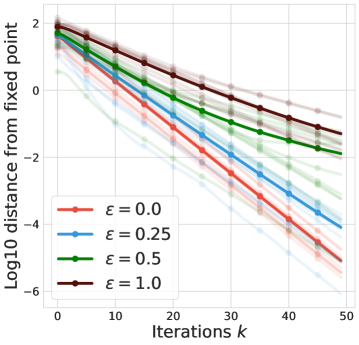

Impact of off-policyness.

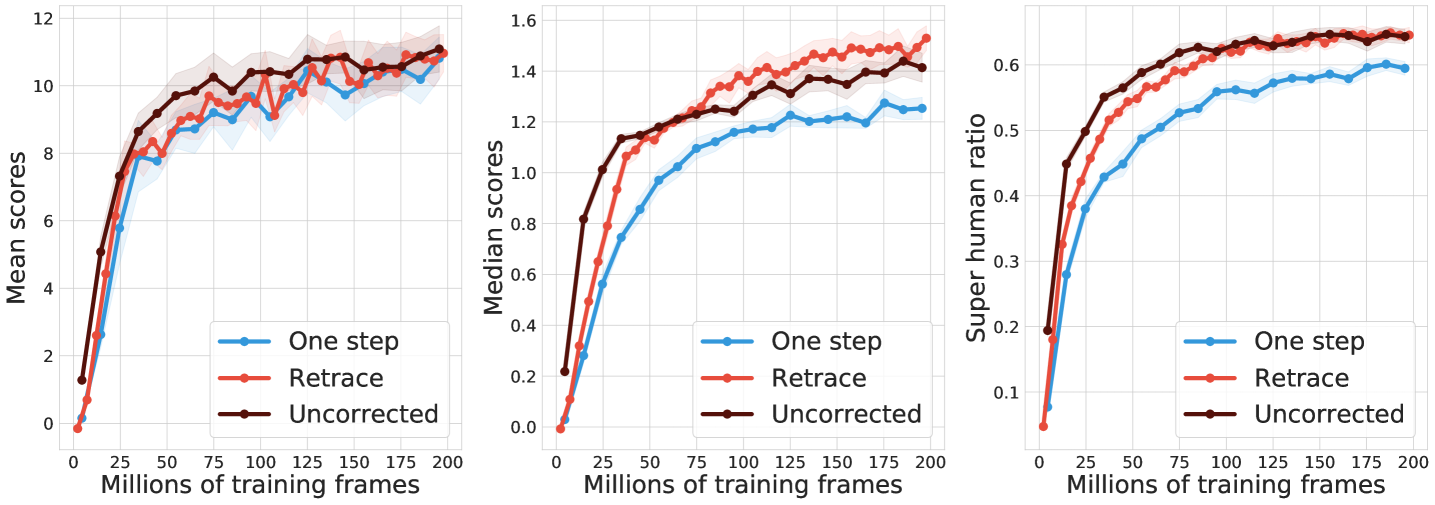

We control the level of off-policyness by setting the behavior policy to be a uniform policy and the target policy to where is a fixed deterministic policy. Moving from to , we transition from on-policy to very off-policy. We use to measure the contraction rate to the fixed point. Figure 3 shows that as the behavior becomes more off-policy, the contraction slows down, degrading the efficiency of multi-step learning.

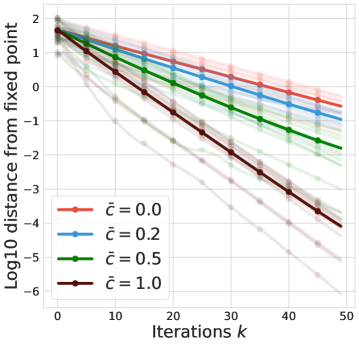

Impact of trace coefficient .

Quality of fixed point.

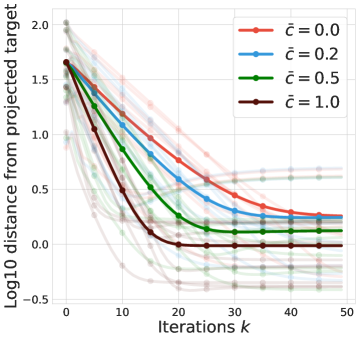

We next examine how the quality of the fixed point is impacted by , by measuring as a proxy to . As increases the error flattens, at which point we take the converged value to be which measures the fixed point quality. Figure 3(c) shows when increases, the fixed point quality improves, in line with the Theorem 5.1. This phenomenon does not arise in tabular non-distributional reinforcement learning, although related phenomena do occur when using function approximation techniques.

Bias of uncorrected methods.

Finally, we illustrate a critical difference between Retrace and uncorrected -step methods (hessel2018rainbow, ): the bias to the fixed point. Figure 3(d) shows that uncorrected -step arrives at a fixed point in between and , showing an obvious bias from .

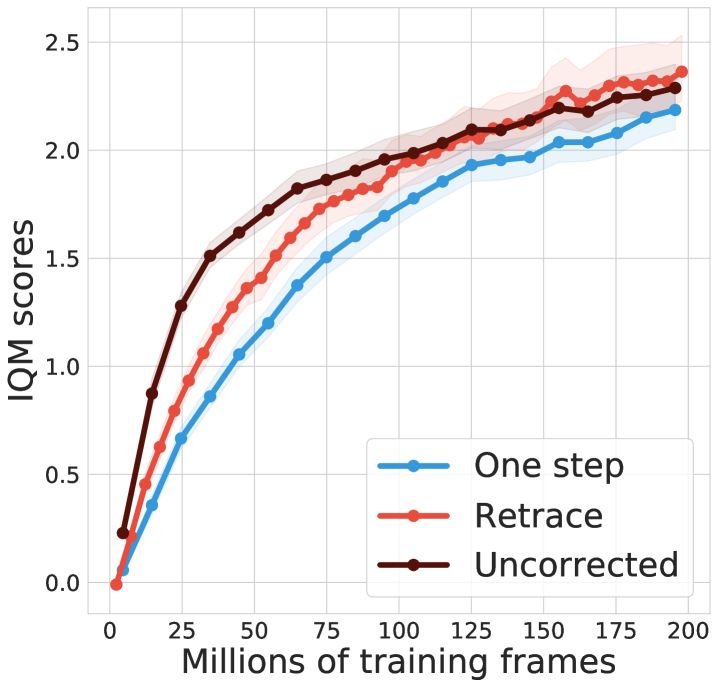

7.2 Deep reinforcement learning

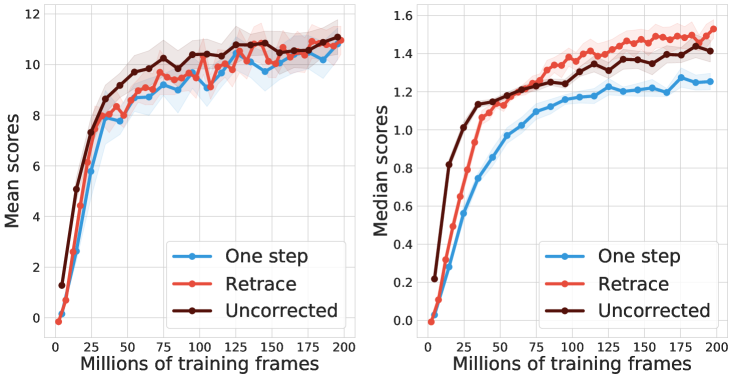

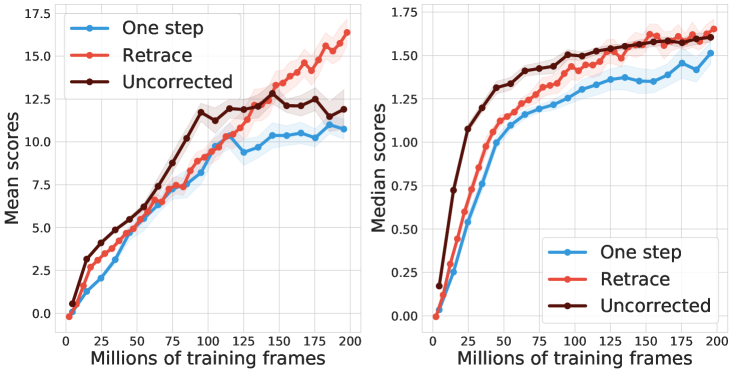

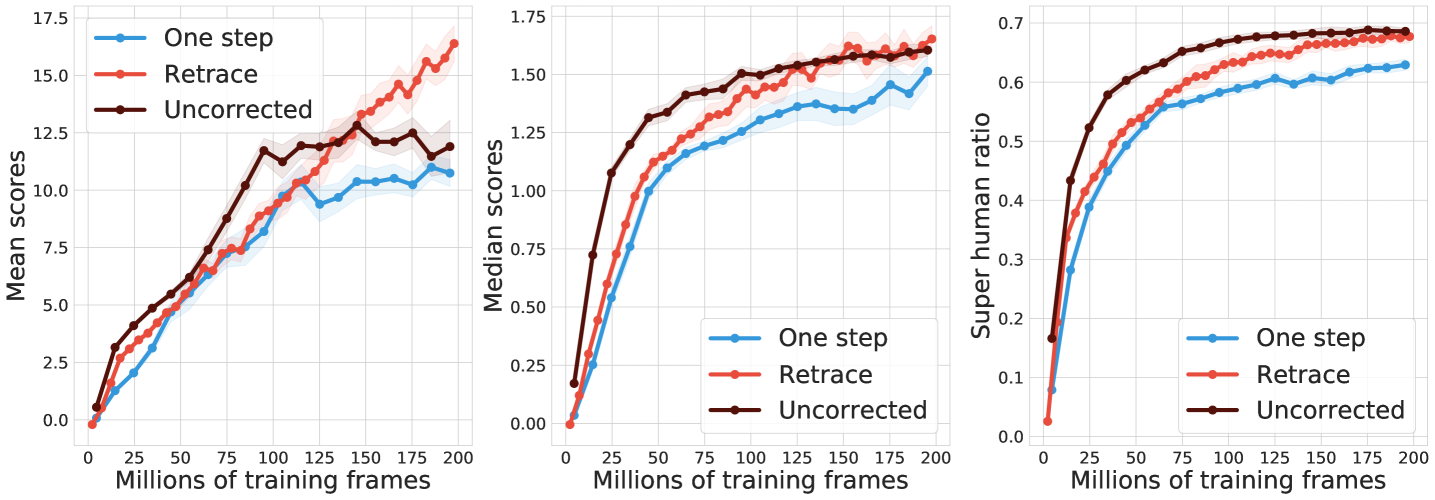

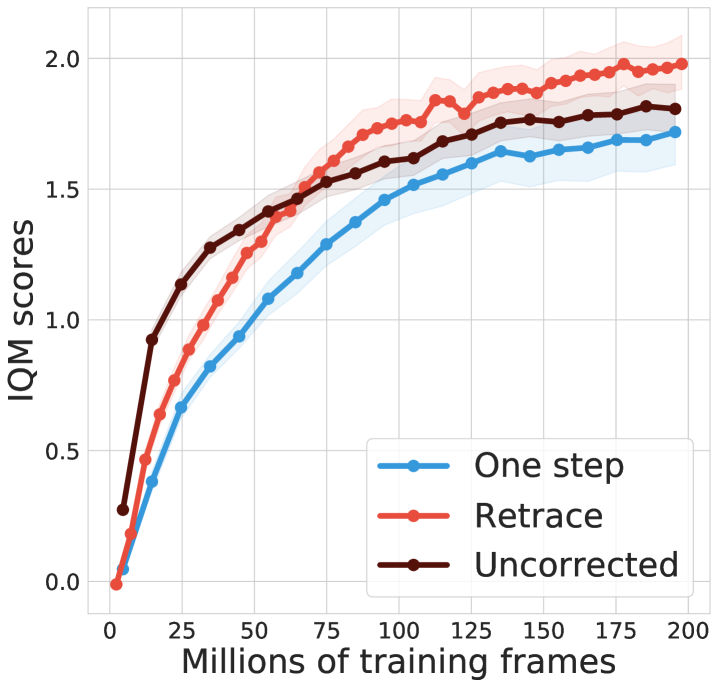

We consider the control setting where the target policy is the greedy policy with respect to the Q-function induced by the parameterized distribution. Because the training data is sampled from a replay, the behavior policy is -greedy with respect to Q-functions induced by previous copies of the parameterized distribution. We evaluate the performance of deep RL agents on 57 Atari games (bellemare2013arcade, ). To ensure fair comparison across games, we compute the human normalized scores for each agent, and compare their evaluated mean and median scores across all 57 games during training.

Deep RL agents.

The multi-step agents adopt exactly the same hyperparameters as the baseline agents. The only difference is the back-up target. For completeness of results, we show the combination of Retrace with both C51 and QR-DQN. For QR-DQN, we use the Huber loss for quantile regression, which is a thresholded variant of the QR loss (dabney2018distributional, ). Throughout, we use with as in (munos2016safe, ). See Appendix F for details. In practice, sampled trajectories are truncated at length . We also adapt Retrace to the -step case, see Appendix A.

Results.

Figure 4 compares one-step baseline, Retrace and uncorrected -step (hessel2018rainbow, ). For C51, both multi-step methods clearly improve the median performance over the one-step baseline. Retrace slightly outperforms uncorrected -step towards the end of learning. For QR-DQN, all multi-step algorithms achieve clear performance gains. Retrace significantly outperforms the uncorrected -step with the mean performance, while obtaining similar results on the median performance. Overall, distributional Retrace achieves a clear improvement over the one-step baselines. The uncorrected -step method typically takes off faster than Retrace but may to slightly worse performance.

Finally, note that in the value-based setting, uncorrected methods are generally more high-performing than Retrace, potentially due to a favorable trade-off between contraction rate and fixed-point bias (rowland2019adaptive, ). Our results add to the benefits of off-policy corrections in the control setting.

8 Conclusion

We have identified a number of fundamental conceptual differences between value-based and distributional RL in multi-step settings. Central to such differences is the novel notion of path-dependent distributional TD error, which naturally arises from the multi-step distributional RL problem. Building on this understanding, we have developed the first principled multi-step off-policy distributional operator Retrace. We have also developed an approximate distributional RL algorithm, Quantile Regression-Retrace, which makes distributional Retrace highly competitive in both tabular and high-dimensional setups. This paper also opens up a several avenues for future research, such as the interaction between multi-step distributional RL and signed measures, and the convergence theory of stochastic approximations for multi-step distributional RL.

References

- [1] D. P. Bertsekas and J. N. Tsitsiklis. Neuro-Dynamic Programming. Athena Scientific, 1996.

- [2] Richard S. Sutton and Andrew G. Barto. Reinforcement Learning: An Introduction. MIT Press, 1998.

- [3] Csaba Szepesvári. Algorithms for Reinforcement Learning. Morgan & Claypool Publishers, 2010.

- [4] Tetsuro Morimura, Masashi Sugiyama, Hisashi Kashima, Hirotaka Hachiya, and Toshiyuki Tanaka. Nonparametric return distribution approximation for reinforcement learning. In Proceedings of the International Conference on Machine Learning, 2010.

- [5] Tetsuro Morimura, Masashi Sugiyama, Hisashi Kashima, Hirotaka Hachiya, and Toshiyuki Tanaka. Parametric return density estimation for reinforcement learning. In Proceedings of the Conference on Uncertainty in Artificial Intelligence, 2010.

- [6] Marc G. Bellemare, Will Dabney, and Rémi Munos. A distributional perspective on reinforcement learning. In Proceedings of the International Conference on Machine Learning, 2017.

- [7] Derek Yang, Li Zhao, Zichuan Lin, Tao Qin, Jiang Bian, and Tie-Yan Liu. Fully parameterized quantile function for distributional reinforcement learning. Advances in neural information processing systems, 2019.

- [8] Cristian Bodnar, Adrian Li, Karol Hausman, Peter Pastor, and Mrinal Kalakrishnan. Quantile QT-OPT for risk-aware vision-based robotic grasping. In Proceedings of Robotics: Science and Systems, 2020.

- [9] Peter R Wurman, Samuel Barrett, Kenta Kawamoto, James MacGlashan, Kaushik Subramanian, Thomas J Walsh, Roberto Capobianco, Alisa Devlic, Franziska Eckert, Florian Fuchs, et al. Outracing champion Gran Turismo drivers with deep reinforcement learning. Nature, 602(7896):223–228, 2022.

- [10] Marc G. Bellemare, Will Dabney, and Mark Rowland. Distributional Reinforcement Learning. MIT Press, 2022. http://www.distributional-rl.org.

- [11] Doina Precup, Richard S. Sutton, and Sanjoy Dasgupta. Off-policy temporal-difference learning with function approximation. In Proceedings of the International Conference on Machine Learning, 2001.

- [12] Anna Harutyunyan, Marc G. Bellemare, Tom Stepleton, and Rémi Munos. Q() with off-policy corrections. In Proceedings of the International Conference on Algorithmic Learning Theory, 2016.

- [13] Rémi Munos, Tom Stepleton, Anna Harutyunyan, and Marc G. Bellemare. Safe and efficient off-policy reinforcement learning. In Advances in Neural Information Processing Systems, 2016.

- [14] Ashique Rupam Mahmood, Huizhen Yu, and Richard S Sutton. Multi-step off-policy learning without importance sampling ratios. arXiv, 2017.

- [15] Yash Chandak, Scott Niekum, Bruno da Silva, Erik Learned-Miller, Emma Brunskill, and Philip S. Thomas. Universal off-policy evaluation. Advances in Neural Information Processing Systems, 2021.

- [16] Will Dabney, Mark Rowland, Marc G. Bellemare, and Rémi Munos. Distributional reinforcement learning with quantile regression. In Proceedings of the AAAI Conference on Artificial Intelligence, 2018.

- [17] Mark Rowland, Marc G. Bellemare, Will Dabney, Rémi Munos, and Yee Whye Teh. An analysis of categorical distributional reinforcement learning. In Proceedings of the International Conference on Artificial Intelligence and Statistics, 2018.

- [18] Hado van Hasselt, Sephora Madjiheurem, Matteo Hessel, David Silver, André Barreto, and Diana Borsa. Expected eligibility traces. In Proceedings of the AAAI Conference on Artificial Intelligence, 2020.

- [19] Will Dabney, Georg Ostrovski, David Silver, and Rémi Munos. Implicit quantile networks for distributional reinforcement learning. In Proceedings of the International Conference on Machine Learning, 2018.

- [20] Roger Koenker and Gilbert Bassett Jr. Regression quantiles. Econometrica: Journal of the Econometric Society, pages 33–50, 1978.

- [21] Roger Koenker. Quantile Regression. Econometric Society Monographs. Cambridge University Press, 2005.

- [22] Roger Koenker, Victor Chernozhukov, Xuming He, and Limin Peng. Handbook of Quantile Regression. CRC Press, 2017.

- [23] Marc G. Bellemare, Ivo Danihelka, Will Dabney, Shakir Mohamed, Balaji Lakshminarayanan, Stephan Hoyer, and Rémi Munos. The Cramer distance as a solution to biased Wasserstein gradients. arXiv preprint arXiv:1705.10743, 2017.

- [24] Mark Rowland, Robert Dadashi, Saurabh Kumar, Rémi Munos, Marc G. Bellemare, and Will Dabney. Statistics and samples in distributional reinforcement learning. In Proceedings of the International Conference on Machine Learning, 2019.

- [25] Audrunas Gruslys, Will Dabney, Mohammad Gheshlaghi Azar, Bilal Piot, Marc G. Bellemare, and Rémi Munos. The Reactor: A fast and sample-efficient actor-critic agent for reinforcement learning. In Proceedings of the International Conference on Learning Representations, 2018.

- [26] Matteo Hessel, Joseph Modayil, Hado Van Hasselt, Tom Schaul, Georg Ostrovski, Will Dabney, Dan Horgan, Bilal Piot, Mohammad Azar, and David Silver. Rainbow: Combining improvements in deep reinforcement learning. In Proceedings of the AAAI Conference on Artificial Intelligence, 2018.

- [27] Steven Kapturowski, Georg Ostrovski, John Quan, Rémi Munos, and Will Dabney. Recurrent experience replay in distributed reinforcement learning. In Proceedings of the International Conference on Learning Representations, 2019.

- [28] Tadashi Kozuno, Yunhao Tang, Mark Rowland, Rémi Munos, Steven Kapturowski, Will Dabney, Michal Valko, and David Abel. Revisiting Peng’s Q() for modern reinforcement learning. In Proceedings of the International Conference on Machine Learning, 2021.

- [29] Daniel W. Nam, Younghoon Kim, and Chan Y. Park. GMAC: A distributional perspective on actor-critic framework. In Proceedings of the International Conference on Machine Learning, 2021.

- [30] Richard S. Sutton. Learning to predict by the methods of temporal differences. Machine learning, 3(1):9–44, 1988.

- [31] Marc G. Bellemare, Yavar Naddaf, Joel Veness, and Michael Bowling. The arcade learning environment: An evaluation platform for general agents. Journal of Artificial Intelligence Research, 47:253–279, 2013.

- [32] Mark Rowland, Will Dabney, and Rémi Munos. Adaptive trade-offs in off-policy learning. In Proceedings of the International Conference on Artificial Intelligence and Statistics, 2020.

- [33] Volodymyr Mnih, Koray Kavukcuoglu, David Silver, Andrei A. Rusu, Joel Veness, Marc G. Bellemare, Alex Graves, Martin Riedmiller, Andreas K. Fidjeland, Georg Ostrovski, Stig Petersen, Charles Beattie, Amir Sadik, Ioannis Antonoglou, Helen King, Dharshan Kumaran, Daan Wierstra, Shane Legg, and Demis Hassabis. Human-level control through deep reinforcement learning. Nature, 518(7540):529–533, February 2015.

- [34] Walter Rudin. Principles of Mathematical Analysis. McGraw-Hill New York, 1976.

- [35] Charles R. Harris, K. Jarrod Millman, Stéfan J van der Walt, Ralf Gommers, Pauli Virtanen, David Cournapeau, Eric Wieser, Julian Taylor, Sebastian Berg, Nathaniel J. Smith, Robert Kern, Matti Picus, Stephan Hoyer, Marten H. van Kerkwijk, Matthew Brett, Allan Haldane, Jaime Fernández del Río, Mark Wiebe, Pearu Peterson, Pierre Gérard-Marchant, Kevin Sheppard, Tyler Reddy, Warren Weckesser, Hameer Abbasi, Christoph Gohlke, and Travis E. Oliphant. Array programming with NumPy. Nature, 585(7825):357–362, 2020.

- [36] John D. Hunter. Matplotlib: A 2D graphics environment. Computing in science & engineering, 9(03):90–95, 2007.

- [37] James Bradbury, Roy Frostig, Peter Hawkins, Matthew James Johnson, Chris Leary, Dougal Maclaurin, George Necula, Adam Paszke, Jake VanderPlas, Skye Wanderman-Milne, and Qiao Zhang. Jax: composable transformations of Python+NumPy programs. 2018.

- [38] Igor Babuschkin, Kate Baumli, Alison Bell, Surya Bhupatiraju, Jake Bruce, Peter Buchlovsky, David Budden, Trevor Cai, Aidan Clark, Ivo Danihelka, Claudio Fantacci, Jonathan Godwin, Chris Jones, Tom Hennigan, Matteo Hessel, Steven Kapturowski, Thomas Keck, Iurii Kemaev, Michael King, Lena Martens, Vladimir Mikulik, Tamara Norman, John Quan, George Papamakarios, Roman Ring, Francisco Ruiz, Alvaro Sanchez, Rosalia Schneider, Eren Sezener, Stephen Spencer, Srivatsan Srinivasan, Wojciech Stokowiec, and Fabio Viola. The DeepMind JAX ecosystem. 2010.

- [39] Diederik P. Kingma and Jimmy Ba. Adam: A method for stochastic optimization. In Proceedings of the International Conference on Learning Representations, 2015.

- [40] Rishabh Agarwal, Max Schwarzer, Pablo Samuel Castro, Aaron C Courville, and Marc Bellemare. Deep reinforcement learning at the edge of the statistical precipice. Advances in neural information processing systems, 34:29304–29320, 2021.

The Nature of Temporal Difference Errors in

Multi-step Distributional Reinforcement Learning:

Appendices

Appendix A Extension of distributional Retrace to -step truncated trajectories

The -step truncated version of distributional Retrace is defined as

which sums the path-dependent distributional TD errors up to time . Compared to the original definition of distributional Retrace, this -step operator is more practical to implement. This operator enjoys all the theoretical properties of the original distributional Retrace, with a slight difference on the contraction rate. Intuitively, the operator bootstraps with at most steps, which limits the effective horizon of the operator to be . It is straightforward to show that the operator is -contractive under with . As , .

Appendix B Distance metrics

We provide a brief review on the distance metrics used in this work. We refer readers to [10] for a complete background.

B.1 Wasserstein distance

Let be two distribution measures. Let be the CDF of . The -Wasserstein distance can be computed as

Note that the above definition is equivalent to the more traditional definition based on optimal transport; indeed, can be understood as the optimal coupling between the two distributions. The above definition is a proper distance metric if .

For any distribution vector , we can define the supremum -Wasserstein distance as

B.2 distance

Let be two distribution measures. Let be the CDF of . The distance is defined as

The above definition is a proper distance metric when .

For any distribution vector or signed measure vector , we can define the supremum Cramér- distance as

Appendix C Numerically non-convergent behavior of alternative multi-step operators

We consider another alternative definition of path-independent alternative to the path-dependent TD error . The primary motivation for such a path-dependent TD error is that the discounted value-based TD error takes the form . The resulting multi-step operator is

With the same toy example as in the paper: an one-state one-action MDP with a deterministic reward and discount factor . The target distribution is a Dirac distribution centering at . Let be the -th distribution iterate by applying the operator , we show the distance between the iterates and in Figure 5. It is clear that alternative multi-step operators do not converge to the correct fixed point.

Appendix D Backward-view algorithm for multi-step distributional RL

We now describe a backward-view algorithm for multi-step distributional RL with quantile representations. For simplicity, we consider the on-policy case and . To implement in the backward-view, at each time step and a past time step , the algorithm needs to maintain two novel traces distinct from the classic eligibility traces [2]: (1) partial return traces , which correspond to the partial sum of rewards between two time steps ; (2) modified eligibility traces, defined as , which measures the trace decay between two time steps . At a new time step , the new traces are computed recursively: .

We assume the algorithm maintains a table of quantile distributions with atoms: . For any fixed , define be the set of time steps before time at which is visited. Now, upon arriving at , we observe the TD error . Recall that denote the QR loss of parameter at quantile level and against the distrbution . To more conveniently describe the update, we define the QR loss against the path-dependent TD error

as the difference of the QR losses against the individual distributions,

Note that the QR loss can be computed using the transition data we have seen so far. We now perform the a gradient update for all entries in the table and (in practice, we update entries that correspond to visited state-action pairs):

where . For any fixed , the above algorithm effectively aggregates updates from time steps at which is visited.

D.1 Simplifications for value-based RL

We now discuss how the path-independent value-based TD errors greatly simplify the value-based backward-view algorithm. Following the above notations, assume the algorithm maintains a table of Q-function , we can construct incremental backward-view update for all as follows, by replacing the path-dependent distributional TD error by the discounted TD error

Since does not depend on the past rewards and is state-action dependent, we can simplify the summation over by defining the state-depedent eligibility traces [2] as a replacement to ,

As a result, the above update reduces to

which recovers the classic backward-view update.

D.2 Non-equivalence of forward-view and backward-view algorithms

In value-based RL, forward-view and backward-view algorithms are equivalent given that the trajectory does not visit the same state twice [2]. However, such an equivalence does not generally hold in distributional RL. Indeed, consider the following counterexample in the case of the quantile representation.

Consider a three-step MDP with deterministic transition . There is no action and no reward on the transition. The state is terminal with a deterministic terminal value . We consider atom and let the quantile parameters be and at states respectively. In this case, the quantile representation learns the median of the target distribution with .

Now, we consider the update at with both forward-view and backward-view implementation of the two-step Bellman operator , which can be obtained from distributional Retrace by setting . The target distribution at is a Dirac distribution centering at .

Forward-view update.

Below, we use to denote a Dirac distribution at . In the forward-view, the back-up distribution is

The gradient update to is thus

Backward-view update.

To implement the backward-view update, we make clear of the two path-dependent distributional TD errors at two consecutive time steps

The update consists of two steps:

Overall, we have

Now, let such that , we have .

D.3 Discussion on memory complexity

The return traces and modified eligibility traces are time-dependent, which is a direct implication from the fact that distributional TD errors are path-dependent. Indeed, to calculate the distributional TD error , it is necessary to keep track in the backward-view algorithm. This differs from the classic eligibility traces, which are state-action-dependent [2, 18]. We remark that the state-action-dependency of eligibility traces result from the fact that value-based TD errors are path-independent. The time-dependency greatly influences the memory complexity of the algorithm: when an episode is of length , value-based backward-view algorithm requires memory of size to store all eligibility traces. On the other hand, the distributional backward-view algorithm requires .

Appendix E Distributional Retrace with categorical representations

We start by showing that the distributional Retrace operator is -contractive under the distance for . As a comparison, the one-step distributional Bellman operator is -contractive under [17].

Lemma E.1.

(Contraction in ) is -contractive under supremum distance for , where . Specifically, we have .

Proof.

E.1 Categorical representation

In categorical representations [23], we consider parametric distributions of the form for a fixed , , where are a fixed set of atoms and is a categorical distribution such that and . Denote the class of such distributions as . For simplicity, we assume that the target return is supported on the set of atoms .

We introduce the projection that maps from an initial back-up distribution to the categorical parametric class: defined as . The projection can be easily calculated as described in [6, 17]. For any distribution vector , define as the component-wise projection. Now, given the composed operator , we characterize the convergence of the seququence .

Theorem E.2.

(Convergence of categorical distributions) The projected distributional Retrace operator is -contractive under distance in . As a result, the above converges to a limiting distribution in , such that . Further, the quality of the fixed point is characterized as .

Proof.

The distributional Retrace operator also improves over one-step distributional Bellman operator in two aspects: (1) the bound on the contraction rate is smaller, usually leading to faster contraction to the fixed point; (2) the bound on the quality of the fixed point is improved.

E.2 Cross-entropy update and C51-Retrace

Unlike in the quantile projection case, where calculating requires solving a quantile regression minimization problem, the categorical projection can be calculated in an analytic way [17, 10]. Assume the categorical distribution is parameterized as . After computing the back-up target distribution for a given distribution vector , the algorithm carries out a gradient-based incremental update

where denotes the cross-entropy between distribution and . For simplicity, we adopt a short-hand notation . Note also that in practice, can be a slowly updated copy of [33]. As such, the gradient-based update can be understood as approximating the iteration . We propose the following unbiased estimate to the cross-entropy , calculated as follows

Lemma E.3.

(Unbiased stochastic estimate for categorical update) Assume that the trajectory terminates within steps almost surely, then we have . Without loss of generality, assume is a scalar parameter. If there exists a constant such that , then the gradient estimate is also unbiased .

Proof.

The cross-entropy is defined for any distribution . For any signed measure with , we define the generalized cross-entropy as

Next, we note the cross-entropy is linear in the input distribution (or signed measure). In particular, for a set of (potentially infinite) coefficients and distributions (signed measures) ,

When denotes a distribution, the above rewrites as . Finally, combining everything together, we have evaluate to

In the above, (a) follows from the definition of the cross-entropy with signed measure and (b) follows from the linearity property of cross-entropy.

Next, to show that the gradient estimate is unbiased too, the high level idea is to apply dominated convergence theorem (DCT) to justify the exhchange of gradient and expectation [34]. This is similar to the quantile representation case (see proof for Lemma 5.2). To this end, consider the absolute value of the gradient estimate , which serves as an upper bound to the gradient estimate. In order to apply DCT, we need to show the expectation of the absolute gradient is finite. Note we have

where (a) follows from the application of triangle inequality; (b) follows from the fact that the QR loss gradient against a fixed distribution is bounded [16].

Hence, with the application DCT, we can exchange the gradient and expectation operator, which yields .

∎

We remark that the condition on the bounded gradient is not restrictive. When is adopts a softmax parameterization and represents the logits, .

Finally, the deep RL agent C51 parameterizes the categorical distribution with a neural network at each state action pair [23]. When combined with the above algorithm, this produces C51-Retrace.

Appendix F Additional experiment details

In this section, we provide detailed information about experiment setups and additional results. All experiments are carried out in Python, using NumPy for numerical computations [35] and Matplotlib for visualization [36]. All deep RL experiments are carried out with Jax [37], specifically making use of the DeepMind Jax ecosystem [38].

F.1 Tabular

We provide additional details on the tabular RL experiments.

Setup.

We consider a tabular MDP with states and actions. The reward is deterministic and generated from a standard Gaussian distribution. The transition probability is sampled from a Dirichlet distribution with parameter for . The discount factor is fixed as . The MDP has a starting state-action pair . The behavior policy is a uniform policy. The target policy is generated as follows: we first sample a deterministic policy and then compute , with parameter to control the level of off-policyness.

Quantile distribution and projection.

Evaluation metrics.

Let be the -th iterate. We use a few different metrics in Figure 3. Given any particular distributional Retrace operator , there exists a fixed point to the composed operator . Recall that we denote this distribution as . Fig 3(a)-(b) calculates the iterates’ distance from the fixed point, evaluated at .

Fig 3(c) calculates the distance from the projected target distribution . Recall that is in some sense the best possible approximation that the current quantile representation can obtain.

F.2 Deep reinforcement learning

We provide additional details on the deep RL experiments.

Evaluation metrics.

For the -th of the Atari games, we obtain the performance of the agent at any given point in training. The normalized performance is computed as where is the human performance and is the performance of a random policy. Then the mean/median metric is calculated as the mean or median statistics over .

The super human ratio is computed as the number of games such as , i.e., where the agent obtains super human performance on the game. Formally, it is compute as .

Shared properties of all baseline agents.

All baseline agents use the same torso architecture as DQN [33] and differ in the head outputs, which we specify below. All agents an Adam optimizer [39] with a fixed learning rate; the optimization is carried out on mini-batches of size uniformly sampled from the replay buffer. For exploration, the agent acts -greedy with respect to induced Q-functions, the details of which we specify below. The exploration policy adopts that starts with and linearly decays to over training. At evaluation time, the agent adopts ; the small exploration probability is to prevent the agent from getting stuck.

Details of baseline C51 agent.

The agent head outputs a matrix of size , which represents the logits to . The support is generated as a uniform array over . Though should in theory be determined by ; in practice, it has been found that setting leads to highly sub-optimal performance. This is potentially because usually the random returns are far from the extreme values , and it is better to set at a smaller value. Here, we set and . For details of other hyperparameters, see [6]. The induced Q-function is computed as .

Details of baseline QR-DQN agent.

The agent head outputs a matrix of size , which represents the quantile locations . Here, we set . For details of other hyperparameters, see [16]. The induced Q-function is computed as .

Details of multi-step agents.

Multi-step variants use exactly the same hyperparameters as the one-step baseline agent. The only difference is that the agent uses multi-step back-up targets.

The agent stores partial trajectories generated under the behavior policy. Here, the behavior policy is the -greedy policy with respect to a potentially old Q-function (this is because the data at training time is sampled from the replay); the target policy is the greedy policy with respect to the current Q-function.

Appendix G Proof

To simplify the proof, we assume that the immediate random reward takes a finite number of values. It is straightforward to generalize results to the case where the reward takes an infinite number of values (e.g., the random reward has a continuous distribution).

Assumption G.1.

(Reward takes a finite number of values) For all state-action pair , we assume the random reward takes a finite number of values. Let be the finite set of values that the reward can take.

For any integer , Let denotes the Cartesian product of copies of :

For any fixed , we let denote the sequence of realizable rewards from time to time . Since is a finite set, is also a finite set.

See 3.1

Proof.

In general where is a filtration of . To start with, we assume to be a Markovian trace coefficient [13]. We start with the simpler case because the proof is greatly simplified with notations and can extend to the general case with some care. We discuss the extension to the general case where towards the end of the proof.

For all , we define the coefficient

Through careful algebra, we can rewrite the Retrace operator as follows

Note that each term of the form corresponds to applying a pushforward operation on the distribution , which means . Now that we have expressed as a linear combination of distributions, we proceed to show that the combination is in fact convex.

Under the assumption , we have for all . Therefore, all weights are non-negative. Next, we examine the sum of all coefficients .

In the above, (a) follows from the fact that ; (b) follows from the fact that for all time steps , the following is true,

Finally, (c) is based on the observation that the summation telescopes. Now, by taking the index set to be the set of indices that parameterize ,

We can write . Note further that for any , is a fixed distribution. The above result suggests that is a convex combination of fixed distributions.

Extension to the general case.

When is filtration dependent, we let to be the space of the filtration value up to time . For simplicity with the notation, we assume contains a finite number of elements, such that below we can adopt the summation notation instead of integral. Define the combination coefficient

It is straightforward to verify the following

In addition, the combination coefficients sum to and are all non-negative. ∎

See 3.2

Proof.

From the proof of Lemma 3.1, we have

Now, we have for any , for any fixed , we have upper bounded as follows

In the above, (a) follows by applying the convexity of the -Wasserstein distance [10]; (b) follows by the contraction property of the pushforward operation and [10]; (c) follows from the definition of . By taking the maixmum over on both sides of the inequality, we obtain

This concludes the proof. ∎

Lemma G.2.

For any fixed and scalar ,

| (6) |

Proof.

Let be a subset of indexed by . Since the set of all such sets is dense in the sigma-field of [34], if we can show for two measures

then, for all Borel sets in . Hence, in the following, we seek to show

| (7) |

Let be the CDF of random variable . The distributional Bellman equation in Equation (1) implies

For any constant , let and plug into the above equality,

Note the LHS is while the RHS is . This implies that Equation (7) holds and we conclude the proof. ∎

See 3.3

Proof.

To verify that is a fixed point, it is equivalent to show

Here, the RHS term denotes the zero measure, a measure such that for all Borel sets , . We now verify that each of the summation term is a zero measure, i.e.,

To see this, we follow the derivation below,

| (8) |

In the above, in (a) we condition on and the equality follows from the tower property of expectations; in (b), we use the fact that the trace product and are deterministic function of the conditioning variable ; in (c), we split the summation . Now we examine the first term in Equation (8), by applying Lemma G.2, we have

See 5.1

Proof.

The quantile projection is a non-expansion under [16]. Since is -contractive under for all , the composed operator is -contractive under . Now, because (1) ; (2) the space is closed [10]; (3) the operator is contractive, the iterate converges to a limiting distribution . Finally, by Proposition 5.28 in [10], we have . ∎

See 5.2

Proof.

The QR loss is defined for any distribution and scalar parameter . Let be the linear combination of distributions where s are potentially negative coefficients. In this case, is a signed measure. We define the generalized QR loss for as the linear combination of QR losses against weighted by ,

Next, we note that the QR loss is linear in the input distribution (or signed measure). This means given any (potentially infinite) set of distributions or signed measures with coefficients ,

When denotes a distribution, the above is equivalently expressed as an exchange between expectation and the QR loss . For notational convenience, we let and . Because the trajectory terminates within steps almost surely, since where , the estimate is finite almost surely. Combining all results from above we obtain the following

In the above, (a) follows from the definition of the generalized QR loss against signed measure the definition of ; (c) follows from the linearity of the QR loss.

Next, to show that the gradient estimate is unbiased too, the high level idea is to apply dominated convergence theorem (DCT) to justify the exhchange of gradient and expectation [34]. Since the expected QR loss gradient exists, we deduce that the estimate exists almost surely. Consider the absolute value of the gradient estimate , which serves as an upper bound to the gradient estimate. In order to apply DCT, we need to show the expectation of the absolute gradient is finite. Note we have

where (a) follows from the application of triangle inequality; (b) follows from the fact that the QR loss gradient against a fixed distribution is bounded [16].

With the application of DCT, we can exchange the gradient and expectation operator, which yields . ∎