The strong convergence phenomenon

Abstract.

In a seminal 2005 paper, Haagerup and Thorbjørnsen discovered that the norm of any noncommutative polynomial of independent complex Gaussian random matrices converges to that of a limiting family of operators that arises from Voiculescu’s free probability theory. In recent years, new methods have made it possible to establish such strong convergence properties in much more general situations, and to obtain even more powerful quantitative forms of the strong convergence phenomenon. These, in turn, have led to a number of spectacular applications to long-standing open problems on random graphs, hyperbolic surfaces, and operator algebras, and have provided flexible new tools that enable the study of random matrices in unexpected generality. This survey aims to provide an introduction to this circle of ideas.

2010 Mathematics Subject Classification:

60B20; 15B52; 46L53; 46L541. Introduction

The aim of this survey is to discuss recent developments surrounding the following phenomenon, which has played a central role in a series of breakthroughs in the study of random graphs, hyperbolic surfaces, and operator algebras.

Definition 1.1.

Let be a sequence of -tuples of random matrices of increasing dimension, and let be an -tuple of bounded operators on a Hilbert space. Then is said to converge strongly to if

for every and .

Here we recall that a noncommutative polynomial with matrix coefficients of degree is a formal expression

where are complex matrices. Such a polynomial defines a bounded operator whenever bounded operators are substituted for the free variables . When , this reduces to the classical notion of a noncommutative polynomial (we will then write ).

The significance of the strong convergence phenomenon may not be immediately obvious when it is encountered for the first time. Let us therefore begin with a very brief discussion of its origins.

The modern study of the spectral theory of random matrices arose from the work of physicists, especially that of Wigner and Dyson in the 1950s and 60s [129]. Random matrices arise here as generic models for real physical systems that are too complicated to be understood in detail, such as the energy level structure of complex nuclei. It is believed that universal features of such systems are captured by random matrix models that are chosen essentially uniformly within the appropriate symmetry class. Such questions have led to numerous developments in probability and mathematical physics, and the spectra of such models are now understood in stunning detail down to microscopic scales (see, e.g., [46]).

In contrast to these physically motivated models, random matrices that arise in other areas of mathematics often possess a much less regular structure. One way to build complex models is to consider arbitrary noncommutative polynomials of independent random matrices drawn from simple random matrix ensembles. It was shown in the seminal work of Voiculescu [126] that the limiting empirical eigenvalue distribution of such matrices can be described in terms of a family of limiting operators obtained by a free product construction. This is a fundamentally new perspective: while traditional random matrix methods are largely based on asymptotic explicit expressions or self-consistent equations satisfied by certain spectral statistics, Voiculescu’s theory provides us with genuine limiting objects whose spectral statistics are, in many cases, amenable to explicit computations. The interplay between random matrices and their limiting objects has proved to be of central importance, and will play a recurring role in the sequel.

While Voiculescu’s theory is extremely useful, it yields rather weak information in that it can only describe the asympotics of the trace of polynomials of random matrices. It was a major breakthrough when Haagerup and Thorbjørnsen [60] showed, for complex Gaussian (GUE) random matrices, that also the norm of arbitrary polynomials converges to that of the corresponding limiting object. This much more powerful property, which was the first instance of strong convergence, opened the door to many subsequent developments.

The works of Voiculescu and Haagerup–Thorbjørnsen were directly motivated by applications to the theory of operator algebras. The fact that polynomials of a family of operators can be approximated by matrices places strong constraints on the operator (von Neumann- or -)algebras generated by this family: roughly speaking, it ensures that such algebras are “approximately finite-dimensional” in a certain sense. These properties have led to the resolution of important open problems in the theory of operator algebras which do not a priori have anything to do with random matrices; see, e.g., [128, 99, 60].

The interplay between operator algebras and random matrices continues to be a rich source of problems in both areas; an influential recent example is the work of Hayes [65] on the Peterson–Thom conjecture (cf. section 5.4). In recent years, however, the notion of strong convergence has led to spectacular new applications in several other areas of mathematics. Broadly speaking, the importance of the strong convergence phenomenon is twofold.

-

Noncommutative polynomials are highly expressive: many complex structures can be encoded in terms of spectral properties of noncommutative polynomials.

-

Norm convergence is an extremely strong property, which provides access to challenging features of complex models.

Furthermore, new mathematical methods have made it possible to establish novel quantitative forms of strong convergence, which enable the treatment of even more general random matrix models that were previously out of reach.

We will presently highlight a number of themes that illustrate recent applications and developments surrounding strong convergence. The remainder of this survey is devoted to a more detailed introduction to this circle of ideas.

It should be emphasized at the outset that while I have aimed to give a general introduction to the strong convergence phenomenon and related topics, this survey is selectively focused on recent developments that are closest to my own interests, and is by no means comprehensive or complete. The interested reader may find complementary perspectives in surveys of Magee [88] and Collins [39], and is warmly encouraged to further explore the research literature on this subject.

1.1. Optimal spectral gaps

Let be a -regular graph with vertices. By the Perron-Frobenius theorem, its adjacency matrix has largest eigenvalue with eigenvector (the vector all of whose entries are one). The remaining eigenvalues are bounded by

The smaller this quantity, the faster does a random walk on mix. The following classical lemma yields a lower bound that holds for any sequence of -regular graphs. It provides a speed limit on how fast random walks can mix.

Lemma 1.2 (Alon–Boppana).

For any -regular graphs with vertices,

Given a universal lower bound on the nontrivial eigenvalues, the obvious question is whether there exist graphs that attain this bound. Such graphs have the largest possible spectral gap. One may expect that such heavenly graphs must be very special, and indeed the first examples of such graphs were carefully constructed using deep number-theoretic ideas by Lubotzky–Phillips–Sarnak [87] and Margulis [97]. It may therefore seem surprising that this property does not turn out to be all that special at all: random graphs have an optimal spectral gap [50].111Here we gloss over an important distinction between the explicit and random constructions: the former yields the so-called Ramanujan property , while the latter yields only which is the natural converse to Lemma 1.2 (cf. section 6.5).

Theorem 1.3 (Friedman).

For a random -regular graph on vertices,

We now explain that Theorem 1.3 may be viewed as a very special instance of strong convergence. This viewpoint will open the door to establishing optimal spectral gaps in much more general situations.

Let us begin by recalling that the proof of Lemma 1.2 is based on the simple observation that for any graph , the number of closed walks with a given length and starting vertex is lower bounded by the number of such walks in its universal cover . When is -regular, its universal cover is the infinite -regular tree. From this, it is not difficult to deduce that the maximum nontrivial eigenvalue of a -regular graph is asymptotically lower bounded by the spectral radius of the infinite -regular tree, which is [71, §5.2.2].

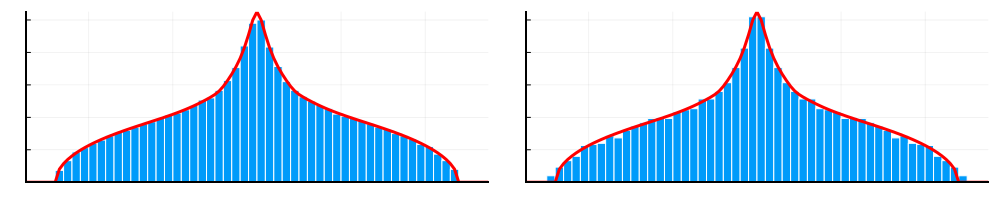

Theorem 1.3 therefore states, in essence, that the support of the nontrivial spectrum of a random -regular graph behaves as that of the infinite -regular tree. To make the connection more explicit, it is instructive to construct both the random graph and infinite tree in a parallel manner. For simplicity, we assume is even (the construction can be modified to the odd case as well).

Given a permutation , we can define edges between vertices by connecting each vertex to its neighbors and . This defines a -regular graph. To define a -regular graph, we repeat this process with permutations. If the permutations are chosen independently and uniformly at random from , we obtain a random -regular graph with adjacency matrix

where are i.i.d. random permutation matrices of dimension .222This is the permutation model of random graphs; see [50, p. 3] for its relation to other models.

To construct the infinite -regular tree in a parallel manner, we identify the vertices of the tree with the free group with free generators . Each vertex is then connected to its neighbors and for . This defines a -regular tree with adjacency matrix

where is defined by the left-regular representation , i.e., where is the coordinate vector of .

These models are illustrated in Figure 1.1 for , where the edges are colored according to their generator; e.g., and are the adjacency matrices of the red edges in the left and right figures, respectively.

In these terms, Theorem 1.3 states that

in probability. This is precisely the kind of convergence described by Definition 1.1, but only for one very special polynomial

Making a leap of faith, we can now ask whether the same conclusion might even hold for every noncommutative polynomial . That this is indeed the case is a remarkable result of Bordenave and Collins [19].

Theorem 1.4 (Bordenave–Collins).

Let and be defined as above. Then converges strongly to , that is,

for every and .333The reason that we must restrict to is elementary: the matrices have a Perron-Frobenius eigenvector , but the operators do not as (an infinite vector of ones is not in ). Thus we must remove the Perron-Frobenius eigenvector to achieve strong convergence.

Theorem 1.4 is a powerful tool because many problems can be encoded as special cases of this theorem by a suitable choice of . To illustrate this, let us revisit the optimal spectral gap phenomenon in a broader context.

In general terms, the optimal spectral gap phenomenon is the following. The spectrum of various kinds of geometric objects admits a universal bound in terms of that of their universal covering space. The question is then whether there exist such objects which meet this bound. In particular, we may ask whether that is the case for random constructions. Lemma 1.2 and Theorem 1.3 establish this for regular graphs. An analogous picture in other situations is much more recent:

-

It was shown by Greenberg [71, Theorem 6.6] that for any sequence of finite graphs with diverging number of vertices that have the same universal cover , the maximal nontrivial eigenvalue of is asymptotically lower bounded by the spectral radius of . On the other hand, given any (not necessarily regular) finite graph , there is a natural construction of random lifts with the same universal cover [71, §6.1]. It was shown by Bordenave and Collins [19] that an optimal spectral gap phenomenon holds for random lifts of any graph .

-

Any hyperbolic surface has the hyperbolic plane as its universal cover. Huber [77] and Cheng [35] showed that for any sequence of closed hyperbolic surfaces with diverging diameter, the first nontrivial eigenvalue of the Laplacian is upper bounded by the bottom of the spectrum of . Whether this bound can be attained was an old question of Buser [29]. An affirmative answer was obtained by Hide and Magee [69] by showing that an optimal spectral gap phenomenon holds for random covering spaces of hyperbolic surfaces.

The key ingredient in these breakthroughs is that the relevant spectral properties can be encoded as special instances of Theorem 1.4. How this is accomplished will be sketched in sections 5.1 and 5.2. In section 5.5, we will sketch another remarkable application due to Song [122] to minimal surfaces.

Another series of developments surrrounding optimal spectral gaps arises from a different perspective on Theorem 1.4. The map that assigns to each permutation the restriction of the associated permutation matrix to defines an irreducible representation of the symmetric group of dimension called the standard representation. Thus

where are independent uniformly distributed elements of . One may ask whether strong convergence remains valid if is replaced by other representations of . This and related optimal spectral gap phenomena in representation-theoretic settings are the subject of long-standing questions and conjectures; see, e.g., [119] and the references therein.

Recent advances in the study of strong convergence have led to major progress in the understanding of such questions [21, 32, 33, 90, 30]. One of the most striking results in this direction to date is the recent work of Cassidy [30], who shows that strong convergence for the symmetric group holds uniformly for all nontrivial irreducible representations of of dimension up to .444For comparison, all irreducible representations of have dimension . This makes it possible, for example, to study natural models of random regular graphs that achieve optimal spectral gaps using far less randomness than is required by Theorem 1.4. We will discuss these results in more detail in section 5.3.

1.2. Intrinsic freeness

We now turn to an entirely different development surrounding strong convergence that has enabled a sharp understanding of a very large class of random matrices in unexpected generality.

To set the stage for this development, let us begin by recalling the original strong convergence result of Haagerup and Thorbjørnsen [60]. Let be independent GUE matrices, that is, self-adjoint complex Gaussian random matrices whose off-diagonal elements have variance and whose distribution is invariant under unitary conjugation. The associated limiting object is a free semicircular family (cf. section 4.1). Define the random matrix

and the limiting operator

where are self-adjoint matrix coefficients.

Theorem 1.5 (Haagerup–Thorbjørnsen).

For and defined as above,555Here denotes the spectrum of , and we recall that the Hausdorff distance between sets is defined as .

It is a nontrivial fact, known as the linearization trick, that Theorem 1.5 implies that converges strongly to ; see section 2.5. This conclusion was used by Haagerup and Thorbjørnsen to prove an old conjecture that the invariant of the reduced -algebra of any countable free group with at least two generators is not a group. For our present purposes, however, the above formulation of Theorem 1.5 will be the most natural.

The result of Haagerup–Thorbjørnsen may be viewed as a strong incarnation of Voiculescu’s asymptotic freeness principle [126]. The limiting operators arise from a free product construction and are thus algebraically free (in fact, they are freely independent in the sense of Voiculescu). This makes it possible to compute the spectral statistics of by means of closed form equations, cf. section 4.1. The explanation for Theorem 1.5 provided by the asymptotic freeness principle is that since the random matrices have independent uniformly random eigenbases (due to their unitary invariance), they become increasingly noncommutative as which leads them to behave freely in the limit.

From the perspective of applications, however, the most interesting case of this model is the special case , that is, the random matrix defined by

where are independent standard Gaussians. Indeed, any self-adjoint random matrix with jointly Gaussian entries (with arbitrary mean and covariance) can be expressed in this form. This model therefore captures almost arbitrarily structured random matrices: if one could understand random matrices at this level of generality, one would capture in one fell swoop a huge class of models that arise in applications. However, since the matrices commute, the asymptotic freeness principle has no bearing on such matrices, and there is no reason to expect that has any significance for the behavior of .

It is therefore rather surprising that the spectral properties of arbitrarily structured Gaussian random matrices are nonetheless captured by those of in great generality. This phenomenon, developed in joint works of the author with Bandeira, Boedihardjo, Cipolloni, and Schröder [10, 11], is captured by the following counterpart of Theorem 1.5 (stated here in simplified form).

Theorem 1.6 (Intrinsic freeness).

For and be defined as above, we have

for all . Here is a universal constant,

and is the covariance matrix of the entries of .

Remark 1.7.

While Theorem 1.6 captures the edges of the spectrum of , analogous results for other spectral parameters (such as the spectral distribution) may be found in [10, 11]. These results are further extended to a large class of non-Gaussian random matrices in joint work of the author with Brailovskaya [23].

Theorem 1.6 states that the spectrum of behaves like that of as soon at the parameter is small. Unlike for the model , where noncommutativity is overtly introduced by means of unitarily invariant matrices, the mechanism for to behave as can only arise from the structure of the matrix coefficients . We call this phenomenon intrinsic freeness. It should not be obvious at this point why the parameter captures intrinsic freeness; the origin of this phenomenon (which was inspired in part by [60, 125]) and the mechanism that gives rise to it will be discussed in section 4.

In practice, Theorem 1.6 proves to be a powerful result as is indeed small in numerous applications, while the result applies without any structural assumptions on the random matrix . This is especially useful in questions of applied mathematics, where messy random matrices are par for the course. Several such applications are illustrated, for example, in [11, §3].

Another consequence of Theorem 1.6 is that the Haagerup–Thorbjørnsen strong convergence result extends to far more general situations. We only give one example for sake of illustration. A -sparse Wigner matrix is a self-adjoint real random matrix so that each row has exactly nonzero entries, each of which is an independent (modulo symmetry ) centered Gaussian with variance . In this case . Theorem 1.6 shows that if are independent -sparse Wigner matrices of dimension , then converges strongly to as soon as regardless of the choice of sparsity pattern. Unlike GUE matrices, such models need not possess any invariance and can have localized eigenbases. Even though this appears to dramatically violate the classical intuition behind asymptotic freeness, this model exhibits precisely the same strong convergence property as GUE (see Figure 1.2).

1.3. New methods in random matrix theory

The development of strong convergence has gone hand in hand with the discovery of new methods in random matrix theory. For example, Haagerup and Thorbjørnsen [60] pioneered the use of self-adjoint linerization (section 2.5), which enabled them to make effective use of Schwinger-Dyson equations to capture general polynomials (their work was extended to various classical random matrix models in [121, 7, 41]); while Bordenave and Collins [19, 21, 20] developed operator-valued nonbacktracking methods in order to efficiently apply the moment method to strong convergence.

More recently, however, two new methods for proving strong convergence have proved to be especially powerful, and have opened the door both to obtaining strong quantitative results and to achieving strong convergence in new situations that were previously out of reach. In contrast to previous approaches, these methods are rather different in spirit to those used in classical random matrix theory.

1.3.1. The interpolation method

The general principle captured by strong convergence (and by the earlier work of Voiculescu) is that the spectral statistics of families of random matrices behave of those of a family of limiting operators. In classical approaches to random matrix theory, however, the limiting operators do not appear directly: rather, one shows that the spectral statistics of these operators admit explicit expressions or closed-form equations, and that the random matrices obey these same expressions or equations approximately.

In contrast, the existence of limiting operators suggests that these may be exploited explicitly as a method of proof in random matrix theory. This is the basic idea behind the interpolation method, which was developed independently by Collins, Guionnet, and Parraud [40] to obtain a quantitative form of the Haagerup–Thorbjørnsen theorem, and by Bandeira, Boedihardjo, and the author [10] to prove the intrinsic freeness principle (Theorem 1.6).

Roughly speaking, the method works as follows. We aim to show that the spectral statistics of a random matrix behave as those of a limiting operator . To this end, one introduces a certain continuous interpolation between these objects, so that and . To bound the discrepancy between the spectral statistics of and , one can then estimate

where denotes the limiting trace (see section 2.1). If a good bound can be obtained for sufficiently general (we will choose for and ), convergence of the norm will follow as a consequence.

As stated above, this procedure does not make much sense. Indeed (a random matrix) and (a deterministic operator) do not even live in the same space, so it is unclear what it means to interpolate between them. Moreover, the general approach outlined above does not in itself explain why the derivative along the interpolation should be small: the latter is the key part of the argument that requires one to understand the mechanism that gives rise to free behavior. Both these issues will be explained in more detail in section 4, where we will sketch the main ideas behind the proof of Theorem 1.6.

1.3.2. The polynomial method

We now describe an entirely different method, developed in the recent work of Chen, Garza-Vargas, Tropp, and the author [32], which has led to a series of new developments.

Consider a sequence of self-adjoint random matrices with limiting operator ; one may keep in mind the example and in the context of Theorem 1.4. In many natural models, it turns out to be the case that spectral statistics of polynomial test functions can be expressed as

where is a rational function whose degree is controlled by the degree of the polynomial . Whenever this is the case, the fact that

is generally an immediate consequence. However, such soft information does not in itself suffice to reason about the norm.

The key observation behind the polynomial method is that classical results in the analytic theory of polynomials (due to Chebyshev, Markov, Bernstein, ) can be exploited to “upgrade” the above soft information to strong quantitative bounds, merely by virtue of the fact that is rational. The basic idea is to write

This is reminiscent of the interpolation method, where now instead of an interpolation parameter we “differentiate with respect to ”. In contrast to the interpolation method, however, the surprising feature of the present approach is that the derivative of can be controlled by means of completely general tools that do not require any understanding of the random matrix model. In particular, the analysis makes use of the following two classical facts about polynomials [34].

-

An inequality of A. Markov states that for every real polynomial of degree at most .

-

A corollary of the Markov inequality states that for any discretization of with spacing at most .

Applying these results to the numerator and denominator of the rational function yields with minimal effort a bound of the form

(the additional factor arises since we must restrict to the part of the interval where the spacing between the points is sufficiently small). Thus we obtain a strong quantitative bound in a completely soft manner.

In this form, the above method does not suffice to achieve strong convergence. To this end, two additional ingredients must be added.

-

1.

The above analysis requires the test function to be a polynomial. However, since the bound depends only polynomially on the degree of , one can use a Fourier-analytic argument to extend the bound to arbitrary smooth .

-

2.

The rate obtained above does not suffice to deduce convergence of the norm, since it can only ensure that has a bounded (rather than vanishing) number of eigenvalues outside the support of . To achieve strong convergence, we must expand to second (or higher) order and control the additional term(s).

Nonetheless, all these ingredients are essentially elementary and can be implemented with minimal problem-specific inputs.

The polynomial method will be discussed in detail in section 3, where we will illustrate it by giving an essentially complete proof of Theorem 1.4. That an elementary proof is possible at all is surprising in itself, given that previous methods required delicate and lengthy computations.

When it is applicable, the polynomial method has typically provided the best known quantitative results and has made it possible to address previously inaccessible questions. To date, this includes works of Magee and de la Salle [90] and of Cassidy [30] on strong convergence of high dimensional representations of the unitary and symmetric groups (see also [33]); strong convergence for polynomials with coefficients in subexponential operator spaces [33]; strong convergence of the tensor GUE model of graph products of semicircular variables (ibid.); a characterization of the unusual large deviations in Friedman’s theorem [32] as illustrated in Figure 1.3; and work of Magee, Puder and the author on strong convergence of uniformly random permutation representations of surface groups [92].

1.4. Organization of this survey

The rest of this survey is organized as follows.

Section 2 collects a number of basic but very useful properties of strong (and weak) convergence that are scattered throughout the literature. These properties also illustrate the fundamdental interplay between strong convergence and the operator algebraic properties of the limiting objects.

Section 3 provides a detailed illustration of the polynomial method: we will give an essentially self-contained proof of Theorem 1.4.

Section 4 is devoted to further discussion of the intrinsic freeness phenomenon. In particular, we aim to explain the mechanism that gives rise to it.

Section 5 discusses in more detail various applications of the strong convergence phenomenon that we introduced above. In particular, we aim to explain how the strong convergence property is used in these applications.

Finally, section 6 is devoted to a brief exposition of various open problems surrounding the strong convergence phenomenon.

2. Strong convergence

The aim of this section is to collect various general properties of strong convergence that are often useful. Many of these properties rely on operator algebraic properties of the limiting objects. We have aimed to make the presentation accessible to readers without any prior background in operator algebras.

2.1. -probability spaces

Let be an self-adjoint (usually random) matrix. We will be interested in understanding the empirical spectral distribution

(that is, is the fraction of the eigenvalues of that lies in the set ); and the spectral edges, that is, the extreme eigenvalues

or, more generally, the full spectrum as a set. In the models that we will consider, both these spectral features are well described by the corresponding features of a limiting operator as : convergence of the spectral distribution is weak convergence, and convergence of the spectral edges is strong convergence. These notions will be formally defined in the next section.666These notions capture the macroscopic features of the spectrum. A large part of modern random matrix theory is concerned with understanding the spectrum at the microscopic (or local) scale, that is, understanding the limit of the eigenvalues viewed as a point process. Such questions are rather different in spirit, as the behavior of the local statistics is expected to be universal and is not described by the spectral properties of limiting operators.

To do so, we must first give meaning to the spectral distribution and edges of the limiting operator . For the spectral edges, we may simply consider the norm or spectrum of which are well defined for bounded operators on any Hilbert space . However, the meaning of the spectral distribution of is not clear a priori. Indeed, since the empirical spectral distribution

is defined by the normalized trace,777We denote by the normalized trace of an matrix , and define by functional calculus (i.e., apply to the eigenvalues of while keeping the eigenvectors fixed). defining the spectral distribution of requires us to make sense of the normalized trace of infinite-dimensional operators. This is impossible in general, as any linear functional with the trace property for all must be trivial (this follows immediately by noting that when is infinite-dimensional, every element of can be written as the sum of two commutators [61]).

This situation is somewhat reminiscent of a basic issue of probability theory: one cannot define a probability measure on arbitrary subsets of an uncountable set, but must rather work with a suitable -algebra of sets for which the notion of measure makes sense. In the present setting, we cannot define a normalized trace for all bounded operators on an infinite-dimensional Hilbert space , but must rather work with a suitable algebra of operators on which the trace can be defined. These objects must satisfy some basic axioms.888We are a bit informal in our terminology: -algebras are usually defined as Banach algebras rather than as subalgebras of . However, as any -algebra may be represented in the latter form, our definition entails no loss of generality. What we call a trace should more precisely be called a tracial state. A crash course on the basic notions may be found in [106].

Definition 2.1 (-algebra).

A unital -algebra is an algebra of bounded operators on a complex Hilbert space that is self-adjoint ( implies ), contains the identity , and is closed in the operator norm topology.

Definition 2.2 (Trace).

A trace on a unital -algebra is a linear functional that is positive , unital , and tracial . A trace is called faithful if implies .

Definition 2.3 (-probability space).

A -probability space is a pair , where is a unital -algebra and is a faithful trace.

The simplest example of a -probability space is the algebra of matrices with its normalized trace . One may conceptually think of general -probability spaces as generalizations of this example.

Remark 2.4.

Most of the axioms in the above definitions are obvious analogues of the properties of . What may not be obvious at first sight is why we require to be closed in the norm topology. The reason is that it ensures that for any self-adjoint not only when is a polynomial (which follows merely from the fact that is an algebra), but also when is a continuous function, since the latter can be approximated in norm by polynomials. This property will presently be needed to define the spectral distribution.

Remark 2.5.

If we make the stronger assumption that is closed in the strong operator topology, is called a von Neumann algebra. This ensures that even when is a bounded measurable function. Von Neumann algebras form a major research area in their own right, but appear in this survey only in section 5.4.

Given a -probability space , we can now associate to each self-adjoint element , a spectral distribution by defining

for every continuous function . Indeed, that is positive and unital implies that is a positive and normalized linear functional on , so exists by the Riesz representation theorem.

This survey is primarily concerned with strong convergence, that is, with norms and not with spectral distributions. Nonetheless, it is generally the case that the only spectral statistics of random matrices that are directly computable are trace statistics (such as the moments ), so that a good understanding of weak convergence is prerequisite for proving strong convergence. In particular, we must understand how to recover the spectrum from the spectral distribution. It is here that the faithfulness of the trace plays a key role.

Lemma 2.6 (Spectral distribution and spectrum).

Let be a -probability space. Then for any self-adjoint , , we have and thus

Proof.

By the definition of support, if and only if there is a continuous nonnegative function so that and . On the other hand, by the spectral theorem, if and only if there is a continuous nonnegative function so that and . That therefore follows as if and only if , since is faithful and . ∎

We now introduce one of the most important examples of a -probability space. Another important example will appear in section 4.1.

Example 2.7 (Reduced group -algebras).

Let be a finitely generated group with generators , and be its left-regular representation, i.e., where is the coordinate vector of . Then

is called the reduced -algebra of . Here and in the sequel, denotes the -algebra generated by a family of operators (that is, the norm-closure of the set of all noncommutative polynomials in ).

The reduced -algebra of any group always comes equipped with a canonical trace that is defined for all by

where is the identity element. Note that:

-

It is straightforward to check that is indeed tracial: by linearity, it suffices to show that for all .

-

is also faithful: if , then for all by the trace property (since ), and thus .

Thus defines a -probability space.

Example 2.8 (Free group).

In the case that is a free group, we implicitly encountered the above construction in section 1.1. We argued there that the adjacency matrix of a random -regular graph is modelled by the operator

It follows immediately from the definition that the moments of the spectral distribution (defined by the canonical trace ) are given by

As the moments grow at most exponentially in , this uniquely determines . The density of was computed in a classic paper of Kesten [80, proof of Theorem 3], and is known as the Kesten distribution. Since the explicit formula for the density shows that , Lemma 2.6 yields

This explains the value of the norm that appears in Theorem 1.3.

2.2. Strong and weak convergence

We can now formally define the notions of weak and strong convergence of families of random matrices.

Definition 2.9 (Weak and strong convergence).

Let be a sequence of -tuples of random matrices, and let be an -tuple of elements of a -probability space .

-

is said to converge weakly to if for every

(2.1) -

is said to converge strongly to if for every

(2.2)

This definition appears to be slightly weaker than our initial definition of strong convergence in Definition 1.1, where we allowed for polynomials with matrix rather than scalar coefficients. We will show in section 2.4 that the apparently weaker definition in fact already implies the stronger one.

We begin by spelling out some basic properties, see for example [41, §2.1].

Lemma 2.10 (Equivalent formulations of weak convergence).

The following are equivalent.

-

a.

converges weakly to .

-

b.

Eq. (2.1) holds for every self-adjoint .

-

c.

For every self-adjoint , the empirical spectral distribution converges weakly to in probability.

Proof.

Since every polynomial can be written as for self-adjoint polynomials , the equivalence is immediate by linearity of the trace. Moreover, the implication is trivial since by the definition of the spectral distribution (and as is compactly supported).

On the other hand, since for every , (2.1) implies

in probability. As is compactly supported, convergence of moments implies weak convergence, and the implication follows. ∎

A parallel result holds for strong convergence.

Lemma 2.11 (Equivalent formulations of strong convergence).

The following are equivalent.

-

a.

converges strongly to .

-

b.

Eq. (2.2) holds for every self-adjoint .

-

c.

For every self-adjoint and , we have

-

d.

For every self-adjoint , we have

Proof.

Since for any operator and as is self-adjoint, it is immediate that . That is immediate as for any bounded self-adjoint operators .

To show that , we may choose for any a univariate real polynomial so that , where . Since (2.2) implies that with probability as , we obtain

with probability as by applying (2.2) to . That follows by taking .

To show that , choose so that for and otherwise. Since implies that in probability,

with probability as for every . On the other hand, for any , we may choose so that and for . Since implies that in probability,

with probability as for every . As can be covered by a finite number of such sets , the implication follows. ∎

The elementary equivalent formulations of weak and strong convergence discussed above are all concerned with the (real) eigenvalues of self-adjoint polynomials. In contrast, what implications weak or strong convergence may have for the empirical distributions of the complex eigenvalues of non-self-adjoint (or non-normal) polynomials is poorly understood; see section 6.9. We nonetheless record one easy observation in this direction [100, Remark 3.6].

Lemma 2.12 (Spectral radius).

Suppose that converges strongly to . Then

for every , where denotes the spectral radius of any (not necessarily normal) operator .

Proof.

This follows immediately from the fact that the spectral radius is upper semicontinuous with respect to the operator norm [62, §104]. ∎

2.3. Strong implies weak

While we have formulated weak and strong convergence as distinct phenomena, it turns out that strong convergence—or even merely a one-sided form of it—often automatically implies weak convergence. Such a statement should be viewed with suspicion, since the definition of weak convergence requires us to specify a trace while the definition of strong convergence is independent of the trace. However, it turns out that many -algebras have a unique trace, and this is precisely the setting we will consider.

Lemma 2.13 (Strong implies weak).

Let be a sequence of -tuples of random matrices, and let be an -tuple of elements of a -probability space . Consider the following conditions.

-

a.

For every

-

b.

converges weakly to .

-

c.

For every

Then , and if in addition has a unique trace.

Proof.

To prove , note that weak convergence implies

with probability for every , as . The conclusion follows by letting and applying Lemma 2.6.

To prove , let us first consider the special case that are nonrandom. Define a linear functional by

This is called the law of the family ; it has the same properties as the trace in Definition 2.2, but restricted only to polynomials. Note that by linearity, is fully determined by its value on all monomials.

Since for every monomial , the sequence is precompact in the weak∗-topology. Thus for every subsequence of the indices , there is a further subsequence so that pointwise for some law that satisfies the properties of a trace. On the other hand, condition ensures that

where the limits are taken along the subsequence. Thus extends by continuity to a trace on . Since the latter has the unique trace property, we must have , and thus we have proved weak convergence.

When are random, we note that condition implies (by Borel-Cantelli and as is separable) that for every subequence of indices , we can find a further subsequence along which for every a.s. The proof now proceeds as in the nonrandom case. ∎

The unique trace property turns out to arise frequently in practice. In particular, that has a unique trace for is a classical result of Powers [118], and a general characterization of countable groups so that has a unique trace is given by Breuillard–Kalantar–Kennedy–Ozawa [24]. In such situations, Lemma 2.13 shows that a strong convergence upper bound (condition ) already suffices to establish both strong and weak convergence in full. Establishing such an upper bound is the main difficulty in proofs of strong convergence.

2.4. Scalar, matrix, and operator coefficients

In Definition 2.9, we have defined the weak and strong convergence properties for polynomials with scalar coefficients. However, applications often require polynomials with matrix or even operator coefficients to encode the models of interest.999Let and be -probability spaces. If are elements of and is a polynomial with coefficients in , then lies in the algebraic tensor product . This viewpoint suffices for weak convergence. To make sense of strong convergence, however, we must define a norm on the tensor product. We will do so in the obvious way: Given and , we define the -algebra by and extend the trace accordingly. This construction is called the minimal tensor product of -probability spaces, and is often denoted . For simplicity, we fix the following convention: in this survey, the notation will always denote the minimal tensor product. We now show that such properties are already implied by their counterparts for scalar polynomials.

For weak convergence, this situation is easy.

Lemma 2.15 (Operator-valued weak convergence).

The following are equivalent.

-

a.

converges weakly to , i.e., for all

-

b.

For any -probability space and

Proof.

That is obvious. To prove , let us express concretely as with operator coefficients . Then clearly

where we denote for . Since yields for all , the conclusion follows. ∎

Unfortunately, the analogous equivalence for strong convergence is simply false at this level of generality; a counterexample can be constructed as in [33, Appendix A]. Nonetheless, strong convergence extends in complete generality to polynomials with matrix (as opposed to operator) coefficients. This justifies the apparently more general Definition 1.1 given in the introduction.

Lemma 2.16 (Matrix-valued strong convergence).

The following are equivalent.

-

a.

converges strongly to , i.e., for all

-

b.

For every and

Proof.

That is obvious. To prove , express as with , where denotes the standard basis of . We can therefore estimate

and analogously for . Here we used for the first inequality and the triangle inequality for the second. Thus yields

for probability as for every . Now note that since and for every , applying the above inequality to implies a fortiori that

for probability as . Taking completes the proof. ∎

Strong convergence of polynomials with operator coefficients requires additional assumptions. For example, if the coefficients are compact operators, strong convergence follows easily from Lemma 2.16 since compact operators can be approximated in norm by finite rank operators (i.e., by matrices).

A much weaker requirement is provided by the following property of -algebras. We give the definition in the form that is most relevant for our purposes; its equivalence to the original more algebraic definition (in terms of short exact sequences) is a nontrivial fact due to Kirchberg, see [115, Chapter 17] or [25].

Definition 2.17 (Exact -algebra).

A -algebra is called exact if for every finite-dimensional subspace and , there exists and a linear embedding such that

for every -algebra and .

We can now prove the following.

Lemma 2.18 (Operator-valued strong convergence).

Suppose that converges strongly to . Then we have

for every with coefficients in an exact -algebra .

Proof.

The exactness property turns out to arise frequently in practice. In particular, is exact [115, Corollary 17.10], as is for many other groups . For an extensive discussion, see [25, Chapter 5] or [6].

One reason that exactness is very useful in a strong convergence context is that it enables us construct complex strong convergence models by combining simpler building blocks, as will be explained briefly in section 5.4. Another useful application of exactness is that it enables an improved form of Lemma 2.13 with uniform bounds over polynomials with matrix coefficients of any dimension [90, §5.3].

2.5. Linearization

In the previous section, we showed that strong convergence of polynomials with scalar coefficients implies strong convergence of polynomials with matrix coefficients. If we allow for matrix coefficients, however, we can achieve a different kind of simplification: to establish strong convergence, it suffices to consider only polynomials with matrix coefficients of degree one. This nontrivial fact is often referred to as the linearization trick.

We first develop a version of the linearization trick for unitary families.

Theorem 2.19 (Unitary linearization).

Let be a sequence of -tuples of unitary random matrices, and let be an -tuple of unitaries in a -algebra . Then the following are equivalent.

-

a.

For every and self-adjoint of degree one,

-

b.

converges strongly to .

Theorem 2.19 is due to Pisier [114, 117], but the elementary proof we present here is due to Lehner [84, §5.1]. We will need a classical lemma.

Lemma 2.20.

For any operator in a -algebra , define its self-adjoint dilation in . Then and .

Proof.

We first note that . To show that the spectrum is symmetric, it suffices to note that is unitarily conjugate to since with . ∎

The main step in the proof of Theorem 2.19 is as follows.

Lemma 2.21.

Fix and for . Then there exist , and for such that

for any family of unitaries in any -algebra .

Proof.

Let . Lemma 2.20 yields with . We therefore obtain for any

where the second equality used that has a symmetric spectrum.

Now note that the block matrix is self-adjoint, and we can choose sufficiently large so that it is positive definite. Then we may write for . Now view as an block matrix with blocks , so that . Therefore with . To conclude we let , , and define by padding with zero columns. ∎

We can now conclude the proof of Theorem 2.19.

Proof of Theorem 2.19.

Fix any of degree at most , and let be unitaries in any -algebra . Denote by all monomials of degree at most in the variables . Then we may clearly express for some matrix coefficients . Lemma 2.21 yields of degree at most so that

Iterating this procedure times and using Lemma 2.20, we obtain a self-adjoint of degree at most one and a real polynomial so that

for any -tuple of unitaries in any -algebra . As this identity therefore applies also to , the implication follows immediately. The converse implication follows from Lemma 2.16. ∎

We have included a full proof of Theorem 2.19 to give a flavor of how the linearization trick comes about. In the rest of this section, we briefly discuss two additional linearization results without proof.

The proof of Theorem 2.19 relied crucially on the unitary assumption. It is tempting to conjecture that its conclusion extends to the non-unitary case. Unfortuntately, a simple example shows that this cannot be true.

Example 2.22.

Consider any and of degree one, that is, . Then the spectral theorem yields

for every self-adjoint operator . Now let be self-adjoint operators with and . Since the right-hand side of the above identity is the supremum of a convex function of , it is clear that for every of degree one. But clearly while .

This example shows that the norms of polynomials of degree one cannot detect gaps in the spectrum of a self-adjoint operator, while higher degree polynomials can. Thus the norm of degree one polynomials does not suffice for strong convergence in the self-adjoint setting. However, it was realized by Haagerup and Thorbjørnsen [60] that this issue can be surmounted by requiring convergence not just of the norm, but rather of the full spectrum, of degree one polynomials.

Theorem 2.23 (Self-adjoint linearization).

Let be a sequence of -tuples of self-adjoint random matrices, and let be an -tuple of self-adjoint elements of a -algebra . The following are equivalent.

-

a.

For every and self-adjoint of degree one,

-

b.

For every and self-adjoint

We omit the proof, which may be found in [60] or in [59] (see also [99, §10.3]). Let us note that while this theorem only gives an upper bound, the corresponding lower bound will often follow from Lemma 2.13.

Finally, while we have focused on strong convergence, linearization tricks for weak convergence can be found in the paper [45] of de la Salle. For example, we state the following result which follows readily from the proof of [45, Lemma 1.1].

Lemma 2.24 (Linearization and weak convergence).

Let be a -probability space. Then in the setting of Theorem 2.23, the following are equivalent.

-

a.

For every and self-adjoint of degree one,

-

b.

converges weakly to .

Why is linearization useful? It is often the case that one can perform computations more easily for polynomials of degree one than for general polynomials. For example, linearization played a key role in the Haagerup–Thorbjørnsen proof of strong convergence of GUE matrices [60] because the matrix Cauchy transform of polynomials of degree one can be computed by means of quadratic equations. Similarly, polynomials of degree one make the moment computations in the works of Bordenave and Collins [19, 21, 20] tractable. However, the interpolation and polynomial methods discussed in section 1.3 do not rely on linearization.

2.6. Positivization

The linearization trick of the previous section states that if we work with general matrix coefficients, it suffices to consider only polynomials of degree one. We now introduce (in the setting of group -algebras) a complementary principle: if we admit polynomials of any degree, it suffices to consider only polynomials with positive scalar coefficients. This positivization trick due to Mikael de la Salle appears in slightly different form in [90, §6.2].101010The form of this principle that is presented here was explained to the author by de la Salle.

The positivization trick will rely on another nontrivial operator algebraic property that we introduce presently. Let us fix a finitely generated group with generators , let be its left-regular representation, and let be the canonical trace on . For simplicity, we will denote . Then for any , we can uniquely express

| (2.3) |

for some coefficients that vanish for all but a finite number of . Moreover, it is readily verified using the definition of the trace that

We can now introduce the following property.

Definition 2.25 (Rapid decay property).

The group is said to have the rapid decay property if there exists constants so that

for all and of degree .

The key feature of this property is the polynomial dependence on degree . This is a major improvement over the trivial bound obtained by applying the triangle inequality and Cauchy–Schwarz, which would yield such an inequality with an exponential constant .

While the rapid decay property appears to be very strong, it is widespread. It was first proved by Haagerup [57] for the free group , for which rapid decay property is known as the Haagerup inequality. The rapid decay property is now known to hold for many other groups, cf. [31].

We are now ready to introduce the positivization trick. For simplicity, we formulate the result for the case of the free group (see Remark 2.27).

Lemma 2.26 (Positivization).

Let be a sequence of -tuples of unitary random matrices, and let be defined as above for (that is, ). Then the following are equivalent.

-

a.

For every self-adjoint

-

b.

converges strongly to .

Proof.

The implication is trivial. To prove , fix any . We may clearly assume without loss of generality that all the monomials of are reduced (i.e., do not contain consecutive letters or ), so that the coefficients of are precisely those that appear in the representation (2.3).

Let us write for defined by taking the real (imaginary) parts of the coefficients of . Since the polynomials are self-adjoint with real coefficients, we can write for self-adjoint defined by keeping only the positive (negative) coefficients of . Then we can estimate by the triangle inequality

On the other hand, note that

We can therefore estimate

for some , where is the degree of and we have applied the rapid decay property of in the first and last inequality. Thus implies that

for every of degree at most and some constants .

Now note that, for every , applying the above to yields

Taking yields the strong convergence upper bound, and the lower bound now follows from Lemma 2.13 since has the unique trace property. ∎

The positivization trick is very useful in the context of the polynomial method, as we will see in section 3. Let us however give a hint as to its significance.

For a self-adjoint polynomial with positive coefficients, we may interpret (2.3) as defining the adjacency matrix of a weighted graph with vertex set , where we place an edge with weight between every pair of vertices with and . Thus, for example, computing the moments of is in essence a combinatorial problem of counting the number of closed walks in this graph. This greatly facilitates the analysis of such quantities; for example, we can obtain upper bounds by overcounting some of the walks.

For a general choice of , we may still view as a kind of adjacency matrix of a graph with complex edge weights. This is a much more complicated object, however, since the moments of this operator may exhibit cancellations between different walks and can therefore no longer by treated as a counting problem. The surprising consequence of the positivization trick is that for the purposes of proving strong convergence, we can completely ignore these cancellations and restrict attention only to the combinatorial situation.

Remark 2.27.

The only part of the proof of Lemma 2.26 where we used is in the very first step, where we argued that we may assume that the coefficients of agree with those in the representation (2.3). For other groups , it is not clear that this is the case unless we assume that the matrices also satisfy the group relations, i.e., that where is a (random) unitary representation of . Under the latter assumption, Lemma 2.26 extends directly to any with the rapid decay and unique trace properties.

Alternatively, when the positivization trick is applied to the polynomial method, it is possible to apply a variant of the argument directly to the limiting object that appears in the proof, avoiding the need to invoke properties of the random matrices. This form of the positivization trick is developed in [90, §6.2] (cf. Remark 3.11).

3. The polynomial method

The polynomial method, which was introduced in the recent work of Chen, Garza-Vargas, Tropp, and the author [32], has enabled significantly simpler proofs of strong convergence and has opened the door to various new developments. The method was briefly introduced in section 1.3.2 above. In this section, we aim to provide a detailed illustration of this method by using it to prove strong convergence of random permutation matrices (Theorem 1.4).

We will follow a simplified form of the treatment in [32]. The simplifications arise for two reasons: we will make no attempt to get good quantitative bounds, enabling us to to use crude estimates in various places; and we will take advantage of the idea of [90] to significantly simplify one part of the argument by exploiting positivization. Aside from the use of standard results on polynomials and Schwartz distributions, the proof given here is essentially self-contained.

Despite its simplicity, what makes the polynomial method work appears rather mysterious at first sight. We will conclude this section with a discussion of the new phenomenon that is captured by this method (section 3.6).

3.1. Outline

In the following, we fix independent random permutation matrices and the limiting model as in Theorem 1.4. More precisely, recall that , where and are the free generators and left-regular representation of . We will view as living in the -probability space where denotes the canonical trace.

For notational purposes, it will be convenient to define and for . We analogously define and , and similarly and , for . We will think of as fixed, and all constants that appear in this section may depend on .

We begin by outlining the key ingredients that are needed to conclude the proof. These ingredients will then be developed in the remainder of this section.

3.1.1. Polynomial encoding

The first step of the analysis is to show that the expected traces of monomials of are rational expressions of .

Lemma 3.1.

For every and , there exist real polynomials and of degree at most so that for all

3.1.2. Asymptotic expansion

Now fix a self-adjoint noncommutative polynomial . Then for every univariate real polynomial , since is again a noncommutative polynomial, we immediately obtain

| (3.2) |

Here and are defined, a priori, as linear functionals on the space of all univariate real polynomials (of course, also depend on the choice of , but we will view as fixed throughout the argument).

The core of the proof is now to show that the expansion (3.2) is valid not only for polynomial test functions , but even for arbitrary smooth test functions . It is far from obvious why this should be the case; for example, it is conceivable that there could exist smooth test functions for which weak convergence takes place at a rate slower than the rate for polynomial . If that were to be the case, then would not even make sense for smooth . We will show, however, that this hypothetical scenario is not realized.

Recall that a linear functional on is called a compactly supported (Schwartz) distribution (see [72, Chapter II]) if

holds for some constants and .

Proposition 3.2.

3.1.3. The infinitesimal distribution

As , Lemma 2.6 yields

The final ingredient of the proof is to show that satisfies the same bound. By the positivization trick, it suffices to assume that has positive coefficients.

Lemma 3.3.

For every choice of self-adjoint , we have

To prove Lemma 3.3 we face a conundrum: while we know abstractly that is a compactly supported distribution, we are only able to compute its value for polynomial test functions (as we have an explicit formula for in section 3.1.1). To surmount this issue, we will use the following general fact [32, Lemma 4.9]: for any compactly supported distribution , we have

Thus it suffices to show that

which is tractable as we have access to the moments of . It is this moment estimate that is greatly simplified by the assumption that has positive coefficients.

3.1.4. Proof of Theorem 1.4

We now use these ingredients to conclude the proof.

Proof of Theorem 1.4.

Fix and a self-adjoint with positive coefficients. Moreover, let be a nonnegative smooth function that vanishes in a neighborhood of and such that for .

We now turn to the proofs of the various ingredients described above.

3.2. Polynomial encoding

The aim of this section is to prove Lemma 3.1. We follow [85]; see also [105, 42]. We begin by noting that

so that it suffices to compute the rightmost expectation. Clearly

A tuple is realizable if the corresponding summand is nonzero. Denote by the set of all realizable tuples.

To bring out the dependence on dimension , we note that by symmetry, the expectation inside the above sum only depends on how many distinct pairs of indices appear for each permutation matrix. To encode this information, we associate to each a directed edge-colored graph as follows. Number each distinct value among by order of appearance, and assign to each a vertex. Now draw an edge colored from one vertex to another if or appears in the expectation, where are the values associated to the first and second vertex, respectively; see Figure 3.1.

Denote by the set of graphs thus constructed, and note that this set is independent of . For each such graph with vertices, we can recover all associated uniquely by assigning distinct values of to its vertices. There are ways to do this. If the graph has edges with color , then the corresponding expectation for each such is

since the random variable in the expectation is the event that for each , the permutation matrix has of its rows fixed as specified by the realizable tuple . Here we presumed that , which ensures that and .

In summary, we have proved the following.

Lemma 3.4.

For every and , we have

The proof of Lemma 3.1 is now straightforward.

Proof of Lemma 3.1.

We can rewrite the above lemma as

where is the total number of edges in . As every is connected by construction, we have and thus the right-hand side is a rational function of .

Define a polynomial of degree by

Since for all , it is clear that is a polynomial of degree at most for some constant (which depends on ). ∎

We can now read off the first terms in the -expansion. Recall that is called a proper power if for some , , and is called a non-power otherwise. Every can be written uniquely as for a non-power .

Corollary 3.5.

We have

Moreover, if for a non-power , then

where denotes the number of divisors of .

Proof.

If , it is obvious that . We therefore assume this is not the case. We may further assume that is cyclically reduced, since the left-hand side of Lemma 3.1 is unchanged under cyclic reduction. Then every vertex of any must have degree at least two.

For the first identity, it now suffices to note that there cannot exist with : this would imply that is a tree, which must have a vertex of degree one. Thus the expression in the proof of Lemma 3.1 yields .

We can similarly read off from the proof of Lemma 3.1 that

That implies (as each vertex has degree at least two) that is a cycle. As defines a closed nonbacktracking walk in , it must go around the cycle an integer number of times, so the possible lengths of cycles are the divisors of . ∎

3.3. The master inequality

We now proceed to the core of the polynomial method. Our main tool is the following inequality of A. Markov [34, p. 91].

Lemma 3.6 (Markov inequality).

For any real polynomial of degree and

As well known consequence of the Markov inequality is that a bound on a polynomial on a sufficiently fine grid extends to a uniform bound [34, p. 91]. For completeness, we spell out the argument in the form we will need it.

Corollary 3.7.

For any real polynomial of degree and , we have

Proof.

For any , its distance to the set is at most . Thus

by the Markov inequality. The conclusion follows. ∎

In the following, we fix a self-adjoint of degree . For every polynomial test function of degree , Lemma 3.1 yields

where are real polynomials of degree at most and is defined in the proof of Lemma 3.1. We define for as in (3.2), and denote by the sum of the moduli of the coefficients of . Note that all the above objects depend on the choice of , which we consider fixed.

The key idea is to use the Markov inequality to bound the derivatives of .

Lemma 3.8.

For any of degree , we have

for all , where is a constant (which depends on ).

Proof.

It is easily verified using the explicit expression for in the proof of Lemma 3.1 that there are constants (which depend on ) so that

for all . We now simply apply the chain rule. For the first derivative,

using Lemma 3.6. But Corollary 3.7 yields

where we used in the second inequality and that in the last inequality. The bound on is obtained in a completely analogous manner. ∎

We now easily obtain a quantitative form of (3.2).

Corollary 3.9 (Master inequality).

For every of degree and ,

as well as .

Proof.

The bound on follows immediately from Lemma 3.8 as . Now note that the left-hand side of the equation display in the statement equals

Thus the bound in the statement follows for from Lemma 3.8. On the other hand, when , we can trivially bound

by the triangle inequality, as is supported in , and using the bound on . The conclusion follows using . ∎

3.4. Extension to smooth functions

We are now ready to prove Proposition 3.2. To this end, we will show that Corollary 3.9 can be extended to smooth test functions using a simple Fourier-analytic argument.

Recall that the Chebyshev polynomial (of the first kind) is the polynomial of degree defined by . Any of degree can be written as

for some real coefficients . Note that the latter are merely the Fourier coefficients of the function defined by .

Proof of Proposition 3.2.

Fix any and let be its Chebyshev coefficients as above. As for all , we can estimate

using the estimate on in Corollary 3.9. Now note that is the th Fourier coefficient of the th derivative of . We can therefore estimate

by Cauchy–Schwarz and Parseval, where the last inequality is obtained by applying the chain rule to . Since this estimate holds for all , the definition of extends uniquely by continuity to any . In particular, extends to a compactly supported distribution.

3.5. The infinitesimal distribution

It remains to prove Lemma 3.3. As was explained in section 3.1.3, this result follows immediately from the following lemma, whose proof uses a spectral graph theory argument due to [49, Lemma 2.4].

Lemma 3.10.

Assume that has positive coefficients. Then

To set up the proof, let us fix a noncommutative polynomial of degree with positive coefficients. By homogeneity, we may assume without loss of generality that the coefficients sum to one. It will be convenient to express

where are random variables such that . Now let be independent copies of for , so that we have . Then we can apply Corollary 3.5 to compute

where denotes the set of non-powers in .111111Here we used that if , since .

Proof of Lemma 3.10.

We would like to argue that if a word , it must be a concatenation of words that reduce to . This is only true, however, if is cyclically reduced: otherwise the last letters of may cancel the first letters of the next repetition of , and the cancelled letters need not appear in our word. The correct version of this statement is that there exist with (where is the cyclic reduction of ) so that every word that reduces to is a concatenation of words that reduce to . Thus

To relate this bound to the spectral properties of , we make the simple observation that the indicators above can be expressed as matrix elements

If we substitute the formula in the above inequality, and then take the expectation with respect to each independent block of variables that lies entirely inside one of the matrix elements, we obtain

with

where , , , and we write and for simplicity.

The crux of the proof is now to note that as

it follows readily using Cauchy–Schwarz that

since each contains at most variables other than . As and , the conclusion follows directly from the expression for stated before the proof. ∎

Remark 3.11.

The proof of Lemma 3.10 relies on positivization: since all the terms in the proof are positive, we are able to obtain upper bounds by overcounting as in the first equation display of the proof. While this argument only applies in first instance to polynomials with positive coefficients, strong convergence for arbitrary polynomials then follows a posteriori by Lemma 2.26.

It is also possible, however, to apply a variant of the positivization trick directly to . This argument [90, §6.2] shows that the validity of Lemma 3.10 for polynomials with positive coefficients already implies its validity for all self-adjoint polynomials (even with matrix coefficients), so that the polynomial method can be applied directly to general polynomials. The advantage of this approach is that it yields much stronger quantitative bounds than can be achieved by applying Lemma 2.26. Since we have not emphasized the quantitative features of the polynomial method in our presentation, we do not develop this approach further here.

3.6. Discussion: on the role of cancellations

When encountered for the first time, the simplicity of proofs by the polynomial method may have the appearance of a magic trick. An explanation for the success of the method is that it uncovers a genuinely new phenomenon that is not captured by classical methods of random matrix theory. Now that we have provided a complete proof of Theorem 1.4 by the polynomial method, we aim to revisit the proof to highlight where this phenomenon arises. For simplicity, we place the following discussion in the context of random matrices with limiting operator ; the reader may keep in mind

in the context of Theorem 1.4 and its proof.

3.6.1. The moment method

It is instructive to first recall the classical moment method that is traditionally used in random matrix theory. Let us take for granted that converges weakly to , so that

| (3.3) |

as with fixed. The premise of the moment method is that if it could be shown that this convergence remains valid when is allowed to grow with at rate , then a strong convergence upper bound would follow: indeed, since , we could then estimate

where we used that for .

There are two difficulties in implementing the above method. First, establishing (3.3) for can be technically challenging and often requires delicate combinatorial estimates. When is fixed, we can write an expansion

(this is immediate, for example, from (3.1)) and establishing (3.3) requires us to understand only the lowest-order term . In contrast, when the coefficients themselves grow faster than polynomially in , so that it is necessary to understand the terms in the expansion to all orders.

In the setting of Theorem 1.4, however, there is a more serious problem: (3.3) is not just difficult to prove, but actually fails altogether.

Example 3.12.

Consider the permutation model of -regular random graphs as in Theorem 1.3, so that where is the adjacency matrix. We claim that with probability at least . As , this implies

contradicting the validity of (3.3) for .

To prove the claim, note that any given point of is simultenously a fixed point of the random permutations with probability . Thus with probability at least , random graph has a vertex with self-loops which is disconnected from the rest of the graph, so that has eigenvalue with multiplicity at least two. The latter cleatly implies that .

Example 3.12 shows that the appearance of outliers in the spectrum with polynomially small probability presents a fundamental obstruction to the moment method. In random graph models, this situation arises due to the appearance of “bad” subgraphs, called tangles. Previous proofs [50, 18, 19] of optimal spectral gaps in this setting must overcome these difficulties by conditioning on the absence of tangles, which significantly complicates the analysis and has made it difficult to adapt these methods to more challenging models.121212A notable exception being the work of Anantharaman and Monk on random surfaces [4, 5].

3.6.2. A new phenomenon

The polynomial method is essentially based on the same input as the moment method: we consider the spectral statistics

where is any real polynomial of degree , and aim to compare these with the spectral statistics of . Since we have shown in Example 3.12 that each moment can be larger than its limiting value by a factor exponential in the degree, that is, for , it seems inevitable that must be poorly approximated by for high degree polynomials . The surprising feature of the polynomial method is that it defies this expectation: for example, a trivial modification of the proof of Corollary 3.9 yields the bound

| (3.4) |

which depends only polynomially on the degree .

There is of course no contradiction between these observations: if we choose in (3.4), then and we recover the exponential dependence on degree that was observed in Example 3.12. On the other hand, (3.4) shows that the dependence on the degree becomes polynomial when is uniformly bounded on the interval . Thus the polynomial method reveals an unexpected cancellation phenomenon that happens when the moments are combined to form bounded test functions .

The idea that classical tools from the analytic theory of polynomials, such as the Markov inequality, make it possible to capture such cancellations lies at the heart of the polynomial method. These cancellations would be very difficult to realize by a direct combinatorial analysis of the moments. The reason that this phenomenon greatly simplifies proofs of strong convergence is twofold. First, it only requires us to understand the -expansion of the moments to first order, rather than to every order as would be required by the moment method. Second, this eliminates the need to deal with tangles, since tangles do not appear in the first-order term in the expansion. (The tangles are however visible in the higher order terms, which gives rise to the large deviations behavior in Figure 1.3.)

Remark 3.13.

We have contrasted the polynomial method with the moment method since both rely only on the ability to compute moments . Beside the moment method, another classical method of random matrix theory is based on resolvent statistics such as . This approach was used by Haagerup–Thorbjørnsen [60] and Schultz [121] to establish strong convergence for Gaussian ensembles, where strong analytic tools are available. It is unclear, however, how such quantities can be computed or analyzed in the context of discrete models as in Theorem 1.4. Nonetheless, let us note that the recent works [76, 74, 75] have successfully used such an approach in the setting of random regular graphs.

4. Intrinsic freeness

The aim of this section is to explain the origin of the intrinsic freeness phenomenon that was introduced in section 1.2. Since Theorem 1.6 requires a number of technical ingredients whose details do not in themselves shed significant light on the underlying phenomenon, we defer to [10, 11] for a complete proof. Instead, we aim to give an informal discussion of the key ideas behind the proof: in particular, we aim to explain the underlying mechanism.

Before we can do so, however, we must first describe the limiting object and explain why it is useful in practice, which we will do in section 4.1. We subsequently sketch some key ideas behind the proof of Theorem 1.6 in section 4.2.

4.1. The free model

To work with Gaussian random matrices, we must recall how to compute moments of independent standard Gaussians : given any , the Wick formula [106, Theorem 22.3] states that

where denotes the set of pairings of (that is, partitions into blocks of size two). This classical result is easily proved by induction on using integration by parts. A convenient way to rewrite the Wick formula is to introduce for every and random variables with the same law as so that for , and are independent otherwise. Then

as the expectation in the sum factors as .

What happens if we replace the scalar Gaussians by independent GUE matrices ? To explain this, we need the following notion: a pairing has a crossing if there exist pairs so that . If we represent by drawing each element of as a vertex on a line, and drawing a semicircular arc between the vertices in each pair , the pairing has a crossing precisely when two of the arcs cross; see Figure 4.1.

Lemma 4.1.

We have

where denotes the set of noncrossing pairings.

Proof.

Define analogously to above. Then

by the Wick formula. Consider first a noncrossing pairing . Since pairs cannot cross, there must be an adjacent pair , and if this pair is removed we obtain a noncrossing pairing of . As ,131313This follows from a simple explicit computation using the following characterization of GUE matrices: is a self-adjoint matrix whose entries above the diagonal are i.i.d. complex Gaussians and entries on the diagonal are i.i.d. real Gaussians with mean zero and variance . we obtain

by repeatedly taking the expectation with respect to an adjacent pair.

On the other hand, if is an independent copy of , we can computefootnote 13

| (4.1) |

for any matrices that are independent of . Thus

as whenever is a crossing pairing. ∎

In view of Lemma 4.1, the significance of the following definition of the limiting object associated to independent GUE matrices is self-evident.

Definition 4.2 (Free semicircular family).

A family of self-adjoint elements of a -probability space such that

for all and is called a free semicircular family.

Free semicircular families can be constructed in various ways, guaranteeing their existence; see, e.g. [106, pp. 102–108]. Lemma 4.1 states that a family of independent GUE matrices converges weakly to a free semicircular family .

Remark 4.3.