Department of Creative Informatics

Graduate School of Information Science and Technology

June \degreeyear1990 \thesisdateMay 18, 1990

William J. SupervisorAssociate Professor

Arthur C. ChairmanChairman, Department Committee on Graduate Theses

The University of Tokyo

Dedicated with love to my mother, for always believing and supporting;

and to my sister, for her unbounded trust and inspiration.

Acknowledgments

Most of all, I want to thank my mother and sister for raising me to value education and science—I would not be writing this thesis without their life-long dedicated support and disarming warmth. My second deepest appreciation goes to Prof. Hideki Nakayama for taking me under his wing and supporting me to explore creative and informative ideas, just like our department name. He kindly allowed me to challenge many different ideas despite my shortcomings. I am grateful to Research Center for Medical Big Data staff Prof. Fuyuko Kido, Dr. Youichirou Ninomiya, Fumika Tamura, and Prof. Shin’ichi Satoh, for offering me amazing research opportunities and helping me apply for Japanese permanent residency. In particular, I always enjoyed discussions and consequent publications together with you, Prof. Murao Kohei.

Many thanks to all people committed to our GAN projects. To physicians, including Dr. Yusuke Kawata and Dr. Fumiya Uchiyama (National Center for Global Health and Medicine), for their brilliant clinical insights and contributions. Especially, I owe entirely to Dr. Tomoyuki Noguchi (Kyushu Medical Center) for always sparing no effort to protect health and save lives with state-of-the-art technology—he introduced me many competent physicians for evaluating our GAN applications’ clinical relevance. Dr. Yujiro Furukawa (Jikei University School of Medicine) has always supported our GAN projects from the very first project, trusting its potential despite repeated urgent requests. Dr. Kazuki Umemoto (Juntendo University School of Medicine) is AI-enthusiastic and very passionate about advancing healthcare.

This thesis would not have been achievable without Ryosuke Araki (Chubu University) and Prof. Hideaki Hayashi (Kyushu University) because we launched and carried out these tough but fruitful projects together for so long. During my internship at FujiFilm with Dr. Yoshiro Kitamura, Akira Kudo, Akimichi Ichinose, and Dr. Yuanzhong Li, my best memory was drinking endless free beer.

I appreciate those participated in our questionnaire survey and GCL workshop: Dr. Yoshitaka Shida, Dr. Ryotaro Kamei (National Center for Global Health and Medicine), Dr. Toshifumi Masuda (Kyushu Medical Center), Dr. Ryoko Egashira, Dr. Yoshiaki Egashira, Dr. Tetsuya Kondo (Saga University), Dr. Takafumi Nemoto (Keio University School of Medicine), Dr. Yuki Kaji, Miwa Aoki Uwadaira, Hajime Takeno (The University of Tokyo), and Kohei Yamamoto (Corpy&Co., Inc.).

My sincere thanks go to Prof. Takeo Igarashi, Prof. Tatsuya Harada, Prof. Kazuhiko Ohe, Prof. Manabu Tsukada, and Prof. Murao Kohei for refereeing my Ph.D. thesis. Including Prof. Tsukada’s mentoring, I was privileged to have continuous financial and human support from GCL program of my University by MEXT.

Clinically valuable research requires international/interdisciplinary collaboration. Backed up by the GCL, I achieved this goal thanks to Dr. Leonardo Rundo, real friend who invited me to Università degli Studi di Milano-Bicocca twice, University of Cambridge once, and his hometown in Sicily twice. After working hard together everyday from everywhere, he understands this thesis better than anyone in the world. Special thanks also to Prof. Giancarlo Mauri on his 70th birthday in Milan and Prof. Evis Sala in Cambridge, along with the other (mostly Italian) guys in their labs, including Prof. Daniela Besozzi, Prof. Paolo Cazzaniga, Prof. Marco Nobile, Dr. Andrea Tangherloni, and Simone Spolaor. After visiting 19 Italian cities, sono quasi Italiano! Nicolas Y. Kröner was my geek tutor at Technische Universität München.

I wish to thank all my pleasant friends for making my Ph.D. days enjoyable and memorable in these intense years. Cheers to our long friendship, UTDS members, including Marishi Mochida and Kazuki Taniyoshi. Again, congratulations on your happy wedding, Yuki/Mari Inoue and Akito/Hitomi Misawa. Moreover, I shared far too many hilarious memories with KEK members Zoltán Ádám Milacski and Florian Gesser. Kurumi Nagasako drew this thesis’ very first figure. Prof. Yumiko Furuichi and Miwako Hayasaka are always cheerful and smiling like Mother Teresa.

“My true title of glory is that I will live forever” Last, but not least, I would like to thank Napoleon Bonaparte who taught me that nothing is impossible. With courage and hope, I could conduct this research for both human prosperity and individual happiness. Listening to Beethoven’s Symphony No.3 Eroica in the morning, I always try to be an everyday hero with great ambition like you. Your reign will never cease.

Abstract

Convolutional Neural Networks (CNNs) can play a key role in Medical Image Analysis under large-scale annotated datasets. However, preparing such massive dataset is demanding. In this context, Generative Adversarial Networks (GANs) can generate realistic but novel samples, and thus effectively cover the real image distribution. In terms of interpolation, the GAN-based medical image augmentation is reliable because medical modalities can display the human body’s strong anatomical consistency at fixed position while clearly reflecting inter-subject variability; thus, we propose to use noise-to-image GANs (e.g., random noise samples to diverse pathological images) for (i) medical Data Augmentation (DA) and (ii) physician training. Regarding the DA, the GAN-generated images can improve Computer-Aided Diagnosis based on supervised learning. For the physician training, the GANs can display novel desired pathological images and help train medical trainees despite infrastructural/legal constraints. This thesis contains four GAN projects aiming to present such novel applications’ clinical relevance in collaboration with physicians. Whereas the methods are more generally applicable, this thesis only explores a few oncological applications.

In the first project, after proposing the two applications, we demonstrate that GANs can generate realistic/diverse 128 128 whole brain Magnetic Resonance (MR) images from noise samples—despite difficult training, such noise-to-image GAN can increase image diversity for further performance boost. Even an expert fails to distinguish the synthetic images from the real ones in Visual Turing Test.

The second project tackles image augmentation for 2D classification. Most CNN architectures adopt around 256 256 input sizes; thus, we use the noise-to-noise GAN, Progressive Growing of GANs (PGGANs), to generate realistic/diverse 256 256 whole brain MR images with/without tumors separately. Multimodal UNsupervised Image-to-image Translation further refines the synthetic images’ texture and shape. Our two-step GAN-based DA boosts sensitivity 93.7% to 97.5% in 2D tumor/non-tumor classification. An expert classifies a few synthetic images as real.

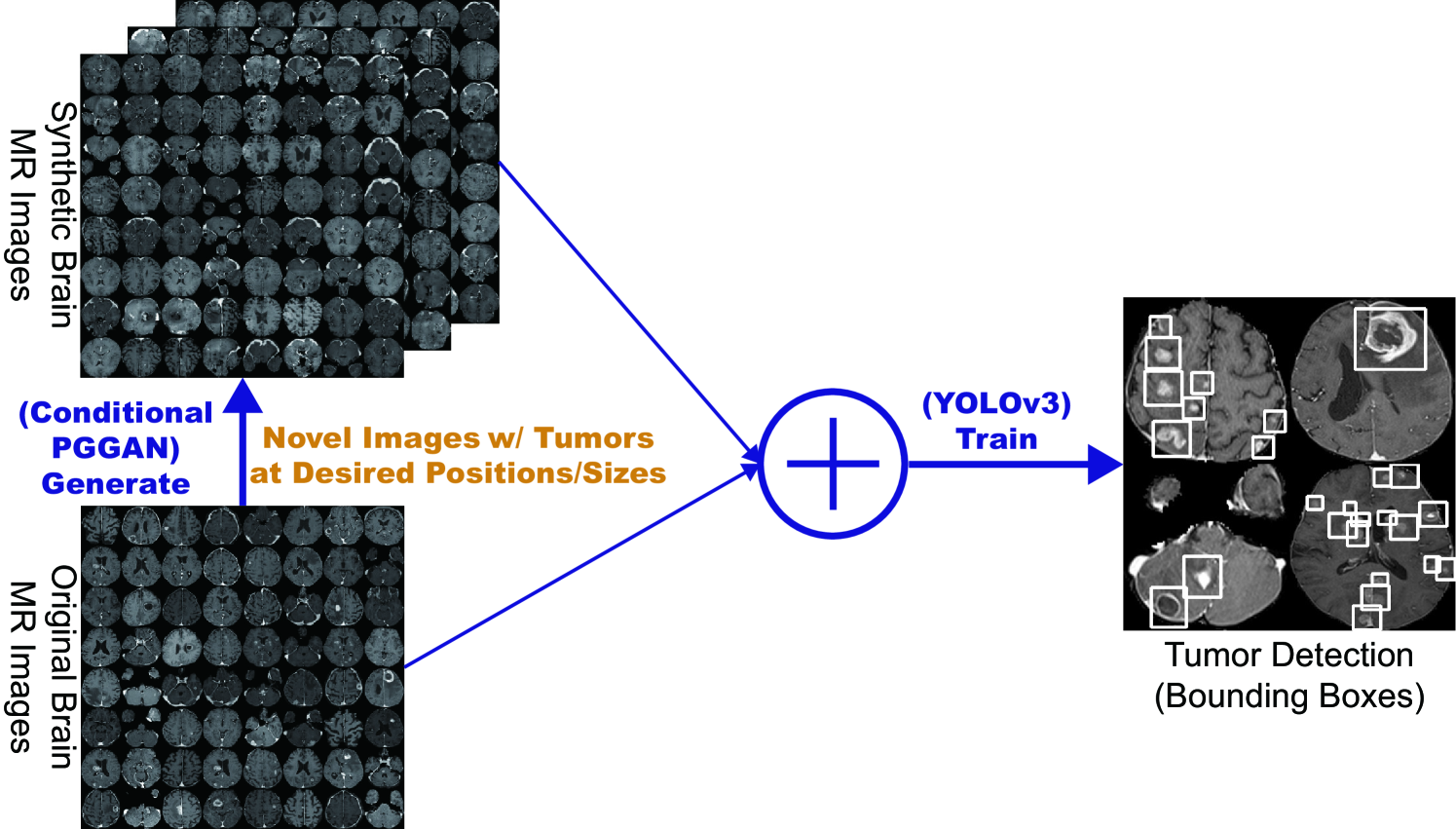

The third project augments images for 2D detection. Further DA applications require pathology localization for detection and advanced physician training needs atypical image generation, respectively. To meet both clinical demands, we propose Conditional PGGANs (CPGGANs) that incorporates highly-rough bounding box conditions incrementally into the noise-to-image GAN (i.e., the PGGANs) to place realistic/diverse brain metastases at desired positions/sizes on MR images; the bounding box-based detection requires much less physicians’ annotation effort than segmentation. Our CPGGAN-based DA boosts sensitivity 83% to 91% in tumor detection with clinically acceptable additional False Positives (FPs). In terms of extrapolation, such pathology-aware GANs are promising because common and/or desired medical priors can play a key role in the conditioning—theoretically, infinite conditioning instances, external to the training data, exist and enforcing such constraints have an extrapolation effect via model reduction.

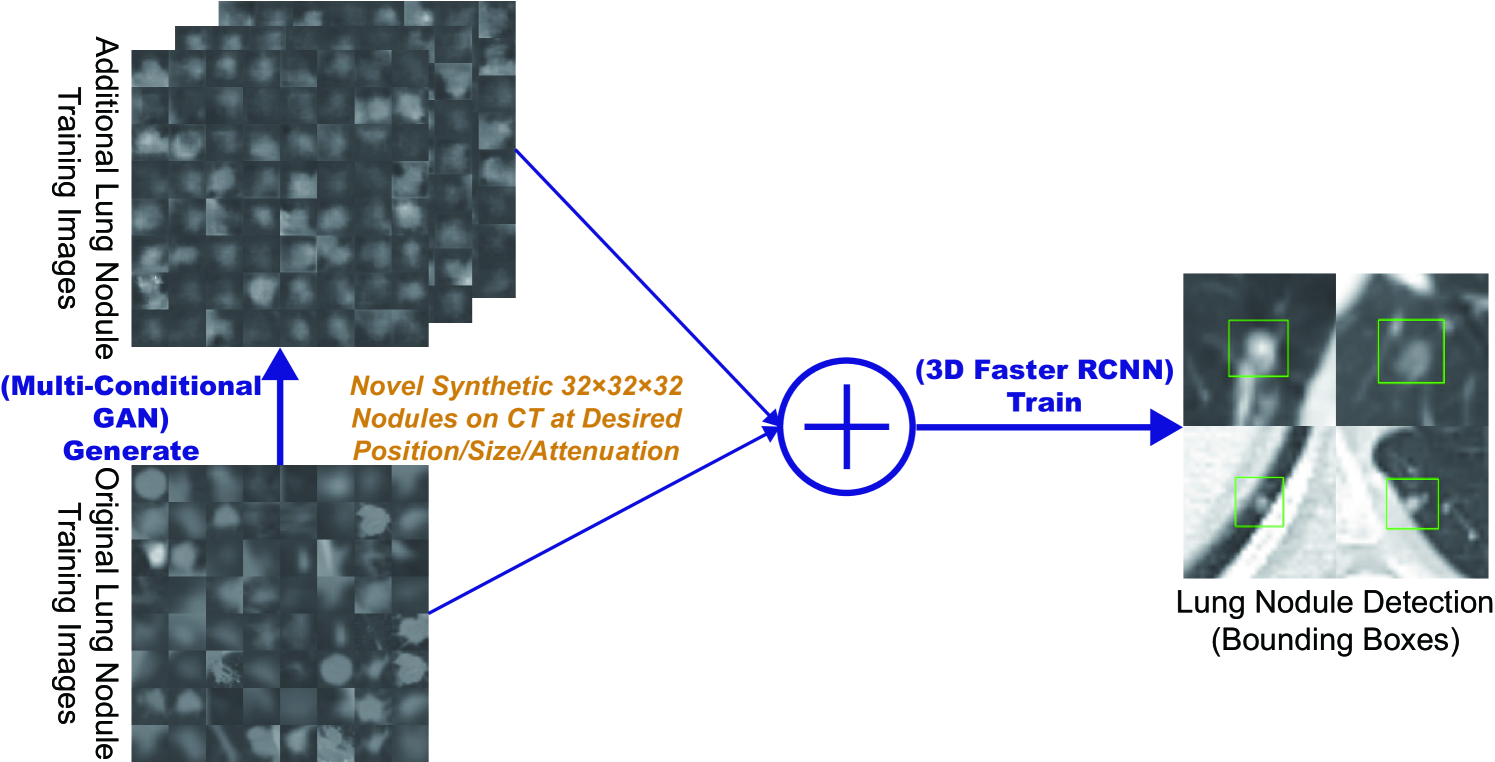

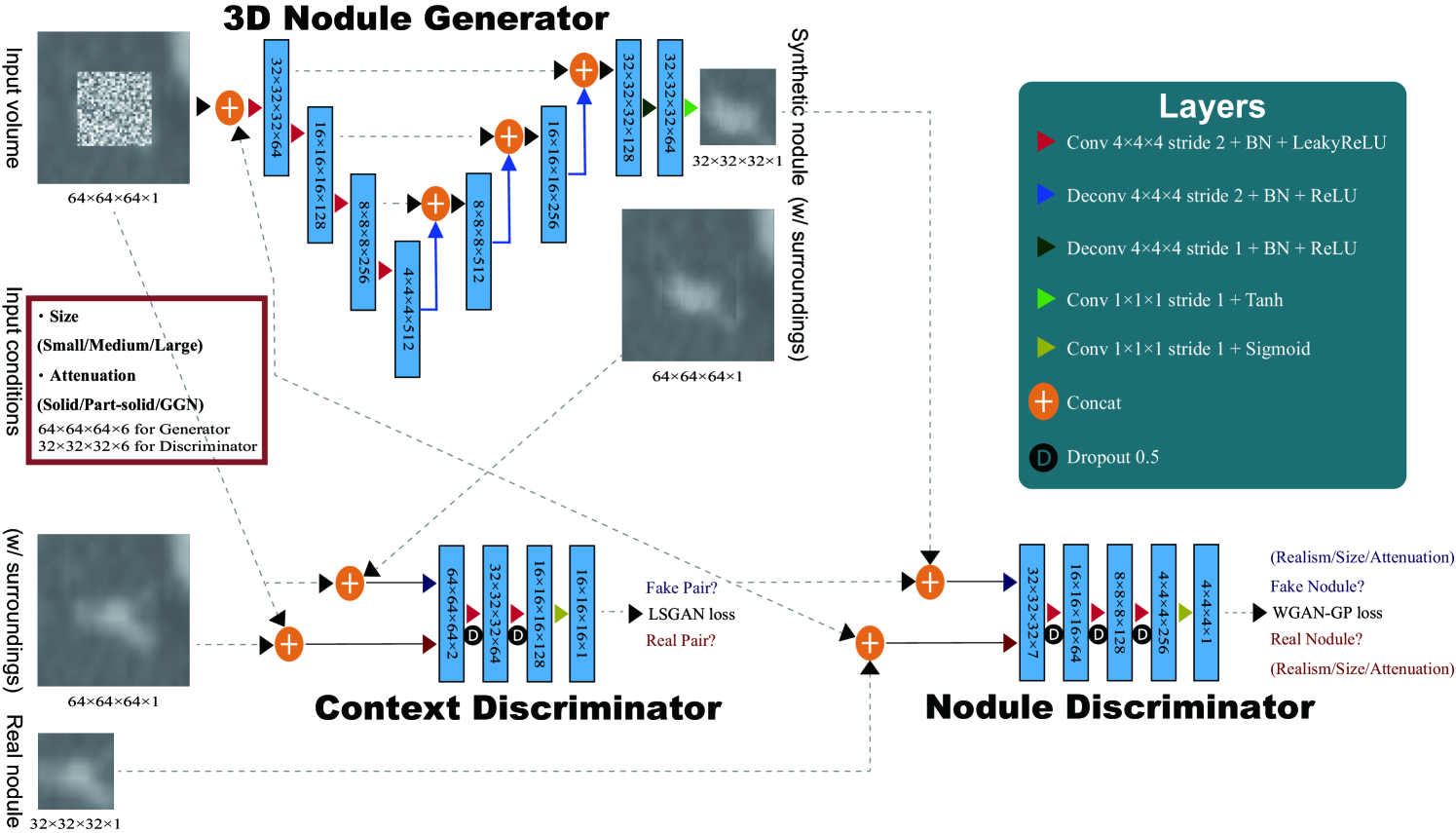

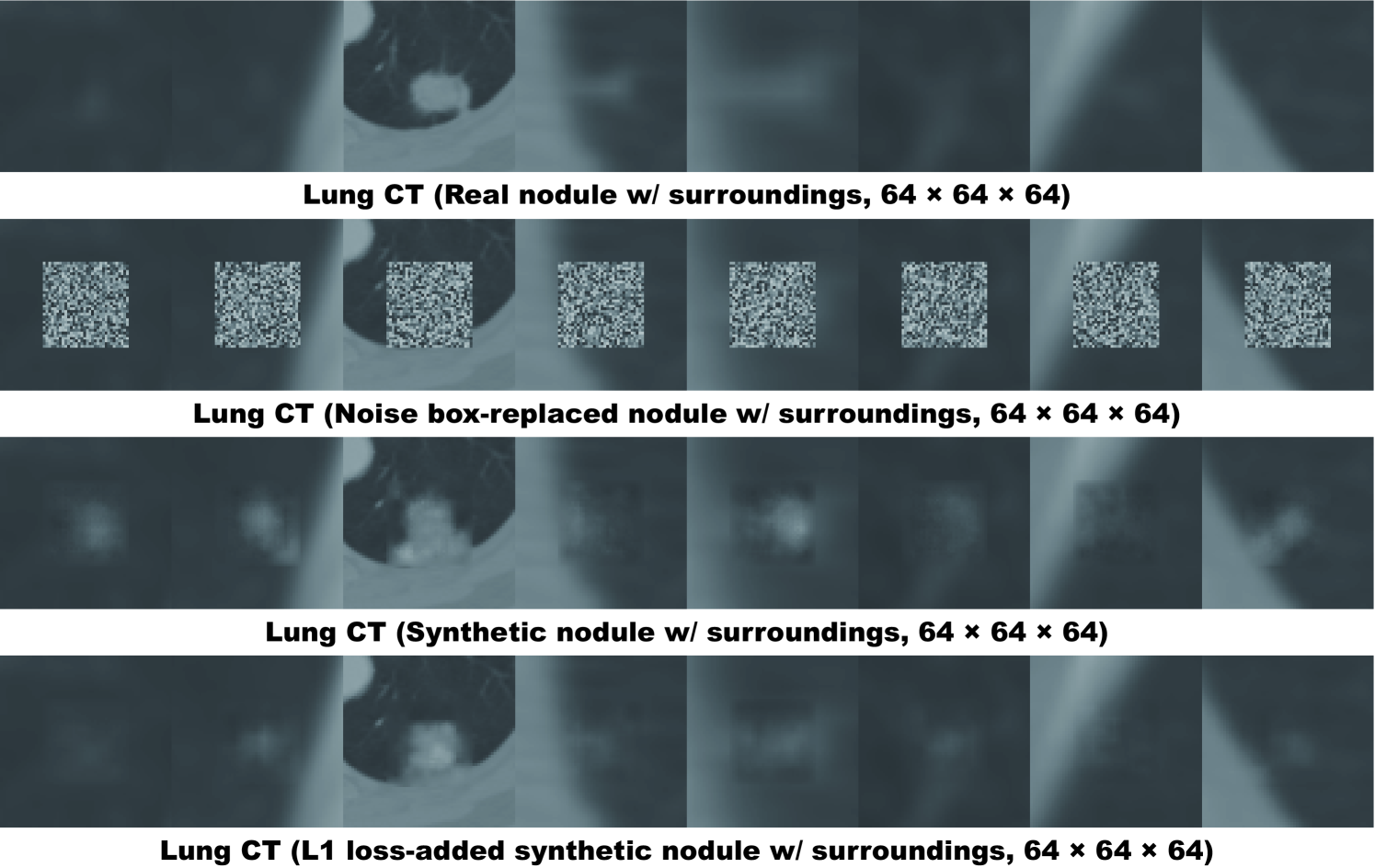

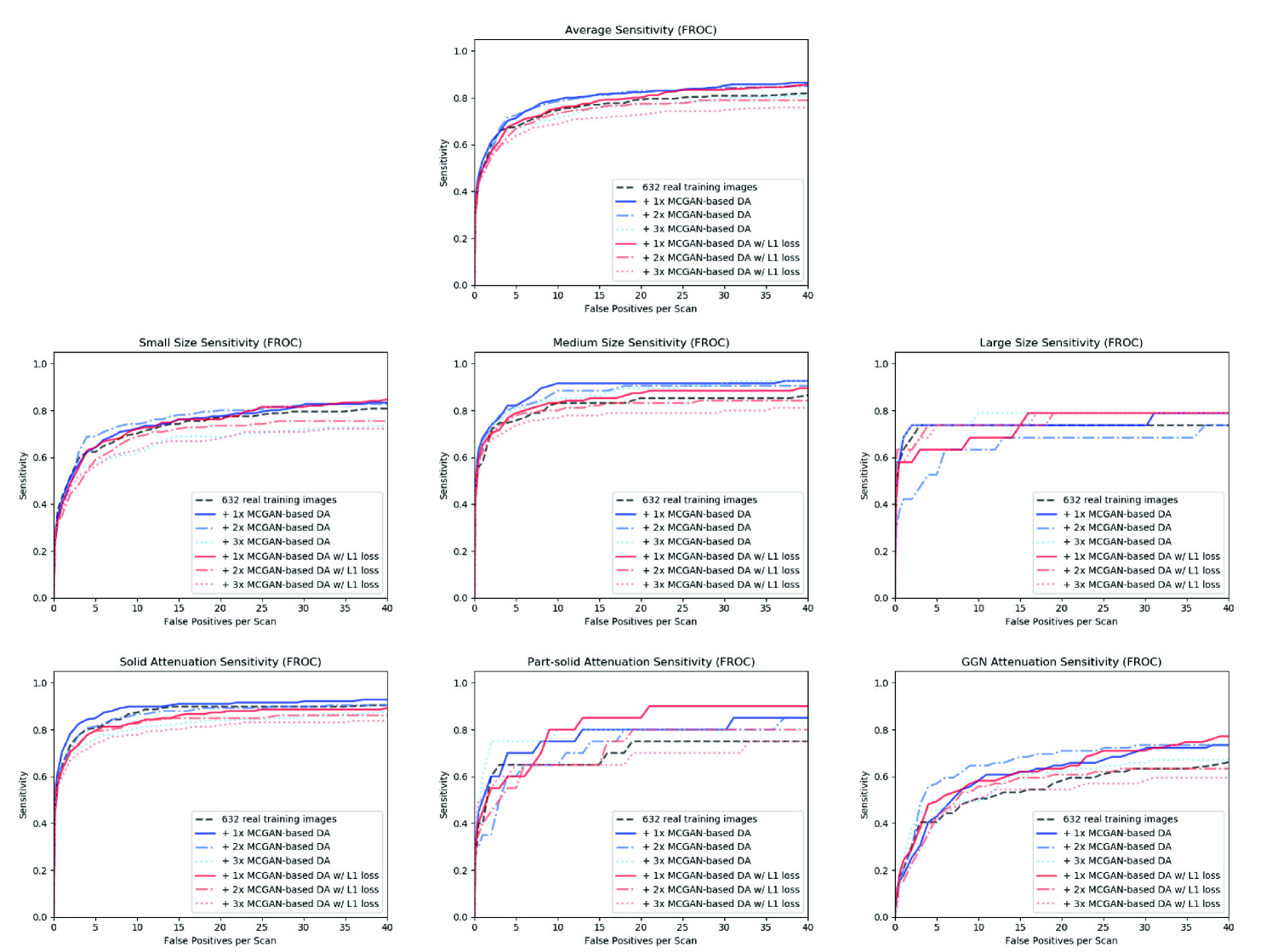

Finally, we solve image augmentation for 3D detection. Because lesions vary in 3D position/appearance, 3D multiple pathology-aware conditioning is important. Therefore, we propose 3D Multi-Conditional GAN (MCGAN) that translates noise boxes into realistic/diverse lung nodules placed naturally at desired position/size/attenuation on Computed Tomography scans. Our 3D MCGAN-based DA boosts sensitivity in 3D nodule detection under any nodule size/attenuation at fixed FP rates. Considering the realism confirmed by physicians, it could perform as a physician training tool to display realistic medical images with desired abnormalities.

We confirm our pathology-aware GANs’ clinical relevance for diagnosis via two discussions: (i) Conducting a questionnaire survey about our GAN projects for 9 physicians; (ii) Holding a workshop about how to develop medical Artificial Intelligence (AI) fitting into a clinical environment in five years for 7 professionals with various AI and/or Healthcare background.

List of Abbreviations

| AI | Artificial Intelligence |

|---|---|

| BRATS | Brain Tumor Image Segmentation Benchmark |

| CAD | Computer-Aided Diagnosis |

| CNN | Convolutional Neural Network |

| CPGGANs | Conditional Progressive Growing of Generative Adversarial Networks |

| CPM | Competition Performance Metric |

| CT | Computed Tomography |

| DA | Data Augmentation |

| DCGAN | Deep Convolutional Generative Adversarial Network |

| FLAIR | FLuid Attenuation Inversion Recovery |

| FP | False Positive |

| FROC | Free Receiver Operation Characteristic |

| GAN | Generative Adversarial Network |

| HGG | High-Grade Glioma |

| IoU | Intersection over Union |

| JS | Jensen-Shannon |

| LGG | Low-Grade Glioma |

| LSGANs | Least Squares Generative Adversarial Networks |

| MCGAN | Multi-Conditional Generative Adversarial Network |

| MRI | Magnetic Resonance Imaging |

| MUNIT | Multimodal UNsupervised Image-to-image Translation |

| PGGANs | Progressive Growing of Generative Adversarial Networks |

| ReLU | Rectified Linear Unit |

| ROI | Region Of Interest |

|---|---|

| SGD | Stochastic Gradient Descent |

| SimGAN | Simulated and unsupervised learning Generative Adversarial Network |

| t-SNE | t-distributed Stochastic Neighbor Embedding |

| T1 | T1-weighted |

| T1c | contrast enhanced T1-weighted |

| T2 | T2-weighted |

| UNIT | UNsupervised Image-to-image Translation |

| VAE | Variational AutoEncoder |

| VOI | Voxel Of Interest |

| WGAN | Wasserstein Generative Adversarial Network |

| WGAN-GP | Wasserstein Generative Adversarial Network with Gradient Penalty |

Chapter 1 Introduction

“Life is short, and the Art long; the occasion fleeting; experience fallacious, and judgment difficult. The physician must not only be prepared to do what is right himself, but also to make the patient, the attendants, and externals cooperate.”

Hippocrates [460-375 BC]

1.1 Aims and Motivations

Convolutional Neural Networks (CNNs) have revolutionized Medical Image Analysis by extracting valuable insights for better clinical examination and medical intervention; the CNNs occasionally outperformed even expert physicians in diagnostic accuracy when large-scale annotated datasets were available [1, 2]. However, obtaining such massive datasets often involves the following intrinsic challenges [3, 4]: (i) it is costly and laborious to collect medical images, such as Magnetic Resonance (MR) and Computed Tomography (CT) images, especially for rare disease; (ii) it is time-consuming and observer-dependent, even for expert physicians, to annotate them due to the low pathological-to-healthy ratio. To tackle these issues, researchers have mainly focused on extracting as much information as possible from the available limited data [5, 6]. Instead, Generative Adversarial Networks (GANs) [7] can generate realistic but completely new samples via many-to-many mappings, and thus effectively cover the real image distribution; they showed great promise in Data Augmentation (DA) using natural images, such as 21% performance improvement in eye-gaze estimation [8].

Interpolation refers to new data point construction within a discretely-sampled data distribution. In terms of the interpolation, the GAN-based image augmentation is reliable on the medical images because medical modalites (e.g., X-ray, CT, MRI) can display the human body’s strong anatomical consistency at fixed position while clearly reflecting inter-subject variability [9, 10]—this is different from the natural images, where various objects can appear at any position; accordingly, to tackle large inter-subject, inter-pathology, and cross-modality variability [3, 4], we propose to use noise-to-image GANs (e.g., random noise samples to diverse pathological images) for (i) medical DA and (ii) physician training [11]. The noise-to-image GAN training is much more difficult than training image-to-image GANs (e.g., a benign image to a malignant one); but, it can perform more global regularization (i.e., adding constraints when fitting a loss function on a training set to prevent overfitting) and increase image diversity for further performance boost.

Regarding the DA, the GAN-generated images can improve Computer-Aided Diagnosis (CAD) based on supervised learning [12]. For the physician training, the GANs can display novel desired pathological images and help train medical trainees despite infrastructural and legal constraints [13]. However, we cannot directly use conventional GANs for realistic/diverse high-resolution medical image augmentation. Moreover, we have to find effective loss functions and training schemes for each of those applications [14]; the diversity matters more for the DA to sufficiently fill the real image distribution whereas the realism matters more for the physician training not to confuse the medical students and radiology trainees.

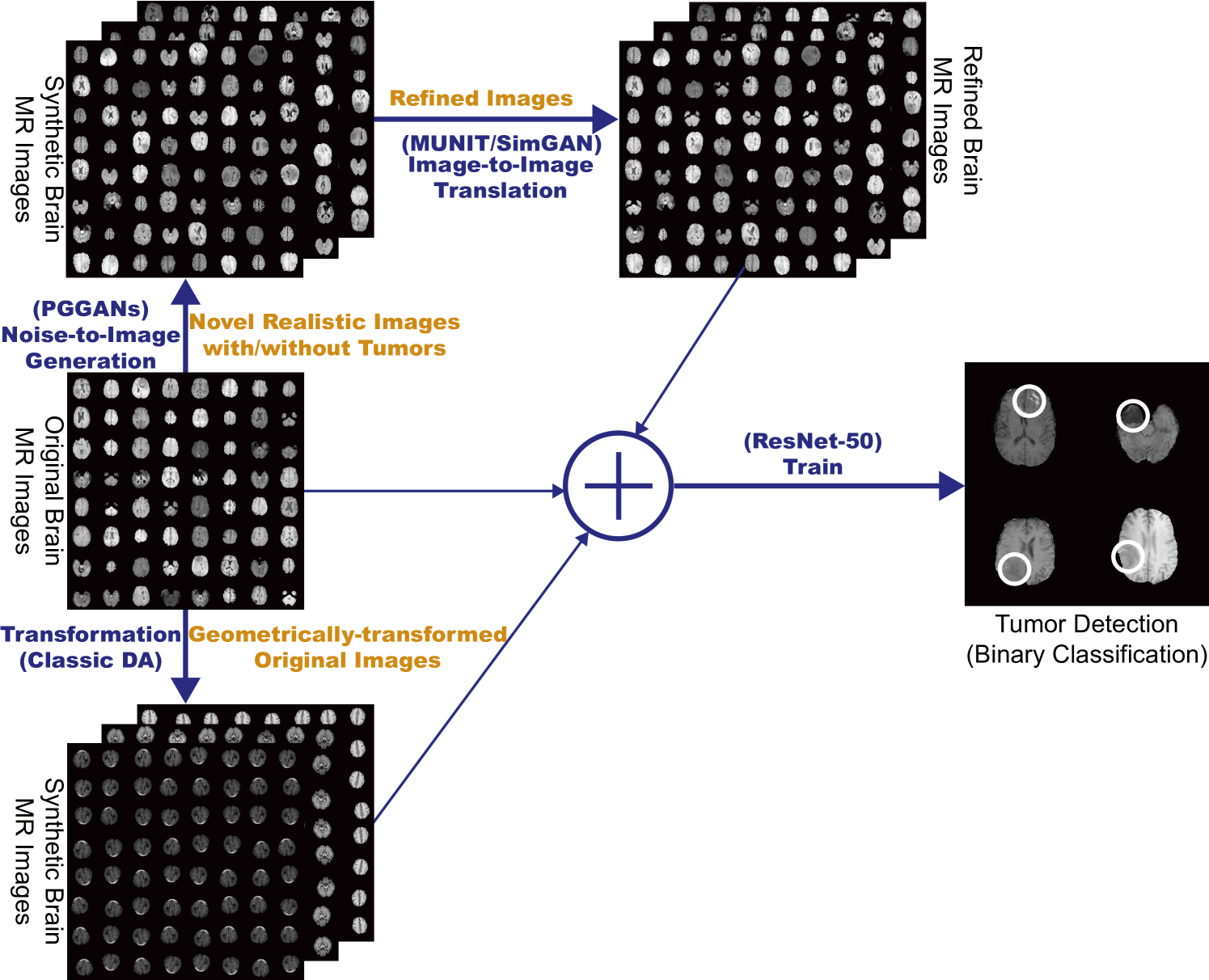

So, how can we perform clinically relevant GAN-based DA/physician training using only limited annotated training images? Always in collaboration with physicians, for improving 2D classification, we combine the noise-to-image [15, 16] (i.e., Progressive Growing of GANs, PGGANs [17]) and image-to-image GANs (i.e., Multimodal UNsupervised Image-to-image Translation, MUNIT [18]); the two-step GAN can generate and refine realistic/diverse original-sized brain MR images with/without tumors separately. Nevertheless, further DA applications require pathology localization for detection (i.e., identifying target pathology positions in medical images) and advanced physician training needs atypical image generation, respectively. To meet both clinical demands, we propose novel 2D/3D bounding box-based GANs conditioned on pathology position/size/appearance; the bounding box-based detection requires much less physicians’ annotation effort than rigorous segmentation.

Extrapolation refers to new data point estimation beyond a discretely-sampled data distribution. While it is not mutually-exclusive with the interpolation and both rely on a model’s restoring force, it is more subject to uncertainty and thus a risk of meaningless data generation. In terms of the extrapolation, the pathology-aware GANs (i.e., the conditional GANs controlling pathology, such as tumors and nodules, based on position/size/appearance) are promising because common and/or desired medical priors can play a key role in the conditioning—theoretically, infinite conditioning instances, external to the training data, exist and enforcing such constraints have an extrapolation effect via model reduction [19]; inevitable errors, not limited between two data points, caused by the model reduction forces a generator to synthesize images that the generator has never synthesized before.

For improving 2D detection, we propose Conditional PGGANs (CPGGANs) that incorporates highly-rough bounding box conditions incrementally into the noise-to-image GAN (i.e., the PGGANs) to place realistic/diverse brain metastases at desired positions/sizes on MR images [20]. As its pathology-aware conditioning, we use 2D tumor position/size on MR images. Since lesions vary in 3D position/appearance, for improving 3D detection, we propose 3D Multi-Conditional GAN (MCGAN) that translates noise boxes into realistic/diverse lung nodules placed naturally at desired position/size/attenuation on CT scans [21]; inputting the noise box with the surrounding tissues has the effect of combining the noise-to-image and image-to-image GANs. As its pathology-aware conditioning, we use 3D nodule position/size/attenuation on CT scans.

Lastly, we confirm our pathology-aware GANs’ clinical relevance for diagnosis as a clinical decision support system and non-expert physician training tool via two discussions: (i) Conducting a questionnaire survey about our GAN projects for 9 physicians; (ii) Holding a workshop about how to develop medical Artificial Intelligence (AI) fitting into a clinical environment in five years for 7 professionals with various AI and/or Healthcare background.

Contributions. Our main contributions are as follows:

-

•

Noise-to-Image GAN Applications: We propose clinically-valuable novel noise-to-image GAN applications, medical DA and physician training, focusing on their ability to generate realistic and diverse images.

-

•

Pathology-Aware GANs: For required extrapolation, always in collaboration with physicians, we propose novel 2D/3D GANs controlling pathology (i.e., tumors and nodules) on most major modalities (i.e., brain MRI and lung CT).

-

•

Clinical Validation: After detailed discussions with many physicians and professionals with various AI and/or Healthcare background, we confirm our pathology-aware GANs’ clinical relevance as a (i) clinical decision support system and (ii) non-expert physician training tool.

1.2 Thesis Overview

This Ph.D. thesis aims to present the clinical relevance of our novel pathology-aware GAN applications, medical DA and physician training, always in collaboration with physicians.

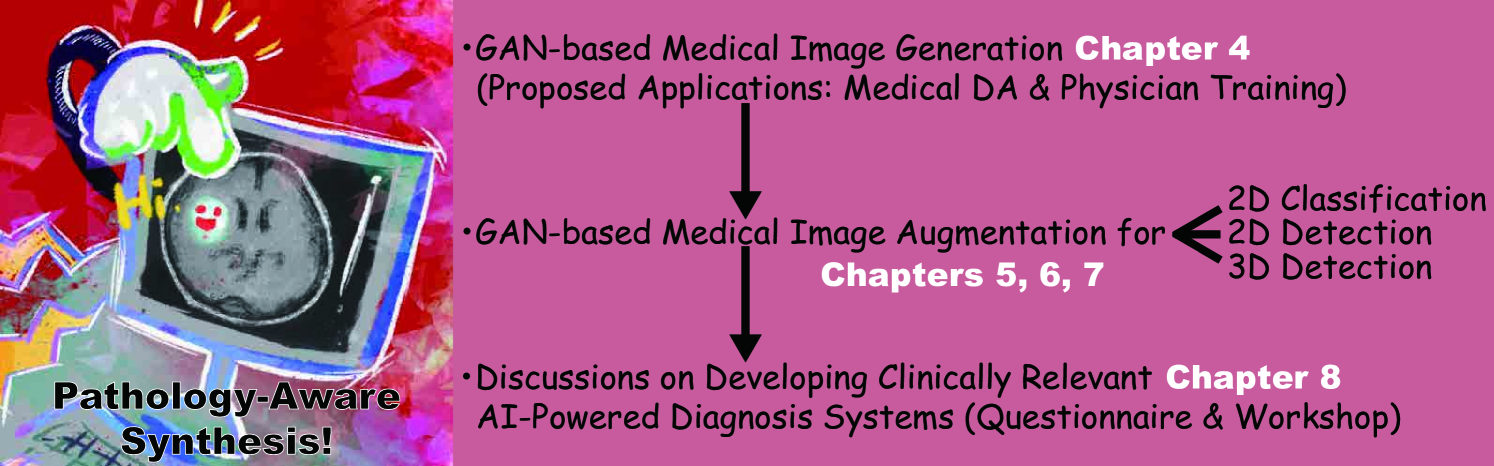

The thesis is organized as follows (Fig. 1.1). Chapter 2 covers the background of Medical Image Analysis and Deep Learning, as well as methods to address data paucity to bridge them. Chapter 3 describes related work on the GAN-based medical DA and physician training, which emerged after our proposal to use noise-to-image GANs for those applications in Chapter 4. Chapter 5 presents a two-step GAN for 2D classification that combines both noise-to-image and image-to-image GANs. Chapter 6 proposes CPGGANs for 2D detection that incorporates highly-rough bounding box conditions incrementally into the noise-to-image GAN. Finally, we propose 3D MCGAN for 3D detection that translates noise boxes into desired pathology in Chapter 7. Chapter 8 discusses both our pathology-aware GANs’ clinical relevance via a questionnaire survey and how to develop medical AI fitting into a clinical environment in five years via a workshop. Lastly, Chapter 9 provides the conclusive remarks and future directions for further GAN-based extrapolation.

Chapter 2 Background

This chapter introduces basic concepts in Medical Image Analysis and Deep Learning. Afterwards, we describe methods to address data paucity because they play the greatest role in bridging the Medical Image Analysis and Deep Learning.

2.1 Medical Image Analysis

Medical Image Analysis refers to the process of increasing clinical examination/medical intervention efficiency, based on several imaging modalities and digital image processing techniques [22, 23]; to effectively visualize the human body’s anatomical and physiological features, it covers various modalities including X-ray, CT, MRI, positron emission tomography, endoscopy, optical coherence tomography, pathology, ultrasound imaging, and fundus imaging. Its tasks are mainly classified into three groups: (i) Early detection/diagnosis/prognosis of disease often based on pathology classification/detection/segmentation and survival prediction [24, 25]; (ii) Clinical workflow enhancement often based on body part segmentation, inter-modality registration, 3D reconstruction, flow measurement, and surgery simulation [26, 27]; (iii) Clinically impossible image analysis, such as radiogenomics that identifies the correlation between cancer imaging features and gene expression [28].

Among the various modalities, this thesis focuses on the most common 3D modalities for non-invasive diagnosis, CT and MRI. To get a detailed picture inside the body, the CT merges multiple X-rays at different angles using computational tomographic reconstruction [9, 29]. Since X-ray intensity is associated with the mass attenuation coefficient, higher-density tissues show higher attenuation and vice versa. Accordingly, each voxel possesses its attenuation value following the Hounsfield scale from to (e.g., Hounsfield units for air, 0 for water, and for dense bone). The CT can provide a outstanding contrast within soft-tissue, bone, and lung while the soft-tissue contrast is poor—accordingly, it is especially performed for comprehensive lung assessment.

The MRI uses magnetization properties of atomic nuclei [10, 30]. Since different tissues show various relaxation processes when the nuclei return to their resting alignment, the tissues’ proton density maps serve as both anatomical and functional images. Since the tissues possess two different relaxation times, T1 (i.e., longitudinal relaxation time) and T2 (i.e., transverse relaxation time), as MRI sequences, we can obtain both T1-weighted (T1) and T2-weighted (T2) images. Moreover, using very long repetition time and time to echo, we can obtain FLuid Attenuation Inversion Recovery (FLAIR) images. The MRI can provide a superior soft-tissue contrast to the CT—accordingly, it is especially performed for comprehensive brain assessment.

2.2 Deep Learning

Deep Learning is a kind of Machine Learning algorithms, based on Artificial Neural Networks [31]. The Deep Neural Networks consist of many linearly connected non-linear units whose parameters are optimized by gradient descent [32]; accordingly, their multiple layers can gradually grasp more-detailed features as training progresses (i.e., learning which features to place is automatic). Thanks to the good generalization ability, under large-scale data, the Deep Learning significantly outperforms classical Machine Learning algorithms relying on feature engineering. A visual cortex includes arrangements of simple and complex cells activated by a receptive field (i.e., subregions of a visual field); inspired by this biological structure [33], CNNs adopt a mathematical operation called convolution to achieve translation invariance [34]. Since the CNNs are excellent at image/video recognition, their diverse medical applications include pathology classification/detection/segmentation and survival prediction [24, 25].

Variational AutoEncoders (VAEs) often suffer from blurred samples despite easier training, due to the imperfect reconstruction using a single objective function [35]; meanwhile, GANs have revolutionized image generation in terms of realism and diversity [36], including denoising [37] and MRI-to-CT translation [38], based on a two-player objective function using two CNNs [7]: a generator tries to generate realistic images to fool a discriminator while maintaining diversity; attempts to distinguish between the real and synthetic images. However, difficult GAN training from the two-player objective function accompanies artifacts and mode collapse [39], when generating high-resolution images (e.g., pixels) [40]; to tackle this, multi-stage noise-to-image GANs have been proposed: AttnGAN generated images from text using attention-based multi-stage refinement [41]; PGGANs generated realistic images using low-to-high resolution multi-stage training [17].

Contrarily, to obtain images with desired texture and shape, some researchers have proposed image-to-image GANs: MUNIT translated images using both GANs and VAEs [18]; Simulated and unsupervised learning GAN (SimGAN) translated images for DA using the self-regularization term and local adversarial loss [8]; Isola et al. proposed Pix2Pix GAN to produce robust images using paired training samples [42]. Others have proposed conditional GANs: Reed et al. proposed bounding box-based conditional GAN to control generated images’ local properties [43]; Park et al. proposed multi-conditional GAN to refine base images based on texts describing desired position [44].

In Healthcare, medical images have generated the largest volume of data and this trend will no doubt increase due to equipment improvement [45, 46]. Accordingly, as the Deep Learning dominates Computer Vision, Medical Image Analysis is not an exception; their combination can analyze the large-scale medical images and extract valuable insights for better clinical examination and medical intervention. However, the biggest challenge to bridge them lies in the difficulty of obtaining desired pathological images, especially for rare disease [3, 4]. Moreover, it is time-consuming and observer-dependent, even for expert physicians, to annotate them.

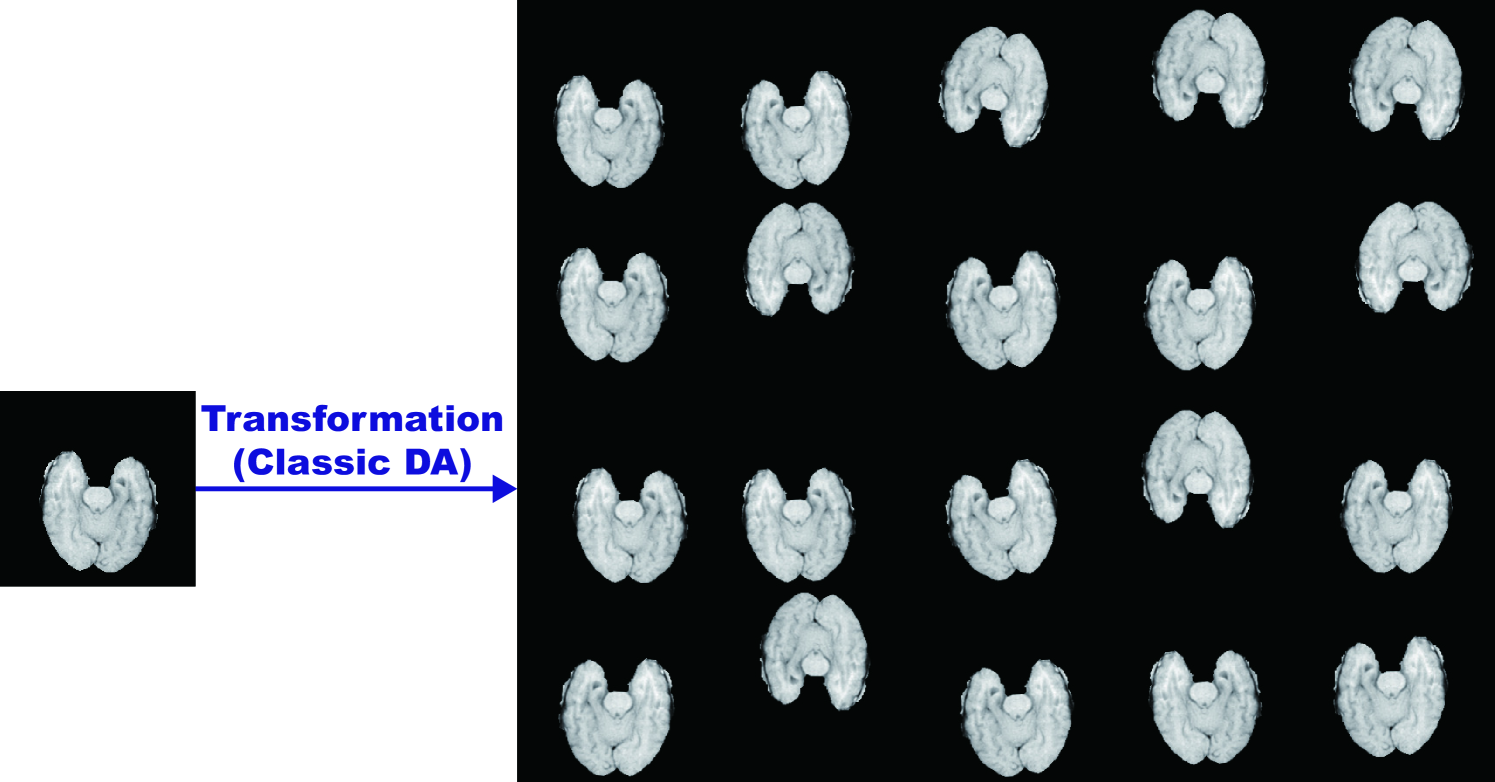

2.3 Methods to Address Data Paucity

So, how can we tackle the data paucity? We can either attempt to (a) overcome the lack of generalization or (b) overcome difficulties in optimization. The most straightforward and effective way to address the generalization is DA [47, 48]; because the best model when given data is uncertain, we commonly increase training set size. Human perception is invariant to size, shape, brightness, and color [49]. Accordingly, we recognize the same objects while their such features change, and thus intentionally changing the features is plausible to obtain more data. Such classical DA include (i) x/y/z-axis flipping and rotating, (ii) zooming and scaling, (iii) cropping, (iv) translating, (v) elastic deformation, (vi) adding Gaussian noise (i.e, the distortion of high frequency features), and (vii) brightness and contrast fluctuation.

Recent DA techniques focus on regularization: Mixup [50] and Between-class learning [51] mixed two images during training, such as a dog image and a cat one, for regularization; Cutout randomly masked out square regions during training for regularization [52]; CutMix combined the Mixup and Cutout [53]. As a recent impressive DA approach, AutoAugment automatically searched for improved DA policies [54]. Moreover, similarly to the Mixup among all images within the same class, GAN-based DA can fill the uncovered real image distribution by generating realistic and diverse images via many-to-many mapping [55].

Along with the DA, researchers proposed many other techniques to improve the generalization: semi-supervised learning can considerably increase accuracy under limited labeled data by using pseudo labels for unlabeled data [5]; unsupervised anomaly detection allows to detect out-of-distribution images from normal ones, such as disease, without any labeled data [56]; regularization techniques, such as dropout [57], Lasso [58], and elastic net [59], are commonly used for reducing overfitting; similarly, ensembling multiple models via bagging [60] and boosting [61] can effectively increase the robustness; Lastly, in Medical Image Analysis, we can fuse multiple image modalities and/or sequences, such as MRI CT [62] and T1 MRI T2 MRI [63].

Moreover, many techniques exist for overcoming the difficulties in optimization: transfer learning can achieve better parameter initialization [64]; problem reduction, such as inputting 2D/3D image patches instead of a whole image, can eliminate unnecessary parameters [65]; learning methods with less data, such as zero-shot learning [66], one-shot learning [6], and neural Turing machine [67], are also promising; meta-learning promotes a versatile model applicable to various tasks without requiring multiple training from scratch [68].

Chapter 3 Investigated Contexts and Applications

In terms of interpolation, GAN-based medical image augmentation is reliable because medical modalities (e.g., X-ray, CT, MRI) can display the human body’s strong anatomical consistency at fixed position while clearly reflecting inter-subject variability [9, 10]—this is different from natural images, where various objects can appear at any position. Accordingly, we proposed to use noise-to-image GANs for (i) medical DA and (ii) physician training [11] in Chapter 4. Since then, research towards such clinically valuable applications has shown great promise. This chapter covers such related research works except our own works [15, 16, 20, 21] included in Chapters 5-7. Involving 9 physicians, we discuss in detail the clinical relevance of the GAN-based medical DA and physician training [69] in Chapter 8.

3.1 GAN-based Medical DA

Because the lack of annotated pathological images is the greatest challenge in CAD [3, 4], to handle various types of small/fragmented datasets from multiple scanners, researchers have actively conducted GAN-based DA studies especially in Medical Image Analysis. For better classification, some researchers adopted image-to-image GANs similarly to their conventional medical applications, such as denoising [37] and MRI-to-CT translation [38]: Wu et al. translated normal mammograms into lesion ones [70], Gupta et al. translated normal leg X-ray images into bone lesion ones [71], and Malygina et al. translated / normal chest X-ray images into pneumonia/pleural-thickening ones [72]. Meanwhile, others adopted the noise-to-image GANs as we proposed, to increase image diversity for further performance boost—the diversity matters more for the DA to sufficiently fill the real image distribution: Frid-Adar et al. augmented liver lesion CT images [12], Madani et al. augmented chest X-ray images with cardiovascular abnormality [73], and Konidaris et al. augmented brain MR images with Alzheimer’s disease [74].

To facilitate pathology detection and segmentation, researchers conditioned the image-to-image GANs, not the noise-to-image GANs like our work in Chapter 6, with pathology features (e.g., position, size, and appearance) and generated realistic/diverse pathology at desired positions in medical images. In terms of extrapolation, the pathology-aware GANs are promising because common and/or desired medical priors can play a key role in the conditioning—theoretically, infinite conditioning instances, external to the training data, exist and enforcing such constraints have an extrapolation effect via model reduction [19]. To the best of our knowledge, only Kanayama et al. tackled bounding box-based pathology detection using the image-to-image GAN [75]; they translated normal endoscopic images with various image sizes ( on average) into gastric cancer ones by inputting both a benign image and a black image (i.e., pixel value: 0) with a specific lesion Region Of Interest (ROI) at desired position. Without conditioning the noise-to-image GAN with nodule position, Gao et al. generated 3D nodule subvolumes only applicable to their subvolume-based detector using binary classification [76].

Since 3D imaging is spreading in radiology (e.g., CT and MRI), most GAN-based DA works for segmentation exploited 3D conditional image-to-image GANs. However, 3D medical image generation is more challenging than 2D one due to expensive computational cost and strong anatomical consistency; so, instead of generating a whole image including pathology, researchers only focused on a malignant Voxel Of Interest (VOI): Shin et al. translated normal brain MR images into tumor ones by inputting both a benign image and a tumor-conditioning image [77], similarly to the Kanayama et al.’s work [75]; Jin et al. generated CT images of lung nodules including the surrounding tissues by only inputting a VOI centered at a lung nodule, but with a central sphere region erased [78]. Recently, instead of generating realistic images and training classifiers on them separately, Chaitanya et al. directly optimized segmentation results on cardiac MR images [26]; however, it segmented body parts, instead of pathology. Since effective GAN-based medical DA generally requires much engineering effort, we also published a tutorial journal paper [14] about tricks to boost classification/detection/segmentation performance using the GANs, based on our experience and related work.

3.2 GAN-based Physician Training

While medical students and radiology trainees must view thousands of images to become competent [79], accessing such abundant medical images is often challenging due to infrastructural and legal constraints [80]. Because pathology-aware GANs can generate novel medical images with desired abnormalities (e.g., position, size, and appearance)—while maintaining enough realism not to confuse the medical trainees—GAN-based physician training concept is drawing attention: Chuquicusma et al. appreciated the GAN potential to train radiologists for educational purpose after successfully generating CT images of lung nodules that even deceived experts [81]; thanks to their anonymization ability, Shin et al. proposed to share pathology-aware GAN-generated images outside institutions after achieving considerable tumor segmentation results with only synthetic MR images for training [77]; more importantly, Finlayson et al. from Harvard Medical School are currently validating a class-conditional GANs’ radiology educational efficacy after succeeding in learning features that distinguish fractures from non-fractures on pelvic X-ray images [13].

Chapter 4 GAN-based Medical Image Generation

4.1 Prologue to First Project

4.1.1 Project Publication

-

•

GAN-based Synthetic Brain MR Image Generation. C. Han, H. Hayashi, L. Rundo, R. Araki, Y. Furukawa, W. Shimoda, S. Muramatsu, G. Mauri, H. Nakayama, In IEEE International Symposium on Biomedical Imaging (ISBI), pp. 734–738, April 2018.

4.1.2 Context

Prior to this work, it remained challenging to generate realistic and diverse medical images using noise-to-image GANs, not image-to-image GANs [37], due to their unstable training. GAN architectures well-suited for medical images were unclear. Yi et al. published results on the noise-to-image GAN-based brain MR image generation, proposing its potential for medical DA and physician training while our paper was under submission [82]; however, they only generated single-sequence low-resolution brain MR images without tumors.

4.1.3 Contributions

This project’s main contribution is to propose to use recently developed Wasserstein Generative Adversarial Network (WGAN) [83] for medical DA and physician training—the medical GAN applications are reliable in terms of interpolation because medical modalities can display the human body’s strong anatomical consistency at fixed position while clearly reflecting inter-subject variability. We also demonstrate the noise-to-image GAN’s such potential by generating multi-sequence realistic/diverse whole brain tumor MR images [83]; then, we confirm the superb realism via Visual Turing Test by a physician.

4.1.4 Recent Developments

Since proposing the GAN applications, we have successfully applied the noise-to-image GANs to improve 2D tumor classification/detection on brain MR images [15, 16, 20] as described in Chapters 5 and 6. For better 3D tumor segmentation, Shin et al. have translated normal brain MR images into tumor ones using the image-to-image GAN [77]. Finlayson et al. have generated pelvic fracture/non-fracture X-ray images using a class-conditional noise-to-image GAN, also introducing ongoing work on validating such GANs’ radiology educational efficacy [13]. Kwon et al. have generated realistic/diverse 3D brain MR images using the noise-to-image GAN [84].

4.2 Motivation

Along with classic methods [85], CNNs have recently revolutionized medical image analysis [86], including brain MRI segmentation [87]. However, CNN training demands extensive medical data that are laborious to obtain [88]. To overcome this issue, DA techniques via reconstructing original images are common for better performance, such as geometry and intensity transformations [89, 90].

However, those reconstructed images intrinsically resemble the original ones, leading to limited performance improvement in terms of generalization abilities; thus, generating realistic (similar to the real image distribution) but completely new images is essential. In this context, GAN-based DA has excellently performed in general computer vision tasks. It attributes to GAN’s good generalization ability from matching the noise-generated distribution to the real one with a sharp value function. Especially, Shrivastava et al. (SimGAN) outperformed the state-of-the-art with a relative 21% improvement in eye-gaze estimation [8].

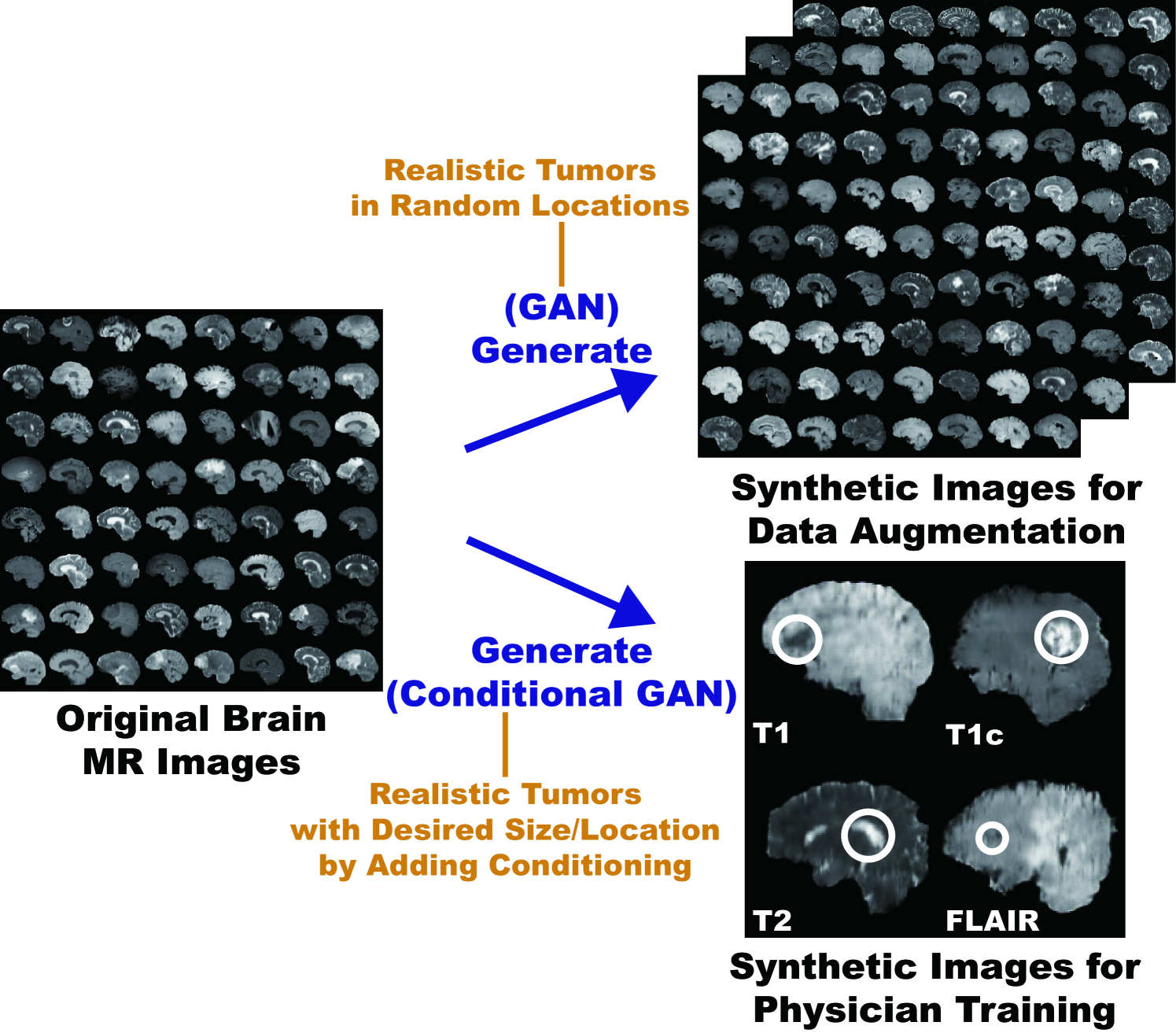

So, how can we generate realistic medical images completely different from the original samples? Our aim is to generate synthetic multi-sequence brain MR images using GANs, which is essential in medical imaging to increase diagnostic reliability, such as via DA in CAD as well as physician training (Fig. 4.1) [91]. However, this is extremely challenging—MR images are characterized by low contrast, strong visual consistency in brain anatomy, and intra-sequence variability. Our novel GAN-based approach for medical DA adopts Deep Convolutional Generative Adversarial Network (DCGAN) [40] and WGAN [83] to generate realistic images, and an expert physician validates them via Visual Turing Test [92].

Research Questions. We mainly address two questions:

-

•

GAN Selection: Which GAN architecture is well-suited for realistic medical image generation?

-

•

Medical Image Processing: How can we handle MR images with specific intra-sequence variability?

Contributions. Our main contributions are as follows:

-

•

MR Image Generation: This research shows that WGAN can generate realistic multi-sequence brain MR images, possibly leading to valuable clinical applications: DA and physician training.

-

•

Medical Image Generation: This research provides how to exploit medical images with intrinsic intra-sequence variability towards GAN-based DA for medical imaging.

4.3 Materials and Methods

Towards clinical applications utilizing realistic brain MR images, we generate synthetic brain MR images from the original samples using GANs. Here, we compare the most used two GANs, DCGAN and WGAN, to find a well-suited GAN between them for medical image generation—it must avoid mode collapse and generate realistic MR images with high resolution.

4.3.1 BRATS 2016 Dataset

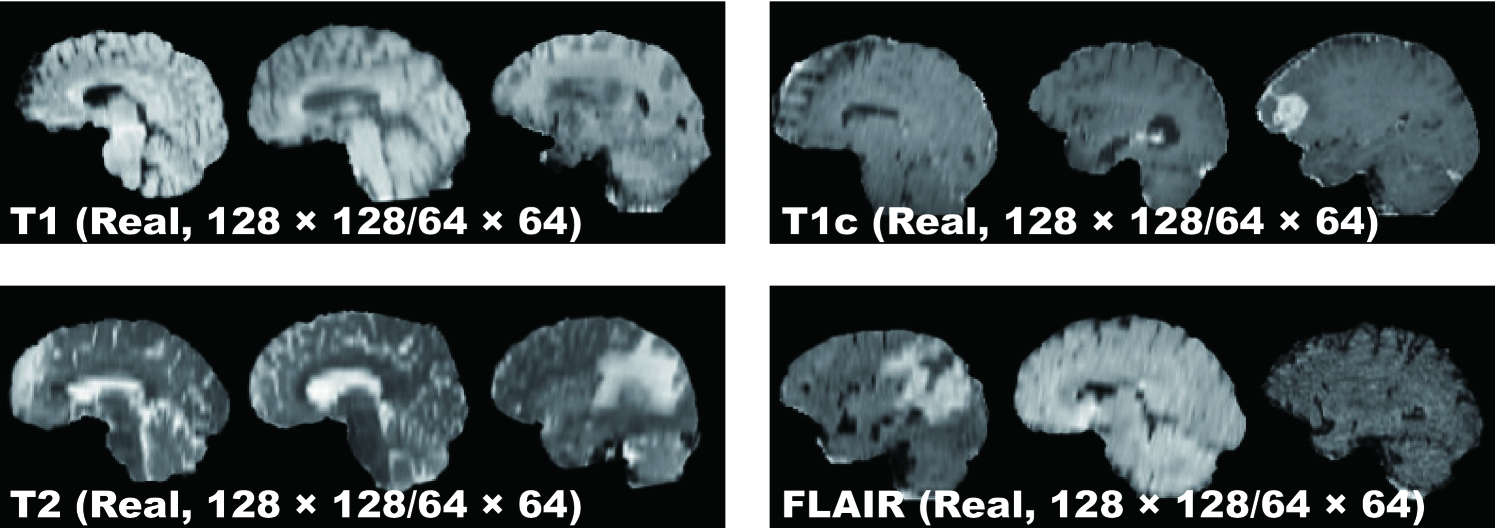

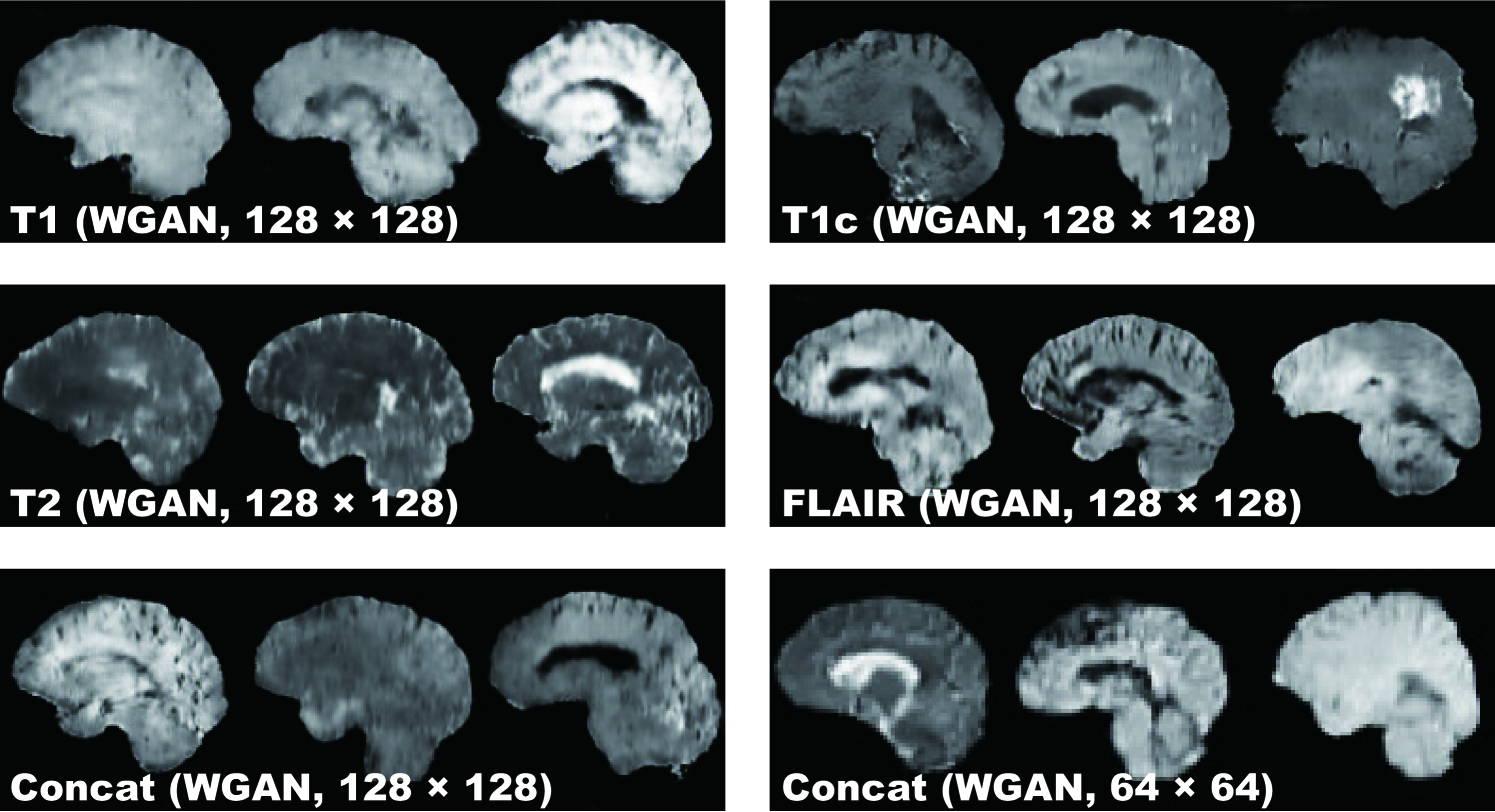

This project exploits a dataset of multi-sequence brain MR images to train GANs with sufficient data and resolution, which was originally produced for the Multimodal Brain Tumor Image Segmentation Benchmark (BRATS) Challenge [93]. In particular, the BRATS 2016 training dataset contains 220 High-Grade Glioma (HGG) and 54 Low-Grade Glioma (LGG) cases, with T1-weighted (T1), contrast enhanced T1-weighted (T1c), T2-weighted, and FLAIR sequences—they were skull stripped and resampled to isotropic resolution with voxels; among the different sectional planes, we use sagittal multi-sequence scans of the HGG patients to show that our GANs can generate a complete view of the whole brain anatomy (allowing for visual consistency among the different brain lobes), including also severe tumors for clinical purpose.

4.3.2 DCGAN/WGAN-based Image Generation

Pre-processing We select the slices from to among the whole slices to omit initial/final slices, since they convey a negligible amount of useful information and could affect the training. The images are resized to both / pixels from for better GAN training (DCGAN architecture results in stable training on 64 64 pixels [40], and so is reasonably a high-resolution). Fig. 4.2 shows example real MR images used for training; each sequence contains 15,400 images with 220 patients 70 slices (61,600 in total).

MR Image Generation DCGAN and WGAN generate six types of images as follows:

-

•

T1 sequence () from the real T1;

-

•

T1c sequence () from the real T1c;

-

•

T2 sequence () from the real T2;

-

•

FLAIR sequence () from the real FLAIR;

-

•

Concat sequence () from concatenating the real T1, T1c, T2, and FLAIR (i.e., feeding the model with samples from all the MRI sequences);

-

•

Concat sequence () from concatenating the real T1, T1c, T2, and FLAIR.

Concat sequence refers to a new ensemble sequence for an alternative DA, containing features of all four sequences. We also generate Concat images to compare the generation performance in terms of image resolution.

DCGAN [40] is a standard GAN [7] with a convolutional architecture for unsupervised learning; this generative model uses up-convolutions interleaved with Rectified Lineaer Unit (ReLU) non-linearity and batch normalization.

Let be a generating distribution over data . The generator is a mapping to data space that takes a prior on input noise variables , where is a neural network with parameters . Similarly, the discriminator is a neural network with parameters that takes either real data or synthetic data and outputs a single scalar probability that came from the real data. The discriminator maximizes the probability of classifying both training examples and samples from correctly while the generator minimizes the likelihood; it is formulated as a minimax two-player game with value function :

| (4.1) |

This can be reformulated as the minimization of the Jensen-Shannon (JS) divergence between the distribution and another distribution derived from and .

DCGAN Implementation Details We use the same DCGAN architecture [40] with no in the generator, ELU as the discriminator, all filters of size , and a half channel size for DCGAN training. A batch size of and Adam optimizer with learning rate were implemented.

WGAN [83] is an alternative to traditional GAN training, as the JS divergence is limited, such as when it is discontinuous; this novel GAN achieves stable learning with less mode collapse by replacing it to the Earth Mover (EM) distance (i.e., the Wasserstein-1 metrics):

| (4.2) |

where is the set of all joint distributions whose marginals are and , respectively. In other words, implies how much mass must be transported from one distribution to another. This distance intuitively indicates the cost of the optimal transport plan.

WGAN Implementation Details We use the same DCGAN architecture [40] for WGAN training. A batch size of 64 and Root Mean Square Propagation (RMSprop) optimizer with learning rate were implemented.

4.3.3 Clinical Validation via Visual Turing Test

To quantitatively evaluate how realistic the synthetic images are, an expert physician was asked to constantly classify a random selection of real/ synthetic MR images as real or synthetic shown in random order for each GAN/sequence, without previous training stages revealing which is real/synthetic; Concat images were classified together with real T1, T1c, T2, and FLAIR images in equal proportion. The so-called Visual Turing Test [92] uses binary questions to probe a human ability to identify attributes and relationships in images. For these motivations, it is commonly used to evaluate GAN-generated images, such as for SimGAN [8]. This applies also to medical images in clinical environments [81], wherein physicians’ expertise is critical.

4.4 Results

This section shows how DCGAN and WGAN generate synthetic brain MR images. The results include instances of synthetic images and their quantitative evaluation of the realism by an expert physician.

4.4.1 MR Images Generated by DCGAN/WGAN

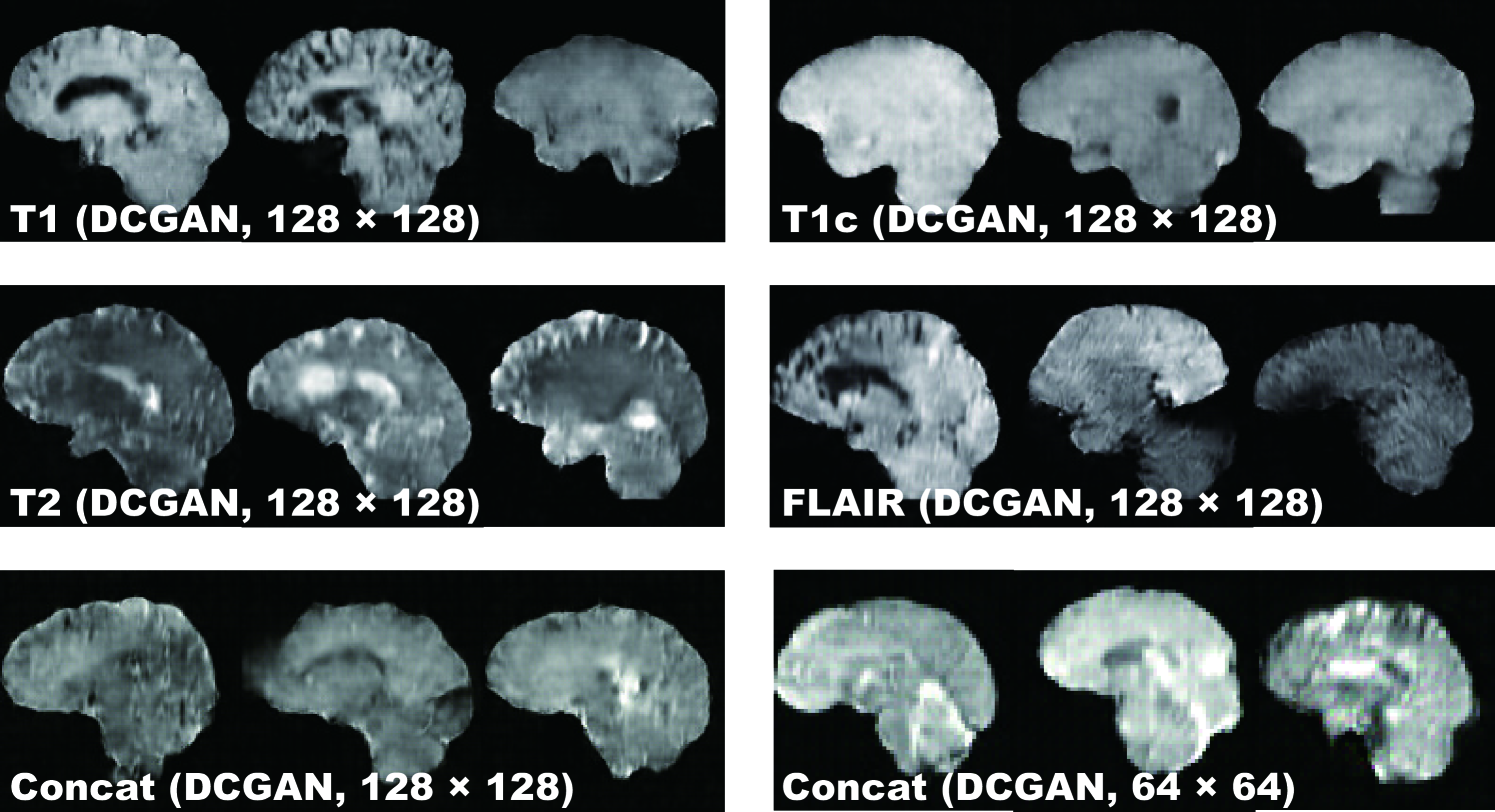

DCGAN Fig. 4.3 illustrates examples of synthetic images by DCGAN. The images look similar to the real samples. Concat images combine appearances and patterns from all the four sequences used in training. Since DCGAN’s value function could be unstable, it often generates hyper-intense T1-like images analogous to mode collapse for 64 64 Concat images, while sharing the same hyper-parameters with 128 128.

WGAN Fig. 4.4 shows the example output of WGAN in each sequence. Remarkably outperforming DCGAN, WGAN successfully captures the sequence-specific texture and tumor appearance while maintaining the realism of the original brain MR images. As expected, Concat images tend to have more messy and unrealistic artifacts than Concat ones, especially around boundaries of the brain, due to the introduction of unexpected intensity patterns.

4.4.2 Visual Turing Test Results

Table 4.1 shows the confusion matrix concerning the Visual Turing Test. Even the expert physician found classifying real and synthetic images challenging, especially in lower resolution due to their less detailed appearances unfamiliar in clinical routine, even for highly hyper-intense Concat images by DCGAN; distinguishing Concat images was easier compared to the case of T1, T1c, T2, and FLAIR images because the physician often felt odd from the artificial sequence. WGAN succeeded to deceive the physician significantly better than DCGAN for all the MRI sequences except FLAIR images ( to ).

| Accuracy (%) | Real as Real (%) | Real as Synt (%) | Synt as Real (%) | Synt as Synt (%) | |

| T1 (DCGAN, ) | 70 | 52 | 48 | 12 | 88 |

| T1c (DCGAN, ) | 71 | 48 | 52 | 6 | 94 |

| T2 (DCGAN, ) | 64 | 44 | 56 | 16 | 84 |

| FLAIR (DCGAN, ) | 54 | 24 | 76 | 16 | 84 |

| Concat (DCGAN, ) | 77 | 68 | 32 | 14 | 86 |

| Concat (DCGAN, ) | 54 | 26 | 74 | 18 | 82 |

| T1 (WGAN, ) | 64 | 40 | 60 | 12 | 88 |

| T1c (WGAN, ) | 55 | 26 | 74 | 16 | 84 |

| T2 (WGAN, ) | 58 | 38 | 62 | 22 | 78 |

| FLAIR (WGAN, ) | 62 | 32 | 68 | 8 | 92 |

| Concat (WGAN, ) | 66 | 62 | 38 | 30 | 70 |

| Concat (WGAN, ) | 53 | 36 | 64 | 30 | 70 |

4.5 Conclusion

Our preliminary results show that GANs, especially WGAN, can generate realistic multi-sequence brain MR images that even an expert physician is unable to accurately distinguish from the real, leading to valuable clinical applications, such as DA and physician training. This attributes to WGAN’s good generalization ability with a sharp value function. In this context, DCGAN might be unsuitable due to both inferior realism and mode collapse in terms of intensity. We only use slices of interest in training to obtain desired MR images and generate both original/Concat sequence images for DA in medical imaging.

This study confirms the synthetic image quality by the human expert evaluation, but a more objective computational evaluation for GANs should also follow, such as Classifier Two-Sample Tests (C2ST) [94], which assesses whether two samples are drawn from the same distribution. Currently this work uses sagittal MR images alone, so we plan to generate coronal and transverse images. As this research uniformly selects middle slices in pre-processing, better data generation demands developing a classifier to only select brain MRI slices with/without tumors.

Towards DA, whereas realistic images give more insights on geometry/intensity transformations in classification, more realistic images do not always assure better DA, so we have to find suitable image resolutions and sequences; that is why we generate both high-resolution images and Concat images, yet they looked more unrealistic for the physician. For physician training, generating desired realistic tumors by adding conditioning requires exploring latent spaces of GANs extensively.

Overall, our novel GAN-based realistic brain MR image generation approach sheds light on diagnostic and prognostic medical applications; future studies on these applications are needed to confirm our encouraging results.

Chapter 5 GAN-based Medical Image Augmentation for 2D Classification

5.1 Prologue to Second Project

5.1.1 Project Publications

-

•

Infinite Brain MR Images: PGGAN-based Data Augmentation for Tumor Detection. C. Han, L. Rundo, R. Araki, Y. Furukawa, G. Mauri, H. Nakayama, H. Hayashi, In A. Esposito, M. Faundez-Zanuy, F. C. Morabito, E. Pasero (eds.) Neural Approaches to Dynamics of Signal Exchanges, Springer, pp. 291–303, September 2019.

-

•

Combining Noise-to-Image and Image-to-Image GANs: Brain MR Image Augmentation for Tumor Detection. C. Han, L. Rundo, R. Araki, Y. Nagano, Y. Furukawa, G. Mauri, H. Nakayama, H. Hayashi, IEEE Access, pp. 156966–156977, October 2019.

5.1.2 Context

At the time we wrote the former paper, high-resolution (e.g., 256 256) medical image generation using noise-to-image GANs had been challenging [73] while most CNN architectures adopt around 256 256 input sizes (e.g., InceptionResNetV2 [95]: , ResNet-50 [96]: ). Moreover, prior to the latter paper, analysis had been immature on GAN-generated additional training images for better CNN-based classification.

5.1.3 Contributions

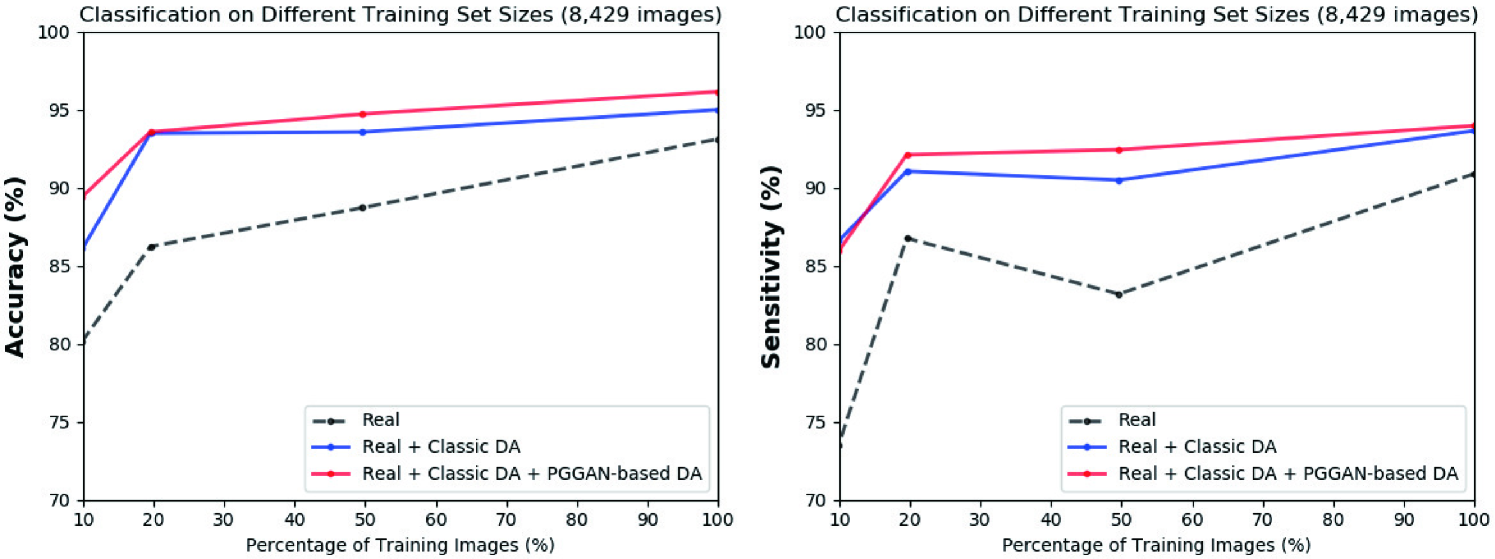

This project’s core contribution is to firstly combine noise-to-image and image-to-image GANs for improved 2D classification. The former paper adopts a noise-to-image GAN called PGGANs to generate realistic/diverse original-sized 256 256 whole brain MR images with/without tumors separately; additionally, the latter paper exploits an image-to-image GAN called MUNIT to further refine the synthetic images’ texture and shape similarly to real ones. By so doing, our two-step GAN-based DA boosts sensitivity 93.7% to 97.5% in tumor/non-tumor classification. Moreover, we firstly analyze how medical GAN-based DA is associated with pre-training on ImageNet and discarding weird-looking synthetic images to humans to achieve high sensitivity. A physician classifies a few synthetic images as real in Visual Turing Test despite the high resolution.

5.1.4 Recent Developments

Since the former paper’s acceptance (the book chapter’s publication process took more than a year), to improve 2D classification, Konidaris et al. generated brain MR images with Alzheimer’s disease using the noise-to-image GAN [74]. No more recent developments to report exist for the latter paper because it is very recent.

5.2 Motivation

CNNs are playing a key role in Medical Image Analysis, updating the state-of-the-art in many tasks [87, 97, 98] when large-scale annotated training data are available. However, preparing such massive medical data is demanding; thus, for better diagnosis, researchers generally adopt classic DA techniques, such as geometric/intensity transformations of original images [89, 90]. Those augmented images, however, intrinsically have a similar distribution to the original ones, resulting in limited performance improvement. In this sense, GAN-based DA can considerably increase the performance [7]; since the generated images are realistic but completely novel samples, they can relieve the sampling biases and fill the real image distribution uncovered by the original dataset [99].

The main problem in CAD lies in small/fragmented medical imaging datasets from multiple scanners; thus, researchers have improved classification by augmenting images with noise-to-image GANs [11] or image-to-image GANs [70]. However, no research has achieved further performance boost by combining noise-to-image and image-to-image GANs.

So, how can we maximize the DA effect under limited training images using the GAN combinations? To generate and refine brain MR images with/without tumors separately (Fig. 5.1), we propose a two-step GAN-based DA approach: (i) PGGANs [17], low-to-high resolution noise-to-image GAN, first generates realistic/diverse images—the PGGANs helps DA since most CNN architectures adopt around input sizes (e.g., InceptionResNetV2 [95]: , ResNet-50 [96]: ); (ii) MUNIT [18] that combines GANs/VAEs [35] or SimGAN [8] that uses a DA-focused GAN loss, further refines the texture and shape of the PGGAN-generated images to fit them into the real image distribution. Since training a single sophisticated GAN system is already difficult, instead of end-to-end training, we adopt a two-step approach for performance boost via an ensemble generation process from those state-of-the-art GANs’ different algorithms.

We thoroughly investigate CNN-based tumor classification results, also considering the influence of pre-training on ImageNet [34] and discarding weird-looking GAN-generated images. Moreover, we evaluate the synthetic images’ appearance via Visual Turing Test [92] by an expert physician, and visualize the data distribution of real/synthetic images via t-Distributed Stochastic Neighbor Embedding (t-SNE) [100]. When combined with classic DA, our two-step GAN-based DA approach significantly outperforms the classic DA alone, boosting sensitivity to .

Research Questions. We mainly address two questions:

-

•

GAN Selection: Which GAN architectures are well-suited for realistic/diverse medical image generation?

-

•

Medical DA: How to use GAN-generated images as additional training data for better CNN-based diagnosis?

Contributions. Our main contributions are as follows:

-

•

Whole Image Generation: This research shows that PGGANs can generate realistic/diverse whole medical images—not only small pathological sub-areas—and MUNIT can further refine their texture and shape similarly to real ones.

-

•

Two-step GAN-based DA: This novel two-step approach, combining for the first time noise-to-image and image-to-image GANs, significantly boosts tumor classification sensitivity.

-

•

Misdiagnosis Prevention: This study firstly analyzes how medical GAN-based DA is associated with pre-training on ImageNet and discarding weird-looking synthetic images to achieve high sensitivity with small and fragmented datasets.

5.3 Materials and Methods

5.3.1 BRATS 2016 Dataset

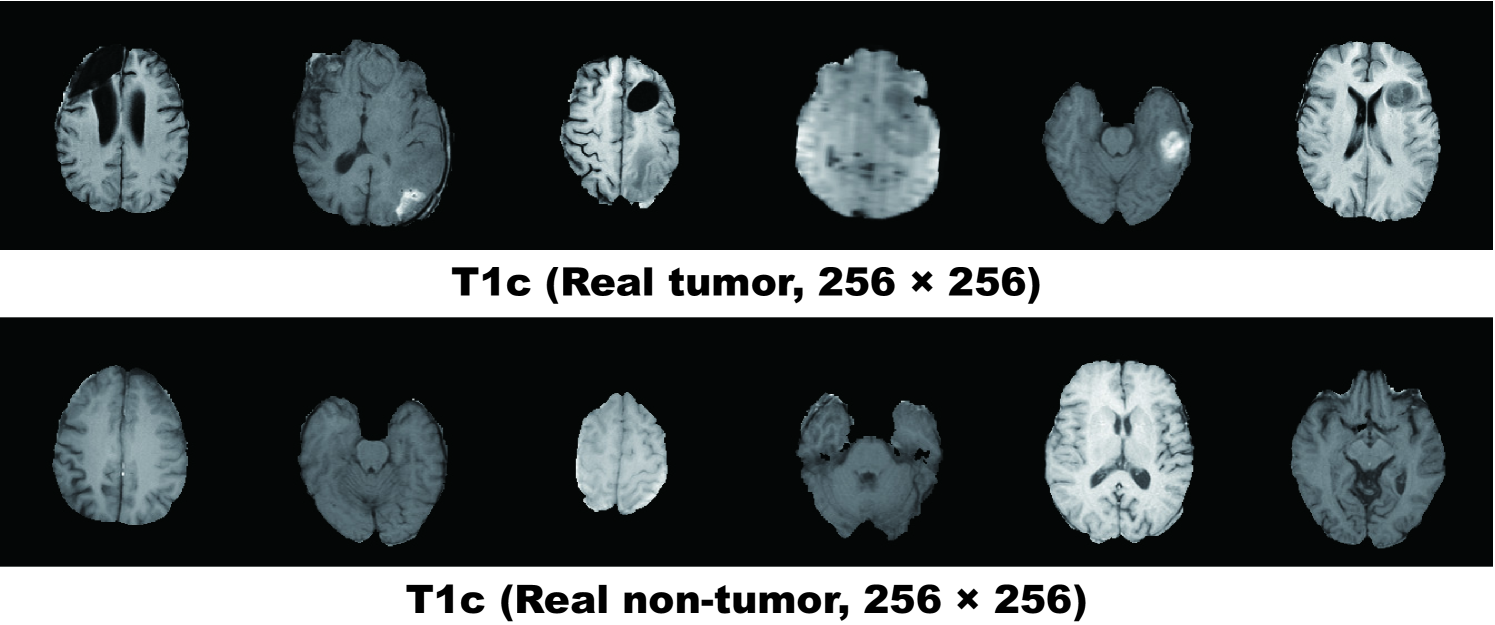

We use a dataset of T1c brain axial MR images of HGG cases from BRATS 2016 [93]. T1c is the most common sequence in tumor classification thanks to its high-contrast [101].

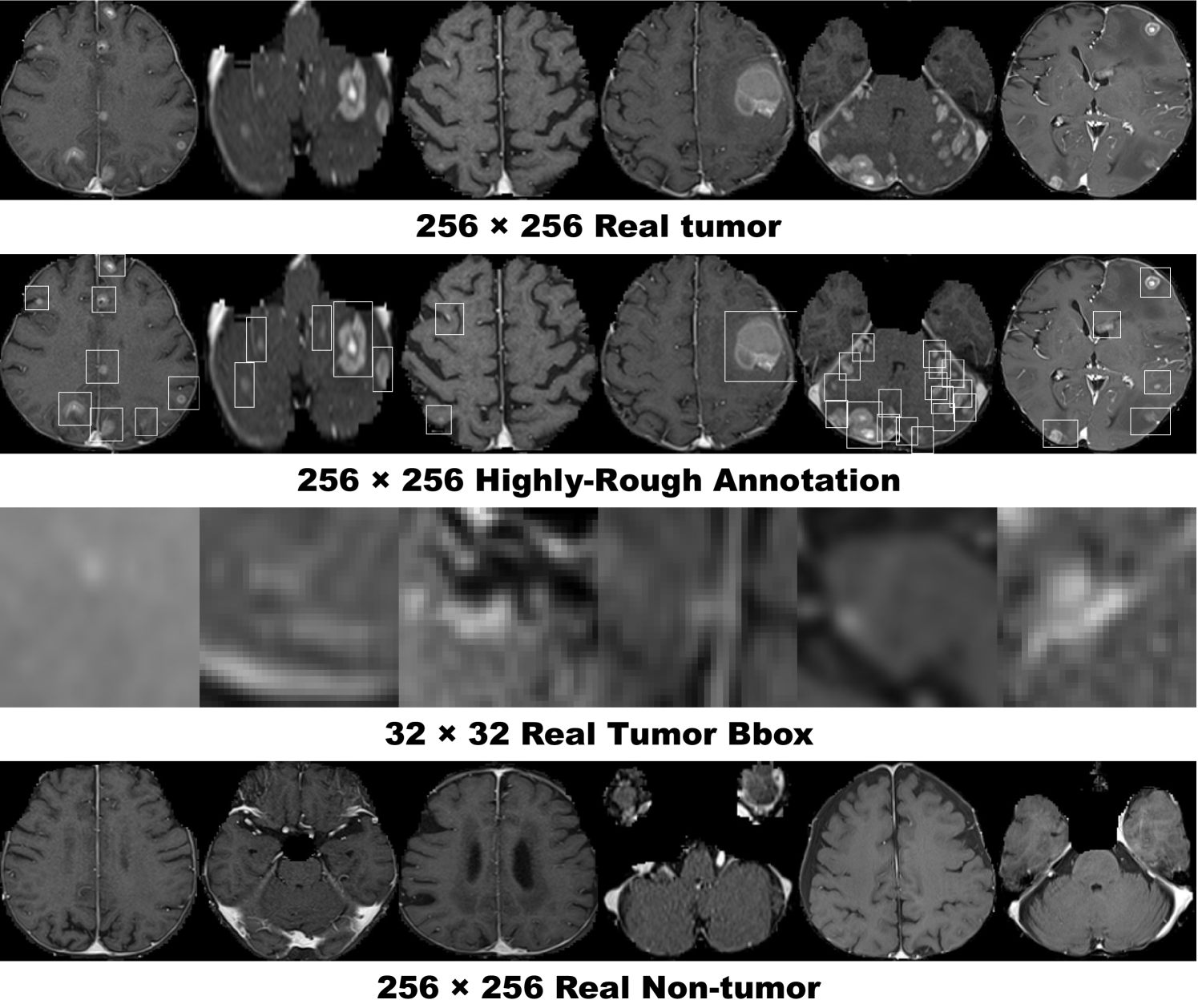

5.3.2 PGGAN-based Image Generation

Pre-processing For better GAN/ResNet-50 training, we select the slices from to among the whole slices to omit initial/final slices, which convey negligible useful information; also, since tumor/non-tumor annotation in the BRATS 2016 dataset, based on 3D volumes, is highly incorrect/ambiguous on 2D slices, we exclude () tumor images tagged as non-tumor, () non-tumor images tagged as tumor, () borderline images with unclear tumor/non-tumor appearance, and () images with missing brain parts due to the skull-stripping procedure. For tumor classification, we divide the whole dataset ( patients) into:

-

•

Training set

( patients/ tumor/ non-tumor images); -

•

Validation set

( patients/ tumor/ non-tumor images); -

•

Test set

( patients/ tumor/ non-tumor images).

During the GAN training, we only use the training set to be fair; for better PGGAN training, the training set images are zero-padded to reach a power of : pixels from . Fig. 5.2 shows example real MR images.

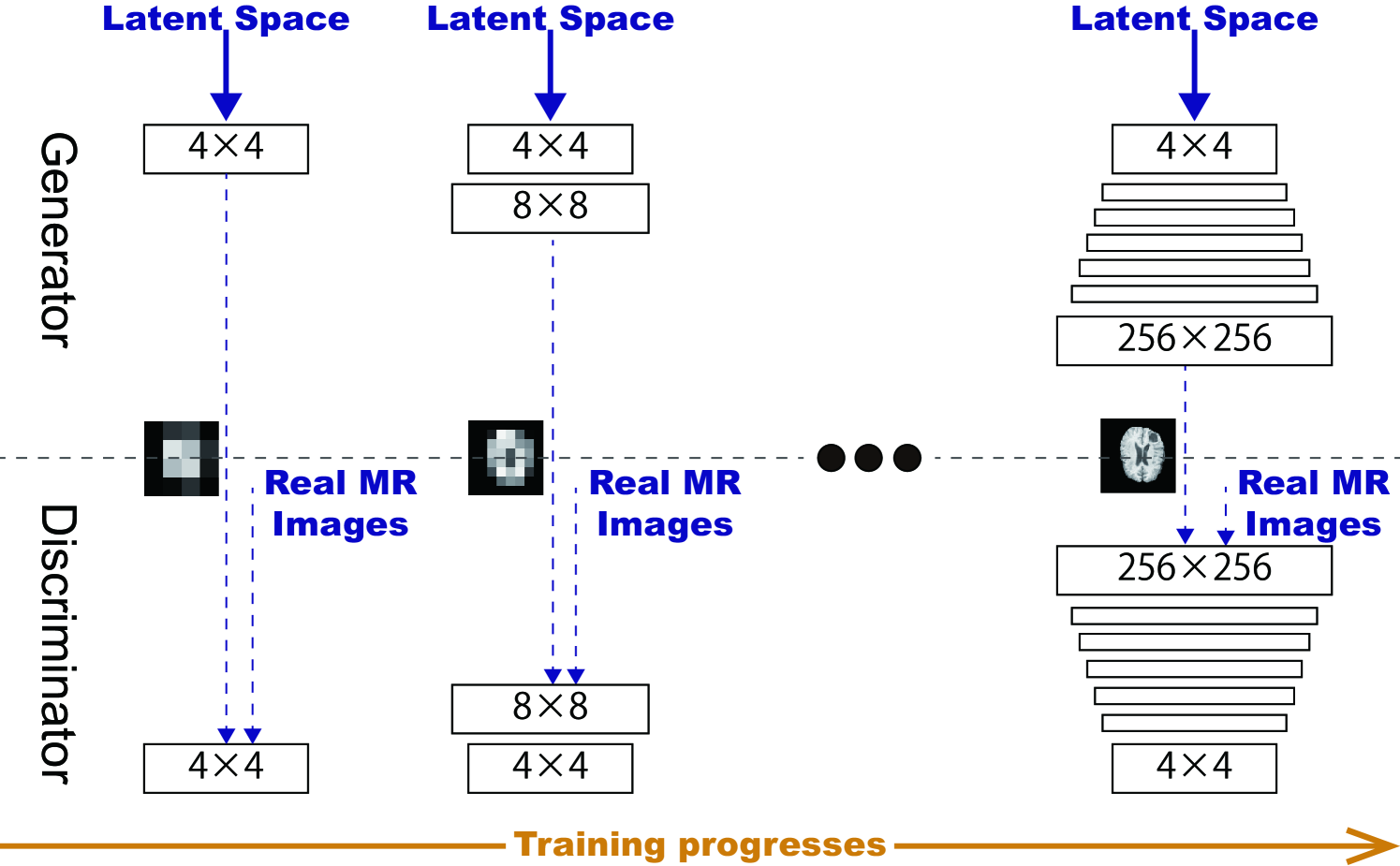

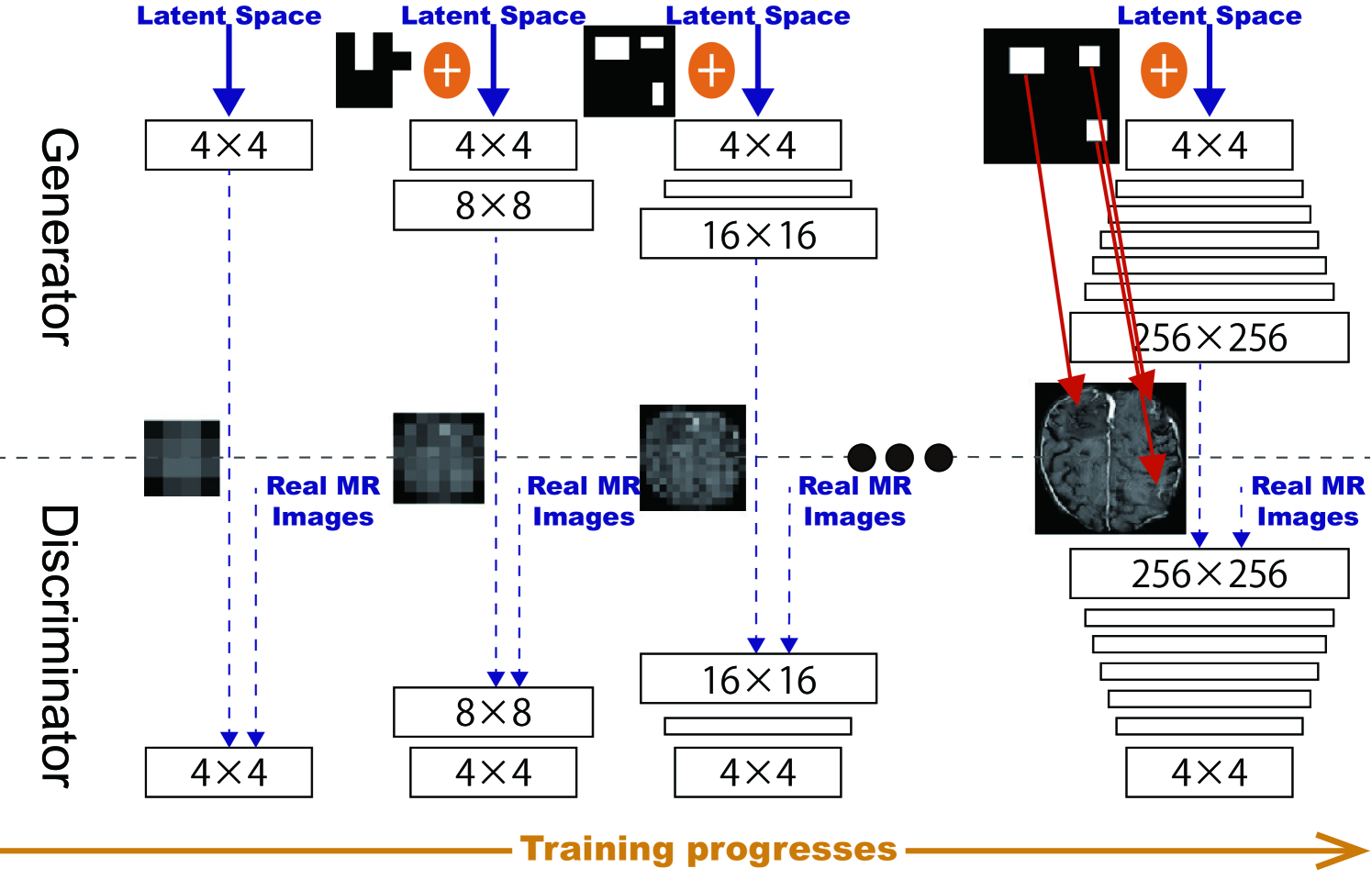

PGGANs [17] is a GAN training method that progressively grows a generator and discriminator: starting from low resolution, new layers model details as training progresses. This study adopts the PGGANs to synthesize realistic/diverse brain MR images (Fig. 5.3); we train and generate tumor/non-tumor images separately.

PGGAN Implementation Details The PGGAN architecture adopts the Wasserstein loss with Gradient Penalty (WGAN-GP) [39]:

| (5.1) |

where denotes the expected value, the discriminator (i.e., the set of -Lipschitz functions), is the data distribution defined by the true data sample , and is the model distribution defined by the generated sample ( is the input noise to the generator sampled from a Gaussian distribution). A gradient penalty is added for the random sample , where is the gradient operator towards the generated samples and is the gradient penalty coefficient.

We train the model (Table 5.1) for epochs with a batch size of and learning rate for the Adam optimizer (the exponential decay rates ) [102]. All experiments use with critic iteration per generator iteration. During training, we apply random cropping in - pixels as DA.

| Generator | Activation | Output Shape |

|---|---|---|

| Latent vector | – | |

| Conv | LReLU | |

| Conv | LReLU | |

| Upsample | – | |

| Conv | LReLU | |

| Conv | LReLU | |

| Upsample | – | |

| Conv | LReLU | |

| Conv | LReLU | |

| Upsample | – | |

| Conv | LReLU | |

| Conv | LReLU | |

| Upsample | – | |

| Conv | LReLU | |

| Conv | LReLU | |

| Upsample | – | |

| Conv | LReLU | |

| Conv | LReLU | |

| Upsample | – | |

| Conv | LReLU | |

| Conv | LReLU | |

| Conv | Linear | |

| Discriminator | Activation | Output Shape |

|---|---|---|

| Input image | – | |

| Conv | LReLU | |

| Conv | LReLU | |

| Conv | LReLU | |

| Downsample | – | |

| Conv | LReLU | |

| Conv | LReLU | |

| Downsample | – | |

| Conv | LReLU | |

| Conv | LReLU | |

| Downsample | – | |

| Conv | LReLU | |

| Conv | LReLU | |

| Downsample | – | |

| Conv | LReLU | |

| Conv | LReLU | |

| Downsample | – | |

| Conv | LReLU | |

| Conv | LReLU | |

| Downsample | – | |

| Minibatch stddev | – | |

| Conv | LReLU | |

| Conv | LReLU | |

| Fully-connected | Linear | |

5.3.3 MUNIT/SimGAN-based Image Refinement

Refinement Using resized images for ResNet-50, we further refine the texture and shape of PGGAN-generated tumor/non-tumor images separately to fit them into the real image distribution using MUNIT [18] or SimGAN [8]. SimGAN remarkably improved eye gaze estimation results after refining non-GAN-based synthetic images from the UnityEyes simulator image-to-image translation; thus, we also expect such performance improvement after refining synthetic images from a noise-to-image GAN (i.e., PGGANs) an image-to-image GAN (i.e., MUNIT/SimGAN) with considerably different GAN algorithms.

We randomly select real/ PGGAN-generated tumor images for tumor image training, and we perform the same for non-tumor image training. To find suitable refining steps for each architecture, we pick the MUNIT/SimGAN models with the highest accuracy on tumor classification validation, when pre-trained and combined with classic DA, among // steps, respectively.

MUNIT [18] is an image-to-image GAN based on both auto-encoding/translation; it extends UNIT [104] to increase the generated images’ realism/diversity via a stochastic model representing continuous output distributions.

MUNIT Implementation Details The MUNIT architecture adopts the following loss:

| (5.2) | |||||

where denotes the loss function. Using the multiple encoders /, generators /, discriminators /, cycle-consistencies CC1/CC2, and domain-invariant perceptions VGG1/VGG2 [105], this framework jointly solves learning problems of the VAE1/VAE2 and GAN1/GAN2 for the image reconstruction streams, image translation streams, cycle-consistency reconstruction streams, and domain-invariant perception streams. Since we do not need the style loss for our experiments, instead of the MUNIT loss, we use the UNIT loss with the perceptual loss for the MUNIT architecture (as in the UNIT authors’ GitHub repository). The MUNIT architecture adopts the following loss:

| (5.3) |

We train the model (Table 5.2) for steps with a batch size of 1 and learning rate for the Adam optimizer () [102]. The learning rate is reduced by half every steps. We use the following MUNIT weights: the adversarial loss weight ; the image reconstruction loss weight ; the Kullback-Leibler (KL) divergence loss weight for reconstruction ; the cycle consistency loss weight ; the KL divergence loss weight for cycle consistency ; the domain-invariant perceptual loss weight ; the Least Squares GAN objective function for the discriminators [106]. During training, we apply horizontal flipping as DA.

| Generator | Activation | Output Shape |

| Content Encoder | ||

| Input image | – | |

| Conv | ReLU | |

| Conv | ReLU | |

| Conv | ReLU | |

| ResBlock4 | ReLU | |

| – | ||

| Decoder | ||

| ResBlock4 | ReLU | |

| – | ||

| Upsample | – | |

| Conv | ReLU | |

| Upsample | – | |

| Conv | ReLU | |

| Conv | Tanh | |

| Discriminator | Activation | Output Shape |

|---|---|---|

| Input image | – | |

| Conv | LReLU | |

| Conv | LReLU | |

| Conv | LReLU | |

| Conv | LReLU | |

| Conv | – | |

| AveragePool | – | |

| Conv | LReLU | |

| Conv | LReLU | |

| Conv | LReLU | |

| Conv | LReLU | |

| Conv | – | |

| AveragePool | – | |

| Conv | LReLU | |

| Conv | LReLU | |

| Conv | LReLU | |

| Conv | LReLU | |

| Conv | – | |

| AveragePool | – | |

SimGAN [8] is an image-to-image GAN designed for DA that adopts the self-regularization term/local adversarial loss; it updates a discriminator with a history of refined images.

SimGAN Implementation Details The SimGAN architecture (i.e., a refiner) uses the following loss:

| (5.4) |

where denotes the loss function, is the function parameters, is the PGGAN-generated training image, and is the set of the real images . The first part adds realism to the synthetic images using a discriminator, while the second part preserves the tumor/non-tumor features.

We train the model (Table 5.3) for steps with a batch size of 10 and learning rate for the Stochastic Gradient Descent (SGD) optimizer [109] without momentum. The learning rate is reduced by half at 15,000 steps. We train the refiner first with just the self-regularization loss with for steps; then, for each update of the discriminator, we update the refiner times. During training, we apply horizontal flipping as DA.

| Refiner | Activation | Output Shape |

|---|---|---|

| Input image | – | |

| Conv | ReLU | |

| ResBlock12 | ReLU | |

| – | ||

| Conv | Tanh | |

| Discriminator | Activation | Output Shape |

|---|---|---|

| Input image | – | |

| Conv | ReLU | |

| Conv | ReLU | |

| Maxpool | – | |

| Conv | ReLU | |

| Conv | ReLU | |

| Maxpool | – | |

| Conv | ReLU | |

| Conv | ReLU | |

5.3.4 ResNet-50-based Tumor Classification

Pre-processing As ResNet-50’s input size is pixels, we resize the whole real images from and whole PGGAN-generated images from .

ResNet-50 [96] is a -layer residual learning-based CNN. We adopt it to conduct tumor/non-tumor binary classification on MR images due to its outstanding performance in image classification tasks [110], including binary classification [24]. Chang et al.[111] also used a similar -layer residual convolutional network for the binary classification of brain tumors (i.e., determining the Isocitrate Dehydrogenase status in LGG/HGG).

DA Setups To confirm the effect of PGGAN-based DA and its refinement using MUNIT/SimGAN, we compare the following DA setups under sufficient images both with/without ImageNet [34] pre-training (i.e., 20 DA setups):

-

1.

real images;

-

2.

+ k classic DA;

-

3.

+ k classic DA;

-

4.

+ k PGGAN-based DA;

-

5.

+ k PGGAN-based DA w/o clustering/discarding;

-

6.

+ k classic DA & k PGGAN-based DA;

-

7.

+ k MUNIT-refined DA;

-

8.

+ k classic DA & k MUNIT-refined DA;

-

9.

+ k SimGAN-refined DA;

-

10.

+ k classic DA & k SimGAN-refined DA.

Due to the risk of overlooking the tumor diagnosis, higher sensitivity matters much more than higher specificity [112]; thus, we aim to achieve higher sensitivity, using the additional synthetic training images. We perform McNemar’s test on paired tumor classification results [113] to confirm our two-step GAN-based DA’s statistically-significant sensitivity improvement; since this statistical analysis involves multiple comparison tests, we adjust their -values using the Holm-Bonferroni method [114].

Whereas medical imaging researchers widely use the ImageNet initialization despite different textures of natural/medical images, recent study found that such ImageNet-trained CNNs are biased towards recognizing texture rather than shape [115]; thus, we aim to investigate how the medical GAN-based DA affects classification performance with/without the pre-training. As the classic DA, we adopt a random combination of horizontal/vertical flipping, rotation up to degrees, width/height shift up to , shearing up to , zooming up to , and constant filling of points outside the input boundaries (Fig. 5.4). For the PGGAN-based DA and its refinement, we only use success cases after discarding weird-looking synthetic images (Fig. 5.5); DenseNet-169 [116] extracts image features and k-means++ [117] clusters the features into groups, and then we manually discard each cluster containing similar weird-looking images. To verify its effect, we also conduct a PGGAN-based DA experiment without the discarding step. Additionally, to confirm the effect of changing training data set sizes, we compare classification results with pre-training on /// real images vs real images + k classic DA vs real images + k classic DA & k PGGAN-based DA (i.e., setups).

ResNet-50 Implementation Details The ResNet-50 architecture adopts the binary cross-entropy loss for binary classification both with/without ImageNet pre-training. As shown in Table 5.4, for robust training, before the final sigmoid layer, we introduce a dropout [57], linear dense, and batch normalization [118] layers—training with GAN-based DA tends to be unstable especially without the batch normalization layer. We use a batch size of , learning rate for the SGD optimizer [109] with momentum, and early stopping of epochs. The learning rate was multiplied by every epochs for the training from scratch and by every epochs for the ImageNet pre-training.

| Classifier | Activation | Output Shape |

|---|---|---|

| Input image | – | |

| Conv | ReLU | |

| Maxpool | – | |

| ResBlock3 | ReLU | |

| ReLU | ||

| ReLU | ||

| ResBlock4 | ReLU | |

| ReLU | ||

| ReLU | ||

| ResBlock6 | ReLU | |

| ReLU | ||

| ReLU | ||

| ResBlock3 | ReLU | |

| ReLU | ||

| ReLU | ||

| AveragePool | – | |

| Flatten | – | 2048 |

| Dropout | – | 2048 |

| Dense | – | 2 |

| BatchNorm | Sigmoid | 2 |

5.3.5 Clinical Validation via Visual Turing Test

To quantify the (i) realism of synthetic images by PGGANs, MUNIT, and SimGAN against real images respectively (i.e., 3 setups) and (ii) clearness of their tumor/non-tumor features, we supply, in random order, to an expert physician a random selection of:

-

•

real tumor images;

-

•

real non-tumor images;

-

•

synthetic tumor images;

-

•

synthetic non-tumor images.

Then, the physician is asked to classify them as both (i) real/synthetic and (ii) tumor/non-tumor, without previously knowing which is real/synthetic and tumor/non-tumor.

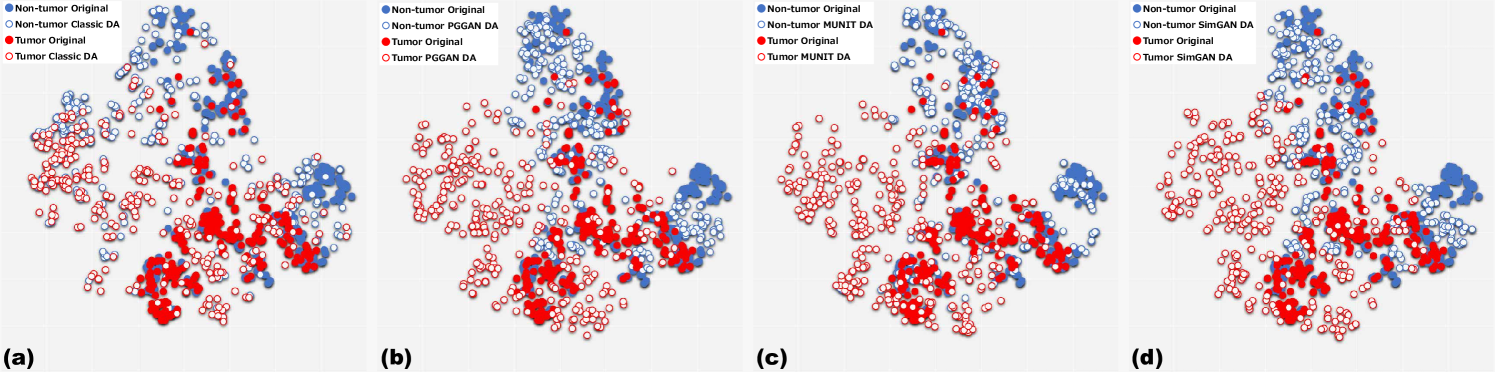

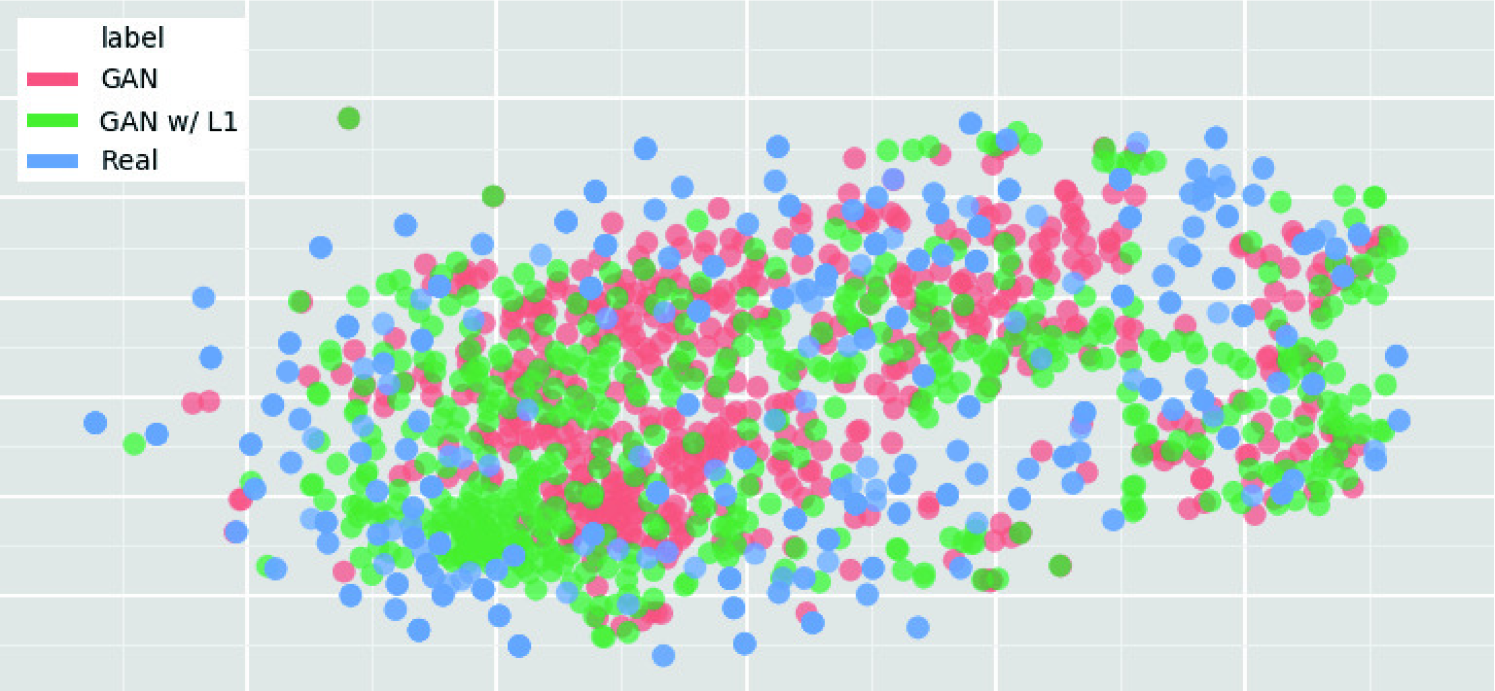

5.3.6 Visualization via t-SNE

To visualize distributions of geometrically-transformed and each GAN-based images by PGGANs, MUNIT, and SimGAN against real images respectively (i.e., 4 setups), we adopt t-SNE [100] on a random selection of:

-

•

real tumor images;

-

•

real non-tumor images;

-

•

geometrically-transformed or each GAN-based tumor images;

-

•

geometrically-transformed or each GAN-based non-tumor images.

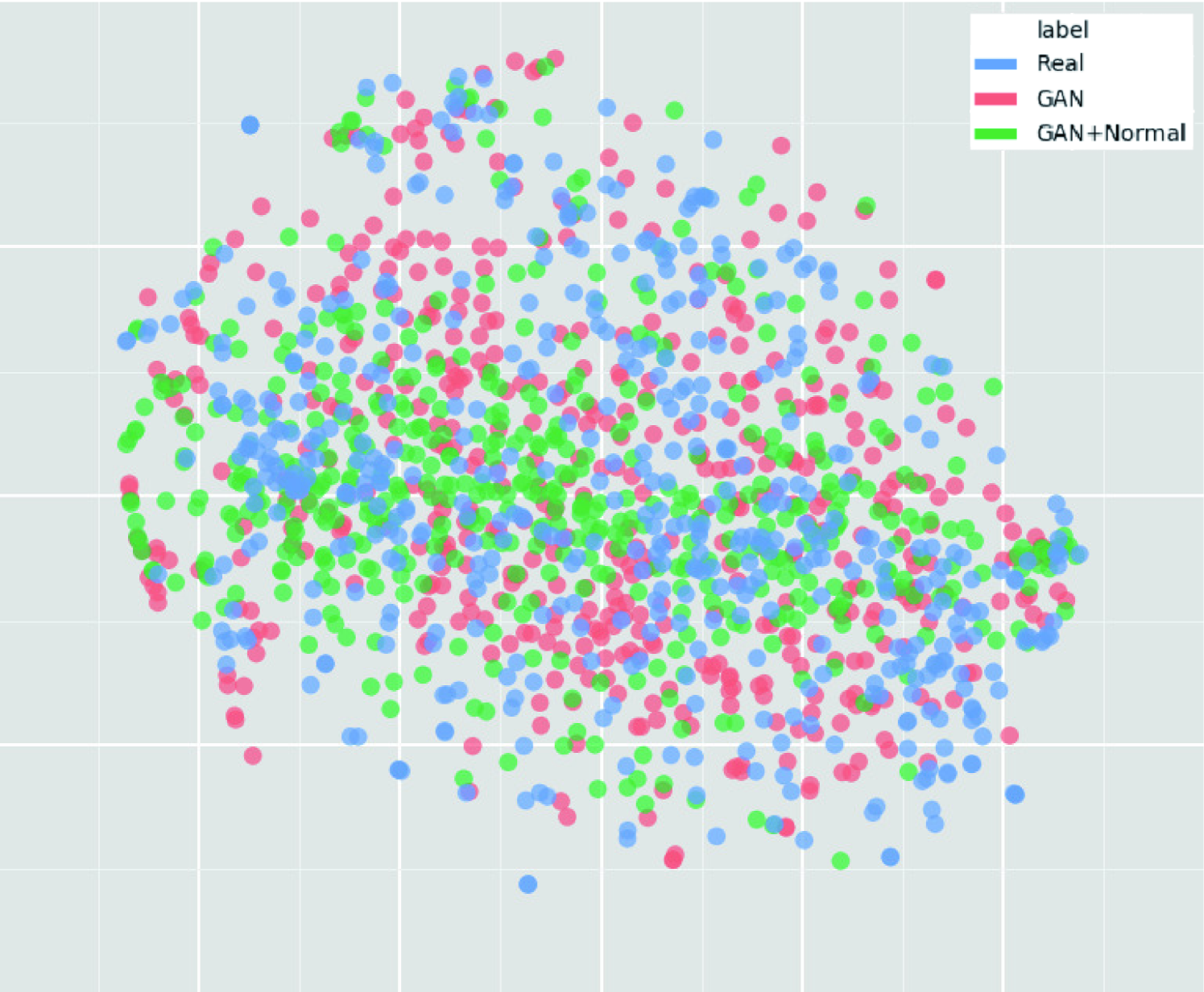

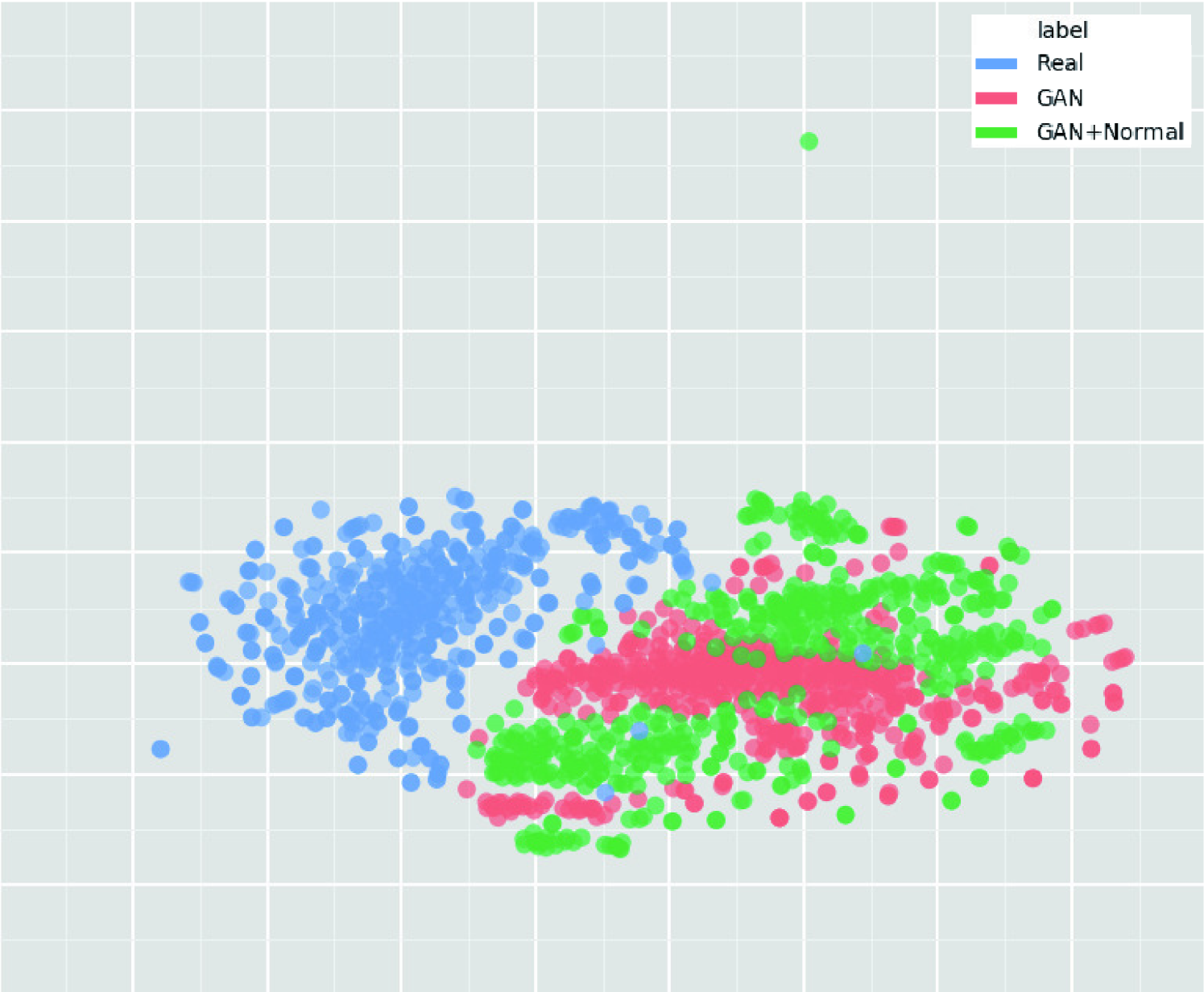

We select only images per each category for better visualization. The t-SNE method reduces the dimensionality to represent high-dimensional data into a lower-dimensional (2D/3D) space; it non-linearly balances between the input data’s local and global aspects using perplexity.

T-SNE Implementation Details The t-SNE uses a perplexity of for iterations to visually represent a 2D space. We input the images after normalizing pixel values to . For point locations of the real images, we compress all the images simultaneously and plot each setup (i.e., the geometrically-transformed or each GAN-based images against the real ones) separately; we maintain their locations by projecting all the data onto the same subspace.

5.4 Results

This section shows how PGGANs generates synthetic brain MR images and how MUNIT and SimGAN refine them. The results include instances of synthetic images, their quantitative evaluation by a physician, their t-SNE visualization, and their influence on tumor classification.

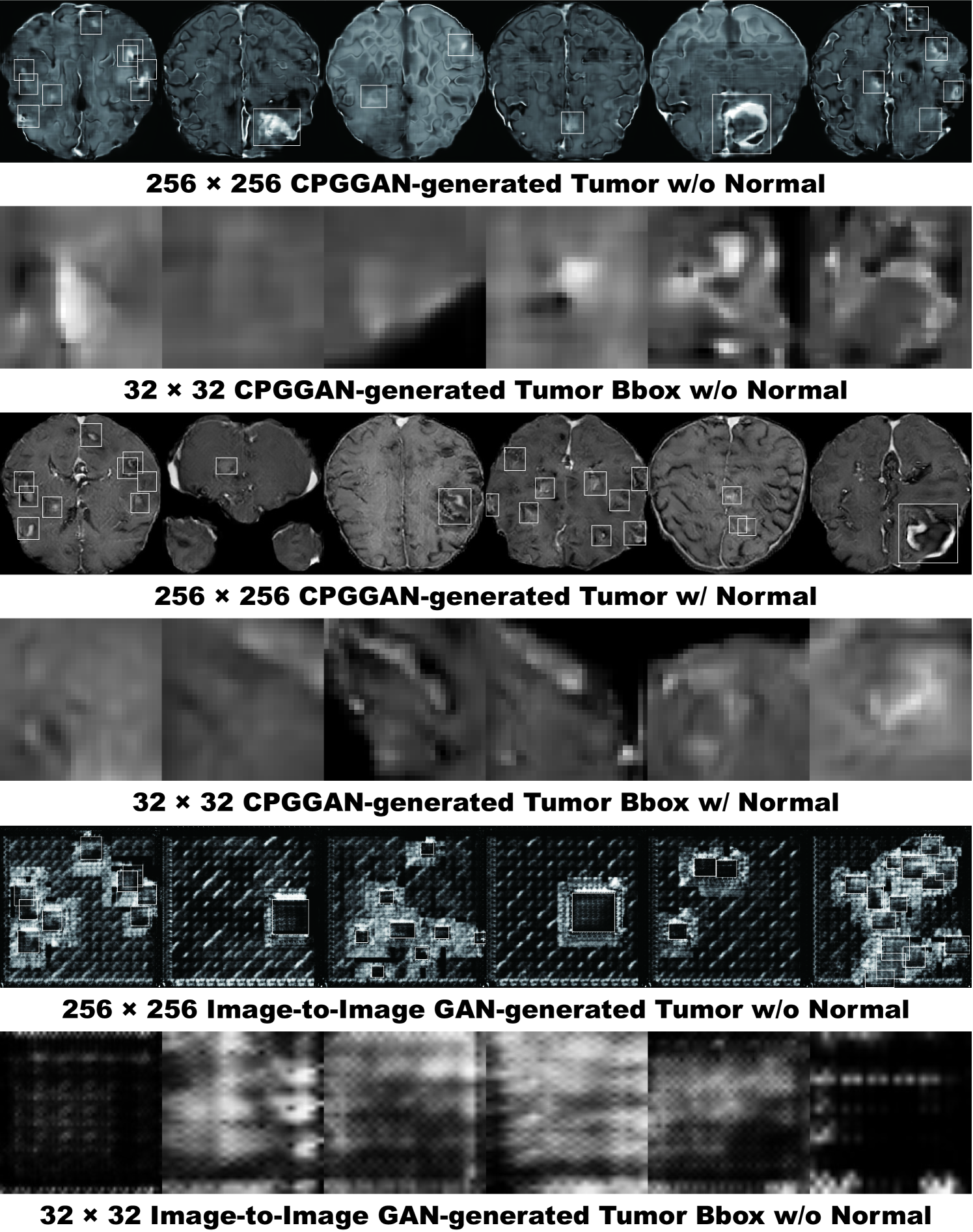

5.4.1 MR Images Generated by PGGANs

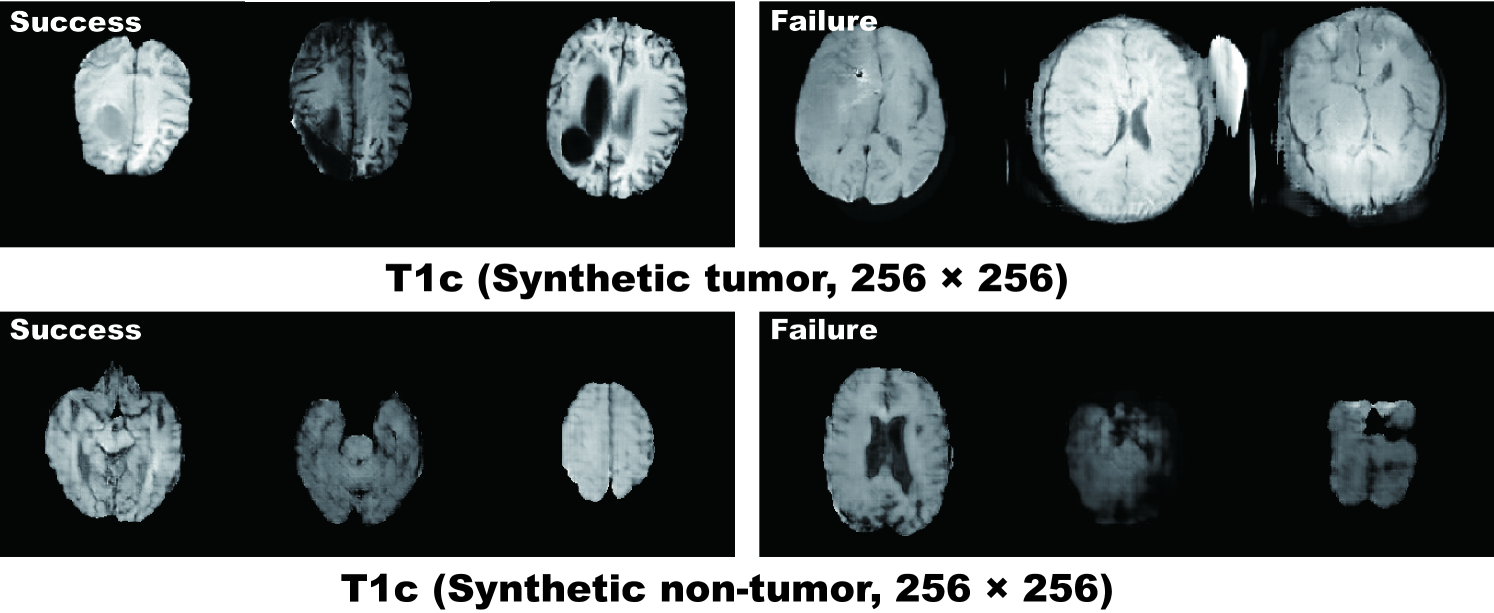

Fig. 5.5 illustrates examples of synthetic MR images by PGGANs. We visually confirm that, for about of cases, it successfully captures the T1c-specific texture and tumor appearance, while maintaining the realism of the original brain MR images; but, for the rest , the generated images lack clear tumor/non-tumor features or contain unrealistic features (i.e., hyper-intensity, gray contours, and odd artifacts).

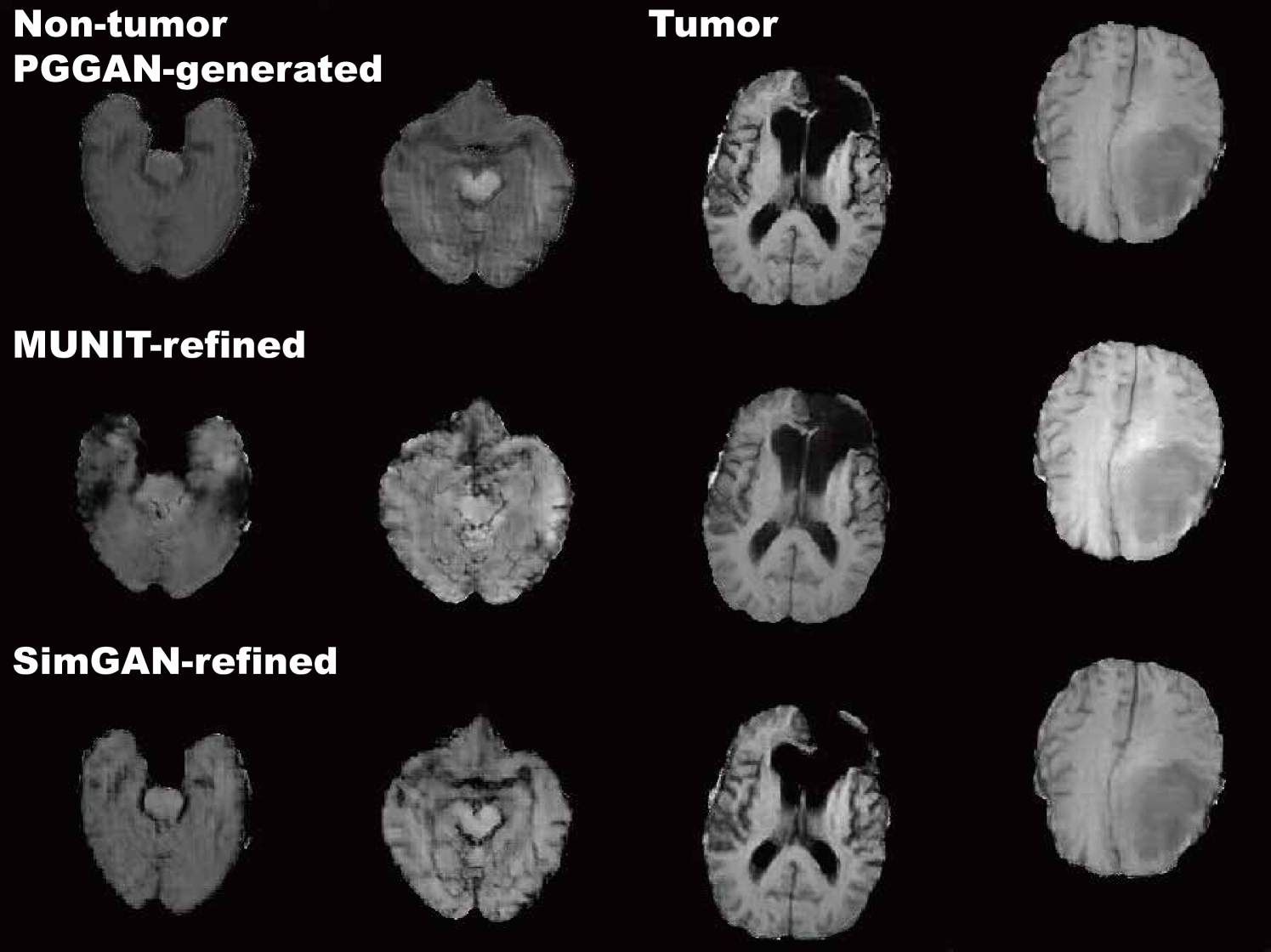

5.4.2 MR Images Refined by MUNIT/SimGAN

MUNIT and SimGAN differently refine PGGAN-generated images—they render the texture and contours while maintaining the overall shape (Fig. 5.6). Non-tumor images change more remarkably than tumor images for both MUNIT and SimGAN; it probably derives from unsupervised image translation’s loss for consistency to avoid image collapse, resulting in conservative change for more complicated images.

5.4.3 Tumor Classification Results

Table 5.5 shows the brain tumor classification results with/without DA while Table 5.6 indicates their pairwise comparison (-values between our two-step GAN-based DA setups and the other DA setups) using McNemar’s test. ImageNet pre-training generally outperforms training from scratch despite different image domains (i.e., natural images to medical images). As expected, classic DA remarkably improves classification, while no clear difference exists between the / classic DA under sufficient geometrically-transformed training images. When pre-trained, each GAN-based DA (i.e., PGGANs/MUNIT/SimGAN) alone helps classification due to the robustness from GAN-generated images; but, without pre-training, it harms classification due to the biased initialization from the GAN-overwhelming data distribution. Similarly, without pre-training, PGGAN-based DA without clustering/discarding causes poor classification due to the synthetic images with severe artifacts, unlike the PGGAN-based DA’s comparable results with/without the discarding step when pre-trained.

| DA Setups | Accuracy (%) | Sensitivity (%) | Specificity (%) | |

|---|---|---|---|---|

| (1) 8,429 real images | 93.1 (86.3) | 90.9 (88.9) | 95.9 (83.2) | |

| (2) + 200k classic DA | 95.0 (92.2) | 93.7 (89.9) | 96.6 (95.0) | |

| (3) + 400k classic DA | 94.8 (93.2) | 91.9 (90.9) | 98.4 (96.1) | |

| (4) + 200k PGGAN-based DA | 93.9 (86.2) | 92.6 (87.3) | 95.6 (84.9) | |

| (5) + 200k PGGAN-based DA w/o clustering/discarding | 94.8 (80.7) | 91.9 (80.2) | 98.4 (81.2) | |

| (6) + 200k classic DA & 200k PGGAN-based DA | 96.2 (95.6) | 94.0 (94.2) | 98.8 (97.3) | |

| (7) + 200k MUNIT-refined DA | 94.3 (83.7) | 93.0 (87.8) | 95.8 (78.5) | |

| (8) + 200k classic DA & 200k MUNIT-refined DA | 96.7 (96.3) | 95.4 (97.5) | 98.2 (95.0) | |

| (9) + 200k SimGAN-refined DA | 94.5 (77.6) | 92.3 (82.3) | 97.1 (72.0) | |

| (10) + 200k classic DA & 200k SimGAN-refined DA | 96.4 (95.0) | 95.1 (95.1) | 97.9 (95.0) | |

| DA Setup Comparison | Accu | Sens | Spec | DA Setup Comparison | Accu | Sens | Spec | DA Setup Comparison | Accu | Sens | Spec | |

| (7) w/ PT vs (1) w/ PT | 0.693 | 0.206 | 1 | (7) w/ PT vs (1) w/o PT | 0.001 | 0.002 | 0.001 | (7) w/ PT vs (2) w/ PT | 1 | 1 | 1 | |

| (7) w/ PT vs (2) w/o PT | 0.034 | 0.024 | 1 | (7) w/ PT vs (3) w/ PT | 1 | 1 | 0.035 | (7) w/ PT vs (3) w/o PT | 1 | 0.468 | 1 | |

| (7) w/ PT vs (4) w/ PT | 1 | 1 | 1 | (7) w/ PT vs (4) w/o PT | 0.001 | 0.001 | 0.001 | (7) w/ PT vs (5) w/ PT | 1 | 1 | 0.003 | |

| (7) w/ PT vs (5) w/o PT | 0.001 | 0.001 | 0.001 | (7) w/ PT vs (6) w/ PT | 0.009 | 1 | 0.001 | (7) w/ PT vs (6) w/o PT | 0.397 | 1 | 1 | |

| (7) w/ PT vs (7) w/o PT | 0.001 | 0.001 | 0.001 | (7) w/ PT vs (8) w/ PT | 0.001 | 0.025 | 0.045 | (7) w/ PT vs (8) w/o PT | 0.008 | 0.001 | 1 | |

| (7) w/ PT vs (9) w/ PT | 1 | 1 | 1 | (7) w/ PT vs (9) w/o PT | 0.001 | 0.001 | 0.001 | (7) w/ PT vs (10) w/ PT | 0.001 | 0.077 | 0.108 | |

| (7) w/ PT vs (10) w/o PT | 1 | 0.206 | 1 | (7) w/o PT vs (1) w/ PT | 0.001 | 0.135 | 0.001 | (7) w/o PT vs (1) w/o PT | 0.026 | 1 | 0.014 | |

| (7) w/o PT vs (2) w/ PT | 0.001 | 0.001 | 0.001 | (7) w/o PT vs (2) w/o PT | 0.001 | 1 | 0.001 | (7) w/o PT vs (3) w/ PT | 0.001 | 0.020 | 0.001 | |

| (7) w/o PT vs (3) w/o PT | 0.001 | 0.147 | 0.001 | (7) w/o PT vs (4) w/ PT | 0.001 | 0.002 | 0.001 | (7) w/o PT vs (4) w/o PT | 0.044 | 1 | 0.001 | |

| (7) w/o PT vs (5) w/ PT | 0.001 | 0.015 | 0.001 | (7) w/o PT vs (5) w/o PT | 0.011 | 0.001 | 1 | (7) w/o PT vs (6) w/ PT | 0.001 | 0.001 | 0.001 | |

| (7) w/o PT vs (6) w/o PT | 0.001 | 0.001 | 0.001 | (7) w/o PT vs (8) w/ PT | 0.001 | 0.001 | 0.001 | (7) w/o PT vs (8) w/o PT | 0.001 | 0.001 | 0.001 | |

| (7) w/o PT vs (9) w/ PT | 0.001 | 0.004 | 0.001 | (7) w/o PT vs (9) w/o PT | 0.001 | 0.001 | 0.001 | (7) w/o PT vs (10) w/ PT | 0.001 | 0.001 | 0.001 | |

| (7) w/o PT vs (10) w/o PT | 0.001 | 0.001 | 0.001 | (8) w/ PT vs (1) w PT | 0.001 | 0.001 | 0.010 | (8) w/ PT vs (1) w/o PT | 0.001 | 0.001 | 0.001 | |

| (8) w/ PT vs (2) w/ PT | 0.001 | 0.074 | 0.206 | (8) w/ PT vs (2) w/o PT | 0.001 | 0.001 | 0.001 | (8) w/ PT vs (3) w/ PT | 0.002 | 0.001 | 1 | |

| (8) w/ PT vs (3) w/o PT | 0.001 | 0.001 | 0.112 | (8) w/ PT vs (4) w/ PT | 0.001 | 0.001 | 0.006 | (8) w/ PT vs (4) w/o PT | 0.001 | 0.001 | 0.001 | |

| (8) w/ PT vs (5) w/ PT | 0.002 | 0.001 | 1 | (8) w/ PT vs (5) w/o PT | 0.001 | 0.001 | 0.001 | (8) w/ PT vs (6) w/ PT | 1 | 0.128 | 1 | |

| (8) w/ PT vs (6) w/o PT | 0.222 | 0.760 | 1 | (8) w/ PT vs (8) w/o PT | 1 | 0.008 | 0.001 | (8) w/ PT vs (9) w/ PT | 0.001 | 0.001 | 1 | |

| (8) w/ PT vs (9) w/o PT | 0.001 | 0.001 | 0.001 | (8) w/ PT vs (10) w/ PT | 1 | 1 | 1 | (8) w/ PT vs (10) w/o PT | 0.007 | 1 | 0 | |

| (8) w/o PT vs (1) w/ PT | 0.001 | 0.001 | 1 | (8) w/o PT vs (1) w/o PT | 0.001 | 0.001 | 0.001 | (8) w/o PT vs (2) w/ PT | 0.179 | 0.001 | 0.588 | |

| (8) w/o PT vs (2) w/o PT | 0.001 | 0.001 | 1 | (8) w/o PT vs (3) w/ PT | 0.101 | 0.001 | 0.001 | (8) w/o PT vs (3) w/o PT | 0.001 | 0.001 | 1 | |

| (8) w/o PT vs (4) w/ PT | 0.001 | 0.001 | 1 | (8) w/o PT vs (4) w/o PT | 0.001 | 0.001 | 0.001 | (8) w/o PT vs (5) w/ PT | 0.197 | 0.001 | 0.001 | |

| (8) w/o PT vs (5) w/o PT | 0.001 | 0.001 | 0.001 | (8) w/o PT vs (6) w/ PT | 1 | 0.001 | 0.001 | (8) w/o PT vs (6) w/o PT | 1 | 0.001 | 0.007 | |

| (8) w/o PT vs (9) w/ PT | 0.023 | 0.001 | 0.256 | (8) w/o PT vs (9) w/o PT | 0.001 | 0.001 | 0.001 | (8) w/o PT vs (10) w/ PT | 1 | 0.002 | 0.001 | |

| (8) w/o PT vs (10) w/o PT | 0.143 | 0.005 | 1 | (9) w/ PT vs (1) w/ PT | 0.387 | 1 | 1 | (9) w/ PT vs (1) w/o PT | 0.001 | 0.046 | 0.001 | |

| (9) w/ PT vs (2) w/ PT | 1 | 1 | 1 | (9) w/ PT vs (2) w/o PT | 0.008 | 0.262 | 0.321 | (9) w/ PT vs (3) w/ PT | 1 | 1 | 0.931 | |

| (9) w/ PT vs (3) w/o PT | 0.910 | 1 | 1 | (9) w/ PT vs (4) w/ PT | 1 | 1 | 0.764 | (9) w/ PT vs (4) w/o PT | 0.001 | 0.001 | 0.001 | |

| (9) w/ PT vs (5) w/ PT | 1 | 1 | 0.639 | (9) w/ PT vs (5) w/o PT | 0.001 | 0.001 | 0.001 | (9) w/ PT vs (6) w/ PT | 0.014 | 0.660 | 0.066 | |

| (9) w/ PT vs (6) w/o PT | 0.716 | 0.365 | 1 | (9) w/ PT vs (9) w/o PT | 0.001 | 0.001 | 0.001 | (9) w/ PT vs (10) w/ PT | 0.004 | 0.006 | 1 | |

| (9) w/ PT vs (10) w/o PT | 1 | 0.017 | 0.256 | (9) w/o PT vs (1) w/ PT | 0.001 | 0.001 | 0.001 | (9) w/o PT vs (1) w/o PT | 0.001 | 0.001 | 0.001 | |

| (9) w/o PT vs (2) w/ PT | 0.001 | 0.001 | 0.001 | (9) w/o PT vs (2) w/o PT | 0.001 | 0.001 | 0.001 | (9) w/o PT vs (3) w/ PT | 0.001 | 0.001 | 0.001 | |

| (9) w/o PT vs (3) w/o PT | 0.001 | 0.001 | 0.001 | (9) w/o PT vs (4) w/ PT | 0.001 | 0.001 | 0.001 | (9) w/o PT vs (4) w/o PT | 0.001 | 0.001 | 0.001 | |

| (9) w/o PT vs (5) w/ PT | 0.001 | 0.001 | 0.001 | (9) w/o PT vs (5) w/o PT | 0.022 | 1 | 0.001 | (9) w/o PT vs (6) w/ PT | 0.001 | 0.001 | 0.001 | |

| (9) w/o PT vs (6) w/o PT | 0.001 | 0.001 | 0.001 | (9) w/o PT vs (10) w/PT | 0.001 | 0.001 | 0.001 | (9) w/o PT vs (10) w/o PT | 0.001 | 0.001 | 0.001 | |

| (10) w/ PT vs (1) w/ PT | 0.001 | 0.001 | 0.049 | (10) w/ PT vs (1) w/o PT | 0.001 | 0.001 | 0.001 | (10) w/ PT vs (2) w/ PT | 0.039 | 0.515 | 1 | |

| (10) w/ PT vs (2) w/o PT | 0.001 | 0.001 | 0.002 | (10) w/ PT vs (3) w/ PT | 0.017 | 0.001 | 1 | (10) w/ PT vs (3) w/o PT | 0.001 | 0.001 | 0.415 | |

| (10) w/ PT vs (4) w/ PT | 0.001 | 0.019 | 0.028 | (10) w/ PT vs (4) w/o PT | 0.001 | 0.001 | 0.001 | (10) w/ PT vs (5) w/ PT | 0.015 | 0.001 | 1 | |

| (10) w/ PT vs (5) w/o PT | 0.001 | 0.001 | 0.001 | (10) w/ PT vs (6) w/ PT | 1 | 1 | 1 | (10) w/ PT vs (6) w/o PT | 0.981 | 1 | 1 | |

| (10) w/ PT vs (10) w/o PT | 0.054 | 1 | 0.002 | (10) w/o PT vs (1) w/ PT | 0.039 | 0.001 | 1 | (10) w/o PT vs (1) w/o PT | 0.001 | 0.001 | 0.001 | |

| (10) w/o PT vs (2) w/ PT | 1 | 0.727 | 0.649 | (10) w/o PT vs (2) w/o PT | 0.001 | 0.001 | 1 | (10) w/o PT vs (3) w/ PT | 1 | 0.002 | 0.001 | |

| (10) w/o PT vs (3) w/o PT | 0.039 | 0.001 | 1 | (10) w/o PT vs (4) w/ PT | 1 | 0.019 | 1 | (10) w/o PT vs (4) w/o PT | 0.001 | 0.001 | 0.001 | |

| (10) w/o PT vs (5) w/ PT | 1 | 0.002 | 0.001 | (10) w/o PT vs (5) w/o PT | 0.001 | 0.001 | 0.001 | (10) w/o PT vs (6) w/ PT | 0.308 | 1 | 0.001 | |

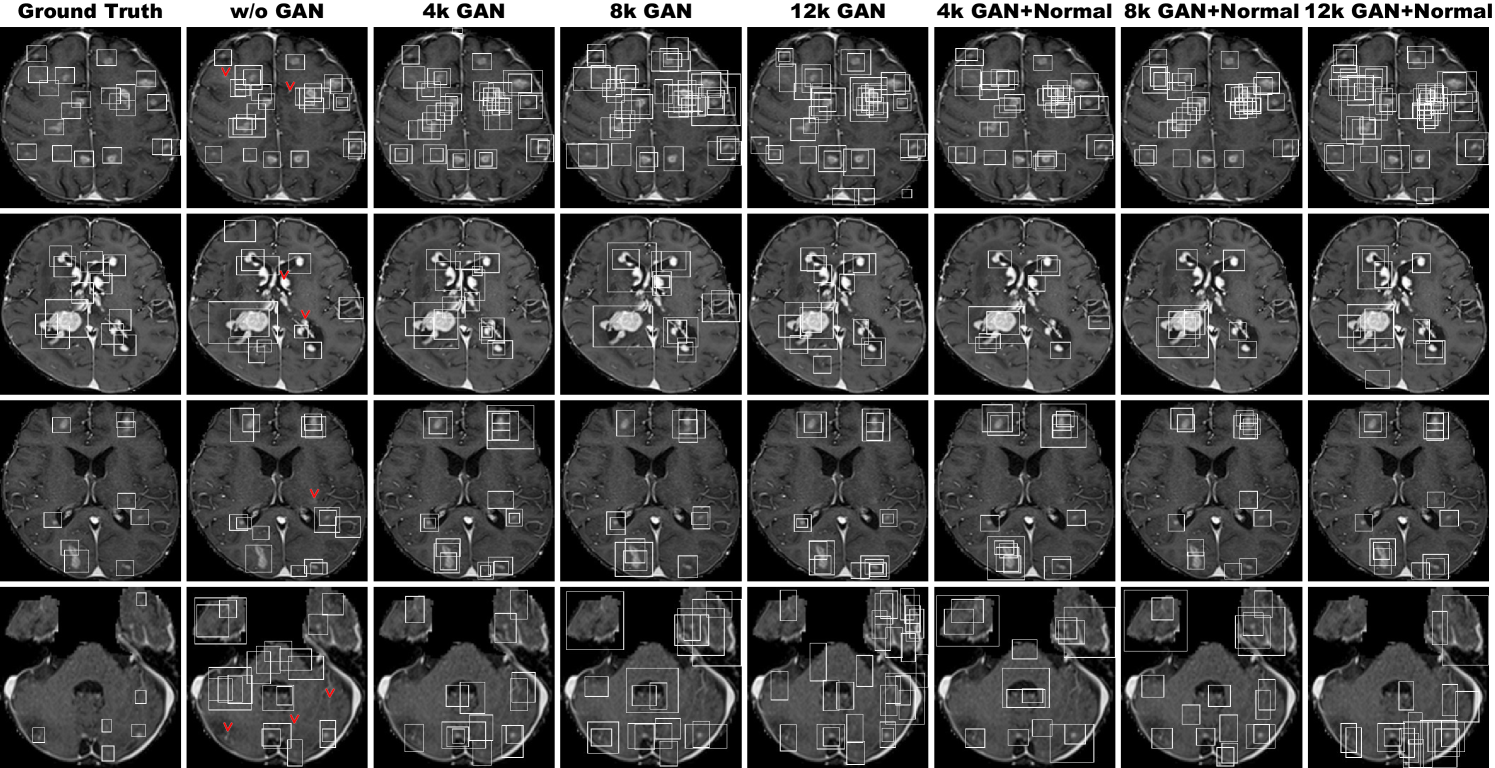

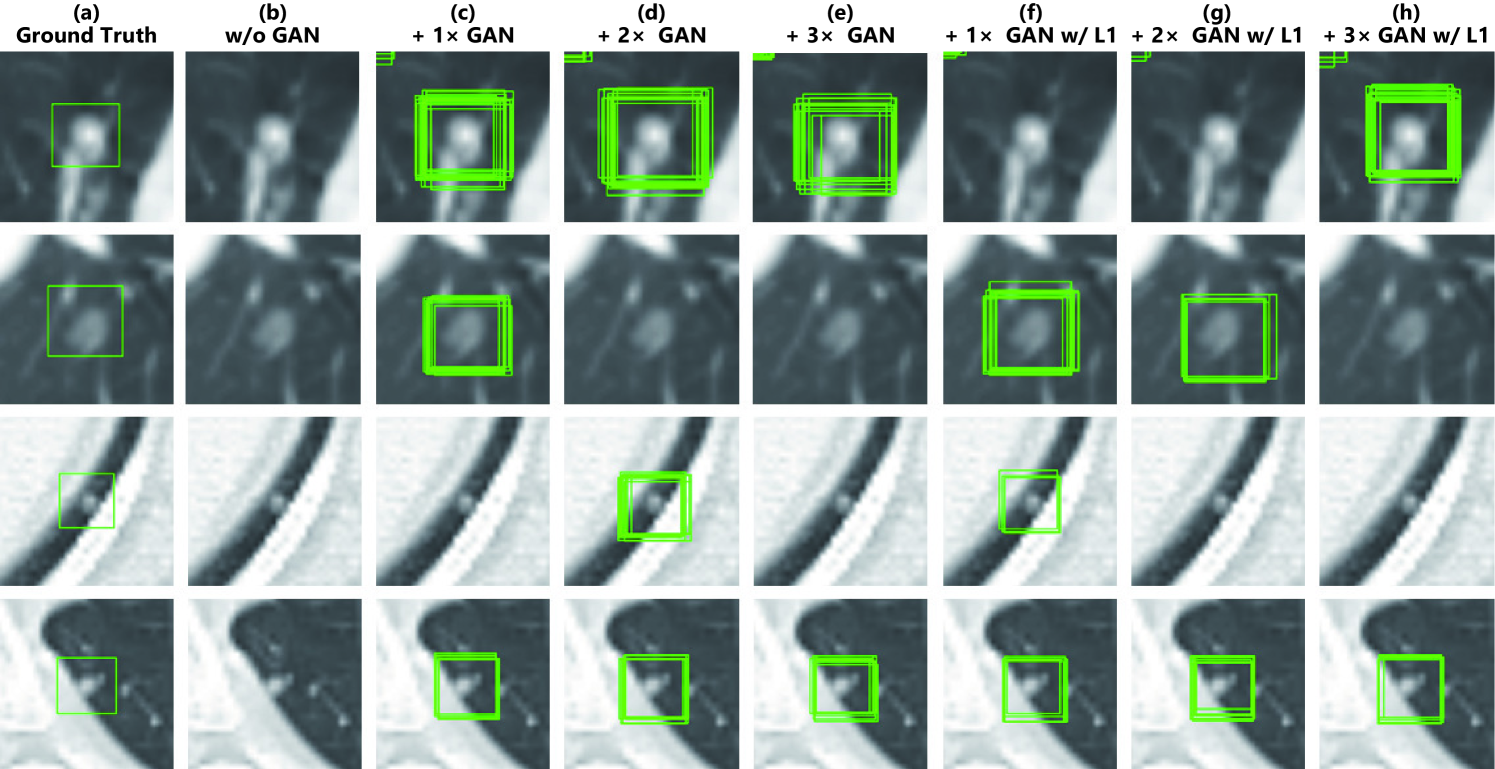

| (10) w/o PT vs (6) w/o PT | 1 | 1 | 0.035 | |||||||||