Theoretical Analysis of Deep Neural Networks in Physical Layer Communication

Abstract

Recently, deep neural network (DNN)-based physical layer communication techniques have attracted considerable interest. Although simulation experiments have validated their potential to enhance communication systems and their superb performance, little attention has been paid to their theoretical analysis. Specifically, most studies in the physical layer have focused on applying DNN models to wireless communication problems rather than on theoretically understanding how a DNN works in a communication system. In this paper, we aim to quantitatively analyze why DNNs can achieve performance comparable to that of traditional techniques in the physical layer, and to derive their cost in terms of computational complexity. To this end, we first analyze the encoding performance of a DNN-based transmitter and compare it with a traditional one. Second, we theoretically analyze the performance of a DNN-based estimator and compare it with traditional estimators. Third, we investigate and validate how information flows in a DNN-based communication system using information-theoretic concepts. Our analysis develops a concise way to open the “black box” of DNNs in physical layer communication, which can support the design of DNN-based intelligent communication techniques and help provide explainable performance assessment.

Index Terms:

Theoretical analysis, deep neural network (DNN), physical layer communication, information theory.

I Introduction

The mathematical theory of communication systems has developed dramatically since Claude Elwood Shannon’s monograph “A mathematical theory of communication” [2] laid the foundation of digital communication. However, the gap between theory and practice related to the wireless channel still needs to be filled, because wireless channels are difficult to model precisely. Recently, deep neural networks (DNNs) have drawn a lot of attention as a powerful tool for solving science and engineering problems that are virtually impossible to formulate explicitly, such as protein structure prediction [3], image recognition [4], speech recognition [5] and natural language processing [6]. These promising approaches motivate researchers to implement DNNs in existing physical layer communication systems.

To mitigate this gap, a natural idea is to let a DNN jointly optimize a transmitter and a receiver for a given channel model, without being limited to component-wise optimization. Autoencoders (AEs) are considered a tool for solving this problem. An autoencoder is a type of artificial neural network used to learn efficient codings of unlabeled data. It has two main parts: an encoder that maps the input to a code, and a decoder that maps the code to a reconstruction of the input. This structure is equivalent to the concept of a communication system. Along this thread, purely data-driven AE-based end-to-end communication systems were first proposed to jointly optimize transmitter and receiver components [7, 8, 9, 10]. T. O’Shea and J. Hoydis then model the linear and nonlinear steps of processing the received signal as a radio transformer network (RTN) in [7], which can be integrated into the end-to-end training process. The idea of end-to-end learning of communication systems and RTNs through DNNs is extended to orthogonal frequency division multiplexing (OFDM) in [11] and to multiple-input multiple-output (MIMO) systems in [12].

Unfortunately, training these DNNs over practical wireless channels is far from trivial. First, these methods require reliable feedback links. As Shannon described in [2], the fundamental problem of communication is “reproducing at one point either exactly or approximately a message selected at another point”. However, AE-based methods present a “chicken and egg” problem: a reliable communication system is needed to back-propagate the error before an end-to-end communication system can actually be trained. This leads to a paradox [13]. To tackle this problem, DNNs are trained offline and then tested online in practical applications. However, this strategy leads to a second problem: these methods assume that an explicit channel model is available for calculating the gradients in the optimization. Because perfect channel information is unavailable, these methods are forced to train the DNN with simulated channels, which usually results in a model mismatch problem. Specifically, DNN models trained offline show significant performance degradation in testing unless the training and test sets follow the same probability distribution. Another solution is to sample a wireless channel by transmitting probe signals from a neural network-based transmitter. For example, since an AE requires a differentiable channel model, the method proposed in [14] calculates the gradient with respect to the parameters of the neural network-based transmitter by sampling the channel distribution. Similarly, [15] uses a stochastic perturbation technique to train an end-to-end communication system without relying on an explicit channel model, but the number of training samples required is still prohibitive.

Another idea is to estimate the channel as accurately as possible and recover the channel state information (CSI) by implementing a DNN at the receiver, so that the effects of fading can be reduced. This strategy can usually be divided into two main categories: one uses pure data to train a DNN (data-driven), and the other combines data with existing models to train a DNN (model-driven). In the data-driven manner, neural networks (NNs) are optimized merely over a large training set labeled with true channel responses, and a mathematically tractable model is unnecessary [16]. The authors of [17] propose a data-driven end-to-end DNN-based CSI compression, feedback and recovery mechanism, which is further extended with long short-term memory (LSTM) to tackle time-varying massive MIMO channels [18]. To achieve better estimation performance and reduce computational cost, a compact and flexible deep residual network architecture is proposed in [19] to perform channel estimation for an OFDM system based on downlink pilots. Nevertheless, the performance of data-driven approaches heavily depends on an enormous amount of labeled data, which cannot easily be obtained in wireless communication. To address this issue, a large body of model-driven research has been carried out to achieve efficient receivers [20, 21, 22, 23, 24]. In the model-driven manner, instead of relying only on a large labeled data set, domain knowledge is also used to construct the DNN [25]. For example, in model-driven channel estimation, least-squares (LS) estimates are usually fed into a DNN, which then yields enhanced channel estimates.
Furthermore, to mitigate disturbances other than Gaussian noise, such as channel fading and nonlinear distortion, and to further reduce the computational complexity of training, [26] proposes an online fully complex extreme learning machine (ELM)-based channel estimation and equalization scheme.

Compared with traditional physical layer communication systems, the above-mentioned DNN-based techniques show competitive performance in simulation experiments. However, the dynamics behind DNNs in physical layer communication remain unknown. In the domain of information theory, a considerable body of research has investigated the process of learning. In [27], Tishby et al. propose the information bottleneck (IB) method, which provides a framework for discussing a variety of problems in signal processing, learning, etc. Then, in [28], DNNs are analyzed within the theoretical framework of the IB principle. In [29], variants of the IB problem are discussed. In [30], Tishby’s centralized IB method is generalized to the distributed setting. Reference [31] considers the IB problem of a Rayleigh fading MIMO channel with an oblivious relay. However, the problems considered in these studies differ from those of wireless communication, in which complexity-relevance tradeoffs are usually not considered. Moreover, three major problems remain. (i) Although simulations have shown that AE-based end-to-end communication systems can approach the optimal symbol error rate (SER), i.e., the SER of a system using the optimal constellation, no quantitative comparative analysis has been conducted. (ii) How a DNN processes information as a module in a receiver has not been quantitatively investigated. (iii) The methodology for designing data sets and neural network structures, which plays an important role in neural network-based channel estimation, has not been theoretically studied.

In this paper, we first attempt to give a mathematical explanation of the mechanism of end-to-end DNN-based communication systems. Then, we try to unveil the role of DNNs in the task of channel estimation. We believe that this provides a concise way to open and understand the “black box” of DNNs in physical layer communication. Our main contributions are fourfold:

- Instead of proposing a scheme that combines a DNN with a typical communication system, we analyze the behavior of a DNN-based communication system from the perspectives of the whole DNN (communication system), the encoder (transmitter) and the decoder (receiver). We also analyze and compare the performance of the DNN-based transmitter with that of the conventional method, i.e., the gradient-search algorithm, in terms of both convergence properties and computational complexity.

- We treat channel estimation as a typical inference problem. Using information theory, we analyze and compare the performance of DNN-based channel estimation with that of the LS and linear minimum mean-squared error (LMMSE) estimators. Furthermore, we derive the analytical relation between the performance and the hyperparameters as well as the training set.

- We conduct computer simulations, and the results verify that the constellations produced by AEs are equivalent to the (locally) optimum constellations obtained by the gradient-search algorithm, which minimize the asymptotic probability of error in Gaussian noise under an average power constraint.

- Through simulation experiments, our theoretical analysis is validated, and the information flow in the DNNs for channel estimation is estimated by using the matrix-based functional of Rényi’s α-entropy to approximate Shannon’s entropy.

To the best of our knowledge, there are typically two approaches to integrating DNNs with communication systems. (i) The holistic approach treats a communication system as an end-to-end process and uses an AE to replace the whole communication system. (ii) The phase-oriented approach investigates the application of a DNN only in a certain module of the communication process [32]. Therefore, without loss of generality, we mainly investigate two cases: the AE-based communication system and a DNN deployed independently at a receiver.

We note that a shorter conference version of this paper appeared in the IEEE Wireless Communications and Networking Conference (2022). The conference paper gives preliminary simulation results, whereas this manuscript provides a comprehensive analysis and proofs.

The remainder of this paper is organized as follows. Section II presents the system model, and Sections III and IV formulate and analyze the problem from the perspectives of the encoder and the decoder, respectively. Simulation results are presented in Section V. Finally, conclusions are drawn in Section VI.

Notations: The notations adopted in this paper are as follows. We use boldface lowercase , capital letters and calligraphic letters to denote column vectors, matrices and sets, respectively. For a matrix , we use to denote its -th entry. For a vector , we use to denote the Euclidean norm. For a matrix , we use to denote the Frobenius norm and to denote the operator norm, where represents the largest singular value of matrix . If a matrix is positive semi-definite, we use to denote its smallest eigenvalue. We use to denote the standard Euclidean inner product between two vectors or matrices. We let . We use to denote the -dimensional standard Gaussian distribution. We also use to denote the standard big-O notation, hiding only constants. In addition, denotes the Hadamard product, denotes the expectation operation, and denotes the trace of a matrix.

II System Model

In this section, we first describe the considered system model and then provide a detailed explanation of the problem formulation from two different perspectives in the following sections.

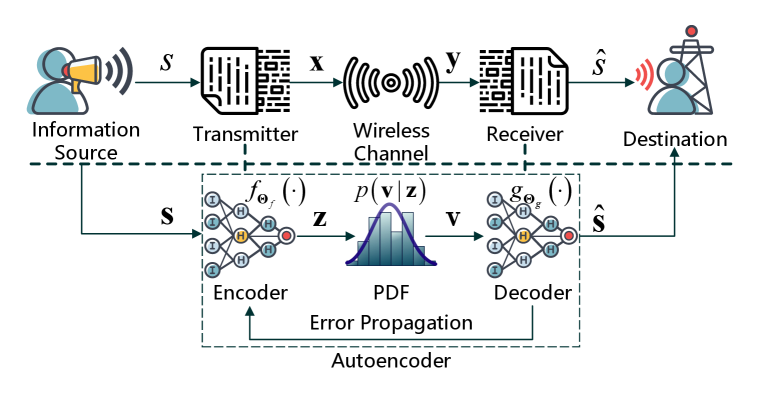

II-A Traditional Communication System

As shown in the upper part of Fig. 1, consider the process of message transmission from the perspective of a typical communication system. We assume that an information source generates a sequence of -bit message symbols to be communicated to the destination. The modulation modules inside the transmitter then map each symbol to a signal , where denotes the dimension of the signal space. The signal alphabet is denoted by . During channel transmission, the -dimensional signal is corrupted to with conditional probability density function (PDF) . In this paper, we use bandpass channels, each with separately modulated in-phase and quadrature components, to transmit the -dimensional signal [33]. Finally, the received signal is mapped by the demodulation module inside the receiver to , which is an estimate of the transmitted symbol . These procedures were exhaustively described by Shannon [2].

II-B Understanding Autoencoders on Message Transmission

From the point of view of filtering or signal inference, the idea of an AE-based communication system matches Norbert Wiener’s perspective [34]. As shown in the lower part of Fig. 1, the AE consists of an encoder and a decoder, each of which is a feedforward neural network with parameters (weights and biases) and , respectively. Note that each symbol from the information source is usually encoded as a one-hot vector before being fed into the encoder. Given a constraint (e.g., an average signal power constraint), the PDF of the wireless channel and a loss function that minimizes the symbol error probability, the encoder and decoder learn, respectively, to appropriately represent as and to map the corrupted signal to an estimate of the transmitted symbol where . Here, we use to denote the signals transmitted from the encoder in order to distinguish them from the signals transmitted from the transmitter.
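To make the encoder, channel and decoder pipeline concrete, the following NumPy sketch runs one untrained forward pass through an AE laid out like Table I (Dense+ReLU, Dense+linear, normalization, channel, Dense+ReLU, Dense+softmax). The hidden width M, the signal dimension n, the weight initialization, the batch-level average-power normalization, the AWGN noise level and all function names are illustrative assumptions, not the authors' implementation; no training is performed.

```python
import numpy as np


def relu(z):
    return np.maximum(z, 0.0)


def softmax(z):
    # Row-wise softmax with the usual max-shift for numerical stability.
    e = np.exp(z - z.max(axis=1, keepdims=True))
    return e / e.sum(axis=1, keepdims=True)


def init_ae(m, n, rng):
    # Hypothetical widths following the Table I pattern: M -> M -> n | channel | n -> M -> M.
    # Each entry is (weight matrix of shape (fan_in, fan_out), bias vector).
    shapes = [(m, m), (m, n), (n, m), (m, m)]
    return [(rng.standard_normal(s) / np.sqrt(s[0]), np.zeros(s[1])) for s in shapes]


def ae_forward(symbols, params, m, noise_std, rng, p_avg=1.0):
    (w1, b1), (w2, b2), (w3, b3), (w4, b4) = params
    s = np.eye(m)[symbols]                                    # one-hot encoding, shape (B, M)
    x = relu(s @ w1 + b1) @ w2 + b2                           # encoder: Dense+ReLU, Dense+linear
    x = x * np.sqrt(p_avg / np.mean(np.sum(x ** 2, axis=1)))  # average power over the batch = p_avg
    y = x + noise_std * rng.standard_normal(x.shape)          # AWGN channel layer
    probs = softmax(relu(y @ w3 + b3) @ w4 + b4)              # decoder: Dense+ReLU, Dense+softmax
    return x, probs


rng = np.random.default_rng(0)
M, n = 16, 2
params = init_ae(M, n, rng)
x, probs = ae_forward(np.arange(M), params, M, noise_std=0.1, rng=rng)
s_hat = probs.argmax(axis=1)   # decoded symbols (arbitrary, since the AE is untrained)
```

Training would adjust all four weight matrices jointly through the channel layer, which is exactly where the transmitter-side gradients discussed in Section III-B come from.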

From the perspective of the whole AE (communication system), it aims to transmit information to a destination with low error probability. The symbol error probability, i.e., the probability that the wireless channel has shifted a signal point into another signal’s decision region, is

| (1) |

The AE can use the cross-entropy loss function

| (2) | ||||

to represent the cost of inaccurate predictions, where denotes the -th element of the -th symbol in a training set with symbols. To train the AE to minimize the symbol error probability, the optimal parameters can be found by optimizing the loss function

| (3) | ||||

where denotes the average power. In this paper, we set . It is now important to explain how the mapping varies once training is complete.
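The cross-entropy loss in (2) and the empirical counterpart of the symbol error probability in (1) can be sketched as follows, assuming one-hot labels and softmax outputs stored row-wise (one row per training symbol). The function names and the small `eps` guard are illustrative choices.

```python
import numpy as np


def cross_entropy(labels_onehot, probs, eps=1e-12):
    # Mean over the training set of -sum_k s_k log p_k; eps guards against log(0).
    return -np.mean(np.sum(labels_onehot * np.log(probs + eps), axis=1))


def symbol_error_rate(labels_onehot, probs):
    # Empirical counterpart of (1): fraction of symbols whose arg-max decision is wrong.
    return np.mean(labels_onehot.argmax(axis=1) != probs.argmax(axis=1))


labels = np.eye(4)                  # one training example per symbol, M = 4
uniform = np.full((4, 4), 0.25)     # a decoder that has learned nothing
loss_uniform = cross_entropy(labels, uniform)   # equals log(4) up to eps
```

A decoder that outputs the uniform distribution incurs a loss of log M, while a perfect decoder drives the loss toward zero; minimizing (2) therefore pushes the decision regions toward a low symbol error rate.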

III Encoder: Finding a Good Representation

We first focus on the encoder (transmitter). In communication, an encoder needs to learn a representation that is robust against channel disturbances, including thermal noise, channel fading, nonlinear distortion, phase jitter, etc. This is equivalent to finding a coded (or uncoded) modulation scheme with a signal set of size that maps a symbol to a point for a given transmitted power and maximizes the minimum distance between any two constellation points. The problem of finding good signal constellations for a Gaussian channel [Footnote 1: Constellation optimization is usually considered for the Gaussian channel. Although the problem has been studied for the Rayleigh fading channel in [35], that work assumes perfectly known side information.] is usually associated with the search for lattices with high packing density, which is a well-studied problem in the mathematical literature [36]. This problem can be addressed by the two different methods described below.

III-A Traditional Method: Gradient-Search Algorithm

The seminal work [37] proposed a gradient-search algorithm to obtain optimum constellations. Consider a zero-mean stationary additive white Gaussian noise (AWGN) channel with one-sided spectral density . For large signal-to-noise ratio (SNR), the asymptotic approximation of (1) can be written as

| (4) |

To minimize , the problem can be formulated as

| (5) | ||||

where denotes the optimal signal set. This optimization problem can be solved by using a constrained gradient-search algorithm. We denote as an matrix

| (6) |

Then, the -th step of the constrained gradient-search algorithm can be described by

| (7a) | |||

| (7b) | |||

where denotes the step size and denotes the gradient of with respect to the current constellation points. It can be written as

| (8) |

where

| (9) |

The vector denotes the -dimensional unit vector in the direction of .
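The constrained gradient search of (7a) and (7b) can be sketched as a gradient step followed by re-projection onto the average-power constraint. Because the exact form of (4) is not reproduced here, the sketch assumes a pairwise-exponential high-SNR proxy, (1/M) Σ_{i≠j} exp(−‖x_i − x_j‖²/(4N0)), whose gradient is a weighted sum of vectors along x_i − x_j, mirroring the unit-vector structure of (8) and (9). The proxy, the step size, N0 and the function names are assumptions.

```python
import numpy as np


def asymptotic_error(x, n0):
    # Assumed high-SNR proxy: (1/M) * sum_{i != j} exp(-||x_i - x_j||^2 / (4 N0)).
    d2 = np.sum((x[:, None, :] - x[None, :, :]) ** 2, axis=-1)
    off_diag = ~np.eye(len(x), dtype=bool)
    return np.exp(-d2[off_diag] / (4.0 * n0)).sum() / len(x)


def normalize_power(x, p_avg=1.0):
    # Projection step: rescale so that (1/M) * sum_i ||x_i||^2 = p_avg.
    return x * np.sqrt(p_avg / np.mean(np.sum(x ** 2, axis=1)))


def gradient_search(x0, n0=0.1, step=0.1, n_steps=1000, p_avg=1.0):
    x = normalize_power(np.asarray(x0, dtype=float), p_avg)
    m = len(x)
    for _ in range(n_steps):
        diff = x[:, None, :] - x[None, :, :]             # diff[i, j] = x_i - x_j
        w = np.exp(-np.sum(diff ** 2, axis=-1) / (4.0 * n0))
        np.fill_diagonal(w, 0.0)
        # Gradient of asymptotic_error: a weighted sum of vectors along x_i - x_j.
        grad = -np.einsum("ij,ijk->ik", w, diff) / (m * n0)
        x = normalize_power(x - step * grad, p_avg)      # gradient step, then re-projection
    return x


x_init = np.array([[1.0, 0.5], [0.2, 0.1]])              # hypothetical 2-point starting constellation
x_opt = gradient_search(x_init)
x8 = gradient_search(np.random.default_rng(0).standard_normal((8, 2)))   # 8 points in 2-D
```

Note that the optimized variables are the constellation points themselves, which is the key difference from the AE discussed next.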

Comparing (3) with (5) clarifies the mechanism of the encoder in an AE-based communication system. The variables optimized by the AE method are the parameters and . In other words, the AE learns the constellation design by simultaneously optimizing the parameters of the encoder and decoder; it does not directly optimize the constellation points . In contrast, the gradient-search algorithm directly optimizes . Although the two methods optimize different variables, both essentially aim to minimize the SER. Therefore, the mapping function of the encoder can be represented as

| (10) |

when the PDF used to generate training samples is a multivariate zero-mean normal distribution where denotes the -dimensional zero vector and is an diagonal matrix. A detailed explanation is given in the next subsection.

III-B Neural Network-based Method

Unlike the gradient-search algorithm, neural network-based models are created directly from data by an algorithm. However, in most cases these models are "black boxes", which means that humans, even those who design them, cannot understand how variables are being combined to make predictions [38]. At this stage, wireless communication tasks do not require the interpretability of neural networks. Nevertheless, theoretical and comparative analyses of neural network-based communication systems are indispensable for building an accurate, interpretable model.

Let the one-hot vector be the input , be the first weight matrix, be the weight matrix at the -th layer for , and be the activation function. For simplicity, we assume that the weight matrices of the intermediate layers are square. There are and hidden layers at the transmitter and receiver sides, respectively. The prediction function can be defined recursively as

| (11) | ||||

where is a scaling factor that normalizes the input in the initialization phase, and the -th layer is defined as the channel layer. Note that is the set of elements that belong to but not to . To constrain the average power of the transmitted signal to , is normalized as

| (12) |

Then, the effect on the transmitted signal resulting from a wireless channel can be expressed as

| (13) |

where is the functional form of the wireless channel with parameter set [Footnote 2: In accordance with common practice, and without introducing ambiguity, is used to denote a hidden layer and the channel in the contexts of neural networks and physical layer communication, respectively.]. Let , and finally the received signal is demodulated as

| (14) |

where .

To analyze the neural network theoretically, some technical conditions are imposed on the activation function. The first condition is that it is Lipschitz and smooth. The second is that is analytic and is not a polynomial function. In this section, softplus is chosen for the hidden layers except for the and -th layers.

While training the deep neural network, gradient descent with random initialization is used to optimize the empirical loss (2). Specifically, for every layer , each entry is sampled from a standard Gaussian distribution, . Then, the parameters can be updated by gradient descent, for and , as

| (15) |

where is the step size.

The update of parameters at the side of the receiver can be realized as

| (16) |

since we know the function entirely. At the side of the transmitter, it becomes

| (17) |

where the terms and are difficult to acquire unless the channel is fully known. In this paper, we consider both the Gaussian channel and the Rayleigh flat fading channel.

III-B1 Gaussian Channel

Let be a white Gaussian noise vector in which the variance of each entry is . The output of the channel layer can be expressed as

| (18) |

where and . Then, (18) can be expressed as

| (19) |

where denotes the equivalent weights of the channel layer and . Finally, the terms and can be written as

| (20a) | |||

| (20b) | |||

Substituting (20) into (17), we get

| (21) | ||||

III-B2 Fading Channel

We consider a Rayleigh flat fading channel for simplicity. It is not difficult to generalize our analysis to other fading channels, e.g., frequency selective fading channels.

To transmit the modulated signal , bandpass channels are needed. We assume that the channel impulse responses of the bandpass channels are mutually independent, i.e., where

| (22) |

and . The real and imaginary parts of the channel impulse response of the -th bandpass channel are denoted as and , respectively. In this case, the update of the transmitter-side parameters has the same form as (21).
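The channel layer of (18), (19) and (22) can be viewed as a linear layer whose equivalent weights are fixed by the channel. In the sketch below, AWGN corresponds to the identity matrix, while a Rayleigh flat fading gain h_l = a + jb acting on the (in-phase, quadrature) pair of the l-th bandpass channel corresponds to the 2 × 2 block [[a, −b], [b, a]]. The interleaved I/Q ordering, the CN(0, 1) gain model and the function names are assumptions made for illustration.

```python
import numpy as np


def fading_weight_matrix(h):
    # Real 2L x 2L block-diagonal matrix: each bandpass channel applies its complex gain
    # h_l = a + jb to the (in-phase, quadrature) pair as the rotation-scaling [[a, -b], [b, a]].
    w = np.zeros((2 * len(h), 2 * len(h)))
    for l, g in enumerate(h):
        w[2 * l:2 * l + 2, 2 * l:2 * l + 2] = [[g.real, -g.imag], [g.imag, g.real]]
    return w


def channel_layer(x, noise_std, rng, h=None):
    # AWGN: the equivalent weight matrix is the identity; Rayleigh flat fading: block-diagonal.
    w = np.eye(len(x)) if h is None else fading_weight_matrix(h)
    return w @ x + noise_std * rng.standard_normal(len(x))


rng = np.random.default_rng(0)
L = 3                                                                    # hypothetical number of bandpass channels
h = (rng.standard_normal(L) + 1j * rng.standard_normal(L)) / np.sqrt(2)  # CN(0, 1) gains
x = rng.standard_normal(2 * L)                                           # interleaved [I_0, Q_0, I_1, Q_1, ...]
y = channel_layer(x, noise_std=0.0, rng=rng, h=h)
```

Because a fresh h is drawn for every transmission, the equivalent weights of the channel layer change from one gradient step to the next, which is the source of the difficulty analyzed in Section III-C.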

III-C Properties of Convergence

The convergence properties of the traditional algorithm, i.e., the gradient-search algorithm, have been exhaustively analyzed in [37]. By contrast, the convergence properties of the AE-based algorithm have not yet been studied; we therefore focus on them in this subsection.

In [39], Simon S. Du et al. analyze two-layer fully connected ReLU activated neural networks. It has been shown that, with over-parameterization, gradient descent provably converges to the global minimum of the empirical loss at a linear convergence rate. Then, they develop a unified proof strategy for the fully-connected neural network, ResNet and convolutional ResNet [40].

We replace (2) with the square loss function

| (23) |

Then, the individual prediction at the -th iteration can be denoted as

| (24) |

Let where denotes the size of the training set. Simon S. Du et al. [39, 40] show that, for DNNs, the sequence admits the dynamics

| (25) |

where

| (26) | ||||

The Gram matrix induced by the weights of the -th layer is defined as for . Note that, for all , each entry of is an inner product.

In [40], it has been shown that, if the width is large enough for all layers, for all , is close to a fixed matrix which depends on the input data, neural network architecture and the activation but does not depend on neural network parameters . The Gram matrix is recursively defined as follows. For and ,

| (27a) | |||

| (27d) | |||

| (27e) | |||

| (27f) | |||

However, the existence of the channel layer obstructs this recursive process. Specifically, in the case of the Gaussian channel, implies that the strict positive definiteness of the matrix may not be guaranteed. Consequently, gradient descent may not enjoy the linear convergence rate given by (25).
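The role of positive definiteness can be illustrated with the linearized dynamics behind (25), in which the residual evolves as y − u(k+1) ≈ (I − ηG)(y − u(k)). The sketch below freezes G (an idealization of the wide-network regime in [40]) and compares a strictly positive definite G, for which the residual contracts at least as fast as (1 − η λ_min)^k, with a rank-deficient G, for which the residual component in the null space never decays. The matrices, the step size and the function name are hypothetical and are not taken from a trained AE.

```python
import numpy as np


def linearized_dynamics(gram, y, u0, eta, n_steps):
    # Iterate u(k+1) = u(k) + eta * G (y - u(k)) with a fixed Gram matrix G and
    # return the residual norms ||y - u(k)|| for k = 0, ..., n_steps.
    u = u0.copy()
    residuals = [np.linalg.norm(y - u)]
    for _ in range(n_steps):
        u = u + eta * gram @ (y - u)
        residuals.append(np.linalg.norm(y - u))
    return np.array(residuals)


rng = np.random.default_rng(0)
y = rng.standard_normal(6)

# Strictly positive definite G: linear convergence with rate (1 - eta * lambda_min).
a = rng.standard_normal((6, 6))
g_pd = a @ a.T + 0.5 * np.eye(6)
lam = np.linalg.eigvalsh(g_pd)
eta = 1.0 / lam.max()
res_pd = linearized_dynamics(g_pd, y, np.zeros(6), eta, 200)
bound = (1.0 - eta * lam.min()) ** np.arange(201) * res_pd[0]

# Rank-deficient G (lambda_min = 0): the null-space part of y - u(0) is never reduced.
b = rng.standard_normal((6, 3))
g_sing = b @ b.T
res_sing = linearized_dynamics(g_sing, y, np.zeros(6), 1.0 / np.linalg.eigvalsh(g_sing).max(), 200)
null_part = np.linalg.norm(y - b @ np.linalg.pinv(b) @ y)   # norm of y projected onto null(G)
```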

In the case of the Rayleigh flat fading channel, makes the situation worse. Since the fading channel is not static, the diagonal elements of the weight matrix in the at the -th iteration are random samples from . At the random initialization stage, suppose that there is some perturbation in the first layer. When this perturbation propagates to the -th layer, it admits the form

| (28) |

Therefore, we need to have , which makes depend exponentially on [40]. Moreover, at the training stage, the perturbation in the -th layer induced by the fading channel can disperse to the whole network, i.e., the averaged Frobenius norm

| (29) |

is not small for all .

In addition, large biases would be introduced by the channel noise when the SNR is low. Although these biases do not affect the weights directly, their influence can spread to the whole network through forward and backward propagation.

IV Decoder: Inference

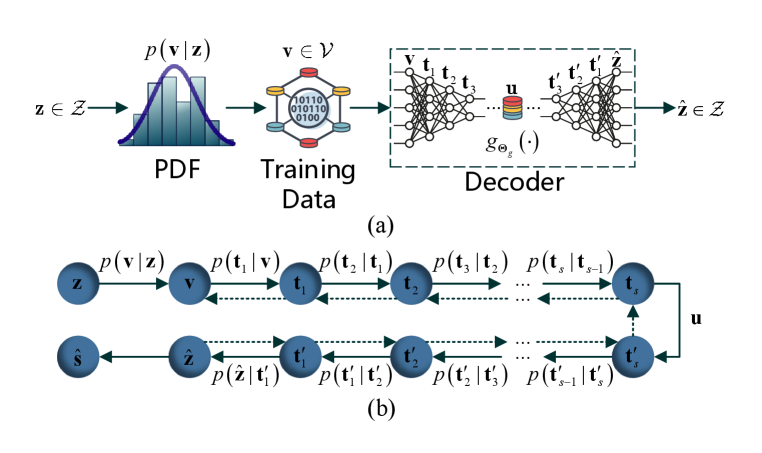

In this section, we zoom in on the lower right corner of Fig. 1 to investigate what happens inside the decoder (receiver). As Fig. 2(a) shows, DNN-based channel estimation can be formulated as an inference problem. For convenience, we denote the target output of the decoder as instead of , because we can assume that is a bijection. If the decoder is symmetric, it can also be seen as a sub-AE consisting of a sub-encoder and a sub-decoder. The codes at its bottleneck (middlemost) layer are denoted as . Here, we use to denote the CSI or the transmitted symbol that we wish to predict. The decoder infers a prediction from its corresponding measurable variable . This inference problem can be addressed by the following two methods.

IV-A Traditional Estimation Method

IV-A1 LS Estimator

Without loss of generality, we can consider this issue under the context of complex channel estimation. Let the measurable variable be

| (30) |

where denotes the LS estimation of its corresponding true channel responses , and is a vector of i.i.d. complex zero-mean Gaussian noise with variance . The noise is assumed to be uncorrelated with the channel . The corresponding MSE is

| (31) |
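The LS model of (30) can be checked with a short Monte Carlo sketch. It assumes per-subcarrier pilots y = h·x + n with unit-modulus QPSK pilots and CN(0, σ²) noise, so that h_LS = h + n/x and the per-entry MSE equals σ²/|x|² = σ². The pilot model, sample size, noise variance and function name are assumptions; the normalization used in (31) may differ.

```python
import numpy as np


def ls_estimate(y, x_pilot):
    # Per-subcarrier LS estimate for a diagonal pilot matrix: h_ls = X^{-1} y.
    return y / x_pilot


rng = np.random.default_rng(1)
n_sc, sigma2 = 100_000, 0.1                                          # hypothetical sample size and noise variance
h = (rng.standard_normal(n_sc) + 1j * rng.standard_normal(n_sc)) / np.sqrt(2)
x_p = np.exp(1j * np.pi / 4 * (2 * rng.integers(0, 4, n_sc) + 1))  # unit-modulus QPSK pilots
noise = np.sqrt(sigma2 / 2) * (rng.standard_normal(n_sc) + 1j * rng.standard_normal(n_sc))
h_ls = ls_estimate(h * x_p + noise, x_p)                             # h_ls = h + noise / x_p
mse_ls = np.mean(np.abs(h_ls - h) ** 2)                              # close to sigma2 / |x_p|^2 = sigma2
```

The LS estimate ignores the channel statistics entirely, so its error is simply the noise seen through the pilots.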

IV-A2 LMMSE Estimator

If the channel is stationary and subject to , its LMMSE estimate can be expressed as

| (32) |

where is the channel autocorrelation matrix and is a diagonal matrix containing the known transmitted signaling points [41, 42, 43]. The MSE of the LMMSE estimate is

| (33) | ||||

To perform (32), and are assumed to be known.
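To illustrate why the LMMSE estimator needs the channel autocorrelation matrix and the noise variance, the sketch below assumes the widely used form ĥ_LMMSE = R_hh (R_hh + σ² (X X^H)^{-1})^{-1} ĥ_LS and compares its Monte Carlo MSE with that of LS and with the theoretical value tr(R_hh − R_hh (R_hh + σ² I)^{-1} R_hh)/N for unit-modulus pilots. The exponential correlation model, the parameters and the function names are hypothetical.

```python
import numpy as np


def lmmse_from_ls(h_ls, r_hh, x_pilot, sigma2):
    # Assumed form: h_lmmse = R_hh (R_hh + sigma2 (X X^H)^{-1})^{-1} h_ls with X = diag(x_pilot).
    w = r_hh @ np.linalg.inv(r_hh + sigma2 * np.diag(1.0 / np.abs(x_pilot) ** 2))
    return w @ h_ls


def cn(rng, *shape):
    # Circularly symmetric complex Gaussian samples with unit variance.
    return (rng.standard_normal(shape) + 1j * rng.standard_normal(shape)) / np.sqrt(2)


rng = np.random.default_rng(2)
N, sigma2, rho, trials = 16, 0.5, 0.9, 2000
idx = np.arange(N)
r_hh = rho ** np.abs(idx[:, None] - idx[None, :])   # hypothetical exponential correlation model
chol = np.linalg.cholesky(r_hh)
x_p = np.ones(N, dtype=complex)                     # unit-modulus pilots

mse_ls = mse_lmmse = 0.0
for _ in range(trials):
    h = chol @ cn(rng, N)                           # h ~ CN(0, R_hh)
    h_ls = (h * x_p + np.sqrt(sigma2) * cn(rng, N)) / x_p
    mse_ls += np.mean(np.abs(h_ls - h) ** 2) / trials
    mse_lmmse += np.mean(np.abs(lmmse_from_ls(h_ls, r_hh, x_p, sigma2) - h) ** 2) / trials

# Theoretical LMMSE error for unit-modulus pilots: tr(R - R (R + sigma2 I)^{-1} R) / N.
mse_theory = np.trace(r_hh - r_hh @ np.linalg.solve(r_hh + sigma2 * np.eye(N), r_hh)) / N
```

The gain over LS comes entirely from exploiting R_hh and σ², which is why both must be known to perform (32).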

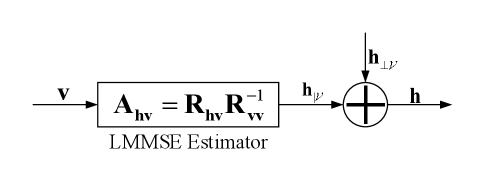

We define a complex space . Every element of is a finite-variance, zero-mean, proper complex Gaussian random variable, and every subset of is jointly Gaussian. The set of observed variables and its closure or the subspace generated by are denoted as and , respectively. Let be the estimation error where is a linear estimate of . By the projection and Pythagorean theorems, we have

| (34) |

with equality if and only if . For this reason, is called the LMMSE estimate of given , and is called the MMSE estimation error. Moreover, the orthogonality principle holds: is the LMMSE estimate of given if and only if is orthogonal to . Writing as a set of linear combinations of the elements of in matrix form, namely , we obtain a unique solution . Fig. 3 illustrates that can be decomposed into a linear estimate derived from and an independent error variable . Moreover, this block diagram implies that the MMSE estimate is a sufficient statistic for estimation of from , since is evidently a Markov chain; i.e., and are conditionally independent given . This is also known as the sufficiency property of the MMSE estimate [44].

By the sufficiency property, the MMSE estimate is a function of that satisfies the data processing inequality of information theory with equality: [Footnote 3: Note that no confusion should arise if we abuse the notation slightly by using a lower-case letter to denote a random variable.]. In other words, the reduction of to is information-lossless. Since is a linear Gaussian channel model with Gaussian input , Gaussian output , and independent additive Gaussian noise , we have

| (35) |

where

| (36) | ||||
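The information-lossless property can be checked numerically for a jointly proper complex Gaussian model. Assuming y = A x + n with x ~ CN(0, R_x) and n ~ CN(0, σ² I), the mutual information can be computed either from the channel, log det(I + A R_x A^H/σ²), or from the MMSE error covariance, log det(R_x) − log det(R_e); the two agree, consistent with the LMMSE estimate preserving all information about x. The model, the random matrices and the function names are assumptions, and the expressions in (35) and (36) may be written differently.

```python
import numpy as np


def mi_channel_form(a, r_x, sigma2):
    # I(x; y) = log det(I + A R_x A^H / sigma2) in nats, for y = A x + n.
    m = a.shape[0]
    return np.linalg.slogdet(np.eye(m) + a @ r_x @ a.conj().T / sigma2)[1]


def mi_estimation_form(a, r_x, sigma2):
    # Same quantity through the LMMSE error covariance: I = log det(R_x) - log det(R_e).
    r_y = a @ r_x @ a.conj().T + sigma2 * np.eye(a.shape[0])
    r_e = r_x - r_x @ a.conj().T @ np.linalg.solve(r_y, a @ r_x)
    return np.linalg.slogdet(r_x)[1] - np.linalg.slogdet(r_e)[1]


rng = np.random.default_rng(3)
b = rng.standard_normal((3, 3)) + 1j * rng.standard_normal((3, 3))
r_x = b @ b.conj().T + np.eye(3)                                    # hypothetical input covariance
a = rng.standard_normal((4, 3)) + 1j * rng.standard_normal((4, 3))  # hypothetical channel matrix
i_chan = mi_channel_form(a, r_x, 0.5)
i_est = mi_estimation_form(a, r_x, 0.5)
```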

IV-B DNN-based Estimation

Let be the hypothesis class associated with a -layer neural network of size with activation function . We assume that for all . More specifically, is the set of all functions . Given a random sample , we define

| (37) |

i.e., is the minimum empirical square loss attained by a -layer neural network of size . Mario Diaz et al. establish a probabilistic bound for the MMSE in estimating given , based on the 2-layer estimator and the Barron constant of the conditional expectation of given [45, Theorem 1]. We extend this probabilistic bound to explore the MSE performance of DNN-based estimators.

Assumption 1

Let and be a bounded set containing . If each entry of belongs to , is supported on , and the conditional expectation belongs to , the set of all functions , then, with probability at least ,

| (38) |

where

| (39) | ||||

Theorem 1

Under Assumption 1, for a linear estimation problem, given a limit , and a specific training set , if , and , then

| (40) |

Proof 1

According to Assumption 1, for a 2-layer neural network, with probability at least ,

| (41) |

If , the output of the trained 2-layer neural network is not a sufficient statistic for estimation of from . By the data processing inequality, we have

| (42) |

and

| (43) |

IV-C Information Flow in Neural Networks

If the joint probability distribution is known, the expected (population) risk can be written as

| (44) | ||||

where and denotes the Kullback-Leibler divergence between and [29] [Footnote 4: If and are continuous random variables, the sum becomes an integral when their PDFs exist.]. If and only if the decoder is given by the conditional posterior , the expected (population) risk reaches its minimum .
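For discrete variables, the decomposition in (44), expected log-loss = H(Y|X) + E_x[KL(p(·|x) ‖ q(·|x))], can be verified directly. The sketch below uses a hypothetical 3 × 3 joint distribution and a tabular decoder q; the values and function names are illustrative, and logarithms are natural (nats).

```python
import numpy as np

p_xy = np.array([[0.20, 0.05, 0.05],
                 [0.05, 0.25, 0.05],
                 [0.05, 0.05, 0.25]])   # hypothetical joint distribution p(x, y), rows = x
p_x = p_xy.sum(axis=1)
p_y_given_x = p_xy / p_x[:, None]


def expected_risk(q):
    # E_{(x, y) ~ p}[-log q(y | x)] for a tabular decoder q (rows sum to one).
    return -np.sum(p_xy * np.log(q))


def decomposed_risk(q):
    # H(Y | X) + E_x[ KL(p(.|x) || q(.|x)) ]
    cond_entropy = -np.sum(p_xy * np.log(p_y_given_x))
    kl = np.sum(p_y_given_x * np.log(p_y_given_x / q), axis=1)
    return cond_entropy + np.sum(p_x * kl)


q_uniform = np.full((3, 3), 1.0 / 3.0)  # a decoder that ignores x
```

The KL term vanishes only when q equals the true posterior, in which case the risk drops to H(Y|X).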

In physical layer communication, instead of perfectly knowing the channel-related joint probability distribution , we only have a set of i.i.d. samples from . In this case, the empirical risk is defined as

| (45) |

In practice, the sample set drawn from is usually finite. This leads to a difference between the empirical and expected (population) risks, which can be defined as

| (46) | ||||

We can now preliminarily conclude that the DNN-based receiver is an estimator with minimum empirical risk for a given set , whereas its performance is inferior to that of the optimal decoder, which attains the minimum expected (population) risk under a given joint probability distribution .
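The gap between empirical and expected risk can be sketched with the same kind of hypothetical joint distribution. Among all tabular decoders, the empirical conditional frequencies q̂ minimize the empirical log-loss on the sample, yet their expected risk cannot fall below that of the true posterior. The distribution, sample size and function names are assumptions.

```python
import numpy as np

p_xy = np.array([[0.20, 0.05, 0.05],
                 [0.05, 0.25, 0.05],
                 [0.05, 0.05, 0.25]])               # hypothetical joint distribution, rows = x
p_y_given_x = p_xy / p_xy.sum(axis=1, keepdims=True)


def empirical_risk(q, xs, ys):
    # Average log-loss of decoder q(y|x) over the finite sample.
    return -np.mean(np.log(q[xs, ys]))


def expected_risk(q):
    # Population log-loss under the true joint distribution.
    return -np.sum(p_xy * np.log(q))


rng = np.random.default_rng(5)
idx = rng.choice(p_xy.size, size=5000, p=p_xy.ravel())
xs, ys = np.divmod(idx, p_xy.shape[1])              # row / column indices of the sampled cells

counts = np.zeros_like(p_xy)
np.add.at(counts, (xs, ys), 1.0)
q_hat = counts / counts.sum(axis=1, keepdims=True)  # empirical-risk minimizer among tabular decoders

gap = expected_risk(q_hat) - empirical_risk(q_hat, xs, ys)   # generalization gap, cf. (46)
```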

Furthermore, it is necessary to quantitatively understand how information flows inside the decoder. Fig. 2(b) shows the graph representation of the decoder, where and denote the -th hidden-layer representations counted from the input layer and the output layer, respectively. Usually, Shannon entropy cannot be calculated directly, since the exact joint probability distribution of two variables is difficult to acquire. Therefore, we use the method proposed in [46] to illustrate layer-wise mutual information by three kinds of information planes (IPs), where Shannon entropy is estimated by the matrix-based functional of Rényi’s α-entropy [47]. The details are given in the Appendix.
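The matrix-based Rényi α-entropy can be sketched from a normalized Gram matrix A = K/tr(K): S_α(A) = log2(Σ_i λ_i(A)^α)/(1 − α), the joint entropy uses the trace-normalized Hadamard product of two Gram matrices, and the mutual information is S_α(A) + S_α(B) − S_α(A, B). The Gaussian kernel, its width σ, the choice α = 1.01 and the function names are assumptions for illustration.

```python
import numpy as np


def gram(x, sigma):
    # Gaussian-kernel Gram matrix of the samples (rows), normalized to unit trace.
    x = x.reshape(len(x), -1)
    d2 = np.sum((x[:, None, :] - x[None, :, :]) ** 2, axis=-1)
    k = np.exp(-d2 / (2.0 * sigma ** 2))
    return k / np.trace(k)   # K_ii = 1, so this equals K_ij / (n sqrt(K_ii K_jj))


def renyi_entropy(a, alpha=1.01):
    # S_alpha(A) = log2(sum_i lambda_i^alpha) / (1 - alpha); clip tiny negative eigenvalues.
    lam = np.clip(np.linalg.eigvalsh(a), 0.0, None)
    return np.log2(np.sum(lam ** alpha)) / (1.0 - alpha)


def joint_entropy(a, b, alpha=1.01):
    ab = a * b                                   # Hadamard product
    return renyi_entropy(ab / np.trace(ab), alpha)


def mutual_information(x, y, sigma_x, sigma_y, alpha=1.01):
    a, b = gram(x, sigma_x), gram(y, sigma_y)
    return renyi_entropy(a, alpha) + renyi_entropy(b, alpha) - joint_entropy(a, b, alpha)


sep = np.arange(8.0) * 10.0                      # 8 well-separated scalar samples
h_sep = renyi_entropy(gram(sep, 1.0))            # close to log2(8) = 3 bits
h_same = renyi_entropy(gram(np.zeros((8, 2)), 1.0))   # identical samples: close to 0 bits
```

In a layer-wise analysis, x and y would be replaced by the activations of two layers for the same batch of inputs, with kernel widths chosen per layer.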

V Simulation Results

In this section, we provide simulation results to illustrate the behaviour of DNN in physical layer communication.

V-A Constellation and Convergence of AE-based Communication System

V-A1 Gaussian Channel

TABLE I: Layout of the AEs

| Layer | Output dimensions |
|---|---|
| Input | |
| Dense+ReLU | |
| Dense+linear | |
| Normalization | |
| Channel | |
| Dense+ReLU | |
| Dense+softmax | |

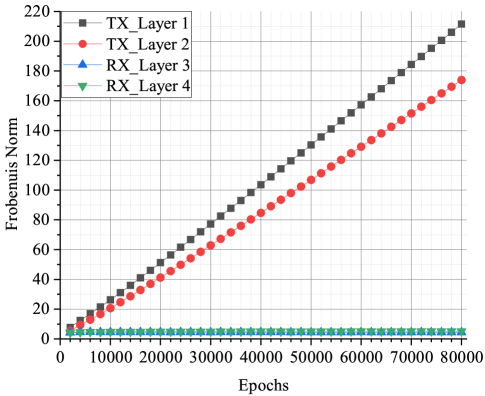

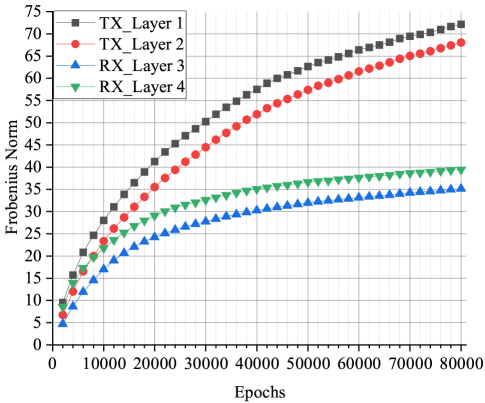

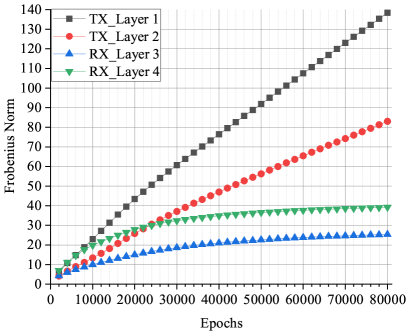

Fig. 4(a) and Fig. 4(b) visualize the Frobenius norm of each layer of an AE-based communication system for and versus epochs under the Gaussian channel. The layout of the AEs is given in Table I. When SNR = 0 dB, the Frobenius norms of the layers at the transmitter side increase with the epoch, while those at the receiver side remain small and do not change significantly. In contrast, when SNR = 25 dB, the Frobenius norms of all layers are close to convergence after epochs. This phenomenon can be explained by our analysis in Section III. If the SNR is low, large biases would be introduced into the channel layer by noise since . This leads to the exploding gradient problem at the transmitter side. At the receiver side, the Frobenius norm of each layer tends to be small to counter the large noise, but this has very little effect. From Fig. 5, it can be seen that all the signal points lie on a line, and two of them almost overlap. We conducted a number of simulations, and the constellations generated by the AE with different initial parameters all follow the same pattern: the constellation points lie on a straight line.

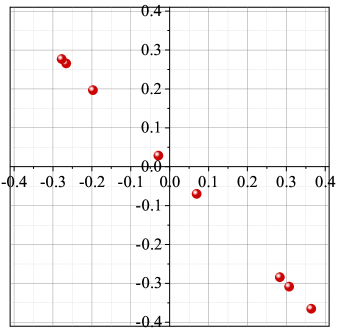

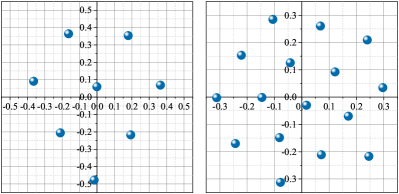

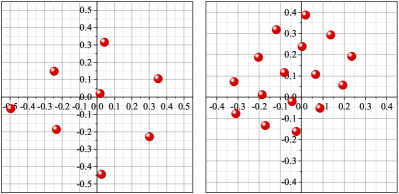

Fig. 6(a) and Fig. 7(a) show the optimum constellations obtained by the gradient-search technique proposed in [37]. For the settings of Fig. 6 and Fig. 7, the algorithm was run for 1000 and 3000 steps, respectively. Several random initial constellations (with initial points drawn uniformly over the unit disk) are used for each setting, and a fixed step size is used. Numerous local optima are found, many of which are merely rotations or other symmetric modifications of each other. Fig. 6(b) and Fig. 7(b) show the constellations produced by AEs that have the same network structures and hyperparameters as the AEs in [7] (see also TABLE I). The AEs were trained over a number of epochs, each containing many different symbols. Across several simulations, we find that the AE does not guarantee an optimum constellation, whereas the gradient-search technique finds an optimum with high probability.
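The gradient-search idea can be sketched as below. This is a hypothetical simplification, not a reproduction of the algorithm in [37]: it minimizes a union-bound-style error proxy, the sum over point pairs of exp(-d^2/(4 n0)), and the noise level n0, step size, and step count are illustrative assumptions. Initial points are drawn uniformly over the unit disk, as in the text, and the average power constraint is re-imposed after every step.

```python
import numpy as np

def normalize_power(points):
    """Rescale so that the average energy per signal point equals 1."""
    return points / np.sqrt(np.mean(np.sum(points ** 2, axis=1)))

def random_ball_points(m, dim, rng):
    """m points drawn uniformly over the unit disk (dim=2) or unit ball (dim=3)."""
    directions = rng.normal(size=(m, dim))
    directions /= np.linalg.norm(directions, axis=1, keepdims=True)
    radii = rng.uniform(size=(m, 1)) ** (1.0 / dim)
    return directions * radii

def gradient_search(init, n0, steps, step_size):
    """Hypothetical simplified gradient search on an error proxy.

    Proxy: P = sum_{i != j} exp(-||x_i - x_j||^2 / (4 n0)), an assumed stand-in for the
    symbol error probability. Each step moves all points along -grad P, then
    re-imposes the average power constraint.
    """
    pts = normalize_power(np.array(init, dtype=float))
    for _ in range(steps):
        diff = pts[:, None, :] - pts[None, :, :]        # x_i - x_j, shape (m, m, dim)
        w = np.exp(-np.sum(diff ** 2, axis=2) / (4.0 * n0))
        np.fill_diagonal(w, 0.0)                         # exclude i == j
        # -dP/dx_i = (1 / n0) * sum_j w_ij (x_i - x_j); the 1/n0 is absorbed in step_size.
        pts = normalize_power(pts + step_size * np.sum(w[:, :, None] * diff, axis=1))
    return pts

def min_distance(points):
    """Minimum Euclidean distance between distinct signal points."""
    d = np.linalg.norm(points[:, None, :] - points[None, :, :], axis=2)
    return float(d[np.triu_indices(len(points), k=1)].min())

rng = np.random.default_rng(1)
init = random_ball_points(8, 2, rng)
final = gradient_search(init, n0=0.25, steps=300, step_size=0.05)
print(min_distance(normalize_power(init)), min_distance(final))
```

With 8 points in two dimensions, the minimum distance grows from its random initial value as the points spread out; rerunning with different seeds typically lands on different, often rotated, configurations.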

In the two-dimensional case, the constellations produced by AEs have a pattern similar to that of the optimum constellations, which form an (almost) hexagonal lattice. Specifically, for one of the constellation sizes considered, one of the constellations found by the AE can be obtained by rotating the optimum constellation found by the gradient-search technique. For the other constellation size, the constellation found by the AE differs from the optimum constellation but still forms a lattice of (almost) equilateral triangles. In the three-dimensional case, one signal point of the optimum constellation found by the gradient-search technique is almost at the origin, while the other 15 signal points lie almost on the surface of a sphere centred at the origin. This pattern is similar to the surface of a truncated icosahedron, which is composed of pentagonal and hexagonal faces. However, the three-dimensional constellation produced by the AE is a local optimum formed by 16 signal points lying almost in a plane.

From the perspective of computational complexity, training an AE is significantly more expensive than running the traditional algorithm. Specifically, an AE with 4 dense layers must train the weights and biases of all four layers over many epochs, whereas the gradient-search algorithm only optimizes the coordinates of the signal points over a limited number of steps.
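The gap in trainable parameters can be made concrete with a short count. Because the layer widths of TABLE I are not reproduced here, the sketch assumes the layout of [7] (hidden dense layers of width M, channel layer of width n) with biases included; M = 16 and n = 2 are hypothetical example values.

```python
def dense_params(widths):
    """Trainable parameters (weights + biases) of a chain of dense layers."""
    return sum((n_in + 1) * n_out for n_in, n_out in zip(widths[:-1], widths[1:]))

def ae_params(m, n):
    """Assumed layout after [7]: transmitter M -> M -> n, receiver n -> M -> M."""
    return dense_params([m, m, n]) + dense_params([n, m, m])

def gradient_search_params(m, n):
    """Gradient search only optimizes the n coordinates of each of the m points."""
    return m * n

print(ae_params(16, 2), gradient_search_params(16, 2))  # prints: 626 32
```

Under these assumptions the AE updates 626 parameters in every epoch, against 32 point coordinates for gradient search.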

V-A2 Rayleigh Flat Fading Channel

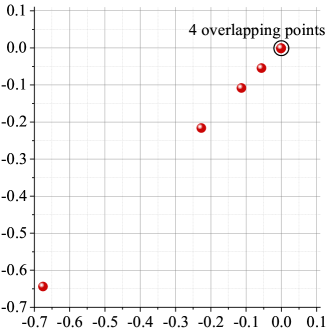

In the case of the Rayleigh flat fading channel, a multiplicative perturbation is introduced, since the transmitted signal is scaled by the fading coefficient before the noise is added. Fig. 8(a) illustrates that the exploding gradient problem occurs at the transmitter side under the Rayleigh flat fading channel with SNR = 25 dB, similar to the case of the low-SNR Gaussian channel. This means that fading prevents the AE from converging even when the noise is small. Fig. 8(b) illustrates the corresponding constellation produced by the AE. The receiver cannot correctly distinguish all the transmitted signals because 4 points overlap.
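The difference between the two channel layers can be sketched as follows. The sketch assumes complex baseband symbols with unit average energy, noise variance 1/SNR, and a fading coefficient h ~ CN(0, 1) drawn independently for each symbol; these are illustrative assumptions rather than the paper's exact simulation settings.

```python
import numpy as np

def channel(x, snr_db, rng, rayleigh=False):
    """Complex baseband channel layer y = h * x + w (returns y and h).

    Gaussian channel: h = 1. Rayleigh flat fading: h ~ CN(0, 1), drawn
    independently per symbol (assumption). w ~ CN(0, 1/SNR).
    """
    snr = 10.0 ** (snr_db / 10.0)
    w = np.sqrt(1.0 / (2.0 * snr)) * (rng.normal(size=x.shape) + 1j * rng.normal(size=x.shape))
    if rayleigh:
        h = np.sqrt(0.5) * (rng.normal(size=x.shape) + 1j * rng.normal(size=x.shape))
    else:
        h = np.ones_like(x)
    return h * x + w, h

rng = np.random.default_rng(2)
x = np.exp(1j * np.pi / 2 * rng.integers(0, 4, size=20000))   # unit-energy QPSK symbols
for fading in (False, True):
    y, h = channel(x, 25.0, rng, rayleigh=fading)
    print(fading, np.mean(np.abs(y - x) ** 2))
```

Even at 25 dB, the Rayleigh output differs from the transmitted symbol by about twice the symbol energy on average, because E|h - 1|^2 = 2; the distortion is dominated by the multiplicative term rather than by the additive noise.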

In summary, the structure of the AE-based communication system imposes strict requirements on the channel for training the network. First, it demands a high SNR. Second, the AE cannot work properly in fading channels. These requirements impede the implementation of AE-based communication systems in practical wireless scenarios.

V-B The Performance of DNN-based Estimation

We consider a common channel estimation problem for an OFDM system. Consider the channel frequency response (CFR) vector of a channel, whose length equals the number of subcarriers of an OFDM symbol. For convenience, we take the LS estimate of the CFR as the measurable variable; it is usually obtained by training symbol-based channel estimation. In practice, MMSE channel estimation is not chosen unless the covariance matrix of the fading channel is known.
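A minimal sketch of a pilot-based LS estimate with linear interpolation (the interpolation scheme also mentioned for Fig. 11) is given below. The function name and the pilot layout in the usage example are hypothetical. Interpolation is done separately on the real and imaginary parts, and `np.interp` holds the edge values constant outside the pilot range, so placing a pilot on the last subcarrier avoids extrapolation.

```python
import numpy as np

def ls_estimate(y_pilot, x_pilot, pilot_idx, n_sub):
    """LS CFR estimate at pilot subcarriers, linearly interpolated to all subcarriers.

    y_pilot, x_pilot: received and known transmitted pilot symbols.
    pilot_idx: sorted pilot subcarrier indices; n_sub: number of subcarriers.
    """
    h_p = y_pilot / x_pilot                 # LS at the pilots: H_p = Y_p / X_p
    k = np.arange(n_sub)
    # np.interp handles real arrays only, so interpolate real and imaginary parts separately.
    return np.interp(k, pilot_idx, h_p.real) + 1j * np.interp(k, pilot_idx, h_p.imag)

# Hypothetical pilot layout: every 8th subcarrier plus the last one.
pilot_idx = np.array([0, 8, 16, 24, 32, 40, 48, 56, 63])
```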

V-B1 MSE Performance

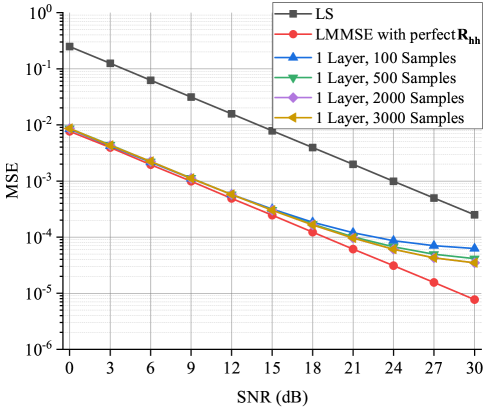

Fig. 9 compares the MSEs of the LS, LMMSE, and single hidden layer estimators versus SNR. The MSEs of the LS and LMMSE estimators are used as benchmarks. The MSE performance of the single hidden layer neural network improves as the size of the training set increases. Compared with the LS estimator, the single hidden layer neural network performs better even when the training set contains only 100 samples. However, its performance is inferior to that of the LMMSE estimator regardless of the size of the training set.
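The LS and LMMSE benchmarks can be illustrated with a short Monte Carlo sketch. The settings are assumptions, not the paper's: pilots on all 64 subcarriers with unit modulus (so the LS error variance equals the noise variance), an 8-tap exponential power-delay profile that defines the known covariance R_hh, and the LMMSE filter R_hh (R_hh + σ² I)^(-1) applied to the LS estimate.

```python
import numpy as np

def exp_pdp_covariance(n_sub, n_taps, decay):
    """Frequency-domain covariance R_hh = F diag(p) F^H for an exponential power-delay profile."""
    p = np.exp(-decay * np.arange(n_taps))
    p /= p.sum()                                                      # unit average channel gain
    f = np.exp(-2j * np.pi * np.outer(np.arange(n_sub), np.arange(n_taps)) / n_sub)
    return (f * p) @ f.conj().T, f, p

def compare_ls_lmmse(n_sub=64, n_taps=8, decay=0.5, snr_db=10.0, trials=2000, seed=0):
    """Return (MSE of LS, MSE of LMMSE) averaged over random channel realizations."""
    rng = np.random.default_rng(seed)
    r_hh, f, p = exp_pdp_covariance(n_sub, n_taps, decay)
    sigma2 = 10.0 ** (-snr_db / 10.0)
    w_lmmse = r_hh @ np.linalg.inv(r_hh + sigma2 * np.eye(n_sub))   # assumes |X| = 1 pilots
    err_ls = err_lmmse = 0.0
    for _ in range(trials):
        g = np.sqrt(p / 2) * (rng.normal(size=n_taps) + 1j * rng.normal(size=n_taps))
        h = f @ g                                                     # true CFR
        noise = np.sqrt(sigma2 / 2) * (rng.normal(size=n_sub) + 1j * rng.normal(size=n_sub))
        h_ls = h + noise                                              # LS estimate
        err_ls += np.mean(np.abs(h_ls - h) ** 2)
        err_lmmse += np.mean(np.abs(w_lmmse @ h_ls - h) ** 2)
    return err_ls / trials, err_lmmse / trials

print(compare_ls_lmmse())
```

With these settings the LS MSE sits at the noise variance (0.1 at 10 dB), while the LMMSE estimator, which exploits the low-rank channel covariance, is several times lower; this covariance is the prior knowledge that a data-driven estimator has to learn from its training set.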

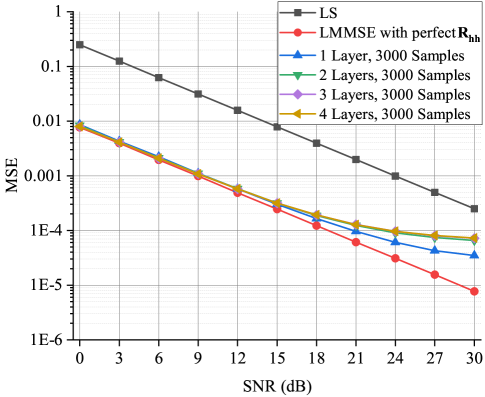

Fig. 10 compares the MSEs of neural network-based estimators with different numbers of hidden layers with those of the LS and LMMSE estimators. All of these neural networks have the same size. Given a limited training set, the MSE performance of the neural network-based estimator degrades as the number of hidden layers increases. This result agrees with Theorem 40.

V-B2 Information Flow

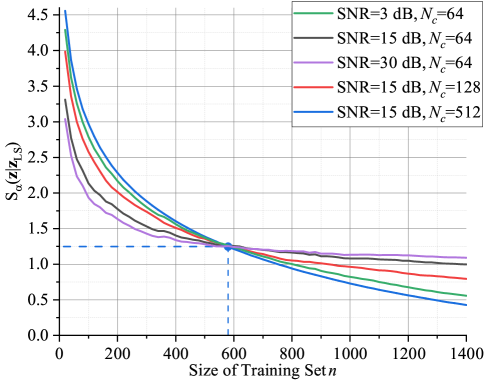

According to (44), the minimum logarithmic expected (population) risk for this inference problem is a conditional entropy, which can be estimated by the matrix-based Rényi’s α-entropy. Fig. 11 illustrates this entropy for different SNRs and input dimensions. To emulate a practical scenario, the LS estimates are obtained from a fixed number of pilots followed by linear interpolation. As can be seen, the entropy decreases monotonically as the size of the training set increases. Beyond a certain training-set size, it decreases only slowly, because the joint probability distribution can then be learned almost perfectly and the empirical risk approaches the expected risk. Interestingly, the lower the SNR or the larger the input dimension, the fewer training samples are needed to reach the same entropy value.

TABLE II: Layouts of the two NN-based OFDM channel estimators

| Input/Output dimension | Number of hidden layers | |
|---|---|---|
| 1 | 128 | 1 |
| 4 | 128 | 7 |

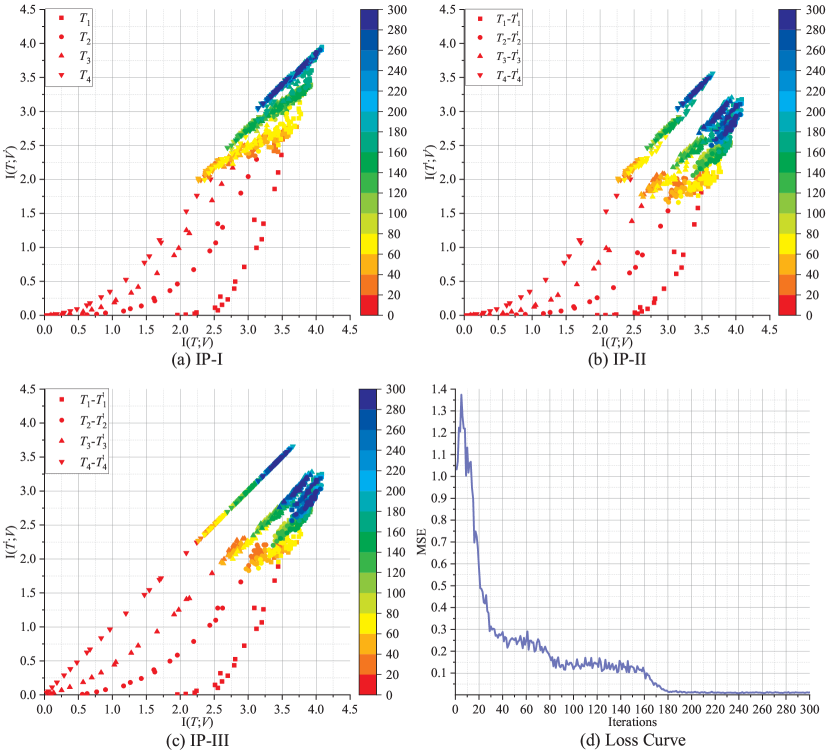

We analyze two NN-based OFDM channel estimators with different layouts (see TABLE II). Fig. 12(a), (b) and (c) illustrate the behavior of IP-I, IP-II and IP-III in the DNN-based OFDM channel estimator of TABLE II, where the linear activation function is used and each training sample is constructed by concatenating the real and imaginary parts of the complex channel vectors. The batch size is 100. Note that in our preliminary work [23], we found that the linear learning model may achieve better performance than other models; therefore, the linear activation function is chosen in this paper. The number of iterations is indicated by a color bar. From IP-I, it can be seen that the final value of the mutual information in each layer tends to equal that of the decoder output, which means that the information from the decoder input has been learnt by each layer and transferred to the output. IP-II shows the corresponding relation in every layer, which implies that none of the layers is overfitting. IP-III shows the same tendency of the layer-wise mutual information to approach its final value. Finally, all the IPs show that the mutual information does not change significantly once the number of iterations exceeds 200. Meanwhile, according to Fig. 12(d), the MSE reaches a very low value and does not change sharply either. This means that 200 iterations are sufficient for 64-subcarrier channel estimation using a DNN with the above-mentioned topology.
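The real-valued data construction described above, and the consequence of choosing linear activations, can be sketched as follows. Because a feedforward network with linear activations computes an affine map regardless of depth, the closed-form least-squares fit below is a lower bound on the training MSE such a network can reach; it stands in for, and is not, the mini-batch gradient training (batch size 100) used for Fig. 12. The function names and the synthetic data in the test are hypothetical.

```python
import numpy as np

def to_real(z):
    """Stack real and imaginary parts along the last axis: C^N -> R^(2N)."""
    return np.concatenate([z.real, z.imag], axis=-1)

def to_complex(v):
    """Inverse of to_real."""
    n = v.shape[-1] // 2
    return v[..., :n] + 1j * v[..., n:]

def fit_affine(h_ls, h_true):
    """Least-squares affine map from real-stacked LS estimates to real-stacked CFRs.

    Any linear-activation network of any depth computes an affine map, so this
    closed-form fit lower-bounds the training MSE such a network can reach.
    """
    x = np.hstack([to_real(h_ls), np.ones((h_ls.shape[0], 1))])    # append a bias column
    w, *_ = np.linalg.lstsq(x, to_real(h_true), rcond=None)
    return w

def apply_affine(w, h_ls):
    """Apply the fitted affine map and return complex CFR estimates."""
    x = np.hstack([to_real(h_ls), np.ones((h_ls.shape[0], 1))])
    return to_complex(x @ w)
```

Since the identity map (which returns the LS estimate unchanged) is one of the affine maps, the fitted estimator can never do worse than LS on its own training data.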

In [26], a single hidden layer feedforward neural network (SLFN)-based channel estimation and equalization scheme shows outstanding performance compared with the DNN-based scheme. However, it is still unknown whether an SLFN can completely learn the channel structural information from the training set and then transfer this information from the input layer to the output layer. Fig. 13(a), (b) and (c) illustrate the behavior of IP-I, IP-II, and IP-III in the SLFN-based OFDM channel estimator of TABLE II, whose other hyperparameters are the same as those of the DNN. In this case, IP-I and IP-II are identical since, with only one hidden layer, the hidden representation indexed from the input layer coincides with that indexed from the output layer. From IP-I, once the number of iterations exceeds 50, the mutual information of the hidden layer tends to equal that of the output. Its final value is approximately 3.5, nearly the same as the corresponding final value obtained with the DNN. Correspondingly, the MSE does not change significantly. Furthermore, comparing Fig. 13(d) with Fig. 12(d), the MSE decreases more rapidly and smoothly. These results indicate that the SLFN with 128 hidden neurons is able to learn the same information from the training set as the DNN, and that its learning speed and quality are better than those of the DNN.

VI Conclusion

In this paper, we propose a framework to understand the behaviour of DNNs in physical layer communication. We find that a DNN-based transmitter essentially tries to produce a good representation of the information source. In terms of convergence, the AE has specific requirements on the wireless channel, i.e., the channel should be AWGN with high SNR. We then quantitatively analyze the MSE performance of neural network-based estimators and the information flow in neural network-based communication systems. The analysis reveals that in the practical scenario, i.e., given limited training samples, a neural network with more layers may have inferior MSE performance compared with a shallow one. For the task of inference (e.g., channel estimation), we verify that the decoder can learn the information from a training set, and that the shallow neural network with a single hidden layer has advantages over the DNN in learning speed and quality.

We believe that this framework has the potential to guide the design of DNN-based physical layer communication. Specifically, the theoretical analysis shows that an end-to-end neural network-based communication system places high requirements on the channel, so its practical range of application is limited. Therefore, it is more suitable to deploy neural networks at the receiver. With limited training samples, a neural network with a single hidden layer can achieve the best MSE performance among the neural network-based estimators, and therefore an SLFN can be deployed in a receiver for channel estimation. Furthermore, the size of the training set and the dimension of a DNN can be determined by the proposed framework.

In the future, the limitations of DNNs under fading channels should be addressed. It would be interesting to use deep reinforcement learning techniques to design waveform parameters for a transmitter instead of entirely replacing it with a DNN.

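Appendix A Matrix-based Functional of Rényi’s α-Entropy

For a random variable $X$ in a finite set $\mathcal{X}$, its Rényi’s entropy of order $\alpha$ is defined as

$$H_\alpha(X)=\frac{1}{1-\alpha}\log\int_{\mathcal{X}} f^{\alpha}(x)\,dx, \tag{47}$$

where $f(x)$ is the PDF of the random variable $X$. Let $\{x_i\}_{i=1}^{n}$ be an i.i.d. sample of $n$ realizations from the random variable $X$ with PDF $f(x)$. The Gram matrix can be defined as $K=[\kappa(x_i,x_j)]_{n\times n}$, where $\kappa:\mathcal{X}\times\mathcal{X}\mapsto\mathbb{R}$ is a real-valued positive definite and infinitely divisible kernel. Then, a matrix-based analogue to Rényi’s α-entropy for a normalized positive definite matrix $A$ of size $n\times n$ with trace 1 can be given by the functional

$$S_\alpha(A)=\frac{1}{1-\alpha}\log_2\left(\operatorname{tr}(A^{\alpha})\right)=\frac{1}{1-\alpha}\log_2\left[\sum_{i=1}^{n}\lambda_i(A)^{\alpha}\right], \tag{48}$$

where $\lambda_i(A)$ denotes the $i$-th eigenvalue of $A$, a normalized version of $K$:

$$A_{ij}=\frac{1}{n}\,\frac{K_{ij}}{\sqrt{K_{ii}K_{jj}}}. \tag{49}$$

Now, the joint entropy can be defined as

$$S_\alpha(A_X,A_Y)=S_\alpha\!\left(\frac{A_X\circ A_Y}{\operatorname{tr}(A_X\circ A_Y)}\right), \tag{50}$$

where $\circ$ denotes the Hadamard product. Finally, the matrix notion of Rényi’s mutual information can be defined as

$$I_\alpha(A_X;A_Y)=S_\alpha(A_X)+S_\alpha(A_Y)-S_\alpha(A_X,A_Y). \tag{51}$$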
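Equations (48) to (51) translate almost directly into NumPy. The sketch below assumes a Gaussian kernel (which is infinitely divisible) with a user-chosen width `sigma` and an order alpha different from 1; the kernel width and α used in the paper are not given here, so alpha = 1.01 in the usage lines is only an example. Eigenvalues are clipped at zero to absorb round-off.

```python
import numpy as np

def gram_matrix(x, sigma):
    """Gaussian-kernel Gram matrix K_ij = exp(-||x_i - x_j||^2 / (2 sigma^2))."""
    x = np.asarray(x, dtype=float).reshape(len(x), -1)
    sq = np.sum(x ** 2, axis=1)
    d2 = np.maximum(sq[:, None] + sq[None, :] - 2.0 * x @ x.T, 0.0)
    return np.exp(-d2 / (2.0 * sigma ** 2))

def normalize(k):
    """Eq. (49): A_ij = K_ij / (n sqrt(K_ii K_jj)), so that tr(A) = 1."""
    d = np.sqrt(np.diag(k))
    return k / np.outer(d, d) / k.shape[0]

def renyi_entropy(a, alpha):
    """Eq. (48): S_alpha(A) = log2(sum_i lambda_i(A)^alpha) / (1 - alpha)."""
    lam = np.clip(np.linalg.eigvalsh(a), 0.0, None)   # drop tiny negative round-off
    return float(np.log2(np.sum(lam ** alpha)) / (1.0 - alpha))

def joint_entropy(a_x, a_y, alpha):
    """Eq. (50): entropy of the trace-normalized Hadamard product."""
    h = a_x * a_y
    return renyi_entropy(h / np.trace(h), alpha)

def mutual_information(a_x, a_y, alpha):
    """Eq. (51): I_alpha = S_alpha(A_X) + S_alpha(A_Y) - S_alpha(A_X, A_Y)."""
    return renyi_entropy(a_x, alpha) + renyi_entropy(a_y, alpha) - joint_entropy(a_x, a_y, alpha)

rng = np.random.default_rng(4)
x = rng.normal(size=(100, 2))
a_x = normalize(gram_matrix(x, 1.0))
print(renyi_entropy(a_x, 1.01), mutual_information(a_x, a_x, 1.01))
```

For an information plane, one could build `a_x` from a batch of decoder inputs and `a_y` from one layer's activations on the same batch, then track `mutual_information` over training iterations.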

References

- [1] J. Liu, H. Zhao, D. Ma, K. Mei, and J. Wei, “Opening the black box of deep neural networks in physical layer communication,” in 2022 IEEE Wireless Communications and Networking Conference (WCNC), 2022, pp. 435–440.

- [2] C. E. Shannon, “A mathematical theory of communication,” The Bell System Technical Journal, vol. 27, no. 3, pp. 379–423, 1948.

- [3] A. W. Senior, R. Evans, J. Jumper, J. Kirkpatrick, L. Sifre, T. Green, C. Qin, A. Žídek, A. W. Nelson, A. Bridgland et al., “Improved protein structure prediction using potentials from deep learning,” Nature, vol. 577, no. 7792, pp. 706–710, 2020.

- [4] K. He, X. Zhang, S. Ren, and J. Sun, “Deep residual learning for image recognition,” in 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2016, pp. 770–778.

- [5] O. Abdel-Hamid, A.-r. Mohamed, H. Jiang, L. Deng, G. Penn, and D. Yu, “Convolutional neural networks for speech recognition,” IEEE/ACM Transactions on Audio, Speech, and Language Processing, vol. 22, no. 10, pp. 1533–1545, 2014.

- [6] J. Hirschberg and C. D. Manning, “Advances in natural language processing,” Science, vol. 349, no. 6245, pp. 261–266, 2015.

- [7] T. O’Shea and J. Hoydis, “An introduction to deep learning for the physical layer,” IEEE Transactions on Cognitive Communications and Networking, vol. 3, no. 4, pp. 563–575, 2017.

- [8] T. J. O’Shea, T. Roy, N. West, and B. C. Hilburn, “Physical layer communications system design over-the-air using adversarial networks,” in 2018 26th European Signal Processing Conference (EUSIPCO). IEEE, 2018, pp. 529–532.

- [9] B. Zhu, J. Wang, L. He, and J. Song, “Joint transceiver optimization for wireless communication PHY using neural network,” IEEE Journal on Selected Areas in Communications, vol. 37, no. 6, pp. 1364–1373, 2019.

- [10] M. E. Morocho-Cayamcela, J. N. Njoku, J. Park, and W. Lim, “Learning to communicate with autoencoders: Rethinking wireless systems with deep learning,” in 2020 International Conference on Artificial Intelligence in Information and Communication (ICAIIC), 2020, pp. 308–311.

- [11] A. Felix, S. Cammerer, S. Dörner, J. Hoydis, and S. T. Brink, “OFDM-autoencoder for end-to-end learning of communications systems,” in 2018 IEEE 19th International Workshop on Signal Processing Advances in Wireless Communications (SPAWC). IEEE, 2018, pp. 1–5.

- [12] T. J. O’Shea, T. Erpek, and T. C. Clancy, “Physical layer deep learning of encodings for the MIMO fading channel,” in 2017 55th Annual Allerton Conference on Communication, Control, and Computing (Allerton), 2017, pp. 76–80.

- [13] J. Liu, K. Mei, X. Zhang, D. McLernon, D. Ma, J. Wei, and S. A. R. Zaidi, “Fine timing and frequency synchronization for MIMO-OFDM: An extreme learning approach,” IEEE Transactions on Cognitive Communications and Networking, pp. 1–1, 2021.

- [14] F. A. Aoudia and J. Hoydis, “Model-free training of end-to-end communication systems,” IEEE Journal on Selected Areas in Communications, vol. 37, no. 11, pp. 2503–2516, 2019.

- [15] V. Raj and S. Kalyani, “Backpropagating through the air: Deep learning at physical layer without channel models,” IEEE Communications Letters, vol. 22, no. 11, pp. 2278–2281, 2018.

- [16] Z. Qin, H. Ye, G. Y. Li, and B.-H. F. Juang, “Deep learning in physical layer communications,” IEEE Wireless Communications, vol. 26, no. 2, pp. 93–99, 2019.

- [17] C.-K. Wen, W.-T. Shih, and S. Jin, “Deep learning for massive MIMO CSI feedback,” IEEE Wireless Communications Letters, vol. 7, no. 5, pp. 748–751, 2018.

- [18] T. Wang, C.-K. Wen, S. Jin, and G. Y. Li, “Deep learning-based CSI feedback approach for time-varying massive MIMO channels,” IEEE Wireless Communications Letters, vol. 8, no. 2, pp. 416–419, 2018.

- [19] L. Li, H. Chen, H.-H. Chang, and L. Liu, “Deep residual learning meets OFDM channel estimation,” IEEE Wireless Communications Letters, vol. 9, no. 5, pp. 615–618, 2019.

- [20] J. Zhang, Y. Cao, G. Han, and X. Fu, “Deep neural network-based underwater OFDM receiver,” IET Communications, vol. 13, no. 13, pp. 1998–2002, 2019.

- [21] Y. Yang, F. Gao, X. Ma, and S. Zhang, “Deep learning-based channel estimation for doubly selective fading channels,” IEEE Access, vol. 7, pp. 36 579–36 589, 2019.

- [22] E. Balevi and J. G. Andrews, “One-bit OFDM receivers via deep learning,” IEEE Transactions on Communications, vol. 67, no. 6, pp. 4326–4336, 2019.

- [23] K. Mei, J. Liu, X. Zhang, K. Cao, N. Rajatheva, and J. Wei, “A low complexity learning-based channel estimation for OFDM systems with online training,” IEEE Transactions on Communications, vol. 69, no. 10, pp. 6722–6733, 2021.

- [24] L. V. Nguyen, A. L. Swindlehurst, and D. H. N. Nguyen, “Linear and deep neural network-based receivers for massive MIMO systems with one-bit ADCs,” IEEE Transactions on Wireless Communications, vol. 20, no. 11, pp. 7333–7345, 2021.

- [25] H. He, S. Jin, C.-K. Wen, F. Gao, G. Y. Li, and Z. Xu, “Model-driven deep learning for physical layer communications,” IEEE Wireless Communications, vol. 26, no. 5, pp. 77–83, 2019.

- [26] J. Liu, K. Mei, X. Zhang, D. Ma, and J. Wei, “Online extreme learning machine-based channel estimation and equalization for OFDM systems,” IEEE Communications Letters, vol. 23, no. 7, pp. 1276–1279, 2019.

- [27] N. Tishby, F. C. Pereira, and W. Bialek, “The information bottleneck method,” arXiv preprint physics/0004057, 2000.

- [28] N. Tishby and N. Zaslavsky, “Deep learning and the information bottleneck principle,” in 2015 IEEE Information Theory Workshop (ITW), 2015, pp. 1–5.

- [29] A. Zaidi, I. Estella-Aguerri, and S. Shamai (Shitz), “On the information bottleneck problems: Models, connections, applications and information theoretic views,” Entropy, vol. 22, no. 2, p. 151, 2020.

- [30] I. E. Aguerri and A. Zaidi, “Distributed variational representation learning,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 43, no. 1, pp. 120–138, 2021.

- [31] H. Xu, T. Yang, G. Caire, and S. Shamai (Shitz), “Information bottleneck for a Rayleigh fading MIMO channel with an oblivious relay,” Information, vol. 12, no. 4, 2021. [Online]. Available: https://www.mdpi.com/2078-2489/12/4/155

- [32] F. Liao, S. Wei, and S. Zou, “Deep learning methods in communication systems: A review,” in Journal of Physics: Conference Series, vol. 1617, no. 1. IOP Publishing, 2020, p. 012024.

- [33] B. Sklar and P. K. Ray, Digital Communications Fundamentals and Applications. Pearson Education, 2014.

- [34] S. Yu, M. Emigh, E. Santana, and J. C. Príncipe, “Autoencoders trained with relevant information: Blending Shannon and Wiener’s perspectives,” in 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). IEEE, 2017, pp. 6115–6119.

- [35] J. Boutros, E. Viterbo, C. Rastello, and J.-C. Belfiore, “Good lattice constellations for both Rayleigh fading and Gaussian channels,” IEEE Transactions on Information Theory, vol. 42, no. 2, pp. 502–518, 1996.

- [36] G. C. Jorge, A. A. de Andrade, S. I. Costa, and J. E. Strapasson, “Algebraic constructions of densest lattices,” Journal of Algebra, vol. 429, pp. 218–235, 2015.

- [37] G. Foschini, R. Gitlin, and S. Weinstein, “Optimization of two-dimensional signal constellations in the presence of Gaussian noise,” IEEE Transactions on Communications, vol. 22, no. 1, pp. 28–38, 1974.

- [38] C. Rudin and J. Radin, “Why are we using black box models in AI when we don’t need to? A lesson from an explainable AI competition,” Harvard Data Science Review, vol. 1, no. 2, Nov. 2019. [Online]. Available: https://hdsr.mitpress.mit.edu/pub/f9kuryi8

- [39] S. S. Du, X. Zhai, B. Poczos, and A. Singh, “Gradient descent provably optimizes over-parameterized neural networks,” arXiv preprint arXiv:1810.02054, 2018.

- [40] S. Du, J. Lee, H. Li, L. Wang, and X. Zhai, “Gradient descent finds global minima of deep neural networks,” in Proceedings of the 36th International Conference on Machine Learning, ser. Proceedings of Machine Learning Research, K. Chaudhuri and R. Salakhutdinov, Eds., vol. 97. PMLR, 09–15 Jun 2019, pp. 1675–1685. [Online]. Available: https://proceedings.mlr.press/v97/du19c.html

- [41] J.-J. van de Beek, O. Edfors, M. Sandell, S. Wilson, and P. Borjesson, “On channel estimation in OFDM systems,” in 1995 IEEE 45th Vehicular Technology Conference. Countdown to the Wireless Twenty-First Century, vol. 2, 1995, pp. 815–819.

- [42] O. Edfors, M. Sandell, J.-J. van de Beek, S. Wilson, and P. Borjesson, “OFDM channel estimation by singular value decomposition,” IEEE Transactions on Communications, vol. 46, no. 7, pp. 931–939, 1998.

- [43] K. Mei, J. Liu, X. Zhang, N. Rajatheva, and J. Wei, “Performance analysis on machine learning-based channel estimation,” IEEE Transactions on Communications, vol. 69, no. 8, pp. 5183–5193, 2021.

- [44] G. D. Forney Jr, “Shannon meets Wiener II: On MMSE estimation in successive decoding schemes,” arXiv preprint cs/0409011, 2004.

- [45] M. Diaz, P. Kairouz, and L. Sankar, “Lower bounds for the minimum mean-square error via neural network-based estimation,” arXiv preprint arXiv:2108.12851, 2021.

- [46] S. Yu and J. C. Príncipe, “Understanding autoencoders with information theoretic concepts,” Neural Networks, vol. 117, pp. 104–123, 2019.

- [47] L. G. S. Giraldo, M. Rao, and J. C. Príncipe, “Measures of entropy from data using infinitely divisible kernels,” IEEE Transactions on Information Theory, vol. 61, no. 1, pp. 535–548, 2014.


Jun Liu received the B.S. degree in optical information science and technology from the South China University of Technology (SCUT), Guangzhou, China, in 2015, and the M.E. degree in communications and information engineering from the National University of Defense Technology (NUDT), Changsha, China, in 2017, where he is currently pursuing the Ph.D. degree with the Department of Cognitive Communications. He was a visiting Ph.D. student with the University of Leeds from 2019 to 2020. His current research interests include machine learning with a focus on shallow neural networks applications, signal processing for broadband wireless communication systems, multiple antenna techniques, and wireless channel modeling.


Haitao Zhao (Senior Member, IEEE) received his B.E., M.Sc. and Ph.D. degrees, all from the National University of Defense Technology (NUDT), P. R. China, in 2002, 2004 and 2009, respectively. He is currently a professor in the Department of Cognitive Communications, College of Electronic Science and Technology, NUDT. Prior to this, he visited the Institute of ECIT, Queen’s University of Belfast, UK, and Hong Kong Baptist University. His main research interests include wireless communications, cognitive radio networks and self-organized networks. He has served as a TPC member of IEEE ICC’14-22 and Globecom’16-22, and as a guest editor for IEEE Communications Magazine. He is a senior member of IEEE.


Dongtang Ma (SM’13) received the B.S. degree in applied physics and the M.S. and Ph.D. degrees in information and communication engineering from the National University of Defense Technology (NUDT), Changsha, China, in 1990, 1997, and 2004, respectively. From 2004 to 2009, he was an Associate Professor with the College of Electronic Science and Engineering, NUDT. Since 2009, he has been a professor with the Department of Cognitive Communication, School of Electronic Science and Engineering, NUDT. From Aug. 2012 to Feb. 2013, he was a visiting professor at the University of Surrey, UK. His research interests include wireless communication and networks, physical layer security, and intelligent communication and networks. He has published more than 150 journal and conference papers. He is one of the Executive Directors of the Hunan Electronic Institute. He served as a TPC member of PIMRC from 2012 to 2020.


Kai Mei received the master’s degree from the National University of Defense Technology, in 2017, where he is currently pursuing the Ph.D. degree. His research interests include synchronization and channel estimation in OFDM systems and MIMO-OFDM systems, and machine learning applications in wireless communications.


Jibo Wei (Member, IEEE) received the B.S. and M.S. degrees from the National University of Defense Technology (NUDT), Changsha, China, in 1989 and 1992, respectively, and the Ph.D. degree from Southeast University, Nanjing, China, in 1998, all in electronic engineering. He is currently the Director and a Professor of the Department of Communication Engineering, NUDT. His research interests include wireless network protocols and signal processing in communications, more specifically, the areas of MIMO, multicarrier transmission, cooperative communication, and cognitive networks. He is a member of the IEEE Communications Society and of the IEEE VTS. He also serves as one of the editors of the Journal on Communications and is a Senior Member of the China Institute of Communications and Electronics.