Time-Aware Knowledge Representations of

Dynamic Objects with Multidimensional Persistence

Abstract

Learning time-evolving objects such as multivariate time series and dynamic networks requires the development of novel knowledge representation mechanisms and neural network architectures, which allow for capturing implicit time-dependent information contained in the data. Such information is typically not directly observed but plays a key role in the learning task performance. In turn, lack of time dimension in knowledge encoding mechanisms for time-dependent data leads to frequent model updates, poor learning performance, and, as a result, subpar decision-making. Here we propose a new approach to a time-aware knowledge representation mechanism that notably focuses on implicit time-dependent topological information along multiple geometric dimensions. In particular, we propose a new approach, named Temporal MultiPersistence (TMP), which produces multidimensional topological fingerprints of the data by using the existing single parameter topological summaries. The main idea behind TMP is to merge the two newest directions in topological representation learning, that is, multi-persistence which simultaneously describes data shape evolution along multiple key parameters, and zigzag persistence to enable us to extract the most salient data shape information over time. We derive theoretical guarantees of TMP vectorizations and show its utility, in application to forecasting on benchmark traffic flow, Ethereum blockchain, and electrocardiogram datasets, demonstrating the competitive performance, especially, in scenarios of limited data records. In addition, our TMP method improves the computational efficiency of the state-of-the-art multipersistence summaries up to 59.5 times.

1 Introduction

Over the last decade, the field of topological data analysis (TDA) has demonstrated its effectiveness in revealing concealed patterns within diverse types of data that conventional methods struggle to access. Notably, in cases where conventional approaches frequently falter, tools such as persistent homology (PH) within TDA have showcased remarkable capabilities in identifying both localized and overarching patterns. These tools have the potential to generate a distinctive topological signature, a trait that holds great promise for a range of ML applications. This inherent capacity of PH becomes particularly appealing for capturing implicit temporal traits of evolving data, which might hold the crucial insights underlying the performance of learning tasks.

In turn, the concept of multiparameter persistence (MP) introduces a groundbreaking dimension to machine learning by enhancing the capabilities of persistent homology. Its objective is to analyze data across multiple dimensions concurrently, in a more nuanced manner. However, due to the complex algebraic challenges intrinsic to its framework, MP has yet to be universally defined in all contexts (Botnan and Lesnick 2022; Carrière and Blumberg 2020).

In response, we present a novel approach designed to effectively harness MP homology for the dual purposes of time-aware learning and the representation of time-dependent data. Specifically, the temporal parameter within time-dependent data furnishes the crucial dimension necessary for the application of the slicing concept within the MP framework. Our method yields a distinctive topological MP signature for the provided time-dependent data, manifested as multidimensional vectors (matrices or tensors). These vectors are highly compatible with ML applications. Notably, our findings possess broad applicability and can be tailored to various forms of PH vectorization, rendering them suitable for diverse categories of time-dependent data.

Our key contributions can be summarized as follows:

-

•

We bring a new perspective to use TDA for time-dependent data by using multipersistence approach.

-

•

We introduce TMP vectorizations framework which provides a multidimensional topological fingerprint of the data. TMP framework expands many known single persistence vectorizations to multidimensions by utilizing time dimension effectively in PH machinery.

-

•

The versatility of our TMP framework allows its application to diverse types of time-dependent data. Furthermore, we show that TMP enjoys many important stability guarantees as most single persistence summaries.

-

•

Rooted in computational linear algebra, TMP vectorizations generate multidimensional arrays (i.e., matrices or tensors) which serve as compatible inputs for various ML models. Notably, our proposed TMP approach boasts a speed advantage, performing up to 59.5 times faster than the cutting-edge MP methods.

-

•

Through successful integration of the latest TDA techniques with deep learning tools, our TMP-Nets model consistently and cohesively outperforms the majority of state-of-the-art deep learning models.

2 Related Work

2.1 Time Series Forecasting

Recurrent Neural Networks (RNNs) are the most successful deep learning techniques to model datasets with time-dependent variables (Lipton, Berkowitz, and Elkan 2015). Long-Short-Term Memory networks (LSTMs) addressed the prior RNN limitations in learning long-term dependencies by solving known issues with exploding and vanishing gradients (Yu et al. 2019), serving as basis for other improved RNN, such as Gate Recurrent Units (GRUs) (Dey and Salem 2017), Bidirectional LSTMs (BI_LSTM) (Wang, Yang, and Meinel 2018), and seq2seq LSTMs (Sutskever, Vinyals, and Le 2014). Despite the widespread adoption of RNNs in multiple applications (Xiang, Yan, and Demir 2020; Schmidhuber 2017; Shin and Kim 2020; Shewalkar, Nyavanandi, and Ludwig 2019; Segovia-Dominguez et al. 2021; Bin et al. 2018), RNNs are limited by the structure of the input data and can not naturally handle data-structures from manifolds and graphs, i.e. non-Euclidean spaces.

2.2 Graph Convolutional Networks

New methods on graph convolution-based methods overcome prior limitations of traditional GCN approaches, e.g. learning underlying local and global connectivity patterns (Veličković et al. 2018; Defferrard, Bresson, and Vandergheynst 2016; Kipf and Welling 2017). GCN handles graph-structure data via aggregation of node information from the neighborhoods using graph filters. Lately, there is an increasing interest in expanding GCN capabilities to the time series forecasting domain. In this context, modern approaches have reached outstanding results in COVID-19 forecasting, money laundering, transportation forecasting, and scene recognition (Pareja et al. 2020; Segovia Dominguez et al. 2021; Yu, Yin, and Zhu 2018; Yan, Xiong, and Lin 2018; Guo et al. 2019; Weber et al. 2019; Yao et al. 2018). However, a major drawback of these approaches is the lack of versatility as they assume a fixed graph-structure and rely on the existing correlation among spatial and temporal features.

2.3 Multiparameter Persistence

Multipersistence (MP) is a highly promising approach to significantly improve the success of single parameter persistence (SP) in applied TDA, but the theory is not complete yet (Botnan and Lesnick 2022). Except for some special cases, the MP theory tends to suffer from the problem of the nonexistence of barcode decomposition because of the partially ordered structure of the index set . The existing approaches remedy this issue via the slicing technique by studying one-dimensional fibers of the multiparameter domain. However, this approach tends to lose most of the information the MP approach produces. Another idea along these lines is to use several such directions (vineyards), and produce a vectorization summarizing these SP vectorizations (Carrière and Blumberg 2020). However, again choosing these directions suitably and computing restricted SP vectorizations are computationally costly which restricts these approaches in many real-life applications. There are several promising recent studies in this direction (Botnan, Oppermann, and Oudot 2022; Vipond 2020), but these techniques often do not provide a topological summary that can readily serve as input to ML models. In this paper, we develop a highly efficient way to use the MP approach for time-dependent data and provide a multidimensional topological summary with TMP Vectorizations. We discuss the current fundamental challenges in the MP theory and the contributions of our TMP vectorizations in Section D.2.

3 Background

We start by providing the basic background for our machinery. While our techniques are applicable to any type of time-dependent data, here we mainly focus on the dynamic networks since our primary motivation comes from time-aware learning of time-evolving graphs as well as time series and spatio-temporal processes, also represented as graph structures. (For discussion on other types of data see Section D.3.)

Notation Table: All the notations used in the paper are given in Table 12 in the appendix.

Time-Dependent Data: Throughout the paper, by time-dependent data, we mean the data which implicitly or explicitly has time information embedded in itself. Such data include but are not limited to multivariate time series, spatio-temporal processes, and dynamic networks. Since our paper is primarily motivated by time-aware graph neural networks and their broader applications to forecasting, we focus on dynamic networks. Let be a sequence of weighted graphs for time steps . In particular, } with node set , and edge set . Let be the cardinality of the node set. represents the edge weights for as a nonnegative symmetric -matrix with entries , i.e. the adjacency matrix of . In other words, for any and , otherwise. In the case of unweighted networks, let for any and , otherwise.

3.1 Background on Persistent Homology

Persistent homology (PH) is a mathematical machinery to capture the hidden shape patterns in the data by using algebraic topology tools. PH extracts this information by keeping track of the evolution of the topological features (components, loops, cavities) created in the data while looking at it using different resolutions. Here, we give basic background for PH in the graph setting. For further details, see (Dey and Wang 2022; Edelsbrunner and Harer 2010).

For a given graph , consider a nested sequence of subgraphs . For each , define an abstract simplicial complex , , yielding a filtration of complexes . Here, clique complexes are among the most common ones, i.e., clique complex is obtained by assigning (filling with) a -simplex to each complete -complete subgraph in , e.g., a -clique, a complete -subgraph, in will be filled with a -simplex (triangle). Then, in this sequence of simplicial complexes, one can systematically keep track of the evolution of the topological patterns mentioned above. A -dimensional topological feature (or -hole) may represent connected components (-hole), loops (-hole) and cavities (-hole). For each -hole , PH records its first appearance in the filtration sequence, say , and first disappearance in later complexes, with a unique pair , where We call the birth time of and the death time of . We call the life span of . PH records all these birth and death times of the topological features in persistence diagrams. Let where is the highest dimension in the simplicial complex . Then persistence diagram . Here, represents the homology group of which keeps the information of the -holes in the simplicial complex . With the intuition that the topological features with a long life span (persistent features) describe the hidden shape patterns in the data, these persistence diagrams provide a unique topological fingerprint of .

As one can easily notice, the most important step in the PH machinery is the construction of the nested sequence of subgraphs . For a given unweighted graph , the most common technique is to use a filtering function with a choice of thresholds where . For , let . Let be the induced subgraph of by , i.e. where . This process yields a nested sequence of subgraphs , called the sublevel filtration induced by the filtering function . Choice of is crucial here, and in most cases, is either an important function from the domain of the data, e.g. amount of transactions or volume transfer, or a function defined from intrinsic properties of the graph, e.g. degree, betweenness. Similarly, for a weighted graph, one can use sublevel filtration on the weights of the edges and obtain a suitable filtration reflecting the domain information stored in the edge weights. For further details on different filtration types of networks, see (Aktas, Akbas, and El Fatmaoui 2019; Hofer et al. 2020).

3.2 Multidimensional Persistence

In the previous section, we discussed the single-parameter persistence theory. The reason for the term ”single” is that we filter the data in only one direction . Here, the choice of direction is the key to extracting the hidden patterns from the observed data. For some tasks and data types, it is sufficient to consider only one dimension (or filtering function ) (e.g., atomic numbers for protein networks) in order to extract the intrinsic data properties. However, often the observed data may have more than one direction to be analyzed (for example, in the case of money laundering detection on bitcoin, we may need to use both transaction amounts and numbers of transactions between any two traders). With this intuition, multiparameter persistence (MP) theory is suggested as a natural generalization of single persistence (SP).

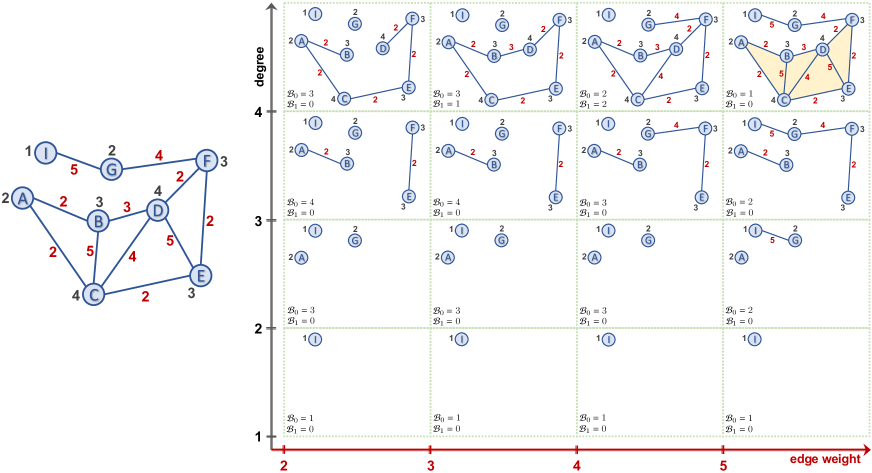

In simpler terms, if one uses only one filtering function, sublevel sets induce a single parameter filtration . Instead, if one uses two or more functions, then it would enable us to study finer substructures and patterns in the data. In particular, let and be two filtering functions with very valuable complementary information of the network. Then, MP idea is presumed to produce a unique topological fingerprint combining the information from both functions. These pair of functions induces a multivariate filtering function with . Again, we can define a set of nondecreasing thresholds and for and respectively. Then, , i.e. . Then, let be the induced subgraph of by , i.e. the smallest subgraph of containing . Then, instead of a single filtration of complexes, we get a bifiltration of complexes . See Figure 2 (Appendix) for an explicit example.

As illustrated in Figure 2, we can imagine as a rectangular grid of size such that for each , gives a nested (horizontal) sequence of simplicial complexes. Similarly, for each , gives a nested (vertical) sequence of simplicial complexes. By computing the homology groups of these complexes, , we obtain the induced bigraded persistence module (a rectangular grid of size ). Again, the idea is to keep track of the -dimensional topological features via the homology groups in this grid. As detailed in Section D.2, because of the technical issues related to commutative algebra coming from the partially ordered structure of the multipersistence module, this MP approach has not been completed like SP theory yet, and there is a need to facilitate this promising idea effectively in real-life applications.

In this paper, for time-dependent data, we overcome this problem by using the naturally inherited special direction in the data: Time. By using this canonical direction in the multipersistence module, we bypass the partial ordering problem and generalize the ideas from single parameter persistence to produce a unique topological fingerprint of the data via MP. Our approach provides a general framework to utilize various vectorization forms defined for single PH and gives a multidimensional topological summary of the data.

Utilizing Time Direction - Zigzag Persistence: While our intuition is to use time direction in MP for forecasting purposes, the time parameter is not very suitable to use in PH construction in its original form. This is because PH construction needs nested subgraphs to keep track of the existing topological features, while time-dependent data do not come nested, i.e. in general for . However, a generalized version of PH construction helps us to overcome this problem. We want to keep track of topological features which exist in different time instances. Zigzag homology (Carlsson and Silva 2010) bypasses the requirement of the nested sequence by using the ”zigzag scheme”. We provide the details of zigzag persistent homology in Section C.1.

4 TMP Vectorizations

We now introduce a general framework to define vectorizations for multipersistence homology on time-dependent data. First, we recall the single persistence vectorizations which we will expand as multidimensional vectorizations with our TMP framework.

4.1 Single Persistence Vectorizations

While PH extracts hidden shape patterns from data as persistence diagrams (PD), PDs being a collection of points in by itself are not very practical for statistical and machine learning purposes. Instead, the common techniques are by accurately representing PDs as kernels (Kriege, Johansson, and Morris 2020) or vectorizations (Ali et al. 2023). Single Persistence Vectorizations transform obtained PH information (PDs) into a function or a feature vector form which are much more suitable for ML tools. Common single persistence (SP) vectorization methods are Persistence Images (Adams et al. 2017), Persistence Landscapes (Bubenik 2015), Silhouettes (Chazal et al. 2014), and various Persistence Curves (Chung and Lawson 2022). These vectorizations define a single variable or multivariable functions out of PDs, which can be used as fixed size or vectors in applications, i.e vectors or vectors. For example, a Betti curve for a PD with thresholds can be written as size vectors. Similarly, persistence images is an example of vectors with the chosen resolution (grid) size. See the examples below and in Section D.1 for further details.

4.2 TMP Vectorizations

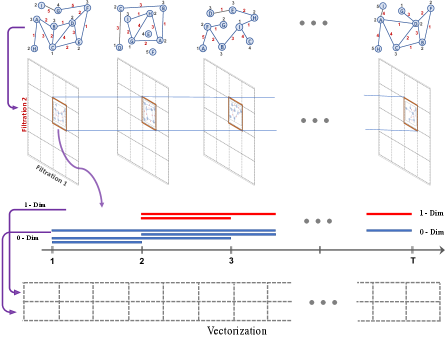

Finally, we define our Temporal MultiPersistence (TMP) framework for time-dependent data. In particular, by using the existing single-parameter persistence vectorizations, we produce multidimensional vectorization by effectively using the time direction in the multipersistence module. The idea is to use zigzag homology in the time direction and consider -dimensional filtering for the other directions. This process produces -dimensional vectorizations of the dataset. While the most common choice would be for computational purposes, we restrict ourselves to to give a general idea. The construction can easily be generalized to higher dimensions. Below and in Section D.1, we provide explicit examples of TMP Vectorizations. While we mainly focus on network data in this part, we give how to generalize TMP vectorizations to other types of data (e.g., point clouds, images) in Section D.3.

Again, let be a sequence of weighted (or unweighted) graphs for time steps with } as defined in Section 3. By using a filtering function or weights, define a bifiltration for each , i.e. for and . For each fixed , consider the sequence . This sequence of subgraphs induces a zigzag sequence of clique complexes as described in Section C.1:

Now, let be the induced zigzag persistence diagram. Let represent an SP vectorization as described above, e.g. Persistence Landscape, Silhouette, Persistence Image, Persistence Curves. This means if is the persistence diagram for some filtration induced by , then we call is the corresponding vectorization for (see Figure 1 in Appendix F7). In most cases, is represented as functions on the threshold domain (Persistence curves, Landscapes, Silhouettes, Persistence Surfaces). However, the discrete structure of the threshold domain enables us to interpret the function as a -vector (Persistence curves, Landscapes, Silhouettes) or -vector (Persistence Images). See examples given below and in the Section D.1 for more details.

Now, let be the corresponding vector for the zigzag persistence diagram . Then, for any and , we have a ( or ) vector . Now, define the induced TMP Vectorization as the corresponding tensor for and

In particular, if is a -vector of size , then would be a -vector (rank- tensor) with size . if is a -vector of size , then would be a rank- tensor with size . In the examples below, we provide explicit constructions for for the most common SP vectorizations .

4.3 Examples of TMP Vectorizations

While we describe TMP Vectorizations for , in most applications, would be preferable for computational purposes. Then if the preferred single persistence (SP) vectorization produces -vector (say size ), then induced TMP Vectorization would be a -vector (a matrix) of size where is the number of thresholds for the filtering function used, e.g. . These matrices provide unique topological fingerprints for each time-dependent dataset . These multidimensional fingerprints are produced by using persistent homology with two-dimensional filtering where the first dimension is the natural direction time , and the second dimension comes from the filtering function .

Here, we discuss explicit constructions of two examples of TMP vectorizations. As we mentioned above, the framework is very general, and it can be applied to various vectorization methods. In Section D.1, we provide details of further examples of TMP Vectorizations for time-dependent data, i.e., TMP Silhouettes, and TMP Betti Summaries.

TMP Landscapes

Persistence Landscapes are one of the most common SP vectorization methods introduced in (Bubenik 2015). For a given persistence diagram , produces a function by using generating functions for each , i.e. is a piecewise linear function obtained by two line segments starting from and connecting to the same point . Then, the Persistence Landscape function is defined as for , where represents the thresholds for the filtration used.

Considering the piecewise linear structure of the function, is completely determined by its values on points, i.e. where . Hence, a vector of size whose entries the values of this function would suffice to capture all the information needed, i.e. .

Now, for the time-dependent data , to construct our induced TMP Vectorization , TMP Landscapes, we use for time direction, . For zigzag persistence, we have thresholds steps. Hence, by taking , we would have length vector .

For the other multipersistence direction, by using a filtering function with the threshold set , we obtain TMP Landscape as follows: where represents -row of the -vector . Here, is induced by the sublevel filtration for as described in the paper, i.e. is the induced subgraph by .

Hence, for a time-dependent data , TMP Landscape is a -vector of size where is the number of time steps.

TMP Persistence Images

Next SP vectorization in our list is persistence images (Adams et al. 2017). Different than most SP vectorizations, persistence images produce -vectors. The idea is to capture the location of the points in the persistence diagrams with a multivariable function by using the Gaussian functions centered at these points. For , let represent a -Gaussian centered at the point . Then, one defines a multivariable function, Persistence Surface, where is the weight, mostly a function of the life span . To represent this multivariable function as a -vector, one defines a grid (resolution size) on the domain of , i.e. threshold domain of . Then, one obtains the Persistence Image, a -vector of size , where and is the corresponding pixel (rectangle) in the grid.

Following a similar route, for our TMP vectorization, we use time as one direction, and the filtering function in the other direction, i.e. with threshold set . Then, for time-dependent data , in the time direction, we use zigzag PDs and their persistence images. Hence, for each , we define TMP Persistence Image as where -vector is -floor of the -vector . Then, TMP Persistence Image is a -vector of size .

More details for TMP Persistence Surfaces and TMP Silhouettes are provided in Section D.1.

4.4 Stability of TMP Vectorizations

We now prove that when the source single parameter vectorization is stable, then so is its induced TMP vectorization . We discuss the details of the stability notion in persistence theory and examples of stable SP vectorizations in Section C.2.

Let and be two time sequences of networks. Let be a stable SP vectorization with the stability equation

for some . Here, represents Wasserstein- distance as defined in Section C.2.

Now, consider the bifiltrations and for each . We define the induced matching distance between the multiple persistence diagrams (See Remark 2) as

Now, define the distance between TMP Vectorizations as .

Theorem 1.

Let be a stable vectorization for single parameter PDs. Then, the induced TMP Vectorization is also stable, i.e. With the notation above, there exists such that for any pair of time-aware network sequences and , we have the following inequality.

The proof of the theorem is given in Appendix E.

5 TMP-Nets

To fully take advantage of the extracted signatures by TMP vectorizations, we propose a GNN-based module to track and learn significant temporal and topological patterns. Our Time Aware Multiparameter Persistence Nets (TMP-Nets) capture spatio-temporal relationships via trainable node embedding dictionaries in a GDL-based framework.

Graph Convolution on Adaptive Adjacency Matrix

To model the hidden dependencies among nodes in the spatio-temporal graph, we define the spatial graph convolution operation based on the adaptive adjacency matrix and given node feature matrix. Inspired by (Wu et al. 2019), to investigate the beyond pairwise relations among nodes, we use the adaptive adjacency matrix based on trainable node embedding dictionaries, i.e., , where (here and ), and are the input and output of -th layer, and (here represents the number of features for each node), and is the trainable weights.

Topological Signatures Representation Learning

In our experiments, we use CNN based model to learn the TMP topological features. Given the TMP topological features of resolution , i.e., , we employ CNN-based model and global max pooling to obtain the image-level local topological feature as

where is the global max pooling, is a CNN based neural network with parameter set , and is the output for TMP representation.

Lastly, we combine the two embeddings to obtain the final embedding :

To capture both spatial and temporal correlations in time-series, we feed the final embedding into Gated Recurrent Units (GRU) for future time points forecasting.

6 Experiments

Datasets:

We consider three types of data: two widely used benchmark datasets on California (CA) traffic (Chen et al. 2001) and electrocardiography (ECG5000) (Chen et al. 2015a), and the newly emerged data on Ethereum blockchain tokens (Shamsi et al. 2022). (The results on the ECG5000 are presented in Section A.4). More details descriptions of datasets can be found in Section B.1.

6.1 Experimental Results

We compare our TMP-Nets with 6 state-of-the-art baselines. We use three standard performance metrics Mean Absolute Error (MAE), Root Mean Square Error (RMSE), and Mean absolute percentage error (MAPE). We provide additional experimental results in Appendix A. In Appendix B, we provide further details on the experimental setup and empirical evaluation. Our source code is available at the link 111https://www.dropbox.com/sh/h28f1cf98t9xmzj/AACBavvHc˙ctCB1FVQNyf-XRa?dl=0.

| Model | Bytom | Decentraland | Golem |

|---|---|---|---|

| DCRNN (Li et al. 2018) | 35.361.18 | 27.691.77 | 23.151.91 |

| STGCN (Yu, Yin, and Zhu 2018) | 37.331.06 | 28.221.69 | 23.682.31 |

| GraphWaveNet (Wu et al. 2019) | 39.180.96 | 37.671.76 | 28.892.34 |

| AGCRN (Bai et al. 2020) | 34.461.37 | 26.751.51 | 22.831.91 |

| Z-GCNETs (Chen, Segovia, and Gel 2021) | 31.040.78 | 23.812.43 | 22.321.42 |

| StemGNN (Cao et al. 2020) | 34.911.04 | 28.371.96 | 22.502.01 |

| TMP-Nets | 28.773.30 | 22.971.80 | 29.011.05 |

Results on Blockchain Datasets: Table 1 shows performance on Bytom, Decentraland, and Golem. Table 1 suggests the following phenomena: (i) TMP-Nets achieves the best performance on Bytom and Decentraland, and the relative gains of TMP-Nets over the best baseline (i.e., Z-GCNETs) are 7.89% and 3.66% on Bytom and Decentraland respectively; (ii) compared with Z-GCNETs, the size of TMP topological features used in this work is much smaller than the zigzag persistence image utilized in Z-GCNETs.

An interesting question is why TMP-Nets performs differently on Golem vs. Bytom and Decentraland. Success on each network token depends on the diversity of connections among nodes. In cryptocurrency networks, we expect nodes/addresses to be connected with other nodes with similar transaction connectivity (e.g. interaction among whales) as well as with nodes with low connectivity (e.g. terminal nodes). However, the assortativity measure of Golem (-0.47) is considerably lower than Bytom (-0.42) and Decentraland (-0.35), leading to disassortativity patterns (i.e., repetitive isolated clusters) in the Golem network, which, in turn, downgrade the success rate of forecasting.

| Model | PeMSD4 | PeMSD8 | ||||

|---|---|---|---|---|---|---|

| MAE | RMSE | MAPE (%) | MAE | RMSE | MAPE (%) | |

| AGCRN | 110.360.20 | 150.370.15 | 208.360.20 | 87.120.25 | 109.200.33 | 277.440.26 |

| Z-GCNETs | 112.650.12 | 153.470.17 | 206.090.33 | 69.820.16 | 95.830.37 | 102.740.53 |

| StemGNN | 112.830.07 | 150.220.30 | 209.520.51 | 65.160.36 | 89.600.60 | 108.710.51 |

| TMP-Nets | 108.380.10 | 147.570.23 | 208.660.27 | 59.820.82 | 85.860.64 | 109.880.65 |

| Model | PeMSD4 | PeMSD8 | ||||

|---|---|---|---|---|---|---|

| MAE | RMSE | MAPE (%) | MAE | RMSE | MAPE (%) | |

| AGCRN | 90.360.10 | 122.610.13 | 176.900.35 | 55.200.19 | 83.010.53 | 167.390.25 |

| Z-GCNETs | 89.570.11 | 117.940.15 | 180.110.26 | 47.110.20 | 80.250.24 | 98.150.33 |

| StemGNN | 93.270.16 | 131.490.21 | 189.180.30 | 53.860.39 | 82.000.52 | 97.780.30 |

| TMP-Nets | 85.150.12 | 115.000.16 | 170.970.22 | 50.200.37 | 80.170.26 | 100.310.58 |

Results on Traffic Datasets: For traffic flow data PeMSD4 and PeMSD8, we evaluate Z-GCNETs’ performance on varying lengths. This allows us to further explore the learning capabilities of our Z-GCNETs as a function of sample size. In particular, in many real-world scenarios, there exists only a limited number of temporal records to be used in the training stage, and the learning problem with lower sample sizes becomes substantially more challenging. Tables 2 and 3 show that under the scenario of limited data records for both PeMSD4 and PeMSD8 (i.e., and ), our TMP-Nets always outperforms three representative baselines in MAE and RMSE. For example, TMP-Nets significantly outperform SOTA baselines, where we achieve relative gains of 1.79% and 4.36% in RMSE on PeMSD4T=1,000 and PeMSD8T=1,000, respectively. Overall, the results demonstrate that our proposed TMP-Nets can accurately capture the hidden complex spatial and temporal correlations in the correlated time series datasets and achieve promising forecasting performances under the scenarios of limited data records. Moreover, we conduct experiments on the whole PeMSD4 and PeMSD8 datasets. As Table 6 (Appendix) indicates, our TMP-Nets still achieve competitive performances on both datasets.

Finally, we applied our approach in a different domain with a benchmark electrocardiogram dataset, ECG5000. Again, our model gives highly competitive results with the SOTA methods (Section A.4).

Ablation Studies:

To better evaluate the importance of different components of TMP-Nets, we perform ablation studies on two traffic datasets, i.e., PeMSD4 and PeMSD8 by using only (i) or (ii) as input. Table 4 report the forecasting performance of (i) , (ii) , and (iii) TMP-Nets (our proposed model). We find that our TMP-Nets outperforms both and on two datasets, yielding highly statistically significant gains. Hence, we can conclude that (i) TMP vectorizations help to better capture global and local hidden topological information in the time dimension, and (ii) spatial graph convolution operation accurately learns the inter-dependencies (i.e., spatial correlations) among spatio-temporal graphs. We provide further ablation studies comparing the effect of slicing direction and the MP vectorization methods in the Section A.2.

| Model | PeMSD4 | PeMSD8 |

|---|---|---|

| TMP-Nets | 147.570.23 | 85.860.64 |

| 165.670.30 | 90.230.15 | |

| 153.750.22 | 88.381.05 |

Computational Complexity:

One of the key issues why MP has not propagated widely into practice yet is its high computational costs. Our method improves on the state-of-the-art MP (ranging from 23.8 to 59.5 times faster than Multiparameter Persistence Image (MP-I) (Carrière and Blumberg 2020), and from 1.2 to 8.6 times faster than Multiparameter Persistence Kernel (MP-L) (Corbet et al. 2019)) and, armed with a computationally fast vectorization method (e.g., Betti summary (Lesnick and Wright 2022)), TMP yields competitive computational costs for a lower number of filtering functions (See Section A.3). Nevertheless, scaling for really large scale-problems is still a challenge. In the future we will explore TMP constructed only on the landmark points, that is, TMP will be constructed not on all but on the most important landmark nodes, which would lead to substantial sparsification of the graph representation.

Comparison with Other Topological GNN Models for Dynamic Networks:

The two existing time-aware topological GNNs for dynamic networks are TAMP-S2GCNets (Chen et al. 2021) and Z-GCNETs (Chen, Segovia, and Gel 2021). The pivotal distinction between our model and these counterparts lies in the fact that our model serves as a comprehensive extension of both, applicable across diverse data types encompassing point clouds and images (see Section D.3). Z-GCNETs employs single persistence approach, rendering it unsuitable for datasets that encompass two or more significant domain functions. In contrast, TAMP-S2GCNets employs multipersistence; however, its Euler-Characteristic surface vectorization fails to encapsulate lifespan information present in persistence diagrams. Notably, in scenarios involving sparse data, barcodes with longer lifespans signify main data characteristics, while short barcodes are considered as topological noise. The limitation of Euler-Characteristic Surfaces, being simply a linear combination of bigraded Betti numbers, lies in its inability to capture this distinction. In stark contrast, our framework encompasses all forms of vectorizations, permitting practitioners to choose their preferred vectorization technique while adapting to dynamic networks or time-dependent data comprehensively. For instance, compared to TAMP-S2GCNets model, our TMP-Nets achieves a better performance on the Bytom dataset, i.e., TMP-Nets (MAPE: 28.773.30) vs. TAMP-S2GCNets (MAPE: 29.261.06). Furthermore, from the computational time perspective, the average computation time of TMP and Dynamic Euler-Poincaré Surface (which is used in TAMP-S2GCNets model) are 1.85 seconds and 38.99 seconds respectively, i.e., our TMP is more efficient.

7 Discussion

We have proposed a new highly computationally efficient summary for multidimensional persistence for time-dependent objects, Temporal MultiPersistence (TMP). By successfully combining the latest TDA methods with deep learning tools, our TMP approach outperforms many popular state-of-the-art deep learning models in a consistent and unified manner. Further, we have shown that TMP enjoys important theoretical stability guarantees. As such, TMP makes an important step toward bringing the theoretical concepts of multipersistence from pure mathematics to the machine learning community and to the practical problems of time-aware learning of time-conditioned objects, such as dynamic graphs, time series, and spatio-temporal processes.

Still, scaling for ultra high-dimensional processes, especially in modern data streaming scenarios, may be infeasible for TMP. In the future, we will investigate algorithms such as those based on landmarks or pruning, with the goal to advance the computational efficiency of TMP for streaming applications.

Acknowledgements

Supported by the NSF grants DMS-2220613, DMS-2229417, ECCS 2039701, TIP-2333703, Simons Foundation grant # 579977, and ONR grant N00014-21-1-2530. Also, the paper is based upon work supported by (while Y.R.G. was serving at) the NSF. The views expressed in the article do not necessarily represent the views of NSF or ONR.

References

- Adams et al. (2017) Adams, H.; et al. 2017. Persistence images: A stable vector representation of persistent homology. JMLR, 18.

- Akcora, Gel, and Kantarcioglu (2022) Akcora, C. G.; Gel, Y. R.; and Kantarcioglu, M. 2022. Blockchain networks: Data structures of bitcoin, monero, zcash, ethereum, ripple, and iota. Wiley Int. Reviews: Data Mining and Knowledge Discovery, 12(1): e1436.

- Aktas, Akbas, and El Fatmaoui (2019) Aktas, M. E.; Akbas, E.; and El Fatmaoui, A. 2019. Persistence homology of networks: methods and applications. Applied Network Science, 4(1): 1–28.

- Ali et al. (2023) Ali, D.; et al. 2023. A survey of vectorization methods in topological data analysis. IEEE Transactions on Pattern Analysis and Machine Intelligence.

- Atienza et al. (2020) Atienza, N.; et al. 2020. On the stability of persistent entropy and new summary functions for topological data analysis. Pattern Recognition, 107: 107509.

- Bai et al. (2020) Bai, L.; Yao, L.; Li, C.; Wang, X.; and Wang, C. 2020. Adaptive Graph Convolutional Recurrent Network for Traffic Forecasting. NeurIPS, 33.

- Bin et al. (2018) Bin, Y.; et al. 2018. Describing video with attention-based bidirectional LSTM. IEEE transactions on cybernetics, 49(7): 2631–2641.

- Botnan and Lesnick (2022) Botnan, M. B.; and Lesnick, M. 2022. An introduction to multiparameter persistence. arXiv preprint arXiv:2203.14289.

- Botnan, Oppermann, and Oudot (2022) Botnan, M. B.; Oppermann, S.; and Oudot, S. 2022. Signed barcodes for multi-parameter persistence via rank decompositions. In SoCG.

- Bubenik (2015) Bubenik, P. 2015. Statistical Topological Data Analysis using Persistence Landscapes. JMLR, 16(1): 77–102.

- Cao et al. (2020) Cao, D.; et al. 2020. Spectral Temporal Graph Neural Network for Multivariate Time-series Forecasting. In NeurIPS, volume 33, 17766–17778.

- Carlsson, De Silva, and Morozov (2009) Carlsson, G.; De Silva, V.; and Morozov, D. 2009. Zigzag persistent homology and real-valued functions. In SoCG.

- Carlsson and Silva (2010) Carlsson, G.; and Silva, V. 2010. Zigzag Persistence. Found. Comput. Math., 10(4): 367–405.

- Carrière and Blumberg (2020) Carrière, M.; and Blumberg, A. 2020. Multiparameter persistence image for topological machine learning. NeurIPS.

- Chazal et al. (2014) Chazal, F.; Fasy, B. T.; Lecci, F.; Rinaldo, A.; and Wasserman, L. 2014. Stochastic convergence of persistence landscapes and silhouettes. In SoCG.

- Chen et al. (2001) Chen, C.; Petty, K.; Skabardonis, A.; Varaiya, P.; and Jia, Z. 2001. Freeway performance measurement system: mining loop detector data. Transportation Research Record, 1748(1): 96–102.

- Chen et al. (2015a) Chen, Y.; Keogh, E.; Hu, B.; Begum, N.; Bagnall, A.; Mueen, A.; and Batista, G. 2015a. The UCR time series classification archive.

- Chen, Segovia, and Gel (2021) Chen, Y.; Segovia, I.; and Gel, Y. R. 2021. Z-GCNETs: time zigzags at graph convolutional networks for time series forecasting. In ICML, 1684–1694. PMLR.

- Chen et al. (2021) Chen, Y.; Segovia-Dominguez, I.; Coskunuzer, B.; and Gel, Y. 2021. TAMP-S2GCNets: coupling time-aware multipersistence knowledge representation with spatio-supra graph convolutional networks for time-series forecasting. In ICLR.

- Chen et al. (2015b) Chen, Y.; et al. 2015b. A general framework for never-ending learning from time series streams. Data mining and knowledge discovery, 29: 1622–1664.

- Chung and Lawson (2022) Chung, Y.-M.; and Lawson, A. 2022. Persistence curves: A canonical framework for summarizing persistence diagrams. Advances in Computational Mathematics, 48(1): 6.

- Corbet et al. (2019) Corbet, R.; Fugacci, U.; Kerber, M.; Landi, C.; and Wang, B. 2019. A kernel for multi-parameter persistent homology. Computers & graphics: X, 2: 100005.

- Defferrard, Bresson, and Vandergheynst (2016) Defferrard, M.; Bresson, X.; and Vandergheynst, P. 2016. Convolutional Neural Networks on Graphs with Fast Localized Spectral Filtering. In NeurIPS, volume 29, 3844–3852.

- Dey and Salem (2017) Dey, R.; and Salem, F. M. 2017. Gate-variants of Gated Recurrent Unit (GRU) neural networks. In MWSCAS.

- Dey and Wang (2022) Dey, T. K.; and Wang, Y. 2022. Computational Topology for Data Analysis. Cambridge University Press.

- di Angelo and Salzer (2020) di Angelo, M.; and Salzer, G. 2020. Tokens, Types, and Standards: Identification and Utilization in Ethereum. In 2020 IEEE DAPPS, 1–10.

- Edelsbrunner and Harer (2010) Edelsbrunner, H.; and Harer, J. 2010. Computational topology: an introduction. American Mathematical Soc.

- Eisenbud (2013) Eisenbud, D. 2013. Commutative algebra: with a view toward algebraic geometry, volume 150. Springer Science & Business Media.

- Guo et al. (2019) Guo, S.; Lin, Y.; Feng, N.; Song, C.; and Wan, H. 2019. Attention based spatial-temporal graph convolutional networks for traffic flow forecasting. In AAAI.

- Hofer et al. (2020) Hofer, C.; Graf, F.; Rieck, B.; Niethammer, M.; and Kwitt, R. 2020. Graph filtration learning. In ICML, 4314–4323. PMLR.

- Jiang and Luo (2022) Jiang, W.; and Luo, J. 2022. Graph neural network for traffic forecasting: A survey. Expert Systems with Applications, 207: 117921.

- Johnson and Jung (2021) Johnson, M.; and Jung, J.-H. 2021. Instability of the Betti Sequence for Persistent Homology. J. Korean Soc. Ind. and Applied Math., 25(4): 296–311.

- Kipf and Welling (2017) Kipf, T. N.; and Welling, M. 2017. Semi-supervised classification with graph convolutional networks. ICLR.

- Kriege, Johansson, and Morris (2020) Kriege, N. M.; Johansson, F. D.; and Morris, C. 2020. A survey on graph kernels. Applied Network Science, 1–42.

- Lesnick and Wright (2022) Lesnick, M.; and Wright, M. 2022. Computing minimal presentations and bigraded betti numbers of 2-parameter persistent homology. SIAGA.

- Li et al. (2018) Li, Y.; Yu, R.; Shahabi, C.; and Liu, Y. 2018. Diffusion Convolutional Recurrent Neural Network: Data-Driven Traffic Forecasting. In ICLR.

- Lipton, Berkowitz, and Elkan (2015) Lipton, Z. C.; Berkowitz, J.; and Elkan, C. 2015. A critical review of recurrent neural networks for sequence learning. arXiv preprint arXiv:1506.00019.

- Pareja et al. (2020) Pareja, A.; Domeniconi, G.; Chen, J.; Ma, T.; Suzumura, T.; Kanezashi, H.; Kaler, T.; Schardl, T.; and Leiserson, C. 2020. Evolvegcn: Evolving graph convolutional networks for dynamic graphs. In AAAI.

- Schmidhuber (2017) Schmidhuber, J. 2017. LSTM: Impact on the world’s most valuable public companies. http://people.idsia.ch/~juergen/impact-on-most-valuable-companies.html. Accessed: 2020-03-19.

- Segovia Dominguez et al. (2021) Segovia Dominguez, I.; et al. 2021. Does Air Quality Really Impact COVID-19 Clinical Severity: Coupling NASA Satellite Datasets with Geometric Deep Learning. KDD.

- Segovia-Dominguez et al. (2021) Segovia-Dominguez, I.; et al. 2021. TLife-LSTM: Forecasting Future COVID-19 Progression with Topological Signatures of Atmospheric Conditions. In PAKDD (1), 201–212.

- Shamsi et al. (2022) Shamsi, K.; Victor, F.; Kantarcioglu, M.; Gel, Y.; and Akcora, C. G. 2022. Chartalist: Labeled Graph Datasets for UTXO and Account-based Blockchains. NeurIPS.

- Shewalkar, Nyavanandi, and Ludwig (2019) Shewalkar, A.; Nyavanandi, D.; and Ludwig, S. A. 2019. Performance evaluation of deep neural networks applied to speech recognition: RNN, LSTM and GRU. J. Artificial Intelligence and Soft Computing Research, 9(4): 235–245.

- Shin and Kim (2020) Shin, S.; and Kim, W. 2020. Skeleton-Based Dynamic Hand Gesture Recognition Using a Part-Based GRU-RNN for Gesture-Based Interface. IEEE Access, 8: 50236–50243.

- Sutskever, Vinyals, and Le (2014) Sutskever, I.; Vinyals, O.; and Le, Q. V. 2014. Sequence to Sequence Learning with Neural Networks. In Proceedings of the 27th International Conference on Neural Information Processing Systems - Volume 2, NIPS’14, 3104–3112. Cambridge, MA, USA: MIT Press.

- Vassilevska, Williams, and Yuster (2006) Vassilevska, V.; Williams, R.; and Yuster, R. 2006. Finding the Smallest H-Subgraph in Real Weighted Graphs and Related Problems. In Automata, Languages and Programming.

- Veličković et al. (2018) Veličković, P.; Cucurull, G.; Casanova, A.; Romero, A.; Lio, P.; and Bengio, Y. 2018. Graph attention networks. ICLR.

- Vipond (2020) Vipond, O. 2020. Multiparameter Persistence Landscapes. J. Mach. Learn. Res., 21: 61–1.

- Wang, Yang, and Meinel (2018) Wang, C.; Yang, H.; and Meinel, C. 2018. Image Captioning with Deep Bidirectional LSTMs and Multi-Task Learning. ACM Trans. Multimedia Comput. Commun. Appl., 14(2s).

- Weber et al. (2019) Weber, M.; et al. 2019. Anti-Money Laundering in Bitcoin: Experimenting with GCNs for Financial Forensics. In KDD.

- Wu et al. (2019) Wu, Z.; Pan, S.; Long, G.; Jiang, J.; and Zhang, C. 2019. Graph WaveNet for Deep Spatial-Temporal Graph Modeling. In IJCAI.

- Xiang, Yan, and Demir (2020) Xiang, Z.; Yan, J.; and Demir, I. 2020. A rainfall-runoff model with LSTM-based sequence-to-sequence learning. Water resources research, 56(1): e2019WR025326.

- Yan, Xiong, and Lin (2018) Yan, S.; Xiong, Y.; and Lin, D. 2018. Spatial temporal graph convolutional networks for skeleton-based action recognition. In AAAI.

- Yao et al. (2018) Yao, H.; Wu, F.; Ke, J.; Tang, X.; Jia, Y.; Lu, S.; Gong, P.; Ye, J.; and Li, Z. 2018. Deep multi-view spatial-temporal network for taxi demand prediction. In AAAI.

- Yu, Yin, and Zhu (2018) Yu, B.; Yin, H.; and Zhu, Z. 2018. Spatio-temporal GCNs: a deep learning framework for traffic forecasting. In IJCAI.

- Yu et al. (2019) Yu, Y.; Si, X.; Hu, C.; and Zhang, J. 2019. A Review of Recurrent Neural Networks: Lstm Cells and Network Architectures. Neural Comput., 31(7): 1235–1270.

Appendix

In this part, we give additional details about our experiments and methods. In Appendix A, we provide more experimental results as ablation studies and additional baselines. In Appendix B, we discuss datasets and our experimental setup. In Appendix C, we provide a more theoretical background on persistent homology. In Appendix D, we give further examples of TMP vectorizations and generalizations to general types of data. We also discuss fundamental challenges in applications of multipersistence theory in spatio-temporal data, and our contributions in this context in Section D.2. Finally, in Appendix E, we prove the stability of TMP vectorizations. Our notation table (Table 12) can be found at the end of the appendix.

Appendix A Additional Results on Experiments

A.1 Additional Baselines

Contrary to other papers (e.g., (Jiang and Luo 2022)) which consider only a single option of 16,992 (PeMSD4) / 17,856 (PeMSD8) time stamps, we evaluate Z-GCNETs performance on varying lengths of 1,000 and 2,000 (Section 6.3 - Experimental Results details). This allows us to further explore the learning capabilities of our Z-GCNETs as a function of sample size and also most importantly to assess the performance of Z-GCNETs and its competitors under a more challenging and much more realistic scenario of limited temporal records. To better highlight the effectiveness of our proposed TMP-Nets model, we compare it with more baselines - DCRNN (Li et al. 2018), STGCN (Yu, Yin, and Zhu 2018), and GraphWaveNet (Wu et al. 2019). As shown in Table 5, we can find that our TMP-Nets is highly statistically significantly better than DCRNN, STGCN, and GraphWaveNet on PeMSD4 dataset.

| Dataset | Model | RMSE |

|---|---|---|

| PEMSD4 | TMP-Nets | 147.570.23 |

| PEMSD4 | DCRNN | 153.340.55 |

| PEMSD4 | STGCN | 174.750.35 |

| PEMSD4 | GraphWaveNet | 151.870.22 |

| Model | PeMSD4 | PeMSD8 | ||||

|---|---|---|---|---|---|---|

| MAE | RMSE | MAPE (%) | MAE | RMSE | MAPE (%) | |

| AGCRN | 19.83 | 32.26 | 12.97% | 15.95 | 25.22 | 10.09% |

| Z-GCNETs | 19.50 | 31.61 | 12.78% | 15.76 | 25.11 | 10.01% |

| StemGNN | 20.24 | 32.15 | 10.03% | 15.83 | 24.93 | 9.26% |

| TMP-Nets | 19.57 | 31.69 | 12.89% | 16.36 | 25.85 | 10.36% |

A.2 Further Ablation Studies

Slicing Direction.

To investigate the importance of time direction, we now consider zigzag persistent homology along the axis of degree instead of time. We then conduct comparison experiments between TMP-Nets (i.e., is generated through the time axis) and TMPdeg-Nets (i.e., is generated along the axis of degree instead of time). As Table 7 indicates, TMP-Nets based on the time component outperforms TMPdeg-Nets. These findings can be expected, as time is one of the core variables in spatio-temporal processes, and, hence, we can conclude that extracting the zigzag-based topological summary along the time dimension is important for forecasting tasks. Nevertheless, we would like to underline that the TMP idea can be also applied to non-time-varying processes as long as there exists some alternative natural geometric dimension.

| Dataset | Model | MAPE |

|---|---|---|

| Bytom | TMP-Nets | 28.773.30 |

| Bytom | TMPdeg-Nets | 29.154.17 |

Bigraded Betti Numbers vs. TMP.

To compare the effectiveness of which facilitates time direction with zigzag persistence for spatio-temporal forecasting, we conduct additional experiments on traffic datasets, i.e., PeMSD4 and PeMSD8 by using (i) TMP-Nets (based on Z-Meta) and (ii) MPBetti-Nets (based on bigraded Betti numbers). Tables 8 below show the results when using bigraded Betti numbers as a source of topological signatures in the ML model. As Tables 3 and 4 indicate, our TMP-Nets achieves better forecasting accuracy (i.e., lower RMSE) on both PeMSD4 and PeMSD8 datasets than the MPBetti-Nets and the difference in performance is highly statistically significant.

Such results can be potentially attributed to the fact that TMP-Nets tends to better capture the most important topological signals by choosing a suitable vectorization method for the task at hand. In particular, MPBetti-Nets only counts the number of topological features but do not give any emphasis to the longer barcodes appearing in the temporal direction, that is, MPBetti-Nets are limited in distinguishing topological signals from topological noise. However, longer barcodes (or density of the short barcodes) in the temporal dimension typically are the key to accurately capturing intrinsic topological patterns in the spatio-temporal data.

| Model | PEMSD4 | PEMSD8 |

|---|---|---|

| TMP-Nets | 147.570.23 | 85.860.64 |

| MPBetti-Nets | 151.580.19 | 87.710.70 |

| Dataset | Dim | Betweenness | Closeness | Degree | Power-Tran | Power-Volume |

|---|---|---|---|---|---|---|

| Bytom | {0,1} | 236.95 seg | 239.36 seg | 237.60 seg | 987.90 seg | 941.39 seg |

| Decentraland | {0,1} | 134.75 seg | 138.81 seg | 133.82 seg | 2007.50 seg | 1524.10 seg |

| Golem | {0,1} | 571.35 seg | 581.36 seg | 573.93 seg | 4410.47 seg | 4663.52 seg |

A.3 Computational Time on Different Filtering Functions

As expected, Table 9 shows that computational time highly depends on the complexity of the selected filtering function. However, the time spent in computing TMP Vectorizations is below two hours at most, which makes our approach highly useful in ML tasks.

A.4 Experiments on ECG5000 Benchmark Dataset

To support that our methodology can be applied in other dynamic networks, we run additional experiments in the ECG5000 dataset, (Chen et al. 2015b). This benchmark dataset contains 140 nodes and 5,000 time stamps. When running our methodology we extract patterns via Betti, Silhouette, and Entropy vectorizations, set the window size to 12, and the forecasting step as 3. Following preliminary cross-validation experiments, we set the resolution to 50 where we use a quantile-based selection of thresholds. We perform edge-weight filtration on graphs created via a correlation matrix. In our experiments, we have found that there is no significant difference between the results based on Betti and Silhouette vectorizations. In Table 10, we only report the results of TMP-Nets based on Silhouette vectorization. From Table 10, we can find that our TMP-Nets either deliver on par or outperforms the state-of-the-art baselines (with a smaller standard deviation). Note that ECG5000 is a small dataset (that is, 140 nodes only), and as such, the differences among models cannot be expected to be high for such a smaller network.

| Dataset | Model | RMSE |

|---|---|---|

| ECG5000 | TMP-Nets | 0.520.005 |

| ECG5000 | StemGNN | 0.520.006 |

| ECG5000 | AGCRN | 0.520.008 |

| ECG5000 | DCRNN | 0.550.005 |

A.5 Connectivity in Ethereum networks

An interesting question is why TMP-Nets performs differently on Golem vs. Bytom and Decentraland. Success on each network token depends on the diversity of connections among nodes. In cryptocurrency networks, we expect nodes/addresses to be connected with other nodes with similar transaction connectivity (e.g. interaction among whales) as well as with nodes with low connectivity (e.g. terminal nodes). However, the assortativity measure of Golem (-0.47) is considerably lower than Bytom (-0.42) and Decentraland (-0.35), leading to disassortativity patterns (i.e., repetitive isolated clusters) in the Golem network, which, in turn, downgrade the success rate of forecasting.

| Token | Degree | Betweenness | Density | Assortativity |

|---|---|---|---|---|

| Bytom | 0.1995789 | 0.0002146 | 0.0020159 | -0.4276000 |

| Decentraland | 0.3387378 | 0.0004677 | 0.0034215 | -0.3589580 |

| Golem | 0.3354401 | 0.0004175 | 0.0033882 | -0.4731063 |

Appendix B Further Details on Experimental Setup

B.1 Datasets

CA Traffic.

We consider two traffic flow datasets, i.e., PeMSD4 and PeMSD8, in California from January 1, 2018, to February 28, 2018, and from January 7, 2016, to August 31, 2016, respectively. Note that, both PeMSD4 and PeMSD8 are aggregated to 5 minutes, which means there are 12 time points in the flow data per hour. Following the settings of (Guo et al. 2019), we split the traffic datasets with ratio into training, validation, and test sets; furthermore, in our experiments, we evaluate our TMP-Nets and baselines on two traffic flow datasets with varying lengths of sequences, i.e., (first 1,000 networks out of whole dataset) and (first 2,000 networks out of whole dataset).

Electrocardiogram.

We use the electrocardiogram (ECG5000) dataset (i.e., with a length of 5,000) from the UCR time series archive (Chen et al. 2015a), where each time series length is 140.

Ethereum blockchain tokens.

We use three token networks from the Ethereum blockchain (Bytom, Decentraland and Golem) each with more than $100M in market value (https://EtherScan.io). Thus dynamic networks are a compound of addresses of users, i.e. nodes, and daily transactions among users, i.e. edges (di Angelo and Salzer 2020; Akcora, Gel, and Kantarcioglu 2022). Since original token networks have an average of 442788/1192722 nodes/edges, we compute a subgraph via a maximum weight subgraph approximation (Vassilevska, Williams, and Yuster 2006) using the amount of transactions as weight. The dynamic networks contain different numbers of nets since every token was created on different days; Bytom (285), Decentraland (206), and Golem (443). Hence, given the dynamic network and its corresponding node feature matrix , where represents the number of features, we test our algorithm with both node and edge features and use the set of more active nodes, i.e. .

B.2 Experimental Setup

We implement our TMP-Nets with Pytorch framework on NVIDIA GeForce RTX 3090 GPU. Further, for all datasets, TMP-Nets is trained end-to-end by using the Adam optimizer with a L1 loss function. For Ethereum blockchain token networks, we use Adam optimizer with weight decay, initial learning rate, batch size, and epoch as 0, 0.01, 8, 80 respectively. For traffic datasets, we use Adam optimizer with weight decay, initial learning rate, batch size, and epoch as 0.3, 0.003, 64, and 350 respectively (where the learning rate is reduced by every 10 epochs after 110 epochs). In our experiments, we compare with 6 types of state-of-the-art methods, including DCRNN (Li et al. 2018), STGCN (Yu, Yin, and Zhu 2018), GraphWaveNet (Wu et al. 2019), AGCRN (Bai et al. 2020), Z-GCNETs (Chen, Segovia, and Gel 2021), and StemGNN (Cao et al. 2020). We search the hidden feature dimension of the CNN-based model for TMP representation learning among , and the embedding dimension among values . The resolution of TMP is 50 for all three datasets (i.e., the shape of input TMP is ). The tuning of our proposed TMP-Nets on each dataset is done via grid search over a fixed set of choices and the same cross-validation setup is used to tune the above baselines. For both PeMSD4 and PeMSD8, specifically, we consider the first 1,000 and 2,000 timestamps for both of them. This allows us to further explore the learning capabilities of our Z-GCNETs as a function of sample size and also most importantly to assess the performance of Z-GCNETs and its competitors under a more challenging and much more realistic scenario of limited temporal records. For all methods (including our TMP-Nets and baselines), we run 5 times in the same partition and report the average accuracy along with the standard deviation.

Filtering Functions and Thresholds.

We select five filtering functions capturing different graph properties: 3 node sublevel filtrations (degree, closeness, and betweenness) and 2 power filtrations on edges (transaction and volume). The thresholds are chosen from equally spaced quantiles of either, function values (node sublevel filtration), or geodesic distances (power filtration). As a result, the number of thresholds depends upon the desired resolution for the MP grid. A too-low resolution does not provide sufficient topological information for graph learning tasks, whilst a too-high resolution unnecessarily increases the computational complexity. Based on our cross-validation experiments, we found 50 thresholds is a reasonable rule of thumb, working well in most studies.

Prediction Horizon.

For Ethereum blockchain token networks (i.e., Bytom, Decentraland, and Golem), according to (Chen, Segovia, and Gel 2021), we set the forecasting step as 7 and the sliding window size as 7. For traffic datasets (PeMSD4 and PeMD8), according to (Guo et al. 2019), we set the forecasting step as 5 and the sliding window size as 12.

Appendix C More on Persistent Homology

C.1 Zigzag Persistent Homology

While the notion of zigzag persistence is general, to keep the exposition simpler, we restrict ourselves to dynamic networks. For a given dynamic network , zigzag persistence detects pairwise compatible topological features in this time-ordered sequence of networks. While in single PH inclusions are always in the same direction (forward or backward), zigzag persistence and, more generally, the Mayer–Vietoris Diamond Principle allows us to consider evolution of topological features simultaneously in multiple directions (Carlsson, De Silva, and Morozov 2009). In particular, define a set of network inclusions over time

where is defined as a graph with a node set and an edge set .

Then, as before, by going to clique complexes of and , we obtain an ”extended” zigzag filtration induced by the dynamic network , which allows us to detect the topological features which persist over time. That is, we record time points where we first and last observe a topological feature over the considered time period, i.e., birth and death times of , respectively, and . Notice that in contrast to ordinary persistence, both birth and death times ( or ) can be fractional (corresponding to ) for . We then obtain Zigzag Persistence Diagram .

C.2 Stability for Single Persistence Vectorizations

For a given PD vectorization, stability is one of the most important properties for statistical purposes. Intuitively, the stability question is whether a small perturbation in PD causes a big change in the vectorization or not. To make this question meaningful, one needs to formalize what ”small” and “big” means in this context. That is, we need to define a notion of distance, i.e., a metric in the space of PDs. The most common such metric is called Wasserstein distance (or matching distance) which is defined as follows. Let and be persistence diagrams two datasets and . (We omit the dimensions in PDs). Let and where represents the diagonal (representing trivial cycles) with infinite multiplicity. Here, represents the birth and death times of a -hole . Let represent a bijection (matching). With the existence of the diagonal on both sides, we make sure of the existence of these bijections even if the cardinalities and are different. Then, Wasserstein distance defined as

where . Here, the bottleneck distance is .

Then, function is called stable if , where is a vectorization of and is a suitable metric in the space of vectorizations. Here, the constant is independent of . This stability inequality interprets that as the changes in the vectorizations are bounded by the changes in PDs. If a given vectorization holds such a stability inequality for some and , we call a stable vectorization (Atienza et al. 2020). Persistence Landscapes (Bubenik 2015), Persistence Images (Adams et al. 2017), Stabilized Betti Curves (Johnson and Jung 2021) and several Persistence curves (Chung and Lawson 2022) are among well-known examples of stable vectorizations.

Appendix D More on TMP Vectorizations

D.1 Further Examples of TMP Vectorizations

TMP Silhouettes.

Silhouettes are another very popular SP vectorization method in machine learning applications (Chazal et al. 2014). The idea is similar to persistence landscapes, but this vectorization uses the life span of the topological features more effectively. For , let be the generating function for as defined in Landscapes (Section 5). Then, Silhouette function is defined as , where the weight is mostly chosen as the lifespan , and represents the thresholds for the filtration used. Again such a Silhouette function produces a -vector of size as in persistence landscapes case.

As the structures of Silhouettes and Persistence Landscapes are very similar, so are their TMP Vectorizations. For a given time-dependent data , similar to persistence landscapes, we use time direction and filtering function direction for our TMP Silhouettes. For a filtering function with threshold set , we obtain TMP Silhouette as , where represents -row of the -vector and is the Silhouette vector induced by the zigzag persistence diagram for the time sequence . Again similar to the landscapes, by taking , , we obtain a -vector of size , where is the number of time steps in the data .

TMP Betti & TMP Persistence Summaries.

Next, we discuss an important family of SP vectorizations, Persistence Curves (Chung and Lawson 2022). This is an umbrella term for several different SP vectorizations, i.e. Betti Curves, Life Entropy, Landscapes, et al. Our TMP framework naturally adapts to all Persistence Curves to produce multidimensional vectorizations. As Persistence Curves produce a single variable function in general, they all can be represented as 1D-vectors by choosing a suitable mesh size depending on the number of thresholds used. Here, we describe one of the most common Persistence Curves in detail, i.e. Betti Curves. It is straightforward to generalize the construction to other Persistence Curves.

Betti curves are one of the simplest SP vectorizations as it gives the count of the topological feature at a given threshold interval. In particular, is the total count of -dimensional topological feature in the simplicial complex , i.e. (See Figure 2). For a given time-dependent data , we use zigzag persistence in time direction, which yields thresholds steps. Then, the Betti Curve for the zigzag persistence diagram is a step function with -intervals (we add another threshold after to interpret the last interval). As is a step function, it can be described as a vector of size , i.e. , where is the total count of topological features in . Here we omit the homological dimensions (i.e., subscript ) to keep the exposition simpler.

Then, by using a filtering function with threshold set for other direction, we define a TMP Betti curve as , where is the -row of the -vector . Here, is induced by the sublevel filtration for , i.e. . Then, is s -vector of size .

An alternate (and computationally friendly) route for TMP Betti Summaries is to bypass zigzag persistent homology and cliques and use directly clique complexes . This is because Betti curves do not need PDs, and they can be directly computed from the simplicial complexes . This way, we obtain a vector of size as . Then, this version of induced TMP Betti curve yields a -vector of size . It might have less information than the original zigzag version, but this is computationally much faster (Lesnick and Wright 2022) as one skips computation of PDs. Note that skipping zigzag persistence in time direction is only possible for Betti curves, as other vectorizations come from PDs, that is, lifespans, birth and death times are needed.

D.2 TMP Vectorizations and Multipersistence

Multipersistence theory is under intense research because of its promise to significantly improve the performance and robustness properties of single persistence theory. While single persistence theory obtains the topological fingerprint of single filtration, a multidimensional filtration with more than one parameter should deliver a much finer summary of the data to be used with ML models. However, multipersistence virtually has not reached any applications yet and remains largely unexplored by the ML community because of technical problems. Here, we provide a short summary of these issues. For further details, (Botnan and Lesnick 2022) gives a nice outline of the current state of the theory and major obstacles.

In single persistence, the threshold space being a subset of , is totally ordered, i.e., birth time death time for any topological feature appearing in the filtration sequence . By using this property, it was shown that “barcode decomposition” is well-defined in single persistence theory in the 1950s [Krull-Schmidt-Azumaya Theorem (Botnan and Lesnick 2022)–Theorem 4.2]. This decomposition makes the persistence module uniquely decomposable into barcodes. This barcode decomposition is exactly what we call a PD.

However, when one goes to higher dimensions, i.e. , then the threshold set is no longer totally ordered, but becomes partially ordered (Poset). In other words, some indices have ordering relation , while some do not, e.g., (2,3) vs. (1,5). Hence, if one has a multipersistence grid , we no longer can talk about birth time or death time as there is no ordering any more. Furthermore, Krull-Schmidt-Azumaya Theorem is no longer true for upper dimensions (Botnan and Lesnick 2022)–Section 4.2. Hence, for general multipersistence modules barcode decomposition is not possible, and the direct generalization of single persistence to multipersistence fails. On the other hand, even if the multipersistence module has a good barcode decomposition, because of partial ordering, representing these barcodes faithfully is another major problem. Multipersistence modules are an important subject in commutative algebra, where one can find the details of the topic in (Eisenbud 2013).

While complete generalization is out of reach for now, several attempts have been tried to utilize the MP idea by using one-dimensional slices in the MP grid in recent (Carrière and Blumberg 2020; Vipond 2020). Slicing techniques use the persistence diagrams of predetermined one-dimensional slices in the multipersistence grid and then combine (compress) them as one-dimensional output (Botnan and Lesnick 2022). In that respect, one major issue is that the topological summary highly depends on the predetermined slicing directions in this approach. The other problem is the loss of information when compressing the information in various persistence diagrams.

As explained above, the MP approach does not have theoretical foundations yet, and there are several attempts to utilize this idea. In this paper, we do not claim to solve theoretical problems of multipersistence homology but offer a novel, highly practical multidimensional topological summary by advancing the existing methods in spatio-temporal settings. We use the time direction in the multipersistence grid as a natural slicing direction and overcome the predetermined slices problem. Furthermore, for each filtering step of the spatial direction, unlike other MP vectorizations, we do not compress the induced PDs, but we combine them as multidimensional vectors (matrices or arrays). As a result, these multidimensional topological fingerprints are capable of capturing very fine topological information hidden in the spatio-temporal data. In the spatial direction, we filter the data with one (or more) domain function and obtain induced substructures, while in the time direction, we capture the evolving topological patterns of these substructures induced by the filtering function via zigzag persistence. Our fingerprinting process is highly flexible, one can easily choose the right single persistence vectorization to emphasize either density of short barcodes or give importance to long barcodes appearing in these PDs. We obtain multidimensional vectors (matrices and arrays) as output which are highly practical to be used with various ML models.

D.3 TMP Framework for General Types of Data

So far, to keep the exposition simpler, we described our construction for dynamic networks. However, our framework is suitable for various types of time-dependent data. Let be a time sequence of images or point clouds. Let be a filtering function that can be applied to all . Ideally, is a function that does not depend on , e.g if represent a sequence of images for time , can be taken as a grayscale function. If is a sequence of point clouds at different times, then can be defined as a density function.

Then, the construction is the same as before. Let be the filtering function with threshold set . Let . Then, for each , we have a time sequence . Let be the induced simplicial complexes to be used for filtration. Then, by taking , we apply Zigzag PH to this sequence as before.

Then, we obtain the zigzag persistence diagram for the filtration . Hence, we obtain zigzag PDs, , one for each . Then, again by applying a preferred SP vectorization to the persistence diagram , we have corresponding vector (say size ). Then, TMP vectorization can be defined as where represents -row of the -vector . Hence, TMP vectorization of , , becomes a -vector of size .

Appendix E Stability of TMP Vectorizations

In this part, we prove the stability theorem (Theorem 1) for TMP vectorizations. In particular, we prove that if the original SP vectorization is stable, then so is its TMP vectorization . Let and be two time sequences of networks. Let be a stable SP vectorization with the stability equation

| (1) |

for some . Here, represents Wasserstein- distance as defined before.

Now, by taking for TMP construction, we obtain bifiltrations and for each . We define the induced matching distance between the multiple PDs as

|

|

(2) |

Now, we define the distance between induced TMP Vectorizations as

| (3) |

Theorem 1: Let be a stable vectorization for single parameter PDs. Then, the induced TMP Vectorization is also stable, i.e. there exists such that for any pair of time-aware network sequences and , the following inequality holds

Proof.

WLOG, we assume SP vectorization produces a -vector. For or higher dimensional vectors, the proof will be similar. For any with and , we will have filtration sequences and . This produces a zigzag persistence diagrams and . Therefore, we have pairs of zigzag persistence diagrams.

Consider the distance definition for TMP vectorizations (Equation 3). Let be the indices realizing the maximum in the right side of the equation, i.e.

| (4) |

Then, by stability of (i.e., inequality (1)), we have

|

. |

(5) |

Now, as

|

, |

(6) |

by the definition of distance between TMP vectorizations (Equation 2), we find that

|

. |

(7) |

Finally,

Here, the leftmost equation follows from Equation 3. The first inequality follows from Equation 5. The final inequality follows from Equation 2. This concludes the proof of the theorem. ∎

Remark 2 (Stability with respect to Matching Distance).

While we define our own distance in the space of MP modules for suitability to our setup, if you take matching distance in the space of MP modules, our result still implies the stability for TMP vectorizations induced from stable SP vectorization with . In particular, our distance definition with specified slices is just a version of matching distance restricted only to horizontal slices. The matching distance between two multipersistence modules is defined as the supremum of the bottleneck () distances between single persistence diagrams induced from all one-dimensional fibers (slices) of the MP module, i.e. where represents the slice restricted to line in the MP grid (Dey and Wang 2022, Section 12.3).

In this sense, with this notation, our distance would be a restricted version of matching distance as follows: where represent horizontal slices, then . Then, by combining with our stability theorem, we obtain . Hence, if two MP modules are close to each other in the matching distance, then their corresponding TMP vectorizations are close to each other, too.

To sum up, for TMP vectorizations induced from stable SP vectorization with , our result naturally implies stability with respect to matching distance on multipersistence modules. The condition comes from the bottleneck distance () used in the definition of . If one defines a generalization of matching distance for other Wasserstein distances for , then a similar result can hold for other stable TMP vectorizations.

| Notation | Definition |

|---|---|

| the spatial network at timestamp | |

| the node set at timestamp | |

| the edge set at timestamp | |

| the edge weights at timestamp | |

| the number of nodes at timestamp | |

| a time series of graphs | |

| an abstract simplicial complex | |

| the highest dimension in a simplicial complex | |

| a -dimensional topological feature | |

| the life span of | |

| dimensional persistent diagram | |

| homology group | |

| and | two filtering functions for sublevel filtration |

| multivariate filtering function | |

| rectangular grid for bifiltrations | |

| the zigzag persistence diagram | |

| a single persistence vectorization | |

| the vector from | |

| TMP Vectorization of | |

| persistence landscape | |

| TMP Landscape | |

| persistence surface | |

| TMP Persistence Image | |

| distance between persistence diagrams | |

| distance between TMP Vectorizations | |

| node feature matrix | |

| graph convolution on adptive adjacency matrix | |

| image-level local topological feature | |

| Wasserstein distance | |

| Bottleneck distance |