Top Gear or Black Mirror: Inferring Political Leaning from Non-political Content

Abstract

Polarization and echo chambers are often studied in the context of explicitly political events such as elections, and little scholarship has examined the mixing of political groups in non-political contexts. A major obstacle to studying political polarization in non-political contexts is that political leaning (i.e., left vs right orientation) is often unknown. Nonetheless, political leaning is known to correlate (sometimes quite strongly) with many lifestyle choices, leading to stereotypes such as the “latte-drinking liberal.” We develop a machine learning classifier to infer political leaning from non-political text and, optionally, the accounts a user follows on social media. We use Voter Advice Application results shared on Twitter as our ground truth and train and test our classifier on a Twitter dataset comprising the 3,200 most recent tweets of each user after removing any tweets with political text. We correctly classify the political leaning of most users (F1 scores range from 0.70 to 0.85 depending on coverage). We find no relationship between the level of political activity and our classification results. We apply our classifier to a case study of news sharing in the UK and discover that, in general, the sharing of political news exhibits a distinctive left–right divide while sports news does not.

Introduction

Word choice, grammatical structures, and other linguistic features often correlate with demographic variables such as age and gender, although audience and other performative aspects can play a significant role Wang et al. (2019); Nguyen et al. (2016).

Latent attribute inference describes the field of research in computer science that tries to infer demographic and other attributes from individuals’ behaviours. While much attention has been paid to inferring gender, age, and location from social media data Wang et al. (2019); Liu et al. (2019); Zagheni et al. (2017); Zhang et al. (2016); Chen et al. (2015); Sap et al. (2014); Nguyen et al. (2013); Rosenthal and McKeown (2011); Rao et al. (2010), less work has been done on inferring political orientation. Nonetheless, political orientation is known to correlate (sometimes quite strongly) with many lifestyle choices, which leads to stereotypes such as the “latte-drinking liberal” or “bird-hunting conservative” DellaPosta et al. (2015). Individuals’ perception of their own political identities also correlates with the degree of moral value adoption and behaviour Talaifar and Swann (2019). Furthermore, political leaning may influence perceptions of non-political topics: Ahn et al. (2014) were able to infer political leaning from fMRI data in which subjects were shown non-political images as stimuli. Even academic publications and their findings contain some signals of the political leanings of their authors Jelveh et al. (2014).

It is therefore likely that the content people share, the accounts they follow, and the words they use on social media also contain traces of their political orientation, and that a properly trained machine learning system can infer individuals’ political orientations even in non-political contexts.

Although there are several political spectrum models Eysenck (1964); Sznajd-Weron and Sznajd (2005); Kitschelt (1994), in this study, we focus specifically on a unidimensional left–right spectrum, given its ubiquity in political science and popular culture alike.

We develop and freely share our machine learning system, which achieves an F1 score of more than 0.85 even when considering non-political content. Our ground-truth data come from individuals who used Voter Advice Applications (VAAs) during the 2015 and 2017 UK General Elections. Many respondents shared their VAA results on social media during the elections, and we use these social media accounts and their VAA results to train our system. We gather multiple datasets, including these users’ political and non-political Twitter activity.

After developing our classifier, we use the system to infer the political orientations of individuals sharing news articles to investigate the level of polarization in sharing different types of news and from different sources. Specifically, we investigate the sharing of political and sports news from The Telegraph (right-leaning), the BBC (centrist), and The Guardian (left-leaning).

We first present our classifier’s motivation, development, and evaluation before turning to the news sharing case study. Finally, we conclude with broader implications and future research directions.

Inferring political leaning

Political ideology prediction from digital trace data has become a core interest for researchers. Prior work has focused on predicting ideological stances using text data, network data, or a combination.

Social media, news outlets, and parliamentary discussions are the most popular sources of textual data: work on social media has used both users’ and politicians’ textual data Preoţiuc-Pietro et al. (2017); Conover et al. (2011). Newsgroups are also often political, and the political leanings of their text have been studied Kulkarni et al. (2018); Iyyer et al. (2014). Although legislators are already officially affiliated with a political party, several studies have classified the members of the US Congress Iyyer et al. (2014); Diermeier et al. (2012); Yu et al. (2008).

Text-based approaches focus on the words people choose to use Preoţiuc-Pietro et al. (2017), the style of their messages, and social-media specific attributes such as hashtags Weber et al. (2013); Conover et al. (2011). These approaches work well for polarised topics where the left and right use different phrases to talk about the same things: for example, “gun control” and “gun rights.”

Network approaches have built networks both from textual elements such as hashtags, mentions, and URLs Gu et al. (2016) and from social links Barberá (2015).

Within Twitter, there is a divide between using either retweets and mentions (e.g., Conover et al., 2011; Gu et al., 2016; Hale, 2014) or followers and friends (e.g., King et al., 2016; Barberá, 2015; Golbeck and Hansen, 2014; Pennacchiotti and Popescu, 2011; Zamal et al., 2012; Compton et al., 2014) to infer latent attributes. Networks based on mentions and retweets are often preferred over followers for two reasons. First, taking a snapshot of an extensive network is difficult, given the Twitter API rate limits. Second, retweets and mentions often have greater recency, can be analysed temporally, and can form weighted networks (e.g., based on how many times users mention each other). As retweeting is more publicly visible than following a user, there is also a greater social cost to retweeting another account, suggesting that users will more carefully consider which users they retweet or mention. Retweeting has been used as a sign of support (e.g., Conover et al., 2011; Wong et al., 2016), but it is important to note that some mentions, retweets, and hashtag uses will be specifically to call attention to content with which a user disagrees.

As detailed below, we use both words (through topic modeling) in political and non-political text and network features (the accounts a user follows) to estimate the political leaning of social media users.

We find network features and political text equally reveal political leaning, but network features have lower coverage. Inferring political leaning with non-political text is less accurate, but network data helps in that task and performance is on par with previous work inferring political leaning from other sources.

Ground truth data

We collected all mentions of social media accounts and URLs associated with the three most-used voter advice applications (VAAs) during the 2015 and 2017 General Elections in the United Kingdom. (We used commercial firehose access for the 2015 election and elevated direct access during the 2017 election. We believe our dataset represents the entire population as closely as possible.) VAAs ask individuals a series of political and policy issue questions to help them understand their preferences and how these align with political candidates running for office. Users of VAAs have the opportunity (but not requirement) to share their results on social media.

The three VAAs from which we collected data were “I Side With You” (ISW), “Who Should You Vote For” (WSYVF), and “Vote Match” (VM). We found 4,118 unique users who shared their VAA results: 2,975 of these users came from ISW, 794 from VM, and 387 from WSYVF. Our data include only original tweets, not retweets of VAA result URLs. Thirty-eight users shared results from multiple VAAs.

After collecting the data during the elections, we extracted all VAA result URLs in July 2018, downloaded the content of VAA result pages, and extracted users’ matches with political parties. We furthermore obtained the most recent 3,200 public tweets as of January 2019 of each user who shared a VAA result by using the user_timeline endpoint of Twitter’s public RESTful API.

All VAAs provided the voter’s alignment with individual political parties. WSYVF also gave a left–right score, but the exact details of this score are unknown. Therefore, we develop our own left–right or political leaning score using only the matches to parties, which can be applied consistently across all three VAAs.

We calculate political leaning scores by subtracting the user’s match to Labour from their match to the Conservatives (the UK’s two largest parties), so that positive scores indicate a closer match to the right. (We experimented with summing a user’s match with left-leaning parties (Labour, SNP, Greens, Plaid Cymru, Sinn Fein) and subtracting this from the sum of the user’s match to right-leaning parties (Conservatives, UKIP, British National). This procedure produced similar results but was less transparent, as not all parties are available in all constituencies.) We normalize all scores into the [-1, 1] interval for each VAA before combining data from all the VAAs.

Users with a political leaning score greater than zero are labelled as “right-leaning” while those with scores less than zero are labelled as “left-leaning.” We dropped all users with political leaning scores equal to zero. A small number of users shared results from multiple VAAs. We checked for consistency and removed one inconsistent user from our dataset. We created one political leaning score for users with multiple but consistent political leanings by taking the mean of their political leaning scores from the multiple VAAs.
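The scoring and labelling rules above can be sketched as follows. This is a minimal illustration, not the authors' code: it assumes each VAA's party matches arrive as numbers keyed by the hypothetical names `"Conservative"` and `"Labour"`, and, because the exact normalization is not specified, it assumes each VAA's raw scores are divided by their largest absolute value to map them into [-1, 1].

```python
from statistics import mean


def leaning_scores(matches_by_vaa):
    """matches_by_vaa: {vaa: {user: {"Conservative": x, "Labour": y}}} (hypothetical layout).

    Returns {user: score}, where score > 0 means right-leaning and score < 0 left-leaning.
    """
    per_user = {}
    for vaa, users in matches_by_vaa.items():
        # Conservative match minus Labour match: positive = closer to the right.
        raw = {u: m["Conservative"] - m["Labour"] for u, m in users.items()}
        # Assumption: normalize within each VAA by the largest absolute raw score.
        scale = max((abs(s) for s in raw.values()), default=0) or 1.0
        for u, s in raw.items():
            per_user.setdefault(u, []).append(s / scale)

    merged = {}
    for u, scores in per_user.items():
        signs = {(s > 0) - (s < 0) for s in scores}
        if len(signs) > 1:  # inconsistent leaning across VAAs: drop the user
            continue
        avg = mean(scores)  # users with several consistent results get the mean
        if avg == 0:        # a zero score carries no leaning: drop the user
            continue
        merged[u] = avg
    return merged


def label(score):
    return "right" if score > 0 else "left"
```

Dividing by a positive constant preserves the sign of each score, so the left/right label itself does not depend on this normalization choice.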

In total, our ground-truth dataset contains 2,694 unique users from all three VAAs. In January 2019, we collected the last 3,200 tweets of 1,921 of these users.

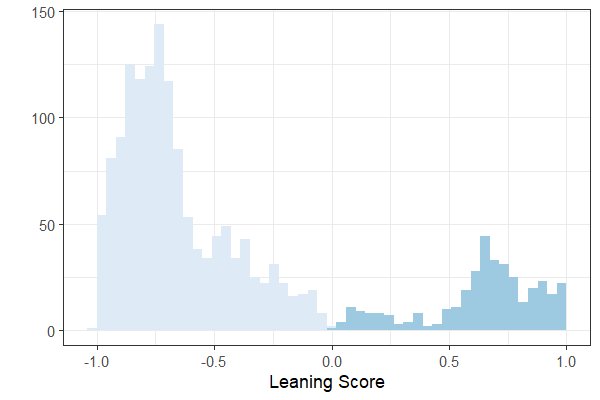

We detect the language of all tweets using the Compact Language Detector 2 Sites (2013) and remove all users who had fewer than 75% of their 3,200 most recent tweets in English. Last, we remove users who tweeted fewer than 100 times. The final dataset comprises 1,760 users, of whom 1,396 are left-leaning and 364 are right-leaning (Figure 1).
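A sketch of the two user filters, assuming each tweet's language code (for example, `"en"`) has already been detected; the CLD2 call itself is not shown, and the function name `keep_user` is hypothetical. It also assumes the number of collected tweets stands in for how often a user has tweeted.

```python
def keep_user(tweet_langs, min_english=0.75, min_tweets=100):
    """tweet_langs: detected language codes for a user's most recent tweets.

    Returns True if the user has at least `min_tweets` tweets and at least
    `min_english` of them are in English.
    """
    if len(tweet_langs) < min_tweets:
        return False
    english = sum(1 for lang in tweet_langs if lang == "en")
    return english / len(tweet_langs) >= min_english
```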

Predictive variables

We build five datasets using users’ tweets and Twitter ‘friends’ (i.e., the accounts a user follows). The first three datasets are (1) network information, (2) political text, and (3) non-political text. The final two are hybrid datasets constructed by joining textual and network data.

Textual data

We create two datasets of texts from tweets authored by the users. The first is a set of political text from tweets containing a political term (e.g., #ge2015, labour) primarily collected during the 2015 and 2017 elections with firehose or elevated streaming API access. The second dataset, non-political text, is mainly from users’ most recent 3,200 tweets collected with the RESTful API. We find that some recent tweets are political (e.g., discussing Brexit) and exclude tweets with political words from the non-political dataset.

Determining political tweets

To detect political tweets, we develop a two-step procedure. In the first step, we compute a political index from word occurrence frequencies within and outside election periods and use it to extract an initial list of political words. In the second step, we train a word embedding model to extend this list of political terms.

In the initial step, we select words that appear in at least 250 different tweets during an election period (GE2010: 2010-04-12 to 2010-06-06; GE2015: 2014-11-19 to 2015-06-07; GE2017: 2017-04-18 to 2017-07-08). Then, we count each word’s occurrences both within and outside the election periods and normalize these two frequencies by the total tweet count for each period. Finally, we divide the out-of-election frequency by the in-election frequency to obtain a political index, so words used mostly during elections receive low scores.

In our case, a political index threshold of 0.25 yields a list of 316 words with scores below 0.25. However, this initial list contains several ambiguous words. Therefore, we manually examined all words and removed 78 vague terms (#eurovision2015, #pensioners, youtuber, tuition, etc.) before continuing to the second step. We also add political words that were present throughout the whole period, including ‘brexit’, ‘eu’, ‘trump’, ‘clinton’, ‘sanders’, ‘johnson’, ‘gop’, and ‘referendum.’
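The political index can be sketched as below. The whitespace tokenizer and the function names are illustrative assumptions; the counts follow the description of tweet-level occurrence frequencies normalized by each period's tweet count.

```python
from collections import Counter


def tweet_frequencies(tweets):
    """Number of tweets each lower-cased, whitespace-split word appears in."""
    counts = Counter()
    for text in tweets:
        counts.update(set(text.lower().split()))
    return counts


def political_index(in_tweets, out_tweets, min_tweets=250):
    """Out-of-election frequency divided by in-election frequency, per word."""
    in_counts = tweet_frequencies(in_tweets)
    out_counts = tweet_frequencies(out_tweets)
    n_in, n_out = len(in_tweets), len(out_tweets)
    index = {}
    for word, c_in in in_counts.items():
        if c_in < min_tweets:  # keep only words frequent during elections
            continue
        index[word] = (out_counts[word] / n_out) / (c_in / n_in)
    return index


def candidate_political_words(index, threshold=0.25):
    """Words whose index falls below the threshold, before manual review."""
    return sorted(w for w, score in index.items() if score < threshold)
```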

In the second step, we use word embeddings to determine the closest terms to the political words extracted in the first step. We train a skip-gram embedding model (skip window: 5, minimum frequency: 100). Using Euclidean distance, we choose the three closest words to each political term in the list. Then, we again manually inspect all words and remove ambiguous terms. This results in 433 political words. The complete list of words is available within the supplemental materials.
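The neighbour search in the second step might look like the following, assuming the trained skip-gram vectors are available as a plain `{word: vector}` dictionary (training the embedding model is not shown, and `expand_terms` is a hypothetical name). The returned candidates would still go through the manual inspection described above.

```python
import math


def expand_terms(seed_terms, embeddings, k=3):
    """Return the k nearest words (Euclidean distance) to each seed term,
    excluding words already in the seed list."""
    candidates = set()
    for seed in seed_terms:
        if seed not in embeddings:
            continue
        vec = embeddings[seed]
        neighbours = sorted(
            (math.dist(vec, other_vec), word)
            for word, other_vec in embeddings.items()
            if word != seed
        )
        candidates.update(word for _, word in neighbours[:k])
    return candidates - set(seed_terms)
```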

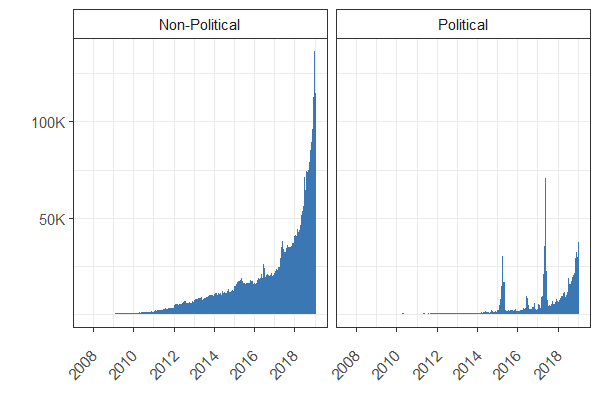

We label any tweet containing a term from this final list as political. Other tweets are labelled non-political. In the end, the non-political dataset consists of 3.66 million tweets, and the political dataset contains 888 thousand tweets (Figure 2).
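Labelling tweets against the final term list can be sketched as a simple token-membership test. The regular-expression tokenizer, which keeps a leading `#` so that hashtags such as `#ge2015` match list entries, is an assumption rather than the authors' exact procedure.

```python
import re

# Assumed tokenizer: optional leading '#' followed by word characters.
TOKEN = re.compile(r"#?\w+")


def is_political(tweet, political_terms):
    """True if any token of the tweet appears in the political term list."""
    tokens = set(TOKEN.findall(tweet.lower()))
    return not tokens.isdisjoint(political_terms)


def split_tweets(tweets, political_terms):
    """Partition tweets into (political, non_political) lists."""
    political, non_political = [], []
    for t in tweets:
        (political if is_political(t, political_terms) else non_political).append(t)
    return political, non_political
```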

Network data

We collected the accounts that users in our dataset followed (their ‘friends’) on Twitter for 1,742 of the 1,760 users in our dataset who shared VAA results. Next, we create a binary matrix where the columns are Twitter accounts followed by multiple users, and the rows are the users in our dataset.
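A minimal sketch of the binary network matrix, assuming each user's friends are given as a set of account IDs and reading "followed by multiple users" as followed by at least two users in the dataset. The function name is hypothetical.

```python
from collections import Counter


def follow_matrix(friends, min_followers=2):
    """friends: {user: set of followed account IDs}.

    Returns (row users, column accounts, 0/1 rows), keeping only accounts
    followed by at least `min_followers` users in the dataset.
    """
    counts = Counter(acc for accs in friends.values() for acc in set(accs))
    columns = sorted(acc for acc, c in counts.items() if c >= min_followers)
    users = sorted(friends)
    rows = [[1 if acc in friends[u] else 0 for acc in columns] for u in users]
    return users, columns, rows
```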

Approach

Our goal is to estimate political leaning at a per-user level, not at a per-tweet level. We therefore sort all tweets of each user by date and concatenate them into two text documents per user: one for political text and one for non-political text.

To construct document-feature matrices (DFMs), we remove URLs and non-alphabetic characters from the text. Next, we stem all words using Porter’s algorithm Porter (1980) and exclude words from the SMART stop word list Lewis et al. (2004). Finally, we create unigrams, bigrams, and trigrams as input features to our classifier, so each document (the combined tweets of one user) is represented by its unigram, bigram, and trigram counts.
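The preprocessing steps can be sketched in Python as below. Two stand-ins are assumptions: `STOPWORDS` is a tiny placeholder rather than the SMART list, and stemming is an injectable function that defaults to the identity (a Porter stemmer, such as `PorterStemmer().stem` from NLTK, could be passed instead). N-grams are joined with `_`, matching feature names such as `unit_kingdom` in the topic word lists.

```python
import re
from collections import Counter

URL = re.compile(r"https?://\S+")
NON_ALPHA = re.compile(r"[^a-z\s]")

# Placeholder: a handful of stop words standing in for the full SMART list.
STOPWORDS = {"the", "a", "an", "and", "of", "to", "is", "in", "on", "for"}


def user_document(tweets):
    """Concatenate (timestamp, text) tweets, oldest first, into one document."""
    return " ".join(text for _, text in sorted(tweets))


def ngram_features(document, stem=lambda w: w, max_n=3):
    """Count unigrams to `max_n`-grams after removing URLs, non-alphabetic
    characters, and stop words (stop words are checked before stemming so
    the unstemmed stop list still matches)."""
    text = NON_ALPHA.sub(" ", URL.sub(" ", document.lower()))
    tokens = [stem(w) for w in text.split() if w not in STOPWORDS]
    feats = Counter()
    for n in range(1, max_n + 1):
        for i in range(len(tokens) - n + 1):
            feats["_".join(tokens[i:i + n])] += 1
    return feats
```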

To reduce the feature dimension, we remove sparse terms, setting the sparsity threshold to 0.9 for the political dataset and to 0.85 for the non-political dataset. The resulting political DFM consists of 1,760 users and 4,676 features, and the non-political DFM contains 1,760 users and 6,613 features. We also remove sparse terms from the network DFM with a sparsity threshold of 0.88; the resulting network DFM consists of 1,742 users and 55 features (followed accounts).
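The sparsity filter can be sketched as follows, assuming the semantics of R's `tm::removeSparseTerms`, where a sparsity of 0.9 keeps only features that occur in more than 10% of documents. Documents are represented as `{feature: count}` dictionaries for illustration, and `trim_sparse` is a hypothetical name.

```python
from collections import Counter


def trim_sparse(doc_features, sparsity):
    """Drop features that are absent (zero) in at least `sparsity` of documents.

    Returns (trimmed documents, set of kept features).
    """
    n_docs = len(doc_features)
    doc_freq = Counter(f for doc in doc_features for f, c in doc.items() if c > 0)
    min_docs = n_docs * (1 - sparsity)
    keep = {f for f, df in doc_freq.items() if df > min_docs}
    trimmed = [{f: c for f, c in doc.items() if f in keep} for doc in doc_features]
    return trimmed, keep
```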

Finally, our dataset contains more left-leaning than right-leaning users, so we select all of the right-leaning users and an equal number of randomly chosen left-leaning users. We combine these two subsets and shuffle the documents in the resulting DFM, which contains 728 users balanced across our two classes (left-leaning and right-leaning) in the political and non-political sample datasets. When network data are used alone or in combination with text, the numbers are slightly lower because network data were not available for all users.
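The balancing step is random undersampling of the majority class. A minimal sketch, with a fixed seed for reproducibility (an assumption; the study does not report how its random samples were drawn):

```python
import random


def balance_by_undersampling(users, labels, seed=0):
    """Keep every user of the minority class and an equal-sized random sample
    of the majority class, then shuffle the combined list."""
    rng = random.Random(seed)
    left = [u for u in users if labels[u] == "left"]
    right = [u for u in users if labels[u] == "right"]
    minority, majority = sorted((left, right), key=len)
    sample = minority + rng.sample(majority, len(minority))
    rng.shuffle(sample)
    return sample
```

Repeating this with different seeds gives the several balanced samples over which results are averaged.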

Table 1: Mean F1 scores from 10-fold cross-validation on the training data, averaged across all folds and five balanced samples.

| Dataset | NB | NN | SVMlin | SVMpoly | SVMrad |
|---|---|---|---|---|---|
| net | 0.27 | 0.75 | 0.73 | 0.75 | 0.75 |
| non-pol | 0.54 | 0.64 | 0.60 | 0.65 | 0.65 |
| non-pol+net | 0.59 | 0.70 | 0.68 | 0.71 | 0.72 |
| pol | 0.66 | 0.75 | 0.74 | 0.73 | 0.72 |
| pol+net | 0.67 | 0.69 | 0.66 | 0.71 | 0.67 |

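Table 2: F1, precision (P), and recall (R) on the held-out test data, averaged across five balanced samples. Each cell gives F1 / P / R.

| Classifier | net | non-pol | non-pol+net | pol | pol+net |
|---|---|---|---|---|---|
| Naïve Bayes | 0.29 / 0.17 / 1.00 | 0.64 / 0.63 / 0.67 | 0.70 / 0.69 / 0.72 | 0.75 / 0.79 / 0.71 | 0.73 / 0.75 / 0.71 |
| Neural Network | 0.77 / 0.71 / 0.83 | 0.69 / 0.67 / 0.73 | 0.77 / 0.74 / 0.80 | 0.81 / 0.80 / 0.82 | 0.74 / 0.70 / 0.78 |
| SVMlin | 0.74 / 0.66 / 0.85 | 0.65 / 0.59 / 0.78 | 0.72 / 0.66 / 0.80 | 0.79 / 0.76 / 0.83 | 0.74 / 0.68 / 0.82 |
| SVMpoly | 0.77 / 0.72 / 0.83 | 0.69 / 0.72 / 0.71 | 0.78 / 0.74 / 0.83 | 0.74 / 0.78 / 0.79 | 0.75 / 0.70 / 0.81 |
| SVMrad | 0.78 / 0.74 / 0.83 | 0.70 / 0.81 / 0.67 | 0.75 / 0.70 / 0.81 | 0.74 / 0.77 / 0.78 | 0.73 / 0.65 / 0.83 |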

Classification procedure

Our classification procedure follows these steps: (1) We split each sample into training (80%) and test (20%) sets. (2) We create a topic model using only the training data. (3) We train the political leaning classifiers on the training data using 10-fold cross-validation. (4) We apply the topic model to the test data, and (5) we predict the leanings of the test data. By following this procedure, we completely isolate the test dataset from both the topic generation and classifier training processes.
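The ordering that keeps the test set isolated can be sketched with the topic model and classifier passed in as callables. This is a hypothetical harness, not the authors' implementation: `fit_topics`, `infer_topics`, and `train_classifier` are placeholder names, and any cross-validated tuning (step 3) is assumed to happen inside `train_classifier`.

```python
import random


def evaluate_isolated(docs, labels, fit_topics, infer_topics, train_classifier,
                      test_share=0.2, seed=0):
    """Split first; fit the topic model and classifier on training data only.

    fit_topics(train_docs) -> model
    infer_topics(model, docs) -> feature rows (e.g., topic proportions)
    train_classifier(rows, labels) -> predict(rows) -> labels
    Returns (true test labels, predicted test labels).
    """
    idx = list(range(len(docs)))
    random.Random(seed).shuffle(idx)
    n_test = round(len(idx) * test_share)
    test_idx, train_idx = idx[:n_test], idx[n_test:]          # step 1

    train_docs = [docs[i] for i in train_idx]
    topic_model = fit_topics(train_docs)                      # step 2: training docs only
    train_x = infer_topics(topic_model, train_docs)
    predict = train_classifier(train_x, [labels[i] for i in train_idx])  # step 3

    test_x = infer_topics(topic_model, [docs[i] for i in test_idx])      # step 4
    predictions = predict(test_x)                                        # step 5
    return [labels[i] for i in test_idx], predictions
```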

We use Structural Topic Models (STM) to create our topic models. However, we do not provide prevalence covariates, to avoid biasing topic generation, so the topic modelling algorithm receives only text data. In this case, STM reduces to an implementation of the Correlated Topic Model (CTM) Roberts et al. (2019).

We treat the number of topics as a hyperparameter that we tune through cross-validation on the training data. We find that 150 topics give the most meaningful results, which we attribute to the large corpus (3.8M) and long time span (approx. nine years). We use spectral initialization, as advised by the creators, since it generates ‘better’ and more ‘consistent’ topics by using “a spectral decomposition (non-negative matrix factorization) of the word co-occurrence matrix” Roberts et al. (2019). Once the model is fitted, we use its theta distributions as features to classify documents (users); theta gives the estimated proportion of each document devoted to each topic.

To infer political leanings and compare the results, we train and compare neural network (NN), Naïve Bayes (NB), and support-vector machine (SVM) classifiers. The SVMs are trained with linear, radial and polynomial kernels. SVM, NB and NN are three of the most popular machine learning methods to classify text, although the ‘best’ approach depends heavily on the data and the specific task being performed Wang and Manning (2012); Ng and Jordan (2001).

After performing a classification, we can apply a threshold to the classifier’s probabilistic output. If the probability of a user’s predicted class is above the threshold, we assign them the predicted outcome; otherwise, we assign them the value ‘unknown’. Table 3 reports results at several thresholds for the best-performing model on each dataset, and its ‘Unknown’ column reports the share of users in each classification task with probabilities below the stated threshold.
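A sketch of the thresholding rule for a binary classifier, assuming the model outputs the probability that a user is right-leaning and that the threshold applies to the probability of the predicted class. Keeping ties at the threshold is also an assumption; it makes a threshold of 0.50 leave no users unknown, as in the first row for each dataset in Table 3.

```python
def apply_threshold(prob_right, threshold):
    """Return 'right', 'left', or 'unknown' for one user.

    prob_right: classifier probability that the user is right-leaning.
    The user is labelled only if the winning class's probability reaches
    the threshold (ties kept, an assumption).
    """
    label = "right" if prob_right >= 0.5 else "left"
    confidence = max(prob_right, 1 - prob_right)
    return label if confidence >= threshold else "unknown"
```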

Classification results

We first calculate F1 scores using 10-fold cross-validation on the training data (Table 1). For those results, we repeat the classification with five different balanced random samples and report the average F1 scores across all folds and samples. We also report F1, precision, and recall scores on the entirely held-out test data (Table 2). This test data had no role in creating the topic models or other features and better approximates real-world performance.

In Table 1, we compare the performance of our models on the training data. The neural network, SVMpoly, and SVMrad models perform similarly on the network dataset. The SVMlin and neural network models perform closely on the political text and network-only datasets. The F1 scores for the dataset combining political text and network data are generally lower than for either alone, suggesting the signals from these two sources somewhat overlap.

In Table 2, we compare the neural network, SVM and NB model performances on the unseen test data averaged across five balanced samples. The results show the best performing classifier changes with the task. The neural network model performs best on the political dataset, but the SVMpoly classifier has the highest F1 score for both hybrid datasets. Lastly, for the non-political and network datasets the SVMrad model has the highest F1 score.

Our main objective in this paper is to understand how well political leaning can be predicted from non-political text, so we further explore how different probability thresholds can be applied to the outputs of the best-performing classifiers and report the results in Table 3.

For the non-political dataset, we select the SVMrad model, and for the non-political+network dataset, we select the SVMpoly model. Classifying political leaning from non-political tweets is more challenging than using political tweets. Incorporating network data helps increase coverage and accuracy in the non-political case. However, even without network data, it is possible to infer a political leaning for nearly half of the users with high performance (for a probability threshold of 0.51, the F1 score is 0.90, but 56% of users are classified as being of an unknown political leaning).

Estimating political leaning from political tweets has a similar F1 score to using network data alone. However, combining network and political text data results in lower confidence estimates (and a higher number of unknown users than either text or network data alone at each threshold).

Varying the probability threshold makes the trade-off between performance and coverage easier to compare across models. The threshold excludes users with less certain results and marks them as unknown. The impact of each threshold on performance is reasonably consistent across input datasets: F1 scores increase with higher thresholds, but so too do the percentages of users classified with an unknown political leaning. For example, for the non-political input dataset, a 0.62 probability threshold increases the F1 score by 23 percentage points (from 0.77 to 1.00) but also marks nearly 97% of users in the dataset as unknown. The effects are similar for other input datasets but less extreme.
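The trade-off can be reproduced in outline by scoring only users with a known prediction and reporting the unknown share alongside. Treating right-leaning as the positive class and excluding unknown users from precision and recall are assumptions about how these figures are computed; `thresholded_scores` is a hypothetical name.

```python
def thresholded_scores(truth, predicted, positive="right"):
    """truth, predicted: parallel label lists; predicted may contain 'unknown'.

    Precision, recall, and F1 use only users with a known prediction;
    'unknown' is the share of users left unlabelled.
    """
    known = [(t, p) for t, p in zip(truth, predicted) if p != "unknown"]
    unknown_share = 1 - len(known) / len(truth)
    tp = sum(t == positive and p == positive for t, p in known)
    fp = sum(t != positive and p == positive for t, p in known)
    fn = sum(t == positive and p != positive for t, p in known)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"f1": f1, "precision": precision, "recall": recall, "unknown": unknown_share}
```

Raising the threshold moves more users into `unknown`, which typically raises the scores on the remaining users while lowering coverage.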

Table 3: Performance of the best-performing model for each dataset at different probability thresholds. Unknown is the share of users whose probability falls below the threshold.

| Dataset (model) | Prob. Threshold | F1 | Prec. | Rec. | Unknown |
|---|---|---|---|---|---|
| net (SVMrad) | 0.50 | 0.86 | 0.85 | 0.87 | 0.00 |
| | 0.64 | 0.90 | 0.88 | 0.92 | 0.23 |
| | 0.68 | 0.95 | 0.95 | 0.95 | 0.41 |
| non-pol (SVMrad) | 0.50 | 0.77 | 0.75 | 0.79 | 0.00 |
| | 0.51 | 0.90 | 0.93 | 0.87 | 0.56 |
| | 0.62 | 1.00 | 1.00 | 1.00 | 0.97 |
| non-pol+net (SVMpoly) | 0.50 | 0.85 | 0.82 | 0.88 | 0.00 |
| | 0.70 | 0.90 | 0.90 | 0.90 | 0.36 |
| | 0.78 | 0.95 | 0.95 | 0.95 | 0.47 |
| pol (NN) | 0.50 | 0.85 | 0.83 | 0.87 | 0.00 |
| | 0.84 | 0.90 | 0.88 | 0.92 | 0.27 |
| | 0.94 | 0.95 | 0.92 | 0.97 | 0.41 |
| pol+net (SVMpoly) | 0.50 | 0.81 | 0.75 | 0.87 | 0.00 |
| | 0.74 | 0.91 | 0.90 | 0.92 | 0.52 |
| | 0.76 | 0.96 | 0.97 | 0.94 | 0.59 |

Post-hoc analysis

We extract the eleven most important topics and the ten most influential Twitter accounts from our best performing non-political+network SVMpoly model.

Table 4 shows the 10 most significant Twitter accounts in the SVMpoly non-political+network model. Using our ground-truth dataset, we compare the normalized numbers of left- and right-leaning followers of these accounts and find that a higher proportion of the users following @10DowningStreet, @realDonaldTrump, and @JeremyClarkson are right-leaning. The remaining accounts are followed more by left-leaning users than by right-leaning users. Although most of these accounts are political, two are non-political: Jeremy Clarkson (the former Top Gear presenter) and Charlie Brooker (the creator of Black Mirror).
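The normalization can be sketched as the percentage of each group that follows an account, so that the much larger left-leaning group does not dominate raw counts. This is our reading of "normalized"; the function and argument names are hypothetical.

```python
def follower_shares(account, friends, labels):
    """Percentage of left- and right-leaning users who follow `account`.

    friends: {user: set of followed accounts}; labels: {user: 'left' | 'right'}.
    """
    shares = {}
    for side in ("left", "right"):
        group = [u for u in labels if labels[u] == side]
        following = sum(account in friends.get(u, ()) for u in group)
        shares[side] = 100 * following / len(group) if group else 0.0
    return shares
```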

Table 4: The ten most influential Twitter accounts in the SVMpoly non-political+network model, with the normalized percentages of left- and right-leaning ground-truth users following each account.

| Account | Screen Name | Left (%) | Right (%) |
|---|---|---|---|
| Jeremy Corbyn | @jeremycorbyn | 47 | 11 |
| Owen Jones | @OwenJones84 | 33 | 4 |
| UK Prime Minister | @10DowningStreet | 17 | 36 |
| Donald J. Trump | @realDonaldTrump | 16 | 39 |
| Charlie Brooker | @charltonbrooker | 30 | 8 |
| Caroline Lucas | @CarolineLucas | 25 | 2 |
| Jeremy Clarkson | @JeremyClarkson | 15 | 35 |
| The Green Party | @TheGreenParty | 20 | 3 |
| Jon Snow | @jonsnowC4 | 25 | 7 |
| NHS Million | @NHSMillion | 21 | 3 |

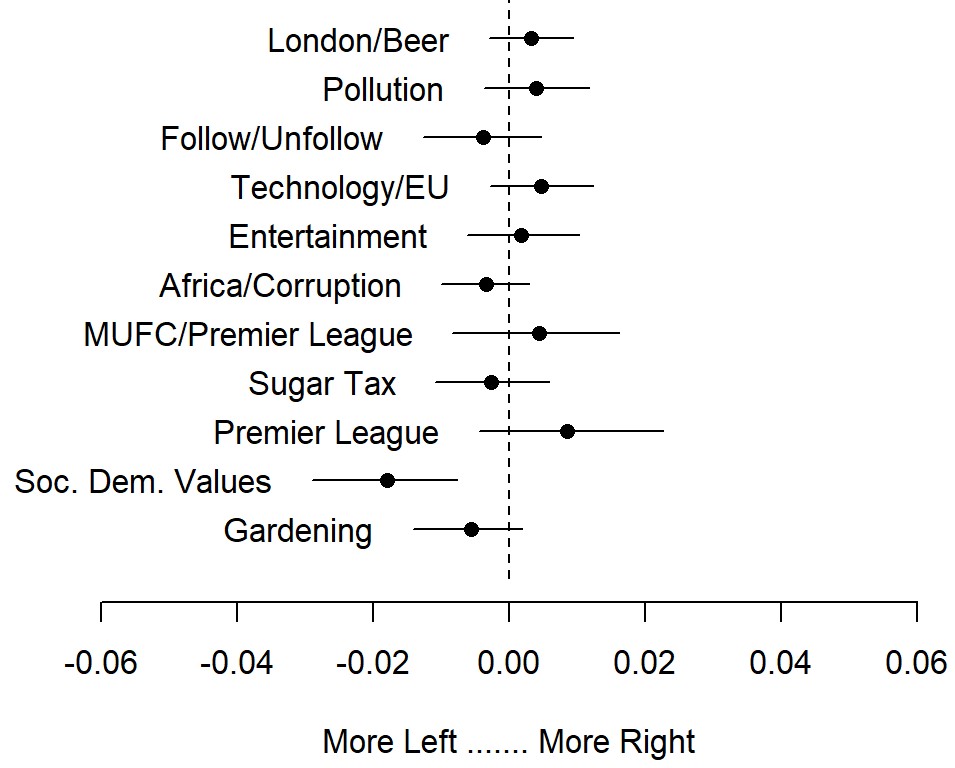

After detecting the most important topics, we assign a label to each based on its most prevalent words and estimate how the prevalence of each topic differs between left-leaning and right-leaning users. Figure 3 shows the contrast between leanings. The top words belonging to these topics are displayed in Table 5, and a complete list of all topics is available in the supplemental materials. The word lists are developed in two steps. First, we list the top 10 words of each topic by FREX, LIFT, and score. Then we concatenate these three lists in that order and extract the first 15 unique words. (“FREX is the weighted harmonic mean of the word’s rank in terms of exclusivity and frequency,” “LIFT weights words by dividing by their frequency in other topics, therefore giving higher weight to words that appear less frequently in other topics,” and “Score divides the log frequency of the word in the topic by the log frequency of the word in other topics” Roberts et al. (2019).)
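The two-step word-list construction can be sketched as follows, assuming each topic's words are already ranked by FREX, LIFT, and score (the stm R package reports these rankings); `topic_label_words` is a hypothetical name. `dict.fromkeys` removes duplicates while keeping first-occurrence order.

```python
def topic_label_words(frex, lift, score, per_list=10, total=15):
    """frex, lift, score: one topic's words ranked by each metric, best first.

    Concatenate the top `per_list` words of each ranking in that order and
    return the first `total` unique words.
    """
    combined = frex[:per_list] + lift[:per_list] + score[:per_list]
    unique = list(dict.fromkeys(combined))
    return unique[:total]
```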

Figure 3 shows that topics discussing social democratic values are more prevalent among left-leaning users. A gardening-related topic is also more left-leaning, while the Premier League topic and a pollution-related topic are more right-leaning. Note that most 95% confidence intervals cross zero, indicating that any left–right distinction is subtle.

Table 5: Top 15 (stemmed) words for each of the eleven most important topics in the SVMpoly non-political+network model.

| Topic Name | Features |
|---|---|
| London/Beer | unit_kingdom, kingdom, ben, unit, beer, pub, london, januari, station, tim, wimbledon, cheat, rip, slice, peak |
| Pollution | plastic, contain, ocean, pollut, dr, usa, sea, recycl, dwp, path, locat, commit, toe, current, planet |
| Follow/Unfollow | unfollow, automat, peopl_follow, stat, follow, check, person, ireland, mention, reach, irish, track, retweet, big_fan, joe |
| Technology/EU | android, dj, ee, eu, app, greec, independ, mobil, scotland, ipad, euro, europ, io, beat, scottish |
| Entertainment | beth, xx, haha, xxx, deploy, sean, artist, nicki, wire, hahaha, jay, spain, boyfriend, til, willi |
| Africa/Corruption | ni, nigeria, lawyer, african, corrupt, parcel, bbcradio, lol, journo, arrest, wk, individu, brazil, pregnant, id |
| MUFC/Premier League | mufc, manutd, unit, rooney, manchest_unit, utd, goal, golf, moy, mourinho, ronaldo, pogba, score, fifa, leagu |
| Sugar Tax | frog, level, skill, c***, sugar, committe, divin, nhs, suspect, holland, hunter, jeremi, bullshit, jeremi_hunt, ww |
| Premier League | west_ham, spur, ham, salah, everton, kane, chelsea, klopp, pogba, lukaku, goal, mourinho, tottenham, midfield, liverpool |
| Soc. Dem. Values | foodbank, poverti, nhs, wage, worker, crisi, fund, wick, solidar, food_bank, grenfel, bank, auster, polit, privat |
| Gardening | bloom, garden, cute, layer, kinda, ship, charact, rich, bless, hug, glad, mom, damn, lmao, gonna |

Effect of political activity

After labelling political tweets as discussed, we develop a political activity index by dividing the number of political tweets by the number of overall tweets per user. To determine whether there is a relation between political activity and classifier performance, we apply a Pearson correlation test to the classifier probabilities and our measure of political activity. For the best performing non-political textual model (SVMrad), we find only very weak correlations between the classifier probability and political activity (), the number of tokens (), and leaning scores (). The best-performing political textual model (NN) similarly has weak correlations between its predicted probabilities and political activity (), the number of tokens (), and leaning scores ().
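The political activity index and correlation can be sketched with the standard library alone. `pearson_r` computes only the coefficient; a significance test (for example, `scipy.stats.pearsonr`, which also returns a p-value) is not reproduced here, and both function names are hypothetical.

```python
import math


def political_activity(n_political, n_total):
    """Share of a user's tweets labelled political."""
    return n_political / n_total if n_total else 0.0


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)
```

In the analysis above, `xs` would hold each user's classifier probability and `ys` their political activity, token count, or leaning score.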

Case study: Polarization and news sharing

Having developed a classifier that successfully predicts users’ left–right political leaning even with non-political text as input, we now apply this classifier in a short case study. Social media has become intertwined with ideas of political polarization and echo chambers in popular culture Pariser (2011). There are, of course, many studies that show polarization in explicitly political contexts, such as the hyperlinks of political bloggers Adamic and Glance (2005), the sharing of political news Flaxman et al. (2016), and Twitter activity in the context of politics Barberá (2015); Hong and Kim (2016).

Despite this, few studies examine the polarization and echo chamber hypotheses in diverse contexts (see Dubois and Blank, 2018). The Internet offers access to a wide range of information sources, and enthusiasm for politics and the consumption of more media sources are negatively correlated with the likelihood of being in an echo chamber Dubois and Blank (2018); Barberá et al. (2015).

In this case study, we apply our non-political classifier to the sharing of news from three UK media outlets: The Telegraph (right-leaning), the BBC (centrist), and The Guardian (left-leaning). The case study fulfils two objectives. First, it demonstrates the face validity of our classifier by matching common expectations that political news from The Telegraph should be shared chiefly by right-leaning users, political news from The Guardian should be shared mostly by left-leaning users, and the BBC should be the least polarized of the three. Second, the case study advances the scholarly conversation on polarization by examining the sharing of non-political news (namely, sports news) from these three outlets. Our results show that many right-leaning users share sports news from The Guardian, suggesting that more right-leaning users engage with The Guardian, at least with its sports section, than conventional wisdom would expect.

Data and methods

We examine the number of left- and right-leaning accounts sharing news in a two-by-three design. Our three news sources are The Telegraph, the BBC, and The Guardian, and our news types are political and sports news.

We first use elevated Twitter Streaming API access to collect URLs from theguardian.com, telegraph.co.uk, and bbc.co.uk. We then manually examine these URLs to identify URL patterns that are explicitly associated with political news and sports news. For example, all URLs containing bbc.co.uk/sport/ are labelled as sports news from the BBC, and all URLs containing theguardian.com/politics/2018/ are labelled as political news from The Guardian. A complete list of URL patterns is available in the supplemental materials.
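Labelling shared URLs reduces to substring matching against the pattern list. Only the two patterns quoted above are included; the complete list is in the supplemental materials and is not reproduced here, and the `(pattern, source, news_type)` tuple layout is an illustrative assumption.

```python
# Only the two patterns quoted in the text; the full list is in the
# supplemental materials.
URL_PATTERNS = [
    ("bbc.co.uk/sport/", "BBC", "sports"),
    ("theguardian.com/politics/2018/", "The Guardian", "politics"),
]


def classify_url(url, patterns=URL_PATTERNS):
    """Return (source, news_type) for the first matching pattern, else None."""
    for pattern, source, news_type in patterns:
        if pattern in url:
            return source, news_type
    return None
```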

Returning to the Twitter Streaming API, we collect all instances of URLs shared matching any of our patterns between 1 September 2018 and 8 September 2018. We then query the 3,200 most recent tweets and the friends of each user appearing in our dataset using the statuses/user_timeline and friends/ids endpoints of the Twitter RESTful API.

Each user’s recent tweets and friends are used as input to estimate that user’s left–right political leaning. We clean the text as described in the development of our classifier and combine all tweets from a given user into one document per user. The resulting DFMs are extremely sparse (e.g., approx. 25M features), so we remove terms whose total frequency is lower than three, which makes the DFM dimensions computationally feasible (e.g., approx. 5M features). Then, we remove features that are not present in our non-political DFM; because political terms were excluded from that DFM, this step also removes them. In the last step, we fit the non-political topic model to the news data to predict the political leaning of each user.
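The frequency cut-off and vocabulary alignment can be sketched as below, assuming documents are `{feature: count}` dictionaries and `training_vocab` holds the features of the non-political DFM used to train the classifier; `align_to_training_vocab` is a hypothetical name.

```python
from collections import Counter


def align_to_training_vocab(doc_features, training_vocab, min_total=3):
    """Keep features with total frequency >= min_total that also appear in
    the training (non-political) vocabulary; political terms drop out
    because they were never in that vocabulary."""
    totals = Counter()
    for doc in doc_features:
        totals.update(doc)
    keep = {f for f, c in totals.items() if c >= min_total and f in training_vocab}
    return [{f: c for f, c in doc.items() if f in keep} for doc in doc_features]
```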

Our setup matches the non-political + network approach described in the main article. To classify the users sharing political and sports news, we select the SVMpoly classifier that performed best in the non-political + network task and set the probability threshold to 0.7.
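A probability threshold of this kind can be read as a rule that assigns a leaning only when the classifier is confident enough, leaving other users unclassified (presumably the "Unknown" rows of Table 6). The sketch assumes the classifier outputs a probability of being right-leaning and that the 0.7 threshold applies symmetrically to both classes; the function name and the symmetric rule are our assumptions.

```python
from typing import List


def assign_leaning(p_right: float, threshold: float = 0.7) -> str:
    """Map a predicted probability of right-leaning to a label.

    Assumption: a user is labelled only when the winning class reaches the
    threshold; otherwise the user is left unclassified ("Unknown").
    """
    if not 0.0 <= p_right <= 1.0:
        raise ValueError("probability must lie in [0, 1]")
    if p_right >= threshold:
        return "Right"
    if 1.0 - p_right >= threshold:
        return "Left"
    return "Unknown"


probs: List[float] = [0.92, 0.55, 0.18, 0.70, 0.31]
print([assign_leaning(p) for p in probs])
# ['Right', 'Unknown', 'Left', 'Right', 'Unknown']
```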

News sharing results

The results of our classification are shown in Table 6. They conform with our hypothesis that, for political news, the political leaning of users would match the political leaning of the news publishers. For example, among users sharing political news who were classified as left-leaning, over 75% shared articles from the left-leaning Guardian. Similarly, among users sharing political news who were classified as right-leaning, just over half shared articles from the right-leaning Telegraph.

In contrast to political news, The Guardian is the most popular source of sports news among users classified as either left- or right-leaning. Overall, more right-leaning than left-leaning users share sports news (2,878 vs. 653). Even so, the number of right-leaning users sharing sports news from The Telegraph is about 8.6 times the number of left-leaning users doing so, the largest proportional difference in the dataset. We suspect that sports news sharing is influenced by availability: The Telegraph has a paywall, while the BBC and The Guardian do not. It is therefore plausible that subscribers paying for The Telegraph get both political and sports news from it, whereas non-subscribers look elsewhere. That many right-leaning users share sports news from the left-leaning, but free-to-access, Guardian suggests that factors beyond political leaning, such as availability and cost, play a substantial role in which news sources people read.

Overall, the case study supports the face validity of our classifier, which detects the expected leanings of users sharing political news. At the same time, it suggests that the sharing of sports news is less polarized, and it points toward new avenues of study made possible by a classifier that can predict political leaning from non-political text.

Table 6: Users sharing political and sports news from each outlet, by classified political leaning.

| | Guardian | BBC | Telegraph | Total |
|---|---|---|---|---|
| Political | | | | |
| Left | 1,223 | 240 | 161 | 1,624 |
| Right | 812 | 377 | 1,230 | 2,419 |
| Unknown | 650 | 238 | 334 | 3,641 |
| Sport | | | | |
| Left | 314 | 295 | 44 | 653 |
| Right | 1,631 | 869 | 378 | 2,878 |
| Unknown | 956 | 564 | 115 | 1,635 |
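The shares and ratios discussed in the news sharing results can be recomputed from the left- and right-leaning rows of Table 6. This short check uses only those rows, whose totals equal their row sums, so each share is a fraction of the classified users in one group.

```python
# Left- and right-leaning counts from Table 6 (users per outlet).
table6 = {
    ("political", "Left"): {"Guardian": 1223, "BBC": 240, "Telegraph": 161},
    ("political", "Right"): {"Guardian": 812, "BBC": 377, "Telegraph": 1230},
    ("sport", "Left"): {"Guardian": 314, "BBC": 295, "Telegraph": 44},
    ("sport", "Right"): {"Guardian": 1631, "BBC": 869, "Telegraph": 378},
}


def share(news: str, leaning: str, outlet: str) -> float:
    """Fraction of a leaning group's users who shared the given outlet."""
    row = table6[(news, leaning)]
    return row[outlet] / sum(row.values())


def right_left_ratio(news: str, outlet: str) -> float:
    """Number of right-leaning users per left-leaning user for one outlet."""
    return table6[(news, "Right")][outlet] / table6[(news, "Left")][outlet]


print(f"{share('political', 'Left', 'Guardian'):.3f}")    # 0.753
print(f"{share('political', 'Right', 'Telegraph'):.3f}")  # 0.508
ratios = {
    (news, outlet): right_left_ratio(news, outlet)
    for news in ("political", "sport")
    for outlet in ("Guardian", "BBC", "Telegraph")
}
largest = max(ratios, key=ratios.get)
print(largest, f"{ratios[largest]:.2f}")  # ('sport', 'Telegraph') 8.59
```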

Discussion

Polarization is often studied in explicitly political contexts; however, understanding the actual effects of polarization requires researchers to explore non-political contexts. This, in turn, requires the ability to estimate political leaning in these non-political contexts. Building on the concept of ‘lifestyle politics’ DellaPosta et al. (2015), this paper has shown that it is possible to estimate political leaning from non-political text.

What exactly counts as non-political text is unclear. Our preliminary analysis found that tweets sent outside of election periods were still often political in nature (e.g., containing discussion about the UK’s relationship with Europe). In response, we developed an approach to define and expand a list of political keywords, and we considered only tweets without these keywords for our tasks with non-political text. The topics displayed in Table 5 suggest this worked reasonably well. However, a qualitative inspection of the topics reveals that a small number of political tweets remain in the non-political dataset: eight of the 150 topics (5.3%) are political (Israel–Palestine, Scottish independence, Brexit, Macron–Merkel, the Green Party, UK Politics, US Politics, Westminster). Nonetheless, these form a small part of the dataset and are unlikely to account for the performance of our classifiers. Indeed, applying the best-performing classifier trained on political text to the non-political text yields accuracy no better than random chance.

We find only weak correlations between classifier performance and either political activity or political leaning. This suggests that individuals with different activity levels and at different points on the left–right spectrum all leave traces of their political leaning.

The use of Voter Advice Applications (VAAs) for ground truth data represents an exciting way to build larger datasets for training and testing machine learning classifiers. In contrast to labels from human coders (e.g., Conover et al., 2011) or crowdsourcing (e.g., Iyyer et al., 2014), VAA results are self-reported. Preoţiuc-Pietro et al. (2017) used self-report surveys, but VAAs can yield larger sample sizes, provide a longer assessment, and rely on the genuine motivation of users, who also learn how their political preferences align with major parties. Nonetheless, it will be important to understand any biases in who shares VAA results on social media platforms. For instance, it may be that only the most politically engaged users share VAA results on social media.

In addition to the variety of input data used, prior research has tried various machine learning approaches to classify political leaning, including SVMs, Naïve Bayes, and neural networks. Reported performance scores range from 60% to 90% Preoţiuc-Pietro et al. (2017); Gu et al. (2016); Jelveh et al. (2014); Iyyer et al. (2014); Yu et al. (2008); Kulkarni et al. (2018). Against this range, our F1 score of 0.81 for political text compares favourably, and F1 scores of 0.70 for non-political text alone and 0.75 for non-political text plus network data are in line with other work that uses explicitly political text.

Ideology, of course, is much broader than a simple left–right or liberal–conservative spectrum. Our work, therefore, complements approaches such as that of Graham et al. (2012), who apply moral foundations theory and find that liberals and conservatives differ less on moral foundations than stereotypes suggest. The topics we find echo the moral foundations Graham et al. identify: liberals give higher importance to the individualizing foundations (harm/care and fairness/reciprocity), and conservatives give higher importance to the binding foundations (in-group/loyalty, authority/respect, and purity/sanctity). That the topic on pollution is more associated with the right may seem counterintuitive, but we note that the effect sizes are small and that the analysis considers only prevalence, not attitude or sentiment.

We find that the accounts a user follows often reveal that user’s political leaning. While some of these accounts are explicitly political, others, such as Jeremy Clarkson (a former Top Gear presenter) and Charlton Brooker (the creator of Black Mirror), are not. In other words, interest in Top Gear/The Grand Tour or Black Mirror may reveal more about an individual’s political leaning than one might expect. It may be surprising that following an official government account, such as that of the UK Prime Minister, is so politically revealing, but in the UK context it is worth noting that the Conservative Party has been in power since 2010.

Although our F1 scores indicate that there are left/right differences, claiming that all right-leaning users, or all left-leaning users, behave alike would be a gross oversimplification. There may also be significant differences in other countries and cultures. In addition, our data are not representative, so we cannot generalize the results of this study to explain complex structures such as conservative or social-democratic ideologies.

Our brief case study of news sharing hints at promising ways in which classifiers of non-political content may be used. We found that political news sharing on Twitter is associated with the political leaning of users, but that sports news sharing is less polarized. Many accounts classified as right-leaning shared sports news from The Guardian, a left-leaning source. Telegraph sports news, however, was still heavily associated with right-leaning users. We suspect that paywalls partially drive this result: The Telegraph is the only source with a paywall in our data. It may be that many right-leaning users look to The Guardian and other sources for sports news rather than pay for access to The Telegraph. On the other hand, individuals who already pay for access to The Telegraph likely also choose to get their sports news there.

The level of polarization observed on social media thus depends in part on the type of content examined. As mentioned, most studies examine polarization through the sharing of explicitly political content. However, our results indicate that a more holistic view, one that includes content beyond politics, may reveal less polarization. Our results also suggest that editorial decisions, such as whether to have a paywall, may affect the level of polarization observed in link sharing on social media.

Conclusions

As political orientation influences and correlates with many aspects of non-political life, there is good reason to expect that political leaning can be inferred even from non-political text.

We first developed a classifier using political text from tweets primarily associated with general elections in the UK, achieving an F1 score of 0.81. We then collected recent tweets from users outside of any election period and removed tweets with political keywords. Using this non-political text to estimate the political leaning of users, we were still able to achieve an F1 score of 0.70. Incorporating the network information of these users alongside the textual features from their tweets further increased the F1 score (to 0.75).

Using our classifier, we were able to classify the political leaning of users sharing political and sports news on Twitter from three UK national news sources. Our analysis indicated the importance of examining polarization in political and non-political contexts.

This study has shown that political leaning can be classified from non-political text. We hope that this paper can help spur research on polarization in non-political contexts.

References

- Adamic and Glance (2005) Adamic LA, Glance N (2005) The political blogosphere and the 2004 U.S. election: Divided they blog. In: Proceedings of the 3rd International Workshop on Link Discovery, ACM, New York, NY, USA, LinkKDD ’05, pp 36–43, doi:10.1145/1134271.1134277, URL http://doi.acm.org/10.1145/1134271.1134277

- Ahn et al. (2014) Ahn WY, Kishida K, Gu X, Lohrenz T, Harvey A, Alford J, Smith K, Yaffe G, Hibbing J, Dayan P, Montague PR (2014) Nonpolitical images evoke neural predictors of political ideology. Current Biology 24(22):2693–2699, doi:10.1016/j.cub.2014.09.050, URL http://www.sciencedirect.com/science/article/pii/S0960982214012135

- Barberá (2015) Barberá P (2015) Birds of the Same Feather Tweet Together. Bayesian Ideal Point Estimation Using Twitter Data p 28

- Barberá et al. (2015) Barberá P, Jost JT, Nagler J, Tucker JA, Bonneau R (2015) Tweeting from left to right: Is online political communication more than an echo chamber? Psychological Science 26(10):1531–1542, doi:10.1177/0956797615594620, URL http://journals.sagepub.com/doi/10.1177/0956797615594620

- Chen et al. (2015) Chen X, Wang Y, Agichtein E, Wang F (2015) A comparative study of demographic attribute inference in twitter p 4

- Compton et al. (2014) Compton R, Jurgens D, Allen D (2014) Geotagging one hundred million twitter accounts with total variation minimization. In: 2014 IEEE International Conference on Big Data (Big Data), IEEE, pp 393–401, doi:10.1109/BigData.2014.7004256, URL http://ieeexplore.ieee.org/document/7004256/

- Conover et al. (2011) Conover MD, Goncalves B, Ratkiewicz J, Flammini A, Menczer F (2011) Predicting the Political Alignment of Twitter Users. In: 2011 IEEE Third International Conference on Privacy, Security, Risk and Trust and 2011 IEEE Third International Conference on Social Computing, pp 192–199, doi:10.1109/PASSAT/SocialCom.2011.34

- DellaPosta et al. (2015) DellaPosta D, Shi Y, Macy M (2015) Why do liberals drink lattes? American Journal of Sociology 120(5):1473–1511, doi:10.1086/681254, URL https://doi.org/10.1086/681254

- Diermeier et al. (2012) Diermeier D, Godbout JF, Yu B, Kaufmann S (2012) Language and ideology in Congress. British Journal of Political Science 42(1):31–55, doi:10.1017/S0007123411000160, URL https://www.cambridge.org/core/journals/british-journal-of-political-science/article/language-and-ideology-in-congress/1063F5509BC2ABC3F9A0E164E58157EE

- Dubois and Blank (2018) Dubois E, Blank G (2018) The echo chamber is overstated: the moderating effect of political interest and diverse media. Information, Communication & Society 21(5):729–745, doi:10.1080/1369118X.2018.1428656, URL https://doi.org/10.1080/1369118X.2018.1428656

- Eysenck (1964) Eysenck HJ (1964) Sense and nonsense in psychology. Penguin Books

- Flaxman et al. (2016) Flaxman S, Goel S, Rao JM (2016) Filter bubbles, echo chambers, and online news consumption. Public Opinion Quarterly 80(S1):298–320, doi:10.1093/poq/nfw006, URL https://doi.org/10.1093/poq/nfw006

- Golbeck and Hansen (2014) Golbeck J, Hansen D (2014) A method for computing political preference among Twitter followers. Social Networks 36:177–184, doi:10.1016/j.socnet.2013.07.004, URL http://www.sciencedirect.com/science/article/pii/S0378873313000683

- Graham et al. (2012) Graham J, Nosek BA, Haidt J (2012) The moral stereotypes of liberals and conservatives: Exaggeration of differences across the political spectrum. PLoS ONE 7(12):e50092, doi:10.1371/journal.pone.0050092, URL https://dx.plos.org/10.1371/journal.pone.0050092

- Gu et al. (2016) Gu Y, Chen T, Sun Y, Wang B (2016) Ideology Detection for Twitter Users with Heterogeneous Types of Links. arXiv:1612.08207 [cs], URL http://arxiv.org/abs/1612.08207

- Hale (2014) Hale SA (2014) Global connectivity and multilinguals in the twitter network. In: Proceedings of the 32nd annual ACM conference on Human factors in computing systems - CHI ’14, ACM Press, pp 833–842, doi:10.1145/2556288.2557203, URL http://dl.acm.org/citation.cfm?doid=2556288.2557203

- Hong and Kim (2016) Hong S, Kim SH (2016) Political polarization on twitter: Implications for the use of social media in digital governments. Government Information Quarterly 33(4):777–782

- Iyyer et al. (2014) Iyyer M, Enns P, Boyd-Graber J, Resnik P (2014) Political ideology detection using recursive neural networks. In: Proceedings of the 52nd Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Association for Computational Linguistics, pp 1113–1122, doi:10.3115/v1/P14-1105, URL http://aclweb.org/anthology/P14-1105

- Jelveh et al. (2014) Jelveh Z, Kogut B, Naidu S (2014) Detecting latent ideology in expert text: Evidence from academic papers in economics. In: Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), Association for Computational Linguistics, pp 1804–1809, doi:10.3115/v1/D14-1191, URL http://aclweb.org/anthology/D14-1191

- King et al. (2016) King AS, Orlando FJ, Sparks DB (2016) Ideological Extremity and Success in Primary Elections: Drawing Inferences From the Twitter Network. Social Science Computer Review 34(4):395–415, doi:10.1177/0894439315595483, URL https://doi.org/10.1177/0894439315595483

- Kitschelt (1994) Kitschelt H (1994) The Transformation of European Social Democracy. Cambridge University Press, Cambridge, doi:10.1017/CBO9780511622014

- Kulkarni et al. (2018) Kulkarni V, Ye J, Skiena S, Wang WY (2018) Multi-view models for political ideology detection of news articles. arXiv:1809.03485, URL http://arxiv.org/abs/1809.03485

- Lewis et al. (2004) Lewis DD, Yang Y, Rose TG, Li F (2004) RCV1: A new benchmark collection for text categorization research. Journal of Machine Learning Research 5:361–397

- Liu et al. (2019) Liu Y, Cheng D, Pei T, Shu H, Ge X, Ma T, Du Y, Ou Y, Wang M, Xu L (2019) Inferring gender and age of customers in shopping malls via indoor positioning data. Environment and Planning B: Urban Analytics and City Science, doi:10.1177/2399808319841910, URL https://doi.org/10.1177/2399808319841910

- Ng and Jordan (2001) Ng AY, Jordan MI (2001) On discriminative vs. generative classifiers: A comparison of logistic regression and naive bayes. In: Proceedings of the 14th International Conference on Neural Information Processing Systems: Natural and Synthetic, MIT Press, Vancouver, British Columbia, Canada, NIPS’01, pp 841–848, URL http://dl.acm.org/citation.cfm?id=2980539.2980648

- Nguyen et al. (2013) Nguyen D, Gravel R, Trieschnigg D, Meder T (2013) "how old do you think i am?" a study of language and age in twitter. In: ICWSM

- Nguyen et al. (2016) Nguyen D, Doğruöz AS, Rosé CP, de Jong F (2016) Computational sociolinguistics: A survey. Computational Linguistics 42(3):537–593, doi:10.1162/COLI_a_00258, URL https://doi.org/10.1162/COLI_a_00258

- Pariser (2011) Pariser E (2011) The filter bubble: What the Internet is hiding from you. Viking, London

- Pennacchiotti and Popescu (2011) Pennacchiotti M, Popescu AM (2011) Democrats, republicans and starbucks afficionados: user classification in twitter p 9

- Porter (1980) Porter MF (1980) An algorithm for suffix stripping 40:211–218, doi:10.1108/00330330610681286

- Preoţiuc-Pietro et al. (2017) Preoţiuc-Pietro D, Liu Y, Hopkins D, Ungar L (2017) Beyond Binary Labels: Political Ideology Prediction of Twitter Users. In: Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Association for Computational Linguistics, Vancouver, Canada, pp 729–740, doi:10.18653/v1/P17-1068, URL http://aclweb.org/anthology/P17-1068

- Rao et al. (2010) Rao D, Yarowsky D, Shreevats A, Gupta M (2010) Classifying latent user attributes in Twitter. In: Proceedings of the 2nd international workshop on Search and mining user-generated contents - SMUC ’10, ACM Press, Toronto, ON, Canada, p 37, doi:10.1145/1871985.1871993, URL http://portal.acm.org/citation.cfm?doid=1871985.1871993

- Roberts et al. (2019) Roberts ME, Stewart BM, Tingley D (2019) stm: An R package for structural topic models. Journal of Statistical Software 91(2):1–40, doi:10.18637/jss.v091.i02, URL https://www.jstatsoft.org/index.php/jss/article/view/v091i02

- Rosenthal and McKeown (2011) Rosenthal S, McKeown K (2011) Age prediction in blogs: A study of style, content, and online behavior in pre- and post-social media generations p 10

- Sap et al. (2014) Sap M, Park G, Eichstaedt J, Kern M, Stillwell D, Kosinski M, Ungar L, Schwartz HA (2014) Developing age and gender predictive lexica over social media. In: Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), Association for Computational Linguistics, pp 1146–1151, doi:10.3115/v1/D14-1121, URL http://aclweb.org/anthology/D14-1121

- Sites (2013) Sites D (2013) Compact language detector 2. URL https://github.com/CLD2Owners/cld2

- Sznajd-Weron and Sznajd (2005) Sznajd-Weron K, Sznajd J (2005) Who is left, who is right? Physica A: Statistical Mechanics and its Applications 351(2):593–604, doi:10.1016/j.physa.2004.12.038, URL http://www.sciencedirect.com/science/article/pii/S0378437104016061

- Talaifar and Swann (2019) Talaifar S, Swann WB (2019) Deep alignment with country shrinks the moral gap between conservatives and liberals. Political Psychology 40(3):657–675, doi:10.1111/pops.12534, URL https://onlinelibrary.wiley.com/doi/abs/10.1111/pops.12534

- Wang and Manning (2012) Wang S, Manning CD (2012) Baselines and bigrams: Simple, good sentiment and topic classification. In: Proceedings of the 50th Annual Meeting of the Association for Computational Linguistics: Short Papers - Volume 2, Association for Computational Linguistics, Jeju Island, Korea, ACL ’12, pp 90–94, URL http://dl.acm.org/citation.cfm?id=2390665.2390688

- Wang et al. (2019) Wang Z, Hale S, Adelani DI, Grabowicz P, Hartman T, Flöck F, Jurgens D (2019) Demographic inference and representative population estimates from multilingual social media data. In: The World Wide Web Conference, ACM, San Francisco, CA, USA, WWW ’19, pp 2056–2067, doi:10.1145/3308558.3313684, URL http://doi.acm.org/10.1145/3308558.3313684

- Weber et al. (2013) Weber I, Garimella VRK, Teka A (2013) Political hashtag trends. In: ECIR

- Wong et al. (2016) Wong FMF, Tan CW, Sen S, Chiang M (2016) Quantifying Political Leaning from Tweets, Retweets, and Retweeters. IEEE Transactions on Knowledge and Data Engineering 28(8):2158–2172, doi:10.1109/TKDE.2016.2553667

- Yu et al. (2008) Yu B, Kaufmann S, Diermeier D (2008) Classifying party affiliation from political speech. Journal of Information Technology & Politics 5(1):33–48, doi:10.1080/19331680802149608, URL https://doi.org/10.1080/19331680802149608

- Zagheni et al. (2017) Zagheni E, Weber I, Gummadi K (2017) Leveraging facebook’s advertising platform to monitor stocks of migrants. Population and Development Review 43(4):721–734, doi:10.1111/padr.12102, URL https://onlinelibrary.wiley.com/doi/abs/10.1111/padr.12102

- Zamal et al. (2012) Zamal FA, Liu W, Ruths D (2012) Homophily and Latent Attribute Inference: Inferring Latent Attributes of Twitter Users from Neighbors. pp 1–6, doi:10.1109/IWBF.2017.7935106

- Zhang et al. (2016) Zhang J, Hu X, Zhang Y, Liu H (2016) Your age is no secret: Inferring microbloggers’ ages via content and interaction analysis p 10

Appendix A Supplemental materials

This supplemental material provides additional detail on how we identify political content, the URL patterns we use to identify the sharing of political and sports news, and all 150 topics in the non-political and political topic models.

Identifying political content

Political Words

#askmay, #battlefornumber10, #bbcdebate, #bbcelection, #bbcqt, #bbcsp, #believeinbritain, #brexit, #brexitdeal, #bristolgreenmp, #bristolwest, #budget2015, #budget2017, #cameronmustgo, #conservative, #conservatives, #corbyn, #cpc17, #dementiatax, #dupcoalition, #ed4pm, #election, #election2015, #election2017, #electionday, #electionday2017, #forthemany, #ge15, #ge17, #ge2015, #ge2017, #generalelection, #generalelection17, #generalelection2017, #getcameronout, #greens, #greensurge, #hungparliament, #imvotinglabour, #itvdebate, #jc4pm, #jc4pm2019, #jeremycorbyn, #jezwecan, #labour, #labourdoorstep, #labourleadership, #labourmanifesto, #labourmustwin, #le2017, #leadersdebate, #libdem, #libdemfightback, #libdems, #makejunetheendofmay, #marrshow, #mayvcorbyn, #newsnight, #nhscyberattack, #nigelfarage, #peston, #plaid15, #pmqs, #queensspeech, #registertovote, #ridge, #saveilf, #snp, #snpout, #tories, #toriesout, #toriesoutnow, #tory, #toryelectionfraud, #torymanifesto, #ukip, #uklabour, #victorialive, #vote, #vote2017, #votecameronout, #voteconservative, #votegreen2015, #votegreen2017, #votelabour, #votelibdem, #votematch, #votesnp, #voteukip, #weakandwobbly, #whyimvotingukip, #whyvote, #wsyvf, @aaronbastani, @ainemichellel, @amberruddhr, @amelia_womack, @andrewspoooner, @andysearson, @angelarayner, @angiemeader, @angry_voice, @angrysalmond, @annaturley, @barrygardiner, @bbcbreaking, @bbclaurak, @bbcnews, @bbcpolitics, @bbcr4today, @bbcscotlandnews, @bonn1egreer, @borisjohnson, @brexitbin, @bristolgreen, @britainelects, @campbellclaret, @carolinelucas, @cchqpress, @charliewoof81, @christinasnp, @chukaumunna, @chunkymark, @conservatives, @corbyn_power, @corbynator2, @d_raval, @daily_politics, @darrenhall2015, @david_cameron, @davidjfhalliday, @davidjo52951945, @davidlammy, @davidschneider, @dawnhfoster, @debbie_abrahams, @dmreporter, @dpjhodges, @dvatw, @ed_miliband, @el4jc, @emilythornberry, @evolvepolitics, @faisalislam, @fight4uk, @frasernelson, @gdnpolitics, 
@georgeaylett, @georgeeaton, @grantshapps, @guardian, @guardiannews, @guidofawkes, @hackneyabbott, @harrietharman, @harryslaststand, @hephaestus7, @hrtbps, @huffpostuk, @huffpostukpol, @iainmartin1, @iandunt, @imajsaclaimant, @independent, @ipsosmori, @itvnews, @jacob_rees_mogg, @james4labour, @jameskelly, @jamesmelville, @jamieross7, @jeremy_hunt, @jeremycorbyn, @jeremycorbyn4pm, @jimwaterson, @joglasg, @johnmcdonnellmp, @johnprescott, @johnrentoul, @jolyonmaugham, @jon_swindon, @jonashworth, @joswinson, @keir_starmer, @kevin_maguire, @kezdugdale, @krishgm, @laboureoin, @labourleft, @labourlewis, @labourpress, @ladydurrant, @leannewood, @liamyoung, @libdempress, @libdems, @louisemensch, @lucympowell, @mancman10, @marcherlord1, @markfergusonuk, @mhairihunter, @michaelrosenyes, @mikegalsworthy, @mirrorpolitics, @mmaher70, @molly4bristol, @mollymep, @montie, @mrmalky, @msmithsonpb, @natalieben, @newsthump, @nhaparty, @nhsmillion, @nick_clegg, @nickreeves9876, @nicolasturgeon, @nigel_farage, @normanlamb, @nw_nicholas, @owenjones84, @patricianpino, @paulmasonnews, @paulnuttallukip, @paulwaugh, @peoplesmomentum, @peston, @peterstefanovi2, @petewishart, @plaid_cymru, @politicshome, @prisonplanet, @rachael_swindon, @realdonaldtrump, @reclaimthenews, @redhotsquirrel, @redpeter99, @rhonddabryant, @richardburgon, @richardjmurphy, @robmcd85, @rosscolquhoun, @ruthdavidsonmsp, @sarahchampionmp, @scottishlabour, @scottories, @screwlabour, @skwawkbox, @skynews, @skynewsbreak, @socialistvoice, @standardnews, @stvnews, @sunny_hundal, @survation, @suzanneevans1, @telegraph, @telegraphnews, @telepolitics, @thecanarysays, @thegreenparty, @themingford, @thepileus, @theredrag, @theresa_may, @theresa_may’s, @therightarticle, @thesnp, @thisisamy_, @timfarron, @timothy_stanley, @tnewtondunn, @tomlondon6, @toryfibs, @trevdick, @trobinsonnewera, @uk_rants, @ukip, @uklabour, @vincecable, @wesstreeting, @willblackwriter, @wingsscotland, @wowpetition, @yougov, @yvettecoopermp, abbot, abbott, 
bennett, bernie, boris, brexit, cameron, cameron’s, campaign, campaigning, candidate, candidates, canvassing, centrist, clegg, clegg’s, clinton, coalition, communist, comres, con, conservative, conservatives, constituency, constituents, corbyn, corbyn’s, councillor, councillors, cymru, davidson, debates, dem, democrat, democrats, dems, dup, election, elections, electoral, entitlement, eu, fallon, farage, farage’s, farron, fein, fiscal, gardiner, ge, gop, gove, govern, government, government’s, govt, govt’s, greens, grn, guardian, hamas, hammond’s, hillary, hustings, icm, ifs, ind, ira, isis, islamist, johnson, johnson’s, jones, labour, labour’s, labours, ld, ldem, leanne, lib, libdem, libdems, liberal, manifesto, manifestos, may’s, mcdonnell, miliband, miliband’s, milliband, minister, mogg, mps, nuttall, obama, oth, palin, paxman, plaid, pm’s, policies, policy, poll, polling, polls, portillo, putin, queen’s, ref, referendum, republican, republicans, rudd, russia, salmond, sanders, scrapping, sinn, snp, snp’s, socialist, soros, sturgeon, sturgeon’s, terror, terrorism, terrorist, theresa, thornberry, tns, tories, tories’, tory, trident, trump, ukip, ukip’s, unionist, vaughan, vladimir, vote, voter, voters, votes, voting, yougov

Ambiguous Words

free, #amazonbasket, #beastfromtheeast, #bournemouth, #colchester, #eurovision2015, #finland, #firearms, #foxhunting, #freebies, #glastonbury, #grenfell, #grenfelltower, #leeds, #londonbridge, #marr, #onelovemanchester, #spanishgp, #thursdaythoughts, #trident, #uk, #york, #youtube, @daraobriain, @emmakennedy, @jamin2g, @janeygodley, @kindleuk, @marcuschown, @paulbernaluk, @pinknews, @rustyrockets, @sophiareed1, @swanseacity1, 10m, 1bn, 2015, 34, 8bn, 8th, allowance, amber, ars, attack, austerity, baptist, behave, benz, branch, burrows, buts, chaos, clarkson, conference, cons, costed, costings, cuts, davey, david, deal, debate, deceased, defend, dementia, diane, diaz, dodging, donald, dunk, ed, emily, esther, exc, factions, fairer, fees, firearms, firefighters, foxes, freddy, general, glastonbury, grenfell, gy, hamlets, hs2, hsbc, ht, htt, hung, inheritance, intention, jeremy, june, kensington, kyle, lab, lambert, landslide, leader, leaders’, leaflet, leafleting, leaflets, lorde, marginal, matched, may, mcintyre, meeting, meetup, members, membership, methodist, middlesbrough, middleton, muppets, murphy, natalie, nicola, nigel, observer, participate, party, pensioners, pledge, pledges, plymouth, posters, progressive, prop, reception, register, registered, rehearsing, results, rodgers, rt, ruth, scarpping, scrap, seat, seats, sentencing, sheeran, slater, sos, source, stable, sub, supporting, surge, tactical, tactically, th, theo, tim, transformative, tuition, uc, uk, violence, watson, weak, weir, wheat, youtuber
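The keyword-based removal of political tweets can be sketched as follows. This is an illustration rather than the pipeline used in the paper: it uses only a handful of entries from the Political Words list above, assumes a simple tokenization (lowercase, split on whitespace, trim surrounding punctuation), and does not model how the Ambiguous Words were handled.

```python
import string
from typing import Iterable, List, Set

# A small excerpt of the Political Words list above; the full list is much longer.
POLITICAL_KEYWORDS: Set[str] = {
    "#brexit", "#ge2017", "@jeremycorbyn", "@theresa_may",
    "brexit", "corbyn", "election", "labour", "tory", "vote",
}

# Characters trimmed from the ends of each token. '#', '@' and '_' are not
# trimmed, so hashtags and account names such as @theresa_may stay intact.
_STRIP = "".join(c for c in string.punctuation if c not in "#@_") + "“”"


def tokenize(text: str) -> List[str]:
    """Assumed tokenization: lowercase, split on whitespace, trim punctuation."""
    return [tok.strip(_STRIP) for tok in text.lower().split()]


def is_political(text: str, keywords: Set[str] = POLITICAL_KEYWORDS) -> bool:
    return any(tok in keywords for tok in tokenize(text))


def non_political(tweets: Iterable[str]) -> List[str]:
    """Keep only tweets that contain none of the political keywords."""
    return [t for t in tweets if not is_political(t)]


tweets = [
    "Lovely morning in the garden, tomatoes finally ripening!",
    "Polls say Labour is closing the gap #GE2017",
    "Anyone watching the cricket today?",
]
print(non_political(tweets))  # keeps the first and third tweets
```

An exact-match rule like this is deliberately strict: any tweet mentioning a listed word, even in a non-political sense, is removed, which is one reason a separate list of ambiguous words is useful.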

News case study URL patterns

| Source | Type | URL pattern |
|---|---|---|
| Guardian | political | theguardian.com/politics/2018/ |
| Guardian | sport | theguardian.com/sport/2018/ |
| BBC | political | bbc.co.uk/news/uk-politics- |
| BBC | sport | bbc.co.uk/sport/ |
| Telegraph | political | telegraph.co.uk/politics/2018/ |
| Telegraph | sport | telegraph.co.uk/football/2018/ |
| Telegraph | sport | telegraph.co.uk/cycling/2018/ |
| Telegraph | sport | telegraph.co.uk/cricket/2018/ |
| Telegraph | sport | telegraph.co.uk/rugby-union/2018/ |

Full topic models

This section lists the full non-political and political topic models.

Non-Political Topics

The topics we name in the paper are: 38 (Entertainment), 46 (Technology/EU), 48 (Gardening), 58 (MUFC/Premier League), 75 (London/Beer), 77 (Follow/Unfollow), 91 (Africa/Corruption), 95 (Sugar Tax), 98 (Premier League), 114 (Soc. Dem. Values), 148 (Pollution).

The explicitly political topics we identified in the non-political model are 27 (Israel–Palestine), 37 (Scottish independence), 54 (UK politics), 62 (EU politics), 69 (the Green Party), 84 (Brexit), 86 (the US), 106 (Westminster).

| Topic | Features |
|---|---|
| 1 | risk, daili, de, hr, late, leadership, insur, manag, thetim, innov, aug |
| 2 | youtub, youtub_video, video, video_youtub, islam, lyric, hd, feat, music_video, trailer, video_make, parodi, muslim |
| 3 | anxieti, ur, mental, mental_health, depress, mental_ill, ill, stigma, base, disord, anxious, therapist, mental_health_issu, insta, xx |
| 4 | man_love, steel, andrew, everton, liam, lfc, liverpool, gallagh, jim, lg, oasi, arena, easter_egg, klopp, giveaway |
| 5 | comic, health, daili, technolog, mentalhealth, healthcar, late, tech, busi, dc, medicin, mm, book |
| 6 | fm, mile, en, gb, step, travel, insid, leadership, eu, construct, stem, sustain |
| 7 | gay, today_find, pride, tho, netflixuk, porn, coffe, sean, ah, memori, twitter_account, dublin, domino, work_today, ugh |
| 8 | ink, illustr, draw, japanes, lesbian, omg, lil, irish, queer, ireland, submiss, dublin, plz, freelanc, eurovis |
| 9 | bronz, trophi, ps, earn, silver, gold, destini, broadcast, assassin, giveaway, gran, game |
| 10 | tourism, student, research, academ, univers, publish, phd, colleg, studi, organis, leisur, philosoph, prof, philosophi, sustain |
| 11 | lewi, xx, haha, chris, happi_birthday, birthday, gari, cheer, liam, bf, spencer, haha_good, oop, lol |
| 12 | sampl, repli, bargain, thriller, kindl, free, mysteri, buy, author, book, suspens, amazonuk, trilog, romanc |
| 13 | academi, chariti, budget, kent, educ, sector, trust, tax, manufactur, school, sussex, cyber, mat, implic |
| 14 | chanc_win, giveaway, competit, follow_chanc, enter, follow_chanc_win, chanc, follow_win, simpli, prize, winner_announc, follow_enter, follow_retweet, bundl, win |
| 15 | playlist, folk, tea, tuesday, thursday, monday, wednesday, august, decemb, novemb, reel, hardi, rhythm, elvi, octob |
| 16 | angel, pari, sam, bastard, danni, barber, pump, shite, lad, jim, barclay, glenn, innit, daft, casual |
| 17 | ebay, volum, nobl, barn, check, dvd, review, ukchang, sign_petit, petit, sign_petit_ukchang, petit_ukchang, disc, fossil |
| 18 | fb, mk, past_week, donat, past, awesom, geek, airport, cake, cycl, browni, amazonuk, phase, protein, workout |
| 19 | autom, schedul, script, web, window, marcus, loop, solut, technic, wizard, stop_work, scrape, boundari, blog, datum |
| 20 | gt_gt, gt, gt_gt_gt, album, bird, song, music, vibe, mega, moth, dope, make_sick, bloodi_good, blur, ala |
| 21 | railway, carriag, rail, destini, train, ps, delay, se, marvel, sigh, molli, virginmedia, berri, geek, lbc |
| 22 | lodg, swansea, ken, swan, leicest, anna, squar, mason, ed, instal, surnam, ceremoni, virginmedia, cardiff |
| 23 | canal, trip, boat, sun, great_day, festiv, weekend, rugbi, lock, cycl, sail, countrysid, great_weekend, visitor, temp |
| 24 | sw, pool, waterloo, citi, pr, xxxx, leader, anna, andrea, kyli, compassion, abba, samuel, yawn, grayl |
| 25 | sibl, studi, immigr, patient, diseas, evid, effect, outcom, increas, clinic, diabet, mortal, norm, intervent, genet |
| 26 | shaw, race, bet, cricket, bbcsport, bat, ffs, ball, bowl, counti, fort, moor, hardi, prove_wrong, tbh |
| 27 | antisemit, israel, jew, palestinian, jewish, isra, racist, anti_semit, semit, gaza, reluct |
| 28 | post_photo, photo, facebook, post, fisher, hors, race, xxx, parti, indi, sim, happi_day, rider, adel, xx |
| 29 | subscript, content, exclus, access, fan, renew, month, join, utd, portug, ab, manchest_unit, ferguson, leed, man_utd |
| 30 | durham, newcastl, laura, lauren, xo, hun, isl, dubai, cute, nah, luci, mc, lmao, tbh |
| 31 | develop, birmingham, role, derbi, coventri, net, softwar, job, hire, engin, infrastructur, midland, bbc_news |
| 32 | ur, pl, gal, wanna, uni, liter, babe, xo, bc, omg, ew, soz, makeup, sophi, casualti |
| 33 | detect, youtub, trailer, cinema, video_youtub, director, color, magazin, lee, ai, maggi, nasa, arch, depict, vice |
| 34 | imaceleb, doctorwho, wale, gbbo, nurs, swansea, flood, die_age, doctor, bbcone, phillip, minut_silenc, iain, evacu, sharon |
| 35 | eurovis, sync, brit, fave, song, spice, fab, chart, singl, battl, woo, wk, love_song, mexico, album |
| 36 | mi, run, race, marathon, runner, nike, crush, pace, jedi, paul, hm, endur, sprint, gym |
| 37 | scottish, scotland, independ, proport, glasgow, scot, averag, edinburgh, 3, wee, ruth, good_support, tier, uefa, ranger |
| 38 | beth, xx, haha, xxx, deploy, sean, artist, nicki, wire, hahaha, knit, navi, bbc_radio, willi, lol |
| 39 | tshirt, york, wed, edinburgh, magic, earn, badg, awesom, film, packag, surf, tee, yorkshir, chanc_win |

| 40 | keith, trek, samuel, christ, faith, god, spirit, israel, august, promis, ministri, profound, divin, amanda, revel |

| 41 | sheffield, yorkshir, south, vine, centr, ago_today, year_ago_today, sarah, year_ago, shop, hillsborough, world_big, arthur, xbox, lol |

| 42 | disabl, dwp, disabl_peopl, benefit, univers_credit, poverti, welfar, credit, homeless, auster, pip, sanction, luther, duncan |

| 43 | consult, insight, appl, io, iphon, karl, server, tim, read_tweet, cook, smartphon, broadband, samsung, softwar |

| 44 | afc, arsenal, wenger, chelsea, spur, arsenal_fan, alexi, sanchez, tottenham, emir, au, bayern, dortmund |

| 45 | legal, law, court, lawyer, lynn, ms, divorc, student, aid, justic, suprem_court, profess, mainten, academ |

| 46 | android, dj, ee, eu, app, greec, independ, mobil, scotland, ipad, tutori, currenc, rubi, versus, workout |

| 47 | lt, oxford, input, lt_lt, hey, stream, ill, nowplay, rank, hall, aha, acoust, meter, gordon, ah_good |

| 48 | bloom, garden, cute, layer, kinda, ship, charact, rich, bless, hug, hope_feel, roller, ldn, shoutout, gosh |

| 49 | thanksgiv, km, coffe, cat, tourist, holiday, gym, tube, latin, breakfast, sticker, latt, florida, unlock, kitti |

| 50 | connect, typo, lbc, garylinek, coin, sugar, lord, bot, threaten, climat_chang, fork, lord_sugar, edl, man_make, russian |

| 51 | gay, eurovis, lgbt, money_make, pride, australia, marriag, equal, sydney, homophob, gay_man, lgbtq, homophobia, abba, queer |

| 52 | bbc_news, bbc, news, great_good, portrait, exhibit, irish, ireland, wonder, museum, serena, twitter_follow, austria, thame, sad_news |

| 53 | opinion, fact, agre, understand, genuin, sens, fair, forbid, disagre, interest, logic, necessarili, piti, grasp, satir |

| 54 | bruce, anna, remain, parliament, migrant, illeg, franc, countri, politician, puppi, griev, leaver, traitor, des, imparti |

| 55 | fuck, cunt, fuckin, connor, yer, kyle, shite, ye, shit, lmao, cum, shag, fanni, fuck_hate, wank |

| 56 | writer, kickstart, movi, film, cinema, write, author, doctorwho, book, episod, haul, andr, crowdfund, day_leav, big_screen |

| 57 | follow_follow, blog, blogger, instagram, shoe, outfit, fashion, dress, gorgeous, beauti, follow_back, skirt, aso, luxuri, wardrob |

| 58 | mufc, manutd, unit, rooney, manchest_unit, utd, goal, golf, moy, mourinho, bale, trafford, ucl, cristiano, pogba |

| 59 | shade, film, star_war, hairdress, starwar, movi, hell, war, jedi, damn, shaun, laser, film_make, titan, awaken |

| 60 | lol, bald, lmao, im, omg, tho, tbh, ur, ppl, wtf, lol_good, sooo, goin, carniv, sooooo |

| 61 | cat, eye, eden, mill, insect, fuck_fuck, biscuit, ian, tree, poem, badger, windsor, good_god, andr, ha |

| 62 | deficit, el, la, los, remind, econom, en, tax, america, del, es, nationalist, merkel, macron, lo |

| 63 | turkish, lisa, bing, miss, mailonlin, harvey, louis, bloke, lbc, custom_servic, leo, robbi, vip, xx |

| 64 | art, galleri, artist, exhibit, studio, paint, print, workshop, beach, landscap, contemporari, tide, artwork |

| 65 | cricket, broad, bat, ash, stoke, test, england, bowl, root, ball, aussi, ol, over, jonni, fuck |

| 66 | thor, teacher, physic, activ, educ, child, school, teach, student, earli_year, nurseri, pe, classroom, toddler, egypt |

| 67 | stain, graphic, websit, van, ff, sign, newcastl, vehicl, updat, wall, saturday_morn, copper, frost, banner, high_street |

| 68 | exam, collin, uni, revis, essay, err, sister, lectur, graduat, hull, abbey, gcse, time_aliv, snapchat |

| 69 | green_parti, climat, green, climat_chang, ride, bike, peac, nhs, mag, hunt, pollut, heathrow, countrysid, environment, finland |

| 70 | stamp, paul, writer, morri, blue, andr, scotland, fabric, craft, recip, thumb, sew, stripe, xx, tho |

| 71 | jennif, hahaha, danni, haha, lawrenc, em, il, fav, nottingham, stop_watch, hunger, larri, fuck, im |

| 72 | market, strategi, content, social_medium, brand, social, digit, medium, trend, tip, optim, linkedin, influenc, algorithm, facebook |

| 73 | mia, xfactor, sing, factor, song, sterl, anthoni, england, fuck_fuck, gonna, fluffi, big_brother, week_day, carrot, blade |

| 74 | dec, furi, tyson, fight, fighter, box, mate, jr, pal, champ, boxer, ko, parker, big_man, aj |

| 75 | unit_kingdom, kingdom, ben, unit, beer, pub, london, januari, station, tim, buffet, slice, high_street, poet, buff |

| 76 | ppl, didnt, im, sandra, ive, doesnt, isnt, cuz, folk, what, your, hmmm, lol, hes |

| 77 | unfollow, automat, peopl_follow, stat, follow, check, person, ireland, mention, reach, shane, big_fan, monitor, vinc, find_peopl |

| 78 | long_term, ear, lfc, defend, poor, injuri, midfield, el, defens, hes, dale, trent, analys, salli, your |

| 79 | jane, lt, xxx, xo, nugget, ad, robert, hay, laura, lt_lt, sob, xxxx |

| 80 | blaze, nfl, wrestl, season, coach, defens, chief, matt, game, offens, vardi, boston, houston, panther, ref |

| 81 | maintain, action, figur, base, statement, appar, talk, link, prefer, give, mental_health, connect, make, time |

| 82 | council, communiti, wellb, local, west, committe, servic, resid, fund, volunt, social_care, neighbourhood, district, turnout, great_hear |

| 83 | nathan, josh, hall, eve, exam, jame, gonna, shit, revis, preston, homework, kennedi, quid, il, fuck |

| 84 | deal, trade, remain, democraci, agreement, union, border, european, freedom, good_pay, david_davi, european_union, leaver, negoti, norway |

| 85 | sign_petit, petit, frack, ukchang, degre, nhs, petit_ukchang, sign_petit_ukchang, sign, gaza, privatis, open_letter, georg_osborn |

| 86 | presid, america, syria, cnn, health_care, american, gun, senat, white_hous, potus, congress, fox_news, presid_unit, presid_unit_state, fbi |

| 87 | ted, steve, neighbour, chris, math, podcast, sum, wealth, hey, parliament, ch, sky_news, gorilla, puzzl, worth_watch |

| 88 | barrel, rick, turner, swansea, church, tire, brighton, rugbi, mate, morn, julia, tobi, chick, dissert, briton |

| 89 | chef, restaur, menu, manchest, lunch, food, meal, open, dish, roast, good_food, dine, norman, citi_centr, pm_pm |

| 90 | librari, museum, cat, omg, jo, daniel, liter, ur, staff, pay, patron, windsor, charlott, printer, citizenship |

| 91 | ni, nigeria, lawyer, african, corrupt, parcel, bbcradio, lol, journo, arrest, astronaut, eye_open, hoo, detain, wk |

| 92 | karaok, drink, gin, bake, tire, cake, nottingham, beer, dave, lil, gemma, cider, burnley, lush, buffet |

| 93 | choir, experi, care, young_peopl, ne, scotland, wee, kenni, glasgow, edinburgh, portray, peopl_care, jk_rowl, show_support, rowl |

| 94 | carter, saint, billi, st, fa, dan, ranger, bbcsport, band, radio, show_tonight, nra, curl, di, andi_murray |

| 95 | frog, level, skill, cunt, sugar, committe, divin, nhs, suspect, holland, jeremi_hunt, coffin, rag, blog, fascist |

| 96 | theapprentic, ps, nintendo, xbox, switch, mario, episod, game, star_war, trailer, ea, batman, starwar, consol |

| 97 | raf, york, scotland, poppi, veteran, fraser, soldier, jim, store, boss, primark, lili, today_rememb, case_miss, bbcradio |

| 98 | west_ham, spur, ham, salah, everton, kane, chelsea, klopp, pogba, lukaku, cl, mane, hazard, fuck, midfield |

| 99 | blackpool, wimbledon, itv, liverpool, strict, fabul, tenni, eurovis, ff, manutd, casualti, handsom, watson, saturday_night, im |

| 100 | vegan, hunt, anim, lash, rescu, wildlif, fox, rickygervai, protect, meat, rhino, endang, extinct, eleph |

| 101 | dart, mark, piersmorgan, lbc, pint, proud, midland, yep, kthopkin, twat, wetherspoon, hood, lap, chuck, rickygervai |

| 102 | leed, veggi, daughter, gbbo, rat, mum, charlott, sew, kim, advert, elton, usernam, crappi, amber, fuck |

| 103 | rio, euro, olymp, gold, medal, doctorwho, bridg, wale, silver, itv, andi_murray, world_record, showcas, pursuit, bbcsport |

| 104 | print, font, leed, design, poster, gig, mount, beer, brew, peter, ale, pale, punk, fest |

| 105 | album, gaga, omg, song, ariana, kate, meghan, singl, tour, icon, good_song, rihanna, banger, beyonc, nicki |

| 106 | economi, rural, britain, unemploy, budget, growth, chancellor, union, bn, westminst, commonwealth, margaret_thatcher, good_futur, gdp, booth |

| 107 | bowi, gig, david_bowi, album, david, band, music, ticket, tour, vinyl, great_weekend, tix, acoust, happi_friday, pre_order |

| 108 | mate, lincoln, lad, haha, newcastl, sunderland, hahaha, aye, dean, cunt, mate_good, morri, hahahahaha, ashley, fuck |

| 109 | muslim, islam, migrant, rape, tommi, ukrain, religion, gang, tommi_robinson, immigr, mosqu, free_speech, hate_crime, merkel, groom |

| 110 | celtic, ranger, rodger, wit, hes, scottish, bn, robert, brendan, mcgregor, iv, lennon, gerrard, daft, midfield |

| 111 | pin, fanci, newcastl, iphon, favorit, fab, nowplay, nois, bike, wear, christma_dinner, cube, lamp, rickygervai, bicycl |

| 112 | retail, water, ceo, energi, custom, market, gas, sector, regul, innov, supplier, icymi, storag, resili |

| 113 | yoga, theatr, delici, fabul, cast, workout, drag, mac, audit, dinner, repost, luv, nightclub, popcorn, rehears |

| 114 | foodbank, poverti, nhs, wage, worker, crisi, fund, wick, solidar, food_bank, grayl, live_wage, privatis, hunger, comrad |

| 115 | irl, ass, pokemon, damn, movi, dude, meme, bc, charact, shit, wizard, behold, vampir, worm, demon |

| 116 | franci, scout, shift, tattoo, beer, german, tbh, world_cup, worldcup, incid, navi, ballot, houston, troop, sadiq |

| 117 | black, smh, nah, bro, ass, mad, girl, lmao, drake, tryna, pierc, tl, black_man, black_peopl, hoe |

| 118 | cruis, safeti, driver, bbc_news, fire, leicest, car, vegetarian, member, union, exposur, fleet, trail, england_wale, fog |

| 119 | world_cup, goal, cup, fulham, footbal, leagu, worldcup, premier_leagu, sterl, england, huddersfield, pep, southgat, manchest_citi, colombia |

| 120 | franchis, agent, properti, episod, bro, soundcloud, ms, review, lol, itun, buyer, estat, mortgag, seller, xx |

| 121 | lfc, liverpool, belfast, klopp, anfield, suarez, xx, hillsborough, gerrard, lui, ski, salah |

| 122 | ironi, racist, rail, remain, ban, odd, invent, lefti, sane, tho, appropri, dodgi, mph, impli, nearbi |

| 123 | panel, energi, solar, hill, climat, local, council, sustain, green, winner, borough, effici, org, st_centuri, coal |

| 124 | outdoor, mountain, spotifi, lake, peak, trail, summit, district, honey, walk, moss, sculptur, vintag, nowplay, yorkshir |

| 125 | loveisland, georgia, alex, megan, laura, wes, love_island, liverpool, adam, beyonc, madonna, kyli, loyal, xx |

| 126 | church, fr, pray, mass, bishop, priest, cathol, mari, feast, worship, ministri, christ |

| 127 | villa, aston, cricket, dr, premier_leagu, xi, premier, england, score, mini, sydney, russia, off, good_lad, hugh |

| 128 | xxx, fab, xx, xxxx, wonder, love_show, gorgeous, smile, daughter, ador, make_smile, daisi, mt, hope_feel, bravo |

| 129 | photographi, cornwal, photograph, bird, sunset, essex, natur, wolf, photo, van, je, mere, sunris, bonfir, wildlif |

| 130 | carbon, thread, cox, bark, artist, andrea, tran, cc, jersey, energi, orient, fossil, solar, mt, word_day |

| 131 | calm, london, friday, airport, languag, uniqu, googl, ad, gatwick, german, camden, fur, den, paddington, croatia |

| 132 | welsh, cardiff, wale, languag, member, independ, william, group, english, facebook, renam, mud, royal_famili, christma_card, dump |

| 133 | recruit, warehous, graduat, virginmedia, email, glasgow, author, bobbi, web, slight, rupert, ten_minut, murdoch, amanda, email_address |

| 134 | mrjamesob, gun, gender, feminist, dumb, argument, dude, disagre, ideolog, nazi, alt, rifl, notch, ration, virtu |

| 135 | tom, jen, tit, nowplay, pint, doo, wrestl, fool, telli, tbh, minc_pie, minc, preston, fuck |

| 136 | christian, bibl, jesus, christ, ye, church, sin, pray, god, faith, satan, worship, vers, atheist, flesh |