Toward Data-centric Directed Graph Learning: An Entropy-driven Approach

Abstract

The directed graph (digraph), as a generalization of undirected graphs, exhibits superior representation capability in modeling complex topology systems and has garnered considerable attention in recent years. Despite the notable efforts made by existing DiGraph Neural Networks (DiGNNs) to leverage directed edges, they still fail to comprehensively delve into the abundant data knowledge concealed in the digraphs. This data-level limitation results in model-level sub-optimal predictive performance and underscores the necessity of further exploring the potential correlations between the directed edges (topology) and node profiles (feature and labels) from a data-centric perspective, thereby empowering model-centric neural networks with stronger encoding capabilities.

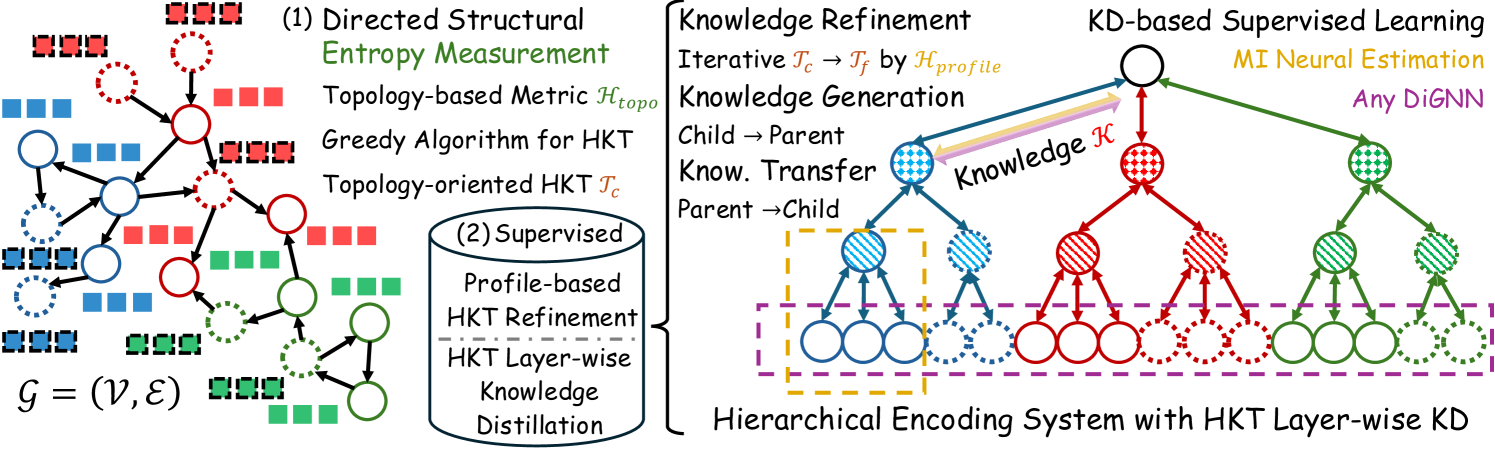

In this paper, we propose Entropy-driven Digraph knowlEdge distillatioN (EDEN), which can serve as a data-centric digraph learning paradigm or a model-agnostic hot-and-plug data-centric Knowledge Distillation (KD) module. The core idea is to achieve data-centric ML, guided by our proposed hierarchical encoding theory for structured data. Specifically, EDEN first utilizes directed structural measurements from a topology perspective to construct a coarse-grained Hierarchical Knowledge Tree (HKT). Subsequently, EDEN quantifies the mutual information of node profiles to refine knowledge flow in the HKT, enabling data-centric KD supervision within model training. As a general framework, EDEN can also naturally extend to undirected scenarios and demonstrate satisfactory performance. In our experiments, EDEN has been widely evaluated on 14 (di)graph datasets (homophily and heterophily) and across 4 downstream tasks. The results demonstrate that EDEN attains SOTA performance and exhibits strong improvement for prevalent (Di)GNNs.

1 Introduction

Recently, Graph Neural Networks (GNNs) have achieved SOTA performance across node- (Wu et al., 2019; Hu et al., 2021; Li et al., 2024b), link- (Zhang & Chen, 2018; Tan et al., 2023), graph-level tasks (Zhang et al., 2019; Yang et al., 2022). However, most GNNs are tailored for undirected scenarios, resulting in a cascade of negative impacts:

(1) Data-level sub-optimal representation: Due to the complex structural patterns present in the real world, the absence of directed topology limits the captured relational information, thereby resulting in sub-optimal data representations with inevitable information loss (Koke & Cremers, 2023; Geisler et al., 2023; Maekawa et al., 2023); (2) Model-level inefficient learning: The optimization dilemma arises when powerful GNNs are applied to sub-optimal data. For instance, undirected GNNs struggle to analyze the connective rules among nodes in the entanglement of homophily and heterophily (i.e., whether connected nodes have similar features or same labels) (Luan et al., 2022; Zheng et al., 2022; Platonov et al., 2023) due to neglect the valuable directed topology (Rossi et al., 2023; Maekawa et al., 2023; Sun et al., 2024). This oversight compels the undirected methods to rely heavily on well-designed models or tricky theoretical assumptions to remedy the neglect of directed topology.

To break these limitations, Directed GNNs (DiGNNs) are proposed to capture this data complexity (Rossi et al., 2023; Sun et al., 2024; Li et al., 2024a). Despite advancements in existing model design considering asymmetric topology, these methods still fail to fully explore the potential correlations between directed topology and node profiles at the data level. Notably, this potential correlation extends beyond directed edges and high-order neighbors to unseen but pivotal structural patterns (aka., digraph data knowledge).

Therefore, we emphasize revealing this data knowledge from a data-centric perspective to improve the learning utility of model-centric approaches fundamentally. Specifically, (1) Topology: unlike undirected graphs, directed topology offers node pairs or groups a more diverse range of connection patterns, implying abundant structural knowledge; (2) Profile: digraph nodes present greater potential for more sophisticated profile knowledge caused by directed edges when compared to nodes in the undirected graph that often present with predominant homophily (Ma et al., 2021; Luan et al., 2022; Zheng et al., 2022). The two above perspectives form the basis of digraphs (i.e., the entanglement of directed topology and node profiles). The core of our approach is disentangling this complexity with our proposed data-centric hierarchical encoding system. For the motivation and key insights behind this framework, please refer to Sec. 2.2.

To this end, we propose Entropy-driven Digraph knowlEdge distillatioN (EDEN). As a general Knowledge Distillation (KD) strategy, EDEN seamlessly integrates data knowledge into model training as supervised information to obtain the optimal embeddings for downstream tasks. Specifically, EDEN first employs directed structural measurement as a quantification metric to capture the natural evolution of directed topology, thereby constructing a Hierarchical Knowledge Tree (HKT) (topology perspective). Subsequently, EDEN refines the HKT with fine-grained adjustments based on the Mutual Information (MI) of node profiles, regulating the knowledge flow (profile perspective). Based on this, EDEN can be viewed as a new data-centric DiGNN or a hot-and-plug data-centric online KD strategy for existing DiGNNs. Notably, while we highlight the importance of EDEN in extracting intricate digraph data knowledge, it can naturally extend to undirected scenarios and exhibit satisfactory performance. More details can be found in Sec. 4.1.

Our contributions. (1) New Perspective. To the best of our knowledge, EDEN is the first attempt to achieve hierarchical data-level KD. It offers a new and feasible perspective for data-centric graph ML. (2) Unified Framework. EDEN facilitates data-centric digraph learning through the establishment of a fine-grained HKT from topology and profile perspectives. It contributes to discovering unseen but valuable structural patterns concealed in the digraph to improve data utility. (3) Flexible Method. EDEN can be regarded as a new data-centric digraph learning paradigm. Furthermore, it can also serve as a model-agnostic hot-and-plug data-centric KD module, seamlessly integrating with existing DiGNNs to improve predictions. (4) SOTA Performance. Extensive experiments demonstrate that EDEN consistently outperforms the best baselines (up to 3.12% higher). Moreover, it provides a substantial positive impact on prevalent (Di)GNNs (up to 4.96% improvement).

2 Preliminaries

2.1 Notations and Problem Formulation

We consider a digraph with nodes, edges. Each node has a feature vector of size and a one-hot label of size , the feature and label matrix are represented as and . can be described by an asymmetrical adjacency matrix . is the corresponding degree matrix. Typical digraph-based downstream tasks are as follows.

Node-level Classification. Suppose is the labeled set, the semi-supervised node classification paradigm aims to predict the labels for nodes in the unlabeled set with the supervision of . For convenience, we call it Node-C.

Link-level Prediction. (1) Existence: predict if exists in the edge sets; (2) Direction: predict the edge direction of pairs of nodes for which either or ; (3) Three-class link classification (Link-C): classify , or .

2.2 Hierarchical Encoding Theory in Structured Data

Inspired by the information theory of structured data (Li & Pan, 2016), let be a real-world digraph influenced by natural noise. We define its information entropy from topology and profile perspectives, where determines the true structure , and data knowledge is concealed in . The assumptions about these definitions are as follows:

Assumption 2.1.

The information entropy is captured by the directed topology and profile-based hierarchical encoding system, reflecting the uncertainty of complex systems.

Assumption 2.2.

The true structure is obtained by minimizing , reflecting the natural organization of nodes.

Assumption 2.3.

The data knowledge forms the foundation of and is concealed in the , which is used to optimize the trainable hierarchical encoding system.

Based on these assumptions, we adhere to the traditional hierarchical encoding theory (Byrne & Russon, 1998; Dittenbach et al., 2002; Clauset et al., 2008) to establish a novel data-centric digraph learning paradigm shown in Fig. 1. This paradigm standardizes the evolution of structured data in physical systems, inspiring the notion of decoding this naturally structured knowledge for analyzing complex digraphs. In other words, this trainable encoding system progressively captures the information needed to determine nodes, such as their positions uniquely. From this, the encoded result constitutes knowledge residing within the true structure . Subsequently, applying KD on extracted from optimizes the encoding system to achieve iterative training. The above concepts form the core of our motivation.

Notably, the directed structural measurement and node MI in Fig. 1 aim to uncover the topology and profile complexity. Based on this, we efficiently compress information, reduce redundancy, and reveal hierarchical structures that capture subtle data knowledge often overlooked by previous studies. In other words, we minimize uncertainty and noise in , revealing the underlying true structure , which captures the layered organization of the data’s inherent evolution. This allows us to effectively decode the underlying knowledge , corresponding to the HKT in EDEN. This theoretical hypothesis has been widely applied in recent years, driving significant research advancements in graph contrastive learning (Wu et al., 2023; Wang et al., 2023) and graph structure learning (Zou et al., 2023; Duan et al., 2024).

In this paper, we adopt a data-centric perspective, which we believe has been overlooked in previous studies. Specifically, we investigate the potential of data-level KD as supervised information to enhance model-centric (Di)GNNs. The core intuition behind our approach is that data quality often limits the upper bound for model performance (Yang et al., 2023; Zheng et al., 2023; Liu et al., 2023). By leveraging HKT, we can break this data-level limitation. This is particularly relevant for digraphs, where intricate directed causal relationships demand deeper exploration. However, our approach can also be naturally extended to undirected graphs. For further discussion on our proposed HKT and hierarchical graph clustering, please refer to Appendix A.1.

2.3 Digraph Representation Learning

To obtain digraph node embeddings, both spectral (Zhang et al., 2021c; Lin & Gao, 2023; Koke & Cremers, 2023; Li et al., 2024a) and spatial (Tong et al., 2020b, a; Zhou et al., 2022; Rossi et al., 2023; Sun et al., 2024) methods are proposed. Specifically, to implement spectral convolution on digraphs with theoretical guarantees, the core is to depend on holomorphic (Duong & Robinson, 1996) filters or obtain a symmetric (conjugated) digraph Laplacian based on PageRank (Andersen et al., 2006) or magnetic Laplacian (Chung, 2005). Regarding spatial methods, researchers draw inspiration from the message-passing mechanisms that account for directed edges. They commonly employ independently learnable weights for in- and out-neighbors to fuse node representations (He et al., 2022b; Kollias et al., 2022; Sun et al., 2024).

2.4 Entropy-driven MI Neural Estimation

Information entropy originates from the practical need for measuring uncertainty in communication systems (Shannon, 1948). Motivated by this application, MI measures the dependence between two random variables. Based on this, Infomax (Linsker, 1988) maximizes the MI between inputs (features) and outputs (predictions), concentrating the encoding system more on frequently occurring patterns. To effectively estimate MI, MINE (Belghazi et al., 2018) uses the DV (Pinsky, 1985) representation to approximate the KL divergence closely associated with MI. It achieves neural estimation of MI by parameterizing the function family as a neural network and gradually raising a tight lower bound through gradient descent. Motivated by these key insights, DGI (Veličković et al., 2019) proposes graph Infomax to guide the contrastive learning process. GMI (Peng et al., 2020) maximizes the MI between the current node and its neighbors, effectively aggregating features. CoGSL (Liu et al., 2022) optimizes graph view generation and fusion through MI to guide graph structure learning.

3 Methodology

The core idea of EDEN is to fully leverage the digraph data knowledge to empower model training. As a data-centric online KD framework, EDEN achieves mutual evolution between teachers and students (i.e., parent and child nodes in the HKT) shown in Fig. 2. To avoid confusion between the data-level online KD and the model-level offline KD (i.e., large teacher model and lightweight student model), we provide a detailed explanation in Appendix A.2.

Step 1: Knowledge Discovery: (a) To begin with, we employ directed topology measurement as a quantification metric to construct a coarse-grained HKT; (b) Based on this, we perform node MI neural estimation. Through gradient descent, we regulate the knowledge flow to obtain fine-grained HKT.

Step 2: Knowledge Distillation: Then, we denote parent and child nodes within the same corrected partition as teachers and students to achieve online KD. Specifically, we propose node-adaptive knowledge generation and transfer operators.

Step 3: Leaf Prediction: Finally, we generate leaf predictions (i.e., original digraph nodes) for downstream tasks. In this process, to harness rich knowledge from the HKT, we employ random walk to capture multi-level representations from their parents and siblings to improve predictions.

3.1 Multi-perspective Knowledge Discovery

In the context of digraph learning, original data have two pivotal components: (1) Topology describes the intricate connection patterns among nodes; (2) Profile uniquely identifies each node. If knowledge discovery focuses on only one aspect, it would lead to coarse-grained knowledge and sub-optimal distillation. To avoid this, EDEN first conducts topology mining to enrich subsequent profile mining, and collectively, establish a robust foundation for effective KD.

Topology-aware structural measurement. In a highly connected digraph, nodes frequently interact with their neighbors. By employing random walks (Pearson, 1905), we can capture these interactions and introduce entropy as a measure of topology uncertainty (Li & Pan, 2016). Specifically, we can quantify one-dimensional structural measurement of by leveraging the stationary distribution of its degrees and the Shannon entropy, which is formally defined as:

| (1) |

where and are in and out-degrees of digraph node. Based on this, to achieve high-order topology mining, let be a partition of , where denotes a community. To this point, we can define the two-dimensional structural measurement of by as follows:

| (2) | ||||

where , and are the nodes and the number of directed edges with end-point/start-point in the partition . Notably, real-world digraphs commonly exhibit hierarchical structure, extending Eq. (2) to higher dimensions. Consequently, we leverage -height partition tree (Appendix A.3) to obtain -dimensional formulation:

| (3) | ||||

where is the parent of and is the root node of the HKT, and are the number of directed edges from other partitions to the current partition and from the current partition to other partitions, at the node level.

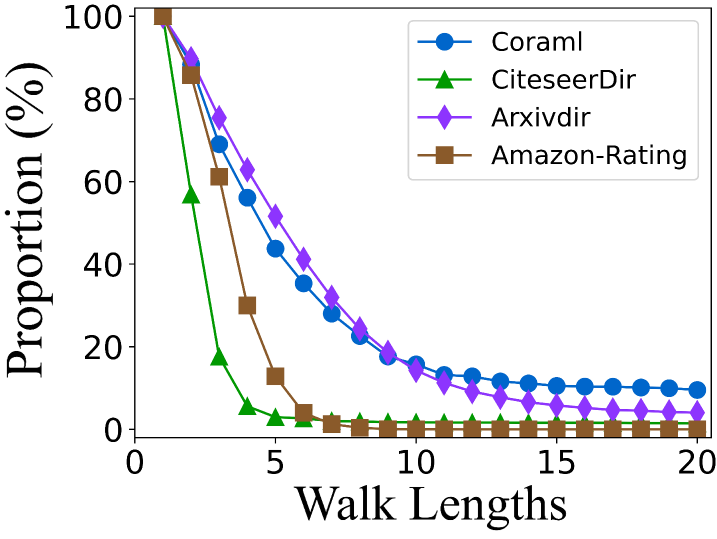

Coarse-grained HKT construction. In contrast to the topology measurements defined in previous work (Li & Pan, 2016), EDEN addresses the limitations of forward-only random walks by incorporating reverse probability. This modification is motivated by the non-strongly connected nature of most digraphs, where the proportion of complete walk paths declines sharply after only five steps (shown in Appendix A.4). This decline suggests that strictly adhering to edge directions in walks (forward-only) fails to capture sufficient information beyond the immediate neighborhood of the starting node. Furthermore, we add self-loops for sink nodes to prevent the scenario where the adjacency matrix might be a zero power and ensure that the sum of landing probabilities is 1. Based on this, we utilize Eq. (3) as a metric and employ a greedy algorithm (DeVore & Temlyakov, 1996) to seek the optimal HKT that minimizes uncertainty. For a detailed algorithm, please refer to Appendix A.5.

Profile-aware node measurement. As previously pointed out, node profiles play an equally pivotal role in digraph learning, which means that the topology measurement alone is insufficient to reflect the true structure. Therefore, we aim to leverage node profiles to fine-tune HKT for KD. The key insight is to emphasize high node similarity within the same partition while ensuring differences across distinct partitions. This is to retain authority in the parent nodes (teachers) and avoid the reception of misleading knowledge by the child nodes (students). To achieve our targets, we introduce intra- and inter-partition node MI neural estimation. The former retains nodes with higher MI within the current partition. These nodes not only serve as effective representations of the current partition but also inherit partition criteria based on topology measurement. The latter identifies nodes in other partitions that effectively represent their own partitions while exhibiting high MI with the current partition. We can adjust the affiliations of these nodes to improve HKT.

Partition-based MI neural estimation. Before introducing our method, we provide a formalized definition as follows. For current partition , we first sample a subset consisting of nodes from and other partitions at the same HKT height (more details can be found in Appendix A.6). Then, we employ a criterion function to quantify the information of , aiming to find the most informative subset for generating knowledge about by solving the problem , subject to . In our implementation, we formulate for based on the neural MI estimator between nodes and their generalized neighborhoods, capturing the neighborhood representation capability of nodes. Based on this, we derive the following theorems related to MI neural estimation for structured data, guiding the design of a criterion function for HKT partitions.

Theorem 3.1.

Let be the HKT in a digraph . For any selected node and in the subset , we define their generalized neighborhoods as and . Given and as an example, consider random variables and as their unique node (sets) features, the lower bound of MI between and its generalized neighborhoods is given by the KL divergence between the joint distribution and the product of marginal distributions can be defined as follows:

| (4) | ||||

where represents the randomly selected node in except for . This lower bound is derived from the -divergence representation based on KL divergence. is an arbitrary function that maps a pair of the node and its generalized neighborhoods to a real value, reflecting the dependency.

Theorem 3.2.

The lower bound in Theorem 3.1 can be converted to f-divergence representations based on non-KL divergence. This GAN-like divergence for structured data is formally defined as:

| (5) | ||||

where is the activation function. Since solving across the entire function space is practically infeasible, we employ a neural network parameterized by .

Theorem 3.3.

Through the optimization of , we obtain as the GAN-based node MI neural estimation for every partition within fine-grained HKT:

| (6) | ||||

The two terms capture the dependency and difference between selected nodes and their neighborhoods.

Fine-grained HKT correction. Based on the above theorems, we instantiate the intra-partition MI:

| (7) |

where is an embedding function designed to quantify node MI by maximizing intra-partition similarity, is a model-agnostic digraph learning function, and and are embedding functions for selected nodes and their generalized neighborhoods. Building upon this, we extend Eq. (7) to the inter-partition scenario, enabling the discovery of potential nodes that exhibit high MI with and inherit the directed structure measurement criteria of :

| (8) |

Notably, the above equations share and , as they are both used for encoding the current node and corresponding generalized neighborhoods. In our implementation, and are instantiated as MLP and the linear layer. Furthermore, we combine it with Sec. 3.2 to reduce complexity. Detailed proofs of the theorems can be found in Appendix A.6-A.8.

3.2 Node-adaptive Knowledge Distillation

Knowledge Generation. After considering the distinctness of nodes, we obtain for the current partition by solving Eq. (6), where comprises nodes selected from and other partitions . Now, we compute an affinity score for each sampling node in based on their unique roles given by HKT, where is the nodes from the current partition, and is the nodes obtained by performing partition-by-partition sampling of the other partitions. The sampling process is limited by the number of nodes in .

| (9) | ||||

where and are used to discover the knowledge related to the current partition. However, this strategy often causes over-fitting. Therefore, we introduce to bring diverse knowledge from other partitions. Specifically, we aim to identify and emphasize nodes that, while representing other partitions, exhibit significant differences from the current partition by . Finally, we obtain the parent representation of by .

Knowledge Transfer. In this section, we introduce personalized knowledge transfer from the parent node (teacher) to the child nodes (student) under partition . The key insights are as follows: (1) For parent nodes, not all knowledge is clearly expressible, implying that class knowledge hidden in embeddings or soft labels may be ambiguous. (2) For child nodes, each node has a unique digraph context, causing various knowledge requirements. Therefore, we consider the trade-off between the knowledge held by the parent node and the specific requirements of child nodes.

Specifically, we first refine the knowledge hidden in the parent node through . Then, we aim to capture the diverse requirements of child nodes in knowledge transfer by to achieve personalized transfer. Similar to Sec. 3.1, we employ MLP to instantiate . To this point, we have built an end-to-end framework for the mutual evolution of teacher and student by the data-level online KD loss:

| (10) | ||||

3.3 Random walk-based Leaf Prediction

Now, we have obtained representations for all nodes in the HKT. Then, our focus shifts to generating leaf-centered predictions for various downstream tasks. To improve performance, a natural idea is to leverage the multi-level representations, including siblings and higher-level parents of the current leaf node, to provide a more informative context. Therefore, we employ the tree-based random walk to obtain this embedding sequence. However, given a receptive field, the number of paths is greater than the number of nodes, employing all paths becomes impractical, especially with a large receptive field. To gather more information with fewer paths in the search space, we define walk rules based on the specific downstream task. Specifically, we concentrate on sampling siblings () to capture same-level representation for link-level tasks. Conversely, for node-level tasks, we prioritize sampling from parents () or children () to acquire multi-level representations. Consider a random walk on edge , currently at node and moving to the next node . The transition probability is set as follows:

| (11) |

Then, we concat the -step random walk results (i.e., node sequence) to obtain for each leaf node. After that, the leaf-centered prediction and overall optimization with -flexible KD and MLP instantiated are formally defined as (please refer to Appendix A.9 for complexity analysis):

| (12) | ||||

3.4 Lightweight EDEN Implementation

As a data-centric framework, EDEN implements HKT-driven data KD. This framework offers new insights and tools for advancing data-centric graph ML. However, scalability remains a bottleneck in our approach, and we aim to propose feasible solutions to enhance its efficiency. Specifically, we implement a lightweight EDEN as outlined below.

Lightweight Coarse-grained HKT Construction. As detailed in Algorithm 1-2 of Appendix A.5, we introduce Monte Carlo methods, which select potential node options rather than optimal ones before detaching and merging. This approach involves running multiple Monte Carlo simulations, where nodes are randomly chosen in each run to generate various candidate solutions. An optimal or near-optimal solution is then selected for execution.

Lightweight Fine-grained HKT Construction. For node MI neural estimation, computational efficiency can be further optimized using incremental training and prototype representation for label-specific child and parent nodes. This training and embedding representation method will significantly reduce the computational overhead.

Lightweight Layer-wise Digraph Learning Function. We can obtain node representations through weight-free feature propagation, a computationally efficient embedding method that has proven effective in recent studies (Wu et al., 2019; Zhang et al., 2022; Li et al., 2024b). Through this design, we significantly reduce the number of learnable parameters and achieve efficient gradient updates.

| Datasets () | Slashdot | WikiTalk | ||||||||

|---|---|---|---|---|---|---|---|---|---|---|

| Tasks () | Existence | Direction | Link-C | Existence | Direction | Link-C | ||||

| Models () | AUC | AP | AUC | AP | ACC | AUC | AP | AUC | AP | ACC |

| GCN | 88.4±0.1 | 88.6±0.1 | 90.1±0.1 | 90.2±0.1 | 83.8±0.2 | 92.4±0.1 | 92.3±0.0 | 86.5±0.2 | 87.1±0.1 | 84.6±0.2 |

| GAT | 88.1±0.2 | 88.4±0.1 | 90.4±0.2 | 90.5±0.1 | 83.5±0.3 | OOM | OOM | OOM | OOM | OOM |

| NSTE | 90.6±0.1 | 90.8±0.0 | 92.2±0.1 | 92.4±0.0 | 85.4±0.2 | 94.4±0.1 | 94.6±0.1 | 90.7±0.1 | 90.0±0.0 | 90.4±0.1 |

| Dir-GNN | 90.4±0.1 | 90.5±0.0 | 92.0±0.1 | 91.8±0.1 | 85.2±0.2 | 94.7±0.2 | 94.3±0.1 | 90.9±0.1 | 90.3±0.1 | 90.6±0.2 |

| MagNet | 90.3±0.1 | 90.2±0.1 | 92.2±0.2 | 92.4±0.1 | 85.3±0.1 | OOM | OOM | OOM | OOM | OOM |

| MGC | 90.1±0.1 | 90.4±0.0 | 92.1±0.1 | 92.3±0.1 | 85.0±0.1 | 94.5±0.1 | 94.2±0.0 | 90.6±0.1 | 90.2±0.0 | 90.1±0.1 |

| EDEN | 91.8±0.1 | 92.0±0.0 | 93.3±0.1 | 93.1±0.0 | 87.1±0.2 | 95.4±0.1 | 95.8±0.1 | 91.5±0.0 | 91.7±0.1 | 91.0±0.1 |

| Models | CoraML | CiteSeer | WikiCS | Arxiv | Photo | Computer | PPI | Flickr | Improv. |

|---|---|---|---|---|---|---|---|---|---|

| OptBG | 81.5±0.7 | 62.4±0.7 | 77.9±0.4 | 66.4±0.4 | 91.5±0.5 | 82.8±0.5 | 57.2±0.2 | 50.9±0.3 | 2.75 |

| OptBG+EDEN | 82.8±0.6 | 64.6±0.8 | 79.4±0.3 | 67.9±0.4 | 93.9±0.6 | 84.9±0.6 | 59.8±0.3 | 52.8±0.4 | |

| NAG | 81.2±0.9 | 62.5±0.9 | 78.3±0.3 | 65.9±0.5 | 91.3±0.7 | 83.1±0.4 | 57.1±0.2 | 51.2±0.4 | 2.54 |

| NAG+EDEN | 83.0±0.9 | 64.8±0.7 | 79.8±0.4 | 67.3±0.4 | 93.6±0.8 | 85.2±0.5 | 59.2±0.2 | 52.5±0.4 | |

| DIMPA | 82.4±0.6 | 64.0±0.8 | 78.8±0.4 | 67.1±0.3 | 91.4±0.6 | 82.4±0.5 | 56.7±0.3 | 50.5±0.3 | 4.32 |

| DIMPA+EDEN | 85.4±0.5 | 66.9±0.7 | 82.2±0.5 | 69.9±0.3 | 94.1±0.7 | 85.1±0.5 | 59.5±0.4 | 52.9±0.2 | |

| Dir-GNN | 82.6±0.6 | 64.5±0.6 | 79.1±0.4 | 66.9±0.4 | 91.1±0.5 | 82.9±0.6 | 56.8±0.3 | 50.8±0.4 | 4.68 |

| Dir-GNN+EDEN | 85.9±0.4 | 67.2±0.5 | 82.8±0.3 | 70.5±0.3 | 93.8±0.5 | 84.8±0.7 | 59.4±0.3 | 53.1±0.3 | |

| HoloNet | 82.5±0.5 | 64.1±0.7 | 79.2±0.3 | 67.5±0.2 | 90.8±0.5 | 83.0±0.6 | 57.0±0.3 | 51.0±0.4 | 4.46 |

| HoloNet+EDEN | 86.0±0.4 | 67.5±0.6 | 82.6±0.2 | 70.8±0.3 | 93.7±0.5 | 85.3±0.5 | 59.5±0.5 | 53.4±0.5 |

4 Experiments

In this section, we aim to offer a comprehensive evaluation and address the following questions: Q1: How does EDEN perform as a new data-centric DiGNN? Q2: As a hot-and-plug data online KD module, what is its impact on the prevalent (Di)GNNs? Q3: If EDEN is effective, what contributes to its performance? Q4: What is the running efficiency of EDEN? Q5: How robust is EDEN when dealing with hyperparameters and sparse scenarios? To maximize the usage for the constraint space, we will introduce datasets, baselines, and experiment settings in Appendix A.10-A.13.

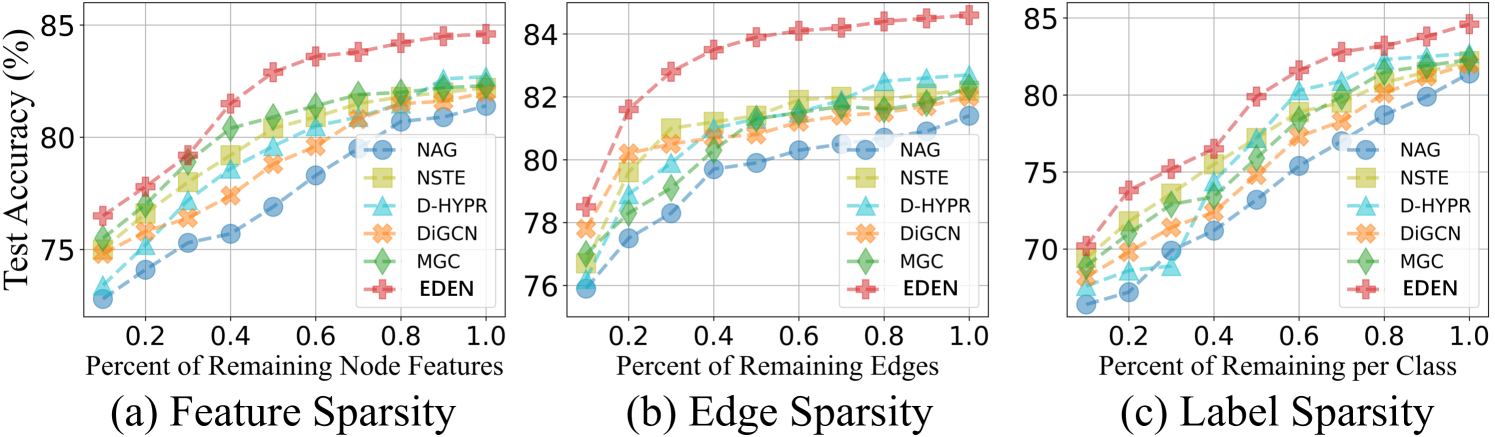

4.1 Performance Comparison

| Models | CoraML | CiteSeer | WikiCS | Tolokers | Empire | Rating | Arxiv |

| GCNII | 80.8±0.5 | 62.5±0.6 | 78.1±0.3 | 78.5±0.1 | 76.3±0.4 | 42.3±0.5 | 65.4±0.3 |

| GATv2 | 81.3±0.9 | 62.8±0.9 | 78.0±0.4 | 78.8±0.2 | 78.2±0.9 | 43.8±0.6 | 66.7±0.3 |

| AGT | 81.2±0.8 | 62.9±0.8 | 78.3±0.3 | 78.5±0.2 | 77.6±0.7 | 43.6±0.4 | 66.2±0.4 |

| DGCN | 82.2±0.5 | 63.5±0.7 | 78.4±0.3 | 78.7±0.3 | 78.7±0.5 | 44.7±0.6 | 66.9±0.2 |

| DIMPA | 82.4±0.6 | 64.0±0.8 | 78.8±0.4 | 78.9±0.2 | 79.0±0.6 | 44.6±0.5 | 67.1±0.3 |

| D-HYPR | 82.7±0.4 | 63.8±0.7 | 78.7±0.2 | 79.2±0.2 | 78.8±0.5 | 44.9±0.5 | 66.8±0.3 |

| DiGCN | 82.0±0.6 | 63.9±0.5 | 79.0±0.3 | 79.1±0.3 | 78.4±0.6 | 44.3±0.7 | 67.1±0.3 |

| MagNet | 82.2±0.5 | 64.2±0.6 | 78.9±0.2 | 79.0±0.2 | 78.8±0.4 | 44.7±0.6 | 67.3±0.3 |

| HoloNet | 82.5±0.5 | 64.1±0.7 | 79.2±0.3 | 79.4±0.2 | 78.7±0.5 | 44.5±0.6 | 67.5±0.2 |

| EDEN | 84.6±0.5 | 65.8±0.6 | 81.4±0.3 | 81.3±0.2 | 81.1±0.6 | 46.3±0.4 | 69.7±0.3 |

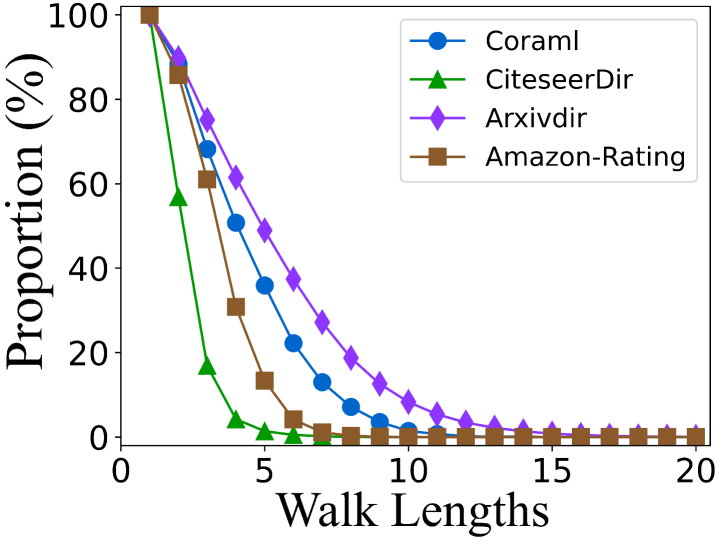

A New Digraph Learning Paradigm. To answer Q1, we present the performance of EDEN as a new data-centric DiGNN in the Table 1 and Table 3. According to reports, EDEN consistently achieves SOTA performance across all scenarios. Specifically, compared to baselines that intermittently achieve the second-best results, EDEN attains improvements of 2.78% and 2.24% on node- and link-level tasks. Notably, the design details of the HKT layer-wise digraph learning function can be found in Appendix A.11.

A Hot-and-plug Online KD Module. Subsequently, to answer Q2, we present performance gains achieved by incorporating EDEN as a hot-and-plug module into existing (Di)GNNs in Table 2 (deployment details can be found in Appendix A.11). Based on the results, we observe that EDEN performs better on digraphs and DiGNNs compared to undirected ones. This is because more abundant data knowledge is inherent in digraphs, providing stronger encoding potential for DiGNNs. EDEN is designed to meet this specific demand, thus showcasing superior performance. Notably, the performance of EDEN as a hot-and-plug module exceeds its performance as a self-reliant method in some cases. This is attributed to the adoption of a lightweight EDEN for running efficiency. While this approach sacrifices some accuracy, it significantly enhances scalability.

4.2 Ablation Study

To answer Q3, we present ablation study results in Table 4, evaluating the effectiveness of: (1) Diverse knowledge in Eq. (9) for over-fitting; (2) Node-adaptive personalized transfer for KD (Eq. (10)); (3) Tree-based random walk for leaf prediction (Eq. (11)); (4) KD loss function for the gradient interaction between teachers and students (Eq. (10)).

| Datasets() Modules() | Tolokers (ACC) | Slashdot (AUC) | ||

|---|---|---|---|---|

| Node-C | Link-C | Existence | Direction | |

| EDEN | 81.33±0.2 | 82.67±0.1 | 91.82±0.1 | 93.29±0.1 |

| w/o Diverse Knowledge | 80.90±0.4 | 82.22±0.3 | 91.40±0.2 | 92.96±0.2 |

| w/o Personalized Transfer | 80.84±0.2 | 82.34±0.1 | 91.49±0.1 | 93.01±0.1 |

| w/o Tree-based Random Walk | 80.67±0.3 | 82.18±0.2 | 91.16±0.1 | 92.77±0.1 |

| w/o Knowledge Distillation Loss | 80.01±0.3 | 81.10±0.1 | 90.84±0.1 | 92.25±0.1 |

| Datasets() Modules() | Rating (ACC) | Epinions (AUC) | ||

| Node-C | Link-C | Existence | Direction | |

| EDEN | 46.33±0.4 | 66.37±0.4 | 93.48±0.1 | 89.40±0.1 |

| w/o Diverse Knowledge | 45.76±0.6 | 66.03±0.5 | 93.11±0.2 | 89.02±0.1 |

| w/o Personalized Transfer | 45.92±0.3 | 65.94±0.3 | 93.05±0.1 | 89.04±0.1 |

| w/o Tree-based Random Walk | 45.84±0.4 | 65.72±0.5 | 93.02±0.1 | 88.99±0.1 |

| w/o Knowledge Distillation Loss | 45.45±0.5 | 65.09±0.4 | 92.57±0.1 | 88.61±0.1 |

Experimental results demonstrate a significant improvement by combining these modules, validating their effectiveness. Specifically, module (1) mitigates over-fitting issues caused by solely focusing on the current partition, achieving higher accuracy and lower variance. Module (2) affirms our key insight in Sec. 3.2, improving HKT-based KD. Module (3) indirectly underscores the validity of the EDEN, as the multi-level representations embedded in the HKT provide beneficial information for various downstream tasks. Finally, module (4) unifies the above modules into an end-to-end optimization framework to empower digraph learning.

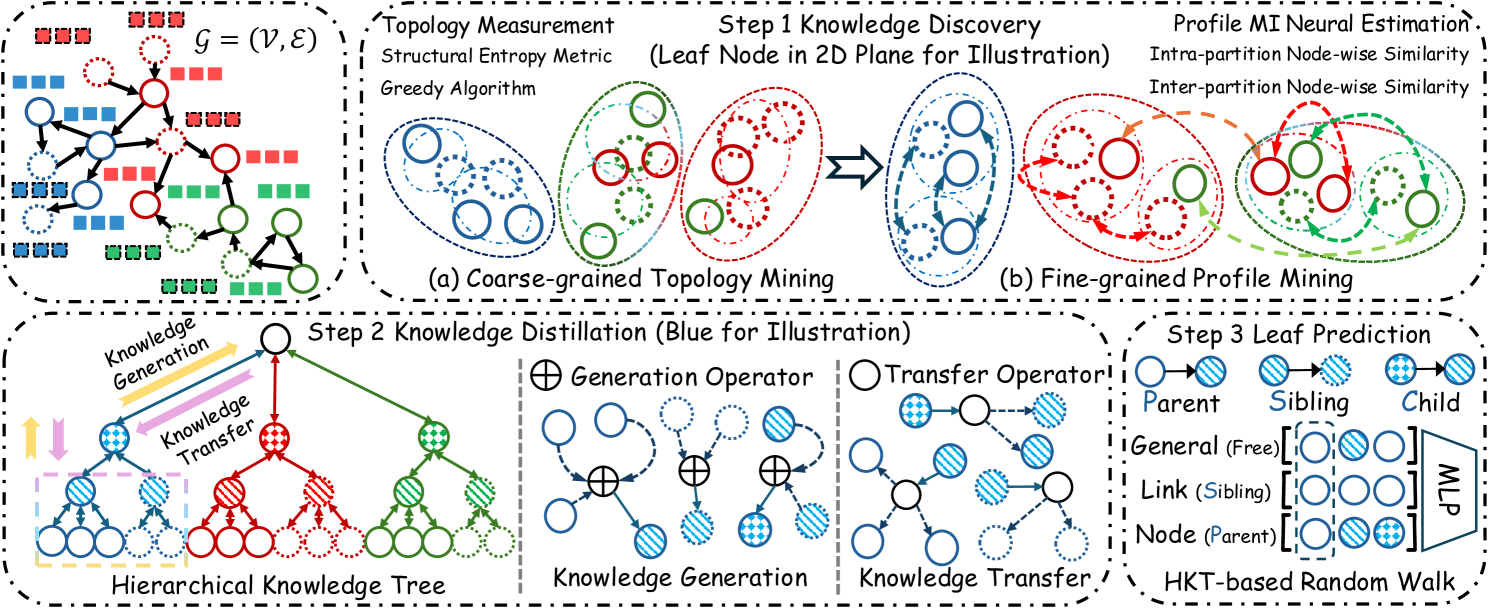

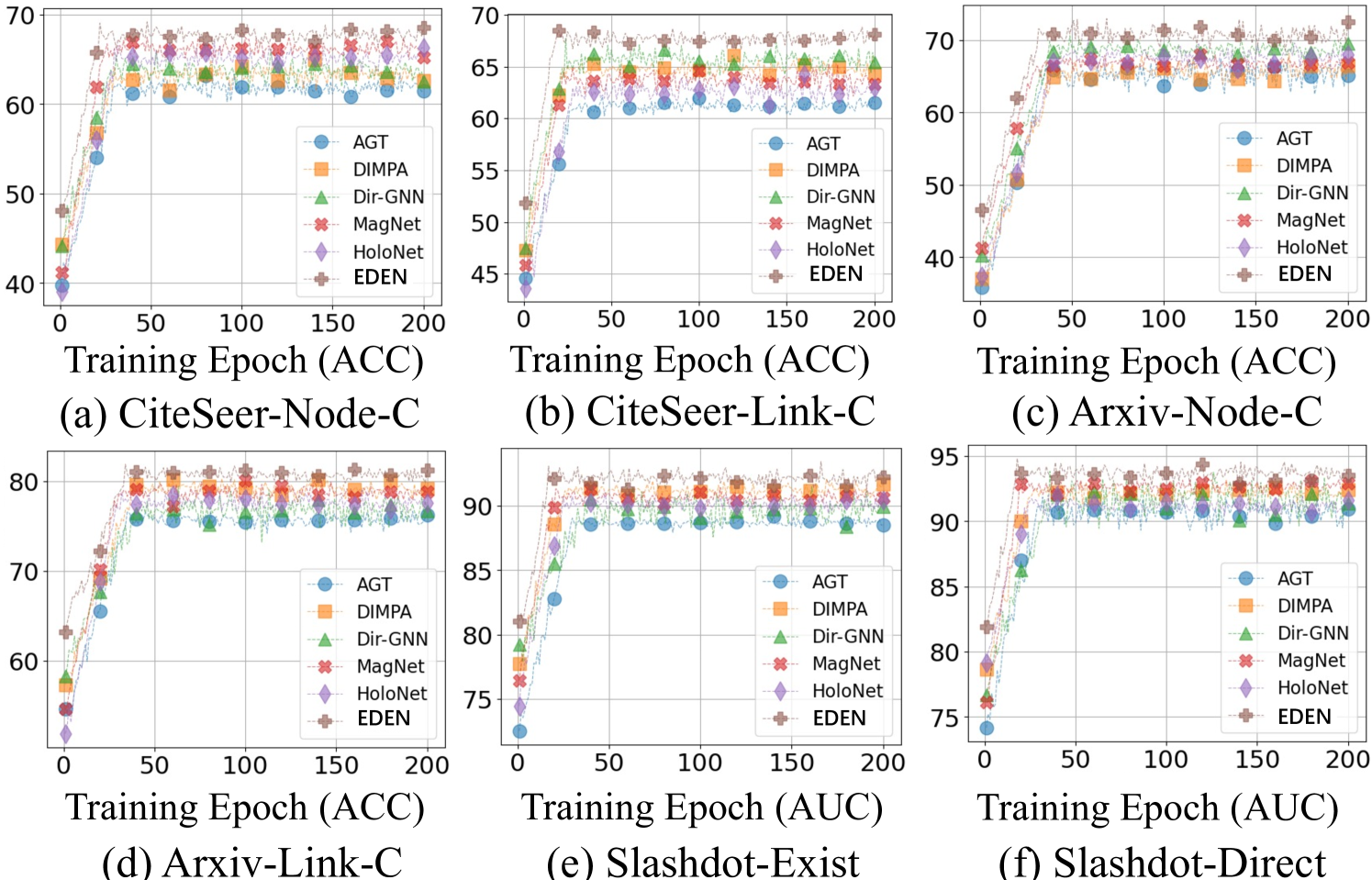

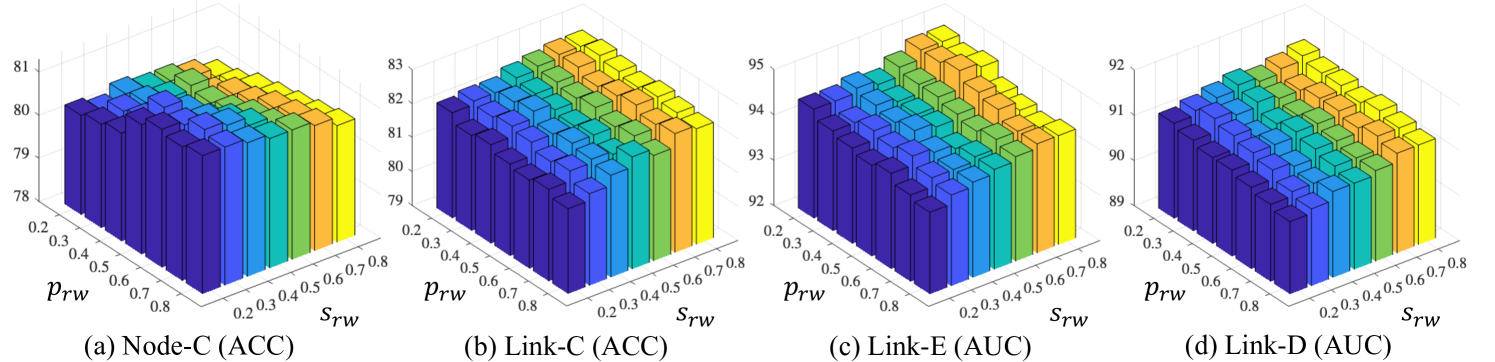

4.3 Efficiency Comparison

To answer Q4, we present the running efficiency report in Fig. 3, where EDEN is primarily divided into two segments: (1) The pre-processing step depicted in Fig. 3(a) showcases coarse-grained HKT construction, with the x-axis representing predefined tree height ; (2) The end-to-end training step depicted in Fig. 3(b). The x-axis denotes the selection of tree height and sampling coefficient introduced by Sec. 3.1 and Sec. 3.2. Since the pre-processing is independent of model training, the computational bottleneck introduced by the coarse-grained HKT construction is alleviated, reducing constraints on deployment scalability. Additionally, the lightweight implementation in pre-processing further mitigates it. Meanwhile, benefiting from the lightweight fine-grained HKT construction and personalized layer-wise digraph learning function, EDEN exhibits a significant advantage in training costs compared to existing baselines shown in Fig. 3(b)-(c). Due to space constraints, additional details regarding the model convergence efficiency during the training process can be found in Appendix A.14.

4.4 Robustness Analysis

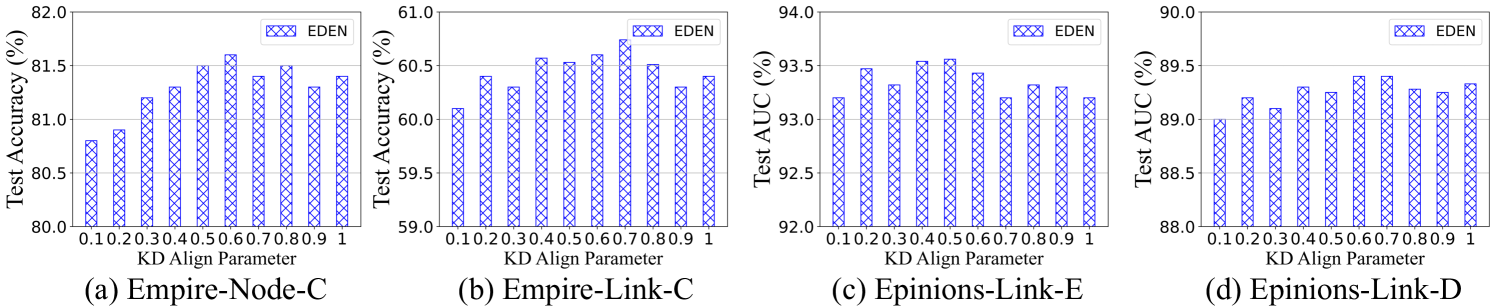

Hyperparameter Selection. To answer Q5, we first analyze the impact of hyperparameter selection on running efficiency and predictive performance based on Fig. 3(a) and (b). Our observations include: (1) Higher HKT height leads to a substantial increase in the time complexity for greedy algorithm during pre-process; (2) Larger sampling coefficients indicate additional computational costs due to considering more nodes in the knowledge generation, especially pronounced with increased height ; (3) Appropriately increasing and for fine-grained distillation significantly improves performance. However, excessive increase leads to apparent optimization bottlenecks, resulting in sub-optimal performance. In addition, we further discuss the implementation details of HKT-based random walk for leaf prediction and KD loss factor in Appendix A.14. This involves investigating the impact of transition probabilities between distinct identity nodes (i.e., parent, sibling, and child) during the sequence acquisition on predictive performance and further analyzing the effectiveness of KD.

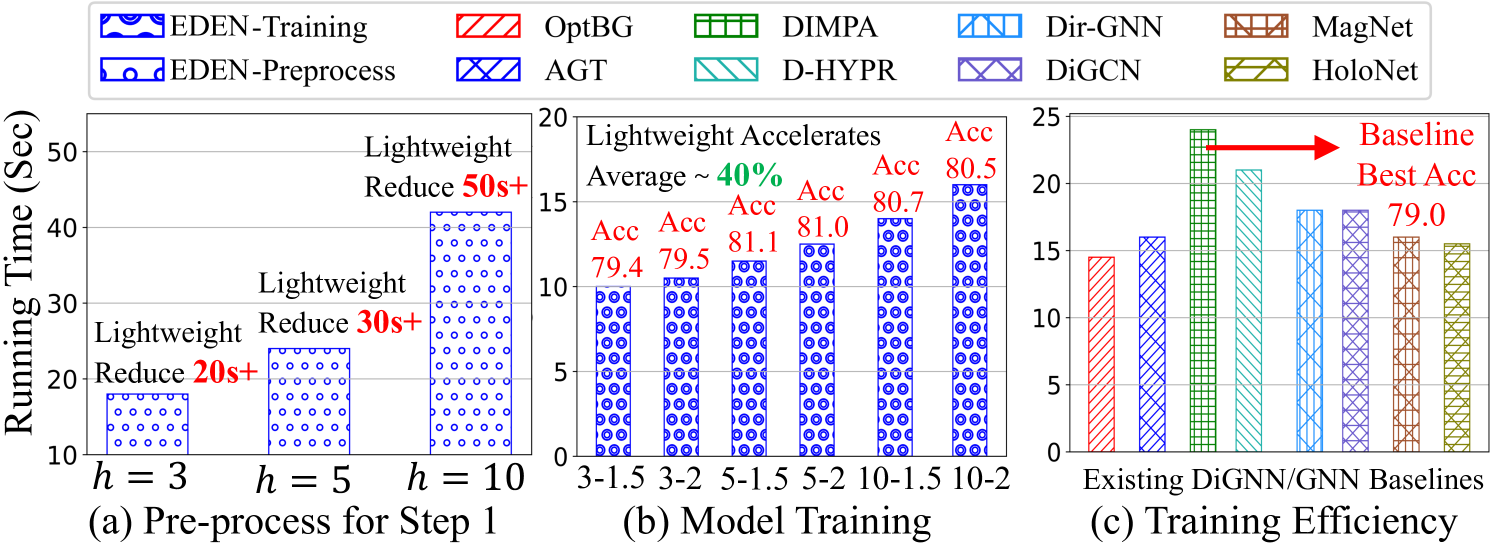

Sparsity Challenges. Subsequently, we provide sparse experimental results in Fig. 4. For stimulating feature sparsity, we assume that the feature of unlabeled nodes is partially missing. In this case, methods that rely on the quantity of node representations like D-HYPR and NAG are severely compromised. Conversely, DiGCN and MGC exhibit robustness, as high-order feature propagation partially compensates for missing features. As for edge sparsity, since all baselines rely on high-quality topology to empower their model-centric neural architectures, their predictive performance is not optimistic. However, we observe that EDEN exhibits leading performance through fine-grained digraph data-centric knowledge mining. To stimulate label sparsity, we change the number of labeled samples for each class and acquire results that follow a similar trend to the feature-sparsity scenarios. Building upon these observations, EDEN comprehensively improves both the predictive performance and robustness of the various baselines.

5 Conclusions, Limitations, and Future Work

In this paper, we propose a general data-centric (di)graph online KD framework, EDEN. It achieves fine-grained data knowledge exploration abiding with the hierarchical encoding system proposed in Sec. 2.2. Comprehensive evaluations demonstrate significant all-around advantages. We believe that implementing data-centric graph KD through the tree structure is a promising direction, as the hierarchical structure effectively captures the natural evolution of real-world graphs. However, it must be acknowledged that the current EDEN framework has significant algorithmic complexity, including multi-step computations. Despite the lightweight implementation, scalability challenges persist when applied to billion-level graphs. Therefore, our future work aims to simplify the hierarchical data-centric KD theory and develop a user-friendly computational paradigm to facilitate its practical deployment in more industry scenarios.

References

- Akiba et al. (2019) Akiba, T., Sano, S., Yanase, T., Ohta, T., and Koyama, M. Optuna: A next-generation hyperparameter optimization framework. In Proceedings of the ACM SIGKDD Conference on Knowledge Discovery and Data Mining, KDD, 2019.

- Andersen et al. (2006) Andersen, R., Chung, F., and Lang, K. Local graph partitioning using pagerank vectors. In Annual IEEE Symposium on Foundations of Computer Science, FOCS. IEEE, 2006.

- Belghazi et al. (2018) Belghazi, M. I., Baratin, A., Rajeswar, S., Ozair, S., Bengio, Y., Courville, A., and Hjelm, R. D. Mine: mutual information neural estimation. In International Conference on Machine Learning, ICML, 2018.

- Bojchevski & Günnemann (2018) Bojchevski, A. and Günnemann, S. Deep gaussian embedding of graphs: Unsupervised inductive learning via ranking. In ICLR Workshop on Representation Learning on Graphs and Manifolds, 2018.

- Brody et al. (2022) Brody, S., Alon, U., and Yahav, E. How attentive are graph attention networks? International Conference on Learning Representations, ICLR, 2022.

- Byrne & Russon (1998) Byrne, R. W. and Russon, A. E. Learning by imitation: A hierarchical approach. Behavioral and Brain Sciences, 21(5):667–684, 1998.

- Chen et al. (2023) Chen, J., Gao, K., Li, G., and He, K. Nagphormer: A tokenized graph transformer for node classification in large graphs. In International Conference on Learning Representations, ICLR, 2023.

- Chen et al. (2020) Chen, M., Wei, Z., Huang, Z., Ding, B., and Li, Y. Simple and deep graph convolutional networks. In International Conference on Machine Learning, ICML, 2020.

- Chen et al. (2021) Chen, Y., Bian, Y., Xiao, X., Rong, Y., Xu, T., and Huang, J. On self-distilling graph neural network. Proceedings of the International Joint Conference on Artificial Intelligence, IJCAI, 2021.

- Chung (2005) Chung, F. Laplacians and the cheeger inequality for directed graphs. Annals of Combinatorics, 9:1–19, 2005.

- Clauset et al. (2008) Clauset, A., Moore, C., and Newman, M. E. Hierarchical structure and the prediction of missing links in networks. Nature, 453(7191):98–101, 2008.

- DeVore & Temlyakov (1996) DeVore, R. A. and Temlyakov, V. N. Some remarks on greedy algorithms. Advances in Computational Mathematics, 5:173–187, 1996.

- Dittenbach et al. (2002) Dittenbach, M., Rauber, A., and Merkl, D. Uncovering hierarchical structure in data using the growing hierarchical self-organizing map. Neurocomputing, 48(1-4):199–216, 2002.

- Duan et al. (2024) Duan, L., Chen, X., Liu, W., Liu, D., Yue, K., and Li, A. Structural entropy based graph structure learning for node classification. In Proceedings of the Association for the Advancement of Artificial Intelligence, AAAI, 2024.

- Duong & Robinson (1996) Duong, X. T. and Robinson, D. W. Semigroup kernels, poisson bounds, and holomorphic functional calculus. Journal of Functional Analysis, 142(1):89–128, 1996.

- Feng et al. (2022) Feng, K., Li, C., Yuan, Y., and Wang, G. Freekd: Free-direction knowledge distillation for graph neural networks. In Proceedings of the ACM SIGKDD Conference on Knowledge Discovery and Data Mining, KDD, 2022.

- Geisler et al. (2023) Geisler, S., Li, Y., Mankowitz, D. J., Cemgil, A. T., Günnemann, S., and Paduraru, C. Transformers meet directed graphs. In International Conference on Machine Learning, ICML. PMLR, 2023.

- Goodfellow et al. (2014) Goodfellow, I., Pouget-Abadie, J., Mirza, M., Xu, B., Warde-Farley, D., Ozair, S., Courville, A., and Bengio, Y. Generative adversarial nets. Advances in Neural Information Processing Systems, NeurIPS, 2014.

- Grave et al. (2018) Grave, E., Bojanowski, P., Gupta, P., Joulin, A., and Mikolov, T. Learning word vectors for 157 languages. arXiv preprint arXiv:1802.06893, 2018.

- Guo & Wei (2023) Guo, Y. and Wei, Z. Graph neural networks with learnable and optimal polynomial bases. 2023.

- He et al. (2022a) He, Y., Perlmutter, M., Reinert, G., and Cucuringu, M. Msgnn: A spectral graph neural network based on a novel magnetic signed laplacian. In Learning on Graphs Conference, LoG, 2022a.

- He et al. (2022b) He, Y., Reinert, G., and Cucuringu, M. Digrac: Digraph clustering based on flow imbalance. In Learning on Graphs Conference, LoG, 2022b.

- He et al. (2023) He, Y., Zhang, X., Huang, J., Rozemberczki, B., Cucuringu, M., and Reinert, G. Pytorch geometric signed directed: A software package on graph neural networks for signed and directed graphs. In Learning on Graphs Conference, LoG. PMLR, 2023.

- Hiriart-Urruty & Lemaréchal (2004) Hiriart-Urruty, J.-B. and Lemaréchal, C. Fundamentals of convex analysis. Springer Science & Business Media, 2004.

- Hu et al. (2020) Hu, W., Fey, M., Zitnik, M., Dong, Y., Ren, H., Liu, B., Catasta, M., and Leskovec, J. Open graph benchmark: Datasets for machine learning on graphs. Advances in Neural Information Processing Systems, NeurIPS, 2020.

- Hu et al. (2021) Hu, Y., Li, X., Wang, Y., Wu, Y., Zhao, Y., Yan, C., Yin, J., and Gao, Y. Adaptive hypergraph auto-encoder for relational data clustering. IEEE Transactions on Knowledge and Data Engineering, 2021.

- Kipf & Welling (2017) Kipf, T. N. and Welling, M. Semi-supervised classification with graph convolutional networks. In International Conference on Learning Representations, ICLR, 2017.

- Klicpera et al. (2019) Klicpera, J., Bojchevski, A., and Günnemann, S. Predict then propagate: Graph neural networks meet personalized pagerank. In International Conference on Learning Representations, ICLR, 2019.

- Koke & Cremers (2023) Koke, C. and Cremers, D. Holonets: Spectral convolutions do extend to directed graphs. arXiv preprint arXiv:2310.02232, 2023.

- Kollias et al. (2022) Kollias, G., Kalantzis, V., Idé, T., Lozano, A., and Abe, N. Directed graph auto-encoders. In Proceedings of the Association for the Advancement of Artificial Intelligence, AAAI, 2022.

- Leskovec & Krevl (2014) Leskovec, J. and Krevl, A. Snap datasets: Stanford large network dataset collection. 2014.

- Leskovec et al. (2010) Leskovec, J., Huttenlocher, D., and Kleinberg, J. Signed networks in social media. In Proceedings of the SIGCHI conference on human factors in computing systems, 2010.

- Lhoest et al. (2021) Lhoest, Q., del Moral, A. V., Jernite, Y., Thakur, A., von Platen, P., Patil, S., Chaumond, J., Drame, M., Plu, J., Tunstall, L., et al. Datasets: A community library for natural language processing. arXiv preprint arXiv:2109.02846, 2021.

- Li & Pan (2016) Li, A. and Pan, Y. Structural information and dynamical complexity of networks. IEEE Transactions on Information Theory, 62(6):3290–3339, 2016.

- Li et al. (2024a) Li, X., Liao, M., Wu, Z., Su, D., Zhang, W., Li, R.-H., and Wang, G. Lightdic: A simple yet effective approach for large-scale digraph representation learning. Proceedings of the VLDB Endowment, 2024a.

- Li et al. (2024b) Li, X., Ma, J., Wu, Z., Su, D., Zhang, W., Li, R.-H., and Wang, G. Rethinking node-wise propagation for large-scale graph learning. In Proceedings of the ACM Web Conference, WWW, 2024b.

- Likhobaba et al. (2023) Likhobaba, D., Pavlichenko, N., and Ustalov, D. Toloker Graph: Interaction of Crowd Annotators. 2023. doi: 10.5281/zenodo.7620795. URL https://github.com/Toloka/TolokerGraph.

- Lin & Gao (2023) Lin, L. and Gao, J. A magnetic framelet-based convolutional neural network for directed graphs. In IEEE International Conference on Acoustics, Speech and Signal Processing, ICASSP, 2023.

- Linsker (1988) Linsker, R. Self-organization in a perceptual network. Computer, 21(3):105–117, 1988.

- Liu et al. (2023) Liu, G., Inae, E., Zhao, T., Xu, J., Luo, T., and Jiang, M. Data-centric learning from unlabeled graphs with diffusion model. Advances in Neural Information Processing Systems, NeurIPS, 2023.

- Liu et al. (2022) Liu, N., Wang, X., Wu, L., Chen, Y., Guo, X., and Shi, C. Compact graph structure learning via mutual information compression. In Proceedings of the ACM Web Conference, WWW, 2022.

- Luan et al. (2022) Luan, S., Hua, C., Lu, Q., Zhu, J., Zhao, M., Zhang, S., Chang, X.-W., and Precup, D. Revisiting heterophily for graph neural networks. Advances in Neural Information Processing Systems, NeurIPS, 2022.

- Ma et al. (2023) Ma, X., Chen, Q., Wu, Y., Song, G., Wang, L., and Zheng, B. Rethinking structural encodings: Adaptive graph transformer for node classification task. In Proceedings of the ACM Web Conference, WWW, 2023.

- Ma et al. (2021) Ma, Y., Liu, X., Shah, N., and Tang, J. Is homophily a necessity for graph neural networks? International Conference on Learning Representations, ICLR, 2021.

- Maekawa et al. (2023) Maekawa, S., Sasaki, Y., and Onizuka, M. Why using either aggregated features or adjacency lists in directed or undirected graph? empirical study and simple classification method. arXiv preprint arXiv:2306.08274, 2023.

- Massa & Avesani (2005) Massa, P. and Avesani, P. Controversial users demand local trust metrics: An experimental study on epinions. com community. In Proceedings of the Association for the Advancement of Artificial Intelligence, AAAI, 2005.

- Mernyei & Cangea (2020) Mernyei, P. and Cangea, C. Wiki-cs: A wikipedia-based benchmark for graph neural networks. arXiv preprint arXiv:2007.02901, 2020.

- Nowozin et al. (2016) Nowozin, S., Cseke, B., and Tomioka, R. f-gan: Training generative neural samplers using variational divergence minimization. Advances in Neural Information Processing Systems, NeurIPS, 2016.

- Ordozgoiti et al. (2020) Ordozgoiti, B., Matakos, A., and Gionis, A. Finding large balanced subgraphs in signed networks. In Proceedings of the ACM Web Conference, WWW, 2020.

- Pearson (1905) Pearson, K. The problem of the random walk. Nature, 72(1865):294–294, 1905.

- Peng et al. (2020) Peng, Z., Huang, W., Luo, M., Zheng, Q., Rong, Y., Xu, T., and Huang, J. Graph Representation Learning via Graphical Mutual Information Maximization. In Proceedings of The Web Conference, WWW, 2020.

- Pennington et al. (2014) Pennington, J., Socher, R., and Manning, C. D. Glove: Global vectors for word representation. In Proceedings of Conference on Empirical Methods in Natural Language Processing, EMNLP, 2014.

- Pinsky (1985) Pinsky, R. On evaluating the donsker-varadhan i-function. The Annals of Probability, pp. 342–362, 1985.

- Platonov et al. (2023) Platonov, O., Kuznedelev, D., Diskin, M., Babenko, A., and Prokhorenkova, L. A critical look at the evaluation of gnns under heterophily: are we really making progress? International Conference on Learning Representations, ICLR, 2023.

- Rossi et al. (2023) Rossi, E., Charpentier, B., Giovanni, F. D., Frasca, F., Günnemann, S., and Bronstein, M. Edge directionality improves learning on heterophilic graphs. in Proceedings of The European Conference on Machine Learning and Principles and Practice of Knowledge Discovery in Databases, ECML-PKDD Workshop, 2023.

- Shannon (1948) Shannon, C. E. A mathematical theory of communication. The Bell System Technical Journal, 27(3):379–423, 1948.

- Shchur et al. (2018) Shchur, O., Mumme, M., Bojchevski, A., and Günnemann, S. Pitfalls of graph neural network evaluation. arXiv preprint arXiv:1811.05868, 2018.

- Sun et al. (2024) Sun, H., Li, X., Wu, Z., Su, D., Li, R.-H., and Wang, G. Breaking the entanglement of homophily and heterophily in semi-supervised node classification. In International Conference on Data Engineering, ICDE, 2024.

- Tan et al. (2023) Tan, Q., Zhang, X., Liu, N., Zha, D., Li, L., Chen, R., Choi, S.-H., and Hu, X. Bring your own view: Graph neural networks for link prediction with personalized subgraph selection. In Proceedings of the ACM International Conference on Web Search and Data Mining, WSDM, 2023.

- Tong et al. (2020a) Tong, Z., Liang, Y., Sun, C., Li, X., Rosenblum, D., and Lim, A. Digraph inception convolutional networks. Advances in Neural Information Processing Systems, NeurIPS, 2020a.

- Tong et al. (2020b) Tong, Z., Liang, Y., Sun, C., Rosenblum, D. S., and Lim, A. Directed graph convolutional network. arXiv preprint arXiv:2004.13970, 2020b.

- Veličković et al. (2018) Veličković, P., Cucurull, G., Casanova, A., Romero, A., Lio, P., and Bengio, Y. Graph attention networks. In International Conference on Learning Representations, ICLR, 2018.

- Veličković et al. (2019) Veličković, P., Fedus, W., Hamilton, W. L., Liò, P., Bengio, Y., and Hjelm, R. D. Deep Graph Infomax. In International Conference on Learning Representations, ICLR, 2019.

- Wang et al. (2020) Wang, K., Shen, Z., Huang, C., Wu, C.-H., Dong, Y., and Kanakia, A. Microsoft academic graph: When experts are not enough. Quantitative Science Studies, 1(1):396–413, 2020.

- Wang et al. (2023) Wang, Y., Wang, Y., Zhang, Z., Yang, S., Zhao, K., and Liu, J. User: Unsupervised structural entropy-based robust graph neural network. In Proceedings of the Association for the Advancement of Artificial Intelligence, AAAI, 2023.

- Wu et al. (2019) Wu, F., Souza, A., Zhang, T., Fifty, C., Yu, T., and Weinberger, K. Simplifying graph convolutional networks. In International Conference on Machine Learning, ICML, 2019.

- Wu et al. (2023) Wu, J., Chen, X., Shi, B., Li, S., and Xu, K. Sega: Structural entropy guided anchor view for graph contrastive learning. In International Conference on Machine Learning, ICML, 2023.

- Yang et al. (2023) Yang, C., Bo, D., Liu, J., Peng, Y., Chen, B., Dai, H., Sun, A., Yu, Y., Xiao, Y., Zhang, Q., et al. Data-centric graph learning: A survey. arXiv preprint arXiv:2310.04987, 2023.

- Yang et al. (2022) Yang, M., Shen, Y., Li, R., Qi, H., Zhang, Q., and Yin, B. A new perspective on the effects of spectrum in graph neural networks. In International Conference on Machine Learning, ICML, 2022.

- Zeng et al. (2020) Zeng, H., Zhou, H., Srivastava, A., Kannan, R., and Prasanna, V. Graphsaint: Graph sampling based inductive learning method. In International conference on learning representations, ICLR, 2020.

- Zhang et al. (2023) Zhang, H., Lin, S., Liu, W., Zhou, P., Tang, J., Liang, X., and Xing, E. P. Iterative graph self-distillation. IEEE Transactions on Knowledge and Data Engineering, 2023.

- Zhang et al. (2021a) Zhang, J., Hui, B., Harn, P.-W., Sun, M.-T., and Ku, W.-S. Mgc: A complex-valued graph convolutional network for directed graphs. arXiv e-prints, pp. arXiv–2110, 2021a.

- Zhang & Chen (2018) Zhang, M. and Chen, Y. Link prediction based on graph neural networks. Advances in Neural Information Processing Systems, NeurIPS, 2018.

- Zhang et al. (2020) Zhang, W., Miao, X., Shao, Y., Jiang, J., Chen, L., Ruas, O., and Cui, B. Reliable data distillation on graph convolutional network. In Proceedings of the ACM on Management of Data, SIGMOD, 2020.

- Zhang et al. (2021b) Zhang, W., Jiang, Y., Li, Y., Sheng, Z., Shen, Y., Miao, X., Wang, L., Yang, Z., and Cui, B. Rod: reception-aware online distillation for sparse graphs. In Proceedings of the ACM SIGKDD Conference on Knowledge Discovery and Data Mining, KDD, 2021b.

- Zhang et al. (2022) Zhang, W., Sheng, Z., Yang, M., Li, Y., Shen, Y., Yang, Z., and Cui, B. Nafs: A simple yet tough-to-beat baseline for graph representation learning. In International Conference on Machine Learning, ICML, 2022.

- Zhang et al. (2021c) Zhang, X., He, Y., Brugnone, N., Perlmutter, M., and Hirn, M. Magnet: A neural network for directed graphs. Advances in Neural Information Processing Systems, NeurIPS, 2021c.

- Zhang et al. (2019) Zhang, Z., Bu, J., Ester, M., Zhang, J., Yao, C., Yu, Z., and Wang, C. Hierarchical graph pooling with structure learning. arXiv preprint arXiv:1911.05954, 2019.

- Zheng et al. (2022) Zheng, X., Liu, Y., Pan, S., Zhang, M., Jin, D., and Yu, P. S. Graph neural networks for graphs with heterophily: A survey. arXiv preprint arXiv:2202.07082, 2022.

- Zheng et al. (2023) Zheng, X., Liu, Y., Bao, Z., Fang, M., Hu, X., Liew, A. W.-C., and Pan, S. Towards data-centric graph machine learning: Review and outlook. arXiv preprint arXiv:2309.10979, 2023.

- Zhou et al. (2022) Zhou, H., Chegu, A., Sohn, S. S., Fu, Z., De Melo, G., and Kapadia, M. D-hypr: Harnessing neighborhood modeling and asymmetry preservation for digraph representation learning. Proceedings of the ACM International Conference on Information and Knowledge Management, CIKM, 2022.

- Zhu et al. (2024) Zhu, Y., Li, J., Chen, L., and Zheng, Z. The devil is in the data: Learning fair graph neural networks via partial knowledge distillation. In Proceedings of the ACM International Conference on Web Search and Data Mining, WSDM, 2024.

- Zou et al. (2023) Zou, D., Peng, H., Huang, X., Yang, R., Li, J., Wu, J., Liu, C., and Yu, P. S. Se-gsl: A general and effective graph structure learning framework through structural entropy optimization. In Proceedings of the ACM Web Conference, WWW, 2023.

Appendix A Outline

The appendix is organized as follows:

- A.1

-

HKT Construction and Hierarchical Graph Clustering.

- A.2

-

Data-level Online Knowledge Distillation in EDEN.

- A.3

-

The Definition of partition tree.

- A.4

-

Breaking the Limitations of Single-direction Random Walks.

- A.5

-

Greedy Algorithms for Partition Tree Construction.

- A.6

-

The Proof of Theorem 3.1.

- A.7

-

The Proof of Theorem 3.2.

- A.8

-

The Proof of Theorem 3.3.

- A.9

-

Algorithm Complexity Analysis.

- A.10

-

Dataset Description.

- A.11

-

Compared Baselines.

- A.12

-

Hyperparameter Settings.

- A.13

-

Experiment Environment.

- A.14

-

Extend Experimental Results.

A.1 HKT Construction and Hierarchical Graph Clustering

Although the HKT construction process may superficially resemble hierarchical clustering, it is crucial to emphasize that HKT is fundamentally distinct. Unlike traditional clustering methods, HKT leverages topology-driven structural entropy—a dynamic metric grounded in the information theory of structured data—to extract deeper structural insights from the graph. This methodology transcends the limitations of static clustering techniques, offering a more nuanced and context-aware understanding of the underlying graph structure. Moreover, EDEN incorporates profile-oriented node MI through neural estimation as a pivotal criterion for HKT construction. This integration enables a more fine-grained and profile-aware analysis of node relationships, uncovering intricate patterns that static methods may overlook. As a result, the multi-granularity quantification criteria established by our approach not only deviate significantly from conventional hierarchical clustering but also introduce a novel and innovative perspective for understanding complex graph data (see Sec. 3.1 for more details).

While traditional hierarchical clustering can reveal the layered structure of a network, it is not directly applicable to the complexities of (di)graph learning. EDEN, on the other hand, utilizes HKT as a foundational framework to enable the development of learnable knowledge generation and transfer mechanisms that can be seamlessly integrated with existing (Di)GNN architectures. This integration provides a novel way to enhance model learning by effectively capturing and utilizing the hierarchical structure of directed graphs. Furthermore, we have designed a random walk-based leaf prediction mechanism, tailored to various graph-based downstream tasks, ensuring that our approach is robust and adaptable to different application scenarios (for more technical details, refer to Sec. 3.2-3.3).

A.2 Data-level Online Knowledge Distillation in EDEN

Graph KD typically follows a model-level, offline teacher-student framework. In this setup, knowledge is transferred from a large, pre-trained teacher GNN to a more compact and efficient student model, such as a smaller GNN or MLP. The teacher captures complex patterns and representations within the graph. The student, rather than learning directly from ground truth labels, learns from the teacher’s soft predictions or intermediate representations. This approach allows the student model to replicate the teacher’s performance while significantly reducing computational complexity.

With the rapid advancement of KD, it has expanded into multiple model-level KD variants. These include self-distillation, where a single model simultaneously acts as both the teacher and student, enhancing its own learning process (Chen et al., 2021; Zhang et al., 2023), and online distillation, where both teacher and student models are continuously updated throughout the training process (Zhang et al., 2021b; Feng et al., 2022). These innovations reflect the growing diversity in how knowledge transfer can be applied beyond the initial teacher-student (large model to lightweight model) framework.

In this paper, we focus specifically on data-centric graph KD, which emphasizes uncovering the latent knowledge embedded in graph structures, using data samples as the medium for distillation (Zhang et al., 2020; Zhu et al., 2024). In the EDEN framework, parent and child nodes within the HKT assume the roles of teacher and student, respectively. This enables knowledge transfer through their representations in a hierarchical manner. Our approach aligns with the principles of data-level online KD, leveraging the topological relationships between nodes to drive more effective distillation.

A.3 The Definition of partition tree

To define high-dimensional measurements of directed structural information, we introduce a partition tree of digraphs, which can also be regarded as the coarse-grained HKT without profile-oriented refinement (i.e., knowledge discovery (a) from a topology perspective only). Notably, community detection or clustering can be understood as a hierarchical structure, specifically a 3-layer partition tree. In this structure, the leaf nodes represent the individual nodes from the original graph, while their parent nodes serve as virtual nodes that represent entire communities. To make it easier to understand, we first give an example of a two-dimensional directed structural measurement of the graph, , where we consider a digraph and its 2-order partition of node sets . Building upon this, we interpret through a -height partition tree as follows.

To begin with, we introduce the root node and define a set of nodes as a subset of the root node in the -height partition tree . Notably, in this two-dimensional directed structural measurement, the nodes in the -height partition tree have only three types of identity information:

(1) the root node (), which does not exist in the original digraph but is used to describe the partition tree;

(2) the successor nodes (), which are not present in the original digraph but are employed to characterize leaf nodes;

(3) the leaf nodes (), which represent the original digraph nodes.

Then, we introduce immediate successors for the root denote , . Naturally, we can extend the concept associated with the root to successor nodes , which are directly related to the coarse partitioning of leaf nodes . Thus, we define . Now, for each , we introduce immediate successors denoted for all , and each successor is associated with an element in . Thus, we define as the singleton of a node in .

To this point, is a partition tree of height 2, and all its leaves are associated with singletons. For any node , is the union of for all values (immediate successors) of , and the union of for all nodes with values at the same level of the partition tree constitutes a partition of . Hence, the partition tree of a digraph is a set of nodes, each associated with a nonempty subset of nodes in digraph , and can be defined as follows:

Definition A.1.

(partition tree of Digraphs): Let be a connected digraph. We define the -height partition tree of with the following properties:

(1) For the root node , we define the set as the collection of nodes with heights less than .

(2) For each node , the immediate successors of are denoted as for ranging from 1 to a natural number , ordered from left to right as increases.

(3) For any natural number and each non-leaf node , the set forms a partition of , where denotes the height of (note that the height of the root node is 0).

(4) For each leaf node in , is a singleton, indicating that contains a single node from .

(5) For any two nodes at different heights, we use or to denote their hierarchical relationship.

(6) For or , we employ and to further describe this hierarchical relationship within the same partition. Specifically, if with , then represents the child nodes of . Conversely, if with , then denotes the parent node of . (note that for every non-leaf node , )

(7) For each , is the union of for all such that . Thus, .

According to Definition A.1, for a given digraph , we compute the -dimensional directed structural information measurement of by Eq. (3) while simultaneously identifying a -height coarse-grained HKT . The above process adheres to the following principles:

(1) The -dimensional structural information measurement of a digraph is achieved or approximated through the -dimensional hierarchical partition tree of ;

(2) serves as the guiding principle for the formation of the -dimensional coarse-grained HKT by minimizing the uncertainty or non-determinism inherent in the -dimensional structures of ;

(3) , functioning as a coarse-grained HKT for , encompasses the rules, regulations, and orders governing . This HKT is derived by minimizing the random variations present in the -dimensional structures of the digraphs, with these variations being determined by our -dimensional directed structural information measurement.

Based on the above principles, the -dimensional structural measurement of digraphs, provided by the -height partition tree, serves as a metric enabling us to comprehensively or maximally identify the -dimensional structure while mitigating the impact of random variations in the digraphs. Meanwhile, excellently facilitates the complete extraction of order from unordered digraphs, allowing us to discern order from disorder within structured data. Remarkably, our definition retains all properties of the digraphs, providing robust support for the thorough analysis of structured data.

A.4 Breaking the Limitations of Single-direction Random Walks

Utilizing simple random walks (SRW) on digraphs introduces unique challenges due to the inherent structure of these graphs. A common issue arises when the random walk encounters nodes with no outgoing edges, causing the walk to terminate prematurely. To better understand and visualize this limitation, we apply SRW starting from each node across four different digraphs. As the walk length increases, we track the proportion of complete paths relative to the total sequences, as shown in Fig. LABEL:fig:_empirical_1_(a). To further assess the impact of graph cycles, we design a modified SRW that excludes cycles and conduct the same experiment, with results presented in Fig. LABEL:fig:_empirical_1_(b).

This investigation highlights a key limitation of random walks on digraphs: strictly following edge directions leads to frequent interruptions in the walk. Due to the non-strongly connected nature of most digraphs, the proportion of complete walks drops sharply after just five steps. This indicates that random walks on digraphs typically fail to gather information beyond the immediate neighborhood of the starting node, limiting their ability to capture long-range dependencies. Moreover, when we eliminate the influence of cycles, the proportion of uninterrupted sequences declines even further, underscoring the difficulty of maintaining continuous paths in digraphs and further highlighting the limitations of SRWs (forward-only) in exploring deeper graph structures. It is evident that this significantly hinders the ability of structural entropy to capture topological uncertainty, reducing the effectiveness of and leading to sub-optimal coarse-grained HKT.

A.5 Greedy Algorithms for Partition Tree Construction

The primary impetus for developing the greedy partition tree construction algorithm lies in the quest for an effective method to construct hierarchical tree structures from digraph data while simultaneously minimizing the complexity and uncertainty associated with the underlying relationships. In complex systems represented by digraphs, directed structural entropy serves as a key metric to gauge the disorder and intricacy within the network. By harnessing the concept of directed edge structural entropy minimization, the algorithm aims to derive hierarchical trees that capture essential structural characteristics while promoting simplicity and interpretability. In a nutshell, the design principles of our proposed algorithm are as follows

(1) Directed edge structural entropy definition: The algorithm hinges on a rigorous definition of directed edge structural entropy within the context of the digraph mentioned in Sec. 3.1. This metric quantifies the uncertainty and disorder associated with the relationships between nodes in the digraph.

(2) Greedy selection strategy: At its core, the algorithm employs a greedy strategy, iteratively selecting directed edges that contribute most significantly to the reduction of directed structural entropy. This strategy ensures that each step in the tree construction process maximally minimizes the overall disorder in the evolving hierarchy.

(3) Hierarchical tree construction: The selected directed edges are systematically incorporated into the growing tree structure, establishing a hierarchical order that reflects the inherent organization within the graph. This process continues iterations until a coherent and informative tree representation is achieved.

(4) Complexity considerations: The algorithm balances the trade-off between capturing essential structural information and maintaining simplicity. By prioritizing directed edges that significantly impact entropy reduction, it aims to construct trees that are both insightful and comprehensible.

In conclusion, the greedy partition tree construction algorithm for digraph data, rooted in the minimization of directed edge structural entropy, presents a promising avenue for extracting hierarchical structures from the network with intricate topology. To clearly define a greedy partition tree construction algorithm, we introduce the following meta-operations in Alg. 1.

These meta-operations collectively define the intricate logic underlying the greedy partition tree construction algorithm, providing a comprehensive framework for constructing hierarchical structures in graph data while adhering to the principles of minimizing directed edge structural entropy. Building upon these foundations, we employ meta-operations to present the detailed workflow of the greedy structural tree construction algorithm. This facilitates the coarse-grained HKT construction from a topological perspective, ultimately achieving digraph data knowledge discovery (i.e., Step 1 Knowledge Discovery (a) in our proposed EDEN as illustrated in Fig. 2).

The Alg. 2 outlines the construction of a height-limited partition tree algorithm, emphasizing the minimization of directed structural uncertainty. It begins by sorting input data in non-decreasing order. Subsequently, it constructs an initial partition tree, using a greedy approach that iteratively combines nodes until the root has only two children. After that, it enters a phase of height reduction, wherein nodes contributing to excess height are detached iteratively until the tree attains height . To stabilize the structure, it inserts filler nodes for any node with a height discrepancy exceeding 1. This three-phase process ensures the efficient construction of a height-limited partition tree while minimizing directed structural measurement.

A.6 The Proof of Theorem 3.1

As discussed in Sec. 3.1, node profiles in a digraph act as essential identifiers. These profiles are not only instrumental in distinguishing nodes but also play a critical role in the construction of data knowledge. Recognizing this, our proposed partition-based node MI neural estimation seeks to further refine the coarse-grained HKT, which is initialized by the greedy algorithm. This refinement is achieved by quantifying the correlations between node profiles within the partition tree, thereby enhancing the granularity of the HKT. The refined tree provides a more accurate and nuanced representation of the graph, laying a robust foundation for subsequent KD. This process ensures that both topological structure and node profile information are effectively leveraged in the distillation, leading to improved model performance.

Considering a digraph and its coarse-grained partition tree , where encompasses all nodes in the digraph, along with the corresponding feature and label matrix represented as and . For current partition given by , we employ a sampling strategy to obtain a candidate node subset with nodes from the current partition and other partitions . Notably, different partitions used for sampling should be at the same height within the HKT (e.g., the current partition and other partitions should satisfy ). Building upon this, to reduce the computational complexity, we adopt a computation-friendly sampling strategy. Specifically, considering the number of nodes in the current partition is , we include all of them in the candidate set . Additionally, we perform random sampling for partition-by-partition until the total non-duplicated nodes in the satisfy , where is used to control the knowledge domain expansion come from the other partitions . This subset is used to generate knowledge that represents the current partition , formally represented as the parent representation of this partition in the HKT. Notably, we assign distinct identifiers to the sampled nodes based on their partition affiliations, denoting them as and , providing clarity in illustrating our method and derivation process.

Building upon this foundation, given the node as an example, a random variable is introduced to represent the node feature when randomly selecting a node from within the current partition . Then, the probability distribution of is formally defined as . Similarly, we can generalize to scenarios originating from other partitions to obtain . In , the definition of the generalized neighborhoods for any node is closely tied to the partition provided by the HKT, rather than relying on the traditional definition based on the adjacency matrix from directed edge sets .

Specifically, for nodes belonging to the current partition, denoted as , their generalized neighborhoods are defined as . This is done to identify nodes with sufficient information to efficiently represent the current partition i.e., (measure MI between and ). As for nodes belonging to other partitions, denoted as , their generalized neighborhoods are defined as . This is intended to address the limitations of the coarse-grained partition tree produced by considering only topological metrics. In other words, we aim to identify sets of nodes within other partitions that effectively capture the representation of both the current partition (explore potential correlation from the profile perspective) and their own partition (inherit their own partition criteria about directed structural information measurement), thereby refining the HKT through MI measurement between and .

Notably, we chose for the following reasons: (1) We aim to calculate the MI neural estimation between the current node and its generalized neighborhoods as a criterion for quantifying affinity scores. This approach ensures that nodes representative of the current partition receive higher affinity scores. Therefore, the generalized neighborhood of the current node needs to be closely related to the partition to which the node belongs, leading us to impose this restriction rather than defining the neighborhood as all nodes . For more on the motivation, intuition, and theory behind this mechanism, please refer to Sec. 2.2. As for the details on the calculation of affinity scores, we recommend referring to Sec. 3.2 on knowledge generation. (2) In general, the number of partitions is considerably smaller than the total set of nodes . As a result, one of the key motivations for imposing this neighborhood restriction is to minimize computational overhead and improve overall runtime efficiency. By limiting the scope of the calculations, we are able to streamline the process without sacrificing performance, making the method more scalable for large-scale graphs. In summary, expanding the neighborhood to include all nodes would result in higher computational costs and poorer performance. Therefore, we restrict the definition of the generalized neighborhood based on the partition obtained by HKT.

In either case, the generalized neighborhoods are subgraphs containing nodes from . These nodes may not be directly connected in the original topology but reveal inherent correlations at a higher level through the measurement of directed structural information. Therefore, this representation transcends the topological exploration of the digraph by and reflects intrinsic knowledge at a higher level. Building upon this, considering a node as an example, let be a random variable representing the generalized neighborhood feature selected from , originating from the current partition . We define the probability distribution of as .

Therefore, considering a node as an example, we define the joint distribution of the random variables of node features and its generalized neighborhood features within partition given by HKT, which is formulated as:

| (13) |

where the joint distribution reflects the probability that we randomly pick the corresponding node feature and its generalized neighborhood feature of the same node within partition together. Building upon this, the MI between the node features and the generalized neighborhood features within the current partition is defined as the KL-divergence between the joint distribution and the product of the marginal distributions of the two random variables . The above process can be formally defined as:

| (14) |

This MI measures the mutual dependency between the selected node and its generalized neighborhoods in . The KL divergence adopts the -representation (Belghazi et al., 2018) is defined as:

| (15) | ||||

where is an arbitrary class of functions that maps a pair of selected node features and its generalized neighborhood features to a real value. Here, we use to compute the dependency. If we explore any possible function , it can serve as a tight lower bound for MI. Building upon this, we can naturally extend the above derivation process to the scenario of sampling nodes belonging to other partitions, specifically . At this point, we can assess the shared contribution of nodes and with different affiliations in generating knowledge for the current partition .

A.7 The Proof of Theorem 3.2

The primary objective here is to introduce a node selection criterion that is grounded in quantifying the dependency between the selected node and its generalized neighborhoods. This dependency serves as the foundation for assessing the relevance and influence of each node within its local structure. The key insights behind using this dependency as a guiding principle are central to the formulation of the criterion function. By leveraging this approach, we aim to enhance the process of knowledge generation for the current partition , ensuring that both local and global relationships are effectively captured and utilized in the knowledge distillation process. The detailed reasoning and benefits of this approach are outlined as follows:

(1) In our definition, the generalized neighborhoods of the selected node are closely tied to the current partition and their own partition . Thus, measuring this dependency is equivalent to quantifying the correlation between the representation of the selected node and the knowledge possessed by the current partition and their own partition.

(2) The node-selection criterion is essentially a mechanism for weight allocation. Since the candidate node set is fixed by the sampling process, this step aims to assign higher affinity scores to nodes that better represent the current and their own partition. This guides the knowledge generation process to acquire the parent node representation for the current partition.

Building upon this, instead of calculating the exact MI based on KL divergence, we opt for non-KL divergences to offer favorable flexibility and optimization convenience. Remarkably, both non-KL and KL divergences can be formulated within the same -representation framework. We commence with the general -divergence between the joint distribution and the product of marginal distributions of vertices and neighborhoods. The above process can be formally defined as follows:

| (16) |