Towards a Theory of Systems Engineering Processes: A Principal-Agent Model of a One-Shot, Shallow Process

Abstract

Systems engineering processes coordinate the effort of different individuals to generate a product satisfying certain requirements. As the involved engineers are self-interested agents, the goals at different levels of the systems engineering hierarchy may deviate from the system-level goals which may cause budget and schedule overruns. Therefore, there is a need of a systems engineering theory that accounts for the human behavior in systems design. Undertaking such an ambitious endeavor is clearly beyond the scope of any single paper due to the inherent difficulty of rigorously formulating the problem in its full complexity along with the lack of empirical data. However, as experience in the physical sciences shows, a lot of knowledge can be generated by studying simple hypothetical scenarios which nevertheless retain some aspects of the original problem. To this end, the objective of this paper is to study the simplest conceivable systems engineering process, a principal-agent model of a one-shot (single iteration), shallow (one level of hierarchy) systems engineering process. We assume that the systems engineer maximizes the expected utility of the system, while the subsystem engineers seek to maximize their expected utilities. Furthermore, the systems engineer is unable to monitor the effort of the subsystem engineer and may not have complete information about their types or the complexity of the design task. However, the systems engineer can incentivize the subsystem engineers by proposing specific contracts. To obtain an optimal incentive, we pose and solve numerically a bi-level optimization problem. Through extensive simulations, we study the optimal incentives arising from different system-level value functions under various combinations of effort costs, problem-solving skills, and task complexities. Our numerical examples show that, the passed-down requirements to the agents increase as the task complexity and uncertainty grow and they decrease with increasing the agents’ costs.

Index Terms:

systems engineering theory, systems science, complex systems, game theory, principal-agent model, mechanism design, contract theory, expected utility, bi-level programming problem, optimal incentives.I Introduction

Cost and schedule overruns plague the majority of large systems engineering projects across multiple industry sectors including power [1], defense [2], and space [3]. As design mistakes are more expensive to correct during the production and operation phases, the design phase of the systems engineering process (SEP) has the largest potential impact on cost and schedule overruns. Collopy et al. [4] argued that requirements engineering (RE), which is a fundamental part of the design phase, is a major source of inefficiencies in systems engineering. In response, they developed value-driven design (VDD) [5], a systems design approach that starts with the identification of a system-level value function and guides the systems engineer (SE) to construct subsystem value functions that are aligned with the system goals. According to VDD, the subsystem engineers (sSE) and contractors should maximize the objective functions passed down by the SE instead of trying to meet requirements.

RE and VDD make the assumption that the goals of the human agents involved in the SEP are aligned with the SE goals. In particular, RE assumes that, agents attempt to maximize the probability of meeting the requirements, while VDD assumes that they will maximize the objective functions supplied by the SE. However, this assumption ignores the possibility that the design agents, as all humans, may have personal agendas that not necessarily aligned with the system-level goals.

Contrary to RE and VDD, it is more plausible that the design agents seek to maximize their own objectives. Indeed, there is experimental evidence that the quality of the outcome of a design task is strongly affected by the reward anticipated by the agent [6, 7, 8]. In other words, the agent decides how much effort and resources to devote to a design task after taking into account the potential reward. In the field, the reward could be explicitly implemented as an annual performance-based bonus, or, as it is the case most often, it could be implicitly encoded in expectations about job security, promotion, professional reputation, etc. To capture the human aspect in SEPs, one possible way is one needs to follow a game-theoretic approach [9], [10]. Most generally, the SEP should can be modeled as a dynamical hierarchical network game with incomplete information. Each layer of the hierarchy represents interactions among the SE and some sSEs, or the sSEs and other engineers or contractors. With the term “principal,” we refer to any individual delegating a task, while we reserve the term “agent” for the individual carrying out the task. Note that an agent may simultaneously be the principal in a set of interactions down the network. For example, the sSE is the agent when considering their interaction with the SE (the principal), but the principal when considering their interaction with a contractor (the agent). At each time step, the principals pass down delegated tasks along with incentives, the agents choose the effort levels that maximize their expected utility, perform the task, and return the outcome to the principals.

The iterative and hierarchical nature of real SEPs makes them extremely difficult to model in their full generality. Given that our aim is to develop a theory of SEPs, we start from the simplest possible version of a SEP which retains, nevertheless, some of the important elements of the real process. Specifically, the objective of this paper is to develop and analyze a principal-agent model of a one-shot, shallow SEP. The SEP is “one-shot” in the sense that decisions are made in one iteration and they are final. The term “shallow” refers to a one-layer-deep SEP hierarchy, i.e., only the SE (principal) and the sSEs (agents) are involved. The agents maximize their expected utility given the incentives provided by the principal, and the principal selects the incentive structure that maximizes the expected utility of the system. We pose this mechanism design problem [11] as a bi-level optimization problem and we solve it numerically.

A key component of our SEP model is the quality function of an agent. The quality function is a stochastic process that models the principal’s beliefs about the outcome of the delegated design task given that the agent devotes a certain amount of effort. The quality function is affected by what the principal believes about the task complexity and the problem solving skills of the agent. Following our work [12], we model the design task as a maximization problem where the agent seeks the optimal solution. The principal expresses their prior beliefs about the task complexity by modeling the objective function as a random draw from a Gaussian process prior with a suitably selected covariance function.

As we showed in [12], conditioned on knowing the task complexity and the agent type, the quality function is well approximated by an increasing, concave function of effort with additive Gaussian noise. However, we will use a linear approximation for the quality function.

We study numerically two different scenarios. The first scenario assumes that the SE knows the agent types and the task complexity, but they do not observe the agent’s effort. This situation is known in game theory as a moral hazard problem [13]. The most common way to solve a moral hazard problem is to use the first order approach (FOA) [14]. In the FOA, the incentive compatibility constraint of the agent is replaced by its first order necessary condition. However, the FOA depends on the convexity of the distribution function in effort which is not valid in our case. There have been several attempts to solve the principal-agent model where the requirements of the FOA may fail, nonetheless they must still satisfy the monotone likelihood ratio property [15].

In the second scenario, we study the case of moral hazard with simultaneous adverse selection [16], i.e., the SE observes neither the effort nor the type of agents nor the task complexity. This is a Bayesian game with incomplete information.. In this case, the SE experiences additional loss in their expected utility, because the sSEs’ can pretend to have different types. The revelation principle [17] guarantees that it suffices to search for the optimal mechanism within the set of incentive compatible mechanisms, i.e., within the set of mechanisms in which the sSEs are telling the truth about their types and technology maturity. In this paper, we solve the optimization problem in the principal-agent model, numerically with making no assumptions about the quality function.

The paper is organized as follows. In section II we will derive the mathematical model of the SEP and we will study the type-independent and type-dependent optimal contracts. We will also introduce the value and utility functions. In section III, we perform an exhaustive numerical study and show the solutions for several case studies. Finally, we conclude in section IV.

II Modeling a one-shot, shallow systems engineering process

II-A Basic definitions and notation

As mentioned in the introduction, we develop a model of a one-shot (the game evolves in one iteration and the decisions are final), shallow (one-layer-deep hierarchy) SEP. The SE has decomposed the system into subsystems and assigned a sSE to each one of them. We use to label each subsystem. From now on, we refer to the SE as the principal and the sSEs as the agents. The principal delegates tasks to the agents along with incentives. The agents choose how much effort to devote on their task by maximizing their expected utility. The principal, anticipates this reaction and selects the incentives that maximize the system-level expected utility.

Let be a probability space where, is the sample space, is a -algebra, and is the probability measure. With we refer to the random state of nature. We use upper case letters for random variables (r.v.), bold upper case letters for their range, and lower case letters for their possible values. For example, the type of agent is a r.v. taking discrete values in the set . Collectively, we denote all types with the -dimensional tuple and we reserve to refer to the -dimensional tuple containing all elements of except . This notation carries to any -dimensional tuple. For example, and are the type values for all agents and all agents except , respectively. The range of is .

The principal believes that the agents types vary independently, i.e., they assign a probability mass function (p.m.f.) on that factorizes over types as follows:

| (1) |

for all in , where is the probability that agent has type , for in . Of course, we must have , for all .

Each agent knows their type, but their state of knowledge about all other agents is the same as the principal’s. That is, if agent is of type , then their state of knowledge about everyone else is captured by the p.m.f.:

| (2) |

Agent chooses a normalized effort level for his delegated task. We assume that this normalized effort is the percentage of an agent’s maximum available effort. The units of the normalized effort depend on the nature of the agent’s subsystem. If the principal and the agent are both part of same organization then the effort can be the time that the agent dedicates to the delegated task in a particular period of time, e.g., in a fiscal year. On the other hand, if the agent is a contractor, then the effort can be the percentage of the available yearly budget that the contractor spends on the assigned task. We represent the monetary cost of the -th agent’s effort with the random process . In economic terms, is the opportunity cost, i.e., the payoff of the best alternative project in agent could devote their effort. In general, we know that the process should be an increasing function of the effort . For simplicity, we assume that the cost of effort of the agents is quadratic,

| (3) |

with a type-dependent coefficient for all in .

The quality function of the -th agent is a real valued random process paremeterized by the effort . The quality function models everybody’s beliefs about the design capabilities of agent . The interpretation of the quality function is as follows. If agent devotes to the task an effort of level , then they produce a random outcome of quality . In our previous work [12], we created a stochastic model for the quality function of a designer where we explicitly captured its dependence on the problem-solving skills of the designer and on the task complexity. In that work, we showed that has increasing and concave sample paths, that its mean function is increasing concave, and the standard deviation is decreasing with effort, albeit mildly, it is independent of the problem-solving skills of the designer, and it only increases mildly with increasing task complexity. Examining the spectral decomposition of the process for various cases, we observed that it can be well-approximated by:

| (4) |

where, for in , is an increasing, concave, type-dependent mean quality function, is a type-dependent standard deviation parameter capturing the aleatory uncertainty of the design process, and is a standard normal r.v. If we further assume that the time window for design is relatively small, then the term can be approximated as a linear function. Therefore, we will assume that the quality function is:

| (5) |

where, is inversely proportional to the complexity of the problem. For instance, a large corresponds to a low-complexity task while a corresponds to a high-complexity task. The standard deviation parameter captures the inherent uncertainty of the design process and depends on the maturity of the underlying technology. In summary, an agent’s type is characterized by the triplet cost-complexity-uncertainty.

From the perspective of the principal, the r.v.’s are independent of the agents’ types as they represent the uncertain state of nature. A stronger assumption that we employ is that the ’s are also independent to each other. This assumption is strong because it essentially means that the qualities of the various subsystems are decoupled. Under these independence assumptions, the state of knowledge of the principal is captured by the following probability measure:

| (6) |

for all and all Borel-measurable . Assuming that all these are common knowledge, the state of knowledge of agent after they observe their type (but before they observe ) is

| (7) |

Finally, we use to denote the expectation of any quantity over the state of knowledge of the principal as characterized by the probability measure of Eq. (6). That is, the expectation of any function of the agent types and the state of nature is

| (8) |

Similarly, we use the notation to denote the conditional expectation over the state of knowledge of an agent who knows that their type is . This is the expectation with respect to the probability measure of Eq. (2) and we have:

| (9) |

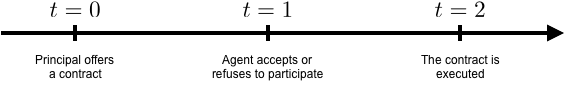

II-B Type-independent optimal contracts

We start by considering the case where the principal offers a single take-it-or-leave-it contract independent of the agent type. This is the situation usually encountered in contractual relationships between the SE and the sSEs within the same organization. The principal offers the contract and the agent decides whether or not to accept it. If the agent accepts, then they select their level of effort by maximizing their expected utility, they work on their design task, they return the outcome quality back to the principal, and they receive their reward. We show a schematic view of this type of contracts in Fig. 1(a). A contract is a monetary transfer function that specifies the agent’s compensation contingent on the quality level . Therefore, the payoff of the -th agent is the random process:

| (10) |

We assume that the agent knows their type, but they choose the optimal effort level ex-ante, i.e., they choose the effort level before seeing the state of the nature . Denoting their monetary utility function by , the -th agent selects an effort level by solving:

| (11) |

Let be the r.v. representing the quality function that the principal should expect from agent if they act optimally, i.e.,

| (12) |

Then the system level value is a r.v. of the form

| (13) |

where is a function of the subsystem outcomes . We introduce the form of the value function, , in Sec. III. Note that, even though in this work the r.v. is assumed to be just a function of , in reality it may also depend on the random state of nature, e.g., future prices, demand for the system services. Consideration of the latter is problem-dependent and beyond the scope of this work.

Given the system value and taking into account the transfers to the agents, the system-level payoff is the r.v.

| (14) |

If the monetary utility of the principal is , then they should select the transfer functions by solving:

| (15) |

However, guarantee that they want to participate in the SEP, the expected utility of the sSEs must be greater than the expected utility they would enjoy if they participated in another project. Therefore, the SE must solve Eq. (15) subject to the participation constraints:

| (16) |

for all possible values of , and all , where is known as the reservation utility of agent .

II-C Type-depdenent optimal contracts

By offering a single transfer function, the principal is unable to differentiate between the various agent types when adverse selection is an issue. That is, all agent types, independently of their cost, complexity, and uncertainty attributes, exactly the same transfer function. In other words, with a single transfer function the principal is actually targeting the average agent. This necessarily leads to inefficiencies stemming from problems such as paying an agent involved in a low-complexity task more than a same cost and uncertainty agent involved in a high-complexity task.

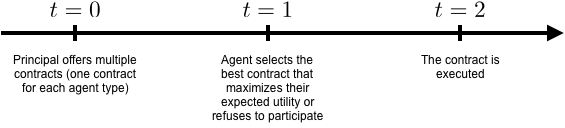

The principal can gain in efficiency by offering different transfer functions (if any exist) that target specific agent types. For example, the principal could offer a transfer function that is suitable for cost-efficient, low-complexity, low-uncertainty agents, and one for cost-inefficient agents, low-complexity, low-uncertainty, etc., for any other combination that is supported by the principal’s prior knowledge about the types of the agent population. To implement this strategy the principal can employ the following extension to the mechanism of Sec. II-B. Prior to initiating work, the agents announce their types to the principal and they receive a contract that matches the announced type. In Fig. 1(b), we show how this type of contract evolves in time. Let us formulate this idea mathematically. The -th agent announces a type in (not necessarily the same as their true type ), and they receive the associated, type-specific, transfer function . The payoff to agent is now:

| (17) |

where all other quantities are like before. Given the announcement of a type , the rational thing to do for agent is to select a level of by maximizing their expected utility, i.e., by solving:

| (18) |

Of course, the announcement of is also a matter of choice and a rational agent should select also by maximizing their expected utility. The obvious issue here is that agents can lie about their type. For example, a cost-efficient agent (agent with low cost of effort) may pretend to be a cost-inefficient agent (agent with high cost of effort). Fortunately, the revelation principle [17] comes to the rescue and simplifies the situation. It guarantees that, among the optimal mechanisms, there is one that is incentive compatible. Thus it will be sufficient if the principal constraints their contracts to over truth-telling mechanisms. Mathematically, to enforce truth-telling, the SE must satisfy the incentive compatibility constraints:

| (19) |

for all in . Eq. (19) expresses mathematically that “the expected payoff of agent when they are telling the truth is always greater than or equal to the expected payoff they would enjoy if they lied.”

Similar to the developments of Sec. II-B, the quality that the SE expects to receive is:

| (20) |

where we use the fact that the mechanism is incentive-compatible. The payoff of the SE becomes:

| (21) |

Therefore, to select the optimal transfer functions, the SE must solve:

| (22) |

subject to the incentive compatibility constraints of Eq. (19), and the participation constraints:

| (23) |

for all , where we also assume that the incentive compatibility constrains hold.

II-D Parameterization of the transfer functions

Transfer functions must be practically implementable. That is, they must be easily understood by the agent when expressed in the form of a contract. To be easily implementable, transfer functions should be easy to convey in the form of a table. To achieve this, we restrict our attention to functions that are made out of constants, step functions, linear functions, or combinations of these.

Despite the fact that including such functions would likely enhance the principal’s payoff, we exclude transfer functions that encode penalties for poor agent performance, i.e., transfer functions that can take negative values. First, contracts with penalties may not be implementable if the principal and the agent reside within the same organization. Second, even when the agent is an external contractor penalties are not commonly encountered in practice. In particular, if the SE is a sensitive government office, e.g., the department of defense, national security may dictate that the contractors should be protected from bankruptcy. Third, we do not expect our theory to be empirically valid when penalties are included since, according to prospect theory [18], humans perceive losses differently. They are risk-seeking when the reference point starts at a loss and risk-averse when the reference point starts at a gain.

To overcome these issues we restrict our attention to transfer functions that include three simple additive terms: a constant term representing a participation payment, i.e., a payment received for accepting to be part of the project; a constant payment that is activated when a requirement is met; and a linear increasing part activated after meeting the requirement. The role of the latter two part is to incentivize the agent to meet and exceed the requirements.

We now describe this parameterization mathematically. The transfer function associated with type in of agent is parameterized by:

| (24) |

where is the Heaviside function ( if and otherwise), and all the parameters are non-negative. In Eq. (24), is the participation reward, is the award for exceeding the passed-down requirement, is the passed-down requirement, and the payoff per unit quality exceeding the passed-down requirement. We will call these form of transfer functions the “requirement based plus incentive” (RPI) transfer function. In case the , we call it the “requirement based” (RB) transfer function. At this point, it is worth mentioning that the passed-down requirement is not necessarily the same as the true system requirement , see our reults in Sec. III. As we have shown in earlier work [10], the optimal passed-down requirement differs from the true system requirement. For example, the SE should ask for higher requirements for the design task with low-complexity. On the other hand, for the task with high-complexity, the SE should pass down less than the actual requirement. For notational convenience, we denote by () the transfer parameters pertaining to agent of type , i.e.,

| (25) |

Similarly, with we denote the transfer parameters pertaining to agent for all types, i.e.,

| (26) |

and with all the transfer parameters collectively, i.e.,

| (27) |

II-E Numerical solution of the optimal contract problem

The optimal contract problem is a an intractable bi-level, non-linear programming problem.In particular, the SE’s problem is for the case of type-dependent contracts is to maximize the expected system-level utility over the class of implementable contracts, i.e.,

| (28) |

subject to

-

1.

contract implementability constraints:

(29) for all ;

-

2.

individual rationality constraints:

(30) for all ;

-

3.

participation constraints:

(31) for all ; and

-

4.

incentive compatibility constraints:

(32) for all and in .

For the case of type-independent contracts, one adds the constraint for all and in and the incentive compatibility constraints are removed.

A common approach to solving bi-level programming problems is to replace the internal optimization with the corresponding Karush-Kuhn-Tucker (KKT) condition. This approach is used when the internal problem is concave, i.e., when it has a unique maximum. However, in our case, concavity is not guaranteed, and we resort to nested optimization. We implement everything in Python using the Theano [19] symbolic computation package exploit automatic differentiation. We solve the follower problem using sequential least squares programming (SLSQP) as implemented in the scipy package. We use simulated annealing to find the global optimum point of the leader problem. We first convert the constraint problem to the unconstrained problem using the penalty method such that:

| (33) |

where ’s are the constraints in Eqs. (29-32). Maximizing the in Eq. 33, is equivalent to finding the mode of the distribution:

| (34) |

we use Sequential Monte Carlo (SMC) [20] method to sample from this distribution by increasing from to . To perform the SMC, we use the “pysmc” package [21]. To ensure the computational efficiency of our approach, we need to use a numerical quadrature rule to approximate the expectation over . This step is discussed in Appendix A. To guarantee the reproducibility of our results, we have published our code in an open source Github repository (https://github.com/ebilionis/incentives) with an MIT license.

II-F Value Function and Risk Behavior

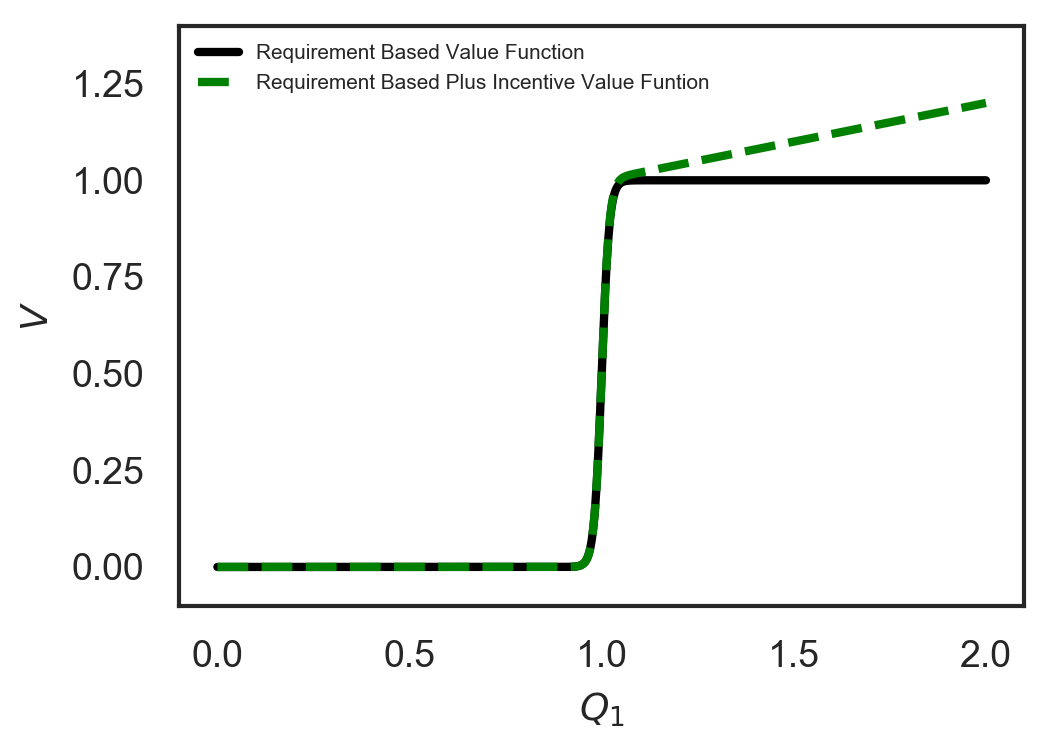

We assume two types of value functions, namely, the requirement based (RB) and requirement based plus incentive (RPI). Mathematically, we define these two value functions as:

| (35) |

and,

| (36) |

respectively. In Fig. 2, we show these two value functions for one subsystem.

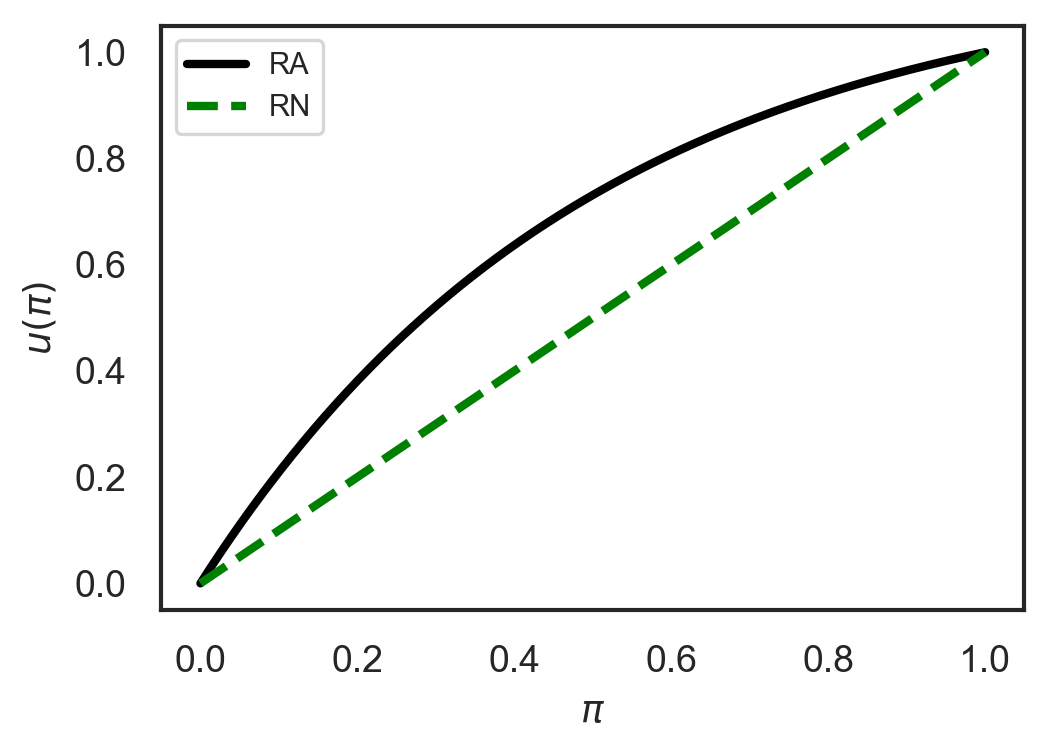

We consider two different risk behaviors for individuals, risk averse (RA) and risk neutral (RN). We use the utility function in Eq. (37), for the risk behavior of the agents and principal,

| (37) |

where for a RA agent. The parameters and are:

We show these utility functions for the two different risk behaviors in Fig. 3.

III NUMERICAL EXAMPLES

In this section, we start by performing an exhaustive numerical investigation of the effects of task complexity, agent’s cost of effort, uncertainty in the quality of the returned task, and adverse selection. In Sec. III-A1, we study the “moral hazard only” scenario with the RB transfer and value functions. In Sec. III-A2, we study the effect of the RPI transfer and value functions. We study the “moral hazard with adverse selection” in Sec. III-A3.

III-A Numerical investigation of the proposed model

In these numerical investigations we consider a single risk neutral principal and a risk averse agent. Each case study corresponds to a choice of task complexity ( in Eq. (5)), cost of effort ( in Eq. (3)), and performance uncertainty ( in Eq. (5)). With regards to task complexity, we select for an easy task and for a hard task. For the cost of effort parameter, we associate and with the low- and high-cost agents, respectively. Finally, low- and high-uncertainty tasks are characterized by and , respectively.

Note that, the parameters , , and have two indices. The first index is the agent’s (subsystem’s) number and the second index is the type of the agent. We begin with a series of cases with a single agent with a known type denoted by (moral-hazard-only case studies). In these cases, the parameters corresponding to complexity, cost and uncertainty are denoted by , , and , respectively. We end with a series of cases with a single agent but with an unknown type that can take two discrete, equally probable values and (moral-hazard-and-adverse-selection case studies). Consequently, denotes the effort coefficient of a type-1 agent , the same for a type-2 agent, and so on for all the other parameters.

To avoid numerical difficulties and singularities, we replace all Heaviside functions with a sigmoids, i.e.,

| (38) |

where the parameter controls the slope. We choose for the transfer functions and for the value function. We consider two types of value functions, RB and RPI value functions, see Sec. II-F. For the RB value function we use the transfer function of Eq. (24) constrained so (RB transfer function). In other words, the agent is paid a constant amount if they achieve the requirement and there is no payment per quality exceeding the requirement. For the case of RPI value function, we remove this constraint.

III-A1 Moral hazard with RB transfer and value functions

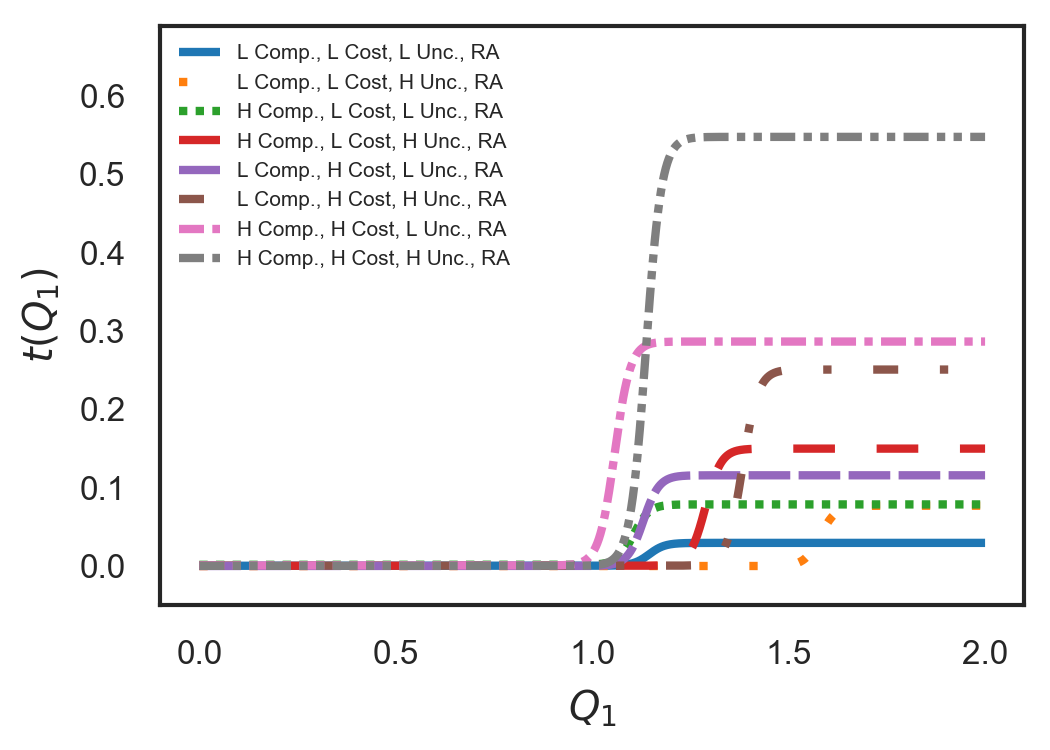

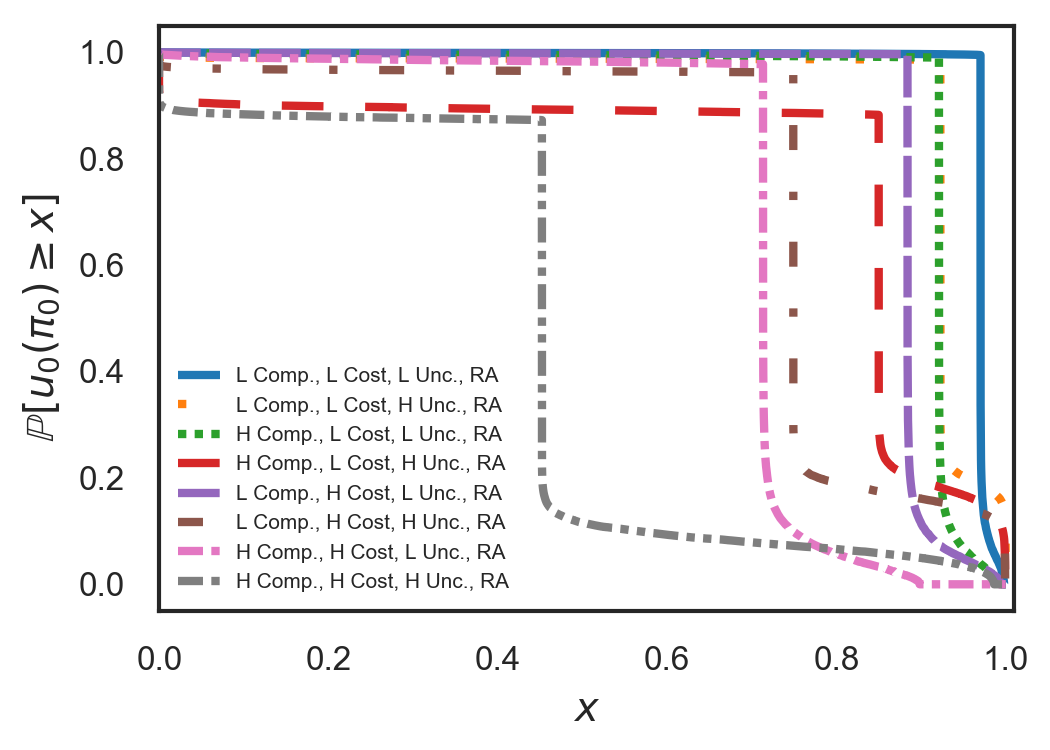

Consider the case of a single risk-averse agent of known type and a risk-neutral principal with an RB value function. In Fig. 4(a), we show the transfer functions for several agent types covering all possible combinations of low/high complexity, low/high cost, and low/high task uncertainty. Fig. 4(b) depicts the probability that the principal’s expected utility exceeds a given threshold for all these combinations. We refer to this curve as the exceedance curve. Finally, in tables I and II, we report the expected utility of the principal for the low and high cost agents, respectively. We make the following observations:

-

1.

For the same level of task complexity and uncertainty, but with increasing cost of effort:

-

(a)

the optimal passed-down requirement decreases;

-

(b)

the optimal payment for achieving the requirement increases;

-

(c)

the principal’s expected utility decreases; and

-

(d)

the exceedance curve shifts to the left.

Intuitively, as the agent’s cost of effort increases, the principal must make the contract more attractive to ensure that the participation constraints are satisfied. As a consequence, the probability that the principal’s expected utility exceeds a given threshold decreases.

-

(a)

-

2.

For the same level of task uncertainty and cost of effort, but with increasing complexity:

-

(a)

the optimal passed-down requirement decreases;

-

(b)

the optimal payment for achieving the requirement increases;

-

(c)

the principal’s expected utility decreases; and

-

(d)

the exceedance curve shifts to the left.

Thus, we see that an increase in task complexity has the same a similar effect as an increase in the agent’s cost of effort. As in the previous case, to make sure that the agent wants to participate, the principal has to make the contract more attractive as task complexity increases.

-

(a)

-

3.

For the same level of task complexity and cost of effort, but with increasing uncertainty:

-

(a)

the optimal passed-down requirement increases;

-

(b)

the optimal payment for achieving the requirement increases;

-

(c)

the principals expected utility decreases;

-

(d)

the exceedance curve shifts towards the bottom right.

This case is the most interesting. Here as the uncertainty of the task increases, the principal must increase the passed-down requirement to ensure that they are hedged against failure. At the same time, however, they must also increase the payment to ensure that the agent still has an incentive to participate.

-

(a)

-

4.

For all cases considered, the optimal passed down requirement is greater than the true requirement (which is set to one). Note, however, this is not universally true. Our study does not examine all possible combinations of cost, quality, and utility functions that could have been considered. Indeed, as we showed in our previous work [10], there are situations in which a smaller-than-the-true requirement can be optimal.

| Low Uncertainty | High Uncertainty | |

|---|---|---|

| Low Complexity | 0.97 | 0.93 |

| High Complexity | 0.92 | 0.79 |

| Low Uncertainty | High Uncertainty | |

|---|---|---|

| Low Complexity | 0.89 | 0.77 |

| High Complexity | 0.72 | 0.45 |

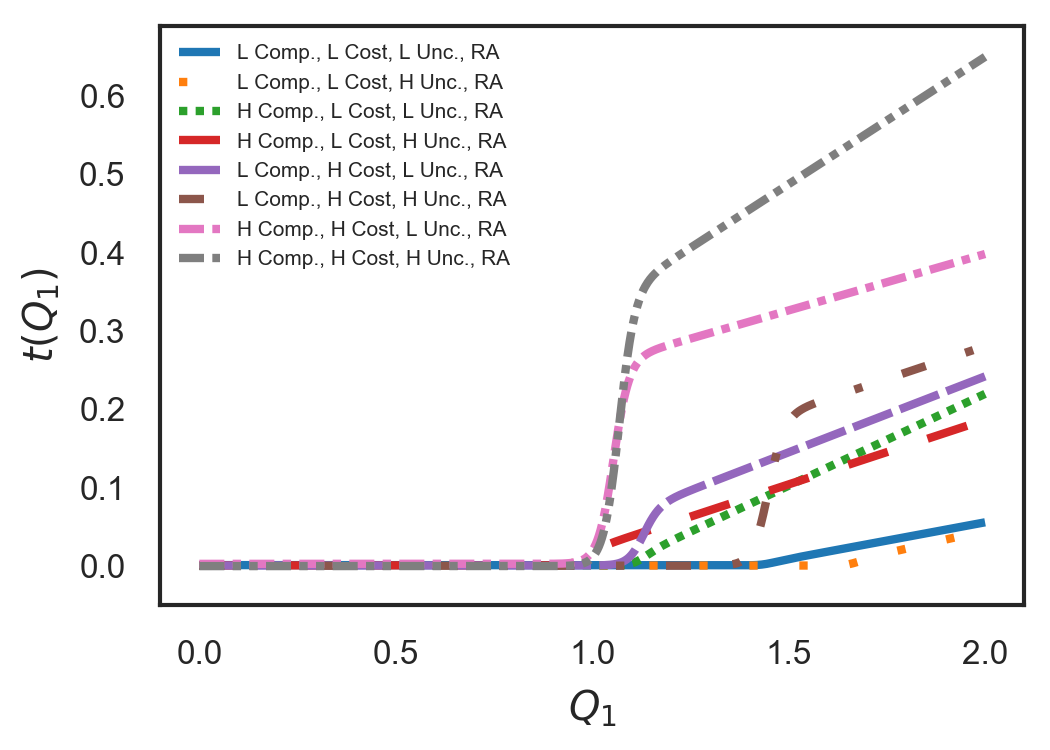

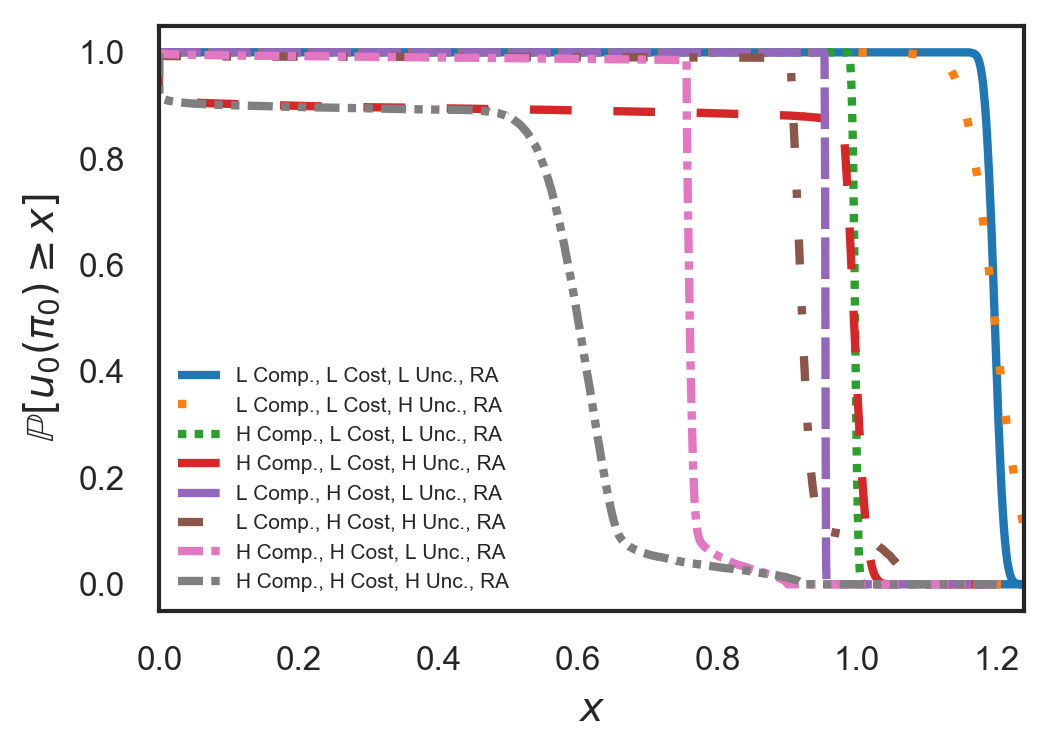

III-A2 Moral hazard with RPI transfer and value functions

This case is identical to Sec. III-A1, albeit we use the RPI value function, see Sec. II-F, and the RPI transfer function, see Eq. (24). Fig 5(a), depicts the transfer functions for all combinations of agent types and task complexities. In Fig. 5(b), we show the exceedance curve using the RPI value and transfer functions. Finally, in tables III and IV, we report the expected utility of the principal using the RPI transfer and value functions for the low and high cost agents, respectively. The results are qualitative similar to Sec. III-A1, with the additional observations:

-

1.

For the same level of task complexity, uncertainty and agent cost, the optimal reward for achieving the requirement decreases compared to the same cases in Sec. III-A1. Intuitively, as the principal has the option to reward the agent based on the quality exceeding the requirement, they prefer to pay less for fulfilling the requirement. Instead, the principal incentivizes the agent to improve the quality beyond the optimal passed-down requirement.

-

2.

The slope of the transfer function beyond the passed-down requirement is almost identical to the slope of the value function.

| Low Uncertainty | High Uncertainty | |

|---|---|---|

| Low Complexity | 1.2 | 1.2 |

| High Complexity | 1.0 | 0.89 |

| Low Uncertainty | High Uncertainty | |

|---|---|---|

| Low Complexity | 0.95 | 0.93 |

| High Complexity | 0.76 | 0.56 |

In table V, we summarize our observations for the results in Sec. III-A1 and III-A2. In this table, we show how the passed-down requirement and payment change when we fix two parameters of the model (we denote it by “fix” in the table) and vary the third parameter. We denote increase by and decrease by .

| complexity | agent cost | uncertainty | requirement | payment |

| fix | fix | |||

| fix | fix | |||

| fix | fix |

III-A3 Moral hazard with adverse selection

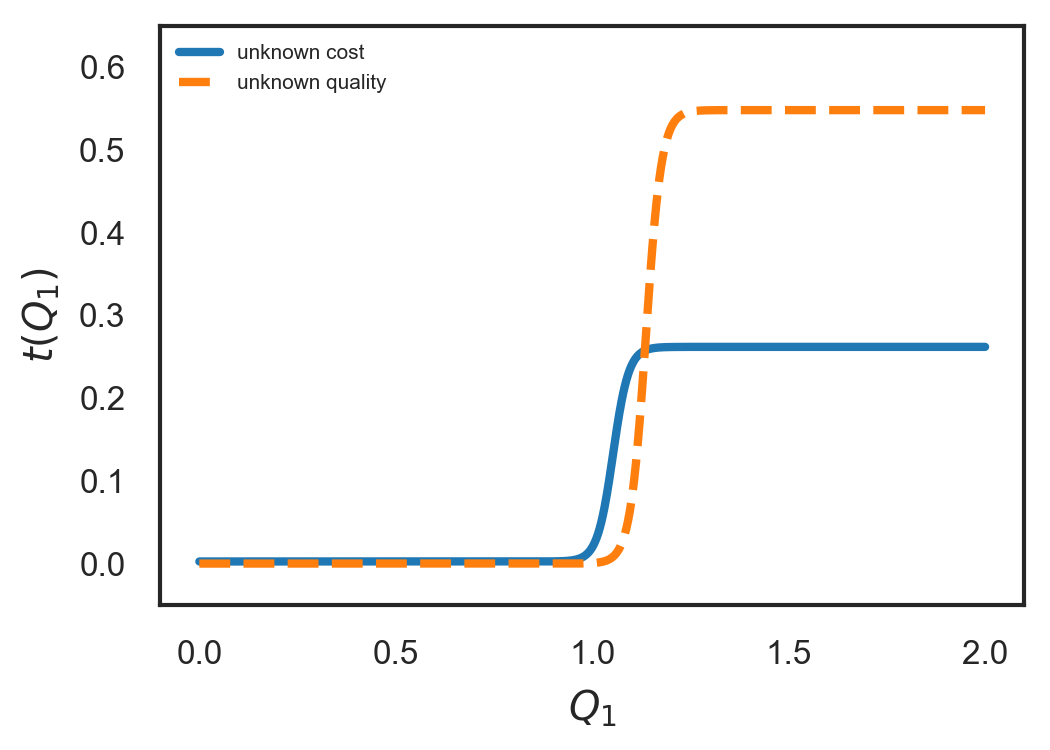

Consider the case of a single risk-averse agent of unknown type which takes two possible values, and a risk-neutral principal with a RB value function. We consider two possibilities for the unknown type:

-

1.

Unknown cost of effort. Here, we set (), (), and and

-

2.

Unknown task complexity. For the unknown quality we assume that and , (), and ().

In this scenario, we maximize the expected utility of the principal subject to constraints in Eqs. (29-32). The incentive compatibility constraint, Eq. (32), guarantees that the agent will choose the contract that is suitable for their true type. In other words, as there are two agent types’ possibilities, the principal must offer two contracts, see Fig. 1(b). These two contracts must be designed in a way that there is no benefit for the agent to deviate from their true type, i.e., the contracts enforce the agent to be truth telling.

Solving the constraint optimization problem yields:

i.e., the two contracts collapse into one. Note that the resulting contract is the same as the pure moral hazard case, Sec. III-A1, for an agent with type , , and . In other words, the principal must behave as if there was only a high-cost agent. That is, there are no contacts that can differentiate between a low- and a high-cost agent in this case.

A similar outcome occurs for unknown task complexity. The solution of the constraint optimization problem for this scenario is:

which is the same as the optimum contract that is offered for the pure moral hazard case, Sec. III-A1, for an agent with type , , and . Therefore, in this case the principal must behave as if there the task is of high complexity.

Note that in both cases above, the collapse of the two contracts to one contract is not a generalizable property of our model. In particular, it may not happen if more flexible transfer functions are allowed, e.g., ones that allow performance penalties.

In Fig. 6, we show the transfer functions for the adverse selection scenarios with unknown cost and unknown quality. In tables VI and VII, we show the expected utility of two types of agents and the principal using the optimum contract for unknown cost and unknown quality, respectively. To sum up:

-

1.

The unknown cost:

-

(a)

the optimum transfer function for this problem is as same as that the principal would have offered for a single-type high-cost agent with (moral hazard scenario with no adverse selection);

-

(b)

the expected utility of the low cost agent (efficient agent) is greater than that of the high cost agent.

In this case, the low-cost agent benefits because of information asymmetry. In other words, the principal must pay an information rent to the low-cost agent to reveal their type.

-

(a)

-

2.

The unknown task complexity:

-

(a)

the optimum contract in this case is the contract that the principal would have offered for the single-type high-complexity task with ;

-

(b)

the expected utility of an agent dealing with a low-complexity task is greater than that of an agent dealing with a high-complexity task.

Again, due to the information asymmetry, the agent benefits if the task complexity is low. The principal must pay an information rent to reveal the task complexity.

-

(a)

| Low Cost Agent (Type 1) | 0.39 | 0.72 |

| High Cost Agent (Type 2) | 0 | 0.72 |

| Low Complexity (Type 1) | 0.52 | 0.45 |

| High Complexity (Type 2) | 0 | 0.45 |

III-B Satellite Design

In this section we apply our method on a simplified satellite design. Typically a satellite consists of seven different subsystems [22], namely, electrical power subsystem, propulsion, attitude determination and control, on-board processing, telemetry, tracking and command, structures and thermal subsystems. We focus our attention on the propulsion subsystem (). To simplify the analysis, we assume that the design of these subsystems is assigned to a sSE in a one-shot fashion. Note that, the actual systems engineering process of the satellite design is an iterative process and the information and results are exchanged back and forth in each iteration. Our model is a crude approximation of reality. The goal of the SE is to optimally incentivize the sSE to produce subsystem designs that meet the mission’s requirements. Furthermore, we assume that the propulsion subsystem is decoupled from the other subsystems, i.e., there is no interactions between them, and that the SE knows the types of each sSE and therefore, there is no information asymmetry.

To extract the parameters of the model, i.e., , we will use available historical data. To this end, let be the cumulative, sector-wide investment on the propulsion subsystem and be the delivered specific impulse of solid propellants (). The specific impulse is defined as the ratio of thrust to weight flow rate of the propellant and is a measure of energy content of the propellants [22].

Historical data, say , of these quantities are readily available for many technologies. Of course, cumulative investment and best performance increase with time, i.e., and . We model the relationship between and as:

| (39) |

where and are the current states of these variables, , and and are parameters to be estimated from the all available data, . We use a maximum likelihood estimator for and . This is equivalent to a least squares estimate for :

| (40) |

and to setting equal to the mean residual square error:

| (41) |

Now, let be the required quality for the propulsion subsystem in physical units. The scaled quality of a subsystem , can be defined as:

| (42) |

with this definition, we get for the state-of-the-art, and for the requirement. Substituting Eq. (39) in Eq. (42) and using the maximum likelihood estimates for and , we obtain:

| (43) |

From this equation, we can identify the uncertainty in the quality function as:

| (44) |

Finally, we need to define effort. Let represents the time for which the propulsion engineer is to be hired. The cost of the agent per unit time is . is just the duration of the systems engineering process we consider. The value can be read from the balance sheets of publicly traded firms related to the technology. We can associate the effort variable with the additional investment required to buy the time of one engineer:

| (45) |

that is, corresponds to the effort of one engineer for time . Let us assume there are engineers work on the subsystem. Comparing this equation, Eq. (43), and Eq. (5), we get that the coefficient is given by:

| (46) |

To complete the picture, we need to talk about the value (in USD) of the system if the requirements are met. We can use this value to normalize all dollar quantities. That is, we set:

| (47) |

and for the cost per square effort of the agent we set:

| (48) |

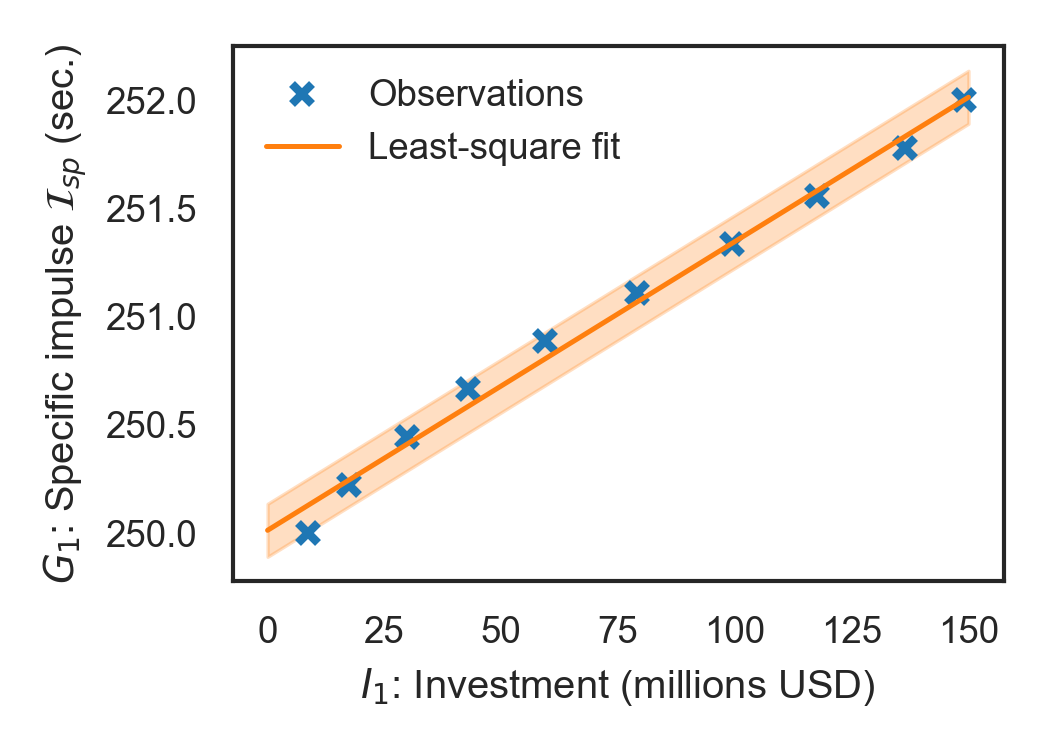

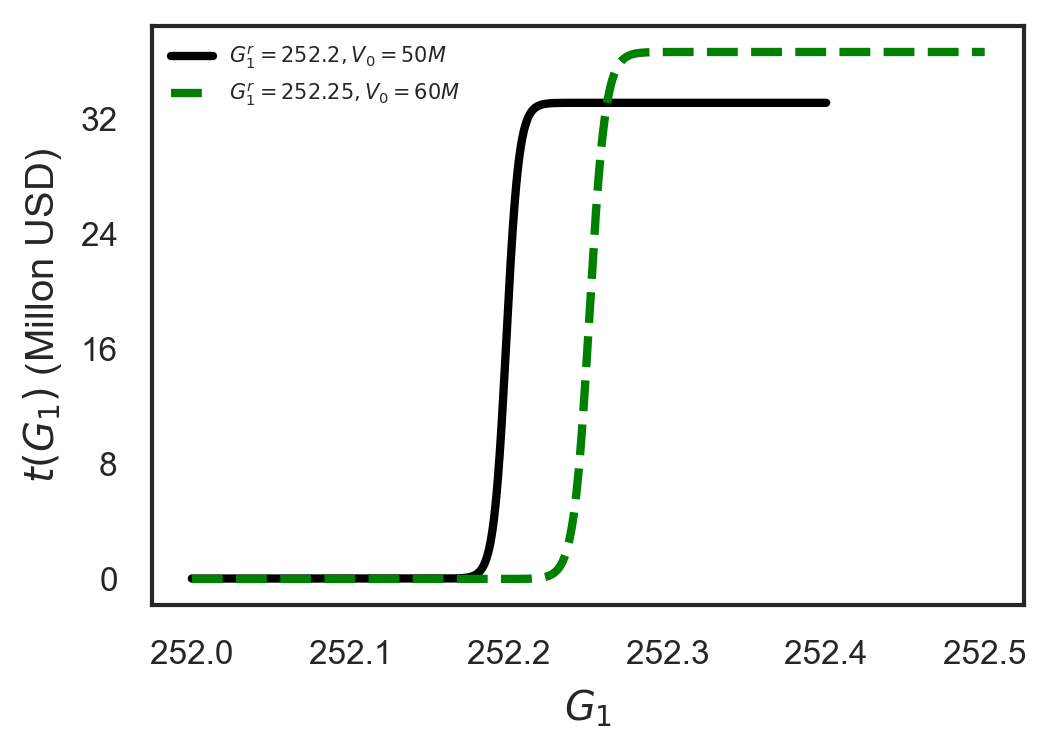

Finally, we use some real data to fix some of the parameters. Trends in delivered ( (sec.)) and investments by NASA ( (millions USD)) in chemical propulsion technology with time are obtained from [23] and [24], respectively. The state-of-the-art solid propellant technology corresponds to a value of 252 sec. and value of 149.1 million USD. The maximum likelihood fit of the parameters results in a regression coefficient of sec. per million USD, and standard deviation sec. The corresponding data and the maximum likelihood fit are illustrated in Fig. 7. The value of is the median salary (per time) of a propulsion engineer which is approximately 120,000 USD / year, according to the data obtained from [25]. For simplicity, also assume that year. Moreover, we assume that there are 200 engineers work on the subsystem, . We will examine two case studies which is summarized in table VIII.

| 252.2 s | 50,000,000 USD | 1.6 | 0.6 | 0.5 |

| 252.25 s | 60,000,000 USD | 1.28 | 0.48 | 0.4 |

Using RB value function, we depict the contracts for these two scenarios in Fig. 8.

IV CONCLUSIONS

We developed a game-theoretic model for a one-shot shallow SEP. We posed and solved the problem of identifying the contract (transfer function) that maximizes the principal’s expected utility. Our results show that, the optimum passed-down requirement is different from the real system requirement. For the same level of task complexity and uncertainty, as the agent cost of effort increases, the passed-down requirement decreases and the award to achieving the requirement increases. In this way, the principal makes the contract more attractive to the high-cost agent and ensures that the participation constraint is satisfied. Similarly, for the same level of task uncertainty and cost of effort, increasing task complexity results in lower passed-down requirement and larger award for achieving the requirement. For the same level of task complexity and cost of effort, as the uncertainty increases both the passed-down requirement and the award for achieving the requirement increase. This is because the principal wants to make sure that the system requirements are achieved. Moreover, by increasing the task complexity, the task uncertainty, or the cost of effort, the principal earns less and the exceedance curve is shifted to the left. Using the RPI contracts, the principal pays smaller amount for achieving the requirement but, instead, they pay for per quality exceeding the requirement.

For the adverse selection scenario with RB value function, we observe that when the principal is maximally uncertain about the cost of the agent, the optimum contracts are equivalent to the contract designed for the high cost agent in the single-type case with no adverse selection. The low-cost agent earns more expected utility than the high-cost agent. This is the information rent that the principal must pay to reveal the agents’ types. Similarly, if the principal is maximally uncertain about the task complexity, the two optimum contracts for the unknown quality are equivalent to the contract that is offered to the high-complexity task where there is no adverse selection. Note that, the equivalence of the contracts in adverse selection scenario with the contract that is offered in absence of adverse selection is not universal. If the class of possible contracts is enlarged, e.g., to allow penalties, there may be a set of two contracts that differentiate types.

There are still many remaining questions in modeling SEPs using a game-theoretic approach. First, there is a need to study the hierarchical nature of SEPs with potentially coupled subsystems. Second, true SEPs are dynamic in nature with many iterations corresponding to exchange of information between the various agents. These are the topics of ongoing research towards a theoretical foundation of systems engineering design that accounts for human behavior.

Appendix A Numerical estimation of the required expectations

For the numerical implementation of the suggested model, we need to be able to carry out expectations of the form of Eq. 9 a.k.a. Eq. 8 and Eq. 7. Since, we have at most two possible types in our case studies, the summation over the possible types is trivial. Focusing on expectations over , we evaluate them using a sparse grid quadrature rule [26]. In particular, any expectation of the form is approximated by:

| (49) |

where and are the quadrature points of the level sparse grid quadrature constructed by the Gauss-Hermite 1D quadrature rule.

Acknowledgment

This material is based upon work supported by the National Science Foundation under Grant No. 1728165.

References

- [1] G. Locatelli, “Why are megaprojects, including nuclear power plants, delivered overbudget and late? reasons and remedies,” arXiv preprint arXiv:1802.07312, 2018.

- [2] U. S. G. A. Office, “Navy shipbuilding past performance provides valuable lessons for future investments,” 2018.

- [3] N. GAO, “Assessments of major projects,” 2018.

- [4] I. Maddox, P. Collopy, and P. A. Farrington, “Value-based assessment of dod acquisitions programs,” Procedia Computer Science, vol. 16, pp. 1161 – 1169, 2013, 2013 Conference on Systems Engineering Research. [Online]. Available: http://www.sciencedirect.com/science/article/pii/S1877050913001233

- [5] P. D. Collopy and P. M. Hollingsworth, “Value-Driven Design,” Journal of Aircraft, vol. 48, no. 3, pp. 749–759, 2011. [Online]. Available: https://doi.org/10.2514/1.C000311

- [6] A. Bandura, “Social cognitive theory: An agentic perspective,” Annual review of psychology, vol. 52, no. 1, pp. 1–26, 2001.

- [7] M. Shergadwala, I. Bilionis, K. N. Kannan, and J. H. Panchal, “Quantifying the impact of domain knowledge and problem framing on sequential decisions in engineering design,” Journal of Mechanical Design, vol. 140, no. 10, p. 101402, 2018.

- [8] A. M. Chaudhari, Z. Sha, and J. H. Panchal, “Analyzing participant behaviors in design crowdsourcing contests using causal inference on field data,” Journal of Mechanical Design, vol. 140, no. 9, p. 091401, 2018.

- [9] S. D. Vermillion and R. J. Malak, “Using a principal-agent model to investigate delegation in systems engineering,” in ASME 2015 International Design Engineering Technical Conferences and Computers and Information in Engineering Conference. American Society of Mechanical Engineers, 2015, pp. V01BT02A046–V01BT02A046.

- [10] S. Safarkhani, V. R. Kattakuri, I. Bilionis, and J. Panchal, “A principal-agent model of systems engineering processes with application to satellite design,” arXiv preprint arXiv:1903.06979, 2019.

- [11] J. Watson, “Contract, mechanism design, and technological detail,” Econometrica, vol. 75, no. 1, pp. 55–81, 2007.

- [12] S. Safarkhani, I. Bilionis, and J. Panchal, “Understanding the effect of task complexity and problem-solving skills on the design performance of agents in systems engineering,” the ASME 2018 International Design Engineering Technical Conferences and Computers and Information in Engineering Conference, Aug 2018.

- [13] J. A. Mirrlees, “The theory of moral hazard and unobservable behaviour: Part i,” The Review of Economic Studies, vol. 66, no. 1, pp. 3–21, 1999. [Online]. Available: http://www.jstor.org/stable/2566946

- [14] W. P. Rogerson, “The first-order approach to principal-agent problems,” Econometrica, vol. 53, no. 6, pp. 1357–1367, 1985. [Online]. Available: http://www.jstor.org/stable/1913212

- [15] R. Ke, C. T. Ryan et al., “A general solution method for moral hazard problems.”

- [16] R. Myerson, Game Theory: Analysis of Conflict. Harvard University Press, 1991. [Online]. Available: https://books.google.com/books?id=1w5PAAAAMAAJ

- [17] R. B. Myerson, “Optimal auction design,” Mathematics of operations research, vol. 6, no. 1, pp. 58–73, 1981.

- [18] D. Kahneman and A. Tversky, “Prospect theory.. an analy-sis of decision under risk,” 2008.

- [19] Theano Development Team, “Theano: A Python framework for fast computation of mathematical expressions,” arXiv e-prints, vol. abs/1605.02688, May 2016. [Online]. Available: http://arxiv.org/abs/1605.02688

- [20] A. Doucet, S. Godsill, and C. Andrieu, “On sequential monte carlo sampling methods for bayesian filtering,” Statistics and computing, vol. 10, no. 3, pp. 197–208, 2000.

- [21] Ilias bilionis, “Sequential Monte Carlo working on top of pymc.” [Online]. Available: https://github.com/PredictiveScienceLab/pysmc

- [22] J. R. Wertz, D. F. Everett, and J. J. Puschell, Space mission engineering: the new SMAD. Hawthorne, CA: Microcosm Press : Sold and distributed worldwide by Microcosm Astronautics Books, 2011, oCLC: 747731146.

- [23] “Solid.” [Online]. Available: http://www.astronautix.com/s/solid.html

- [24] “NASA Historical Data Books.” [Online]. Available: https://history.nasa.gov/SP-4012/vol6/cover6.html

- [25] “Propulsion Engineer Salaries.” [Online]. Available: https://www.paysa.com/salaries/propulsion-engineer--t

- [26] T. Gerstner and M. Griebel, “Numerical integration using sparse grids,” Numerical Algorithms, vol. 18, no. 3, p. 209, Jan 1998. [Online]. Available: https://doi.org/10.1023/A:1019129717644