Towards Meaningful Statements in IR Evaluation

Mapping Evaluation Measures to Interval Scales

Abstract

Recently, it was shown that most popular IR measures are not interval-scaled, implying that decades of experimental IR research used potentially improper methods, which may have produced questionable results. However, it was unclear if and to what extent these findings apply to actual evaluations and this opened a debate in the community with researchers standing on opposite positions about whether this should be considered an issue (or not) and to what extent.

In this paper, we first give an introduction to the representational measurement theory explaining why certain operations and significance tests are permissible only with scales of a certain level. For that, we introduce the notion of meaningfulness specifying the conditions under which the truth (or falsity) of a statement is invariant under permissible transformations of a scale. Furthermore, we show how the recall base and the length of the run may make comparison and aggregation across topics problematic. Then we propose a straightforward and powerful approach for turning an evaluation measure into an interval scale, and describe an experimental evaluation of the differences between using the original measures and the interval-scaled ones. For all the regarded measures – namely Precision, Recall, Average Precision, (Normalized) Discounted Cumulative Gain, Rank-Biased Precision and Reciprocal Rank - we observe substantial effects, both on the order of average values and on the outcome of significance tests. For the latter, previously significant differences turn out to be insignificant, while insignificant ones become significant. The effect varies remarkably between the tests considered but overall, on average, we observed a change in the decision about which systems are significantly different and which are not.

1 Introduction

By virtue or by necessity, Information Retrieval (IR) has always been deeply rooted in experimentation and evaluation has been a formidable driver of innovation and advancement in the field, as also witnessed by the success of the major evaluation initiatives – Text REtrieval Conference (TREC)111https://trec.nist.gov/ in the United States [46], Conference and Labs of the Evaluation Forum (CLEF)222http://www.clef-initiative.eu/ in Europe [36], NII Testbeds and Community for Information access Research (NTCIR)333http://research.nii.ac.jp/ntcir/ in Japan and Asia [76], and Forum for Information Retrieval Evaluation (FIRE)444http://fire.irsi.res.in/ in India – not only from the scientific and technological point of view but also from the economic impact one [71].

Central to experimentation and evaluation is how to measure the performance of an IR system and there is a rich set of IR literature discussing existing evaluation measures or introducing new ones as well as proposing frameworks to model them [20, 66]. The major goal is to quantify users’ experience of retrieval quality for certain types of search behavior, like e.g. users stopping at the first relevant document, or after the first ten results. Most of the measures proposed are based on plausible arguments and often accompanied by experimental studies, also investigating how close they are to end-user experience and satisfaction [51, 106, 107]. However, little attention has been given to a proper theoretic basis of the evaluation measures, leading to possibly flawed measures and affecting the validity of the scientific results based on them, especially their internal validity, i.e. “the ability to draw conclusions about causal relationships from the results of a study” [25, p. 157]

A few years ago, Robertson [70] raised the question of which scales are used by IR evaluation measures, since they determine which operations make sense on the values of a measure, as originally proposed by Stevens [85]. Scales have increasing properties: a nominal scale allows for determination of equality and for the computation of the mode; an ordinal scale allows only for determination of greater or less and for the computation of medians and percentiles; an interval scale allows also for determination of equality of intervals or differences and for the computation of mean, standard deviation, rank-order correlation; finally, a ratio scale allows also for the determination of equality of ratios and for the computation of coefficient of variation. Recently, Ferrante et al. [32, 33] have theoretically shown that some of the most known and used IR measures, like Average Precision (AP) or Discounted Cumulative Gain (DCG), are not interval-scales. As a consequence, we should neither compute means, standard deviations and confidence intervals, nor perform significance tests that require an interval scale. Over the decades there has been much debate about Stevens’s prescriptions [56, 95, 45, 62] and this debate has also spawn to the IR field with Fuhr [40] suggesting strict adherence to Stevens’s prescriptions and Sakai [75] arguing for a more lenient approach.

Our vision is that it is now time for the IR field to accurately investigate and understand the scale properties of its evaluation measures and their implications on the validity of our experimental findings. As a matter of fact, we are not aware of any experimental IR paper that regarded evaluation measures as ordinal scales, thus refraining from computing (and comparing) means; also, most papers using evaluation measures apply parametric tests, which should be used only from interval scales onwards. This means that improper methods have been potentially applied. Independently from your stance in the above long-standing debate, the key question about IR experimental findings is: are we on the safe side or are we at risk? Are we in a situation like using a rubber band to measure and compare lengths? Are we facing a state of the affairs where decades of IR research may have produced questionable results?

We do not have the answer to these questions but our intention with this paper is to lay the foundations and set all the pieces needed to have the means and instruments to answer these questions and to let the IR community discuss these issues on a common ground in order to reach shared conclusions.

Therefore, the contributions of the paper are as follows:

- 1.

-

2.

introduction to the notion of meaningfulness [29, 67, 69], i.e. the conditions under which the truth (or falsity) of a statement is invariant under permissible transformations of a scale. To the best of our knowledge, this concept has never investigated or applied in IR but it is fundamental to the validity of the inferences we draw;

-

3.

discussion and demonstration of further measurement issues, specific to IR and beyond the debate on permissible operations. In particular, we show how the recall base and the length of the run may make averaging across topics (or other forms of aggregate statistics) problematic, at best;

-

4.

proposal of a straightforward and powerful approach for turning an evaluation measure into an interval scale, by transforming its values into their rank position. In this way, we provide a means for improving the meaningfulness and validity of our inferences, still preserving the different user models embedded by the various evaluation measures;

-

5.

experimental evaluation of the differences between using the original measures and the interval-scaled ones, by relying on several TREC collections. For all the regarded measures – namely Precision, Recall, AP, DCG, Normalized Discounted Cumulative Gain (nDCG), Rank-Biased Precision (RBP), and Reciprocal Rank (RR) – we observe substantial effects, both on the order of average values and on the outcome of significance tests. For the latter, previously significant differences turn out to be insignificant, while insignificant ones become significant. The effect varies remarkably between the tests considered but overall, on average, we observed a change in decisions about what is significant and what is not.

The paper is organized as follows: Section 2 provides an overview of the representational theory of measurement, of the different types of scale, and the notion of meaningfulness. Section 3 deeply discusses measurement and meaningfulness issues specific to IR. Section 4 briefly summarizes related works. Section 5 explains our methodology for transforming evaluation measures into interval scales. Section 6 introduces the experimental setup while Section 7 discusses the results of the experiments. Finally, Section 8 draws some conclusions and outlooks for future works.

2 Measurement

2.1 Overview

The representational theory of measurement [53, 87, 59] is one of the most developed approaches to measurement, suitable for many areas of science ranging to physics and engineering to psychology. The basic idea is that real world objects have attributes which constitute their relevant features and induce a set of relationship among them; the set of objects together with the relationships among them comprise the so-called Empirical Relational System (ERS) . Then, we look for a mapping between the real word objects and numbers in such a way that the relationships among the objects match with relationships among numbers; the set of numbers together with the relationships constitutes the so-called Numerical Relational System (NRS) .

More precisely, the representational theory of measurement seeks for an homomorphism which maps onto in such a way that it holds that . The homomorphism is called a scale of measurement. Note that, in general, we seek for an homomorphism and not an isomorphism because two different real word objects might be mapped into the same number.

The most typical example is length. Suppose the ERS is a set of rods with an order relationship among rods and a concatenation operation among them. If the attribute under examination is the length of a rod, we can map the ERS to the NRS such that it holds and , that is if a rod is longer than another one the number assigned to the first one is bigger than the number assigned to the second one and the concatenation of two rods corresponds to the sum of the two numbers assigned to them.

The core of the representational theory of measurements is to seek for a representation theorem and a uniqueness theorem for the scale of measurement in order to fully define it.

The representation theorem ensures that if the ERS satisfies given properties, it is possible to construct an homomorphism to a certain NRS. In the previous example, the representation theorem defines which properties the order relation and the concatenation have to satisfy in order to construct a real-valued function which is order preserving and additive. It is important to underline that the representational theory of measurement seeks for “operations” among real word objects – e.g. we can put two rods side by side to order them or we can lay two rods end by end to concatenate them – and if these “operations” satisfy given properties they can be reflected into corresponding operations among numbers, where numbers are just a proxy of what happens among real world objects but are much more convenient to manipulate.

In general, given an ERS and an NRS, it is possible to create more than one homomorphism between them. For example, it is possible to express length by using meters or yards and both of them are legitimate scales for length. The uniqueness theorem is concerned with determining which are the permissible transformations such that and are both homomorphisms of the given ERS into the same NRS. In our example, any transformation is permissible for length. Therefore, the uniqueness theorem guarantees that the “structure” of a scale of measurement is invariant to changes in the numerical assignment, which preserve the relationships.

2.2 Classification of the Scales of Measurement

Stevens [85] introduced a classification of scales based on their permissible transformations, described below.

2.2.1 Nominal scale

It is used when entities of the real world can be placed into different classes or categories on the basis of their attribute under examination. The ERS consists only of different classes without any notion of ordering among them and any distinct numeric representation of the classes is an acceptable measure but there is no notion of magnitude associated with numbers. Therefore, any arithmetic operation on the numeric representation has no meaning.

The class of permissible transformations is the set of all one-to-one mappings, i.e. bijective functions: , since they preserve the distinction among classes.

Example 1 (Nominal Scale).

Consider a classification of people by their country, e.g. France, Germany, Greece, Italy, Spain, and so on. We could define the two following measurements:

both and are valid measures, which can be related with a one-to-one mapping. Note that even if looks like being ordered, there is actually no meaning in the associated magnitudes and so it should not be confused with an ordinal scale. Moreover, even if it is alway possible to operate with numbers, using and performing , which would correspond to , has no specific meaning, as well as using and performing , which would correspond to , even in disagreement with the previous case.

2.2.2 Ordinal scale

It can be considered as a nominal scale where, in addition, there is a notion of ordering among the different classes or categories. The ERS consists of classes that are ordered with respect to the attribute under examination and any distinct numeric representation which preserves the ordering is acceptable. Therefore, the magnitude of the numbers is used just to represent the ranking among classes. As a consequence, addition, subtraction or other mathematical operations have no meaning.

The class of permissible transformations is the set of all the monotonic increasing functions, since they preserve the ordering: .

Example 2 (Ordinal Scale).

The European Commission Regulation 607/2009 [27] and the follow-up regulation 2019/33 [28] set the following increasing scale to classify sparkling wines on the basis of their sugar content:

-

•

pas dosé (brut nature): sugar content is less than 3 grams per litre; let us call this range ;

-

•

extra brut: sugar content is between 0 and 6 grams per litre; let us call this range ;

-

•

brut : sugar content is less than 12 grams per litre; let us call this range ;

-

•

extra dry: sugar content is between 12 and 17 grams per litre; let us call this range ;

-

•

sec (dry): sugar content is between 17 and 32 grams per litre; let us call this range ;

-

•

demi-sec (medium dry): sugar content is between 32 and 50 grams per litre; let us call this range ;

-

•

doux (sweet): sugar content is greater than 50 grams per litre; let us call this range , where 2000 grams per litre is roughly the saturation of sugar in water, which is much higher than those of sugar in alcohol.

We can introduce two alternative ordinal scales and of the above wine scale where is given by the maximum of a range while is given by a monotonic transformation :

As in the case of the previous Example 1, mathematical operations have no specific meaning, even if, especially in the case of , we may be tempted to perform operations like to express statements like “brut may be twice as sweet as extra brut”. However, such statement cannot be expressed on the or scale and it actually comes from implicitly changing scale to the mass concentration scale of the solution, which is a ratio scale (see below) where the division operation would make sense. Also addition and subtraction have no meaning, so is not a way to express statements like “brut may have of sugar more than extra brut”, for the same reasons above. We could perform operations such as or but this would be just a more involute way of expressing the order among categories, which is the only property guaranteed by ordinal scales.

2.2.3 Interval scale

Besides relying on ordered classes, it also captures information about the size of the intervals that separate the classes. The ERS consists of classes that are ordered with respect to the attribute under examination and where the size of the “gap” among two classes is somehow understood; more precisely, fundamental to the definition of an interval scale is that intervals must be equi-spaced. An interval scale preserves order, as an ordinal one, and differences among classes have meaning – but not their ratio. Therefore, addition and subtraction are acceptable operations but not multiplication and division.

The class of permissible transformations is the set of all affine transformations: .

Note that while ratios of classes have no meaning on an interval scale, the ratio of differences among classes, i.e. the ratio of intervals, is allowed and invariant .

Example 3 (Interval Scale).

A typical example of interval scale is temperature, which can be expressed on either the Fahrenheit or the Celsius scale, where the affine transformation allows us to pass from one to the other. When talking about temperature it does not make sense to say that is twice as hot as , i.e. multiplication and division are not allowed; you can also note that the division operation is not invariant to the transformation, since but . However, it makes sense to say that the increase between and is the same as the increase between and , i.e. addition and subtractions are allowed; you can also note that the subtraction operation is invariant to the transformation since and . Moreover, the ratio of intervals is invariant to the transformation .

Central to the notion of temperature is the fact that the size of the “gap” has the same meaning all over the scale; indeed, 1 degree represents the same amount of thermal energy all over the scale. i.e. the gaps are equi-spaced.

2.2.4 Ratio scale

It allows us to compute ratios among the different classes. The ERS consists of classes that are ordered, where there is a notion of “gap” among two classes and where the “proportion” among two classes is somehow understood. It preserves order and differences as well as ratios. Therefore, all the arithmetic operations are allowed.

The class of permissible transformations is the set of all linear transformations: .

Example 4 (Ratio Scale).

A typical example of ratio scale is length which can be expressed on different scales, e.g. meters or yards, which can all be mapped one into another via a similarity transformation. For example, to pass from kilometers () to miles (), we have the following transformation .

Another example of ratio scale is the absolute temperature on the Kelvin scale where there is a zero element, which represents the absence of any thermal motion.

2.3 Admissible Statistical Operations

Stevens moved a step forward and linked the notion of scale with that of admissible statistical operations which can be carried out with that scale:

-

•

Nominal scale: the only allowable operation is counting number of items in each class, that is, in statistical terms, mode and frequency.

-

•

Ordinal scale: besides the operations already allowed for nominal scales, median, quantiles, and percentiles are appropriate, since there is a notion of ordering.

-

•

Interval scale besides the operations already allowed for ordinal scales, mean and standard deviation are allowable since they depend just on sum and subtraction555Note that when we talk about admissible operations, we mean operations between items of the scale. So, for example, a mean involves summing items of the scale, e.g. temperature, and this is possible on an interval scale. The fact that a mean also requires a division by the number of items added together is not in contrast with saying that only addition and subtraction are allowed, since is not an item of the scale..

-

•

Ratio scale: besides the operations already allowed for interval scales, geometric and harmonic mean, as well as coefficient of variation, are allowable since they depend on multiplication and division.

These prescriptions originated several debates over the decades. Lord [56, p. 751] argued that “since the numbers don’t remember where they come from, they always behave the same way, regardless” and so any operation should be allowed even on “football numbers”, i.e. a nominal scale; Gaito [42] reinforced this argument by distinguishing between the realm of the measurement theory, where Stevens’s restrictions should apply, and the realm of the statistical theory, where these restrictions should not be applied, since other assumptions, such as normal distribution of the data, are those actually needed. Townsend and Ashby [89] replied back showing cases where performing operations inadmissible for a given scale of measurement may mislead the conclusions drawn by statistical tests. O’Brien [68] discussed the type of errors introduced when using ordinal data for representing an underlying continuous variable, classifying them into pure transformation errors, pure categorization errors, pure grouping errors, and random measurement errors. Velleman and Wilkinson [95] summarized the previous debate and argumented that once you are in the numerical realm every operation is admissible among numbers. Recently, Scholten and Borsboom [80] made a case of flaws in the original Lord’s argument and, as a striking consequence, Lord’s experiment would not be a counterargument to Stevens’s restrictions but it would rather comply with them. In a very recent textbook, Sauro and Lewis [78] firmly supported Lord’s view, at least in the case of ordinal scales, but with the caveat to not make claims on the outcomes of a statistical test that violate the underlying scale. So, for example, if you are on ordinal scale and you detected a significant effect using a test which would require a ratio scale, you should not claim that that effect is twice as big as another effect but just that it is significant.

2.4 Meaningfulness

The above observation brings the debate back to the core issue of what we should pay attention to. Indeed, both Hand [45] and Michel [62, 63] argued that the problem is not what operations you can perform with numbers but what kind of inference you wish to make from those operations and how much such inference has to be indicative of what actually happens among real world objects. Already Adams et al. [2, pp. 99-100] explicitly stated that

Statistical operations on measurements of a given scale are not appropriate or inappropriate per se but only relative to the kinds of statements made about them. The criterion of appropriateness for a statement about a statistical operation is that the statement be empirically meaningful in the sense that its truth or falsity must be invariant under permissible transformations of the underlying scale

These statements opened the way to the development of a full (formal) theory of meaningfulness [29, 67, 69], which is a central concept to clearly shape and define the questions discussed above: according to the adopted measurement scales, what processing, manipulation, and analyses can be conducted and what can we tell about the conclusions drawn from such processing?

Note that the statement “A mouse weights more than an elephant” is meaningful even if it is clearly false; indeed, its truth value, i.e. false, does not change whatever weight scale you use (kilograms, pounds, and so on). Therefore, as anticipated above, meaningfulness is a distinct concept from the one of truth of a statement and it is somehow close to the notion of invariance in geometry, since the truth value of a statement stays the same independently from the permissible scales used to express it.

Example 5 (Meaningfulness for a Nominal Scale – Example 1 continued).

Suppose that we observe a set of 10 people, where 5 people are Spanish, 3 German, 1 Greek, and 1 Italian. According to we would have while according to we would have . In both cases, the statement “Most people come from Spain” is meaningful since, if we compute the mode of the values, it is in the case of and in the case of which both correspond to Spain. On the other hand, the statement “The lowest quartile consist of Spanish people” is not meaningful, since it is true with corresponding to Spain in the case of but is is false with corresponding to Germany in the case of . Indeed, the first statement about the mode involves just counting, which is an allowable operation for a nominal scale, while the second statement about the lowest quartile requires a notion of ordering not present in a nominal scale.

Example 6 (Meaningfulness for an Ordinal Scale – Example 2 continued).

Suppose that we have two wineries and . The first winery produced five bottles as follows: extra brut, extra brut, brut, extra dry, and sec; the second one produced five bottles as follows: pas dosé, pas dosé, pas dosé, brut, and demi-sec. Therefore, according to the scale , we have and ; while according to the scale , we have and . The statement “The median of the first winery is greater than the one of the second winery” is meaningful since according to is true as well as according to ; so we could safely say that the first winery produces a little more brut-like wines than the second one, focusing on a more standard product. On the other hand, the statement ”The average of the first winery is greater than the one of the second winery” is not meaningful since according to is true but according to is false, which would lead us to draw basically opposite conclusions based on the scale we use. Indeed, the first statement about the median involves just the notion of ordering which is allowable on an ordinal scale, while the second statement about the average requires to sum values, which is not an allowable operation.

Example 7 (Meaningfulness for an Interval Scale – Example 3 continued).

The statement ‘Today the difference in temperature between Rome and Oslo is twice as high as it was one month ago” is meaningful. Indeed, if, on the Celsius scale, the temperature today in Rome is ∘C and in Oslo is ∘C while one month ago it was ∘C and ∘C, leading to which is twice as , on the Fahrenheit scale we would have which is twice as .

Suppose now that we have recorded two sets of temperatures from Paris and Rome: and in Celsius degrees and, the same, and in Fahrenheit degrees.

The statement “The median temperature in Paris is the same as in Rome” is meaningful, since in Celsius degrees and in Fahrenheit degrees; this is due to the fact that interval scales are also ordinal and quantiles are an allowable operation on ordinal scales.

The statement “The mean temperature in Paris is less than in Rome” is meaningful as well, since in Celsius degrees and in Fahrenheit degrees; this is due to the fact that addition and subtraction are allowable operations on an interval scale and, as a consequence, mean is invariant to affine transformations. Indeed, let and be two set of values on an interval scale; it holds that

Therefore, the statement “The mean of is greater than the mean of ” is always meaningful.

Finally, the statement “The geometric mean of temperature in Paris is greater than in Rome” is not meaningful, since in Celsius degrees and in Fahrenheit degrees; this is due to the fact that the geometric mean involves the multiplication and division of values, which is not a permitted operation on an interval scale.

Also note that we may be tempted to compare the results of the arithmetic mean with those of the geometric mean to gain “more insights”. For example, we might observe that the arithmetic mean in Paris is less than in Rome – in Celsius degrees – but the opposite is true when we consider the geometric mean – in Celsius degrees. We might thus highlight that this due to the fact that the first (and lowest) value in Paris is double than in Rome and that the geometric mean rewards gains at lowest values; on the other hand, the arithmetic mean rewards gains at higher values and thus in Paris is (almost) half than in Rome and it contributes less. However, while the explanation why the geometric mean may differ from the arithmetic one is surely credible, the issue here is that the geometric mean could not be relied upon, as well as conclusions drawn from it, since it is based on operations not allowed on an interval scale; indeed, if we consider exactly the same temperatures just on the Fahrenheit scale, we would reach opposite conclusions.

Example 8 (Meaningfulness for a Ratio Scale – Example 4 continued).

If the air distance between Rome and Padua is (about) kilometers and the air distance among Rome and Oslo is (about) kilometers, the statement “Rome and Oslo are five times as distant as Rome and Padua” is meaningful, even expressed in miles, since .

On the Kelvin scale for temperature, it does make sense to say that a thing is twice as hot as another thing if, for example, the first one is K (almost ∘C, ∘F) and the second one is K (almost ∘C, ∘F); you can note, however, how this statement does not hold if we consider Celsius and Fahrenheit degrees, since while (and none of them is exactly twice).

Finally, let us show that the statement “The geometric mean of is greater than the geometric mean of ” is always meaningful. Indeed, let and be two set of values on a ratio scale; it holds that

2.5 Statistical Significance Testing

Siegel [83] and Senders [82] have discussed the implications of Stevens’ classification and permissible operations in the case of statistical inference and parametric and nonparametric statistical significance tests. We consider the following tests:

-

•

Sign Test [44] is a non parametric test which looks at the signs of the differences among two paired samples and ; the null hypothesis is that the median of the differences is zero.

The sign test requires samples to be on an ordinal scale, since it needs to determine the sign of their difference or, equivalently, which one is greater. Note that the sign test discards the tied samples, i.e. when .

-

•

Wilcoxon Rank Sum Test (or Mann-Whitney U Test) [104, 44] is a non parametric test which looks at the ranks of two paired samples and ; the null hypothesis is that the two samples have the same median.

The Wilcoxon rank sum test requires samples to be on an ordinal scale, since it needs to order them for determining their rank.

-

•

Wilcoxon Signed Rank Test [104, 44] is a non parametric test which looks at the signs and ranks of the differences among two paired samples and ; the null hypothesis is that the median of the differences is zero.

The Wilcoxon signed rank test requires samples to be on an interval scale, since it regards the ranks of the differences, for which intervals must be equi-spaced. Note that the Wilcoxon signed rank test discards the tied samples, i.e. when .

-

•

Student’s t Test [86] is a parametric test for the null hypothesis that two paired samples and come from a normal distribution with same mean and unknown variance.

The Student’s t test requires samples to be on an interval scale, since it needs to compute means and variances.

-

•

ANalysis Of VAriance (ANOVA) [37, 55] is a parametric test for the null hypothesis that samples come from a normal distribution with same mean and unknown variance.

ANOVA requires samples to be on an interval scale, since it needs to compute means and variances.

-

•

Kruskal-Wallis Test [54, 44] is a nonparametric version of the one-way ANOVA for the null hypothesis that samples come from a distribution with same median. It is based on the ranks of the different samples and it can be considered as an extension of the Wilcoxon rank sum test to the comparison of multiple systems at the same time.

The Kruskal-Wallis test requires samples to be on an ordinal scale, since it needs to order them for determining their rank.

-

•

Friedman Test [38, 39, 44] is a nonparametric version of the two-way ANOVA for the null hypothesis that the effects of the samples are the same. It is based on the ranks of the different samples.

The Friedman test requires samples to be on an ordinal scale, since it needs to order them for determining their rank.

As in the case of Stevens’ permissible operations, defining which statistical significance tests should be permitted on the basis of the scale properties of the investigated variables raised a lot of discussion and controversy. Anderson [10], along the line of reasoning of Lord, argued that statistical significance tests should be used regardless of scale limitations. Gardner [43] summarizes much of the discussion up to that point, leaning towards not worrying too much about scale assumptions, and suggests that, if and when lack of compliance to measurement scale requirements biases the outcomes of significance tests, transformations can be applied to turn ordinal scales into more interval-like ones such as, for example, averaging the ranks of each score, as proposed by Gaito [41], or using a more complex set of rules, as developed by Abelson and Tukey [1]. Ware and Benson [102] replied to Gardner’s positions by further revising the pro and con arguments and concluding that parametric significance tests should be used only when dealing with interval and ratio scales while, in the case of ordinal scales, nonparametric significance tests should be adopted. Townsend and Ashby [89] further investigated the issue, highlighting some serious pitfalls you may fall in, when ignoring the scale assumptions.

We can summarise the discussion with the conclusions of Marcus-Roberts and Roberts [61, p. 391]:

The appropriateness of a statistical test of a hypothesis is just a matter of whether the population and sampling procedure satisfy the appropriate statistical model, and is not influenced by the properties of the measurement scale used. However, if we want to draw conclusions about a population which say something basic about the population, rather than something which is an accident of the particular scale of measurement used, then we should only test meaningful hypotheses, and meaningfulness is determined by the properties of the measurement scale in connection with the distribution of the population.

and Hand [45, p. 471]

Restrictions on statistical operations arising from scale type are more important in model fitting and hypothesis testing contexts than in model generation or hypothesis generation contexts.

3 Measurement Issues in Information Retrieval

3.1 Why does Studying the Scale Properties of IR Evaluation Measures Matter?

Let us start our discussion by considering a not-exhaustive list of core IR areas where scales may matter.

The most common and basic operation we perform to understand whether a system is better than a system is to average their performance over a set of topics and compare these aggregate scores. According to the discussion so far this leads to meaningful statements only if IR evaluation measures are, at least, interval scales.

Topic difficulty [19] is another central theme in IR because of its importance for adapting the behaviour of a system to the topic at hand. Voorhees [98, 99], in the TREC Robust tracks, explored how to evaluate and compare systems designed to deal with difficult topics and proposed to use the geometric mean, instead of the arithmetic one, for Average Precision (AP) [15]. However, the use of a geometric mean further raises the requirements for the evaluation measures, even calling for a ratio scale.

Statistical significance testing has a long story of adoption and investigation in IR, from the early uses of t-test reported by Salton and Lesk [77], to the discussion on the compliance with the distribution assumptions of significance tests by van Rijsbergen [93], to advocating for a more wide-spread adoption of different types of significance tests by Hull [49], Savoy [79], Carterette [21], Sakai [72], to surveys on the current state of adoption of significance tests by Sakai [74]. Again, drawing meaningful inference depends on the appropriate use of parametric or nonparametric tests in accordance with the scale properties of the adopted IR evaluation measures.

Several authors have proposed the use of score transformation and standardisation techniques, such as z-score by Webber et al. [103] and other types of linear (and non-linear) transformations by Sakai [73], Urbano et al. [91], in order to compare performance across collections and to reduce the impact of few topics skewing the performance distribution. However, in order to ensure meaningful conclusions from these transformation, at least an interval scale would be required.

Despite so many aspects of IR evaluation which can be affected by the scale properties of evaluation measures and despite the deep scrutiny that the above techniques have received over the years, there has been much less attention to the implications of the scale assumptions on them.

Robertson [70] was the first to discuss the admissibility of the use of the geometric mean from the Stevens’s perspective in the context of the TREC Robust track. In particular, Robertson focused on the fact that Mean Average Precision (MAP) and Geometric Mean Average Precision (GMAP) may lead to different conclusions – e.g. blind feedback is beneficial according to MAP but detrimental according to GMAP – and which of them may hold more (intrinsic) validity. In this respect, Robertson [70, p. 80] observed that

If the interval assumption is not valid for the original measure nor for any specific transformation of it, then any monotonic transformation of the measure is just as good a measure as the untransformed version. If we believe that the interval assumption is good for the original measure, that would give the arithmetic mean some validity over and above the means of transformed versions. If, however, we believe that the interval assumption might be good for one of the transformed versions, we should perhaps favour the transformed version over the original. But if there is no particular reason to believe the interval assumption for any version, then all versions are equally valid. If they differ, it is because they measure different things.

Since both AP and the log-transformation of AP (implied by the geometric mean) are not interval scales, Robertson concluded that no preference could be granted to MAP or GMAP in terms of (intrinsic) validity of their findings. In this way Robertson takes a neutral stance with respect to the debate on whether certain operations should be permitted or not on the basis of the scale properties.

Note that Robertson somehow implicitly indicates transformations as a possible means to turn a not-interval scale into an interval one, as also supported by Gaito [41], Abelson and Tukey [1].

As a final remark, even if Robertson did not mention it explicitly, his reasoning seems to be loosely related the concept of meaningfulness when he says [p. 80]

Good robustness would be indicated if the conclusions looked the same whatever transformation we used; if we found it easy to find transformations which would substantially change the conclusions, then we might infer that our conclusions are sensitive to the interval assumption, and that the different transformations measure different things in ways that may be important to us

still keeping a neutral stance about what should or should not be done.

Fuhr [40] took a firm position and argued that Mean Reciprocal Rank (MRR) [84] should not be computed because: 1. in general, RR is just an ordinal scale and, according to Stevens means cannot be computed for a ordinal scales; 2. in particular, RR has some counter-intuitive behaviour. On the other hand, Sakai [75] has recently disagreed with Fuhr: 1. in general, on the fact that means should not be computed for an ordinal scale, using arguments similar to those discussed in Section 2.3; 2. in particular, on the use of RR which Sakai finds quite useful from a practical point of view.

Whatever stance you wish to take about whether (or not) operations should be constrained by scale properties, from the discussion so far, it clearly emerges that IR needs further and systematic investigation about the implications and impact of derogating from compliance with scale properties. Moreover, most of the above discussion is just about averaging values and does not tackles the implications for statistical significance testing. Finally, and more importantly, we completely lack a thorough discussion on and any adoption of the notion of meaningfulness in IR and this is quite striking for a discipline so strongly rooted in experimentation and so much based on inference.

3.2 A Formal Theory of Scale Properties for IR Evaluation Measures

Ferrante et al. [31, 32, 33] leveraged the representational theory of measurement for developing a formal theory of IR evaluation measures which allows us to determine the scale properties of an evaluation measure. In particular, they defined an ERS for system runs and used two basic operations – swap, i.e. swapping a relevant with a not-relevant document in a ranking, and replacement, i.e. substituting a relevant document with a not-relevant one – to study how runs are ordered. In this way, they demonstrated that there exists a partial order of runs where, when runs are comparable, all the measures agree on the same way of ordering them; however, when runs are not comparable, measures may disagree on how to order them. By using properties of the partial orders and theorems from the representational theory of measurement, they were able to define an interval scale measure and to check whether there is any linear transformation between such measure and IR evaluation measures, in order to determine if the latter are interval scales too.

In short, Ferrante et al. found that, for a single topic:

-

•

set-based evaluation measures:

-

–

binary relevance: precision, recall, F-measure are interval scales;

-

–

multi-graded relevance: Generalized Precision (gP) and Generalized Recall (gR) are interval scales only if the relevance degrees are on a ratio scale;

-

–

-

•

rank-based evaluation measures:

-

–

binary relevance: Rank-Biased Precision (RBP) [65] is an interval scale only for ; Average Precision (AP) is not an interval scale;

-

–

multi-graded relevance: Graded Rank-Biased Precision (gRBP) is an interval scale only for , where is the normalized smallest gap between the gain of two consecutive relevance degrees, and the relevance degrees themselves are on a ratio scale; Discounted Cumulative Gain (DCG) [50] and Expected Reciprocal Rank (ERR) [23] are not interval scales.

-

–

Ferrante et al. [33] also studied what they called the induced total order, i.e. pretending that runs in the ERS are ordered by the actual values of a measure. Also in this case which is the most “favourable” to each measure, Ferrante et al. have shown that AP, RBP with (and its multi-graded version), DCG, and ERR are not interval scales, because their values are not equi-spaced.

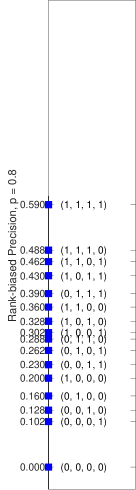

Figure 1 shows the Hasse diagram [26] which represents the partial order among all the runs of length . In the figure, vertices are runs while edges represent the direct predecessor relation that is, if , i.e. and are comparable, then is below in the diagram. Note that if and lie on the same horizontal level of the diagram, then they are incomparable; furthermore, elements on different levels may be incomparable as well. In the example , , and are all comparable; therefore, all IR measures agree on these runs and order them in the same way. On the other hand, and are not comparable, as well as and , and IR measures disagree on how to order them; as a consequence, measures will order these runs differently, producing different Rankings of Systems (RoS).

The difference in the RoS produced by evaluation measures is what is studied when performing a correlation analysis among measures, e.g. by using Kendall’s [52]; practical wisdom says that measures should be neither too much correlated – otherwise it practically makes no difference using one or the other – nor too few correlated – otherwise it may be an indicator of some “pathological” behaviour of a measure. Indeed, each evaluation measure embodies a different user model [20], i.e. a different way in which the user interacts with the ranked result list and derives gain from the retrieved documents, and the differences between the RoS produced by different evaluation measures, and as a consequence their Kendall’s , may be considered as the tangible manifestation of such different user models. Note that the work by Ferrante et al. provides a formal explanation of what originates differences in Kendall’s : for all the runs which are comparable in the Hasse diagram, Kendall’s between different measures is , since all of them order these runs in the same way; for runs which are not comparable in the Hasse diagram, Kendall’s between different measures is less than , since all of them order these runs differently; therefore, these not comparable runs are where user models differentiate themselves and can take a different stance.

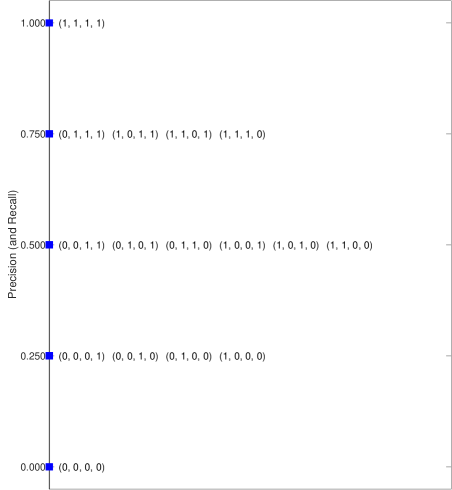

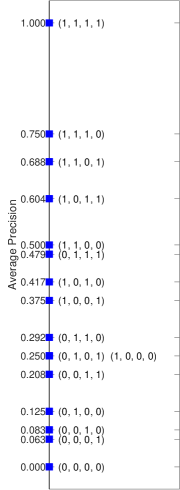

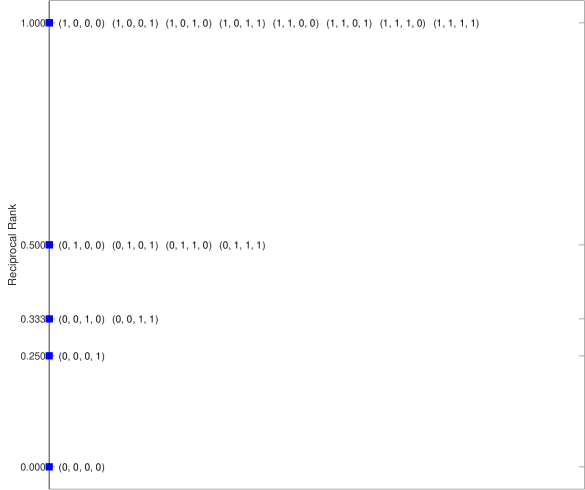

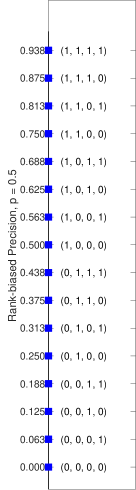

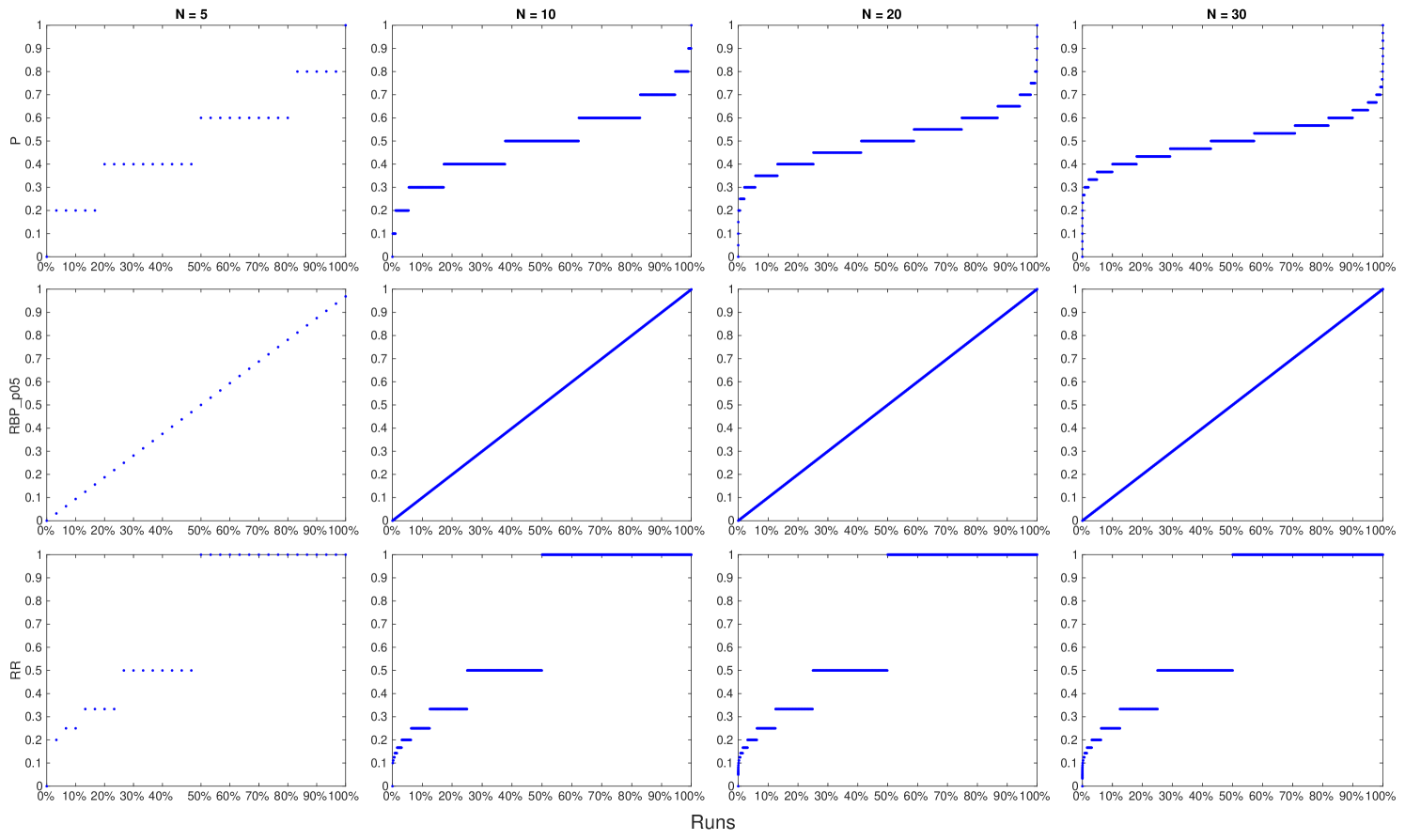

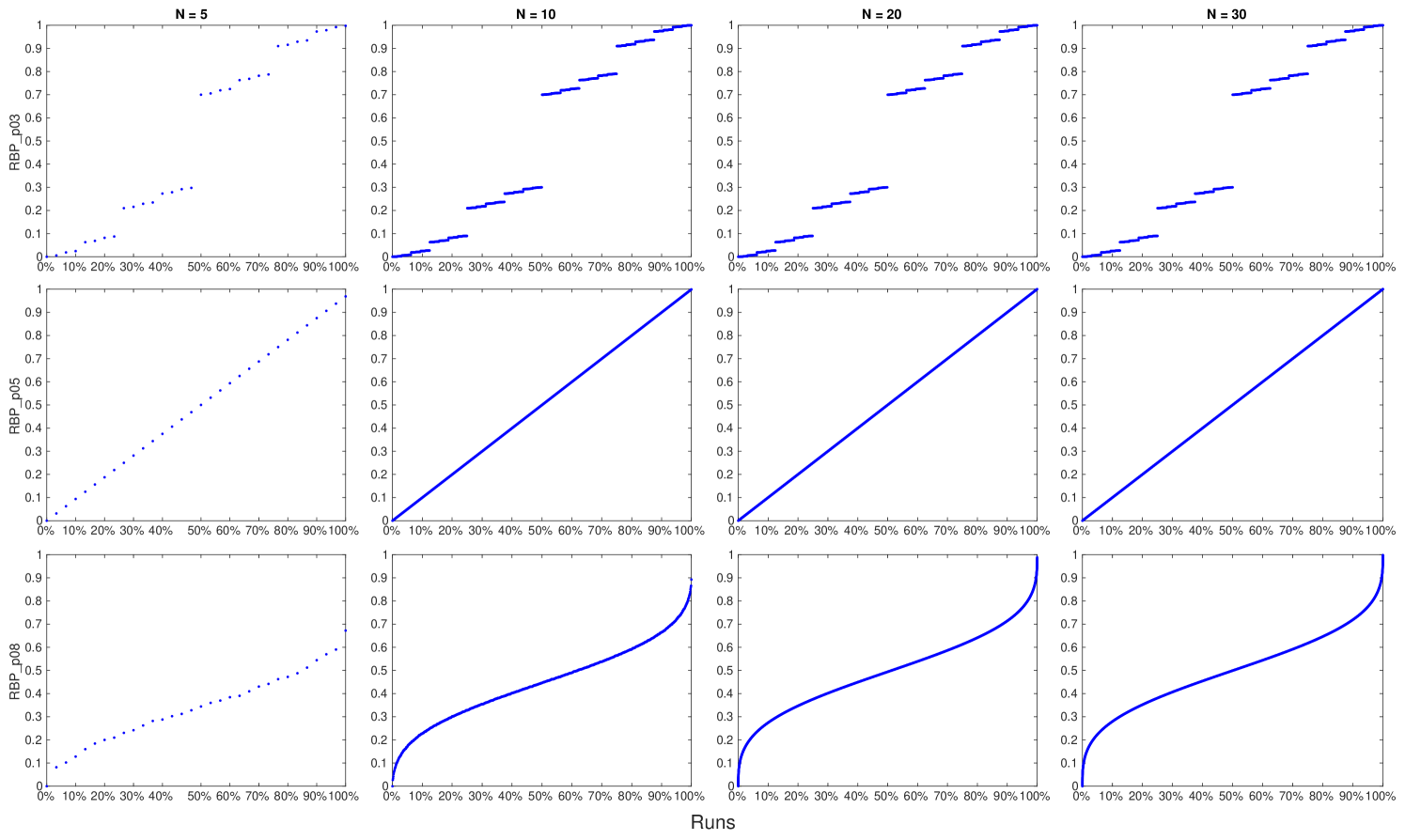

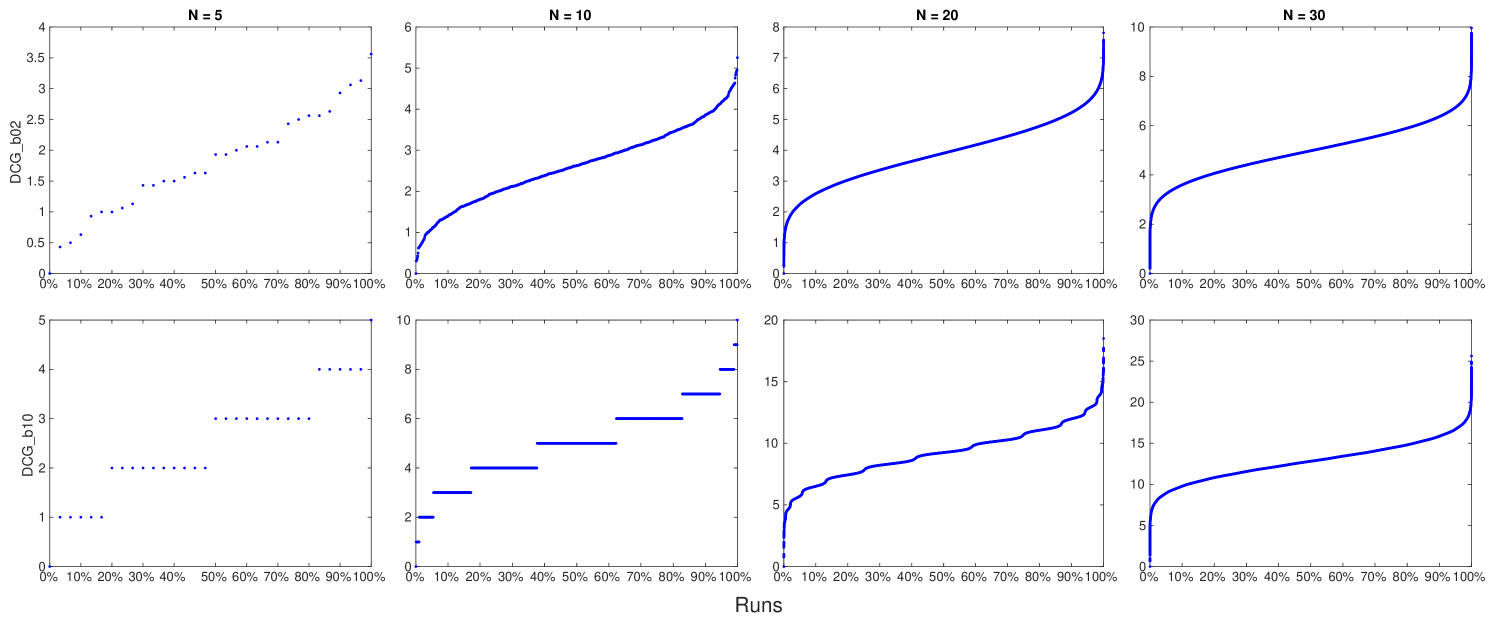

However, these differences in the RoS are not causing IR evaluation measures to not be interval scales; they would just mean that IR evaluation measures are different scales. The real problem with IR evaluation measures is that their scores are not equi-spaced and thus they cannot be interval scales, as explained in Section 2.2. This issue is depicted in Figure 2 which shows how different measures – namely, Precision (and Recall666Note that in this specific case, since the length of the run and the recall base are the same, Precision and Recall yield to the same scores.), AP, RR, RBP with , and DCG with log base – order and space the runs shown in the Hasse diagram of Figure 1.

We can observe that only Precision (Recall) and RBP with produce equi-spaced values, while all the other measures violate this assumption, required to obtain an interval scale; in other terms, Figure 2 visually represents the issue found by Ferrante et al. [33] even when using the induced total order. We can also note that all the measures agree only on the common comparable runs – i.e. and – but, as soon as incomparable runs come into play, they start to disagree on how to order them. Finally, looking at Figure 2 we can notice how IR measures behave differently in violating the equi-spacing assumption. RBP with and DCG follows a somehow regular pattern, where scores are not equi-spaced but they are in some way evenly clustered and they are symmetric if you fold the figure along its middle horizontal axis; on the other hand, AP and RR follow a much more irregular and not symmetric pattern.

We can also note how these measures spread values in their range differently. Precision (and Recall) and DCG spread their values all over the possible range while this is not always the case with RBP. Indeed, RBP assumes runs of infinite length and normalizes by the factor. However, we deal with runs of limited length and the factor is an overestimation, the bigger the overestimation the bigger is the value of and the smaller is the length of the run – this is more clearly visible in the case of RBP with in Figure 2(f). Finally, AP, RBP with , and RR, i.e. those measures farther from being interval scales, leave large portions of their possible range completely unused. In particular, AP leaves one quarter of its range unused, in the top part roughly corresponding to the first quartile of the possible values; RR leaves one half of its range unused, in the top part roughly corresponding to the first and second quartiles of the possible values; and, finally, RBP with leaves half of its range empty, in the middle part roughly corresponding to the second and third quartile of the possible values.

Why does it matter how much equi-spaced the values are and how they are spread over their range? Consider a random variable that takes values in the set . Even if all these five values can be obtained with equal probability, i.e. the random variable is uniform, the mean and the median of the variable differ, being the mean equal to and the median to . This shows how the lack of equi-spacing causes some sort of “imbalance” even in the case of a uniform variable, which may be an undesirable situation from the measurement point of view, at least if not explicitly considered and accounted for. Furthermore, when we compute , i.e. the probability that the value of is equal to with an error of at most , this function is not constant all over the range but it is assumer greater for values around than for those around . As a consequence, a similar accuracy in approximating the value of produces a different precision in the measurement depending on the value that we are considering. Note that in the present toy model it happens for , but a suitable modification of the present model can produce the same behaviour for any set in advance.

As a further example, let us consider a measure with a limited range of equi-spaced values. If we draw a set of random values taken from this range and consider its arithmetic mean, by the law of large numbers, we have that this mean converges to the middle point of the range interval. This property is independent from the distance among the subsequent values, i.e. the unit of measurement chosen. So we can use such a procedure – the convergence of the mean towards the middle of the range – in order to “calibrate” the measuring instrument, independently from the specific unit of measurement chosen. This is no more possible if we have values which are not equi-spaced.

Example 9 (Effect of RR not being equi-spaced).

Let us assume that we have two queries and two systems. System A returns the first relevant document at ranks 1 and 4, respectively, while system B finds the relevant answers in both cases at rank 2. Computing the MRR of the two systems, i.e. the average value of the RR, we get MRR(A)=, while MRR(B)=, telling us that system A is better than B. However, if instead of reciprocal rank, we regard the ranks themselves, we have equi-spaced values forming an interval scale (actually, even a ratio scale). In our example, system A finds the first relevant item on average at rank 2.5, which is worse than the average rank 2 of system B – so we would get the opposite finding when we use a scale still based on the rank of relevant documents but properly equi-spaced.

| System | AP | System | AP | ||||

|---|---|---|---|---|---|---|---|

| A | 0.5625 | C | 0.1665 | ||||

| B | 0.5575 | D | 0.1770 |

Example 10 (Effect of AP not being equi-spaced).

Table 1 shows an example of two system pairs (A,B) and (C,D) and two queries, for which we compute AP values. In the first case, AP will say that A performs better than B, while in the second case, C is worse than D. Why is this effect related to AP not being on an interval scale? Because in both the examples, the runs retrieved by the two systems for a given topic have the same relevance degrees in the first two positions and just a swap of a relevant with a not-relevant document in the last two positions. So, to the same loss of relevance for the swap in the last two positions, still keeping the same relevance in the first two positions, AP “reacts” in one case telling us that system A is better than system B, and in the second case that D is better than C and this is also due to the not-equispaced values of AP, e. g. runs ranked 13 and 14 are much closer than runs ranked 10 (on the left branch of Figure 1) and 9, as shown in Figure 2(b). Note that here we are neither questioning the top-heaviness of a measure nor its capability of reflecting user preferences but rather we point out how the lack of equi-spaced values affects the assessment supported by a measure.

The fact that IR evaluation measures, apart from Precision, Recall, and RBP with , are not interval scales leads to the general issues with computing means, statistical tests, and meaningfulness discussed in Sections from 2.2 to 7.3 and shown in Examples 3 and 7. In addition, Examples 9 and 10 above show how the lack of equi-spacing may also lead to statements like “system A is better than B” (or viceversa) which are not always intuitive all over the scale.

3.3 Averaging across Topics and Correlation Analysis Revisited

The fact that Precision and Recall are interval scales makes addition and subtraction permissible operations and, as a consequence, computing arithmetic means permissible too. Therefore, it is safe to average performance of IR systems across topics when we use Precision and Recall. But is that really true?

As said, Ferrante et al. [33] have found an interval scale , called Set-Based Total Order (SBTO), and have shown that both Precision and Recall are an affine transformation of this interval scale and thus also an affine transformation of each other. Ferrante et al. [34] have raised this question: if Precision and Recall are transformations of the same interval scale, they are ordinal scales too and they should rank systems in the same way. Therefore, if they produce the same RoS, Kendall’s correlation between them should be 1. So, why their Kendall’s correlation is , using the TREC 8 Ad-hoc data?

Let us consider how correlation analysis between evaluation measures works. Given two rankings and , their Kendall’s correlation is given by , where is the total number of concordant pairs (pairs that are ranked in the same order in both vectors), the total number of discordant pairs (pairs that are ranked in opposite order in the two vectors), and are the number of ties, respectively, in the first and in the second ranking. where indicates two perfectly concordant rankings, i.e. in the same order, indicates two fully discordant rankings, i.e. in opposite order, and means that 50% of the pairs are concordant and 50% discordant.

The typical way of performing correlation analysis is as follows: let and be two evaluation measures; in our case, is Precision and is Recall. Let and be two matrices where each cell contains the performance on topic of system according to measures and , respectively. Therefore, and represent the performance of different systems (columns) over topics (rows). Let and be the column-wise averages of the two matrices, i.e. the average of the performance of each system across the topics. If you sort systems by their score in and , you obtain two RoS corresponding to and , respectively, and you can compute Kendall’s correlation between these two RoS. This is the traditional way for computing the correlation between two evaluation measures and Ferrante et al. call it overall correlation, since it first computes the average performance across the topics and then it computes the correlation between evaluation measures. This approach leads to a Kendall’s correlation of between Precision and Recall.

Ferrante et al. proposed a different way of computing the correlation, called topic-by-topic correlation, where, for each topic , they consider the RoS on that topic corresponding to and the one corresponding to , i.e. they consider the -th rows of and , respectively; they then compute Kendall’s correlation among the two RoS on that topic. Therefore, they end-up with a set of correlation values, one for each topic. Using, this way of computing correlation, Ferrante et al. found that Kendall’s correlation between Precision and Recall is always for all the topics and this was the result expected for two interval scales which order systems in the same way.

Therefore, if you consider each topic alone, Precision and Recall are just a transformation of the same interval scale, as Celsius and Fahrenheit are, and their Kendall’s correlation is . However, if you first average across topics, which should be a permitted operation for interval scales, and then you compute Kendall’s correlation, it stops to be . This was somehow surprising and unexpected. Indeed, as an example from another domain, if you take a matrix of scores in Celsius degrees and another one with the corresponding Fahrenheit degrees, their Kendall’s correlation is always , either if you compute it row-by-row (i.e. our topic-by-topic correlation) or if you first average across rows and then compute it (i.e. our overall correlation).

Ferrante et al. [34, p. 305] explained this behaviour as due to the recall base:

Recall heavily depends on the recall base which changes for each topic and it is used to normalize the score for each topic; therefore, in a sense, recall on each topic changes the way it orders systems

We further investigate this issue in Section 3.4 below, where we provide details and demonstrations, but here we summarise the sense of our findings. The difference between overall and topic-by-topic correlation is basically due to the fact that we are using different interval scales for each topic. These scales are indeed transformations of one in the other for each topic and this is why topic-by-topic correlation is ; however, since we are changing scale from one topic to another, when average across topics we are mixing different scales and this is why the overall correlation is different from .

Example 11 (Recall corresponds to different scales on different topics).

Let us consider Recall and let us assume that we have three queries , with one, two and three relevant documents, respectively. Then, the possible values of Recall are as follows: for we have and ; for we have , and ; and for we have , , , and . Obviously, we have three different interval scales here – although they are in the same range , their possible values are different. So we have to map the values onto a single scale, before we can do any statistics. There are two possibilities for doing this:

-

1.

We take the union of the possible values. This would yield the set . However, these values are no longer equidistant, so it is not an interval scale.

-

2.

We extend the union scale from above by additional values such that we have equidistant values, based on the least common denominator. Then we would have the set in our example. However, in this scale, the values and are not possible for our three example topics, and impossible values are not considered in the definition of the equidistance property of interval scales. Only if we had a fourth query with six relevant documents, this scale would be ok. In most cases, however, no such scale exists, and so the aggregated scale is not an interval one.

The fact that we may be changing scale from topic to topic has very severe consequences. All the debate originated by Stevens’s permissible operations and the possibility of averaging only from interval scales onwards has always been based on the obvious assumption that the averaged values were all drawn from the same scale; no one has ever doubted that it is not possible to average values coming from different scales because this would be like mixing apples with oranges. So, what is the meaningfulness of typical statements like “System A is (on average) better than system B” when we are not only violating the interval scale assumptions but, even more seriously, we are mixing different scales? What about the meaningfulness of typical statements like “System A is significantly better than system B”? The debate between using parametric or nonparametric tests concerns how much you wish to comply with the interval scale assumptions but, undoubtedly, all the significance tests, when aggregating across values, expect them to be drawn from the same scale.

If we wish to make an analogy, it is like the difference between using mass and weight, being Precision similar to mass and Recall to weight. It would be somehow safe to average the mass of bodies coming from different planets but it would not to average their weight, due to the different gravity on the different planets. The recall base is what changes the gravity from planet/topic to planet/topic in the case of Recall.

However, even Precision is not completely “safe” because, when the length of the run changes, its scale changes as well. As a consequence we may end up using different scales from one run to another and this can happen not only across topics, as in the case of Recall, but also within topics, if we have two or more runs retrieving a different number of documents for that topic. This statements affects the evaluation of classical Boolean retrieval, where Precision and Recall are computed for the set of retrieved documents for each query, followed by averaging over all queries. So we have to conclude that this procedure is seriously flawed. Luckily, in most of today’s evaluations, the length of the run has a much smaller effect because, in typical TREC settings, almost all the runs retrieve 1,000 documents for each topic and just few of them retrieve less documents; this effect would also (practically) disappear when you consider Precision at lower cut-offs, like P@10, when it is almost guaranteed that all the runs retrieve 10 documents.

Summing up, independently from an evaluation measures being an interval scale or not, the recall base (greatly) and the length of the run (less) cause the scale to change from topic to topic and/or from run to run. This makes averaging across topics, as well as other forms of aggregation used in significance tests, problematic at best. We show how and why this happens in the case of Precision (Section 3.4.1) and Recall (Section 3.4.2), which are the simplest measures you can think of, since they change either the length of the run or the recall base alone. We also consider the more complex case of the F-measure (Section 3.4.3), which changes both the run length and the recall base at the same time. Therefore, we hypothesise that these issues may be even more severe in the case of more complex evaluation measures, like AP and others, which are not even interval scales and mix recall base and run length with rank position and various forms of utility accumulation and stopping behaviours.

Finally, also the way in which we interpret the results of correlation analysis may be impacted. Indeed, we typically attribute differences in correlation values to the different user models embedded by evaluation measures. The rule-of-thumb by Voorhees [96, 97] is that an overall correlation above means that two evaluation measures are practically equivalent, an overall correlation between and means that two measures are similar, while dropping below indicates that measures are departing more and more. Therefore a correlation of would suggest that Precision and Recall share some commonalities but they differ enough due to their user models, still not being pathologically different. However, we (now) know that they are just the transformation of the same interval scale and that this correlation value is just an artifact of mixing different scales across topics rather than an intrinsic difference in the user models of Precision and Recall.

3.4 Why May Scales Change from Topic to Topic or from Run Length to Run Length?

As discussed above, Ferrante et al. [33] have demonstrated that Precision, Recall, and F-measure are interval scales when you fix the length of the run and the recall base , i.e. they are an homomorphism with respect to the same ordering of runs in the ERS. However, if we mix together runs with different bounded lengths and/or different bounded recall bases, Precision, Recall and F-measure are no more interval scales, they are no more an affine transformation of each other and they even order the runs in different ways. Clearly, this is a severe issue when you need to average (or compute any other aggregate) across different topics or runs with different lengths.

Let us consider the universe set which contains all the runs of any possible length , less than or equal to , and with respect to all the possible recall bases , less than or equal to . To avoid trivial cases, we consider always and greater than or equal to . A run in is represented by a triple , where indicates the number of relevant documents retrieved by the run, is the length of the run, and is the recall base, i.e. the total number of relevant documents for a topic. Note that, for each run in it holds and by construction, but we also have , where , i.e. there is a (implicit) dependence on the recall base when it comes to the number of relevant retrieved documents.

We define as the set which contains all the runs with the same length and with respect to the same recall base . Therefore, we can express the universe set as the union of such sets, namely

models the typical case of runs all with the same length for a given topic (or for a set of topics which have the same recall base). This is exactly the case for which Ferrante et al. [33] have demonstrated that Precision, Recall and F-Measure are interval scales and an affine transformation of each other. However, this holds for each separately while the issue we discuss in this section is what happens when you mix different , i.e. when you go towards .

3.4.1 Precision

Precision is equal to the fraction of the retrieved documents that are relevant. Therefore, for a run represented by triple , Precision is given by

Let us start from : maps this set into the set and it has been proven by Ferrante et al. that is an interval scale in this case. However, already in this simpler case, there is a (implicit) dependency on the recall base, when it comes to the possible values of Precision. Therefore, even when we consider runs with the same length but for topics with different recall bases, i.e. , , , … we are dealing with different scales, all embedded in the single interval scale whose image is .

To understand the problems arising mixing different lengths and recall bases, let us consider the general scenario of Precision defined on . This is the case where we consider the Precision measure defined on the set of the runs of any possible bounded length and recall base and we find that it is an interval scale only in the almost trivial cases of . Indeed, maps , for any , into the set and it is an interval scale since these values are equispaced. When , maps , for any , into the set ; Since the values are equispaced, Prec is still an interval scale. To compare the order induced on these sets by (and the other measures), let us consider in more detail . This set is

and , while .

Continuing with a similar construction for , we obtain that assumes the four possible values , when , and the five possible values when . Indeed, for runs of length at most , these are all the possible values of the fraction for and . Since these values are not equispaced, it is sufficient to state that is not an interval scale on .

To prove that is not interval on for any finite , let us prove again that the values on the image are not equispaced. The three smallest values of are , and . Indeed, the only other possible candidate to be the third smallest value, when , would be , but when . These three values are not equispaced since , when , and therefore on cannot be an interval scale when .

3.4.2 Recall

The Recall measure depends explicitly on the recall base , i.e. the total number of relevant documents available for a given topic

Note that for any admissible run , i.e. for which , its recall value (implicitly) depends on , creating a specular situation with respect to the one of Precision.

is an interval scale on , since it is an affine transformation of , as demonstrated by Ferrante et al.. However, due to the (implicit) dependency on , even when we consider topics with the same recall base but runs with different length, i.e. , , , … we are dealing with different scales, as discussed below.

is an interval scale on the sets for any maximum length , since the image is the equispaced set . Applied to the sets , for any , takes the values and, therefore, it is an interval scale as well. However, Precision and Recall induce, for example on , two different orderings of the runs and so they stop to be an affine transformation of each other, i.e. they become two different interval scales. Indeed, consider the runs and : we have seen that , while it holds that .

When we define on , for , we have that this measure is no more interval thanks to an argument similar to that used for Precision. Indeed, the two smallest non zero values of Recall on , are as and , here obtained for a run with a unique relevant document with respect to a topic with and , respectively.

Furthermore, it is immediate to see that Recall and Precision induce for any and two different orderings on , i.e. they become two different scales. Indeed, for any two runs and , we have that if and only if , while if and only if . Both these condition are satisfied when

For example, if we take , and , the previous condition is satisfied and the two runs are ordered in a different way by the two measures.

3.4.3 Measure

The measure is the harmonic mean of precision and recall

Some small algebra gives us that is also equal to

As before (see Ferrante et al.), we have that on , is an interval scale, being an affine transformation of and .

On the contrary, if we consider defined on , it is no more an interval scale, except for the almost trivial case , whose image is the equispaced set . Let us first consider and : in both these cases the values in the image of are no more equispaced, since takes the values . If we consider on , it takes the vales and is still not an interval scale. Moreover, we have that induces yet another ordering on , since , while it holds that and .

When we define on , for or , we have that this measure is no more an interval scale, as can be easily seen since the three smallest values of the image are and , which are not equispaced. At the same time, using an example similar to the one used for , we obtain that the ordering induced on by in these latter cases differs from both the orderings induced by and .

3.4.4 Summary and Discussion

We have demonstrated that, when we consider runs with a fixed length and with respect to a fixed recall base , i.e. we consider and runs of the same length for the same topic (or, more generally, topics with the same recall base), Precision, Recall, and F-measure are interval scales and they are an affine transformation of each other. As a consequence, they order runs in the same way and their Kendall’s is .

However, when we start mixing runs with different length and/or with respect to different recall bases, the situation quickly gets more complicated. Only in the trivial (and not very useful in practice) case and , i.e. where we have runs of length or and topics with or relevant documents, Precision and Recall are still both interval scales but they stop to be an affine transformation of each other. As a consequence, they order runs in different ways and their Kendall’s is less than . F-measure already stops to be an interval scale and orders runs in yet another way than Precision and Recall, leading to a Kendall’s less than . For and all of them (Precision, Recall, and F-measure) stop to be interval scales, departing from the interval assumption more and more, and they order runs in three completely different ways, again leading to a Kendall’s less than . In the special case where we fix the length, Precision is still an interval scale, while if we fix a single recall base, Recall is still an interval scale, but in both cases the other measure is no more interval and also order the runs in a different way.

We may be tempted to consider as positive the fact that sooner than later Precision, Recall, and F-measure start ordering runs in a different way and that their Kendall’s is less than . Indeed, this is what we expect from evaluation measures, to embed different user models and to reflect different user preferences in ordering runs. This is also one of the main motivations why there is debate and we would accept to derogate from requiring them to be interval scales: reflecting user preferences could be more important than complying with rigid assumptions.

However, we should carefully consider how this is happening. They initially are the “same” scale (except for an affine transformation), when we use them to measure objects with some shared characteristics, i.e. same run length , and with respect to a similar context, i.e. same recall base . However, as soon as we measure objects with more mixed characteristics and contexts, they cease to be the “same” interval scale and only at that point they begin to order runs differently. This is more or less like saying that kilograms and pounds are the “same” interval scale only when we weigh people with the same height and from the same city but, as soon as we weigh people with different heights and/or coming from flatland or mountains, they become two different scales and they also possibly stop to be interval scales. This would sound odd and quite different from saying that weight and temperature are different (interval) scales because they measure different attributes/properties of an object or, in our terms, they would reflect different user preferences.

Why does this happen? Because run length and recall base change. This is very clear and somehow more extreme in F-measure, where both and explicitly appear in the equation of the measure.

We hypothesize that this could be even more severe and extreme in the case of rank-based measures since not only they combine, implicitly or explicitly, the two factors and but they also mix them with the rank of a document and various discounting and accumulation mechanisms. Figure 2 gives a taste of this much more complex situation: it shows the simple (and somehow safe) case of and it already emerges how different are the behaviours and patterns in violating or complying with the interval scale assumption.

Why does this matter? As already said, because we need to aggregate scores across topics and runs and to compute significance tests. We do not only have the problem of how much evaluation measures violate the interval scale assumptions, required to compute aggregates, but also the issue of not mixing apples and oranges, i.e. scores from different scales, required to make aggregates sensible. In this respect, run length is a less severe issue which can be easily mitigated in practice, either by forcing a given length or because we are interested in lower cut-offs, e.g. 5, 10, 20, 30. The effect of the recall base can be mitigated by adopting measures that do not explicitly depend on it, even if the implicit dependency due to the capping of the image values will remain.

4 Related Works

van Rijsbergen [92] was the first to tackle the issue of the foundations of measurement for IR by exploiting the representational theory of measurement. He observed that [92, pp. 365–366]

The problems of measurement in information retrieval differ from those encountered in the physical sciences in one important respect. In the physical sciences there is usually an empirical ordering of the quantities we wish to measure. For example, we can establish empirically by means of a scale in which masses are equal, and which are greater or lesser than others. Such a situation docs not hold in information retrieval. In the case of the measurement of effectiveness by precision and recall, there is no absolute sense in which one can say that one particular pair of precision/recall values is better or worse than some other pair, or, for that matter that they are comparable at all

Later on, van Rijsbergen [94, p. 33] further stressed this issue: “There is no empirical ordering of retrieval effectiveness and therefore any measure of retrieval effectiveness will be by necessity artificial”.

van Rijsbergen addressed this issue by exploiting the additive conjoint measurement [53, 58]. Additive conjoint measurement was a new part of the measurement theory developed as a reaction to the views of Campbell [17, 18] and the conclusions of Ferguson Committee of British Association for the Advancement of Science [30], where Campbell was an influential member, which considered the additive property, i.e. the concatenation operation mentioned in Section 2.1, as fundamental to science and proper measurement; as a consequence, measurement of psychological attributes, which is lacking such additive property, was not possible in a proper scientific way. As explained by Michel [63, p. 67]

Conjoint measurement involves a situation in which two variables ( and ) are noninteractively [e.g. non additively] related to a third (). It is not required that any of the variables be already quantified, although it is necessary that the values of be orderable, and that values of and be independently identifiable (at least at a classificatory level). Then, via the order on , ordinal and additive relations on , , and may be derived

Typical examples from physics are the momentum of an object, which is affected by its mass and velocity, or the density, which is affected by its mass and volume [53].

van Rijsbergen considered retrieval effectiveness as the “orderable ” mentioned above and took precision and recall as the two variables and . In particular, he demostrated that on the relational structure it was possible to define an additive conjoint measurement and to derive actual measures of retrieval effectiveness from it. Note that, in this way, he avoided the need to explicitly define what an ordering by retrieval effectiveness is and he considered that precision and recall contribute independently to retrieval effectiveness. The problem of how to order runs in the ERS has been addressed some years later by Ferrante et al. [31, 32, 33]. More subtly, van Rijsbergen treats precision and recall as two attributes which can be jointly exploited to order retrieval effectiveness but, each of them, is already a measure and quantification of retrieval effectiveness and, thus, this brings some circularity in the reasoning. Finally, van Rijsbergen did not address the problem of which are the scale properties of precision and recall (or other evaluation measures), which has been later addressed by Ferrante et al..