Towards More Generalizable One-shot Visual Imitation Learning

Abstract

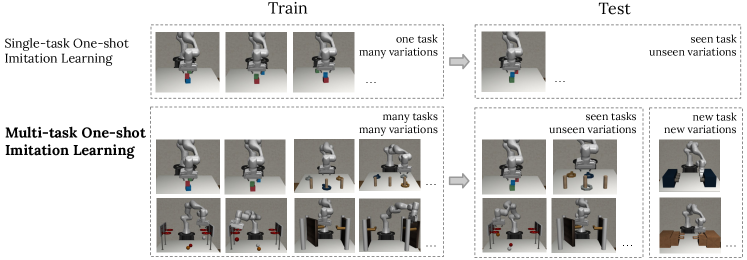

A general-purpose robot should be able to master a wide range of tasks and quickly learn a novel one by leveraging past experiences. One-shot imitation learning (OSIL) approaches this goal by training an agent with (pairs of) expert demonstrations, such that at test time, it can directly execute a new task from just one demonstration. However, so far this framework has been limited to training on many variations of one task, and testing on other unseen but similar variations of the same task. In this work, we push for a higher level of generalization ability by investigating a more ambitious multi-task setup. We introduce a diverse suite of vision-based robot manipulation tasks, consisting of 7 tasks, a total of 61 variations, and a continuum of instances within each variation. For consistency and comparison purposes, we first train and evaluate single-task agents (as done in prior few-shot imitation work). We then study the multi-task setting, where multi-task training is followed by (i) one-shot imitation on variations within the training tasks, (ii) one-shot imitation on new tasks, and (iii) fine-tuning on new tasks. Prior state-of-the-art, while performing well within some single tasks, struggles in these harder multi-task settings. To address these limitations, we propose MOSAIC (Multi-task One-Shot Imitation with self-Attention and Contrastive learning), which integrates a self-attention model architecture and a temporal contrastive module to enable better task disambiguation and more robust representation learning. Our experiments show that MOSAIC outperforms prior state of the art in learning efficiency, final performance, and learns a multi-task policy with promising generalization ability via fine-tuning on novel tasks.

I INTRODUCTION

Humans can learn to complete many tasks and quickly adapt to a new situation based on past experiences. We believe robots should also be able to learn a variety of tasks and acquire generalizable knowledge, which can then be transferred to quickly and efficiently learn a novel task.

One-shot imitation learning (OSIL) is a popular training framework for this purpose: an agent is trained to perform multiple tasks, each is described by an expert demonstration to provide context. First proposed in [9], the framework has been extended to different tasks and visual inputs [7, 11, 20, 45]. However, these prior works tend to assume a very strong similarity between train and test. For example, a typical setting is where at training time the agent learns to build a block configuration that matches the block configuration in a preceding demo, and then at test time the agent is again requested to stack blocks, with variation just stemming from which block is in which position of the stack and the starting locations of blocks on the tabletop. Another typical setting is moving objects from a table top to a set of bins, where at test time the task will again be moving the same objects from tabletop to bins, with variation just stemming from which object goes to which bin and starting locations of the objects.

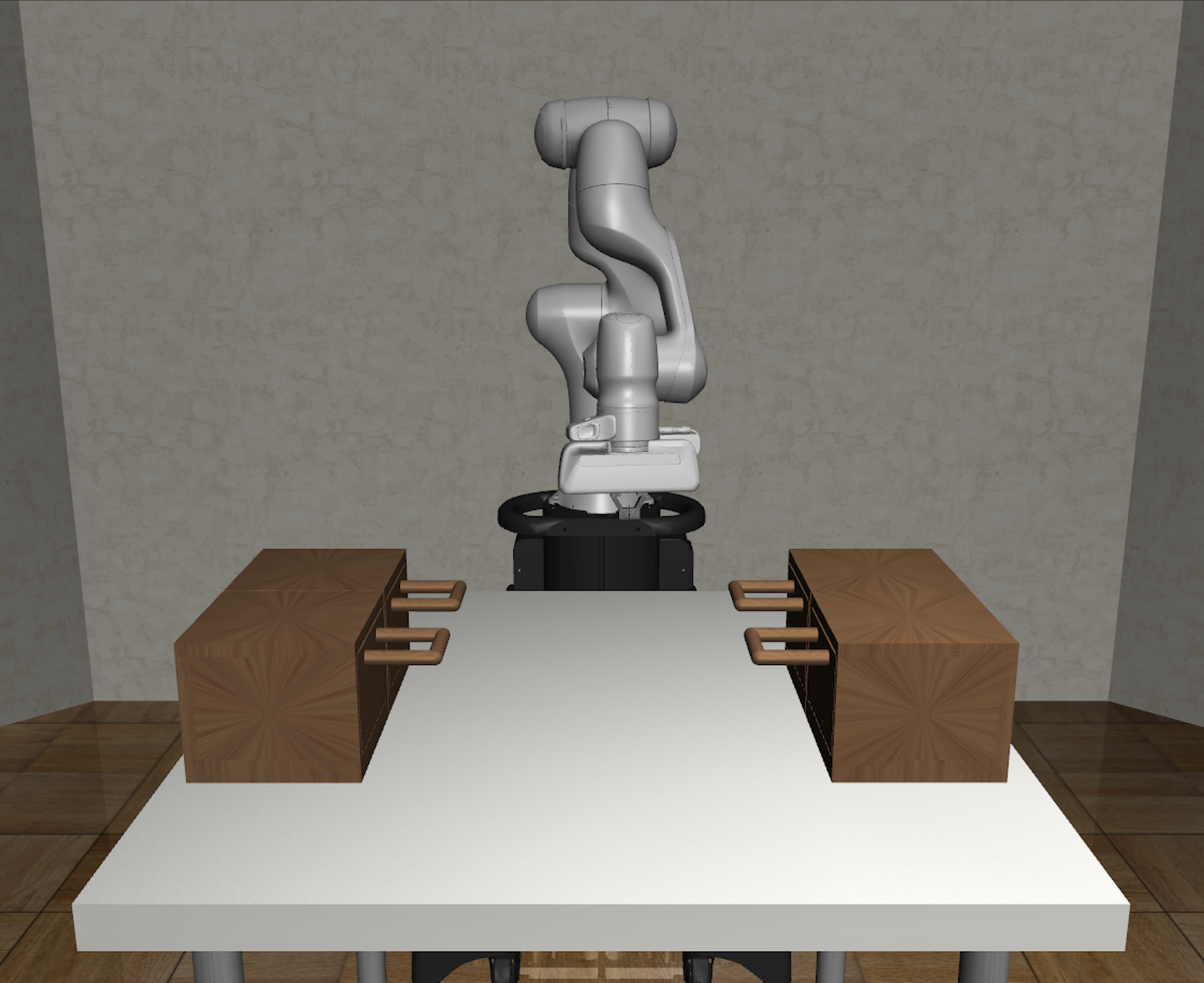

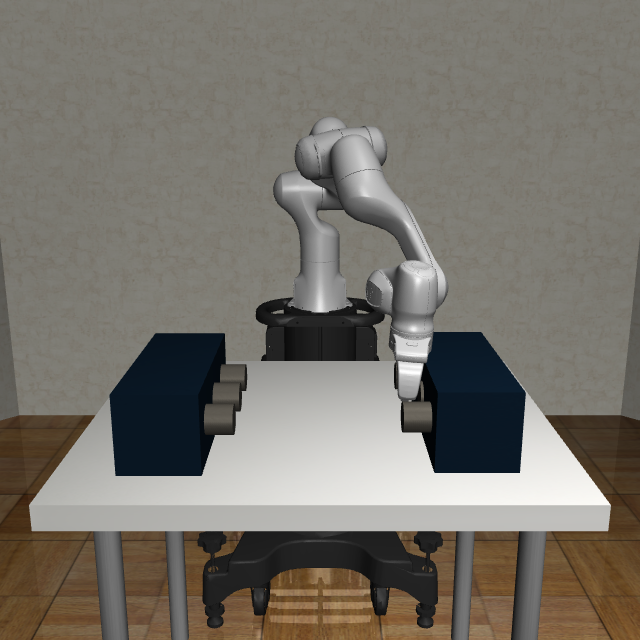

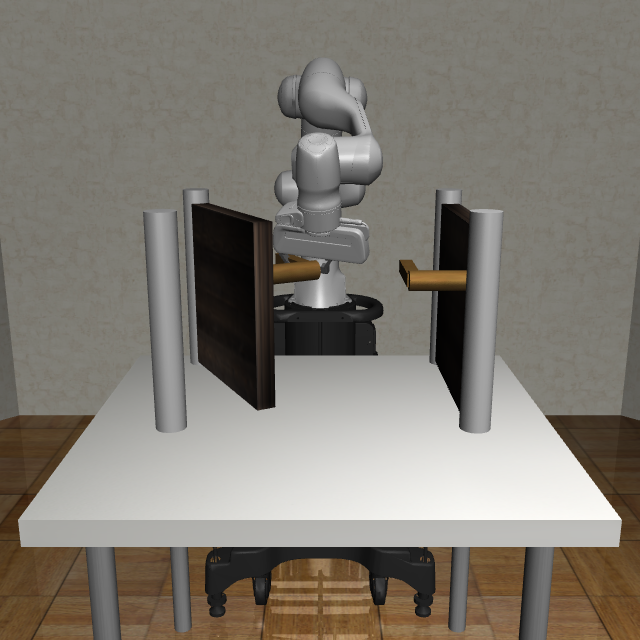

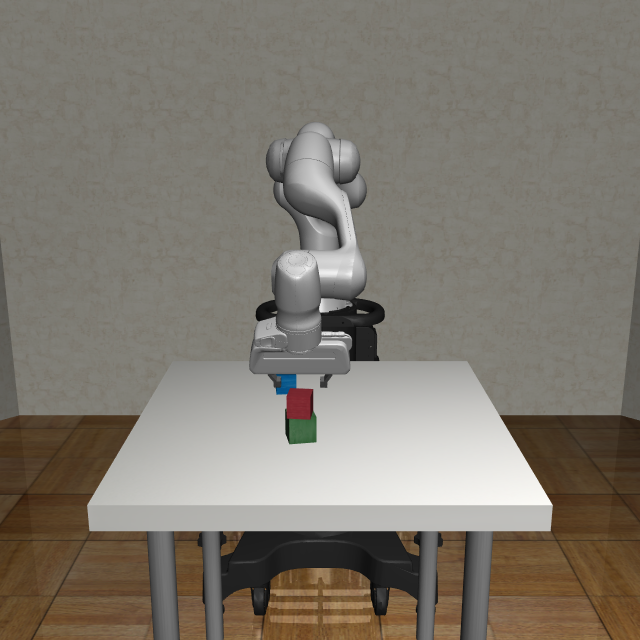

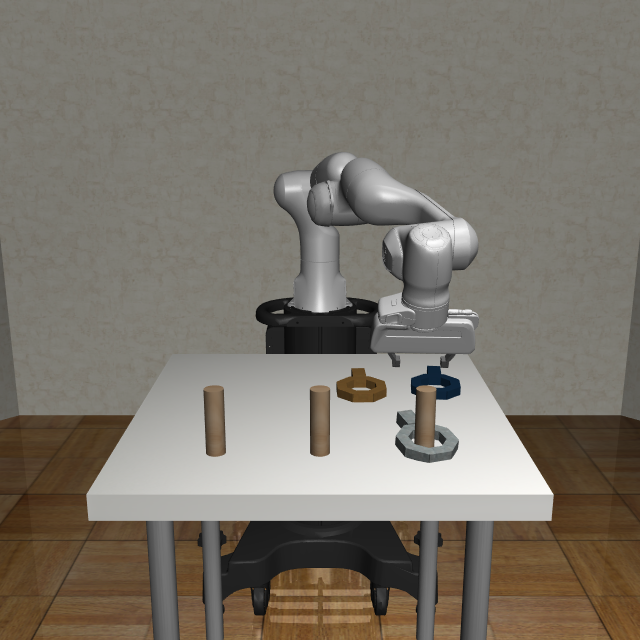

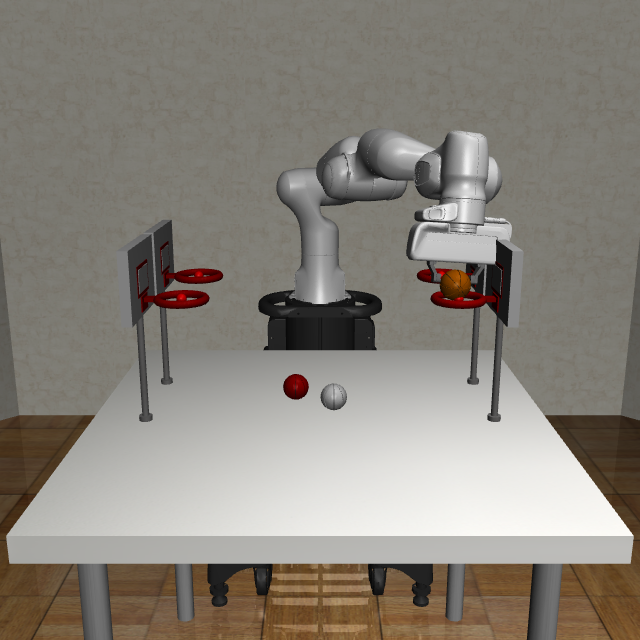

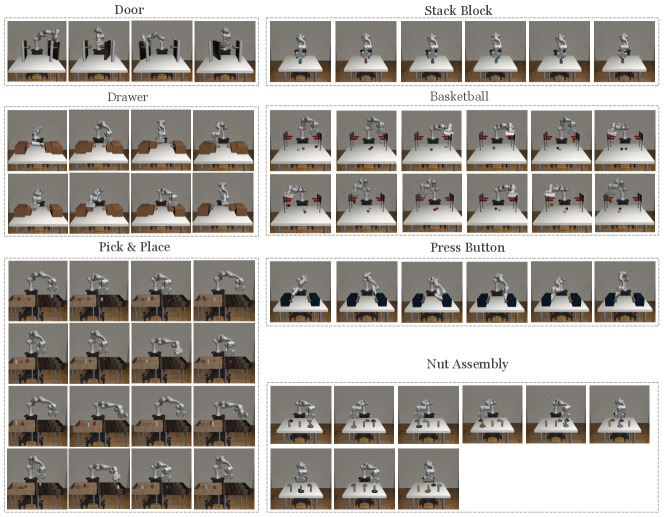

In this work, we propose to expand this narrow, single-task setting with a more significant distinction between train and test. Concretely, we build 7 robot manipulation environments: Door, Drawer, Press Button, Stack Block, Basketball, Nut Assembly, Pick & Place, based on simulation framework from Robosuite v1.1 [48] and MetaWorld [46]. Terminology-wise, we will consistently refer to them as tasks. Within each task, there are “variations”, which capture differences in which block goes on top of which block, or which object goes into which bin, etc (see Figure 11 for visualizations of all 61 variations). Within one fixed variation of a task, there is also a continuum of instances, corresponding to all possible initial states of the various objects. We illustrate this setup with the row “Multi-task One-shot Imitation Learning” in Figure 1.

We evaluate representational and generalization capability through three settings: (i) one-shot imitation on variations within the multi-task training regime, (ii) one-shot imitation on new tasks; and (iii) fine-tuning on new tasks. As a first step in our investigation, we study the performance of prior state-of-the-art methods [7, 45]. We observe that, while performing well in the prior single-task settings, these methods largely fail to handle our proposed multi-task setup. This suggests a great opportunity for novel research towards improving the generalization ability of few-shot imitation methods.

In addition to identifying this challenge for more generalizable OSIL, we also propose a new approach that shows significant performance gain over prior state of the art. Concretely, we investigate the hypothesis that prior methods fall short in the multi-task settings due to (i) poor representations that do not generalize well to new tasks; (ii) a lack of proper inductive bias in the model architecture, which prevents accessing the one demonstration from a new task. To address these challenges, we introduce MOSAIC: Multi-task One-Shot imitation with self-AttentIon and Contrastive learning, which incorporates two key components: (i) a new temporal contrastive loss objective to provide additional supervision for representation learning; (ii) a self-attention policy model architecture for extracting contextual information in the demonstration. Experimental results show significantly improved performance of our method over the prior state of the art.

Key contributions of this paper can be summarized as the following:

-

•

We introduce a simulated robotic manipulation benchmark that spans 7 tasks and a total of 61 task variations. Its codebase is publicly released to facilitate future research.

-

•

We propose a more challenging setup for one-shot imitation learning: 1) train an agent on multiple distinct tasks and test on the seen task variations; 2) train on multiple distinct tasks and test on completely new tasks, via direct one-shot execution or fine-tuning. We investigate prior state-of-the-art methods under these conditions and observe clear room for improvement.

-

•

We propose our method MOSAIC, which combines a self-attention model architecture and a temporal contrastive objective. We experimentally demonstrate its superior performance over baselines, and show its promising ability at being fine-tuned to learn a new task efficiently.

II RELATED WORK

Imitation learning. There are two main approaches for imitation learning (IL): inverse reinforcement learning (IRL) [1, 27, 16] which finds a cost function under which the expert is uniquely optimal, and behavioral cloning (BC) [3, 30] that predicts expert actions from state observations as a supervised learning problem. Recent advances in IL have enabled agents to perform various robotic control tasks, such as locomotion [28, 16], self-driving [5, 29], video games [2, 32], and manipulation [31, 39, 44]. However, a majority of these applications assumes a close match between train and test environment. This has the disadvantage of learning without the ability to transfer knowledge to new situations, and lacks the opportunity for a human to instruct the agent with a new task at test time.

One-shot imitation learning. To address these limitations, one-shot imitation learning (OSIL), first proposed in [9], trains an agent to intake both one successful demonstration and the current observation, and predict the expert’s action. Later work extended OSIL to observe visual inputs: [11] applies the Model-Agnostic Meta-Learning algorithm (MAML) [10] to adapt policy model parameters for new tasks; TecNets [21] applies a hinge rank loss to learn explicit task embeddings; DAML [45] adds a domain-adaptation objective to MAML to use human demonstration videos; [7] improves policy network with Transformer architecture [41]. Another line of work learns modular task structures that can be reused at test time [43] [18] [19], but outputs of these symbolic policies are highly abstracted into semantic action concepts (e.g. “pick”, “release”) that assume extensive domain knowledge and human-designed priors.

However, prior OSIL work has been limited to a single-task setup and mainly tests a model on a slightly different instance (e.g. different object pose) of the previously-seen task variations. For example, [11] and [21] experimented with 3 separate settings: simulated planer reaching (with different target object colors), simulated planer pushing (with varying target object locations), and real-robot, object-in-hand placing (onto different target containers). In contrast, we consider a more difficult multi-task setup, where agent needs to perform well across more diverse and distinct tasks, and generalize not only to new instances of all the seen variations, but also to completely novel tasks.

Multi-task Imitation Learning for Robotic Manipulation Our work falls under the broader category of imitation learning multiple robot manipulation tasks [47][24][35]. The term “multi-task” has varying definitions across this space of literature. Some work define stacking different block combinations as different tasks, whereas we define them as variations of the same task. Tasks that are sufficiently distinct, such as object pushing versus grasping, are sometimes called “task families” [47], where a “multi-task” policy is trained with only one family, and novel object configurations are named “new tasks” to test generalization. Recent work [26] [36] also explored language conditioning for different interact-able objects as tasks, where act-once word embeddings are used for disambiguation, and shows generalization ability at sequentially executing trained tasks to achieve longer-horizon test-time tasks. Concurrently, BC-0 [8] reports 100 distinct manipulation tasks for zero-shot imitation learning, where the 100 “tasks” fall into only 9 underlying skills and 6-15 different objects, and the “unseen” tasks are object arrangements that are excluded from training.

Unsupervised/self-supervised representation learning. Recently, several unsupervised/self-supervised representation learning methods have been proposed to improve the performance in learning from visual inputs [2, 23, 37, 34]. [2] solves hard exploration environments like Atari’s Montezuma’s Revenge [4] by using self-supervised representation to overcome domain gaps between the demonstrations and an agent’s observation. CURL [23] and ATC [37] showed that sample-efficiency can be significantly improved by applying contrastive learning [6, 14, 13] to reinforcement learning. In this paper, we show that contrastive learning also provides large gains in one-shot imitation learning.

III PROBLEM SETUP

We extend the framework of one-shot imitation learning (OSIL) [9] to a challenging multi-task setup. We categorize a set of semantically similar variants of a single task as “variations”: for example, for each variation of the Pick & Place task, the agent should pick up one of 4 differently shaped objects, and place it in one of the 4 bins, resulting in 16 variations in total. Following this definition, prior work [9, 7, 11, 20, 45] on one-shot imitation learning evaluate agents with a single task, as illustrated in Figure 1.

Consider different tasks, }, where each task contains a set of variations . For each task, the training dataset contains paired expert demonstrations and trajectories from multiple variations: . The demonstrator provides a video , and the policy is trained to intake and imitate an expert trajectory . While expert trajectories require both actions and observations, only video inputs are required as demonstrations, thus the demonstrator can be any other robot or even human. For all our experiments, the demonstrator robot has a different arm configuration (i.e. Sawyer Arm) than the imitator agent (i.e. Panda Arm).

Given training datasets of those tasks, we optimize a demonstration-conditioned policy , parameterized by , that takes an expert video and the current observation as input and takes an action at each time-step . At test time, the model is provided one demonstration of one variation of a task , paired with observations . Note that can be an unseen variation of either one of the trained tasks, or a never-seen task excluded from training.

IV MAIN METHOD

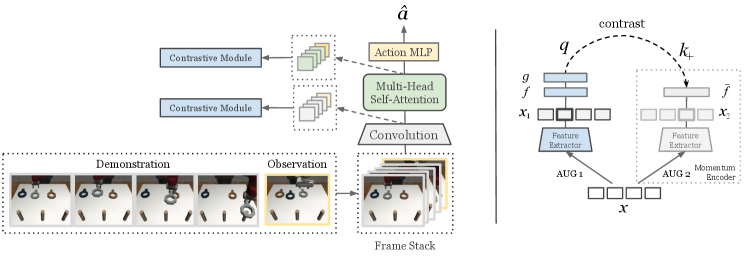

In this section, we describe in details our approach to policy model architecture (Section IV-A), self-supervised representation learning via a contrastive module (Section IV-B) and the action imitation loss objective (Section IV-C).

IV-A Network Architecture

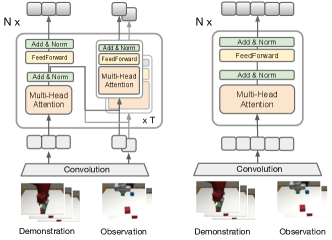

Figure 2 provides an overview of our model pipeline: a CNN backbone is followed by a multi-head self-attention module [41] and an MLP to make action predictions.

Visual features Given a batch of inputs containing expert video frames and agent observations, a CNN backbone encodes each frame into channels of size feature maps, resulting in demonstration features with size , and observation features with size . To preserve spatial and temporal information, both features are flattened along the last 3 dimensions and added with sinusoidal encodings [41], then re-shaped into the original size.

Self-attention module We use multiple self-attention layers that model the underlying relationship between the sequence of representations and . We adopt the non-local self-attention block in [42], and make it to a multi-head version as [7]. Specifically, key, query and value tensors are generated from three separate 3D convolution layers, which are then flattened along the time and space dimensions to compute spatio-temporal attention by each head individually. Formally, given temperature parameter , key , query and value , the attention head , the attention operation is computed as:

Outputs from each head are concatenated and projected to the original feature size by another 3D convolution, as used in [42]. will first pass through a self-attention module to get . Then, every frame in will compute self-attention with both and itself, which in effect calculates: (1) the spatial self-attention in each observation frame and (2) spatio-temporal cross-attention on the demonstration. The resulting will be used to predict action.

IV-B Contrastive Representation Learning

Our method bases off the intuition that, representations for two nearby frames from the same video clip should be similar, whereas frames from different tasks or variations should be drawn apart. For each frame in a video, we maximize its feature similarity with a randomly selected, temporally close-by frame. Specifically, we take an input batch, and obtain its two “view”s by two separate data augmentations. The model encodes the first view into , and a target model encodes the second to get , which is gradient-free and receives parameter updates solely from its online counterpart. Lastly, and are separately passed through a linear projector : , and its target : . For every feature frame in , we select a nearby feature frame in as positive. We then maximize the similarity between each anchor , and its positive , via the InfoNCE loss from [40], where is another linear projector, also named as predictor in prior work [12].

We follow [40, 15] to model embedding similarity as bilinear product, calculated with a projection matrix . Formally, with total frame count , treating every other in the batch as negatives, the contrastive loss at each is expressed as:

| (1) |

One may view the convolution backbone and self-attention layers as one combined feature extractor, therefore the above contrastive loss can be applied to either before or after the self-attention layers as shown in Figure 2. Moreover, our contrastive module differs from [23] in the new temporal contrast strategy: for frame feature at timestep , it contrasts with a random nearby frame selected from to in its augmented counterpart, whereas prior work [37] uses a fixed-step future frame. We provide ablation experiments in Appendix to provide additional insights on details of our contrastive objetive implementation.

IV-C Policy Learning

Our objective is to learn a policy which takes current image observation and a demonstration video as inputs, and predicts the action distribution to successfully finish the task.

To enable learning a potentially multi-modal policy that excels across many tasks, we adopt the same solution used in [7, 25, 33], which discretizes the action space into 256 independent bins along every dimension, and parameterize the policy using a mixture of discretized logistic distribution. As described in Section IV-A, the self-attended observation features will pass through the action MLP, to predict the mean , scale and mixing weight for each discretized logistic distribution. The behavior cloning training loss is the negative log-likelihood:

| (2) |

Where , is the logistic sigmoid function. At the inference time, given , the action is sampled from the predicted distribution:

| (3) |

In addition to the behavioural cloning loss, we also utilize the inverse dynamics loss as in [7]. By taking consecutive observation frames during training, another MLP will predict inverse actions . The inverse dynamics loss has similar form as (2):

| (4) | |||

| (5) |

Combing with the contrastive loss introduced in Section IV-B, we obtain the overall loss for our method:

| (6) |

V EXPERIMENTS

V-A Task Environment and Dataset

Simulation environment. We develop 7 distinct task environments using Robosuite v1.1 [48] and combining MetaWorld [46] for additional assets. For each task, we additionally design multiple semantically distinct variations. In order to investigate cross-morphology imitation, we also integrate two robot arms. The imitation policy is learned and evaluated on a Panda robot arm but takes a Sawyer robot video as demonstration.

Data collection. For every variation of each task environment, we design scripted expert policies and collect 100 demonstration videos of Sawyer robot and another 100 for Panda robot, with differently initialized scene layouts as instances. We provide more detailed information on simulation environment and data collection in Appendix.

V-B Experimental Results

| Task | Setup | DAML [45] | T-OSIL [7] | LSTM | MLP | MOSAIC (ours) |

| Door | single | 23.3 5.2 | 57.9 7.1 | 65.8 7.1 | 41.2 8.2 | 67.1 5.5 |

| multi | 10.8 5.4 | 49.2 6.0 | 43.8 9.5 | 58.8 7.1 | 68.3 6.3 | |

| Drawer | single | 15.4 5.5 | 57.5 3.9 | 57.5 8.1 | 57.9 3.6 | 65.4 3.4 |

| multi | 3.3 1.4 | 53.3 4.0 | 28.7 6.0 | 52.5 6.0 | 55.8 3.6 | |

| Press Button | single | 62.8 3.9 | 56.4 2.4 | 48.3 6.6 | 40 5.5 | 71.7 3.9 |

| multi | 1.7 0.7 | 63.3 3.5 | 25.8 3.0 | 25.0 3.8 | 69.4 3.4 | |

| Pick & Place | single | 0 0 | 74.4 2.1 | 10.6 1.8 | 12.8 2.3 | 88.5 1.1 |

| multi | 0.0 0.0 | 19.5 0.4 | 2.2 0.7 | 5.0 1.4 | 42.1 2.3 | |

| Stack Block | single | 10.0 1.8 | 13.3 2.6 | 8.6 2.3 | 52.5 4.7 | 79.3 1.8 |

| multi | 0.0 0.0 | 34.4 3.4 | 33.3 5.5 | 16.7 3.7 | 70.6 2.4 | |

| Basketball | single | 0.4 0.3 | 12.5 1.6 | 5.4 1.2 | 24.2 2.6 | 67.5 2.7 |

| multi | 0.0 0.0 | 6.9 1.3 | 12.1 2.1 | 10.0 2.0 | 49.7 2.2 | |

| Nut Assembly | single | 2.2 1.4 | 6.3 1.9 | 3.9 1.5 | 15.6 2.9 | 55.2 2.8 |

| multi | 0.0 0.0 | 6.3 1.3 | 4.4 1.3 | 6.7 1.3 | 30.7 2.5 |

We conduct experiments with the dataset described in Section V-A to answer the following questions:

-

•

How does our method compare with prior baselines under the original single-task one-shot imitation learning setup.

-

•

How well does our method perform across multiple tasks, after trained on the same set of tasks.

-

•

How well does our trained multi-task model perform given a completely new task: can it 1) directly perform one-shot imitation at test time; 2) be fine-tuned to quickly adapt to the new task requiring fewer amount of data.

-

•

Which component(s) in our contrastive module are key to its effectiveness at representation learning. The ablation experiment results are provided in Appendix due to space limitation.

We report and compare performance to the following baseline methods:

- •

- •

- •

-

•

MLP: We replace the self-attention module in our model architecture with a simple MLP layer to process stacked visual features from the demonstration into “task context vectors”, which is then concatenated with observation features and used for action predictions.

For all experiments (including baselines), we keep the first three convolutional residual blocks in ResNet-18 as the feature extractor, and apply the same data augmentation strategy to prevent over-fitting and improve model robustness. For evaluation, we take 3 different converged model checkpoints, and for each variation of each task, we gather each policy’s rollout performance across 10 episodes with different random seeds. For both the single and multi-task models, we report the mean and standard deviation of success rates in each task separately. Details on network architecture and hyper-parameters can be found in the Appendix.

Single-task One-shot Imitation

We first evaluate performance on the single-task setup as done in prior methods. Specifically, the model is trained with demonstrations from multiple variations of one task and then tested on unseen instances of the same task (e.g. different initial poses). We report the success rate of all methods on each task in the rows named “single” of Table I, with a clear out-performance of our method over baselines on every task.

We remark that DAML [45] also experimented with a pick-and-place task in their original paper, but collected “hundreds of” objects for training and 12 held-out objects for testing. Without access to further details, we hypothesize that this visual diversity in training dataset was crucial for its success at picking correct test-time objects, which explains why the same method reports massive under-performance on the 4-object Pick & Place task, where [7] also reported a very low success rate from DAML () using a differently-configured simulation of the same task.

Multi-task One-shot Imitation

We next consider the multi-task setup by mixing data from all 7 tasks into one training dataset. After training, we report the success rate of one model on each task in the rows named “multi” of Table I. The baselines’ performances drop significantly compared with their results from single-task, whereas ours continues to work well across many tasks 111For this setup, we increase the number of attention layers in the model from 2 to 3, and also adjust each baseline accordingly for fair comparisons. However, the performance of DAML [46] is still not comparable with others using the updated architecture and after tuning hyper-parameters..

Novel Task Generalization

| Training Setup | Novel-task | No Training | Single-task | Multi-task | Fine-tune |

|---|---|---|---|---|---|

| Door | 5.0 3.1 | 2.5 0.9 | 67.1 5.5 | 68.3 6.3 | 67.5 5.6 |

| Drawer | 15.0 6.6 | 1.2 1.2 | 65.4 3.4 | 55.8 3.6 | 52.5 4.5 |

| Press Button | 5.0 3.1 | 0 | 73.9 3.9 | 69.4 3.4 | 51.1 4.8 |

| Stack Block | 0 | 0 | 79.3 1.8 | 70.6 2.4 | 98.3 0.9 |

| Basketball | 0 | 0 | 67.5 2.7 | 49.7 2.2 | 72.8 2.7 |

| Nut Assembly | 0 | 0 | 55.2 2.8 | 30.7 2.5 | 73.3 2.3 |

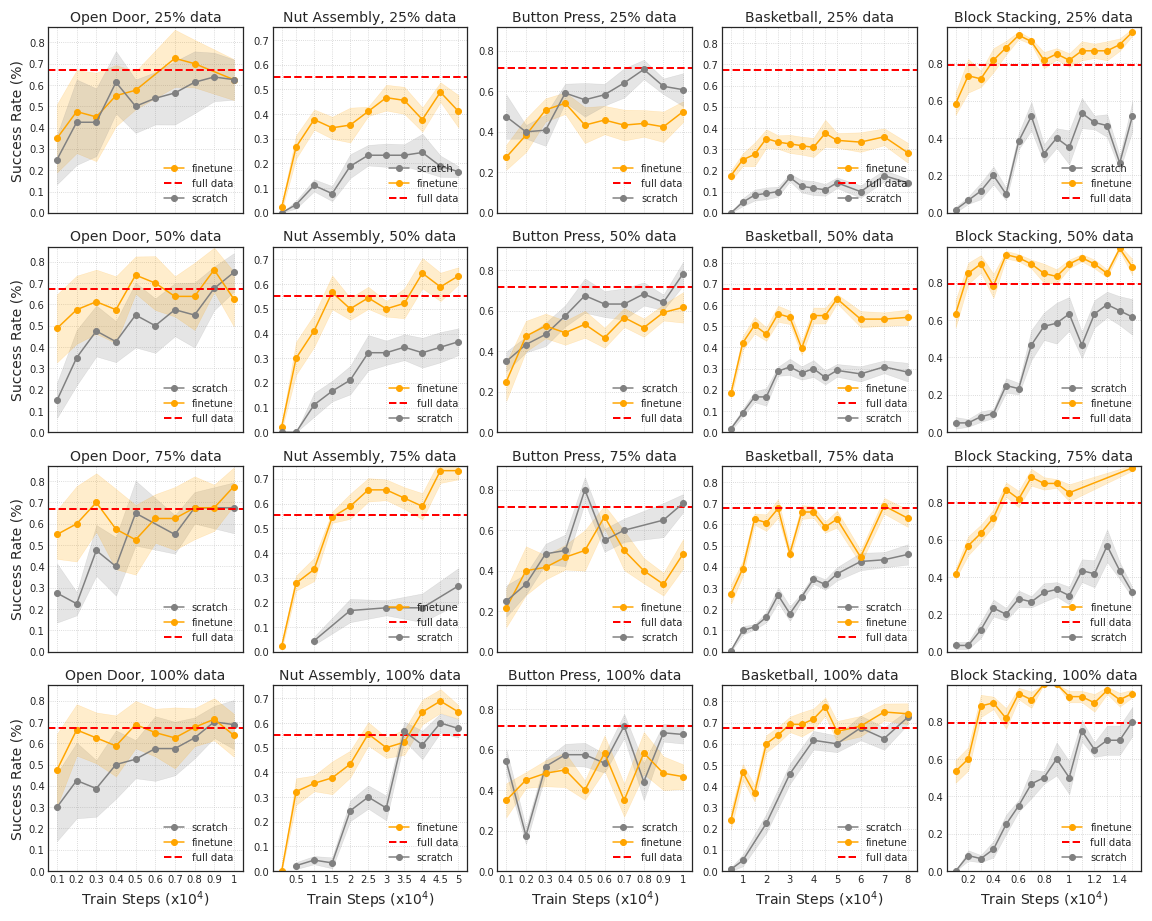

To show a higher level of generalization ability, we test a one-shot imitation agent with tasks that are sufficiently different from what it already trained on. We hence set up a series of experiments that, each picks 1 out of the 7 tasks in our benchmark suite as the held-out task, and trains a multi-task model on the remaining 6 tasks until convergence.

We first directly evaluate each model on its corresponding held-out task. Results are reported in column “Novel-task” of Table II, where each row corresponds to the experiment where the current task was excluded from training and only used for one-shot evaluation. For comparison purposes, we also include: “No Training” column, which evaluates a randomly initialized policy network without any training , “Single-task” column, where each row reports performance of a model trained and tested on the same task, and “Multi-task” column, where we take one model trained on all 7 tasks and report its performance on each task separately.

As shown in Table II, directly evaluating a multi-task model largely fails to complete an unseen novel task, and performs significantly worse than when this novel task was included during single- or multi-task training. This failure of direct one-shot imitation on a novel task suggests exciting room for future research. Nevertheless, the gap between “Novel-task” and “No Training” results 222 We remark that, the non-zero success rates in “No Training” column are due to the nature of task design in simulation, where a randomly initialized policy model would sometimes generate an action that accidentally leads to an episode being counted “successful”, such as hitting an opened drawer to shut it close or stumbling on a closed door and pushing it open. suggests a multi-task model still learns certain non-random behaviors, which could be generalized directly to a completely novel task. Note that, the improvement over “No Training” is limited to the first three tasks (Door, Drawer and Press Button), which require simpler motions and task reasoning.

We are hence encouraged to continue from this setup, but further fine-tune each 6-task model on its corresponding held-out task. We use the exact same dataset for training the models in “Single-task” column, and report the final fine-tuned results in “Fine-tune” column. To investigate the data efficiency of this setup, we additionally experiment with using , , the amount of the original single-task training data for fine-tuning, and compare with training from scratch on that single task using the same dataset size.

We plot evaluation results of intermittently-saved checkpoints during each model’s training in Figure 3. We observe that a multi-task pre-trained model is able to adapt quickly to a completely new task, even when limited data is available (i.e., ), and the final convergence performance is sometimes higher than training single-task from-scratch for some challenging tasks (e.g. Nut Assembly, Block stacking and Basketball). This indicates that these models are indeed able to accumulate generalizable knowledge (such as flexible visual feature extractors) from pre-training on many tasks, which then put them at advantage of learning a novel task very efficiently.

VI CONCLUSION

In this work, we build on prior progress in one-shot imitation learning and propose a more challenging multi-task setup. Instead of training and testing on different variations/instances of a single task, we call for training with multiple distinct tasks and testing on novel tasks that are never seen during training. We believe this setup is crucial towards building more capable and generalizable agents, and holds great potential for novel research.

To support this formulation, we introduce a one-shot imitation benchmark for robotic manipulation, which consists of 61 variations across 7 different tasks. We propose our method MOSAIC, which combines a self-attention model architecture and a temporal contrastive objective, and out-performs previous state-of-the-art methods by a large margin. When evaluated on a completely new task, we see a promising potential in fine-tuning our multi-task model to learn efficiently, but remark the great room for improvement at even better and faster one-shot learning at test time.

VII ACKNOWLEDGEMENT

This work was supported by the Bakar Family Foundation, Google LLC, Intel, the Berkeley Center for Human Compatible AI (CHAI), and National Science Foundation grant NRI no. 2024675. The authors would also like to thank a number of lab colleagues for their help throughout the project: Stephen James and Thanard Kurutach for valuable project feedbacks; Aditya Grover, Kevin Lu and Igor Mordatch for insightful discussions; Colin(Qiyang) Li, Hao Liu for suggestions on the paper writing.

References

- [1] P. Abbeel and A. Y. Ng, “Apprenticeship learning via inverse reinforcement learning,” in International Conference on Machine Learning, 2004.

- [2] Y. Aytar, T. Pfaff, D. Budden, T. L. Paine, Z. Wang, and N. de Freitas, “Playing hard exploration games by watching youtube,” in Advances in Neural Information Processing Systems, 2018.

- [3] M. Bain and C. Sammut, “A framework for behavioural cloning.” in Machine Intelligence 15, 1995.

- [4] M. G. Bellemare, Y. Naddaf, J. Veness, and M. Bowling, “The arcade learning environment: An evaluation platform for general agents,” Journal of Artificial Intelligence Research, vol. 47, pp. 253–279, 2013.

- [5] M. Bojarski, D. Del Testa, D. Dworakowski, B. Firner, B. Flepp, P. Goyal, L. D. Jackel, M. Monfort, U. Muller, J. Zhang et al., “End to end learning for self-driving cars,” arXiv preprint arXiv:1604.07316, 2016.

- [6] T. Chen, S. Kornblith, M. Norouzi, and G. Hinton, “A simple framework for contrastive learning of visual representations,” in International conference on machine learning, 2020.

- [7] S. Dasari and A. Gupta, “Transformers for one-shot visual imitation,” in Conference on Robot Learning, 2020.

- [8] ——, “Bc-0: Zero-shot task generalization with robotic imitation learning,” in Conference on Robot Learning, 2021.

- [9] Y. Duan, M. Andrychowicz, B. C. Stadie, J. Ho, J. Schneider, I. Sutskever, P. Abbeel, and W. Zaremba, “One-shot imitation learning,” in Advances in Neural Information Processing Systems, 2017.

- [10] C. Finn, P. Abbeel, and S. Levine, “Model-agnostic meta-learning for fast adaptation of deep networks,” in International Conference on Machine Learning, 2017.

- [11] C. Finn, T. Yu, T. Zhang, P. Abbeel, and S. Levine, “One-shot visual imitation learning via meta-learning,” in Conference on Robot Learning, 2017.

- [12] J.-B. Grill, F. Strub, F. Altché, C. Tallec, P. H. Richemond, E. Buchatskaya, C. Doersch, B. A. Pires, Z. D. Guo, M. G. Azar, B. Piot, K. Kavukcuoglu, R. Munos, and M. Valko, “Bootstrap your own latent: A new approach to self-supervised learning,” 2020.

- [13] K. He, H. Fan, Y. Wu, S. Xie, and R. Girshick, “Momentum contrast for unsupervised visual representation learning,” in IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020.

- [14] O. Henaff, “Data-efficient image recognition with contrastive predictive coding,” in International Conference on Machine Learning, 2020.

- [15] O. J. Hénaff, A. Srinivas, J. Fauw, A. Razavi, C. Doersch, S. Eslami, and A. van den Oord, “Data-efficient image recognition with contrastive predictive coding,” ArXiv, vol. abs/1905.09272, 2020.

- [16] J. Ho and S. Ermon, “Generative adversarial imitation learning,” arXiv preprint arXiv:1606.03476, 2016.

- [17] S. Hochreiter and J. Schmidhuber, “Long short-term memory,” Neural computation, vol. 9, no. 8, pp. 1735–1780, 1997.

- [18] D.-A. Huang, S. Nair, D. Xu, Y. Zhu, A. Garg, L. Fei-Fei, S. Savarese, and J. C. Niebles, “Neural task graphs: Generalizing to unseen tasks from a single video demonstration,” 2019.

- [19] D.-A. Huang, D. Xu, Y. Zhu, A. Garg, S. Savarese, F.-F. Li, and J. C. Niebles, “Continuous relaxation of symbolic planner for one-shot imitation learning,” 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pp. 2635–2642, 2019.

- [20] S. James, M. Bloesch, and A. J. Davison, “Task-embedded control networks for few-shot imitation learning,” in Conference on Robot Learning, 2018.

- [21] ——, “Task-embedded control networks for few-shot imitation learning,” 2018.

- [22] E. Kolve, R. Mottaghi, W. Han, E. VanderBilt, L. Weihs, A. Herrasti, D. Gordon, Y. Zhu, A. Gupta, and A. Farhadi, “Ai2-thor: An interactive 3d environment for visual ai,” 2019.

- [23] M. Laskin, A. Srinivas, and P. Abbeel, “Curl: Contrastive unsupervised representations for reinforcement learning,” in International Conference on Machine Learning, 2020.

- [24] C. Lynch, M. Khansari, T. Xiao, V. Kumar, J. Tompson, S. Levine, and P. Sermanet, “Learning latent plans from play,” Conference on Robot Learning (CoRL), 2019. [Online]. Available: https://arxiv.org/abs/1903.01973

- [25] ——, “Learning latent plans from play,” in Conference on Robot Learning. PMLR, 2020, pp. 1113–1132.

- [26] C. Lynch and P. Sermanet, “Language conditioned imitation learning over unstructured data,” Robotics: Science and Systems, 2021. [Online]. Available: https://arxiv.org/abs/2005.07648

- [27] A. Y. Ng, S. J. Russell et al., “Algorithms for inverse reinforcement learning.” in International Conference on Machine Learning, 2000.

- [28] X. B. Peng, E. Coumans, T. Zhang, T.-W. Lee, J. Tan, and S. Levine, “Learning agile robotic locomotion skills by imitating animals,” in Robotics: Science and Systems, 2020.

- [29] D. Pomerleau, “An autonomous land vehicle in a neural network,” in Advances in Neural Information Processing Systems, 1998.

- [30] D. A. Pomerleau, “Efficient training of artificial neural networks for autonomous navigation,” Neural computation, vol. 3, no. 1, pp. 88–97, 1991.

- [31] P. K. Pook and D. H. Ballard, “Recognizing teleoperated manipulations,” in International Conference on Robotics and Automation, 1993.

- [32] S. Ross, G. Gordon, and D. Bagnell, “A reduction of imitation learning and structured prediction to no-regret online learning,” in International Conference on Artificial Intelligence and Statistics, 2011.

- [33] T. Salimans, A. Karpathy, X. Chen, and D. P. Kingma, “Pixelcnn++: Improving the pixelcnn with discretized logistic mixture likelihood and other modifications,” arXiv preprint arXiv:1701.05517, 2017.

- [34] M. Schwarzer, A. Anand, R. Goel, R. D. Hjelm, A. Courville, and P. Bachman, “Data-efficient reinforcement learning with self-predictive representations,” in International Conference on Learning Representations, 2021.

- [35] A. Singh, E. Jang, A. Irpan, D. Kappler, M. Dalal, S. Levine, M. Khansari, and C. Finn, “Scalable multi-task imitation learning with autonomous improvement,” 2020.

- [36] S. Stepputtis, J. Campbell, M. Phielipp, S. Lee, C. Baral, and H. Ben Amor, “Language-conditioned imitation learning for robot manipulation tasks,” in Advances in Neural Information Processing Systems, H. Larochelle, M. Ranzato, R. Hadsell, M. F. Balcan, and H. Lin, Eds., vol. 33. Curran Associates, Inc., 2020, pp. 13 139–13 150. [Online]. Available: https://proceedings.neurips.cc/paper/2020/file/9909794d52985cbc5d95c26e31125d1a-Paper.pdf

- [37] A. Stooke, K. Lee, P. Abbeel, and M. Laskin, “Decoupling representation learning from reinforcement learning,” in International Conference on Machine Learning, 2021.

- [38] I. Sutskever, O. Vinyals, and Q. V. Le, “Sequence to sequence learning with neural networks,” arXiv preprint arXiv:1409.3215, 2014.

- [39] J. D. Sweeney and R. Grupen, “A model of shared grasp affordances from demonstration,” in International Conference on Humanoid Robots, 2007.

- [40] A. van den Oord, Y. Li, and O. Vinyals, “Representation learning with contrastive predictive coding,” 2019.

- [41] A. Vaswani, N. Shazeer, N. Parmar, J. Uszkoreit, L. Jones, A. N. Gomez, L. Kaiser, and I. Polosukhin, “Attention is all you need,” in Advances in Neural Information Processing Systems, 2017.

- [42] X. Wang, R. Girshick, A. Gupta, and K. He, “Non-local neural networks,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2018, pp. 7794–7803.

- [43] D. Xu, S. Nair, Y. Zhu, J. Gao, A. Garg, L. Fei-Fei, and S. Savarese, “Neural task programming: Learning to generalize across hierarchical tasks,” 2018.

- [44] S. Young, D. Gandhi, S. Tulsiani, A. Gupta, P. Abbeel, and L. Pinto, “Visual imitation made easy,” in Conference on Robot Learning, 2020.

- [45] T. Yu, C. Finn, A. Xie, S. Dasari, T. Zhang, P. Abbeel, and S. Levine, “One-shot imitation from observing humans via domain-adaptive meta-learning,” in Robotics: Science and Systems, 2018.

- [46] T. Yu, D. Quillen, Z. He, R. Julian, K. Hausman, C. Finn, and S. Levine, “Meta-world: A benchmark and evaluation for multi-task and meta reinforcement learning,” in Conference on Robot Learning (CoRL), 2019. [Online]. Available: https://arxiv.org/abs/1910.10897

- [47] A. Zhou, E. Jang, D. Kappler, A. Herzog, M. Khansari, P. Wohlhart, Y. Bai, M. Kalakrishnan, S. Levine, and C. Finn, “Watch, try, learn: Meta-learning from demonstrations and reward,” 2020.

- [48] Y. Zhu, J. Wong, A. Mandlekar, and R. Martín-Martín, “robosuite: A modular simulation framework and benchmark for robot learning,” arXiv preprint arXiv:2009.12293, 2020.

VIII APPENDIX

VIII-A Overview

In this section, we first provide detailed descriptions of our task environment design, then introduce more details about our method implementation and the experiment setup, and lastly we provide more ablation experiment results and analysis.

VIII-B Task Environment Description

We use Robosuite [48] as our base framework, and design 7 robot manipulation environments (tasks) with intra-task variations. For example, consider Nut Assembly task in the bottom-right of Figure 11: it has 9 variations in total, resulting from picking up any one of the 3 nuts on the table, then assembling it to one of the 3 pegs. Since there are multiple varied instances for a specific task, the agent should finish the correct task without mis-identification based on demonstration. Below we provide details about each of the individual task design.

VIII-C Implementation Details

Network Architecture We provide an illustration of our self-attention block and one of our baseline [7] as in Figure 12. For other baselines in our main paper, we replace this attention module with LSTM or MLP accordingly.

Data Augmentation We add data augmentation for all the baselines to prevent overfitting. In our implementation, we use 4 types of data augmentation provided in the torchvision package: random translate, random crop, color jitter, Gaussian blur.

VIII-D Experiment Details

Hyper-parameter Settings We provide more details about the hyper-parameters and other settings of model training and evaluation in Table III.

| Hyperparameter | Value |

| Input image size | |

| # Demonstation frames | 4 |

| # Observation frames (train) | 7 |

| # Observation frames (eval) | 1 |

| # Evaluation episode per task | |

| Optimizer | Adam |

| Learning rate | |

| Batch size | |

| Non-linearity | ReLU |

| Contrastive latent dimension | |

| Self-attention temperature | |

| # Action layers | |

| # Attention layers (single-task) | |

| # Attention layers (multi-task) | |

| Action head latent dimension | 256 |

| Action output dimension | 256 |

Batch Construction One batch consists of demonstrations sampled from each variant in every training task, which are mixed evenly. Since each task contains a different number of variations, to ensure they have comparable learning progress, the loss is first averaged across variants within each task, and then across different tasks.

Computation Requiremenmts Our single-task model can be trained within one GPU day of NVIDIA TITAN Xp, whereas the multi-task model takes about 4 GPU days to train.

VIII-E Ablation Experiments

|

|

|

|

|

|

|

|

||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

|

67.1 5.5 | 65.4 3.4 | 71.1 3.9 | 88.8 1.1 | 79.3 1.8 | 67.5 2.7 | 55.2 2.8 | ||||||||||||

|

62.5 8.2 | 60.8 2.6 | 73.9 3.9 | 69.5 1.8 | 36.7 3.6 | 19.7 2.2 | 15.6 2.5 | ||||||||||||

|

68.3 6.3 | 55.8 3.6 | 69.4 3.4 | 42.1 2.3 | 70.6 2.4 | 49.7 2.2 | 30.7 2.5 | ||||||||||||

|

66.7 7.3 | 67.5 4.0 | 73.1 3.4 | 11.9 1.6 | 39.4 3.4 | 9.2 1.7 | 20.4 1.9 | ||||||||||||

|

67.1 6.4 | 67.9 4.0 | 69.4 3.1 | 12.9 1.7 | 40.0 2.4 | 10.0 1.9 | 17.8 1.8 |

|

|

|

|

|

|

|||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Pick & Place | 12.3 1.4 | 80.2 1.9 | 23.5 2.1 | 51.5 4.1 | 88.5 1.1 | |||||||

| Basketball | 53.8 4.8 | 52.5 2.8 | 24.7 2.1 | 27.2 2.5 | 67.5 2.7 | |||||||

| Nut Assembly | 12.2 2.0 | 12.2 1.6 | 17.8 1.8 | 22.6 2.9 | 55.2 2.8 |

We conduct a set of ablation studies focused on our contrastive representation learning approach, to provide insights on the design of the loss objective, how it incorporates with the policy learning, and its impact over the one-shot imitation performance.

The effect of contrastive learning objective

We first ablate by removing contrastive loss from the model’s training update: we train a model with solely behavior cloning loss, while other training configurations are kept consistent with the multi-task experiments reported in Table I. Results are reported in row “Single-task No Contra.” and “Multi-task No Contra.” of Table IV. Noticeably, our multi-task performance is significantly decreased without the contrastive learning objective. Comparing results from single-task and multi-task setups, we observe a clear challenge of task reasoning and robust representation learning, which the contrastive objective is able to address, but not fully closing the gap between a multi-task model and its single-task counterpart on every task.

Adding the contrastive loss also shows a more significant gain on the right-most 3 tasks of Table IV, which vary only the colors of otherwise similarly-shaped objects. As compared to Pick & Place where the 4 objects are differently sized, shaped, and colored, the blocks/basketballs/nuts in these variations tend to be more easily confused, which can be addressed the contrastive loss that explicitly forces the representations to be distinguishable among different sub-tasks.

To investigate the effect of contrasting against negative samples in the loss objective design, we implement BYOL [12], in which a data sample is only drawn together with its augmented counterpart. Its multi-task performance is shown in Table IV, which show no improvement over not using contrastive loss at all (as reported in row “Multi-task No Contra.”). We remark that in multi-task setups, negative samples help learn stronger representations that are better at distinguishing among images from different tasks/variations.

Where to apply contrastive loss. As discussed in Section IV-B, we can apply the contrastive operation in features from any intermediate layers of the model. To understand the effects of this algorithmic choice, we consider the following variants of our method: (1) Pre-Attn: applying contrastive loss to only features prior to the self-attention layers; (2) Post-Attn: applying contrastive loss only after the self-attention layers; (3) Both-Attn (Ours): calculating both losses in (1) and (2), which we use for single and multi-task experiment results in above sections. Table V shows the performance of each ablation variant in three single tasks, among which we find that Both-Attn (using two contrastive losses) achieves the best performance.

The effect of temporal contrast.

Our contrastive strategy is doing a temporal contrast by randomly selecting frames from nearby time-steps as positive. To fully understand its effectiveness, we compare it with two variants: (1) No-Temp: applying contrastive loss to two different data augmentations from same frame, similar to CURL [23]; (2) Fix-Temp: applying temporal contrast but always with a fixed-step future frame, similar to ATC [dwibedi2019temporal]. Ours (denoted Rand-Temp) achieves the best performance as shown in Table V, as suggested by comparing the first, second and last column in Table V.

VIII-F Further Discussion on Related Work

In this section, we provide a more detailed discussion of the methods and experiment task settings used by related prior work in one-shot imitation learning (OSIL).

However, prior OSIL work has been limited to a single-task setup and mainly tests a model on a different task variation (e.g stacking an unseen block combination) or a different instance (e.g. different object pose) of the previously-seen variations. Experiments in [9] train an agent to stack various (unseen) block combinations at test time, but use low-dimensional state-based inputs. For visual inputs, [11] and [21] experimented with 3 separate settings: simulated planer reaching (with different target object colors), simulated planer pushing (with varying target object locations), and real-robot, object-in-hand placing (onto different target containers); [45] set up a two-stage pick-then-place task with varying target objects and target containers; [7] uses a simulated Pick & Place task with 4 objects to pick and 4 target bins to place (hence 16 variations in total). The AI2-THOR [22] environment used in [19] requires collecting varying objects and dropping off at their designated receptacles, where actions are purely semantic concepts such as “dropoff” or “search”. In contrast, in this work we consider a harder, multi-task setup, where agent needs to perform well across more diverse and distinct tasks, and generalize not only to new instances of all the seen variations, but also to completely novel tasks.