Towards Saner Deep Image Registration

Abstract

With recent advances in computing hardware and surges of deep-learning architectures, learning-based deep image registration methods have surpassed their traditional counterparts, in terms of metric performance and inference time. However, these methods focus on improving performance measurements such as Dice, resulting in less attention given to model behaviors that are equally desirable for registrations, especially for medical imaging. This paper investigates these behaviors for popular learning-based deep registrations under a sanity-checking microscope. We find that most existing registrations suffer from low inverse consistency and nondiscrimination of identical pairs due to overly optimized image similarities. To rectify these behaviors, we propose a novel regularization-based sanity-enforcer method that imposes two sanity checks on the deep model to reduce its inverse consistency errors and increase its discriminative power simultaneously. Moreover, we derive a set of theoretical guarantees for our sanity-checked image registration method, with experimental results supporting our theoretical findings and their effectiveness in increasing the sanity of models without sacrificing any performance.

1 Introduction

Learning maps between images or spaces, i.e. registration, is an important task, and has been widely studied in various fields, such as computer vision [15, 33], medical imaging [20, 52], and brain mapping [35, 47]. With recent advances in modern computing hardware and deep-learning techniques, learning-based deep image registration methods have surpassed their traditional counterparts, both in terms of metric performance and inference time. Different from the traditional style of optimizing on single image pair [12, 32, 7, 11, 2, 28, 19] using diffeomorphic formulations, such as elastic [4, 38], fluid mechanics [7, 18, 43] or B-spline [36], existing deep registrations [42, 6, 13, 29, 23, 10, 41, 25, 9] focus on maximizing image similarities between transformed moving images and fixed images. Despite the effectiveness of this approach, it inevitably leads to over-optimization of image similarities and thus introduces non-smooth mappings [6, 50, 16, 9], where smooth transformation maps are typically desirable, especially in the medical imaging domain.

To tackle the over-optimized issue, popular remedies [4, 36, 44, 37, 49, 45, 39, 40] utilize add-ons such as Large Deformations Diffeomorphic Metric Mapping (LDDMM) [7], vector Stationary Velocity Field (vSVF) [39], B-spline [36], Elastic [4] or Demons [43] to enforce diffeomorphism, requiring costly and iterative numerical optimizations [8, 49, 39]. Other methods [13, 17] seek probabilistic formulation for the registration but can lead to inferior performance [9]. Nonetheless, these methods operate only on one mapping in the direction from moving to fixed images, yet disregarding the relationship between different mappings from both directions, as shown in Appendix111Appendix goes https://arxiv.org/pdf/2307.09696.pdf Fig. A1.

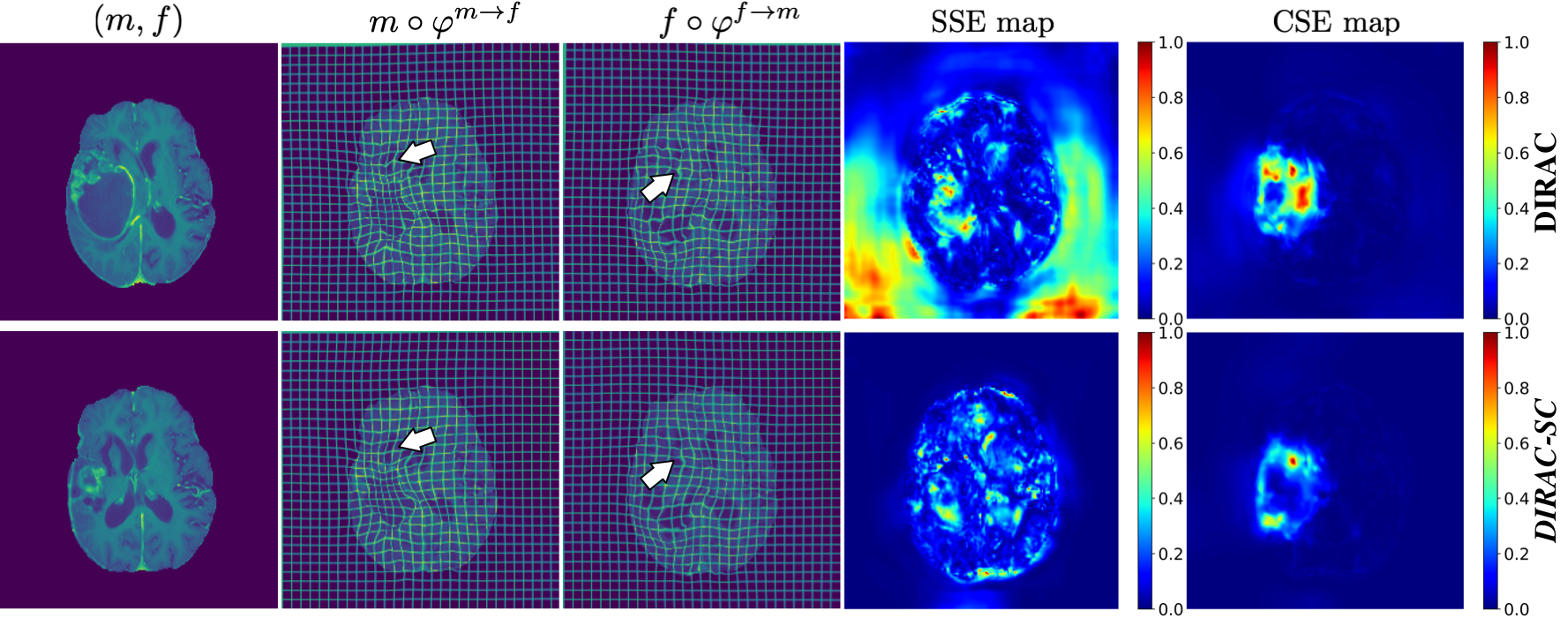

Recent studies [50, 16, 31] have made great progress in modeling the relationship, i.e. inverse consistency, for different mappings. [50, 16] explicitly enforce the relationship in a strict form for ideal inverse consistency. However, for applications such as brain tumor registration [3], where there are regions with no valid correspondences, it is impractical to apply in such a strict manner. To select valid correspondences, [31] utilize mean errors of similar anatomical locations as thresholds. However, it is always tricky to determine similar anatomical locations, especially for unsupervised registrations in medical imaging [6, 13, 41]. Different from previous methods [50, 16, 31], we introduce two straightforward yet effective constraints, namely, self-sanity check to reduce error while registering identical pairs and cross-sanity check to ensure inverse consistency. Using our sanity checks, we test a wide range of registration models and find that despite better performance such as Dice, most models are suffering from over-optimization of image similarities, leading to folded transformations and low sanity awareness, as shown in Fig. 1. Moreover, our sanity checks can be applied to different registration models, where the extensive experiments certify that our method improves their sanity awareness, without sacrificing any performance.

Our findings are five-fold: (1) We find that despite better performance such as Dice, most models produce non-smooth transformations and are less aware of sanity errors due to over-optimization on image similarities; (2) We propose two novel sanity checks for the registration model training and derive corresponding theoretical foundations; (3) Our sanity checks not only help reduce the sanity errors of existing models but also assist them to produce more regular maps without diffeomorphic add-ons; (4) Our proposed sanity-checks are model-agnostic. It can be deployed to various models and is only needed during training so that it does generate no side effects for inference; (5) Experiments on IXI [1], OASIS [26], and BraTSReg [3] datasets verify our findings and show on par or better performance.

2 Background and Related Work

Background. Consider a set of images defined on the domain (-D spatial domain) of some Hilbert space . Let be the fixed, and be the moving . Let be a displacement vector field that maps from (dual space of ) such that , where is the image grid of coordinates. denotes the transformation from . The goal of registration is to compute such transformation , whose quality can be measured by some similarity functions in the form of . denotes that is warped by . Without loss of generality, can be replaced by any differentiable similarity function, such as Normalized Cross Correlation (NCC) and Mutual Information (MI). Alternatively, we can use other distance functions such as sum squared error (SSD) by replacing with . Hereafter, we will stick to the similarity notation for later derivations.

Let be the mapping function, parameterized using the model, where stands for the displacement map from . In terms of such learned by the model, we assume that the similarity operator is concave, making convex when it is not a distance operator for a pair. Therefore, the optimization in standard learning-based registrations can be formulated as

| (1) |

trying to find such for a pair in the mapping search space. Here, term is added to smooth the transformation, penalizing sudden changes in the map. Most commonly, can be in the form of (first-order spatial derivative) or (second-order spatial derivative), where norm of the image gradient is generally adopted in medical registration, resulting in a regularization.

Definition 1 (Ideal/Strict inverse consistency).

Given two different mappings: and , if , where denotes the identity transformation, we called these two mappings are strictly inverse consistent. The strict inverse consistency is equivalently formulated as , where is back-projected from .

Relation to other inverse consistent methods. We show the relationship between methods in Tab. 1. The formulation of ICNet [50] follows strict inverse consistency in Def. 1 with Frobenius norm. Besides strict inverse consistency, ICON [16] adds regularization using the determinant of the Jacobian matrix of the transformation. Instead of explicitly enforcing strict consistency as in [50, 16], DIRAC [31] and our approach both modulate the inverse problem with more relaxation. This inequality formulation allows us to tell whether a voxel has a valid correspondence, which is practically useful in registering image pairs with topological changes, e.g., brain tumor registration [31, 30]. Different from [31], where means of inverse errors are utilized, we closely associate our formulation with the data, allowing us to show that the errors are upper bounded without resorting to extra information to determine similar anatomical structures, which has not been studied in other related works. Experimentally, our relaxation shows better performance over various metrics. Besides, to the best of our knowledge, our work is the first to consider the self-sanity error directly on displacements rather than for image similarities as in [23] for medical image registration studies.

3 Methodology

3.1 Self-sanity and Cross-sanity Checks

To increase the discriminative power of identical pairs feeding into the model, we propose our self-sanity check as

| (2) |

where the mapping function learned using any models is restricted to output zero displacements for identical pairs. Such identical pairs can be filtered out using similarity measurements. However, users are unlikely to perform these filters, especially when they do not know that trained models would produce terrible predictions for identical pairs. Hence, our self-sanity check is a natural remedy.

Next, we enforce the inverse consistency on different mappings for by the model. We will search for correspondence in the fixed image for every point in the moving image such that the transformations between the two images are inconsistent. For example, suppose that a point in the space of image is registered to the space of image . If we register this point from image back to image , the point will arrive at the same location in image (Def. 1). We first define the backward displacement map as in optical flow studies [22, 27, 24], back-projected from

| (3) |

making it convenient for calculation. We then introduce our cross-sanity check in the form of

| (4) |

. Here, we allow the estimation errors to increase linearly with the displacement magnitude with slope and intercept . Instead of imposing zero-tolerance between forward and back-projected backward displacements [16, 31], we relax the inverse consistency with error tolerance, defined by and , to allow occlusions which is more practical. I.e., This sanity check states that every point in the moving image should be able to map back from the fixed image to its original place in image with certain error tolerance. We then prove that this error tolerance is upper bounded.

Theorem 1 (Relaxed registration via cross-sanity check).

An ideal symmetric registration meets , defined in Def. 1. Then, a cross-sanity checked registration is a relaxed solution to this ideal registration, satisfying

| (5) |

Here, and . is a constant, representing the total pixel/voxel numbers. Theoretically, our proposed cross-sanity checks can be viewed as a relaxed version of the strict symmetric constraint, which is commonly used. We also derive the lower/upper bound for satisfying our forward/backward consistency check as shown in Eq. 5. The derivations’ details are shown in the Appx. A.2. To sum up, our cross-sanity check allows a series of solutions to the forward/backward consistency in an enclosed set with a radius of .

3.2 Unique Minimizer for Single Image Pair

Next, we show that there exists a unique solution for our sanity-checked optimization, in terms of a single image pair. We start with writing the standard optimization with our proposed sanity checks in the norm form as

| (6) | ||||

Theorem 2 (Existence of the unique minimizer for our relaxed optimization).

Let and be two images defined on the same spatial domain , which is connected, closed, and bounded in with a Lipschitz boundary . Let be a displacement mapping from the Hilbert space to its dual space . Then, there exists a unique minimizer to the relaxed minimization problem.

The detailed proof for Thm. 2 is shown in Appx. A.3. In summary, for a moving-fixed pair, since the relaxation by sanity checks and data term (e.g. SSD) are convex, together with the convex search space for , our problem has a unique minimizer. Thus, the optimization problem is well-conditioned by properties of our regularity constraints such that will not change dramatically.

3.3 Loyal Sanity-Checked Minimizer

In the next section, we further prove that the distance between the minimizer of the sanity-checked constrained optimization and the optimal minimizer for a single moving-fixed image pair can be represented and controlled by our proposed sanity checks. We first rewrite Eq. 6 as

| (7) | ||||

Remark 2.1.

We denote this formulation as the bidirectional optimization since it operates in both directions. It is straightforward to show that this bidirectional optimization still satisfies Thm. 2 so that there exists a unique minimizer.

Then, with a slight abuse of notations, we define that

| (8) |

We can rewrite the minimization problem as

| (9) | ||||

| s.t. | ||||

Here, , , , , and . Then, the optimal minimizer can be written in the matrix equation as

| (10) | ||||

Here, , , and , as defined previously. here means that we can have solutions for the CS check as in Eq. 9, which we show will not interfere with the theoretical foundations by masking in the next section. In this way, we obtain our unique minimizer as

| (11) |

where is the vector of parameters.

Theorem 3 (Loyalty of the sanity-checked minimizer).

The proof is presented in Appx. A.4. We then expand the right-hand side of Eq. 12 as

| (13) | ||||

Empirically, the first two terms contribute relatively less (10 smaller) than the cross-sanity error (See Experiments). Thus, we focus on the CS term here. Following Thm. 1, is upper bounded in the form of

| (14) |

Here, and ensure the inequality’s direction, where the multiplication of two accounts for cross-sanity errors from two directions, i.e., from moving image to fixed image and also fixed image to moving image. We put the full derivation of CS error bound in the Appx. A.5. In a nutshell, we prove that by satisfying the cross-sanity check, we have an upper bound for the CS error, constraining the relationship between two displacements from different directions via parameters and .

Lemma 4 (Upper-bound of distance between optimal minimizer and sanity-checked minimizer).

Let and respectively be the optimal minimizer and the constrained minimizer with cross-sanity check, then we have an upper bound for the distance between such two minimizers as

| (15) |

The Lemma 4 follows Thm. 3 and Eq. 14 (upper bound of CS error). That said, the loyalty w.r.t the distance of the constrained minimizer is controlled by the combination of , , and the weight parameter .

Interpretation of derived upper bound. By Lemma 4, we prove that if we average on the total number of voxels and also two directions, the similarity distance between the optimal minimizer and our sanity-checked minimizer per pixel/voxel is upper bounded by . That being said, to satisfy our sanity checks, and thus maintain the loyalty to the optimal minimizer, should be small. Numerically speaking, for example, if and , we have . This distance of is extremely large for this delicate image registration task, causing the constrained minimizer to be untrustworthy. Therefore, we need to adopt a relatively small loss weight , e.g. , to bound the distance between two minimizers tightly. This observation from our proven theoretical upper bound also coincides with our sanity loss weight ablation study.

3.4 Sanity-checked Registration Training

We show our sanity-checked training pipeline in Fig. 4. We introduce each loss in the subsection. The self-sanity check can be formulated into a loss function form as

| (16) |

So that the self-sanity loss penalizes the squared differences between predicted displacement maps and the ideal ones. Next, we use direction as an example ( direction follows the same principle) to formulate the proposed cross-sanity check loss. We calculate for every voxel and define a binary mask in the form of

| (17) |

An interpretation of this binary mask is that it records violations of the cross-sanity check (Eq. 4) for each individual point. In this way, solution in Eq. 10 will not challenge the theoretical formulations since these points are masked out. Thus, we can formulate the proposed cross-sanity check in the form of a loss function of

| (18) | ||||

The final . Here, denotes element-wise multiplication so that we only retain points violating the cross-sanity check. In this case, the loss value is only calculated for those violators, visualized as occlusion masks, shown in Fig. 5. Finally, the total loss is

| (19) |

Here, as NCC loss, as , and , following standard deep registrations [6, 9]. If not specified otherwise, and . While training, the model optimizes the total loss on different moving-fixed image pairs in the training set as

| (20) |

In this way, we fulfill our novel regularization-based sanity-enforcer formulation for training a registration model.

4 Experiments

Evaluation metrics. We study model behaviors in a wide range of metrics. For the main metric, we use dice to measure how fit is the transformed segmentation to its ground truth, following previous studies. To study the model taking an identical pair as inputs, we also report self dice (SDice), i.e., the dice when registering the moving image to itself. For pre-operative and post-recurrence registration, we measure the mean target registration error (TRE) of the paired landmarks with Euclidean distance in millimeters and also self mean registration error (STRE) to study the self-sanity of the models. Besides, we report 95% Hausdorff Distance (HD95) as in [9], which measures the 95th percentile of the distances between boundary points of the transformed subject and the actual subject. We follow [3, 31] to report robustness (ROB) for a pair of scans as the relative number of successfully registered landmarks.

For diffeomorphism measurements, we report the percentage of folded voxels (FV) whose Jacobian determinant , the Absolute Value of Negative Jacobian (AJ) where we sum up all negative Jacobian determinants, and the Standard Deviation of the logarithm of the Jacobian determinant (SDlogJ). All these three Jacobian determinant-related metrics reflect how regular are the transformation maps.

For quantifying sanity errors, we present the mean of self-sanity error (SSE) and the mean of cross-sanity error (CSE), defined in Eq. 16 and Eq. 18, respectively. These two metrics are designed to study model behaviors per the level of each single image pair and are essential for our sanity analysis of different learning-based deep models.

Implemented models. We denote the bidirectional optimization as Enclosed (E) Image registration, operating to maximize the similarity score and minimize the spatial gradient regularization. We also have Self-sanity checked (S) and Cross-sanity checked (C) image registrations. Moreover, as proof of concept that our sanity checks can be applied to different learning-based models, we implement our proposed techniques on models such as VoxelMorph [6] (VM), TransMorph-Large [9] (TM), TransMorph-B-spline [9] (TMBS), and DIRAC [31].

4.1 Results

| Method | Dice↑ | FV↓ |

|---|---|---|

| Affine | 0.3860.195 | - |

| SyN [2] | 0.6450.152 | 0.01 |

| NiftyReg [28] | 0.6450.167 | 0.0200.046 |

| LDDMM [7] | 0.6800.135 | 0.01 |

| deedsBCV [19] | 0.7330.126 | 0.1470.050 |

| MIDIR [34] | 0.7420.128 | 0.01 |

| PVT [46] | 0.7270.128 | 1.8580.314 |

| CoTr [48] | 0.7350.135 | 1.2980.342 |

| VM [6] | 0.7320.123 | 1.5220.336 |

| VM-diff [13] | 0.5800.165 | 0.01 |

| ICNet [50] | 0.7420.223 | 0.8540.316 |

| CM [23] | 0.7370.123 | 1.7190.382 |

| ViT-V-Net [10] | 0.7340.124 | 1.6090.319 |

| nnFormer [51] | 0.7470.135 | 1.5950.358 |

| ICON [16] | 0.7520.155 | 0.6950.248 |

| TM [9] | 0.7540.124 | 1.5790.328 |

| TM-diff [9] | 0.5940.163 | 0.01 |

| TMBS [9] | 0.7610.122 | 0.01 |

| VM-ESC | 0.7430.025 | 0.4780.101 |

| TMBS-ESC | 0.7620.023 | 0.01 |

| Method | Dice↑ | HD95↓ | SDlogJ↓ |

|---|---|---|---|

| VTN [25] | 0.8270.013 | 1.7220.318 | 0.1210.015 |

| ConAdam [41] | 0.8460.016 | 1.5000.304 | 0.0670.005 |

| VM [6] | 0.8470.014 | 1.5460.306 | 0.1330.021 |

| ClapIRN [29] | 0.8610.015 | 1.5140.337 | 0.0720.007 |

| TM [9] | 0.8620.014 | 1.4310.282 | 0.1280.021 |

| TM-SC | 0.8620.014 | 1.4490.310 | 0.0840.005 |

| Method | TRE↓ | STRE↓ | ROB↑ | FV↓ | AJ×102↓ |

|---|---|---|---|---|---|

| Initial | 6.8640.996 | - | - | - | - |

| ∗DIRAC [31] | 2.7600.247 | 0.2740.027 | 0.7760.055 | 0.0250.009 | 4.2422.954 |

| †DIRAC-SC | 2.7190.259 | 0.2180.046 | 0.7950.034 | 0.0220.005 | 3.0011.314 |

Atlas-to-subject registration. We split in total 576 T1–weighted brain MRI images from the Information eXtraction from Images (IXI) [1] database into 403, 58, and 115 volumes for training, validation, and test sets. The moving image is an atlas brain MRI obtained from [23]. FreeSurfer [14] was used to pre-process the MRI volumes. The pre-processed image volumes are all cropped to the size of 160×192×224. Label maps, including 30 anatomical structures, are obtained using FreeSurfer for evaluating registration. Besides CNN-based models [6, 23, 10], we also include other transformer-based deep methods [10, 51, 48, 46] as baselines following [9]. The comparison results are presented in Tab. 4, and qualitative comparisons in Fig. 6. For cross-sanity check, we set and . Overall, we can observe models with sanity checks achieve better diffeomorphisms without impairing any Dice performance and even improve the performance for the VM model. We show qualitative comparisons in Fig. 7. Compared to the naive counterparts, our sanity-checked model produces more regular maps from both directions and reduces huge sanity errors, comparably using error heatmaps for both self-sanity and cross-sanity errors.

Subject-to-subject registration. OASIS dataset [26, 21] contains a total of 451 brain T1 weighted MRI images, with 394/19/38 images used for training/validation/testing purposes, respectively. The pre-processed image volumes are all cropped to the size of 160×192×224. Label maps for 35 anatomical structures are provided using FreeSurfer [14] for evaluation. For the cross-sanity check, we set and . The results are shown in Tab. 4. In terms of main metrics, we achieve on-par performance with all the state-of-the-art methods, further certifying our sanity checks will not contaminate the performance of models. We also show our qualitative comparison in Fig. 8, indicating that our sanity-enforced image registration results in less self-sanity error from the self-sanity error map comparison and more regular maps in the deformed grid. All these comparisons prove the efficacy of our regularization-based sanity-enforcer to prevent both sanity errors.

Pre-operative and post-recurrence scans registration. BraTSReg [3] dataset contains 160 pairs of pre-operative and follow-up brain MR scans of glioma patients taken from different time points. Each time point contains native T1, contrast-enhanced T1-weighted, T2-weighted, and FLAIR MRI. Within the dataset, 140 pairs of scans are associated with 6 to 50 manual landmarks in both scans. The other 20 pairs of scans only have landmarks in the follow-up scan. Following [31], we create a five-fold schema where each fold has 122/10/28 for training/validation/test, respectively. We set and in the cross-sanity check since DIRAC predicts normalized displacement (range 0 to 1). We take an average of five folds to report our results in Tab. 4. All baselines and our models use the same training setup. We specifically focus on the model training part and directly train all models on data downloaded from the official website [5] without pre-affine and post-processing steps mentioned in their paper [30]. The best model is selected on the validation set, which has the lowest TREs. Despite the high performance of the baseline, we can observe that the substitution by cross-sanity check and the addition of self-sanity check enable all metrics’ improvements to be claimed as state-of-the-art. We also show qualitative comparisons in Fig. 9, where the comparison between SSE error maps demonstrates the effectiveness of our self-sanity check.

4.2 Sanity Analysis

We conduct a broad sanity analysis on a wide range of models on the IXI dataset, including popular models (VM [6] and TM [9]), model with cycle consistency (CM [23]), models with inverse consistency (ICNet [50] and ICON [16]), probabilistically-formulated models (VM-diff [13] and TM-diff [9]), and models with diffeomorphic add-ons (MIDIR [34] and TMBS [9]). The results are shown in Tab. 5. We find that models with probabilistic formulation or cycle consistency have higher self-sanity, whereas the other models are insensitive to this scenario and have an inferior SDice. However, these probabilistic formulated models suffer from an relatively low Dice score, refraining from practical usage. For CM, albeit low self-sanity error, the correspondence error between image pairs is not negligible. We also find it interesting that models with diffeomorphic add-ons still suffer from large sanity errors, despite their ability to produce a diffeomorphic/regular map, caused by not modeling different mappings from both directions. Overall, our behavior studies show that most models suffer from inferior Dice/SDice performance, lower diffeomorphism, or significant sanity errors. Our proposed sanity checks improve performance from every aspect, producing a more regular map and preventing sanity errors.

| Method | Main Metric↑ | Diffeomorphism↓ | Sanity Error↓ | |||

|---|---|---|---|---|---|---|

| Dice | SDice | FV | AJ×104 | SSE×10-1 | CSE | |

| PVT [46] | 0.727 | 0.695 | 1.858 | 3.64 | 52.95 | 19.43 |

| CoTr [48] | 0.735 | 0.846 | 1.298 | 2.10 | 6.28 | 21.37 |

| MIDIR [34] | 0.742 | 0.850 | 0.1 | 0.1 | 6.75 | 15.75 |

| VM [6] | 0.732 | 0.890 | 1.522 | 3.00 | 5.16 | 16.55 |

| VM-diff [13] | 0.580 | 1.000 | 0.1 | 0.1 | 0.13 | 0.1 |

| ICNet [50] | 0.742 | 0.849 | 0.854 | 1.90 | 13.23 | 7.48 |

| CM [23] | 0.737 | 1.000 | 1.719 | 3.44 | 1.18 | 19.47 |

| VIT-V-Net [10] | 0.734 | 0.908 | 1.609 | 2.97 | 14.52 | 12.98 |

| nnFormer [51] | 0.747 | 0.799 | 1.595 | 2.90 | 13.31 | 20.68 |

| ICON [16] | 0.752 | 0.899 | 0.695 | 0.90 | 7.31 | 5.68 |

| TM [9] | 0.754 | 0.873 | 1.579 | 2.75 | 85.63 | 19.62 |

| TM-diff [9] | 0.594 | 1.000 | 0.1 | 0.1 | 0.16 | 0.1 |

| TMBS [9] | 0.761 | 0.903 | 0.1 | 0.02 | 23.84 | 14.08 |

| VM-ESC | 0.743 | 1.000 | 0.478 | 0.43 | 0.1 | 2.55 |

| TMBS-ESC | 0.762 | 1.000 | 0.1 | 0.1 | 0.1 | 2.99 |

4.3 Ablation Study

Cross-sanity error under strict inverse consistency.

| [46] | [48] | [6] | [23] | [10] | [51] | [50] | [16] | [9] | [6] | ||

|---|---|---|---|---|---|---|---|---|---|---|---|

| 19.24 | 10.02 | 5.63 | 7.58 | 11.27 | 10.44 | 3.46 | 2.78 | 22.61 | 7.31 | 1.96 | 0.92 |

We testify the performance under strict inverse consistency as in Def. 1, the same as CICE in [11], shown in Tab. 6. The reductions of strict inverse consistency error compared using relaxed inverse consistency are caused by many small errors taken into account for calculating the average, where our sanity-checked methods still show better performance, compared to all models, including the model trained using explicitly strict inverse consistency, e.g. [16].

Sanity checks loss weight study. We derive an upper bound for our saner registration in Lemma 4. We show in the study that we can utilize this upper bound to guide us to set appropriate loss weight for the cross-sanity check. For fast verification, we randomly sample 50 subjects from the training set of the IXI dataset and validate/test in the full validation/test set. We also include the self-sanity check loss weight here for comparing purposes. The experimental results are shown in Tab. 7. Compared to , is insensitive to weight change. As we discussed earlier, if we set higher loss weight to cross-sanity check, for example, 0.01/0.001, approximately having upper bounds as 0.1/0.01, respectively. This creates a huge difference when we train our models. This high tolerance for error results in significantly degenerated performance for Dice, where our derived upper bound gives useful guidance for the setting of loss weights. More discussions can be found in Appendix.

| Dice↑ | SDice↑ | FV↓ | AJ×104↓ | SSE×10-1↓ | CSE↓ | ||

|---|---|---|---|---|---|---|---|

| 5e-4 | 1e-3 | 0.720 | 0.957 | 0.913 | 1.24 | 0.658 | 6.96 |

| 1e-2 | 0.720 | 0.983 | 0.920 | 1.27 | 0.299 | 6.77 | |

| 1e-1 | 0.719 | 1.000 | 0.896 | 1.22 | 0.1 | 6.73 | |

| 1e-3 | 1e-3 | 0.721 | 0.967 | 0.422 | 0.38 | 0.572 | 3.43 |

| 1e-2 | 0.720 | 0.989 | 0.426 | 0.40 | 0.301 | 3.46 | |

| 1e-1 | 0.721 | 1.000 | 0.443 | 0.40 | 0.1 | 3.43 | |

| 1e-2 | 1e-3 | 0.473 | 0.912 | 0.1 | 0.1 | 1.966 | 0.1 |

| 1e-2 | 0.420 | 0.980 | 0.1 | 0.1 | 1.210 | 0.1 | |

| 1e-1 | 0.406 | 1.000 | 0.1 | 0.1 | 0.209 | 0.1 |

| Method | Dice↑ | SDice↑ | FV↓ | AJ×104↓ | SSE×10-1↓ | CSE↓ |

|---|---|---|---|---|---|---|

| VM | 0.732 | 0.890 | 1.522 | 3.00 | 5.156 | 16.55 |

| VM-E | 0.742 | 0.919 | 1.574 | 3.04 | 2.647 | 26.97 |

| VM-ES | 0.740 | 1.000 | 1.442 | 2.72 | 0.050 | 25.32 |

| VM-EC | 0.743 | 0.950 | 0.447 | 0.39 | 1.178 | 2.61 |

| VM-ESC | 0.743 | 1.000 | 0.478 | 0.43 | 0.1 | 2.55 |

| TM | 0.862 | 0.925 | 0.752 | 1.52 | 3.069 | 10.72 |

| TM-S | 0.861 | 1.000 | 0.777 | 1.66 | 0.018 | 11.43 |

| TM-C | 0.862 | 0.948 | 0.246 | 0.26 | 0.991 | 2.93 |

| TM-SC | 0.862 | 1.000 | 0.307 | 0.35 | 0.1 | 3.17 |

Model ablation study. We study our proposed sanity checks on IXI and OASIS validation datasets and report the results in Tab. 8. We show that each sanity check reduces the corresponding errors without compromising other metrics’ performance compared to their naive counterparts. We also find that the bidirectional registration and cross-sanity check can also mitigate self-sanity errors to a certain level, but cannot eliminate such errors completely, proving that our self-sanity check is necessary to regulate the models’ behavior for mapping identical image pairs. More experiments such as parameters and numerical study, sanity preservation study, statistical significance of results, ablative qualitative results, etc, can be seen in the Appendix.

5 Conclusion

This paper focuses on correcting learning-based deep models’ behaviors on single moving-fixed image pairs. In our model sanity analysis, we find that most existing models suffer from significant sanity errors, with no exceptions for models equipped with diffeomorphic add-ons. We show that this sanity-checked model can prevent such sanity errors without contaminating any registration performance. While the experimental results certify the effectiveness of our proposed sanity checks, our sanity checks are supported by a set of theoretical guarantees derived in this paper. We first show that there is an error upper bound for our sanity-checked formulation to the optimal condition. Then, we show that this upper bound can give significant guidance to train a sanity-checked registration model, where we believe it is beneficial for preventing overly optimization on image similarities when training deep registration models.

Acknowledgements

Authors thank anonymous reviewers for their constructive comments and help from Yuzhang Shang for proofreading the rebuttal. Authors would also want to thank Junyu Chen, Tony C. W. Mok for their generous help with their papers and codes, and learn2reg challenge organizer for evaluating our models. This work was supported by NSF SCH-2123521. This article solely reflects the opinions and conclusions of its authors and not the funding agency.

References

- [1] Ixi brain, 2022. https://brain-development.org/ixi-dataset.

- [2] Brian B Avants, Charles L Epstein, Murray Grossman, and James C Gee. Symmetric diffeomorphic image registration with cross-correlation: evaluating automated labeling of elderly and neurodegenerative brain. MIA, 12(1):26–41, 2008.

- [3] Bhakti Baheti, Diana Waldmannstetter, Satrajit Chakrabarty, Hamed Akbari, Michel Bilello, Benedikt Wiestler, Julian Schwarting, Evan Calabrese, Jeffrey Rudie, Syed Abidi, et al. The brain tumor sequence registration challenge: Establishing correspondence between pre-operative and follow-up mri scans of diffuse glioma patients. arXiv:2112.06979, 2021.

- [4] Ruzena Bajcsy and Stane Kovačič. Multiresolution elastic matching. CVGIP, 46(1):1–21, 1989.

- [5] Spyridon Bakas. Brain tumor sequence registration challenge, 2022. https://www.med.upenn.edu/cbica/brats-reg-challenge.

- [6] Guha Balakrishnan, Amy Zhao, Mert R Sabuncu, John Guttag, and Adrian V Dalca. Voxelmorph: a learning framework for deformable medical image registration. TMI, 38(8):1788–1800, 2019.

- [7] M Faisal Beg, Michael I Miller, Alain Trouvé, and Laurent Younes. Computing large deformation metric mappings via geodesic flows of diffeomorphisms. IJCV, 61(2):139–157, 2005.

- [8] Xiaohuan Cao, Jianhua Yang, Jun Zhang, Qian Wang, Pew-Thian Yap, and Dinggang Shen. Deformable image registration using a cue-aware deep regression network. TBE, 65(9):1900–1911, 2018.

- [9] Junyu Chen, Eric C Frey, Yufan He, William P Segars, Ye Li, and Yong Du. Transmorph: Transformer for unsupervised medical image registration. MIA, page 102615, 2022.

- [10] Junyu Chen, Yufan He, Eric C Frey, Ye Li, and Yong Du. Vit-v-net: Vision transformer for unsupervised volumetric medical image registration. arXiv:2104.06468, 2021.

- [11] Gary E Christensen, Xiujuan Geng, Jon G Kuhl, Joel Bruss, Thomas J Grabowski, Imran A Pirwani, Michael W Vannier, John S Allen, and Hanna Damasio. Introduction to the non-rigid image registration evaluation project (nirep). In WBIR, 2006.

- [12] Gary E Christensen, Sarang C Joshi, and Michael I Miller. Volumetric transformation of brain anatomy. TMI, 16(6):864–877, 1997.

- [13] Adrian V Dalca, Guha Balakrishnan, John Guttag, and Mert R Sabuncu. Unsupervised learning of probabilistic diffeomorphic registration for images and surfaces. MIA, 57:226–236, 2019.

- [14] Bruce Fischl. Freesurfer. Neuroimage, 62(2):774–781, 2012.

- [15] Kexue Fu, Shaolei Liu, Xiaoyuan Luo, and Manning Wang. Robust point cloud registration framework based on deep graph matching. In CVPR, 2021.

- [16] Hastings Greer, Roland Kwitt, Francois-Xavier Vialard, and Marc Niethammer. Icon: Learning regular maps through inverse consistency. In ICCV, 2021.

- [17] Daniel Grzech, Mohammad Farid Azampour, Ben Glocker, Julia Schnabel, Nassir Navab, Bernhard Kainz, and Loïc Le Folgoc. A variational bayesian method for similarity learning in non-rigid image registration. In CVPR, 2022.

- [18] Gabriel L Hart, Christopher Zach, and Marc Niethammer. An optimal control approach for deformable registration. In CVPR, 2009.

- [19] Mattias P Heinrich, Oskar Maier, and Heinz Handels. Multi-modal multi-atlas segmentation using discrete optimisation and self-similarities. VISCERAL Challenge@ ISBI, 1390:27, 2015.

- [20] Alessa Hering, Lasse Hansen, Tony C. W. Mok, Albert CS Chung, Hanna Siebert, Stephanie Häger, Annkristin Lange, Sven Kuckertz, Stefan Heldmann, Wei Shao, et al. Learn2reg: comprehensive multi-task medical image registration challenge, dataset and evaluation in the era of deep learning. TMI, 2022.

- [21] Andrew Hoopes, Malte Hoffmann, Douglas N Greve, Bruce Fischl, John Guttag, and Adrian V Dalca. Learning the effect of registration hyperparameters with hypermorph. MELBA, 3:1–30, 2022.

- [22] Junhwa Hur and Stefan Roth. Mirrorflow: Exploiting symmetries in joint optical flow and occlusion estimation. In ICCV, 2017.

- [23] Boah Kim, Dong Hwan Kim, Seong Ho Park, Jieun Kim, June-Goo Lee, and Jong Chul Ye. Cyclemorph: cycle consistent unsupervised deformable image registration. MIA, 71:102036, 2021.

- [24] Pengpeng Liu, Michael Lyu, Irwin King, and Jia Xu. Selflow: Self-supervised learning of optical flow. In CVPR, 2019.

- [25] Jinxin Lv, Zhiwei Wang, Hongkuan Shi, Haobo Zhang, Sheng Wang, Yilang Wang, and Qiang Li. Joint progressive and coarse-to-fine registration of brain mri via deformation field integration and non-rigid feature fusion. TMI, 41(10):2788–2802, 2022.

- [26] Daniel S Marcus, Tracy H Wang, Jamie Parker, John G Csernansky, John C Morris, and Randy L Buckner. Open access series of imaging studies (oasis): cross-sectional mri data in young, middle aged, nondemented, and demented older adults. Journal of cognitive neuroscience, 19(9):1498–1507, 2007.

- [27] Simon Meister, Junhwa Hur, and Stefan Roth. Unflow: Unsupervised learning of optical flow with a bidirectional census loss. In AAAI, 2018.

- [28] Marc Modat, Gerard R Ridgway, Zeike A Taylor, Manja Lehmann, Josephine Barnes, David J Hawkes, Nick C Fox, and Sébastien Ourselin. Fast free-form deformation using graphics processing units. Computer methods and programs in biomedicine, 98(3):278–284, 2010.

- [29] Tony C. W. Mok and Albert Chung. Conditional deformable image registration with convolutional neural network. In MICCAI, 2021.

- [30] Tony C. W. Mok and Albert Chung. Robust image registration with absent correspondences in pre-operative and follow-up brain mri scans of diffuse glioma patients. arXiv:2210.11045, 2022.

- [31] Tony C. W. Mok and Albert Chung. Unsupervised deformable image registration with absent correspondences in pre-operative and post-recurrence brain tumor mri scans. In MICCAI, 2022.

- [32] Xavier Pennec, Pascal Cachier, and Nicholas Ayache. Understanding the “demon’s algorithm”: 3d non-rigid registration by gradient descent. In MICCAI, 1999.

- [33] Zheng Qin, Hao Yu, Changjian Wang, Yulan Guo, Yuxing Peng, and Kai Xu. Geometric transformer for fast and robust point cloud registration. In CVPR, 2022.

- [34] Huaqi Qiu, Chen Qin, Andreas Schuh, Kerstin Hammernik, and Daniel Rueckert. Learning diffeomorphic and modality-invariant registration using b-splines. In MIDL, 2021.

- [35] Lei Qu, Yuanyuan Li, Peng Xie, Lijuan Liu, Yimin Wang, Jun Wu, Yu Liu, Tao Wang, Longfei Li, Kaixuan Guo, et al. Cross-modal coherent registration of whole mouse brains. Nature Methods, 19(1):111–118, 2022.

- [36] Daniel Rueckert, Luke I Sonoda, Carmel Hayes, Derek LG Hill, Martin O Leach, and David J Hawkes. Nonrigid registration using free-form deformations: application to breast mr images. TMI, 18(8):712–721, 1999.

- [37] Tanya Schmah, Laurent Risser, and François-Xavier Vialard. Left-invariant metrics for diffeomorphic image registration with spatially-varying regularisation. In MICCAI, 2013.

- [38] Dinggang Shen and Christos Davatzikos. Hammer: hierarchical attribute matching mechanism for elastic registration. TMI, 21(11):1421–1439, 2002.

- [39] Zhengyang Shen, Xu Han, Zhenlin Xu, and Marc Niethammer. Networks for joint affine and non-parametric image registration. In CVPR, 2019.

- [40] Zhengyang Shen, François-Xavier Vialard, and Marc Niethammer. Region-specific diffeomorphic metric mapping. NeurIPS, 2019.

- [41] Hanna Siebert, Lasse Hansen, and Mattias P Heinrich. Fast 3d registration with accurate optimisation and little learning for learn2reg 2021. In MICCAI, 2021.

- [42] Hessam Sokooti, Bob De Vos, Floris Berendsen, Boudewijn PF Lelieveldt, Ivana Išgum, and Marius Staring. Nonrigid image registration using multi-scale 3d convolutional neural networks. In MICCAI, 2017.

- [43] Tom Vercauteren, Xavier Pennec, Aymeric Perchant, and Nicholas Ayache. Diffeomorphic demons: Efficient non-parametric image registration. NeuroImage, 45(1):S61–S72, 2009.

- [44] François-Xavier Vialard, Laurent Risser, Daniel Rueckert, and Colin J Cotter. Diffeomorphic 3d image registration via geodesic shooting using an efficient adjoint calculation. IJCV, 97:229–241, 2012.

- [45] Jian Wang and Miaomiao Zhang. Deepflash: An efficient network for learning-based medical image registration. In CVPR, 2020.

- [46] Wenhai Wang, Enze Xie, Xiang Li, Deng-Ping Fan, Kaitao Song, Ding Liang, Tong Lu, Ping Luo, and Ling Shao. Pyramid vision transformer: A versatile backbone for dense prediction without convolutions. In ICCV, 2021.

- [47] Xuechun Wang, Weilin Zeng, Xiaodan Yang, Yongsheng Zhang, Chunyu Fang, Shaoqun Zeng, Yunyun Han, and Peng Fei. Bi-channel image registration and deep-learning segmentation (birds) for efficient, versatile 3d mapping of mouse brain. Elife, 10:63455, 2021.

- [48] Yutong Xie, Jianpeng Zhang, Chunhua Shen, and Yong Xia. Cotr: Efficiently bridging cnn and transformer for 3d medical image segmentation. In MICCAI, 2021.

- [49] Xiao Yang, Roland Kwitt, Martin Styner, and Marc Niethammer. Quicksilver: Fast predictive image registration–a deep learning approach. NeuroImage, 158:378–396, 2017.

- [50] Jun Zhang. Inverse-consistent deep networks for unsupervised deformable image registration. arXiv:1809.03443, 2018.

- [51] Hong-Yu Zhou, Jiansen Guo, Yinghao Zhang, Lequan Yu, Liansheng Wang, and Yizhou Yu. nnformer: Interleaved transformer for volumetric segmentation. arXiv:2109.03201, 2021.

- [52] S Kevin Zhou, Hoang Ngan Le, Khoa Luu, Hien V Nguyen, and Nicholas Ayache. Deep reinforcement learning in medical imaging: A literature review. MIA, 73:102193, 2021.

Appendix A Appendix

A.1 Comparison Between Diffeomorphic Methods and Inverse Consistent Methods

A.2 Proof of Thm. 1

Theorem 1 (Relaxed ideal symmetric registration via cross-sanity check).

An ideal symmetric registration meets

in which denotes the identity transformation. Then, a cross-sanity checked registration is a relaxed solution to the ideal registration, satisfying

Proof 1.

A straightforward explanation for ideal symmetric registration would be that the coordinates of one pixel () for image stay the same after two transformations: forward transformation (from to ) and backward transformation (from back to ). Therefore, we have the chain of the coordinates changing

| (A1) | ||||

By simplification, we have the ideal inverse consistency:

| (A2) | ||||

Different from the ideal inverse consistency, recall that our cross-sanity check in norm form is

| (A3) |

Suppose and . By expanding the cross-sanity check, we have

| (A4) | ||||

So, we have

| (A5) |

and

| (A6) |

Thus, we have

| (A7) | ||||

Finally, we derive the lower/upper bound as

| (A8) |

It is obvious that our cross-sanity check formulation (Eq. A8) is a relaxed version of the strict symmetry in Eq. A2. So far, we prove that under our cross-sanity check, the symmetry of and is bounded by and . When the strict symmetry is satisfied, our cross-sanity check is definitely satisfied. However, if our cross-sanity check is satisfied, it is not the other way around. We show in the experiments that our relaxed version of symmetry improves the overall results, quantitatively and qualitatively.

A.3 Proof of Thm. 2

Theorem 2 (Existence of the unique minimizer for our relaxed optimization).

Let and be two images defined on the same spatial domain , which is connected, closed, and bounded in with a Lipschitz boundary . Let be a displacement mapping from the Hilbert space to its dual space . Then, there exists a unique minimizer to the relaxed minimization problem.

Proof 2.

In reality, a meaningful deformation field cannot be unbounded. We first restrict to be a closed subset of :

| (A9) | ||||

We then seek solutions to the minimization problem in the space , and meanwhile satisfying our proposed checks. For short notations, we denote the minimization problem as

| (A10) | ||||

| s.t. | ||||

and is a positive constant. For , is bounded below and there exists a minimizing sequence satisfying

| (A11) | ||||

For identical inputs, we have , thus, the self-sanity is checked. For different image pairs, we have

| (A12) |

By definition, and its reversed displacement need to follow the constraint , so we have

| (A13) |

Here, we let , since our cross-sanity check is considered a tighter bound than . Thus, by choosing appropriate and , we can ensure that the cross-sanity is also checked, such that . Due to the fact that is precompact in space, there exists a convergent subsequence where we still denote as , and , such that , which is strongly in and a.e. in . Note that, either similarity functions (e.g. NCC) or distance functions (e.g. SSD) is naturally bounded in our image registration scenario, so that we can always have

| (A14) |

Besides, for the regularization, since is a bounded convergent sequence in , and a.e. in . By the dominant convergence theorem, we have

| (A15) |

Combining Eq. A14 with Eq. A15, we obtain

| (A16) |

Thus, is indeed a solution to the minimization problem. So far, we prove that there exists a minimizer of the modified optimization problem. We can then prove that is unique. Note that, we assume that the similarity operator is concave (e.g., NCC, negative NCC is convex) when it is not a distance operator (e.g., SSD is convex) on the transformation with a pair of . I.e., , i.e., learned by the model and conditioned on this specific pair to satisfy the proposed sanity checks. Therefore, since the data term (e.g. SSD) and the regularization ( regularization) are convex, together with the convex search space for , the uniqueness of the minimizer is proved.

A.4 Proof of Thm. 3

Theorem 3 (Loyalty of the sanity-checked minimizer).

Proof 3.

Since is optimal, thus we have that . Since , with elimination we have that . By substituting with and combining like terms, we have Eq. 12. Therefore, we prove the loyalty of the unique minimizer to the optimal minimizer , controlled by weight .

A.5 Proof of CS Error Upper Bound

Recall we want to prove that the CS error is upper bounded in the form of

Proof 4.

| Dice | SDice | FV | AJ×104 | SSE×10-1 | CSE | ||

| ∗0.1 | 5 | 0.7263 | 0.8850 | 1.5220 | 3.00 | 5.156 | 10.72 |

| †0.1 | 5 | 0.7223 | 0.8745 | 1.6130 | 3.30 | 4.337 | 11.05 |

| 0 | 0 | 0.7178 | 0.9486 | 1.4041 | 2.74 | 0.693 | 7.58→1.8176.12% |

| 0.1 | 0.1 | 0.7199 | 0.9533 | 1.2460 | 2.30 | 0.721 | 6.39→2.2165.41% |

| 1 | 0.7223 | 0.9654 | 1.2070 | 2.02 | 0.643 | 6.81→4.6032.45% | |

| 3 | 0.7233 | 0.9685 | 1.0160 | 1.51 | 0.596 | 8.70→5.1940.34% | |

| 5 | 0.7247 | 0.9588 | 0.8216 | 1.09 | 0.723 | 10.72→5.0353.07% | |

| 7 | 0.7226 | 0.9689 | 0.6632 | 0.77 | 0.642 | 12.53→4.5263.92% | |

| 8 | 0.7232 | 0.9600 | 0.6152 | 0.68 | 0.668 | 13.39→4.2768.11% | |

| 9 | 0.7226 | 0.9699 | 0.5534 | 0.58 | 0.572 | 14.25→4.0371.72% | |

| 11 | 0.7206 | 0.9665 | 0.4472 | 0.41 | 0.564 | 15.83→3.5377.70% | |

| 12 | 0.7211 | 0.9668 | 0.4219 | 0.38 | 0.572 | 16.55→3.4379.27% | |

| 13 | 0.7186 | 0.9742 | 0.4278 | 0.39 | 0.543 | 17.22→3.3980.31% | |

| 14 | 0.7189 | 0.9678 | 0.4182 | 0.37 | 0.543 | 17.80→3.2481.80% | |

| 16 | 0.7179 | 0.9681 | 0.4345 | 0.40 | 0.561 | 18.79→2.8984.62% | |

| 20 | 0.7124 | 0.9693 | 0.4668 | 0.44 | 0.577 | 20.36→2.8286.15% | |

| 0.01 | 5 | 0.7229 | 0.9677 | 0.9842 | 1.46 | 0.623 | 11.30→5.4351.94% |

| 0.10 | 0.7247 | 0.9588 | 0.8216 | 1.09 | 0.723 | 10.72→5.0353.07% | |

| 0.15 | 0.7230 | 0.9624 | 0.7891 | 0.99 | 0.591 | 10.42→4.7754.22% | |

| 0.20 | 0.7222 | 0.9656 | 0.7479 | 0.90 | 0.586 | 10.15→4.5355.37% | |

| 0.50 | 0.7218 | 0.9570 | 0.7276 | 0.86 | 0.642 | 8.64→3.4759.83% | |

| 1 | 0.7165 | 0.9353 | 0.9622 | 1.45 | 1.277 | 7.41→1.9373.95% |

A.6 Cross-sanity Check Numerical Study

We present our numerical study for and parameters in Tab. A1. This ablation study is conducted on the same subset of IXI training dataset described in the ablation study section but validate/test in the entire validation/test set. Our thought is that compared to the problem of enforcing strict inverse consistency over two different displacements, the relaxed version might be easier to solve, mathematically. Again, practically speaking, it is rather difficult to find one-to-one correspondence for every point in the moving-fixed image pair, which is the strict inverse consistency saying, relaxing or setting an error threshold can be effective from this perspective. Our error-bound formulation implicitly presents guidance for training such sanity-checked models.

Settings of and . A uniform estimate of and is possible, however, such a bound is not sharp, and it will lead to over-estimation of (the regularization parameter) for different applications. Hence, we prefer to derive the bounds on and on particular sets of applications, where we can easily find such bounds from the formulations. As stated in Thm. 1, and control the relaxation. We can also directly derive an upper bound from the check that constrains the ratio of two displacements. Since , then , is neglected for simplicity. These two bounds estimate ranges of and for our relaxation. E.g., we use existing models (e.g., VM) to predict ten samples randomly, and set to 0.15× maximum displacement; set to 0.1 for models outputting absolute displacements (e.g., VM and TM), or to 0.01 for models outputting relative displacements (e.g., DIRAC). Note that this only needs to be done once, while the previous experiment shows that the registrations are pretty robust among a range of and . Thus, it is safe to choose and within the range.

|

|

Model |

Dice |

SDice |

HD95 |

SDlogJ |

FV |

AJ×104 |

SSE×10-1 |

CSE |

|---|---|---|---|---|---|---|---|---|---|

|

|

VM |

0.714 | 0.848 | 3.039 | 0.118 | 0.831 | 1.52 | 6.24 | 7.49 |

|

VM-ESC |

0.759 | 1.000 | 2.563 | 0.080 | 0.173 | 0.16 | 0.00 | 2.86 | |

|

TMBS |

0.775 | 0.901 | 2.201 | 0.072 | 0.00 | 0.00 | 24.4 | 15.16 | |

|

TMBS-ESC |

0.783 | 1.000 | 2.144 | 0.061 | 0.00 | 0.00 | 0.00 | 3.73 | |

|

|

TM |

0.705 | 0.918 | 4.083 | 0.132 | 1.390 | 2.67 | 3.18 | 13.78 |

|

TM-SC |

0.718 | 1.000 | 3.903 | 0.097 | 0.674 | 0.84 | 0.01 | 5.58 |

A.7 Ablative Qualitative Comparisons

Qualitative comparisons between our ablative model variants are shown in Fig. A2.

A.8 Sanity-awareness Preservation Study on Cross-Dataset Scenario

We test whether sanity awareness is preserved in cross-dataset scenarios. We train our sanity-checked register in one dataset and then test it on different dataset so no overlapping between training and testing datasets. The results are shown in Tab. A2. Compared to methods without sanity checks, our sanity-checked models improve in every metric, certifying that our sanity checks do not harm the model training. Besides, the sanity-checked registers still preserve good sanity for preventing corresponding errors.

A.9 Experimental Results Statistical Significance

We specifically study whether our results are statistically significant, compared to the other strong baselines, e.g., ICON [16] and DIRAC [31]. We calculate p value using scipy package. Compared to ICON, our VM-ESC (p value: 0.0174) and TMBS-ESC (p value: 0.0280), while for DIRAC, our DIRAC-SC (p value: 0.0204), considering all metrics shown in the corresponding tables. All the p values are , indicating that our results are statistically significant.

A.10 Error Map Comparisons between Inverse Consistent Methods

Qualitative comparisons between inverse consistent methods on IXI dataset are shown in Fig. A3.

| Method | TRE↓ | STRE↓ | ROB↑ | FV↓ | AJ×102↓ |

|---|---|---|---|---|---|

| DIRAC | 2.7600.247 | 0.2740.027 | 0.7760.055 | 0.0250.009 | 4.2422.954 |

| DIRAC-C | 2.7210.262 | 0.2680.039 | 0.7910.044 | 0.0220.008 | 3.0121.442 |

| DIRAC-SC | 2.7190.259 | 0.2180.046 | 0.7950.034 | 0.0220.005 | 3.0011.314 |

A.11 Performance of Replacing DIRAC’s Inverse Error

We denote it as DIRAC-C, and report in Tab. A3.

A.12 Role of Image Similarity Loss

The image similarity loss still plays a very important role during training. The reason is that and are defined on displacements, to calculate such losses, we need to ensure that those displacements are meaningful, which is guaranteed via . Compared to the value of NCC (1), the cross-sanity error is relatively large (Tab. 4), and using small will not interfere with the optimizations.