Towards Understanding Emotions for Engaged Mental Health Conversations

Abstract.

Providing timely support and intervention is crucial in mental health settings. As the need to engage youth comfortable with texting increases, mental health providers are exploring and adopting text-based media such as chatbots, community-based forums, online therapies with licensed professionals, and helplines operated by trained responders. To support these text-based media for mental health, particularly for crisis care, we are developing a system to perform passive emotion-sensing using a combination of keystroke dynamics and sentiment analysis. Our early studies of this system suggest that the analysis of short text messages and keyboard typing patterns can provide emotion information that may be used to support both clients and responders. We use our preliminary findings to discuss the way forward for applying AI to support mental health providers in providing better care.

1. Introduction

Mental health conditions and suicide are rising global health issues. In 2023, suicide was reported as the fourth leading cause of death for youths aged 15 to 19, with more than 700,000 people dying due to suicide every year (World Health Organization, 2023). In response to the growing need for mental health services, online text-based helplines have emerged as a convenient and accessible option for individuals in distress, offering support around the clock (Hoermann et al., 2017). These platforms can be managed by AI-powered bots, licensed professionals, or trained staff.

Our work aims to inform the design of messaging platforms for mental health conversations, especially those used by manned helplines, by understanding the emotions of their users. As Bhattacharjee et al. (2023) found, different factors, including the client’s affective states, must be considered when building context-aware text messaging systems for mental health. Additionally, human responders who find fulfilment in helping clients may also experience distress, which can unintentionally affect the quality of care they provide (Willems et al., 2020). We investigate how incorporating emotion detection capabilities into these platforms could ease the burden on responders and support more effective client communication.

Toward this goal, we are developing an unobtrusive emotion detection system, integrating both keystroke typing patterns, i.e., keystroke dynamics (KD), and sentiment analysis, to fit seamlessly into existing text-based support platforms. Both analyses can be obtained without changing the inherent interaction mechanics of users. We also aim to explore how the emotions detected can be interpreted and presented.

We are motivated by two main research questions:

(1) Can KD and text data signal different emotions within a synchronous text conversation?

(2) What types of feedback can be presented to mental health helpline responders based on their own emotions and the client’s?

This paper presents the initial findings from (1) and their implications for AI-supported interventions in mental health and crisis care. A within-subjects experiment was conducted with 31 participants who used a text messaging interface to engage in a synchronous conversation with a member of the research team serving as a moderator. Each participant’s text and KD data were collected and analysed using machine learning for emotion detection.

2. Related Work

Previous studies have explored various methods and benefits of incorporating emotion capabilities in text messaging services. For example, Nguyen et al. (2022) investigated the integration of emotional analysis tools into an online communication platform and found that making emotion information available within the chat can enhance teamwork and relationship building. Work by Wang et al. (2004) with galvanic skin response sensors combined with user-selected tags indicates that visual feedback on a chat partner’s emotions can make conversations more engaging.

KD, which initially garnered interest as a biometric form of user authentication, has been examined for its potential to reveal traits such as honesty, gender, and personality characteristics with varying degrees of accuracy. Notably, two studies (Borj and Bours, 2019; Monaro et al., 2017) demonstrated that KD could predict whether individuals were lying, while others (Li et al., 2019; Buker et al., 2019; Buker and Vinciarelli, 2021) utilised keystroke data combined with stylometric features to predict users’ gender and personality traits. In line with these demonstrations of the potential of KD as a rich source of behavioural biometrics, other research, such as that by Epp et al. (2011), has also shown KD to be a promising method for detecting different emotional states. Recent studies (Maalej and Kallel, 2020; Yang and Qin, 2021) have built upon this, broadening the list of viable keystroke features and advanced analytical techniques.

Studies using KD for emotion detection commonly recommend using a multi-modal approach, i.e., fusing KD with other user data, for higher accuracy (Maalej and Kallel, 2020). Despite progress in combining KD with text analysis (Nahin et al., 2014; Tahir et al., 2022), real-time application in synchronous chat contexts remains underexplored. This gap presents a unique opportunity for our work to contribute by applying these methodologies specifically to engaged conversations for mental health care.

3. Methodology

3.1. Study Design

We devised a study that emulates a text-based mental health support platform to evaluate the efficacy of passive emotion detection methods. We developed a chat messaging application typical of mental health support helplines and had participants interact with a moderator through the application in a desktop computer setting.

Participants were asked to watch a short video intended to evoke a specific emotional reaction. After viewing the video, they discussed their emotional responses with the moderator. This activity was conducted three times per participant with three unique videos (randomly selected from our pool of eight), after which the moderator would bring the conversation to a close. The pool of eight short videos (42-62 seconds) was carefully chosen from the OpenLAV dataset (Israel et al., 2021) to elicit different emotional responses spanning all combinations of valence (negative, neutral, positive) and arousal (low, medium, high). This approach ensures a comprehensive range of emotional experiences for each participant and the overall study.

The chat application recorded all user key presses and text messages. Participants were assigned unique numbers to serve as identifiers within the study, maintaining user confidentiality. Any personally identifiable information (PII) used to conduct the experiments was stored separately from all study data and discarded afterwards.

The study involved 31 participants (18 female), aged 18-50 (mean age = 28.7, SD = 8.3), with basic computer proficiency and who use English as their primary input language. Since the emotion stimuli may trigger high levels of distress in participants, we excluded people with clinical mental health diagnoses like post-traumatic stress disorder or anxiety to minimise this risk in the initial study. Participants were compensated with $10 vouchers, and the protocol was approved by our university’s Institutional Review Board (IRB).

3.2. Data Analysis

In classifying emotions, there are several models that either characterise them within a dimensional space or differentiate emotions as discrete categories. These models provide us a theoretically grounded framework for understanding emotional states in mental health communications. For the dimensional model, we applied the circumplex model of affect (Posner et al., 2005) to classify the data points along a two-dimensional scale of valence and arousal, referring to the pleasantness of a stimulus and its intensity respectively. For the categorical model, we used Ekman’s basic emotions (Ekman, 1999) plus a non-emotion state to classify our data into seven categories: surprise, happiness, sadness, anger, disgust, fear, and neutral. Both models, offering a clear lens through which to view the complex landscape of human emotions, have been used in emotion detection methods using KD (Epp et al., 2011; Maalej and Kallel, 2020; Nahin et al., 2014).

3.2.1. Text Analysis

All our analyses rely on manual annotations as ground truth. Two annotators from the research team labelled each message sent by the participants with dimensional and categorical emotion values. Valence and arousal were each labelled on a three-point scale (-1, 0, 1) to denote negative to positive emotional states and low to high intensity levels, respectively. Up to three emotion labels were assigned per message for the categorical classification of emotions. To ensure the reliability of the manual annotations, we computed Krippendorff’s alpha (α) as a measure of inter-annotator agreement. We obtained a score of 0.75 for valence, 0.56 for arousal, and an average of 0.76 across the seven emotion labels. Discrepancies during the aggregation of the annotations were resolved by a random selection of labels from either annotator.
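As an illustration of the agreement measure, the following is a minimal sketch of Krippendorff's alpha computed from a coincidence matrix. It assumes a nominal distance metric and no missing labels; the source does not state which metric was used, and the function names `krippendorff_alpha_nominal` and `resolve_disagreement` are hypothetical. The second function mirrors the random tie-break described above.

```python
import random
from collections import Counter
from itertools import permutations


def krippendorff_alpha_nominal(units):
    """Krippendorff's alpha for nominal labels.

    units: one list of labels per message, one label per annotator.
    Assumption: nominal metric (labels either match or they do not).
    """
    coincidences = Counter()
    for labels in units:
        m = len(labels)
        if m < 2:
            continue  # a single label cannot be paired
        # Every ordered pair of labels from different annotators counts 1/(m-1).
        for a, b in permutations(labels, 2):
            coincidences[(a, b)] += 1.0 / (m - 1)

    totals = Counter()
    for (a, _b), weight in coincidences.items():
        totals[a] += weight
    n = sum(totals.values())

    observed = sum(w for (a, b), w in coincidences.items() if a != b)
    expected = sum(
        totals[a] * totals[b] for a in totals for b in totals if a != b
    ) / (n - 1)
    if expected == 0:
        return 1.0  # only one label value was ever used
    return 1.0 - observed / expected


def resolve_disagreement(label_a, label_b, rng=random):
    """Pick one annotator's label at random when the two disagree."""
    return label_a if label_a == label_b else rng.choice([label_a, label_b])


# Hypothetical valence labels from two annotators for four messages.
valence = [[1, 1], [1, 0], [0, 0], [0, 0]]
alpha = krippendorff_alpha_nominal(valence)
```

For ordinal scales such as valence and arousal, an ordinal or interval distance could be substituted for the match/mismatch test; that choice changes the resulting value.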

Utilising OpenAI’s GPT-4 Turbo (gpt-4-0125-preview), we harnessed the strengths of Large Language Models (LLMs) in nuanced emotion detection and linguistic adaptability by providing the same dataset and instructions as the human annotators, i.e., the full conversation logs with messages from the participants and the moderator. In this approach, the GPT-4 LLM also generated valence and arousal values and up to three dominant emotion labels. These were used for predicting the manually annotated emotional states.

3.2.2. Keystroke Dynamics

We transformed raw keystroke data from the in-person experiments into a feature set that could be used as input to predictive models, applying a method similar to (Epp et al., 2011). We extracted two categories of features: keystroke and content features.

Keystroke features included statistical measures of timing-related attributes, such as intervals between key presses and releases, as well as typing habits such as backspace frequency (to infer correction habits) and Enter key usage (to understand message pacing).
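The timing features described above can be sketched as follows. This is a minimal illustration, not the feature set used in the study: the event format `(key, press_ms, release_ms)`, the key names `"Backspace"` and `"Enter"`, and the function name `keystroke_features` are all assumptions.

```python
from statistics import mean, pstdev


def keystroke_features(events):
    """Summarise one message's keystrokes.

    events: list of (key, press_ms, release_ms) tuples ordered by press time
    (a hypothetical log format).
    """
    # Dwell time: how long each key is held down.
    dwell = [release - press for _key, press, release in events]
    # Flight time: gap between releasing one key and pressing the next.
    flight = [
        events[i + 1][1] - events[i][2] for i in range(len(events) - 1)
    ]
    n = len(events)
    return {
        "dwell_mean": mean(dwell) if dwell else 0.0,
        "dwell_std": pstdev(dwell) if dwell else 0.0,
        "flight_mean": mean(flight) if flight else 0.0,
        "flight_std": pstdev(flight) if flight else 0.0,
        "backspace_rate": sum(k == "Backspace" for k, _, _ in events) / n if n else 0.0,
        "enter_count": sum(k == "Enter" for k, _, _ in events),
    }


sample = [("h", 0, 80), ("i", 150, 220), ("Backspace", 400, 460), ("Enter", 600, 650)]
features = keystroke_features(sample)
```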

Content features encompassed punctuation, capitalisation, and sentence structure to observe authentic conversational typing habits in contrast to controlled text inputs. These features, combining both mechanical and compositional typing aspects, highlight the user’s natural interaction patterns and are pivotal in building machine learning models that can predict different emotional states.
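A minimal sketch of content features of this kind is shown below. The specific features, the sentence-splitting rule, and the name `content_features` are illustrative assumptions rather than the exact definitions used in the study.

```python
import re
import string


def content_features(message):
    """Count simple compositional properties of one text message."""
    letters = [c for c in message if c.isalpha()]
    # Treat runs of ., ! or ? as sentence boundaries (a rough heuristic).
    sentences = [s for s in re.split(r"[.!?]+", message) if s.strip()]
    return {
        "char_count": len(message),
        "punctuation_count": sum(c in string.punctuation for c in message),
        "uppercase_ratio": (
            sum(c.isupper() for c in letters) / len(letters) if letters else 0.0
        ),
        "sentence_count": len(sentences),
    }


example = content_features("I feel OK. Really!")
```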

Among random forest (RF) classifiers, support vector machines (SVMs), and multinomial logistic regression models, we achieved the best results using RF (average precision=0.796, F1 score=0.791).

3.2.3. Fusion of Text Analysis and KD

We combined the results from the LLM-based text analysis with KD to yield features which were used in another set of machine learning models that predicted the same labels. This fusion of features, specifically taken in a conversational context, is the novel method of emotion detection we are presenting in this work.
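One simple way to realise such a fusion is feature-level concatenation, sketched below. The source does not specify how the LLM outputs and KD features were combined, so the encoding (valence, arousal, and a multi-hot vector over the seven categories) and the names `text_features` and `fuse` are assumptions made for illustration.

```python
EMOTIONS = ["neutral", "happiness", "sadness", "disgust", "fear", "surprise", "anger"]


def text_features(valence, arousal, emotions):
    """Encode LLM outputs as numbers: two dimensional values plus a
    multi-hot vector marking which emotion labels (up to three) were assigned."""
    multi_hot = [1.0 if name in emotions else 0.0 for name in EMOTIONS]
    return [float(valence), float(arousal)] + multi_hot


def fuse(text_vector, kd_vector):
    """Early fusion: append the KD features to the text features."""
    return list(text_vector) + list(kd_vector)


# Hypothetical message: negative valence, high arousal, labelled fear and sadness,
# with two KD features (mean dwell and mean flight time in milliseconds).
fused = fuse(text_features(-1, 1, ["fear", "sadness"]), [65.0, 130.0])
```

The fused vector can then be passed to the same kind of classifier as the single-modality features.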

4. Preliminary Findings

In this study, we evaluated the performance of machine learning models that predicted valence and arousal levels and emotion categories in terms of overall accuracy, precision, recall, and F1 score. We compared the results between models that use features from the LLM-based text analysis (henceforth referred to as text-only models), models that use only KD features (henceforth referred to as KD-only models), and models that use a fusion of both (henceforth referred to as fusion models).
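The four metrics can be computed as in the sketch below, which assumes support-weighted averaging across classes. The source does not state the averaging scheme; weighted averaging is one convention under which recall coincides with accuracy, as the Accuracy and Recall rows of Table 1 do. The function name `weighted_scores` is hypothetical.

```python
from collections import Counter


def weighted_scores(y_true, y_pred):
    """Accuracy plus support-weighted precision, recall, and F1."""
    n = len(y_true)
    support = Counter(y_true)
    precision = recall = f1 = 0.0
    for label, count in support.items():
        true_pos = sum(t == label and p == label for t, p in zip(y_true, y_pred))
        predicted = sum(p == label for p in y_pred)
        p_l = true_pos / predicted if predicted else 0.0
        r_l = true_pos / count
        f_l = 2 * p_l * r_l / (p_l + r_l) if (p_l + r_l) else 0.0
        weight = count / n  # each class counts in proportion to its support
        precision += weight * p_l
        recall += weight * r_l
        f1 += weight * f_l
    accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / n
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical valence labels and predictions for six messages.
scores = weighted_scores([1, 1, 0, 0, -1, -1], [1, 0, 0, 0, -1, 1])
```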

From our interpretation of the results (partially presented in Table 1), models using the categorical emotion classification generally achieved better results than those using the dimensional one. Even the lowest performance from a categorical model (for neutral emotion, precision=0.756, F1 score=0.756) was higher than that of the better-performing dimensional model (for arousal, precision=0.746, F1 score=0.746). In predicting happiness, surprise, fear and anger, and the valence level, the fusion models had higher scores than either of their standalone counterparts. However, we noted that in these cases, the differences between the text-only and fusion models are small (mean difference in F1 score=0.007), suggesting that the contribution of KD could be minimal. Additionally, for the rest of the emotion classifications, text-only models either performed better than the fusion models (i.e., for neutral, sadness, disgust) or similarly (i.e., for arousal). Overall, the best results were from the fusion models predicting anger (precision=0.984, F1 score=0.985), surprise (precision=0.976, F1 score=0.967), and fear (precision=0.956, F1 score=0.956).

| | Valence | Arousal | Neutral | Happiness | Sadness | Disgust | Fear | Surprise | Anger |
|---|---|---|---|---|---|---|---|---|---|
| Accuracy | 0.660 | 0.791 | 0.756 | 0.830 | 0.938 | 0.956 | 0.961 | 0.975 | 0.988 |
| Precision | 0.660 | 0.746 | 0.756 | 0.831 | 0.933 | 0.951 | 0.956 | 0.976 | 0.984 |
| Recall | 0.660 | 0.791 | 0.756 | 0.830 | 0.938 | 0.956 | 0.961 | 0.975 | 0.988 |
| F1 score | 0.660 | 0.746 | 0.756 | 0.830 | 0.931 | 0.950 | 0.955 | 0.967 | 0.985 |

We integrated one of the trained KD-only models with our instant messaging application to verify that it can be utilised in an online chat context. The model was first converted into a format suitable for real-time inference and prediction of emotion values in our prototype. After a message is sent, the model successfully predicts valence and arousal values using keystroke features from the user. This capability can be further developed with LLM integration to perform emotion analysis using the fusion model. While additional testing needs to be done to enhance predictive accuracy, our early results demonstrate a promising outlook in conveying emotional information instantaneously in engaged mental health conversations.

5. Discussion

5.1. Improving Predictive Models

The results from the predictive models using KD indicate that there is room to increase accuracy given some modifications to our methodology. For example, the data can be analysed at a different level of granularity, i.e., per chunk of consecutive messages or per time-based interval rather than per message. Other keystroke features such as those based on digraphs and trigraphs commonly found in the local language (Li et al., 2019; Epp et al., 2011) can also be extracted for analysis. More importantly, in line with the rapid evolution of AI and the growing number of high-performing algorithms, other feature selection techniques and machine learning classifiers can be explored. As shown in other studies, deep learning methods can be used to analyse KD for emotion recognition (Yang and Qin, 2021).
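As an example of the digraph-based features mentioned above, the sketch below computes the mean press-to-press latency for each pair of consecutive keys. The event format `(key, press_ms)` and the name `digraph_latencies` are assumptions; restricting the output to digraphs common in a given language would be an additional filtering step.

```python
from collections import defaultdict
from statistics import mean


def digraph_latencies(events):
    """Mean time between the presses of each consecutive key pair.

    events: list of (key, press_ms) tuples ordered by time
    (a hypothetical log format).
    """
    buckets = defaultdict(list)
    for (key_1, t_1), (key_2, t_2) in zip(events, events[1:]):
        buckets[(key_1, key_2)].append(t_2 - t_1)
    return {pair: mean(latencies) for pair, latencies in buckets.items()}


typed = [("t", 0), ("h", 100), ("e", 220), ("t", 500), ("h", 640)]
latencies = digraph_latencies(typed)
```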

5.2. Comparison and Integration with Other Physiological Measures

Our work focused on emotion detection through text data and KD, yet the integration of physiological measures could deepen our understanding of emotional responses. During our study, we collected facial data via webcam and heart rate data via smartwatch, as studies have shown that these can be used to detect emotional states (Canedo and Neves, 2019) and measure stress levels (Hickey et al., 2021), respectively. This additional data and multi-modal approach could enrich our comparison of text and KD analysis with existing methods of understanding affective states.

Although our primary goal is unobtrusive emotion detection, leveraging increasingly ubiquitous devices such as smartwatches might enhance the accuracy of our proposed models. Future research could explore how additional physiological data alters our findings or provides new insights into how emotions affect communication patterns.

5.3. Understanding the Impact of Emotions in Engaged Mental Health Conversations

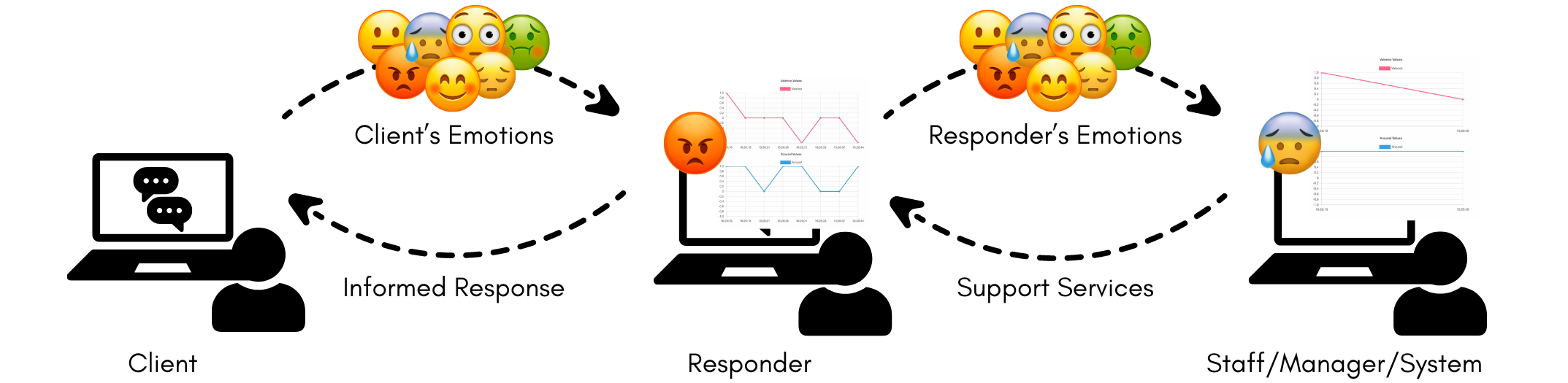

Ultimately, we aim to propose design considerations for emotion-aware online platforms (Figure 1), on which engaged mental health conversations can take place. Providing responders with this dynamic tool to accurately assess clients’ emotional states in real-time may have several benefits, including guided decision-making for urgent care or intervention and an enhanced quality of support. Such platforms can also detect distress in responders and offer response suggestions or self-care advice where necessary.

It is important to note that this technology is designed to aid human-supported services rather than replace the capabilities of a human crisis helpline responder. As with other AI-generated content, insights provided by this emotion detection system must be judged with vigilance and without forgetting the value of human empathy. We recommend that the system design implements features for offering suggestions rather than asserting facts, such as presenting confidence scores alongside predictions.
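A minimal sketch of this recommendation is given below: the prediction is worded as a suggestion, shown with its confidence, and flagged as unclear below a threshold. The wording, the 0.6 threshold, and the name `present_prediction` are illustrative assumptions, not part of the prototype described in this paper.

```python
def present_prediction(probabilities, min_confidence=0.6):
    """Turn class probabilities into a hedged message for a responder.

    probabilities: dict mapping emotion label to predicted probability.
    min_confidence: hypothetical cut-off below which the result is shown
    as unclear instead of as a suggestion.
    """
    label, confidence = max(probabilities.items(), key=lambda item: item[1])
    if confidence < min_confidence:
        return f"Emotion unclear (top guess: {label}, {confidence:.0%} confidence)"
    return f"Client may be feeling {label} ({confidence:.0%} confidence)"


confident = present_prediction({"fear": 0.82, "sadness": 0.12, "neutral": 0.06})
uncertain = present_prediction({"fear": 0.40, "sadness": 0.35, "neutral": 0.25})
```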

Future research could explore the effectiveness and evaluate the risks of various methods in which emotion-related information and other relevant insights are presented to users, and closely collaborate with mental health professionals to ensure our model’s seamless integration into existing frameworks.

Figure 1. Conceptual diagram showing the relationship(s) between a client, responder, and staff/manager/system on an emotion-aware platform.

5.4. Limitations and Challenges

We excluded modern text input capabilities such as auto-correct, auto-complete, and emojis to keep our study controlled. Minimising other methods of text input allowed us to capture keystrokes that closely match the recorded text messages, simplifying our data pre-processing steps. As this may have impacted participants’ usual flow of expression, future research might incorporate these input methods to assess their influence on KD and the overall message sentiment.

Furthermore, the limited data from a controlled setting hinders the creation of personalised models that account for a user’s unique texting and typing habits. Such tailored models can be more sensitive and responsive to subtle shifts in emotional states and can be achieved through a more extensive user study.

The use of LLMs to analyse text messages which may contain sensitive information raises data privacy and consent issues. Similar to the steps we took to protect participants in our study, we propose the inclusion of confidentiality measures such as data obfuscation, encryption, and secure storage. As observed in our study, user personal information is not necessary to predict emotions and may be removed or obfuscated in text messages before being provided to the models for processing. Further research could explore fine-tuning an open-source LLM for localised mental health services and running the system on private servers accessible only to the crisis care provider. To add an extra layer of protection, the system should also encrypt all data at rest and in transit. Running the analysis in real time also means that raw data can be deleted immediately, so that only the detected emotions, free of any potentially sensitive information, are stored.
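The obfuscation step can be sketched as a redaction pass applied before a message leaves the platform. The two patterns below (e-mail addresses and phone-like digit runs) and the name `redact` are illustrative assumptions; a deployed system would need far broader coverage, for example names and addresses.

```python
import re

# Illustrative patterns only; they are not a complete PII detector.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s-]{6,}\d")


def redact(message):
    """Replace e-mail addresses and phone-like numbers with placeholders."""
    message = EMAIL.sub("[EMAIL]", message)
    return PHONE.sub("[PHONE]", message)


cleaned = redact("Call me at 9123 4567 or mail jo@example.com")
```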

6. Conclusion

In this paper, we uncovered the benefits of an emotion-aware messaging platform in supporting engaged mental health conversations. We investigated the use of textual data and keyboard typing patterns as an unobtrusive method of detecting emotions under dimensional and categorical classifications. While our work showed promise in recognising emotion categories, especially with the help of LLMs for sentiment analysis, there is room to improve the contribution of KD in our predictive models. We conclude that emotion detection capabilities can be feasibly integrated into existing messaging platforms, yet the applications of this technology must be designed with care, especially in mental health and crisis response settings. Our work not only enhances technical methodologies in emotion detection by leveraging AI but also paves the way for more empathetic and responsive mental health services, setting a new standard for future digital communication and care.

References


- Bhattacharjee et al. (2023) Ananya Bhattacharjee, Joseph Jay Williams, Jonah Meyerhoff, Harsh Kumar, Alex Mariakakis, and Rachel Kornfield. 2023. Investigating the Role of Context in the Delivery of Text Messages for Supporting Psychological Wellbeing. In Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems. Association for Computing Machinery, New York, NY, USA. https://doi.org/10.1145/3544548.3580774

- Borj and Bours (2019) P. R. Borj and P. Bours. 2019. Detecting Liars in Chats using Keystroke Dynamics. In Proceedings of the 2019 3rd International Conference on Biometric Engineering and Applications. Association for Computing Machinery, 1–6. https://doi.org/10.1145/3345336.3345337

- Buker et al. (2019) A. A. N. Buker, G. Roffo, and A. Vinciarelli. 2019. Type Like a Man! Inferring Gender from Keystroke Dynamics in Live-Chats. IEEE Intelligent Systems 34, 6 (2019), 53–59. https://doi.org/10.1109/MIS.2019.2948514

- Buker and Vinciarelli (2021) A. A. N. Buker and A. Vinciarelli. 2021. I Feel it in Your Fingers: Inference of Self-Assessed Personality Traits from Keystroke Dynamics in Dyadic Interactive Chats. In 9th International Conference on Affective Computing and Intelligent Interaction (ACII). Association for Computing Machinery, 1–8. https://doi.org/10.1109/ACII52823.2021.9597389

- Canedo and Neves (2019) D. Canedo and António J. R. Neves. 2019. Facial Expression Recognition Using Computer Vision: A Systematic Review. Applied Sciences 9, 21 (Nov. 2019), 4678. https://doi.org/10.3390/app9214678

- Ekman (1999) Paul Ekman. 1999. Basic emotions. Handbook of cognition and emotion 98 (1999), 45–60.

- Epp et al. (2011) C. Epp, M. Lippold, and R.L. Mandryk. 2011. Identifying emotional states using keystroke dynamics. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems. Association for Computing Machinery. https://doi.org/10.1145/1978942.1979046

- Hickey et al. (2021) Blake Anthony Hickey, Taryn Chalmers, Phillip Newton, Chin-Teng Lin, David Sibbritt, Craig S. McLachlan, Roderick Clifton-Bligh, John Morley, and Sara Lal. 2021. Smart Devices and Wearable Technologies to Detect and Monitor Mental Health Conditions and Stress: A Systematic Review. Sensors 21, 10 (May 2021), 3461. https://doi.org/10.3390/s21103461

- Hoermann et al. (2017) Simon Hoermann, Kathryn L McCabe, David N Milne, and Rafael A Calvo. 2017. Application of Synchronous Text-Based Dialogue Systems in Mental Health Interventions: Systematic Review. Journal of Medical Internet Research 19, 8 (Aug. 2017), e267. https://doi.org/10.2196/jmir.7023

- Israel et al. (2021) L. Israel, P. Paukner, L. Schiestel, K. Diepold, and F. Schönbrodt. 2021. Open Library for Affective Videos (OpenLAV). https://doi.org/10.23668/PSYCHARCHIVES.5042

- Li et al. (2019) G. Li, P. R. Borj, L. Bergeron, and P. Bours. 2019. Exploring Keystroke Dynamics and Stylometry Features for Gender Prediction on Chat Data. In 42nd International Convention on Information and Communication Technology, Electronics and Microelectronics (MIPRO). IEEE, 1049–1054. https://doi.org/10.23919/MIPRO.2019.8756740

- Maalej and Kallel (2020) A. Maalej and I. Kallel. 2020. Does Keystroke Dynamics tell us about Emotions? A Systematic Literature Review and Dataset Construction. In 2020 16th International Conference on Intelligent Environments (IE). 60–67. https://doi.org/10.1109/IE49459.2020.9155004

- Monaro et al. (2017) M. Monaro, R. Spolaor, Q. Li, M. Conti, L. Gamberini, and G. Sartori. 2017. Type Me the Truth! Detecting Deceitful Users via Keystroke Dynamics. In Proceedings of the 12th International Conference on Availability, Reliability and Security. Association for Computing Machinery, 1–6. https://doi.org/10.1145/3098954.3104047

- Nahin et al. (2014) A.F.M. N. H. Nahin, J. M. Alam, H. Mahmud, and K. Hasan. 2014. Identifying emotion by keystroke dynamics and text pattern analysis. Behaviour & Information Technology 33, 9 (2014), 987–996. https://doi.org/10.1080/0144929X.2014.907343

- Nguyen et al. (2022) M. Nguyen, M. Laly, B. C. Kwon, C. Mougenot, and J. McNamara. 2022. Moody Man: Improving creative teamwork through dynamic affective recognition. In Extended Abstracts of the 2022 CHI Conference on Human Factors in Computing Systems. Association for Computing Machinery, 1–14. https://doi.org/10.1145/3491101.3519656

- World Health Organization (2023) World Health Organization. 2023. Suicide. https://www.who.int/news-room/fact-sheets/detail/suicide. Accessed: 12 Mar 2024.

- Posner et al. (2005) J. Posner, J. A. Russell, and B. S. Peterson. 2005. The circumplex model of affect: An integrative approach to affective neuroscience, cognitive development, and psychopathology. Development and Psychopathology 17, 03 (Sept. 2005). https://doi.org/10.1017/s0954579405050340

- Tahir et al. (2022) M. Tahir, Z. Halim, Atta Ur Rahman, M. Waqas, S. Tu, S. Chen, and Z. Han. 2022. Non-Acted Text and Keystrokes Database and Learning Methods to Recognize Emotions. ACM Trans. Multimedia Comput. Commun. Appl. 18, 2, Article 61 (Feb. 2022), 24 pages. https://doi.org/10.1145/3480968

- Wang et al. (2004) H. Wang, H. Prendinger, and T. Igarashi. 2004. Communicating emotions in online chat using physiological sensors and animated text. In CHI ’04 Extended Abstracts on Human Factors in Computing Systems. Association for Computing Machinery, 1171–1174. https://doi.org/10.1145/985921.986016

- Willems et al. (2020) R. Willems, C. Drossaert, P. Vuijk, and E. Bohlmeijer. 2020. Impact of Crisis Line Volunteering on Mental Wellbeing and the Associated Factors: A Systematic Review. Int. J. Environ. Res. Public Health 17, 5 (2020). https://doi.org/10.3390/ijerph17051641

- Yang and Qin (2021) L. Yang and S. F. Qin. 2021. A Review of Emotion Recognition Methods From Keystroke, Mouse, and Touchscreen Dynamics. IEEE Access 9 (2021), 162197–162213. https://doi.org/10.1109/ACCESS.2021.3132233