Towards Visual Distortion in Black-Box Attacks

Abstract

Constructing adversarial examples in a black-box threat model degrades the original images by introducing visual distortion. In this paper, we propose a novel black-box attack approach that can directly minimize the induced distortion by learning the noise distribution of the adversarial example, assuming only loss-oracle access to the black-box network. The quantified visual distortion, which measures the perceptual distance between the adversarial example and the original image, is introduced into our loss, and the gradient of the corresponding non-differentiable loss function is approximated by sampling noise from the learned noise distribution. We validate the effectiveness of our attack on ImageNet. Our attack results in much lower distortion than state-of-the-art black-box attacks and achieves success rate on InceptionV3, ResNet50 and VGG16bn. The code is available at https://github.com/Alina-1997/visual-distortion-in-attack.

1 Introduction

Adversarial attacks have become a well-recognized threat to existing Deep Neural Network (DNN) based applications. They inject a small amount of noise into a sample (e.g., image, speech, language) but degrade the model performance drastically [1, 2, 3]. With the continuous improvement of DNNs, such attacks could cause serious consequences in practical settings where DNNs are used. According to [4, 5], adversarial attacks are a practical concern in real-world problems, ranging from attacks on cell-phone cameras to attacks on self-driving cars.

According to the information that an adversary has about the target network, existing attacks roughly fall into two categories: white-box attacks that know all the parameters of the target network, and black-box attacks that have limited access to the target network. Each category can be further divided into several subcategories depending on the adversarial strength [6]. The attack proposed in this paper is a loss-oracle based black-box attack, where the adversary can obtain the output loss for supplied inputs. In real-world scenarios, it is sometimes difficult or even impossible to have full access to certain networks, which makes black-box attacks practical and increasingly attractive.

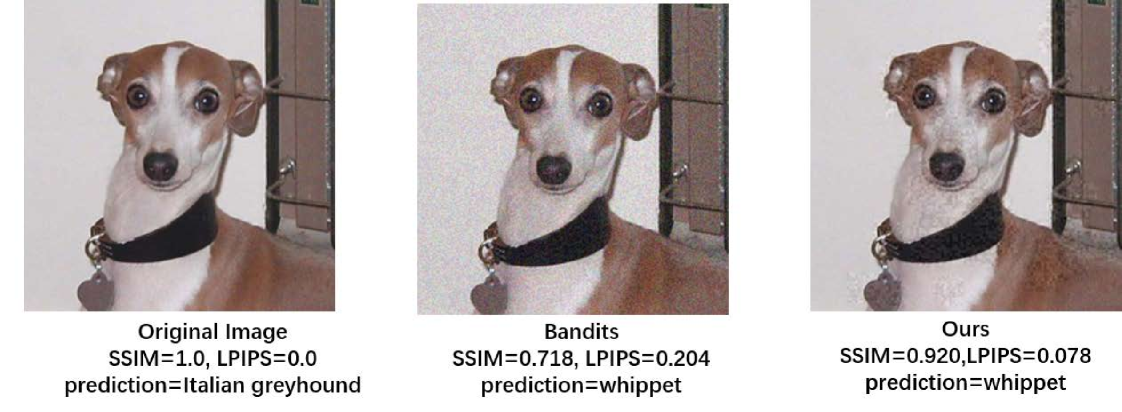

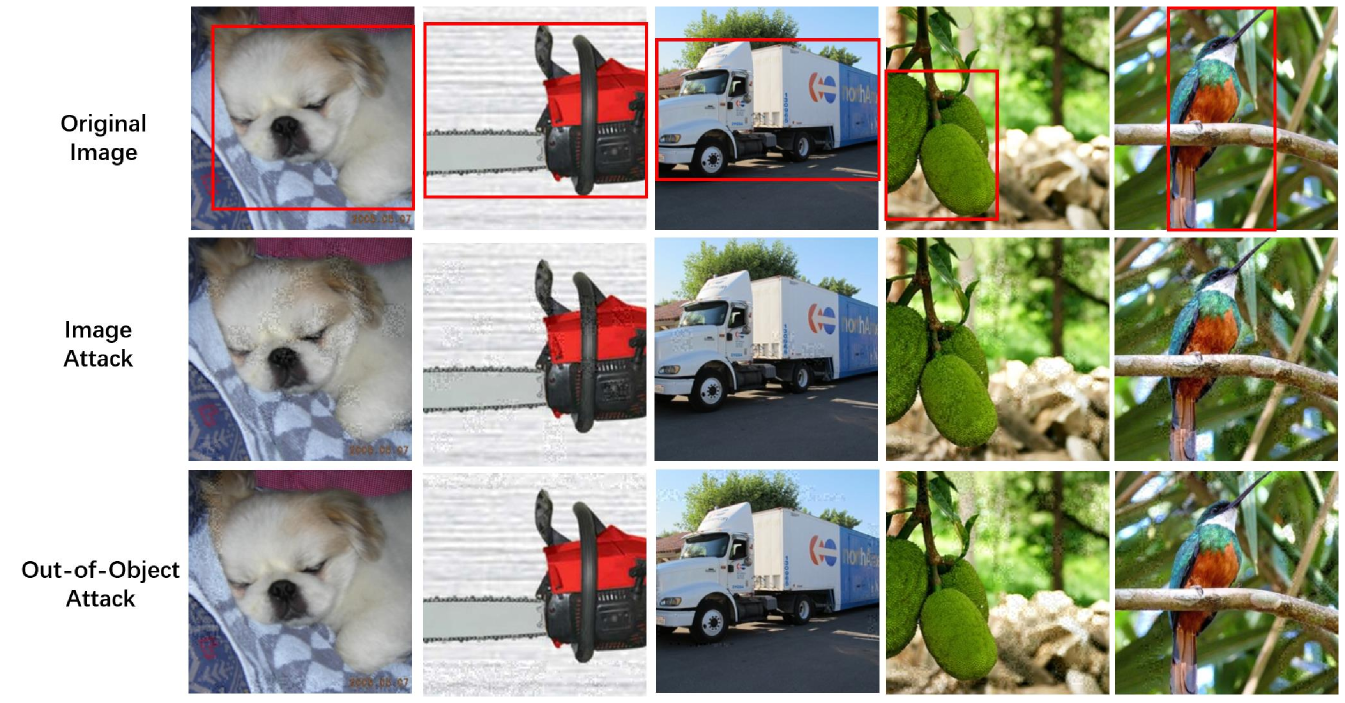

Black-box attacks have very limited or no information about the target network and are thus more challenging to perform. In the $\ell_\infty$-bounded setting, a black-box attack is usually evaluated on two aspects: the number of queries and the success rate. In addition, recent work [7] shows that the visual distortion of adversarial examples is also an important criterion in practice. Even under a small bound, perturbing pixels without considering the visual impact can make the distorted image visibly unpleasant. As shown in Fig. 1, an attack [8] under a small noise level causes relatively large visual distortion, and the perturbed image is easily distinguishable from the original one. Therefore, under the assumption that the visual distortion caused by the noise is related to the spatial distribution of the perturbed pixels, we take a different view from previous work and focus on explicitly learning a noise distribution based on its corresponding visual distortion.

In this paper, we propose a novel black-box attack that can directly minimize the induced visual distortion by learning the noise distribution of the adversarial example, assuming only loss-oracle access to the black-box network. The quantified visual distortion, which measures the perceptual distance between the adversarial example and the original image, is introduced into our loss, and the gradient of the corresponding non-differentiable loss function is approximated by sampling noise from the learned noise distribution. The proposed attack achieves a trade-off between visual distortion and query efficiency by adding the weighted perceptual distance metric to the original loss. Theoretically, we prove the convergence of our model under a convex or non-convex loss function. Experiments demonstrate the effectiveness of our attack on ImageNet. Our attack results in much lower distortion than other attacks and achieves success rate on InceptionV3, ResNet50 and VGG16bn. In addition, we show that our attack remains valid even when it is only allowed to perturb pixels outside the target object in a given image.

Our contributions are as follows:

- We are the first to introduce a perceptual loss in a non-differentiable way for the generation of less-distorted adversarial examples. The proposed method achieves a trade-off between visual distortion and query efficiency by adding the weighted perceptual distance metric to the original loss.

- Theoretically, we prove the convergence of our model.

- Through extensive experiments, we show that our attack results in much lower distortion than other attacks.

2 Related Work

Recent research on adversarial attacks [9, 10, 11] has made significant progress in developing strong and computationally efficient adversaries. In the following, we briefly introduce existing attack techniques in both the white-box and black-box settings.

2.1 White-box Attack

In a white-box attack, the adversary knows the details of a network, including the network structure and its parameter values. Goodfellow et al. [12] proposed the fast gradient sign method to generate adversarial examples. It is computationally efficient and serves as a baseline for attacks with additive noise. In [13], a functional adversarial attack is introduced that applies functional noise instead of additive noise to the image. Recently, Jordan et al. [7] stressed quantifying the perceptual distortion of adversarial examples by leveraging perceptual metrics to define an adversary. Unlike our method, which directly optimizes the metric, their model conducts a search over the parameters of several composed attacks. There are also attacks that sample noise from a noise distribution [14, 15], on the condition that gradients of the white-box network are accessible. Specifically, [14] utilizes particle approximation to optimize a convex energy function, and [15] formulates the attack problem as generating a sequence of adversarial examples in a Hamiltonian Monte Carlo framework.

In summary, white-box attacks are hard to detect or defend against [16]. At the same time, however, they suffer from the label-leaking and gradient-masking problems [2]. The former causes adversarially trained models to perform better on adversarial examples than on original images, and the latter neutralizes the gradient information that is useful to adversaries. The prerequisite of full access to a network is also sometimes difficult to satisfy in real-world scenarios.

2.2 Black-box Attack

Black-box attacks treat the target network as a black box and have limited access to it. We discuss loss-oracle based attacks here, where the adversary assumes only loss-oracle access to the black-box network.

Query Efficient Attacks.

Attacks of this kind roughly fall into three categories.

1) Methods that estimate the gradient of the black box. Some methods estimate the gradient by sampling around a certain point, which formulates the task as a continuous optimization problem. Tu et al. [17] searched for perturbations in the latent space of an auto-encoder. [18] utilizes feedback knowledge to alter the search directions for an efficient attack. Ilyas et al. [8] exploited prior information about the gradient. Al-Dujaili and O'Reilly [9] reduced the query complexity by estimating just the sign of the gradient. In [19, 20], the proposed methods perform the search in a constructed low-dimensional space. [21] is similar to our method in that it also explicitly defines a noise distribution. However, the distribution in [21] is assumed to be an isotropic normal distribution without considering visual distortion, whereas our method does not assume the distribution to take a specific form. We compare with their method in detail in the experiments. Other approaches in this category develop a substitute model [3, 22, 23] to approximate the behavior of the black box. By exploiting the transferability of adversarial examples [12], a white-box attack applied to the substitute model can be transferred to the black box. These approaches assume only label-oracle access to the target network, but training the substitute model requires either access to the training dataset of the black box or the collection of a new dataset.

2) Methods based on discrete optimization. In [24, 9], an image is divided into regular grids, and the attack is performed and refined on each grid. Meunier et al. [25] adopted the tiling trick by adding the same noise to small square tiles in the image.

3) Methods that leverage evolutionary strategies or random search [25, 26]. In [26], the noise value is updated by a square-shaped random search at each query. Meunier et al. [25] developed a set of attacks based on evolutionary algorithms, using both continuous and discrete optimization.

Attacks that Consider Visual Impact.

Query-efficient black-box attacks usually do not consider the visual impact of the induced noise, so the adversarial example may suffer from significant visual distortion. Similar to our work, some research addresses the perceptual distance between the adversarial examples and the original image. [27, 28] introduce Generative Adversarial Network (GAN) based adversaries, where the gradient of the perceptual distance in the generator is computed through backpropagation. [29, 30] also require the adopted perceptual distance metric to be differentiable. Computing the gradients of a complex perceptual metric at each query might be computationally expensive [31], and is not possible for some rank-based metrics [32]. Unlike these methods, our approach treats the perceptual distance metric as a black box, saving the effort of computing its gradients, and minimizes the distance by sampling from a learned noise distribution. On the other hand, [33, 34] present semantic perturbations for adversarial attacks. The produced noise map is semantically meaningful to humans, whereas the image content of the adversarial example is distinct from that of the original image. Unlike [33, 34], which focus on semantic distortion, our method addresses visual distortion and aims to generate adversarial examples that are visually indistinguishable from the original image.

3 Method

3.1 Learning Noise Distribution Based on Visual Distortion

An attack model is an adversary that constructs adversarial examples against certain networks. Let $f$ be the target network that accepts an input $x$ and produces an output $f(x)$. $f(x)$ is a vector and $f_i(x)$ represents its $i$-th entry, denoting the score of the $i$-th class. $y = \arg\max_i f_i(x)$ is the predicted class. Given a valid input $x$ and the corresponding predicted class $y$, an adversarial example $x'$ [35] is similar to $x$ yet results in an incorrect prediction $\arg\max_i f_i(x') \neq y$. In an additive attack, an adversarial example is a perturbed input with additive noise $\delta$ such that $x' = x + \delta$. Generating an adversarial example is therefore equivalent to producing a noise map $\delta$ that causes a wrong prediction for the perturbed input. Thus a successful attack is to find $\delta$ such that $\arg\max_i f_i(x + \delta) \neq y$. Since this constraint is highly non-linear, the loss function is usually rephrased in a different form [5]:

$$\ell(\delta) = \max\Big(f_y(x + \delta) - \max_{i \neq y} f_i(x + \delta),\ 0\Big) \tag{1}$$

The attack is successful when $\ell(\delta) = 0$. Note that such a loss does not take the visual impact into consideration, so the adversarial example may suffer from significant visual distortion. In order to constrain the visual distortion caused by the difference between $x$ and $x + \delta$, we add a perceptual distance metric $d(\cdot, \cdot)$ to the loss function with a predefined hyperparameter $\lambda$:

$$L(\delta) = \ell(\delta) + \lambda\, d(x, x + \delta) \tag{2}$$

where a smaller $d(x, x + \delta)$ indicates less visual distortion. $d$ can be any metric that measures the perceptual distance between $x$ and $x + \delta$, such as the well-established 1-SSIM [36] or LPIPS [37]. $\lambda$ controls the trade-off between a successful attack and the visual distortion caused by the attack. The effects of $\lambda$ are further discussed in Section 4.1.
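A minimal NumPy sketch of Eqs. (1) and (2), assuming the black box returns a vector of class scores and that `dist` is any callable perceptual metric. The function names, and the mean-absolute-difference metric in the usage lines, are hypothetical stand-ins (not 1-SSIM or LPIPS). Because `dist` is only evaluated and never differentiated, it can itself be a black box.

```python
import numpy as np

def margin_loss(scores: np.ndarray, y: int) -> float:
    """Eq. (1): max(f_y - max_{i != y} f_i, 0); zero once another class wins."""
    others = np.delete(scores, y)              # scores of every class except y
    return float(max(scores[y] - others.max(), 0.0))

def total_loss(scores, y, x, x_adv, lam, dist):
    """Eq. (2): margin loss plus lam times the perceptual distance d(x, x_adv)."""
    return margin_loss(scores, y) + lam * dist(x, x_adv)

# Usage with a hypothetical stand-in metric (mean absolute pixel difference).
mean_abs = lambda a, b: float(np.abs(a - b).mean())
scores = np.array([2.0, 1.5, 0.3])             # class 0 is still predicted
x = np.zeros((2, 2, 3))
x_adv = x + 0.1
print(margin_loss(scores, 0))                  # 0.5
print(total_loss(scores, 0, x, x_adv, 10.0, mean_abs))
```

Only the margin term decides success; the $\lambda d$ term merely ranks candidate noise maps by how visible they are.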

Minimizing the above loss function faces the challenge that $L(\delta)$ is not differentiable: the black-box adversary does not have access to the gradients of $f$, and the metric $d$ might be calculated in a non-differentiable way. To address this problem, we explicitly assume a flexible noise distribution of $\delta$ in a discrete space, in the sense that the noise takes discrete values, each with its own probability, so that the gradient of $L$ can be estimated by sampling from this distribution. Suppose that $\delta$ follows a distribution parameterized by $\theta$, i.e., $\delta \sim \pi(\delta \mid \theta)$. For the $j$-th pixel in an image, we let its noise distribution be $\pi(\delta_j \mid \theta_j) = \mathrm{softmax}(\theta_j)$, where $\theta_j$ is a vector and each element of $\mathrm{softmax}(\theta_j)$ denotes the probability of one noise value. By sampling noise from $\pi(\delta \mid \theta)$, $\theta$ can be learned to minimize the expectation of the above loss such that the attack is successful (i.e., the predicted label is altered) and the produced adversarial example is less distorted (i.e., $d(x, x + \delta)$ is small):

$$\min_{\theta}\ \mathbb{E}_{\delta \sim \pi(\delta \mid \theta)}\big[L(\delta)\big] \tag{3}$$

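For the $j$-th pixel, we define the sample space of its noise to be a set of discrete values ranging from $-\epsilon$ to $\epsilon$, where $\epsilon$ is the upper bound of the noise: $\{-\epsilon, -\epsilon + \frac{\epsilon}{K}, \dots, \epsilon - \frac{\epsilon}{K}, \epsilon\}$, where $K$ is the sampling frequency and $\frac{\epsilon}{K}$ is the sampling interval. The noise value of the $j$-th pixel is sampled from this sample space following $\delta_j \sim \pi(\delta_j \mid \theta_j)$.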
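The sample space and the per-pixel sampling can be sketched in NumPy as below. The sketch assumes, as the surrounding text suggests, that the sample space has $2K+1$ evenly spaced values with interval $\epsilon/K$ and that each pixel's distribution is a softmax over its own logits $\theta_j$; the function names and the example values of `eps` and `K` are hypothetical. One noise value is drawn per pixel and copied to all three RGB channels, so $\theta$ has $W \times H \times (2K+1)$ entries.

```python
import numpy as np

def sample_space(eps: float, K: int) -> np.ndarray:
    """2K + 1 noise values from -eps to eps, spaced eps / K apart."""
    return np.linspace(-eps, eps, 2 * K + 1)

def softmax(theta: np.ndarray) -> np.ndarray:
    """Softmax over the last axis, shifted by the max for numerical stability."""
    z = np.exp(theta - theta.max(axis=-1, keepdims=True))
    return z / z.sum(axis=-1, keepdims=True)

def sample_noise_map(theta: np.ndarray, values: np.ndarray, rng: np.random.Generator):
    """Draw one value per pixel from softmax(theta[h, w]) and share it across RGB.

    theta has shape (H, W, 2K+1); returns an (H, W, 3) noise map and the
    (H, W) array of sampled indices into `values`.
    """
    cdf = softmax(theta).cumsum(axis=-1)
    u = rng.random(theta.shape[:-1] + (1,))                    # one uniform draw per pixel
    idx = np.minimum((u > cdf).sum(axis=-1), len(values) - 1)  # inverse-CDF lookup
    noise = values[idx]                                         # (H, W)
    return np.repeat(noise[..., None], 3, axis=-1), idx

rng = np.random.default_rng(0)
values = sample_space(eps=0.05, K=2)         # hypothetical eps and K
theta = np.zeros((4, 4, values.size))        # uniform initial distribution
noise, idx = sample_noise_map(theta, values, rng)
print(noise.shape, theta.size)               # (4, 4, 3) 80
```

Sharing one draw across the three channels is what keeps the parameter count at one categorical distribution per pixel rather than three.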

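Given $W$ and $H$, the width and height of an image, respectively, and since each pixel has its own noise distribution of length $2K+1$ (the size of its sample space), the number of parameters for the entire image is $W \times H \times (2K+1)$. Note that we do not distinguish color channels, in order to reduce the size of the sample space; otherwise the number of parameters would be tripled. Thus, the same noise value is sampled for each RGB channel of a pixel. To estimate $\theta$, we adopt the policy gradient [38] to make the above expectation differentiable with respect to $\theta$. Using REINFORCE with a baseline $b$, we have

$$\nabla_\theta\, \mathbb{E}_{\delta \sim \pi(\delta \mid \theta)}\big[L(\delta)\big] = \mathbb{E}_{\delta \sim \pi(\delta \mid \theta)}\big[(L(\delta) - b)\, \nabla_\theta \log \pi(\delta \mid \theta)\big], \tag{4}$$

which gives the differentiable loss function $J(\theta)$, evaluated on a sampled noise map $\delta \sim \pi(\delta \mid \theta)$:

$$J(\theta) = \big(L(\delta) - b\big) \log \pi(\delta \mid \theta), \tag{5}$$

where $b$ is introduced as a baseline with a specific meaning: 1) when $L(\delta) < b$, the sampled noise map returns a low loss, and its probability increases through gradient descent; 2) when $L(\delta) = b$, $J(\theta) = 0$ and $\theta$ remains unchanged; 3) when $L(\delta) > b$, the sampled noise map returns a high loss, and its probability decreases through gradient descent. In short, $\delta$ is forced to improve over $b$. At iteration $t$, we choose $b = \min_{\tau < t} L(\delta_\tau)$ so that $\delta_t$ improves over the minimal loss obtained so far.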
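The baseline rule can be sketched as one gradient-descent step on the single-sample surrogate $(L(\delta) - b)\log\pi(\delta \mid \theta)$, assuming per-pixel softmax distributions, for which $\partial \log \pi_k / \partial \theta = \mathbf{e}_k - \mathrm{softmax}(\theta)$. The function name and the learning rate are hypothetical, and applying the step to every pixel's logits using the indices of the current noise map is our assumption about how the update is distributed.

```python
import numpy as np

def softmax(theta: np.ndarray) -> np.ndarray:
    z = np.exp(theta - theta.max(axis=-1, keepdims=True))
    return z / z.sum(axis=-1, keepdims=True)

def reinforce_step(theta, idx, loss, baseline, lr):
    """One descent step on J = (L - b) * log pi(delta | theta).

    theta: (H, W, 2K+1) logits; idx: (H, W) indices of the sampled noise values.
    """
    onehot = np.zeros_like(theta)
    np.put_along_axis(onehot, idx[..., None], 1.0, axis=-1)
    grad = (loss - baseline) * (onehot - softmax(theta))   # dJ/dtheta for one sample
    return theta - lr * grad

theta = np.zeros((2, 2, 3))
idx = np.ones((2, 2), dtype=int)             # every pixel sampled value 1
better = softmax(reinforce_step(theta, idx, loss=0.2, baseline=0.5, lr=1.0))
worse = softmax(reinforce_step(theta, idx, loss=0.8, baseline=0.5, lr=1.0))
print(better[0, 0, 1] > 1 / 3, worse[0, 0, 1] < 1 / 3)   # True True
```

The three cases listed above map directly onto the sign of `loss - baseline`: negative raises the probability of the sampled value, zero leaves `theta` unchanged, positive lowers it.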

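The above expectation is estimated using a single Monte Carlo sample at each iteration, so the sampling of the noise map is critical. Simply resampling $\delta_t$ over the entire image at iteration $t$ might cause a large variance in the norm of the noise. Therefore, to keep the variance small, we draw $\delta' \sim \pi(\delta \mid \theta)$ and resample the noise values of only $n$ pixels in $\delta_t$, while the noise values of the remaining $WH - n$ pixels remain unchanged:

$$\delta_{t,j} = \begin{cases} \delta'_j, & j \in S_t, \\ \delta_{t-1,j}, & j \notin S_t, \end{cases} \tag{6}$$

where $S_t$ is a set of $n$ randomly chosen pixels.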

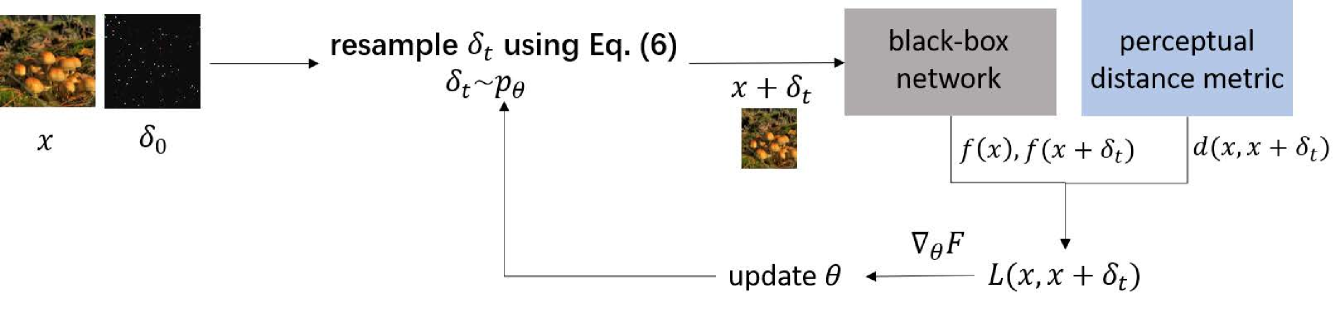

The above equation replaces the noise values of $n$ pixels in the noise map $\delta_{t-1}$ with those in $\delta'$, which are sampled from the distribution $\pi(\delta \mid \theta)$. In other words, only a random fraction $n/(WH)$ of $\delta$ is updated at each iteration. As shown in Fig. 2, after sampling $\delta_t$, the feedback from the black-box network and the perceptual distance metric determines the update of the distribution $\pi(\delta \mid \theta)$. The iteration stops when the attack is successful, i.e., $\ell(\delta_t) = 0$.
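Putting the pieces together, the query loop can be sketched as follows. This is a simplified illustration under stated assumptions, not the released implementation: `loss_fn` is a hypothetical callable returning the total loss $L$ and the margin $\ell$, the noise map is single-channel for brevity, the baseline is the lowest loss seen so far, and `toy_loss` in the usage lines is an invented stand-in for a real black-box network.

```python
import numpy as np

def softmax(theta: np.ndarray) -> np.ndarray:
    z = np.exp(theta - theta.max(axis=-1, keepdims=True))
    return z / z.sum(axis=-1, keepdims=True)

def attack(loss_fn, shape, values, n, lr=1.0, max_iters=1000, seed=0):
    """Query loop sketch: Eq. (6) partial resampling + REINFORCE with b = best loss.

    loss_fn(delta) -> (L, margin) is the only access to the model and the metric.
    Returns the final (H, W) noise map and the number of iterations used.
    """
    rng = np.random.default_rng(seed)
    H, W = shape
    theta = np.zeros((H, W, len(values)))           # uniform initial distribution
    idx = np.full((H, W), len(values) // 2)          # middle value: zero for a symmetric space
    delta = values[idx]
    best, margin = loss_fn(delta)
    for t in range(max_iters):
        if margin == 0.0:                            # attack succeeded
            return delta, t
        # Eq. (6): redraw noise for n randomly chosen pixels only.
        rows, cols = np.unravel_index(rng.choice(H * W, size=n, replace=False), (H, W))
        new_idx = idx.copy()
        for r, c in zip(rows, cols):
            new_idx[r, c] = rng.choice(len(values), p=softmax(theta[r, c]))
        delta = values[new_idx]
        loss, margin = loss_fn(delta)
        # REINFORCE step with baseline b = lowest loss seen so far.
        onehot = np.zeros_like(theta)
        np.put_along_axis(onehot, new_idx[..., None], 1.0, axis=-1)
        theta -= lr * (loss - best) * (onehot - softmax(theta))
        idx, best = new_idx, min(best, loss)
    return delta, max_iters

values = np.linspace(-0.05, 0.05, 5)                 # hypothetical eps = 0.05, K = 2

def toy_loss(delta):
    """Hypothetical black box: class 0 wins until the mean noise reaches 0.02."""
    margin = max(0.02 - float(delta.mean()), 0.0)
    return margin + 0.1 * float(np.abs(delta).mean()), margin

delta, iters = attack(toy_loss, (4, 4), values, n=2, max_iters=500)
```

Each iteration costs exactly one call to `loss_fn`, so the iteration count plus the initial evaluation is the number of queries.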

3.2 Proof of Convergence

Ruan et al. [39] show that feed-forward DNNs are Lipschitz continuous with a Lipschitz constant $c$, for which we have

$$\|f(x + \delta_t) - f(x + \delta_{t-1})\| \le c\, \|\delta_t - \delta_{t-1}\| \tag{7}$$

At iteration $t$, since only a small part of the noise map is updated, it can be assumed that

$$\|\delta_t - \delta_{t-1}\| \le C \tag{8}$$

where $C$ is a constant. Suppose that the perceptual distance metric $d$ is normalized. Substituting inequalities (7) and (8) into our definition of $L$ in Eq. (2) gives the following inequalities:

| (9) | ||||

Ideally, accurately quantifies the difference of the perturbed image even when only one noise value for just a single pixel at the iteration is different from that at . Let represent a special noise map, whose pixel’s noise value is the element in its sample space and the other pixels’ noise values are . Note that the length of the sample space for each pixel is . Similarly, denotes the probability of the element in the sample space of the pixel. By sampling every element in the sample space of the pixel, we define and to be a vector:

| (10) |

| (11) |

Although the above equations are only meaningful in the ideal situation where $d$ can quantify the difference caused by a single perturbed pixel, we use them for a theoretical proof of convergence. In this ideal situation, the gradient with respect to the $j$-th pixel's parameters can be calculated exactly as

| (12) |
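As a worked check on the exact gradient, the following derivation rests on our own assumptions, since the symbols of Eqs. (10) to (12) are not recoverable here: $\mathbf{L}_j = [L(\delta^{j,1}), \dots, L(\delta^{j,2K+1})]^\top$ stacks the losses of the $2K+1$ single-pixel noise maps, $\boldsymbol{\pi}_j = \mathrm{softmax}(\theta_j)$ stacks their probabilities, and the objective is the expected loss with baseline $b$. For a softmax, $\nabla_{\theta_j} \log \pi_{j,k} = \mathbf{e}_k - \boldsymbol{\pi}_j$, where $\mathbf{e}_k$ is the $k$-th standard basis vector, so

$$
\begin{aligned}
\nabla_{\theta_j} \mathbb{E}\big[L\big]
&= \sum_{k=1}^{2K+1} \pi_{j,k}\,\big(L(\delta^{j,k}) - b\big)\,(\mathbf{e}_k - \boldsymbol{\pi}_j) \\
&= \boldsymbol{\pi}_j \odot (\mathbf{L}_j - b\mathbf{1}) - \boldsymbol{\pi}_j\,\boldsymbol{\pi}_j^\top (\mathbf{L}_j - b\mathbf{1}) \\
&= \boldsymbol{\pi}_j \odot \mathbf{L}_j - \boldsymbol{\pi}_j\,\big(\boldsymbol{\pi}_j^\top \mathbf{L}_j\big).
\end{aligned}
$$

The baseline cancels in the exact gradient because $\boldsymbol{\pi}_j^\top \mathbf{1} = 1$; it only affects the variance of the single-sample estimate used in practice.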

According to Eq. (9), when the number of resampled pixels is $n = 1$, we have

| (13) |

Note that for that share the same , is equal to . Thus, using Eq. (13), we have

| (14) | ||||

In practice, we adopt a single Monte Carlo sampling instead of sampling every noise values for every pixel, for which should be replaced by in the above inequality. The inequality (14) thus becomes:

| (15) | ||||

The standard softmax function disappears because it is Lipschitz continuous with Lipschitz constant 1 [40]. Finally, we have the following inequality:

| (16) |

The above inequality proves that is -smooth with the Lipschitz constant being . If is convex, the exact number of steps that Stochastic Gradient Descent (SGD) takes to convergence is , where is an arbitrary small tolerable error (). However, since deep network is usually highly non-convex, we need to consider the situation where is non-convex.

Let the SGD update be

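$$\theta_{t+1} = \theta_t - \eta_t\, g_t \tag{17}$$

where $\eta_t$ is the learning rate and $g_t$ is the stochastic gradient. We assume that the variance of the stochastic gradient is upper bounded by $\sigma^2$:

$$\mathbb{E}\big[\|g_t - \nabla J(\theta_t)\|^2\big] \le \sigma^2 \tag{18}$$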

| Sampling Frequency | Perceptual Metric | λ | Success Rate | 1-SSIM | LPIPS | CIEDE2000 | Avg. Queries |
|---|---|---|---|---|---|---|---|
| | - | 0 | 100% | 0.091 | 0.099 | 0.941 | 356 |
| | 1-SSIM | 10 | 100% | 0.076 | 0.081 | 0.741 | 401 |
| | | 100 | 97.4% | 0.036 | 0.051 | 0.703 | 1395 |
| | | 200 | 92.2% | 0.025 | 0.040 | 0.622 | 2534 |
| | | dynamic | 100% | 0.009 | 0.009 | 0.204 | 7678 |
| | LPIPS | 10 | 100% | 0.080 | 0.078 | 0.762 | 450 |
| | | 100 | 98.1% | 0.049 | 0.052 | 0.711 | 1174 |
| | | 200 | 95.1% | 0.038 | 0.045 | 0.635 | 1928 |
| | | dynamic | 100% | 0.015 | 0.005 | 0.277 | 6694 |
| None | 1-SSIM | 10 | 100% | 0.118 | 0.142 | 5.936 | 426 |
| | | 10 | 99.7% | 0.071 | 0.074 | 0.846 | 520 |
| | | 10 | 99.5% | 0.069 | 0.070 | 0.877 | 665 |
| | | 10 | 98.7% | 0.062 | 0.075 | 0.879 | 669 |
| | | 10 | 98.7% | 0.071 | 0.075 | 0.882 | 673 |

We select a learning rate $\eta_t$ that satisfies

$$\sum_{t=1}^{\infty} \eta_t = \infty, \qquad \sum_{t=1}^{\infty} \eta_t^2 < \infty \tag{19}$$

Condition (19) can easily be satisfied with a decaying learning rate. According to Lemma 1 and Theorem 2 in [41], using the smoothness of $J$, $\|\nabla J(\theta_t)\|$ goes to 0 with probability 1. This means that, with probability 1, for any $\xi > 0$ there exists $N$ such that $\|\nabla J(\theta_t)\| \le \xi$ for all $t \ge N$. Unfortunately, unlike in the convex case, we do not know the exact number of steps that SGD takes to converge.
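For illustration, assuming condition (19) is the standard Robbins-Monro pair $\sum_t \eta_t = \infty$ and $\sum_t \eta_t^2 < \infty$, one hypothetical decaying schedule that satisfies it is $\eta_t = \eta_0 / t$ with $\eta_0 > 0$ (here $\pi$ is the circle constant, not the noise distribution):

$$
\sum_{t=1}^{\infty} \frac{\eta_0}{t} = \infty \quad \text{(harmonic series)}, \qquad
\sum_{t=1}^{\infty} \frac{\eta_0^2}{t^2} = \frac{\pi^2}{6}\,\eta_0^2 < \infty .
$$

A constant learning rate fails the second condition, which is why a decaying schedule is needed for this kind of almost-sure convergence argument.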

The above proof only aims to show theoretically that the proposed method converges in a finite number of steps, although possibly at a slow rate. The “Avg. Queries” reported in the following experiments show that the actual computational cost is affordable and comparable to that of some query-efficient attacks.

4 Experiments

Following previous work [25, 8], we validate the effectiveness of our model on the large-scale ImageNet [42] dataset. We use three pretrained classification networks from PyTorch as the black-box networks: InceptionV3 [43], ResNet50 [44] and VGG16bn [45]. The attack is performed on images that are correctly classified by the pretrained network. We randomly select images from the validation set for testing, and all images are normalized. Since we address the visual distortion caused by the attack, we quantify our success in terms of perceptual distance (1-SSIM, LPIPS and CIEDE2000). Among these metrics, 1-SSIM [36] measures the degradation of structural information in the adversarial examples. LPIPS [37] evaluates the perceptual similarity of two images by the normalized distance between their deep features. CIEDE2000 [46] measures perceptual color distance and was developed by the CIE (International Commission on Illumination). A smaller value of these metrics denotes less visual distortion. In addition to 1-SSIM, LPIPS and CIEDE2000, the success rate and the average number of queries are also reported, as in most previous work. The average number of queries refers to the average number of requests to the output of the black-box network.

We initialize the noise distribution to be a uniform distribution and noise to be . The learning rate is and is set to be . In addition, we specify the shape of the resampled noise at each iteration to be a square [25, 24, 26], and adopt the tiling trick [8, 25] with tile size. The upper bound of our attack is set to be as in previous work.
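The tiling trick and the square-shaped resampling region can be sketched as follows. The tile size and the square side are not given above, so `tile` and `side` are hypothetical parameters, and cropping the upsampled map to the image size is our own choice for image sizes that are not multiples of the tile.

```python
import numpy as np

def tile_noise(coarse: np.ndarray, tile: int, H: int, W: int) -> np.ndarray:
    """Repeat each coarse noise value over a tile x tile block, then crop to (H, W)."""
    full = np.repeat(np.repeat(coarse, tile, axis=0), tile, axis=1)
    return full[:H, :W]

def square_mask(H: int, W: int, side: int, rng: np.random.Generator) -> np.ndarray:
    """Boolean mask marking one random side x side square of pixels to resample."""
    r = rng.integers(0, H - side + 1)     # top row, chosen so the square fits vertically
    c = rng.integers(0, W - side + 1)     # left column, chosen so it fits horizontally
    mask = np.zeros((H, W), dtype=bool)
    mask[r:r + side, c:c + side] = True
    return mask

print(tile_noise(np.array([[1, 2], [3, 4]]), tile=2, H=3, W=3))
```

Tiling shrinks the number of independent noise values by a factor of `tile**2`, which shrinks the search space in the same proportion.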

4.1 Ablation Studies

In the ablation studies, the maximum number of queries is set to be . The results are averaged on test images. In the following, we discuss the trade-off between visual distortion and query efficiency, the effects of using different perceptual distance metrics in the loss function, the results on different sampling frequencies and the influence of predefining a specific form of noise distribution.

Trade-off between visual distortion and query efficiency.

Under the same $\ell_\infty$ ball, a query-efficient way to produce an adversarial example is to perturb most pixels with the maximum noise values [24, 26]. However, such an attack introduces large visual distortion, which can visibly degrade the image. To constrain the visual distortion, the perturbed pixels should be those that cause smaller visual differences while still producing a valid attack, and finding them takes extra queries. This leads to the trade-off between visual distortion and query efficiency, which is controlled by $\lambda$ in our loss function. As shown in Table 1, when $\lambda = 0$, the adversary does not consider visual distortion at all and perturbs every pixel that helps misclassification until the attack is successful. Thus, it causes the largest perceptual distance (0.091, 0.099 and 0.941) with the fewest queries (356). As $\lambda$ increases to 200, all the perceptual metrics decrease at the cost of more queries and a lower success rate. The maximum fixed $\lambda$ in Table 1 is 200, since increasing it further lowers the success rate even more. In addition, as in [17], we perform a dynamic line search on the choice of $\lambda$ to see the best perceptual scores the adversary can achieve. Compared with fixed values, using dynamic values of $\lambda$ greatly improves the perceptual metrics while keeping a 100% attack success rate, at the cost of roughly 15 to 20 times as many queries as $\lambda = 10$. Fig. 4 gives several visualized examples for different $\lambda$, where adversarial examples with larger $\lambda$ suffer from less visual distortion.

Ablation studies on the perceptual distance metric.

The perceptual distance metric in the loss function is predefined to measure the visual distortion between the adversarial example and the original image. We adopt 1-SSIM and LPIPS as the perceptual distance metric to optimize, respectively, and report their results in Table 1. When $\lambda = 10$, optimizing 1-SSIM gives a better score on 1-SSIM (0.076 vs. 0.080) and CIEDE2000 (0.741 vs. 0.762), whereas optimizing LPIPS performs better on LPIPS (0.078 vs. 0.081). However, when $\lambda$ increases to 100 and 200, optimizing 1-SSIM gives better scores on both 1-SSIM and LPIPS. Therefore, we set the perceptual distance metric to 1-SSIM in the following experiments.

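| Attacked Range | Success Rate (I) | Success Rate (R) | Success Rate (V) | 1-SSIM (I) | 1-SSIM (R) | 1-SSIM (V) | LPIPS (I) | LPIPS (R) | LPIPS (V) | CIEDE2000 (I) | CIEDE2000 (R) | CIEDE2000 (V) | Avg. Queries (I) | Avg. Queries (R) | Avg. Queries (V) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Image | 100% | 100% | 100% | 0.078 | 0.076 | 0.072 | 0.096 | 0.081 | 0.079 | 0.692 | 0.741 | 0.699 | 845 | 401 | 251 |
| Out-of-object | 90.1% | 93.8% | 94.7% | 0.071 | 0.069 | 0.074 | 0.081 | 0.065 | 0.070 | 0.678 | 0.805 | 0.687 | 4275 | 3775 | 3104 |

I, R and V denote InceptionV3, ResNet50 and VGG16bn, respectively.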

Sampling frequency.

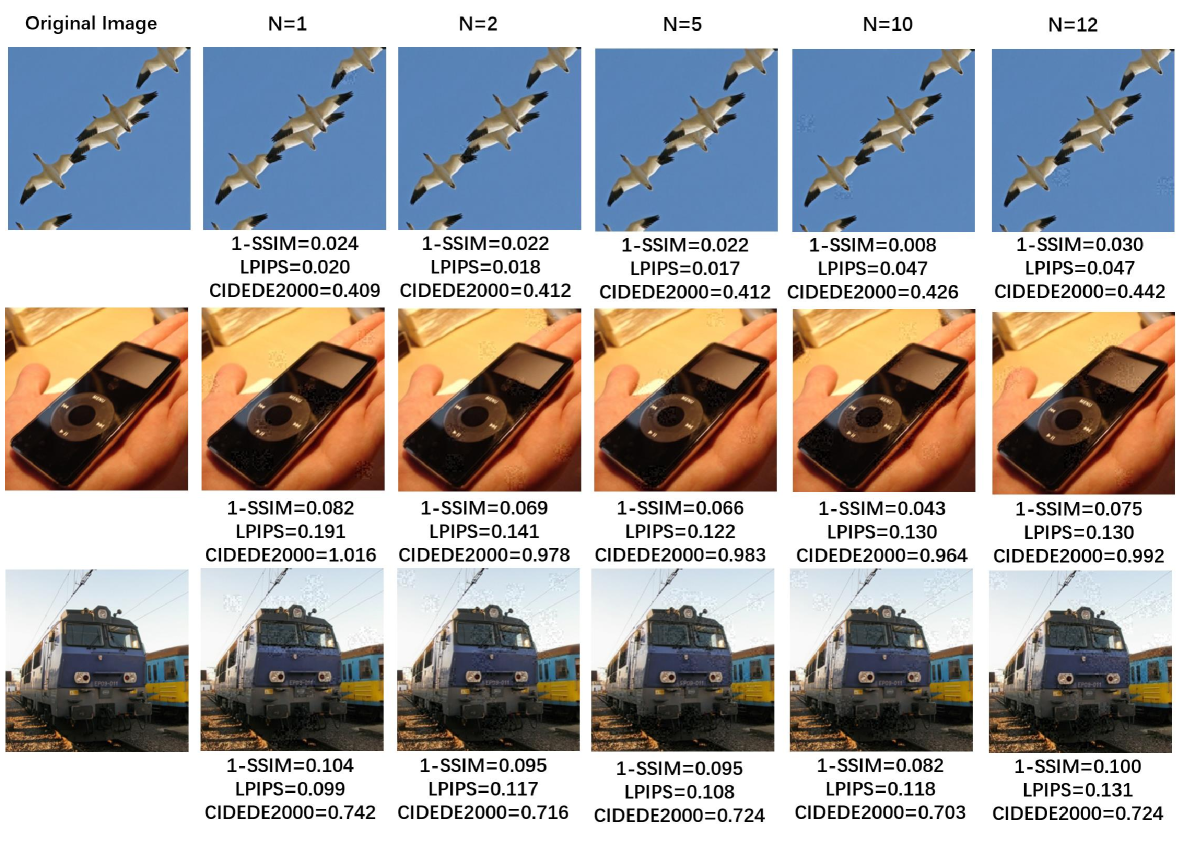

Sampling frequency determines the size of the sample space of $\delta$. A higher frequency means there are more noise values to explore through sampling. In Table 1, increasing the sampling frequency from to reduces the perceptual distance to some extent at the cost of a lower success rate. On the other hand, further increasing to does not substantially reduce the distortion yet lowers the success rate. We set the sampling frequency in the following experiments. Note that the maximum sampling frequency is because the sampling interval in RGB color space (i.e., ) would be less than if . See Fig. 3 for a few adversarial examples with different sampling frequencies.
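The upper limit on the sampling frequency can be illustrated by requiring that the sampling interval, rescaled to 8-bit RGB units, stays at least one intensity level. The sketch assumes pixel values normalized to $[0, 1]$ and a sampling interval of $\epsilon/K$; the function names are hypothetical, and $\epsilon = 0.05$ in the usage line is an example value, not necessarily the setting used in these experiments.

```python
import math

def rgb_interval(eps: float, K: int) -> float:
    """Sampling interval eps / K expressed in 8-bit RGB intensity levels."""
    return 255.0 * eps / K

def max_sampling_frequency(eps: float) -> int:
    """Largest K whose interval is still at least one RGB intensity level."""
    return math.floor(255.0 * eps)

K_max = max_sampling_frequency(0.05)     # hypothetical eps
print(K_max, rgb_interval(0.05, K_max), rgb_interval(0.05, K_max + 1))
```

Beyond this limit, neighboring noise values would round to the same 8-bit intensity once the image is saved, so extra values add parameters without adding distinguishable perturbations.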

Noise Distribution.

In the proposed algorithm, we adopt a flexible noise distribution instead of predefining it to take a specific form. To examine this choice, we conducted an ablation study in which the distribution is assumed to take a regular form as in NAttack [21]. Specifically, we let the noise distribution be an isotropic normal distribution with $\lambda = 10$ in the loss function, and perform attacks by estimating the mean and variance as in Eq. (10) of [21]. As reported in the tenth row of Table 1, under the same experimental setting, fixing the noise distribution to be an isotropic normal distribution degrades the overall performance. We attribute this to the fact that the distribution that minimizes the perceptual distance is unknown and might not follow a Gaussian or any other regular form. To approximate an unknown distribution, it is better to let the noise distribution take a free form, as in the proposed approach, and to learn it by minimizing the perceptual distance.

4.2 Out-of-Object Attack

Most existing classification networks [44, 47] are based on Convolutional Neural Networks (CNNs), which gradually aggregate contextual information in deeper layers. It is therefore possible to fool the classifier by attacking only the “context”, i.e., the background outside the target object. Attacking only the out-of-object pixels constrains the number and the positions of the pixels that can be perturbed, which might further reduce the visual distortion caused by the noise. To locate the object in a given image, we exploit the object bounding box provided by ImageNet. An out-of-object mask is then created from the bounding box such that the model is only allowed to attack pixels outside the object, as shown in Fig. 5. In Table 2, we report the results on InceptionV3, ResNet50 and VGG16bn under a maximum query budget. The attack is performed only on images whose masks cover a sufficiently large fraction of the image area. The results show that attacking only the out-of-object pixels can also cause misclassification of the object, with a success rate of over 90% on all three networks. Compared with attacking the whole image, the out-of-object attack is more difficult for the adversary: it requires more queries and has a lower success rate. On the other hand, the out-of-object attack reduces the visual distortion of the adversarial examples on most metrics; for example, LPIPS decreases on all three networks.
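A minimal sketch of building the out-of-object mask from a bounding box and restricting the noise to it. The `(x0, y0, x1, y1)` box convention with exclusive upper bounds and the function names are hypothetical; ImageNet's own annotation format may differ. `mask.mean()` gives the fraction of the image that may be perturbed, which is the quantity an area threshold on the mask would be applied to.

```python
import numpy as np

def out_of_object_mask(H: int, W: int, box) -> np.ndarray:
    """True for pixels outside the box (x0, y0, x1, y1); upper bounds exclusive."""
    x0, y0, x1, y1 = box
    mask = np.ones((H, W), dtype=bool)
    mask[y0:y1, x0:x1] = False           # pixels on the object may not be perturbed
    return mask

def apply_mask(noise: np.ndarray, mask: np.ndarray) -> np.ndarray:
    """Zero the (H, W, 3) noise inside the box so only background pixels change."""
    return noise * mask[..., None]

mask = out_of_object_mask(10, 10, (2, 2, 6, 6))
print(mask.mean())                       # 0.84: fraction of pixels that can be attacked
```

Applying the mask after sampling keeps the rest of the attack unchanged; an alternative is to never sample noise for masked pixels, which also saves parameters.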

| Network | Clean Accuracy | After Attack | 1-SSIM | LPIPS | CIEDE2000 | Avg. Queries |
|---|---|---|---|---|---|---|
| v | 75.8% | 0.8% | 0.096 | 0.149 | 0.862 | 531 |
| v | 73.4% | 1.8% | 0.103 | 0.154 | 0.979 | 777 |

4.3 Attack Effectiveness on Defended Network

| Attack | Success Rate (I) | Success Rate (R) | Success Rate (V) | 1-SSIM (I) | 1-SSIM (R) | 1-SSIM (V) | LPIPS (I) | LPIPS (R) | LPIPS (V) | CIEDE2000 (I) | CIEDE2000 (R) | CIEDE2000 (V) | Avg. Queries (I) | Avg. Queries (R) | Avg. Queries (V) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| SignHunter [9] | 98.4% | - | - | 0.157 | - | - | 0.117 | - | - | 3.837 | - | - | 450 | - | - |
| NAttack [21] | 99.5% | - | - | 0.133 | - | - | 0.212 | - | - | 5.478 | - | - | 524 | - | - |
| AutoZOOM [17] | 100% | - | - | 0.038 | - | - | 0.059 | - | - | 3.33 | - | - | 1010 | - | - |
| Bandits [8] | 96.5% | 98.8% | 98.2% | 0.343 | 0.307 | 0.282 | 0.201 | 0.157 | 0.140 | 8.383 | 8.552 | 8.194 | 935 | 705 | 388 |
| Square Attack [26] | 99.7% | 100% | 100% | 0.280 | 0.279 | 0.299 | 0.265 | 0.243 | 0.247 | 9.329 | 9.425 | 9.429 | 237 | 62 | 30 |
| TREMBA [20] | 99.0% | 100% | 99.8% | 0.161 | 0.161 | 0.160 | 0.188 | 0.189 | 0.187 | 4.413 | 4.400 | 4.421 | - | - | - |
| SignHunter-SSIM | 97.6% | - | - | 0.220 | - | - | 0.157 | - | - | 3.832 | - | - | 642 | - | - |
| NAttack-SSIM | 97.3% | - | - | 0.128 | - | - | 0.210 | - | - | 5.021 | - | - | 666 | - | - |
| AutoZOOM-SSIM | 100% | - | - | 0.028 | - | - | 0.048 | - | - | 2.98 | - | - | 2245 | - | - |
| Bandits-SSIM | 80.0% | 89.3% | 89.7% | 0.333 | 0.303 | 0.275 | 0.200 | 0.163 | 0.135 | 8.838 | 8.666 | 8.194 | 1318 | 1020 | 793 |
| Square Attack-SSIM | 99.2% | 100% | 100% | 0.260 | 0.268 | 0.292 | 0.256 | 0.238 | 0.245 | 9.301 | 9.462 | 9.451 | 278 | 65 | 30 |
| TREMBA-SSIM | 98.5% | 100% | 99.8% | 0.160 | 0.160 | 0.159 | 0.185 | 0.186 | 0.183 | 4.410 | 4.396 | 4.421 | - | - | - |
| Ours | 98.7% | 100% | 100% | 0.075 | 0.076 | 0.072 | 0.094 | 0.081 | 0.079 | 0.692 | 0.741 | 0.699 | 731 | 401 | 251 |
| Ours() | 100% | 100% | 100% | 0.016 | 0.009 | 0.006 | 0.023 | 0.009 | 0.005 | 0.215 | 0.204 | 0.155 | 7311 | 7678 | 7620 |

In the above experiments, we show that our black-box model can attack undefended networks with a high success rate. To evaluate the strength of the proposed attack in a defended setting, we further attack the InceptionV3 network that adopts ensemble adversarial training (i.e., v). Following [48], we set and randomly select images from the ImageNet validation set for testing. The maximum number of queries is . The performance of the attacked network is reported in Table 3, where clean accuracy is the classification accuracy before the attack. Note that v is slightly different from InceptionV3 in Table 1 in that the pretrained model of v comes from TensorFlow, which is the same platform as the pretrained model of v. Compared with the undefended network, attacking the defended one causes larger visual distortion. However, the proposed attack can still reduce the classification accuracy from to , which demonstrates its effectiveness against a defended network.

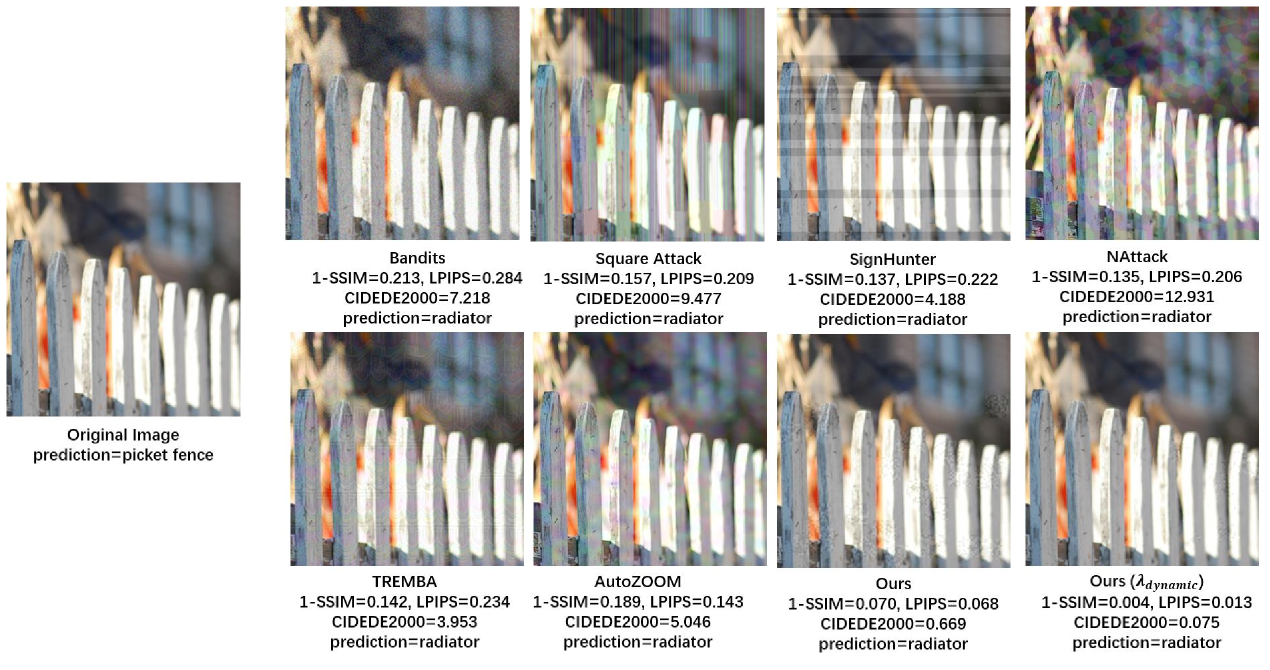

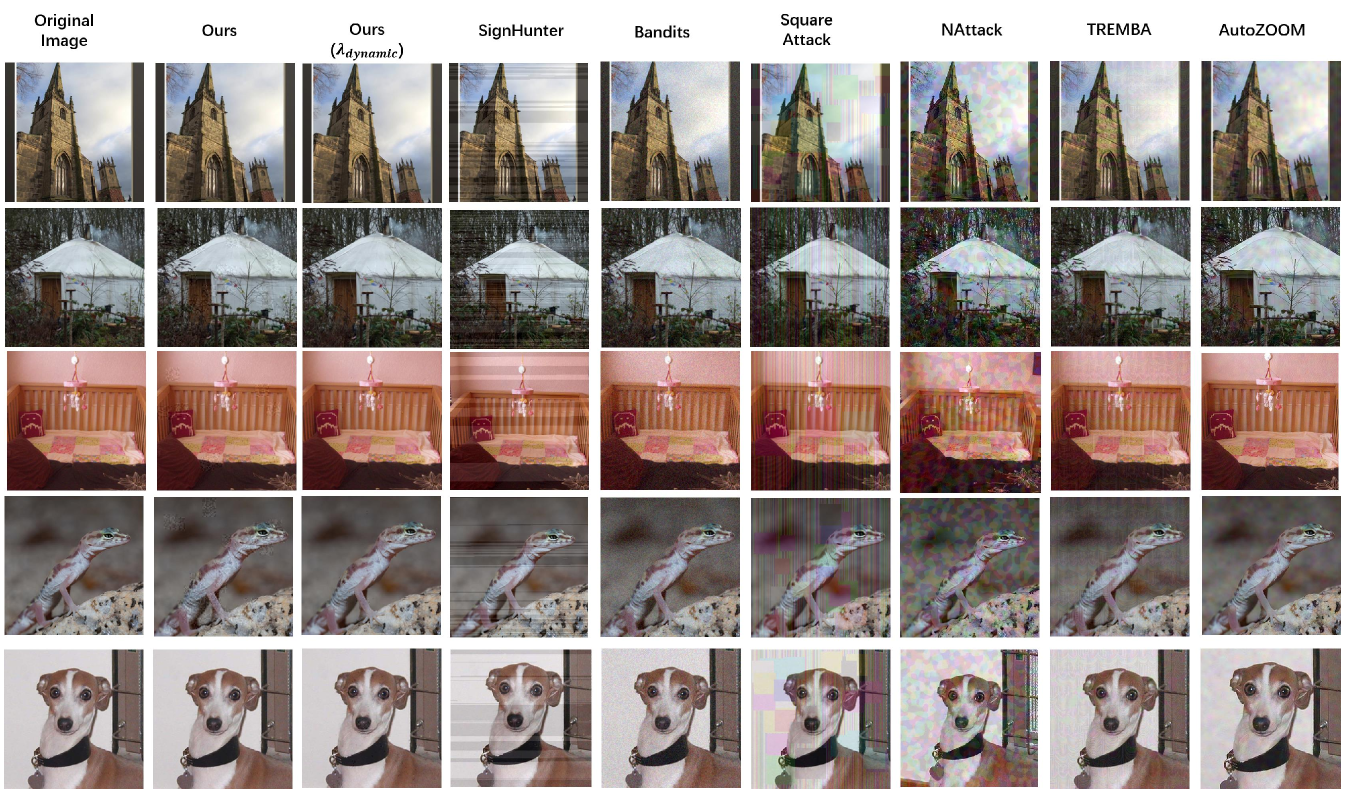

4.4 Comparison with Other Attacks

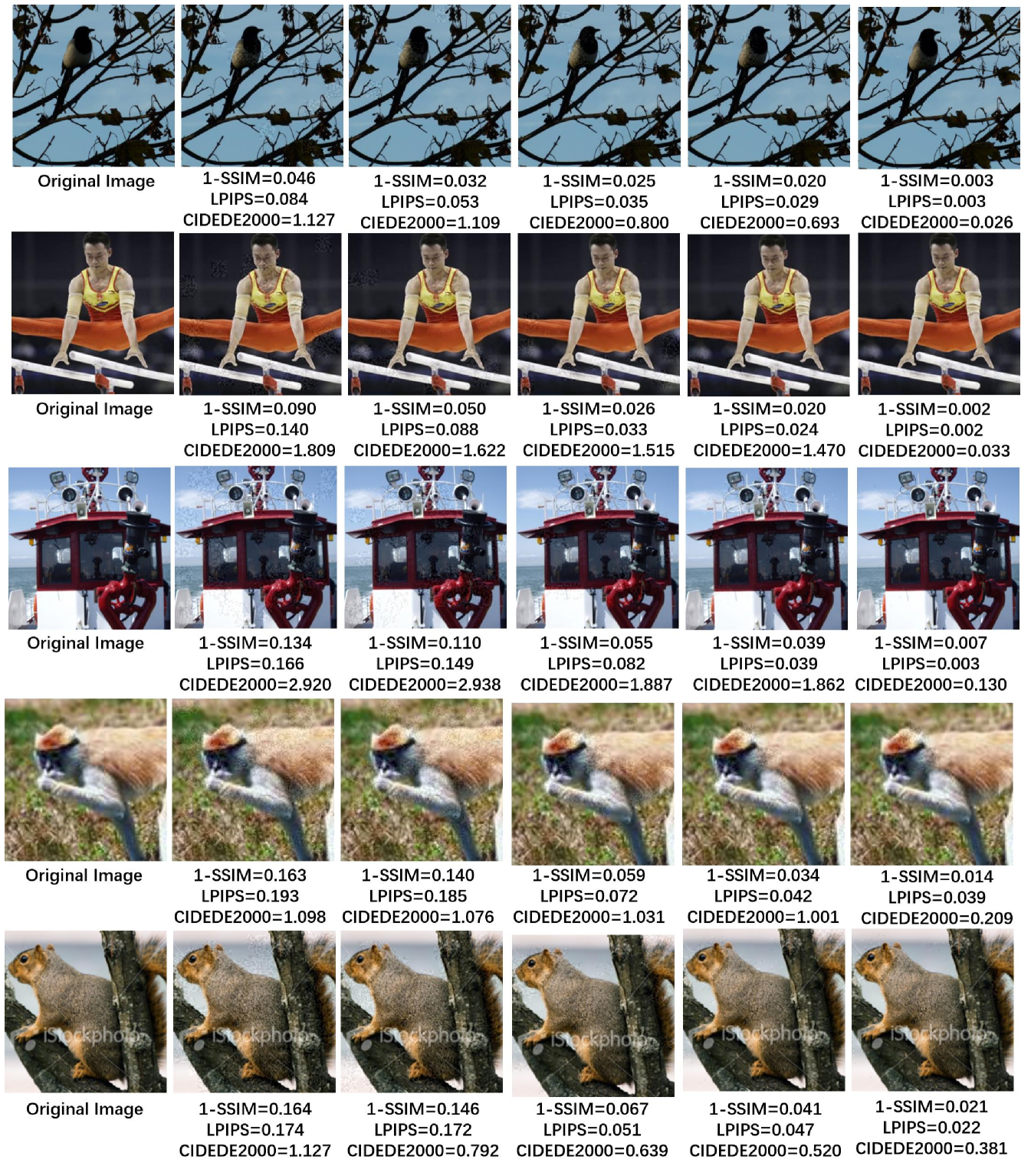

Since our approach focuses on improving the visual similarity between the adversarial example and the original image, it might require more queries to construct a less distorted adversarial example. To show that such costs are affordable, we compare our attack with recently proposed black-box attacks: SignHunter [9], NAttack [21], AutoZOOM [17], Bandits [8], Square Attack [26] and TREMBA [20]. For a fair comparison, in Table 4, the methods marked with -SSIM and Ours add 1-SSIM to the loss function with $\lambda = 10$. Note that AutoZOOM performs a line search on the choice of $\lambda$; we adopt the same strategy and denote this variant of our method as Ours(). The results of the above methods are reproduced using the official code provided by the authors. We use the default parameter settings of each attack and cap the maximum number of queries; see Table 5 for the experimental settings of the different methods. In Table 4, comparing the approaches that use a fixed $\lambda$ (i.e., SignHunter-SSIM, NAttack-SSIM, Bandits-SSIM, Square Attack-SSIM, TREMBA-SSIM and Ours), we can see that the proposed method outperforms the other attacks in reducing perceptual distance, while its average number of queries is comparable to that of Bandits. On the other hand, Ours() achieves state-of-the-art performance on 1-SSIM, LPIPS and CIEDE2000 when compared with the methods that perform a line search over $\lambda$ (i.e., AutoZOOM and AutoZOOM-SSIM). In general, except for SignHunter, introducing the perceptual distance metric into the objective function helps reduce visual distortion in the other attacks. The visualized adversarial examples from different attacks are given in Fig. 6, which shows that our model produces less distorted adversarial examples. More examples can be found in Fig. 7.

We notice that adversarial examples from SignHunter have horizontally striped noise, whereas Square Attack generates adversarial examples with vertically striped noise. Striped noise helps improve query efficiency since classification networks are quite sensitive to such noise [26]. From the perspective of visual distortion, however, such noise greatly degrades image quality. The adversarial examples of Bandits are relatively perceptually friendly, but the perturbation affects most pixels in the image, which causes a visually “noisy” effect, especially on a uniformly colored background. The noise maps from NAttack and AutoZOOM appear as regular color patches all over the image due to the large tile sizes used in these methods.

| Method | Max. Iterations | |
|---|---|---|
| SignHunter-SSIM | 10 | 10,000 |
| NAttack-SSIM | 10 | 10,000 |
| AutoZOOM-SSIM | dynamic, | 10,000 |
| Bandits-SSIM | 10 | 10,000 |
| Square Attack-SSIM | 10 | 10,000 |
| TREMBA-SSIM | 10 | - |
| Ours | 10 | 10,000 |
| Ours() | dynamic, | 10,000 |

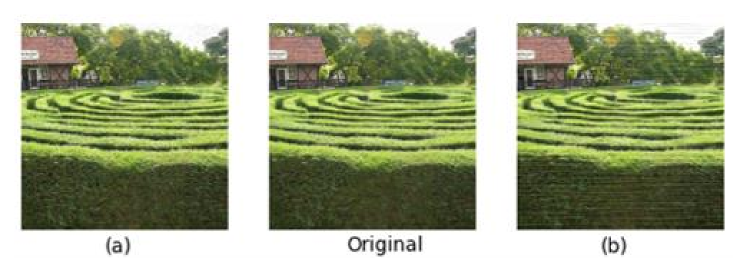

We also conducted a subjective study for further validation. Specifically, we randomly paired two adversarial examples, one generated by our approach (Ours()) and the other by one of the remaining attacks in Table 4. We showed each human evaluator the original image together with the two adversarial examples and asked which one was less distorted with respect to the original image. Figure 8 gives an example of a picture shown to the evaluators. The order of the two adversarial examples in each triplet was randomly permuted. We asked 10 human evaluators in total, each of whom made judgements over triplets of images. As a result, adversarial examples generated by our method were judged to have less noticeable noise of the time, while of the time the evaluators considered both examples to be distorted at the same level. Therefore, the subjective results further support that the proposed method effectively reduces visual distortion in adversarial examples.
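The randomized presentation and the reported shares in the study above can be sketched as follows. This is a hypothetical helper, not the authors' protocol code: it assumes each evaluator answers "left", "right" or "same", and that the screen position of our example is recorded so each answer can be mapped back to a method before the shares are computed.

```python
import random
from collections import Counter


def assign_order(rng):
    # Randomly decide whether our adversarial example is displayed on the left.
    return rng.random() < 0.5


def decode(answer, ours_on_left):
    # Map a raw screen answer ("left", "right", "same") back to a method label.
    if answer == "same":
        return "same"
    picked_left = answer == "left"
    return "ours" if picked_left == ours_on_left else "other"


def preference_rates(decoded):
    # Fraction of judgements preferring ours, preferring the other attack, or tied.
    counts = Counter(decoded)
    total = len(decoded)
    return {label: counts[label] / total for label in ("ours", "other", "same")}


rng = random.Random(0)
ours_on_left = assign_order(rng)
label = decode("left", ours_on_left)
```

Randomizing the side per triplet keeps a positional bias (e.g. a habit of clicking "left") from being counted as a preference for either method.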

| Distance Metric | Sampling Frequency | Success Rate | LPIPS | CIEDE2000 | Avg. Queries | ||||
|---|---|---|---|---|---|---|---|---|---|
| 1 | 99.5% | 0.077 | 0.083 | 0.795 | 0.133 | 0.130 | 6.75 | 536 | |
| 2 | 99.2% | 0.065 | 0.069 | 0.768 | 0.159 | 0.118 | 5.88 | 679 | |
| 5 | 97.9% | 0.058 | 0.065 | 0.789 | 0.177 | 0.118 | 5.19 | 960 | |
| 1 | 99.5% | 0.077 | 0.083 | 0.795 | 0.133 | 0.130 | 6.75 | 536 | |
| 2 | 99.5% | 0.070 | 0.076 | 0.773 | 0.176 | 0.130 | 6.14 | 658 | |
| 5 | 99.2% | 0.066 | 0.070 | 0.768 | 0.218 | 0.129 | 5.74 | 800 | |
| 1 | 99.5% | 0.110 | 0.112 | 0.829 | 0.215 | 0.211 | 8.21 | 392 | |
| 2 | 99.5% | 0.092 | 0.100 | 0.803 | 0.259 | 0.191 | 7.44 | 431 | |
| 5 | 99.5% | 0.087 | 0.094 | 0.792 | 0.312 | 0.185 | 6.89 | 579 |

4.5 Other Attacks

Although our method in this paper is based on attack, the perceptual distance metric in the loss function can be replaced by other () distances. We did not discuss them in the above experiments because these distance metrics measure the perceptual distance between images less accurately than specifically designed metrics, such as the well-established and LPIPS. Nevertheless, we present the results of other () attacks in Table 6, where the distance is normalized to in the loss function. Specifically, , where is the distance between the original image and the perturbed image . As in the paper, we set and the maximum number of queries to . We find that the raw and scores are orders of magnitude larger than the other metrics, and thus Table 6 reports the normalized scores of the and distances. Note that when the sampling frequency , distance is equivalent to distance in that

(20)

where is the number of perturbed pixels. and are the width, height and number of channels of a given image, respectively. Table 6 shows that optimizing distance gives better performance on both the perceptual distance metrics and the distance metrics.
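The exact normalization used for Table 6 is not recoverable from this text. As an assumption, the sketch below divides each ℓp distance by its largest attainable value for an image with entries in [0, 1], so that the normalized ℓ0 distance becomes the fraction of changed entries out of W × H × C. The function name `normalized_lp` is hypothetical.

```python
import numpy as np


def normalized_lp(x, x_adv, p):
    # Distance between x and x_adv scaled to [0, 1], assuming entries lie in [0, 1].
    # Each distance is divided by the value reached when every entry changes by 1.
    delta = (x_adv - x).ravel()
    n = delta.size  # W * H * C entries
    if p == 0:
        return np.count_nonzero(delta) / n
    if p == 1:
        return np.abs(delta).sum() / n
    if p == 2:
        return np.linalg.norm(delta) / np.sqrt(n)
    if p == np.inf:
        return np.abs(delta).max()
    raise ValueError(f"unsupported p: {p}")


x = np.zeros((4, 4, 3))
x_adv = x.copy()
x_adv[0, 0, :] = 0.5  # 3 of 48 entries changed by 0.5
scores = {p: normalized_lp(x, x_adv, p) for p in (0, 1, 2, np.inf)}
```

Under these assumptions, if every perturbed entry changes by the same magnitude ε, the normalized ℓ1 distance equals ε times the normalized ℓ0 distance, which is why such metrics can rank perturbations identically in special cases.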

4.6 Conclusion

We introduce a novel black-box attack that directly minimizes the visual distortion induced in the adversarial example. The quantified visual distortion, which measures the perceptual distance between the adversarial example and the original image, is incorporated into our loss, and the gradient of the resulting non-differentiable loss function is approximated by sampling from a learned noise distribution. By adding the weighted perceptual distance metric to the original loss, the proposed attack can trade off visual distortion against query efficiency. Experiments on ImageNet demonstrate the effectiveness of our attack, which achieves much lower distortion than existing attacks. In addition, we show that our attack remains valid even when it is only allowed to perturb pixels outside the target object in a given image.

References

- [1] H. Kwon, Y. Kim, H. Yoon, and D. Choi. Selective audio adversarial example in evasion attack on speech recognition system. IEEE Transactions on Information Forensics and Security, 15:526–538, 2020.

- [2] Alexey Kurakin, Ian J. Goodfellow, and Samy Bengio. Adversarial machine learning at scale. In Proc. International Conference on Learning Representations, 2017.

- [3] Nicolas Papernot, Patrick D. McDaniel, Ian J. Goodfellow, Somesh Jha, Z. Berkay Celik, and Ananthram Swami. Practical black-box attacks against machine learning. In Proc. ACM Asia Conference on Computer and Communications Security, 2017.

- [4] N. Akhtar and A. Mian. Threat of adversarial attacks on deep learning in computer vision: A survey. IEEE Access, 6:14410–14430, 2018.

- [5] Nicholas Carlini and David A. Wagner. Towards evaluating the robustness of neural networks. In Proc. IEEE Symposium on Security and Privacy, 2017.

- [6] Nicolas Papernot, Patrick D. McDaniel, Somesh Jha, Matt Fredrikson, Z. Berkay Celik, and Ananthram Swami. The limitations of deep learning in adversarial settings. In Proc. IEEE European Symposium on Security and Privacy (EuroS&P), 2016.

- [7] Matt Jordan, Naren Manoj, Surbhi Goel, and Alexandros G. Dimakis. Quantifying perceptual distortion of adversarial examples. arXiv preprint arXiv:1902.08265, 2019.

- [8] Andrew Ilyas, Logan Engstrom, and Aleksander Madry. Prior convictions: Black-box adversarial attacks with bandits and priors. In Proc. International Conference on Learning Representations, 2019.

- [9] Abdullah Al-Dujaili and Una-May O’Reilly. Sign bits are all you need for black-box attacks. In Proc. International Conference on Learning Representations, 2020.

- [10] Y. Zhang, X. Tian, Y. Li, X. Wang, and D. Tao. Principal component adversarial example. IEEE Transactions on Image Processing, 29:4804–4815, 2020.

- [11] Q. Zhang, K. Wang, W. Zhang, and J. Hu. Attacking black-box image classifiers with particle swarm optimization. IEEE Access, 7:158051–158063, 2019.

- [12] Ian J. Goodfellow, Jonathon Shlens, and Christian Szegedy. Explaining and harnessing adversarial examples. In Proc. International Conference on Learning Representations, 2015.

- [13] Cassidy Laidlaw and Soheil Feizi. Functional adversarial attacks. In Proc. International Conference on Neural Information Processing Systems, 2019.

- [14] Tianhang Zheng, Changyou Chen, and Kui Ren. Distributionally adversarial attack. In Proc. AAAI Conference on Artificial Intelligence, 2019.

- [15] Hongjun Wang, Guanbin Li, Xiaobai Liu, and Liang Lin. A Hamiltonian Monte Carlo method for probabilistic adversarial attack and learning. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2020.

- [16] Nicholas Carlini and David A. Wagner. Adversarial examples are not easily detected: Bypassing ten detection methods. In Proc. ACM Workshop on Artificial Intelligence and Security, 2017.

- [17] Chun-Chen Tu, Pai-Shun Ting, Pin-Yu Chen, Sijia Liu, Huan Zhang, Jinfeng Yi, Cho-Jui Hsieh, and Shin-Ming Cheng. AutoZOOM: Autoencoder-based zeroth order optimization method for attacking black-box neural networks. In Proc. AAAI Conference on Artificial Intelligence, 2019.

- [18] Yonggang Zhang, Ya Li, Tongliang Liu, and Xinmei Tian. Dual-path distillation: A unified framework to improve black-box attacks. In Proc. International Conference on Machine Learning, 2020.

- [19] Huichen Li, Linyi Li, Xiaojun Xu, Xiaolu Zhang, Shuang Yang, and Bo Li. Nonlinear gradient estimation for query efficient blackbox attack.

- [20] Zhichao Huang and Tong Zhang. Black-box adversarial attack with transferable model-based embedding. In Proc. International Conference on Learning Representations, 2020.

- [21] Yandong Li, Lijun Li, Liqiang Wang, Tong Zhang, and Boqing Gong. NATTACK: Learning the distributions of adversarial examples for an improved black-box attack on deep neural networks. In Proc. International Conference on Machine Learning, 2019.

- [22] Shuyu Cheng, Yinpeng Dong, Tianyu Pang, Hang Su, and Jun Zhu. Improving black-box adversarial attacks with a transfer-based prior. In Proc. International Conference on Neural Information Processing Systems, 2019.

- [23] Nicolas Papernot, Patrick McDaniel, and Ian Goodfellow. Transferability in machine learning: from phenomena to black-box attacks using adversarial samples. arXiv preprint arXiv:1605.07277, 2016.

- [24] Seungyong Moon, Gaon An, and Hyun Oh Song. Parsimonious black-box adversarial attacks via efficient combinatorial optimization. In Proc. International Conference on Machine Learning, 2019.

- [25] Laurent Meunier, Jamal Atif, and Olivier Teytaud. Yet another but more efficient black-box adversarial attack: tiling and evolution strategies. arXiv preprint arXiv:1910.02244, 2019.

- [26] Maksym Andriushchenko, Francesco Croce, Nicolas Flammarion, and Matthias Hein. Square attack: a query-efficient black-box adversarial attack via random search. arXiv preprint arXiv:1912.00049, 2019.

- [27] Chaowei Xiao, Bo Li, Jun-Yan Zhu, Warren He, Mingyan Liu, and Dawn Song. Generating adversarial examples with adversarial networks. In Proc. International Joint Conference on Artificial Intelligence, 2018.

- [28] Weijia Zhang. Generating adversarial examples in one shot with image-to-image translation GAN. IEEE Access, 7:151103–151119, 2019.

- [29] Diego Gragnaniello, Francesco Marra, Giovanni Poggi, and Luisa Verdoliva. Perceptual quality-preserving black-box attack against deep learning image classifiers. arXiv preprint arXiv:1902.07776, 2019.

- [30] Andras Rozsa, Ethan M. Rudd, and Terrance E. Boult. Adversarial diversity and hard positive generation. In Proc. IEEE Conference on Computer Vision and Pattern Recognition Workshops, 2016.

- [31] Fei Gao, Dacheng Tao, Xinbo Gao, and Xuelong Li. Learning to rank for blind image quality assessment. IEEE Transactions on Neural Networks and Learning Systems, 26(10):2275–2290, 2015.

- [32] Lin Ma, Long Xu, Yichi Zhang, Yihua Yan, and King Ngi Ngan. No-reference retargeted image quality assessment based on pairwise rank learning. IEEE Transactions on Multimedia, 18(11):2228–2237, 2016.

- [33] Zhengli Zhao, Dheeru Dua, and Sameer Singh. Generating natural adversarial examples. In Proc. International Conference on Learning Representations, 2018.

- [34] Hongjun Wang, Guangrun Wang, Ya Li, Dongyu Zhang, and Liang Lin. Transferable, controllable, and inconspicuous adversarial attacks on person re-identification with deep mis-ranking. In Proc. IEEE Conference on Computer Vision and Pattern Recognition, 2020.

- [35] Christian Szegedy, Wojciech Zaremba, Ilya Sutskever, Joan Bruna, Dumitru Erhan, Ian J. Goodfellow, and Rob Fergus. Intriguing properties of neural networks. In Proc. International Conference on Learning Representations, 2014.

- [36] Zhou Wang, A. C. Bovik, H. R. Sheikh, and E. P. Simoncelli. Image quality assessment: from error visibility to structural similarity. IEEE Transactions on Image Processing, 13(4):600–612, 2004.

- [37] Richard Zhang, Phillip Isola, Alexei A. Efros, Eli Shechtman, and Oliver Wang. The unreasonable effectiveness of deep features as a perceptual metric. In Proc. IEEE Conference on Computer Vision and Pattern Recognition, 2018.

- [38] Richard S. Sutton and Andrew G. Barto. Reinforcement learning: An introduction. MIT Press, Cambridge, 1998.

- [39] Wenjie Ruan, Xiaowei Huang, and Marta Kwiatkowska. Reachability analysis of deep neural networks with provable guarantees. In Proc. International Joint Conference on Artificial Intelligence, 2018.

- [40] Bolin Gao and Lacra Pavel. On the properties of the softmax function with application in game theory and reinforcement learning. arXiv preprint arXiv:1704.00805, 2017.

- [41] Francesco Orabona. Almost sure convergence of SGD on smooth non-convex functions. https://parameterfree.com/2020/10/05/almost-sure-convergence-of-sgd-on-smooth-non-convex-functions, 2020.

- [42] Olga Russakovsky, Jia Deng, Hao Su, Jonathan Krause, Sanjeev Satheesh, Sean Ma, Zhiheng Huang, Andrej Karpathy, Aditya Khosla, Michael Bernstein, Alexander C. Berg, and Li Fei-Fei. ImageNet Large Scale Visual Recognition Challenge. International Journal of Computer Vision, 115(3):211–252, 2015.

- [43] Christian Szegedy, Vincent Vanhoucke, Sergey Ioffe, Jonathon Shlens, and Zbigniew Wojna. Rethinking the inception architecture for computer vision. In Proc. IEEE Conference on Computer Vision and Pattern Recognition, 2016.

- [44] Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Deep residual learning for image recognition. In Proc. IEEE Conference on Computer Vision and Pattern Recognition, 2016.

- [45] Karen Simonyan and Andrew Zisserman. Very deep convolutional networks for large-scale image recognition. In Proc. International Conference on Learning Representations, 2015.

- [46] Zhengyu Zhao, Zhuoran Liu, and Martha Larson. Towards large yet imperceptible adversarial image perturbations with perceptual color distance. In Proc. IEEE Conference on Computer Vision and Pattern Recognition, pages 1039–1048, 2020.

- [47] Jie Hu, Li Shen, and Gang Sun. Squeeze-and-excitation networks. In Proc. IEEE Conference on Computer Vision and Pattern Recognition, 2018.

- [48] Florian Tramèr, Alexey Kurakin, Nicolas Papernot, Ian J. Goodfellow, Dan Boneh, and Patrick D. McDaniel. Ensemble adversarial training: Attacks and defenses. In Proc. International Conference on Learning Representations, 2018.