Transfer in Sequential Multi-armed Bandits via Reward Samples

Abstract

We consider a sequential stochastic multi-armed bandit problem where the agent interacts with bandit over multiple episodes. The reward distribution of the arms remain constant throughout an episode but can change over different episodes. We propose an algorithm based on UCB to transfer the reward samples from the previous episodes and improve the cumulative regret performance over all the episodes. We provide regret analysis and empirical results for our algorithm, which show significant improvement over the standard UCB algorithm without transfer.

I INTRODUCTION

The Multi-armed Bandit (MAB) problem [1, 2, 3] is a popular sequential decision-making problem where an agent interacts with the environment by taking actions at every time step and in return gets a random reward. The goal of the agent is to maximize the average reward received. Recently, there has been a lot of interest in the application of the MAB problem in the context of online advertisements and recommender systems[4, 5]. One of the problems highlighted in [5] is the user cold start problem, which is the inability of a recommender system to make a good recommendation for a new user in absence of any prior information. In this scenario, it is useful to transfer knowledge from other related users in order to make better initial recommendations to the new user. In the context of a MAB problem, transfer learning uses knowledge from one bandit problem in order to improve the performance of another related bandit problem [6, 7]. In particular, it helps to accelerate learning and make better decisions quickly.

In this paper, we consider a sequential stochastic MAB problem where the agent interacts with the environment sequentially in episodes (similar to [6]), where different episodes are synonymous with different tasks or different bandit problems. The reward distributions of the arms remain constant throughout the episode but change over different episodes. This scenario is useful, for instance, in recommender systems where the reward distributions of recommended items change in order to capture the changing user preferences over time. The goal is to leverage the knowledge from previous episodes in order to improve the performance in the current episode, thereby leading to an overall performance improvement. Towards this, we use reward samples from previous episodes to make decisions in the current episode. Our algorithm is based on the UCB algorithm for bandits [8].

Related Work: Transfer learning in the context of MAB has been studied in the framework of Multi-task learning [6, 9, 10, 11] and Meta-learning [12, 13, 14]. In Multi-task learning, the set of tasks are fixed and they are repeatedly encountered by the learning algorithm whereas in meta-learning, the algorithm learns to adapt to a new task after learning from a few tasks drawn from the same task distribution. For instance, the authors in [6] consider a finite set of bandit problems which are encountered repeatedly over time. In contrast, we consider an infinite set of bandit problems but assume that the problems are “similar” (we define the notion of “similarity” later). The idea of transferring knowledge using samples is used in the SW-UCB algorithm in [15], but it suffers from the notion of negative transfer, where knowledge transfer can degrade the performance. In contrast, our algorithm facilitates knowledge transfer while guaranteeing that there is no negative transfer.

The main contributions of the paper are:

(i) We develop an algorithm based on UCB to transfer knowledge using the reward samples from the previous episodes in a sequential stochastic MAB setting. Our algorithm has a better performance compared to UCB with no transfer.

(ii) We provide the regret analysis for the proposed algorithm and our regret upper bound explicitly captures the performance improvement due to transfer.

(iii) We show via numerical simulations that our algorithm is able to effectively transfer knowledge from previous episodes.

Notations: denotes the indicator function whose value is if the event (condition) is true, and otherwise. denotes null set.

II PRELIMINARIES AND PROBLEM STATEMENT

We consider the Multi-Armed Bandit problem with arms and episodes. The length of each episode is . Define and . At any given integer time , one among the arms is pulled and a random reward is received. Let and , denote the arm pulled at time and the corresponding random reward, respectively. We assume that and the rewards are independent across time and across all arms. In any given episode, the distributions of the arms do not change. However, they are allowed to be different over different episodes.

Let be the mean reward of arm in episode . Let denote the vector containing the mean rewards of all arms for episode . Further, let and denote an optimal arm111There may be more than one optimal arms which have equal maximum mean rewards. in episode and it’s mean reward, respectively. Define as the sub-optimality gap of arm in episode . Note that the mean rewards of the arms are unknown.

We assume that the episodes in the MAB problem are related in the sense that the mean rewards of the arms across episodes do not change considerably. We capture this by the following assumption.

Assumption 1.

We assume that for any , where the parameter is assumed to be known.

This assumption implies that for each arm, the mean rewards across all episodes do not differ by more than . In applications like online advertising and recommender systems, the user preferences change over time only gradually, and therefore, the parameter can be used to capture this behaviour.

Let denote the number of pulls of arm in the time interval . Thus, counts the number of times arm is pulled from the beginning of episode until time . Note that for episode , the allowable values of in are . Further, let denote the number of pulls of arm in the time interval . Thus, counts the number of times arm is pulled from the beginning of episode until time . For example, if and , then counts the number of times arm is pulled in time instants and . Further, counts the number of times arm is pulled in the interval .

The goal of the agent is to decide which arm to pull (what should be the value of ) at any given time based on the information in order to maximize the average reward over all episodes. This is captured by the pseudo-regret of the MAB problem over episodes:

| (1) |

where the last equality follows since for any . Thus, the goal is to make decisions to minimize the regret in (II).

In this paper, we exploit the relation among the mean rewards of arms in different episodes (c.f. Assumption 1) in order to minimize the regret . This is achieved by reusing (transferring) reward samples from previous episodes to make decisions in the current episode. We describe the approach and the proposed algorithm in detail in the next section.

III ALL SAMPLE TRANSFER UCB (AST-UCB)

Our approach of reusing samples from previous episodes builds on the standard UCB algorithm for bandits. In this section, we first describe the UCB algorithm and then our proposed algorithm, which we call All Sample Transfer UCB (AST-UCB).

III-A UCB Algorithm [8]

Intuitively, the arm-pulling decisions should be made on the reward samples obtained from each arm. Since the mean rewards of the arms are unknown, the UCB algorithm computes their sample-average estimates and the corresponding confidence intervals. Then, based on the principle of optimism in the face of uncertainty, the upper (maximum) value in the confidence interval of each arm is treated as the optimistic mean reward of that arm. Then, the arm with the highest optimistic mean reward is pulled.

As time progresses and more reward samples are received, the estimates become better and the confidence intervals become smaller. Thus, the upper value in the confidence interval approaches the true mean. Eventually, the optimistic mean reward of the optimal arm becomes larger than all other sub-optimal arms, and thereafter, only the optimal arm is pulled.

The standard UCB algorithm is used when the arm distributions are assumed to be the same at all times. However, in our setting, the distributions change over episodes. Therefore, one approach would be to implement UCB algorithm separately in each episode by using only the samples of that particular episode. In other words, the UCB algorithm is restarted at the beginning of every episode and it uses only the reward samples received during the current episode. We call this approach as No Transfer UCB (NT-UCB) algorithm. Next, we explain NT-UCB algorithm for episode .

Let denote the sample-average estimate of the mean reward of arm at time , and is computed as:

| (2) |

where denotes the number of times arm is pulled until time since the beginning of episode . Next, we compute the optimistic mean reward corresponding to . For this, we require the following result.

Lemma 1.

Let . For episode , time and arm , with probability at least the following equation is satisfied

| (3) |

-

Proof.

The rewards are independent random variables with support . Using Hoeffding’s inequality[16] for estimate , we get

Setting for , the lemma follows. ∎

Using Lemma 1, we form a confidence interval for mean reward using the estimate at time in episode as

Next, the NT-UCB algorithm pulls the arm with maximum optimistic reward:

The above steps are repeated until the end of episode . Next, we provide an upper bound on the pseudo-regret of the NT-UCB algorithm.

Lemma 2.

Let . The pseudo-regret of NT-UCB satisfies

| (4) | ||||

-

Proof.

An upper bound on the regret over all episodes is obtained by adding the per-episode regret bound of the standard UCB algorithm, which is given as [1]222The second term in (4) differs from the corresponding term mentioned in [1], since additional union bounds are used to obtain the result in [1].

The result then follows. ∎

III-B AST-UCB Algorithm

For any particular episode, the NT-UCB algorithm mentioned above uses samples only in that episode to compute the estimates. However, as per Assumption 1, the mean rewards across the episodes are related, and therefore, reward samples in previous episodes carry information about the mean reward in the current episode. In order to capture this information, we construct an auxiliary estimate (in addition to the UCB estimate) that uses the reward samples from the beginning of the first episode. Then, we combine these two estimates to make the decisions. Next, we describe this approach for episode .

Let denote the auxiliary sample-average estimate of the mean reward of arm at time , computed as:

| (5) |

where denotes the number of times arm is pulled until time since the beginning of episode . Note that estimate captures the information of reward samples of arm from all previous episodes 333An alternate strategy would be to construct the auxiliary estimate from a fixed number of previous episodes. However, our strategy is better since the confidence interval corresponding to estimate (5) is always better than this alternate strategy.. Next, we compute the optimistic mean reward corresponding to . For this, we require the following result.

Lemma 3.

Let . For episode , time and arm , with probability at least the following equation is satisfied

| (6) | ||||

-

Proof.

The rewards are independent random variables with support . Using McDiarmid’s inequality[17] for estimate , we get

Setting for , we get

Hence, with probability at least , the following holds

(7) Next, we bound for , :

(8) where the inequality follows from (Asssumption 1). Similarly, using (Asssumption 1), we get

(9) Conditions (Proof.) and (9) yield . Using this in (7), we get the result in (6). ∎

Using Lemma 3, we form a confidence interval for mean reward using the estimate at time step in episode as

Next, we present two key steps of the AST-UCB algorithm.

(ii) Pull arm

The above steps are repeated until the end of episode . All the steps of AST-UCB are given below in Algorithm 1.

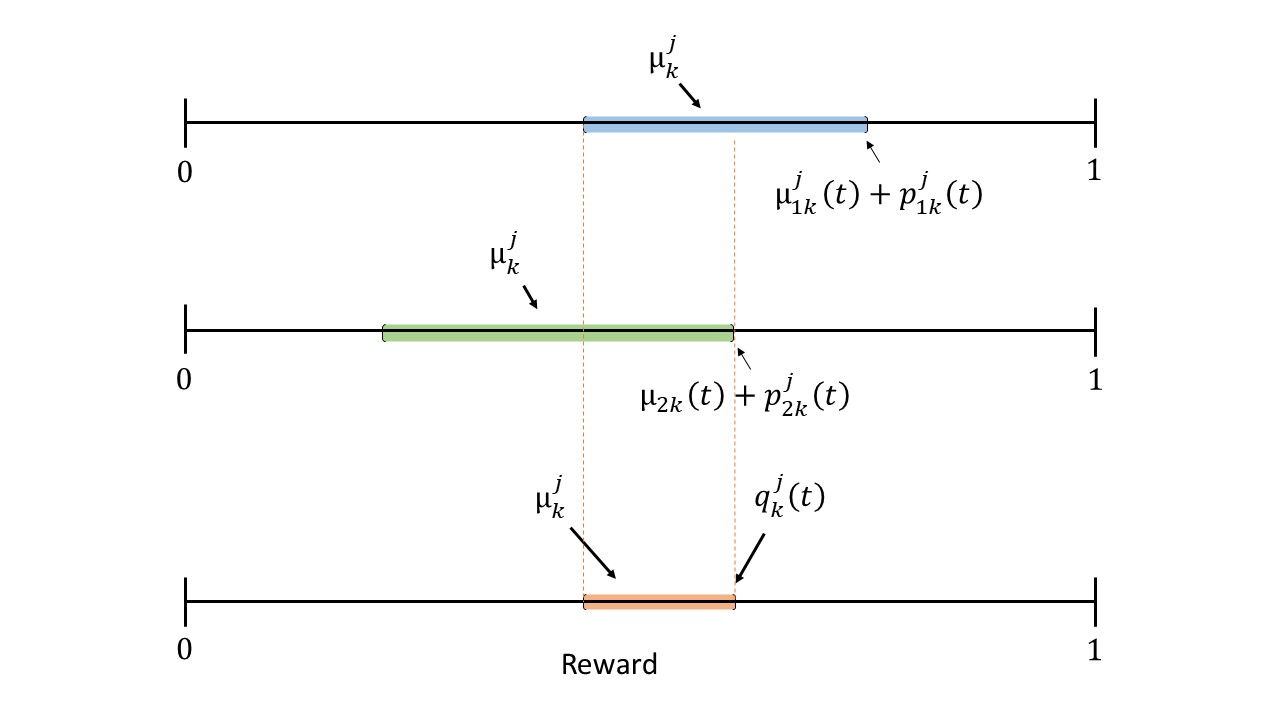

Next, we explain the motivation for Step (i). We combine the confidence intervals and by taking their intersection to get a better confidence interval. Note that by taking the intersection, the new confidence interval is always smaller than the original two confidence intervals, as illustrated in Figure 1. This smaller interval results in a better estimate of . We then pick the optimistic reward in the new confidence interval444Note that the Step (i) is valid even when and do not intersect.. Further, Step (ii) is similar to the UCB algorithm where we pull the arm with the maximum optimistic reward. The next result presents a bound on the probability of lying in the new confidence interval (the new confidence interval being non-empty).

Lemma 4.

For episode , time and arm , with probability at least the following equations are satisfied

| (11) | ||||

| (12) |

- Proof.

Note that although the new confidence interval is smaller, Lemma 4 shows that the probability bound of the mean reward belonging to this new interval has reduced as compared to that in (3) or (6). However, we show in Theorem 1 that the negative effect of the reduction of the probability is not significant, and the smaller interval leads to an overall reduction in the regret.

IV REGRET ANALYSIS

In this section, we derive the regret of the AST-UCB algorithm and then provide the analysis of the result.

Theorem 1.

Let and . The pseudo-regret of AST-UCB with and satisfies

| (13) |

-

Proof.

Refer to the appendix. ∎

Next, we compare the regret bounds of our algorithm (1) and NT-UCB (4), and highlight the benefit of transfer. The transfer happens due to the first term in (1). Hence, we compare the first terms in the regret bounds. To this end, we define the following terms that capture the dependence on :

Several comments are in order. First, observe that, for transfer to be beneficial, we need . Since , this can happen only if . The term behaves like a constant as compared to which increases as the total number of episodes increases. Therefore, for some large enough , we get which leads to decrease in the regret as compared to NT-UCB. Second, as increases (episodes become increasingly non-related), increases since more episodes (samples) are required for the transfer to be beneficial. Third, we have logarithmic dependence of episode length on the regret (which is the case with NT-UCB as well). Fourth, the second term in the regret bound of AST-UCB (1) is higher than the corresponding term in NT-UCB bound (4) due to the decreased probability bound in Lemma 4 as compared to Lemmas 1 and 3.

V Numerical Simulations

In this section, we present the numerical results for AST-UCB algorithm. We consider armed bandit problem. In numerical simulations, we need to select the mean reward () of each arm for each episode which should satisfy Assumption 1. Towards this end, we fix a seed interval of length for each arm. Then, at the beginning of each episode, we uniformly sample the value of from this seed interval. This ensures that Assumption 1 is satisfied. Once the mean reward value is obtained, we construct a uniform distribution with mean and width . In case the support of this uniform distribution lies outside the interval , we reduce to avoid this. The reward samples are then generated from the uniform distribution. For each scenario, we compute the regret by taking an empirical average over independent realizations of that scenario.

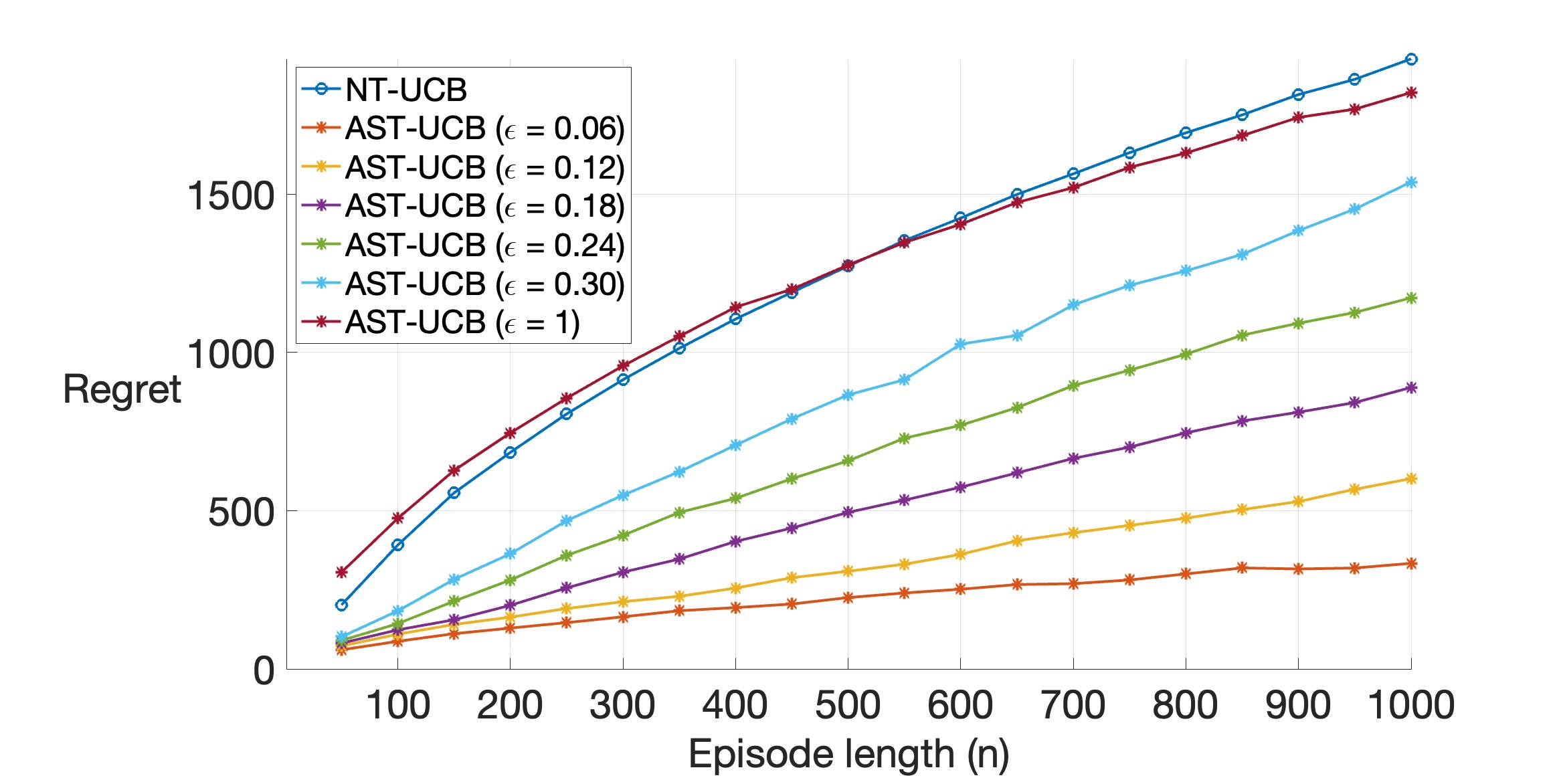

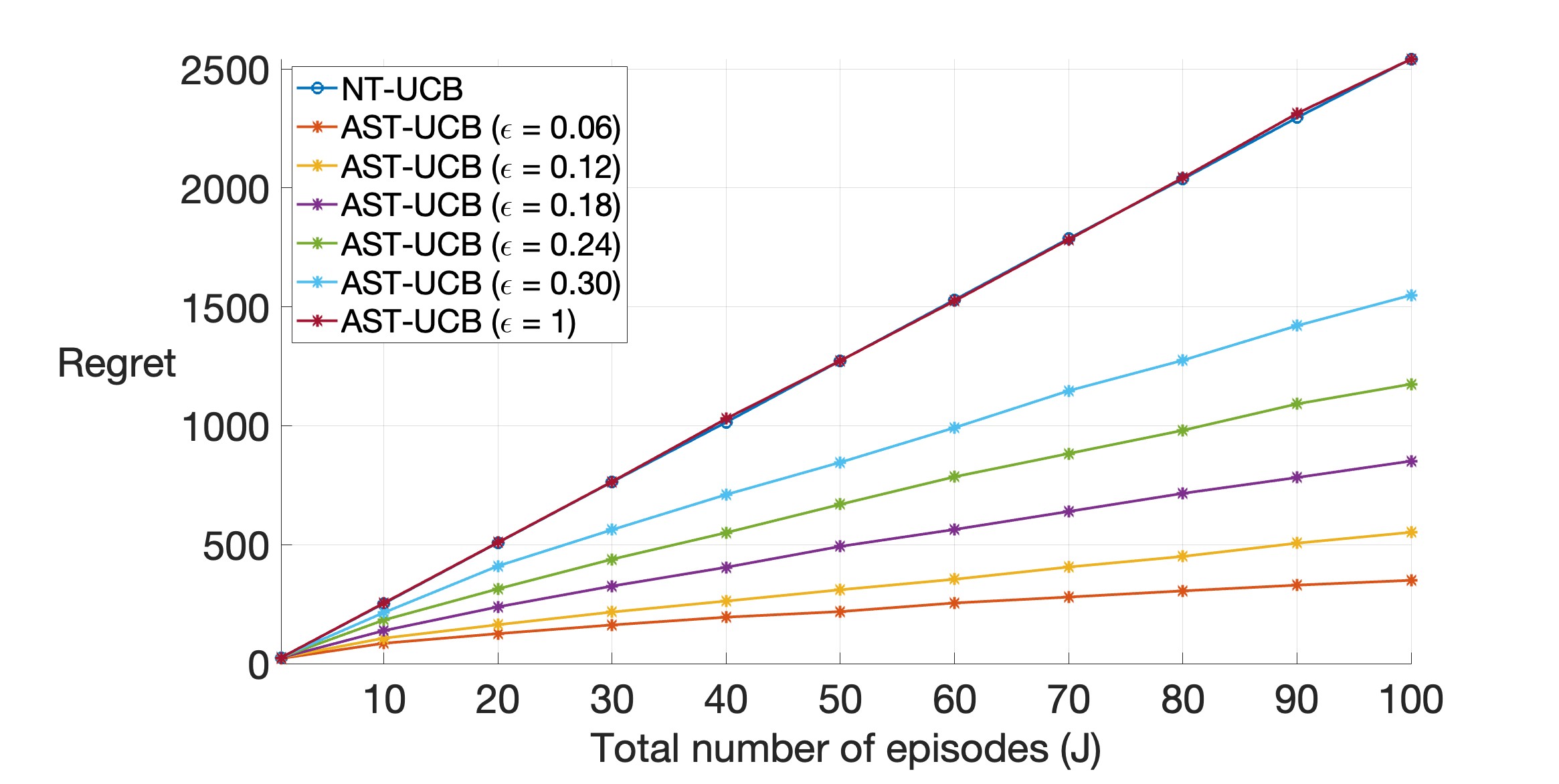

We simulate AST-UCB and NT-UCB for two cases (two sets of seed intervals). Note that the seed intervals for each arm are of length . The mid-points of the seed intervals of the four arms for Case I and Case II are and , respectively.

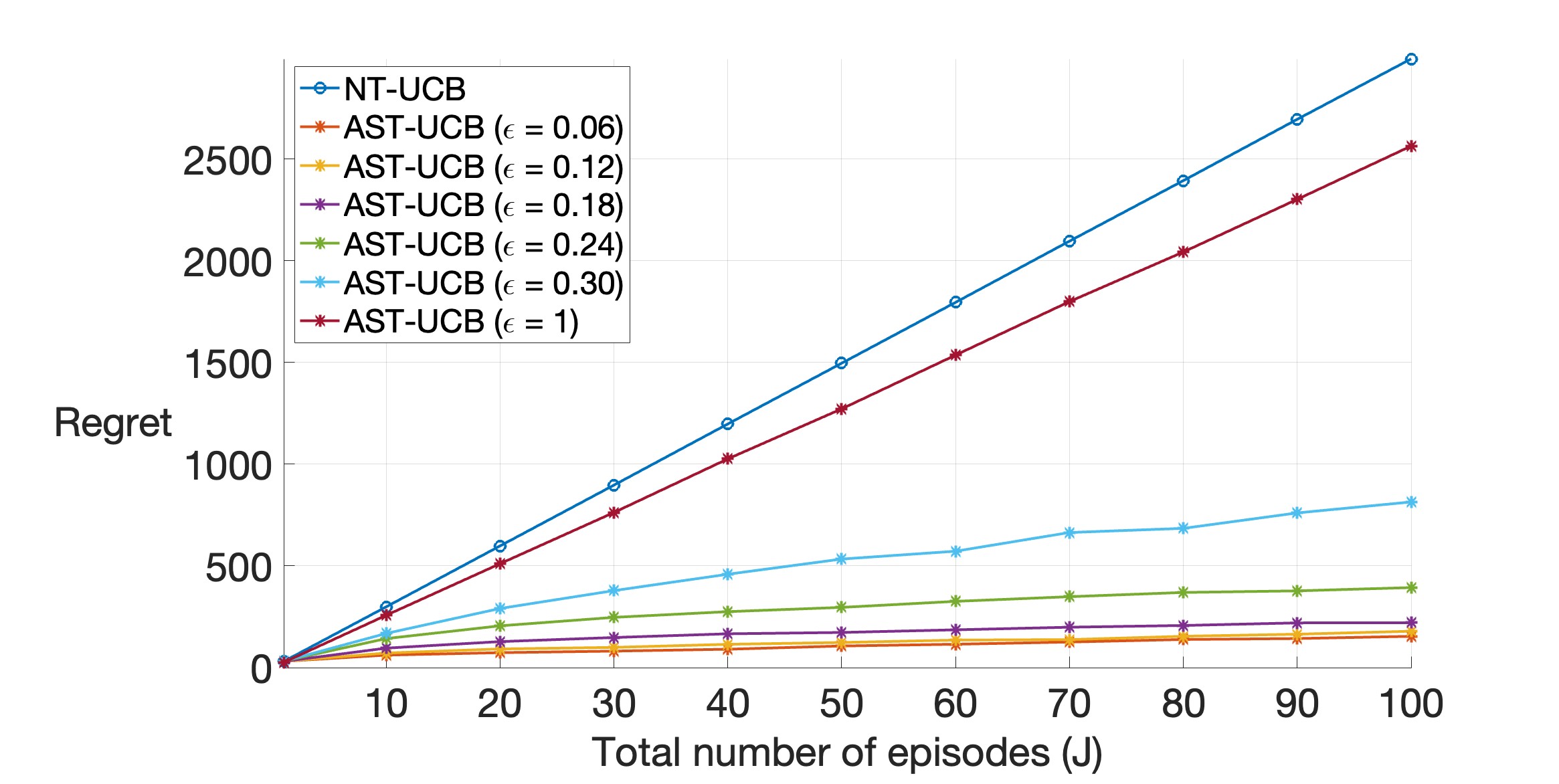

In Figure 2(a), we observe that the regret of AST-UCB is considerably smaller as compared to NT-UCB. This is particularly true for smaller values of . As increases555Since the reward support is , values of are not valid in our setting., the regret of AST-UCB approaches to that of NT-UCB. This is in accordance with the fact that when is large, the confidence interval of the auxiliary estimate in (6) is large and transfer is not much beneficial. Further, we observe a logarithmic dependence of the regret on , as quantified by the regret bounds in (4) and (1).

In Figure 2(b), we again observe that AST-UCB performs better than NT-UCB, particularly for small values of . We also observe that the regret has a “approximate” linear dependence on . The plots in Figures 2 show that for any value of the difference between the regret of NT-UCB and AST-UCB increases with episode length () or total number of episodes (). This is because a larger number of reward samples from previous episodes become available, thereby increasing the transfer.

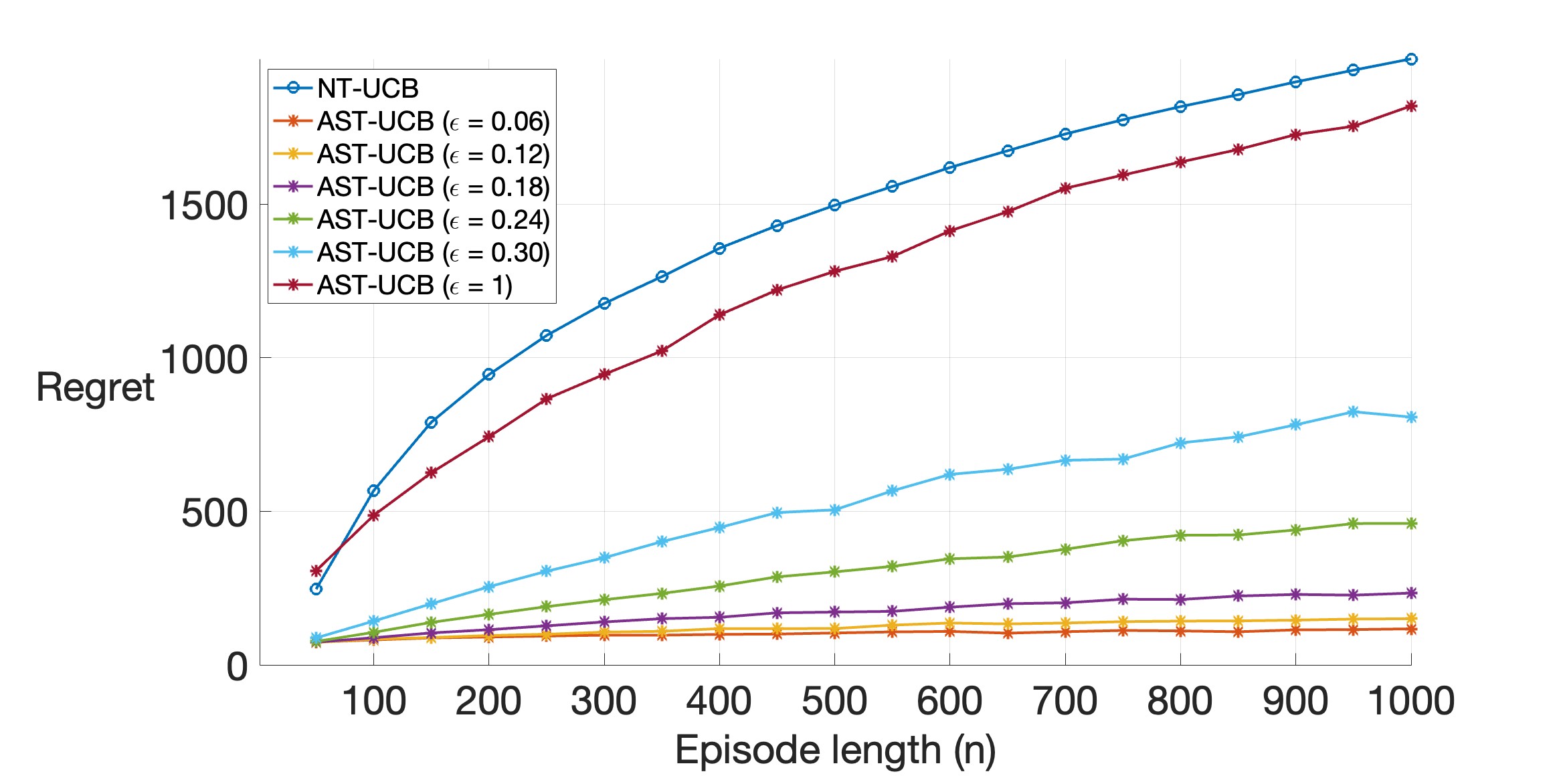

Similar observations can be seen in Figures 3(a) and 3(b) for Case II. However, the improvement of AST-UCB over NT-UCB in terms of regret is more in Case II as compared to Case I. This happens because the seed intervals in Case II are farther apart, which helps in distinguishing the best arm more quickly using the samples of previous episodes.

VI CONCLUSION

We analyzed the transfer of reward samples in a sequential stochastic multi-armed bandit setting. We proposed a transfer algorithm based on UCB and showed that its regret is lower than UCB with no transfer. We provide regret analysis of our algorithm and validate our approach via numerical experiments. Future research directions include extending the work to the case when the parameter is unknown, and studying a similar transfer problem in the context of reinforcement learning.

APPENDIX: Proof of Theorem 1

To simplify the notation, we re-denote several variables as , , , , , , ,

For arm to be pulled at time (), at least one of the following five conditions should be true:

| (14) | ||||

| (15) | ||||

| (16) | ||||

| (17) |

| (18) |

We show this by contradiction. Assume that none of the conditions in (14)-(17) is true and the first condition in (18) is false. Then, using the fact that , we have

| (19) | ||||

| (20) |

Conditions in (19) and (20) imply

| (21) |

Similarly, when none of the conditions in (14)-(17) is true and the second condition in (18) is false, we get

| (22) |

Thus, at least one of the conditions in (21) and (22) is true, and this yields

The above condition implies that the AST-UCB algorithm will not pull arm , and hence, we have a contradiction. The cumulative regret after episodes (each with length ) is given by

where is the total number of sub-optimal pulls to arm over all episodes. Next, we bound the regret by bounding the term . For an arbitrary sequence , , we have

| (23) |

| (24) |

| (25) |

Using (APPENDIX: Proof of Theorem 1), (APPENDIX: Proof of Theorem 1), (APPENDIX: Proof of Theorem 1) and taking expectation, we get

| (26) | ||||

Next, we bound the probability of the event that at least one of (14) or (15) or (16) or (17) is true. We use the union bound, followed by the application of one-sided Hoeffding’s inequality (steps are similar to the proof of Lemma 1 and 3) to get,

| (27) |

Using (APPENDIX: Proof of Theorem 1) and (APPENDIX: Proof of Theorem 1), we have

Hence the theorem follows.

ACKNOWLEDGMENT

References

- [1] S. Bubeck, N. Cesa-Bianchi, et al., “Regret analysis of stochastic and nonstochastic multi-armed bandit problems,” Foundations and Trends® in Machine Learning, vol. 5, no. 1, pp. 1–122, 2012.

- [2] T. Lattimore and C. Szepesvári, Bandit algorithms. Cambridge University Press, 2020.

- [3] H. Robbins, “Some aspects of the sequential design of experiments,” 1952.

- [4] D. Bouneffouf, I. Rish, and C. Aggarwal, “Survey on applications of multi-armed and contextual bandits,” in 2020 IEEE Congress on Evolutionary Computation (CEC), pp. 1–8, 2020.

- [5] N. Silva, H. Werneck, T. Silva, A. C. Pereira, and L. Rocha, “Multi-armed bandits in recommendation systems: A survey of the state-of-the-art and future directions,” Expert Systems with Applications, vol. 197, p. 116669, 2022.

- [6] A. Lazaric, E. Brunskill, et al., “Sequential transfer in multi-armed bandit with finite set of models,” Advances in Neural Information Processing Systems, vol. 26, 2013.

- [7] A. Shilton, S. Gupta, S. Rana, and S. Venkatesh, “Regret bounds for transfer learning in bayesian optimisation,” in Artificial Intelligence and Statistics, pp. 307–315, PMLR, 2017.

- [8] P. Auer, N. Cesa-Bianchi, and P. Fischer, “Finite-time analysis of the multiarmed bandit problem,” Machine learning, vol. 47, pp. 235–256, 2002.

- [9] J. Zhang and E. Bareinboim, “Transfer learning in multi-armed bandit: a causal approach,” in Proceedings of the 16th Conference on Autonomous Agents and MultiAgent Systems, pp. 1778–1780, 2017.

- [10] A. A. Deshmukh, U. Dogan, and C. Scott, “Multi-task learning for contextual bandits,” Advances in Neural Information Processing Systems, vol. 30, 2017.

- [11] B. Liu, Y. Wei, Y. Zhang, Z. Yan, and Q. Yang, “Transferable contextual bandit for cross-domain recommendation,” in Proceedings of the AAAI Conference on Artificial Intelligence, vol. 32, 2018.

- [12] L. Cella, A. Lazaric, and M. Pontil, “Meta-learning with stochastic linear bandits,” in International Conference on Machine Learning, pp. 1360–1370, PMLR, 2020.

- [13] L. Cella and M. Pontil, “Multi-task and meta-learning with sparse linear bandits,” in Uncertainty in Artificial Intelligence, pp. 1692–1702, PMLR, 2021.

- [14] J. Azizi, B. Kveton, M. Ghavamzadeh, and S. Katariya, “Meta-learning for simple regret minimization,” in Proceedings of the AAAI Conference on Artificial Intelligence, vol. 37, pp. 6709–6717, 2023.

- [15] A. Garivier and E. Moulines, “On upper-confidence bound policies for switching bandit problems,” in International Conference on Algorithmic Learning Theory, pp. 174–188, Springer, 2011.

- [16] W. Hoeffding, “Probability inequalities for sums of bounded random variables,” The collected works of Wassily Hoeffding, pp. 409–426, 1994.

- [17] C. McDiarmid et al., “On the method of bounded differences,” Surveys in combinatorics, vol. 141, no. 1, pp. 148–188, 1989.