
Transfer Learning with Kernel Methods

Abstract

Transfer learning refers to the process of adapting a model trained on a source task to a target task. While kernel methods are conceptually and computationally simple machine learning models that are competitive on a variety of tasks, it has been unclear how to perform transfer learning for kernel methods. In this work, we propose a transfer learning framework for kernel methods by projecting and translating the source model to the target task. We demonstrate the effectiveness of our framework in applications to image classification and virtual drug screening. In particular, we show that transferring modern kernels trained on large-scale image datasets can result in substantial performance increase as compared to using the same kernel trained directly on the target task. In addition, we show that transfer-learned kernels allow a more accurate prediction of the effect of drugs on cancer cell lines. For both applications, we identify simple scaling laws that characterize the performance of transfer-learned kernels as a function of the number of target examples. We explain this phenomenon in a simplified linear setting, where we are able to derive the exact scaling laws. By providing a simple and effective transfer learning framework for kernel methods, our work enables kernel methods trained on large datasets to be easily adapted to a variety of downstream target tasks.

1 Introduction

Transfer learning refers to the machine learning problem of utilizing knowledge from a source task to improve performance on a target task. Recent approaches to transfer learning have achieved tremendous empirical success in many applications including in computer vision [45, 17], natural language processing [40, 43, 16], and the biomedical field [19, 15]. Since transfer learning approaches generally rely on complex deep neural networks, it can be difficult to characterize when and why they work [44]. Kernel methods [46] are conceptually and computationally simple machine learning models that have been found to be competitive with neural networks on a variety of tasks including image classification [3, 29, 42] and drug screening [42]. Their simplicity stems from the fact that training a kernel method involves performing linear regression after transforming the data. There has been renewed interest in kernels due to a recently established equivalence between wide neural networks and kernel methods [25, 2], which has led to the development of modern, neural tangent kernels (NTKs) that are competitive with neural networks. Given their simplicity and effectiveness, kernel methods could provide a powerful approach for transfer learning and also help characterize when transfer learning between a source and target task would be beneficial. However, developing an algorithm for transfer learning with kernel methods for general source and target tasks has been an open problem. In particular, while there is a standard transfer learning approach for neural networks that involves replacing and re-training the last layer of a pre-trained network, there is no known corresponding operation for kernels. The limited prior work on transfer learning with kernels focuses on applications in which the source and target tasks have the same label sets [14, 30, 37]. 
Examples include predicting stock returns for a given sector based on returns available for other sectors [30] or predicting electricity consumption for certain zones of the United States based on the consumption in other zones [37]. These methods are not applicable to general source and target tasks with differing label dimensions, which includes classical transfer learning applications such as using a model trained to classify between thousands of objects to subsequently classify new objects.

In this work, we present a general framework for performing transfer learning with kernel methods. Unlike prior work, our framework enables transfer learning for kernels regardless of whether the source and target tasks have the same or differing label sets. Furthermore, as with transfer learning for neural networks, our framework allows transferring to a variety of target tasks after training a kernel method only once on a source task. To provide some intuition for our proposed framework: instead of replacing and re-training the last layer of a neural network, as is standard for transfer learning with neural networks, our approach for kernels corresponds to adding a new layer to the end of a neural network.

The key components of our transfer learning framework are: Train a kernel method on a source dataset and then apply the following operations to transfer the model to the target task.

-

•

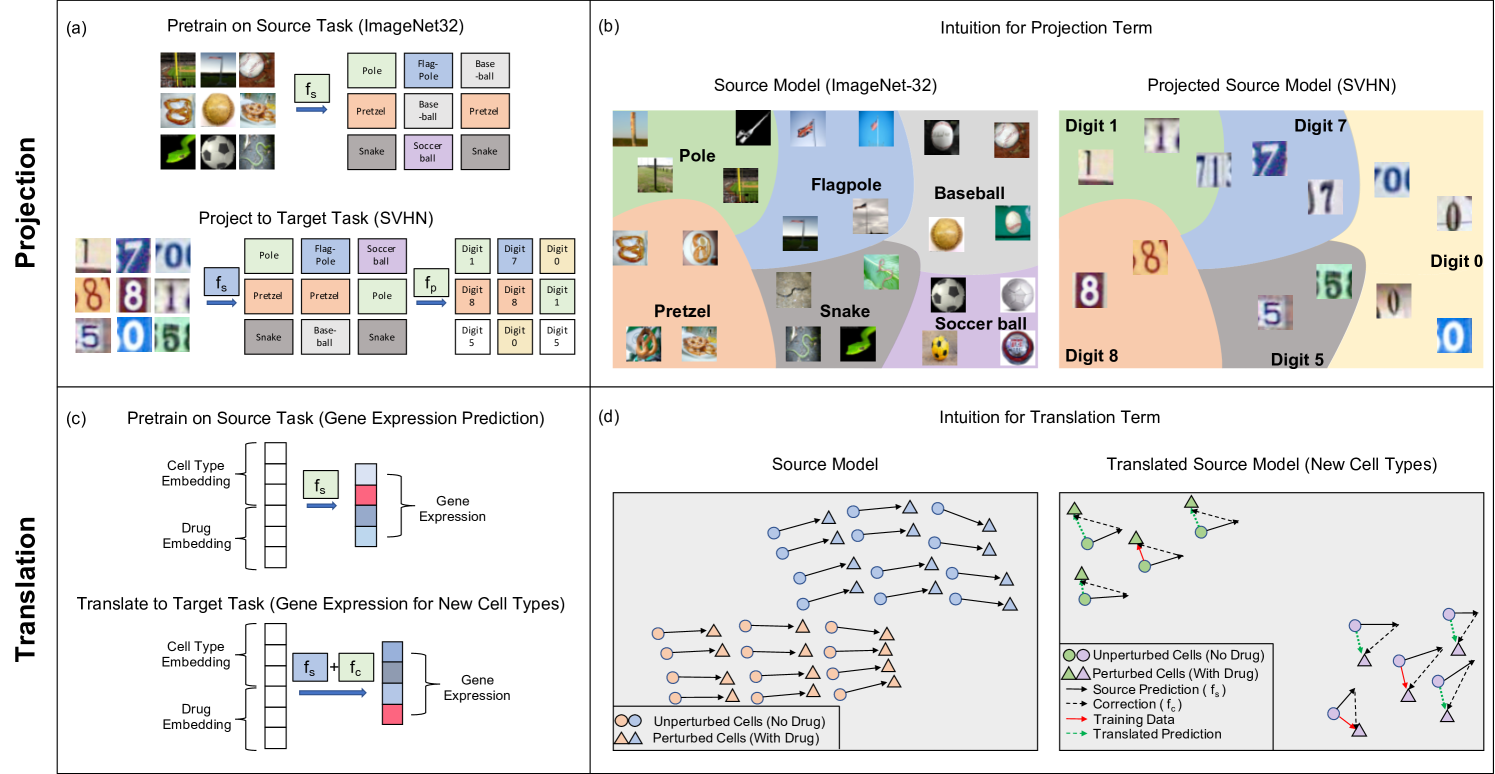

Projection. We apply the trained source kernel to each sample in the target dataset and then train a secondary model on these source predictions to solve the target task; see Fig. 1a.

-

•

Translation. When the source and target tasks have the same label sets, we train a correction term that is added to the source model to adapt it to the target task; see Fig. 1c.

Projection is effective when the source model predictions contain information regarding the target labels. We will demonstrate that this is the case in image classification tasks in which the predictions of a classifier trained to distinguish between a thousand objects in ImageNet32 [11] provide information regarding the labels of images in other datasets such as street view house numbers (SVHN) [33]; see Fig. 1b. In particular, we will show across 23 different source and target task combinations that kernels transferred using our approach achieve up to a increase in accuracy over kernels trained on target tasks directly.

On the other hand, translation is effective when the predictions of the source model can be corrected to match the labels of the target task via an additive term. We will show that this is the case in virtual drug screening in which a model trained to predict the effect of a drug on one cell line can be adjusted to capture the effect on a new cell line; see Fig. 1d. In particular, we will show that our transfer learning approach provides an improvement to prior kernel method predictors [42] even when transferring to cell lines and drugs not present in the source task.

Interestingly, we observe that for both applications, image classification and virtual drug screening, transfer-learned kernel methods follow simple scaling laws; i.e., how the number of available target samples affects the performance on the target task can be accurately modelled. As a consequence, our work provides a simple method for estimating the impact of collecting more target samples on the performance of the transfer-learned kernel predictors. In the simplified setting of transfer learning with linear kernel methods, we are able to mathematically derive the scaling laws, thereby providing a mathematical basis for the empirical observations. Overall, our work demonstrates that transfer learning with kernel methods between general source and target tasks is possible and demonstrates the simplicity and effectiveness of the proposed method on a variety of important applications.

2 Results

In the following, we present our framework for transfer learning with kernel methods more formally. Since kernel methods are fundamental to this work, we start with a brief review.

Given training examples , corresponding labels , a standard nonlinear approach to fitting the training data is to train a kernel machine [46]. This approach involves first transforming the data, , with a feature map, , and then performing linear regression. To avoid defining and working with feature maps explicitly, kernel machines rely on a kernel function, , which corresponds to taking inner products of the transformed data, i.e., . The trained kernel machine predictor uses the kernel instead of the feature map and is given by:

| (1) |

and with and with . Note that for datasets with over samples, computing the exact minimizer is computationally prohibitive, and we instead use fast, approximate iterative solvers such as EigenPro [31]. For a more detailed description of kernel methods see Appendix A.
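The training procedure described above can be illustrated with a minimal NumPy sketch. It is not the implementation used for the experiments: it assumes samples are stored as rows (rather than columns), uses a Laplace kernel with a hypothetical `bandwidth` parameter, and solves the linear system exactly with a dense least-squares routine instead of an iterative solver such as EigenPro. The names `laplace_kernel` and `fit_kernel_machine` are illustrative.

```python
import numpy as np

def laplace_kernel(A, B, bandwidth=10.0):
    """Laplace kernel exp(-||a - b||_2 / bandwidth) for all rows a of A, b of B.

    The bandwidth value is an assumption chosen for illustration only.
    """
    sq = (A**2).sum(1)[:, None] + (B**2).sum(1)[None, :] - 2.0 * A @ B.T
    return np.exp(-np.sqrt(np.maximum(sq, 0.0)) / bandwidth)

def fit_kernel_machine(X, Y, kernel=laplace_kernel):
    """Fit a kernel machine by linear regression on the kernel matrix.

    X: (n, d) training examples, Y: (n, c) labels.
    Returns a function mapping (m, d) inputs to (m, c) predictions.
    """
    K = kernel(X, X)                                # (n, n) kernel matrix
    alpha = np.linalg.lstsq(K, Y, rcond=None)[0]    # minimum-norm least-squares solution
    return lambda X_new: kernel(X_new, X) @ alpha

# Usage on random data: the fitted predictor interpolates the training labels.
rng = np.random.default_rng(0)
X_train = rng.normal(size=(50, 5))
Y_train = rng.normal(size=(50, 2))
predictor = fit_kernel_machine(X_train, Y_train)
train_error = np.abs(predictor(X_train) - Y_train).max()
```

A dense solve of this kind costs cubic time in the number of samples, which is why approximate iterative solvers become necessary for large datasets.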

For the experiments in this work, we utilize a variety of kernel functions. In particular, we consider the classical Laplace kernel given by , which is a standard benchmark kernel that has been widely used for image classification and speech recognition [31]. In addition, we consider recently discovered kernels that correspond to infinitely wide neural networks. While there is an emerging understanding that increasingly wider neural networks generalize better [5, 32], such models are generally computationally difficult to train. Remarkably, recent work identified conditions under which neural networks in the limit of infinite width implement kernel machines; the corresponding kernel is known as the Neural Tangent Kernel (NTK) [25]. In the following, we use the NTK corresponding to training an infinitely wide ReLU fully connected network [25] and also the convolutional NTK (CNTK) corresponding to training an infinitely wide ReLU convolutional network [2].¹

¹ We chose to use the CNTK without global average pooling (GAP) [2] for our experiments. While the CNTK model with GAP as well as the models considered in [8] give higher accuracy on image datasets, they are prohibitively expensive to compute for our large-scale experiments. For example, a CNTK with GAP is estimated to take 1200 GPU hours for 50k training samples [29].

Unlike the usual supervised learning setting where we train a predictor on a single domain, we will consider the following transfer learning setting from [50], which involves two domains: (1) a source with domain and data distribution ; and (2) a target with domain and data distribution . The goal is to learn a model for a target task by making use of a model trained on a source task . We let and denote the dimensionality of and respectively, i.e. for image classification these denote the number of classes in the source and target. Lastly, we let and denote the source and target dataset, respectively. Throughout this work, we assume that the source and target domains are equal (), but that the data distributions differ ().

Our work is concerned with the recovery of by transferring a model, , that is learned by training a kernel machine on the source dataset. To enable transfer learning with kernels, we propose the use of two methods, projection and translation. We first describe these methods individually and demonstrate their performance on transfer learning for image classification using kernel methods. For each method, we empirically establish scaling laws relating the quantities to the performance boost given by transfer learning, and we also derive explicit scaling laws when are linear maps. We then utilize a combination of the two methods to perform transfer learning in an application to virtual drug screening. Code and hardware details are available in Appendix L.

2.1 Transfer learning via projection

Projection involves learning a map from source model predictions to target labels and is thus particularly suited for situations where the number of labels in the source task is much larger than the number of labels in the target task .

Definition 1.

Given a source dataset and a target dataset , the projected predictor, , is given by:

| (2) |

where is a predictor trained on the source dataset.²

² When there are infinitely many possible values for the parameterized function , we consider the minimum norm solution.

While Definition 1 is applicable to any machine learning method, we focus on predictors and parameterized by kernel machines given their conceptual and computational simplicity. As illustrated in Fig. 1a and b, projection is effective when the predictions of the source model already provide useful information for the target task.
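Projection with kernel machines can be sketched as follows. This is a minimal illustration under stated assumptions, not the code used for the experiments: samples are rows, both the source and the secondary model use a Laplace kernel with a hypothetical bandwidth, and all function names are illustrative.

```python
import numpy as np

def laplace_kernel(A, B, bandwidth=10.0):
    # Pairwise Euclidean distances between rows of A and rows of B.
    sq = (A**2).sum(1)[:, None] + (B**2).sum(1)[None, :] - 2.0 * A @ B.T
    return np.exp(-np.sqrt(np.maximum(sq, 0.0)) / bandwidth)

def fit_kernel_machine(X, Y, kernel=laplace_kernel):
    # Minimum-norm least-squares coefficients for K @ alpha = Y.
    alpha = np.linalg.lstsq(kernel(X, X), Y, rcond=None)[0]
    return lambda X_new: kernel(X_new, X) @ alpha

def fit_projected_predictor(source_model, X_target, Y_target, kernel=laplace_kernel):
    """Train a secondary model on source predictions and compose it with the source model."""
    Z_target = source_model(X_target)                   # source predictions on target inputs
    secondary = fit_kernel_machine(Z_target, Y_target, kernel)
    return lambda X_new: secondary(source_model(X_new))

# Usage: the source task has 6 label dimensions, the target task has 2.
rng = np.random.default_rng(0)
X_s, Y_s = rng.normal(size=(40, 4)), rng.normal(size=(40, 6))
X_t, Y_t = rng.normal(size=(20, 4)), rng.normal(size=(20, 2))
source_model = fit_kernel_machine(X_s, Y_s)
projected = fit_projected_predictor(source_model, X_t, Y_t)
```

Because the source model is only evaluated and never re-trained, the same `source_model` can be reused for any number of target tasks.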

Improving kernel-based image classifier performance with projection. We now demonstrate the effectiveness of projected kernel predictors for image classification. In particular, we first train kernels to classify among 1000 objects across 1.28 million images in ImageNet32 and then transfer these models to 4 different target image classification datasets: CIFAR10 [28], Oxford 102 Flowers [35], Describable Textures Dataset (DTD) [12], and SVHN [33]. We selected these datasets since they cover a variety of transfer learning settings: all of the CIFAR10 classes are in ImageNet32, ImageNet32 contains only 2 flower classes, and none of the DTD or SVHN classes are in ImageNet32. A full description of the datasets is provided in Appendix B.

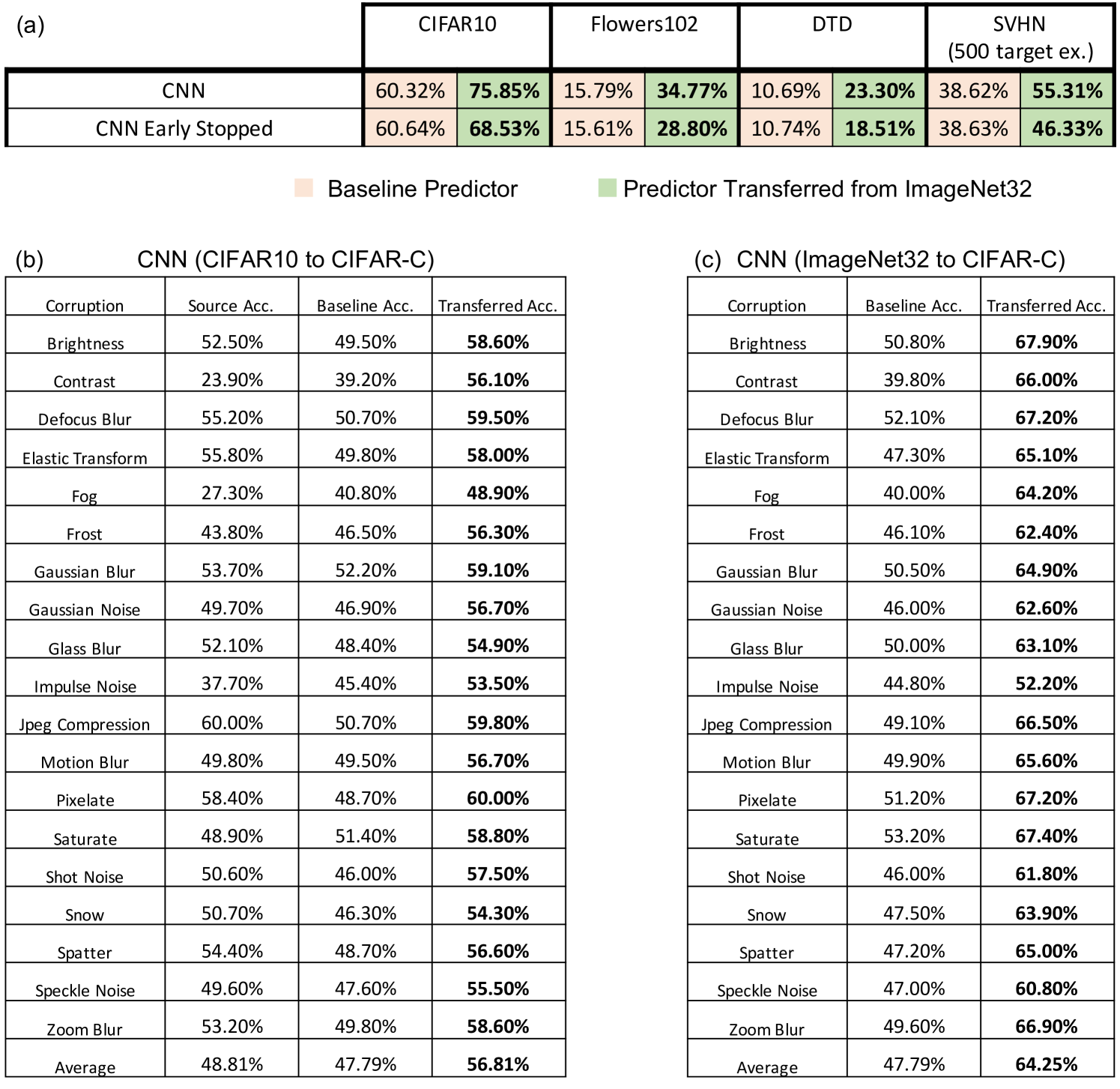

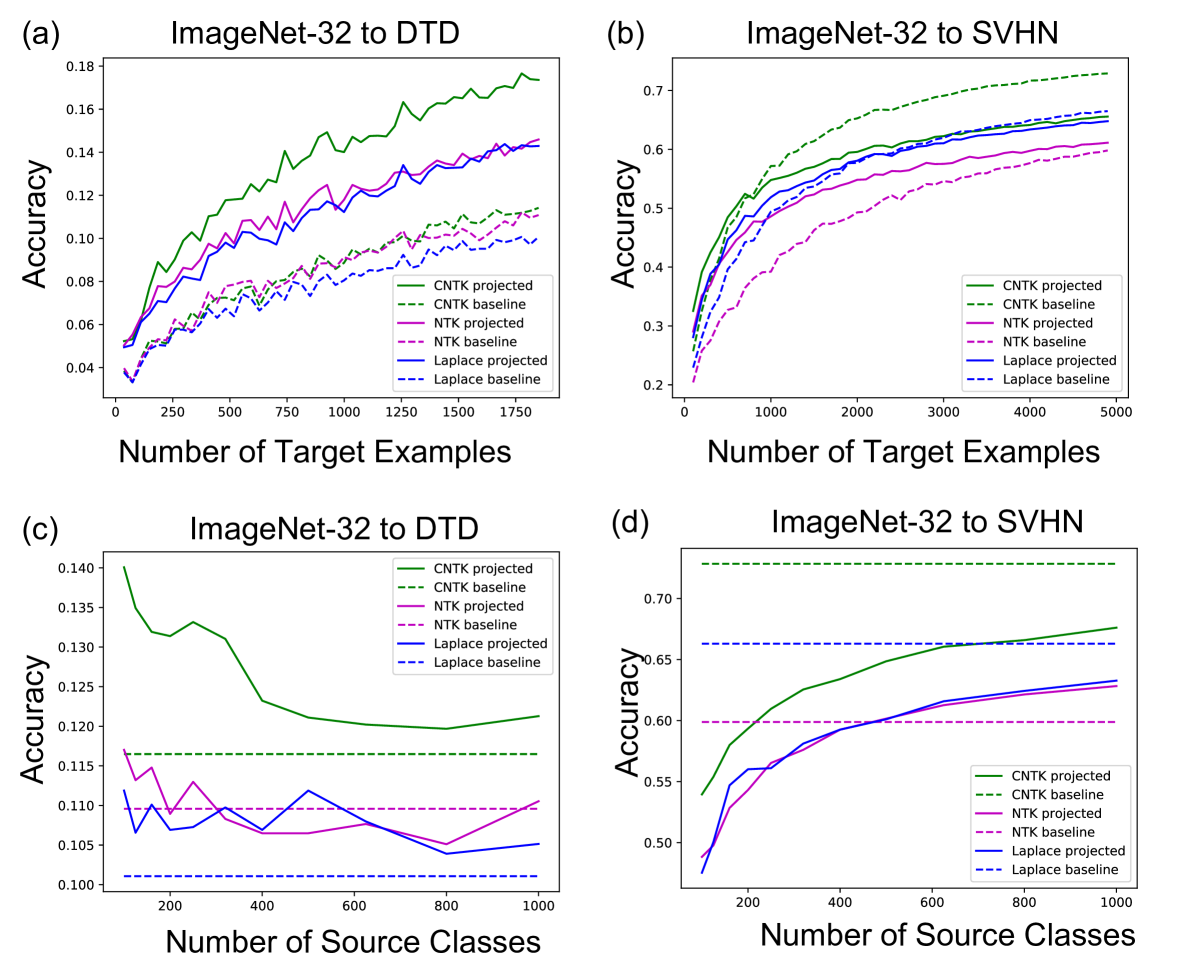

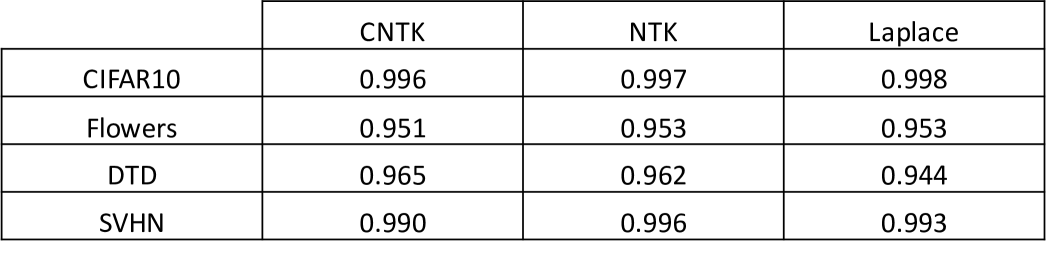

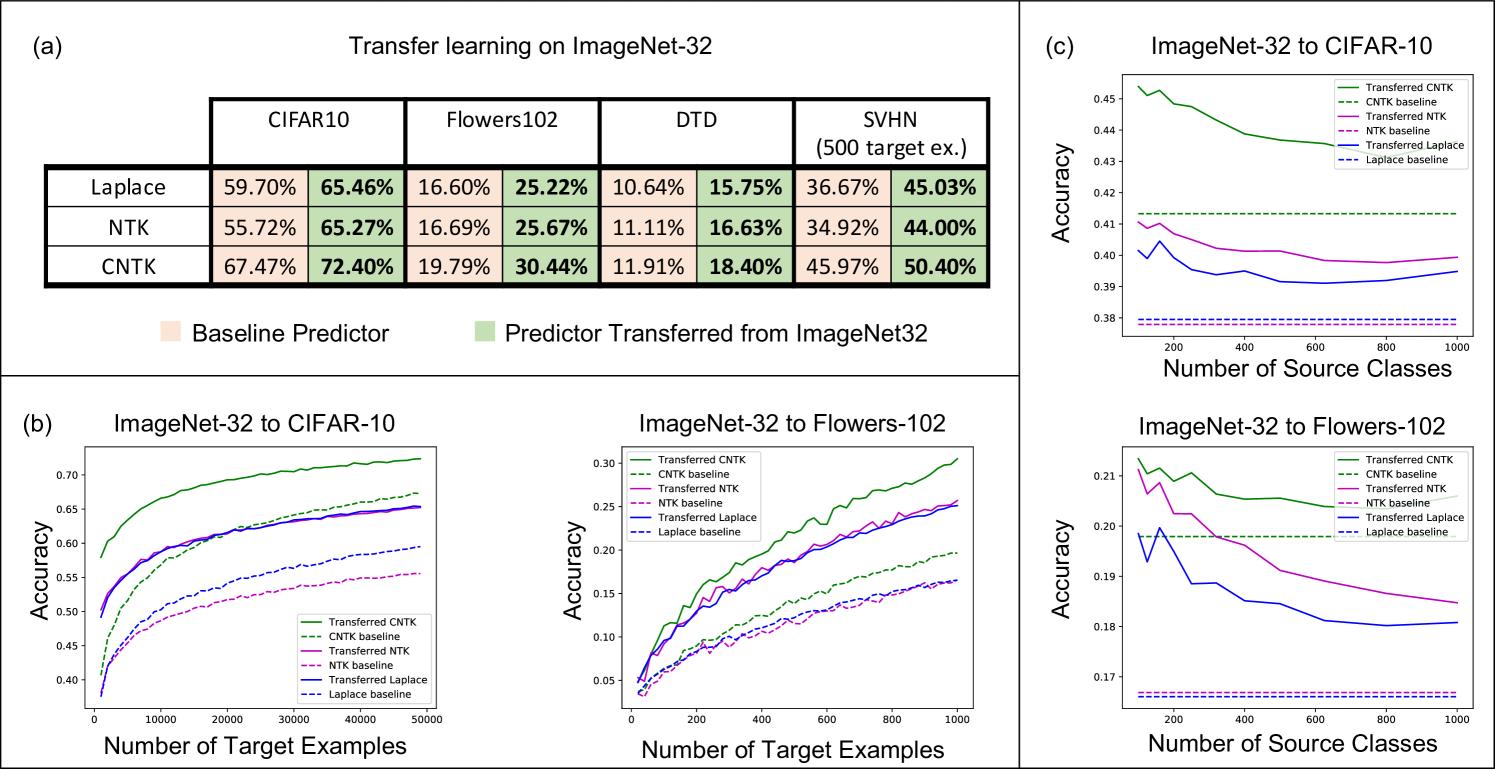

For all datasets, we compare the performance of 3 kernels (the Laplace kernel, NTK, and CNTK) when trained just on the target task, i.e. the baseline predictor, and when transferred via projection from ImageNet32. Training details for all kernels are provided in Appendix C. In Fig. 2a, we showcase the improvement of projected kernel predictors over baseline predictors across all datasets and kernels. We observe that projection yields a sizeable increase in accuracy (up to ) on the target tasks, thereby highlighting the effectiveness of this method. It is remarkable that this performance increase is observed even for transferring to Oxford 102 Flowers or DTD, datasets that have little to no overlap with images in ImageNet32.

In Appendix Fig. 5a, we compare our results with those of a finite-width neural network analog of the (infinite-width) CNTK where all layers of the source network are fine-tuned on the target task using the standard cross-entropy loss [20] and the Adam optimizer [27]. We observe that the performance gap between transfer-learned finite-width neural networks and the projected CNTK is largely influenced by the performance gap between these models on ImageNet32. In fact, in Appendix Fig. 5a, we show that finite-width neural networks trained to the same test accuracy on ImageNet32 as the (infinite-width) CNTK yield lower performance than the CNTK when transferred to target image classification tasks.

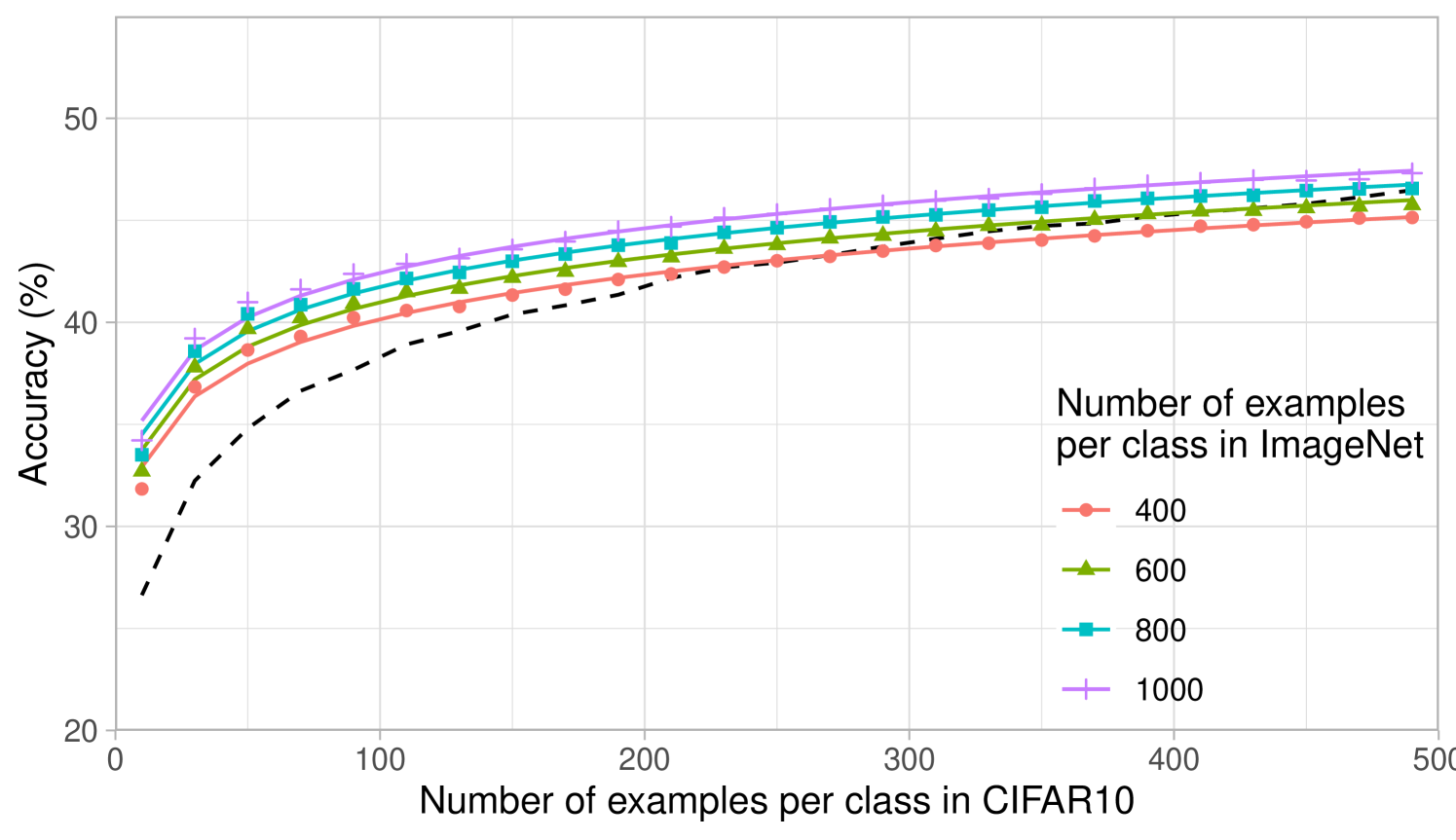

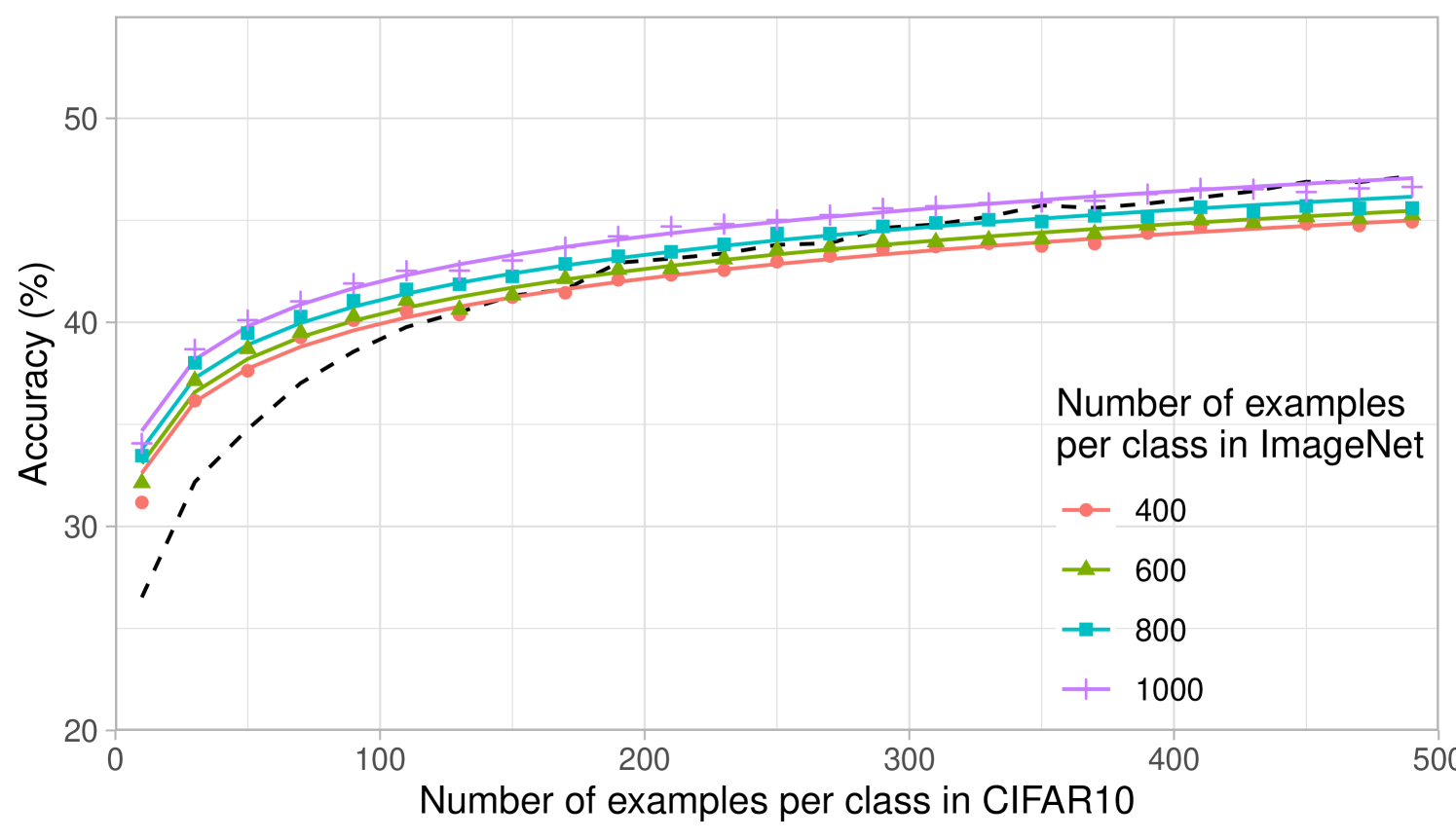

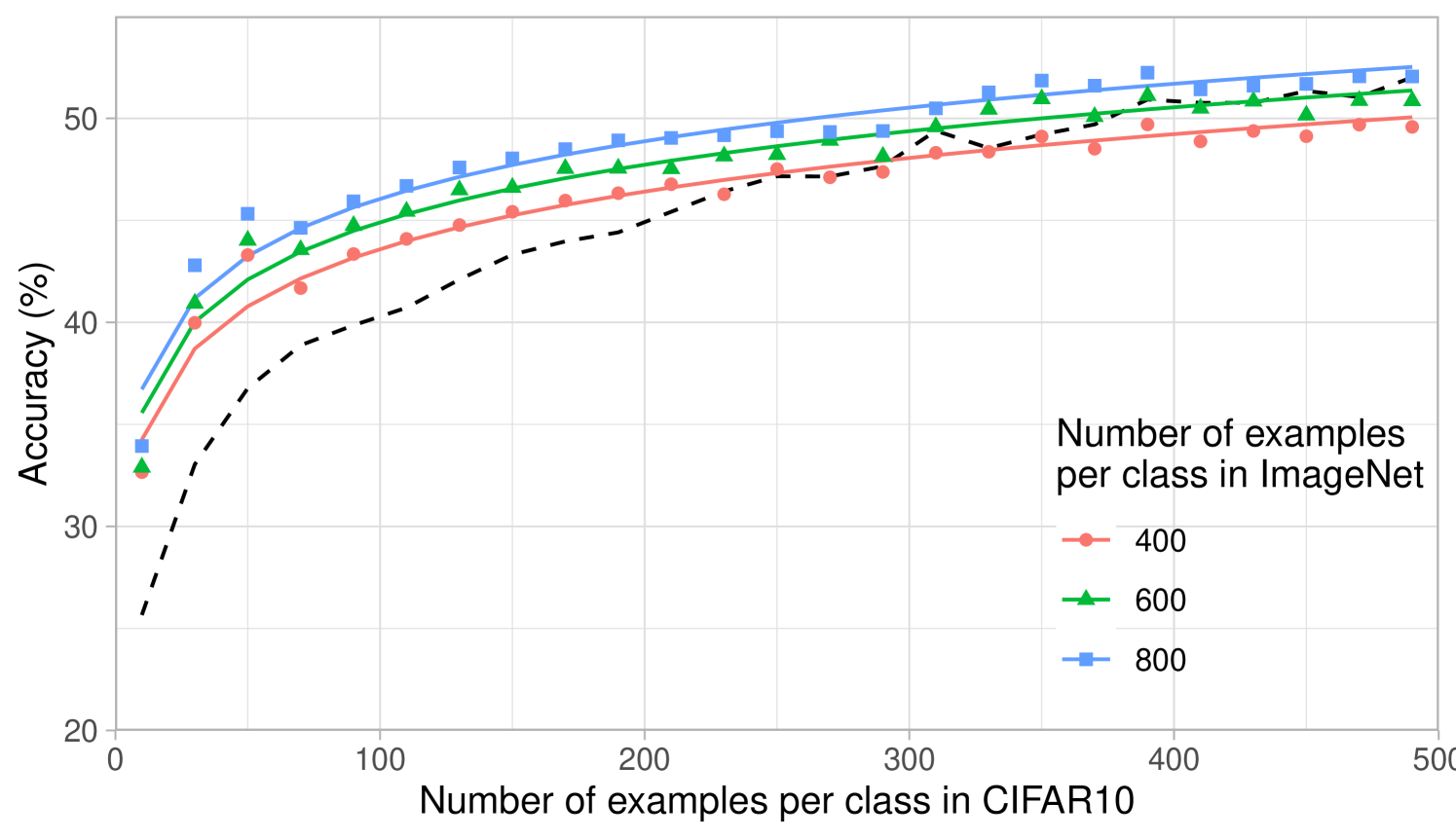

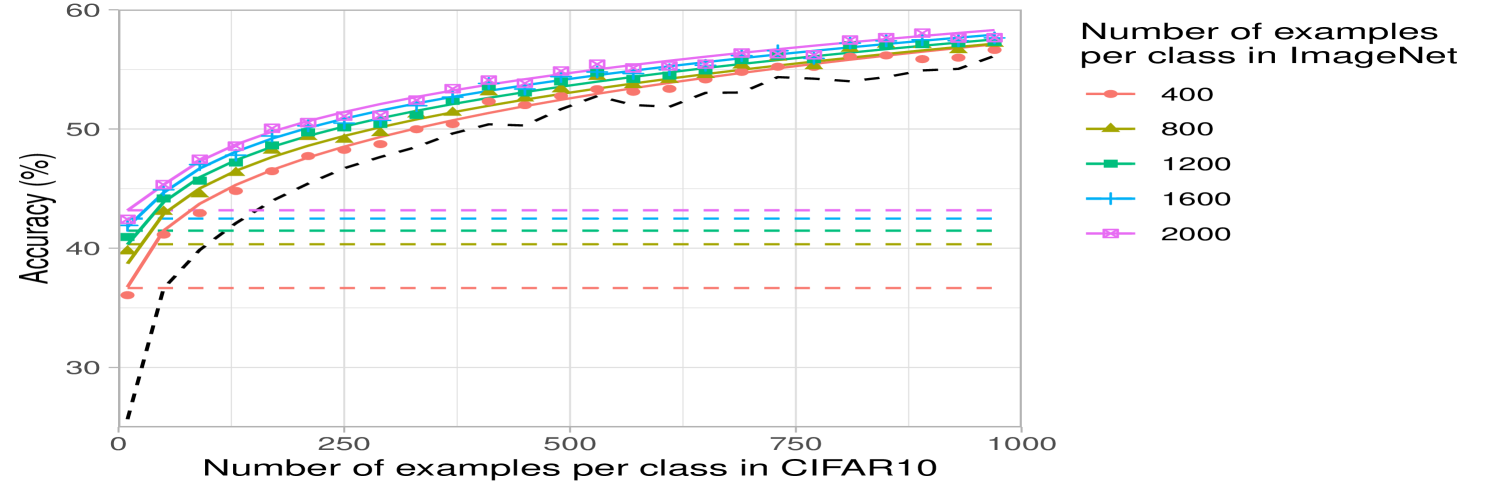

The computational simplicity of kernel methods allows us to compute scaling laws for the projected predictors. In Fig. 2b, we analyze how the performance of projected kernel methods varies as a function of the number of target examples, , for CIFAR10 and Oxford 102 Flowers. The results for DTD and SVHN are presented in Appendix Fig. 6a and b. For all target datasets, we observe that the accuracy of the projected predictors follows a simple logarithmic trend given by the curve for constants ( values on all datasets are above ). By fitting this curve on the accuracy corresponding to just the smallest five values of , we are able to predict the accuracy of the projected predictors within 2% of the reported accuracy for large values of (see Appendix D and Appendix Fig. 8). The robustness of this fit across many target tasks illustrates the practicality of the transferred kernel methods for estimating the number of target examples needed to achieve a given accuracy. Additional results on the scaling laws upon varying the number of source examples per class are presented in Appendix Fig. 7 for transferring between ImageNet32 and CIFAR10. In general, we observe that the performance increases as the number of source training examples per class increases, which is expected given the similarity of source and target tasks.
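The extrapolation procedure described above (fit on a few small target sample sizes, then predict accuracy at larger ones) can be sketched as follows. The functional form `a * log2(n) + b` is an assumption made for this illustration; changing the base of the logarithm only rescales `a`. The function names and the synthetic numbers in the usage example are hypothetical and are not results from the experiments.

```python
import numpy as np

def fit_log_scaling(num_target_examples, accuracies):
    """Least-squares fit of accuracy = a * log2(n) + b; returns (a, b)."""
    a, b = np.polyfit(np.log2(num_target_examples), accuracies, deg=1)
    return a, b

def predict_accuracy(num_target_examples, a, b):
    """Evaluate the fitted logarithmic curve at new sample sizes."""
    return a * np.log2(num_target_examples) + b

# Usage with synthetic accuracies that follow the assumed trend exactly.
small_n = np.array([100, 200, 400, 800, 1600])
synthetic_acc = 0.05 * np.log2(small_n) + 0.1
a, b = fit_log_scaling(small_n, synthetic_acc)
extrapolated = predict_accuracy(np.array([12800, 51200]), a, b)
```

Inverting the fitted curve, `n = 2 ** ((target_accuracy - b) / a)`, gives a rough estimate of how many target examples would be needed to reach a given accuracy, provided the trend continues to hold.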

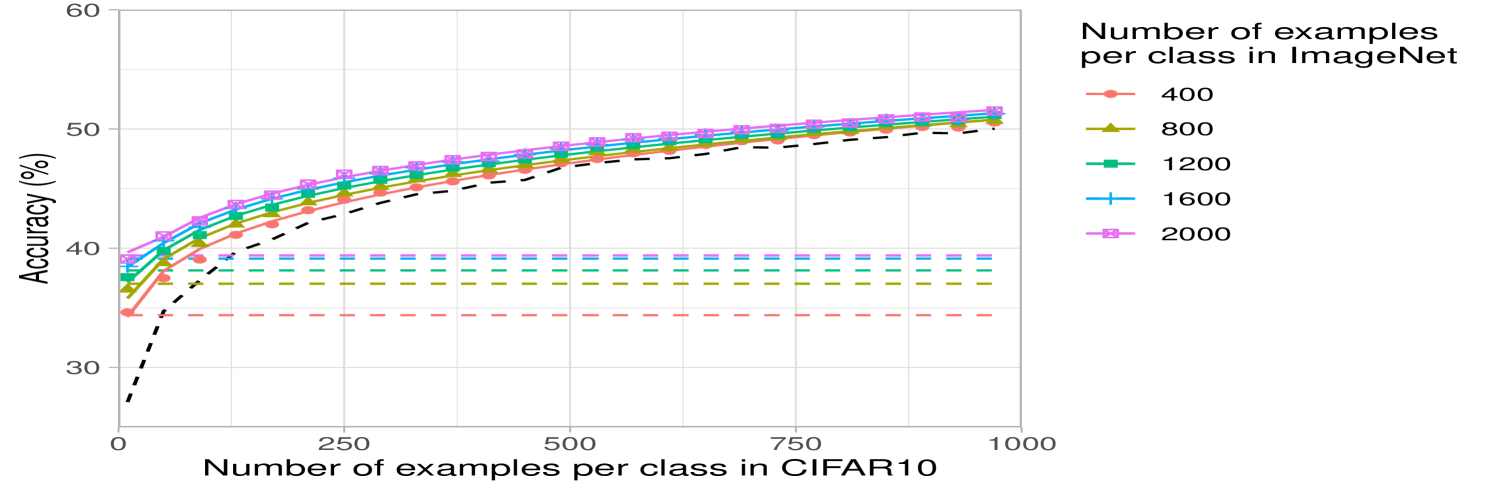

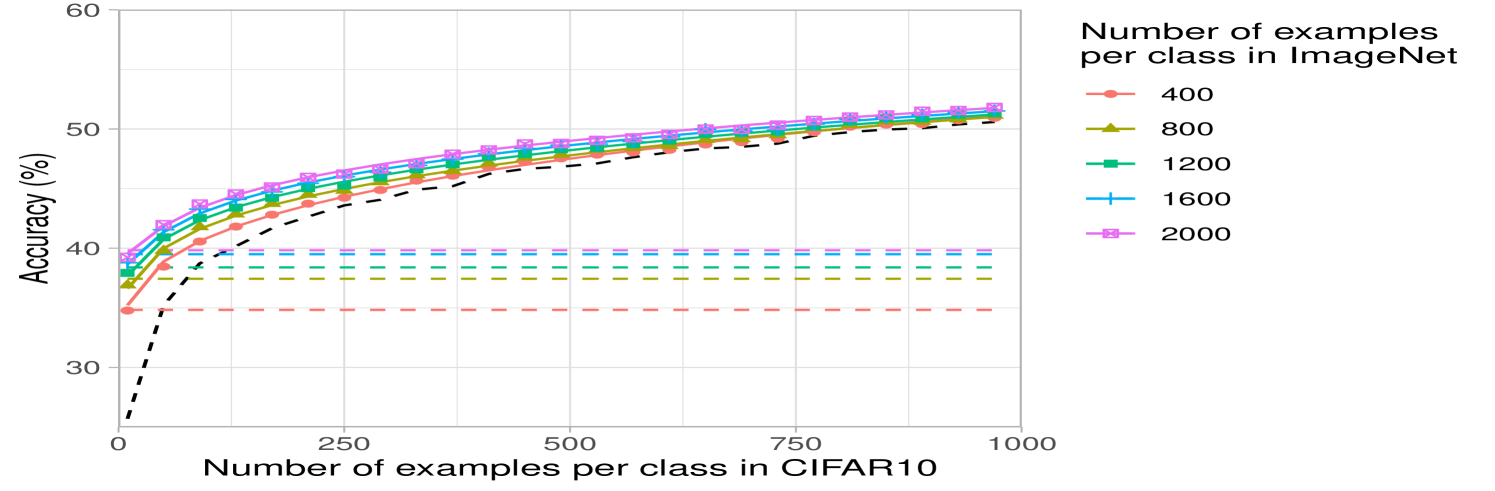

Lastly, we analyze the impact of increasing the number of classes while keeping the total number of source training examples fixed at k. Fig. 2c shows that having few samples for each class can be worse than having a few classes with many samples. This may be expected for datasets such as CIFAR10, where the classes overlap with the ImageNet32 classes: having few classes with more examples that overlap with CIFAR10 should be better than having many classes with fewer examples per class and less overlap with CIFAR10. A similar trend can be observed for DTD, but interestingly, the trend differs for SVHN, indicating that SVHN images can be better classified by projecting from a variety of ImageNet32 classes (see Appendix Fig. 6).

2.2 Transfer learning via translation

While projection involves composing a map with the source model, the second component of our framework, translation, involves adding a map to the source model as follows.

Definition 2.

Given a source dataset and a target dataset , the translated predictor, , is given by:

| (3) |

where is a predictor trained on the source dataset.³

³ When there are infinitely many possible values for the parameterized function , we consider the minimum norm solution.

Translated predictors correspond to first utilizing the trained source model directly on the target task and then applying a correction, , which is learned by training a model on the corrected labels, . As with the projected predictors, translated predictors can be implemented using any machine learning model, including kernel methods. When the predictors and are parameterized by linear models, translated predictors correspond to training a target predictor with weights initialized by those of the trained source predictor (proof in Appendix J). We note that training translated predictors is also a new form of boosting [9] between the source and target dataset, since the correction term accounts for the error of the source model on the target task. Lastly, we note that while the formulation given in Definition 2 requires the source and target tasks to have the same label dimension, projection and translation can be naturally combined to overcome this restriction.
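Translation with kernel machines can be sketched in the same style. The sketch assumes samples are rows, a Laplace kernel with a hypothetical bandwidth, and identical label dimensions for source and target; the function names are illustrative and this is not the code used for the experiments.

```python
import numpy as np

def laplace_kernel(A, B, bandwidth=10.0):
    # Pairwise Euclidean distances between rows of A and rows of B.
    sq = (A**2).sum(1)[:, None] + (B**2).sum(1)[None, :] - 2.0 * A @ B.T
    return np.exp(-np.sqrt(np.maximum(sq, 0.0)) / bandwidth)

def fit_kernel_machine(X, Y, kernel=laplace_kernel):
    # Minimum-norm least-squares coefficients for K @ alpha = Y.
    alpha = np.linalg.lstsq(kernel(X, X), Y, rcond=None)[0]
    return lambda X_new: kernel(X_new, X) @ alpha

def fit_translated_predictor(source_model, X_target, Y_target, kernel=laplace_kernel):
    """Learn an additive correction to the source model on the target data."""
    residuals = Y_target - source_model(X_target)       # error of the source model on the target task
    correction = fit_kernel_machine(X_target, residuals, kernel)
    return lambda X_new: source_model(X_new) + correction(X_new)

# Usage: source and target tasks share the same 3 label dimensions.
rng = np.random.default_rng(0)
X_s, Y_s = rng.normal(size=(40, 4)), rng.normal(size=(40, 3))
X_t, Y_t = rng.normal(size=(20, 4)), rng.normal(size=(20, 3))
source_model = fit_kernel_machine(X_s, Y_s)
translated = fit_translated_predictor(source_model, X_t, Y_t)
```

The correction is trained on the residuals of the source model, which is what makes the procedure resemble a single boosting step across datasets.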

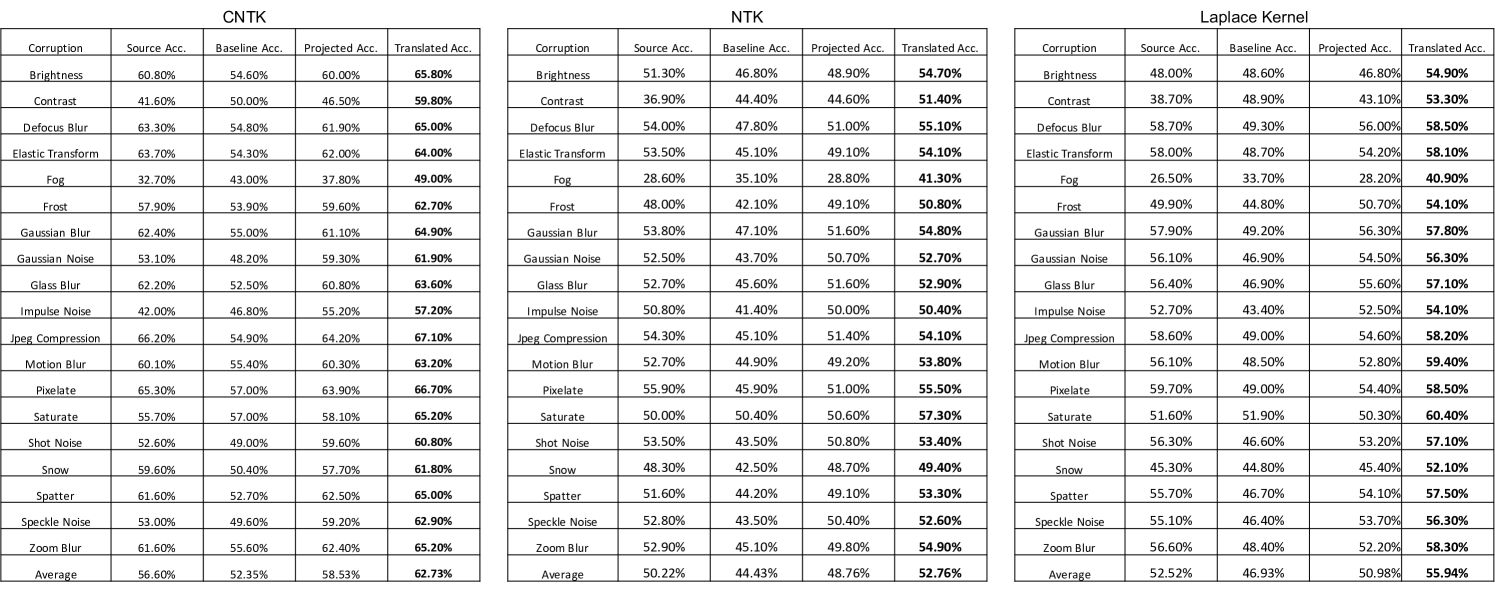

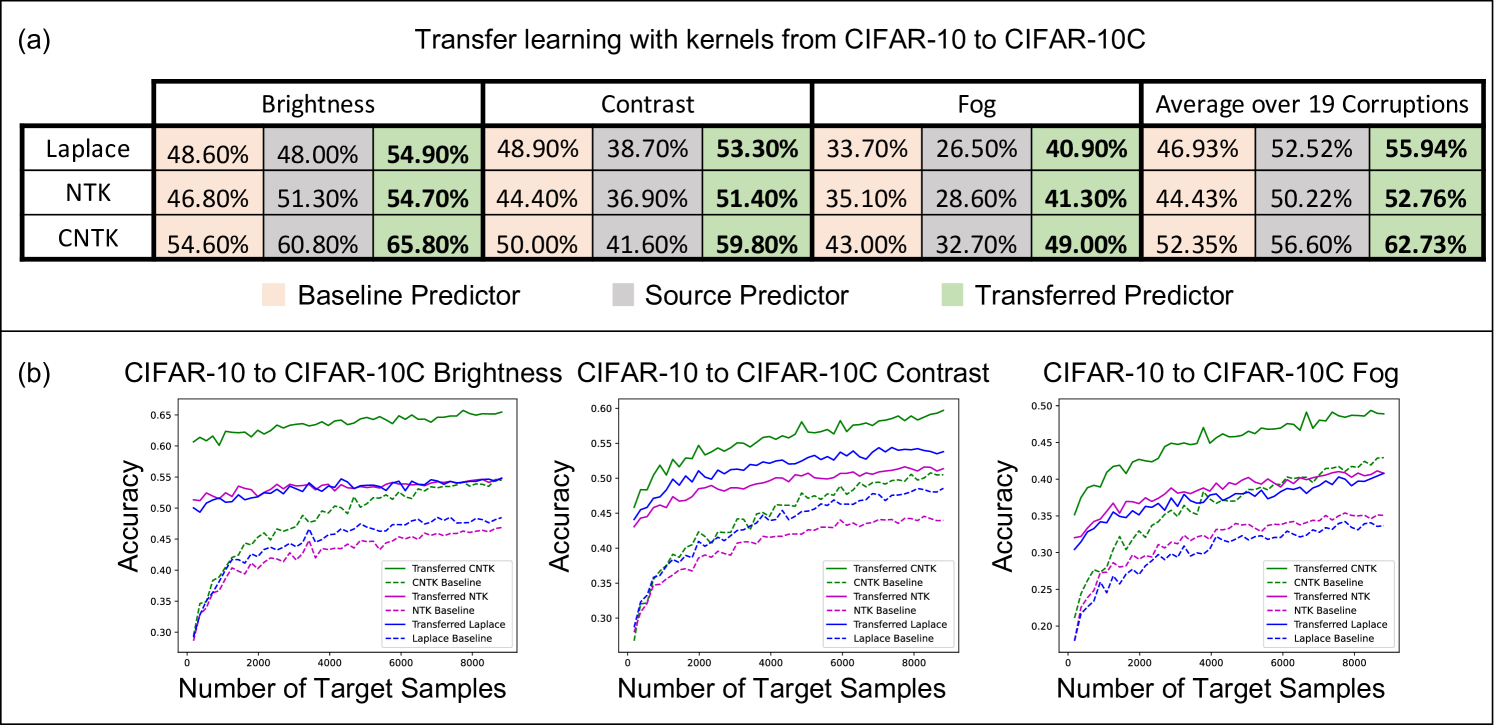

Improving kernel-based image classifier performance with translation. We now demonstrate that the translated predictors are particularly well-suited for correcting kernel methods to handle distribution shifts in images. Namely, we consider the task of transferring a source model trained on CIFAR10 to corrupted CIFAR10 images in CIFAR10-C [22]. CIFAR10-C consists of the test images in CIFAR10, but the images are corrupted by one of 19 different perturbations such as adjusting image contrast and introducing natural artifacts such as snow or frost. In our experiments, we select the k images of CIFAR10-C with the highest level of perturbation, and we reserve k images of each perturbation for training and k images for testing. In Appendix Fig. 9, we additionally analyze translating kernels from subsets of ImageNet32 to CIFAR10.

Again, we compare the performance of the three kernel methods considered for projection, but along with the accuracy of the translated predictor and baseline predictor, we also report the accuracy of the source predictor, which is given by using the source model directly on the target task. In Fig. 3a and Appendix Fig. 10, we show that the translated predictors outperform the baseline and source predictors on all 19 perturbations. Interestingly, even for corruptions such as contrast and fog where the source predictor is worse than the baseline predictor, the translated predictor outperforms all other kernel predictors by up to . In Appendix Fig. 10, we show that for these corruptions, the translated kernel predictors also outperform the projected kernel predictors trained on CIFAR10. In Appendix Fig. 5b, we additionally compare with the performance of a finite-width analog of the CNTK by fine-tuning all layers on the target task with cross-entropy loss and the Adam optimizer. We observe that the translated kernel methods outperform the corresponding neural networks. Remarkably, kernels translated from CIFAR10 can even outperform fine-tuning a neural network pre-trained on ImageNet32 for several perturbations (see Appendix Fig. 5c).

Analogously to our analysis of the projected predictors, we visualize how the accuracy of the translated predictors is affected by the number of target examples, , for a subset of corruptions shown in Fig. 3b. We observe that the performance of the translated predictors is heavily influenced by the performance of the source predictor. For example, as shown in Fig. 3b for the brightness perturbation, where the source predictor already achieves an accuracy of , the translated predictors achieve an accuracy of above when trained on only target training samples. For the examples of the contrast and fog corruptions, Fig. 3b also shows that very few target examples allow the translated predictors to outperform the source predictors (e.g., by up to for only target examples). Overall, our results showcase that translation is effective at adapting kernel methods to distribution shifts in image classification.

2.3 Transfer learning via projection and translation in virtual drug screening

We now demonstrate the effectiveness of projection and translation for the use of kernel methods for virtual drug screening. A common problem in drug screening is that experimentally measuring many different drug and cell line combinations is both costly and time consuming. The goal of virtual drug screening approaches is to computationally identify promising candidates for experimental validation. Such approaches involve training models on existing experimental data to then impute the effect of drugs on cell lines for which there was no experimental data.

The CMAP dataset [47] is a large-scale, publicly available drug screen containing measurements of 978 landmark genes for 116,228 combinations of 20,336 drugs (molecular compounds) and 70 cell lines. This dataset has been an important resource for drug screening [41, 7].⁴ Prior work for virtual drug screening demonstrated the effectiveness of low-rank tensor completion and nearest neighbor predictors for imputing the effect of unseen drug and cell line combinations in CMAP [23]. However, these methods crucially rely on the assumption that for each drug there is at least one measurement for every cell line, which is not the case when considering new chemical compounds. To overcome this issue, recent work [42] introduced kernel methods for drug screening using the NTK to predict gene expression vectors from drug and cell line embeddings, which capture the similarity between drugs and cell lines.

⁴ CMAP also contains data on genetic perturbations, but in this work we focus on imputing the effect of chemical perturbations only.

In the following, we demonstrate that the NTK predictor can be transferred to improve gene expression imputation for drug and cell line combinations, even in cases where neither the particular drug nor the particular cell line was available when training the source model. To utilize the framework of [42], we use the control gene expression vector as the cell line embedding and the 1024-bit circular fingerprints from [1] as the drug embedding. All pre-processing of the CMAP gene expression vectors is described in Appendix E. For the source task, we train the NTK to predict gene expression for the 54,444 drug and cell line combinations corresponding to the 65 cell lines with the least drug availability in CMAP. We then impute the gene expression for each of the 5 cell lines (A375, A549, MCF7, PC3, VCAP) with the most drug availability. We chose these data splits in order to have sufficient target samples to analyze model performance as a function of the number of target samples. In our analysis of the transferred NTK, we always consider transfer to a new cell line, and we stratify by whether a drug in the target task was already available in the source task. For this application, we combine projection and translation into one predictor as follows.

Definition 3.

Given a source dataset and a target dataset , the projected and translated predictor, , is given by:

where is a predictor trained on the source dataset and is the concatenation of and .

Note that if we omit in the concatenation above, we get the projected predictor and if we omit in the concatenation above, we get the translated predictor. Generally, and can correspond to different modalities (e.g., class label vectors and images), but in the case of drug screening, both correspond to gene expression vectors of the same dimension. Thus, combining projection and translation is natural in this context.
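One possible reading of the concatenation idea is sketched below. It assumes that the secondary model is trained directly on the concatenation of the source prediction and the raw input to predict the target labels; whether the full formulation contains additional terms is not assumed here. Samples are rows, the kernel is a Laplace kernel with a hypothetical bandwidth, and all names are illustrative.

```python
import numpy as np

def laplace_kernel(A, B, bandwidth=10.0):
    # Pairwise Euclidean distances between rows of A and rows of B.
    sq = (A**2).sum(1)[:, None] + (B**2).sum(1)[None, :] - 2.0 * A @ B.T
    return np.exp(-np.sqrt(np.maximum(sq, 0.0)) / bandwidth)

def fit_kernel_machine(X, Y, kernel=laplace_kernel):
    # Minimum-norm least-squares coefficients for K @ alpha = Y.
    alpha = np.linalg.lstsq(kernel(X, X), Y, rcond=None)[0]
    return lambda X_new: kernel(X_new, X) @ alpha

def concat_features(source_model, X):
    """Concatenate source predictions with the original inputs, row by row."""
    return np.hstack([source_model(X), X])

def fit_projected_translated(source_model, X_target, Y_target, kernel=laplace_kernel):
    """Train a secondary model on [source prediction | input] features (assumed form)."""
    secondary = fit_kernel_machine(concat_features(source_model, X_target), Y_target, kernel)
    return lambda X_new: secondary(concat_features(source_model, X_new))

# Usage with random stand-ins for embeddings and gene expression vectors.
rng = np.random.default_rng(0)
X_s, Y_s = rng.normal(size=(40, 6)), rng.normal(size=(40, 3))
X_t, Y_t = rng.normal(size=(15, 6)), rng.normal(size=(15, 3))
source_model = fit_kernel_machine(X_s, Y_s)
combined = fit_projected_translated(source_model, X_t, Y_t)
```

Dropping the second block of `concat_features` leaves only the source predictions as features, which recovers the projection sketch.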

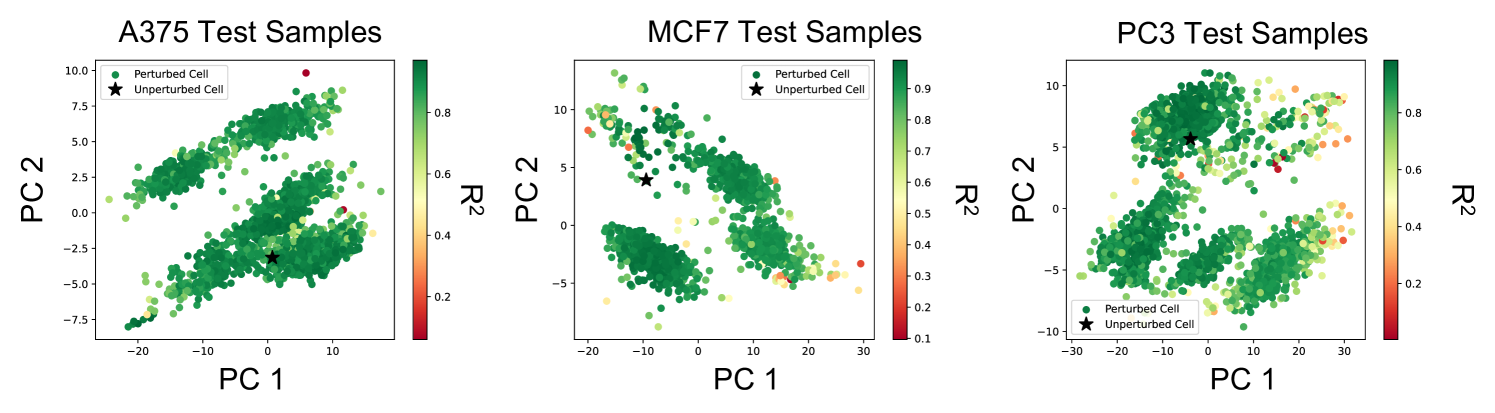

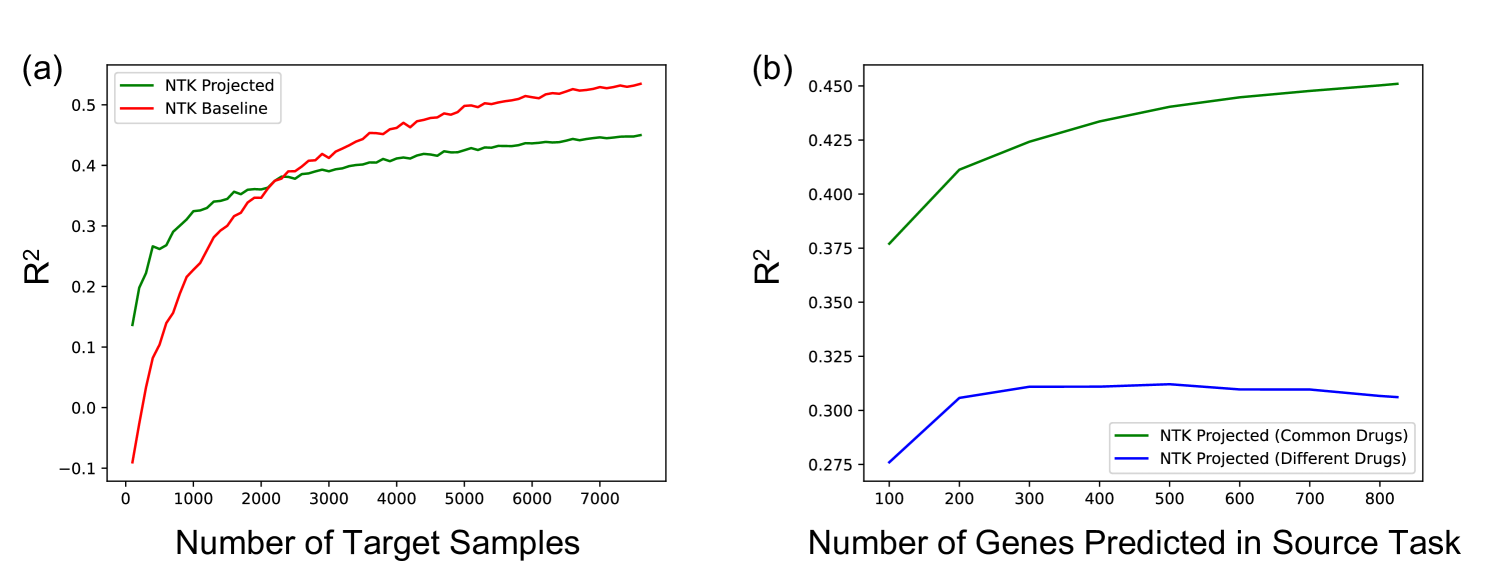

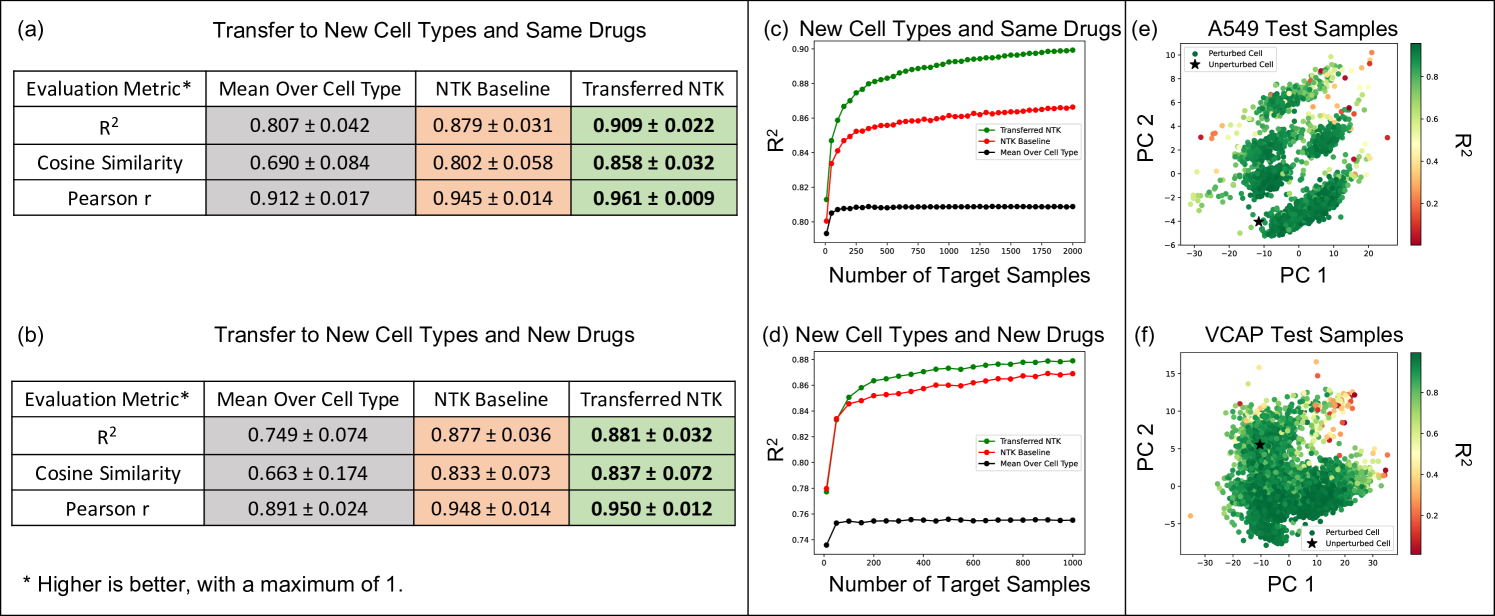

Fig. 4a and b show that the transferred kernel predictors outperform both the baseline model from [42] and imputation by mean (over each cell line) gene expression across three different metrics (, cosine similarity, and Pearson r value) on both tasks (i.e., transferring to drugs that were seen in the source task as well as completely new drugs). All metrics considered are described in Appendix F. All training details are presented in Appendix C. Interestingly, the transferred kernel methods provide a boost over the baseline kernel methods even when transferring to new cell lines and new drugs. But as expected, we note that the increase in performance is greater when transferring to drug and cell line combinations for which the drug was available in the source task. Fig. 4c and d show that the transferred kernels again follow simple logarithmic scaling laws (fitting a logarithmic model to the red and green curves yields ). We note that the transferred NTKs have better scaling coefficients than the baseline models, thereby implying that the performance gap between the transferred NTK and the baseline NTK grows as more target examples are collected. In Fig. 4e and f, we visualize the performance of the transferred NTK in relation to the top 2 principal components of gene expression for drug and cell line combinations. We generally observe that the performance of the NTK is lower for cell and drug combinations that are further from the control, i.e., the unperturbed state. Plots for the other 3 cell lines are presented in Appendix Fig. 11. In Appendix G and Appendix Fig. 12, we show that this approach can also be used for other transfer learning tasks related to virtual drug screening. In particular, we show that the imputed gene expression vectors can be transferred to predict the viability of a drug and cell line combination in the large-scale, publicly available Cancer Dependency Map (DepMap) dataset [13].

2.4 Theoretical analysis of projection and translation in the linear setting

In the following, we provide explicit scaling laws for the performance of projected and translated kernel methods in the linear setting, thereby providing a mathematical basis for the empirical observations in the previous sections.

Derivation of the scaling law for the projected predictor in the linear setting. We assume that , , and that and are linear maps, i.e., and . The following results provide a theoretical foundation for the empirical observations regarding the role of the number of source classes and the number of source samples for transfer learning shown in Fig. 2 as well as in [24]. In particular, we will derive scaling laws for the risk, or expected test error, of the projected predictor as a function of the number of source examples, , target examples, , and number of source classes, . We note that the risk of a predictor is a standard object of study for understanding generalization in statistical learning theory [48] and defined as follows.

Definition 4.

Let be a probability density on and let for . Let and for . The risk of a predictor trained on the samples is given by

| (4) |

By understanding how the risk scales with the number of source examples, target examples, and source classes, we can characterize the settings in which transfer learning is beneficial. As is standard in analyses of the risk of over-parameterized linear regression [18, 6, 4, 21], we consider the risk of the minimum norm solution given by

where is the Moore-Penrose inverse of . Theorem 1 establishes a closed form for the risk of the projected predictor , thereby giving a closed form for the scaling law for transfer learning in the linear setting; the proof is given in Appendix H.
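As a concrete illustration of the minimum norm solution, the following sketch computes it with the Moore-Penrose inverse in NumPy. It assumes samples are stored as columns, so that `X` has shape `(d, n)` and `Y` has shape `(c, n)`; the function name and this shape convention are assumptions made for the example.

```python
import numpy as np

def min_norm_solution(X, Y):
    """Return W = Y X^+, the minimum Frobenius-norm minimizer of ||Y - W X||_F.

    X: (d, n) inputs with samples as columns, Y: (c, n) labels.
    """
    return Y @ np.linalg.pinv(X)

# Usage in the over-parameterized regime (d > n), where many W interpolate the data.
rng = np.random.default_rng(0)
d, n, c = 20, 8, 3
X = rng.normal(size=(d, n))
Y = rng.normal(size=(c, n))
W = min_norm_solution(X, Y)

# Any other interpolating solution differs from W by a term that vanishes on X.
null_projector = np.eye(d) - X @ np.linalg.pinv(X)
W_other = W + rng.normal(size=(c, d)) @ null_projector
```

Both `W` and `W_other` fit the training data exactly, but `W` has the smaller norm because the added term is orthogonal to the span of the training inputs.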

Theorem 1.

Let , , , and let and . Assuming that and are independent, isotropic distributions on , then the risk is given by

where and

The term in Theorem 1 quantifies the similarity between the source and target tasks. For example, if there exists a linear map such that , then . In the context of classification, this can occur if the target classes are a strict subset of the source classes. Since transfer learning is typically performed between source and target tasks that are similar, we expect to be small. To gain more insights into the behavior of transfer learning using the projected predictor, the following corollary considers the setting where in Theorem 1; the proof is given in Appendix I.

Corollary 1.

Let and assume . Under the setting of Theorem 1, if as , then:

a) is monotonically decreasing for if .

b) If , then decreases as increases.

c) If , then .

d) If and , then

Remarks. Corollary 1 not only formalizes several intuitions regarding transfer learning, but also theoretically corroborates surprising dependencies on the number of source examples, target examples, and source classes that were empirically observed in Fig. 2 for kernels and in [24] for convolutional networks. First, Corollary 1a implies that increasing the number of source examples is always beneficial for transfer learning when the source and target tasks are related (), which matches intuition. Next, Corollary 1b implies that increasing the number of source classes while leaving the number of source examples fixed can decrease performance (i.e. if ), even for similar source and target tasks satisfying . This matches the experiments in Fig. 2c, where we observed that increasing the number of source classes when keeping the number of source examples fixed can be detrimental to the performance. This is intuitive for transferring from ImageNet32 to CIFAR10, since we would be adding classes that are not as useful for predicting objects in CIFAR10. Corollary 1c implies that when the source and target task are similar and the number of source classes is less than the data dimension, transfer learning with the projected predictor is always better than training only on the target task. Moreover, if the number of source classes is finite (), Corollary 1c implies that the risk of the projected predictor decreases an order of magnitude faster than the baseline predictor. In particular, the risk of the baseline predictor is given by , while that of the projected predictor is given by . Note also that when the number of target samples is small relative to the dimension, Corollary 1c implies that decreasing the number of source classes has minimal effect on the risk. Lastly, Corollary 1d implies that when and are small, the risk of the projected predictor is roughly that of a baseline predictor trained on twice the number of samples.

Derivation of the scaling law for the translated predictor in the linear setting. Analogously to the case for projection, we analyze the risk of the translated predictor when is the minimum norm solution to and is the minimum norm solution to .

Theorem 2.

Let , , , and let where and . Assuming that and are independent, isotropic distributions on , then the risk is given by

where is the baseline predictor.

The proof is given in Appendix K. Theorem 2 formalizes several intuitions regarding when translation is beneficial. In particular, we first observe that if the source model is recovered exactly (i.e. ), then the risk of the translated predictor is governed by the distance between the oracle source model and target model, i.e., . Hence, the translated predictor generalizes better than the baseline predictor if the source and target models are similar. In particular, by flattening the matrices and into vectors and assuming , the translated predictor outperforms the baseline predictor if the angle between the flattened and is less than . On the other hand, when there are no source samples, the translated predictor is exactly the baseline predictor and the corresponding risks are equivalent. In general, we observe that the risk of the translated predictor is simply a weighted average between the baseline risk and the risk in which the source model is recovered exactly.
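The two limiting cases discussed above can be checked numerically in a small linear simulation. The sketch assumes noiseless labels, samples stored as columns, and inputs with identity covariance, in which case the expected squared error of a linear predictor `W` against the true map `W_true` equals the squared Frobenius norm of their difference. All names and dimensions are illustrative.

```python
import numpy as np

def baseline_linear(X_t, Y_t):
    """Minimum-norm linear predictor trained on the target data only."""
    return Y_t @ np.linalg.pinv(X_t)

def translated_linear(W_source, X_t, Y_t):
    """Source weights plus a minimum-norm correction fitted to the residual labels."""
    residual = Y_t - W_source @ X_t
    return W_source + residual @ np.linalg.pinv(X_t)

def excess_risk(W, W_true):
    # For inputs with identity covariance, E||(W - W_true) x||^2 = ||W - W_true||_F^2.
    return np.linalg.norm(W - W_true) ** 2

rng = np.random.default_rng(0)
d, c, n_t = 30, 4, 10
W_target = rng.normal(size=(c, d))
X_t = rng.normal(size=(d, n_t))
Y_t = W_target @ X_t                                   # noiseless target labels

W_base = baseline_linear(X_t, Y_t)
W_no_source = translated_linear(np.zeros((c, d)), X_t, Y_t)   # uninformative source model
W_exact = translated_linear(W_target, X_t, Y_t)               # source model equals target model
W_near = translated_linear(W_target + 0.1 * rng.normal(size=(c, d)), X_t, Y_t)
```

With a zero source model the translated predictor coincides with the baseline, and with a source model equal to the target model it has zero risk. The closed form in `translated_linear` is also the point to which gradient descent on the squared loss converges when initialized at the source weights, which matches the initialization view of translation for linear models.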

Comparing Theorem 2 to Theorem 1, we note that the projected predictor and the translated predictor generalize based on different quantities. In particular, in the case when , the risk of the translated predictor is a constant multiple of the baseline risk while the risk of the projected predictor is a multiple of the baseline risk that decreases with . Hence, depending on the distance between and , the translated predictor can outperform the projected predictor or vice-versa. As a simple example consider the setting where , , and ; then the translated predictor achieves risk while the projected predictor achieves non-zero risk. When , we suggest combining the projected and translated predictors, as we did in the case of virtual drug screening. Otherwise, our results suggest using the translated predictor for transfer learning problems involving distribution shift in the features but no difference in the label sets, and the projected predictor otherwise.

3 Discussion

In this work, we developed a framework that enables transfer learning with kernel methods. In particular, we introduced the projection and translation operations to adjust the predictions of a source model to a specific target task: while projection involves applying a map directly to the predictions given by the source model, translation involves adding a map to the predictions of a source model. We demonstrated the effectiveness of the transfer-learned kernels on image classification and virtual drug screening tasks. Namely, we showed that transfer learning increased the performance of kernel-based image classifiers by up to over training such models directly on the target task. Interestingly, we found that transfer-learned convolutional kernels performed comparably to transfer learning using the corresponding finite-width convolutional networks. In virtual drug screening, we demonstrated that the transferred kernel methods provided an improvement over prior work [42], even in settings where none of the target drugs and cell lines were present in the source task. For both applications, we analyzed the performance of the transferred kernel model as a function of the number of target examples and observed empirically that the transferred kernel followed a simple logarithmic trend, which makes it possible to predict the benefit of collecting more target examples on model performance. Lastly, we mathematically derived the scaling laws in the linear setting, thereby providing a theoretical foundation for the empirical observations. We end by discussing various consequences as well as future research directions motivated by our work.

Benefit of pre-training kernel methods on large datasets. A key contribution of our work is enabling kernels trained on large datasets to be transferred to a variety of downstream tasks. As is the case for neural networks, this allows pre-trained kernel models to be saved and shared with downstream users to improve their applications of interest. A key next step to making these models easier to save and share is to reduce their reliance on storing the entire training set, for example by using coresets [49]. We envision that by using such techniques in conjunction with modern advances in kernel methods, the memory and runtime costs could be drastically reduced.

Reducing kernel evaluation time for state-of-the-art convolutional kernels. In this work, we demonstrated that it is possible to train convolutional kernel methods on datasets with over 1 million images. In order to train such models, we resorted to using the CNTK of convolutional networks with a fully connected last layer. While other architectures, such as the CNTK of convolutional networks with a global average pooling last layer, have been shown to achieve superior performance on CIFAR10 [2], training such kernels on k images from CIFAR10 is estimated to take GPU hours [36], which is more than three orders of magnitude slower than the kernels used in this work. The main computational bottleneck for using such improved convolution kernels is evaluating the kernel function itself. Thus an important problem is to improve the computation time for such kernels, which would allow training better convolutional kernels on large-scale image datasets, which could then be transferred using our framework to improve the performance on a variety of downstream tasks.

Using kernel methods to adapt to distribution shifts. Our work demonstrates that kernels pre-trained on a source task can adapt to a target task with distribution shift when given even just a few target training samples. This opens novel avenues for applying kernel methods to tackle distribution shift in a variety of domains including healthcare or genomics in which models need to be adapted to handle shifts in cell lines, populations, batches, etc. In the context of virtual drug screening, we showed that our transfer learning approach could be used to generalize to new cell lines. The scaling laws described in this work may provide an interesting avenue to understand how many samples are required in the target domain for more complex domain shifts such as from a model organism like mouse to humans, a problem of great interest in the pharmacological industry.

Acknowledgements

The authors were partially supported by ONR (N00014-22-1-2116), NSF (DMS-1651995), NCCIH/NIH, the MIT-IBM Watson AI Lab, MIT J-Clinic for Machine Learning and Health, the Eric and Wendy Schmidt Center at the Broad Institute, and a Simons Investigator Award (to C.U.).

References

- [1] Democratizing deep-learning for drug discovery, quantum chemistry, materials science and biology. https://github.com/deepchem/deepchem, 2016.

- [2] S. Arora, S. S. Du, W. Hu, Z. Li, R. Salakhutdinov, and R. Wang. On exact computation with an infinitely wide neural net. In H. Wallach, H. Larochelle, A. Beygelzimer, F. d’Alché Buc, E. Fox, and R. Garnett, editors, Advances in Neural Information Processing Systems. Curran Associates, Inc., 2019.

- [3] S. Arora, S. S. Du, Z. Li, R. Salakhutdinov, R. Wang, and D. Yu. Harnessing the power of infinitely wide deep nets on small-data tasks. In International Conference on Learning Representations, 2020.

- [4] P. L. Bartlett, P. M. Long, G. Lugosi, and A. Tsigler. Benign overfitting in linear regression. Proceedings of the National Academy of Sciences, 117(48):30063–30070, 2020.

- [5] M. Belkin, D. Hsu, S. Ma, and S. Mandal. Reconciling modern machine-learning practice and the classical bias–variance trade-off. Proceedings of the National Academy of Sciences, 116(32):15849–15854, 2019.

- [6] M. Belkin, D. Hsu, and J. Xu. Two models of double descent for weak features. Society for Industrial and Applied Mathematics Journal on Mathematics of Data Science, 2(4):1167–1180, 2020.

- [7] A. Belyaeva, L. Cammarata, A. Radhakrishnan, C. Squires, K. Yang, G. Shivashankar, and C. Uhler. Causal network models of SARS-CoV-2 expression and aging to identify candidates for drug repurposing. Nature Communications, 12(1024), 2021.

- [8] A. Bietti. Approximation and learning with deep convolutional models: a kernel perspective. In International Conference on Learning Representations, 2022.

- [9] C. M. Bishop. Pattern Recognition and Machine Learning (Information Science and Statistics). Springer-Verlag, Berlin, Heidelberg, 2006.

- [10] S. Chatterjee and E. Meckes. Multivariate normal approximation using exchangeable pairs. ALEA Lat. Am. J. Probab. Math. Stat., 4, 01 2007.

- [11] P. Chrabaszcz, I. Loshchilov, and F. Hutter. A downsampled variant of ImageNet as an alternative to the CIFAR datasets. arXiv:1707.08819, 2017.

- [12] M. Cimpoi, S. Maji, I. Kokkinos, S. Mohamed, and A. Vedaldi. Describing textures in the wild. In Proceedings of the IEEE Conf. on Computer Vision and Pattern Recognition (CVPR), 2014.

- [13] S. Corsello, R. Nagari, R. Spangler, J. Rossen, M. Kocak, J. Bryan, R. Humeidi, D. Peck, X. Wu, A. Tang, V. Wang, S. Bender, E. Lemire, R. Narayan, P. Montgomery, U. ben david, C. Garvie, Y. Chen, M. Rees, and T. Golub. Discovering the anticancer potential of non-oncology drugs by systematic viability profiling. Nature Cancer, 1:1–14, 02 2020.

- [14] W. Dai, Q. Yang, G.-R. Xue, and Y. Yu. Boosting for transfer learning. In ACM International Conference Proceeding Series, volume 227, pages 193–200, 01 2007.

- [15] J. De Fauw, J. R. Ledsam, B. Romera-Paredes, S. Nikolov, N. Tomasev, S. Blackwell, H. Askham, X. Glorot, B. O’Donoghue, D. Visentin, et al. Clinically applicable deep learning for diagnosis and referral in retinal disease. Nature Medicine, 24(9):1342–1350, 2018.

- [16] J. Devlin, M.-W. Chang, K. Lee, and K. Toutanova. BERT: Pre-training of deep bidirectional transformers for language understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), 2019.

- [17] J. Donahue, Y. Jia, O. Vinyals, J. Hoffman, N. Zhang, E. Tzeng, and T. Darrell. Decaf: A deep convolutional activation feature for generic visual recognition. In International Conference on Machine Learning, 2014.

- [18] H. W. Engl, M. Hanke, and A. Neubauer. Regularization of Inverse Problems, volume 375. Springer Science & Business Media, 1996.

- [19] A. Esteva, B. Kuprel, R. Novoa, J. Ko, S. Swetter, H. Blau, and S. Thrun. Dermatologist-level classification of skin cancer with deep neural networks. Nature, 542, 2017.

- [20] I. Goodfellow, Y. Bengio, and A. Courville. Deep Learning, volume 1. MIT Press, 2016.

- [21] T. Hastie, A. Montanari, S. Rosset, and R. J. Tibshirani. Surprises in high-dimensional ridgeless least squares interpolation. arXiv:1903.08560, 2019.

- [22] D. Hendrycks and T. G. Dietterich. Benchmarking neural network robustness to common corruptions and perturbations. arXiv:1903.12261, 2019.

- [23] R. Hodos, P. Zhang, H.-C. Lee, Q. Duan, Z. Wang, N. R. Clark, A. Ma’ayan, F. Wang, B. Kidd, J. Hu, D. Sontag, and J. Dudley. Cell-specific prediction and application of drug-induced gene expression profiles. Pacific Symposium on Biocomputing, 23:32–43, 2018.

- [24] M. Huh, P. Agrawal, and A. A. Efros. What makes ImageNet good for transfer learning? arXiv:1608.08614, 2016.

- [25] A. Jacot, F. Gabriel, and C. Hongler. Neural Tangent Kernel: Convergence and generalization in neural networks. In S. Bengio, H. Wallach, H. Larochelle, K. Grauman, N. Cesa-Bianchi, and R. Garnett, editors, Advances in Neural Information Processing Systems. Curran Associates, Inc., 2018.

- [26] S. Jaeger-Honz, S. Fulle, and S. Turk. Mol2vec: Unsupervised machine learning approach with chemical intuition. Journal of Chemical Information and Modeling, 58, 12 2017.

- [27] D. P. Kingma and J. Ba. Adam: A method for stochastic optimization. In International Conference on Learning Representations, 2015.

- [28] A. Krizhevsky. Learning multiple layers of features from tiny images. Master’s thesis, University of Toronto, 2009.

- [29] J. Lee, S. S. Schoenholz, J. Pennington, B. Adlam, L. Xiao, R. Novak, and J. Sohl-Dickstein. Finite Versus Infinite Neural Networks: an Empirical Study. In Advances in Neural Information Processing Systems, 2020.

- [30] H. Lin and M. Reimherr. On transfer learning in functional linear regression. arXiv:2206.04277, 2022.

- [31] S. Ma and M. Belkin. Kernel machines that adapt to GPUs for effective large batch training. In Conference on Machine Learning and Systems, 2019.

- [32] P. Nakkiran, G. Kaplun, Y. Bansal, T. Yang, B. Barak, and I. Sutskever. Deep double descent: Where bigger models and more data hurt. In International Conference on Learning Representations, 2020.

- [33] Y. Netzer, T. Wang, A. Coates, A. Bissacco, B. Wu, and A. Y. Ng. Reading digits in natural images with unsupervised feature learning. 2011.

- [34] E. Nichani, A. Radhakrishnan, and C. Uhler. Increasing depth leads to U-shaped test risk in over-parameterized convolutional networks. In International Conference on Machine Learning Workshop on Over-parameterization: Pitfalls and Opportunities, 2021.

- [35] M.-E. Nilsback and A. Zisserman. Automated flower classification over a large number of classes. 2008.

- [36] R. Novak, L. Xiao, J. Hron, J. Lee, A. A. Alemi, J. Sohl-Dickstein, and S. S. Schoenholz. Neural Tangents: Fast and easy infinite neural networks in Python. In International Conference on Learning Representations, 2020.

- [37] D. Obst, B. Ghattas, J. Cugliari, G. Oppenheim, S. Claudel, and Y. Goude. Transfer learning for linear regression: a statistical test of gain. arXiv:2102.09504, 2021.

- [38] T. E. Oliphant. A guide to NumPy, volume 1. Trelgol Publishing USA, 2006.

- [39] A. Paszke, S. Gross, F. Massa, A. Lerer, J. Bradbury, G. Chanan, T. Killeen, Z. Lin, N. Gimelshein, L. Antiga, A. Desmaison, A. Kopf, E. Yang, Z. DeVito, M. Raison, A. Tejani, S. Chilamkurthy, B. Steiner, L. Fang, J. Bai, and S. Chintala. Pytorch: An imperative style, high-performance deep learning library. In H. Wallach, H. Larochelle, A. Beygelzimer, F. d’Alché Buc, E. Fox, and R. Garnett, editors, Advances in Neural Information Processing Systems. Curran Associates, Inc., 2019.

- [40] M. E. Peters, M. Neumann, M. Iyyer, M. Gardner, C. Clark, K. Lee, and L. Zettlemoyer. Deep contextualized word representations. In Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long Papers), 2018.

- [41] S. Pushpakom, F. Iorio, P. A. Eyers, K. J. Escott, S. Hopper, A. Wells, A. Doig, J. Guilliams, T. Latimer, C. McNamee, A. Norris, P. Sanseau, D. Cavalla, and M. Pirmohamed. Drug repurposing: progress, challenges and recommendations. Nature Reviews Drug Discovery, 18(1):41–58, 2019.

- [42] A. Radhakrishnan, G. Stefanakis, M. Belkin, and C. Uhler. Simple, fast, and flexible framework for matrix completion with infinite width neural networks. arXiv:2108.00131, 2021.

- [43] C. Raffel, N. Shazeer, A. Roberts, K. Lee, S. Narang, M. Matena, Y. Zhou, W. Li, and P. J. Liu. Exploring the limits of transfer learning with a unified text-to-text transformer. Journal of Machine Learning Research, 21(140):1–67, 2020.

- [44] M. Raghu, C. Zhang, J. Kleinberg, and S. Bengio. Transfusion: Understanding transfer learning for medical imaging. In H. Wallach, H. Larochelle, A. Beygelzimer, F. d’Alché-Buc, E. Fox, and R. Garnett, editors, Advances in Neural Information Processing Systems, 2019.

- [45] A. S. Razavian, H. Azizpour, J. Sullivan, and S. Carlsson. CNN features off-the-shelf: An astounding baseline for recognition. In IEEE Conference on Computer Vision and Pattern Recognition Workshops, 2014.

- [46] B. Schölkopf and A. J. Smola. Learning with Kernels: Support Vector Machines, Regularization, Optimization, and Beyond. MIT Press, 2002.

- [47] A. Subramanian, R. Narayan, S. M. Corsello, et al. A next generation connectivity map: L1000 platform and the first 1,000,000 profiles. Cell, 171(6):1437–1452, 2017.

- [48] V. N. Vapnik. Statistical Learning Theory. Wiley-Interscience, 1998.

- [49] Y. Zheng and J. M. Phillips. Coresets for kernel regression. In Proceedings of the 23rd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pages 645–654, 2017.

- [50] F. Zhuang, Z. Qi, K. Duan, D. Xi, Y. Zhu, H. Zhu, H. Xiong, and Q. He. A comprehensive survey on transfer learning. 2020.

Appendix

Appendix A Review of Kernel Regression

We provide a brief review of training kernel machines using kernel ridge regression. For a detailed description of these methods, we refer the reader to [46].

Let denote training examples and denote the corresponding labels. The key idea behind kernel machines is to first transform the training examples, , using a feature map and then perform linear regression on the transformed data to fit the labels, . In particular, given a feature map , we can nonlinearly fit the data by solving

where and the th column of is . In cases where is much larger than , solving the above system becomes computationally expensive. The key idea behind kernel regression is to assume that is given by a linear combination of training examples, i.e., , and then solve the above system for the coefficients instead. Assuming this form for , the above optimization problem can be written as

Importantly, this optimization problem only depends on the inner product of the feature map between training samples. Thus, instead of working with the feature map directly, we can define a kernel, i.e., a positive semi-definite, symmetric function , such that . The resulting optimization problem is known as kernel regression and given as follows:

where with . Importantly, the abstraction to kernel methods allows using feature maps that map into an infinite dimensional inner product space (i.e., a Hilbert space), which are central to the study of infinite-width neural networks.

In addition to kernel regression described above, we also consider kernel ridge regression, which involves modifying the objective with a regularization term with a tunable ridge parameter as follows:

We primarily use a small non-zero ridge term to avoid numerical issues leading to a singular (or non-invertible) kernel matrix, .
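The kernel ridge regression procedure reviewed above can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' code: it assumes samples are stored as rows, uses the standard Laplace kernel form exp(-||x - x'||_2 / L) as an example kernel, and the bandwidth, ridge value, and function names (`laplace_kernel`, `fit_kernel_ridge`) are hypothetical.

```python
import numpy as np

def laplace_kernel(A, B, bandwidth=1.0):
    """Laplace kernel exp(-||a - b||_2 / bandwidth) between rows of A and rows of B."""
    sq = (A ** 2).sum(1)[:, None] + (B ** 2).sum(1)[None, :] - 2.0 * A @ B.T
    dists = np.sqrt(np.maximum(sq, 0.0))  # clip tiny negative values from rounding
    return np.exp(-dists / bandwidth)

def fit_kernel_ridge(K_train, Y, ridge=1e-6):
    """Solve (K + ridge * I) alpha = Y for the coefficient matrix alpha."""
    n = K_train.shape[0]
    return np.linalg.solve(K_train + ridge * np.eye(n), Y)

# Toy data (assumed): 20 training samples in 5 dimensions with 2 outputs.
rng = np.random.default_rng(0)
X_train = rng.standard_normal((20, 5))
Y_train = rng.standard_normal((20, 2))

K = laplace_kernel(X_train, X_train)
alpha = fit_kernel_ridge(K, Y_train, ridge=1e-8)

# Predictions are kernel evaluations against the training set times alpha.
train_fit = K @ alpha
X_new = rng.standard_normal((3, 5))
new_predictions = laplace_kernel(X_new, X_train) @ alpha
```

With a very small ridge term the model nearly interpolates the training labels, which mirrors the use of the ridge parameter purely as a numerical safeguard.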

Appendix B Overview of Image Classification Datasets

For projection, we used ImageNet32 as the source dataset and CIFAR10, Oxford 102 Flowers, DTD, and a subset of SVHN as the target datasets. For all target datasets, we used the training and test splits given by the PyTorch library [39]. For ImageNet32, we used the training and test splits provided by the authors [11]. An overview of the number of training and test samples used from each of these datasets is outlined below.

1. ImageNet32 contains training images across classes and k images for validation. All images are of size .

2. CIFAR10 contains k training images across classes and k images for validation. All images are of size .

3. Oxford 102 Flowers contains training images across classes and images for validation. Images were resized to for the experiments.

4. DTD contains training images across classes and images for validation. Images were resized to size for experiments.

5. SVHN contains training images across classes and images for validation. All images are of size . In Fig. 2, we used the same training image subset for all experiments.

Appendix C Training and Architecture Details

Model descriptions:

1. Laplace Kernel: For samples , and bandwidth parameter , the kernel is of the form:

   For our experiments, we used a bandwidth of .

2. NTK: We used the NTK corresponding to an infinite width ReLU fully connected network with hidden layers. We chose this depth as it gave superior performance on the image classification tasks considered in [34].

3. CNTK: We used the CNTK corresponding to an infinite width ReLU convolutional network with convolutional layers followed by a fully connected layer. All convolutional layers used filters of size . The first convolutional layers used a stride size of to downsample the image representations. All convolutional layers used zero padding. The CNTK was computed using the Neural Tangents library [36].

4. CNN: We compare the CNTK to a finite-width CNN of the same architecture that has filters in the first layer, filters in the second layer, filters in the third layer, filters in the fourth layer, and filters in the fifth and sixth layers. In all experiments, the CNN was trained using Adam with a learning rate of .

Details for projection experiments.

For all kernels trained on ImageNet32, we used EigenPro [31]. For all models, we trained until the training accuracy was greater than , which was at most 6 epochs of EigenPro. For transfer learning to CIFAR10, Oxford 102 Flowers, DTD, and SVHN, we applied a Laplace kernel to the outputs of the trained source model. For CIFAR10 and DTD, we solved the kernel regression exactly using NumPy [38]. For DTD and SVHN, we used ridge regularization with a coefficient of to avoid numerical issues with solving exactly. The CNN was trained for at most 500 epochs on ImageNet32, and the transferred model corresponded to the one with highest validation accuracy during this time. When transfer learning, we fine-tuned all layers of the CNN for up to epochs (again selecting the model with the highest validation accuracy on the target task).
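The projection step described above (a Laplace kernel applied to the outputs of the trained source model, followed by kernel regression on the target labels) can be sketched as follows. This is a hedged illustration only: the source model is replaced by a hypothetical linear stand-in, the standard Laplace kernel form exp(-||z - z'||_2 / L) is assumed, and the bandwidth, ridge value, and helper names are not taken from the paper.

```python
import numpy as np

def laplace_kernel(A, B, bandwidth=1.0):
    """Laplace kernel exp(-||a - b||_2 / bandwidth) between rows of A and rows of B."""
    sq = (A ** 2).sum(1)[:, None] + (B ** 2).sum(1)[None, :] - 2.0 * A @ B.T
    return np.exp(-np.sqrt(np.maximum(sq, 0.0)) / bandwidth)

def fit_projection(source_model, X_target, Y_target, ridge=0.0, bandwidth=1.0):
    """Fit kernel (ridge) regression from source-model outputs to target labels."""
    Z = source_model(X_target)                       # source predictions on target inputs
    K = laplace_kernel(Z, Z, bandwidth)
    alpha = np.linalg.solve(K + ridge * np.eye(len(Z)), Y_target)

    def projected_predictor(X_new):
        return laplace_kernel(source_model(X_new), Z, bandwidth) @ alpha

    return projected_predictor

# Hypothetical stand-in for a trained source model with 4 source outputs.
rng = np.random.default_rng(0)
W_source = rng.standard_normal((8, 4)) / np.sqrt(8)
source_model = lambda X: X @ W_source

X_target = rng.standard_normal((15, 8))              # 15 target samples (rows)
Y_target = rng.standard_normal((15, 3))              # 3 target outputs
predictor = fit_projection(source_model, X_target, Y_target, ridge=1e-8)
train_predictions = predictor(X_target)
```

In practice `source_model` would be the kernel machine trained on the source task; only its outputs on the target inputs are needed to fit the projection.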

Details for translation experiments.

For transferring kernels from CIFAR10 to CIFAR-C, we simply solved kernel regression exactly (no ridge regularization term). For the corresponding CNNs, we trained the source models on CIFAR10 for 100 epochs and selected the model with the best validation performance. When transferring CNNs to CIFAR-C, we fine-tuned all layers of the CNN for epochs and selected the model with the best validation accuracy. When translating kernels from ImageNet32 to CIFAR10 in Appendix Fig. 9, we used the following aggregated class indices in ImageNet32 to match the classes in CIFAR10:

1. plane = {372, 230, 231, 232}

2. car = {265, 266, 267, 268}

3. bird = {383, 384, 385, 386}

4. cat = {8, 10, 11, 55}

5. deer = {12, 9, 57}

6. dog = {131, 132, 133, 134}

7. frog = {499, 500, 501, 494}

8. horse = {80, 39}

9. ship = {243, 246, 247, 235}

10. truck = {279, 280, 281, 282}.
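The class grouping above can be written down as a lookup table. The sketch below also shows one possible way to collapse 1000-dimensional source outputs into 10 CIFAR10 scores; summing the scores within each group and the `index_base` parameter (whether the listed indices are 0- or 1-based) are assumptions, since the text does not specify either.

```python
import numpy as np

# ImageNet32 class indices aggregated into each CIFAR10 class (from the list above).
CIFAR10_TO_IMAGENET32 = {
    "plane": [372, 230, 231, 232],
    "car": [265, 266, 267, 268],
    "bird": [383, 384, 385, 386],
    "cat": [8, 10, 11, 55],
    "deer": [12, 9, 57],
    "dog": [131, 132, 133, 134],
    "frog": [499, 500, 501, 494],
    "horse": [80, 39],
    "ship": [243, 246, 247, 235],
    "truck": [279, 280, 281, 282],
}

def aggregate_source_outputs(source_outputs, index_base=0):
    """Sum source-class scores within each CIFAR10 group (summation is an assumption)."""
    columns = []
    for indices in CIFAR10_TO_IMAGENET32.values():
        idx = np.asarray(indices) - index_base
        columns.append(source_outputs[:, idx].sum(axis=1))
    return np.stack(columns, axis=1)

# Toy check on constant scores: each column equals the size of its group.
scores = np.ones((2, 1000))
aggregated = aggregate_source_outputs(scores)
```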

Details for virtual drug screening.

We used the NTK corresponding to a 1 hidden layer ReLU fully connected network with an offset term. The same model was used in [42]. We solved kernel ridge regression when training the source models, baseline models, and transferred models. For the source model, we used ridge regularization with a coefficient of . To select this ridge term, we used a grid search over on a random subset of k samples from the source data. We used a ridge term of when transferring the source model to the target data and a term of when training the baseline model. We again tuned the ridge parameter for these models over the same set of values but on a random subset of examples for one cell line (A549) from the target data. We used 5-fold cross validation for the target task and reported the metrics computed across all folds.

Appendix D Projection Scaling Laws

For the curves showing the performance of the projected predictor as a function of the number of target examples in Fig. 2b and Appendix Fig. 6a, b, we performed a scaling law analysis. In particular, we used linear regression to fit the coefficients of the function to the points from each of the curves presented in the figures. Each curve in these figures has evenly spaced points and all accuracies are averaged over 3 seeds at each point. The values for each of the fits is presented in Appendix Fig. 8. Overall, we observe that all values are above and are higher than for CIFAR10 and SVHN, which have more than target training samples. Moreover, by fitting the same function on the first 5 points from these curves for CIFAR10, we are able to predict the accuracy on the last point of the curve within of the reported accuracy.
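A fit of this kind can be sketched with ordinary least squares. The example below assumes a logarithmic form, accuracy ≈ a · log2(n) + b, consistent with the logarithmic trend reported in the paper; the base of the logarithm, the function name, and the synthetic data are assumptions for illustration and are not the reported measurements.

```python
import numpy as np

def fit_log_scaling_law(num_examples, accuracy):
    """Least-squares fit of accuracy = a * log2(n) + b; returns (a, b, R^2)."""
    x = np.log2(np.asarray(num_examples, dtype=float))
    y = np.asarray(accuracy, dtype=float)
    design = np.stack([x, np.ones_like(x)], axis=1)
    (a, b), *_ = np.linalg.lstsq(design, y, rcond=None)
    pred = a * x + b
    r2 = 1.0 - np.sum((y - pred) ** 2) / np.sum((y - y.mean()) ** 2)
    return a, b, r2

# Synthetic curve that follows the assumed law exactly.
n = np.array([100, 200, 400, 800, 1600])
acc = 3.0 * np.log2(n) + 10.0

a, b, r2 = fit_log_scaling_law(n, acc)

# Extrapolation: fit on all but the last point, then predict the last point.
a_head, b_head, _ = fit_log_scaling_law(n[:-1], acc[:-1])
predicted_last = a_head * np.log2(n[-1]) + b_head
```

Changing the base of the logarithm only rescales the slope, so the quality of the fit does not depend on that choice.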

Appendix E Pre-processing for CMAP Data

While CMAP contains landmark genes, we removed all genes that were upon scaling the data. This eliminates genes and removes batch effects identified in [7] for each cell line. Following the methodology of [7], we also removed all perturbations with dose less than and used only the perturbations that had an associated simplified molecular-input line-entry system (SMILES) string, which resulted in a total of perturbations. Following [7], for each of the observed drug and cell type combinations we then averaged the gene expression over all the replicates.

Appendix F Metrics for Evaluating Virtual Drug Screening

Let denote the predicted gene expression vectors and let denote the ground truth. Let . Let denote vectorized versions of and . We use the same three metrics as those considered in [42, 23]. All evaluation metrics have a maximum value of and are defined below.

1. Pearson r value:

2. Mean $R^2$:

3. Mean Cosine Similarity:

We additionally subtract out the mean over cell type before computing cosine similarity to avoid inflated cosine similarity arising from points far from the origin.
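Since the displayed formulas are not reproduced here, the sketch below gives one plausible NumPy reading of the three metrics. The exact definitions are assumptions: Pearson r is computed on the vectorized matrices, the R^2 score compares the residual to the deviation from the mean ground-truth vector, and cosine similarity is averaged over samples (rows). Any mean subtraction over cell type is left to the caller.

```python
import numpy as np

def pearson_r(Y_pred, Y_true):
    """Pearson correlation between the vectorized predictions and ground truth."""
    p, t = Y_pred.ravel(), Y_true.ravel()
    p, t = p - p.mean(), t - t.mean()
    return float(p @ t / (np.linalg.norm(p) * np.linalg.norm(t)))

def r_squared(Y_pred, Y_true):
    """1 - (residual sum of squares) / (sum of squares around the mean sample)."""
    y_bar = Y_true.mean(axis=0, keepdims=True)
    return float(1.0 - np.sum((Y_true - Y_pred) ** 2) / np.sum((Y_true - y_bar) ** 2))

def mean_cosine_similarity(Y_pred, Y_true):
    """Average over samples (rows) of the cosine between prediction and truth."""
    num = np.sum(Y_pred * Y_true, axis=1)
    den = np.linalg.norm(Y_pred, axis=1) * np.linalg.norm(Y_true, axis=1)
    return float(np.mean(num / den))

# Toy example (assumed sizes): 6 samples with 4-dimensional expression vectors.
rng = np.random.default_rng(0)
Y_true = rng.standard_normal((6, 4))
perfect = Y_true.copy()
mean_only = np.repeat(Y_true.mean(axis=0, keepdims=True), 6, axis=0)
```

Under these definitions a perfect prediction attains the maximum value on all three metrics, and predicting the mean sample gives an R^2 of zero.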

Appendix G DepMap Analysis

To provide another application of our framework in the context of virtual drug screening, we used projection to transfer the kernel methods trained on imputing gene expression vectors in CMAP to predicting the viability of a drug and cell line combination in DepMap [13]. Viability scores in DepMap are real values indicating how lethal a drug is for a given cancer cell line (negative viability indicates cell death). To transfer from CMAP to DepMap, we trained a kernel method to predict the gene expression vectors for cell line and drug combinations for the 64 cell lines from CMAP that do not overlap with DepMap. We then used projection to transfer the model to the held-out cell lines present in both CMAP and DepMap, which are PC3, MCF7, A375, A549, HT29, and HEPG2. Analogously to our analysis of CMAP, we stratified the target dataset by drugs that appear in both the source and target tasks (9726 target samples) and drugs that are only found in the target task but not in the source task (2685 target samples). For this application, we found that Mol2Vec [26] embeddings of drugs outperformed 1024-bit circular fingerprints. We again used a 1-hidden layer ReLU NTK with an offset term for this analysis and solved kernel ridge regression with a ridge coefficient of .

Appendix Fig. 12a shows the performance of the projected predictor as a function of the number of target samples when transferring to a target task with drugs that appear in the source task. All results are averaged over folds of cross-validation and across 5 random seeds for the subset of target samples considered in each fold. It is apparent that performance is greatly improved when there are fewer than samples, thereby highlighting the benefit of the imputed gene expression vectors in this setting. Interestingly, as in all the previous experiments, we find a clear logarithmic scaling law: fitting the coefficients of the curve to the points on the graph yields an of , and fitting the curve to the first points lets us predict the for the last point on the curve within . Appendix Fig. 12b shows how the performance on the target task is affected by the number of genes predicted in the source task. Again, performance is averaged over 5-fold cross-validation and across 5 seeds per fold. When transferring to drugs that were available in the source task, performance monotonically increases when predicting more genes. On the other hand, when transferring to drugs that were not available in the source task, performance begins to degrade when increasing the number of predicted genes. This is intuitive, since not all genes would be useful for predicting the effect of an unseen drug and could add noise to the prediction problem upon transfer learning.

Appendix H Proof of Theorem 1

The proof of Theorem 1 relies on the following lemma.

Lemma 1.

Let be two diagonal matrices of rank and , respectively. Let be an orthogonal matrix and a Haar distributed random matrix. If , then

Proof.

Without loss of generality, assume that , and . Since the Haar distribution is rotationally invariant, is Haar distributed. Therefore,

where . Now the upper left block of is equal to the corresponding block in , and all other entries of are . Letting , we have

Thus, , and so, only depends on the fourth moments of the entries in . In particular,

To calculate these moments, we use Lemma 9 from [10]. In particular, if , then , and if , then

Therefore, we have the following closed form for the expectation:

Since , , and , the result follows. ∎

We now prove the following simpler version of Theorem 1 for the case when (i.e., when ).

Theorem 3.

Let and let . Assuming are independent, isotropic distributions on , then

where .

Proof.

Let to simplify notation. Let , , , and , and note that are all projections. Then, we have:

Therefore,

Using the cyclic property of the trace, the risk is given by

Using the idempotent property of projections and the fact that , we conclude that

and as a consequence that

Both are projections, and since follows an isotropic distribution, its right singular vectors (the eigenvectors of ) are Haar distributed. Now using Lemma 1 with we obtain that

Using and reordering the terms we obtain

Lastly, we use the standard result that (see e.g. [6]) and that to conclude that

which completes the proof. ∎
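As a numerical sanity check of the kind of identity used in the final step, the sketch below estimates the risk of the minimum-norm (baseline) interpolator for Gaussian features and compares it with the well-known value (1 - n/d)·||w||^2 for n < d. Whether this is exactly the identity invoked above is an assumption, and the sizes, seed, and function name are illustrative.

```python
import numpy as np

def baseline_risk(w, X):
    """Risk ||w (I - P)||^2 of the min-norm interpolator; P projects onto span of X's columns."""
    P = X @ np.linalg.pinv(X)          # d x d orthogonal projection
    return float(np.sum((w - w @ P) ** 2))

rng = np.random.default_rng(0)
d, n, trials = 20, 5, 2000             # dimension, number of samples, Monte Carlo runs
w = rng.standard_normal((1, d))        # fixed ground-truth linear model

# Average the risk over independent draws of isotropic Gaussian samples (columns of X).
risks = [baseline_risk(w, rng.standard_normal((d, n))) for _ in range(trials)]
empirical = np.mean(risks)
predicted = (1 - n / d) * np.sum(w ** 2)
```

The Monte Carlo average `empirical` should agree with `predicted` up to sampling error, which shrinks as `trials` grows.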

Using Lemma 1 and Theorem 3, we next prove Theorem 1, which is restated below for the reader’s convenience.

Theorem.

Let , , , and let and . Assuming that and are independent, isotropic distributions on , then the risk is given by

where and

Proof.

As in the proof of Theorem 3, we let to simplify notation. We follow the proof of Theorem 3, but now account for the expectation with respect to . Namely,

Using the independence of and and Fubini’s theorem, we compute the expectations sequentially:

Now, since , we have that . Therefore, . Similarly, . As a consequence, we calculate the two expectations involving by using Lemma 1 with . In particular, we conclude that

Therefore, is given by the sum of the following terms:

where for the second equality, we applied Theorem 3, which gives rise to and , thereby completing the proof. ∎

Appendix I Proof of Corollary 1

Proof.

We restate Corollary 1 below for the reader’s convenience.

Corollary.

Let and assume . Under the setting of Theorem 1, if as , then:

a) is monotonically decreasing for if .

b) If , then decreases as increases.

c) If , then .

d) If and , then

We first derive forms for the terms from Theorem 1 as . In particular, we have:

Substituting these values into in Theorem 1, we obtain

(5)

Next, we analyze Eq. (5) for . For fixed and , it holds that is a quadratic in and given by

For and , this quadratic is strictly decreasing and thus we can conclude that is decreasing in . We next observe that is linear in and thus decreases as increases if and only if the coefficient of is negative, i.e. . Lastly, if , then

Corollary 1c, d follow from the above form of the risk, thus completing the proof. ∎

Appendix J Equivalence of Fine-tuned and Translated Linear Models

We now prove that for linear models transfer learning using the translated predictor from Definition 2 is equivalent to transfer learning via the conventional fine-tuning process. This follows from Proposition 1 below, which implies that when parameterized by a linear model, the translated predictor is the interpolating solution for the target dataset that is nearest to the source predictor.

Proposition 1.

Let , where is a feature map and is a Hilbert space. Then the translated predictor, , is the solution to

(6)
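The equivalence claimed in this appendix can be checked numerically in a finite-dimensional linear setting. The sketch below assumes a plain linear model with samples stored as columns and treats fine-tuning as gradient descent on the squared loss initialized at the source weights; the sizes, step size, and iteration count are illustrative assumptions rather than details from the paper.

```python
import numpy as np

rng = np.random.default_rng(0)
d, c, n_t = 20, 2, 5                      # illustrative sizes with n_t < d
W_source = rng.standard_normal((c, d))    # stand-in for a trained source model
X_t = rng.standard_normal((d, n_t))       # target samples stored as columns
Y_t = rng.standard_normal((c, n_t))       # target labels

# Translated predictor: source model plus the min-norm fit to its residuals.
W_translated = W_source + (Y_t - W_source @ X_t) @ np.linalg.pinv(X_t)

# Fine-tuning: gradient descent on 0.5 * ||Y_t - W X_t||_F^2 started at W_source.
step = 1.0 / np.linalg.norm(X_t, 2) ** 2  # 1 / largest eigenvalue of X_t X_t^T
W = W_source.copy()
for _ in range(5000):
    W += step * (Y_t - W @ X_t) @ X_t.T

gap = np.max(np.abs(W - W_translated))    # distance between the two solutions
```

Because every gradient update lies in the span of the target samples, gradient descent converges to the interpolating solution closest to its initialization, which is why `gap` is numerically zero here.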

Appendix K Proof of Theorem 2

We restate Theorem 2 below for convenience and then provide the proof.

Theorem.

Let , , , and let where and . Assuming that and are independent, isotropic distributions on , then the risk is given by

where is the baseline predictor.

Proof.

We prove the statement by directly simplifying the risk as follows.

where the penultimate equality follows from adding and subtracting the term and the last equality is given by , thereby completing the proof. ∎

Appendix L Code and Hardware Details

All experiments were run using two servers. One server had 128GB of CPU random access memory (RAM) and 2 NVIDIA Titan XP GPUs each with 12GB of memory. This server was used for the virtual drug screening experiments and for training the CNTK on ImageNet32. The second server had 128GB of CPU RAM and 4 NVIDIA Titan RTX GPUs each with 24GB of memory. This server was used for all the remaining experiments. All code is available at https://github.com/uhlerlab/kernel_tf.