Transformed Fay-Herriot Model with Measurement Error in Covariates

Abstract

Statistical agencies are often asked to produce small area estimates (SAEs) for positively skewed variables. When domain sample sizes are too small to support direct estimators, the effects of skewness of the response variable can be large. As such, it is important to appropriately account for the distribution of the response variable given the available auxiliary information. Motivated by this issue, and in order to stabilize the skewness and achieve normality in the response variable, we propose an area-level log-measurement error model on the response variable. Then, under our proposed modeling framework, we derive an empirical Bayes (EB) predictor of positive small area quantities when the covariates contain measurement error. We propose corresponding estimators of the mean squared prediction error (MSPE) of the EB predictor using both a jackknife and a bootstrap method. We show that the order of the bias is $O(m^{-1})$, where $m$ is the number of small areas. Finally, we investigate the performance of our methodology using both design-based and model-based simulation studies.

KEYWORDS: Small area estimation; official statistics; Bayesian methods; jackknife; parametric bootstrap; applied statistics; simulation studies.

1 Introduction

Typically, in small area measurement error models, both the response variable and the covariates can take any real value (see Ybarra and Lohr (2008), Arima et al. (2017)). However, statistical agencies are often asked to produce small area estimates (SAEs) for skewed variables that are also positive. For instance, the Census of Governments (CoG) provides information on roads, tolls, airports, and other similar information at the local-government level as defined by the United States Census Bureau (USCB). Another example is the United States National Agricultural Statistics Service (NASS), which provides estimates regarding crop harvests (see Bellow and Lahiri (2011)). The United States Natural Resources Conservation Service (NRCS) provides estimates regarding roads at the county level (e.g., Wang and Fuller (2003)), and the Australian Agricultural and Grazing Industries Survey provides estimates of the total expenditures of Australian farms (e.g., Chandra and Chambers (2011)).

When domain sample sizes are too small to support direct estimators, the effect of skewness can be quite large, and it is critical to account for the distribution of the response variable given the auxiliary information at hand. For a review of the SAE literature, we refer to Rao and Molina (2015) and Pfeffermann (2013). A positively skewed response variable corresponds to the case in which the governing parameter of the Box-Cox transformation is zero, i.e., the log transformation. This case has received less attention in the literature when the covariate in the model may itself be positively skewed and contain measurement error. Throughout this paper, we explain why the problem goes beyond a simple substitution and address some of its difficulties.

1.1 Census of Governments

As mentioned in Sec. 1, our proposed framework is motivated by data that are positively skewed. One such data set is the Census of Governments (CoG), a survey conducted periodically by the United States Census Bureau (USCB) that provides comprehensive statistics about governments and governmental activities. Data are reported on government organizations, finances, and employment. For example, organization data cover the location, type, and characteristics of local governments and officials. Finance and employment data cover revenue, expenditure, debt, assets, employees, payroll, and benefits.

We utilize data from the CoG from 2007 and 2012 (https://www.census.gov/econ/overview/go0100.html). In the CoG, the small areas consist of the 48 states of the contiguous United States. These 48 areas contain 86,152 local governments defined by the USCB, such as airports, toll roads, bridges, and other federal government corporations. The parameter of interest is the average number of full-time employees per government at the state level from the 2012 data set, which can be defined as the total number of full-time employees from all local governments divided by the total number of local governments per state. The covariate of interest is the average number of full-time employees per government at the state level from the 2007 data set. After studying residual plots and histograms, we observe skewed patterns in the average number of full-time employees in both the 2007 and 2012 data sets, which partially motivate our proposed framework.

1.2 Our Contribution

Motivated by issues that statistical agencies face with skewed response variables, we make several contributions to the literature. In order to stabilize the skewness and achieve normality in the response variable, we propose an area-level log-measurement error model on the response variable (Eq. (1)). In addition, we propose a log-measurement error model on the covariates (Eq. (2)). Next, under our proposed modeling framework, we derive an EB predictor of positive small area quantities when the covariates contain measurement error. In addition, we propose corresponding estimators of the MSPE using a jackknife and a parametric bootstrap, where we show that the order of the bias is $O(m^{-1})$ under standard regularity conditions. We illustrate the performance of our methodology in both model-based and design-based simulation studies. We summarize our conclusions and provide directions for future work.

The article is organized as follows. Sec. 1.3 details the prior work related to our proposed methodology. In Sec. 2, we propose a log-measurement error model for the response variable, consider a measurement error model of the covariates with a log transformation, and derive the EB predictor under our framework. Sec. 2.1 discusses estimation of the unknown parameters. Sec. 2.2 provides the MSPE of our EB predictor, together with a decomposition of the MSPE that includes the uncertainty of the EB predictor through the unknown parameters. Sec. 3 provides two estimators of the MSPE, namely a jackknife and a parametric bootstrap, where we prove that the order of the bias is $O(m^{-1})$ under standard regularity conditions. Sec. 4 provides both design-based and model-based simulation studies. Sec. 5 provides a discussion and directions for future work.

1.3 Prior Work

In this section, we review the prior literature most relevant to our proposed work. There is a rich literature on the area-level Fay-Herriot model, where various additive measurement error models have been proposed on the covariates. Ybarra and Lohr (2008) proposed the first additive measurement error model on the covariates. More specifically, the authors considered covariate information from another survey that was independent of the response variable. More recently, Berg and Chandra (2014) have proposed an EB predictor and an approximately unbiased MSE estimator under a unit-level log-normal model, where no measurement error is assumed present in the covariates. Turning to the Bayesian literature, Arima et al. (2017), Arima et al. (2015a), and Arima et al. (2015b) have provided fully Bayesian solutions to the measurement error problem for both unit-level and area-level small area estimation problems.

Next, we discuss related literature regarding the proposed jackknife and parametric bootstrap estimators of the MSPE of the Bayes estimators, where the order of the bias is $O(m^{-1})$ under standard regularity conditions. Our proposed jackknife estimator of the MSPE contrasts with that of Jiang et al. (2002), who proposed an MSE estimator using an orthogonal decomposition, where the leading term in the MSE does not depend on the area-specific response and is nearly unbiased. Given that the authors can make an orthogonal decomposition, they can show that the order of the bias of the MSE estimator is $o(m^{-1})$, which contrasts with our proposed approach. Under our approach, the leading term depends on the area-specific response, and thus, the bias is of order $O(m^{-1})$. Turning to the bootstrap, we utilize methods similar to Butar and Lahiri (2003). Using this approach, we propose a parametric bootstrap estimator of the MSPE of our estimator. In a similar manner to the jackknife, the order of the bias for the parametric bootstrap estimator of the MSPE is $O(m^{-1})$.

2 Area-Level Logarithmic Model with Measurement Error

Consider small areas and let () denote the population characteristic of interest in area , where often the information of interest is a population mean or proportion. A primary survey provides a direct estimator of for some or all of the small areas. In this section, we propose a measurement error model suitable for the inference of positively skewed response variable . To achieve normality in the response variable, we therefore propose an area-level log-measurement error model on In the rest of this section, we explain our model and the desirable predictor.

Consider the following model:

| (1) |

where , , and is the sampling error distributed as . Assume

where is the -th covariate of the -th small area, which is unknown but is observed by . The regression coefficient is unknown and must be estimated, and is the random effect distributed as , where is unknown.

Our measurement error model for the case of positively skewed ’s is proposed as

or in a vector form

| (2) |

where and for . Note that in Eq. (2), is non-stochastic within the class of functional measurement error models (c.f. Fuller (2006)). We assume is known, and if it is unknown, it can be estimated using microdata or from another independent survey. We refer to Arima et al. (2017) for further details of estimating .

Now, one can write

where . Thus, for the pair , we have the following joint normal distribution

We assume all the sources of errors for are mutually independent throughout the rest of the paper.

Remark 1.

Next, we give the following conditional distribution to later justify our Bayesian interpretation of the unknown parameter of interest:

i.e.

where .

Recall that the parameter of interest is after transforming from the logarithmic scale back to the original scale. Therefore, the corresponding Bayes predictor is given by . By using the moment generating function of the normal distribution of , the Bayes predictor has the form of . In practice, is unobserved, and since , we can replace it with the observed . Also, and are unknown, and we need to replace them with their consistent estimators. Therefore, the EB predictor of is

| (3) |
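The back-transformation step above relies on the fact that, if a log-scale quantity is normal with mean $\mu$ and variance $v$, then its exponential has mean $\exp(\mu + v/2)$. The following is a minimal sketch of that step only. It assumes the standard Fay-Herriot posterior without measurement error (shrinkage weight `gamma = sigma2_v / (sigma2_v + psi)`), so it is a simplification and not the predictor in Eq. (3); all function and argument names are hypothetical.

```python
import math


def bayes_predictor_original_scale(z_log, xb, sigma2_v, psi):
    """Posterior mean of exp(theta) under a simplified log-scale model.

    Assumption (not the paper's full model): theta | z_log is normal with
    mean gamma * z_log + (1 - gamma) * xb and variance gamma * psi,
    i.e. the usual Fay-Herriot posterior with no measurement error.
    """
    gamma = sigma2_v / (sigma2_v + psi)          # shrinkage weight
    post_mean = gamma * z_log + (1.0 - gamma) * xb
    post_var = gamma * psi
    # Normal moment generating function evaluated at 1.
    return math.exp(post_mean + 0.5 * post_var)


def lognormal_mean_numeric(mu, var, half_width=12.0, steps=20000):
    """Check E[exp(theta)], theta ~ N(mu, var), by the trapezoid rule."""
    sd = math.sqrt(var)
    h = 2.0 * half_width / steps
    total = 0.0
    for k in range(steps + 1):
        z = -half_width + k * h
        weight = 0.5 if k in (0, steps) else 1.0
        total += weight * math.exp(mu + sd * z - 0.5 * z * z)
    return total * h / math.sqrt(2.0 * math.pi)


if __name__ == "__main__":
    pred = bayes_predictor_original_scale(z_log=5.0, xb=4.4, sigma2_v=1.0, psi=0.5)
    print(pred, lognormal_mean_numeric(4.8, 1.0 / 3.0))
```

Note that the predictor exceeds the naive back-transform `exp(post_mean)` by the factor `exp(post_var / 2)`, which is why simply exponentiating the log-scale predictor would be biased downward.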

2.1 Estimation of Unknown Parameters

In this section, we discuss estimation of the unknown parameters and First, an estimator of is obtained by solving the equation

| (4) |

The justification for Eq. (4) is as follows. Let and . Then, where and . Hence, an estimator of is obtained by solving

2.2 Mean Squared Prediction Error of the EB Predictor

In this section, we first define the MSPE of the EB predictor. Second, we show that the cross-product term of the MSPE of the EB predictor is exactly zero. We begin by introducing notation that will be used throughout the rest of the paper. Let

Note that we estimate with , and the term depends on the area-specific response variable unlike Jiang et al. (2002), and its estimator has bias of order . Since we wish to include the uncertainty of the EB predictor with respect to the unknown parameters and , we decompose the MSPE into three terms using Definition 1.

Definition 1.

The MSPE of the EB predictor is

where we show below that

To show that the cross-product term is zero, recall that the Bayes estimator is

Consider

3 Jackknife and Parametric Bootstrap Estimators of the MSPE

In this section, we propose two estimators for the MSPE of the EB predictor. First, we propose a jackknife estimator of the MSPE. Second, we propose a parametric bootstrap estimator of the MSPE. The expectation of the proposed measure of uncertainty based on both methods is correct up to the order $O(m^{-1})$ for the EB predictor.

3.1 Jackknife Estimator of the MSPE

In this section, we propose a jackknife estimator of the MSPE of the EB predictor. We prove that its bias is of order $O(m^{-1})$ under six regularity conditions. We propose the following jackknife estimator:

| (6) |

where denotes all areas except the -th area. Therefore, let

| (7) | ||||
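The leave-one-area-out structure can be sketched in code. The sketch below assumes the generic jackknife form of Jiang et al. (2002), in which the leading term is bias-corrected and the second term is a scaled sum of squared differences between delete-one and full-data predictors; the exact expressions in Eqs. (6) and (7) may differ. The callables `fit`, `m1`, and `predict` are hypothetical placeholders for the parameter estimator, the leading MSPE term, and the EB predictor.

```python
from typing import Callable, Sequence


def jackknife_mspe(
    data: Sequence,
    i: int,
    fit: Callable,      # fit(list_of_area_data) -> parameter estimate
    m1: Callable,       # m1(params, area_data) -> leading MSPE term
    predict: Callable,  # predict(params, area_data) -> EB predictor
) -> float:
    """Generic delete-one-area jackknife estimate of the MSPE for area i."""
    areas = list(data)
    m = len(areas)
    full = fit(areas)
    m1_full = m1(full, areas[i])
    pred_full = predict(full, areas[i])

    bias_sum = 0.0
    sq_sum = 0.0
    for u in range(m):
        # Re-estimate the parameters with area u deleted.
        params_u = fit(areas[:u] + areas[u + 1:])
        bias_sum += m1(params_u, areas[i]) - m1_full
        sq_sum += (predict(params_u, areas[i]) - pred_full) ** 2

    scale = (m - 1) / m
    m1_jack = m1_full - scale * bias_sum   # bias-corrected leading term
    m2_jack = scale * sq_sum               # parameter-uncertainty term
    return m1_jack + m2_jack


if __name__ == "__main__":
    # Toy check: the "predictor" is the sample mean and the leading term is
    # a constant, so the second term is the jackknife variance of the mean.
    y = [1.0, 2.0, 3.0, 4.0]
    est = jackknife_mspe(
        y, 0,
        fit=lambda d: sum(d) / len(d),
        m1=lambda p, a: 1.0,
        predict=lambda p, a: p,
    )
    print(est)
```

In the toy check the second term reduces to the familiar jackknife variance of a sample mean, which is a convenient way to confirm that the scaling factor $(m-1)/m$ is applied correctly before plugging in a real model.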

Note that in all cases, the parameter estimators plugged into these expressions are computed from the data with the indicated area deleted. We define some notation and then establish six regularity conditions used in Theorem 1. Let denote the conditional likelihood function. We define the corresponding first, second, and third derivatives of the conditional likelihood function by , and , respectively. Now, assume the following six regularity conditions:

Condition 1. Define where is a compact set such that .

Condition 2. Assume is a consistent estimator for i.e. .

Condition 3. Assume and both exist for , almost surely in probability.

Condition 4. Assume for .

Condition 5. Assume is a continuous function of for , almost surely in probability, where is positive definite, uniformly bounded away from , and is a measurable function of .

Condition 6. Assume , , and are uniformly bounded for under some and .

Theorem 1.

Assume Conditions 1–6 hold. Then

Proof.

Define

Also, define a remainder term that is bounded in absolute value by such that,

First, we prove has a bias of order Using a Taylor series expansion, we find that

for between and . Also, , , and stand for the first, second, and third derivatives of with respect to . Let , and it follows that

for some between and . In order to approximate the solution of the equation in iteration , we use Householder’s method (Householder (1970), Theorem 4.4.1). See also Theorem 1 of Lohr and Rao (2009):

By taking the initial value , we have

By combining the above results, we find that

Second, we prove has a bias of order . Let

and . Using a Taylor series expansion, we find that

where

and is between and Using an additional Taylor series expansion, we find that

By combining the above results, we find that

Finally,

Hence, .

∎

3.2 Parametric Bootstrap Estimator of the MSPE

In this section, we propose a parametric bootstrap estimator of the MSPE of the EB predictor. We prove that its bias is of order $O(m^{-1})$. Specifically, we extend Butar and Lahiri (2003) to obtain a parametric bootstrap estimator for our proposed EB predictor. To introduce the parametric bootstrap method, consider the following bootstrap model:

| (8) |

Recall that from Definition 1, since . We use the parametric bootstrap twice. First, we use it to estimate in order to correct the bias of (see Eq. (3.1)). Second, we use it to estimate . More specifically, we propose to estimate by , and by , where denotes that the expectation is computed with respect to model in Eq. (3.2) and . In addition, where and are estimators of and with respect to the parametric bootstrap model in Eq. (3.2).

Our proposed estimator of is

| (9) |

which has bias of order $O(m^{-1})$, as shown in Theorem 2.
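The two uses of the parametric bootstrap described above can be sketched as follows. This is only an illustration in the spirit of Butar and Lahiri (2003), assuming the generic form "twice the plug-in leading term, minus its bootstrap expectation, plus the bootstrap mean squared difference between predictors". It uses a toy linear-scale model with a common mean and known variances `A` and `psi`, not the log-measurement error model of this paper; all names are hypothetical.

```python
import random


def bootstrap_mspe(y, i, A, psi, B=200, seed=0):
    """Parametric bootstrap MSPE estimate for area i in a toy model.

    Toy model (an assumption for illustration): theta_j ~ N(mu, A) and
    y_j | theta_j ~ N(theta_j, psi), with A and psi known and mu estimated
    by the sample mean. The predictor is gamma * y_i + (1 - gamma) * mu.
    """
    rng = random.Random(seed)
    m = len(y)
    gamma = A / (A + psi)
    mu_hat = sum(y) / m
    pred_hat = gamma * y[i] + (1.0 - gamma) * mu_hat

    def m1(mu):
        # Leading term; in this toy model it does not depend on mu.
        return gamma * psi

    m1_star_sum = 0.0
    m2_sum = 0.0
    for _ in range(B):
        # Generate a bootstrap sample from the fitted model.
        y_star = [
            mu_hat + rng.gauss(0.0, A ** 0.5) + rng.gauss(0.0, psi ** 0.5)
            for _ in range(m)
        ]
        mu_star = sum(y_star) / m
        m1_star_sum += m1(mu_star)
        # Predictor for the ORIGINAL y_i with bootstrap parameter estimate.
        pred_star = gamma * y[i] + (1.0 - gamma) * mu_star
        m2_sum += (pred_star - pred_hat) ** 2

    m1_corrected = 2.0 * m1(mu_hat) - m1_star_sum / B  # first use: bias correction
    m2_boot = m2_sum / B                               # second use: parameter uncertainty
    return m1_corrected + m2_boot


if __name__ == "__main__":
    y = [0.1 * k for k in range(30)]
    print(bootstrap_mspe(y, i=0, A=1.0, psi=0.5))
```

Because the bias-corrected leading term is a difference, nothing in this additive construction forces the final estimate to be nonnegative when the leading term depends on the estimated parameters.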

Theorem 2.

Assume and . The bootstrap estimator of the MSPE has bias of order , i.e.

4 Experiments

In this section, we investigate the performance of the EB predictors in comparison to the direct estimators through design-based and model-based simulation studies. In addition, we evaluate the MSPE estimators using both a jackknife and parametric bootstrap.

4.1 Design-Based Simulation Study

In this section, we consider a design-based simulation study using the CoG data set as described in Sec. 1.1.

4.1.1 Design-Based Simulation Setup

We describe the design-based simulation setup. The parameter of interest is the average number of full-time employees per government at the state level from the 2012 data set. The covariate is the average number of full-time employees per government at the state level from the 2007 data set. We observe skewed patterns in the average number of full-time employees in both 2007 and 2012, which motivates our proposed framework.

For the response variable, we select a total sample of 7,000 governmental units proportionally allocated to the states; for the covariates, we select a total sample of 70,000 units. The survey-weighted averages are then calculated. The measurement error variance was obtained from a Taylor series approximation, with the required variance estimated from the formula for the variance under simple random sampling without replacement within each state. The sampling variances were estimated by a Generalized Variance Function (GVF) method (see Fay and Herriot (1979)), and we assume them to be known throughout the estimation procedure.
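The Taylor series step for the measurement error variance can be sketched as follows. This is a minimal illustration, assuming a first-order (delta-method) approximation in which the variance of the log of a sample mean is approximated by the variance of the mean divided by the squared mean, and assuming an unweighted mean within a state. The function names and the toy numbers are hypothetical and are not taken from the CoG data.

```python
from statistics import mean, variance


def srswor_variance_of_mean(sample, population_size):
    """Variance of a sample mean under simple random sampling without
    replacement: (1 - n/N) * s^2 / n, with s^2 the sample variance."""
    n = len(sample)
    fpc = 1.0 - n / population_size      # finite population correction
    return fpc * variance(sample) / n


def log_mean_variance(sample, population_size):
    """First-order Taylor approximation of Var(log(sample mean))."""
    ybar = mean(sample)
    return srswor_variance_of_mean(sample, population_size) / ybar ** 2


if __name__ == "__main__":
    # Toy state with N = 40 governments, of which n = 4 are sampled.
    print(log_mean_variance([2.0, 4.0, 6.0, 8.0], population_size=40))
```

The approximation is most reliable when the coefficient of variation of the sample mean is small, so states with very few sampled units deserve extra care.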

For the design-based simulation, we draw 1,000 samples and estimate the parameters from each sample. We evaluate our proposed predictors by the empirical MSE for each state:

where is the total number of replications, and is the estimator of . In addition, when the parametric bootstrap is used, we take bootstrap samples. We use the same number of replications and bootstrap samples in the design and model-based simulation studies.
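As a concrete reading of this criterion, the empirical MSE for one state is taken here to be the average squared difference between the replicate estimates and the target value. The sketch below makes that assumption explicit; the names `empirical_mse` and `empirical_mse_by_area` are hypothetical helpers.

```python
def empirical_mse(estimates, truth):
    """Average squared error of replicate estimates around a fixed target."""
    replications = len(estimates)
    return sum((est - truth) ** 2 for est in estimates) / replications


def empirical_mse_by_area(estimates_by_area, truth_by_area):
    """Apply empirical_mse to every area; both arguments are dicts keyed by area."""
    return {
        area: empirical_mse(estimates_by_area[area], truth_by_area[area])
        for area in truth_by_area
    }


if __name__ == "__main__":
    # Two toy replications for a single hypothetical area "A".
    print(empirical_mse_by_area({"A": [1.0, 3.0]}, {"A": 2.0}))
```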

4.1.2 Design-Based Simulation Results

In this section, we provide the results of the design-based simulation study.

Investigating the performance of the proposed estimators

Recall that the covariate of interest is the average number of full-time employees per government at the state level from the 2007 data set, and we wish to predict the average number of full-time employees per government at the state level in 2012. To do so, we give the predictors for each state as well as their corresponding empirical MSEs (EMSEs) in Tables 1 and 2. More specifically, we compare the following three estimators:

1) the direct estimator,

2) the EB predictor, assuming the true covariate and ignoring in our model,

3) the EB predictor, assuming the true covariate has measurement error, where is included in our model.

We observe that in most cases the is smaller than the . However, we observe that our proposed EB predictor does not always outperform the direct estimator, which we further explore in our model-based simulation studies in Sec. 4.2.

| | State | | | | | | | |

|---|---|---|---|---|---|---|---|---|

| 1 | RI | 10 | 191.641 | 202.928 | 204.907 | 6.523 | 5.390 | 5.103 |

| 2 | AK | 14 | 132.301 | 120.112 | 123.684 | 4.501 | 6.153 | 5.793 |

| 3 | NV | 15 | 420.299 | 422.992 | 431.912 | 8.077 | 8.170 | 8.449 |

| 4 | MD | 19 | 824.939 | 784.779 | 794.784 | 8.050 | 5.524 | 6.503 |

| 5 | DE | 27 | 64.273 | 72.674 | 73.130 | 3.003 | 5.113 | 5.182 |

| 6 | LA | 40 | 363.838 | 342.056 | 343.344 | 6.017 | 0.845 | -2.863 |

| 7 | VA | 40 | 560.881 | 526.612 | 530.160 | 6.669 | 8.265 | 8.148 |

| 8 | NH | 44 | 80.695 | 83.645 | 83.857 | -3.994 | 2.254 | 2.387 |

| 9 | UT | 47 | 126.045 | 117.729 | 118.512 | 2.827 | 2.873 | 2.461 |

| 10 | AZ | 49 | 332.610 | 349.704 | 350.915 | 6.270 | 7.380 | 7.439 |

| 11 | CT | 49 | 187.500 | 191.494 | 192.054 | 4.851 | 5.457 | 5.528 |

| 12 | SC | 53 | 231.790 | 221.881 | 222.574 | 5.158 | 6.279 | 6.218 |

| 13 | WV | 53 | 83.568 | 84.071 | 84.315 | 2.754 | 2.482 | 2.336 |

| 14 | WY | 55 | 43.084 | 39.629 | 39.795 | 2.493 | 3.873 | 3.824 |

| 15 | VT | 59 | 27.717 | 27.529 | 27.574 | 0.474 | 0.751 | 0.688 |

| 16 | ME | 65 | 38.892 | 40.713 | 40.789 | 2.966 | 1.899 | 1.840 |

| 17 | NM | 67 | 93.817 | 93.360 | 93.913 | 3.496 | 3.331 | 3.529 |

| 18 | MA | 70 | 237.066 | 231.213 | 231.999 | 4.218 | 1.741 | 2.310 |

| 19 | TN | 72 | 245.317 | 232.133 | 233.405 | 4.257 | 6.144 | 6.023 |

| 20 | NC | 76 | 372.264 | 357.791 | 359.375 | 5.846 | 6.997 | 6.700 |

| 21 | MS | 78 | 137.977 | 132.143 | 132.454 | 3.891 | 0.299 | 0.774 |

| 22 | ID | 92 | 46.450 | 45.642 | 45.784 | 2.451 | 1.910 | 2.016 |

| 23 | AL | 93 | 162.841 | 160.389 | 160.818 | 3.356 | 2.131 | 2.406 |

| 24 | MT | 99 | 23.296 | 22.457 | 22.508 | 0.461 | 1.481 | 1.433 |

| 25 | KY | 104 | 119.415 | 121.105 | 121.576 | 1.935 | 2.928 | 3.134 |

| 26 | NJ | 108 | 235.215 | 245.163 | 245.595 | 3.898 | 5.663 | 5.713 |

| 27 | GA | 109 | 255.141 | 243.862 | 244.539 | 5.686 | 6.696 | 6.648 |

| | State | | | | | | | |

|---|---|---|---|---|---|---|---|---|

| 28 | AR | 118 | 68.911 | 65.223 | 65.362 | -3.160 | 2.719 | 2.646 |

| 29 | OR | 120 | 72.363 | 72.483 | 72.653 | 1.376 | 1.493 | 1.648 |

| 30 | FL | 124 | 377.822 | 365.035 | 366.658 | 7.658 | 8.148 | 8.092 |

| 31 | OK | 144 | 76.686 | 74.610 | 74.803 | -2.447 | 1.155 | 0.926 |

| 32 | WA | 145 | 93.681 | 91.238 | 91.476 | 3.077 | 3.920 | 3.852 |

| 33 | SD | 153 | 14.757 | 14.078 | 14.142 | -1.338 | -3.583 | -4.546 |

| 34 | IA | 155 | 56.219 | 55.686 | 55.790 | -4.924 | -0.962 | -1.332 |

| 35 | CO | 184 | 74.373 | 71.579 | 71.908 | 0.789 | 2.907 | 2.746 |

| 36 | NE | 197 | 33.713 | 31.017 | 31.215 | 1.326 | -0.561 | -1.167 |

| 37 | IN | 217 | 75.994 | 78.712 | 78.830 | 0.619 | 0.609 | 0.775 |

| 38 | ND | 217 | 8.336 | 7.421 | 7.469 | -1.704 | -1.436 | -1.644 |

| 39 | MI | 236 | 85.314 | 97.710 | 97.705 | -1.220 | 4.945 | 4.944 |

| 40 | WI | 246 | 61.304 | 61.320 | 61.451 | 2.486 | 2.495 | 2.569 |

| 41 | NY | 270 | 280.982 | 278.784 | 283.973 | 6.457 | 6.275 | 6.681 |

| 42 | MO | 278 | 61.972 | 62.846 | 62.946 | 0.093 | 1.307 | 1.409 |

| 43 | MN | 289 | 42.025 | 45.747 | 45.727 | -1.572 | 2.367 | 2.355 |

| 44 | OH | 302 | 108.261 | 111.756 | 111.905 | 2.364 | 3.820 | 3.864 |

| 45 | KS | 309 | 30.804 | 30.253 | 30.321 | 1.859 | 2.253 | 2.208 |

| 46 | CA | 338 | 238.284 | 248.558 | 248.800 | 6.991 | 6.244 | 6.223 |

| 47 | TX | 374 | 221.105 | 204.983 | 205.689 | 2.570 | 5.965 | 5.892 |

| 48 | PA | 386 | 81.653 | 83.551 | 83.668 | 2.888 | 3.628 | 3.666 |

| 49 | IL | 567 | 64.650 | 67.278 | 67.172 | 1.490 | 3.110 | 3.064 |

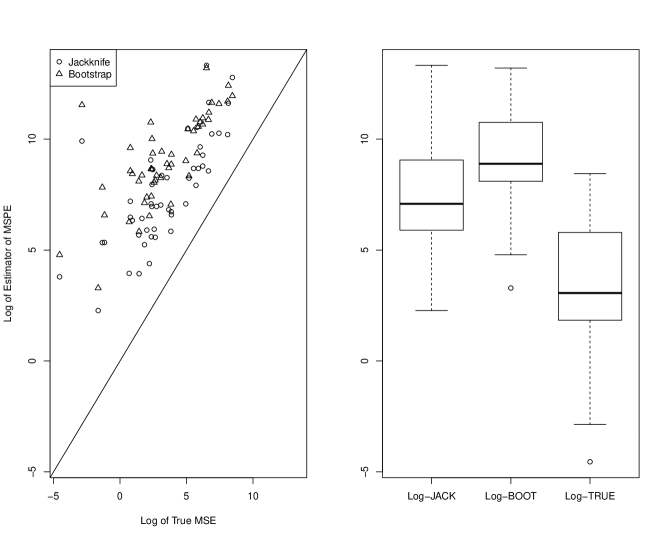

Jackknife versus parametric bootstrap estimators

Next, we consider the performance of MSPE estimators, i.e., the jackknife and bootstrap, with respect to the true MSE, i.e., in Figure 1. The results are given on the logarithmic scale, and we observe that the distribution of jackknife is closer to the distribution of true MSE when compared to the bootstrap. Therefore, we recommend the jackknife given that it slightly overestimates the true MSE. As already mentioned, given that our proposed estimator does not uniformly beat the direct estimator in terms of the EMSE, we conduct a model-based simulation study in Sec. 4.2 to investigate this and provide further insight.


4.2 Model-Based Simulation Study

In this section, we describe our model-based simulation study. First, we further investigate the performance of the proposed EB predictor. Second, we compare the proposed jackknife and parametric bootstrap estimators of the MSPE. Third, we investigate how often the variance estimates are zero. Finally, we investigate how the estimate of the regression parameter changes when the measurement error variance is misspecified. Our goal through this model-based simulation study is to understand how one could improve the EB predictor through future research, and to further understand its underlying behavior.

4.2.1 Model-Based Simulation Setup

In this section, we provide the setup of our model-based simulation study in Table 3. This setup follows Eqs. (1) and (2). We are interested in comparing the following four estimators:

1) the direct estimator,

2) the EB predictor, assuming the true covariate

3) the EB predictor, assuming the true covariate and ignoring in our model,

4) the EB predictor, assuming the true covariate has measurement error, where is included in our model

We compare these four estimators (for each area ) using the empirical MSE:

where is the estimator of .

| Simulation Setup: |

| Generate from a Normal(5,9) and from a Gamma(4.5,2) |

| Take , , and |

| , , and |

| Take and |

| Parameter Definition: |

| Let and (number of small areas) |

| Let (for all cases) |

| Let |

| , where or |

| Allow of the ’s randomly receive and the rest . |

In order to evaluate the jackknife and parametric bootstrap estimators of , we consider the relative bias, denoted by and , respectively. More specifically, the relative biases are defined as follows for each area :

4.2.2 Model-Based Simulation Results

In this section, we summarize our results of the model-based simulation study.

Investigating the performance of the proposed estimators

In this section, we investigate the performance of the proposed estimators. Table 4 provides the four estimators given in Sec. 4.2.1 with their empirical MSEs, where we average the results over all the small areas and re-scale them using the logarithmic scale. When , the MSE’s for all EB predictors are the same since the term vanishes and is the same as . Overall, as the value of increases, the empirical MSE increases for almost all predictors. We observe there are cases in which the EB predictors cannot outperform the direct estimators based on the simulation results.

Table 4 illustrates that there are cases in which the empirical MSE of the EB predictor is larger than that of the direct estimator. In these cases, the EB predictors cannot outperform the direct estimators because estimation errors propagate through the exponential form of the EB predictors; see expression (3). Therefore, as the measurement error variance increases, the MSE of our proposed EB predictors can also increase. This is the main caveat to keep in mind when using a log-model with measurement error. In order to prevent such behavior, a further adjustment should be made to the EB predictors, which we discuss in Sec. 5.

| m | k | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|
| 20 | 0 | 46.766 | 50.626 | 50.626 | 50.626 | 102.088 | 111.09 | 111.09 | 111.09 |
| | 20 | 53.054 | 50.139 | 49.229 | 49.864 | 115.974 | 109.338 | 104.398 | 108.425 |
| | 50 | 42.232 | 41.174 | 42.464 | 43.110 | 93.496 | 91.163 | 92.948 | 94.223 |
| | 80 | 42.519 | 44.285 | 43.469 | 44.794 | 93.881 | 97.557 | 95.152 | 97.495 |
| | 100 | 44.682 | 41.81 | 45.073 | 46.331 | 99.13 | 92.161 | 99.938 | 102.48 |
| 50 | 0 | 49.624 | 47.732 | 47.732 | 47.732 | 110.061 | 106.167 | 106.167 | 106.167 |
| | 20 | 44.292 | 42.851 | 44.702 | 45.098 | 97.644 | 94.541 | 99.321 | 100.255 |
| | 50 | 45.512 | 44.677 | 46.071 | 47.59 | 99.615 | 98.56 | 101.351 | 105.547 |
| | 80 | 42.703 | 41.773 | 45.289 | 46.469 | 94.339 | 93.268 | 101.068 | 103.615 |
| | 100 | 43.83 | 43.201 | 44.779 | 45.68 | 97.144 | 95.961 | 98.347 | 100.319 |
| 100 | 0 | 42.635 | 42.241 | 42.241 | 42.241 | 94.625 | 92.802 | 92.802 | 92.802 |
| | 20 | 46.216 | 45.601 | 46.179 | 47.427 | 103.264 | 101.412 | 103.035 | 106.172 |
| | 50 | 50.93 | 45.982 | 49.347 | 48.519 | 113.343 | 103.08 | 109.718 | 108.214 |
| | 80 | 50.132 | 46.586 | 48.137 | 48.966 | 111.618 | 103.055 | 106.712 | 108.454 |
| | 100 | 44.925 | 44.711 | 48.009 | 49.031 | 100.996 | 100.764 | 107.472 | 109.515 |
| 500 | 0 | 47.338 | 45.253 | 45.253 | 45.253 | 107.275 | 103.465 | 103.465 | 103.465 |
| | 20 | 46.382 | 45.369 | 47.575 | 47.652 | 104.607 | 102.867 | 107.896 | 108.169 |
| | 50 | 53.045 | 46.854 | 50.662 | 49.126 | 119.208 | 106.396 | 114.378 | 110.006 |
| | 80 | 47.766 | 44.868 | 47.706 | 49.950 | 108.289 | 104.921 | 107.454 | 112.805 |
| | 100 | 48.586 | 45.313 | 49.372 | 50.449 | 109.795 | 103.378 | 111.069 | 113.218 |

Jackknife versus parametric bootstrap estimators

We compare the jackknife MSPE estimator of the EB predictor to the bootstrap estimator using the relative bias (see Table 5). In addition, we compare box plots of the jackknife and bootstrap MSPE estimators of the EB predictor with box plots of the true values (see Figure 3). Both Table 5 and Figure 3 illustrate that the bootstrap yields a large number of negative values, which is due to the construction of the bootstrap estimator. We find that the bootstrap grossly underestimates the true values, whereas the jackknife slightly overestimates them. This could be due to generating data from the normal distribution and to the non-linear transformation in the model. Thus, we recommend the jackknife in practice.

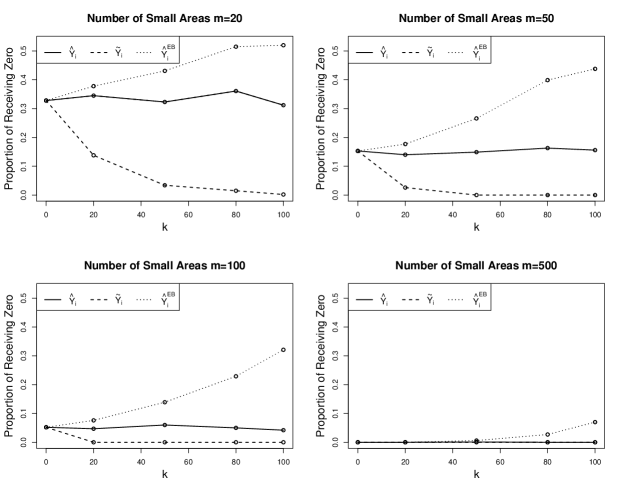

Amount of zeros for the estimates of

Here, we investigate the proportion of zero estimates for based on iteratively solving Eqs. (4) and (5). Figure 2 illustrates that as the number of small areas increases, the proportion of zero estimates decreases. More specifically, we observe when and as increases, and tend to have a proportion of zero estimates of between 0.3 and 0.5. When and as increases, and tend to have a proportion of zero estimates of between 0.15 and 0.4. When and as increases, and tend to have a proportion of zero estimates of between 0.05 and 0.3. When and as increases, and tend to have a proportion of zero estimates of between 0 and 0.05. One should be cautious of this in practical applications; adjusting for it is left for future work.


The effect of misspecification of on

We investigate the effect of mis-specifying the variance on the estimation of the regression parameter To accomplish this, we conduct an empirical study based on the proposed model-based simulation study in Table 3 for the EB predictor . Assume and we consider two sets of experiments for each value of which are summarized in Table 6. Recall that Denote the first set of experiments by and where we assume Denote the misspecified value of by Denote the second set of experiments by and where we assume and We conduct both sets of experiments for and . For each experiment, we estimate the unknown parameter under the followings: (1) the true value of denoted by and (2) the misspecified value of denoted by .

Then we compute the average absolute difference between the respective ’s by considering the following:

In addition, we compute the magnitude of bias related to and with respect to the true value of as follows

Table 6 illustrates that the overall misspecification of leads to bias in the estimated regression parameter. When the magnitude of measurement error is zero (i.e. ), there is no difference between the estimated using or . On the other hand, when the magnitude of increases and we have more uncertainty in the error variance , values of and diverge more from one another, and the magnitude of the bias increases. Also, we observe that as the number of small areas increases, the bias decreases. One could address this bias by constructing an adaptive estimator whose bias is corrected through techniques such as the bootstrap, and by developing a test of parameter specification.

| m | k | | | | | |
|---|---|---|---|---|---|---|
| 20 | 0 | 111.09 | 120.731 | 118.478* | 9.641 | 7.389* |
| | 20 | 108.425 | 115.222 | 115.884* | 6.796 | 7.459* |
| | 50 | 94.223 | 107.245 | 111.255* | 13.021 | 17.032* |
| | 80 | 97.495 | 113.06 | 129.772* | 15.565 | 32.277* |
| | 100 | 102.48 | 115.536* | 119.84* | 13.056* | 17.36* |
| 50 | 0 | 106.167 | 112.318* | 116.383* | 6.154* | 10.216* |
| | 20 | 100.255 | 110.449 | 106.743* | 10.194 | 6.489* |
| | 50 | 105.547 | 115.365* | 118.838* | 9.818* | 13.291* |
| | 80 | 103.615 | 114.127 | 112.717* | 10.512 | 9.102* |
| | 100 | 100.319 | 110.454* | 112.552* | 10.134* | 12.233* |
| 100 | 0 | 92.802 | 98.67 | 102.932* | 5.866 | 10.13* |
| | 20 | 106.172 | 106.788* | 108.623* | 1.048* | 2.534* |
| | 50 | 108.214 | 119.666 | 120.032* | 11.452 | 11.819* |
| | 80 | 108.454 | 117.223* | 119.418* | 8.769* | 10.964* |
| | 100 | 109.515 | 119.028 | 117.071* | 9.513 | 7.557* |
| 500 | 0 | 103.465 | 108.38 | 110.455* | 4.908 | 6.991* |
| | 20 | 108.169 | 119.672 | 115.892 | 11.502 | 7.722 |
| | 50 | 110.006 | 121.191 | 125.754* | 11.184 | 15.747* |
| | 80 | 112.805 | 125.672* | 129.761* | 12.867* | 16.955* |
| | 100 | 113.217 | 123.037* | 124.597* | 9.819* | 11.379* |


| m | k | Experiment | |||

|---|---|---|---|---|---|

| 20 | |||||

| 500 | |||||

5 Discussion

In this paper, we have made four contributions. First, in order to stabilize the skewness and achieve normality in the response variable, we have proposed an area-level log-measurement error model on the response variable, together with a measurement error model on the covariates. Second, under our proposed modeling framework, we derived the EB predictor of positive small area quantities when the covariates contain measurement error. Third, we proposed corresponding estimators of the MSPE using a jackknife and a bootstrap method, where we showed that the order of the bias is $O(m^{-1})$, where $m$ is the number of small areas. Fourth, we have illustrated the performance of our methodology in both design-based and model-based simulation studies, where the EMSE of the proposed EB predictor is not uniformly better than that of the direct estimator.

Our model-based simulation studies have provided further investigation of, and guidance on, this behavior. For example, one fruitful area of future research would be providing a correction to the EB predictor to avoid such behavior. One way to address this issue is by estimating in such a way that its order of bias is smaller than . This could help to reduce the amount of propagated errors in the EB predictor . Another way, which is less theoretically burdensome, is by estimating the covariate , as we have assumed it follows a functional measurement error model rather than a structural one. We have studied the MSPE of the EB predictor using both the jackknife and bootstrap in simulation studies, where we have shown that the jackknife estimator performs better than the bootstrap one under our log model.

References

- Arima et al. (2017) Arima, S., Bell, W. R., Datta, G. S., Franco, C. and Liseo, B. 2017. Multivariate Fay–Herriot Bayesian estimation of small area means under functional measurement error. Journal of the Royal Statistical Society: Series A, 180 (4): 1191–1209.

- Arima et al. (2015a) Arima, S., Datta, G. S. and Liseo, B. 2015a. Accounting for measurement error in covariates in SAE: an overview. In Analysis of Poverty Data by Small Area Methods, ed. M. Pratesi. Wiley, New York.

- Arima et al. (2015b) Arima, S., Datta, G. S. and Liseo, B. 2015b. Bayesian estimators for small area models when auxiliary information is measured with error. Scandinavian Journal of Statistics, 42 (2): 518–529.

- Bellow and Lahiri (2011) Bellow, M. E. and Lahiri, P. S. 2011. An empirical best linear unbiased prediction approach to small area estimation of crop parameters. National Agricultural Statistics Service, University of Maryland.

- Berg and Chandra (2014) Berg, E. and Chandra, H. 2014. Small area prediction for a unit-level lognormal model. Computational Statistics & Data Analysis, 78: 159–175.

- Butar and Lahiri (2003) Butar, F. B. and Lahiri, P. 2003. On measures of uncertainty of empirical Bayes small-area estimators. Journal of Statistical Planning and Inference, 112 (2): 63–76.

- Chandra and Chambers (2011) Chandra, H. and Chambers, R. 2011. Small area estimation for skewed data in presence of zeros. Calcutta Statistical Association Bulletin, 63 (4): 241–258.

- Fay and Herriot (1979) Fay, R. E. and Herriot, R. A. 1979. Estimates of income for small places: an application of James–Stein procedures to census data. Journal of the American Statistical Association, 74 (366a): 269–277.

- Fuller (2006) Fuller, W. A. 2006. Measurement error models. John Wiley & Sons.

- Ghosh et al. (2015) Ghosh, M., Kubokawa, T. and Kawakubo, Y. 2015. Benchmarked empirical Bayes methods in multiplicative area-level models with risk evaluation. Biometrika, 102 (3): 647–659.

- Householder (1970) Householder, A. S. 1970. The numerical treatment of a single nonlinear equation. McGraw-Hill.

- Jiang et al. (2002) Jiang, J., Lahiri, P. and Wan, S. M. 2002. A unified jackknife theory for empirical best prediction with M-estimation. The Annals of Statistics, 30 (6): 1782–1810.

- Lohr and Rao (2009) Lohr, S. L. and Rao, J. 2009. Jackknife estimation of mean squared error of small area predictors in nonlinear mixed models. Biometrika, 96: 457–468.

- Pfefferman (2013) Pfefferman, D. 2013. New important developments in small area estimation. Statistical Science, 28 (1): 40–68.

- Rao and Molina (2015) Rao, J. N. and Molina, I. 2015. Small Area Estimation. Wiley Series in Survey Sampling, London.

- Slud and Maiti (2006) Slud, E. V. and Maiti, T. 2006. Mean-squared error estimation in transformed Fay–Herriot models. Journal of the Royal Statistical Society: Series B (Statistical Methodology), 68 (2): 239–257.

- Wang and Fuller (2003) Wang, J. and Fuller, W. A. 2003. The mean squared error of small area predictors constructed with estimated area variances. Journal of the American Statistical Association, 98 (463): 716–723.

- Ybarra and Lohr (2008) Ybarra, L. M. and Lohr, S. L. 2008. Small area estimation when auxiliary information is measured with error. Biometrika, 95 (4): 919–931.