Transformer-based Personalized Attention Mechanism for Medical Images with Clinical Records

Abstract

In medical image diagnosis, identifying the attention region, i.e., the region of interest for which the diagnosis is made, is an important task. Various methods have been developed to automatically identify target regions from given medical images. However, in actual medical practice, the diagnosis is made based not only on the images but also on a variety of clinical records. This means that pathologists examine medical images with some prior knowledge of the patients and that the attention regions may change depending on the clinical records. In this study, we propose a method called the Personalized Attention Mechanism (PersAM), by which the attention regions in medical images are adaptively changed according to the clinical records. The primary idea of the PersAM method is to encode the relationships between the medical images and clinical records using a variant of Transformer architecture. To demonstrate the effectiveness of the PersAM method, we applied it to a large-scale digital pathology problem of identifying the subtypes of 842 malignant lymphoma patients based on their gigapixel whole slide images and clinical records.

1 Introduction

Medical images are often diagnosed on the basis of specific regions of interest in the images rather than their entirety. For example, cancer pathologists typically focus on specific tumor regions rather than the entire pathological tissue specimen. In this study, we refer to such regions as attention regions. Developing computational methods to estimate the attention regions is an important task in medical image analysis to obtain high performance and explainability. In existing methods, the attention regions are predominantly estimated based solely on the images themselves [15, 9, 17]. However, in clinical practice, pathologists use both the imaging information and various clinical records (including basic demographic details such as patient age and gender, the results of various medical examinations, and genetic information). It is well-recognized among pathologists that patient-specific information can help them focus on specific tissues in the specimens or narrow the diagnostic target classes. In practice, the region to be focused on in a tissue slide changes depending on the type of organs from which a tissue specimen is sliced, or the results of a medical interview and some medical tests narrow down suspected diseases. It is known that the additional use of clinical record information can enhance the performance also in medical image analysis [35, 36, 30, 23]. In this study, we introduce a framework, called the Personalized Attention Mechanism (PersAM) framework, that adaptively changes the attention regions in medical images according to patient-specific information. The PersAM framework mimics pathologists’ decision-making and provides high explainability by modeling the relationship between medical images and clinical records.

In this paper, we focus on the PersAM framework in the context of digital pathology. Particularly, we deal with malignant lymphoma as the target disease, whereas the proposed PersAM framework can be applied to other images in similar problem settings. In digital pathology, whole slide images (WSIs) are used as image data, which are large digital images scanned by a scanner. The image size of WSIs can be up to 100,000100,000 pixels and the tissue regions of WSIs have both tumor and normal regions mixedly. Therefore, it is especially important in digital pathology to identify the attention regions in a vast image and diagnose focusing on some areas in the tissue specimen. For examples of malignant lymphoma, pathologists diagnose diffuse large B-cell lymphoma (DLBCL) focusing on large cells in a tissue specimen, while they diagnose follicular lymphoma (FL) focusing on follicular structures in a tissue specimen. In practical diagnosis, as mentioned above, pathologists observe such regions considering a patient’s clinical record information that includes basic profiles and results of some examinations. As the main target problem, we are concerned in this paper with the PersAM framework in a digital cancer pathology task, where clinical records can be used together with the WSIs of tissue specimens as patient-specific information.

The problem of attention region estimation in digital cancer pathology can be formulated as a weakly supervised learning problem because only the class label for the entire image is given—the annotations for the attention region are not. Some public database has pathologists’ annotations for tumor regions, but most problem settings that employ other private datasets have no patch-level annotations and only patient-level annotations. Hence, in digital pathology using WSIs, attention region estimation and each machine learning task should be generally performed with only patient-level annotations. Multiple Instance Learning (MIL) [15, 25, 18] is one method used for such weakly supervised attention region estimation problems. In MIL, an image patch is considered an instance and the entire image (or set of a large number of patches) is considered a bag. The problem then reduces to estimating the label of each image patch given the label of the bag (e.g., tumor or normal), where the image patches estimated to be tumors are interpreted as the attention regions. In the context of MIL in digital pathology, attention-based MIL is well-known as a successful method. [15, 9, 17]. An attention-based MIL can compute attention weights that indicate how each instance contributes to the classification result. Instances that have higher attention weights in WSI are interpreted as tumor regions in attention-based MIL for digital pathology. In this study, we would like to introduce the PersAM framework that can adaptively change attention regions depending on different clinical record information even if input WSI is the same. In the case of the aforementioned attention-based MIL, there is no mechanism to change the attention regions when different clinical record information is input to the same WSI since attention weights are calculated independently for each instance without considering the relationship between medical images and clinical records. The proposed PersAM method employs a variant of the Transformer architecture to encode the relationships between the medical images and corresponding clinical records. In the Transformer architecture, the relationships between multiple components are expressed in the form of attentions [32]. The application of Transformer architecture to computer vision can calculate attention regions calculated by encoding the relationship between image patches [6]. The Transformer architecture could be expanded even into multimodal inputs such as images and table data, which encodes the relationship between each instance of multimodal inputs [37, 3]. By combining the medical images and clinical records and then computing the attentions, the proposed method enables personalized attention, which represents the strength of the relationship between each clinical record and each region (patch) in the image.

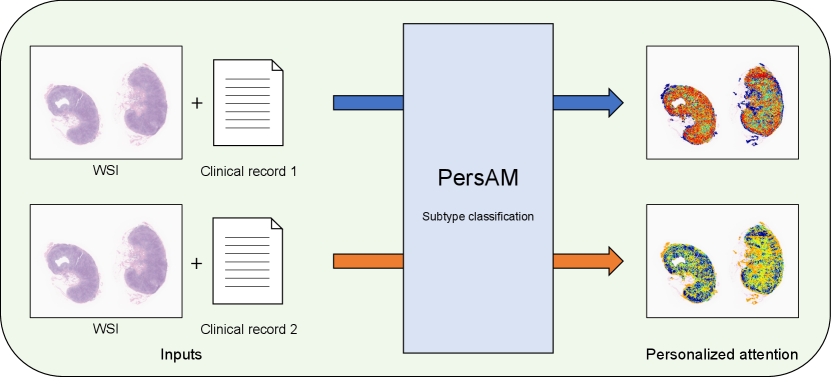

In this study, we present a weakly supervised attention region estimation problem formulated as an MIL problem and propose the PersAM method to obtain attention regions that can be adaptively changed according to patient clinical records. Figure 1 illustrates the concept of the proposed PersAM method, where different clinical record information is given to the same WSI as inputs. Regions with red color in Personalized attention represent attention regions in each output, and the PersAM method can provide different attention regions depending on different clinical records even if input WSIs are the same. This mimics a pathologist’s decision-making where he/she observes tumor-specific regions in the tissue specimen considering the corresponding patient’s clinical record. With a slight abuse of terminologies, we refer to both the framework and our proposed method as PersAM in this work. The proposed PersAM method enables us to provide two types of personalized attentions: exploratory and explanatory attentions. Exploratory attention is a class-independent attention that is determined solely by a WSI and a clinical record, i.e., the first regions of interest to the pathologist when observing a tissue specimen. On the other hand, explanatory attention is a class-dependent attention that is determined by a WSI, a clinical record, and class information, i.e., the regions of interest to the pathologist when predicting a disease. To obtain these attentions, the proposed model has a Transformer architecture that can encode the relationship among images, clinical records, and class information.

To demonstrate the effectiveness of the proposed PersAM method, we applied it to the pathological subtype classification of 842 patients with malignant lymphoma. The training dataset consisted of WSIs and clinical records, where each WSI was a gigapixel image of an entire pathological tissue slide: the clinical record included the age, gender, target organ of the tissue section, interview with a doctor, and blood test results. By combining pathological images and clinical records, the proposed method performed better than several baseline methods. Furthermore, we confirmed that the proposed PersAM method can successfully provide personalized attention in the Transformer architecture.

The main contributions of this work are summarized as follows.

-

•

First, inspired by medical image diagnoses by pathologists in clinical practice, we introduce a framework for a personalized attention mechanism in which the attention is determined on the basis of patient-specific information.

-

•

Second, for the problem of weakly supervised attention region estimation based on MIL, we propose a variant of the Transformer architecture.

-

•

Third, we apply the proposed model to a large-scale digital pathology task to demonstrate the effectiveness of the proposed framework and method.

2 Preliminaries

2.1 Problem setup

In this paper, we focus on the PersAM framework in the context of subtype classification for digital cancer pathology. Let be the set of natural numbers up to . The training dataset is denoted as , where is the number of patients and each of , , and represents the pathological image, clinical record, and subtype class label of the patient, respectively. The image is a digitally scanned WSI of the entire pathological specimen. Because the WSI is usually a huge image of gigapixel size, it is too large to be directly fed into the model. Therefore, image patches extracted from are used as the inputs to the model, and we write where is the image patch taken from the patient and is the number of image patches taken from . The clinical record is represented as a set of numerical vectors and denoted as , where is the clinical factor represented as a vector and is the number of clinical factors. For example, in §4, we consider the case with where the first clinical factor is the patient profile (such as age and gender) and the results of a medical interview, whereas the second clinical factor is a set of blood test results. In our clinical records, the patient profiles are represented by integer values or binary labels, the results of a medical interview are represented by binary labels, and the blood test results are represented by continuous values, respectively. If a clinical record has text information as findings, it can be used as a clinical factor after vectorizing in some manner. The details of clinical record information is explained in §4. The subtype class label is represented as a -dimensional one-hot vector.

In digital cancer pathology, a WSI includes both tumor cells and normal cells, and subtype diagnosis is conducted on the basis of a subset of the tumor cells. This means that, among the image patches taken from a WSI, only some of them are considered to contain useful information for subtype classification. We regard that image patch subset as the attention region. We represent the attention degree of each image patch as an attention weight. Given a pathological WSI and a clinical record, our model provides the attention weights—which each represents the importance of each image patch—and then makes a subtype classification based on the attention weights. As an example of the application of the PersAM framework in the digital pathology problem, we consider the case where the attention weights vary according to the clinical records. Clinical records possibly contain two types of information: i) the parts of the pathological specimen that should be observed and ii) which subtype it is likely to be classified under. In this study, we consider two types of personalized attentions, called exploratory and explanatory attentions, each of which is respectively obtained from each of these two types of information. We introduce a variant of the Transformer architecture that can provide both the exploratory attention weight and the explanatory attention weight of each image patch.

2.2 Related works

Digital pathology

Pathological diagnosis plays an important role in medicine. Various computer-aided diagnosis methods for pathological images have been developed for various problems, such as classification [13, 21, 9], tumor region identification [4, 5, 1], segmentation [34, 22, 31, 29], survival prediction [38, 33, 14], and similar image retrieval [12, 16, 10]. In digital pathology, a digital scan of the entire pathology specimen, called a WSI, is used as the target image. Because a WSI is usually huge (e.g., 100,000100,000 pixels), it cannot be directly fed into a model. Therefore, image patches extracted from the WSI are often used as the inputs to a model. In pathological diagnosis based on WSIs, it is important to note that WSIs contain both tumor cells and normal cells. Therefore, if there is no annotation of the tumor cell region, it is necessary to first identify the tumor cell region and then make a pathological diagnosis. This problem is a weakly supervised learning problem in the sense that only the WSI is labeled and the tumor cell region is not.

Multiple instance learning (MIL)

MIL is a weakly supervised learning problem in which labels are not given for instances but for a group of instances called a bag. In an MIL formulation of a binary classification problem, it is assumed that a positive bag contains at least one positive instance, whereas a negative bag contains only negative instances. By considering a WSI as a bag and an image patch as an instance, the subtype classification problem can be interpreted as an MIL problem in which class-specific image patches (e.g., tumor patches) are considered positive instances. Several MIL approaches have been developed for digital pathology tasks [15, 25, 18]. Among them, attention-based MIL [15, 9, 17] is particularly useful because the identified attention regions can be interpreted as class-specific image patches.

Attentionand explanation

Although the development of deep learning techniques has dramatically improved the accuracies of many medical image analysis tasks, it is critically important for medical practice to develop techniques that provide explanation of the results. Various visualization methods, such as Grad-CAM [26], have been proposed to interpret and explain the rationale for classification results. Singla et al. [28] proposed a method that visualizes the medical image regions that serve as the basis for the classification of diseases for each concept derived from clinical report analysis. However, most visualization methods visualize the regions that contribute to the classification results after the classifier is applied to given images (rather than using clinical reports for finding and visualizing the image regions that contribute to the classification results, as in our proposed method). The attention-based MIL described above can also be considered as a method to provide explanation of the results because attention can be visualized as an informative region for making decisions. In this study, we employ the Transformer architecture [32] as a basis for estimating the attention region in medical images. Although the Transformer was originally developed for natural language processing (NLP) tasks, it has been demonstrated to be effective for general computer vision tasks [6], including medical image analysis [24, 27, 20, 7]. In particular, the Transformer has been effectively used to aggregate bag features in an MIL setting [27]. The Transformer architecture can encode the relationship among a pair of components in input data, e.g., between two words in the case of NLP tasks, and between two image patches in the case of computer vision tasks. This study was inspired by the use of the Transformer in the context of vision and language [37, 3], where it has been demonstrated that a change in the language token can change the attention of the image token. The Transformer has a mechanism to quantify the relevance of multimodal information in the form of attentions. The encoding mechanism for the relationship of input data can be expanded into multimodal input including image patches and table data, which enables computing attention from an image patch to table data, or attention from table data to an image patch. We expect that encoding the relationship between image patches and clinical factors can provide more appropriate personalized attentions based on the clinical record.

Multimodal learning

In clinical practice, doctors obtain additional information from patients’ clinical records and image diagnoses are performed by considering such clinical factors as prior information. In multimodal analysis, clinical records that include the basic information of patients and some examination results can often be used in addition to digital images [35, 36, 30, 23]. Yala et al. [35] used a combination of mammography images and patient data (basic information, medical history, etc.) and demonstrated that the performance of breast cancer risk prediction could be improved. Multimodal analysis of dermoscopic images and patient data (age, gender, and body location) has also been studied to enhance the accuracy of skin lesions classification and melanoma detection [36].

Multimodal analyses of medical images and clinical records have primarily been performed on radiology images, but recent works have reported that pathological images can also be combined with clinical records to improve the task performance. Li et al. [18] combined tabular clinical data with histological images in an MIL setting, where eighteen attributes, including age, genes, and tumor location, were used as inputs with multiscale histological images. Additional clinical factors have also been used in a mixture-of-experts model as inputs of a gating network [25]. Chen et al. [2] proposed a Transformer-based multimodal model for survival prediction using images and genetic data. Their method could visualize co-attentions between images and genetic information. However, these previous works primarily focused on performance improvement by using multimodal inputs, and detailed effects on attention regions by additional clinical factors have not been reported. The advantage of the proposed PersAM method is that it can provide personalized attention regions according to the clinical records, which mimics the actual pathological practice of human expert pathologists.

3 Proposed Method

This study was conducted to develop an AI system for pathological diagnosis that mimics the actual diagnosis process of human pathologists. When a pathologist makes a diagnosis, they have patient information based on the clinical record, which is used as prior knowledge of the parts of the pathological image on which to focus. We call such an attention region in the early exploratory phase of the diagnosis exploratory attention. Exploratory attention is a class-independent attention that is determined solely by a WSI and a clinical record. Furthermore, after a pathologist has made a diagnosis, they should be able to explain which part of the pathological image they focused on. We call such an attention region in the later explanatory phase of the diagnosis explanatory attention. Explanatory attention is a class-dependent attention that is determined by a WSI, a clinical record, and class information.

Given the WSI and the clinical record of a patient, the proposed PersAM method can identify both exploratory and explanatory attention regions and make a diagnosis based on those identified attention regions. In §3.1, we first introduce a Transformer-based network structure that enables pathological diagnosis based on the two types of attentions. Then, in §3.2, we describe how the network can be used to identify both exploratory and explanatory attention regions to make a pathological diagnosis. The key property of the proposed PersAM method is that both exploratory and explanatory attentions can be adaptively changed according to patient clinical records even if the same WSI is given to the model. This mimics the actual diagnostic process employed by human pathologists, making the AI system highly explainable.

In this section, for ease of notation, when there is no ambiguity, we omit the subscript in referring to the patient.

3.1 Proposed network structure

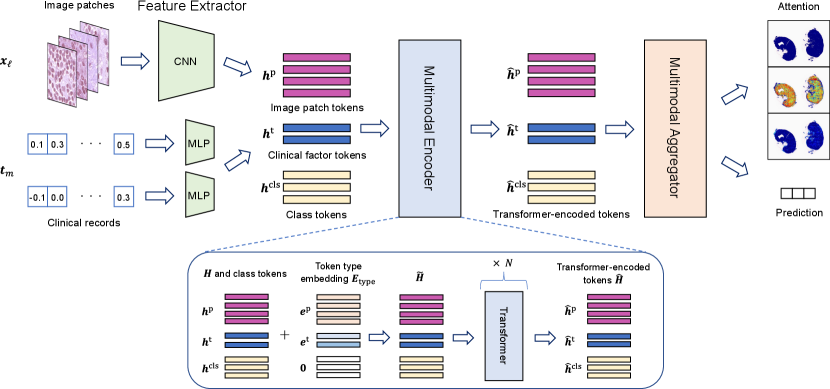

To realize pathological diagnosis based on exploratory and explanatory attentions, as shown in Fig. 2, we propose a new network structure consisting of three components: feature extractor, multimodal encoder, and multimodal aggregator. We describe each of these three components below.

3.1.1 Feature extractor

In this section, to simplify the notations, we describe the MIL setting where the entire WSI is considered as a bag and each of the multiple patches taken from the WSI as an instance. In §4, we consider the MIL setting where a WSI contains multiple bags. See §4 for details. Let be the WSI and be the clinical record of a patient, where is the patch image taken from the WSI , whereas is the clinical factor. The role of the feature extractor is to compute a feature vector for each of the image patches and clinical factors so that input data can be used in the Transformer architecture. For image patches, we employ a convolutional neural network (CNN) : that maps an image patch to a feature vector , where is the dimension of the feature vector. For clinical factors, we employ a simple multi-layer perceptron (MLP) : that maps the vector of the clinical factor into a feature vector that has the same dimension as . We denote the sets of trainable parameters for and as and , respectively. We denote the combined feature vectors as

| (1) |

3.1.2 Multimodal encoder

The multimodal encoder characterizes the relationship between multimodal information. We implement this component using a Transformer. The Transformer was initially developed for NLP tasks, where each feature vector is called a token. In the proposed network structure, in addition to the image patch tokens and clinical factor tokens , we introduce class tokens , which are considered trainable parameters. Let

| (2) |

where is called token type embedding and is used to characterize the type of tokens. Here, the token type embedding is defined as

| (3) |

where denotes a -dimensional zero vector. Each token in is characterized as either of image patch, clinical factor, or class token by this token embedding. Note that the same type token is used for all image patches because the image patches are randomly sampled from WSI. Token type embedding parameters and are considered trainable parameters.

We denote the Transformer as a function , where is the collection of the outputs of the Transformer called Transformer-encoded tokens, denoted as

| (4) |

We denote the set of trainable parameters for the Transformer as

| (5) |

where is the other general parameters in the Transformer. By feeding tokens into the Transformer encoder several times repeatedly, can encode the relationship among image patches, clinical factors, and class tokens. Here each element of the self-attention map for corresponds to the relationship between two tokens, and represents how a token is focused when the other token is given together as input data.

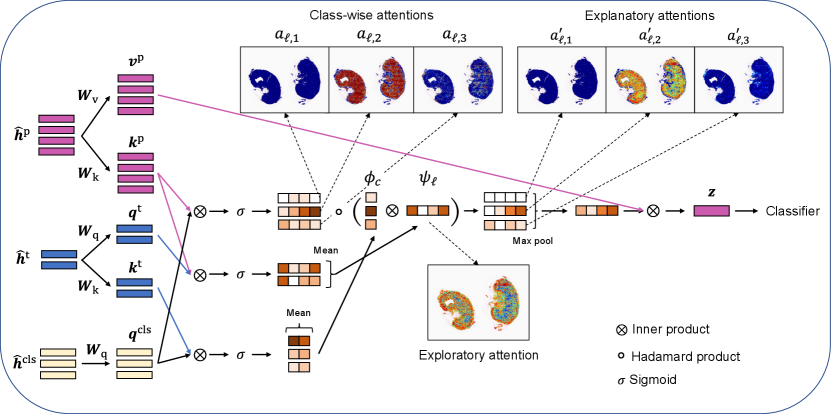

3.1.3 Multimodal aggregator

The multimodal aggregator aggregates the Transformer-encoded tokens for obtaining exploratory/explanatory attentions and subtype classification results. Let

| (6) | |||

| (7) | |||

| (8) |

where and are called queries for class tokens and clinical factor tokens, respectively; and are called keys for image patch tokens and clinical factor tokens, respectively; and is called values for image patch tokens in the context of the Transformer. The matrices are trainable parameters. We denote these three matrices collectively as .

The inner product between a query and a key represents the relevance between the corresponding multimodal information. First, the relevance between each image patch and each class is written as

| (9) |

where is the sigmoid function. Next, the relevance between each image patch and the set of clinical factors is written as

| (10) |

Furthermore, the relevance between each class and the set of clinical factors is written as

| (11) |

The three types of relevance information in (9)–(11) are used to obtain exploratory/explanatory attentions and subtype classification results.

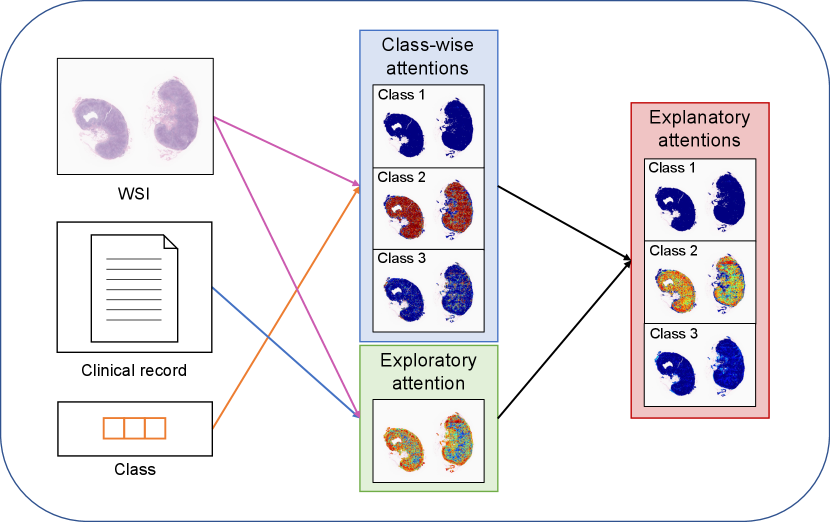

3.2 Exploratory/Explanatory attentions and subtype classifications

Based on the relevance information in (9)–(11), we obtain three types of attentions: class-wise attentions, exploratory attentions, and explanatory attentions. Fig. 3 illustrates the three types of attentions. We call class-wise attentions because they are obtained as the relevance between the image patch and the class token without clinical factors. We regard as exploratory attentions because they are obtained as the relevance between the image patch and the set of clinical factors . Note that the exploratory attentions are class-independent; thus, they can be considered as the attention regions in the WSI in the early exploratory phase of the diagnosis. The explanatory attentions are obtained by combining the class-wise attentions and the exploratory attentions as follows:

| (12) |

It can be interpreted that the explanatory attentions are obtained by filtering the class-wise attentions with the exploratory attentions.

The subtype classification results are obtained based on a linear combination of the aggregate feature vector

| (13) |

where . Then, the class-wise probabilities are obtained by using a neural network (NN) with the softmax operator as follows:

| (14) |

where is a -dimensional vector whose element represents the probability that the subtype of the patient is class and is the set of trainable parameters.

When the network is trained, the loss function consists of two loss components. The first loss component is simply the cross-entropy loss between the true one-hot class vector and the predicted class probability vector . The second loss component is considered to take into account the specific property of the MIL setting, where the bag (WSI) is positive if any of the instances (image patches) is positive. To formulate this specific property, we consider

| (15) |

where is close to one if there exists at least one image patch with an attention value close to one. The second loss component is defined as the binary cross-entropy between , the element , and . This loss function is inspired by the probability aggregation approach studied in [19].

The proposed network contains trainable parameters , , , , and . All the parameters in the model are simultaneously trained by minimizing the following loss function:

| (16) |

The operations performed in the network structure are illustrated in Fig. 4.

4 Experimental Evaluation

In the experiments, we first compared the proposed PersAM MIL with several baseline methods to confirm the improvement of classification performance. Then, the effectiveness of exploratory and explanatory attentions in the proposed method was evaluated.

4.1 Experimental setting

Dataset

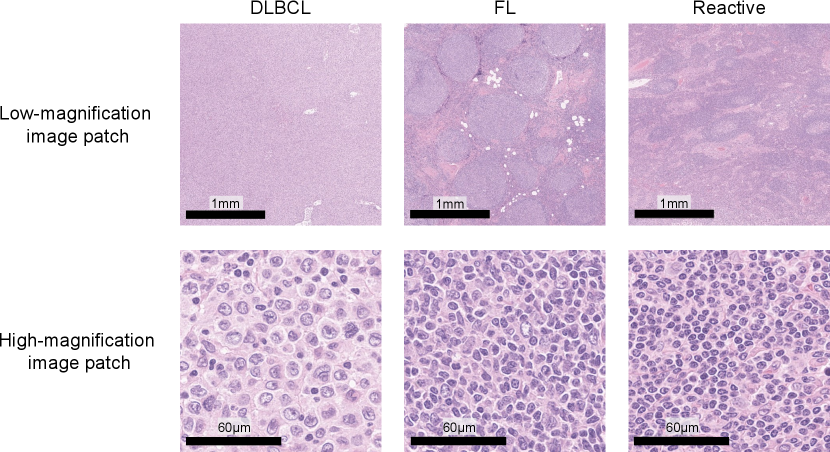

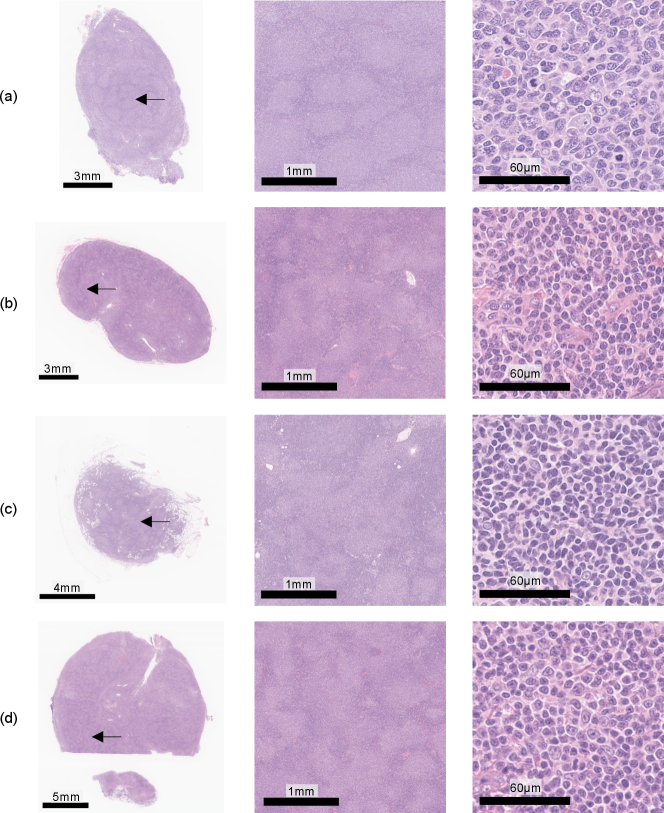

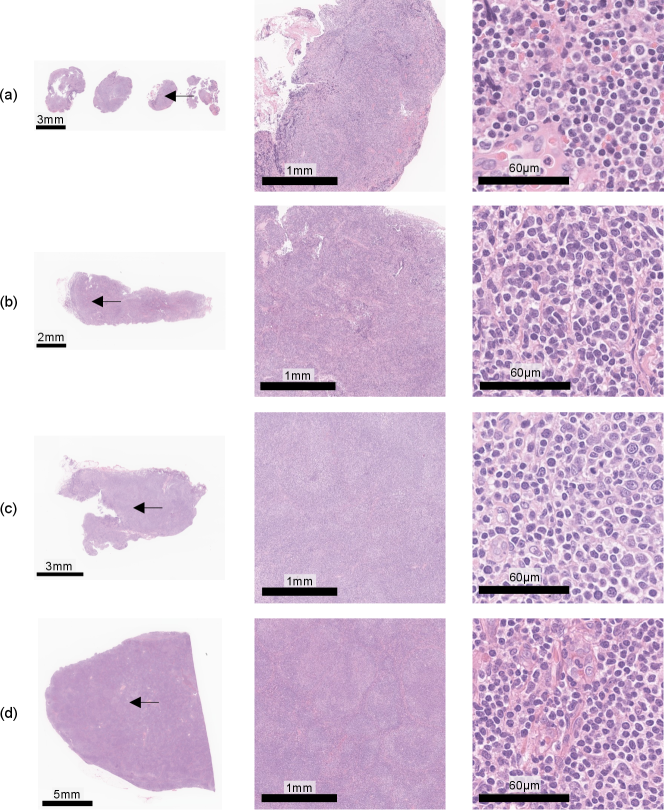

Our database of malignant lymphoma was composed of clinical cases with three subtypes: 277 DLBCL, 270 FL, and 295 reactive lymphoid hyperplasia (Reactive). Figure 5 shows sample image patches for typical DLBCL, FL, and Reactive cases. DLBCL has large tumor cells over a wide region in the tissue specimen, and FL has follicular structures which have tumor cells. In contrast, Reactive is classified as non-lymphoma, which has diverse cell structures but no tumor cells. All the patient data were clinically diagnosed by expert hematopathologists and a WSI of a hematoxylin-and-eosin (H&E)-stained tissue specimen and a clinical record were given for each case. A gigapixel digitized WSI of the entire H&E-stained tissue slide, was used as an input image . All the glass slides were digitized using a WSI scanner (Aperio GT 450; Leica Biosystems, Germany) at 40x magnification (0.26 µm/pixel), where the maximum image size was approximately 100,000100,000 pixels. The OpenSlide [8] software was used for handling WSIs and extracting image patches from . An original clinical record includes the definitive subtype and clinical factors . Note that we cannot use patch-level annotations, and the class label is given only to a WSI , not image patches. The clinical factors consist of 28 elements that are summarized by clinical factors: an 18-dimensional vector with patient basic information and interview results and a 10-dimensional vector with blood test results. The details of items included in each clinical factor are listed in tables 1 and 2.

| Item | Value type | ||||

|---|---|---|---|---|---|

| Age | Nonnegative integer | ||||

|

Binary |

| Item | Value type |

|---|---|

| RBC, WBC, plt, LDH | Amount |

| Stab, seg, eosino, baso, mono, lympho | Percentile |

Implementation details

In the experiment, -pixel image patches were randomly extracted from an entire WSI and 100 image patches were used as a bag due to the amount of computation and memory. The corresponding label was assigned to a bag generated from a WSI , e.g., a bag generated from image patches of a WSI of DLBCL was labeled as DLBCL. A maximum of 30 bags were generated from a single WSI in our experiment. The length of the feature vector was set to inspired by TransMIL [27]. To obtain feature vector of image patches , employed an ResNet50 [11] pre-trained with ImageNet and a two-layer NN that had 1024 hidden units, 512 output units, and ReLU as its activation function, where a 2048-dimensional vector after global average pooling layer in ResNet50 was converted into the 512-dimensional feature vector. Clinical factors were mapped to by a two-layer NN that had 256 hidden units, 512 output units, and ReLU as its activation function, where both 18-dimensional and 10-dimensional clinical factors were converted into 512-dimensional feature vectors. Class tokens were designed as three 512-dimensional vectors, and then we could obtain input data for the Transformer architecture . In (15), to prevent underflow computing, was normalized to . The dataset was divided into training, validation, and testing data in the ratio of 3:1:1, and the models were evaluated via five-fold cross-validation where the model that had the smallest validation loss after third epoch was used for testing.

For the setting on Transformer, the number of layers and heads were set to two and eight, respectively, where dropout rate was set to 0.1. The classifier was an NN that had a hidden layer with 256 units and an output layer with three units to compute the class probability from a 512-dimensional aggregated feature vector .

For stability in the optimization, label smoothing was applied as a regularization technique in calculating the loss function , where the label for a correct class was set to and the labels for incorrect classes were set to . As an optimization method, momentumSGD (nesterov, weight decay=) was employed and the training of the model was performed in nine epochs. Learning rates were determined as for optimizing the parameter , for the parameters , and , and for the parameter in which the learning rate was multiplied by 0.1 every three epochs. Random horizontal flip and random rotations () were applied to the input image patches as the data augmentation. All the parameters of the model were simultaneously optimized in the above setting. It took about 20 hours to perform five-fold cross-validation by a computer with eight Quadro RTX 5000 (NVIDIA, U.S.). Our source code is available from https://github.com/PersAM-MIL/PersAM.

4.2 Subtype classification

We performed the three-class classification experiment using the dataset outlined above.

Baseline methods

The proposed PersAM model was compared with the following baseline models:

-

1.

MLP using clinical factors as input (clinical MLP)

Clinical MLP employs a three-layer NN that has hidden layers with 256 and 512 units and uses a 28-dimensional vector indicating clinical factors as input data. The training of clinical MLP was performed in 500 epochs, where the learning rate was set to without scheduling. -

2.

Attention-based MIL using images [15] (img MIL)

Img MIL employs an attention-based MIL that aggregates 2048-dimensional feature vectors in a bag and predicts the class label from an aggregated feature vector using the classifier with the hidden layer having 1024 units. -

3.

Attention-based MIL using images and clinical factors (img-clinical MIL)

In img-clinical MIL, in addition to a 512-dimensional aggregated feature vector computed from image patches by the attention-based MIL, a 28-dimensional clinical factor is also used as an input for computing the 512-dimensional feature. By concatenating the aggregated feature vector for images in a bag and the computed feature vector for clinical factors, the classifier predicts the class using the 1024-dimensional concatenated feature vector through the hidden layer with 512 units. -

4.

Transformer-based MIL using images (img Transformer)

In img Transformer, only one class token was concatenated to feature vectors for image patches. The classifier predicts the class using the encoded class token (it is a common technique in the Transformer-based classification model). -

5.

Transformer-based MIL using images and clinical factors (img-clinical Transformer)

Similar to img Transformer, img-clinical Transformer uses only one class token, and it is concatenated to feature vectors for image patches and clinical factors. Img-clinical Transformer predicts the class using the encoded class token as an input for the classifier .

The setting for an optimization method and learning rates are the same as above except for clinical MLP.

Results

The classification results are shown in Table 3, where each row shows the mean accuracy and standard error in three-class classification by five-fold cross-validation. The results show that the proposed method achieved the highest accuracy compared to all the baseline methods. In particular, whereas the baseline methods using image and clinical factors showed low accuracy, our proposed method classified the subtype more accurately by properly aggregating image and clinical features through the multimodal aggregator.

| Method | Accuracy |

|---|---|

| Clinical MLP | 0.5795 0.0071 |

| Img MIL | 0.8195 0.0090 |

| Img-clinical MIL | 0.8230 0.0085 |

| Img Transformer | 0.8219 0.0140 |

| Img-clinical Transformer | 0.8147 0.0141 |

| Proposed | 0.8313 0.0149 |

4.3 Attention visualization

Class-wise, exploratory, and explanatory attentions

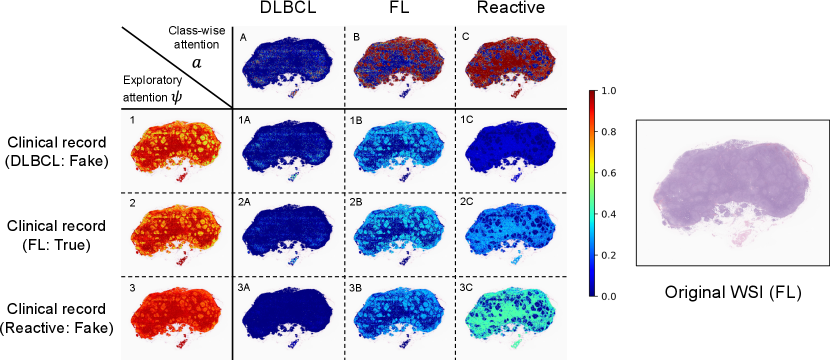

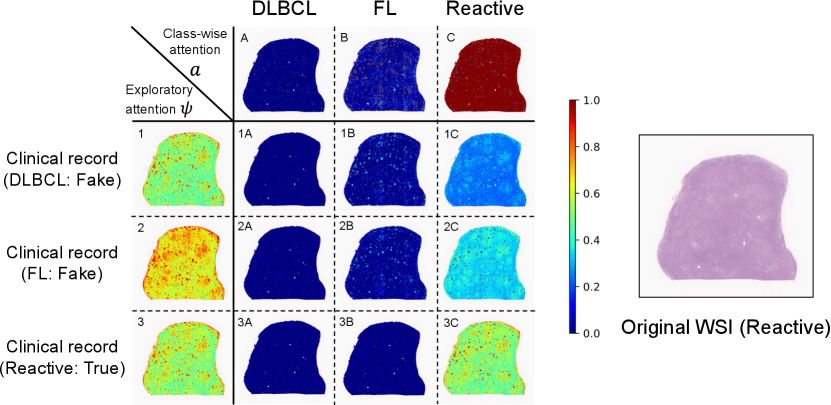

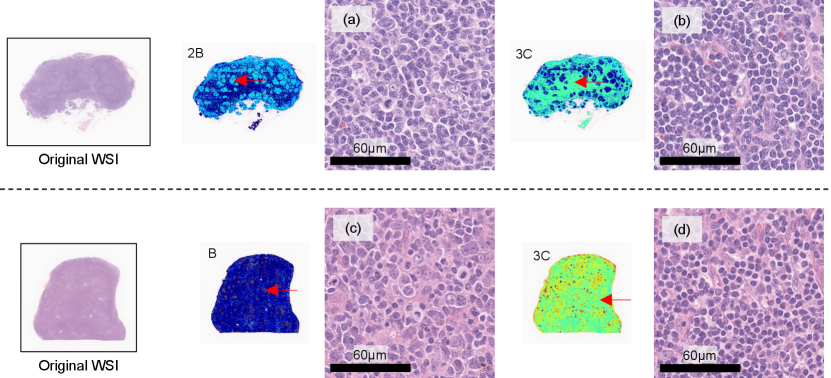

We also performed visualization experiments to demonstrate that the proposed personalized attentions could be adaptively changed according to input clinical records. In the visualization results, attention weights ranging from zero to one were assigned in the range blue to red. Figs. 6 and 7 show the visualization results of class-wise attentions, exploratory attentions, and explanatory attentions, where the images on the right are thumbnails of the original WSIs. In the matrices on the left, the columns show the class-wise attentions , the rows show exploratory attentions , and each element shows explanatory attentions , where clinical records sampled from other cases of three different subtypes were input with the WSI of a patient instead of the original clinical factor of that patient. Fake clinical records were used to confirm that exploratory and explanatory attentions changed when different clinical records were input with the same WSI.

Fig. 6 shows the results for an FL case. It is known that the follicular structure, a subtype-specific region for FL, is important in the diagnosis of FL cases. This case has large follicular structures in the tissue, and we can confirm that exploratory attention , i.e., the follicular region on which focus is placed, changes depending on the clinical records. This case should have some difficulty in the diagnosis using only images, and explanatory attentions change according to the input clinical records.

Fig. 7 shows the result for a Reactive case. It is known that some Reactive cases have a similar appearance to FL cases. This case also has small follicular structures in the tissue, which is similar to FL, and exploratory attentions are enhanced in those regions. Class-wise attentions for FL focus on the follicular regions and those for Reactive focus on the outside follicular regions. Detailed discussions for these results will be done with magnified image patches later.

The visualization results whose attentions were changed are observed for parts of the dataset, and not all cases changed their attentions depending on input clinical records. From the above results, it is expected that the changes of attentions are caused by whether the input WSI is pathologically typical or not; a case whose disease can clearly be determined only from a WSI does not change its attentions if a different clinical record is input together, and on the other hand, a case whose WSI has ambiguous features to identify the subtype has the possibility of changes of attentions depending on the input clinical record. To qualitatively confirm this, an expert hematopathologist (one of the authors, who is an institution member with over 15 years of experience diagnosing more than 10,000 cases of lymphoma) investigated whether each case was typical or not in both cases whose attention were changed and not. We targeted FL cases to easily interpret the observation results. The pathologist observed many WSIs of FL cases that changed attentions and did not change attentions when the different (fake) clinical records were input and evaluated their typicalness of FL with blind whether the attentions of each case changed or not. A case that was determined as FL only from a WSI was evaluated as a typical FL case, and a case that cannot be determined as FL only from a WSI and requires immunohistochemical (IHC) stains was evaluated as an atypical case. The results are discussed in §4.4.

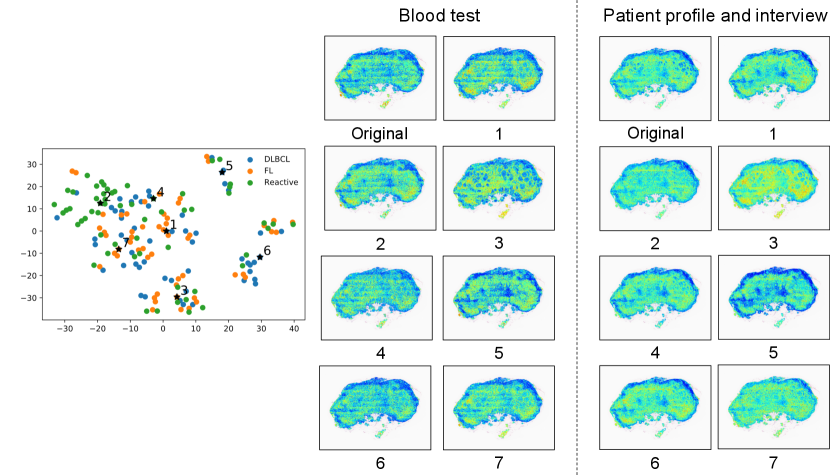

Clinical-record-to-patch attentions

We call self-attentions in the bag representation which indicates attentions from each clinical factor to image patches “clinical-record-to-patch attentions”. As an additional experiment, we visualized how clinical-record-to-patch attentions changed according to input clinical factors. Fig. 8 shows the visualization result of clinical-record-to-patch attentions for an FL case, where the clinical factor of an original (real) case is replaced with the those of representative (fake) case in the clustering results. Fake cases were used to confirm that clinical-record-to-patch attentions changed when different clinical records were input with the same WSI similarly to Figs. 6 and 7.

In the clustering, -medoids method was applied to two-dimensional t-SNE embedded features that were calculated from 28-dimensional clinical factors. We determined the number of clusters by looking at t-SNE embedded features and set to . Instead of the original clinical factors, the clinical factors of the representative cases in each cluster were input with a WSI of the original case into the PersAM model. The plots on the left represent the embedded clinical factors, in which are the representative cases in each cluster. The images on the middle and right are the visualization results of clinical-record-to-patch attentions corresponding to blood test and interview, respectively. Attention weights of image patches are normalized in each case for visualization.

We can confirm that the proposed PersAM could adaptively change clinical-record-to-patch attentions depending on the clinical records that were input with an original WSI. The visualization results for the representative case 1, 2, 4, and 7 are similar to each other because the embedded clinical factors were located close, but the result for case 3 focuses on the follicular structures and the result for case 5 focuses on the outside follicular structures. We confirmed that clinical-record-to-patch attention also changed depending on clinical factors by effectively encoding the relationship between image patches and clinical factors.

4.4 Pathological viewpoint on attention

Here we discuss the detailed results for the experiment of attention visualization with the expert hematopathologist’s comments. For Fig. 6, the hematopathologist made a comment on this result that the change in the explanatory attentions in this case is reasonable because pathologists need to focus more on the follicular regions to identify FL cases (Fig. 9(a)) and on the outside follicular regions to identify Reactive cases (Fig. 9(b)). In general, as mentioned above, follicular region is important to identify FL cases, but the case of Fig. 6 has a lot outside follicular regions compared to typical FL cases.When a case has a large part of such outside follicular regions in the WSI, it is expected that the classification model focuses on outside follicular regions when the clinical factor of Reactive cases was input with the WSI.

For Fig. 7, the hematopathologist made a comment on this result that the change in the explanatory attentions in this case is also reasonable because the model focuses less on the follicular structure to identify Reactive cases. This case has follicular regions as shown in Fig. 9(c), where histological features of the entire tissue specimen were not typical Reactive case. In such cases, the pathologist can not identify Reactive case only from a WSI with confidence even if the outside follicular regions has typical features of Reactive cases (Fig. 9(d)).

Furthermore, we discuss the results of the investigation of typicalness with magnified images in Figs. 10 and 11. Figure 10 shows cases, which were evaluated as typical FL cases by the pathologist, in the cases where attentions did not change regardless of input clinical records. All these cases were evaluated as typical FL cases since follicular regions exist in the entire tissue specimens, which enables pathologists to identify them as FL cases only from WSIs. Figure 11 shows cases, which were evaluated as atypical FL cases by the pathologist, in the cases where attentions changed depending on input clinical records. Most cases have less follicular regions in low magnification and the pathologist can not determine them as FL cases due to the lack of definitive FL features. In Fig. 11(d), there are a lot of nodes in the tissue specimen, and the pathologist expects other diseases and has to require IHC stains. The proposed PersAM method can provide a reasonable explanation that is similar to pathologists’ decision-making where the subtype of typical cases can be identified only from tissue specimens regardless of clinical records and attention regions of atypical cases are affected by clinical records.

5 Conclusion

In this study, to develop an AI system that mimics the diagnosis process of human pathologists, we proposed the PersAM method, which adaptively changes the attention regions according to patient clinical records. Our proposed method provided three types of attention regions, which were calculated considering the relationship among multimodal information. The results of experiments conducted with 842 malignant lymphoma cases verify the effectiveness of the PersAM method.

References

- [1] B. E. Bejnordi, M. Veta, P. J. Van Diest, B. Van Ginneken, N. Karssemeijer, G. Litjens, J. A. Van Der Laak, M. Hermsen, Q. F. Manson, M. Balkenhol, et al. Diagnostic assessment of deep learning algorithms for detection of lymph node metastases in women with breast cancer. Jama, 318(22):2199–2210, 2017.

- [2] R. J. Chen, M. Y. Lu, W.-H. Weng, T. Y. Chen, D. F. Williamson, T. Manz, M. Shady, and F. Mahmood. Multimodal co-attention transformer for survival prediction in gigapixel whole slide images. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 4015–4025, 2021.

- [3] Y.-C. Chen, L. Li, L. Yu, A. El Kholy, F. Ahmed, Z. Gan, Y. Cheng, and J. Liu. Uniter: Universal image-text representation learning. In European conference on computer vision, pages 104–120. Springer, 2020.

- [4] D. C. Cireşan, A. Giusti, L. M. Gambardella, and J. Schmidhuber. Mitosis detection in breast cancer histology images with deep neural networks. In International Conference on Medical Image Computing and Computer-assisted Intervention, pages 411–418. Springer, 2013.

- [5] A. Cruz-Roa, A. Basavanhally, F. González, H. Gilmore, M. Feldman, S. Ganesan, N. Shih, J. Tomaszewski, and A. Madabhushi. Automatic detection of invasive ductal carcinoma in whole slide images with convolutional neural networks. In Medical Imaging 2014: Digital Pathology, volume 9041, page 904103. International Society for Optics and Photonics, 2014.

- [6] A. Dosovitskiy, L. Beyer, A. Kolesnikov, D. Weissenborn, X. Zhai, T. Unterthiner, M. Dehghani, M. Minderer, G. Heigold, S. Gelly, et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv preprint arXiv:2010.11929, 2020.

- [7] Z. Gao, B. Hong, X. Zhang, Y. Li, C. Jia, J. Wu, C. Wang, D. Meng, and C. Li. Instance-based vision transformer for subtyping of papillary renal cell carcinoma in histopathological image. In International Conference on Medical Image Computing and Computer-Assisted Intervention, pages 299–308. Springer, 2021.

- [8] A. Goode, B. Gilbert, J. Harkes, D. Jukic, and M. Satyanarayanan. Openslide: A vendor-neutral software foundation for digital pathology. Journal of pathology informatics, 4, 2013.

- [9] N. Hashimoto, D. Fukushima, R. Koga, Y. Takagi, K. Ko, K. Kohno, M. Nakaguro, S. Nakamura, H. Hontani, and I. Takeuchi. Multi-scale domain-adversarial multiple-instance cnn for cancer subtype classification with unannotated histopathological images. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pages 3852–3861, 2020.

- [10] N. Hashimoto, Y. Takagi, H. Masuda, H. Miyoshi, K. Kohno, M. Nagaishi, K. Sato, M. Takeuchi, T. Furuta, K. Kawamoto, et al. Case-based similar image retrieval for weakly annotated large histopathological images of malignant lymphoma using deep metric learning. arXiv preprint arXiv:2107.03602, 2021.

- [11] K. He, X. Zhang, S. Ren, and J. Sun. Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 770–778, 2016.

- [12] N. Hegde, J. D. Hipp, Y. Liu, M. Emmert-Buck, E. Reif, D. Smilkov, M. Terry, C. J. Cai, M. B. Amin, C. H. Mermel, et al. Similar image search for histopathology: Smily. NPJ digital medicine, 2(1):1–9, 2019.

- [13] L. Hou, D. Samaras, T. M. Kurc, Y. Gao, J. E. Davis, and J. H. Saltz. Patch-based convolutional neural network for whole slide tissue image classification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 2424–2433, 2016.

- [14] Z. Huang, H. Chai, R. Wang, H. Wang, Y. Yang, and H. Wu. Integration of patch features through self-supervised learning and transformer for survival analysis on whole slide images. In International Conference on Medical Image Computing and Computer-Assisted Intervention, pages 561–570. Springer, 2021.

- [15] M. Ilse, J. Tomczak, and M. Welling. Attention-based deep multiple instance learning. In International conference on machine learning, pages 2127–2136. PMLR, 2018.

- [16] S. Kalra, H. R. Tizhoosh, C. Choi, S. Shah, P. Diamandis, C. J. Campbell, and L. Pantanowitz. Yottixel–an image search engine for large archives of histopathology whole slide images. Medical Image Analysis, 65:101757, 2020.

- [17] B. Li, Y. Li, and K. W. Eliceiri. Dual-stream multiple instance learning network for whole slide image classification with self-supervised contrastive learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 14318–14328, 2021.

- [18] H. Li, F. Yang, X. Xing, Y. Zhao, J. Zhang, Y. Liu, M. Han, J. Huang, L. Wang, and J. Yao. Multi-modal multi-instance learning using weakly correlated histopathological images and tabular clinical information. In International Conference on Medical Image Computing and Computer-Assisted Intervention, pages 529–539. Springer, 2021.

- [19] Z. Li, C. Wang, M. Han, Y. Xue, W. Wei, L.-J. Li, and L. Fei-Fei. Thoracic disease identification and localization with limited supervision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 8290–8299, 2018.

- [20] M. Lu, Y. Pan, D. Nie, F. Liu, F. Shi, Y. Xia, and D. Shen. Smile: Sparse-attention based multiple instance contrastive learning for glioma sub-type classification using pathological images. In MICCAI Workshop on Computational Pathology, pages 159–169. PMLR, 2021.

- [21] H. S. Mousavi, V. Monga, G. Rao, and A. U. Rao. Automated discrimination of lower and higher grade gliomas based on histopathological image analysis. Journal of pathology informatics, 6, 2015.

- [22] P. Naylor, M. Laé, F. Reyal, and T. Walter. Segmentation of nuclei in histopathology images by deep regression of the distance map. IEEE transactions on medical imaging, 38(2):448–459, 2018.

- [23] D. Nie, J. Lu, H. Zhang, E. Adeli, J. Wang, Z. Yu, L. Liu, Q. Wang, J. Wu, and D. Shen. Multi-channel 3d deep feature learning for survival time prediction of brain tumor patients using multi-modal neuroimages. Scientific reports, 9(1):1–14, 2019.

- [24] D. Rymarczyk, A. Borowa, J. Tabor, and B. Zielinski. Kernel self-attention for weakly-supervised image classification using deep multiple instance learning. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, pages 1721–1730, 2021.

- [25] M. Sahasrabudhe, P. Sujobert, E. I. Zacharaki, E. Maurin, B. Grange, L. Jallades, N. Paragios, and M. Vakalopoulou. Deep multi-instance learning using multi-modal data for diagnosis of lymphocytosis. IEEE Journal of Biomedical and Health Informatics, 25(6):2125–2136, 2020.

- [26] R. R. Selvaraju, M. Cogswell, A. Das, R. Vedantam, D. Parikh, and D. Batra. Grad-cam: Visual explanations from deep networks via gradient-based localization. In Proceedings of the IEEE international conference on computer vision, pages 618–626, 2017.

- [27] Z. Shao, H. Bian, Y. Chen, Y. Wang, J. Zhang, X. Ji, and Y. Zhang. Transmil: Transformer based correlated multiple instance learning for whole slide image classication. arXiv preprint arXiv:2106.00908, 2021.

- [28] S. Singla, S. Wallace, S. Triantafillou, and K. Batmanghelich. Using causal analysis for conceptual deep learning explanation. In International Conference on Medical Image Computing and Computer-Assisted Intervention, pages 519–528. Springer, 2021.

- [29] K. Tanizaki, N. Hashimoto, Y. Inatsu, H. Hontani, and I. Takeuchi. Computing valid p-values for image segmentation by selective inference. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 9553–9562, 2020.

- [30] K.-H. Thung, P.-T. Yap, and D. Shen. Multi-stage diagnosis of alzheimer’s disease with incomplete multimodal data via multi-task deep learning. In Deep learning in medical image analysis and multimodal learning for clinical decision support, pages 160–168. Springer, 2017.

- [31] H. Tokunaga, Y. Teramoto, A. Yoshizawa, and R. Bise. Adaptive weighting multi-field-of-view cnn for semantic segmentation in pathology. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 12597–12606, 2019.

- [32] A. Vaswani, N. Shazeer, N. Parmar, J. Uszkoreit, L. Jones, A. N. Gomez, Ł. Kaiser, and I. Polosukhin. Attention is all you need. In Advances in neural information processing systems, pages 5998–6008, 2017.

- [33] E. Wulczyn, D. F. Steiner, Z. Xu, A. Sadhwani, H. Wang, I. Flament-Auvigne, C. H. Mermel, P.-H. C. Chen, Y. Liu, and M. C. Stumpe. Deep learning-based survival prediction for multiple cancer types using histopathology images. PLoS One, 15(6):e0233678, 2020.

- [34] Y. Xu, Z. Jia, Y. Ai, F. Zhang, M. Lai, I. Eric, and C. Chang. Deep convolutional activation features for large scale brain tumor histopathology image classification and segmentation. In 2015 IEEE international conference on acoustics, speech and signal processing (ICASSP), pages 947–951. IEEE, 2015.

- [35] A. Yala, C. Lehman, T. Schuster, T. Portnoi, and R. Barzilay. A deep learning mammography-based model for improved breast cancer risk prediction. Radiology, 292(1):60–66, 2019.

- [36] J. Yap, W. Yolland, and P. Tschandl. Multimodal skin lesion classification using deep learning. Experimental dermatology, 27(11):1261–1267, 2018.

- [37] Z. Yu, Y. Cui, J. Yu, D. Tao, and Q. Tian. Multimodal unified attention networks for vision-and-language interactions. arXiv preprint arXiv:1908.04107, 2019.

- [38] X. Zhu, J. Yao, F. Zhu, and J. Huang. Wsisa: Making survival prediction from whole slide histopathological images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 7234–7242, 2017.