TSGN: Transaction Subgraph Networks Assisting Phishing Detection in Ethereum

Abstract

Due to the decentralized and public nature of the Blockchain ecosystem, malicious activities on the Ethereum platform cause immeasurable losses for users. Existing phishing scam detection methods mostly rely only on analysis of the original transaction networks, which makes it difficult to dig deeply into the transaction patterns hidden in the network structure of transaction interactions. In this paper, we propose a Transaction SubGraph Network (TSGN) based framework for identifying phishing accounts on Ethereum. We first extract transaction subgraphs for target accounts and then expand these subgraphs into corresponding TSGNs based on different mapping mechanisms. To incorporate more information about real transactions, we encode transaction attributes into the TSGN modeling process, yielding two variants, Directed-TSGN and Temporal-TSGN, which can be applied to different attributed networks. In particular, by introducing TSGN into multi-edge transaction networks, the proposed Multiple-TSGN model preserves temporal transaction flow information and captures the significant topological patterns of phishing scams, while reducing the time complexity of modeling large-scale networks. Extensive experimental results show that, combined with graph representation learning, TSGN models provide additional information that improves phishing detection performance.

Index Terms:

Ethereum, Phishing identification, Subgraph network, Network representation, Graph classification.

I Introduction

Through consensus mechanisms and voluntary respect for the social contract, the internet can be used to build a decentralised value-transfer system that can be shared around the world and is virtually free to use [1]. Ethereum [2], a cryptographically secure transaction system, offers information that has never before been available in the financial world. In contrast to the fiat currencies of the traditional financial world, transactions in virtual currencies such as Ether are completely transparent. Accounts can freely and conveniently exchange currency and information without relying on traditional third parties, and these cryptocurrency transactions are permanently recorded on the Blockchain and available at any time.

There is no doubt that the Ethereum platform facilitates transactions between consenting individuals who trust each other. However, because of its anonymity and lack of supervision, the cryptocurrency market provided by Ethereum is inevitably flooded with a variety of cybercrimes, including smart Ponzi schemes, phishing, money laundering, fraud, and other criminal activities. The overall losses caused by DeFi exploits on Ethereum, the backbone of many DeFi applications, have totaled $12 billion so far in 2021 according to Elliptic (https://blockchaingroup.io/), a firm which tracks movements of funds on the digital ledgers that underpin cryptocurrencies. Moreover, it is reported that phishing scams break out periodically and are the most deceptive form of fraud [3]. In general, phishing is a kind of cybercrime that aims to exploit the weaknesses of users and obtain personal and confidential information [4]. Some scams lure investors into visiting fake projects or clicking fake websites by offering extra Ether as enticement, and into buying digital assets from the scammers. Although the hash mechanism inside the blockchain can prevent transactions from being tampered with, there are so far no internal tools that can detect illegal accounts and suspicious transactions on the network. Cybercrimes, especially phishing scams, have therefore become a critical issue on Ethereum and deserve long-term attention and research to maintain a more secure blockchain ecosystem.

Existing methods of phishing detection on Ethereum mainly fall into two categories: those that propose elaborate handcrafted features [5, 6, 7] and those that design automatic feature learning models [8, 9, 10]. These studies attempt to learn more useful information from the raw transaction data to reveal transaction patterns. They are effective for phishing detection but have several important omissions. First, transaction networks constructed by sampling the original Ethereum transaction data may miss structural information, which can affect the performance of learning models. This forces researchers to design more precise and data-targeted representation methods to improve the results, but at the expense of the generalization performance of models on datasets of different attributes and scales. Second, the above models are designed only for learning from the original networks, ignoring the potential transaction flow patterns provided by the interaction of transactions.

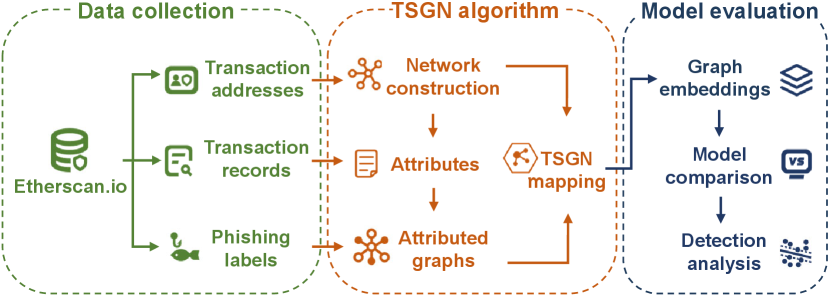

In this paper, inspired by the SGN model [11], we develop a network model called TSGN to complement the features of the original networks and further assist in identifying phishing accounts. According to the essential attributes contained in the transactions, we design specific structural mapping strategies to encode the weight, direction, and timestamp information into transaction subgraph networks. The transaction network is then mapped into the transaction subgraph structure space according to the different strategies to obtain the corresponding TSGN and its variants for the subsequent feature extraction and detection task. The algorithm framework is shown in Fig. 1. Specifically, our contributions can be summarized as follows:

- We propose a novel transaction network model, transaction subgraph networks (TSGNs). For original transaction networks (TNs) of various attributes and scales, TSGNs introduce different network mapping strategies to capture latent structural and topological information that cannot easily be obtained from raw transaction networks, benefiting subsequent phishing detection algorithms.

- We build the first variant of the TSGN model, Directed-TSGN, which integrates the direction attribute to effectively characterize transaction flow information in the original transaction data. We further introduce timestamp information into Directed-TSGN, producing Temporal-TSGN, which can precisely learn time-aware transaction behaviors and is applied to multi-edge transaction networks.

- Experimental results demonstrate the effectiveness of the TSGN models when integrated with various phishing detection models such as handcrafted features, Graph2Vec, DeepKernel, Diffpool, and U2GNN. In particular, the classification result of Temporal-TSGN increases to 98.43% (97.00% for Directed-TSGN) when only Diffpool is considered, greatly improving phishing detection performance. Moreover, compared with TSGN, the variants of TSGN have a controllable scale and a lower time cost.

The rest of the paper is structured as follows. In Section II, we briefly describe phishing identification and graph representation methods. In Section III, we introduce the definitions and construction methods of transaction subgraph networks. In Section IV, we give several feature extraction methods, which together with TN, TSGN, Directed-TSGN, and Temporal-TSGN are applied to six Ethereum transaction network datasets. Finally, we conclude our paper in Section V. For further study, our source code is available online (https://github.com/GalateaWang/TSGN-master).

II Background and Related Work

In this section, we provide some necessary background information on phishing detection methods and graph representation algorithms for blockchain data mining.

II-A Phishing Identification

To create a good investment environment in the Ethereum ecosystem, many researchers have devoted considerable attention to effective detection methods for phishing scams. Wu et al. [9] proposed an approach to detect phishing scams on Ethereum by mining its transaction records. By considering the transaction amount and timestamp, they introduced a novel network embedding algorithm called trans2vec to extract the features of the addresses for subsequent phishing identification. Chen et al. [6] proposed a detection method based on a Graph Convolutional Network (GCN) and an autoencoder to precisely distinguish phishing accounts. Li et al. [12] introduced a self-supervised incremental deep graph learning model based on Graph Neural Networks (GNNs) for the phishing scam detection problem on Ethereum. The methods mentioned above mainly formulate phishing account detection as a node classification task, which cannot capture global structural features of phishing accounts. Yuan et al. [13] formulated phishing identification as a graph classification task, using line graphs to enhance the Graph2Vec method, and achieved good performance. However, Yuan et al. only consider the structural features obtained from line graphs, ignoring the temporal information of transactions, which plays a significant role in phishing scam detection. Phishing funds mostly flow from multiple accounts to a specific account. Our method takes the direction and temporal information into consideration and builds the Directed-TSGN and Temporal-TSGN models, revealing the topological pattern of phishing scams.

II-B Graph Representation on Blockchain

Graphs are a general language for describing and analyzing entities with relations or interactions. Due to its unique structural characteristics, the Blockchain ecosystem is naturally modeled as transaction networks for related research. Accordingly, many graph representation methods are applied to capture the dependency relationships between objects in Blockchain network structures. Existing representation methods fall into two main categories: the former mostly employs machine learning models with dedicated feature engineering [5, 6, 7], and the latter adopts embedding techniques and deep learning models such as walk-based [9] and GNN methods. For example, Hu et al. [8] presented a deep learning scam detection framework that combines a Gated Recurrent Unit (GRU) network with an attention mechanism, which produced good results on both Ponzi and Honeypot scams. Alarab et al. [10] adopted GCNs intertwined with linear layers to predict illicit transactions in the Bitcoin transaction graph, and this method outperforms the graph convolutional methods used in the original paper on the same data. Liu et al. [14] introduced an identity inference approach based on big graph analytics and learning, aiming to infer the identity of Blockchain addresses using a graph learning technique based on GCNs. Zhang et al. [15] constructed a graph to represent both the syntactic and semantic structures of an Ethereum smart contract function and introduced a GNN for smart contract vulnerability detection.

The above works show that graph representation methods, especially GNNs, can indeed be used to study Blockchain data and perform well in many different applications. However, the existing studies have two potential problems. First, these studies are mostly based on a large-scale transaction network, where the scarcity of labels and the huge volume of transactions make it difficult to take advantage of GNN methods. Second, such methods largely rely on the original transaction network and thus may overlook the potential of transaction interactions, which contain important hidden transaction patterns. In this work, we collect the 1-hop neighborhood information to construct the subgraph for each target address and use the features of the entire subgraph as the representation of the target node, to avoid inadequate graph feature learning. We further obtain the TSGNs and their three variants by structural space mapping, capturing potential transaction patterns from various aspects.

III Methodology

We first give the description of problem formulation of phishing identification and then illustrate the construction details of the transaction subgraph network models by introducing several definitions.

III-A Problem Formulation

Generally, a cryptocurrency transaction network is modeled as an address interaction graph, illustrating the transferring amount and the flow direction of fund between different accounts in a certain time bucket. Given a set of addresses on Ethereum, we can construct transaction network as a directed graph , where each node indicates an address that can be an Externally Owned Account (EOA) or Contract Account (CA), the edge set is represented as a quad, , where () indicates the direction of the transaction (e.g., assets transfer or smart contract invocation) from to , weight represents the amount of transferred cryptocurrencies or is 0 if the kind of transaction is smart contract invocation, and is the timestamp of the transaction.
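As a minimal sketch of the data model described above, each transaction can be stored as a quad of source address, destination address, amount, and timestamp. The names `Tx` and `build_transaction_graph`, the field names, and the sample addresses are hypothetical and chosen only for illustration; they are not taken from the paper or its released code.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Tx:
    """One directed transaction edge, stored as a quad (hypothetical field names)."""
    src: str        # sending address (EOA or CA)
    dst: str        # receiving address (EOA or CA)
    weight: float   # transferred amount; 0 for a smart contract invocation
    timestamp: int  # time of the transaction


def build_transaction_graph(txs):
    """Return the address set and, for each source address, its outgoing transactions."""
    nodes = set()
    out_edges = defaultdict(list)
    for tx in txs:
        nodes.update((tx.src, tx.dst))
        out_edges[tx.src].append(tx)
    return nodes, dict(out_edges)


# Illustrative data only.
txs = [
    Tx("0xA", "0xB", 1.5, 100),
    Tx("0xB", "0xC", 1.2, 130),
    Tx("0xA", "0xC", 0.0, 150),  # contract invocation, so the weight is 0
]
nodes, out_edges = build_transaction_graph(txs)
```

Keeping every transaction as its own record, rather than collapsing parallel edges, leaves the weight, direction, and timestamp attributes available to whichever mapping strategy is applied later.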

Here, we construct a set of transaction graphs for the center addresses , where is the label of address and its corresponding transaction subgraph , where is the label set of all target addresses. In this work, our purpose is to learn a mapping function which can predict the labels of graphs in . The label set includes phishing labels and non-phishing labels in the scenario of Ethereum phishing account identification.

To keep the mathematical formulas consistent, TABLE I lists the notations used in the process of TSGN modeling.

| Notation | Description |
|---|---|
| | The original Ethereum transaction graph |
| | The TSGN graph |
| | The Directed-TSGN graph |
| | The Temporal-TSGN graph |
| | The generic notation of TSGN graph with an attribute |
| | The account nodes in graph |
| | A transaction edge in graph |
| | The directed transaction edge in graph |
| | The temporal directed transaction edge in graph |
| | The timestamp attached on the edge |
| | The edge weights set by the transaction amount |
| | The node sets |
| | The edge sets |
| | The edge weight sets |
| | The weight mapping function |
| | The structural mapping functions |
| | The function for getting the attributed object |
| | The set of graphs |
| | The label of the graph and the label set |

III-B Transaction Subgraph Networks

In this section, we introduce the details of our transaction subgraph network model. First, we give the definitions of the transaction subgraph network (TSGN), the directed transaction subgraph network (Directed-TSGN), and the temporal transaction subgraph network (Temporal-TSGN). We then elaborate on the construction methods of the TSGN, Directed-TSGN, and Temporal-TSGN, respectively.

Definition 1 (TSGN).

Given a transaction graph where indicates a transaction edge with the weight , the TSGN, denoted by , is a mapping from to , with the node and edge sets denoted by and , . The transaction subgraphs and will be connected if they share the common addresses in the transaction graph .

According to the Definition 1, we can find that TSGN is the variant of SGN model [11] for the background of Ethereum transaction ecosystem. Different from SGN model, TSGN adds a network weight mapping mechanism , which can retain the transaction amount information in the original transaction network for the downstream behaviour analysis task. The edge weights of TSGN can be calculated specifically by,

| (1) |

where is the mapped weights, and are the original weights. Note that transaction amount is set to 0 when the account interaction relationship is the invocation of contract accounts. Here, TSGN is a classical model which can be applied for any simple Ethereum account interaction network. Considering the complex transaction direction information, we propose the variant named Directed-TSGN.

Definition 2 (Directed-TSGN).

Given a transaction digraph = , where indicates a directed transaction edge, the Directed-TSGN, denoted by , is a mapping from to , with the node and edge sets denoted by . There will be a directed interaction edge between transaction subgraphs and when they meet the following conditions:

- transaction subgraphs and share the common addresses in the weighted directed transaction graph ;

- the source of (or ) is the destination of (or ), which can form a 2-hop path with the same direction.

As shown in Definition 2, Directed-TSGN, built on the TSGN model, introduces direction information into the mapping mechanism, which can capture the path of transaction behavior. The weight can be obtained by Eq. (1). In addition, Ethereum data is dynamic and grows larger and more complicated over time, which makes it challenging to analyze transaction behaviors with graph mining algorithms. Moreover, phishing behaviors generally have a strong time-aware property. These challenges call for a novel graph mapping approach that is suitable for the analysis of time-varying and dense networks. Hence, we further propose Temporal-TSGN.

Definition 3 (Temporal-TSGN).

Given a temporal transaction digraph = , where indicates a directed transaction edge with the timestamp , the Temporal-TSGN, denoted by , is a mapping from to , with the node and edge sets denoted by and . A directed edge will be built between two temporal directed transaction subgraphs and when they meet the stricter conditions: In the original temporal transaction graph ,

- and share the common addresses in the original directed transaction graph ;

- the source of (or ) is the destination of (or ), which can form a 2-hop path with the same direction;

- the timestamp (or ) makes () (or ()) present a sequential transaction flow.

Clearly, the Temporal-TSGN defined in Definition 3 is a substructure obtained by filtering the nodes and edges of Directed-TSGN according to the time information, i.e., . By attaching the time attribute to the directed transaction subgraphs (edges), Temporal-TSGN can capture neighbor transaction flow information for each target account address while reducing the scale of the network. The weight value can also be calculated by Eq. (1). Next, we focus on the specific construction methods for the TSGN, Directed-TSGN, and Temporal-TSGN.

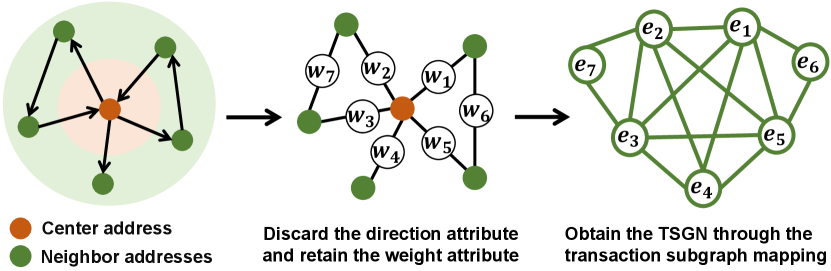

III-C Construction of TSGN

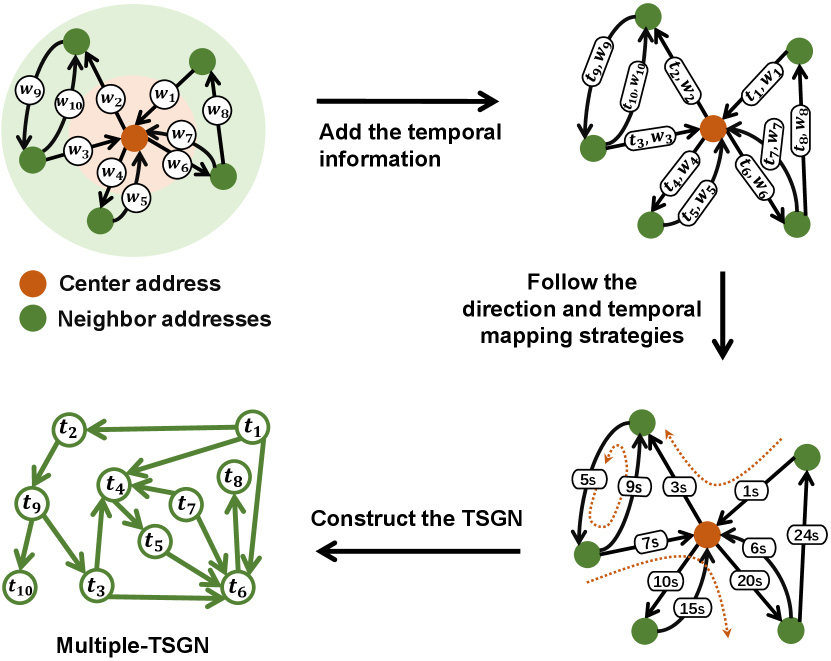

Fig. 2 shows the process of constructing TSGN. Given an original transaction network composed of a center address and its neighbor addresses, we can firstly get a plain transaction network with weight values by discarding the direction attribute and retaining the weight attribute. And then, this network is mapped into TSGN structural space according to the rule indicated in the Definition 1.

Specifically, the edges in the undirected transaction network are mapped to the nodes of the TSGN, and new edges are then built between nodes , , , , because the corresponding edges of the undirected transaction network share the common center node. The transaction subgraph mapping strategy of TSGN also works when there are connections between neighbor addresses. The transactions and are mapped into the TSGN to acquire the neighbor trading pattern information for the center address. Pseudocode for constructing the TSGN is given in Algorithm 1. The input of this algorithm is the original transaction network (,) and the output is the constructed TSGN, denoted by (,), where and represent the sets of nodes and edges in the TSGN, respectively. Here, we choose the weight mapping function introduced in Section III-B. Of course, different weight mapping functions can be defined as required.
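The mapping rule of Definition 1 can be sketched in a few lines: every original edge becomes a TSGN node, and two TSGN nodes are linked when their original edges share an address. This is an illustrative sketch, not the paper's Algorithm 1. The function name `build_tsgn` is hypothetical, and the default `weight_map` (a plain sum of the two original weights) is an assumption standing in for the weight mapping function of Eq. (1), which may differ.

```python
from itertools import combinations


def build_tsgn(edges, weight_map=lambda w1, w2: w1 + w2):
    """Map an undirected weighted transaction network to a TSGN.

    edges: dict {(u, v): weight}, one entry per undirected transaction edge.
    weight_map: assumed stand-in for the weight mapping function (sum by default).
    Returns (tsgn_nodes, tsgn_edges); tsgn_edges maps a pair of original
    edges to the mapped weight.
    """
    tsgn_nodes = list(edges)  # each original edge becomes one TSGN node
    tsgn_edges = {}
    for e1, e2 in combinations(tsgn_nodes, 2):
        if set(e1) & set(e2):  # the two edges share at least one address
            tsgn_edges[(e1, e2)] = weight_map(edges[e1], edges[e2])
    return tsgn_nodes, tsgn_edges


# Star-form network: center "c" with four neighbors (illustrative amounts).
star = {("c", "a"): 1.0, ("c", "b"): 2.0, ("c", "d"): 0.5, ("c", "e"): 0.0}
tsgn_nodes, tsgn_edges = build_tsgn(star)
```

For this star-form input, the four original edges all share the center, so the resulting TSGN has four nodes and six edges, that is, it is fully connected. Passing a different `weight_map` changes only the edge weights and leaves the structure unchanged.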

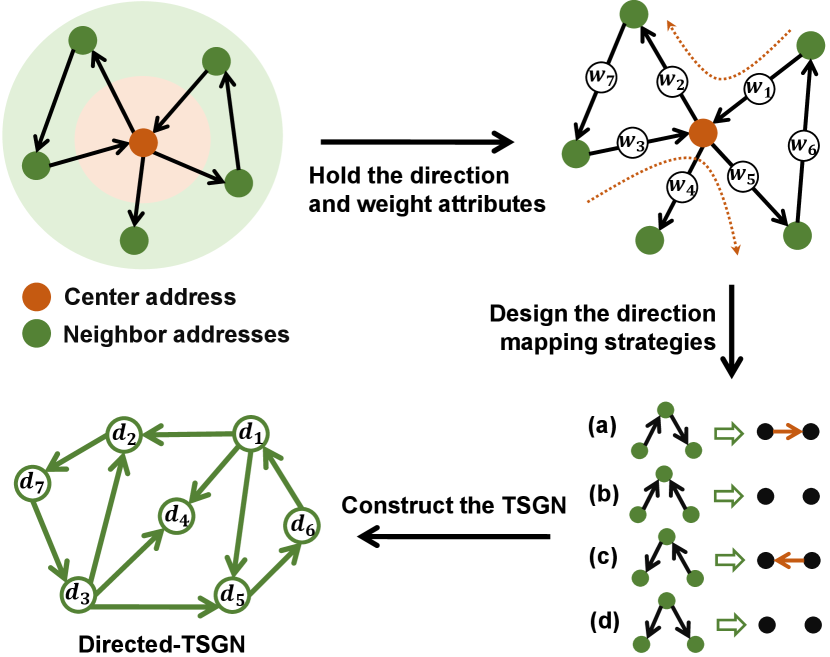

III-D Construction of Directed-TSGN

Transaction direction is one of the most noteworthy characteristics in the field of financial research. Most studies model the transaction pattern of an account by its in-degree and out-degree in a transaction graph. On the Ethereum or Bitcoin platform, transaction direction combined with transaction amount information can be used to study unusual patterns [16], detect abnormal economic interactions [3], predict virtual currency prices [17], and so on. According to Section III-C, the TSGN model cannot retain the direction information, which may play an important role in the subsequent phishing identification tasks. Moreover, the TSGN is denser than the original transaction network and may even become a fully connected network, which may reduce the representation ability of graph mining algorithms. The Directed-TSGN proposed in this paper takes both transaction direction and amount into consideration, which helps extract the potential transaction patterns of the target addresses.

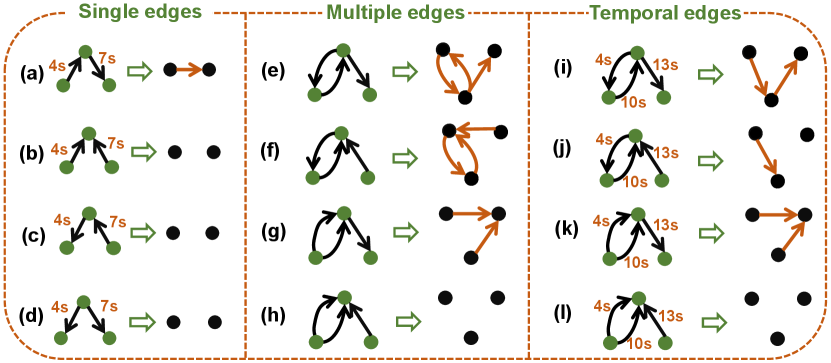

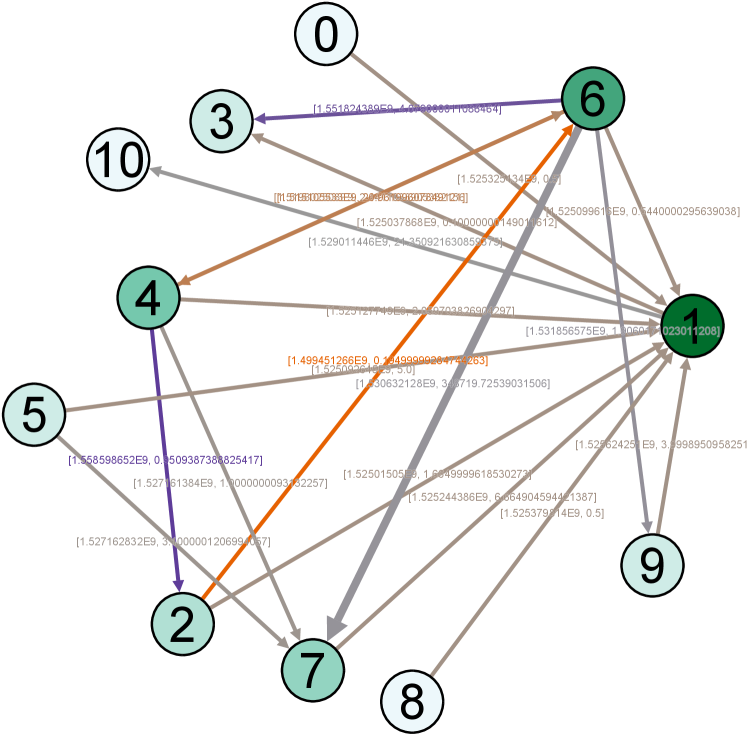

In response to the above problems, we propose one variant of TSGN, named Directed-TSGN. As shown in Fig. 3, the construction of Directed-TSGN consists of the following three steps: (i) hold the direction and weight attributes, (ii) design the direction mapping strategies, (iii) construct the TSGN. The first step aims to hold the direction and weighted attributes of the original transaction network and prepare for the subsequent structural mapping. Similarly, the edges are mapped into the nodes , , , , , , of the Directed-TSGN. The two red directed dashed lines indicate that the transactions and and transactions and can be considered as two continuous transaction behaviors, respectively. In other words, the edges and and the edges and can form two 2-hop paths with the same direction, respectively. According to the four direction mapping strategies, we can build the new edges in the Directed-TSGN. Due to the fact that patterns of (b) and (d) don’t satisfy the requirements of constructing edges, the Directed-TSGN can limit the network size and get a relatively sparse transaction subgraph network. The pseudocodes of constructing Directed-TSGN consist of Algorithm 1 and 2. The input of Algorithm 2 is two transaction subgraphs in the attribute attached graph , and the output is the edge matching the mapping rules. Algorithm 2 aiming to illustrate the Directed-TSGN mapping will be executed when is attached the transaction direction attribute.
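The direction rule can be illustrated with a short sketch: two directed edges are linked only when the destination of the first is the source of the second, so that they form a 2-hop path with the same direction. This is not the paper's Algorithm 2. The function name `build_directed_tsgn` is hypothetical, and the summed weight is again an assumed stand-in for Eq. (1).

```python
def build_directed_tsgn(edges, weight_map=lambda w1, w2: w1 + w2):
    """Map a directed weighted transaction network to a Directed-TSGN.

    edges: dict {(src, dst): weight} of directed transaction edges.
    weight_map: assumed stand-in for the weight mapping function (sum by default).
    Returns (nodes, links); a link (e1, e2) means e1 is followed by e2.
    """
    links = {}
    for (s1, d1), w1 in edges.items():
        for (s2, d2), w2 in edges.items():
            # Link e1 -> e2 only if e1 ends where e2 starts (same-direction 2-hop path).
            if (s1, d1) != (s2, d2) and d1 == s2:
                links[((s1, d1), (s2, d2))] = weight_map(w1, w2)
    return list(edges), links


# Two transfers into "c" and two transfers out of "c" (illustrative amounts).
edges = {("a", "c"): 1.0, ("b", "c"): 2.0, ("c", "d"): 0.5, ("c", "e"): 0.0}
nodes, links = build_directed_tsgn(edges)
```

In this example only the in-then-out combinations are linked, giving four links instead of the six that the undirected rule would produce. Pairs of edges that both enter or both leave the center are left unconnected, which is what keeps the Directed-TSGN comparatively sparse.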

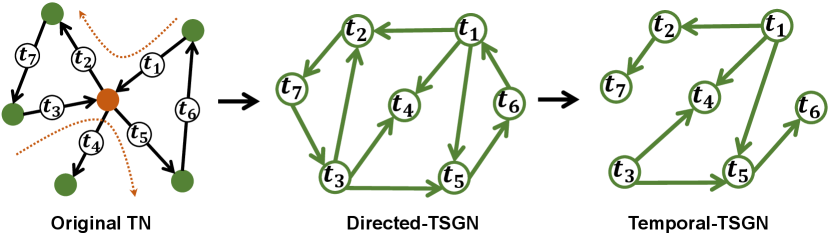

III-E Construction of Temporal-TSGN

Temporal information, i.e., transaction timestamps, indicates when each transaction occurred. Hence, the transaction time combined with the direction of the trading flow makes it possible to trace the transfer of funds on Ethereum [18]. In this section, we further introduce the temporal attribute of transactions into the TSGN mapping mechanism. On the basis of Directed-TSGN, Temporal-TSGN is proposed to fuse the temporal and direction attributes that are crucial for phishing detection, enhancing the ability to identify phishing scams. Compared with Directed-TSGN, Temporal-TSGN refines the mapping strategies so that it can finely capture the structural information of temporal transaction flows in Ethereum, further limiting the network scale.

Fig. 4 shows the process of constructing Temporal-TSGN. Given an original transaction network, Directed-TSGN is first obtained according to the strategy described in Section III-D, and we then get the Temporal-TSGN from Directed-TSGN by filtering out the edges that are not in chronological order. The timestamps on the edges determine the order in which transactions are generated, i.e., . We define that, in the Temporal-TSGN, the transaction must be linked to transaction where , which can form a 2-hop sequential transaction flow. Here, the edges , , and do not satisfy the formation condition and are therefore ignored.

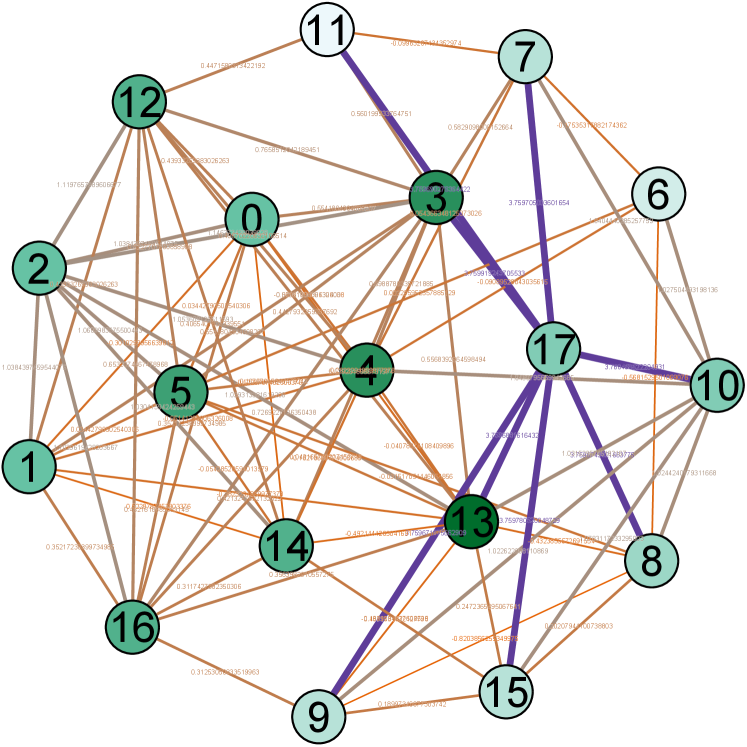

At this point, the Temporal-TSGN mapping strategy can be summarized as the single edges part of Fig. 5. We attach the timestamps onto the edges of different directions, getting the fund flow of the transactions within a certain time frame. It is important to note that, a 2-hop transaction chain is available for the construction of Temporal-TSGN only under the three conditions described in the Definition 3. Specifically, the transaction chain shown in Fig. 5 (a) can be successfully mapped into the TSGN structural space, becoming a 1-hop directed node pair, while the other three transaction chains , , and shall be excluded since they do not satisfy the second condition or the third condition or both in the Definition 3, respectively.
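The chronological filter can be sketched as a post-processing step on Directed-TSGN links. The function name `temporal_filter` and the dictionary layout are hypothetical, and the strict comparison between timestamps is an assumption; whether equal timestamps should be kept is not specified here.

```python
def temporal_filter(directed_links, timestamps):
    """Keep only the links e1 -> e2 whose transactions occur in chronological order.

    directed_links: dict {(e1, e2): weight} of Directed-TSGN links.
    timestamps: dict {edge: timestamp} for every original transaction edge.
    Assumption: e1 must be strictly earlier than e2.
    """
    return {
        (e1, e2): w
        for (e1, e2), w in directed_links.items()
        if timestamps[e1] < timestamps[e2]
    }


# Both links form a same-direction 2-hop path through "c" (illustrative data).
directed_links = {
    (("a", "c"), ("c", "d")): 1.5,
    (("b", "c"), ("c", "d")): 2.5,
}
timestamps = {("a", "c"): 1, ("c", "d"): 2, ("b", "c"): 3}
temporal_links = temporal_filter(directed_links, timestamps)
```

Here the transfer from "b" to "c" happens after "c" has already paid "d", so it cannot have funded that payment and its link is dropped. Only the chain from "a" through "c" to "d" remains.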

III-F TSGN for Multi-edge Case

Ethereum transaction data poses several challenges. First, the transaction network is generally sparse and dynamic, but it can become very dense when viewed over a long period of time. Second, nodes in the transaction graph appear (i.e., new accounts are created) and disappear (i.e., no future transactions occur) daily. Third, daily transaction throughput fluctuates greatly. The time attribute is therefore a vitally important factor when constructing transaction networks, as it exhibits the real complexity of the Ethereum transaction network along the time dimension. Li [19] modeled the temporal relationship of historical transaction records between nodes to construct the edge representation of the Ethereum transaction network. How to handle the mapping rules of multi-edge temporal networks is thus an urgent problem for the subsequent phishing behavior analysis, and it is the subject of this section.

It is worth noting that the temporal mapping mechanism can be extended to the case of multiple edges. As shown in Fig. 5 (e)-(l), multi-edge mapping alone does not alter the loop of a node pair (Fig. 5 (e) and (f)), while adding temporal edges can resolve it (Fig. 5 (i) and (j)). The timestamps can break the cycle in the network structure and orient the information flow in the TSGN. Intuitively, TSGN in the multi-edge case can yield a clear path of fund transfers and capture the fund flow pattern in a complex temporal transaction network, which is conducive to the detection of illegal trading activities.

In particular, the construction process of TSGN for multi-edge transaction networks, denoted as Multiple-TSGN, is shown in Fig. 6. First, a weighted directed multi-edge graph is turned into a MultiDiGraph in which, once the timestamp attribute is attached, each transaction has its own fixed order of occurrence. Then, according to Algorithm 3, we get the variant of Temporal-TSGN for multi-edge transaction networks, i.e., Multiple-TSGN.
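The effect of timestamps on repeated transactions between the same pair of addresses can be sketched as follows. This is an illustration under stated assumptions, not Algorithm 3: the function name `build_multiple_tsgn` is hypothetical, each transaction is identified by its list index, and the same direction and strict chronological rules as in the single-edge case are assumed to apply to every individual transaction.

```python
def build_multiple_tsgn(txs):
    """Link individual transactions of a multi-edge network.

    txs: list of (src, dst, timestamp); parallel edges are allowed.
    Returns a list of links (i, j) between transaction indices, meaning
    transaction i is followed by transaction j.
    """
    links = []
    for i, (s1, d1, t1) in enumerate(txs):
        for j, (s2, d2, t2) in enumerate(txs):
            # Same-direction 2-hop path, in strictly increasing time order.
            if i != j and d1 == s2 and t1 < t2:
                links.append((i, j))
    return links


# "a" and "c" trade back and forth three times (illustrative data).
txs = [("a", "c", 1), ("c", "a", 2), ("a", "c", 3)]
links = build_multiple_tsgn(txs)
```

Without timestamps, the reciprocal edges between "a" and "c" would point at each other and form a loop. With them, the three transactions are arranged into a single chain from the first to the second to the third, which gives the fund flow a clear orientation.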

To date, understanding and analyzing multi-edge temporal transaction networks in Ethereum remains a research focus. The Multiple-TSGN proposed in this section provides a novel perspective for mining the potential transaction patterns in Ethereum.

| Dataset | Type | # | | | #N | #N | Plain #E | Plain #E | Directed #E | Directed #E | Multiedge #E | Multiedge #E |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| EtherG1 | Star | 700 | 350 | 2 | 7 | 13 | 6 | 12 | 6 | 12 | 8 | 13 |
| EtherG2 | Star | 700 | 350 | 2 | 14 | 33 | 13 | 32 | 13 | 32 | 20 | 34 |
| EtherG3 | Star | 700 | 350 | 2 | 96 | 4972 | 95 | 4972 | 100 | 4985 | 23 | 12209 |
| EtherG4 | Net | 700 | 350 | 2 | 7 | 13 | 7 | 20 | 7 | 21 | 163 | 24317 |
| EtherG5 | Net | 700 | 350 | 2 | 14 | 33 | 17 | 58 | 18 | 65 | 465 | 24354 |
| EtherG6 | Net | 700 | 350 | 2 | 96 | 4972 | 161 | 7534 | 174 | 10745 | 1765 | 68959 |

IV Experiments

In this section, we describe the experiments conducted to evaluate the effectiveness of our TSGN models on the task of phishing detection. We first construct the network datasets by exploiting transactions records from Ethereum, then outline several baselines followed by the corresponding experimental setup. Finally, we show the experimental results with discussion.

IV-A Data Collection and Preparation

As today’s largest blockchain-based application, Ethereum has fully open transaction data, which are permanently recorded and easily accessed through the API of Etherscan (https://etherscan.io) or the academic blockchain data platform XBlock (http://xblock.pro/).

Ethereum has gained massive momentum in recent years, mainly due to the success of cryptocurrencies. Considering that the entire cryptocurrency trading network is enormous, we crawl some phishing addresses and non-phishing addresses as the target nodes and extract their first-order neighbor nodes from the first seven million blocks of Ethereum to construct transaction network datasets (all transactions occurred between July 30, 2015 and January 2, 2019). After filtering and preprocessing the raw data, we randomly select 1050 phishing addresses and 1050 unlabeled addresses based on the account label information on the XBlock platform, and crawl their neighbor addresses and transaction records to construct transaction networks. These networks are then randomly divided so that we finally get three balanced star-form network datasets, EtherG1, EtherG2, and EtherG3, each of which has 350 transaction networks of phishing addresses and 350 transaction networks of non-phishing addresses. In addition, to increase the structural complexity of the transaction networks, we expand the scale of the networks by introducing the interactive edges between 1-hop neighbors, yielding three net-form transaction datasets, EtherG4, EtherG5, and EtherG6. The basic statistics of these datasets are presented in TABLE II.
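The difference between the star-form and net-form networks can be sketched as two ways of selecting edges around a target address. The function name `ego_networks` and the sample addresses are hypothetical, and the sketch ignores the crawling, filtering, and label handling described above.

```python
def ego_networks(txs, center):
    """Extract the star-form and net-form 1-hop networks of a target address.

    txs: list of (src, dst) transaction pairs.
    Returns (star_edges, net_edges):
      star_edges: transactions that involve the center address;
      net_edges:  star_edges plus transactions between 1-hop neighbors.
    """
    neighbors = {d if s == center else s for s, d in txs if center in (s, d)}
    neighbors.discard(center)
    members = neighbors | {center}
    star_edges = [(s, d) for s, d in txs if center in (s, d)]
    net_edges = [(s, d) for s, d in txs if s in members and d in members]
    return star_edges, net_edges


# Illustrative data: "x" is two hops away from the target "c".
txs = [("c", "a"), ("b", "c"), ("a", "b"), ("a", "x")]
star_edges, net_edges = ego_networks(txs, "c")
```

In this example the transaction between the two neighbors "a" and "b" appears only in the net-form network, while the transaction with "x" is excluded from both because "x" is not a first-order neighbor of the target.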

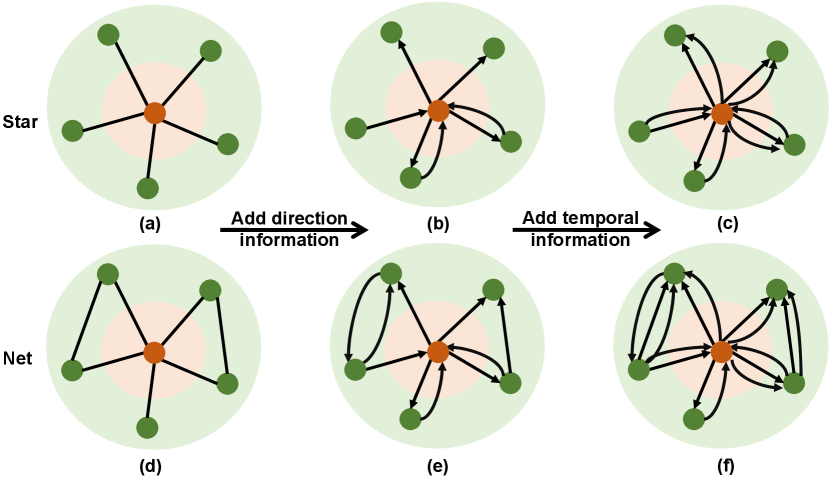

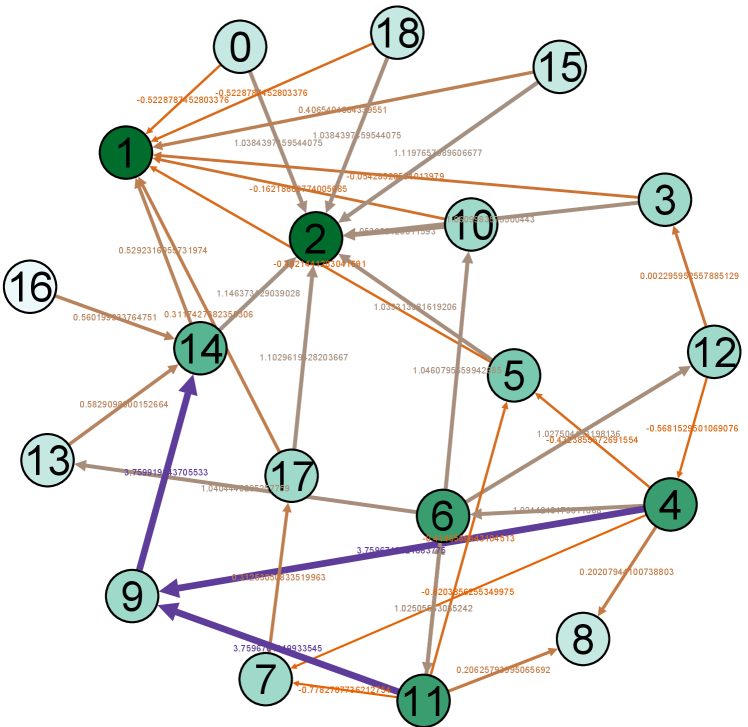

As shown in TABLE III, the different variants of the TSGN model have their own attribute dependencies. Hence, it is necessary to sketch the transaction network structure under different attributes. Based on Fig. 7, we further explain the edge statistics of TABLE II. Plain indicates that no direction or temporal attributes are included in the network structures (Fig. 7 (a) and (d)), Directed means that the edges of the networks are directed (Fig. 7 (b) and (e)), and Multiedge signifies that the edges carry both direction and temporal attributes, which distinguishes multiple same-direction edges between a node pair (Fig. 7 (c) and (f)). Note that the weight is treated as an inherent attribute. The richer the edge attributes, the more complex the network structures. The following experiments are conducted on these six datasets to verify the performance of the four TSGN models.
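A small sketch can make the three edge views concrete by counting the edges of one transaction list under each of them. The function name `edge_counts` and the sample transactions are hypothetical and only illustrate the definitions of Plain, Directed, and Multiedge given above.

```python
def edge_counts(txs):
    """Count edges of the same transaction list under three views.

    txs: list of (src, dst, timestamp).
    Returns (plain, directed, multiedge):
      plain:     unordered address pairs, direction and time ignored;
      directed:  ordered address pairs, time ignored;
      multiedge: every timestamped transaction counted separately.
    """
    plain = {frozenset((s, d)) for s, d, _ in txs}
    directed = {(s, d) for s, d, _ in txs}
    return len(plain), len(directed), len(txs)


# "a" pays "c" twice, "c" pays "a" once, and "b" pays "c" once (illustrative data).
txs = [("a", "c", 1), ("c", "a", 2), ("a", "c", 3), ("b", "c", 4)]
plain, directed, multiedge = edge_counts(txs)
```

For this list the Plain view has two edges, the Directed view has three, and the Multiedge view has four, since each added attribute separates edges that the simpler view merges.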

| Model | Weighted | Directed | Temporal | Multiedge |
|---|---|---|---|---|
| TSGN | ✓ | ✗ | ✗ | ✗ |
| Directed-TSGN | ✓ | ✓ | ✗ | ✗ |
| Temporal-TSGN | ✓ | ✓ | ✓ | ✗ |
| Multiple-TSGN | ✓ | ✓ | ✓ | ✓ |

IV-B Baseline Methods

To better verify the effect of the proposed TSGN models, we adopt six Ethereum phishing scam detection models, namely handcrafted features, Graph2Vec, DeepKernel, Diffpool, U2GNN, and Line_Graph2Vec, which are introduced in the following.

Handcrafted Features: Handcrafted features are the most straightforward way to depict the structural properties of networks. Unlike previous feature designs that list many transaction attributes, we adopt ten classic topological attributes from network science that are widely used in graph mining tasks [20, 11, 21, 22]. As a baseline, we build the representation of the transaction networks by manually extracting these features for the subsequent account identification task. The first six handcrafted features are basic statistics of the topological structure: Number of addresses, Number of transactions, Average degree of transaction subgraph, Percentage of leaf nodes, Network density, and Average neighbor degree. The last four features, Average clustering coefficient, Largest eigenvalue of the adjacency matrix, Average betweenness centrality, and Average closeness centrality, are relatively complex network properties. Here, the Average clustering coefficient is a popular metric for quantifying the edge density in ego networks. Eigenvalues, as isomorphism invariants of a graph, can be used to estimate many static attributes of transaction networks, such as connectivity and diameter. Centrality measures are indicators of the importance (status, prestige, standing, and the like) of a node in a network. Together, these features provide a comprehensive portrait of the transaction network.
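Several of the simpler features listed above can be computed directly from an edge list. The following sketch uses only the Python standard library and the usual textbook definitions; the function name `basic_features` and the dictionary keys are hypothetical. It assumes a non-empty, undirected, simple graph without self-loops, and it omits the eigenvalue and centrality features.

```python
from itertools import combinations


def basic_features(edges):
    """Compute basic topological features of an undirected simple graph.

    edges: non-empty list of (u, v) pairs without self-loops.
    """
    adj = {}
    for u, v in edges:
        adj.setdefault(u, set()).add(v)
        adj.setdefault(v, set()).add(u)
    n = len(adj)
    deg = {u: len(nb) for u, nb in adj.items()}
    m = sum(deg.values()) // 2  # each edge is counted once per endpoint

    def clustering(u):
        # Fraction of neighbor pairs of u that are themselves connected.
        k = deg[u]
        if k < 2:
            return 0.0
        closed = sum(1 for a, b in combinations(adj[u], 2) if b in adj[a])
        return 2.0 * closed / (k * (k - 1))

    return {
        "num_addresses": n,
        "num_transactions": m,
        "avg_degree": 2.0 * m / n,
        "leaf_ratio": sum(1 for d in deg.values() if d == 1) / n,
        "density": 2.0 * m / (n * (n - 1)) if n > 1 else 0.0,
        "avg_neighbor_degree": sum(
            sum(deg[v] for v in adj[u]) / deg[u] for u in adj
        ) / n,
        "avg_clustering": sum(clustering(u) for u in adj) / n,
    }


# Star-form network with center "c" and three neighbors.
features = basic_features([("c", "a"), ("c", "b"), ("c", "d")])
```

For this small star, three of the four addresses are leaves and no two neighbors of the center are connected, so the leaf ratio is 0.75 and the average clustering coefficient is 0.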

Graph2Vec [23]: It is the first unsupervised embedding approach for entire networks, which constructs Weisfeiler-Lehman tree features for the nodes in graphs and then learns graph representations by decomposing the graph-feature co-occurrence matrix.

DeepKernel [24]: This method provides a unified framework, named DGK, that defines the similarity between graphs by leveraging the dependency information of sub-structures while learning latent representations.

Diffpool [25]: This method proposes a differentiable graph pooling module, which can generate hierarchical representations of graphs and can be combined with various GNN architectures in an end-to-end manner. It mainly addresses the problem that traditional GNN methods are flat and cannot learn hierarchical representations of graphs.

U2GNN [26]: It presents a GNN model that leverages a transformer self-attention mechanism followed by a recurrent transition to induce a powerful aggregation function for learning graph representations, and it achieved state-of-the-art performance on many classical benchmarks for graph classification.

Line_Graph2Vec [13]: This method collected a set of subgraphs, each of which contains a target address and its surrounding transaction network, and then converted them into the corresponding line graphs which can be input into the Graph2Vec model for acquiring the representation vectors for all the graphs.
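The line-graph conversion mentioned for Line_Graph2Vec can be sketched as follows. This is a minimal illustration using the standard directed line-graph definition, in which every transaction edge becomes a node and edge (u, v) is linked to edge (v, w); the exact construction used in [13] may differ, and the function name is hypothetical.

```python
def directed_line_graph(edges):
    """Return (line_nodes, line_edges) for a directed transaction graph.

    Each original edge (u, v) becomes a node of the line graph, and a line
    edge (u, v) -> (v, w) is added whenever the head of one original edge
    is the tail of another.
    """
    line_nodes = sorted(set(edges))
    by_source = {}
    for u, v in line_nodes:
        by_source.setdefault(u, []).append((u, v))
    line_edges = []
    for u, v in line_nodes:
        for successor in by_source.get(v, []):
            if successor != (u, v):  # skip the trivial self-loop case
                line_edges.append(((u, v), successor))
    return line_nodes, line_edges


# Two accounts pay the target T, which forwards funds to C.
line_nodes, line_edges = directed_line_graph([("A", "T"), ("B", "T"), ("T", "C")])
```

In this example the two incoming transactions are each linked to the outgoing one, so the fund flow through the target address becomes explicit in the line graph.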

Classification results (F-Score, %)

| Group | Type | Method | EtherG1 (Star) | EtherG2 (Star) | EtherG3 (Star) | EtherG4 (Net) | EtherG5 (Net) | EtherG6 (Net) | Avg. |
|---|---|---|---|---|---|---|---|---|---|
| Baseline | Feature-based | Handcrafted | | | | | | | 80.36 |
| | Embedding | Graph2Vec | | | | | | | 70.29 |
| | | DeepKernel | | | | | | | 74.38 |
| | GNN-based | Diffpool | | | | | | | 90.47 |
| | | U2GNN | | | | | | | 74.69 |
| | | Line_Graph2Vec | | | | | | | 71.86 |
| Ours | | Handcrafted w/ TSGN | | | | | | | 81.34 |
| | | Handcrafted w/ Directed-TSGN | | | | | | | 82.76 |
| | | Handcrafted w/ Temporal-TSGN | | | | | | | 85.88 |
| | | %Increase | 13.26% | 7.32% | 5.96% | 6.85% | 5.36% | 3.38% | 6.87% |
| | | Graph2Vec w/ TSGN | | | | | | | 71.86 |
| | | Graph2Vec w/ Directed-TSGN | | | | | | | 72.82 |
| | | Graph2Vec w/ Temporal-TSGN | | | | | | | 76.62 |
| | | %Increase | 10.66% | 10.04% | 13.97% | 4.73% | 8.62% | 6.65% | 9.01% |
| | | DeepKernel w/ TSGN | | | | | | | 74.50 |
| | | DeepKernel w/ Directed-TSGN | | | | | | | 74.41 |
| | | DeepKernel w/ Temporal-TSGN | | | | | | | 77.26 |
| | | %Increase | 6.88% | 4.36% | 1.94% | -0.38% | 6.78% | 4.60% | 3.87% |
| | | Diffpool w/ TSGN | | | | | | | 94.31 |
| | | Diffpool w/ Directed-TSGN | | | | | | | 94.03 |
| | | Diffpool w/ Temporal-TSGN | | | | | | | 94.90 |
| | | %Increase | 14.58% | 10.68% | 2.10% | 0.84% | 4.56% | 4.95% | 4.90% |
| | | U2GNN w/ TSGN | | | | | | | 75.17 |
| | | U2GNN w/ Directed-TSGN | | | | | | | 76.60 |
| | | U2GNN w/ Temporal-TSGN | | | | | | | 79.64 |
| | | %Increase | 5.41% | 10.63% | 4.06% | 4.34% | 8.31% | 6.85% | 6.63% |

IV-C Experimental Setup

To investigate the performance of our TSGN models, the above-mentioned representation methods are adopted to extract feature vectors for TN, TSGN, Directed-TSGN, and Temporal-TSGN, which are then used to train the classification model. The parameter settings of the representation models under the different TSGNs are given as follows.

Handcrafted Features has no hyperparameters: ten features are extracted manually for each graph and used as the feature vector to train the phishing detection model.

For Graph2Vec and Line_Graph2Vec, the height parameter of the WL kernel is set to 3. The commonly used value of 1,024 is adopted as the embedding dimension. The other parameters are set to their defaults: the learning rate is 0.025 and the number of epochs is 1000.
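To make the role of the WL height concrete, the following sketch performs Weisfeiler-Lehman relabeling for a given number of iterations. It is an illustration only: labels are kept as uncompressed strings, node degrees are assumed as initial labels, and the function name is hypothetical. The labels collected over all iterations play the role of the rooted-subgraph features that the embedding step consumes.

```python
def wl_subtree_features(adj, labels, height=3):
    """Return, per node, the list of WL labels for iterations 0..height.

    `adj` maps each node to a list of neighbors; `labels` maps each node to
    an initial string label. At every iteration a node's new label is its
    old label followed by the sorted labels of its neighbors.
    """
    current = dict(labels)
    history = {node: [current[node]] for node in adj}
    for _ in range(height):
        updated = {}
        for node, nbrs in adj.items():
            neighbour_labels = sorted(current[n] for n in nbrs)
            updated[node] = f"{current[node]}({','.join(neighbour_labels)})"
        current = updated
        for node in adj:
            history[node].append(current[node])
    return history


# Path graph a - b - c with node degrees as initial labels (an assumption).
adj = {"a": ["b"], "b": ["a", "c"], "c": ["b"]}
labels = {node: str(len(nbrs)) for node, nbrs in adj.items()}
history = wl_subtree_features(adj, labels, height=3)
```

With height 3, every node contributes four labels (the initial one plus one per iteration), and structurally equivalent nodes such as `a` and `c` receive identical label sequences.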

For DeepKernel, the Weisfeiler-Lehman subtree kernel is used to build the corpus and its height is set to 2, the embedding dimension is set to 10, the window size is set to 5, and skip-gram is used for the word2vec model. Furthermore, the node degrees are set as the node labels for TN and all TSGNs. As the key point of this method, the sub-structure similarity matrix $M$ is calculated from the matrix $V$, in which each column represents a sub-structure vector, as $M = V^{T}V$. Let $P$ denote the matrix in which each column represents a sub-structure frequency vector. According to the definition of the kernel, $K = P^{T}MP = P^{T}V^{T}VP = H^{T}H$, one can use the columns of the matrix $H = VP$ as the inputs to the classifier.
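The kernel factorization used for DeepKernel can be checked numerically with a small sketch. The symbol names `V`, `P`, `M`, `K`, and `H` and the toy values are assumptions chosen for illustration, with the orientation fixed so that each column of `V` is a sub-structure vector and each column of `P` is the sub-structure frequency vector of one graph.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(a):
    """Transpose a matrix given as a list of rows."""
    return [list(col) for col in zip(*a)]


# V: embedding_dim x num_substructures (each column is a sub-structure vector).
V = [[1.0, 0.0, 1.0],
     [0.0, 2.0, 1.0]]
# P: num_substructures x num_graphs (each column is a frequency vector).
P = [[2, 0],
     [1, 1],
     [0, 3]]

M = matmul(transpose(V), V)             # sub-structure similarity matrix
K = matmul(matmul(transpose(P), M), P)  # graph kernel matrix
H = matmul(V, P)                        # one column per graph
K_from_H = matmul(transpose(H), H)      # same kernel, computed from H
```

Because `K` equals `H^T H`, a classifier trained on the columns of `H` implicitly uses the same similarity as the kernel, which is why the columns of `H` can serve directly as graph feature vectors.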

For Diffpool, we use the in-degree and out-degree as the node features for the TN, and initialize the feature matrix by the edge weights of TN for different TSGNs. The other parameters of the model are set to defaults.

For U2GNN, we use the in-degree and out-degree as the node features for the TN, and initialize the feature matrix by the edge weights of TN for different TSGNs. The other parameters of the model are set to defaults.
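The degree-based node features used for TN with Diffpool and U2GNN can be sketched as below. This is a minimal illustration in plain Python; the function name and the `[in_degree, out_degree]` column order are assumptions rather than details given in the text.

```python
def degree_features(nodes, directed_edges):
    """Build an [in_degree, out_degree] feature row for every node.

    `nodes` fixes the row order of the resulting feature matrix and
    `directed_edges` is an iterable of (sender, receiver) pairs.
    """
    feats = {node: [0, 0] for node in nodes}
    for src, dst in directed_edges:
        feats[dst][0] += 1  # in-degree of the receiver
        feats[src][1] += 1  # out-degree of the sender
    return [feats[node] for node in nodes]


nodes = ["A", "B", "T"]
edges = [("A", "T"), ("B", "T"), ("T", "A")]
feature_matrix = degree_features(nodes, edges)
```

Here the target `T` receives two transactions and sends one, so its feature row is `[2, 1]`.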

Next, for each feature representation method, the feature vectors of TN are concatenated with those of each TSGN variant, and PCA is then used to reduce the dimension of the concatenated vectors to that of the feature vectors obtained from the original transaction network, for a fair comparison. Each dataset is randomly split into 9 folds for training and 1 fold for testing. To evaluate the quality of each classification model, we use F-Score as the metric,

$$F\text{-}Score = \frac{2 \times P \times R}{P + R}, \tag{2}$$

where P is precision and R is recall. F-Score is the harmonic mean of precision and recall, so it judges the classification models more comprehensively than either quantity alone. To exclude the random effect of fold assignment, the experiment is repeated 300 times using the Random Forest classifier, and the average F-Score and its standard deviation are recorded. Moreover, the Percentage Increase (%Increase) is calculated to measure the performance improvement of the proposed models relative to the original model,

$$\%Increase = \frac{F\text{-}Score_{\mathrm{proposed}} - F\text{-}Score_{\mathrm{original}}}{F\text{-}Score_{\mathrm{original}}} \times 100\%. \tag{3}$$
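The two evaluation quantities can be illustrated with a short sketch. It assumes the standard definition of the F-score as the harmonic mean of precision and recall, and it assumes that the percentage increase is the relative gain over the baseline score; the latter agrees with the averages reported in TABLE IV (for example, 80.36 for Handcrafted on TN and 85.88 with Temporal-TSGN give 6.87%). Function names and the toy labels are hypothetical.

```python
def f_score(y_true, y_pred, positive=1):
    """Harmonic mean of precision and recall for the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    if tp == 0:
        return 0.0  # no true positives: precision or recall is zero
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def percentage_increase(score, baseline):
    """Relative improvement of `score` over `baseline`, in percent."""
    return (score - baseline) / baseline * 100


# Toy labels: 1 = phishing account, 0 = normal account.
y_true = [1, 1, 1, 0, 0, 0]
y_pred = [1, 1, 0, 1, 0, 0]
score = f_score(y_true, y_pred)

# Average F-Score of Handcrafted on TN vs. Handcrafted w/ Temporal-TSGN.
gain = percentage_increase(85.88, 80.36)
```

In the toy example precision and recall are both 2/3, so the F-score is also 2/3.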

IV-D Experimental Results and Analysis

Following the above setup, we conduct experiments on the six Ethereum datasets. To investigate the effectiveness of the TSGN models, for each feature extraction algorithm the classification results are compared against those obtained on TN. First, we present the performance of the original model based on TN. The phishing identification performance of TSGN, Directed-TSGN, Temporal-TSGN, and Multiple-TSGN, each integrated with TN, is then reported. Note that the concatenated feature vectors are fed into the same classification model for a fair comparison.

IV-D1 TSGN models can enhance phishing account identification ability

TABLE IV summarises our results in terms of F-Score. On the whole, compared with the transaction networks, the TSGN, Directed-TSGN, and Temporal-TSGN models do enhance phishing address detection. TSGN, the most basic model, is comparable with the five TN-based baseline models and yields a slight improvement across datasets and feature extraction methods. Line_Graph2Vec is an improved version of Graph2Vec, and it is equivalent to our Graph2Vec w/ TSGN in both model design and model performance. Directed-TSGN increases the performance of the original phishing identification in 26 out of 30 cases, with a relative improvement of 3.28% on average. Moreover, since the TSGNs generated by different attribute mapping strategies capture different perspectives of a network, one may expect that the fusion of attributes, i.e., Temporal-TSGN, produces even better detection results. Indeed, the proposed TSGN models improve the identification performance of the TN (original) model in 29 of 30 cases, achieving the best result of 98.43% on EtherG6. In particular, Temporal-TSGN almost always performs best on the six datasets, and its combination with Diffpool outperforms the results based on the other baselines.

IV-D2 Transaction feature exploration should be paralleled with topological structure learning

The quality of the features extracted from transaction networks affects the performance of the downstream phishing detection model, which is clearly reflected in our experiments: the classification results vary widely across the five baseline models. Handcrafted, which is based on feature assembly, is superior to the automatic feature extraction methods that rely on a learning model, i.e., Graph2Vec and DeepKernel, with average gains of 9.29% and 8.26%, respectively. We also find that elaborately designed handcrafted features can greatly boost the performance of our TSGN models, achieving a percentage increase of 13.26% on EtherG1. The experimental results also turn the spotlight on Diffpool, with which our TSGN models produce the best results, exceeding those of the other methods. Besides, U2GNN, which tops the global graph classification leaderboard, does not achieve impressive performance and even underperforms Handcrafted. It follows that transaction feature exploitation and topological structure learning of transaction networks are equally important in Ethereum phishing identification. The comprehensive and rational exploration of transaction features during network modeling is the main reason for the good performance of our TSGNs. Furthermore, we find that the complex topological interactions in the transaction networks offer more insights for the model learning of phishing identification. The models on datasets of Net type outperform those on datasets of Star type in terms of F-Score in 43 out of 60 cases. In contrast, the models on datasets of Star type have greater potential for improvement, producing higher %Increase values than those on datasets of Net type in 11 out of 15 cases. This also shows that the interaction information between 1-hop neighbors can indeed enhance the performance of the detection models.

| Dataset | TSGN (s) | Directed-TSGN (s) | Temporal-TSGN (s) |
|---|---|---|---|
| EtherG1 | 2.42 | 2.35 | 2.29 |
| EtherG2 | 3.29 | 2.62 | 2.60 |
| EtherG3 | 605.79 | 93.28 | 71.62 |
| EtherG4 | 3.58 | 3.45 | 3.31 |
| EtherG5 | 6.54 | 5.92 | 5.56 |
| EtherG6 | 801.93 | 202.49 | 165.96 |

| Multiple-TSGN | Handcrafted | Graph2vec | DeepKernel | Diffpool | U2GNN |
|---|---|---|---|---|---|
| EtherG1 | | | | | |
| EtherG2 | | | | | |
| EtherG3 | | | | | |
| EtherG4 | | | | | |
| EtherG5 | | | | | |
| EtherG6 | | | | | |
| Avg. Multiple-TSGN | 93.24 | 74.31 | 78.86 | 92.40 | 80.38 |
| Avg. TSGNs (best) | 85.88 | 76.62 | 77.26 | 94.90 | 79.64 |
| Avg. TN | 80.36 | 70.29 | 74.38 | 90.47 | 74.69 |
| %Increase (TSGNs) | 8.57% | -3.01% | 2.07% | -2.63% | 0.93% |
| %Increase (TN) | 16.03% | 5.72% | 6.02% | 2.13% | 7.62% |

IV-D3 TSGNs with attributes can effectively reduce the modeling time of large Ethereum networks

Furthermore, we record the computational time and compare the time consumption of constructing TSGN, Directed-TSGN, and Temporal-TSGN on the six Ethereum datasets. The results are presented in TABLE V, where one can see that the construction time shows only a slight decrease on the small-scale datasets, namely EtherG1, EtherG2, EtherG4, and EtherG5. However, on the larger datasets EtherG3 and EtherG6, the computational time of Directed-TSGN is significantly lower than that of TSGN, decreasing from about 606 seconds to about 93 seconds on EtherG3 and from about 802 seconds to about 202 seconds on EtherG6. In addition, Temporal-TSGN reduces the time consumption further relative to Directed-TSGN. These results suggest that Directed-TSGN and Temporal-TSGN can enhance the performance of the algorithms for phishing account identification while also greatly improving their efficiency.
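The size of these reductions can be made explicit by computing speedup ratios from the construction times in TABLE V. The sketch below is only a worked arithmetic example; the dictionary layout and variable names are hypothetical.

```python
# Construction time in seconds from TABLE V:
# (TSGN, Directed-TSGN, Temporal-TSGN) per dataset.
construction_time = {
    "EtherG1": (2.42, 2.35, 2.29),
    "EtherG2": (3.29, 2.62, 2.60),
    "EtherG3": (605.79, 93.28, 71.62),
    "EtherG4": (3.58, 3.45, 3.31),
    "EtherG5": (6.54, 5.92, 5.56),
    "EtherG6": (801.93, 202.49, 165.96),
}

# Speedup of each attributed variant relative to the plain TSGN.
speedups = {
    dataset: {
        "Directed-TSGN": tsgn / directed,
        "Temporal-TSGN": tsgn / temporal,
    }
    for dataset, (tsgn, directed, temporal) in construction_time.items()
}

for dataset, ratio in speedups.items():
    print(dataset, {name: round(value, 2) for name, value in ratio.items()})
```

On EtherG3, for instance, Directed-TSGN is constructed roughly 6.5 times faster than TSGN, whereas on EtherG1 the ratio stays close to 1.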

Fig. 8 panels: (a) TN-star; (b) TN-net; (c) TSGN; (d) Directed-TSGN; (e) Temporal-TSGN; (f) Multiple-TSGN.

IV-D4 Multiple-TSGN performs well on the multi-edge networks

Temporal attributes can be adopted to identify and distinguish the multiple same-directed transaction edges between a pair of nodes. Therefore, Multiple-TSGN can handle more complex multi-edge transaction networks by extending the scope of application of Temporal-TSGN. In this section, the performance of our TSGN models is further evaluated on multi-edge transaction networks, the statistics of which are shown in the Multiedge collection of TABLE II. As shown in TABLE VI, the experiments on the six Ethereum datasets give competitive results. The classification performance of Graph2Vec, DeepKernel, and U2GNN on the multi-edge datasets does not differ much from that shown in TABLE IV. Interestingly, Multiple-TSGN demonstrates its capacity to integrate the information of multi-edge networks under the Handcrafted method, achieving a good performance of 93.24% on average, and Diffpool still preserves relatively high detection results of 95.86% and 96.71% on EtherG3 and EtherG4, respectively. Besides, compared with the TN and TSGNs models, Multiple-TSGN with Handcrafted generates a relative improvement of 16.03% and 8.57% on average, respectively, in terms of F-Score. On the other hand, Multiple-TSGN increases the average performance of the original TN model for every feature extraction method, with %Increase (TN) ranging from 2.13% to 16.03%. It follows that our Multiple-TSGN model performs well when dealing with large-scale transaction networks with complex structural attributes. Overall, applying TSGN to multi-edge cases can further improve the representation capability of the classification methods.

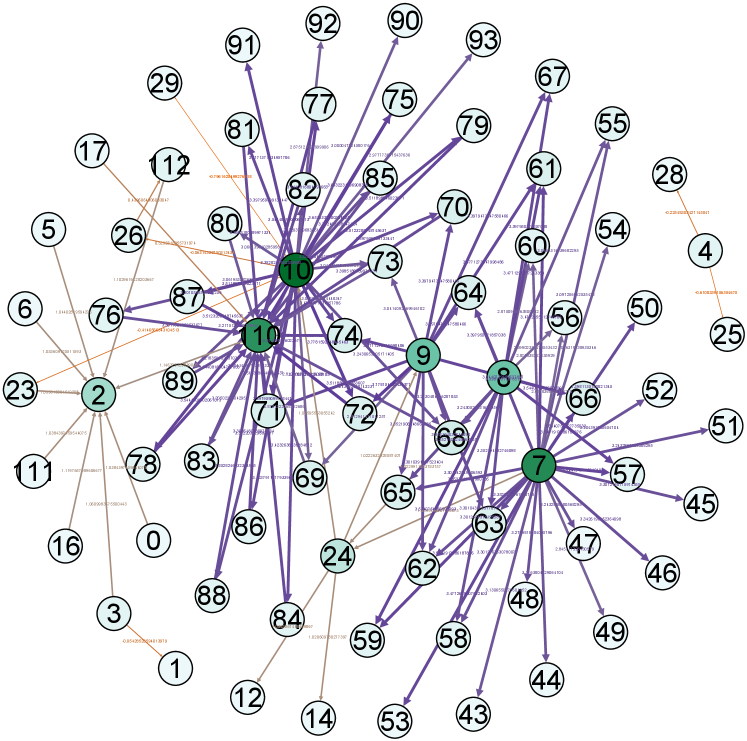

IV-E Visualization

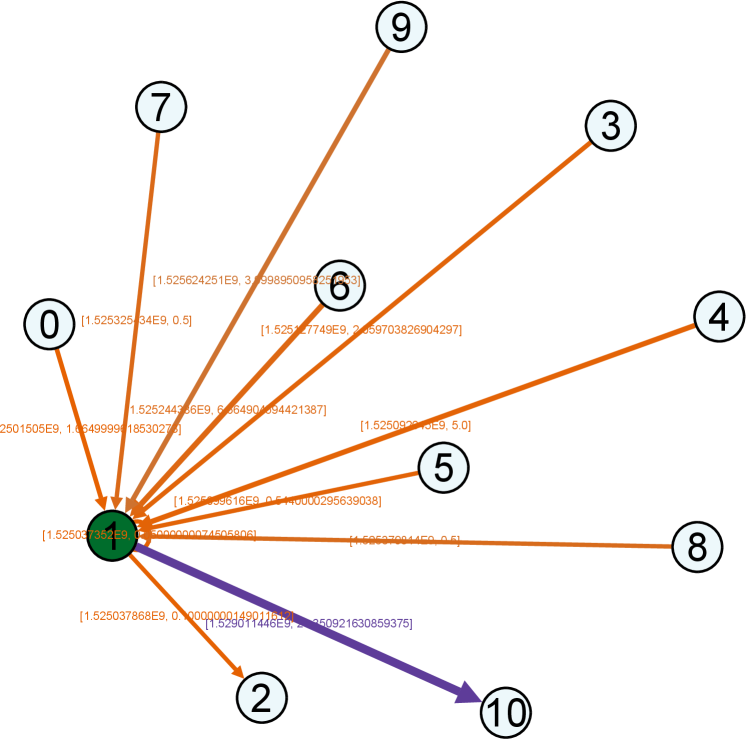

Moreover, to better demonstrate the network structures, we visualize a real example network and its TSGNs in Fig. 8. Fig. 8 (a)-(b) show the transaction networks of the two types, followed by TSGN, Directed-TSGN, and Temporal-TSGN in (c)-(e), which are mapped from the net-form transaction network in (b). Multiple-TSGN in (f) is constructed from the multi-edge net-form transaction network. In these networks, a darker node has a larger degree. The arrow on an edge represents the direction of fund flow between accounts, and a thicker edge with a higher-level color indicates a higher transaction amount (orange, gray, and purple are adopted as the color levels in turn). It can be seen that Directed-TSGN indeed reduces the scale of TSGN and yields a clear structure of transfer flows, and that Temporal-TSGN further filters the network structure of Directed-TSGN by the temporal attribute of transactions, so that some edges disappear, such as (9,14), (16,14), (14,1), and so forth. Multiple-TSGN is much more complex than the other TSGNs and provides more interaction information from the different accounts around the target node, which benefits phishing detection.

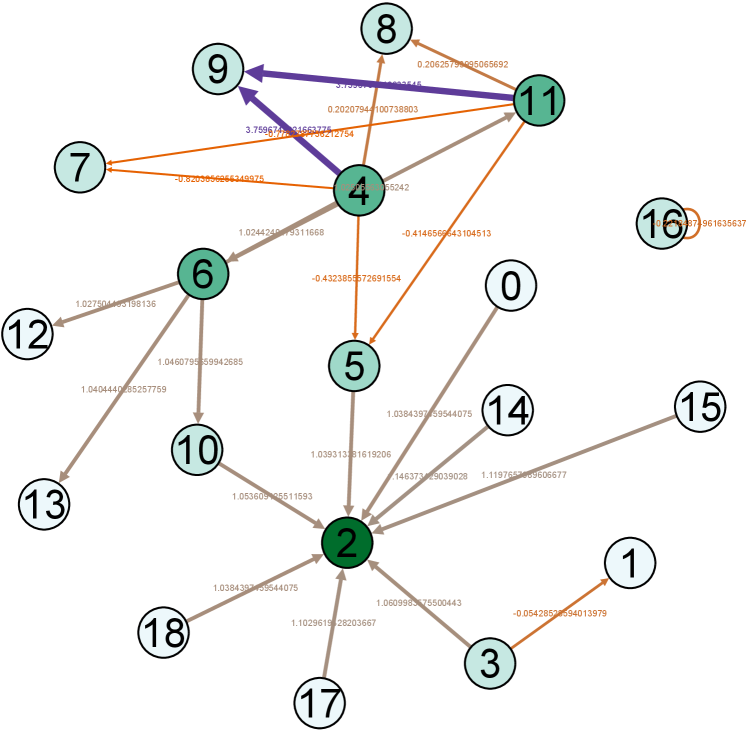

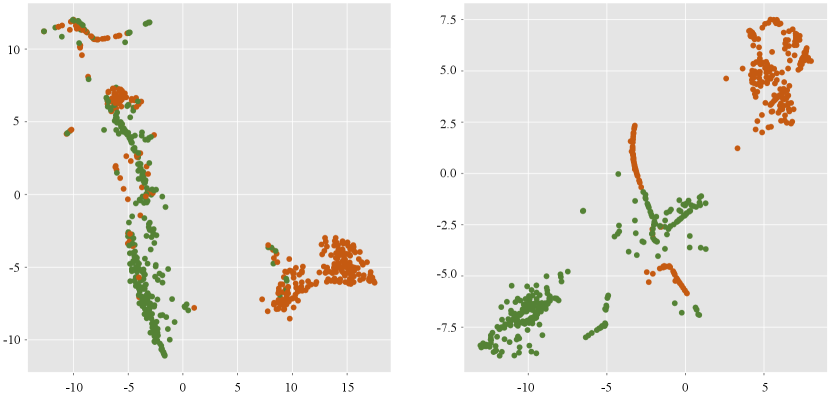

As a simple case study, we use t-SNE to visualize the classification results on the EtherG1 dataset based on the Diffpool method to verify the effectiveness of our TSGN model. Here, we choose Directed-TSGN for the visualization since it enhances the detection performance of Diffpool the most, with a %Increase of 14.58%. As shown in Fig. 9, the structural features are projected to different locations by t-SNE. The left panel shows the original detection result using Diffpool with the TN model, while the right panel depicts the distribution of the same dataset using Diffpool with Directed-TSGN. One can see that the transaction networks in the EtherG1 dataset can already be distinguished by the original features of Diffpool, but the separation between networks becomes more explicit after the TSGN mapping with direction, demonstrating the effectiveness of our Directed-TSGN model. It also suggests that our TSGN model provides more discriminative features than the original TN model.

V Conclusion

In this paper, we propose a novel transaction subgraph network (TSGN) model for phishing detection. By introducing different mapping mechanisms into the transaction networks, we build the TSGN, Directed-TSGN, and Temporal-TSGN models to enhance the classification algorithms. Compared with the baselines based only on the TN, our TSGNs provide more potential information to benefit phishing detection. Compared with TSGN, Directed-TSGN and Temporal-TSGN have a controllable scale and a much lower time cost, allowing the network feature extraction methods to learn the network structure with higher efficiency. Experimental results show that, combined with network representation algorithms, these TSGN models can capture more features to enhance the classification algorithm and improve the accuracy of phishing node identification in Ethereum. In particular, when integrated with appropriate feature representation methods, such as Handcrafted and Diffpool, TSGNs can achieve the best results on all datasets.

In the future, we will extend and improve our model to suit more blockchain data mining tasks, such as Ponzi scheme detection, transaction tracking, etc.

Acknowledgment

This work was partially supported by the Key R&D Program of Zhejiang under Grant No. 2022C01018, by the National Natural Science Foundation of China under Grant No. U21B2001 and 61973273, by the Zhejiang Provincial Natural Science Foundation of China under Grant No. LR19F030001, and by the National Key R&D Program of China under Grant No. 2020YFB1006104.

References

- [1] S. Nakamoto, “Bitcoin: A peer-to-peer electronic cash system,” Decentralized Business Review, p. 21260, 2008.

- [2] G. Wood, “Ethereum: A secure decentralised generalised transaction ledger,” Ethereum project yellow paper, vol. 151, no. 2014, pp. 1–32, 2014.

- [3] W. Chen, X. Guo, Z. Chen, Z. Zheng, and Y. Lu, “Phishing scam detection on ethereum: Towards financial security for blockchain ecosystem,” in IJCAI, 2020, pp. 4506–4512.

- [4] M. Khonji, Y. Iraqi, and A. Jones, “Phishing detection: a literature survey,” IEEE Communications Surveys & Tutorials, vol. 15, no. 4, pp. 2091–2121, 2013.

- [5] Y. Huang, H. Wang, L. Wu, G. Tyson, X. Luo, R. Zhang, X. Liu, G. Huang, and X. Jiang, “Understanding (mis) behavior on the eosio blockchain,” Proceedings of the ACM on Measurement and Analysis of Computing Systems, vol. 4, no. 2, pp. 1–28, 2020.

- [6] L. Chen, J. Peng, Y. Liu, J. Li, F. Xie, and Z. Zheng, “Phishing scams detection in ethereum transaction network,” ACM Transactions on Internet Technology (TOIT), vol. 21, no. 1, pp. 1–16, 2020.

- [7] F. Poursafaei, G. B. Hamad, and Z. Zilic, “Detecting malicious ethereum entities via application of machine learning classification,” in 2020 2nd Conference on Blockchain Research & Applications for Innovative Networks and Services (BRAINS). IEEE, 2020, pp. 120–127.

- [8] H. Hu and Y. Xu, “Scsguard: Deep scam detection for ethereum smart contracts,” arXiv preprint arXiv:2105.10426, 2021.

- [9] J. Wu, Q. Yuan, D. Lin, W. You, W. Chen, C. Chen, and Z. Zheng, “Who are the phishers? phishing scam detection on ethereum via network embedding,” IEEE Transactions on Systems, Man, and Cybernetics: Systems, 2020.

- [10] I. Alarab, S. Prakoonwit, and M. I. Nacer, “Competence of graph convolutional networks for anti-money laundering in bitcoin blockchain,” in Proceedings of the 2020 5th International Conference on Machine Learning Technologies, 2020, pp. 23–27.

- [11] Q. Xuan, J. Wang, M. Zhao, J. Yuan, C. Fu, Z. Ruan, and G. Chen, “Subgraph networks with application to structural feature space expansion,” IEEE Transactions on Knowledge and Data Engineering, vol. 33, no. 6, pp. 2776–2789, 2021.

- [12] S. Li, F. Xu, R. Wang, and S. Zhong, “Self-supervised incremental deep graph learning for ethereum phishing scam detection,” arXiv preprint arXiv:2106.10176, 2021.

- [13] Z. Yuan, Q. Yuan, and J. Wu, “Phishing detection on ethereum via learning representation of transaction subgraphs,” in International Conference on Blockchain and Trustworthy Systems. Springer, 2020, pp. 178–191.

- [14] X. Liu, Z. Tang, P. Li, S. Guo, X. Fan, and J. Zhang, “A graph learning based approach for identity inference in dapp platform blockchain,” IEEE Transactions on Emerging Topics in Computing, 2020.

- [15] Y. Zhuang, Z. Liu, P. Qian, Q. Liu, X. Wang, and Q. He, “Smart contract vulnerability detection using graph neural networks,” in Proceedings of the 2020 29th International Joint Conference on Artificial Intelligence, 2020, pp. 3283–3290.

- [16] D. D. F. Maesa, A. Marino, and L. Ricci, “Detecting artificial behaviours in the bitcoin users graph,” Online Social Networks and Media, vol. 3, pp. 63–74, 2017.

- [17] N. C. Abay, C. G. Akcora, Y. R. Gel, M. Kantarcioglu, U. D. Islambekov, Y. Tian, and B. Thuraisingham, “Chainnet: Learning on blockchain graphs with topological features,” in 2019 IEEE international conference on data mining (ICDM). IEEE, 2019, pp. 946–951.

- [18] D. Lin, J. Wu, Q. Xuan, and K. T. Chi, “Ethereum transaction tracking: Inferring evolution of transaction networks via link prediction,” Physica A: Statistical Mechanics and its Applications, p. 127504, 2022.

- [19] S. Li, G. Gou, C. Liu, C. Hou, Z. Li, and G. Xiong, “Ttagn: Temporal transaction aggregation graph network for ethereum phishing scams detection,” in Proceedings of the ACM Web Conference 2022, 2022, pp. 661–669.

- [20] G. Li, M. Semerci, B. Yener, and M. J. Zaki, “Graph classification via topological and label attributes,” in Proceedings of the 9th international workshop on mining and learning with graphs (MLG), San Diego, USA, vol. 2, 2011.

- [21] J. Wang, P. Chen, B. Ma, J. Zhou, Z. Ruan, G. Chen, and Q. Xuan, “Sampling subgraph network with application to graph classification,” IEEE Transactions on Network Science and Engineering, vol. 8, no. 4, pp. 3478–3490, 2021.

- [22] C. Fu, M. Zhao, L. Fan, X. Chen, J. Chen, Z. Wu, Y. Xia, and Q. Xuan, “Link weight prediction using supervised learning methods and its application to yelp layered network,” IEEE Transactions on Knowledge and Data Engineering, vol. 30, no. 8, pp. 1507–1518, 2018.

- [23] A. Narayanan, M. Chandramohan, L. Chen, Y. Liu, and S. Saminathan, “subgraph2vec: Learning distributed representations of rooted sub-graphs from large graphs,” in International Workshop on Mining and Learning with Graphs., 2016.

- [24] P. Yanardag and S. Vishwanathan, “Deep graph kernels,” in Proceedings of the 21th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. ACM, 2015, pp. 1365–1374.

- [25] R. Ying, J. You, C. Morris, X. Ren, W. L. Hamilton, and J. Leskovec, “Hierarchical graph representation learning with differentiable pooling,” in Proceedings of the 32nd International Conference on Neural Information Processing Systems, 2018, pp. 4805–4815.

- [26] D. Q. Nguyen, T. D. Nguyen, and D. Phung, “Universal graph transformer self-attention networks,” in Companion Proceedings of the Web Conference 2022 (WWW ’22 Companion), 2022.