TURF: A Two-factor, Universal, Robust, Fast

Distribution Learning Algorithm

Abstract

Approximating distributions from their samples is a canonical statistical-learning problem. One of its most powerful and successful modalities approximates every distribution to an distance essentially at most a constant times larger than its closest -piece degree- polynomial, where and . Letting denote the smallest such factor, clearly , and it can be shown that for all other and . Yet current computationally efficient algorithms show only and the bound rises quickly to for . We derive a near-linear-time and essentially sample-optimal estimator that establishes for all . Additionally, for many practical distributions, the lowest approximation distance is achieved by polynomials with vastly varying number of pieces. We provide a method that estimates this number near-optimally, hence helps approach the best possible approximation. Experiments combining the two techniques confirm improved performance over existing methodologies.

1 Introduction

Learning distributions from samples is one of the oldest (Pearson, 1895), most natural (Silverman, 1986), and important statistical-learning paradigms (Givens & Hoeting, 2012). Its numerous applications include epidemiology (Bithell, 1990), economics (Zambom & Ronaldo, 2013), anomaly detection (Pimentel et al., 2014), language based prediction (Gerber, 2014), GANs (Goodfellow et al., 2014), and many more, as outlined in several books and surveys e.g., (Tukey, 1977; Scott, 2012; Diakonikolas, 2016).

Consider estimating an unknown, real, discrete, continuous, or mixed distribution from independent samples it generates. A distribution estimator maps to an approximating distribution meant to approximate . We evaluate its performance via the expected distance .

The distance between two functions and , , is one of density estimation’s most common distance measures (Devroye & Lugosi, 2012). Among its several desirable properties, its value remains unchanged under linear transformation of the underlying domain, and the absolute difference between the expected values of any bounded function of the observations under and is at most a constant factor larger than , as for any bounded , . Further, a small distance between two distributions implies a small difference between any given Lipschitz functions of the two distributions. Therefore, learning in distance implies a bound on the error of the plug-in estimator for Lipschitz functions of the underlying distribution (Hao & Orlitsky, 2019).

Ideally, we would like to learn any distribution to a small distance. However, arbitrary distributions cannot be learned in distance with any number of samples (Devroye & Gyorfi, 1990), as the following example shows.

Example 1.

Let be the continuous uniform distribution over . For any number of samples, construct a discrete distribution by assigning probability to each of random points in . By the birthday paradox, samples from will be all distinct with high probability and follow the same uniform distribution as samples from , and hence and will be indistinguishable. As , the triangle inequality implies that for any estimator , .

A common remedy to this shortcoming assumes that the distribution belongs to a structured approximation class , for example unimodal (Birgé, 1987), log-concave (Devroye & Lugosi, 2012) and Gaussian (Acharya et al., 2014; Ashtiani et al., 2018) distributions.

The min-max learning rate of is the lowest worst-case expected distance achieved by any estimator,

The study of for various classes such as Gaussians, exponentials, and discrete distributions has been the focus of many works e.g., (Vapnik, 1999; Kamath et al., 2015; Han et al., 2015; Cohen et al., 2020).

Considering all pairs of distributions in , (Yatracos, 1985) defined a collection of subsets with VC dimension (Vapnik, 1999) , and applying the minimum distance estimation method (Wolfowitz, 1957), showed that

However, real underlying distributions are unlikely to fall exactly in any predetermined class. Hence (Yatracos, 1985) also considered approximating nearly as well as its best approximation in . Letting

be lowest distance between and any distribution in , he designed an estimator , possibly outside , whose distance from is close to . For all distributions ,

For many natural classes, . Hence, an estimator is called a -factor approximation for if for any distribution ,

and may be thought of as the error’s bias and variance components.

A small is desirable as it upper bounds the asymptotic error when for , hence providing robustness guarantees when the underlying distribution does not quite follow the assumed model. It ensures robust estimation also under the Huber contamination model (Huber, 1992) where with probability , is perturbed by an arbitrary noise, and the error incurred by a -factor approximation is upper bounded as .

One of the more important distribution classes is the collection of -piecewise degree- polynomials. For simplicity, we assume that all polynomials in are defined over a known interval , hence any consists of degree- polynomials , each defined over one part in a partition of .

The significance of stems partly from the fact that it approximates numerous important distributions even with small and . For example, for every distribution in the class of log-concave distributions, (Chan et al., 2014). Also, , e.g., (Acharya et al., 2017).

It follows that if is a -factor estimator for , then for all , Choosing to equate the bias and variance terms, achieves an expected error , which is the optimal min-max learning rate of (Chan et al., 2014).

Lemma 17 in Appendix A.2 shows a stronger result. If is a -factor approximation for for some and and achieves the min-max rate of a distribution class , then is also a -factor approximation for . In addition to the log-concave class, this result also holds for Gaussian, and unimodal distributions, and for their mixtures.

2 Contributions

Lower Bounds: As noted above, it is beneficial to find the smallest approximation factor for . The following simple example shows that if we allow sub-distributions, even simple collections may have an approximation factor of at least 2.

Example 2.

Let class consist of the uniform distribution and the subdistribution , over . Consider any estimator . Let when as . Since , for to achieve finite approximation factor, we must have . Now consider the discrete distribution in Example 1. Since its samples are indistinguishable from those of , also for . But then , so has approximation factor .

Our definition however considers only strict distributions, complicating lower bound proofs. Let be the lowest approximation factor for . consists of a single distribution over a known interval, hence . (Chan et al., 2014) showed that for all and , . The following lemma, proved in Appendix A.1, shows that for all , and as we shall see later, establishes a precise lower bound for all and .

Lemma 3.

For all except , .

Upper Bounds: As discussed earlier, is a -factor approximation for . However its runtime is . For many applications, or may be large, and even increase with , for example in learning unimodal distributions, we select (Birgé, 1987), resulting in exponential time complexity. (Chan et al., 2014) improved the runtime to polynomial in independent of , and (Acharya et al., 2017) further reduced it to near-linear . (Hao et al., 2020) derived a time algorithm, SURF, achieving , and for . They also showed that this estimator can be parallelized to run in time . (Bousquet et al., 2019, 2021)’s estimator for the improper learning setting (wherein can be any distribution as we consider in this paper) achieves a bias nearly within a factor of , but the variance term exceeds , hence does not satisfy the constant factor approximation definition. Moreover, like Yatracos, they suffer a prohibitive runtime, that could be exponential for some applications.

Our main contribution is an estimator, , a two factor, universal, robust and fast estimator that achieves an approximation factor that is arbitrarily close to the optimal in near-linear time. is also simple to implement as a step on top of the existing merge routine in (Acharya et al., 2017). The construction of our estimate relies on upper bounding the maximum absolute value of polynomials (see Lemma 7) based on their norm, similar to the Bernstein (Rahman et al., 2002) and Markov Brothers’ (Achieser, 1992) inequalities. We show for any and ,

where indicates the respective norms over any interval . This point-wise inequality reveals a novel connection between the and norms of a polynomial, which may be interesting in its own right.

Practical Estimation: For many practical distributions, the optimal parameters values of in approximating with many be unknown. While for common structured classes such as Gaussian, log-concave and unimodal, and their mixtures, it suffices to choose to be any small value, but the optimal choice of can vary significantly. For example, for any constant , for a unimodal , the optimal pieces whereas for a smoother log-concave , significantly lower errors are obtained with a much smaller . Given a family of -factor approximate estimators for , , a suitable objective is to select the number of pieces, to achieve for any given degree-,

| (1) |

Simple modifications to existing cross-validation approaches (Yatracos, 1985) partly achieve Equation (1) with the larger along with an additive . Via a novel cross-validation technique, we obtain a that satisfies Equation (1) with the factor arbitrarily close to the optimal with an additive . In fact, this technique removes the need to know parameters beforehand in other related settings as well, such as the corruption level in robust estimation that all existing works assume is known. We elaborate this in (Jain et al., 2022).

Our experiments reflect the improved errors of over existing algorithms in regimes where the bias dominates.

3 Setup

3.1 Notation and Definitions

Henceforth, for brevity, we skip the subscript when referring to estimators. Given samples , the empirical distribution is defined via the dirac delta function as

allotting a mass at each sample location.

Note that if an estimator is partly negative but integrates to , then , satisfies for any distribution , e.g., Devroye & Lugosi (2012). This allows us to estimate using any real normalized function as our estimator.

For any interval and integrable functions , let denote the distance evaluated over . Similarly, for any class of real functions, let denote the least distance between and members of over .

The distance between and is closely related to their or statistical distance as

the greatest absolute difference in areas of and over all subsets of . As we argued in the introduction, a direct approach to estimate in all possible subsets of is not feasible with finitely many samples. Instead, for a given , the distance (Devroye & Lugosi, 2012) considers the largest difference between and on real subsets with at most intervals. As we show in Lemma 4, it is possible to learn any in distance simply by using the empirical distribution .

We formally define the distance as follows. For any given and interval , let be the set of all unions of at most intervals contained in . Define the distance between as

where denotes the area of the function on the set . For example, if and , the distance . Suppose is the support of . Use this to define .

For two distributions and , the distance is at-most half the distance and with equality achieved as since approximates all subsets of for large ,

The reverse is not true, the distance between two functions may be made arbitrarily small even for a constant distance. For example the distance between any distribution and its empirical distribution is for any . However, the distance between and goes to zero. The next lemma, which is a consequence of VC inequality (Devroye & Lugosi, 2012), gives the rate at which goes to zero.

Lemma 4.

(Devroye & Lugosi, 2012) Given according to any real distribution ,

Note that if is a discrete distribution with support size , Lemma 4 implies , matching the rate of learning discrete distributions. Since arbitrary continuous distributions can be thought of as infinite dimensional discrete distributions where , the lemma does not bound this error.

3.2 Preliminaries

The following -distance properties are helpful.

Property 5.

Given the partition of any interval integrable functions , and integers ,

Property 5 follows since the interval choices with and intervals respectively that achieve the suprema of and are included in the interval partition considered in the RHS.

Property 6.

Given any interval , integrable functions , and integers ,

4 A 2-Factor Estimator for

Our objective is to obtain a -factor approximation for the piecewise class, . To achieve this, we first consider the single-piece class that for simplicity we denote by , and then use the resulting estimator as a sub-routine for the multi-piece class.

4.1 Intuition and Results

It is easy to show from the triangle inequality that if an estimator is as close to all degree- polynomials as their distances to , then the estimator achieves an distance to that is nearly twice that of the best degree- polynomial.

Let denote the length of an interval . The histogram of an integrable function over is , where we assign zero to division by zero.

Let be a polynomial estimator of over , and let be the function obtained by adding to a constant to match its mass to over . For any ,

where follows by the triangle inequality, and follows since has the same mass as by construction, it implies .

Since , if is a small value , approximates nearly as well as any degree- polynomial. Let

be the difference between ’s largest and smallest values. Note that has zero mean over , hence must be zero on at least one point in , implying

| (2) |

Thus we would like to be small , but which may not hold for the given . By additivity, we may partition and perform this adjustment over each sub-interval. A partition of is a collection of disjoint intervals whose union is . Let the histogram of over be

| (3) |

We will construct a partition for which is small . Further, as we don’t know , we will use the empirical distribution that approximates the mass of over each interval of . By Lemma 4, for any with intervals, the expected extra error . If we want this error to be within a constant factor from the min-max rate of , we need to take .

4.2 Polynomial Histogram Approximation

We would first like to bound for any in terms of its norm. From the Markov Brothers’ inequality (Achieser, 1992), for any ,

and is achieved by the Chebyshev polynomial of degree-. Instead, the next lemma shows that the bound can be improved for the interior of . Its proof in Appendix B.1 carefully applies Markov Brothers’ inequality over select sub-intervals of based on the Bernstein’s inequality. For simplicity, consider .

Lemma 7.

For any and ,

We use the lemma and Equation 2 to construct a partition of such that , is bounded by a small value. Note that the lemma’s bound is weaker when is close to the boundary of , hence the parts of decrease roughly geometrically towards the boundary, ensuring is small over each. The geometric partition ensures that the number of intervals is still upper bounded by as we show in Lemma 8.

Consider the positive half of . Given , let . For define the intervals , that together span , and let complete the partition of . Note that . For each further partition into intervals of equal width, and denote this partition by . Clearly partitions .

Define the mirror-image partition of , where, for example, we mirror the interval in to . The following lemma upper bounds the number of intervals in the combination of and and is proven in Appendix B.2.

Lemma 8.

For any degree and , the number of intervals in is at most .

The lemma ensures that we get the desired partition, , with intervals by setting

| (4) |

For any interval , we obtain by a linear translation of . For example, translates to .

Recall that denotes the histogram of on . The following lemma, proven in Appendix B.3 using Equations (2), (4), and Lemma 7, shows that the distance of any degree- polynomial to its histogram on is a factor times than the norm of the polynomial.

Lemma 9.

Given an interval , for some universal constant , for all , and integer ,

We obtain our split estimator for a given polynomial estimator as

that over each subinterval adds to a constant so that its mass over equals that of . Since has intervals, it follows that .

The next lemma (essentially) upper bounds the distance of from any that is close to in distance by the distance of from any function that is close to in distance.

Lemma 10.

For any interval , functions and polynomials , , and , satisfies

where is the universal constant in Lemma 9.

Proof Consider an interval and let respectively denote the histograms of respectively over .

where and follow from the triangle inequality, follows since has the same mass as by construction, it implies , and follows because since and differ by a constant in each .

The proof is complete by summing over using the fact that has at most intervals, and since over , from Lemma 9, the sum

4.3 Applying the Estimator

In this more technical section, we show how to use existing estimators in place of to achieve Theorem 12. The next lemma follows from a straightforward application of triangle inequality to Lemma 10 as shown in Appendix B.4. It shows that given an estimate whose distance to is a constant multiple of plus , depending on the value of , has nearly the optimal approximation factor of at the expense of the larger .

Lemma 11.

Given an interval , , such that for some constants ,

and the parameter , the estimator satisfies

where and is the constant from Lemma 9.

Prior works (Acharya et al., 2017; Hao et al., 2020) derive a polynomial estimator that achieves a constant factor approximation for . We may thus use them as in the above lemma. In particular, the estimator in (Acharya et al., 2017) achieves and and in (Hao et al., 2020) achieves a and , where increases with the degree (e.g., ). Define

| (5) |

and for any , let

| (6) |

where is the constant from Lemma 10. We obtain the following theorem for by using with in Lemma 11, and then applying Lemma 4, all over , i.e. the interval between the least and the largest sample.

Theorem 12.

Given , for any , the estimator for , achieves

5 A 2-Factor Estimator for

In the previous section, we described an estimator that approximates a distribution to a distance only slightly larger than twice . We now extend this result to .

Consider a that achieves . If the intervals corresponding to the different polynomial pieces of are known, we may apply the routine in Section 4 to each interval and combine the estimate to obtain the -factor approximation for .

However, as these intervals are unknown, we instead use the partition returned by the routine in (Acharya et al., 2017). returns a partition with intervals where the parameter by choice. Among these intervals, is not a degree- polynomial in at most intervals. Let be an interval in this partition where has more than one piece. The routine has the property that there are at-least other intervals in the partition in which is a single-piece polynomial with a worse distance to . That is, for any interval in the interval collection,

This is used to bound the distance in these intervals.

Our main routine consists of simply applying the transformation discussed in Section 4 to the partition returned by the routine in (Acharya et al., 2017). Given samples , the number of pieces-, degree-, for any , we first run the routine with input , , and parameters ,

| (7) |

where is as defined in Equation (6), and . returns a partition of with intervals and a degree-, -piecewise polynomial defined over the partition. For any interval , let denote the degree- estimate output by over this interval. We obtain our output estimate by applying the routine in Section 4 to for each with (ref. Equation (6)). This is summarized below in Algorithm 1.

Theorem 13 shows that is a min-max -factor approximation for . We have a term in the ‘variance’ term in Theorem 13 that reflects the pieces in the output estimate. A small corresponds to a low-bias, high-variance estimator with many pieces, and vice-versa. Note that the exponent here is larger than the corresponding in the result for in Section 4 (Theorem 12). The increased exponent over is due to the unknown locations of the polynomial pieces of . Obtaining the exact exponent for -factor approximation for various classes may be an interesting question but beyond the scope of this paper. Let be the matrix multiplication constant. As our transformation of takes time, the overall time complexity is the same as ’s near-linear .

Theorem 13.

Given , an integer number of pieces and degree , the parameter , is returned by in time such that

6 Optimal Parameter Selection

Like many other statistical learning problems, learning distributions exhibits a fundamental trade-off between bias and variance. In Equation (1) increasing the parameters and enlarges the polynomial class , hence decreases the bias term while increasing the variance term . As the number of samples increases, asymptotically, it is always better to opt for larger and . Yet for any given , some parameters and yield the smallest error. We consider the parameters minimizing the upper bound in Theorem 13.

6.1 Context and Results

For several popular structured distributions such as unimodal, log-concave, Gaussian, and their mixtures, low-degree polynomials, e.g. , are essentially optimal (Birgé, 1987; Chan et al., 2014; Hao et al., 2020). Yet for the same classes, the range of the optimal is large, between and . Therefore, for a given , we seek the minimizing the error upper bound in Equation (1).

In the next subsection, we describe a parameter selection algorithm that improves this result for the estimators we considered in the previous section. Following nearly identical steps as in the derivation of Theorem 13 from Lemma 14, and using the probabilistic version of the VC Lemma 4 (see (Devroye & Lugosi, 2012)), it may be shown that with high probability is a -factor approximation for . Namely, for any ,

| (8) |

where is a function of the chosen . We use the estimates to find an estimate such that has an error comparable to the -factor approximation for with the best .

Theorem 15.

Given , , , -factor estimates for in high probability (see Equation (8)), , for any , we find the estimate such that w.p. ,

The proof, provided in Appendix D.1, exploits the fact that the bias term of is at most , which decreases with , and the variance term upper bounded by , increasing with .

6.2 Construction

We use the following algorithm derived in (Jain et al., 2022). Consider the set , an unknown target , an unknown non-increasing sequence , and a known non-decreasing sequence such that .

First consider selecting for a given , the point among that is closer to . Suppose for some constant , . Then from the triangle inequality, . On the other hand if , since (as ), .

Therefore if we set to be sufficiently large and select if , and otherwise set , we roughly obtain . We now generalize this approach to selecting between all points in . Let be the smallest index in such that , . Lemma 16 shows the favorable properties of , for example, that for a sufficiently large , is comparable to when . The proof may be found in Appendix D.2.

Lemma 16.

Given a set in a metric space , a sequence , and , let be the smallest index such that for all , . Then for all sequences such that for all , ,

The set of real integrable functions with distance forms a metric space. For simplicity for the given , , and , denote . Assume is a power of and let . Suppose for constants , and a chosen , is ’s variance term. For any chosen , we obtain by applying the above method with and the s corresponding to as . That is, is the smallest such that , (where we select ). In Section 7, we experimentally evaluate the estimator and the cross-validation technique.

7 Experiments

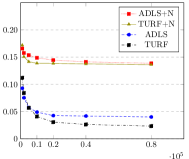

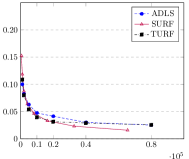

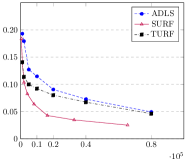

Direct comparison of and for a given is not straightforward as outputs polynomials consisting of more pieces. To compare the algorithms more equitably, we apply the cross-validation technique in Section 6 to select the best for each. The cross validation parameter is chosen to reflect the actual number of pieces output by and . Note that while (Hao et al., 2020) is another piecewise polynomial based estimation method, it has an implicit method to cross-validate , unlike and . As comparisons against may only reflect the relative strengths of the cross validation methods and not that of the underlying estimation procedure, we defer them to Appendix 5. All experiments compare the error, run for between 1,000 and 80,000, and averaged over 50 runs. For we use the code provided in (Acharya et al., 2017), and for we use the algorithm in Section 5.

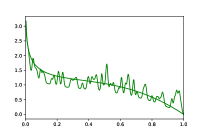

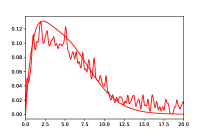

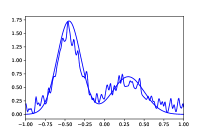

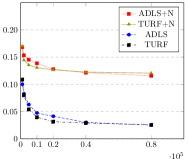

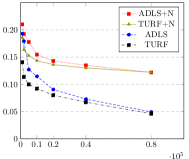

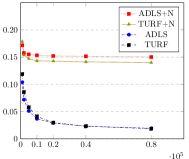

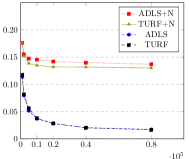

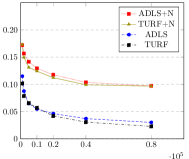

The experiments consider the structured distributions addressed in (Acharya et al., 2017), namely mixtures of Beta: , Gamma: , and Gaussians: .65(-.45,)+.35(.3,) as shown in Figure 1. Figure 2 considers approximation relative to . The blue-dashed and the black-dot-dash plots show that modestly outperforms . It is especially significant for the Beta distribution as has a large second derivative near , and approximating it may require many degree-1 pieces localized to that region. For this lower width region, the distance may be too small to warrant many pieces in , unlike in that forms intervals guided by shape constraints e.g., based on Lemma 7.

We perturb these distribution mixtures to increase their bias. For a given , select by independently choosing uniformly from the effective support of (we remove tail mass on either side). At each of these locations, apply a Gaussian noise of magnitude with standard deviation , for some constant that is chosen to scale with the effective support width of . That is,

We choose and for the Beta, Gamma and Gaussian mixtures respectively, to yield the distributions shown in Figure 1. The red-dotted and olive-solid plots in Figure 2 compares and on these distributions. While the overall errors are larger due to the added noise, outperforms on nearly all distributions. A consistent trend across our experiments is that for large , the performance gap between and decreases. This may be explained by the fact that as increases, the value of output by the cross-validation method also increases, reducing the bias under both and . However, the reduction in ’s bias is more significant due to its larger approximation factor compared to , resulting in the smaller gap.

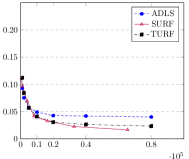

Figure 3 repeats the same experiments for . Increasing the degree leads to lower errors on both and in the non-noisy case. However, the larger bias in the noisy case reveals the improved performance of .

References

- Acharya et al. (2014) Acharya, J., Jafarpour, A., Orlitsky, A., and Suresh, A. T. Near-optimal-sample estimators for spherical gaussian mixtures. arXiv preprint arXiv:1402.4746, 2014.

- Acharya et al. (2017) Acharya, J., Diakonikolas, I., Li, J., and Schmidt, L. Sample-optimal density estimation in nearly-linear time. In Proceedings of the Twenty-Eighth Annual ACM-SIAM Symposium on Discrete Algorithms, pp. 1278–1289. SIAM, 2017.

- Achieser (1992) Achieser, N. Theory of Approximation. Dover books on advanced mathematics. Dover Publications, 1992. ISBN 9780486671291.

- Ashtiani et al. (2018) Ashtiani, H., Ben-David, S., Harvey, N. J., Liaw, C., Mehrabian, A., and Plan, Y. Nearly tight sample complexity bounds for learning mixtures of gaussians via sample compression schemes. In Proceedings of the 32nd International Conference on Neural Information Processing Systems, pp. 3416–3425, 2018.

- Birgé (1987) Birgé, L. Estimating a density under order restrictions: Nonasymptotic minimax risk. The Annals of Statistics, pp. 995–1012, 1987.

- Bithell (1990) Bithell, J. F. An application of density estimation to geographical epidemiology. Statistics in medicine, 9(6):691–701, 1990.

- Bousquet et al. (2019) Bousquet, O., Kane, D., and Moran, S. The optimal approximation factor in density estimation. arXiv preprint arXiv:1902.05876, 2019.

- Bousquet et al. (2021) Bousquet, O., Braverman, M., Efremenko, K., Kol, G., and Moran, S. Statistically near-optimal hypothesis selection. arXiv preprint arXiv:2108.07880, 2021.

- Chan et al. (2014) Chan, S.-O., Diakonikolas, I., Servedio, R. A., and Sun, X. Efficient density estimation via piecewise polynomial approximation. In Proceedings of the forty-sixth annual ACM symposium on Theory of computing, pp. 604–613. ACM, 2014.

- Cohen et al. (2020) Cohen, D., Kontorovich, A., and Wolfer, G. Learning discrete distributions with infinite support. Advances in Neural Information Processing Systems, 33:3942–3951, 2020.

- Devroye & Gyorfi (1990) Devroye, L. and Gyorfi, L. No empirical probability measure can converge in the total variation sense for all distributions. The Annals of Statistics, pp. 1496–1499, 1990.

- Devroye & Lugosi (2012) Devroye, L. and Lugosi, G. Combinatorial methods in density estimation. Springer Science & Business Media, 2012.

- Diakonikolas (2016) Diakonikolas, I. Learning structured distributions. Handbook of Big Data, pp. 267, 2016.

- Gerber (2014) Gerber, M. S. Predicting crime using twitter and kernel density estimation. Decision Support Systems, 61:115–125, 2014.

- Givens & Hoeting (2012) Givens, G. H. and Hoeting, J. A. Computational statistics, volume 703. John Wiley & Sons, 2012.

- Goodfellow et al. (2014) Goodfellow, I., Pouget-Abadie, J., Mirza, M., Xu, B., Warde-Farley, D., Ozair, S., Courville, A., and Bengio, Y. Generative adversarial nets. Advances in neural information processing systems, 27, 2014.

- Han et al. (2015) Han, Y., Jiao, J., and Weissman, T. Minimax estimation of discrete distributions under loss. IEEE Transactions on Information Theory, 61(11):6343–6354, 2015.

- Hao & Orlitsky (2019) Hao, Y. and Orlitsky, A. Unified sample-optimal property estimation in near-linear time. Advances in Neural Information Processing Systems, 32, 2019.

- Hao et al. (2020) Hao, Y., Jain, A., Orlitsky, A., and Ravindrakumar, V. Surf: A simple, universal, robust, fast distribution learning algorithm. Advances in Neural Information Processing Systems, 33:10881–10890, 2020.

- Huber (1992) Huber, P. J. Robust estimation of a location parameter. In Breakthroughs in statistics, pp. 492–518. Springer, 1992.

- Jain et al. (2022) Jain, A., Orlitsky, A., and Ravindrakumar, V. Robust estimation algorithms don’t need to know the corruption level. arXiv preprint arXiv:2202.05453, 2022.

- Kamath et al. (2015) Kamath, S., Orlitsky, A., Pichapati, D., and Suresh, A. T. On learning distributions from their samples. In Conference on Learning Theory, pp. 1066–1100. PMLR, 2015.

- Pearson (1895) Pearson, K. X. contributions to the mathematical theory of evolution.—ii. skew variation in homogeneous material. Philosophical Transactions of the Royal Society of London.(A.), (186):343–414, 1895.

- Pimentel et al. (2014) Pimentel, M. A., Clifton, D. A., Clifton, L., and Tarassenko, L. A review of novelty detection. Signal Processing, 99:215–249, 2014.

- Rahman et al. (2002) Rahman, Q. I., Schmeisser, G., et al. Analytic theory of polynomials. Number 26 in London Mathematical Society monographs. Clarendon Press, 2002.

- Scott (2012) Scott, D. W. Multivariate density estimation and visualization. In Handbook of computational statistics, pp. 549–569. Springer, 2012.

- Silverman (1986) Silverman, B. W. Density Estimation for Statistics and Data Analysis, volume 26. CRC Press, 1986.

- Tukey (1977) Tukey, J. W. Exploratory data analysis. Addison-Wesley Series in Behavioral Science: Quantitative Methods, 1977.

- Vapnik (1999) Vapnik, V. N. An overview of statistical learning theory. IEEE transactions on neural networks, 10(5):988–999, 1999.

- Wolfowitz (1957) Wolfowitz, J. The minimum distance method. The Annals of Mathematical Statistics, pp. 75–88, 1957.

- Yatracos (1985) Yatracos, Y. G. Rates of convergence of minimum distance estimators and kolmogorov’s entropy. The Annals of Statistics, pp. 768–774, 1985.

- Zambom & Ronaldo (2013) Zambom, A. Z. and Ronaldo, D. A review of kernel density estimation with applications to econometrics. International Econometric Review, 5(1):20–42, 2013.

Appendix A Proofs for Section 1

A.1 Proof of Lemma 3

Proof

Note that for any and , . Therefore increases with . (Chan et al., 2014) showed that . We show below that that . Together they imply when or .

Let be the uniform distribution on . Fix an and consider the distribution . Note that . For a fixed , we construct two random distributions and that are essentially indistinguishable using samples as is made large, and such that all functions have an distance to either or that is at least twice as far as their respective approximations in .

To construct we perturb separately on the left, , and right, , halves of .

For the left half, we use the discrete sub-distribution that assigns a mass to values drawn according to the distribution over . Then let

Thus consists of discrete atoms added to on .

For the right half, assuming Wolog that is even, first partition into intervals of width by letting for . Let denote the width of interval . For each , select a random circular sub-interval of width

as follows: Suppose for simplicity. Choose a point uniformly at random in and define

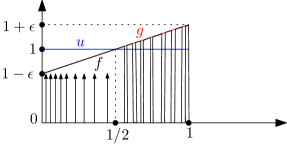

Let be over and over , hence as illustrated in Figure 4, for ,

It is easy to show that on any sub-interval of , the area of is within that of up to an additive .

Construct via the same method for but mirrored along , with , adding atoms to and alternating between and on as described in the construction of .

By a birthday paradox type argument, for any , it is easy to see that the distributions , are indistinguishable from with probability using any finitely many samples (by choosing an appropriately large ). Thus w.p. , the estimate is identical under both and . Therefore any estimator suffers a factor

By the mirror image symmetry between and about 1/2, is the optimal estimate to within an additive . This lower bounds as

where follows since , follows since is a discrete distribution, follows since has a total mass and since by a straightforward calculation, follows since in , follows since the area of and on are equal to within an additive , and follows since . Choosing and completes the proof.

A.2 Description and proof of Lemma 17

The following lemma shows that if is a -factor approximation for for some and and achieves the min-max rate of a distribution class , then is also a -factor approximation for .

Lemma 17.

If is a -factor approximation for and for all in a class , , then for any , not necessarily in ,

Proof For a distribution and class , let be the closest approximation to from , namely achieving . Then for any distribution ,

where in , just as is the distribution closest to , is the distribution closest to and the inequality follows since and by definition, is the least distance from to any , follows since by definition, is the best approximation to from , and follows from the property of considered in the lemma as .

Appendix B Proofs for Section 4

B.1 Proof of Lemma 7

Proof Observe that for any and interval ,

where denotes the first derivative of . The case of is trivial. We give a proof for . Consider

The following two claims (indicated with the overset (*)) may be verified via Wolfram Mathematica e.g. version 12.3.

Claim 1 ,

because

Claim 2 and .

Let and note that for any ,

where the final inequality follows by integration by parts.

For simplicity let , then and . Hence,

where follows from Bernstein’s inequality since

for a degree- polynomial and any , and applying the inequality to of degree . For we apply Bernstein’s inequality to

, set and notice that .

Equivalently by a change of variables, for

and ,

Using the same reasoning for instead of we obtain

where the equality follows since is a periodic function with period . Integrating both sides for from to yields

where (a) follows since from Claim 1 and the definition of . Finally, for a proper of order ,

where follows since for , follows from the Markov Brothers’ inequality, and holds for some . Proof is complete from the fact that .

B.2 Proof of Lemma 8

Since for any , and both have intervals for , it follows that the total number of intervals in is upper bounded as

where follows since and follows from the identity that for any .

B.3 Proof of Lemma 9

Proof We provide a proof for by considering . An identical proof follows for any other interval as its partition is obtained by a linear translation of . For , let

Similarly let . Applying Lemma 7 with , we obtain

where follows since . As consists of equal width intervals from Equation (4) and since is of width , it follows that each interval in (and similarly for ) is of width . Thus from Equation (2), the difference between and over is given by

Therefore

where follows since is a symmetric interval, , from the Markov Brothers’ inequality, follows from the infinite negative geometric sum and follows since as defined in Equation (4).

B.4 Proof of Lemma 11

Proof Select a that achieves . Then

where follows from setting in Lemma 10, and follows from using along with the fact that (so that ).

B.5 Proof of Theorem 12

Appendix C Proofs for Section 5

C.1 Proof of Theorem 13

C.2 Proof of Lemma 14

For simplicity denote and consider a particular that achieves . Let denote the set of intervals in that has as a single piece polynomial in . Let be the remaining intervals where has more than one polynomial piece. Since has polynomial pieces, the number of intervals in is .

Recall that for any subset , and integrable functions , , and integer , , denote the and distances over respectively. Equation (14) in (Acharya et al., 2017) shows that over ,

| (9) |

We bound the error in by setting in Lemma 10, using Equation (9), and noting that as from Equation (6):

| (10) |

From Lemma 49 (Acharya et al., 2017), for all intervals , the following Equation (11) holds that they use to derive Equation (12).

| (11) |

| (12) |

Recall that we obtain by adding a constant to along each interval to match its area to in that interval. Since has intervals (Lemma 8), ,

| (13) |

where the last inequality follows from Property 6.

Appendix D Proofs for Section 6

D.1 Proof of Theorem 15

Proof Applying the probabilistic version of the VC inequality, i.e. Lemma 4, (see (Devroye & Lugosi, 2012)) to Lemma 14 we have with probability ,

From the union bound, the above condition holds true for the sized estimate collection with probability . Apply the method discussed in Section 6.2 with , to obtain . Using Lemma 16 that w.p. ,

where follows from the fact that for any , (so that and follows since .

D.2 Proof of Lemma 16

Proof For , from the triangle inequality, and as by definition, for all ,

For , if

the proof follows since for any ,

where follows since . On the other hand if

then ,

where follows since , and follows since , contradicting the definition of .

Appendix E Additional Experiments

We compare (Hao et al., 2020) against and (Acharya et al., 2017) for the non-noisy distributions considered in Section 7, namely mixtures of Beta: , Gamma: , and Gaussians: .65(-.45,)+.35(.3,). This is shown in Figure 5. While achieves a lower error, this may be due to its implicit cross-validation method, unlike in and that relies on our independent cross-validation procedure in Section 6. While the primary focus of our work was in determining the optimal approximation constant, evaluating the experimental performance of the various piecewise polynomial estimators may be an interesting topic for future research.