Artem Moskaleva.moskalev@uva.nl1

\addauthorIvan Sosnoviki.sosnovik@uva.nl1

\addauthorArnold Smeuldersa.w.m.smeulders@uva.nl1

\addinstitution

UvA - Bosch Delta Lab

University of Amsterdam, Netherlands

Amsterdam, Netherlands

Tracking relations in videos

Two is a crowd: tracking relations in videos

Abstract

Tracking multiple objects individually differs from tracking groups of related objects. When an object is a part of the group, its trajectory depends on the trajectories of the other group members. Most of the current state-of-the-art trackers follow the approach of tracking each object independently, with the mechanism to handle the overlapping trajectories where necessary. Such an approach does not take inter-object relations into account, which may cause unreliable tracking for the members of the groups, especially in crowded scenarios, where individual cues become unreliable due to occlusions. To overcome these limitations and to extend such trackers to crowded scenes, we propose a plug-in Relation Encoding Module (REM). REM encodes relations between tracked objects by running a message passing over a corresponding spatio-temporal graph, computing relation embeddings for the tracked objects. Our experiments on MOT17 and MOT20 demonstrate that the baseline tracker improves its results after a simple extension with REM. The proposed module allows for tracking severely or even fully occluded objects by utilizing relational cues.

1 Introduction

For online multi-object tracking, when objects are part of a group, the frequent mutual occlusions make individual tracking harder. Rather than rejecting that information, identifying group membership is interesting by itself, where in principle the group is easier to identify having more uniquely identifying characteristics than an individual object would. In this paper we set out to exploit group relations for multi-object tracking.

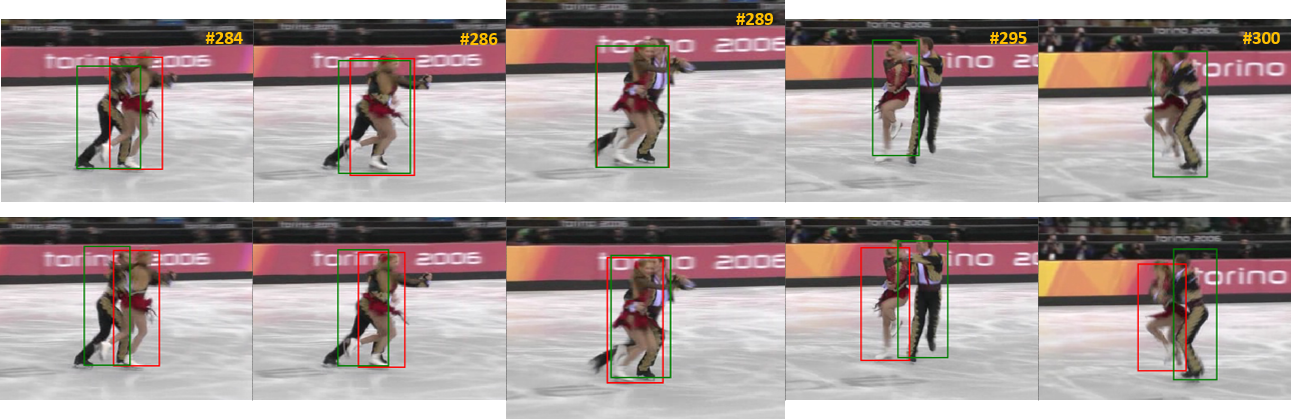

When tracking pedestrians online in a crowd, following one specifically is generally harder than following all members of a family of three, just because their combination offers good distinction: one tall with one small person, each with a trolley. Occlusion may hamper complete view of one of the targets but then the characteristics of related members may be borrowed to render approximate tracking for the occluded one like parents with a child in shopping malls and other forms of crowd control. See Figure 1 for other examples of dense interaction, where tracking group relations is advantageous.

Multi-object online tracking has recently made great progress with tracking-by-regression [Bergmann et al.(2019)Bergmann, Meinhardt, and Leal-Taixé, Zhou et al.(2020)Zhou, Koltun, and Krähenbühl, Zhang et al.(2020)Zhang, Wang, Wang, Zeng, and Liu, Lu et al.(2020)Lu, Rathod, Votel, and Huang, Wang et al.(2019)Wang, Zheng, Liu, and Wang, Xu et al.(2020)Xu, Osep, Ban, Horaud, Leal-Taixé, and Alameda-Pineda, Voigtlaender et al.(2019)Voigtlaender, Krause, Osep, Luiten, Sekar, Geiger, and Leibe]. These methods track each object separately until, at a crossroad of tracks, a mechanism is called upon to determine which object continues on what track. The current methods demonstrate good speed and good accuracy. They do not, however, consider inter-object relations, which may cause tracking to become unreliable especially when the interaction between bodies becomes dense where occlusion becomes a major obstacle, as in (Figure 2).

We draw inspiration from multi-object processing, where the whole video is available for the analysis. In [Tang et al.(2015)Tang, Andres, Andriluka, and Schiele, Tang et al.(2016)Tang, Andres, Andriluka, and Schiele, Keuper et al.(2016)Keuper, Tang, Zhongjie, Andres, Brox, and Schiele, Keuper et al.(2020)Keuper, Tang, Andres, Brox, and Schiele, Tang et al.(2017)Tang, Andriluka, Andres, and Schiele], the trajectories are derived by running a graph optimization on the object detections. While the structure of the graph encodes the inter-object relations in these offline trackers, their capability of finding relations relies heavily on having all detections in the video at once, combining information before and after dense interactions. This offline information blocks the methods unsuited for online multi-object tracking as the detections of the future are not yet available.

In this work, we extend current tracking-by-regression methods with online group relations. Inspired by offline graph-based video analysis, we learn to encode inter-object relations from limited data a priory. In our relation encoding module, a message passing algorithm is running over a dynamic object-graph to produce relation embeddings, which encode the group structure for each object. The message passing is performed over space and time to capture the complex dynamics of group behaviour. The relation encoding module is implemented as a plug-in extension for tracking-by-regression methods.

We make the following contributions:

-

•

We develop a method to encode inter-object relations online in dense scenes by running spatial-temporal message passing.

-

•

We demonstrate the virtue of relations to improve the tracking of objects in dense scenes by adding relations on top of current tracking-by-regression methods, even tracking objects with low visibility.

-

•

And we demonstrate an online view of the degree of the relationships between objects.

2 Related work

Multi-object tracking by graph association

Many the multi-object trackers first apply an object detector on the whole sequence, then link the detections across frames on the basis of a best match criterion [Tang et al.(2015)Tang, Andres, Andriluka, and Schiele, Tang et al.(2016)Tang, Andres, Andriluka, and Schiele, Keuper et al.(2016)Keuper, Tang, Zhongjie, Andres, Brox, and Schiele, Keuper et al.(2020)Keuper, Tang, Andres, Brox, and Schiele, Tang et al.(2017)Tang, Andriluka, Andres, and Schiele]. They follow the tracking by a graph association paradigm. The matching is usually posed as an offline graph association problem connecting the detections into trajectories. In [Tang et al.(2015)Tang, Andres, Andriluka, and Schiele, Tang et al.(2016)Tang, Andres, Andriluka, and Schiele], the authors solve association as a multicut problem, where trajectories are derived from a dense graph of detections by extracting weighted subgraphs. Along the same lines, in [Keuper et al.(2016)Keuper, Tang, Zhongjie, Andres, Brox, and Schiele], Keuper et al\bmvaOneDotpropose a multicut formulation to decompose a dense detection graph into a set of trajectories. To better handle occlusions, Tang et al\bmvaOneDot[Tang et al.(2017)Tang, Andriluka, Andres, and Schiele] further extend multicuts with lifted edges.

Graph associations are powerful as they reason about groups of detections, while taking inter-object relations into account. However, their offline nature limits the real-life application of such methods. Also, due to their combinatorial non-differentiable formulation, it is not trivial to combine these graph association algorithms with modern end-to-end trackers. In this work, we take inspiration from these offline graph association works and develop a new method for relation encoding, which learns to encode the dynamics of multiple objects for online tracking. Our relation encoding module is fully end-to-end compatible with modern trackers.

Multi-object tracking by regression association

Recently, a family of methods called tracking-by-regression has become the state-of-the-art approach in multi-object tracking. The key idea is to assess the association of detections to previously detected objects by utilizing the regression head of the object detector. In the pioneering work of Bergmann et al\bmvaOneDot[Bergmann et al.(2019)Bergmann, Meinhardt, and Leal-Taixé], tracking is based on the second stage of the Faster R-CNN [Ren et al.(2017)Ren, He, Girshick, and Sun] object detector with the previous positions of detected objects as proposals. Later, more sophisticated object detectors were used [Zhou et al.(2020)Zhou, Koltun, and Krähenbühl, Lu et al.(2020)Lu, Rathod, Votel, and Huang, Zhang et al.(2020)Zhang, Wang, Wang, Zeng, and Liu, Wang et al.(2019)Wang, Zheng, Liu, and Wang]. In [Zhou et al.(2020)Zhou, Koltun, and Krähenbühl] Zhou et al\bmvaOneDotmodify CenterNet [Zhou et al.(2019)Zhou, Wang, and Krähenbühl] for multi-object tracking. In [Lu et al.(2020)Lu, Rathod, Votel, and Huang], authors modify the lightweight RetinaNet [Girshick et al.(2018)Girshick, Radosavovic, Gkioxari, Dollár, and He] for faster inference. In [Zhang et al.(2020)Zhang, Wang, Wang, Zeng, and Liu, Wang et al.(2019)Wang, Zheng, Liu, and Wang], the authors extend the detector with ReID embeddings, which allows for better identity preservation in case of occlusion.

In all these works, objects are tracked independently of one another. When scenes become crowded or filled with similar targets, independent tracking under frequent and heavy occlusion becomes hard or impossible. Whereas the above methods function well generally, they tend to break when individual cues are no longer available (Figure 2). For these hard but frequent circumstances, one has to employ relations in tracking. In this paper, we propose a simple yet effective extension for regression-based multi-object trackers to improve tracking robustness in dense interaction.

Reasoning on relations

From the literature on relations in computer vision, we focus in particular on self-attention [Vaswani et al.(2017)Vaswani, Shazeer, Parmar, Uszkoreit, Jones, Gomez, Kaiser, and Polosukhin, Lee et al.(2019)Lee, Lee, Kim, Kosiorek, Choi, and Teh] and graph neural networks (GNN) [Wu et al.(2021)Wu, Pan, Chen, Long, Zhang, and Yu, Veličković et al.(2018)Veličković, Cucurull, Casanova, Romero, Liò, and Bengio]. Their ability to use structural information in the input has motivated Hu et al\bmvaOneDot[Hu et al.(2018)Hu, Gu, Zhang, Dai, and Wei] to develop a self-attention relation module to remove duplicates in the task of object detection. In [Cai et al.(2019)Cai, Ge, Liu, Cai, Cham, Yuan, and Thalmann] Cai et al\bmvaOneDotuse graph convolution to propagate relational information to refine a predicted pose. Narasimhan et al\bmvaOneDot[Narasimhan et al.(2018)Narasimhan, Lazebnik, and Schwing] rely on graph-structured representations to encode relations for visual question answering. In [Materzynska et al.(2020)Materzynska, Xiao, Herzig, Xu, Wang, and Darrell], Materzynska et al\bmvaOneDotmodel interactions in a subject-object graph representation for action recognition.

These works demonstrate how relation cues improve the analysis. Inspired by the success of relation reasoning for various tasks, we expand relation cues to multi-object online tracking as a plug-in extension for state-of-the-art trackers and with the potential online applicability for all above purposes.

Metrics of multi-object tracking

Classical multi-object tracking evaluation methods include CLEAR MOT metrics [Bernardin and Stiefelhagen(2008)] and IDF1 score [Ristani et al.(2016)Ristani, Solera, Zou, Cucchiara, and Tomasi]. These metrics assess various aspects of tracking, while the recently proposed HOTA metric [Luiten et al.(2020)Luiten, Osep, Dendorfer, Torr, Geiger, Leal-Taixé, and Leibe] aims to provide the measure of overall performance. None of these metrics, however, capture, the dense interaction of objects, which is one of the circumstances which makes multi-object tracking interesting. To do so, in this work we also consider the decomposition of the HOTA metric over various localization thresholds.

3 Encoding relations

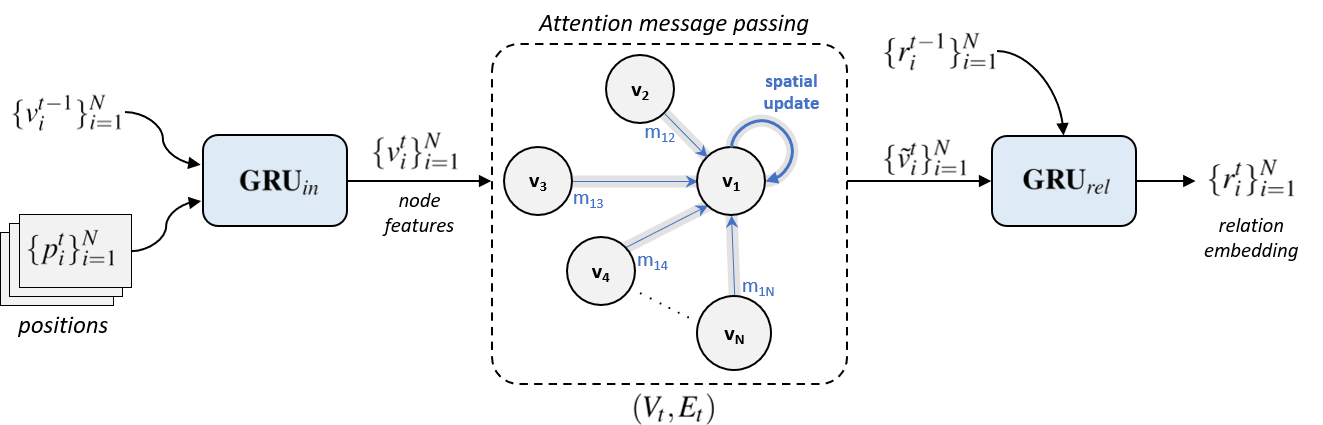

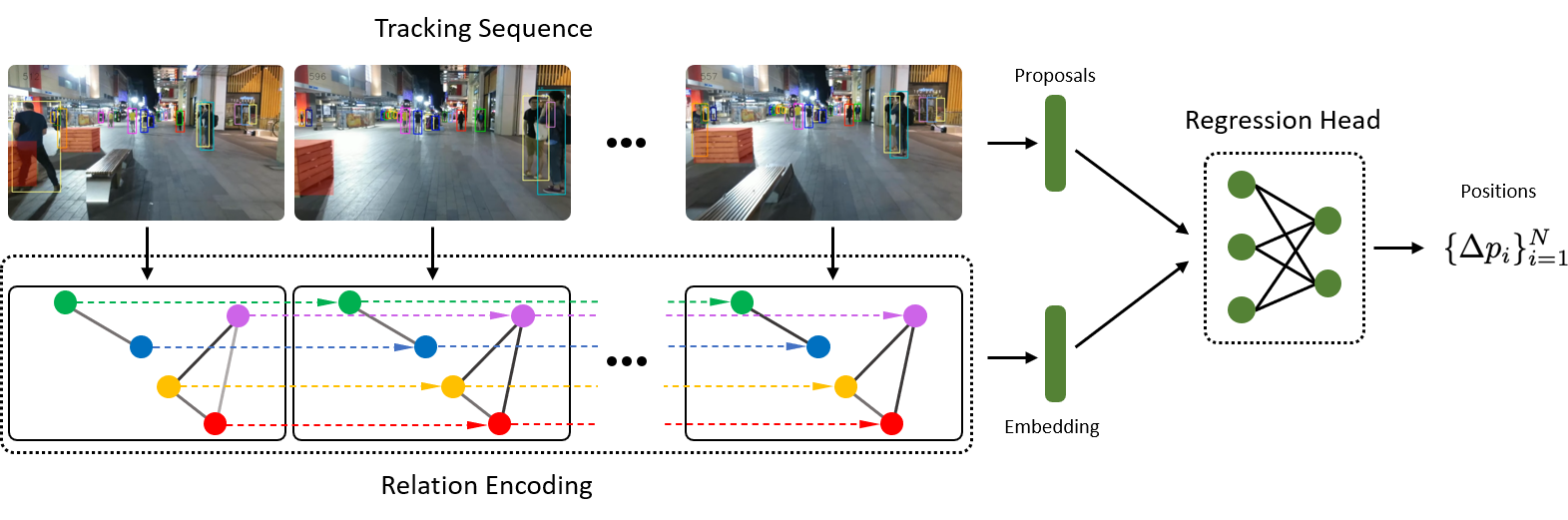

To encode inter-object relations, the relation encoding module takes a set of tracked instances as input and produces relation embeddings by running a message passing algorithm over the spatial-temporal graph. Figure 3 renders the architecture of the module.

3.1 Building relational graph

We define as a spatial-temporal graph, where is a total number of time steps, represent the vertices and edges of the graph at the time step , respectively. is a set of temporal edges from to . Vertices correspond to the objects as tracked, while the temporal edges encode their trajectories. Only the nodes, which correspond to the same instance, are linked in time. To decide on the spatial edges at time step , we first compute the distance matrix with entries:

| (1) |

where corresponds to the center coordinates, width and height of the -th object and respectively. We use the scaled Euclidean distance to prevent linking remote instances, which may be close if evaluated only by the center coordinates, but far away in depth. To obtain an adjacency matrix we simply threshold the distances, i.e. , where is a hyper-parameter.

3.2 Graph-attention message passing

Inter-object relations are modulated by running message passing over the relational graph. The procedure consists of 4 steps: compute input node features, compute messages between spatial nodes, aggregate messages and compute spatial-temporal updates of node representations. This procedure is recurrently performed for each time step until the end of the graph is reached.

Node features

To construct the input feature we use bounding box coordinates of the detection and the positional offset with respect to the previous time step. Let be the bounding box of the i-th object at time , the input feature for the node is then computed as:

| (2) | |||

| (3) |

where are learnable parameters, is a non-linearity and denotes concatenation operator. The initial hidden states of the cell are set to zeros.

Message sending

A message between two nodes of the graph is designed to encode their pairwise interaction. We define the message as a function of both the sending and the receiving nodes and , respectively. To make the message aware of the geometry of the graph, we also include the distance between the objects as an additional input for the message function. The message is calculated as:

| (4) |

Aggregating messages

When the messages have been computed, they are gathered in an aggregated message. An aggregation function should be permutation equivariant with respect to the neighbors’ features. In this work, we follow the graph attention approach [Veličković et al.(2018)Veličković, Cucurull, Casanova, Romero, Liò, and Bengio], which computes attention between features to weigh them according to their importance. The attention mechanism computes the attention coefficients as:

| (5) |

where denotes the set of the nodes spatially adjacent to i-th node in the graph. Temporal edges are not considered at this stage. The attention coefficients are then used to compute a linear combination of the corresponding neighbors’ representation into an aggregated feature.

Spatial-Temporal update

In the final step, we update node representations spatially and temporally. For the spatial update, we concatenate the self-feature of the node with the aggregated message from its neighbors and pass it through the perceptron. The temporal update is performed by passing the features through the GRU-cell. Formally:

| (spatial) | (6) | |||

| (temporal) | (7) |

We call the resulting feature relation embedding of the i-th node at time t. Relation embeddings at are all set to zero vectors.

3.3 Relation-importance

To answer the question to what degree object relates to object at time , we define a relation-importance function :

| (8) |

where can be any bounded metric and denotes the relation encoding of i-th object computed by excluding the j-th node from the set of neighbors. In early experiments, we found to work well as a metric (data not shown).

Higher values for indicate a higher degree of relation between these objects. Note that we can also compute relation-importance by adopting the attention weights from Equation 5. However, we observed that such an approach yields less intuitive relations as it does not take the information encoded in the messages into account. In addition, it restricts the relations to be symmetric, while formulation from Equation 8 permits asymmetric relations.

3.4 Utilizing relations for tracking

Next, we explore two purposes of using relations in tracking: (i) extending tracking-by-regression models to reason about the object’s position based both on appearance and relation cues, (ii) recovering the position of the objects purely from their relation embeddings, which is useful in the case of occlusions.

Relation-aware tracking-by-regression

To make a tracker aware of relations, we condition the predicted positions of the objects on their relation embeddings. To that end, we concatenate the appearance features extracted from proposal regions with the relation embeddings of the corresponding objects. The positional offset is then predicted by passing the combined feature via the regression head of the object detector. The model can be seen as the REM with the tracker attached to graph nodes. Such a framework applies to a wide range of trackers [Bergmann et al.(2019)Bergmann, Meinhardt, and Leal-Taixé, Zhou et al.(2020)Zhou, Koltun, and Krähenbühl, Zhang et al.(2020)Zhang, Wang, Wang, Zeng, and Liu, Lu et al.(2020)Lu, Rathod, Votel, and Huang, Wang et al.(2019)Wang, Zheng, Liu, and Wang]. It does not require modification of the tracker other than adjusting the regression head, see the supplementary material.

Tracking-by-relations only

When the object is heavily occluded, appearance features become unreliable, causing a tracking failure. As relation embeddings produced by the relation module do not depend on the objects’ appearance, they can be used to track objects under severe occlusion. To that end, we train an output MLP to directly regress the coordinates of the occluded objects from their relation embeddings, see the supplementary material. Then, we run the relation-aware tracker on the whole sequence and apply tracking-by-relations only to the occluded objects, when the rest of the objects are tracked both based on appearance and relational cues. This way, the model can explain hard occluded cases through easy non-occluded ones.

4 Experiments

We evaluate relation-aware tracker on the MOTChallenge benchmarks MOT17 [Milan et al.(2016)Milan, Leal-Taixé, Reid, Roth, and Schindler] and MOT20 [Dendorfer et al.(2020)Dendorfer, Rezatofighi, Milan, Shi, Cremers, Reid, Roth, Schindler, and Leal-Taixé].

4.1 Implementation details

We use Tracktor111https://github.com/phil-bergmann/tracking_wo_bnw as our baseline model as it provides good speed-accuracy balance. We extend Tracktor to relation-aware RelTracktor by plugging in the REM. To do so, we modify the regression head of the tracker to take the concatenated relation-appearance feature instead of just the appearance feature as the input. The rest of the tracker remains unchanged.

We use Xavier initialization [Glorot and Bengio(2010)] for the relation encoding module. We also initialize the modified regression head from the backbone tracker. We then jointly train the modified regression head and the relational module. To do so, we randomly sample consecutive frames from MOT17/MOT20 datasets, compute relation embeddings and feed them into the regression head together with appearance features to refine bounding boxes at time step . We train for 50 epochs using the Adam optimizer [Kingma and Ba(2017)] with a learning rate of while setting to build relational graphs. We choose for a dimension of the relation embedding vectors. Generalized intersection over union [Rezatofighi et al.(2019)Rezatofighi, Tsoi, Gwak, Sadeghian, Reid, and Savarese] is used as a loss function. We highlight that only the relational module and the regression head are trained, while the rest of the model is kept as is.

4.2 Datasets and evaluation metrics

The MOT17 benchmark consists of 7 train and 7 test sequences, which contain pedestrians with annotated full-body bounding boxes. It also specifies the degree of visibility for each annotated instance in the train split. The MOT20 benchmark contains 4 train and 4 test sequences of moving pedestrians in unconstrained environments with bounding boxes, covering the visible part of the objects.

Following [Bergmann et al.(2019)Bergmann, Meinhardt, and Leal-Taixé], we evaluate the multi-object tracking quality in a public detection setting. Such an evaluation protocol allows for a fair comparison with other methods. We employ standard the MOT-metrics [Bernardin and Stiefelhagen(2008)] and the HOTA metric [Luiten et al.(2020)Luiten, Osep, Dendorfer, Torr, Geiger, Leal-Taixé, and Leibe] as an indicator of the overall performance. We additionally analyze HOTA over different localization thresholds. In a scene that contains a lot of dense interactions, a higher HOTA under low localization thresholds indicates more robust tracking of bodily interacting objects.

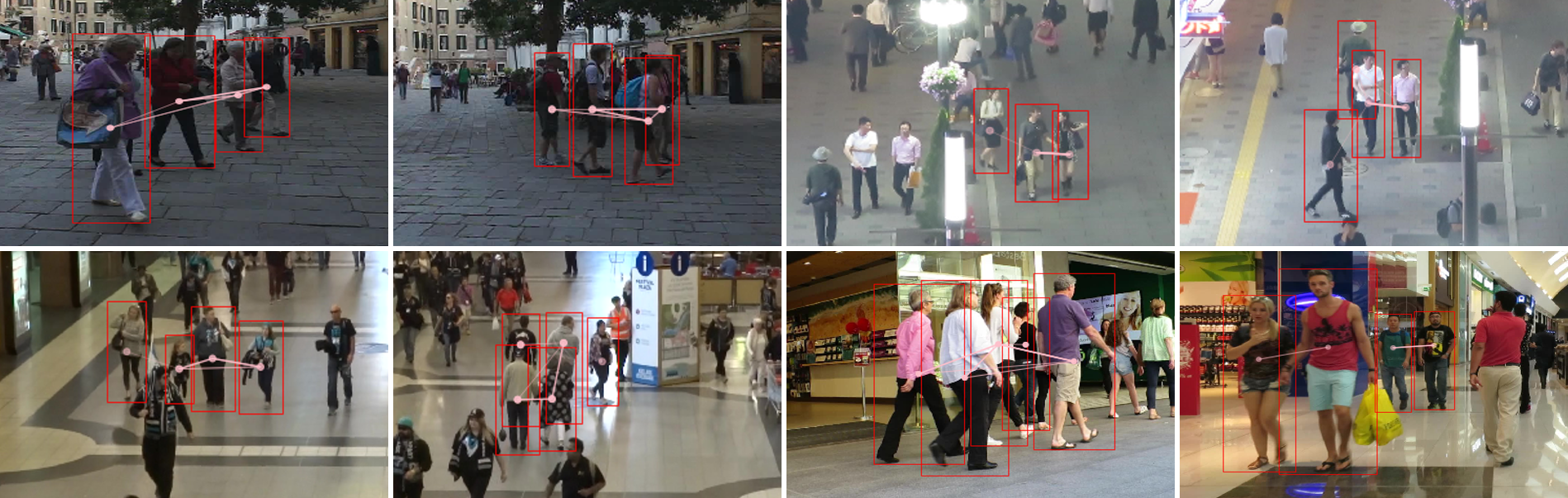

4.3 Discovering relations

We start by testing the ability of the relation encoding module to catch inter-object relations in the scene. As the MOT-benchmark does not provide explicit annotation for the relations, we conduct a qualitative study. In particular, from the relation-aware tracker we compute relation-importance weights (Equation 8) for the objects in each time-step. We then visualize the top relations in Figure 4 by the same color.

As can be seen, objects moving in a group tend to have stronger relations. We also observe that relations often are formed between coherently moving objects, even if they are not a part of what we would assess as a group (on our social experience). Such relations are inevitable as we do not employ social rules into the model, but still these assessed relations are useful as the trajectories of such ad-hoc groups can still be explained together.

| Method | HOTA | IDF1 | MOTA | MOTP | MT | ML | |

|---|---|---|---|---|---|---|---|

| MOT17 | RelTracktor (Ours) | 45.8 | 56.5 | 57.2 | 79.0 | 21.9 | 34.3 |

| Tracktor [Bergmann et al.(2019)Bergmann, Meinhardt, and Leal-Taixé] | 44.8 | 55.1 | 56.3 | 78.8 | 21.1 | 35.3 | |

| deepMOT [Xu et al.(2020)Xu, Osep, Ban, Horaud, Leal-Taixé, and Alameda-Pineda] | 42.4 | 53.8 | 53.7 | 77.2 | 19.4 | 36.6 | |

| eHAF [Sheng et al.(2019)Sheng, Zhang, Chen, Xiong, and Zhang] | - | 54.7 | 51.8 | - | 23.4 | 37.9 | |

| FWT [Henschel et al.(2017)Henschel, Leal-Taixé, Cremers, and Rosenhahn] | - | 47.6 | 51.3 | - | 21.4 | 35.2 | |

| jCC [Keuper et al.(2020)Keuper, Tang, Andres, Brox, and Schiele] | - | 54.5 | 51.2 | - | 20.9 | 37.0 | |

| MOT20 | RelTracktor (Ours) | 43.4 | 53.0 | 54.1 | 79.2 | 36.7 | 22.6 |

| Tracktor [Bergmann et al.(2019)Bergmann, Meinhardt, and Leal-Taixé] | 42.1 | 52.7 | 52.6 | 79.9 | 29.4 | 26.7 | |

| SORT20 [Bewley et al.(2016)Bewley, Ge, Ott, Ramos, and Upcroft] | 36.1 | 45.1 | 42.7 | 78.5 | 16.7 | 26.2 |

4.4 Relation-aware tracking-by-regression

We compare the relation-aware RelTracktor versus the baseline method from [Bergmann et al.(2019)Bergmann, Meinhardt, and Leal-Taixé] and other trackers. We run the tracker on the test subset of MOT benchmarks and submit results to the evaluation server. Results are presented in Table 1.

On the MOT17-benchmark, the relation-aware tracker shows an improvement in all metrics compared to the Tracktor baseline. In particular, a higher IDF1 score indicates that our model robustly preserves the identities of the objects throughout the sequence, while also providing more accurate localization as indicated by the MOTP score. Specifically, we observed that when the baseline model fails for close objects in a group, the plain tracker drifts to follow the non-occluded member of the group and loses the target. The relation aware extension of the tracker, on the other hand, is more robust in such scenarios. We validate this by investigating failure cases and by analyzing HOTA values over different localization thresholds in supplementary materials.

On the MOT20-benchmark, the relation-aware tracker demonstrates 1.3% increase in the overall HOTA score. Although the baseline tracker provides slightly higher localization precision as indicated by MOTP score, its relation-aware extension is much more robust and is able to track targets longer as indicated by the higher percentage of mostly tracked objects (MT).

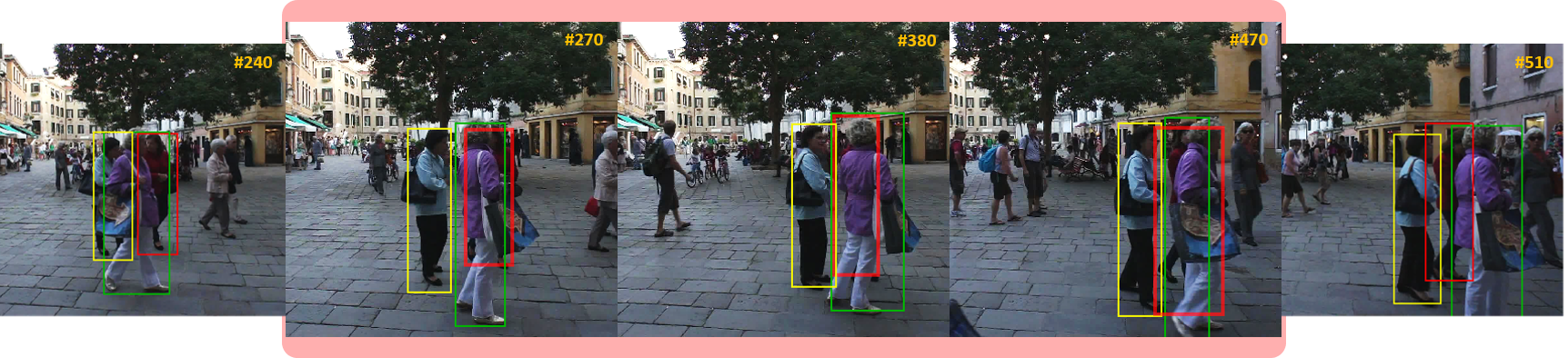

4.5 Tracking-by-relations

We next demonstrate that the position of out of sight objects can be recovered based purely on relation cues. To do so, we select an instance, which undergoes occlusion while moving in a group of related objects. We then apply the approach described in Section 3.4. Practically, we do not kill the trajectories of non-visible objects, but continue to predict them from relational cues.

Figure 5 demonstrate qualitative results. As can be seen, the position of the occluded object can be approximately recovered from the group relations. It indicates that relation embeddings can be learned to encode the geometric prior about the relative positions of the objects. We provide more qualitative examples in supplementary materials.

5 Discussion

In this work, we demonstrate that learning inter-object relations is important for robust multi-object tracking. We develop a plug-in relation encoding module, which encodes relations by running a message passing over a spatial-temporal graph of tracked instances.

We experimentally demonstrate that extending a backbone multi-object tracker with REM improves tracking quality. We also investigate the ability of the proposed method to track heavily occluded objects based on the relational cues, when appearance information is unreliable. Our experiments suggest that relational information is important and should not be left out from the analysis.

We suppose that REM would be the most useful in problems, where video analysis of crowded scenes with regular occlusions is required. For example, multi-object tracking in crowded streets, underground, airports, and railway stations. Moreover, one can use the proposed relation encoding module not only for tracking but also for action recognition or scene anomaly detection. We leave these questions for further research.

References

- [Bergmann et al.(2019)Bergmann, Meinhardt, and Leal-Taixé] Philipp Bergmann, Tim Meinhardt, and Laura Leal-Taixé. Tracking without bells and whistles. In The IEEE International Conference on Computer Vision (ICCV), October 2019.

- [Bernardin and Stiefelhagen(2008)] Keni Bernardin and Rainer Stiefelhagen. Evaluating multiple object tracking performance: The clear mot metrics. J. Image Video Process., 2008, January 2008. ISSN 1687-5176. 10.1155/2008/246309.

- [Bewley et al.(2016)Bewley, Ge, Ott, Ramos, and Upcroft] Alex Bewley, Zongyuan Ge, Lionel Ott, Fabio Ramos, and Ben Upcroft. Simple online and realtime tracking. In 2016 IEEE International Conference on Image Processing (ICIP), pages 3464–3468, 2016. 10.1109/ICIP.2016.7533003.

- [Cai et al.(2019)Cai, Ge, Liu, Cai, Cham, Yuan, and Thalmann] Yujun Cai, Liuhao Ge, Jun Liu, Jianfei Cai, Tat-Jen Cham, Junsong Yuan, and Nadia Magnenat Thalmann. Exploiting spatial-temporal relationships for 3d pose estimation via graph convolutional networks. In Proceedings of the IEEE International Conference on Computer Vision, pages 2272–2281, 2019.

- [Dendorfer et al.(2020)Dendorfer, Rezatofighi, Milan, Shi, Cremers, Reid, Roth, Schindler, and Leal-Taixé] Patrick Dendorfer, Hamid Rezatofighi, Anton Milan, Javen Shi, Daniel Cremers, Ian Reid, Stefan Roth, Konrad Schindler, and Laura Leal-Taixé. Mot20: A benchmark for multi object tracking in crowded scenes, 2020.

- [Girshick et al.(2018)Girshick, Radosavovic, Gkioxari, Dollár, and He] Ross Girshick, Ilija Radosavovic, Georgia Gkioxari, Piotr Dollár, and Kaiming He. Detectron. https://github.com/facebookresearch/detectron, 2018.

- [Glorot and Bengio(2010)] Xavier Glorot and Yoshua Bengio. Understanding the difficulty of training deep feed forward neural networks. In Yee Whye Teh and Mike Titterington, editors, Proceedings of the Thirteenth International Conference on Artificial Intelligence and Statistics, volume 9 of Proceedings of Machine Learning Research, pages 249–256. PMLR, 2010.

- [Henschel et al.(2017)Henschel, Leal-Taixé, Cremers, and Rosenhahn] Roberto Henschel, Laura Leal-Taixé, Daniel Cremers, and Bodo Rosenhahn. Improvements to frank-wolfe optimization for multi-detector multi-object tracking. CVPR, 05 2017.

- [Hu et al.(2018)Hu, Gu, Zhang, Dai, and Wei] Han Hu, Jiayuan Gu, Zheng Zhang, Jifeng Dai, and Yichen Wei. Relation networks for object detection. In CVPR, pages 3588–3597, 2018.

- [Keuper et al.(2016)Keuper, Tang, Zhongjie, Andres, Brox, and Schiele] Margret Keuper, Siyu Tang, Yu Zhongjie, Bjoern Andres, Thomas Brox, and Bernt Schiele. A multi-cut formulation for joint segmentation and tracking of multiple objects, 2016.

- [Keuper et al.(2020)Keuper, Tang, Andres, Brox, and Schiele] Margret Keuper, Siyu Tang, Bjoern Andres, Thomas Brox, and Bernt Schiele. Motion segmentation multiple object tracking by correlation co-clustering. IEEE Transactions on Pattern Analysis and Machine Intelligence, 42(1):140–153, 2020.

- [Kingma and Ba(2017)] Diederik P. Kingma and Jimmy Ba. Adam: A method for stochastic optimization, 2017.

- [Lee et al.(2019)Lee, Lee, Kim, Kosiorek, Choi, and Teh] Juho Lee, Yoonho Lee, Jungtaek Kim, Adam Kosiorek, Seungjin Choi, and Yee Whye Teh. Set transformer: A framework for attention-based permutation-invariant neural networks. In Proceedings of the 36th International Conference on Machine Learning, pages 3744–3753, 2019.

- [Lu et al.(2020)Lu, Rathod, Votel, and Huang] Zhichao Lu, Vivek Rathod, Ronny Votel, and Jonathan Huang. Retinatrack: Online single stage joint detection and tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), June 2020.

- [Luiten et al.(2020)Luiten, Osep, Dendorfer, Torr, Geiger, Leal-Taixé, and Leibe] Jonathon Luiten, Aljosa Osep, Patrick Dendorfer, Philip Torr, Andreas Geiger, Laura Leal-Taixé, and Bastian Leibe. Hota: A higher order metric for evaluating multi-object tracking. International Journal of Computer Vision, pages 1–31, 2020.

- [Materzynska et al.(2020)Materzynska, Xiao, Herzig, Xu, Wang, and Darrell] Joanna Materzynska, Tete Xiao, Roei Herzig, Huijuan Xu, Xiaolong Wang, and Trevor Darrell. Something-else: Compositional action recognition with spatial-temporal interaction networks. In CVPR, 2020.

- [Milan et al.(2016)Milan, Leal-Taixé, Reid, Roth, and Schindler] Anton Milan, Laura Leal-Taixé, Ian D. Reid, Stefan Roth, and Konrad Schindler. Mot16: A benchmark for multi-object tracking. ArXiv, abs/1603.00831, 2016.

- [Narasimhan et al.(2018)Narasimhan, Lazebnik, and Schwing] Medhini Narasimhan, S. Lazebnik, and A. Schwing. Out of the box: Reasoning with graph convolution nets for factual visual question answering. In NeurIPS, 2018.

- [Ren et al.(2017)Ren, He, Girshick, and Sun] Shaoqing Ren, Kaiming He, Ross Girshick, and Jian Sun. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Transactions on Pattern Analysis and Machine Intelligence, 39(6):1137–1149, 2017.

- [Rezatofighi et al.(2019)Rezatofighi, Tsoi, Gwak, Sadeghian, Reid, and Savarese] Hamid Rezatofighi, Nathan Tsoi, JunYoung Gwak, Amir Sadeghian, Ian Reid, and Silvio Savarese. Generalized intersection over union. 2019.

- [Ristani et al.(2016)Ristani, Solera, Zou, Cucchiara, and Tomasi] Ergys Ristani, Francesco Solera, Roger S. Zou, R. Cucchiara, and Carlo Tomasi. Performance measures and a data set for multi-target, multi-camera tracking. European Conference on Computer Vision, pages 17–35, 2016.

- [Sheng et al.(2019)Sheng, Zhang, Chen, Xiong, and Zhang] Hao Sheng, Yang Zhang, Jiahui Chen, Zhang Xiong, and Jun Zhang. Heterogeneous association graph fusion for target association in multiple object tracking. IEEE Transactions on Circuits and Systems for Video Technology, 29(11):3269–3280, 2019.

- [Tang et al.(2015)Tang, Andres, Andriluka, and Schiele] S. Tang, B. Andres, M. Andriluka, and B. Schiele. Subgraph decomposition for multi-target tracking. In 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pages 5033–5041, 2015.

- [Tang et al.(2016)Tang, Andres, Andriluka, and Schiele] Siyu Tang, Bjoern Andres, Mykhaylo Andriluka, and Bernt Schiele. Multi-person tracking by multicut and deep matching. In Computer Vision – ECCV 2016 Workshops, pages 100–111, 2016.

- [Tang et al.(2017)Tang, Andriluka, Andres, and Schiele] Siyu Tang, Mykhaylo Andriluka, Bjoern Andres, and Bernt Schiele. Multiple people tracking by lifted multicut and person re-identification. In CVPR, pages 3701–3710, 2017.

- [Vaswani et al.(2017)Vaswani, Shazeer, Parmar, Uszkoreit, Jones, Gomez, Kaiser, and Polosukhin] Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N Gomez, Ł ukasz Kaiser, and Illia Polosukhin. Attention is all you need. In Advances in Neural Information Processing Systems, volume 30, 2017.

- [Veličković et al.(2018)Veličković, Cucurull, Casanova, Romero, Liò, and Bengio] Petar Veličković, Guillem Cucurull, Arantxa Casanova, Adriana Romero, Pietro Liò, and Yoshua Bengio. Graph Attention Networks. International Conference on Learning Representations, 2018.

- [Voigtlaender et al.(2019)Voigtlaender, Krause, Osep, Luiten, Sekar, Geiger, and Leibe] Paul Voigtlaender, Michael Krause, Aljosa Osep, Jonathon Luiten, Berin Balachandar Gnana Sekar, Andreas Geiger, and Bastian Leibe. MOTS: Multi-object tracking and segmentation. In CVPR, 2019.

- [Wang et al.(2019)Wang, Zheng, Liu, and Wang] Zhongdao Wang, Liang Zheng, Yixuan Liu, and Shengjin Wang. Towards real-time multi-object tracking. arXiv preprint arXiv:1909.12605, 2019.

- [Wu et al.(2021)Wu, Pan, Chen, Long, Zhang, and Yu] Zonghan Wu, Shirui Pan, Fengwen Chen, Guodong Long, Chengqi Zhang, and Philip S. Yu. A comprehensive survey on graph neural networks. IEEE Transactions on Neural Networks and Learning Systems, 32(1):4–24, 2021.

- [Xu et al.(2020)Xu, Osep, Ban, Horaud, Leal-Taixé, and Alameda-Pineda] Yihong Xu, Aljosa Osep, Yutong Ban, Radu Horaud, Laura Leal-Taixé, and Xavier Alameda-Pineda. How to train your deep multi-object tracker. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 6787–6796, 2020.

- [Zhang et al.(2020)Zhang, Wang, Wang, Zeng, and Liu] Yifu Zhang, Chunyu Wang, Xinggang Wang, Wenjun Zeng, and Wenyu Liu. Fairmot: On the fairness of detection and re-identification in multiple object tracking. arXiv preprint arXiv:2004.01888, 2020.

- [Zhou et al.(2019)Zhou, Wang, and Krähenbühl] Xingyi Zhou, Dequan Wang, and Philipp Krähenbühl. Objects as points. In arXiv preprint arXiv:1904.07850, 2019.

- [Zhou et al.(2020)Zhou, Koltun, and Krähenbühl] Xingyi Zhou, Vladlen Koltun, and Philipp Krähenbühl. Tracking objects as points. ECCV, 2020.

Appendix A Utilizing relations for tracking

Relation-aware tracking-by-regression

We illustrate a pipeline of a tracking-by-regression extended with relation reasoning in Figure 6. The graph is built dynamically from the tracked instances. When the object enters or leaves the scene, the corresponding node in the relational graph is updated.

Tracking-by-relations

As the relation embeddings contain the prior about object’s positions, the location of the object can be approximately recovered from the relational cues. To that end, we pass the relation embeddings through an output MLP , which predicts the coordinates of the object.

We set the dimension of the relation embedding . Then, the output MLP is a two layer feed forward network with LeakyReLU non-linearities. The output MLP is jointly trained with the main model by minimizing the generalized intersection over union [Rezatofighi et al.(2019)Rezatofighi, Tsoi, Gwak, Sadeghian, Reid, and Savarese] with respect to the ground truth bounding boxes.

Appendix B Experiments

Ablations studies

We conduct an ablation study on the MOT17-FRCNN train split to investigate an impact of the distance used to build the relational graph , with higher resulting in a relational graph of a larger spatial extent. Since the relation-aware model is fine-tuned for the tracking task, we also compare against the fine-tuned baseline method from [Bergmann et al.(2019)Bergmann, Meinhardt, and Leal-Taixé].

As can be seen in Table 2, the relation-aware tracker outperforms the baseline method and its fine-tuned version. We observed that using the relational graphs of a larger spatial extent generally results in higher performance. Intuitively, lower restricts to encode relation only in small groups, missing the possible distant connections. At the same time, a very large leads to a slight decrease in performance. We attribute it to the fact that in very big relational graphs, formed relations are less sharp because of the attention aggregation mechanism.

Analyzing HOTA

| Method | IDF1 | MOTA | MOTP |

|---|---|---|---|

| Base | 65.0 | 61.7 | 89.4 |

| Base-tuned | 66.7 | 61.8 | 89.5 |

| Rel | 66.8 | 61.9 | 89.4 |

| Rel | 66.9 | 61.9 | 89.5 |

| Rel | 67.1 | 62.0 | 89.5 |

| Rel | 67.1 | 62.0 | 89.5 |

| Rel | 66.9 | 61.9 | 89.4 |

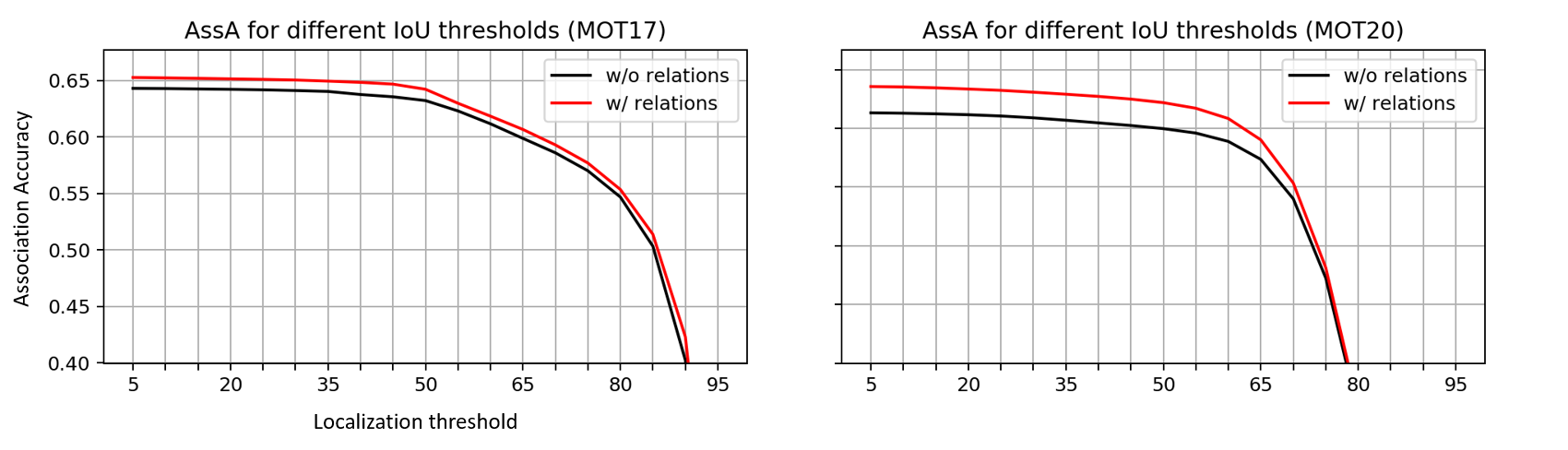

To investigate the ability of the relation-aware model to provide robust tracking in the dense scenes, we decompose and analyze HOTA metrics over various localization thresholds . From [Luiten et al.(2020)Luiten, Osep, Dendorfer, Torr, Geiger, Leal-Taixé, and Leibe]:

| (9) |

where stand for detection and association accuracy for a given . Since the classification part responsible for the quality of detection remains unchanged, we only analyze the association accuracy. Association accuracy for tracking-by-regression methods measures the ability of the regression head of the tracker to preserve identities frame by frame. We plot for the baseline and relation-aware trackers at Figure 7.

Low localization thresholds permit the association of loose bounding boxes. It can deteriorate the association quality when predicted bounding boxes are densely overlapped, which is a common case in crowded scenarios. Thus, the higher association accuracy of relation-aware tracker (Figure 7) under low localization thresholds indicates the better ability to preserve identities of densely interacting objects.

Qualitative examples of tracking-by-relations

We provide more qualitative examples of the tracking-by-relation approach in Figure 8.

Failure cases

When inspecting the per-sequence results of the relation-aware tracker, we observed the improvement of tracking quality on a range of sequences compared to the baseline. The modest performance gain was on the sequences with severe camera motion. We attribute it to the fact that the global camera motion enforces the abrupt shifts in object trajectories, which hinders the formation of meaningful relations.