Uncoded Caching and Cross-level Coded Delivery for Non-uniform File Popularity

Abstract

Proactive content caching at user devices and coded delivery is studied for a non-uniform file popularity distribution. A novel centralized uncoded caching and coded delivery scheme, called cross-level coded delivery (CLCD), is proposed, which can be applied to large file libraries under non-uniform demands. In the CLCD scheme, the same sub-packetization is used for all the files in the library in order to prevent additional zero-padding in the delivery phase, and unlike the existing schemes in the literature, users requesting files from different popularity groups can still be served by the same multicast message in order to reduce the delivery rate. Simulation results indicate more than reduction in the average delivery rate for typical Zipf distribution parameter values.

Index Terms:

Content caching, non-uniform demands, coded delivery, multi-level caching

I Introduction

Proactive caching is a well-known strategy to reduce the network load and delivery latency in content delivery networks. Recently, it has been shown that proactive caching and coded delivery, together, can further reduce the network load. The “shared-link problem”, in which a server with a finite file library serves the demands of cache-equipped users over a shared error-free link, is analyzed in [1], where the objective is to design a caching and delivery strategy that minimizes the load on the shared link, often referred to as the “delivery rate” in the literature. The proposed solution consists of two phases. In the placement phase, all the files in the library are divided into sub-files, and each user proactively caches a subset of these sub-files. In the delivery phase, the server multicasts XORed versions of the requested sub-files through the shared link, such that each user can recover its demand by exploiting both the cached sub-files and the multicast messages. In the scheme proposed in [1], the server controls the caching decisions of the users in a centralized manner. However, in [2], the authors have shown that similar gains can still be achieved when the caching is performed in a random and decentralized manner under certain assumptions. It is shown in [3] that the delivery rate can be further reduced with a coded delivery scheme that exploits the common requests.

Most of the initial studies on coded caching and delivery have been built upon certain simplifying unrealistic assumptions, such as the presence of an error-free broadcast link, uniform user cache capacities, and uniform file demands. A plethora of works have since focused on trying to remove or relax these assumptions in order to bring coded caching and delivery closer to reality. To this end, the case of non-uniform cache sizes is studied in [4, 5, 6], extension to unequal link rates is studied in [7, 5, 8], and non-uniform file popularity is considered in [9, 10, 11, 12, 13, 14, 15].

In this work, we are interested in the case of non-uniform demands, as this is commonly encountered in practice [16, 17, 18, 19]. It has been shown that by taking into account the statistics of the content popularity, and by allocating the cache memory to the files in the library based on these statistics, the communication load can be further reduced [9, 10, 11, 12, 13, 14, 15]. Most of the papers dealing with non-uniform demands consider decentralized caching in the placement phase. In [9], the authors proposed a group-based memory sharing approach for the placement phase, where the files in the library are grouped according to their popularities and the available cache memory at user devices is distributed among these groups. Then, in the delivery phase, the coded delivery scheme in [2] is used to deliver the missing parts of the demands. In [10], the authors introduced both a placement and a delivery strategy for non-uniform demands. Optimization of the delivery phase is analyzed as an index coding problem and the multicast messages are constructed based on the clique cover problem, where each missing sub-file¹ (requested but not available in the user’s cache) is considered as a vertex of a conflict graph, and two sub-files are delivered together in the same multicast message if there is no edge between the corresponding vertices. The chromatic number of the conflict graph gives the number of required multicast messages, equivalently, the delivery rate normalized by the sub-file size. Due to the NP-hardness of the original graph coloring problem, the authors introduced a low-complexity suboptimal greedy constrained coloring (GCC) scheme. For the placement phase, similarly to [9], a group-based memory sharing approach with only two groups is considered, where only the files in the first group are cached. They show that the proposed scheme is order-optimal when the popularity of the files follows a Zipf distribution. Results of [10] are further extended by [11] to show that group-based memory sharing with two groups² is order-optimal for any popularity distribution. The group-based memory sharing approach for non-uniform demands is also studied in [12], where the users do not have caches, but instead have access to multiple cache-enabled access points and the shared link is between the server and the access points. All these works analyze the asymptotic performance (as the number of sub-files goes to infinity) of the decentralized coded caching approach.

¹ We use the term sub-file to be consistent with the later part of the paper, while the papers studying the asymptotic performance of decentralized coded caching typically use the term “packet” or “bit”.

² In certain cases there might be an additional group consisting of only a single file.

Decentralized coded caching with a finite number of sub-files has recently been studied in [15], [20] and [21] to provide an efficient low-complexity solution to the clique cover problem. Hence, as a common objective, the methods proposed in these works aim to minimize the delivery rate for a given placement strategy using heuristic approaches.

For the centralized coded caching problem under a non-uniform demand distribution, a novel content placement strategy is introduced in [13] and [14] independently. In these papers, content placement is formulated as a linear optimization problem, where each file is divided into disjoint fragments, not necessarily of equal size. The th fragment of each file is cached as in the placement phase of [1] with parameter . In the proposed approach, the size of each fragment of each file is represented by a variable in the linear optimization problem, and the solution of the optimization problem reveals how each file should be divided into fragments. We remark that the group-based memory sharing scheme of [9] is a special case of these approaches, where in each file group only one fragment has non-zero size. Since this approach considers the most general placement strategy, we call it general memory sharing, while we will refer to the original scheme in [1] as the naive memory sharing scheme.

Note that, in the delivery phase, when a multicast message contains sub-files of different sizes, the size of the message is determined by the largest sub-file in the message, and the smaller ones are zero-padded, which results in inefficiency.

Hence, although the above scheme allows each file to be divided into fragments of different sizes, when the file library is large, the proposed policy tends to divide the library into a small number of groups, where, in each group, the sizes of the fragments are identical. This is because unequal fragment sizes among users require zero-padding. In particular, we observe that, for a large file library, the proposed scheme in [14] often tends to classify the files into two groups according to their popularities, such that only the popular files are cached in an identical manner. We highlight here that without mitigating the sub-file mismatch issue, which induces zero-padding, the advantage of optimization over fragment sizes is limited. Another limitation of the strategy proposed in [14] is its complexity.

The number of variables in the optimization problem is . Although linear optimization can be solved in polynomial time, it may still be infeasible when the library size is large (in practical scenarios the number of files is often considered to be between [17]).

In the case of less skewed popularity distributions, using more than two groups can be effective in reducing the delivery rate, together with a more intelligent coded delivery design that reduces or prevents zero-padding. This motivates the proposed cross-level coded delivery (CLCD) scheme, whose goal is to design a zero-padding-free coded delivery scheme for a given group structure. The zero-padding problem in the delivery phase of centralized coded caching was previously studied in [15], where a heuristic “bit borrowing” approach is introduced. The proposed algorithm greedily pairs the bits of the sub-files for a given placement strategy.

In this paper, different from the aforementioned works, we introduce a novel centralized coded caching framework, where the delivery and the placement phases are optimized jointly. In the proposed CLCD strategy, the placement phase is limited to three file groups, formed according to popularities. Then, for each possible demand realization, i.e., the number of users requesting a file from each group, we obtain the optimal delivery scheme and the corresponding delivery rate. Finally, using this information, we find the optimal group sizes based on the popularity of the files in the library. We will show that the proposed CLCD scheme provides a noticeable reduction in the average delivery rate, up to 10-20%, compared to the state-of-the-art.

The rest of the paper is organized as follows. In Section II, we introduce the system model and the problem definition. In Section III, we introduce the CLCD scheme and analyze it for a particular scenario, where we impose certain constraints on the placement phase. In Section IV, we extend our analysis by lifting some constraints on the placement phase, and in Section V, we present numerical comparisons of the proposed CLCD scheme with the state-of-the-art. In Section VI, we present the most general form of the CLCD scheme without any constraints on the placement phase, and finally, we conclude the paper in Section VII.

II System Model

We consider a single content server with a library of files, each of size bits, denoted by , serving users, each with a cache memory of capacity bits. The users are connected to the server through a shared error-free link. We follow the two-phase model in [1]. Caches are filled during the placement phase without the knowledge of particular user demands. User requests are revealed in the delivery phase, and are satisfied simultaneously.

The request of user is denoted by , , and the demand vector is denoted by . The corresponding delivery rate is defined as the total number of bits sent over the shared link, normalized by the file size. We assume that the user requests are independent of each other, and each user requests file with probability , where , and . Let denote the probability of observing demand vector , where . We want to minimize the average delivery rate , defined as

| (1) |
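The averaging in (1) can be illustrated with a short sketch. It assumes a Zipf popularity profile and enumerates every demand vector by brute force, which is only practical for toy sizes. The function names and the placeholder rate function `uncached_rate` (the number of distinct requested files, i.e., the rate when nothing is cached) are illustrative assumptions, not part of the proposed scheme.

```python
from itertools import product


def zipf_popularity(num_files, alpha):
    """Zipf probabilities p_n proportional to 1 / n**alpha, most popular file first."""
    weights = [1.0 / (n ** alpha) for n in range(1, num_files + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def average_rate(popularity, num_users, rate_of_demand):
    """Expected delivery rate over all demand vectors with independent requests."""
    num_files = len(popularity)
    avg = 0.0
    for demand in product(range(num_files), repeat=num_users):
        prob = 1.0
        for f in demand:
            prob *= popularity[f]  # requests are independent across users
        avg += prob * rate_of_demand(demand)
    return avg


def uncached_rate(demand):
    """Placeholder rate (assumption): one full file per distinct request."""
    return len(set(demand))


p = zipf_popularity(4, 0.8)
print(average_rate(p, 3, uncached_rate))
```

Any delivery scheme can be plugged in by replacing `uncached_rate` with a function that returns the normalized number of bits sent for a given demand vector.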

Next, we explain the uncoded caching and centralized coded delivery scheme, introduced in [1]. We say that a file is cached at level , if it is divided into non-overlapping sub-files of equal size, and each sub-file is cached by a distinct subset of users. Then, each sub-file can be identified by an index term , where and , such that sub-file is cached by users . Following a placement phase in which all the files are cached at level , as proposed in [1], in the delivery phase, for each subset , , all the requests of the users in can be served simultaneously by multicasting

| (2) |

Thus, with a single multicast message the server can deliver sub-files, and achieve a multicasting gain of .
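A minimal sketch of this placement and delivery structure is given below, assuming users are labeled 1 to K and the caching level is called `t`; both names are illustrative. The code only tracks sub-file index sets, not file contents, and checks the decodability condition: every user in a multicast group caches all components of the message except its own.

```python
from itertools import combinations


def place_at_level(num_users, t):
    """Sub-files are indexed by t-subsets of users; user k caches those containing k."""
    users = range(1, num_users + 1)
    subfiles = [frozenset(s) for s in combinations(users, t)]
    cache = {k: [s for s in subfiles if k in s] for k in users}
    return subfiles, cache


def multicast_plan(num_users, t):
    """One message per (t+1)-subset S: XOR, over k in S, of the sub-file
    indexed by S minus {k} of the file requested by user k."""
    plan = []
    for group in combinations(range(1, num_users + 1), t + 1):
        S = frozenset(group)
        plan.append([(k, S - {k}) for k in sorted(S)])
    return plan


def is_decodable(plan, cache):
    """Each user in a group must cache every component meant for the others."""
    for message in plan:
        for k, _ in message:
            for j, index in message:
                if j != k and index not in cache[k]:
                    return False
    return True


subfiles, cache = place_at_level(4, 2)
plan = multicast_plan(4, 2)
print(len(subfiles), len(plan), is_decodable(plan, cache))
```

Each message in `plan` carries `t + 1` sub-files at once, which is the multicasting gain referred to above.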

III Cross-level Coded Delivery (CLCD) Scheme

III-A An introductory overview

In the proposed CLCD scheme, the file library is divided into three groups according to their popularity: the most popular files are called the high-level files, the less popular files are called the low-level files, and the remaining least popular files are called the zero-level files. All the files in the same group are cached at the same level. Hence, the scheme can be represented by five parameters , where and represent the caching levels of the low-level and high-level files, respectively, whereas the zero-level files are not cached at all. Hence, we use to denote the overall caching strategy.

For the given parameters , the required normalized cache-memory size is given by

| (3) |

since caching a file at level means dividing it into sub-files and letting each user cache sub-files; that is, each user caches portion of each file.
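The memory accounting above can be checked numerically. The sketch below assumes two cached groups with hypothetical parameter names (`n_high`, `t_high`, `n_low`, `t_low`) and uses exact fractions; the numbers in the usage line are illustrative and are not taken from the examples in the paper.

```python
from fractions import Fraction
from math import comb


def cached_fraction(num_users, level):
    """Fraction of a file stored by each user when the file is cached at `level`."""
    if level == 0:
        return Fraction(0)  # zero-level files are not cached at all
    # Each user stores C(K-1, level-1) of the C(K, level) sub-files.
    return Fraction(comb(num_users - 1, level - 1), comb(num_users, level))


def normalized_cache_size(num_users, n_high, t_high, n_low, t_low):
    """Cache size, in units of one file, needed to store both groups."""
    return (n_high * cached_fraction(num_users, t_high)
            + n_low * cached_fraction(num_users, t_low))


# Illustrative numbers: 7 users, 5 files at level 2 and 2 files at level 1.
print(normalized_cache_size(7, 5, 2, 2, 1))
```

The ratio `C(K-1, t-1) / C(K, t)` simplifies to `t / K`, so the required memory is linear in the caching levels.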

The key contribution of the CLCD scheme is in the delivery phase. When the user demands are revealed, they are clustered into three groups, denoted by , and , which correspond to the set of users that request high-level, low-level, and zero-level files, respectively. A naive approach would be to serve the users in each set , , separately as in Algorithm 1.

The main limitation of this approach is that, although multicasting gain of is targeted at line 4 of Algorithm 1, the achieved multicasting gain is limited to . The main objective of the CLCD strategy is to design a proper way of forming multicast messages such that users from two different groups can be targeted as well in order to reduce the communication load. To this end, CLCD addresses two main challenges: first, how to form the set of target users for multicasting, and second, how to align sub-file sizes. The latter challenge stems from the mismatch between the sizes of high-level and low-level sub-files. This mismatch can be resolved either at the placement or at the delivery phase. That is, the size of each multicast message can be fixed, or can be adjusted during the delivery phase according to the targeted users. This will be further clarified later in the paper. To simplify the presentation and gradually introduce the key ideas behind CLCD, in the following subsections we start with special cases of the general CLCD scheme, and gradually extend the analysis to the more general version.

III-B

In this section, we focus on a particular case of the CLCD scheme, , that is, high-level files are cached at level and low-level files are cached at level , , that is, all the files are cached either at level 1 or at level 2. We will later extend our analysis to the more general CLCD schemes.

III-B1 Placement phase

We first want to remark that the placement strategy employed here is not specific to and can be utilized for more general schemes as well, as long as . The placement of the high-level files is identical to the one in [1] such that each file is divided into sub-files and the high-level files are cached at level ; that is, each user caches sub-files of each high-level file.

The key difference appears in the placement of the low-level files; instead of dividing files into sub-files, we use the same sub-packetization scheme used for the high-level files above and group them into disjoint and equal-size subsets so that each user caches the sub-files of a different subset. Equivalently, each sub-file is cached by only a single user, and each user caches only sub-files of each low-level file exclusively. We assume here that is divisible by , which holds for any when is a prime number. We emphasize that this assumption is not required for CLCD in general; however, under this assumption all the files are divided into an equal number of sub-files, thus the alignment problem can be resolved at the placement phase as we explain next.
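The following sketch illustrates this shared sub-packetization for the case in which high-level files are cached at level 2 and low-level files at level 1. It uses a simple cyclic assignment rule, which is an assumption made here for illustration and is not necessarily the ordering used in Table I. Users are labeled 0 to K-1 so that modular arithmetic applies, and K is assumed to be odd so that the pair-indexed sub-files split evenly among the users.

```python
from itertools import combinations


def high_level_cache(num_users):
    """Level-2 placement: user k caches every pair-indexed sub-file containing k."""
    pairs = [frozenset(p) for p in combinations(range(num_users), 2)]
    return {k: [p for p in pairs if k in p] for k in range(num_users)}


def low_level_cache(num_users):
    """Same pair-indexed sub-files, but each one is stored by a single user.

    Cyclic rule (illustrative assumption): user k keeps the pairs
    {k, k+1}, ..., {k, k+(K-1)/2}, with indices taken modulo K.
    """
    if num_users % 2 == 0:
        raise ValueError("this sketch needs an odd number of users")
    half = (num_users - 1) // 2
    return {
        k: [frozenset({k, (k + j) % num_users}) for j in range(1, half + 1)]
        for k in range(num_users)
    }


high = high_level_cache(7)
low = low_level_cache(7)
print(len(high[0]), len(low[0]))  # sub-files per user: high-level vs low-level
```

With this rule the low-level index sets of a user are a subset of its high-level index sets, so the placement is systematic in the sense discussed in Remark 1.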

Although the presented placement scheme is general, in the case of , we can provide a closed form expression for the achievable delivery rate as a function of the requested files from each caching level, which is denoted by , and the corresponding delivery strategy can be obtained in polynomial time. This is particularly important, since it implies a low complexity coded delivery design with a certain achievable rate guarantee in the low cache-memory regime. Next, we provide an example to better illustrate the proposed placement strategy.

| User | 1 | 2 | 3 | 4 | 5 | 6 | 7 |

| index of cached sub-files |

Example 1. Consider a network of users and a library of files with decreasing popularity. To simplify the notation, we will denote the files as , , , , , , . We set the normalized cache size as . For the given cache size, we have ; that is, the most popular files, , are treated as high-level files, whereas and are treated as low-level files. Each file is divided into sub-files, and each user stores sub-files of each high-level file, and sub-files of each low-level file. User cache contents after the placement phase are illustrated in Table I; for each user the sub-files in red are cached for all the files, whereas the sub-files in blue are cached for only the high-level files.

Remark 1.

In Example 1, we use a systematic placement strategy for the low-level files, such that, for each user, the index set of the cached sub-files of a low-level file is a subset of the index set of the cached sub-files of a high-level file. Although this particular placement strategy may not affect the delivery rate performance, it can be beneficial in practice if the library and/or the file popularity dynamically varies over time and the cached content should be updated accordingly [22]. If a high-level file becomes low-level, then the user simply removes the sub-files colored in blue, and similarly, if a low-level file becomes high-level, then the user only downloads the sub-files colored in blue from the server. This systematic placement strategy can be summarized in the following way. Let be the set of index sets of sub-files that contain . Then, when a file is cached at level 2, user caches all the sub-files , . Further, let each , for , be ordered as illustrated in Table I, where the th column includes the elements of within an order. Once we have ordered sets for each user, then, starting from the first user, each user selects the first index sets that have not yet been chosen in order to cache the corresponding low-level files, as illustrated in Table I for .

III-B2 Delivery phase

| High-level users | Multicast messages | Low-level users | Multicast messages |
|---|---|---|---|
| 1 2 3 | | 6 7 | |
| 1 2 4 | | 6 7 | |
| 1 2 5 | | 6 7 | |
| 1 3 4 | | 6 | |
| 1 3 5 | | 6 | |
| 1 4 5 | | 6 | |
| 2 3 4 | | 6 | |
| 2 3 5 | | 6 | |
| 2 4 5 | | 6 | |
| 3 4 5 | | 6 | |
| 1 2 | | 6 | |
| 1 2 | | 6 | |
| 1 3 | | 6 | |
| 1 3 | | 6 | |
| 1 4 | | 6 | |
| 1 4 | | 6 | |
| 1 5 | | 6 | |
| 1 5 | | 6 | |
| 2 3 | | 7 | |
| 2 3 | | 7 | |
| 2 4 | | 7 | |
| 2 4 | | 7 | |
| 2 5 | | 7 | |
| 2 5 | | 7 | |
| 3 4 | | 7 | |
| 3 4 | | 7 | |
| 3 5 | | 7 | |
| 3 5 | | 7 | |
| 4 5 | | 7 | |
| 4 5 | | 7 | |
| 1 | | 7 | |
| 2 | | 7 | |
| 3 | | 7 | |
| 4 | | - | - |
| 5 | | - | - |

The delivery phase of the proposed scheme consists of four steps. We will continue to use Example 1 to explain the key ideas of the delivery scheme.

Example 1 (continued).

Assume that user requests file , , so that and . Before explaining the delivery steps in detail, we want to highlight the main idea behind the CLCD scheme, which has been inspired by the bit borrowing approach introduced in [15]. For the given example, if the conventional coded delivery scheme is used for delivering the missing sub-files of the high-level and low-level users separately, then the server should send all the messages given in Table II. Now, consider the following three messages in Table II: , which targets high-level users 1 and 2, and and , which are sent as unicast messages to low-level user 6. In the cross-level design, the server decomposes the message into sub-files and , and instead, pairs them with sub-files and to construct cross-level messages , which targets high-level user 1 and low-level user 6, and , which targets high-level user 2 and low-level user 6. Hence, the server multicasts two messages instead of three. We call this process multicast message decomposition, since the cross-level messages are constructed by decomposing a message constructed according to the conventional coded delivery scheme.
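The saving from multicast message decomposition can be reproduced with random byte strings. In the sketch below the variable names are hypothetical stand-ins for the sub-files in the example: two sub-files requested by high-level users 1 and 2 (both cached by low-level user 6), and two sub-files requested by user 6 that are cached by user 1 and user 2, respectively. All sub-files are assumed to have the same size, as ensured by the shared sub-packetization.

```python
import os


def xor(a: bytes, b: bytes) -> bytes:
    """Bit-wise XOR of two equal-length sub-files."""
    return bytes(x ^ y for x, y in zip(a, b))


SIZE = 8  # bytes per sub-file; every sub-file has the same size

# Hypothetical sub-files; the names are illustrative only.
high_for_1 = os.urandom(SIZE)  # requested by user 1, cached by users 2 and 6
high_for_2 = os.urandom(SIZE)  # requested by user 2, cached by users 1 and 6
low_a = os.urandom(SIZE)       # requested by user 6, cached by user 1
low_b = os.urandom(SIZE)       # requested by user 6, cached by user 2

# Conventional delivery: one pairwise multicast plus two unicasts to user 6.
conventional = [xor(high_for_1, high_for_2), low_a, low_b]

# Cross-level delivery: the pairwise message is decomposed and re-paired.
cross_level = [xor(high_for_1, low_a), xor(high_for_2, low_b)]

# Each receiver XORs out the sub-file it already holds in its cache.
user1_gets = xor(cross_level[0], low_a)
user2_gets = xor(cross_level[1], low_b)
user6_gets = [xor(cross_level[0], high_for_1), xor(cross_level[1], high_for_2)]

print(len(conventional), "->", len(cross_level), "transmissions")
```

Both cross-level messages are useful to two users at once, so the same four sub-files are delivered with two transmissions instead of three.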

The key challenge in CLCD is deciding which messages to decompose, and how to pair the decomposed high-level sub-files with the low-level sub-files. Now, if we go back to Example 1, all the messages in blue in Table II will be used for cross-level delivery and the total number of broadcast messages will be reduced to from (24% reduction). In the delivery phase, the server first multicasts the messages in red in Table II, then the messages in blue are used to construct cross-level messages, and finally the remaining messages in green are sent as in the conventional coded delivery scheme. Now, we will further explain the four steps of the delivery phase:

Step 1) Intra-high-level delivery:

The first step of the delivery phase is identical to that in (2) for . The only difference is that, now we consider only the users in , instead of . This corresponds to the red messages in the second column of Table II.

Step 2) Intra-low-level delivery:

The second step also follows (2) with , targeting low-level users in . These are the red messages in the last column of Table II.

Step 3) Cross-level delivery:

This step is the main novelty of the CLCD scheme. First, note that each high-level user has sub-files in its cache that are requested by a low-level user. For instance, in Example 1, user 1 has sub-files that are requested by user 7. For and , let denote the set of sub-files stored at high-level user and requested by low-level user , e.g., in Example 1. We note that these sub-files are sent as unicast messages in the conventional coded delivery scheme. Similarly, for and , let be the set of sub-files stored by low-level user that are requested by high-level user , e.g., in Example 1. One can observe that a cross-level message, targeting high-level user and low-level user , can be constructed by taking one sub-file from each of and , and bit-wise XORing them. For the given example, any three sub-files can be chosen from set and paired with any of the sub-files in to construct a cross-level message. To generalize, if there is a set of sub-files such that , then we can easily construct cross-level messages that target high-level user and low-level user , using any one-to-one mapping between these two sets. However, we remark that, if sub-file is used to construct a cross-level message, i.e., , then the multicast message should also be decomposed, and the sub-file should also be paired with a low-level file, i.e., .

Hence, the main challenge in cross-level delivery is the construction of sets in a joint manner. Before the construction process of these sets, we will define two more sets that will be useful. We note that, in the given example, sub-files are also cached by a high-level user, but sub-file is cached by only low-level users. For and , we introduce the set of the sub-files that are requested by high-level user , and cached by low-level user as well as by a high-level user, e.g., . Furthermore, we introduce the set , , of the sub-files that are requested by high-level user but cached by only low-level users, i.e., . We have, in Example 1, .

In the third step of the proposed CLCD scheme, our aim is to deliver all the remaining sub-files requested by the low-level users and the sub-files requested by the high-level users that are cached by only low-level users, via multicast messages, each destined for one high-level and one low-level user. More formally, we want low-level user , , to recover all the sub-files in , and we want high-level user , , to recover all the sub-files in . To this end, sets must satisfy the following properties:

| (4) | |||

| (5) |

where (4) ensures that each high-level user collects its missing sub-files that are available only in the caches of the low-level users and (5) guarantees that high-level users do not receive the same sub-file multiple times.

Next, we show how to construct the sets , , . In order to ensure (4) and (5), is partitioned into subsets with approximately uniform cardinality, i.e., , , , and such that holds for all and . Further details on approximately uniform partitioning are provided in Appendix C. We note that, if , then (4) holds. We also assume that the same partitioning is applied to all ’s. Partitions of ’s for Example 1 are illustrated in Table III.
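One simple way to obtain an approximately uniform partition, in which the subset sizes differ by at most one, is sketched below. This is a generic illustration under that assumption; the exact procedure used by the scheme is the one described in Appendix C, and the function name is hypothetical.

```python
def approx_uniform_partition(items, num_parts):
    """Split `items` into `num_parts` consecutive parts whose sizes differ by at most one."""
    items = list(items)
    base, extra = divmod(len(items), num_parts)
    parts, start = [], 0
    for i in range(num_parts):
        size = base + (1 if i < extra else 0)  # the first `extra` parts get one more item
        parts.append(items[start:start + size])
        start += size
    return parts


print(approx_uniform_partition(range(7), 3))  # [[0, 1, 2], [3, 4], [5, 6]]
```

The parts are disjoint and cover the whole input, so every element of the partitioned set is assigned to exactly one subset.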

For given and , can be constructed as follows:

,

for some . Then, from the construction, one can easily verify that (5) holds. We note that, in this construction, simply denotes the sub-files in that are not used in a cross-level message. In Example 1, this corresponds to the green multicast messages in Table II. In particular, if multicast message is not decomposed for cross-level delivery, then and . Hence, the ultimate aim is to find a proper way of constructing sets , which is equivalent to deciding which multicast messages are to be decomposed.

In order to ensure the initial requirement of , we need to show that it is possible to construct , , , which satisfy the following equality

| (6) |

We note that, if the following inequality holds

| (7) |

satisfying (6) can always be found.

From the construction, we know that ; however, and depend on the realization of the user demands, i.e., and or due to the approximately uniform partitioning. Accordingly,

| (8) | ||||

| (9) |

One can observe that, when , in both cases. If , the high-level file is considered as a low-level file in the delivery phase, and the achievable rate becomes . In the remainder of this section, we assume . One can also observe that , since . Let be the cardinality of the set that satisfies (6), i.e., . We can consider any subset of with cardinality as to construct .

| (i,j) | Cross-level messages | |||||

| (1,6) | , , | |||||

| (1,7) | , , | |||||

| (2,6) | , , | |||||

| (2,7) | , , | |||||

| (3,6) | , , | |||||

| (3,7) | , , | |||||

| (4,6) | , , | |||||

| (4,7) | , , | |||||

| (5,6) | , , | |||||

| (5,7) | , , |

Eventually, the overall problem can be treated as choosing the multicast messages that will be decomposed, colored with blue in Table II, so that the sub-files that are not used for cross-level delivery satisfy the constraint , . Once the decomposed files and are decided, can be constructed easily as illustrated in Table III, which also lists all the cross-level messages.

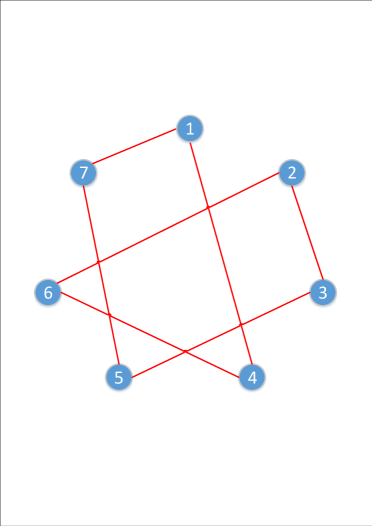

We remark that when , for and , this also requires . Hence, for a particular with , for all , construction of the sets is equivalent to finding number of pairs such that each appears in exactly of them. This problem can be analyzed by constructing a graph whose vertices are the elements of , and each vertex has a degree of so that each edge of the graph can be considered as a pair. To successfully construct such a graph we utilize the matching concept used in graph theory. The key graph theoretic definitions and lemmas used for this analysis are introduced in Appendix A, while the construction of sets and is detailed in Appendix B.
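The pairing requirement above, a set of pairs in which every element appears in exactly the same number of pairs, is the edge set of a regular graph. The sketch below builds such a graph with a circulant construction. This is a technique swapped in here purely for illustration; the construction used by the scheme relies on matchings and is detailed in Appendices A and B. The function name and arguments are hypothetical.

```python
def regular_pairs(vertices, degree):
    """Return pairs (edges) such that every vertex appears in exactly `degree` pairs.

    Circulant construction: vertex i is joined to i +/- 1, ..., i +/- degree//2
    (modulo n), plus the opposite vertex i + n/2 when `degree` is odd.
    """
    vertices = list(vertices)
    n = len(vertices)
    if degree < 0 or degree >= n or (n * degree) % 2 != 0:
        raise ValueError("no simple regular graph with these parameters")
    edges = set()
    for offset in range(1, degree // 2 + 1):
        for i in range(n):
            edges.add(frozenset({vertices[i], vertices[(i + offset) % n]}))
    if degree % 2 == 1:  # n is even here, so opposite vertices exist
        for i in range(n // 2):
            edges.add(frozenset({vertices[i], vertices[i + n // 2]}))
    return edges


pairs = regular_pairs([1, 2, 3, 4, 5], 2)
print(sorted(tuple(sorted(p)) for p in pairs))
```

Each returned pair can then be read as one multicast message that is kept (or decomposed) as a unit, with every involved element used the same number of times.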

Step 4) Intra-high-level delivery with multicasting gain of two:

In the last step, the server multicasts the remaining messages, which are colored in green in Table II. In Example 1, this step is finalized by unicasting the sub-file . Let denote the set of multicast messages that are not decomposed to be used for cross-level delivery, and hence, are multicast in the last step.

The required delivery rate for a particular demand vector under scheme is given by the following lemma.

Lemma 1.

For a cache size of , and demand realization , the following delivery rate is achievable by the proposed scheme:

| (10) |

where is the total number of missing sub-files for demand realization , and is given by

| (11) |

Note that, in the centralized coded delivery scheme of [1], when all the files are cached at level , the delivery rate is equal to . We can observe from (10) that, when , there are only low-level users and . Similarly, when , there are only high-level users and . In general, Lemma 1 implies that for any demand realization , sub-files are served with a minimum multicasting gain of 2. We also remark that the delivery rate depends only on the vector , rather than on the demand vector; hence, the dependence on in (10) and (11) can be replaced by , i.e., we use and , respectively. Note that and are random variables, and their distribution depends on , and the popularity distribution. Accordingly, the average delivery rate can now be written as follows:

| (12) |
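Since user requests are independent, the numbers of users requesting from each group follow a multinomial distribution, so the average can be computed over group counts instead of full demand vectors. The sketch below assumes group request probabilities `p_high` and `p_low` (the zero-level probability is the remainder and may be zero) and takes the per-realization rate as a user-supplied function; the placeholder rate in the usage line is an assumption and is not the expression in Lemma 1.

```python
from math import comb


def group_count_probability(k_high, k_low, num_users, p_high, p_low):
    """Multinomial probability that k_high users request high-level files
    and k_low users request low-level files (the rest request zero-level files)."""
    k_zero = num_users - k_high - k_low
    if min(k_high, k_low, k_zero) < 0:
        return 0.0
    p_zero = max(0.0, 1.0 - p_high - p_low)  # guard against rounding below zero
    ways = comb(num_users, k_high) * comb(num_users - k_high, k_low)
    return ways * p_high ** k_high * p_low ** k_low * p_zero ** k_zero


def average_rate_over_groups(num_users, p_high, p_low, rate):
    """Average of rate(k_high, k_low) over all group-count realizations."""
    total = 0.0
    for k_high in range(num_users + 1):
        for k_low in range(num_users - k_high + 1):
            prob = group_count_probability(k_high, k_low, num_users, p_high, p_low)
            total += prob * rate(k_high, k_low)
    return total


# Placeholder rate (assumption): count the users outside the zero-level group.
print(average_rate_over_groups(7, 0.6, 0.3, lambda kh, kl: kh + kl))
```

The double loop visits a number of realizations that grows quadratically in the number of users, which is far smaller than the number of demand vectors.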

In the next subsection, we extend our analysis to the case in which a certain subset of the files are not cached at all, that is, .

III-C

We recall that when , the cache memory requirement for is given by

| (13) |

Hence, in this particular case, for a given cache memory size of , and can be directly written as a parameter of , , and . However, by choosing not to cache number of least popular files, it is possible to consider different values for and under the constraint

| (14) |

or, equivalently,

| (15) |

From Equation (15), one can observe that by varying the parameter it is possible to seek a different placement strategy in terms of the caching levels. We will later discuss how the placement strategy can be optimized; for now, we focus on the delivery phase of the scheme, where we have three sets of users, , and , and set as before.

| (i,j) | Cross-level messages | ||||||

| (1,5) | , , | ||||||

| (1,6) | , , | ||||||

| (2,5) | , , | ||||||

| (2,6) | , , | ||||||

| (3,5) | , , | ||||||

| (3,6) | , , | ||||||

| (4,5) | , , | ||||||

| (4,6) | , , |

We highlight the key modifications compared to the case . First, we introduce a new set of sub-files that are requested by a high-level user , and cached by a low-level user and a zero-level user. The fundamental modification appears in the cross-level delivery step, i.e., in Step 3 of the delivery algorithm, particularly in the construction of set . When , is constructed in the following way

| (16) |

where . Initially, we want all the sub-files in to be included in , i.e., ; however, this is not possible if

| (17) |

In that case, sub-files must be removed from to obtain , where is found as

| (18) |

where is if , and otherwise.

We remark that when , this means that , thus and . In general, , where

| (19) |

When ’s are obtained, the sets are constructed as in . Overall, the delivery phase of consists of five steps. We will use the following example to illustrate these steps.

Example 2. In this example, we use the same setup as in Example 1; however, now we assume that files are high-level files, and are low-level files, while file is not cached at all. We also assume that users request files respectively. Hence, , and .

| High-level users | Multicast message |
|---|---|
| 1 2 3 | |
| 1 2 4 | |
| 1 2 | |
| 1 3 4 | |
| 1 3 | |
| 1 4 | |
| 2 3 4 | |
| 2 3 | |
| 2 4 | |
| 3 4 | |

| Low-level users | Multicast message |
|---|---|
| 5 6 | |
| 5 6 | |
| 5 6 | |

Step 1) Intra-high-level delivery:

The first step of the delivery phase is identical to that in (2) for . The only difference is that, now we consider only the users in , instead of , and a multicast message is not transmitted for subsets of , i.e., .

Step 2) Intra-low-level delivery:

The second step also follows (2) with , targeting low-level users in . In Example 2, the messages delivered by the server corresponding to the first two steps are listed in Table V.

Step 3) Cross-level delivery:

We construct sets as illustrated in Table IV for Example 2. One can easily observe that , and , which implies that . Then, we evaluate the value of for all and . Subsequently, we construct the sets and for the evaluated values of . Eventually, sets and are used to construct the set of multicast messages similarly to the case . We again refer the reader to Appendix B for further details.

Sets , , and all the multicasted messages in the third step for Example 2 are given in Table IV.

Step 4) Intra-high-level delivery with multicasting gain of two:

In this step, the server multicasts the messages

| (20) | ||||

each of which is destined for two high-level users.

Step 5) Unicasting:

The remaining sub-files are sent as unicast messages in the last step. These sub-files fall into three groups: the sub-files that are requested by zero-level users; the sub-files that are requested by low-level users and cached by zero-level users; and the sub-files in set .

Let be the number of transmitted messages (unicast and multicast) for a given demand realization , i.e.,

| (21) |

Then, the normalized delivery rate for is given by . The average delivery rate can be written as follows

| (22) |

where denotes the demand realization. Hence, the placement strategy can be written as the following optimization problem:

| subject to: | (23) |

To solve the optimization problem P1, we first need to compute the required delivery rate for each possible demand realization , which scales with . The cache memory constraint in (15) can be rewritten as follows:

| (24) |

where and are defined as the numbers of files that are not cached in order to cache all the remaining files at level and , respectively. Solving the optimization problem P1 is therefore equivalent to searching for the value of that gives the minimum . For each value of , must be calculated for each possible realization of , which scales with . Hence, the optimization of the placement phase has an overall complexity of .
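The averaging in (22) can be sketched numerically. The following is a minimal Python sketch, assuming (as the complexity argument above suggests) that a demand realization is summarized by the numbers of high-level, low-level, and zero-level users, and that users request files independently, so that these counts follow a multinomial distribution. The names `average_rate`, `p_high`, `p_low`, and the callback `rate` are hypothetical; `rate` stands in for the per-realization delivery rate and is not the paper's expression.

```python
from math import comb


def average_rate(K, p_high, p_low, rate):
    """Average a per-realization rate over all user-type counts.

    K      : number of users
    p_high : total popularity mass of the high-level files (assumed given)
    p_low  : total popularity mass of the low-level files (assumed given)
    rate   : hypothetical callback rate(k_h, k_l, k_0) -> float
    """
    p_zero = max(0.0, 1.0 - p_high - p_low)
    total = 0.0
    # There are (K + 1)(K + 2) / 2 realizations, i.e., on the order of K^2.
    for k_h in range(K + 1):
        for k_l in range(K - k_h + 1):
            k_0 = K - k_h - k_l
            # Multinomial probability of (k_h, k_l, k_0).
            prob = (comb(K, k_h) * comb(K - k_h, k_l)
                    * p_high ** k_h * p_low ** k_l * p_zero ** k_0)
            total += prob * rate(k_h, k_l, k_0)
    return total
```

Repeating this evaluation for every candidate pair of "number of files not cached" values gives the exhaustive placement search described above.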

IV Extension to

In this section, we extend our analysis to the more general CLCD scheme where can take any integer value, although we still fix . We emphasize here that when , it is possible to establish a link between the process of constructing multicast messages, particularly the forming of pairs of sub-files, and the concept of matchings from graph theory. However, this correspondence does not generalize with , we particularly focus on a multicasting gain of two, whereas in this section, our aim is to provide a more general perspective on CLCD and show how the delivery phase of the proposed scheme can be analyzed as an optimization problem.

The placement phase of the scheme is similar to that of . Each file is divided into sub-files, and the high-level files are cached at level ; that is, each user caches sub-files of each high-level file. On the other hand, for the low-level files, the sub-files are grouped into disjoint and equal-size subsets, and each user caches the sub-files in a different subset, which corresponds to caching at level 1.

Now, to analyze the delivery phase with cross-level coded delivery, let be the power set of , and be the set of sets with cardinality , i.e., , and defined as . Recall that, with the conventional coded delivery scheme, the low-level and high-level users are served separately. Hence, for the delivery of the high-level files, for each set the server transmits

| (25) |

in order to deliver all the sub-files requested by the high-level users. However, although the high-level files are cached at level , a message destined for high-level users has a multicasting gain of , instead of . In particular, when , the corresponding message is a unicast message.

We call a multicast message of the form (25) decomposable if, in the corresponding set , there is at least one high-level user and at least one low-level user. The server can utilize those high-level sub-files for cross-level delivery. Let be the set of sets that correspond to a decomposable multicast message. In the scheme, for each demand realization , we look for a set to use for pairing sub-files of high-level users and sub-files of low-level users.
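The classification above can be illustrated with a short enumeration. The following is a minimal Python sketch, assuming that high-level files are cached at an integer level `t`, so that conventional delivery considers every user subset of size `t + 1`, and that the multicasting gain of a message equals the number of high-level users in the subset. The function name `classify_messages` and its arguments are hypothetical and are not taken from the paper.

```python
from itertools import combinations


def classify_messages(users, high, low, t):
    """List (subset, gain, decomposable) for each subset of size t + 1.

    users : set of all user indices
    high  : set of high-level user indices
    low   : set of low-level user indices
    t     : caching level of the high-level files (assumed integer)
    """
    result = []
    for subset in combinations(sorted(users), t + 1):
        members = set(subset)
        gain = len(members & high)  # number of users served by the message
        if gain == 0:
            continue  # no high-level sub-file is sent for this subset
        # Decomposable: at least one high-level and one low-level user.
        decomposable = len(members & low) >= 1
        result.append((subset, gain, decomposable))
    return result


# Toy setting (an assumption): users 1, 2 are high-level, 3, 4 are low-level.
for subset, gain, dec in classify_messages({1, 2, 3, 4}, {1, 2}, {3, 4}, 2):
    print(subset, "gain:", gain, "decomposable:", dec)
```

In this toy setting, every subset contains a low-level user, so every message is a candidate for decomposition; the subsets with a gain of one correspond to unicast messages under conventional delivery.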

We introduce variable , where if the conventional coded delivery scheme, as given in (25), is used to deliver the sub-files corresponding to set , while if the corresponding multicast message is decomposed and the sub-files in the message are delivered by cross-level coded delivery. We further introduce variables , for and , where , if sub-file is paired with a sub-file that is requested by low-level user and cached by high-level user . Then, the server multicasts . Hence, for each set there are variables in the form .

To illustrate the multicast message decomposition process, consider the scheme, and assume that there are users with a demand realization , and . Consider a particular set . According to (25), the server multicasts for high-level users 1 and 2. With a multicast message decomposition specified by , , , , and , the server multicasts the messages and in the cross-level delivery phase. We remark that, if the conventional coded delivery scheme is used, sub-files and are sent as unicast messages. Hence, in total three messages would be transmitted with the conventional coded delivery scheme, i.e., , , and ; however, via CLCD this can be reduced to two, i.e., and . One can observe that if a multicast message is decomposed and used by the CLCD scheme, then the number of multicast messages is reduced by one.

Note that variables and cannot be 1 at the same time, since the sub-file can be paired with only one low-level sub-file. Hence, for the message decomposition we have the following constraint

| (26) |

Another constraint, from the construction, is that each high-level user caches sub-files that are missing at low-level user ; thus, the total number of sub-file pairings between a low-level user and a high-level user is at most , i.e.,

| (27) |

Finally, if , then all the corresponding sub-files must be sent via CLCD, i.e.,

| (28) |

For the given values of the variables and , the delivery phase of is given in Algorithm 2. According to Algorithm 2, the number of transmitted messages, , for a given demand realization is given by

| (29) | ||||

| (30) |

Hence, the main objective of scheme is to maximize in order to minimize the delivery rate for each possible demand realization. Equivalently, the minimum delivery rate problem can be converted to the following optimization problem:

| (31) | ||||

| (32) | ||||

| (33) |

By solving P2 for each demand realization , we can construct the optimal coded delivery scheme for . We remark that P2 is a binary integer programming problem, and unlike , the optimization of the delivery phase cannot be solved in polynomial time. Nevertheless, the complexity of P2 does not depend on the number of files, , but only on the number of users, ; hence, for a moderate number of users, the CLCD scheme can be optimized even for a very large file library. Once the required delivery rate for each possible demand realization is computed, the optimal placement strategy, that is, the number of files not to cache, can be found by following the same procedure as in Section III. We remark that, instead of solving P2, it is also possible to design a greedy algorithm to construct the multicast messages.

In Section VI, we will study the most general scheme and show that the delivery phase can still be analyzed as an optimization problem with a reduced computational complexity. Before extending our analysis, however, we present numerical results comparing the CLCD schemes introduced so far with the alternative approaches in the literature.

V Numerical Results

In this section, we compare the performance of the proposed CLCD scheme with that of the conventional centralized coded delivery scheme with two different content placement strategies. The first content placement strategy is called naive memory sharing, introduced in [1], in which all the files are cached at the same level according to a single parameter . Note that, when parameter is not an integer, then the files in the library are divided into two disjoint fragments identically, and these fragments are cached according to parameters and .
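The memory sharing step for a non-integer caching parameter can be made concrete with a small calculation. The following is a minimal Python sketch, assuming the standard worst-case delivery rate of the centralized scheme in [1], namely (K - t)/(t + 1) for an integer caching level t = KM/N with at least as many files as users, and linear interpolation between the two neighboring integer levels otherwise. The function names are hypothetical, and the rate reported in the simulations of this section may differ, since it is averaged over demand realizations.

```python
from math import ceil, floor


def coded_rate(K, t):
    """Worst-case rate of the scheme in [1] at an integer caching level t."""
    return (K - t) / (t + 1)


def naive_memory_sharing_rate(K, N, M):
    """Rate when all N files are cached at the same level t = K * M / N.

    If t is not an integer, each file is split into two fragments that are
    cached at levels floor(t) and ceil(t); the fragment sizes are chosen so
    that the cache of size M is filled exactly.
    """
    t = K * M / N
    lower, upper = floor(t), ceil(t)
    if lower == upper:
        return coded_rate(K, lower)
    frac_upper = t - lower  # fraction of each file cached at level ceil(t)
    return ((1 - frac_upper) * coded_rate(K, lower)
            + frac_upper * coded_rate(K, upper))


# Toy numbers (assumptions): 4 users, 4 files, cache size of 1.5 files.
print(naive_memory_sharing_rate(4, 4, 1.5))
```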

The second benchmark strategy is the general memory sharing scheme proposed in [14], which is shown to outperform other coded delivery techniques under non-uniform demand distributions. In this scheme, each file is divided into disjoint fragments, and the th fragment, , is cached according to parameter , while the first fragment is not cached. Thus, the overall system can be considered as a combination of sequential coded delivery phases with different multicasting gains, i.e., the th delivery phase is executed with multicasting gain of . The size of each fragment of each file can be obtained via solving a linear optimization problem [14].

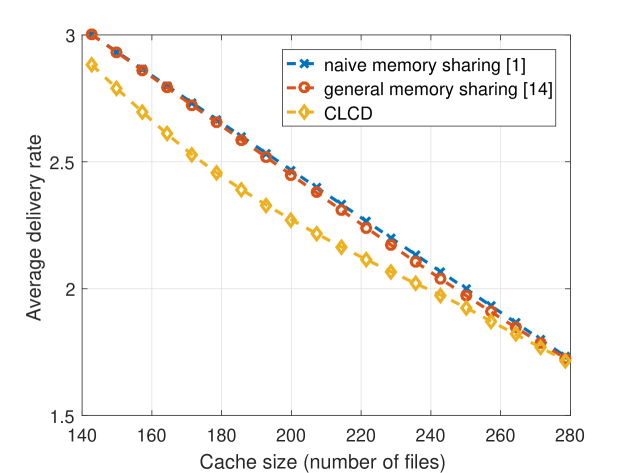

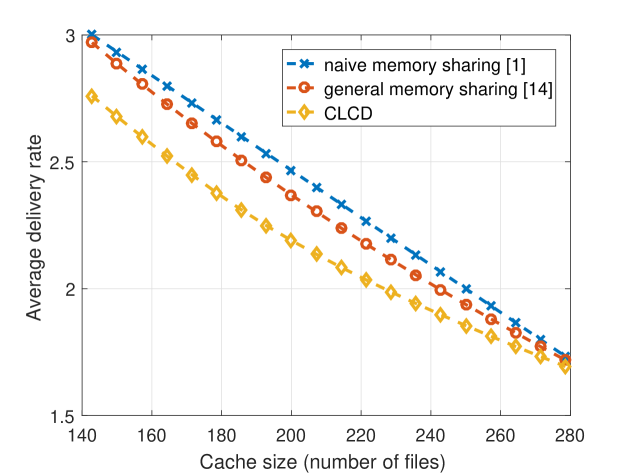

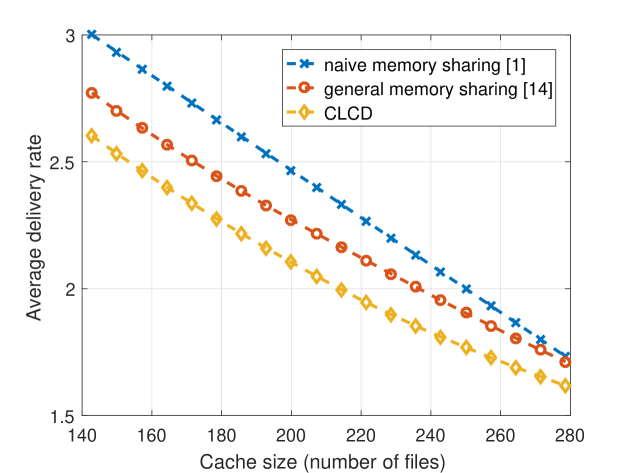

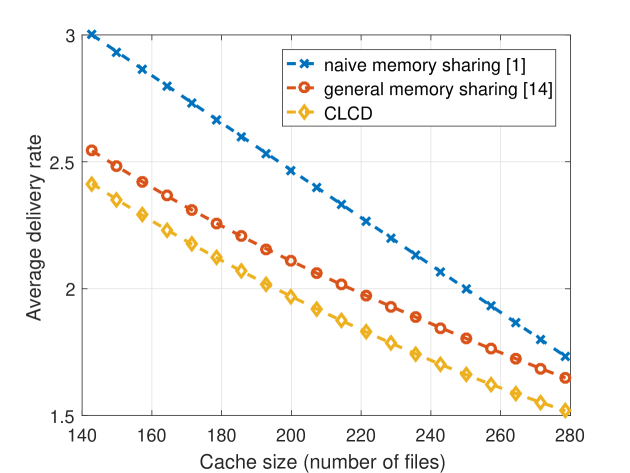

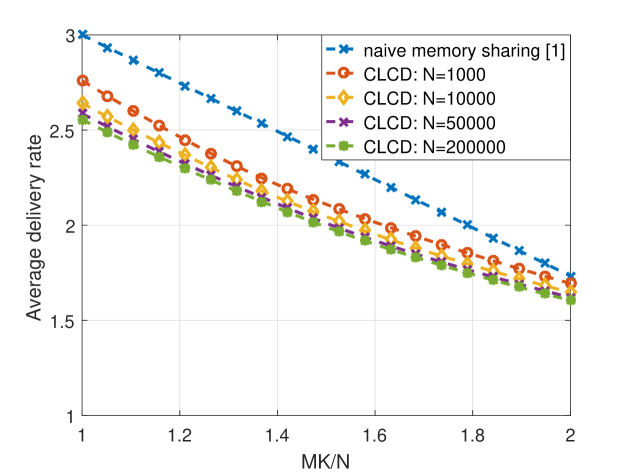

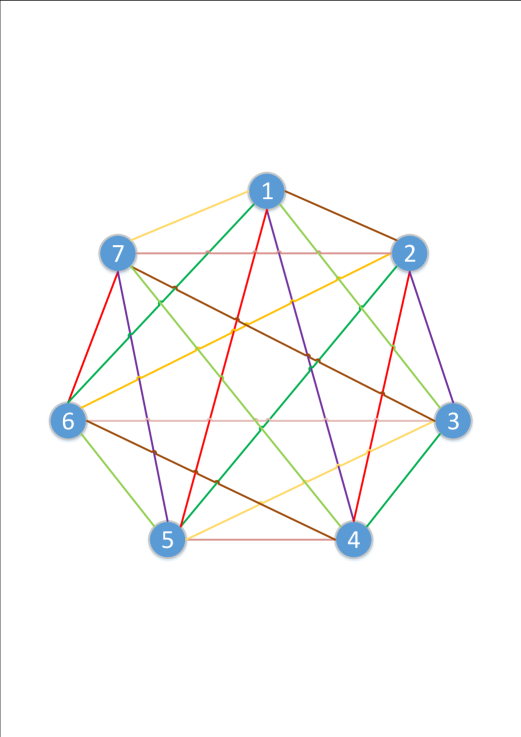

In general, it has been observed that the popularity of video files for on-demand streaming applications approximately follows a Zipf distribution with parameter [16], [17]. In our simulations, we consider . We note that represents the skewness of the distribution of video popularity, where smaller values indicate a more uniform popularity distribution. In realistic scenarios, the number of files in the video library is considered to be on the order of . However, due to the complexity of the general memory sharing scheme, we will consider and in our initial simulations. We let the cache size vary from 140 to 280, which corresponds to . We are particularly interested in this regime as all three strategies considered in this paper converge to the same performance for larger values. The delivery rates achieved by the naive memory sharing, the general memory sharing, and the CLCD schemes are illustrated in Fig. 1. We note that, for each value of the Zipf parameter, we calculate the achievable delivery rate of the scheme , for , and take the minimum of them.
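For reference, the Zipf popularity profile used in such simulations can be generated as follows. This is a minimal Python sketch, assuming the usual definition in which the n-th most popular of N files is requested with probability proportional to n raised to the power of minus the Zipf parameter; the names `zipf_popularity`, `N`, and `alpha` are illustrative.

```python
def zipf_popularity(N, alpha):
    """Return [p_1, ..., p_N] with p_n proportional to n ** (-alpha)."""
    weights = [n ** (-alpha) for n in range(1, N + 1)]
    total = sum(weights)
    return [w / total for w in weights]


# A larger alpha concentrates the requests on the most popular files.
for alpha in (0.0, 0.7, 1.0):
    popularity = zipf_popularity(1000, alpha)
    print(alpha, round(sum(popularity[:100]), 3))
```

With `alpha = 0` the distribution is uniform, which matches the remark above that smaller parameter values indicate a more uniform popularity profile.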

We observe that when the number of files is large, the general memory sharing algorithm tends to divide the library into two groups according to their popularities, such that the most popular files are cached according to the naive memory sharing scheme while the less popular files are not cached at all. One can observe from Fig. 1 that when or the optimal memory sharing scheme performs very similarly to the naive memory sharing scheme, while the proposed CLCD scheme can provide a significant reduction in the achievable delivery rate. In addition, we observe that CLCD outperforms both the naive and general memory sharing schemes at all values considered here.

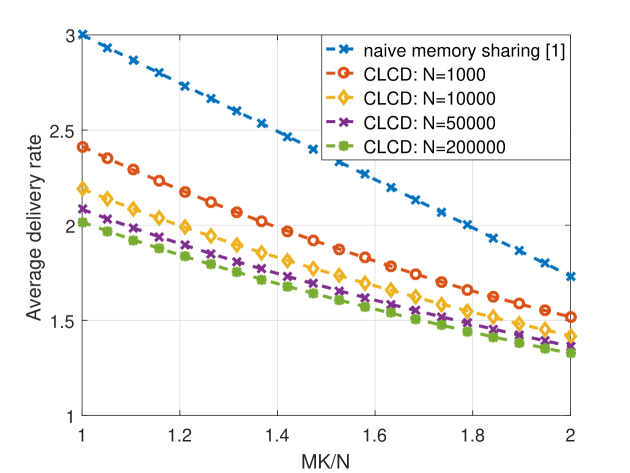

We also perform simulations to illustrate how the number of files affects the performance of the CLCD scheme when is fixed, i.e., the cache memory size scales with the number of files in the library. In the naive memory sharing scheme, all the files are cached at level , thus the performance of this scheme does not change with the size of the file library as long as is fixed. In the simulations, we consider , and . Due to its complexity, it is not possible to run the general memory sharing scheme for large libraries; thus, we compare the CLCD scheme only with the naive memory sharing scheme. We observe in Fig. 2 that the CLCD scheme performs better for larger file libraries. In particular, when the performance gap between the CLCD scheme and the naive memory sharing scheme for is almost twice the performance gap for .

VI General Scheme

Having shown the superiority of the proposed scheme to its alternatives in the literature, in this section, we present the most generic form of the CLCD scheme. In the previously introduced versions with , the placement phase is designed to prevent any misalignment between the high-level and low-level sub-file sizes. Hence, the fundamental modification in the scheme with respect to the previous versions is that the alignment of the sub-file sizes and the construction of the multicast messages are done jointly during the delivery phase. In this section, for the sake of clarity, we start our presentation by setting . This constraint will later be removed. The general structure of the scheme is given in Algorithm 3.

Here, the main design issue is how to overlap the bits of high-level and low-level files that are delivered in the CLCD phase. The key point here is that, for a subset of different types of users, i.e., and with , any high level user appears in exactly of the subsets of with . Hence, the requested sub-file should be divided into smaller sub-files. Similarly, from the perspective of low-level users, each appears as a subset of exactly different sets, thus the sub-file requested by low-level user , , should be divided into smaller sub-files. Let and be the sizes of the sub-files used for the aforementioned high-level and low-level sub-files, respectively, in the cross-delivery phase, i.e.,

| (34) |

and

| (35) |

By expanding (34) and (35) one can observe that

| (36) |

which implies that it is possible to overlap the high-level files with the low-level files in the cross-delivery step as long as there is at least one low-level user in . Accordingly, the overall rate, for given can be written as

| (37) | ||||

Here, the CLCD scheme has two objectives: one is to achieve a multicasting gain of at least in the delivery phase, and the other is to overlap the delivery of the high-level and low-level files as much as possible. Hence, we want to minimize the term in (37). This can be achieved by dividing the high-level files in a non-uniform manner. To clarify the non-uniform file division, consider the case with , , and assume that there are users with a demand realization , where and .

According to the proposed CLCD design, for set , delivery of the requested sub-files is realized over 5 steps, with a multicasting gain of , corresponding to the subsets , , , , . We recall that since user 1 receives its required file , part by part in four of these steps, the corresponding sub-file is further divided into smaller sub-files. Let denote the one delivered in the step corresponding to subset . Subset consists of only high-level users. We also recall that, as given in (36), the low-level sub-files are larger than the high-level ones. Hence, to reduce the amount of non-overlapping bits , one can reduce the size of and, accordingly, increase the size of , , until they match with that of .

The main question arising from this observation is how to adjust the size of the high-level sub-files assigned to each so that the high-level and low-level sub-files overlap as much as possible. Let us focus on a particular subset , and a particular high-level user . Let the number of subsets , be denoted by , and the number of subsets be denoted by . Since we want to reduce the size of sub-files , , and increase that of sub-files , , we have the following constraint based on (36):

| (38) |

where denotes the ratio of reduction in the size of the high-level sub-files. Accordingly, parameter can be written as

| (39) |

We note that parameters , and are identical for all the high-level users ; hence, we drop the user index for simplicity. Once the sizes of the high-level sub-files are adjusted, can be rewritten as follows:

| (40) |

Consequently, by inserting above into (37), one can obtain the required delivery rate for Algorithm 3.

We remark that with the CLCD scheme all the sub-files are served with a minimum multicasting gain of . If , one can claim the optimality of the delivery phase, under the same placement scheme, since the low-level files cannot be served with a multicasting gain greater than . On the other hand, if , then the non-overlapped bits of the high-level sub-files are delivered with a multicasting gain of , although, in principle, they could be delivered with a multicasting gain of , where ; thus, when this approach might be inefficient. Hence, the main question at this point is how to decide which subsets with will be used for CLCD. To this end, we introduce another parameter, denoted by , where , such that is used for CLCD if ; otherwise, high-level users in are served with a multicasting gain of as in Algorithm 3.

The CLCD scheme with is given in Algorithm 4.

The delivery rate, , for given demand realization with Algorithm 4 can be written as,

| (41) | ||||

The second term on the right hand side of (41) does not depend on parameter ; whereas the first term decreases with , while the last term increases, which implies that seeks a balance between the first and the last terms. Therefore, for each , a different value may minimize . The average delivery rate is given by

| (42) |

In general, given and , one should find the best value for each .

In the most generic form of the CLCD scheme we also allow . We slightly modify the delivery scheme by simply considering the zero-level users (users with uncached file requests) as low-level users for cross-level delivery and serving them with unicast transmissions at the end. Accordingly, the delivery rate is given by

| (43) | ||||

We note that it might be possible to further reduce the delivery rate by revisiting the sub-file size alignment strategy taking into account the zero-level users at the expense of additional complexity.

VII Conclusions

We introduced a novel centralized uncoded caching and coded delivery scheme, called CLCD, for cache-aided content delivery under non-uniform demand distributions. The proposed caching scheme uses a different placement strategy for the files depending on their popularities, such that more cache memory is allocated to the sub-files of more popular files than to those of less popular ones. The main novelty of the proposed approach is in the delivery phase, where the messages multicasted to groups of users are carefully designed to satisfy as many users as possible with a minimal delivery rate when the files are cached in a non-uniform manner based on their popularities. For a certain special case of the proposed algorithm, we are able to provide a closed-form expression for the delivery rate. We also showed via numerical simulations that the proposed CLCD scheme can provide up to reduction in the average delivery rate compared to the state-of-the-art. We expect this gain to grow considerably as the number of files increases, while the state-of-the-art schemes in the literature cannot be implemented for a large file library due to their formidable complexity.

Appendix A Set partitioning

In this section, we will introduce some useful lemmas and definitions related to graph theory that will be utilized in the process of multicast message construction, particularly for pairing the sub-files. We use , and to denote a graph, its set of vertices and edges, respectively. For simplicity, we enumerate the vertices, and set , so that represents the number of vertices.

Definition 1.

A graph is a complete graph if each pair of distinct vertices is connected by a unique edge.

Definition 2.

A matching of graph is a subgraph of whose edges share no vertex; that is, each vertex in matching has degree one.

Definition 3.

A matching is maximum when it has the largest possible size.

Definition 4.

A matching of a graph is perfect if it contains all of ’s vertices. By definition, a perfect matching is a maximum matching, although the converse is not necessarily true.

Definition 5.

A 1-factorization of a graph is a collection of edge-disjoint perfect matchings (also referred to as 1-factors) whose union is . Graph is 1-factorable if it admits a 1-factorization.

Lemma 2.

A complete graph , with for some , is 1-factorable and

Definition 6.

For a given edge , is the index set of adjacent vertices .

Definition 7.

A partition of a set is a collection of nonempty and mutually disjoint subsets of , called blocks, whose union is . For instance given set ; , is a partition of with blocks of sizes and .

Lemma 3.

Consider a graph with for some . Then, for any perfect matching of , is a partition of set with blocks of size two.

Lemma 4.

Given set , with for some , it is possible to obtain different disjoint partitions of set with blocks of size two. (By disjoint partitions, we mean that the distinct partitions, represented as sets, are disjoint.)

Proof.

Consider a complete graph , such that with . From Lemma 2, can be written as a collection of edge-disjoint perfect matchings. Lemma 3 implies that for any , one can obtain which is a partition of set with blocks of size two. Further, since any two perfect matchings in this collection are edge-disjoint, the corresponding partitions and are disjoint, i.e., . ∎

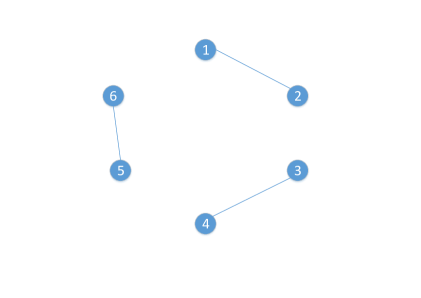

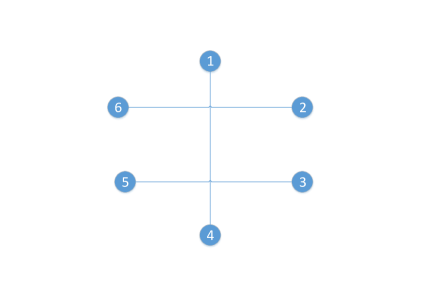

In Fig. 3, all disjoint partitions for set , and the corresponding edge-disjoint perfect matchings are illustrated. We note that, although Lemma 4 is introduced for set , with for some , it is valid for any set , , and we use the notation to refer to a particular set .
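The construction behind Lemma 4 can be sketched in code. The following is a minimal Python sketch, assuming the classical circle (round-robin) method for 1-factorizing a complete graph of even order: one element is held fixed, the others are placed on a ring, and each rotation yields one perfect matching. The function name `disjoint_pair_partitions` and the choice of the fixed element are illustrative assumptions, not the paper's notation.

```python
def disjoint_pair_partitions(elements):
    """Split an even-sized collection into pairwise disjoint pair-partitions.

    Returns len(elements) - 1 partitions; each partition is a set of
    2-element frozensets covering all elements, and no pair is reused.
    """
    items = list(elements)
    size = len(items)
    if size % 2 != 0:
        raise ValueError("an even number of elements is required")
    fixed, ring = items[-1], items[:-1]
    ring_len = size - 1  # odd, so ring[i + j] and ring[i - j] never coincide
    partitions = []
    for i in range(ring_len):
        # The fixed element is paired with the ring element at position i.
        blocks = {frozenset((fixed, ring[i]))}
        # The remaining ring elements are paired symmetrically around i.
        for j in range(1, size // 2):
            blocks.add(frozenset((ring[(i + j) % ring_len],
                                  ring[(i - j) % ring_len])))
        partitions.append(blocks)
    return partitions


for partition in disjoint_pair_partitions([1, 2, 3, 4]):
    print(sorted(sorted(block) for block in partition))
```

For a set of four elements this produces three partitions that together use each of the six possible pairs exactly once.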

Lemma 5.

Consider a complete graph with for some . Then, for any maximum matching of , is a partition of set with blocks of size two, where .

Lemma 6.

A complete graph , with for some , can be decomposed into a collection of edge-disjoint maximum matchings .

Proof.

Assume that all the vertices in are located on a circle in increasing order of their indices. Then, for any vertex , a sub-graph with and , where , is a maximum matching. By following the aforementioned method, , it is possible to generate a maximum matching . Then one can easily observe that for any

| (44) |

and,

| (45) |

∎
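The circle construction used in the proof of Lemma 6 can be sketched as follows. This is a minimal Python sketch, assuming that the vertices are labeled 0, 1, ... (rather than starting from 1) and that the matching associated with a vertex leaves that vertex unmatched and pairs the vertices at equal distance on either side of it; the function name `maximum_matchings_odd` is hypothetical.

```python
def maximum_matchings_odd(num_vertices):
    """Decompose a complete graph of odd order into maximum matchings.

    Vertices are 0, ..., num_vertices - 1, placed on a circle. Matching i
    leaves vertex i unmatched and pairs (i + j, i - j) modulo num_vertices.
    """
    if num_vertices % 2 != 1:
        raise ValueError("an odd number of vertices is required")
    half = (num_vertices - 1) // 2
    matchings = []
    for i in range(num_vertices):
        matching = {frozenset(((i + j) % num_vertices,
                               (i - j) % num_vertices))
                    for j in range(1, half + 1)}
        matchings.append(matching)
    return matchings


for skipped, matching in enumerate(maximum_matchings_odd(5)):
    print("unmatched:", skipped, sorted(sorted(edge) for edge in matching))
```

Each vertex is left out of exactly one matching, and every edge of the complete graph appears in exactly one matching.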

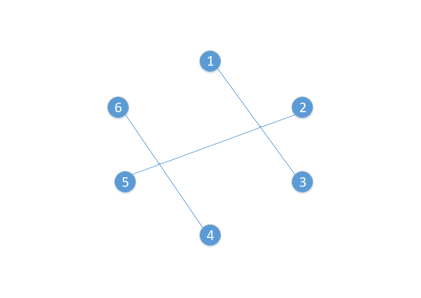

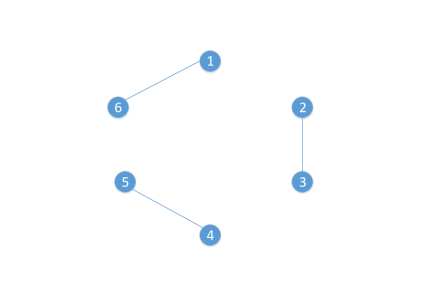

Decomposition of a complete graph , with , into edge-disjoint maximum matchings is illustrated in Figure 4. From Lemma 5 and Lemma 6, we can conclude that for a given set , for each , it is possible to have a partition of with blocks of size two, denoted by such that

Appendix B Construction procedure for and

In this section, we will explain the procedure for constructing the set of multicast messages, , that are sent in the last step of the delivery phase. The main concern of this procedure is to satisfy the constraint , and , while constructing the set . (Since the same partitioning is applied to all ’s, , for all .) In the construction procedure, we consider two cases, where is even and odd, separately.

B-A Even number of high-level users

Before presenting the algorithm for an even number of high-level users, we will briefly explain how the concept of partitions can be utilized for the construction of set . Consider and a partition of with blocks of size two, e.g., . (In the scope of this work, we use the term partition to refer only to a particular type of partition with blocks of size two. Hence, from this point on, we use the term partition without further specifying the block sizes.) Then, for a particular , each can be converted into the following multicast message: . (Throughout the paper, we often refer to the subset as a node pairing.) To this end, the specified partition corresponds to the following set of multicast messages:

| (46) |

Recall that is the set of multicast messages that are not decomposed and are sent in the last step of the delivery phase. Hence, if the multicast messages in (46) are added to , then sub-files , , , , and are added to sets , , , , , and , respectively. One can observe that, for a particular , if a partition is used for adding multicast messages to set , then exactly one sub-file is added to the set for each . Hence, for a particular , we consider any disjoint partitions to determine the multicast messages to be included in set , and the constraint would be satisfied for all . Accordingly, we define , and use it to add multicast messages to set in Algorithm 5. We note that can be considered as a set of user pairings, where each user appears in exactly pairings. Further details on the construction of the disjoint partitions are given in Appendix A.

B-B Odd number of high-level users

Above, we first obtained a set of node pairings for each , and then constructed the set of multicast messages using these node pairings in Algorithm 5. We recall that is the union of partitions generated from . However, when is odd, it is not possible to obtain a partition of with blocks of size two. We remark that, if is odd, must be even, which means that for low-level users, will be an odd number , and for the remaining low-level users, will be an even number . Let and be the subset of low-level users with and , respectively. Furthermore, let and denote the cardinality of the sets and , respectively.

For the case of , we introduce a new method, Algorithm 6, to construct for each . In Algorithm 6, we use the notation to denote the th element of set for an arbitrary ordering of its elements. (Although we use index for the set , Algorithm 6 does not require a particular ordering for this set.) We also remark that the partitions used in Algorithm 6 are disjoint, i.e.,

| (47) |

where , and . The process of obtaining these disjoint partitions is explained in Appendix A. Now, one can easily observe that each high-level user appears in exactly one pairing in , except , and in each high-level user appears in exactly two pairings. To clarify, assume that we want to construct for . We can set and use partition , so that and . Then, Algorithm 6 uses the partitions given in Table VI to construct as follows:

| (48) | ||||

| Partition | Corresponding set |

|---|---|

Eventually, in each high-level user appears in exactly pairings. Hence, as in Algorithm 5, can be used to construct sets as well as the set of multicast messages .

We remark that, for each the same set of node pairings is used, thus the process is identical for each . However, for low-level users the process will not be identical since it is not possible to construct a single for all . Nevertheless, we follow a similar procedure to construct the multicast messages for the low-level users in .

The overall procedure for the case of an odd number of high-level users is illustrated in Algorithm 7. In the algorithm, the set of node pairings for each is constructed separately, and is used to decide the multicast message to be placed in , and the sub-files to be placed in sets , as in Algorithm 1. From the construction, one can easily observe that in , each high-level user appears in exactly pairings except a particular that appears in pairings. Thus, when is used to construct multicast messages, constraint is satisfied for all , except . Therefore, at lines 11 and 16 in Algorithm 7, we add a sub-file to set , where the high-level user appears in pairings in , and at line 17 we XOR these sub-files. Hence, we ensure that, at the end of the algorithm, the equality constraint is satisfied for all and for all .

With the proposed set construction algorithms, we ensure that, in the third and fourth steps of the delivery phase, all the sub-files are delivered with a multicasting gain of two, which explains the achievable delivery rate. (When is odd, exactly one sub-file is unicasted, while the remaining sub-files achieve a multicasting gain of two, as in Example 1.)

Now, we go back to Example 1, where we have an odd number of high-level users. In particular, we have , and , thus and . Further, the partitions are given as , , , , . Then, Algorithm 7 takes the set and uses the partition . Accordingly, Algorithm 7 constructs and . Thereafter, sets and are constructed as and . The corresponding set of multicast messages, , is already illustrated in blue in Table II.

Appendix C Partition of

In this part, we will show how can be approximately partitioned. Recall that the main concern is to assign sub-file to either or in order to achieve approximately uniform cardinality sets . We consider two cases, where is even and odd, respectively. We start with the case where is an odd number. Let the set of partitions be given for . Then Algorithm 8 is used to construct .

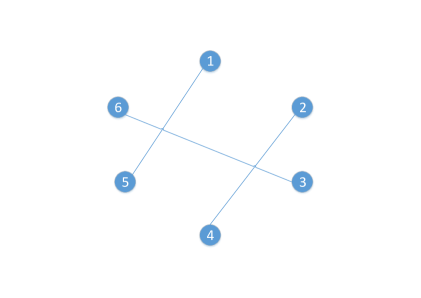

Note that, in each step of Algorithm 8, the size of set is equal to and low-level user index appears in exactly two sub-files in the . By "sequential distribution" we mean that we start with some low-level user and take the two sub-files . We first assign to set ; then the other sub-file is assigned to set . Thereafter, we take the file and assign it to set , and we continue the process in the same way. Formally speaking, each in the algorithm resembles a Hamiltonian cycle, and in Algorithm 8 we are assigning each edge in the cycle to a node. To clarify, consider the Hamiltonian cycle corresponding to illustrated in Fig. 5. Assume that we start from node 1 and assign to node 1, and edges , and are assigned to nodes , respectively. Note that each edge corresponds to a sub-file and each node corresponds to a set .
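The edge-to-node assignment described above can be sketched as follows. This is a minimal Python sketch, not a reproduction of Algorithm 8: it assumes the classical Walecki zigzag construction to obtain edge-disjoint Hamiltonian cycles of a complete graph of odd order (a swapped-in technique), and then walks along each cycle, assigning every edge to the node at which it starts. The function names and the 0-based node labels are hypothetical.

```python
def hamiltonian_cycles_odd(num_nodes):
    """Edge-disjoint Hamiltonian cycles covering a complete graph of odd order.

    Nodes are 0, ..., num_nodes - 1; the last node acts as a hub that closes
    each zigzag path i, i+1, i-1, i+2, i-2, ... on the remaining nodes.
    """
    if num_nodes % 2 != 1 or num_nodes < 3:
        raise ValueError("an odd number of nodes (at least 3) is required")
    half = (num_nodes - 1) // 2
    ring_len = 2 * half
    hub = ring_len
    cycles = []
    for i in range(half):
        path = [i]
        for j in range(1, half + 1):
            path.append((i + j) % ring_len)
            if j < half:
                path.append((i - j) % ring_len)
        cycles.append([hub] + path)
    return cycles


def assign_edges_sequentially(cycles):
    """Walk along each cycle and give every edge to the node it starts from."""
    assigned = {}
    for cycle in cycles:
        for k, node in enumerate(cycle):
            successor = cycle[(k + 1) % len(cycle)]
            assigned.setdefault(node, []).append(frozenset((node, successor)))
    return assigned


assignment = assign_edges_sequentially(hamiltonian_cycles_odd(5))
for node in sorted(assignment):
    print(node, [sorted(edge) for edge in assignment[node]])
```

Because every node has exactly one outgoing edge per cycle, each node receives the same number of edges, which mirrors the uniform cardinality targeted in the odd case.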

We further remark that a complete graph with odd order can be decomposed into Hamiltonian cycles (as done in Algorithm 8); hence, can be partitioned uniformly where

For the case of even , we can use a similar approach, but this time we construct the Hamiltonian cycles by combining two perfect matchings. Different from the previous case, a complete graph G with even order cannot be decomposed into Hamiltonian cycles. Hence, in the end, we will have a remaining perfect matching. The edges in this last perfect matching are assigned to nodes randomly. Therefore, in the end, of the partitions will have cardinality , while the remaining will have cardinality .

References

- [1] M. A. Maddah-Ali and U. Niesen, “Fundamental limits of caching,” IEEE Trans. Inf. Theory, vol. 60, no. 5, May 2014.

- [2] ——, “Decentralized coded caching attains order-optimal memory-rate tradeoff,” IEEE/ACM Trans. Netw., vol. 23, no. 4, Aug 2015.

- [3] Q. Yu, M. A. Maddah-Ali, and A. S. Avestimehr, “The exact rate-memory tradeoff for caching with uncoded prefetching,” IEEE Transactions on Information Theory, vol. 64, no. 2, pp. 1281–1296, Feb 2018.

- [4] M. M. Amiri, Q. Yang, and D. Gündüz, “Decentralized caching and coded delivery with distinct cache capacities,” IEEE Transactions on Communications, vol. 65, no. 11, pp. 4657–4669, Nov 2017.

- [5] A. M. Ibrahim, A. A. Zewail, and A. Yener, “Coded caching for heterogeneous systems: An optimization perspective,” IEEE Transactions on Communications, vol. 67, no. 8, pp. 5321–5335, 2019.

- [6] ——, “Device-to-device coded-caching with distinct cache sizes,” IEEE Transactions on Communications, pp. 1–1, 2020.

- [7] A. Tang, S. Roy, and X. Wang, “Coded caching for wireless backhaul networks with unequal link rates,” IEEE Transactions on Communications, vol. PP, no. 99, pp. 1–1, 2017.

- [8] M. M. Amiri and D. Gündüz, “Cache-aided content delivery over erasure broadcast channels,” IEEE Transactions on Communications, vol. PP, no. 99, pp. 1–1, 2017.

- [9] U. Niesen and M. A. Maddah-Ali, “Coded caching with nonuniform demands,” IEEE Trans. Inf. Theory, vol. 63, no. 2, Feb 2017.

- [10] M. Ji, A. M. Tulino, J. Llorca, and G. Caire, “Order-optimal rate of caching and coded multicasting with random demands,” IEEE Trans. Inf. Theory, vol. 63, no. 6, June 2017.

- [11] J. Zhang, X. Lin, and X. Wang, “Coded caching under arbitrary popularity distributions,” IEEE Transactions on Information Theory, vol. 64, no. 1, pp. 349–366, Jan 2018.

- [12] J. Hachem, N. Karamchandani, and S. N. Diggavi, “Coded caching for multi-level popularity and access,” IEEE Trans. Inf. Theory, vol. 63, no. 5, May 2017.

- [13] A. M. Daniel and W. Yu, “Optimization of heterogeneous coded caching,” IEEE Transactions on Information Theory, vol. 66, no. 3, pp. 1893–1919, 2020.

- [14] S. Jin, Y. Cui, H. Liu, and G. Caire, “Structural properties of uncoded placement optimization for coded delivery,” CoRR, vol. abs/1707.07146, 2017.

- [15] A. Ramakrishnan, C. Westphal, and A. Markopoulou, “An efficient delivery scheme for coded caching,” in Proceedings of the 2015 27th International Teletraffic Congress, ser. ITC ’15. Washington, DC, USA: IEEE Computer Society, 2015, pp. 46–54.

- [16] X. Cheng, C. Dale, and J. Liu, “Statistics and social network of youtube videos,” in 2008 16th Int. Workshop on Quality of Service, June 2008.

- [17] M. Cha, H. Kwak, P. Rodriguez, Y. Y. Ahn, and S. Moon, “Analyzing the video popularity characteristics of large-scale user generated content systems,” IEEE/ACM Trans. Netw., vol. 17, no. 5, Oct 2009.

- [18] M. Zink, K. Suh, Y. Gu, and J. Kurose, “Characteristics of youtube network traffic at a campus network - measurements, models, and implications,” Comput. Netw., vol. 53, no. 4, pp. 501–514, Mar. 2009.

- [19] J. Lin, Z. Li, G. Xie, Y. Sun, K. Salamatian, and W. Wang, “Mobile video popularity distributions and the potential of peer-assisted video delivery,” IEEE Communications Magazine, vol. 51, no. 11, pp. 120–126, November 2013.

- [20] K. Wan, D. Tuninetti, and P. Piantanida, “Novel delivery schemes for decentralized coded caching in the finite file size regime,” in 2017 IEEE International Conference on Communications Workshops (ICC Workshops), May 2017, pp. 1183–1188.

- [21] N. Zhang and M. Tao, “Fitness-aware coded multicasting for decentralized caching with finite file packetization,” IEEE Wireless Communications Letters, pp. 1–1, 2018.

- [22] M. S. Heydar Abad, E. Ozfatura, O. Ercetin, and D. Gündüz, “Dynamic content updates in heterogeneous wireless networks,” in 2019 15th Annual Conference on Wireless On-demand Network Systems and Services (WONS), 2019, pp. 107–110.