Understanding User Topic Preferences across Multiple Social Networks

Abstract

In recent years, social networks have shown diversity in function and applications. People begin to use multiple online social networks simultaneously for different demands. The ability to uncover a user’s latent topic and social network preference is critical for community detection, recommendation, and personalized service across social networks. Unfortunately, most current works focus on the single network, necessitating new technology and models to address this issue. This paper proposes a user preference discovery model on multiple social networks. Firstly, the global and local topic concepts are defined, then a latent semantic topic discovery method is used to obtain global and local topic word distributions, along with user topic and social network preferences. After that, the topic distribution characteristics of different social networks are examined, as well as the reasons why users choose one network over another to create a post. Next, a Gibbs sampling algorithm is adopted to obtain the model parameters. In the experiment, we collect data from Twitter, Instagram, and Tumblr websites to build a dataset of multiple social networks. Finally, we compare our research to previous works, and both qualitative and quantitative evaluation results have demonstrated the effectiveness.

Index Terms:

topic discovery, topic modeling, social network, user model, data fusionI Introduction

People’s lifestyles have changed tremendously as a result of the rapid development of the Internet. The emergence of online social networks has ushered in a new information era revolution. Online social networks have reshaped everyone’s daily life, according to survey statistics revealing that 69% 111http://www.pewinternet.org/fact-sheet/social-media/ of adults use at least one social network.

In recent years, existing social networks have been enriching themselves with multi-modal information (text, image, video, etc.), highlighting their characteristics due to the diversity and variability of people’s needs for information content. To meet different needs, people might use a variety of online social networks. On Twitter, for example, users can join in discussions about current events; on Instagram, users can submit images of their daily lives and share them with others.

Users who have accounts on various online social networks are referred to as “overlapping users” in this study. Overlapping users associate information data from several isolated social networks, allowing for a more complete and detailed analysis of user modeling and behavior patterns based on multiple social networks. Integrating user data from different social networks is critical for a more comprehensive view of user behavior patterns.

Many works have been conducted on multiple social networks, such as overlapping users identification[1, 2], item recommendation[3], influence maximization[4, 5], community detection[6, 7] and so on. By designing data fusion strategies, scholars are able to depict users more deeply and uncover the differences in user characteristics.

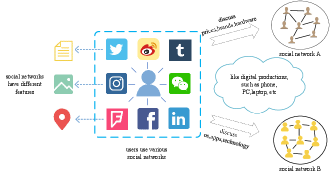

With the increasing multiple social networks, people are eager to understand why users need to use different social networks and what characteristics attract them. Therefore, this paper aims to investigate users’ topic preferences across multiple social networks as a starting point to understand users’ motivations. This paper considers users have both consistent and complementary characteristics on various social networks. Consistency refers to the fact that users show similar interests in different social networks. For example, if a user loves digital products, he or she will show his or her love for digital products on different social networks. Complementarity refers to a user presents different aspect characteristics of one topic on various social networks. As shown in Fig.1, if a user likes a digital product, he or she will focus on the brand of the computer or phone, hardware specifications, price, and user experience in network A; In contrast, he or she will focus on the operating system, related applications, and the technology involved in the digital product in network B. Consistency explores users’ similar interests across networks from the common view of the integration across multiple social networks, which is also the basis of the existing research across multiple social networks.

Existing studies[3, 8] focus solely on the issue of consistent user topic preferences across multiple social networks. This paper focuses on the complementarity of user topic preferences and analyzes the similarity between user topic preferences and social network topics in different dimensions to explore users’ intrinsic motivations for using specific social networks. Social network service providers can collaborate and share data in mutually beneficial ways to provide better social services to users.

The main contributions of this paper are as follows.

1. In this paper, we design a topic preference discovery model across multiple social networks. The model can fuse the data from each social network for unsupervised user topic discovery.

2. This model unifies the two discovery processes of global and local topics corresponding to multiple networks and each specific network, making it possible to explore the similarities and differences of topic word distribution between social networks.

3. Users’ topic preferences and social network preferences over topics are also output by this model. As a result, we may analyze each user’s characteristics as well as their intrinsic motive for using a specific social network.

In the remainder of this paper, Section 2 reviews the related works; Section 3 describes the research problem; Section 4 gives the multiple social networks topic model; Section 5 evaluates the experiments; Finally, conclusions and future works are provided in Section 5.

II Related Works

II-A User Preference Modeling for Single Social Network

II-A1 Based on users’ personal data

Current work on mining users’ topic preferences usually uses the data generated by individual data, which include explicitly labeled profile information, such as age, date of birth, gender, interests, and other data. The information also includes users’ comments and rating data of various content in social networks[9]. However, due to the service limitations of online social networks or personal privacy issues, users’ detailed data cannot be easily obtained. Most studies often need to integrate users’ implicit feedback data, such as data on users’ publicly posted content, forwarded content information, etc. Hong et al.[10] view users’ posting data in the Twitter network as documents and use the LDA topic model to model users’ topic model. Considering that users’ posts in social networks are relatively short, Zhao et al.[2] assume each user’s post only has one topic and then restructured a probability generating model based on the LDA model to mine users’ topic preferences. Cao et al. [11] combined users’ social, interest, and behavioral footprints in the Twitter network and used the matrix decomposition technique to obtain users’ topic preference features. Chen et al.[12] divided users’ attributes into individual interests and shared interests based on users’ item ratings. Related works have further used multi-modal data to enrich the user’s feature content. You et al.[13] analyzed the images in social networks. They train the image data to extract the hidden features. Then, based on the hidden features, a label propagation algorithm is constructed to achieve images classification. At last, they obtain a user interest model based on image information. Farnadi et al.[14] used deep learning technique to fuse multi-modal data from social networks. The fused data were classified based on the Big Five personality traits of users from the MyPersonality project, and then mining and analysis of user characteristics was carried out.

II-A2 Based on users’ friend data

Mislove et al.[15] pointed out that users with similar attributes are more likely to establish friendships and can use the characteristics of users’ friends to infer users’ attributes. Chen et al.[16] used relationships and structure characteristics of social networks to construct a user-label attribute prediction model. Xu et al.[17] modeled users based on three factors, including breaking news, posts from social friends, and user’s intrinsic interest.

II-A3 Based on users’ group data

Due to the sparsity of users’ data, related works have also attempted to integrate data from similar users. Hu et al. [18] developed a topic model to analyze the change of users’ topic preference from a community view; Feng et al. [19] used user groups to alleviate the sparsity problem of short texts on social media and to detect emotions and relevant topics based on groups; Wang et al.[20] integrated users’ topic and network topologies to catch the topic correlations inside a community.

II-B User Preference Modeling for Multiple Social Networks

Considering that users will register accounts and generate data in different social networks, some studies attempt to fuse data of various social networks based on “overlapping users”. Abel et al.[21] integrated user information from multiple social networks, such as Flickr, Twitter, and Delicious, and built an attribute tag-based user topic model, which can greatly improve the quality of recommendation algorithms in social networks. Subsequently, they also design to build on a tag-based user model by further integrating the profile description from users’ registry information[22]. Cao et al.[3] used a topic model to simulate users’ process of content data generation process in Douan and Weibo, and they correlated the content data of users in different social networks to achieve the mining of user interest preference based on the basic assumption that “the same user has the same interest preference in different networks”. Lee et al.[8] used an extended TwitterLDA model to mine users’ topic preferences based on their post contents in different social networks and analyzed users’ selection preferences for various social networks.

In summary, the study process of user preference modeling, which is gradually transformed from single data in terms of dimensions, types, and features to more complex multi-source heterogeneous data as research objects, the multiple social networks further provide a new research horizon for users’ topic preference mining. This paper focuses on analyzing characteristics from a new prospect of multiple social networks compared with the existing works.

III Preliminary

To describe our research work clearly, this paper’s main concepts and definitions are given as follows.

Definition 1. Multiple Social Networks. Multiple social networks in this paper are defined as . It has the user set . Every social network is . represents social network set of user . represents content set of user in social networks .

Definition 2. User Topic Preference. User topic preference represents the topic interest of the user. It is expressed by multinomial distribution over topics, represented as . The topic has a probability distribution of belonging to user .

Definition 3. Global Topics and Local Topics. Global topics represent topics that are discovered based on fused multiple social networks. Local Topics represent topics that are discovered based on a specific single social network. In this paper, the global topic distribution of users is described as a multinomial distribution which is a probability distribution of vocabulary over topic across multiple social networks. Local topic distribution of users is described as a multinomial distribution , a probability distribution of vocabulary over the topic in social network . Each word has a probability of belonging to topic . Every post in content set can generate word set .

Definition 4. User Social Network Preference. This paper describes user social network preference as a multinomial distribution which is a probability distribution of choosing social network by user over topic . The social network has the probability of belonging to .

This paper aims to obtain users’ topic preference features based on their content data and preference data of social networks for multiple social networks, analyze users’ topic contents, and explore the similarity and difference of topic word distribution in different social networks. The formalization of the problem is as follows. Given multiple social network set . The model will output user topic preference features , user global topic word distribution and user local topic word distribution , and social network selection preference . The relevant notations in this paper are illustrated in Tab.I.

| Symbol | Description | ||

|---|---|---|---|

| , , |

|

||

| , |

|

||

| , , | topic, post, word | ||

| switching variable | |||

| topic distribution of user | |||

| , |

|

||

| word distribution over the background topic | |||

| social network distribution of user over topic | |||

|

|||

|

|||

| dirichlet prior hyperparameters |

IV User Topic Preference Model

This section will describe the model structure and give the algorithm for solving the model parameters.

IV-A Model Structure

In social networks, the aggregation of high-frequency co-occurring words often represents a topic. This paper needs to aggregate high-frequency co-occurring words from the global perspective across multiple social networks and find out the consistent global topic content. Furthermore, the words that have a co-occurrence relationship with a global topic in a specific social network are aggregated as local topics, showing different specific topic words. The aggregation of high-frequency co-occurring words prompts us to use the LDA-based topic model to mine user topic preferences in multiple social network situations. The LDA model is an unsupervised cluster that uses co-occurring words to generate a topic.

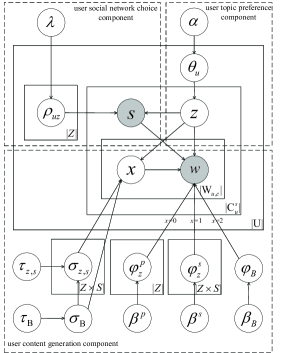

We design a Multiple Social Networks Topic Model (MSNT). The basic structure of the model is shown in Fig.2, which mainly contains the user topic preference component, user content generation component, and user social network choice component.

IV-A1 User topic preference component

Users have kinds of topic content in social networks, and each user has unique topic preferences than others. To model this situation, we view users’ topic preference distribution as a multinomial distribution over topics . Each topic can be generated from the distribution.

IV-A2 User content generation component

Users create posts based on their topic preferences. In our MSNT model, the word generation process over the topic is divided into two types of processes.

Global topics words generation: In multiple social network situations, the model first uses a multinomial distribution to generate word over topic based on the user’s all content data. This can find co-occurring words from the global perspective on all social networks. We can discover users’ topics based on complete multiple social network data to reflect the intrinsic preferences of users.

Local topics words generation: The specific social network may also influence a user to use different words to make posts. The model uses a multinomial distribution to generate word over topic only on user content data in a specific social network. We can find topics to reflect the specific content characteristic based on the single social network.

In order to unify users’ global topics and local topics, this paper describes a switch variable to control the selection of words for topic content. The probability distribution that takes the 0 or 1 is denoted as a binomial distribution . The binomial distribution can represent the latent proportion distribution of global topic words and local topic words. When , the users select global topic word under topic , and the distribution of this process is denoted as multinomial distribution . When , the users generate local topic word with topic in social network , and distribution of this process is denoted as multinomial distribution . The semantic content between the global topic and local topic is associated with each other by switch variables. In addition, we assume that a background topic captures the background words used across the multiple social networks to improve the ability to mining other topics. Therefore, the switch variable first takes the value tag from a binomial distribution . If get , word is generated from a background topic word multinomial distribution . If is not , the switch variable then takes value from the binomial distribution . The word is from the global topic or local topic depending on the variable value of or .

IV-A3 User social network choice component

The service differences in social networks drive users to choose different social networks. Users may choose different social networks to post content based on topics. Users’ social network choice is influenced by potential relevance between users’ topic preferences and social network topics. In the model, the probability distribution that user chooses social network with topic is described as a multinomial distribution .

IV-A4 whole generative process

We summarize the above generative process as follows.

-

1.

Sample the background topic vocabulary Multinomial distribution from a prior Dirichlet distribution: ; Sampe the Binomial distribution of whether user’s content word is background word from a prior beta distribution: ;

-

2.

For each topic ,

-

(a)

Sample the global topic vocabulary Multinomial distribution from a Dirichlet prior distribution. ;

-

(b)

For each social network ,

-

i.

Sample the local topic vocabulary Multinomial distribution in social networks from a prior Dirichlet distribution: ; Sample the Binomial distribution of whether user’s content word is global or local topic word from a prior beta distribution: ;

-

ii.

Sample the user social network preference Multinomial distribution from a prior Dirichlet distribution: ;

-

i.

-

(a)

-

3.

For each user ,

-

(a)

Sample the user topic preference Multinomial distribution from a prior Dirichlet distribution: .

-

(b)

For each user ’s post,

-

i.

Sample a topic assignment from Multinomial distribution: ,

-

ii.

Sample a social network indicator from Multinomial distribution: ;

-

iii.

For each word ,

-

A.

Sample a value from binomial distribution: ,

-

B.

If , sample a word from Multinomial distribution: ;

-

C.

If , sample a value from binomial distribution: . If , sample a word from Multinomial distribution: . If , sample a word from Multinomial distribution: .

-

A.

-

i.

-

(a)

IV-B Model Inference

For the brevity of description, this paper lets hyper-parameters be denoted as , and hidden variables as . The latent variables conditioned on the observed variables, namely are needed to estimate. This paper uses the collapsed Gibbs sampling method to obtain hidden variables in our model. In the Gibbs sampling algorithm, the new value of the parameter is determined by the previous parameter value of the model, and the model parameters are constantly updated iteratively to finally complete the solution of the model parameters.

Sampling topic of the post. The length of a post in social network is generally much smaller than the length of a normal document. In most cases, users’ posts only describe one topic. The sampling formula for the topic of the post is Eq.(1).

| (1) | ||||

where denotes the words which are not background topic words. is the number of posts of user which are associated with topic , excluding the current post instance . is the number of social network of user ’s posts according to topic , excluding the current post instance . is the number of words according to topic , excluding the current post instance . is the number of words according to topic in social network , excluding the current post instance . is the number of global topic words according to topic in social network . is the number of local topic words according to topic in social network .

Sampling switch variable . As described above, the value of is the process of users’ choice of words in the multiple social networks. The sampling formula of variable is Eq.(2), (3) or (4).

| (2) | ||||

| (3) | ||||

| (4) | ||||

where is the number of words which are not associated with background topic, excluding the current word instance . is the number of words which are associated with background topic, excluding the current word instance . is the number of global topic words according to topic in social network , excluding the current word instance . is the number of local topic words according to topic in social network , excluding the current word instance . is the number of words according to topic , excluding the current word instance . is the number of words according to topic in social network , excluding the current word instance . is the number of background topic words, excluding the current word instance .

After Gibbs algorithm sampling, the model parameters are calculated as in Eq.(5) to Eq.(8).

| (5) |

| (6) |

| (7) |

| (8) |

The algorithm of parameters inference for MSNT is described as Algorithm 1.

Line 2 in the algorithm, max_iter_number indicates the maximum number of iterations. The termination condition can also be replaced by other convergence conditions. The time complexity of the algorithm is .

V Experiment

This section performs some experiments to verify the model effectiveness of mining user topic preference. Specifically, the dataset and experiment setup are first given. Then, the evaluation criteria are described in detail. We show the evaluation of the proposed model MSNT compared with other works. Finally, the analysis and discussion of the results will be presented.

V-A Dataset

This paper uses a dataset of multiple social networks, including Twitter, Instagram, and Tumblr. Twitter is a text-based short-text social network. Instagram is a photo-sharing social network. Tumblr is a multimedia blog-based social network that supports a wide range of rich media such as pictures, videos, etc.

The user account information in the dataset is crawled from the business card website About.me, from which to link multiple online identities. The total number of users is 5747. The user accounts include 2076 Twitter users, 2014 Instagram users, and 1655 Tumblr users. Based on these users, user content data are crawled from the connected social networks respectively. The users’ content data spans from January 2017 to January 2018, where the non-text type data, such as images, are converted into word tags by Google’s deep learning-based image annotation model222https://www.googlevision.com.

The statistics of the dataset are shown in Tab.II and Tab.III. Each element in Tab.II is the number of identical users corresponding to two social networks.In addition, there are 935 identical users who link all three networks. We conduct topic mining based on their content data. The number of users’ content data is shown in Tab.III.

| Tumblr | |||

|---|---|---|---|

| 2076 | 1435 | 1076 | |

| - | 2014 | 1014 | |

| Tumblr | - | - | 1665 |

| Tumblr | |||

| content | 1,257,055 | 302,192 | 413,359 |

V-B Setup

V-C Topic Content Quality

In this paper, we first compare and analyze the model’s topic discovery quality.

V-C1 Evaluation metrics

Viewing users as a document set, the model in this paper can be regarded as a document topic discovery model. Therefore, this paper uses the Perplexity[25], Likelihood[25] and word Pointwise Mutual Information Score (PMI-score)[26] metrics to evaluate the model performance.

Specifically, the perplexity is a widely used evaluation metric in the field of natural language processing, and the higher quality of the model is corresponding to the lower perplexity value. Its specific form is shown in Eq.(9).

| (9) |

where denotes the probability that document is output by model and denotes the length of document . Here, since this paper considers each post of user as a document and marks it as one topic. Then .

The likelihood calculates the probability of generating the sentence in the test corpus. The likelihood value is higher, and it can show better quality of the model. Its specific form is shown in Eq.(10).

| (10) |

Generally, the multiplication value of probability is transformed into summation by log operation to prevent the multiplication value from being too small.

The PMI-score measures the topic quality based on the co-occurrence word pairs in one topic. The words within the topic are correlated, and the higher mutual information score indicates a higher model quality. Its specific form is shown in Eq.(11).

| (11) |

where, denotes the number of topic. denotes the number of words in topic. The word mutual information calculates the score of the words with the highest probability of occurrence in the topic. . is probability of co-occurring word pair and . is probability of occurrence of word .

V-C2 Baselines

To demonstrate the effectiveness of the proposed MSNT model, we choose different representative baseline methods as follows.

LDA[25], which is a classical topic discovery model. During topic discovery, the model integrates multiple social network data directly without distinguishing the source of the data, and one document consists of all contents generated by the user.

TwitterLDA[23], which takes into account the short-text features in social networks, links one post to only one topic in the topic discovery process, and the model integrates data of multiple social networks without distinguishing the sources of data.

MTM[24], the model can mine topic-related opinion words from different corpus. During the topic discovery process, the model can distinguish the source corpus of the data. In the experiments, various social networks are corresponding to the different corpus. The topic discovery part of the model is also equivalent to the ccLDA [35] topic discovery model.

MutilLDA[8], which has similar features to TwitterLDA, links one post to only one topic in the topic discovery process. The model considers users’ choice preferences of social networks across multiple social networks.

MSNT, which is the topic discovery model across multiple social networks in this paper.

V-C3 Results Analysis

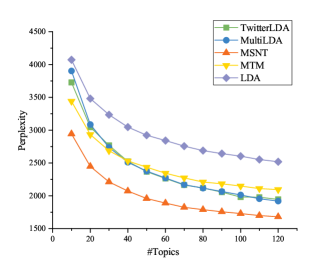

First, we show perplexity experiment results. The lower perplexity value is better. It can be observed that perplexity values of all models tends to decrease as the number of topic increases shown in Fig.3.

Starting from the number of topics, about 80, the decrease of the perplexity value gradually tends to be smooth. In particular, the perplexity values of TwitterLDA, MultiLDA, and the MSNT are significantly lower than MTM and LDA. The reason is that TwitterLDA, MultiLDA, and MSNT are specific to short texts of social networks, and the background topics in these models reduce the influence of noisy words in social networks. The perplexity experiment results of MSNT in this paper are better than TwitterLDA and MultiLDA, because MSNT is designed to apply to the scenario of multiple social networks compared with TwitterLDA. For MultiLDA, MSNT involves users’ preference of choosing social networks and mining users’ topic preference from a global view on fusing multiple social networks data. It can also discover differences in local topic content across multiple social networks. Overall, MSNT obtains better performance than other works due to modeling global topics and local topics being more reasonable in multiple social network scenarios, i.e., consistency and complementarity co-exist.

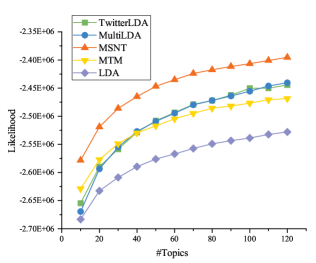

The likelihood value can describe the generation probability of a sentence in the test set based on the model. Its rising and decreasing trends are similar to perplexity. From experiment results shown in Fig.4, we can also see that the increasing trend of likelihood gradually becomes smooth as the number of topics increases. Compared with other works, MSNT significantly gets better experiment results.

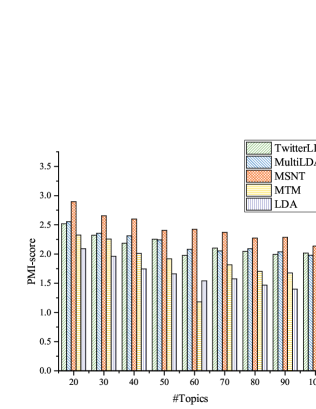

For word PMI-score, which is used to measure the lexical relevance in one topic, the higher mutual information score indicates a higher model quality. Experiment results are shown in Fig.5. It can be seen that the number of topic is from 20 to 100, and the top 50 words are taken in one topic to calculate the word PMI-score under different topic numbers.

The figure shows that TwitterLDA, MultiLDA, and MSTN perform better than MTM and LDA because these models are designed based on social networks with short texts. The MSTN in this paper achieves better results than TwitterLDA and MultiLDA because MSTN considers local topics that are more likely to have some feature topic words in a specific social network. These words are easier to form co-occurring word pairs in a specific social network, thus obtaining better results about word PMI-score.

V-D Topic Content Analysis

V-D1 Topic Word Difference across Multiple Social Networks

Perplexity is utilized to determine the topic number in related work[25]. When the number of topics reaches 80, the perplexity progressively becomes smooth, as mentioned in the previous section. Therefore, we use the experiment results when the number of topics is 80 to show the general topic content of each network. The number of topics is also the same in work[8].

The general topic words are shown in in Tab.IV. We can find that the general topic contents that users like to discuss on Twitter are daily stuff, media events, etc. Twitter users use more oral words. There are larger verbs used by users compared with other social media platforms. Due to users’ daily images on Instagram, classified as a photo-based social network, the objects in photos are typically people or scenes, and the subject is inclined to landscape, fashion, food, etc. Tumblr resembles a typical blog in appearance. Tumblr features more text material than Twitter, as well as more nouns and fewer vocal verbs. On the Tumblr network, users create content by combining words with other multimedia elements, such as photographs, to describe topics. The topic content shown in Tab.IV is about vehicles and business marketing, respectively.

| Tumblr | |||||

| Topic 1 | Topic 2 | Topic 1 | Topic 2 | Topic 1 | Topic 2 |

| get | trump | food | sky | light | store |

| make | say | dish | tree | lead | kindle |

| people | president | cuisine | landscape | truck | bestseller |

| time | people | ingredient | nature | rear | new |

| know | white | food | sky | light | store |

| go | get | recipe | mountain | trailer | amazon |

| use | vote | meal | wilderness | lamp | buy |

| say | call | breakfast | environment | car | list |

| take | woman | lunch | plant | pair | author |

| work | make | meat | cloud | indicator | visit |

Then we compare global and local topic content in each social network and understand users’ latent motivations for using social networks. We discover that, for one type of topic content, global topic content has an explicit relationship with each social network’s local topic content. Different social network service providers can share data and give more comprehensive services to users by utilizing global topics and local topics. As shown in Tab.V, the topicA content is food, and the local topic has a large number of words that are explicitly embodied in the global topic. Global and local topics are more relevant. When a user prefers this type of topic, their preference tends to be consistent across networks.

| TopicA | |||

|---|---|---|---|

| Global | Local(Twitter) | Local(Instagram) | Local(Tumblr) |

| food | food | food | food |

| cuisine | coffee | cuisine | chocolate |

| dish | new | dish | good |

| dessert | restaurant | dessert | make |

| ingredient | good | ingredient | day |

| good | get | cake | get |

| cake | eat | cream | recipe |

| cream | day | good | new |

| chocolate | best | meal | restaurant |

| coffee | make | chocolate | chocolate |

The other type of topic content, shown in Tab.VI, is that local topic content is the implicit correlation to the global topic. The notable words in the local topic content are not the same as keywords in the global topic content. TopicB is a topic about residential design, local topic content in Instagram reflects “plant” decorations, and local topic content in Tumblr focuses on the room design itself. The local topic reflects the influence of social networks on users’ topic content. For latent semantic content, some things described in global topic content can be examined and understood through local topic content. It is feasible to deliver customized demand services to users in each social network based on this type of topic.

| TopicB | |||

|---|---|---|---|

| Global | Local(Twitter) | Local(Instagram) | Local(Tumblr) |

| plant | wakafire | plant | room |

| flower | image | flower | design |

| room | garden | tree | furniture |

| tree | flower | petal | building |

| design | new | botany | house |

| furniture | post | leaf | property |

| house | poem | spring | architecture |

| botany | day | family | home |

| petal | location | pink | table |

| building | plant | garden | floor |

V-D2 Word Distribution over Topic across Multiple Social Networks

We can quantitatively explore the similarities and differences between global topics and local topics across multiple social networks.

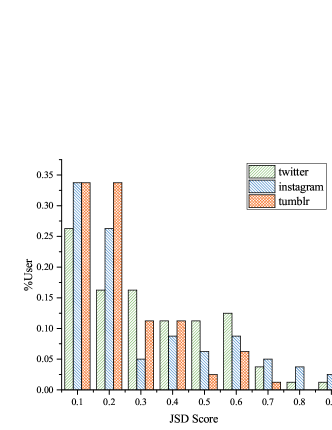

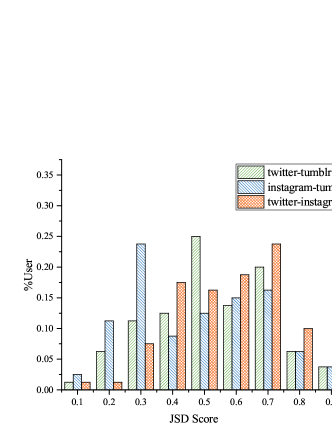

Based on the probability distribution of word over topic , i.e., or in this paper, we can calculate the similarity between two word distributions over topic across social networks. This paper uses Jensen-Shannon Divergence (JSD) to measure the similarity, which takes values in the range of , and two probability distributions are more similar to each other with respect to the lower JSD score.

We first calculate JSD scores between each identical local topic across social networks. Then we can obtain proportion distribution based on JSD scores. The JSD score proportion distribution of identical local topics across social networks is shown in Fig.6. Overall, the JSD values are concentrated in the middle of distribution in most cases, reflecting the consistency and complementarity are co-exist across multiple social networks as described in this paper.

Similarly, we can also obtain the JSD similarity between the local topic and the global topic in each social network, i.e., the JSD score between and . The JSD score proportion distribution between local topic and global topic across social networks is shown in Fig.7. It can be seen that most of the distributed JSD values are concentrated in the interval less than 0.3, which indicates the consistency of topic content between global topic and local topics.

VI Conclusion

In this paper, we propose a user topic preference model for multiple social networks. The model integrates a variety of data in different social networks to mine the topic preferences of users in multiple social networks. Experiments show that the model described in this research outperforms prior works and is capable of effectively mining the differences in users’ topic content across social networks. However, the topic contents reported in the experiment section still have noise that is difficult to decipher, which could be due to the sparse and colloquial usage of user content in social networks. Better data preprocessing could help solve the situation. In the future, we’ll look into more fine-grained methods for inferring users’ personalized topic preferences, more diverse multimedia data to model user preferences, and an evolution model of user topic preferences over time.

Acknowledgment

This work is supported by Natural Science Foundation of China under Grants No. 61772133, No.61972087.National Social Science Foundation of China under Grants No. 19@ZH014. Jiangsu Provincial Key Project under Grants No.BE2018706.Natural Science Foundation of Jiangsu province under Grants No.SBK2019022870. Jiangsu Provincial Key Laboratory of Computer Networking Technology. Jiangsu Provincial Key Laboratory of Network and Information Security under Grants No. BM2003201, and Key Laboratory of Computer Network and Information Integration of Ministry of Education of China under Grants No. 93K-9.

References

- [1] X. Zhou, X. Liang, H. Zhang, and Y. Ma, “Cross-platform identification of anonymous identical users in multiple social media networks,” IEEE transactions on knowledge and data engineering, vol. 28, no. 2, pp. 411–424, 2015.

- [2] J. Zhang, P. S. Yu, and Z.-H. Zhou, “Meta-path based multi-network collective link prediction,” in Proceedings of the 20th ACM SIGKDD international conference on Knowledge discovery and data mining, 2014, pp. 1286–1295.

- [3] X. Cao and Y. Yu, “Joint user modeling across aligned heterogeneous sites,” in Proceedings of the 10th ACM Conference on Recommender Systems, 2016, pp. 83–90.

- [4] G.-L. Li, Y.-P. Chu, J.-H. Feng, and Y.-Q. XU, “Influence maximization on multiple social networks [j],” Chinese Journal of Computers, vol. 39, no. 4, pp. 643–656, 2016.

- [5] H. Zhang, D. T. Nguyen, H. Zhang, and M. T. Thai, “Least cost influence maximization across multiple social networks,” IEEE/ACM Transactions on Networking, vol. 24, no. 2, pp. 929–939, 2015.

- [6] S. Y. Philip and J. Zhang, “Mcd: mutual clustering across multiple social networks,” in 2015 IEEE International Congress on Big Data. IEEE, 2015, pp. 762–771.

- [7] Z. Zhu, T. Zhou, C. Jia, W. Liu, B. Liu, and J. Cao, “Community detection across multiple social networks based on overlapping users,” Transactions on Emerging Telecommunications Technologies, p. e3928, 2019.

- [8] R. K.-W. Lee, T.-A. Hoang, and E.-P. Lim, “On analyzing user topic-specific platform preferences across multiple social media sites,” in Proceedings of the 26th International Conference on World Wide Web, 2017, pp. 1351–1359.

- [9] D. M. Boyd and N. B. Ellison, “Social network sites: Definition, history, and scholarship,” Journal of computer-mediated Communication, vol. 13, no. 1, pp. 210–230, 2007.

- [10] L. Hong and B. D. Davison, “Empirical study of topic modeling in twitter,” in Proceedings of the first workshop on social media analytics, 2010, pp. 80–88.

- [11] C. Cao, H. Ge, H. Lu, X. Hu, and J. Caverlee, “What are you known for? learning user topical profiles with implicit and explicit footprints,” in Proceedings of the 40th International ACM SIGIR Conference on Research and Development in Information Retrieval, 2017, pp. 743–752.

- [12] E. Chen, G. Zeng, P. Luo, H. Zhu, J. Tian, and H. Xiong, “Discerning individual interests and shared interests for social user profiling,” World Wide Web, vol. 20, no. 2, pp. 417–435, 2017.

- [13] Q. You, S. Bhatia, and J. Luo, “A picture tells a thousand words—about you! user interest profiling from user generated visual content,” Signal Processing, vol. 124, pp. 45–53, 2016.

- [14] G. Farnadi, J. Tang, M. De Cock, and M.-F. Moens, “User profiling through deep multimodal fusion,” in Proceedings of the Eleventh ACM International Conference on Web Search and Data Mining, 2018, pp. 171–179.

- [15] A. Mislove, B. Viswanath, K. P. Gummadi, and P. Druschel, “You are who you know: inferring user profiles in online social networks,” in Proceedings of the third ACM international conference on Web search and data mining, 2010, pp. 251–260.

- [16] J. Chen, J. He, L. Cai, and J. Pan, “Profiling online social network users via relationships and network characteristics,” in 2016 IEEE Global Communications Conference (GLOBECOM). IEEE, 2016, pp. 1–6.

- [17] Z. Xu, Y. Zhang, Y. Wu, and Q. Yang, “Modeling user posting behavior on social media,” in Proceedings of the 35th international ACM SIGIR conference on Research and development in information retrieval, 2012, pp. 545–554.

- [18] Z. Hu, J. Yao, B. Cui, and E. Xing, “Community level diffusion extraction,” in Proceedings of the 2015 ACM SIGMOD International Conference on Management of Data, 2015, pp. 1555–1569.

- [19] J. Feng, Y. Rao, H. Xie, F. L. Wang, and Q. Li, “User group based emotion detection and topic discovery over short text,” World Wide Web, pp. 1–35, 2019.

- [20] Y. Wang, D. Jin, K. Musial, and J. Dang, “Community detection in social networks considering topic correlations,” in Proceedings of the AAAI Conference on Artificial Intelligence, vol. 33, no. 01, 2019, pp. 321–328.

- [21] F. Abel, S. Araújo, Q. Gao, and G.-J. Houben, “Analyzing cross-system user modeling on the social web,” in International Conference on Web Engineering. Springer, 2011, pp. 28–43.

- [22] F. Abel, E. Herder, G.-J. Houben, N. Henze, and D. Krause, “Cross-system user modeling and personalization on the social web,” User Modeling and User-Adapted Interaction, vol. 23, no. 2, pp. 169–209, 2013.

- [23] W. X. Zhao, J. Jiang, J. Weng, J. He, E.-P. Lim, H. Yan, and X. Li, “Comparing twitter and traditional media using topic models,” in European conference on information retrieval. Springer, 2011, pp. 338–349.

- [24] S. Qian, T. Zhang, and C. Xu, “Multi-modal multi-view topic-opinion mining for social event analysis,” in Proceedings of the 24th ACM international conference on Multimedia, 2016, pp. 2–11.

- [25] G. Heinrich, “Parameter estimation for text analysis,” Technical report, Tech. Rep., 2005.

- [26] X. Cheng, X. Yan, Y. Lan, and J. Guo, “Btm: Topic modeling over short texts,” IEEE Transactions on Knowledge and Data Engineering, vol. 26, no. 12, pp. 2928–2941, 2014.