UniFormer: Unifying Convolution and Self-attention for Visual Recognition

Abstract

It is a challenging task to learn discriminative representation from images and videos, due to large local redundancy and complex global dependency in these visual data. Convolution neural networks (CNNs) and vision transformers (ViTs) have been two dominant frameworks in the past few years. Though CNNs can efficiently decrease local redundancy by convolution within a small neighborhood, the limited receptive field makes it hard to capture global dependency. Alternatively, ViTs can effectively capture long-range dependency via self-attention, while blind similarity comparisons among all the tokens lead to high redundancy. To resolve these problems, we propose a novel Unified transFormer (UniFormer), which can seamlessly integrate the merits of convolution and self-attention in a concise transformer format. Different from the typical transformer blocks, the relation aggregators in our UniFormer block are equipped with local and global token affinity respectively in shallow and deep layers, allowing it to tackle both redundancy and dependency for efficient and effective representation learning. Finally, we flexibly stack our blocks into a new powerful backbone, and adopt it for various vision tasks from image to video domain, from classification to dense prediction. Without any extra training data, our UniFormer achieves 86.3 top-1 accuracy on ImageNet-1K classification task. With only ImageNet-1K pre-training, it can simply achieve state-of-the-art performance in a broad range of downstream tasks. It obtains 82.9/84.8 top-1 accuracy on Kinetics-400/600, 60.9/71.2 top-1 accuracy on Something-Something V1/V2 video classification tasks, 53.8 box AP and 46.4 mask AP on COCO object detection task, 50.8 mIoU on ADE20K semantic segmentation task, and 77.4 AP on COCO pose estimation task. Moreover, we build an efficient UniFormer with a concise hourglass design of token shrinking and recovering, which achieves 2-4$\times$ higher throughput than the recent lightweight models.
Code is available at https://github.com/Sense-X/UniFormer.

Index Terms:

UniFormer, Convolution Neural Network, Transformer, Self-Attention, Visual Recognition.

1 Introduction

Representation learning is a fundamental research topic for visual recognition [61, 24]. Basically, we confront two distinct challenges that exist in visual data such as images and videos. On one hand, the local redundancy is large, e.g., visual content in a local region (space, time or space-time) tends to be similar. Such locality often introduces inefficient computation. On the other hand, the global dependency is complex, e.g., targets in different regions have dynamic relations. Such long-range interaction often causes ineffective learning.

To tackle such difficulties, researchers have proposed a number of powerful models in visual recognition [107, 117, 28, 116]. In particular, the mainstream backbones are Convolution Neural Networks (CNNs) [37, 40, 30] and Vision Transformers (ViTs) [24, 88], where convolution and self-attention are the key operations in these two structures. Unfortunately, each of these operations mainly addresses one aforementioned challenge while ignoring the other. For example, the convolution operation is good at reducing local redundancy and avoiding unnecessary computation, by aggregating each pixel with context from a small neighborhood (e.g., $3\times 3$ or $3\times 3\times 3$). However, the limited receptive field makes it difficult for convolution to learn global dependency [101, 53]. Alternatively, self-attention has been recently highlighted in the ViTs. By similarity comparison among visual tokens, it exhibits a strong capacity for learning global dependency in both images [24, 61] and videos [3, 1, 62]. Nevertheless, we observe that ViTs are often inefficient at encoding local features in the shallow layers.

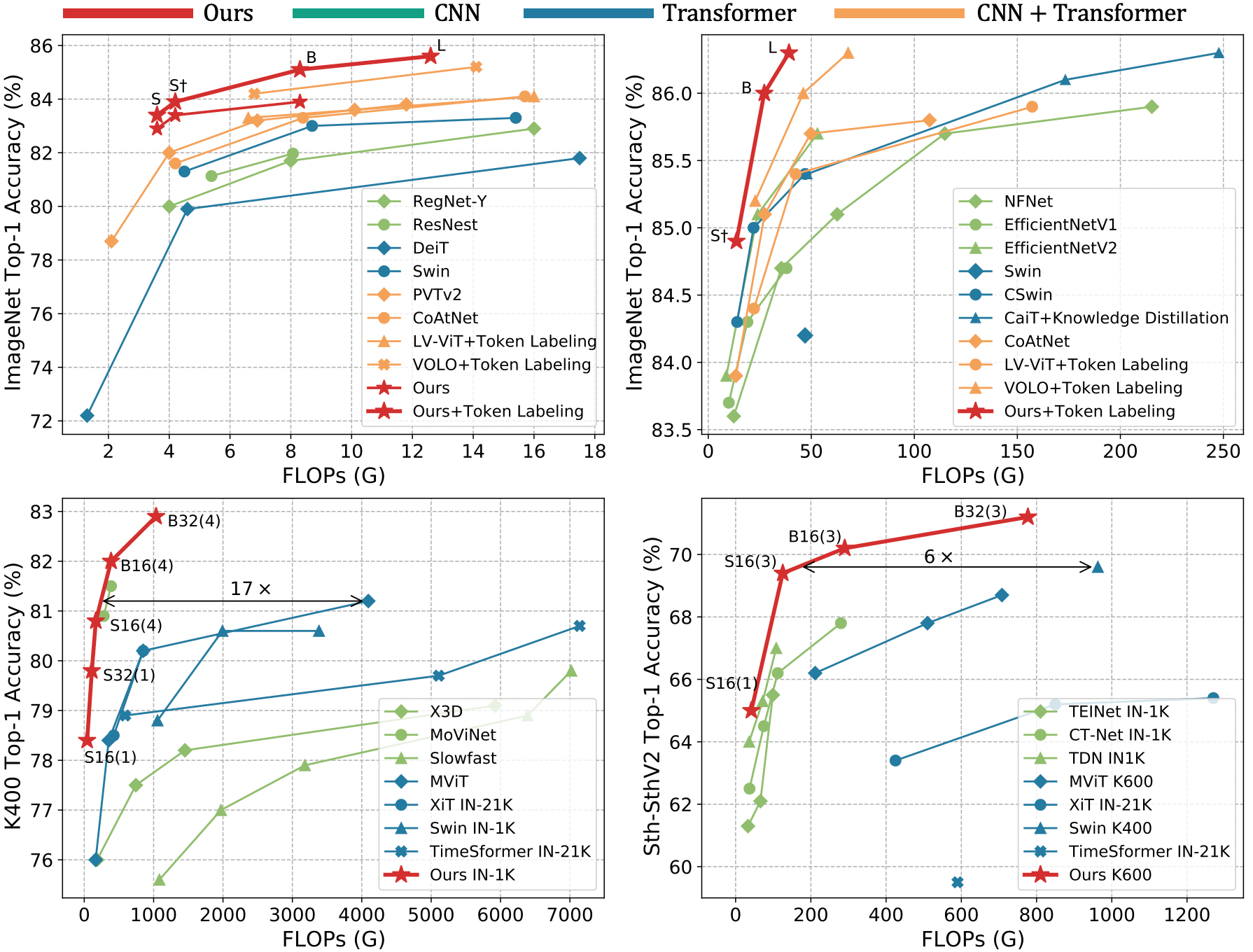

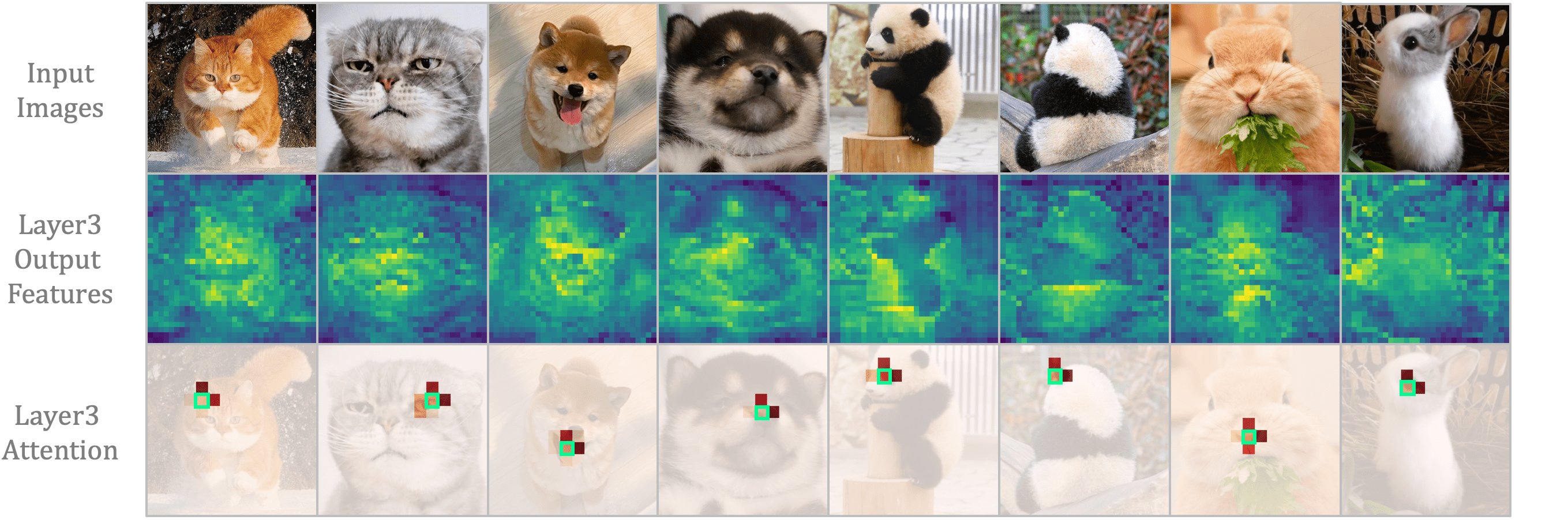

We take the well-known ViTs in the image and video domains (i.e., DeiT [87] and TimeSformer [3]) as examples, and visualize their attention maps in the shallow layer. As shown in Figure 2, both ViTs indeed capture detailed visual features in the shallow layer, while spatial and temporal attention are redundant. One can easily see that, given an anchor token, spatial attention largely concentrates on the tokens in the local region (mostly $3\times 3$), and learns little from the remaining tokens in this image. Similarly, temporal attention mainly aggregates the tokens in the adjacent frames, while losing sight of the remaining tokens in the distant frames. However, such local focus is obtained by global comparison among all the tokens in space and time. Clearly, this redundant attention brings a large and unnecessary computation burden, thus deteriorating the computation-accuracy balance in ViTs (Figure 1).

Based on these discussions, we propose a novel Unified transFormer (UniFormer) in this work. It flexibly unifies convolution and self-attention in a concise transformer format, which can tackle both local redundancy and global dependency for effective and efficient visual recognition. Specifically, our UniFormer block consists of three key modules, i.e., Dynamic Position Embedding (DPE), Multi-Head Relation Aggregator (MHRA), and Feed-Forward Network (FFN). The distinct design of the relation aggregator is the key difference between our UniFormer and the previous CNNs and ViTs. In the shallow layers, our relation aggregator captures local token affinity with a small learnable parameter matrix, which inherits the convolution style that can largely reduce computation redundancy by context aggregation in the local region. In the deep layers, our relation aggregator learns global token affinity with token similarity comparison, which inherits the self-attention style that can adaptively build long-range dependency from distant regions or frames. Via progressively stacking local and global UniFormer blocks in a hierarchical manner, we can flexibly integrate their cooperative power to promote representation learning. Finally, we provide a generic and powerful backbone for visual recognition and successfully address various downstream vision tasks with simple and elaborate adaptations. Additionally, we further introduce a lightweight design for UniFormer, which can achieve a preferable accuracy-throughput balance, by a concise hourglass style of token shrinking and recovering.

Extensive experiments demonstrate the strong performance of our UniFormer on a broad range of vision tasks, including image classification, video classification, object detection, instance segmentation, semantic segmentation and pose estimation. Without any extra training data, UniFormer-L achieves 86.3 top-1 accuracy on ImageNet-1K. Moreover, with only ImageNet-1K pre-training, UniFormer-B achieves 82.9/84.8 top-1 accuracy on Kinetics-400/Kinetics-600, 60.9/71.2 top-1 accuracy on Something-Something V1/V2, 53.8 box AP and 46.4 mask AP on the COCO detection task, 50.8 mIoU on the ADE20K semantic segmentation task, and 77.4 AP on the COCO pose estimation task. Finally, our efficient UniFormer with a concise hourglass design can achieve 2-4$\times$ higher throughput than the recent lightweight models.

2 Related Work

2.1 Convolution Neural Networks (CNNs)

In the past few years, the development of computer vision has been mainly driven by convolutional neural networks (CNNs). Beginning with the classical AlexNet [49], many powerful CNN networks have been proposed [80, 84, 37, 107, 41, 40, 120, 85] and achieved remarkable performance in various tasks of image understanding [22, 60, 121, 36, 6, 47, 104, 95]. Recently, since video has gradually become one main data resource in many realistic applications, researchers have attempted to apply CNNs in the video domain. Naturally, one can adapt 2D convolution to 3D, by temporal dimension extension [89]. However, 3D CNNs often suffer from difficult optimization and large computation cost. To resolve these issues, prior works try to inflate the pre-trained 2D convolution kernels for better optimization [11] and factorize 3D convolution kernels in different dimensions to reduce complexity [91, 72, 90, 29, 30, 52]. Besides, many recent studies of video understanding [44, 59, 53, 56] focus on adapting vanilla 2D CNNs with elaborated temporal modeling modules, such as temporal shift [59, 67], motion enhancement [44, 56, 63], and spatiotemporal excitation [53, 52]. Unfortunately, due to the limited receptive field, traditional convolutions struggle to capture long-range dependency even when they are stacked deeper.

2.2 Vision Transformers (ViTs)

To capture long-term dependencies, Vision Transformer (ViT) has been proposed [24]. Inspired by Transformer architectures in NLP [92], ViT treats an image as a number of visual tokens and leverages attention to encode token relations for representation learning. However, vanilla ViT depends on sufficient training data and careful data augmentation. To tackle these problems, several approaches have been developed by improved patch embedding [55], data-efficient training [87], efficient self-attention [61, 23, 109], and multi-scale architectures [99, 28, 100]. These works successfully boost the performance of ViT on various image tasks [8, 123, 106, 15, 46, 55, 110, 114, 38, 12, 57]. Recently, researchers have attempted to extend image ViTs for video modeling. The classical work is TimeSformer [3], which uses spatial-temporal attention. Starting from this, many works propose different variants for spatiotemporal representation learning [3, 1, 28, 71, 5, 62], and subsequently they are adapted to various video understanding tasks [98, 25, 26, 31, 7]. Although these works demonstrate the outstanding ability of ViTs to learn long-term token relations, the self-attention mechanism requires costly token-to-token comparisons [24]. Hence, it is often inefficient at encoding low-level features, as shown in Figure 2. Though Video Swin [62] advocates an inductive bias of locality with shifted windows, window-based self-attention is still less efficient than local convolution [37] when encoding low-level features. Moreover, the shifted window should be carefully configured.

2.3 Combination of CNNs and ViTs

To bridge the gap between CNNs and ViTs, researchers have tried to take advantage of them to build stronger vision backbones for image understanding, by adding convolutional patch stem for fast convergence [111, 105], introducing convolutional position embedding [17, 23], inserting depthwise convolution into feed-forward network [111, 114], utilizing convolutional projection in self-attention [102], and combining MBConv [77] with Transformer [21]. As for video understanding, the combination is also straightforward, i.e., one can insert self-attention as global attention [101], and/or use convolution as patch stem [64]. However, all these approaches ignore inherent relations between convolution and self-attention, leading to inferior local and/or global token relation learning. Several recent works have demonstrated that self-attention operates similarly to convolution [75, 20]. But they suggest replacing convolution instead of combining them together. Differently, our UniFormer unifies convolution and self-attention in the transformer style, which can effectively learn local and global token relation, and achieve better accuracy-computation trade-offs on all the vision tasks from image to the video domain.

2.4 Lightweight CNNs and ViTs

In many practical applications, the running platforms usually lack sufficient computing power. Hence, a series of lightweight CNNs are proposed to satisfy such on-device requirements. For example, the classical MobileNets [40, 77, 39] adopt depthwise separable convolution in well-organized efficient ResNet. ShuffleNets [120, 68] leverage channel shuffle for computation reduction. EfficientNets [85, 86] further scale models with neural architecture search over depth, width, and resolution. However, such lightweight design has not been fully investigated in ViTs. Two recent works are proposed by designing transformers as convolutions (i.e., MobileViT [69]), and introducing a parallel architecture of MobileNet and ViT (i.e., MobileFormer [14]). But both works ignore the inference speed (e.g., throughput). Hence, the prior efficient CNNs are still the better choice. To bridge this gap, we build a lightweight UniFormer by token shrinking and recovering in Section 5.

3 Method

In this section, we introduce the proposed UniFormer in detail. First, we describe the overview of our UniFormer block. Then, we explain its key modules such as multi-head relation aggregator and dynamic position embedding. Moreover, we discuss the distinct relations between our UniFormer and existing CNNs/ViTs, showing its preferable design for accuracy-computation balance.

3.1 Overview

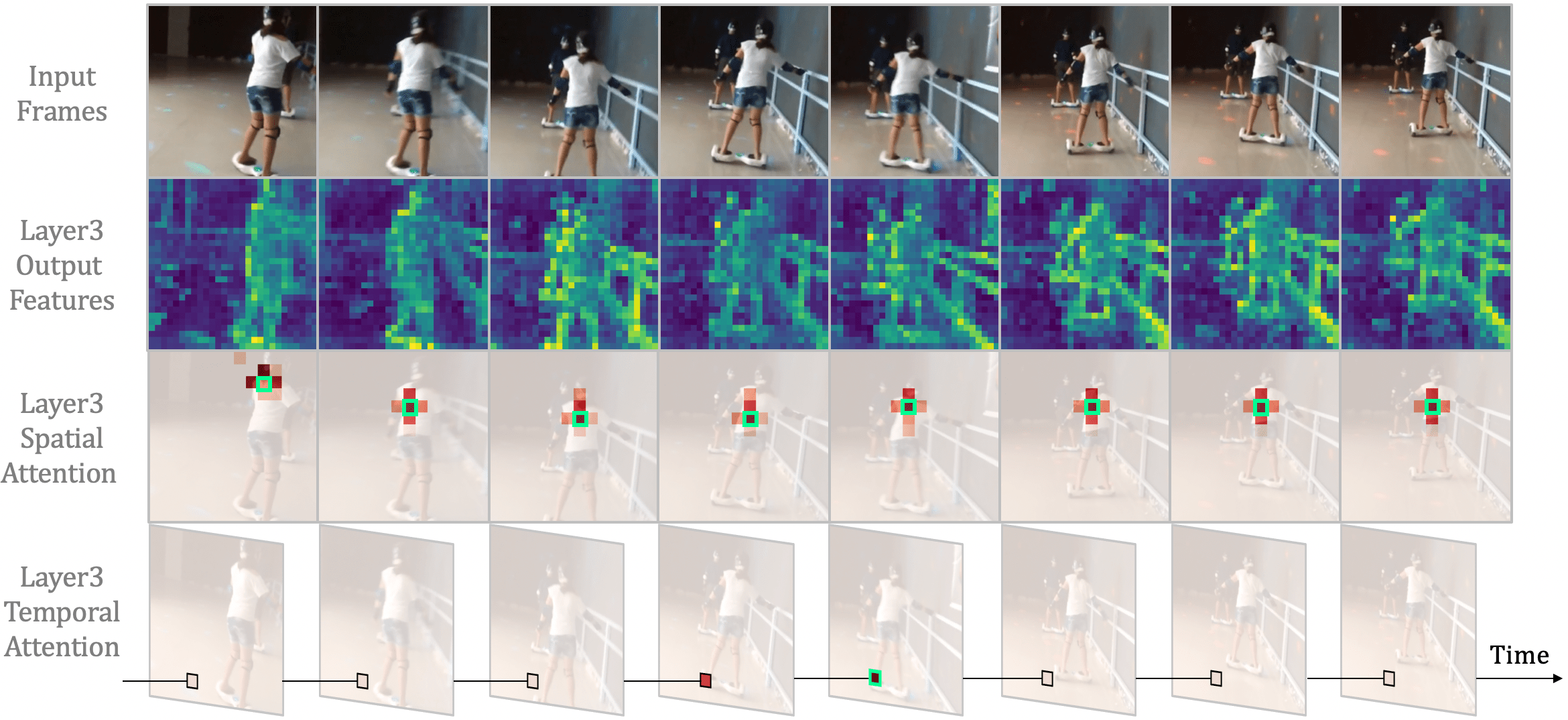

Figure 3 shows our Unified transFormer (UniFormer). For simplicity, we take a video with $T$ frames as an example, and an image input can be seen as a video with a single frame. Hence, the dimensions highlighted in red only exist for the video input, while all of them are equal to one for image input. Our UniFormer follows a basic transformer format, while we elaborately design it to tackle computational redundancy and capture complex dependency.

Specifically, our UniFormer block consists of three key modules: Dynamic Position Embedding (DPE), Multi-Head Relation Aggregator (MHRA) and Feed-Forward Network (FFN):

$$\mathbf{X} = \mathrm{DPE}(\mathbf{X}_{in}) + \mathbf{X}_{in}, \tag{1}$$

$$\mathbf{Y} = \mathrm{MHRA}(\mathrm{Norm}(\mathbf{X})) + \mathbf{X}, \tag{2}$$

$$\mathbf{Z} = \mathrm{FFN}(\mathrm{Norm}(\mathbf{Y})) + \mathbf{Y}. \tag{3}$$

Considering the input token tensor $\mathbf{X}_{in}\in\mathbb{R}^{C\times T\times H\times W}$ ($T=1$ for an image input), we first introduce DPE to dynamically integrate position information into all the tokens (Eq. 1). It is friendly to arbitrary input resolution and makes good use of token order for better visual recognition. Then, we use MHRA to enhance each token by exploiting its contextual tokens with relation learning (Eq. 2). Via flexibly designing the token affinity in the shallow and deep layers, our MHRA can smartly unify convolution and self-attention to reduce local redundancy and learn global dependency. Finally, we add FFN like traditional ViTs [24], which consists of two linear layers and one non-linear function, i.e., GELU (Eq. 3). The channel number is first expanded by the ratio of 4 and then recovered, thus each token will be enhanced individually.
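The three residual updates of Eq. 1-3 can be sketched in a few lines. This is only an illustration under simplifying assumptions: tokens are a flat list of floats, and `dpe`, `mhra`, `ffn` and `norm` are hypothetical callables standing in for the real modules; none of these names come from the released code.

```python
from typing import Callable, List

Tokens = List[float]  # toy stand-in for a token tensor


def add(a: Tokens, b: Tokens) -> Tokens:
    """Element-wise residual addition."""
    return [x + y for x, y in zip(a, b)]


def uniformer_block(
    x_in: Tokens,
    dpe: Callable[[Tokens], Tokens],
    mhra: Callable[[Tokens], Tokens],
    ffn: Callable[[Tokens], Tokens],
    norm: Callable[[Tokens], Tokens],
) -> Tokens:
    """Compose the three sub-modules with residual connections."""
    x = add(dpe(x_in), x_in)    # Eq. 1: inject position information
    y = add(mhra(norm(x)), x)   # Eq. 2: aggregate context across tokens
    z = add(ffn(norm(y)), y)    # Eq. 3: refine each token individually
    return z
```

Only the MHRA argument changes between local and global blocks; the surrounding residual structure stays the same.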

3.2 Multi-Head Relation Aggregator

As analyzed before, the traditional CNNs and ViTs focus on addressing either local redundancy or global dependency, leading to unsatisfactory accuracy and/or unnecessary computation. To overcome these difficulties, we introduce a generic Relation Aggregator (RA), which elegantly unifies convolution and self-attention for token relation learning. It can achieve efficient and effective representation learning by designing local and global token affinity in the shallow and deep layers respectively. Specifically, MHRA exploits token relationships in a multi-head style:

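$$\mathrm{R}_n(\mathbf{X}) = \mathrm{A}_n \mathrm{V}_n(\mathbf{X}), \tag{4}$$

$$\mathrm{MHRA}(\mathbf{X}) = \mathrm{Concat}(\mathrm{R}_1(\mathbf{X}); \mathrm{R}_2(\mathbf{X}); \cdots; \mathrm{R}_N(\mathbf{X}))\,\mathbf{U}. \tag{5}$$

Given the input tensor $\mathbf{X}\in\mathbb{R}^{C\times T\times H\times W}$, we first reshape it to a sequence of tokens $\mathbf{X}\in\mathbb{R}^{L\times C}$ with the length of $L=T\times H\times W$. Moreover, $\mathrm{R}_n(\cdot)$ refers to RA in the $n$-th head and $\mathbf{U}\in\mathbb{R}^{C\times C}$ is a learnable parameter matrix to integrate $N$ heads. Each RA consists of token context encoding and token affinity learning. We apply a linear transformation to encode the original tokens into contextual tokens $\mathrm{V}_n(\mathbf{X})\in\mathbb{R}^{L\times \frac{C}{N}}$. Subsequently, RA can summarize context with the guidance of token affinity $\mathrm{A}_n\in\mathbb{R}^{L\times L}$. We will describe how to learn the specific $\mathrm{A}_n$ in the following.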

3.2.1 Local MHRA

As shown in Figure 2, though the previous ViTs compare similarities among all the tokens, they finally learn local representations. Such redundant self-attention design brings large computation cost in the shallow layers. Based on it, we suggest learning token affinity in a small neighborhood, which coincidentally shares a similar insight with the design of a convolution filter. Hence, we propose to represent local affinity as a learnable parameter matrix in the shallow layers. Concretely, given an anchor token $\mathbf{X}_i$, our local RA learns the affinity between this token and other tokens in the small neighborhood $\Omega_i^{t\times h\times w}$ ($t=1$ for an image input):

$$\mathrm{A}_n^{local}(\mathbf{X}_i, \mathbf{X}_j) = a_n^{i-j}, \quad \text{where}\ j\in\Omega_i^{t\times h\times w}, \tag{6}$$

where $a_n\in\mathbb{R}^{t\times h\times w}$ is the learnable parameter, and $\mathbf{X}_j$ refers to any neighbor token in $\Omega_i^{t\times h\times w}$. $(i-j)$ denotes the relative position between token $i$ and $j$. Note that, visual content between adjacent tokens varies subtly in the shallow layers, since the receptive field of tokens is small. In this case, it is not necessary to make token affinity dynamic in these layers. Hence, we use a learnable parameter matrix to describe local token affinity, which simply depends on the relative position between tokens.
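To make the content-independent affinity concrete, the following sketch applies Eq. 6 to a single head over a 1D token sequence. It is a toy illustration, not the released implementation: the function name is hypothetical, each token is a scalar, and neighbors that fall outside the sequence are treated as zeros (an assumption).

```python
from typing import List


def local_relation_aggregator(values: List[float], a: List[float]) -> List[float]:
    """Aggregate every token from its 1D neighborhood.

    `a` stores one learnable weight per relative position i - j, so the
    affinity between tokens i and j never looks at the token content.
    Out-of-range neighbors contribute zero (an assumption of this sketch).
    """
    k = len(a)
    assert k % 2 == 1, "use an odd neighborhood size"
    r = k // 2
    out = []
    for i in range(len(values)):
        acc = 0.0
        for j in range(i - r, i + r + 1):
            if 0 <= j < len(values):
                # i - j lies in [-r, r]; shift by r to index into `a`
                acc += a[(i - j) + r] * values[j]
        out.append(acc)
    return out


print(local_relation_aggregator([1.0, 2.0, 3.0, 4.0], [0.25, 0.5, 0.25]))
# [1.0, 2.0, 3.0, 2.75]
```

Because the same weights are reused at every anchor position, this is exactly a sliding filter applied to one channel, which is why the local aggregator can be run as a depthwise convolution.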

Comparison to Convolution Block. Interestingly, we find that our local MHRA can be interpreted as a generic extension of the MobileNet block [77, 90, 29]. Firstly, the linear transformation $\mathrm{V}_n(\cdot)$ in Eq. 4 is equivalent to a pointwise convolution (PWConv), where each head corresponds to an output feature channel $\mathrm{V}_n(\mathbf{X})$. Furthermore, our local token affinity $\mathrm{A}_n^{local}$ can be instantiated as the parameter matrix that operates on each output channel (or head) $\mathrm{V}_n(\mathbf{X})$, thus the relation aggregator $\mathrm{R}_n(\mathbf{X}) = \mathrm{A}_n^{local}\mathrm{V}_n(\mathbf{X})$ can be explained as a depthwise convolution (DWConv). Finally, the linear matrix $\mathbf{U}$, which concatenates and fuses all heads, can also be seen as a pointwise convolution. As a result, such local MHRA can be reformulated in the PWConv-DWConv-PWConv manner of the MobileNet block. In our experiments, we instantiate our local MHRA as such channel-separated convolution, so that our UniFormer can boost computation efficiency for visual recognition. Moreover, different from the MobileNet block, our local UniFormer block is designed as a generic transformer format, i.e., it also contains dynamic position embedding (DPE) and feed-forward network (FFN), besides MHRA. This unique integration can effectively enhance token representation, which has not been explored in the previous convolution blocks.

3.2.2 Global MHRA

In the deep layers, it is important to exploit long-range relation in the broader token space, which naturally shares a similar insight with the design of self-attention. Therefore, we design the token affinity via comparing content similarity among all the tokens:

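$$\mathrm{A}_n^{global}(\mathbf{X}_i, \mathbf{X}_j) = \frac{e^{\mathrm{Q}_n(\mathbf{X}_i)^{T}\mathrm{K}_n(\mathbf{X}_j)}}{\sum_{j'\in\Omega_{T\times H\times W}} e^{\mathrm{Q}_n(\mathbf{X}_i)^{T}\mathrm{K}_n(\mathbf{X}_{j'})}}, \tag{7}$$

where $\mathbf{X}_j$ can be any token in the global tube with a size of $T\times H\times W$ ($T=1$ for an image input), while $\mathrm{Q}_n(\cdot)$ and $\mathrm{K}_n(\cdot)$ are two different linear transformations.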
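As a hedged illustration of Eq. 7, the sketch below computes the global affinity for one head as a softmax over dot products and then aggregates the contextual tokens as in Eq. 4. The inputs are assumed to be already projected queries, keys and values; the helper names are hypothetical. The sketch follows Eq. 7 as written, whereas practical attention layers usually also scale the logits by the inverse square root of the head dimension.

```python
import math
from typing import List

Vector = List[float]


def dot(u: Vector, v: Vector) -> float:
    return sum(x * y for x, y in zip(u, v))


def global_affinity(queries: List[Vector], keys: List[Vector]) -> List[List[float]]:
    """Row i holds the softmax over j of q_i . k_j, taken over all tokens."""
    affinity = []
    for q in queries:
        logits = [dot(q, k) for k in keys]
        m = max(logits)  # subtract the max for numerical stability
        exps = [math.exp(l - m) for l in logits]
        total = sum(exps)
        affinity.append([e / total for e in exps])
    return affinity


def aggregate(affinity: List[List[float]], values: List[Vector]) -> List[Vector]:
    """Weighted sum of the value tokens: R(X) = A V(X)."""
    dim = len(values[0])
    return [
        [sum(a_ij * v[d] for a_ij, v in zip(row, values)) for d in range(dim)]
        for row in affinity
    ]
```

Unlike the local case, every row of the affinity depends on the token content, and building it requires comparing each token with all others.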

Comparison to Transformer Block. Our global MHRA (Eq. 7) can be instantiated as a spatiotemporal self-attention, where $\mathrm{Q}_n(\cdot)$, $\mathrm{K}_n(\cdot)$ and $\mathrm{V}_n(\cdot)$ become Query, Key and Value in ViT [24]. Hence, it can effectively learn long-range dependency. However, our global UniFormer block is different from the previous ViT blocks. First, most video transformers divide spatial and temporal attention in the video domain [3, 1, 79], in order to reduce the dot-product computation in token similarity comparison. But such an operation inevitably deteriorates the spatiotemporal relation among tokens. In contrast, our global UniFormer block jointly encodes spatiotemporal token relation to generate more discriminative video representation for recognition. Since our local UniFormer block largely saves computation of token comparison in the shallow layers, the overall model can achieve a preferable computation-accuracy balance. Second, instead of absolute position embedding [24, 92], we adopt dynamic position embedding (DPE) in our UniFormer. It is in convolution style (see the next section), which can overcome permutation-invariance and be friendly to different input lengths of visual tokens.

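Table I: UniFormer model variants for image classification. "L" and "G" denote local and global UniFormer blocks.

| Model | Type | #Blocks | #Channels | #Param. | FLOPs |
| --- | --- | --- | --- | --- | --- |
| Small | [L, L, G, G] | [3, 4, 8, 3] | [64, 128, 320, 512] | 21.5M | 3.6G |
| Base | [L, L, G, G] | [5, 8, 20, 7] | [64, 128, 320, 512] | 50.3M | 8.3G |
| Large | [L, L, G, G] | [5, 10, 24, 7] | [128, 192, 448, 640] | 100M | 12.6G |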

3.3 Dynamic Position Embedding

The position information is an important clue to describe visual representation. Previously, most ViTs encode such information by absolute or relative position embedding [24, 99, 61]. However, absolute position embedding has to be interpolated for various input sizes with fine-tuning [87, 88], while relative position embedding does not work well due to the modification of self-attention [17]. To improve flexibility, convolutional position embedding has been recently proposed [16, 23]. In particular, conditional position encoding (CPE) [17] can implicitly encode position information via convolution operators, which unlocks Transformer to process arbitrary input size and promotes recognition performance. Due to its plug-and-play property, we flexibly adopt it as our Dynamic Position Embedding (DPE) in the UniFormer:

$$\mathrm{DPE}(\mathbf{X}_{in}) = \mathrm{DWConv}(\mathbf{X}_{in}), \tag{8}$$

where $\mathrm{DWConv}$ refers to depthwise convolution with zero paddings. We choose such a design as our DPE based on the following reasons. First, depthwise convolution is friendly to arbitrary input shapes, e.g., it is straightforward to use its spatiotemporal version to encode 3D position information in videos. Second, depthwise convolution is light-weight, which is an important factor for computation-accuracy balance. Finally, we add extra zero paddings, since it can help tokens be aware of their absolute positions by querying their neighbors progressively [17].
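The role of zero padding can be seen in a small single-channel 1D sketch of Eq. 8. The function names and the all-ones kernel are illustrative assumptions; a real DPE uses a learned 2D or 3D depthwise kernel per channel.

```python
from typing import List


def dwconv1d_zero_pad(x: List[float], kernel: List[float]) -> List[float]:
    """Single-channel 1D convolution with zero padding and stride 1."""
    assert len(kernel) % 2 == 1, "use an odd kernel size"
    r = len(kernel) // 2
    padded = [0.0] * r + list(x) + [0.0] * r
    return [
        sum(w * padded[i + t] for t, w in enumerate(kernel))
        for i in range(len(x))
    ]


def dpe(x_in: List[float], kernel: List[float]) -> List[float]:
    """Eq. 8: the position embedding is a depthwise convolution of the input."""
    return dwconv1d_zero_pad(x_in, kernel)


# Identical tokens still receive different embeddings near the border,
# because the zero padding leaks absolute position into the output.
print(dpe([1.0] * 5, [1.0, 1.0, 1.0]))  # [2.0, 3.0, 3.0, 3.0, 2.0]
```

The same function works unchanged for any sequence length, which mirrors the claim that DPE needs no interpolation when the input resolution changes.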

4 Framework

In this section, we mainly develop visual frameworks for various downstream tasks. Specifically, we first develop a number of visual backbones for image classification, by hierarchically stacking our local and global UniFormer blocks with consideration of computation-accuracy balance. Then, we extend the above backbones to tackle other representative vision tasks, including video classification and dense prediction (i.e., object detection, semantic segmentation and human pose estimation). Such generality and flexibility of our UniFormer demonstrate its valuable potential for computer vision research and beyond.

4.1 Image Classification

It is important to progressively learn visual representation for capturing semantics in the image. Hence, we build up our backbone with four stages, as illustrated in Figure 3.

More specifically, we use the local UniFormer blocks in the first two stages to reduce computation redundancy, while the global UniFormer blocks are utilized in the last two stages to learn long-range token dependency. For the local UniFormer block, MHRA is instantiated as PWConv-DWConv-PWConv with local token affinity (Eq. 6), where the spatial size of DWConv is set to $5\times 5$ for image classification. For the global UniFormer block, MHRA is instantiated as multi-head self-attention with global token affinity (Eq. 7), where the head dimension is set to 64. For both local and global UniFormer blocks, DPE is instantiated as DWConv with a spatial size of $3\times 3$, and the expand ratio of FFN is 4.

Additionally, as suggested in the CNN and ViT literature [37, 24], we utilize BN [43] for convolution and LN [2] for self-attention. For feature downsampling, we use a $4\times 4$ convolution with stride $4\times 4$ before the first stage and a $2\times 2$ convolution with stride $2\times 2$ before the other stages. Besides, an extra LN is added after each downsampling convolution. Finally, global average pooling and a fully connected layer are applied to output the predictions. When training models with Token Labeling [45], we add another fully connected layer for the auxiliary loss. For various computation requirements, we design three model variants as shown in Table I.
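The downsampling schedule determines how many tokens each stage has to process. The short sketch below computes these counts, assuming non-overlapping convolutions without padding (kernel size equal to stride) and a 224-pixel square input as the example; the function name is hypothetical.

```python
from typing import List, Sequence


def tokens_per_stage(height: int, width: int, strides: Sequence[int] = (4, 2, 2, 2)) -> List[int]:
    """Number of tokens entering each of the four stages.

    Assumes non-overlapping convolutions (kernel == stride) and no padding,
    so each downsampling step is a floor division of the spatial size.
    """
    counts = []
    h, w = height, width
    for s in strides:
        h, w = h // s, w // s
        counts.append(h * w)
    return counts


print(tokens_per_stage(224, 224))  # [3136, 784, 196, 49]
```

The two local stages handle thousands of tokens, while the global stages that pay the quadratic comparison cost only see 196 and 49 tokens at this resolution.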

4.2 Video Classification

Given our image-based 2D backbones, one can easily adapt them as 3D backbones for video classification. Without loss of generality, we adjust Small and Base models for spatiotemporal modeling. Specifically, the model architectures keep the same four stages, where we use the local UniFormer blocks in the first two stages and the global UniFormer blocks in the last two stages. But differently, all the 2D convolution filters are changed to 3D ones via filter inflation [11]. Concretely, the kernel sizes of DWConv in DPE and local MHRA are $3\times 3\times 3$ and $5\times 5\times 5$ respectively. Moreover, we downsample both spatial and temporal dimensions before the first stage. Hence, the convolution filter before this stage becomes $3\times 4\times 4$ with a stride of $2\times 4\times 4$. For the other stages, we just downsample the spatial dimension to decrease the computation cost and maintain high performance. Hence, the convolution filters before these stages are $1\times 2\times 2$ with a stride of $1\times 2\times 2$.
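The resulting token grid at each stage can be traced with the standard convolution output-size formula. In the sketch below, kernels and strides follow the text, while the temporal padding of 1 in the first layer and the 16-frame, 224-pixel example clip are assumptions made for illustration.

```python
from typing import List, Tuple


def conv_out(size: int, kernel: int, stride: int, padding: int = 0) -> int:
    """Standard convolution output-size formula."""
    return (size + 2 * padding - kernel) // stride + 1


def video_stage_shapes(frames: int, height: int, width: int) -> List[Tuple[int, ...]]:
    """(T, H, W) of the token grid entering each of the four stages."""
    # (kernel, stride, padding) per axis for the conv before each stage.
    # The temporal padding of 1 in the first layer is an assumption chosen
    # so that an even number of frames is halved exactly.
    layers = [
        ((3, 4, 4), (2, 4, 4), (1, 0, 0)),
        ((1, 2, 2), (1, 2, 2), (0, 0, 0)),
        ((1, 2, 2), (1, 2, 2), (0, 0, 0)),
        ((1, 2, 2), (1, 2, 2), (0, 0, 0)),
    ]
    shapes = []
    size: Tuple[int, ...] = (frames, height, width)
    for kernel, stride, padding in layers:
        size = tuple(
            conv_out(s, k, st, p)
            for s, k, st, p in zip(size, kernel, stride, padding)
        )
        shapes.append(size)
    return shapes


print(video_stage_shapes(16, 224, 224))
# [(8, 56, 56), (8, 28, 28), (8, 14, 14), (8, 7, 7)]
```

Only the first layer touches the temporal axis, so the later stages keep the same number of frames while the spatial grid shrinks.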

Note that we use spatiotemporal attention in the global UniFormer blocks for learning token relation jointly in the 3D view. It is worth mentioning that, due to the large model sizes, the previous video transformers [3, 1] divide spatial and temporal attention to reduce computation and alleviate overfitting, but such a factorization inevitably tears apart spatiotemporal token relations. In contrast, our joint spatiotemporal attention can avoid the issue. Besides, our local UniFormer blocks largely save computation via 3D DWConv. Hence, our model can achieve effective and efficient video representation learning.

4.3 Dense Prediction

Dense prediction tasks are necessary to verify the generality of our recognition backbones. Hence, we adopt our UniFormer backbones for a number of popular dense tasks such as object detection, instance segmentation, semantic segmentation, and human pose estimation. However, direct usage of our backbone is not suitable because of the high input resolution of most dense prediction tasks, e.g., the size of the image is $1333\times 800$ in the COCO object detection dataset. Naturally, feeding such images into our classification backbones would inevitably lead to large computation, especially when operating self-attention of the global UniFormer block in the last two stages. Taking $N$ visual tokens as an example, the MatMul operation in token similarity comparison (Eq. 7) causes $O(N^2)$ complexity, which is prohibitive for most dense tasks.
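A quick calculation shows how fast the token comparison grows with resolution. The sketch counts the query-key pairs of one attention head at Stage3, assuming a cumulative stride of 16 (4 x 2 x 2) and floor division of the image size; real detection pipelines usually pad the input, so the numbers are estimates. The function name is hypothetical.

```python
from typing import Tuple


def stage3_similarity_pairs(height: int, width: int, stride: int = 16) -> Tuple[int, int]:
    """Return (N, N * N): token count and query-key pairs for one head.

    stride=16 is the cumulative downsampling before Stage3 (4 * 2 * 2).
    Floor division is an assumption; padding would slightly raise N.
    """
    n = (height // stride) * (width // stride)
    return n, n * n


small = stage3_similarity_pairs(224, 224)    # classification input
large = stage3_similarity_pairs(800, 1333)   # COCO-style detection input
print(small)  # (196, 38416)
print(large)  # (4150, 17222500)
```

The token count grows by roughly 21 times between the two inputs, but the number of compared pairs grows by roughly 448 times, which is the quadratic effect described above.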

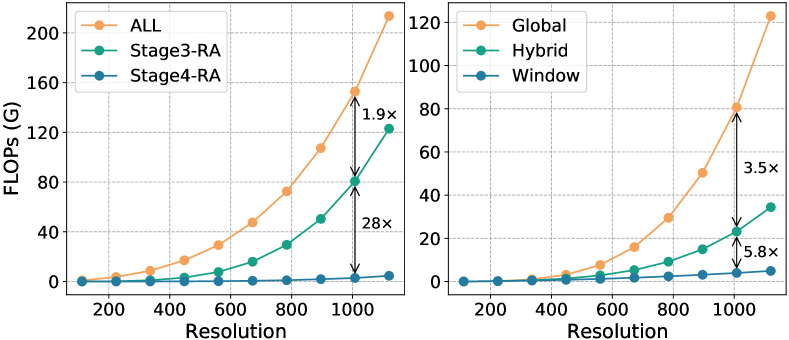

We propose to adjust the global UniFormer block for different downstream tasks. First, we analyze the FLOPs of our UniFormer-S under different input resolutions. Figure 4 clearly indicates that the Relation Aggregator (RA) in Stage3 occupies a large share of the computation. For example, for a $1008\times 1008$ image, the MatMul operation of RA in Stage3 even occupies over 50% of the total FLOPs, while the FLOPs in Stage4 are only $1/28$ of those in Stage3. Thus we focus on modifying RA in Stage3 for computation reduction.

Inspired by [75, 61], we propose to apply our global MHRA in a predefined window (e.g., $14\times 14$), instead of using it in the entire image with high resolution. Such an operation can effectively cut down computation to a complexity of $O(Np^2)$, where $p$ is the window size. However, it undoubtedly drops model performance, due to insufficient token interaction. To bridge this gap, we integrate window and global UniFormer blocks together in Stage3, where a hybrid group consists of three window blocks and one global block. In this case, there are 2/5 hybrid groups in Stage3 of our UniFormer-Small/Base backbones.
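The saving from window attention and the resulting Stage3 layout can be sketched as follows. When tokens are split into non-overlapping windows of side length `window`, each token is compared only with the tokens of its own window. The function names and the 896-pixel example are illustrative assumptions; the group pattern of three window blocks followed by one global block follows the text.

```python
from typing import List


def global_pairs(n_tokens: int) -> int:
    """Every token is compared with every token."""
    return n_tokens * n_tokens


def window_pairs(n_tokens: int, window: int) -> int:
    """Each token is compared only with the window * window tokens around it."""
    return n_tokens * window * window


def stage3_layout(num_blocks: int, windows_per_group: int = 3) -> List[str]:
    """Repeat hybrid groups of window blocks followed by one global block."""
    group = ["window"] * windows_per_group + ["global"]
    assert num_blocks % len(group) == 0, "depth must be a multiple of the group size"
    return group * (num_blocks // len(group))


n = 56 * 56  # Stage3 tokens of an 896 x 896 image at stride 16 (example)
print(global_pairs(n), window_pairs(n, 14))  # 9834496 614656
print(stage3_layout(8))
# ['window', 'window', 'window', 'global', 'window', 'window', 'window', 'global']
```

With 8 and 20 blocks in Stage3, the layout yields 2 and 5 hybrid groups, matching the Small and Base backbones.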

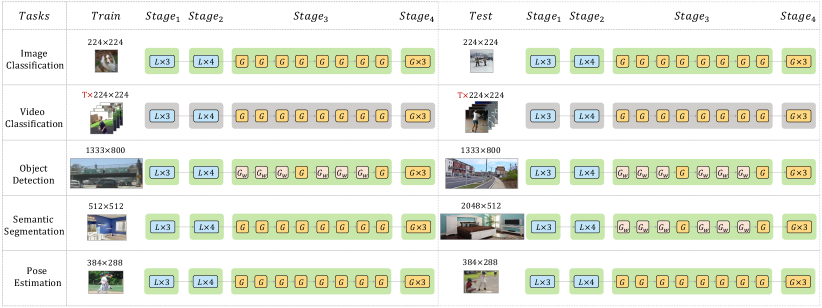

Based on this design, we next introduce the specific backbone settings of various dense tasks, depending on the input resolution of training and testing images. For object detection and instance segmentation, the input images are usually large (e.g., $1333\times 800$), thus we adopt the hybrid block style in Stage3. In contrast, the inputs are relatively small for pose estimation, such as $384\times 288$, hence global blocks are still applied in Stage3 for both training and testing. In particular, for semantic segmentation, the testing images are often larger than the training ones. Therefore, we utilize the global blocks in Stage3 for training, while adopting the hybrid blocks in Stage3 for testing. We use the following simple design: the window-based block in testing has the same receptive field as the global block in training, e.g., $32\times 32$. Such a design can maintain training efficiency, and boost testing performance by keeping consistency with training as much as possible.

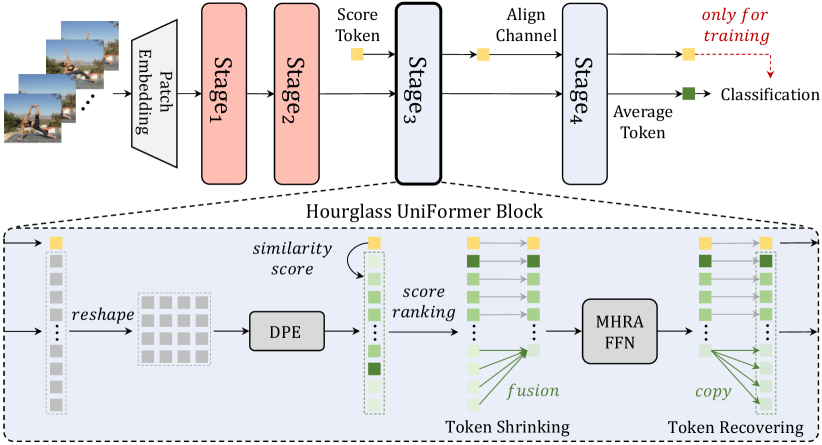

5 Towards Lightweight UniFormer

Recently, researchers have tried to combine CNNs with ViTs to design lightweight models. For example, MobileFormer [14] proposes a parallel design of MobileNet [77] and ViT [24], and MobileViT [69] designs transformers as convolutions. However, the inference speeds of these works should be further improved. Hence, the prior efficient CNNs are still the better choice, such as EfficientNet [85] for image tasks and MoViNet [48] for video tasks. To bridge the gap, we propose a lightweight UniFormer architecture in Figure 6, by designing a distinct hourglass UniFormer block. In this block, we adaptively leverage token shrinking and recovering, to achieve a preferable accuracy-throughput balance.

5.1 Hourglass UniFormer Block

Since a large computation load lies in token similarity comparison in the global UniFormer block, we propose an Hourglass UniFormer (H-UniFormer) block to reduce the number of visual tokens involved in global MHRA. Note that the existing token pruning methods [76, 58, 124] are infeasible for our UniFormer and those ViTs with convolution [100, 111, 21]. The main reason is that, after pruning, the remaining tokens often have a broken spatiotemporal structure, which makes convolution inapplicable. To overcome such difficulty, we propose a concise integration of token shrinking and recovering in our H-UniFormer block.

Token Shrinking. We introduce a score token $\mathbf{S}$ to measure the importance of the visual tokens after DPE. Specifically, we compare similarity (using Eq. 7) between the score token $\mathbf{S}$ and a visual token $\mathbf{X}_i$, and average the similarity values over attention heads,

$$\mathbf{I}_i = \frac{1}{N}\sum_{n=1}^{N}\mathrm{A}_n^{global}(\mathbf{S}, \mathbf{X}_i), \tag{9}$$

where $\mathbf{I}_i$ represents the importance of visual token $\mathbf{X}_i$, and we use $\mathbf{I}$ to represent the importance vector of all the visual tokens. For tokens with high values in $\mathbf{I}$, we consider them to be crucial tokens and keep them. For tokens with low values, we consider them to be unimportant tokens. Hence, we fuse them as one representative token, where their score values are used as the fusing weights. By such unimportant token reduction, we can shrink visual tokens from $\mathbf{X}\in\mathbb{R}^{L\times C}$ to $\mathbf{X}'\in\mathbb{R}^{L'\times C}$, where the number of tokens $L' < L$. Subsequently, the reduced visual tokens are fed into global MHRA and FFN, for computation saving. Additionally, we pass this score token through all the H-UniFormer blocks in Stage3 and Stage4, and use it to calculate the classification loss when training. In this case, the score token is effectively guided by the ground-truth label, and it thus becomes discriminative to weight token importance.

Token Recovering. After learning token interactions in global MHRA and FFN, we replicate the representative token to recover the unimportant tokens. Thus, we can maintain the spatiotemporal structure of all the visual tokens, for effective dynamic position encoding (i.e., convolution) in the next H-UniFormer block.
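The shrink-then-recover round trip can be sketched as below. This is a minimal illustration under stated assumptions rather than the released implementation: the number of kept tokens is passed in as a fixed `keep` argument, the fused token is a score-weighted average normalized by the sum of the dropped scores, scores are assumed positive, and all function names are hypothetical.

```python
from typing import List, Tuple

Vector = List[float]


def shrink(
    tokens: List[Vector], scores: List[float], keep: int
) -> Tuple[List[Vector], List[int], List[int]]:
    """Keep the `keep` highest-scoring tokens and fuse the rest into one.

    Returns (reduced_tokens, kept_indices, dropped_indices). The fused
    representative token, if any, is stored last in `reduced_tokens`.
    """
    order = sorted(range(len(tokens)), key=lambda i: scores[i], reverse=True)
    kept = sorted(order[:keep])
    dropped = sorted(order[keep:])
    reduced = [tokens[i] for i in kept]
    if dropped:
        total = sum(scores[i] for i in dropped)  # assumed to be positive
        dim = len(tokens[0])
        fused = [
            sum(scores[i] * tokens[i][d] for i in dropped) / total
            for d in range(dim)
        ]
        reduced.append(fused)
    return reduced, kept, dropped


def recover(
    reduced: List[Vector], kept: List[int], dropped: List[int], length: int
) -> List[Vector]:
    """Put kept tokens back in place and replicate the representative token."""
    out: List[Vector] = [[] for _ in range(length)]
    for pos, i in enumerate(kept):
        out[i] = reduced[pos]
    for i in dropped:
        out[i] = list(reduced[-1])  # copy of the fused token
    return out


tokens = [[1.0], [2.0], [3.0], [5.0]]
scores = [0.5, 0.125, 0.25, 0.125]
reduced, kept, dropped = shrink(tokens, scores, keep=2)
print(reduced)                                # [[1.0], [3.0], [3.5]]
print(recover(reduced, kept, dropped, 4))     # [[1.0], [3.5], [3.0], [3.5]]
```

In a full block, the global MHRA and FFN would run on `reduced` between the two calls; the recovered sequence has the original length and ordering, so the convolutional DPE of the next block still sees a regular grid.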

| Arch. | Method | #Param | FLOPs | Train | Test | ImageNet |

| (M) | (G) | Size | Size | Top-1 | ||

| CNN | RegNetY-4G [74] | 21 | 4.0 | 224 | 224 | 80.0 |

| EffcientNet-B5 [85] | 30 | 9.9 | 456 | 456 | 83.6 | |

| EfficientNetV2-S [86] | 22 | 8.5 | 384 | 384 | 83.9 | |

| Trans | DeiT-S [87] | 22 | 4.6 | 224 | 224 | 79.9 |

| PVT-S [99] | 25 | 3.8 | 224 | 224 | 79.8 | |

| T2T-14 [112] | 22 | 5.2 | 224 | 224 | 80.7 | |

| Swin-T [61] | 29 | 4.5 | 224 | 224 | 81.3 | |

| Focal-T [109] | 29 | 4.9 | 224 | 224 | 82.2 | |

| CSwin-T [23] | 23 | 4.3 | 224 | 224 | 82.7 | |

| CSwin-T 384 [23] | 23 | 14.0 | 224 | 384 | 84.3 | |

| CNN+Trans | CvT-13 [102] | 20 | 4.5 | 224 | 224 | 81.6 |

| CvT-13 384 [102] | 20 | 16.3 | 224 | 384 | 83.0 | |

| CoAtNet-0 [21] | 25 | 4.2 | 224 | 224 | 81.6 | |

| CoAtNet-0 384 [21] | 20 | 13.4 | 224 | 384 | 83.9 | |

| Container [32] | 22 | 8.1 | 224 | 224 | 82.7 | |

| LV-ViT-S [45] | 26 | 6.6 | 224 | 224 | 83.3 | |

| LV-ViT-S 384 [45] | 26 | 22.2 | 224 | 384 | 84.4 | |

| UniFormer-S | 22 | 3.6 | 224 | 224 | 82.9 | |

| UniFormer-S⋆ | 22 | 3.6 | 224 | 224 | 83.4 | |

| UniFormer-S⋆ 384 | 22 | 11.9 | 224 | 384 | 84.6 | |

| UniFormer-S | 24 | 4.2 | 224 | 224 | 83.4 | |

| UniFormer-S | 24 | 4.2 | 224 | 224 | 83.9 | |

| UniFormer-S 384 | 24 | 13.7 | 224 | 384 | 84.9 | |

| CNN | RegNetY-8G [74] | 39 | 8.0 | 224 | 224 | 81.7 |

| EfficientNet-B7 [85] | 66 | 39.2 | 600 | 600 | 84.3 | |

| EfficientNetV2-M [86] | 54 | 25.0 | 480 | 480 | 85.1 | |

| Trans | PVT-L [99] | 61 | 9.8 | 224 | 224 | 81.7 |

| T2T-24 [112] | 64 | 13.2 | 224 | 224 | 82.2 | |

| Swin-S [61] | 50 | 8.7 | 224 | 224 | 83.0 | |

| Focal-S [109] | 51 | 9.1 | 224 | 224 | 83.5 | |

| CSwin-S [23] | 35 | 6.9 | 224 | 224 | 83.6 | |

| CSwin-S 384 [23] | 35 | 22.0 | 224 | 384 | 85.0 | |

| CNN+Trans | CvT-21 [102] | 32 | 7.1 | 224 | 224 | 82.5 |

| CoAtNet-1 [21] | 42 | 8.4 | 224 | 224 | 83.3 | |

| CoAtNet-1 384 [21] | 42 | 27.4 | 224 | 384 | 85.1 | |

| LV-ViT-M [45] | 56 | 16.0 | 224 | 224 | 84.1 | |

| LV-ViT-M 384 [45] | 56 | 42.2 | 224 | 384 | 85.4 | |

| UniFormer-B | 50 | 8.3 | 224 | 224 | 83.9 | |

| UniFormer-B⋆ | 50 | 8.3 | 224 | 224 | 85.1 | |

| UniFormer-B⋆ 384 | 50 | 27.2 | 224 | 384 | 86.0 | |

| CNN | RegNetY-16G [74] | 84 | 16.0 | 224 | 224 | 82.9 |

| EfficientNetV2-L [86] | 121 | 53 | 480 | 480 | 85.7 | |

| NFNet-F4 [4] | 316 | 215.3 | 384 | 512 | 85.9 | |

| Trans | DeiT-B [87] | 86 | 17.5 | 224 | 224 | 81.8 |

| Swin-B [61] | 88 | 15.4 | 224 | 224 | 83.3 | |

| Swin-B 384 | 88 | 47.0 | 224 | 384 | 84.2 | |

| Focal-B [109] | 90 | 16.0 | 224 | 224 | 83.8 | |

| CSwin-B [23] | 78 | 15.0 | 224 | 224 | 84.2 | |

| CSwin-B 384 [23] | 78 | 47.0 | 224 | 384 | 85.4 | |

| CaiT-S36 384 [88] | 68 | 48.0 | 224 | 384 | 85.4 | |

| CaiT-M36 448 [88] | 271 | 247.8 | 224 | 448 | 86.3 | |

| CNN+Trans | BoTNet-T7 [82] | 79 | 19.3 | 256 | 256 | 84.2 |

| CoAtNet-3 [21] | 168 | 34.7 | 224 | 224 | 84.5 | |

| CoAtNet-3 384 [21] | 168 | 107.4 | 224 | 384 | 85.8 | |

| LV-ViT-L [45] | 150 | 59.0 | 288 | 288 | 85.3 | |

| LV-ViT-L 448 [45] | 150 | 157.2 | 288 | 448 | 85.9 | |

| VOLO-D3 [113] | 86 | 20.6 | 224 | 224 | 85.4 | |

| VOLO-D3 448 [113] | 86 | 67.9 | 224 | 448 | 86.3 | |

| UniFormer-L⋆ | 100 | 12.6 | 224 | 224 | 85.6 | |

| UniFormer-L⋆ 384 | 100 | 39.2 | 224 | 384 | 86.3 | |

5.2 Light-Weight UniFormer Architecture

To build our light-weight UniFormer, we follow most of the architectures in Section 4.1, except that we use the H-UniFormer block in Stage3 and Stage4 and adopt a smaller depth, width or resolution (e.g., 128). Specifically, for UniFormer-XS, the block number, channel number and head dimension are [3, 5, 9, 3], [64, 128, 256, 512] and 32. For UniFormer-XXS, they are [2, 5, 8, 2], [56, 112, 224, 448] and 28.
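The two light-weight configurations can be written down as a small config sketch. The class and field names below are hypothetical, and the per-stage head count is derived here as channel number divided by head dimension, which is an assumption about how the head dimension is applied.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class LightUniFormerConfig:
    """Hypothetical container for the per-stage settings quoted in the text."""
    depths: tuple      # number of blocks in each of the four stages
    widths: tuple      # channel number in each stage
    head_dim: int      # channels per attention head

    def num_heads(self):
        # Assumption: heads per stage = channel number // head dimension.
        return tuple(w // self.head_dim for w in self.widths)

UNIFORMER_XS = LightUniFormerConfig((3, 5, 9, 3), (64, 128, 256, 512), 32)
UNIFORMER_XXS = LightUniFormerConfig((2, 5, 8, 2), (56, 112, 224, 448), 28)

for name, cfg in [("XS", UNIFORMER_XS), ("XXS", UNIFORMER_XXS)]:
    print(name, sum(cfg.depths), cfg.num_heads())
```

Under this assumption both variants end up with the same head counts per stage, since XXS shrinks the channel number and the head dimension by the same factor.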

Beginning from the second layer in Stage3, we utilize the similarity score from the previous layer to guide the token shrinking. Based on the observation that the locations of the crucial tokens are largely the same across different layers [108], we update the similarity scores via mean, i.e., by averaging the current scores with those of the previous layer. Thus the model can focus on the significant tokens consistently. By default, we keep half of the tokens and fuse the rest (i.e., the shrinking ratio is 0.5) in our light-weight UniFormer.
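As a rough illustration of this layer-to-layer score update, the sketch below averages the previous and current score vectors with equal weights and then selects the tokens to keep. The equal-weight mean and the function names are assumptions made for illustration; the exact update rule is not recoverable from the text here.

```python
import numpy as np

def update_scores(prev_scores, cur_scores):
    """Average the current scores with the previous layer's scores.

    Assumption: a plain equal-weight mean; the first layer has no history.
    """
    if prev_scores is None:
        return cur_scores
    return 0.5 * (prev_scores + cur_scores)

def tokens_to_keep(scores, shrink_ratio=0.5):
    """Indices of the tokens that are kept (the rest would be fused)."""
    k = max(1, int(round(scores.shape[0] * (1.0 - shrink_ratio))))
    return np.sort(np.argsort(-scores, kind="stable")[:k])

layer1 = np.array([0.10, 0.50, 0.30, 0.10])
layer2 = np.array([0.30, 0.30, 0.10, 0.30])
running = update_scores(update_scores(None, layer1), layer2)
kept = tokens_to_keep(running)
```

Averaging damps layer-to-layer fluctuations, so a token that was important in the previous layer is less likely to be dropped because of a single noisy score.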

6 Experiments

To verify the effectiveness and efficiency of our UniFormer for visual recognition, we conduct extensive experiments on ImageNet-1K[22] image classification, Kinetics-400[10]/600[9] and Something-Something V1&V2[34] video classification, COCO [60] object detection, instance segmentation and pose estimation, and ADE20K [121] semantic segmentation. We also perform comprehensive ablation studies to analyze each design of our UniFormer.

| Method | Pretrain | #frame×#crop×#clip | FLOPs (G) | K400 Top-1 | K400 Top-5 | K600 Top-1 | K600 Top-5 |

| SmallBig$_{EN}$ [53] | IN-1K | (8+32)×3×4 | 5700 | 78.7 | 93.7 | - | - |

| TDN$_{EN}$ [97] | IN-1K | (8+16)×3×10 | 5940 | 79.4 | 94.4 | - | - |

| CT-Net$_{EN}$ [52] | IN-1K | (16+16)×3×4 | 2641 | 79.8 | 94.2 | - | - |

| LGD [73] | IN-1K | 128×N/A | N/A | 79.4 | 94.4 | 81.5 | 95.6 |

| SlowFast [30] | - | 8×3×10 | 3180 | 77.9 | 93.2 | 80.4 | 94.8 |

| SlowFast+NL [30] | - | 16×3×10 | 7020 | 79.8 | 93.9 | 81.8 | 95.1 |

| ip-CSN [90] | Sports1M | 32×3×10 | 3270 | 79.2 | 93.8 | - | - |

| CorrNet [94] | Sports1M | 32×3×10 | 6720 | 81.0 | - | - | - |

| X3D-M [29] | - | 16×3×10 | 186 | 76.0 | 92.3 | 78.8 | 94.5 |

| X3D-XL [29] | - | 16×3×10 | 1452 | 79.1 | 93.9 | 81.9 | 95.5 |

| MoViNet-A5 [48] | - | 120×1×1 | 281 | 80.9 | 94.9 | 82.7 | 95.7 |

| MoViNet-A6 [48] | - | 120×1×1 | 386 | 81.5 | 95.3 | 83.5 | 96.2 |

| ViT-B-VTN [70] | IN-21K | 250×1×1 | 3992 | 78.6 | 93.7 | - | - |

| TimeSformer-HR [3] | IN-21K | 16×3×1 | 5109 | 79.7 | 94.4 | 82.4 | 96.0 |

| TimeSformer-L [3] | IN-21K | 96×3×1 | 7140 | 80.7 | 94.7 | 82.2 | 95.5 |

| STAM [79] | IN-21K | 64×1×1 | 1040 | 79.2 | - | - | - |

| X-ViT [5] | IN-21K | 8×3×1 | 425 | 78.5 | 93.7 | 82.5 | 95.4 |

| X-ViT [5] | IN-21K | 16×3×1 | 850 | 80.2 | 94.7 | 84.5 | 96.3 |

| Mformer-HR [71] | IN-21K | 16×3×10 | 28764 | 81.1 | 95.2 | 82.7 | 96.1 |

| MViT-B, 16×4 [28] | - | 16×1×5 | 353 | 78.4 | 93.5 | 82.1 | 95.7 |

| MViT-B, 32×3 [28] | - | 32×1×5 | 850 | 80.2 | 94.4 | 83.4 | 96.3 |

| ViViT-L [1] | IN-21K | 16×3×4 | 17352 | 80.6 | 94.7 | 82.5 | 95.6 |

| ViViT-L [1] | JFT-300M | 16×3×4 | 17352 | 82.8 | 95.3 | 84.3 | 96.2 |

| ViViT-H [1] | JFT-300M | 16×3×4 | 99792 | 84.8 | 95.8 | 85.8 | 96.5 |

| Swin-T [62] | IN-1K | 32×3×4 | 1056 | 78.8 | 93.6 | - | - |

| Swin-B [62] | IN-1K | 32×3×4 | 3384 | 80.6 | 94.6 | - | - |

| Swin-B [62] | IN-21K | 32×3×4 | 3384 | 82.7 | 95.5 | 84.0 | 96.5 |

| Swin-L-384 [62] | IN-21K | 32×5×10 | 105350 | 84.9 | 96.7 | 86.1 | 97.3 |

| UniFormer-S | IN-1K | 16×1×4 | 167 | 80.8 | 94.7 | 82.8 | 95.8 |

| UniFormer-B | IN-1K | 16×1×4 | 389 | 82.0 | 95.1 | 84.0 | 96.4 |

| UniFormer-B | IN-1K | 32×1×4 | 1036 | 82.9 | 95.4 | 84.8 | 96.7 |

| UniFormer-B | IN-1K | 32×3×4 | 3108 | 83.0 | 95.4 | 84.9 | 96.7 |

6.1 Image Classification

Settings. We train our models from scratch on the ImageNet-1K dataset [22]. For a fair comparison, we follow the same training strategy proposed in DeiT [87] by default, including strong data augmentation and regularization. Additionally, we set the stochastic depth rate as 0.1/0.3/0.4 respectively for our UniFormer-S/B/L in Table I. We train all models via AdamW [65] optimizer with cosine learning rate schedule [66] for 300 epochs, while the first 5 epochs are utilized for linear warm-up [33]. The weight decay, learning rate and batch size are set to 0.05, 1e-3 and 1024 respectively. For UniFormer-S, we follow state-of-the-art ViTs [23, 109] to apply overlapped patch embedding and blocks (3/5/9/3 blocks in each stage) for fair comparisons. As for UniFormer-B, we use the learning rate of 8e-4 for better convergence.
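The learning-rate schedule described above (linear warm-up for 5 epochs, then cosine decay over the remaining epochs of a 300-epoch run) can be sketched as follows. This is a per-epoch approximation under stated assumptions: the warm-up starts from a fraction of the base rate, the schedule decays to zero, and the function name is hypothetical. Actual training code typically updates the rate per iteration.

```python
import math

def lr_at_epoch(epoch, base_lr=1e-3, warmup_epochs=5, total_epochs=300, min_lr=0.0):
    """Learning rate at the start of a given (0-indexed) epoch.

    Assumptions: linear warm-up reaching base_lr at the last warm-up epoch,
    followed by a half-cosine decay from base_lr down to min_lr.
    """
    if epoch < warmup_epochs:
        return base_lr * (epoch + 1) / warmup_epochs
    progress = (epoch - warmup_epochs) / (total_epochs - warmup_epochs)
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + math.cos(math.pi * progress))

schedule = [lr_at_epoch(e) for e in range(300)]
print(f"epoch 0: {schedule[0]:.2e}, epoch 5: {schedule[5]:.2e}, epoch 299: {schedule[299]:.2e}")
```

Passing `base_lr=8e-4` gives the variant quoted for UniFormer-B.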

| Method | Pretrain | #frame×#crop×#clip | FLOPs (G) | SSV1 Top-1 | SSV1 Top-5 | SSV2 Top-1 | SSV2 Top-5 |

| TSN [96] | IN-1K | 16×1×1 | 66 | 19.9 | 47.3 | 30.0 | 60.5 |

| TSM [59] | IN-1K | 16×1×1 | 66 | 47.2 | 77.1 | - | - |

| GST [67] | IN-1K | 16×1×1 | 59 | 48.6 | 77.9 | 62.6 | 87.9 |

| TEINet [63] | IN-1K | 16×1×1 | 66 | 49.9 | - | 62.1 | - |

| TEA [56] | IN-1K | 16×1×1 | 70 | 51.9 | 80.3 | - | - |

| MSNet [50] | IN-1K | 16×1×1 | 101 | 52.1 | 82.3 | 64.7 | 89.4 |

| CT-Net [52] | IN-1K | 16×1×1 | 75 | 52.5 | 80.9 | 64.5 | 89.3 |

| CT-Net$_{EN}$ [52] | IN-1K | 8+12+16+24 | 280 | 56.6 | 83.9 | 67.8 | 91.1 |

| TDN [97] | IN-1K | 16×1×1 | 72 | 53.9 | 82.1 | 65.3 | 89.5 |

| TDN$_{EN}$ [97] | IN-1K | 8+16 | 198 | 56.8 | 84.1 | 68.2 | 91.6 |

| TimeSformer-HR [3] | IN-21K | 16×3×1 | 5109 | - | - | 62.5 | - |

| TimeSformer-L [3] | IN-21K | 96×3×1 | 7140 | - | - | 62.3 | - |

| X-ViT [5] | IN-21K | 16×3×1 | 850 | - | - | 65.2 | 90.6 |

| X-ViT [5] | IN-21K | 32×3×1 | 1270 | - | - | 65.4 | 90.7 |

| Mformer-HR [71] | K400 | 16×3×1 | 2876 | - | - | 67.1 | 90.6 |

| Mformer-L [71] | K400 | 32×3×1 | 3555 | - | - | 68.1 | 91.2 |

| ViViT-L [1] | K400 | 16×3×4 | 11892 | - | - | 65.4 | 89.8 |

| MViT-B, 64×3 [28] | K400 | 64×1×3 | 1365 | - | - | 67.7 | 90.9 |

| MViT-B-24, 32×3 [28] | K600 | 32×1×3 | 708 | - | - | 68.7 | 91.5 |

| Swin-B [62] | K400 | 32×3×1 | 963 | - | - | 69.6 | 92.7 |

| UniFormer-S | K400 | 16×1×1 | 42 | 53.8 | 81.9 | 63.5 | 88.5 |

| UniFormer-S | K600 | 16×1×1 | 42 | 54.4 | 81.8 | 65.0 | 89.3 |

| UniFormer-S | K400 | 16×3×1 | 125 | 57.2 | 84.9 | 67.7 | 91.4 |

| UniFormer-S | K600 | 16×3×1 | 125 | 57.6 | 84.9 | 69.4 | 92.1 |

| UniFormer-B | K400 | 16×3×1 | 290 | 59.1 | 86.2 | 70.4 | 92.8 |

| UniFormer-B | K600 | 16×3×1 | 290 | 58.8 | 86.5 | 70.2 | 93.0 |

| UniFormer-B | K400 | 32×3×1 | 777 | 60.9 | 87.3 | 71.2 | 92.8 |

| UniFormer-B | K600 | 32×3×1 | 777 | 61.0 | 87.6 | 71.2 | 92.8 |

| UniFormer-B | K400 | 32×3×2 | 1554 | 61.0 | 87.3 | 71.4 | 92.8 |

| UniFormer-B | K600 | 32×3×2 | 1554 | 61.2 | 87.6 | 71.3 | 92.8 |

For training high-performance ViTs, hard distillation [87] and Token Labeling [45] have been proposed, both of which are complementary to our backbones. Since Token Labeling is more efficient, we apply it with an extra fully connected layer and auxiliary loss, following the settings in LV-ViT [45]. Different from the training settings in DeiT, MixUp [118] and CutMix [115] are not used since they conflict with MixToken [45]. The base learning rate is 1.6e-3 for the batch size of 1024 by default. Specifically, we adopt the base learning rate of 1.2e-3 and layer scale [88] for UniFormer-L to avoid NaN loss. When fine-tuning our models on a larger resolution, i.e., 384×384, the weight decay, learning rate, batch size, warm-up epoch and total epoch are set to 1e-8, 5e-6, 512, 5 and 30.

Results. In Table II, we compare our UniFormer with the state-of-the-art CNNs, ViTs and their combinations. It clearly shows that our UniFormer outperforms previous models under different computation restrictions. For example, our UniFormer-S achieves 83.4% top-1 accuracy with only 4.2G FLOPs, surpassing RegNetY-4G [74], Swin-T [61], CSwin-T [23] and CoAtNet-0 [21] by 3.4%, 2.1%, 0.7% and 1.8% respectively. Though EfficientNet [85] comes from extensive neural architecture search, our UniFormer-B outperforms EfficientNet-B5 (83.9% vs. 83.6%) with less computation cost (8.3G vs. 9.9G). Furthermore, we enhance our models with Token Labeling [45], which is denoted by ‘⋆’. Compared with the models trained with the same settings, our UniFormer-L⋆ achieves higher accuracy with only 21% of the FLOPs of LV-ViT-L [45] and 61% of the FLOPs of VOLO-D3 [113]. Moreover, when fine-tuned on 384×384 images, our UniFormer-L⋆ obtains 86.3% top-1 accuracy. It is even better than EfficientNetV2-L [86] with larger input, demonstrating the powerful learning capacity of our UniFormer.

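| Method | #Params (M) | FLOPs (G) | Mask R-CNN 1× schedule: $AP^b$ | $AP^b_{50}$ | $AP^b_{75}$ | $AP^m$ | $AP^m_{50}$ | $AP^m_{75}$ | Mask R-CNN 3× + MS schedule: $AP^b$ | $AP^b_{50}$ | $AP^b_{75}$ | $AP^m$ | $AP^m_{50}$ | $AP^m_{75}$ |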

| Res50 [37] | 44 | 260 | 38.0 | 58.6 | 41.4 | 34.4 | 55.1 | 36.7 | 41.0 | 61.7 | 44.9 | 37.1 | 58.4 | 40.1 |

| PVT-S [99] | 44 | 245 | 40.4 | 62.9 | 43.8 | 37.8 | 60.1 | 40.3 | 43.0 | 65.3 | 46.9 | 39.9 | 62.5 | 42.8 |

| TwinsP-S [16] | 44 | 245 | 42.9 | 65.8 | 47.1 | 40.0 | 62.7 | 42.9 | 46.8 | 69.3 | 51.8 | 42.6 | 66.3 | 46.0 |

| Twins-S [16] | 44 | 228 | 43.4 | 66.0 | 47.3 | 40.3 | 63.2 | 43.4 | 46.8 | 69.2 | 51.2 | 42.6 | 66.3 | 45.8 |

| Swin-T [61] | 48 | 264 | 42.2 | 64.6 | 46.2 | 39.1 | 61.6 | 42.0 | 46.0 | 68.2 | 50.2 | 41.6 | 65.1 | 44.8 |

| ViL-S [119] | 45 | 218 | 44.9 | 67.1 | 49.3 | 41.0 | 64.2 | 44.1 | 47.1 | 68.7 | 51.5 | 42.7 | 65.9 | 46.2 |

| Focal-T [109] | 49 | 291 | 44.8 | 67.7 | 49.2 | 41.0 | 64.7 | 44.2 | 47.2 | 69.4 | 51.9 | 42.7 | 66.5 | 45.9 |

| UniFormer-Sh14 | 41 | 269 | 45.6 | 68.1 | 49.7 | 41.6 | 64.8 | 45.0 | 48.2 | 70.4 | 52.5 | 43.4 | 67.1 | 47.0 |

| Res101 [37] | 63 | 336 | 40.4 | 61.1 | 44.2 | 36.4 | 57.7 | 38.8 | 42.8 | 63.2 | 47.1 | 38.5 | 60.1 | 41.3 |

| X101-32 [107] | 63 | 340 | 41.9 | 62.5 | 45.9 | 37.5 | 59.4 | 40.2 | 44.0 | 64.4 | 48.0 | 39.2 | 61.4 | 41.9 |

| PVT-M [99] | 64 | 302 | 42.0 | 64.4 | 45.6 | 39.0 | 61.6 | 42.1 | 44.2 | 66.0 | 48.2 | 40.5 | 63.1 | 43.5 |

| TwinsP-B [16] | 64 | 302 | 44.6 | 66.7 | 48.9 | 40.9 | 63.8 | 44.2 | 47.9 | 70.1 | 52.5 | 43.2 | 67.2 | 46.3 |

| Twins-B [16] | 76 | 340 | 45.2 | 67.6 | 49.3 | 41.5 | 64.5 | 44.8 | 48.0 | 69.5 | 52.7 | 43.0 | 66.8 | 46.6 |

| Swin-S [61] | 69 | 354 | 44.8 | 66.6 | 48.9 | 40.9 | 63.4 | 44.2 | 48.5 | 70.2 | 53.5 | 43.3 | 67.3 | 46.6 |

| Focal-S [109] | 71 | 401 | 47.4 | 69.8 | 51.9 | 42.8 | 66.6 | 46.1 | 48.8 | 70.5 | 53.6 | 43.8 | 67.7 | 47.2 |

| CSWin-S [23] | 54 | 342 | 47.9 | 70.1 | 52.6 | 43.2 | 67.1 | 46.2 | 50.0 | 71.3 | 54.7 | 44.5 | 68.4 | 47.7 |

| Swin-B [61] | 107 | 496 | 46.9 | - | - | 42.3 | - | - | 48.5 | 69.8 | 53.2 | 43.4 | 66.8 | 46.9 |

| Focal-B [109] | 110 | 533 | 47.8 | - | - | 43.2 | - | - | 49.0 | 70.1 | 53.6 | 43.7 | 67.6 | 47.0 |

| UniFormer-Bh14 | 69 | 399 | 47.4 | 69.7 | 52.1 | 43.1 | 66.0 | 46.5 | 50.3 | 72.7 | 55.3 | 44.8 | 69.0 | 48.3 |

| Method | Pretrain | Backbone | UCF101 | HMDB51 |

| C3D[89] | Sports-1M | ResNet18 | 85.8 | 54.9 |

| TSN[96] | IN1K+K400 | InceptionV2 | 91.1 | - |

| I3D[11] | IN1K+K400 | InceptionV2 | 95.8 | 74.8 |

| R(2+1)D[91] | K400 | ResNet34 | 96.8 | 74.5 |

| TSM[59] | IN1K+K400 | ResNet50 | 94.5 | 70.7 |

| STM[44] | IN1K+K400 | ResNet50 | 96.2 | 72.2 |

| TEA[56] | IN1K+K400 | ResNet50 | 96.9 | 73.3 |

| CT-Net[52] | IN1K+K400 | ResNet50 | 96.2 | 73.2 |

| TDN[97] | IN1K+K400 | ResNet50 | 97.4 | 76.3 |

| VidTr[54] | IN21K+K400 | ViT-B | 96.6 | 74.4 |

| UniFormer | IN1K+K400 | UniFormer-S | 98.1 | 76.9 |

6.2 Video Classification

Settings. We evaluate our UniFormer on the popular Kinetics-400 [10], Kinetics-600 [9], UCF101 [81] and HMDB51 [93], and we verify the transfer learning performance on the temporal-related datasets Something-Something (SthSth) V1&V2 [34]. Our codes mainly rely on PySlowFast [27]. For training, we adopt the same training strategy as MViT [28] by default, but we do not apply random horizontal flip for SthSth. We utilize the AdamW [65] optimizer with a cosine learning rate schedule [66] to train our video backbones. The first 5 or 10 epochs are used for warm-up [33] to overcome early optimization difficulty. For UniFormer-S, the warm-up epoch, total epoch, stochastic depth rate and weight decay are set to 10, 110, 0.1 and 0.05 respectively for Kinetics, 5, 50, 0.3 and 0.05 respectively for SthSth, and 5, 20, 0.2 and 0.05 for UCF101 and HMDB51. For UniFormer-B, all the hyper-parameters are the same except that the stochastic depth rates are doubled. Moreover, we linearly scale the base learning rates according to the batch size, which are 1e-4 for Kinetics, 2e-4 for SthSth, and 1e-5 for UCF101 and HMDB51.

We utilize the dense sampling strategy [101] for Kinetics, UCF101 and HMDB51, and the uniform sampling strategy [96] for Something-Something. To reduce the total training cost, we inflate the 2D convolution kernels pre-trained on ImageNet for Kinetics [11]. Besides, to obtain a better FLOPs-accuracy balance, we adopt multi-clip testing for Kinetics and multi-crop testing for Something-Something. All scores are averaged for the final prediction.
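The multi-clip and multi-crop testing protocol amounts to averaging per-view scores. A minimal NumPy sketch is given below; it assumes that the averaged "scores" are softmax probabilities (averaging raw logits is another common choice), and the function names are hypothetical.

```python
import numpy as np

def softmax(logits, axis=-1):
    """Numerically stable softmax."""
    shifted = logits - logits.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)

def aggregate_views(view_logits):
    """Average class probabilities over all test views of one video.

    view_logits: (num_views, num_classes), one row per clip/crop,
    e.g. 4 rows for 1 crop x 4 clips or 3 rows for 3 crops x 1 clip.
    """
    return softmax(view_logits, axis=-1).mean(axis=0)

# Four clips of the same video, three classes.
view_logits = np.array([
    [2.0, 0.5, 0.1],
    [1.5, 1.7, 0.0],
    [2.2, 0.3, 0.4],
    [1.9, 0.8, 0.2],
])
video_probs = aggregate_views(view_logits)
prediction = int(video_probs.argmax())
```

Here the second clip alone would vote for class 1, but the averaged prediction follows the majority evidence for class 0.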

Results on Kinetics. In Table III, we compare our UniFormer with the state-of-the-art methods on Kinetics-400 and Kinetics-600. The first part shows the prior works using CNN. Compared with SlowFast [30] equipped with non-local blocks [101], our UniFormer-S16f requires fewer GFLOPs but obtains a 1.0% performance gain on both datasets (80.8% vs. 79.8% and 82.8% vs. 81.8%). Even compared with MoViNet [48], which is a strong CNN-based model obtained via extensive neural architecture search, our model achieves slightly better results (82.0% vs. 81.5%) with fewer input frames (16 frames × 4 clips vs. 120 frames). The second part lists the recent methods based on vision transformers. With only ImageNet-1K pre-training, UniFormer-B16f surpasses most existing backbones with large dataset pre-training. For example, compared with ViViT-L [1] pre-trained on JFT-300M [83] and Swin-B [62] pre-trained on ImageNet-21K, UniFormer-B32f obtains comparable performance (82.9% vs. 82.8% and 82.7%) with 16.7× and 3.3× less computation on both Kinetics-400 and Kinetics-600. These results demonstrate the effectiveness of our UniFormer for video.
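The compute ratios in the comparison with ViViT-L and Swin-B follow directly from the FLOPs column of Table III. The short check below recomputes them; the dictionary keys are labels chosen here for readability.

```python
# FLOPs (G) copied from Table III; each key names the method, pre-training
# data and testing protocol (#frame x #crop x #clip).
flops_g = {
    "ViViT-L (JFT-300M, 16x3x4)": 17352,
    "Swin-B (IN-21K, 32x3x4)": 3384,
    "UniFormer-B (IN-1K, 32x1x4)": 1036,
}

ours = flops_g["UniFormer-B (IN-1K, 32x1x4)"]
ratios = {name: round(cost / ours, 1) for name, cost in flops_g.items()}

for name, ratio in ratios.items():
    print(f"{name}: {ratio}x the FLOPs of UniFormer-B")
```

The rounded ratios are 16.7 for ViViT-L and 3.3 for Swin-B.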

Results on Something-Something. Table IV presents the results on Something-Something (SthSth) V1&V2. Since these datasets require robust temporal relation modeling, it is difficult for the CNN-based methods to capture long-term dependencies, which leads to their worse results. On the contrary, video transformers are good at processing long sequential data and demonstrate better transfer learning capabilities [122], thus they achieve higher accuracy but with large computation costs. In contrast, our UniFormer-S16f combines the advantages of both convolution and self-attention, obtaining 54.4%/65.0% on SthSth V1/V2 with only 42 GFLOPs. It also demonstrates that the small model UniFormer-S benefits from larger dataset pre-training (Kinetics-400, 53.8% vs. Kinetics-600, 54.4%), but the large model UniFormer-B does not (Kinetics-400, 59.1% vs. Kinetics-600, 58.8%). We argue that the large model converges well more easily. Besides, it is worth noting that our UniFormer pre-trained on Kinetics-600 outperforms all the current methods under the same settings. In fact, our best model achieves new state-of-the-art results: 61.0% top-1 accuracy on SthSth V1 (4.2% higher than TDN$_{EN}$ [97]) and 71.2% top-1 accuracy on SthSth V2 (1.6% higher than Swin-B [62]). Such results verify its high capability of spatiotemporal learning.

Results on UCF101 and HMDB51. We further verify the generalization ability on UCF101 and HMDB51. Since these datasets are relatively small, the performances have already saturated. As shown in Table VI, our UniFormer significantly outperforms the previous SOTA methods, revealing its strong generalization ability to transfer to small datasets.

| Method | #Params (M) | FLOPs (G) | 3× + MS schedule: $AP^b$ | $AP^b_{50}$ | $AP^b_{75}$ | $AP^m$ | $AP^m_{50}$ | $AP^m_{75}$ |

| Res50 [37] | 82 | 739 | 46.3 | 64.3 | 50.5 | 40.1 | 61.7 | 43.4 |

| DeiT [87] | 80 | 889 | 48.0 | 67.2 | 51.7 | 41.4 | 64.2 | 44.3 |

| Swin-T [61] | 86 | 745 | 50.5 | 69.3 | 54.9 | 43.7 | 66.6 | 47.1 |

| Shuffle-T [42] | 86 | 746 | 50.8 | 69.6 | 55.1 | 44.1 | 66.9 | 48.0 |

| Focal-T [109] | 87 | 770 | 51.5 | 70.6 | 55.9 | - | - | - |

| UniFormer-Sh14 | 79 | 747 | 52.1 | 71.1 | 56.6 | 45.2 | 68.3 | 48.9 |

| X101-32 [107] | 101 | 819 | 48.1 | 66.5 | 52.4 | 41.6 | 63.9 | 45.2 |

| Swin-S [61] | 107 | 838 | 51.8 | 70.4 | 56.3 | 44.7 | 67.9 | 48.5 |

| Shuffle-S [42] | 107 | 844 | 51.9 | 70.9 | 56.4 | 44.9 | 67.8 | 48.6 |

| CSWin-S [23] | 92 | 820 | 53.7 | 72.2 | 58.4 | 46.4 | 69.6 | 50.6 |

| Swin-B [61] | 145 | 972 | 51.9 | 70.9 | 57.0 | 45.3 | 68.5 | 48.9 |

| Shuffle-B [42] | 145 | 989 | 52.2 | 71.3 | 57.0 | 45.3 | 68.5 | 48.9 |

| UniFormer-Bh14 | 107 | 878 | 53.8 | 72.8 | 58.5 | 46.4 | 69.9 | 50.4 |

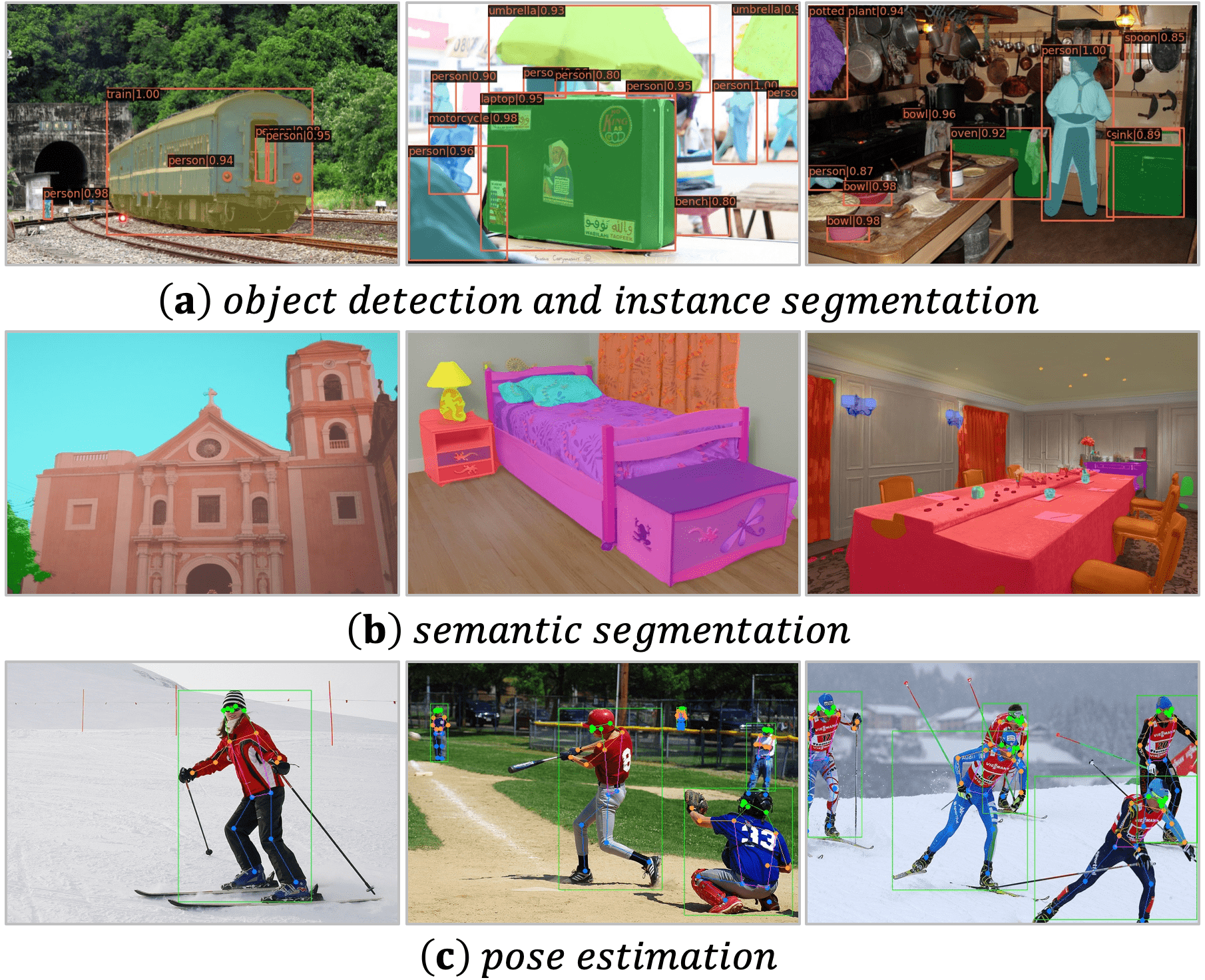

6.3 Object Detection and Instance Segmentation

Settings. We benchmark our models on object detection and instance segmentation with COCO2017 [60]. The ImageNet-1K pre-trained models are utilized as backbones and then armed with two representative frameworks: Mask R-CNN [6] and Cascade Mask R-CNN [36]. Our codes are mainly based on mmdetection [13], and the training strategies are the same as Swin Transformer [61]. We adopt two training schedules: the 1× schedule with 12 epochs and the 3× schedule with 36 epochs. For the 1× schedule, the shorter side of the image is resized to 800 while keeping the longer side no more than 1333. As for the 3× schedule, we apply the multi-scale training strategy to randomly resize the shorter side between 480 and 800. Besides, we use the AdamW optimizer with an initial learning rate of 1e-4 and a weight decay of 0.05. To regularize the training, we set the stochastic depth drop rates to 0.1/0.3 and 0.2/0.4 for our small/base models with Mask R-CNN and Cascade Mask R-CNN.
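The resizing rule for the two schedules can be sketched in plain Python. The function names are hypothetical, the rounding is one reasonable choice, and sampling the shorter side uniformly from every integer in the range is an assumption (detection toolkits often sample from a discrete list of scales instead).

```python
import random

def resize_keep_ratio(width, height, short_side=800, max_long_side=1333):
    """Scale so the shorter side reaches short_side unless the longer side
    would exceed max_long_side; the aspect ratio is preserved."""
    scale = min(short_side / min(width, height),
                max_long_side / max(width, height))
    return int(width * scale + 0.5), int(height * scale + 0.5)

def sample_short_side(rng, low=480, high=800):
    """Multi-scale training: draw a target shorter side for one image.
    Assumption: uniform over all integers in [low, high]."""
    return rng.randint(low, high)

# Single-scale rule: shorter side 800, longer side capped at 1333.
print(resize_keep_ratio(640, 480))    # shorter side reaches 800
print(resize_keep_ratio(2000, 500))   # longer side hits the 1333 cap first

# Multi-scale rule: the shorter side varies per image.
rng = random.Random(0)
target = sample_short_side(rng)
print(resize_keep_ratio(640, 480, short_side=target))
```

For very elongated images the cap on the longer side is the binding constraint, so the shorter side ends up below its nominal target.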

Results. Table V reports box mAP ($AP^b$) and mask mAP ($AP^m$) of the Mask R-CNN framework. It shows that our UniFormer variants outperform all the CNN and Transformer backbones. To reduce the training cost for object detection, we utilize a hybrid UniFormer style with a window size of 14 in Stage3 (denoted by h14). Specifically, with the 1× schedule, our UniFormer brings 7.0-7.6 points of box mAP and 6.7-7.2 points of mask mAP against ResNet [37] at comparable settings. Compared with the popular Swin Transformer [61], our UniFormer achieves 2.6-3.4 points of box mAP and 2.2-2.5 points of mask mAP improvement. Moreover, with the 3× schedule and multi-scale training, our models still consistently surpass CNN and Transformer counterparts. For example, our UniFormer-B outperforms the powerful CSwin-S [23] by +0.3 box mAP and +0.3 mask mAP, and is even better than larger backbones such as Swin-B and Focal-B [109]. Table VII reports the results with the Cascade Mask R-CNN framework. The consistent improvement demonstrates our stronger context modeling capacity.

| Method | Semantic FPN 80K | ||

| #Param(M) | FLOPs(G) | mIoU(%) | |

| Res50 [37] | 29 | 183 | 36.7 |

| PVT-S [99] | 28 | 161 | 39.8 |

| TwinsP-S [16] | 28 | 162 | 44.3 |

| Twins-S [16] | 28 | 144 | 43.2 |

| Swin-T [61] | 32 | 182 | 41.5 |

| UniFormer-Sh32 | 25 | 199 | 46.2 |

| UniFormer-S | 25 | 247 | 46.6 |

| Res101 [37] | 48 | 260 | 38.8 |

| PVT-M [99] | 48 | 219 | 41.6 |

| PVT-L [99] | 65 | 283 | 42.1 |

| TwinsP-B [16] | 48 | 220 | 44.9 |

| TwinsP-L [16] | 65 | 283 | 46.4 |

| Twins-B [16] | 60 | 261 | 45.3 |

| Swin-S [61] | 53 | 274 | 45.2 |

| Twins-L [16] | 104 | 404 | 46.7 |

| Swin-B [61] | 91 | 422 | 46.0 |

| UniFormer-Bh32 | 54 | 350 | 47.7 |

| UniFormer-B | 54 | 471 | 48.0 |

| Method | Upernet 160K | |||

| #Param.(M) | FLOPs(G) | mIoU(%) | MS mIoU(%) | |

| TwinsP-S [16] | 55 | 919 | 46.2 | 47.5 |

| Twins-S [16] | 54 | 901 | 46.2 | 47.1 |

| Swin-T [61] | 60 | 945 | 44.5 | 45.8 |

| Focal-T [109] | 62 | 998 | 45.8 | 47.0 |

| Shuffle-T [42] | 60 | 949 | 46.6 | 47.8 |

| UniFormer-Sh32 | 52 | 955 | 47.0 | 48.5 |

| UniFormer-S | 52 | 1008 | 47.6 | 48.5 |

| Res101 [37] | 86 | 1029 | - | 44.9 |

| TwinsP-B [16] | 74 | 977 | 47.1 | 48.4 |

| Twins-B [16] | 89 | 1020 | 47.7 | 48.9 |

| Swin-S [61] | 81 | 1038 | 47.6 | 49.5 |

| Focal-S [109] | 85 | 1130 | 48.0 | 50.0 |

| Shuffle-S [42] | 81 | 1044 | 48.4 | 49.6 |

| Swin-B [61] | 121 | 1188 | 48.1 | 49.7 |

| Focal-B [109] | 126 | 1354 | 49.0 | 50.5 |

| Shuffle-B [42] | 121 | 1196 | 49.0 | 50.5 |

| UniFormer-Bh32 | 80 | 1106 | 49.5 | 50.7 |

| UniFormer-B | 80 | 1227 | 50.0 | 50.8 |

| Arch. | Method | Input Size | #Param (M) | FLOPs (G) | $AP$ | $AP^{50}$ | $AP^{75}$ | $AP^{M}$ | $AP^{L}$ | $AR$ |

| CNN | SimpleBaseline-R101 [103] | 256×192 | 53.0 | 12.4 | 71.4 | 89.3 | 79.3 | 68.1 | 78.1 | 77.1 |

| CNN | SimpleBaseline-R152 [103] | 256×192 | 68.6 | 15.7 | 72.0 | 89.3 | 79.8 | 68.7 | 78.9 | 77.8 |

| CNN | HRNet-W32 [95] | 256×192 | 28.5 | 7.1 | 74.4 | 90.5 | 81.9 | 70.8 | 81.0 | 78.9 |

| CNN | HRNet-W48 [95] | 256×192 | 63.6 | 14.6 | 75.1 | 90.6 | 82.2 | 71.5 | 81.8 | 80.4 |

| CNN+Trans | TransPose-H-A [110] | 256×192 | 17.5 | 21.8 | 75.8 | - | - | - | - | 80.8 |

| CNN+Trans | TokenPose-L/D [55] | 256×192 | 27.5 | 11.0 | 75.8 | 90.3 | 82.5 | 72.3 | 82.7 | 80.9 |

| CNN+Trans | HRFormer-S [114] | 256×192 | 7.8 | 3.3 | 74.0 | 90.2 | 81.2 | 70.4 | 80.7 | 79.4 |

| CNN+Trans | HRFormer-B [114] | 256×192 | 43.2 | 14.1 | 75.6 | 90.8 | 82.8 | 71.7 | 82.6 | 80.8 |

| CNN+Trans | UniFormer-S | 256×192 | 25.2 | 4.7 | 74.0 | 90.3 | 82.2 | 66.8 | 76.7 | 79.5 |

| CNN+Trans | UniFormer-B | 256×192 | 53.5 | 9.2 | 75.0 | 90.6 | 83.0 | 67.8 | 77.7 | 80.4 |

| CNN | SimpleBaseline-R152 [103] | 384×288 | 68.6 | 35.6 | 74.3 | 89.6 | 81.1 | 70.5 | 79.7 | 79.7 |

| CNN | HRNet-W32 [95] | 384×288 | 28.5 | 16.0 | 75.8 | 90.6 | 82.7 | 71.9 | 82.8 | 81.0 |

| CNN | HRNet-W48 [95] | 384×288 | 63.6 | 32.9 | 76.3 | 90.8 | 82.9 | 72.3 | 83.4 | 81.2 |

| CNN+Trans | PRTR [51] | 384×288 | 57.2 | 21.6 | 73.1 | 89.4 | 79.8 | 68.8 | 80.4 | 79.8 |

| CNN+Trans | PRTR [51] | 512×384 | 57.2 | 37.8 | 73.3 | 89.2 | 79.9 | 69.0 | 80.9 | 80.2 |

| CNN+Trans | HRFormer-S [114] | 384×288 | 7.8 | 7.2 | 75.6 | 90.3 | 82.2 | 71.6 | 82.5 | 80.7 |

| CNN+Trans | HRFormer-B [114] | 384×288 | 43.2 | 30.7 | 77.2 | 91.0 | 83.6 | 73.2 | 84.2 | 82.0 |

| CNN+Trans | UniFormer-S | 384×288 | 25.2 | 11.1 | 75.9 | 90.6 | 83.4 | 68.6 | 79.0 | 81.4 |

| CNN+Trans | UniFormer-B | 384×288 | 53.5 | 22.1 | 76.7 | 90.8 | 84.0 | 69.3 | 79.7 | 81.9 |

| CNN+Trans | UniFormer-S | 448×320 | 25.2 | 14.8 | 76.2 | 90.6 | 83.2 | 68.6 | 79.4 | 81.4 |

| CNN+Trans | UniFormer-B | 448×320 | 53.5 | 29.6 | 77.4 | 91.1 | 84.4 | 70.2 | 80.6 | 82.5 |

6.4 Semantic Segmentation

Settings. Our semantic segmentation experiments are conducted on the ADE20K [121] dataset and our codes are based on mmseg [19]. We adopt the popular Semantic FPN [47] and UperNet [104] as the basic frameworks. For a fair comparison, we follow the same setting as PVT [99] to train Semantic FPN for 80k iterations with a cosine learning rate schedule [66]. As for UperNet, we apply the settings of Swin Transformer [61] with 160k-iteration training. The stochastic depth drop rates are set to 0.1/0.2 and 0.25/0.4 for small/base variants with Semantic FPN and UperNet respectively.

Results. Table VIII and Table IX report the results of different frameworks. It shows that with the Semantic FPN framework, our UniFormer-Sh32/Bh32 achieve +4.7/+2.5 higher mIoU than the Swin Transformer [61] with similar model sizes. When equipped with the UperNet framework, they achieve +2.5/+1.9 mIoU and +2.7/+1.2 MS mIoU improvement. Furthermore, when utilizing the global MHRA, the results are consistently improved but with a larger computation cost. More results can be found in Table XXII.

| Method | Size | #Param (M) | FLOPs (G) | Throughput (images/s) | ImageNet Top-1 |

| UniFormer-XXS | 128 | 10.2 | 0.43 | 12886 | 76.8 |

| MobileViT-XXS[69] | 256 | 1.3 | 0.43 | 9742 | 68.9 |

| EfficientNet-B0[85] | 224 | 5.3 | 0.42 | 9501 | 77.1 |

| UniFormer-XXS | 160 | 10.2 | 0.67 | 9382 | 79.1 |

| PVTv2-B0[100] | 224 | 3.7 | 0.57 | 8737 | 70.7 |

| EfficientNet-B1[85] | 240 | 7.8 | 0.74 | 5820 | 79.1 |

| UniFormer-XXS | 192 | 10.2 | 0.96 | 5766 | 79.9 |

| MobileFormer[14] | 224 | 14.0 | 0.51 | 4953 | 79.3 |

| MobileViT-XS[69] | 256 | 2.3 | 1.1 | 4822 | 74.6 |

| PVTv2-B1[100] | 224 | 14.0 | 2.1 | 4812 | 78.7 |

| UniFormer-XS | 192 | 16.5 | 1.4 | 4492 | 81.5 |

| UniFormer-XXS | 224 | 10.2 | 1.3 | 4446 | 80.6 |

| EfficientNet-B2[85] | 260 | 9.1 | 1.1 | 4247 | 80.1 |

| UniFormer-XS | 224 | 16.5 | 2.0 | 3506 | 82.0 |

| MobileViT-S[69] | 256 | 5.6 | 2.0 | 3360 | 78.3 |

| EfficientNet-B3[85] | 300 | 12.2 | 1.9 | 2568 | 81.6 |

6.5 Pose Estimation

Settings. We evaluate the performance of UniFormer on the COCO2017[60] human pose estimation benchmark. For a fair comparison with previous SOTA methods, we employ a single Top-Down head after our backbones. We follow the same training and evaluation setting of mmpose [18] as HRFormer [114]. In addition, the batch size and stochastic depth drop rates are set to 1024/256 and 0.2/0.5 for small/base variants during training.

Results. Table X reports the results for different input resolutions on the COCO validation set. Compared with previous SOTA CNN models, our UniFormer-B surpasses HRNet-W48 [95] by 0.4% AP with fewer parameters (53.5M vs. 63.6M) and FLOPs (22.1G vs. 32.9G). Moreover, our UniFormer-B outperforms the current best approach HRFormer [114] by 0.2% AP with fewer FLOPs (29.6G vs. 30.7G). It is worth noting that HRFormer [114], PRTR [51], TokenPose [55] and TransPose [110] are specifically designed for the pose estimation task. In contrast, our UniFormer outperforms all of them as a simple yet effective backbone.

| Method | #frame | Size | #Param (M) | FLOPs (G) | Throughput (videos/s) | K400 Top-1 |

| UniFormer-XXS | 4 | 128 | 10.4 | 1.0 | 1878 | 63.2 |

| UniFormer-XXS | 4 | 160 | 10.4 | 1.6 | 1384 | 65.8 |

| UniFormer-XXS | 8 | 128 | 10.4 | 2.0 | 1125 | 68.3 |

| UniFormer-XXS | 8 | 160 | 10.4 | 3.3 | 679 | 71.4 |

| UniFormer-XXS | 16 | 128 | 10.4 | 4.2 | 591 | 73.3 |

| UniFormer-XXS | 16 | 160 | 10.4 | 6.9 | 367 | 75.1 |

| MoViNet-A0[48] | 50 | 172 | 3.1 | 2.7 | 315 | 65.8 |

| MoViNet-A1[48] | 50 | 172 | 4.6 | 6.0 | 167 | 72.7 |

| UniFormer-XXS | 32 | 160 | 10.4 | 15.4 | 160 | 77.9 |

| MoViNet-A2[48] | 50 | 224 | 4.8 | 10.3 | 103 | 75.0 |

| UniFormer-XS | 32 | 192 | 16.7 | 34.2 | 75 | 78.6 |

| X3D-XS⋆[48] | 4 | 182 | 3.8 | 27.4 | 39 | 69.1 |

| MoViNet-A3[48] | 120 | 256 | 5.3 | 56.9 | 30 | 78.2 |

| X3D-S⋆[48] | 13 | 182 | 3.8 | 88.7 | 18 | 73.3 |

6.6 Light-Weight UniFormer

Settings. For the light-weight UniFormer, we follow most of the previous settings. The difference is that we train UniFormer-XXS and UniFormer-XS for 600 epochs on ImageNet, since the lightweight models are difficult to converge [35, 14].

Results of classification. Table XI presents the results on ImageNet. We roughly divide the models according to the FLOPs: below 1G and between 1G and 2G. It clearly reveals that our efficient UniFormer achieves the best accuracy-throughput trade-off under similar FLOPs. For example, compared with the strong CNN method EfficientNet-B3[85], our UniFormer-XS192 obtains 1.7× higher throughput with similar performance. Compared with the SOTA MobileFormer[14] that combines CNN and ViT, our UniFormer-XXS192 obtains 0.6% higher accuracy with 16% higher throughput. We further fine-tune the above models with different frames on Kinetics-400. Results in Table XII show that our efficient backbone surpasses the SOTA lightweight video backbones by a large margin. Compared with MoViNet-A0[48], our UniFormer-XXS160×16f achieves 9.3% higher performance with 16% higher throughput. Compared with X3D-S[29], our UniFormer-XS192×32f runs 4.2× faster with 5.4% higher accuracy. Note that we do not apply complicated designs as in the recent lightweight methods[14, 69, 48]. Our concise extension already shows powerful performance, which further demonstrates the great potential of UniFormer.

| Method | #Params (M) | Mask R-CNN 1× + MS schedule: $AP^b$ | $AP^b_{50}$ | $AP^b_{75}$ | $AP^m$ | $AP^m_{50}$ | $AP^m_{75}$ |

| PVTv2-B0[100] | 23.5 | 38.2 | 60.5 | 40.7 | 36.2 | 57.8 | 38.6 |

| ResNet18[37] | 31.2 | 34.0 | 54.0 | 36.7 | 31.2 | 51.0 | 32.7 |

| PVTv1-Tiny[99] | 32.9 | 36.7 | 59.2 | 39.3 | 35.1 | 56.7 | 37.3 |

| PVTv2-B1[100] | 33.7 | 41.8 | 64.3 | 45.9 | 38.8 | 61.2 | 41.6 |

| UniFormer-XXS | 29.4 | 42.8 | 65.0 | 47.0 | 39.2 | 61.7 | 42.0 |

| UniFormer-XS | 35.6 | 44.6 | 67.4 | 48.8 | 40.9 | 64.2 | 44.1 |

| Method | Semantic FPN 80K | ||

| #Param(M) | FLOPs(G) | mIoU(%) | |

| PVTv2-B0[100] | 7.6 | 25.0 | 37.2 |

| ResNet18[37] | 15.5 | 32.2 | 32.9 |

| PVTv1-Tiny[99] | 17.0 | 33.2 | 35.7 |

| PVTv2-B1[100] | 17.8 | 34.2 | 42.5 |

| UniFormer-XXS | 13.5 | 29.2 | 42.3 |

| UniFormer-XS | 19.7 | 32.9 | 44.4 |

| FFN | DPE | MHRA | ImageNet | K400 | |||

| Size | Type | #Param | Top-1 | GFLOPs | Top-1 | ||

| ✔ | ✔ | 5 | 21.5 | 82.9 | 41.8 | 79.3 | |

| ✗ | ✔ | 5 | 21.3 | 82.6 | 41.0 | 78.6 | |

| ✔ | ✗ | 5 | 21.5 | 82.4 | 41.4 | 77.6 | |

| ✔ | ✔ | 3 | 21.5 | 82.8 | 41.0 | 79.0 | |

| ✔ | ✔ | 7 | 21.6 | 82.9 | 43.5 | 79.1 | |

| ✔ | ✔ | 9 | 21.6 | 82.8 | 46.6 | 78.9 | |

| ✔ | ✔ | 5 | 23.3 | 81.9 | 31.6 | 77.2 | |

| ✔ | ✔ | 5 | 22.2 | 82.5 | 31.6 | 78.4 | |

| ✔ | ✔ | 5 | 21.6 | 82.7 | 39.0 | 79.0 | |

| ✔ | ✔ | 5 | 20.1 | 82.1 | 72.0 | 75.3 | |

| Type | Joint | GFLOPs | Pretrain | SSV1 | ||

| Dataset | Top-1 | Top-1 | Top-5 | |||

| ✔ | 26.1 | IN-1K | 81.0 | 49.2 | 77.4 | |

| K400 | 77.4 | 49.2 | 77.6 | |||

| ✗ | 36.8 | IN-1K | 82.9 | 51.9 | 80.1 | |

| K400 | 80.1 | 51.8 | 80.1 | |||

| IN-1K | 82.9 | 52.0 | 80.2 | |||

| ✔ | 41.8 | K400 | 80.8 | 53.8 | 81.9 | |

| Dataset | Pretrain | #frame | 2D | 3D | ||

| #crop#clip | Top-1 | Top-5 | Top-1 | Top-5 | ||

| K400 | IN-1K | 811 | 74.7 | 90.8 | 74.9 | 90.7 |

| 814 | 78.5 | 93.2 | 78.4 | 93.3 | ||

| SSV1 | IN-1K | 811 | 47.9 | 75.8 | 48.3 | 76.1 |

| 831 | 51.4 | 79.6 | 51.3 | 79.7 | ||

| K400 | 811 | 47.9 | 75.6 | 48.6 | 75.6 | |

| 831 | 51.3 | 79.4 | 51.5 | 79.5 | ||

Results of dense prediction. We also verify the efficient UniFormer for COCO object detection and instance segmentation in Table XIII, and ADE20K semantic segmentation in Table XIV. Our models clearly outperform ResNet[37] and PVTv2[100] on these dense prediction tasks. For example, our UniFormer-XS brings 2.7 points of box mAP and 2.1 points of mask mAP against PVTv2-B1 on COCO, and achieves +1.9 mIoU improvement on ADE20K.

6.7 Ablation Studies

To inspect the effectiveness of UniFormer as the backbone, we ablate each key structure design and evaluate the performance on image and video classification datasets. Furthermore, for video backbones, we explore the vital designs of pre-training, training and testing. Finally, we demonstrate the efficiency of our adaption on downstream tasks, and the effectiveness of H-UniFormer.

6.7.1 Model designs for image and video backbones

We conduct ablation studies of the vital components in Table XV.

FFN. As mentioned in Section 3.2, our UniFormer blocks in the shallow layers are instantiated as a transformer-style MobileNet block [90] with an extra FFN as in ViT [24]. Hence, we first investigate its effectiveness by replacing our UniFormer blocks in the shallow layers with MobileNet blocks [77]. BN and GELU are added as in the original paper, but the expansion ratios are set to 3 to keep a similar number of parameters. Note that the dynamic position embedding is kept for a fair comparison. As expected, our UniFormer outperforms this MobileNet variant on both ImageNet (+0.3%) and Kinetics-400 (+0.7%). This shows that the FFN in our model further mixes the token context at each position, which boosts classification accuracy.

DPE. With dynamic position embedding, our UniFormer improves the top-1 accuracy by +0.5% on ImageNet and by a larger +1.7% on Kinetics-400. This shows that, by encoding position information, our DPE maintains spatial and temporal order, thus contributing to better representation learning, especially for video.

MHRA. In our local token affinity (Eq. 6), we aggregate the context from a small local tube. Hence, we investigate the influence of this tube by changing its size from 3 to 9. Results show that our network is robust to the tube size on both ImageNet and Kinetics-400. We simply choose a kernel size of 5 for better accuracy. More importantly, we investigate the configuration of local and global UniFormer blocks stage by stage, in order to verify the effectiveness of our network. As shown in rows 1 and 7-10 of Table XV, when we only use local MHRA, the computation cost is light. However, the accuracy drops substantially (-1.0% on ImageNet and -2.1% on Kinetics-400) due to the lack of capacity for learning long-term dependency. When we gradually replace local MHRA with global MHRA, the accuracy improves as expected. Unfortunately, when all the layers apply global MHRA, the accuracy drops dramatically under a heavy computation load, i.e., -4.0% on Kinetics-400. This is mainly because, without local MHRA, the network lacks the ability to extract detailed representations, leading to severe overfitting with redundant attention for sequential video data.

| Model | #frame×#crop×#clip | FLOPs (G) | Sampling Stride | K400 Top-1 | K400 Top-5 | K600 Top-1 | K600 Top-5 |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Small | 16×1×1 | 41.8 | 4 | 76.2 | 92.2 | 79.0 | 93.6 |
| Small | 16×1×1 | 41.8 | 8 | 78.4 | 92.9 | 80.8 | 94.7 |
| Small | 16×1×4 | 167.2 | 4 | 80.8 | 94.7 | 82.8 | 95.8 |
| Small | 16×1×4 | 167.2 | 8 | 80.8 | 94.4 | 82.7 | 95.7 |
| Base | 16×1×1 | 96.7 | 4 | 78.1 | 92.8 | 80.3 | 94.5 |
| Base | 16×1×1 | 96.7 | 8 | 79.3 | 93.4 | 81.7 | 95.0 |
| Base | 16×1×4 | 386.8 | 4 | 82.0 | 95.1 | 84.0 | 96.4 |
| Base | 16×1×4 | 386.8 | 8 | 81.7 | 94.8 | 83.4 | 96.0 |
| Small | 32×1×1 | 109.6 | 2 | 77.3 | 92.4 | - | - |
| Small | 32×1×1 | 109.6 | 4 | 79.8 | 93.4 | - | - |
| Small | 32×1×4 | 438.4 | 2 | 81.2 | 94.7 | - | - |
| Small | 32×1×4 | 438.4 | 4 | 82.0 | 95.1 | - | - |

6.7.2 Pre-training, training and testing for video backbone

In this section, we explore more designs for our video backbones. Firstly, to load 2D pre-trained backbones, it is essential to determine how to inherit self-attention and inflate convolution filters. Hence, we compare the transfer learning performance of different MHRA configurations and inflating methods of filters. Besides, since we use dense sampling [101] for Kinetics, we should confirm the appropriate sampling stride. Furthermore, as we utilize Kinetics pre-trained models for SthSth, it is interesting to explore the effect of sampling methods and dataset scales for pre-trained models. Finally, we ablate the testing strategies for different datasets.

Transfer learning. Table XVI presents the results of transfer learning. All models share the same number of stages, but the stage types are different. For Kinetics-400, the joint version is clearly more powerful than the separate one, verifying that joint spatiotemporal attention can learn more discriminative video representations. As for SthSth V1, when the pre-training is extended from ImageNet to Kinetics-400, the performance of our joint version improves: compared with pre-training on ImageNet, pre-training on Kinetics-400 further improves the top-1 accuracy by +1.8%. However, such an improvement is not observed in the pure local MHRA structure or in UniFormer with divided spatiotemporal attention. This demonstrates that the joint learning manner is preferable for transfer learning, so we adopt it by default.

| Model | Train #frame | Pre-train #frame×#stride | 1 crop×1 clip Top-1 | 1 crop×1 clip Top-5 | 3 crops×1 clip Top-1 | 3 crops×1 clip Top-5 |
| --- | --- | --- | --- | --- | --- | --- |
| Small | 16 | 16×4 | 53.8 | 81.9 | 57.2 | 84.9 |
| Small | 16 | 16×8 | 53.7 | 81.3 | 57.3 | 84.6 |
| Base | 16 | 16×4 | 55.4 | 82.9 | 59.1 | 86.2 |
| Base | 16 | 16×8 | 55.5 | 83.1 | 58.8 | 86.2 |
| Small | 32 | 16×4 | 55.8 | 83.6 | 58.8 | 86.4 |
| Small | 32 | 32×2 | 55.6 | 83.1 | 58.6 | 85.6 |
| Small | 32 | 32×4 | 55.9 | 82.9 | 58.9 | 86.0 |

Inflating methods. As indicated in I3D [11], we can inflate the 2D convolutional filters for easier optimization. Here we consider whether or not to inflate the filters. Note that the first convolutional filter in the patch stem is always inflated for temporal downsampling. As shown in Table XVII, inflating the filters to 3D achieves similar results on Kinetics-400, but obtains performance improvement on SthSth V1. We argue that Kinetics-400 is a scene-related dataset, thus 2D convolution is enough to recognize the action. In contrast, SthSth V1 is a temporal-related dataset, which requires powerful spatiotemporal modeling. Hence, we inflate all the convolutional filters to 3D for better generality by default.
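As a minimal sketch of the inflation step, the snippet below follows the I3D recipe of repeating a 2D kernel along a new temporal axis and rescaling by 1/T, so that a temporally constant clip yields the same response as the original 2D filter. The function names and the nested-list kernel representation are illustrative assumptions, and this section does not state that UniFormer uses exactly this rescaling.

```python
import math


def inflate_kernel(kernel_2d, t):
    """Repeat a 2D kernel t times along time and scale by 1/t (I3D-style)."""
    if t < 1:
        raise ValueError("temporal size must be >= 1")
    return [[[w / t for w in row] for row in kernel_2d] for _ in range(t)]


def response_2d(kernel_2d, patch_2d):
    """Dot product between a 2D kernel and an equally sized image patch."""
    return sum(k * p
               for k_row, p_row in zip(kernel_2d, patch_2d)
               for k, p in zip(k_row, p_row))


def response_3d(kernel_3d, clip):
    """Dot product between a 3D kernel and a clip (list of 2D patches)."""
    return sum(response_2d(k_frame, p_frame)
               for k_frame, p_frame in zip(kernel_3d, clip))


# Hypothetical 2x2 kernel and patch, used only for illustration.
kernel = [[1.0, -2.0], [0.5, 3.0]]
patch = [[2.0, 1.0], [4.0, -1.0]]

kernel_3d = inflate_kernel(kernel, t=3)
static_clip = [patch] * 3  # the same frame repeated three times

# On a static clip the inflated filter reproduces the 2D response.
assert math.isclose(response_3d(kernel_3d, static_clip),
                    response_2d(kernel, patch))
```

Because the inflated filter starts from the same response as its 2D counterpart on static content, fine-tuning only has to learn the temporal variation, which is the motivation for inflation given in I3D [11].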

Sampling stride. For the dense sampling strategy, the basic hyperparameter is the sampling stride of frames. Intuitively, a larger sampling stride covers a longer frame range, which is essential for better video understanding. In Table XVIII, we show more results on Kinetics under different sampling strides. As expected, a larger sampling stride (i.e., sparser sampling) often achieves higher single-clip results. However, when testing with multiple clips, sampling with a frame stride of 4 performs as well as or better than the alternative stride in every setting.
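To make the relation between stride and temporal coverage concrete, the following sketch computes the frame indices of one densely sampled clip and the number of raw frames it spans. The helper names and the fixed start index are assumptions for illustration; in practice the start position is typically chosen at random during training.

```python
def dense_sample(start, num_frames, stride):
    """Frame indices of one clip: num_frames frames, `stride` apart."""
    if num_frames < 1 or stride < 1:
        raise ValueError("num_frames and stride must be >= 1")
    return [start + i * stride for i in range(num_frames)]


def covered_span(num_frames, stride):
    """Number of raw video frames spanned by one clip."""
    return (num_frames - 1) * stride + 1


clip_stride_4 = dense_sample(start=0, num_frames=16, stride=4)
clip_stride_8 = dense_sample(start=0, num_frames=16, stride=8)

print(clip_stride_4[-1], covered_span(16, 4))  # 60 61
print(clip_stride_8[-1], covered_span(16, 8))  # 120 121
```

With 16 frames, doubling the stride from 4 to 8 roughly doubles the covered range, which is consistent with the higher single-clip accuracy of the sparser setting.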

| Type | FLOPs (G) | 1× + MS | | | | 3× + MS | | | |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| W-14 | 250 | 45.0 | 67.8 | 40.8 | 64.7 | 47.5 | 69.8 | 43.0 | 66.7 |
| H-14 | 269 | 45.4 | 68.2 | 41.4 | 64.9 | 48.2 | 70.4 | 43.4 | 67.1 |
| G | 326 | 45.8 | 68.7 | 41.5 | 50.5 | 48.1 | 70.1 | 43.4 | 67.1 |

| Model | Type | Semantic FPN GFLOPs | Semantic FPN mIoU(%) | UperNet GFLOPs | UperNet mIoU(%) (MS) |
| --- | --- | --- | --- | --- | --- |
| Small | W-32 | 183 | 45.2 | 939 | 46.6 (48.4) |
| Small | H-32 | 199 | 46.2 | 955 | 47.0 (48.5) |
| Small | G | 247 | 46.6 | 1004 | 47.6 (48.5) |
| Base | W-32 | 310 | 47.2 | 1066 | 49.1 (50.6) |
| Base | H-32 | 350 | 47.7 | 1106 | 49.5 (50.7) |
| Base | G | 471 | 48.0 | 1227 | 50.0 (50.8) |

| Type | Input Size | FLOPs (G) | | | | | | |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| W-14 | 384×288 | 12.3 | 76.1 | 90.8 | 83.2 | 68.9 | 79.1 | 81.1 |
| H-14 | 384×288 | 12.0 | 75.9 | 90.7 | 83.2 | 68.6 | 78.9 | 81.0 |
| G | 384×288 | 11.1 | 75.9 | 90.6 | 83.4 | 68.6 | 79.0 | 81.4 |

Sampling methods of Kinetics pre-trained model. For SthSth, we uniformly sample frames as suggested in [52]. Since we load Kinetics pre-trained models for fast convergence, it is necessary to find out whether pre-trained models that cover more frames help fine-tuning. Table XIX shows that different pre-trained models achieve similar fine-tuning performance. We apply 16×4 pre-training for better generalization.
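For contrast with dense sampling, a segment-based uniform sampler can be sketched as follows. It splits the video into equal segments and takes the center frame of each one. This is a common deterministic variant and an assumption here: the exact procedure of [52], such as a random offset inside each segment during training, is not reproduced.

```python
def uniform_sample(total_frames, num_frames):
    """Pick the center frame of each of num_frames equal-length segments."""
    if total_frames < 1 or num_frames < 1:
        raise ValueError("total_frames and num_frames must be >= 1")
    segment = total_frames / num_frames
    return [min(total_frames - 1, int(segment * (i + 0.5)))
            for i in range(num_frames)]


# The sampled frames always span the whole video, whatever its length.
short_video = uniform_sample(total_frames=32, num_frames=8)
long_video = uniform_sample(total_frames=100, num_frames=8)
```

Unlike dense sampling, the spacing between sampled frames grows with the video length, so a single clip always covers the full action.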

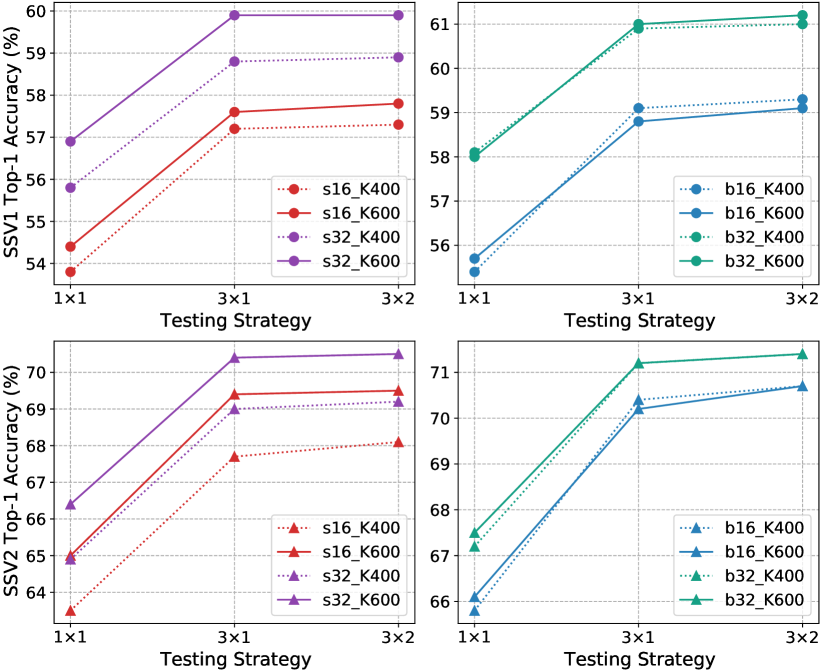

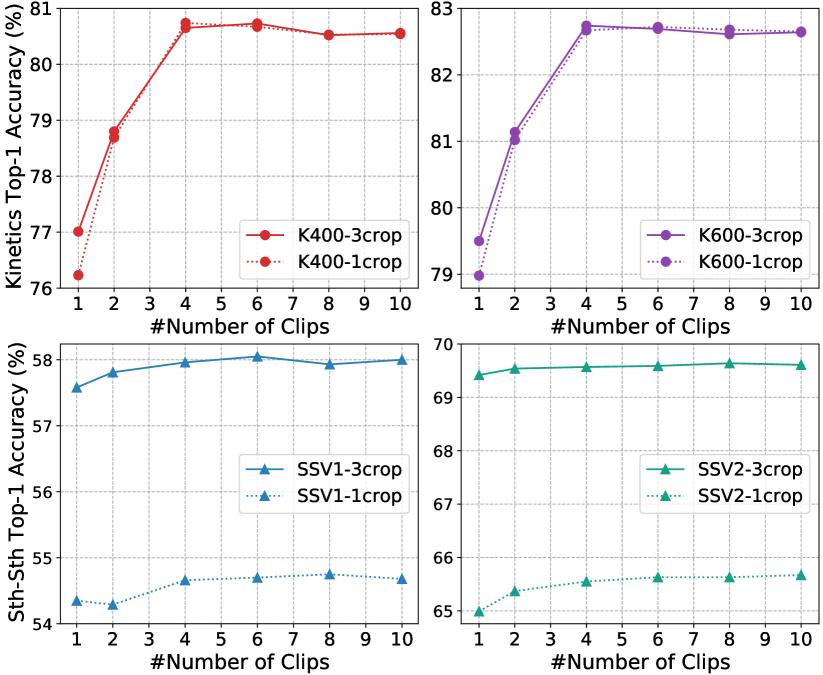

Pre-trained dataset scales. In Figure 8, we show more results on SthSth with Kinetics-400/600 pre-training. For UniFormer-S, Kinetics-600 pre-training consistently performs better than Kinetics-400 pre-training, especially on the larger benchmark SthSth V2. However, the two achieve comparable results for UniFormer-B. These results indicate that small models are harder to converge and benefit from pre-training on larger datasets, whereas big models do not.

Testing strategies. We evaluate our network with various numbers of clips and crops for the validation videos on different datasets. As shown in Figure 9, since Kinetics is a scene-related dataset trained with dense sampling, multi-clip testing is preferable because it covers more frames. In contrast, Something-Something is a temporal-related dataset trained with uniform sampling, so multi-crop testing is better for capturing the discriminative motion.
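Multi-view testing can be sketched as averaging the per-class scores of all clips and crops, with a cost that grows linearly in the number of views. Averaging scores is the common practice assumed here, and the function names and example scores are hypothetical. The cost check uses the UniFormer-S 16-frame figures reported in Table XVIII.

```python
import math


def average_views(view_scores):
    """Average per-class scores over all test views (clips x crops)."""
    if not view_scores:
        raise ValueError("need at least one view")
    num_views = len(view_scores)
    num_classes = len(view_scores[0])
    return [sum(view[c] for view in view_scores) / num_views
            for c in range(num_classes)]


def total_cost_gflops(per_view_gflops, num_crops, num_clips):
    """Inference cost when every view is a separate forward pass."""
    return per_view_gflops * num_crops * num_clips


# Four clips of one video, two classes (hypothetical scores).
views = [[0.6, 0.4], [0.3, 0.7], [0.2, 0.8], [0.5, 0.5]]
fused = average_views(views)
prediction = max(range(len(fused)), key=fused.__getitem__)

# 41.8 GFLOPs per view with 1 crop and 4 clips gives the reported 167.2.
assert math.isclose(total_cost_gflops(41.8, num_crops=1, num_clips=4), 167.2)
```

The first clip alone would predict class 0, while the fused scores favor class 1, which illustrates how extra views can change the decision at a proportional increase in compute.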

6.7.3 Adaption designs for downstream tasks

We verify the effectiveness of our adaption for dense prediction tasks in Table XX, Table XXI and Table XXII. ‘W’, ‘H’ and ‘G’ refer to the window, hybrid and global UniFormer styles in Stage3, respectively. Note that the pre-trained global UniFormer block can be seen as a window UniFormer block with a large window size, thus the minimal window size in our experiments is 224/16=14.

Table XX shows the results on object detection. Though the hybrid style performs worse than the global style with the 1× schedule, it achieves comparable results with the 3× schedule, which indicates that training for more epochs can narrow the performance gap. We further conduct experiments on semantic segmentation with different model variants in Table XXI. As expected, large window sizes and global UniFormer blocks contribute to better performance, especially for big models. Moreover, when testing with multi-scale inputs, the hybrid style with a window size of 32 obtains results similar to the global style. As for human pose estimation (Table XXII), due to the small input resolution, i.e., 384×288, the window style requires more computation because of zero padding. We simply apply global UniFormer blocks for a better computation-accuracy balance.
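The padding overhead for pose estimation can be checked with a short calculation. The sketch assumes that Stage3 operates at 1/16 of the input resolution (so a 224×224 image yields a 14×14 token grid) and that the token grid is zero-padded up to a multiple of the window size. Both the stride default and the helper names are assumptions for illustration.

```python
import math


def stage_grid(height, width, stride=16):
    """Token grid of a stage that downsamples the input by `stride`."""
    return height // stride, width // stride


def padded_grid(grid_h, grid_w, window):
    """Grid size after zero-padding each side to a multiple of `window`."""
    return (math.ceil(grid_h / window) * window,
            math.ceil(grid_w / window) * window)


grid = stage_grid(384, 288)                # (24, 18)
padded = padded_grid(*grid, window=14)     # (28, 28)

global_tokens = grid[0] * grid[1]          # 432 tokens without padding
window_tokens = padded[0] * padded[1]      # 784 tokens after padding
```

Under these assumptions the window style processes 784 token positions instead of 432, so a large share of its computation is spent on padding, in line with the higher FLOPs of the window variant in the pose estimation results.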

| Score Token | Running Mean | Shrinking Ratio | ImageNet GFLOPs | ImageNet Throughput | ImageNet Top-1 |
| --- | --- | --- | --- | --- | --- |
| ✔ | ✔ | 0.5 | 0.67 | 9382 | 79.1 |
| ✗ | ✔ | 0.5 | 0.67 | 9395 (+0.1%) | 78.7 (-0.4) |
| ✔ | ✗ | 0.5 | 0.67 | 9283 (+0.0%) | 78.8 (-0.3) |
| ✔ | ✔ | 1.0 | 0.91 | 8342 (-11.1%) | 79.9 (+0.8) |
| ✔ | ✔ | 0.8 | 0.82 | 8452 (-9.9%) | 79.8 (+0.7) |
| ✔ | ✔ | 0.6 | 0.72 | 9094 (-3.1%) | 79.3 (+0.2) |
| ✔ | ✔ | 0.4 | 0.62 | 9692 (+3.3%) | 78.4 (-0.7) |
| ✔ | ✔ | 0.25 | 0.55 | 10162 (+8.3%) | 76.8 (-2.3) |

6.7.4 Designs for H-UniFormer

We further explore the lightweight designs based on UniFormer-XXS160 in Table XXIII. Firstly, we try to remove the score token and simply use the mean of global similarity to measure the token importance. Results show that the learnable score token is more helpful for token selection. Besides, the running mean of the similarity score improves the top-1 accuracy, which verifies the effectiveness of consistently important tokens. Finally, we ablate different shrinking ratios and use a ratio of 0.5 for a better trade-off.
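The interplay of the running mean and the shrinking ratio can be illustrated with a small sketch. It is not the paper's implementation. It assumes that each layer provides one importance score per token, that the running mean is a plain cumulative average over layers, and that the shrinking ratio is the fraction of tokens kept (rounded up). All names and scores are hypothetical.

```python
import math


def running_mean(prev_mean, new_scores, step):
    """Cumulative mean of per-token scores; `step` counts vectors seen so far."""
    if prev_mean is None:
        return list(new_scores)
    return [m + (s - m) / step for m, s in zip(prev_mean, new_scores)]


def keep_indices(scores, ratio):
    """Indices of the top ceil(ratio * N) tokens, in their original order."""
    if not 0.0 < ratio <= 1.0:
        raise ValueError("ratio must be in (0, 1]")
    num_keep = max(1, math.ceil(len(scores) * ratio))
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return sorted(ranked[:num_keep])


# Hypothetical per-token importance scores from two consecutive layers.
layer1 = [0.9, 0.1, 0.4, 0.6]
layer2 = [0.7, 0.5, 0.2, 0.8]

mean = running_mean(None, layer1, step=1)
mean = running_mean(mean, layer2, step=2)   # approximately [0.8, 0.3, 0.3, 0.7]
kept = keep_indices(mean, ratio=0.5)        # tokens 0 and 3 survive
```

Averaging over layers favors tokens that score highly in several layers rather than in a single one, which matches the intuition of consistently important tokens described above.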

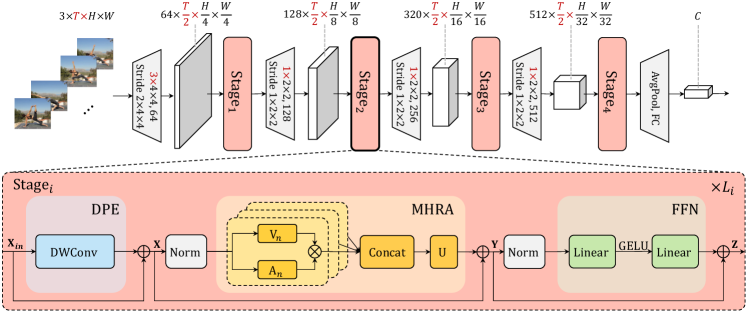

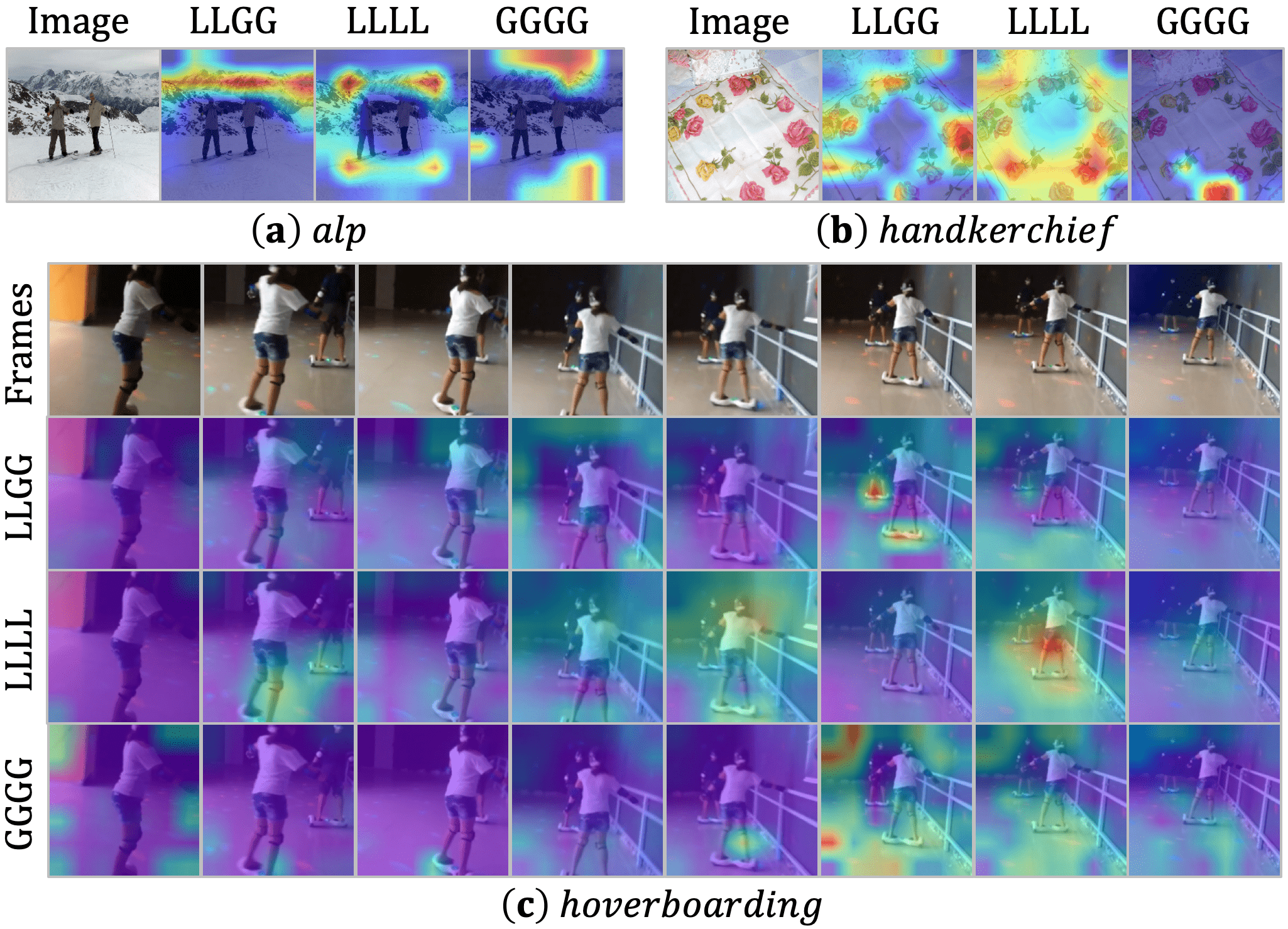

6.8 Visualizations

In Figure 10, we apply Grad-CAM [78] to show the areas of the greatest concern in the last layer. Images are sampled from the ImageNet validation set [22] and the video is sampled from the Kinetics-400 validation set [10]. It reveals that the variant with global MHRA in all layers struggles to focus on the key object, i.e., the mountain and the skateboard, as it blindly compares the similarity of all tokens in all layers. In contrast, the variant that uses only local MHRA performs purely local aggregation. Hence, its attention tends to be coarse and inaccurate without a global view. Different from both cases, our UniFormer, with local MHRA in shallow layers and global MHRA in deep layers, can cooperatively learn local and global contexts in a joint manner. As a result, it can effectively capture the most discriminative information, by paying precise attention to the mountain and the skateboard.

In Figure 11, we further visualize results on the validation sets of various downstream tasks. These qualitative results demonstrate the effectiveness of our UniFormer backbones.

7 Conclusion

In this paper, we propose a novel UniFormer for efficient visual recognition, which can effectively unify convolution and self-attention in a concise transformer format to tackle local redundancy and global dependency. We adopt local MHRA in shallow layers to largely reduce the computation burden and global MHRA in deep layers to learn global token relations. Extensive experiments demonstrate the powerful modeling capacity of our UniFormer. Via simple yet effective adaption, our UniFormer achieves state-of-the-art results on a broad range of vision tasks with less training cost.

References

- [1] A. Arnab, M. Dehghani, G. Heigold, Chen Sun, Mario Lucic, and C. Schmid. Vivit: A video vision transformer. ICCV, 2021.

- [2] Jimmy Ba, Jamie Ryan Kiros, and Geoffrey E. Hinton. Layer normalization. ArXiv, abs/1607.06450, 2016.

- [3] Gedas Bertasius, Heng Wang, and L. Torresani. Is space-time attention all you need for video understanding? ICML, 2021.

- [4] Andrew Brock, Soham De, Samuel L. Smith, and K. Simonyan. High-performance large-scale image recognition without normalization. ArXiv, abs/2102.06171, 2021.