Unsupervised Acoustic Scene Mapping Based on Acoustic Features and Dimensionality Reduction

Abstract

Classical methods for acoustic scene mapping require the estimation of the time difference of arrival (TDOA) between microphones. Unfortunately, TDOA estimation is very sensitive to reverberation and additive noise. We introduce an unsupervised data-driven approach that exploits the natural structure of the data. Toward this goal, we adapt the recently proposed local conformal autoencoder (LOCA) – an offline deep learning scheme for extracting standardized data coordinates from measurements. Our experimental setup includes a microphone array that measures the transmitted sound source, whose position is unknown, at multiple locations across the acoustic enclosure. We demonstrate that our proposed scheme learns an isometric representation of the microphones’ spatial locations and can perform extrapolation over new and unvisited regions. The performance of our method is evaluated using a series of realistic simulations and compared with a classical approach and other dimensionality-reduction schemes. We further assess reverberation’s influence on our framework’s results and show that it demonstrates considerable robustness.

Index Terms— acoustic scene mapping, relative transfer function (RTF), unsupervised learning, local conformal autoencoder (LOCA), dimensionality reduction

1 Introduction

From augmented reality to robot autonomy, it is essential to map the environment and the room’s shape in many audio applications. Consequently, there has been growing attention towards the task of simultaneous localization and mapping (SLAM). In SLAM, a moving observer is considered, following an unknown path, and the task is then to reconstruct both the trajectory and the map of the environment. Due to the rapid improvement of computer vision technology, visual SLAM [1], based on optical sensors, has been a subject of extensive research. The audio processing community later adopted the same concept, as spatial information can also be extracted from the audio signals [2, 3].

This work focuses on the environment mapping problem using audio signals acquired by a microphone array, a task closely related to the acoustic SLAM problem. Traditionally, the environment map is specified in terms of landmarks, and reconstruction of the map is equivalent to localizing the landmarks [1]. In our setting, audio signals are emitted from sound sources of unknown positions and recorded by a microphone array that scans the region of interest (RoI) and maps it. Practically, acoustic SLAM methods necessitate the use of localization techniques, usually based on TDOA estimation between microphone pairs [2, 3]. A widely used approach for TDOA estimation is the generalized cross-correlation (GCC-PHAT) algorithm [4]. Unfortunately, the performance of GCC-PHAT severely degrades in highly reverberated environments [5], resulting in erroneous TDOA estimates and poor source localization, especially for large source-sensor distances. Although many improvements to generalized cross-correlation schemes were proposed, e.g., [6, 7, 8], simple TDOA-based mappings cannot yield a reliable reconstruction of the environment map.

In this work, we propose to adopt the relative transfer function (RTF) [9, 10] as a feature for the source localization task rather than first estimating the TDOA. The RTFs are high-dimensional acoustic vectors that were shown to lie on manifolds and were successfully applied to audio processing tasks, e.g. source localization and tracking [11], and source separation [10]. As the RTFs are lying on a manifold [11], they can be naturally integrated with the LOCA dimensionality reduction scheme [12], enabling the inference of a latent space representation. The learned representation captures the latent variables that parameterize the data, i.e., it can recover the 2-D RoI. The manifold assumption has been previously used for audio-based source localization [11, 13, 14, 15, 16], but is now adapted to Acoustic Scene Mapping for the first time.

Several key elements reflect the novelty of our approach: 1) We utilize recent progress from the manifold learning field, enabling us to robustly deal with the problem of acoustic scene mapping in reverberant environments circumventing the pre-processing stage of extracting the TDOA, which is sensitive to reverberation. Importantly, no prior knowledge about the position of the sound source is required. 2) We introduce a more suitable acoustic feature vector, namely the RTF, into the LOCA training process. 3) We design a circular microphone array that travels in the RoI in a scanner-like manner and is tailor-made for our framework requirements. 4) We change the optimization scheme of LOCA to enable stable convergence in our setting. 5) Our method outperforms existing kernel-based schemes in terms of mapping accuracy and time efficiency. Moreover, we show that it can predict the locations of measurements in regions unseen during training, which is usually not the case for more traditional training-based localization schemes.

2 Theoretical Background

2.1 The relative transfer function (RTF)

The RTFs [9, 10] are used as the acoustic feature for our approach. They are independent of the source signal and carry relevant spatial information. Consequently, they can serve as spatial fingerprints that characterize the positions of each of the sources in a reverberant enclosure [11].

RTF - Definition and Estimation: For a pair of microphones, we consider time-domain acoustic recordings of the form

| (1) |

with being the source signal, , the room impulse responses relating the source and each of the microphones, , and noise signals independent of the source. We define the acoustic transfer functions as the Fourier transform of the RIRs . Following [9], the RTF is defined as . Assuming negligible noise and selecting as the reference signal, the RTF can be estimated from:

| (2) |

where and are the power spectral density (PSD) and the cross-PSD, respectively.

Representing RTFs on the Acoustic Manifold: Previous works have studied the nature of the RTFs [16, 15, 11]. RTFs are high-dimensional representations in that correspond to the vast amount of reflections from different surfaces characterizing the enclosure. Following the manifold hypothesis [17], we assume that the RTF vectors, drawn from a specific RoI in the enclosure, are not spread uniformly in the entire space of . Instead, they are confined to a compact nonlinear manifold of dimension , much smaller than the ambient space dimension, i.e., . This assumption is justified by the fact that perturbations of the RTF are only influenced by a small set of parameters related to the physical characteristics of the environment. These parameters include the enclosure dimensions, its shape, the surfaces’ materials, and the positions of the microphones and the source. Moreover, we focus on a static configuration, where the enclosure properties and the source position remain fixed. In such an acoustic environment, the only varying degree of freedom is the location of the microphone array.

Although nonlinear, in small neighborhoods, the manifold is locally linear, meaning that it is flat in the vicinity of each point and coincides with the tangent plane to the manifold at that point. Hence, the Euclidean distance can faithfully measure affinities between points that reside close to each other on the manifold. For remote points, however, the Euclidean distance is meaningless. This property is utilized to our advantage by LOCA, which makes use of the linear relation between points in a local neighborhood.

2.2 Local Conformal Autoencoder (LOCA)

Motivation: Our approach requires a localized sampling strategy denoted burst sampling [18]. A burst is a collection comprising samples taken from a local neighborhood. Consequently, bursts can provide information on the local variability in the vicinity of each data point, allowing for estimating the Jacobian (up to an orthogonal transformation) of the unknown measurement function.

Assumptions and Derivation: First, for simplicity, we consider the case where the latent domain of our system of interest is a path-connected domain in the Euclidean space . Observations of the system consist of samples captured by a measurement device and are given as a nonlinear smooth and bijective function , where is the ambient or measurement space, and . The high dimensional acoustic features, namely the RTFs, constitute the measurement space in our setting.

Consider RTF data points, denoted in the latent space. Assume that all these points lie on a path-connected, -dimensional subdomain of . Importantly, we do not have direct access to the latent space . Samples in the latent space , which can be thought of as latent states, are pushed forward to the ambient space via the unknown deformation . Let be a set of measurements, with . We assume that an observed burst around each consists of perturbed versions of the latent state , pushed through the unknown deformation . Formally, for fixed , we define the burst (sample cloud) as the set of independent and identically distributed samples of the random variable , where , are independent random variables and denotes the number of samples in each burst. The available data now consists of bursts of observed states and their perturbed samples. We assume that is sufficiently small such that, practically, the differential of does not change within a ball of radius around any point. Such sufficiently small allows us to capture the local neighborhoods of the states at this measurement scale on the latent manifold.

Ideally, being able to find would suffice to reconstruct the latent domain, but even if is invertible, it is generally not feasible to identify without access to . We can, however, try to construct an embedding that maps the observations so that the image of is isometric to when is known. In our Euclidean setting, it is equivalent to require that the distances in the latent space are preserved, i.e., samples should satisfy for any . Following the mathematical derivation in [12], the embedding that preserves the distances should satisfy the condition

| (3) |

where is the covariance matrix computed over the embedding of the samples from each burst. In other words, (3) requires whitening the covariance of local samples in the embedding domain.

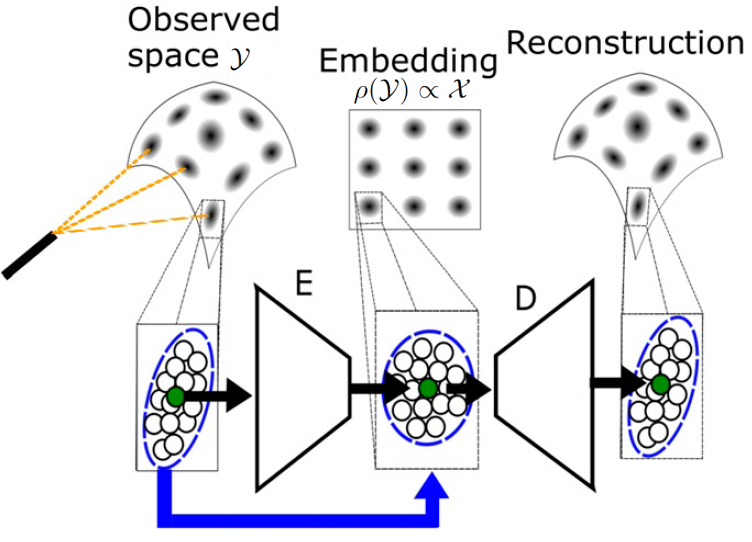

Implementation: As stated above, our task is to find the embedding function . We use an encoder neural network to learn the embedding function. Following (3), we define the loss term to be minimized at each iteration:

| (4) |

with the learned embedding function up to the current iteration and is the empirical covariance over a set of realizations . Next, we note that is an invertible function, and so is its inverse . Since our embedding tries to estimate up to an orthogonal transformation, we can enforce the invertibility of , reducing ambiguity in the solution. The invertibility of means that there exists an inverse mapping such that for any . By imposing an invertibility property on , we effectively regularize the solution of away from noninvertible functions. Practically, we impose invertibility by using a decoder neural network parameterized by . This approach induces a loss term based on the mean square error (MSE) between samples and their reconstructed counterparts:

| (5) |

The two-loss terms are equally weighted. The LOCA framework is schematically depicted in Fig. 1.

3 Simulation Setup

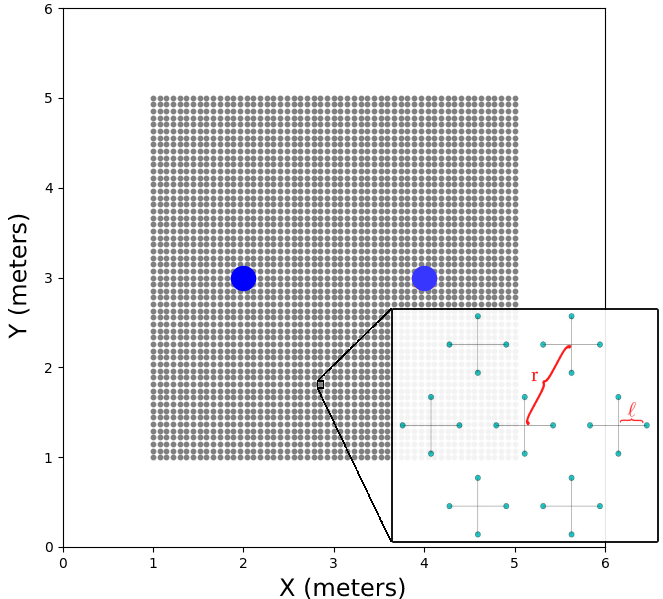

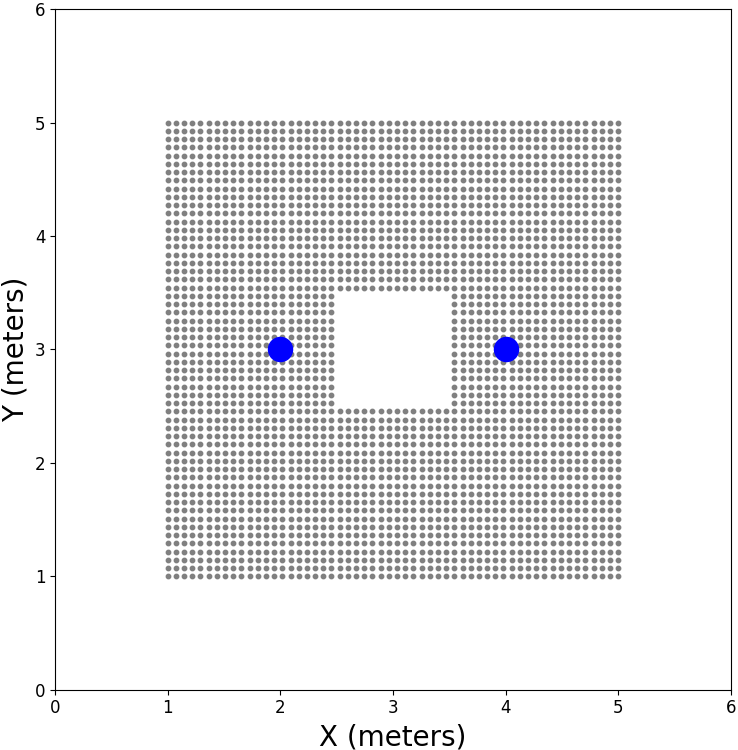

Experimental Setup: To implement the burst sampling strategy, we construct a microphone array with seven cross-shaped sub-arrays in a circular constellation, each consisting of four omnidirectional microphones. The distance between the microphones in each arm of the cross is , and the radius w.r.t. the constellation’s center is . For a relatively small radius, this circular array accurately simulates a Gaussian neighborhood sampled in the latent domain, as the burst sampling strategy requires. Practically, might be larger than , resulting in overlapping crosses of microphones (see Fig. 2).

Consider a room of dimensions m. Define a RoI within the room of size m, which is symmetrically positioned around the center of the room, m above the room’s floor. The following simulation will be divided into two parts: a learning phase and a test phase. In the learning phase, the microphone array is free to move only on a grid with a spatial resolution of samples along each dimension, yielding a total of bursts for training and validation, with a spacing of cm between the centers of neighboring bursts. In the test phase, we allow the device to travel in the same confined region but not necessarily on the grid. The test set includes 750 bursts from random locations in the RoI. Two omni-directional sound sources are placed at m and m, lying m above the sampling-grid plane. Note that the positions of the sound sources are unknown to the algorithm. The height of the sound sources above the sampling plane is vital due to simple geometric considerations. It allows phase accumulation between received signals relative to the sources in all directions. In addition, it became apparent by a series of experiments that using only a single emitting source may produce heavily deformed embeddings at the edges far from the source. For that reason, we used two sources in the simulations. Note that the sound sources are not simultaneously active, thus allowing for the estimation of the individual RTFs. A top-view visualization of the sampling constellation is shown in Fig. 2.

Reverberated Data and Training: The reverberant acoustic data was generated using the GPU-accelerated RIR Simulator [19, 20]. For the learning-phase data, the synthetic RIRs, , were convolved with second long white noise signals, . The acoustic data at the test phase is simulated by convolving the RIRs, , with second long speech signals, , drawn from the TIMIT dataset [21]. We examined three reverberation times ( ms), set the sampling frequency to kHz, and the speed of sound to m/s. We also set the microphone array parameters to cm and cm. Using this configuration, at any given location and for each source, we can estimate the horizontal RTFs and the vertical RTFs, having a total of seven horizontal and seven vertical RTFs for a single burst. RTF estimation was applied according to (2), using , with 50% overlapping Hamming windows. A single estimated RTF is a complex-valued feature of length . For working with real-valued deep neural networks, we concatenated the real and imaginary parts to form a 258-dimension real-valued feature. It turns out that picking a portion of the RTF bins is preferable. We obtain the best results when taking bins 5 to 99, corresponding to frequencies – Hz.

Our data tensor is constructed as follows: we take 95 bins from each complex RTF for each single sound source to construct a real-valued feature of length 190. Since we have vertical and horizontal RTF components in our burst, we concatenate them to obtain a feature vector of length 380. Finally, having two speech sources, we repeat the process and concatenate both results. We eventually end up with a data tensor of shape . In terms of the LOCA terminology, we can write that , and we wish to reconstruct a 2-D latent embedding, .

Training and Parameters: We implemented both the encoder and decoder using simple feed-forward architectures. The encoder consists of an initial layer of dimension with no nonlinearity, followed by five layers of size with ‘Leaky-Relu’ activations, ending with a latent representation layer of size . Similarly, the decoder consists of the latent representation layer with no nonlinearity, followed by four layers of size with ‘tanh’ activations, an additional linear layer of size , and an output reconstruction layer of size . We use a batch size of samples and a learning rate of . While the original LOCA formulation applied alternating minimization steps between the whitening and reconstruction loss terms, we found that, in our setting, a more stable solution is obtained by minimizing a combined loss function. We use of the learning-phase data during training, and the rest are used for validation and determining the best model weights. Finally, based on the geometry of the microphone array, we set .

4 Simulation Study and Analysis

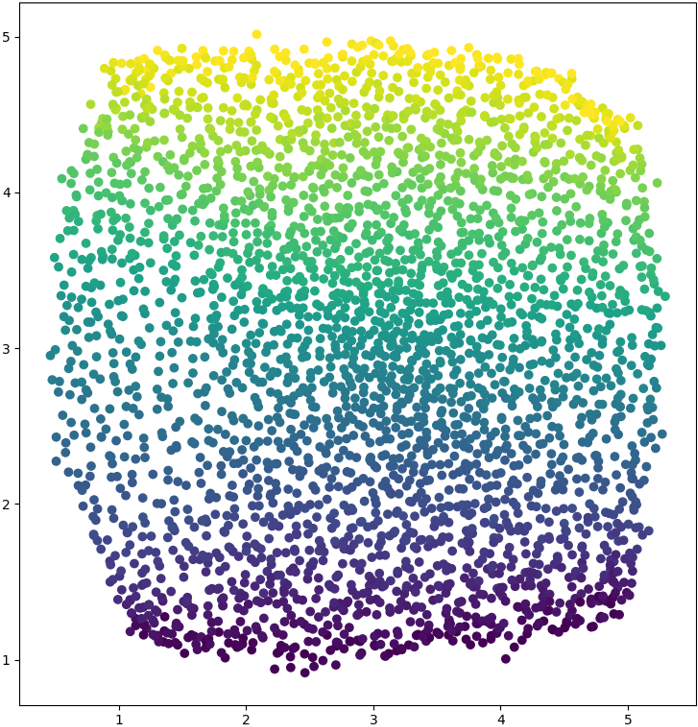

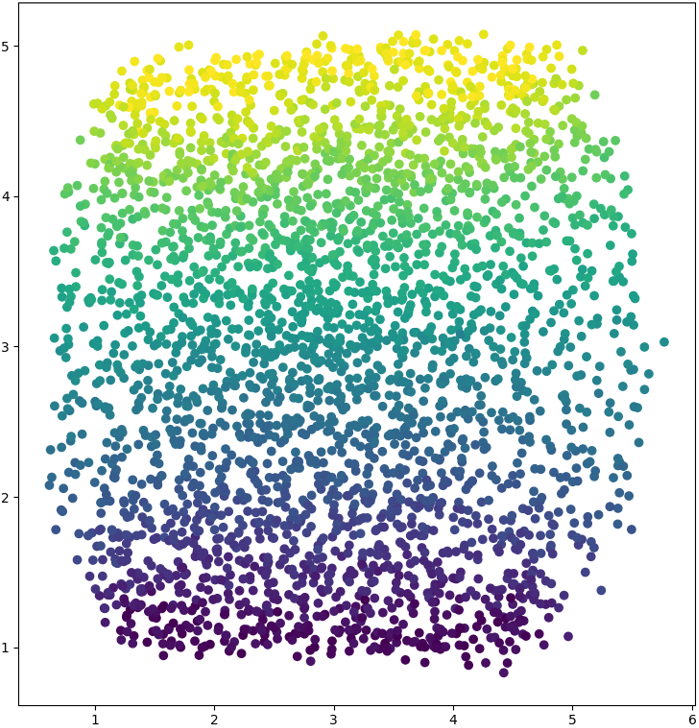

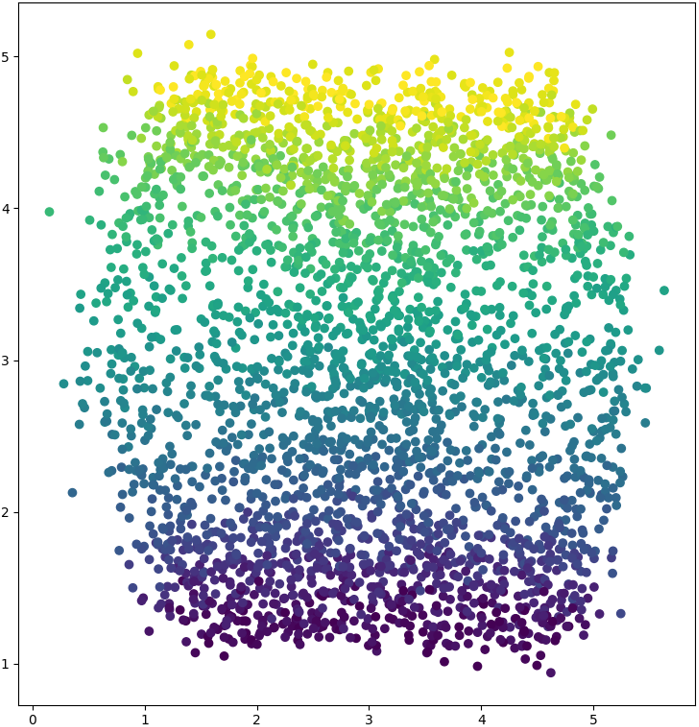

As discussed earlier, our embeddings are invariant to shifts and orthogonal transformations; therefore, they should be calibrated using a set of anchor points. Typically, three anchor points are required to recover the required transformation. Note that the calibration process is the first time we incorporate our knowledge of the true geometry of the problem into the model. In Fig. 3, we visualize the calibrated embedding provided by our framework. Our learned embedding demonstrates a high correlation between the main directions of the embedding and the true axes characterizing the square sampling grid. While increasing the reverberation level may deform the embedding, the model can still capture the directional correlation and reveal the square shape of the grid (see Fig. 3). We suspect the reverberation-attributed degradation stems from the LOCA’s assumption that is a smooth function. In high reverberation, small perturbations in the location translate to relatively sharp changes in the RTF, leading to less smooth . As the high-frequency bins tend to change rapidly with the burst’s position, it was empirically verified that we should discard these bins to achieve better results.

| Method | ms | ms | ms |

|---|---|---|---|

| PCA | 72.2 | 72.5 | 74.3 |

| MMDS | 73.4 | 79.1 | 82.5 |

| DM | 20.4 | 22.7 | 65.1 |

| A-DM | 17.8 | 18.3 | 33.8 |

| LOCA |

Comparison with Manifold Learning Methods: Next, we compare the results with other baseline methods. Since we focus on unsupervised acoustic scene mapping, we compare LOCA to alternative dimensionality reduction methods. Specifically, we compare to: Principal-Component-Analysis (PCA), Metric-Multi-Dimensional-Scaling (MMDS) [22], diffusion maps (DM) [23], and anisotropic diffusion maps (A-DM) [18]. The above methods are commonly used in manifold learning applications and are justified by our assumption of the existence of the acoustic manifold. Hence, they may serve as valid candidates for comparison. We stress that these schemes are tested with the same data used to test LOCA.

As discussed earlier, we quantify the performance using the test-phase data. We calculate the mean absolute error (MAE) of the distance between the positions in the embedding space and their matching counterparts in the latent space. Note that we first calibrate the embedding using an orthogonal transformation and a shift before calculating the MAE. Additionally, in DM and A-DM, where we need to carry out hyper-parameter tuning for , we first find the optimal value by selecting the embedding that leads to the minimal MAE, given a small set of four anchors. In Table 1, we compare the performance measures of all methods for the different reverberation times. It is evident that LOCA leads to the best results in terms of MAE for all reverberation levels. Finally, it is important to note the advantage of LOCA over the other methods regarding inference time. Once a mapping of the RoI has been constructed in the training phase, we can perform self-localization using new samples, where now we can use only a single cross instead of an entire burst. LOCA presents a fast and simple inference process based on the DNN’s forward pass. In contrast, Kernel-based methods require calculating the kernels between the new and all other training samples, which is a cumbersome process. To quantify this gap, we compared the inference time of our method with kernel schemes and observed that we could reduce the run time by four orders of magnitude.

To complete the study, we have tested a naïve classical approach for acoustic scene mapping of the discussed RoI. We considered a coarser grid than before, consisting of samples, with a spacing of 44.4 cm between neighboring locations. To further simplify, the sound sources were lowered to the grid plane. For this approach, which is further explained in Section 3.6 of [24], we used two crosses of microphone pairs identical to the central cross array in Fig. 2. One is a static reference array located at the middle of the RoI, whereas the second array is successively moved along the grid points. We then used GCC-PHAT to estimate the TDOAs between the moving array and the reference array centroid w.r.t. each speaker. Additionally, we used SRP-PHAT [25] to estimate the DOA of each speaker w.r.t. each array. This scheme lead to MAEs of 13.7 cm for ms and to 74.8 cm for ms. For this evaluation grid LOCA’s performance remains relatively stable.

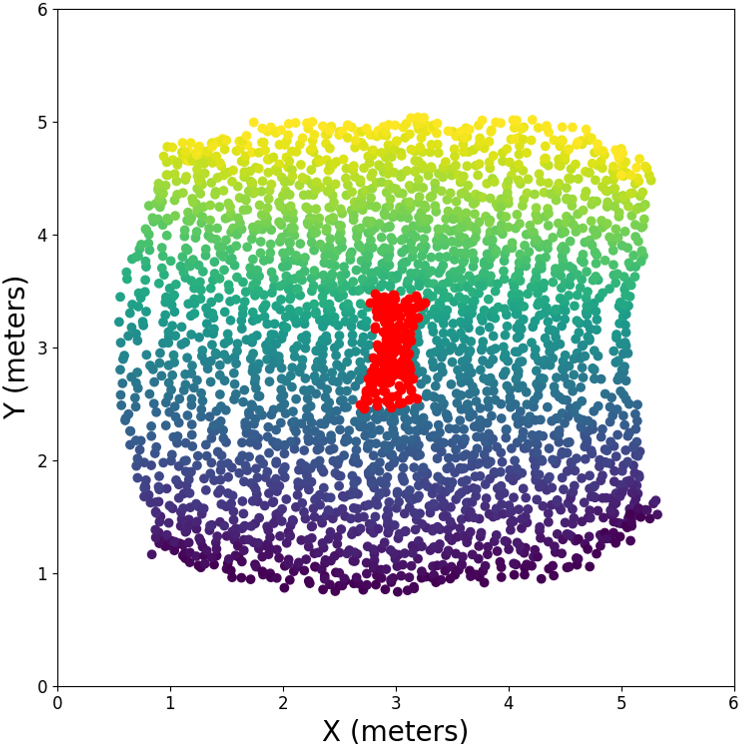

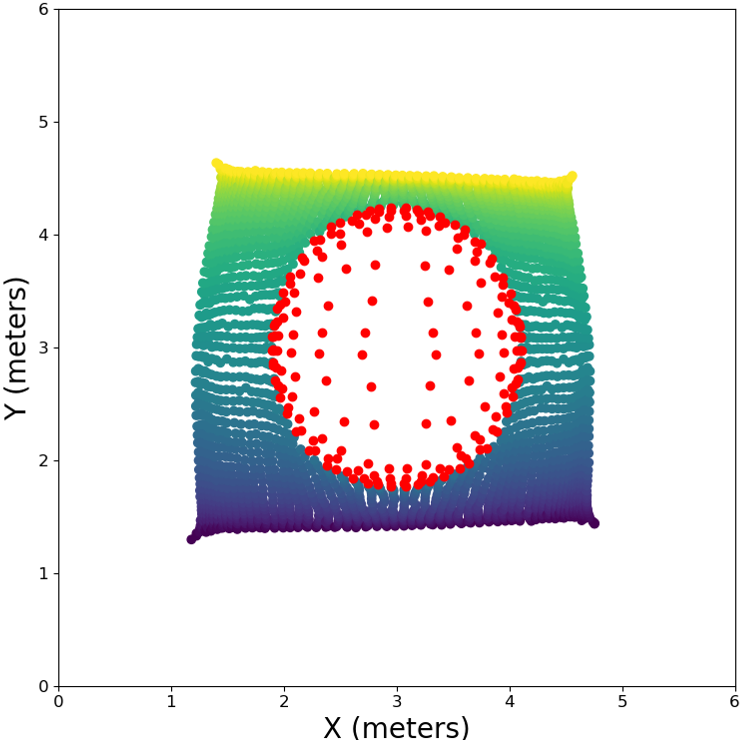

Extrapolation Capabilities: We now examine the extrapolation capabilities of the proposed scheme and compare them to the A-DM manifold learning scheme. For that, we modify our simulation setup as follows. The manifold is learned using the m RoI, excluding the m region in the center of the room, as depicted in Fig. 4(a). We only demonstrate the performance for the ms case. As evident from Figs. 4(b), 4(c) LOCA extrapolates very well to the excluded region compared to the A-DM.

5 Conclusions

In this work, we harness recent advances in the field of DNN-based manifold learning to deal with the problem of acoustic scene mapping in a reverberant environment. We demonstrate the applicability of the RTF as a proper feature vector, which encapsulates the relevant spatial information for the task at hand. We apply an approach that utilizes the natural structure of the data and the local relations between samples, avoiding the severe performance degradation typical to other localization schemes. Our simulation results demonstrate the ability to perform extrapolation to regions not seen during training while significantly reducing run-time requirements compared with existing schemes. Furthermore, we confirm the robustness of the approach even in mild to high reverberation.

References

- [1] Hugh Durrant-Whyte and Tim Bailey, “Simultaneous localization and mapping: part i,” IEEE Robotics & Automation magazine, vol. 13, no. 2, pp. 99–110, 2006.

- [2] Jwu-Sheng Hu, Chen-Yu Chan, Cheng-Kang Wang, and Chieh-Chih Wang, “Simultaneous localization of mobile robot and multiple sound sources using microphone array,” in IEEE International Conference on Robotics and Automation, 2009, pp. 29–34.

- [3] Christine Evers and Patrick A. Naylor, “Acoustic SLAM,” IEEE/ACM Transactions on Audio, Speech, and Language Processing, vol. 26, no. 9, pp. 1484–1498, 2018.

- [4] C. Knapp and G. Carter, “The generalized correlation method for estimation of time delay,” IEEE Transactions on Acoustics, Speech, and Signal Processing, vol. 24, no. 4, pp. 320–327, Aug. 1976.

- [5] B. Champagne, S. Bedard, and A. Stephenne, “Performance of time-delay estimation in the presence of room reverberation,” IEEE Transactions on Speech and Audio Processing, vol. 4, no. 2, pp. 148–152, Mar. 1996.

- [6] M.S. Brandstein and H.F. Silverman, “A robust method for speech signal time-delay estimation in reverberant rooms,” in IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP), 1997, vol. 1, pp. 375–378 vol.1.

- [7] Joseph H DiBiase, Harvey F Silverman, and Michael S Brandstein, “Robust localization in reverberant rooms,” in Microphone Arrays: Signal Processing Techniques and Applications, pp. 157–180. Springer, 2001.

- [8] Tsvi G. Dvorkind and Sharon Gannot, “Time difference of arrival estimation of speech source in a noisy and reverberant environment,” Signal Processing, vol. 85, no. 1, pp. 177–204, Jan. 2005.

- [9] S. Gannot, D. Burshtein, and E. Weinstein, “Signal enhancement using beamforming and nonstationarity with applications to speech,” IEEE Transactions on Signal Processing, vol. 49, no. 8, pp. 1614–1626, 2001.

- [10] S. Markovich, S. Gannot, and I. Cohen, “Multichannel eigenspace beamforming in a reverberant noisy environment with multiple interfering speech signals,” IEEE Transactions on Audio, Speech, and Language Processing, vol. 17, no. 6, pp. 1071–1086, Aug. 2009.

- [11] Bracha Laufer-Goldshtein, Ronen Talmon, and Sharon Gannot, “Data-driven multi-microphone speaker localization on manifolds,” Foundations and Trends in Signal Processing, vol. 14, no. 1–2, pp. 1–161, 2020.

- [12] Erez Peterfreund, Ofir Lindenbaum, Felix Dietrich, Tom Bertalan, Matan Gavish, Ioannis G. Kevrekidis, and Ronald R. Coifman, “Local conformal autoencoder for standardized data coordinates,” Proceedings of the National Academy of Sciences, vol. 117, no. 49, pp. 30918–30927, Nov. 2020.

- [13] Antoine Deleforge and Radu Horaud, “2d sound-source localization on the binaural manifold,” in 2012 IEEE International Workshop on Machine Learning for Signal Processing. Sept. 2012, IEEE.

- [14] Antoine Deleforge, Florence Forbes, and Radu Horaud, “Acoustic space learning for sound-source separation and localization on binaural manifolds,” International Journal of Neural Systems, vol. 25, no. 01, pp. 1440003, Jan. 2015.

- [15] Bracha Laufer-Goldshtein, Ronen Talmon, and Sharon Gannot, “A study on manifolds of acoustic responses,” in Latent Variable Analysis and Signal Separation, pp. 203–210. Springer International Publishing, 2015.

- [16] Bracha Laufer-Goldshtein, Ronen Talmon, and Sharon Gannot, “Semi-supervised sound source localization based on manifold regularization,” IEEE/ACM Transactions on Audio, Speech, and Language Processing, vol. 24, no. 8, pp. 1393–1407, Aug. 2016.

- [17] Joshua B. Tenenbaum, Vin de Silva, and John C. Langford, “A global geometric framework for nonlinear dimensionality reduction,” Science, vol. 290, no. 5500, pp. 2319–2323, Dec. 2000.

- [18] Amit Singer and Ronald R. Coifman, “Non-linear independent component analysis with diffusion maps,” Applied and Computational Harmonic Analysis, vol. 25, no. 2, pp. 226–239, Sept. 2008.

- [19] David Diaz-Guerra, Antonio Miguel, and Jose R. Beltran, “gpuRIR: A python library for room impulse response simulation with GPU acceleration,” Multimedia Tools and Applications, vol. 80, no. 4, pp. 5653–5671, Oct. 2020.

- [20] Jont B. Allen and David A. Berkley, “Image method for efficiently simulating small-room acoustics,” The Journal of the Acoustical Society of America, vol. 65, no. 4, pp. 943–950, 1979.

- [21] John Garofolo, Lori Lamel, William Fisher, Jonathan Fiscus, David Pallett, Nancy Dahlgren, and Victor Zue, “TIMIT acoustic-phonetic continuous speech corpus,” 1993.

- [22] Hervé Abdi, “Metric multidimensional scaling (mds): analyzing distance matrices,” Encyclopedia of measurement and statistics, pp. 1–13, 2007.

- [23] Ronald R Coifman and Stéphane Lafon, “Diffusion maps,” Applied and computational harmonic analysis, vol. 21, no. 1, pp. 5–30, 2006.

- [24] Yuval Dorfan, Ofer Schwartz, and Sharon Gannot, “Joint speaker localization and array calibration using expectation-maximization,” EURASIP Journal on Audio, Speech, and Music Processing, vol. 2020, no. 1, June 2020.

- [25] Hoang Do, Harvey F Silverman, and Ying Yu, “A real-time srp-phat source location implementation using stochastic region contraction (src) on a large-aperture microphone array,” in IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 2007.