Unsupervised Competitive Hardware Learning Rule for Spintronic Clustering Architecture

Abstract

We propose a hardware learning rule for unsupervised clustering within a novel spintronic computing architecture. The proposed approach leverages the three-terminal structure of domain-wall magnetic tunnel junction devices to establish a feedback loop that serves to train such devices when they are used as synapses in a neuromorphic computing architecture.

I Introduction

Neuromorphic computing promises exceptional capabilities for artificial intelligence through highly-efficient circuit structures mimicking the structure and functionality of the human brain. While neural networks composed of artificial synapses and neurons have been demonstrated with conventional CMOS devices, it is expected that computing efficiency can be improved by more closely emulating neurobiological hardware. Thus, the potential use of non-volatile spintronic analog devices that can be interconnected in a manner that enables on-chip unsupervised learning is particularly promising. In particular, spintronic domain-wall (DW) devices have been proposed as capable synapses [1] and neurons [2] and achieve a remarkable energy efficiency and reliability relative to competing non-volatile candidate devices such as resitive/filamentary memristive and phase-change memory (PCM) candidate devices for on-chip learning [3]. Recently, DW synaptic devices have been co-integrated with transistor learning circuits to show basic learning [4].

Domain wall-magnetic tunnel junction devices (DW-MTJs) are spintronic devices that provide non-volatile memory. The position of the DW along its track determines the tunneling resistance of a DW-MTJ and can be modulated by applying a current through the track. Three-terminal DW-MTJs have been leveraged as analog artificial synapses with long tunnel barriers and as binary artificial neurons by a digital version with shorter tunnel barrriers [5]. Furthermore, a CMOS-free monolothic multi-layer perceptron system can be achieved solely with three-terminal DW-MTJ synapses and four-terminal DW-MTJ neurons intrinsically capable of emulating the leaky-integrate-and-fire (LIF) neuron model. However, as this perceptron system does not utilize the third terminal of the DW-MTJ synapses, there is an opportunity to use this third terminal for efficient on-chip unsupervised learning. We propose such an approach to clustering using DW-MTJ crossbars that minimize the use of CMOS.

II Neuromorphic Crossbar Array: Background

A neuromorphic crossbar array is a hardware organization of circuit units that can perform brain-inspired functionalities, and has its origins in the use of crossbars for logic and memory. Like the crossbar array, the biological brain can be simplified and abstracted as a neural network consisting of neurons connected by synapses.

Neurons receive electrical or chemical stimuli through the dendrites, perform computations in the cell body (i.e., soma), and propagate the output through axons. Synapses are the junctions between neurons that modulate the signal propagation strength. The leaky integrate-and-fire (LIF) neuron is a popular artificial neuron model that mimics the biological neuron. An LIF neuron continuously integrates energy provided through the synapses connected to the input neuron.Throughout the integration process, the stored energy leaks if the energy received by the neuron is insufficient. When the stored energy reaches a particular threshold, the LIF neuron generates a spike that can be propagated to the next connected neuron or be utilized to perform inference.

The synaptic connections of a neuromorphic crossbar can be implemented with variable resistors and many such crossbar architectures have been proposed using memristors [6], 1-transistor 1-resistor RRAM [7], phase-change memories [8], and ferroelectric RAM [9], among others. These variable resistors define the weighted connections between neurons. The weight tuning is performed by changing the conductance of the synapses and is referred to as ‘learning’ when the tuning is conducive to the optimization of some objective function for which a cognitive task is defined (i.e. face recognition).

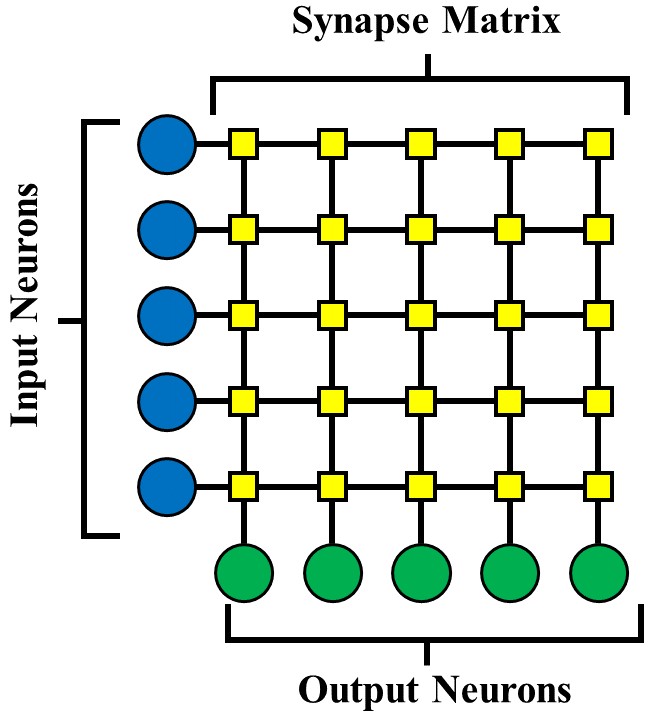

Fig. 1 shows a neuromorphic crossbar array where horizontal word lines are connected to the input neurons and vertical bit lines are connected to the output neurons. At the intersection of each word line and bit line, a synapse is placed that defines the connectivity between the input and the output neuron. In this fashion, a weight matrix is formed by the synapses, and the output neurons receive the product of vector-matrix multiplication between an input vector and the weight matrix. Cascading multiple crossbar array results in a multi-layer perceptron.

III DW-MTJ Synapse and Neuron

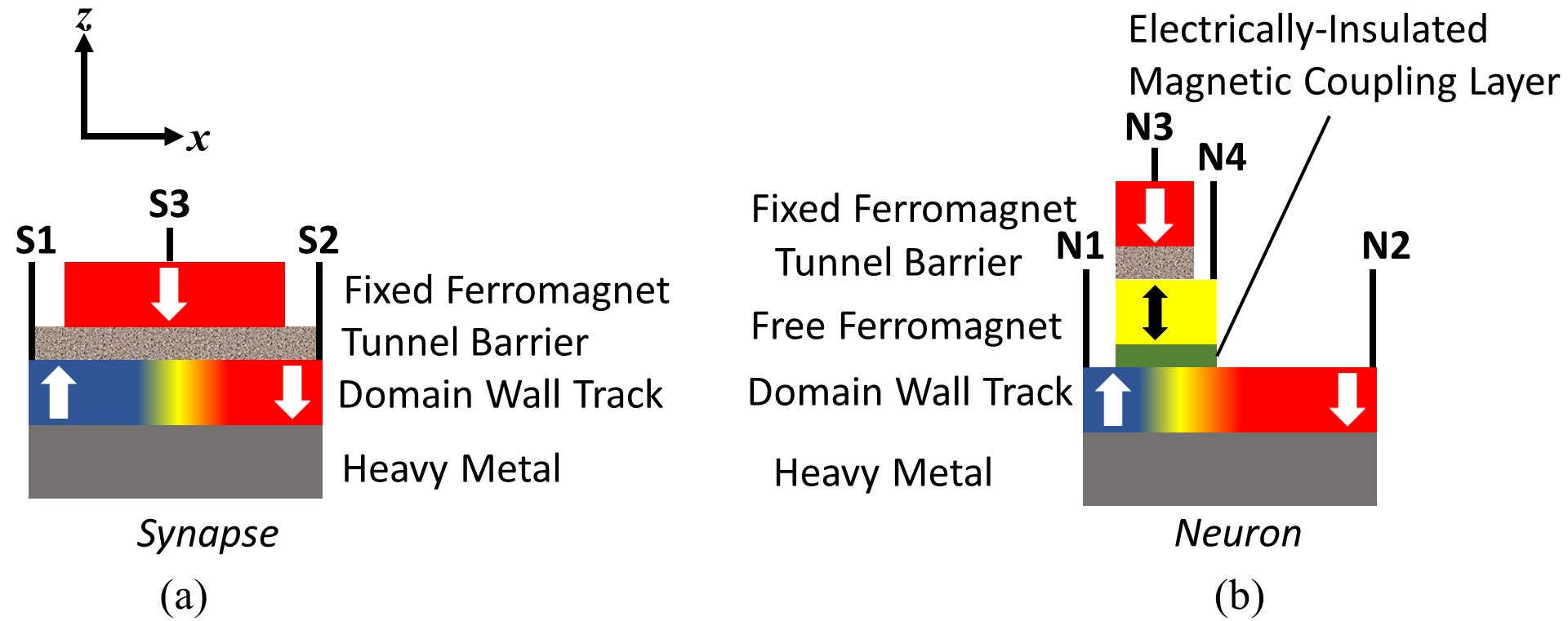

Fig. 2(a) shows a three-terminal (3T) DW-MTJ that acts as a synapse by providing an analog conductance element to the neural system [10]. Between terminals S1 and S2, it has a free ferromagnetic domain wall track divided into +z and -z directed magnetic domains separated by a DW. An analog MTJ is formed between the S1 and S3 terminals by the DW track, tunnel barrier and, the -z directed fixed ferromagnet. The position of the DW can be controlled by applying the S2-S1 spin-transfer torque inducing write current. The MTJ conductance can be represented as a parallel-resistance combination of parallel and anti-parallel (with respect to the fixed ferromagnet) portions of the DW-MTJ. A positive (negative) S2-S1 current grows the anti-parallel (parallel) +z (-z) domain and decreases (increases) the conductance of the DW-MTJ.

Fig. 2(b) shows a four-terminal (4T) version of DW-MTJ which performs the leaky integrate-and-fire neuronal functionalities [11]. Similar to the synapse, it has a DW track between terminals N1 and N2. The magnetic state of the DW track is coupled to a free ferromagnet of smaller dimension. The electrically-insulating magnetic coupling layer separates the N1-N2 write current path from the N3-N4 read path. The N3-N4 path has an MTJ of smaller dimension compared to the synapse MTJ. Spin-transfer torque inducing current through the N1-N2 path performs integration by moving the DW from right-to-left direction. Leaking causes the DW to move in left-to-right direction and can be performed by one of three approaches - dipolar coupling field [5], anisotropy gradient along the DW track [12] and shape variation of the DW track [13]. When the DW crosses beneath the dipolarly-coupled free ferromagnet during the integration process, the MTJ between the N3-N4 path switches from an anti-parallel low conductive state to a parallel high conductive state, thus performing the firing functionality.

IV Circuit for Unsupervised Learning

The process of updating weights in a neural network is called learning. Depending on the presence of a teacher signal, learning can be classified into the categories of supervised, unsupervised and semi-supervised methods. In our hardware system, we have focused on optimizing the unsupervised learning primitive. This primitive can later be co-integrated with other learning sub-systems, as demonstrated in Section VIII. Our approach implements unsupervised learning as an electrical approximation of Hebbian (associative) learning, which can be efficiently implemented via the spike-timing-dependent plasticity (STDP) rule; this rule can efficiently extract information from real-time data, such as images, video, or other raw sensor data [14].

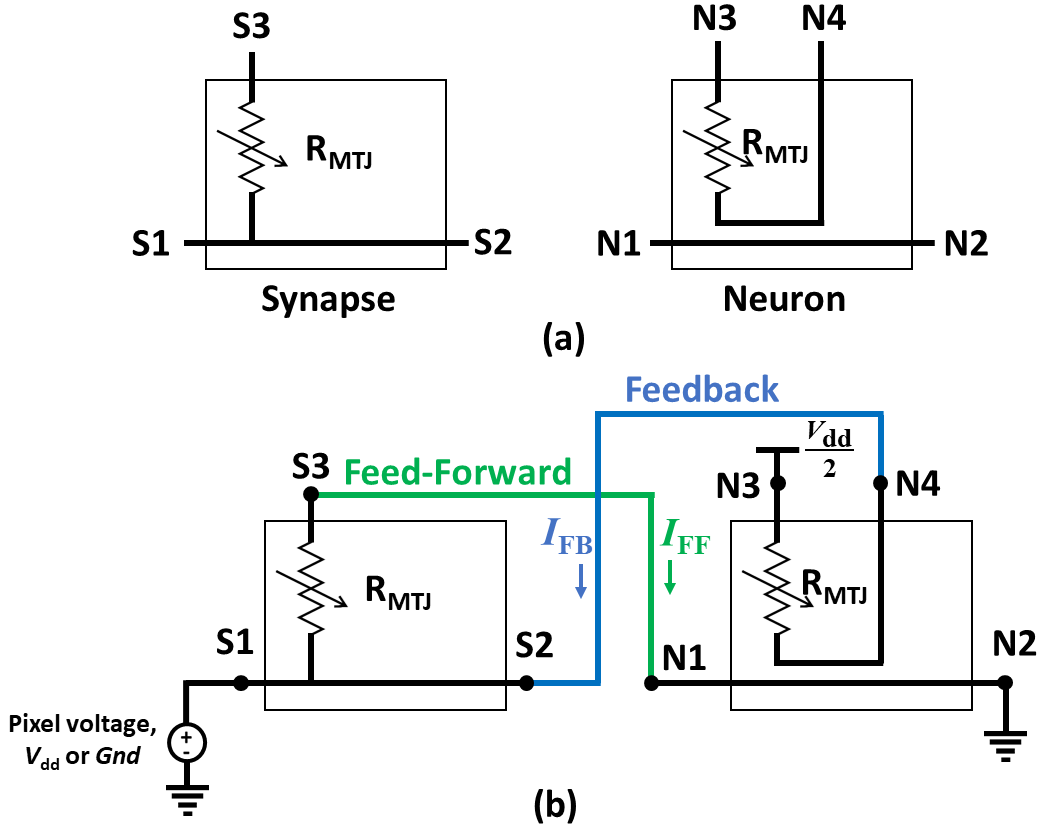

Fig. 3(a) shows the electrical equivalents of the DW-MTJ synapse and neuron discussed in section III. The synapse is represented by an analog resistor in the S1-S3 path and a conducting wire in the S1-S2 path. The conducting wire represents the DW track which has a negligible resistance compared to the MTJ resistance. The neuron equivalent also consists of a conducting wire in the N1-N2 path representing the DW track and a binary resistor in the N3-N4 path. The isolation between the N1-N2 and N3-N4 paths results from the electrically insulated magnetic coupling layer in Fig. 2 (b).

Fig. 3(b) illustrates the neuron-synapse connectivity underlying the inference and learning mechanisms. The synapse is connected between an input pattern source and a post-synaptic neuron. The image pixel can be converted into binary 0 and 1 input patterns corresponding to voltage levels and , respectively. The input voltage is connected to the S1 terminal of the synapse and a feed-forward connection is made between the S3 and N1 terminals. The feed-forward current flows through the S1-S3-N1-N2 path, is modulated by the analog resistor corresponding to the synapse, and results in the integration process of the post-synaptic neuron.

The learning is performed by creating a feedback connection between the N4 and S2 terminals. The feedback current flows through the N3-N4-S2-S1 path and is modulated by the binary resistor in the N3-N4 path. The N3 terminal is connected to voltage level /2. Therefore, the feedback current has a positive (negative) value if the input voltage connected to the N1 terminal is (). The positive (negative) current through the S2-S1 path decreases (increases) the synaptic analog conductance of connected in the S1-S3 path, as mentioned in Section III. The conductance update summary is provided in Table I, where the synapse conductance changes only when the post-synaptic neuron fires. The binary resistor connected between N3-N4 controls the rate of the learning mechanism. For a firing (non-firing) neuron, connected between N3-N4 provides a high (low) conductance and results in a higher (lower) value of the current and learning rate.

| Input / Pixel Voltage | Synapse Conductance | |

|---|---|---|

| Gnd | Positive | Decreases |

| Negative | Increases |

V DW-MTJ Perceptron with Unsupervised Learning

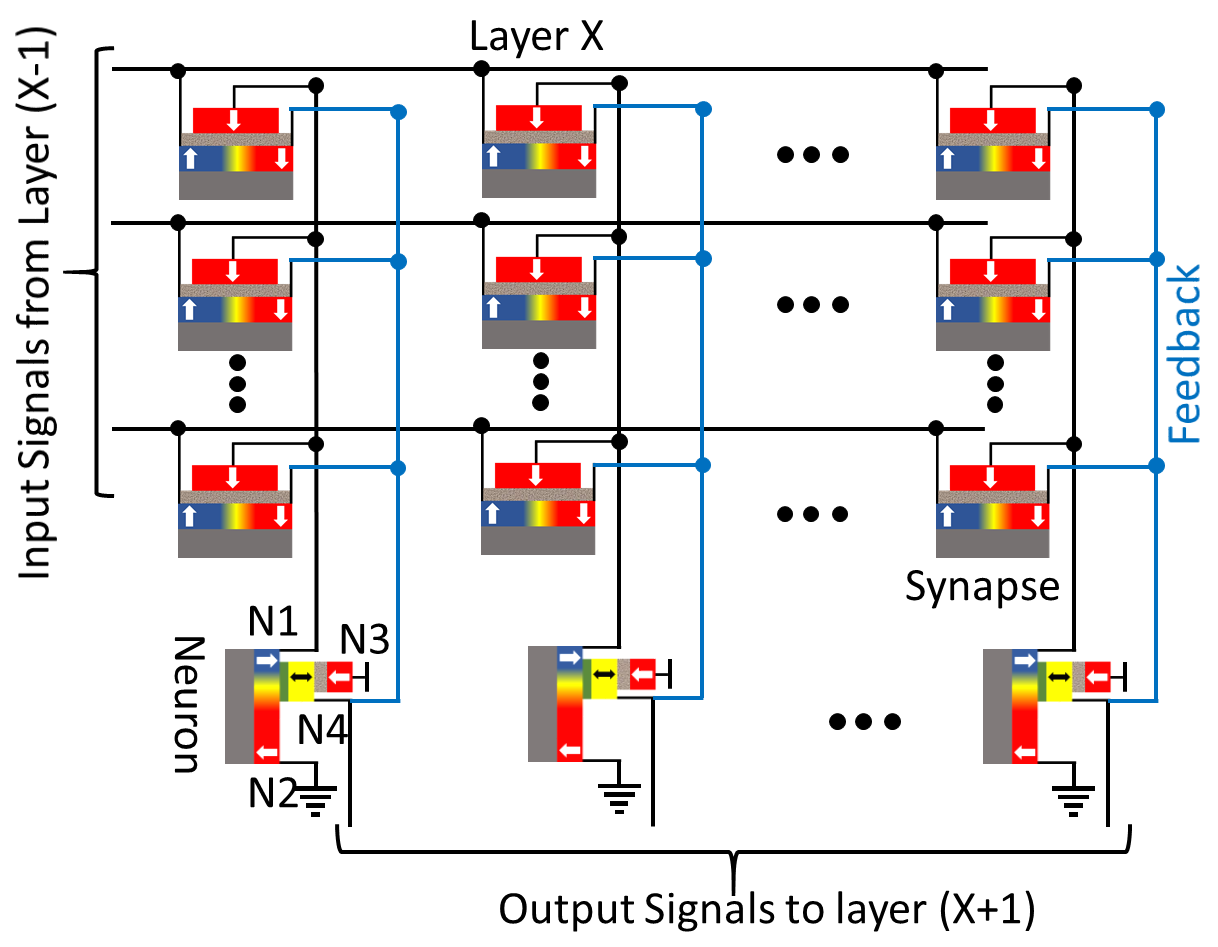

Fig. 4 shows the proposed DW-MTJ crossbar with a feedback connection from 4-terminal DW-MTJ neurons to 3-terminal DW-MTJ synapses in order to perform unsupervised learning. Input signals are provided to the left terminals of the synapses. Electrical signal flows across the tunnel barrier to the central terminal of each synapse, eventually reaching the connected output neuron. The current flowing across each synapse tunnel barrier is a function of the DW position, and therefore the synaptic weight given by the conductance of the DW-MTJ [15]. The weighted current fed into the N1 terminal of the output neuron performs LIF functionalities by modulating its DW position. The feedback operation to perform unsupervised learning is achieved by connecting the N4-terminal of the output neuron to the right terminals of the synapses connected to its N1 terminal.

VI Characteristic Equations

The behavior of DW-MTJ synapses and neurons is given by equations that characterize tunnel barrier conductance as a function of DW position and the motion of DWs as a function of current flow between the left and right terminals. For the synapse , its conductance at time is given by (1), where is the position of the synapse DW along the track, is the width of the tunnel barrier, is the anti-parallel conductance, and is the parallel conductance. For consistency with the literature on neural networks, we use this conductance value to define the weight of the synapse as a normalized value between and in (2). In the neuron , the short tunnel barrier produces only binary conductance states characterized by (3), where is the position of the neuron barrier along the track. In every synapse , a current causes the DW to move from its current position to after a time (4). In the neuron , a leaking force is included such that the DW dynamics are given by (5). Due to this leaking and the lateral inhibition among nearby neurons, the perceptron system can be designed to ensure that exactly one neuron fires in response to each set of inputs, and that the neurons are reset before the next set of inputs is provided [5].

| (1) | |||

| (2) | |||

| (3) | |||

| (4) | |||

| (5) |

VII Clustering Algorithm

Given: images, fully connected independent layers with synapses each connected to one output neuron .

Output: Trained synaptic weights such that output neurons act as classifiers by assigning the input data to one of clusters.

Procedure: For every data point , do the following, where denotes pixel () as in Fig. 5:

-

1.

Inference: Feed to each layer by applying voltage (Gnd) for pixels with value 1 (0). Let be the largest neuron output current.

-

2.

Training: Feed to layer by applying (Gnd) for pixels with value 1 (0). Use output current as feedback to the right terminal of synapses .

-

3.

-

4.

Update conductance and weights

-

5.

(Procedure starts at ).

Our approach relies on two phases: training and inference. In line (3): If , then the feedback current flows through synapse in the right-to-left direction, leading to . That is, the synaptic weight of will be decreased because this synapse did not contribute to detecting pixel . If , then the feedback current flows in the left-to-right direction through the synapse, leading to . That is, the synaptic weight of will be increased because this synapse contributed to detecting pixel . Since the neurons are reset after each input, we need not worry about the changes in for output neurons.

VIII Learning and Recognition Results

VIII-1 Integrated approach for on-chip classification

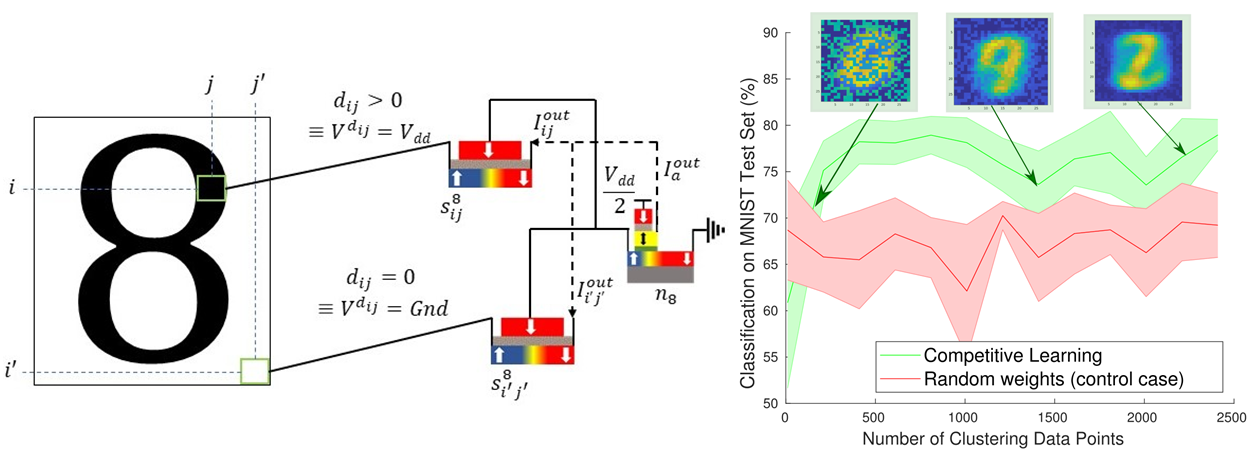

Next, we combined the DW-MTJ clustering network (the encoder) with an appropriate read-out crossbar (the decoder) and tested it on the MNIST digit recognition challenge. This system reads spikes from the encoder and maps them to the labels provided at the output of the system, adjusting conductances following a supervised rule, as in [16]. In this work, we apply the same overall method but adapt DW-MTJ synapses in the first layer intrinsically, following Section VI. We initially simulated the hard winner-take-all case, where lateral inhibition is tuned in (5) such that one neuron in the encoder fires at each training moment. The results of this demonstration are shown in Fig. 5, where performance on the dataset is plotted as a function of the total size of the set of datapoints presented for clustering, and compared to a control case where the encoder’s weights are untrained. In both cases, supervised (labeled) examples are given to the decoder network to map spikes to labels, and competing neurons cluster together. The clustering system alone receives samples prior to read-out and these are used to adapt the weights following the algorithm introduced in Section VII. As visible, while the algorithm at first performs worse than a random distribution of conductances, it quickly outperforms and reaches around classification on the task at and thereafter.

VIII-2 State-of-the-art performance on clustering tasks

Since our algorithm is shallow, it may not be suited for challenging/large classification tasks such as the MNIST task. However, it is an exciting way to perform a natural clustering operation that can otherwise be performed in a small network such as a perceptron, support vector machine, or k-means clustering system. In order to demonstrate the utility of our algorithm, we attempt two tasks which have previously been used in clustering with memristive devices:

-

•

The Fisher IRIS task. This task consists of 150 examples of flower properties collected for three species of Iris plants, with each data point using 4 different type of measured flower characteristics. In [17], this task was solved with emerging devices using a centroid learning based approach, which achieved . However, this centroid approach requires complex search and update schemes.

-

•

The Wisconsin breast cancer dataset, which consists of 398 datapoints, each consisting of many cell properties (fractal dimension, concavity, area etc) observed during an assay. The objective is to determine if cells are cancerous or benign (e.g., the clustering/classification task is binary). This task was solved using an approximation for Principal Component Analysis (PCA) using Sanger’s rule in a small crossbar with accuracy. However, Sanger’s rule requires a very complex pulse width calculation to implement, making it difficult to implement on-chip with high energy efficiency .

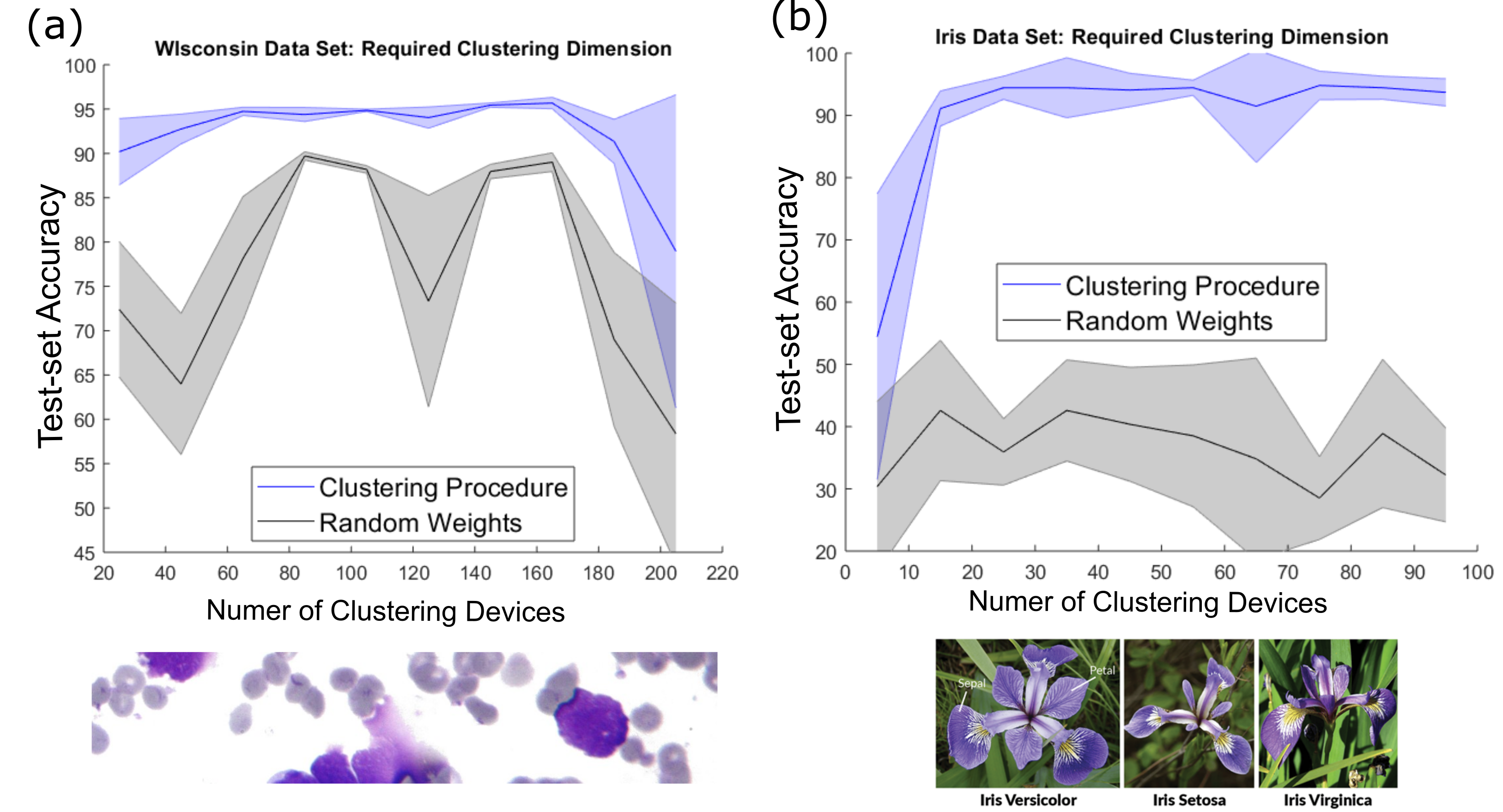

In Fig. 6(a), we demonstrate an average result of and best result of accuracy on the Wisconsin task when between and devices participate in clustering and they use the soft Winner-Take-All (WTA) formulation (e.g., multiple domain-wall neurons can fire at a given example/moment). These results assume a split of 227 training and 171 test points between the two cases, and compare favorably to results obtained using a software clustering algorithm [18]. Meanwhile, in the Fisher Iris task, average best result of and best result of is achieved for any amount of clustering units greater than and again using the soft-WTA approach (Fig. 6(a)).

In both cases, we have also contrasted to a case where the clustering layer is forced to random weights, so as to demonstrate that our system has more computational power than a perceptron alone. Note that the pictured random weights performance is somewhat restricted from best possible performances ( for Iris, for Wisconsin) as we have restricted the total number of supervised learning samples to in both cases. This highlights the fast learning capabilities provided via intrinsic clustering operations and physically enabled by the DW-MTJ device properties. Additionally, since the clustering performance we have achieved is better than hyperplane classification boundaries have when fully converged (e.g., the standard perceptron read-out), we confirm that our clustering layer captures a meaningful latent statistical interpretation of the tasks. As suggested in [19], our clustered system may contain Hidden Markov Model (HMM) representations and implement an approximate version of the Expectation Maximization (EM) algorithm.

IX Conclusion

In this work, we highlight how a set of DW-MTJ synapses connected to several competitively learning DW-MTJ neurons can implement an effective form of unsupervised learning (Clustering) and have demonstrated the fundamentals of the electrical circuit and algorithm implementation that make this possible. Through concrete use-cases on the MNIST, Fisher Iris, and Wisconsin Breast Cancer datasets, we have demonstrated the utility of this approach and its superiority to standard shallow neural network learning. In our next work, we plan to build upon these promising early results by incorporating additional bio-plausible neuronal effects such as homeostasis and resonant and fire, on the neuron level, and three-factor and other additional plasticity effects at the synapse level.

References

- [1] A. F. Vincent, N. Locatelli, Q. Wu, and D. Querlioz, “Implications of the use of magnetic tunnel junctions as synapses in neuromorphic systems,” in Proceedings of the on Great Lakes Symposium on VLSI 2017. ACM, 2017, pp. 317–320.

- [2] W. H. Brigner, N. Hassan, X. Hu, L. Jiang-Wei, O. G. Akinola, F. Garcia-Sanchez, M. Pasquale, C. H. Bennett, J. A. C. Incorvia, and J. S. Friedman, “Magnetic domain wall neuron with intrinsic leaking and lateral inhibition capability,” in Spintronics XII, vol. 11090. International Society for Optics and Photonics, 2019, p. 110903K.

- [3] D. Kaushik, U. Singh, U. Sahu, I. Sreedevi, and D. Bhowmik, “Comparing domain wall synapse with other non volatile memory devices for on-chip learning in analog hardware neural network,” arXiv preprint arXiv:1910.12919, 2019.

- [4] K. Yue, Y. Liu, R. K. Lake, and A. C. Parker, “A brain-plausible neuromorphic on-the-fly learning system implemented with magnetic domain wall analog memristors,” Science advances, vol. 5, no. 4, p. eaau8170, 2019.

- [5] N. Hassan, X. Hu, L. Jiang-Wei, W. H. Brigner, O. G. Akinola, F. Garcia-Sanchez, M. Pasquale, C. H. Bennett, J. A. C. Incorvia, and J. S. Friedman, “Magnetic domain wall neuron with lateral inhibition,” Journal of Applied Physics, vol. 124, no. 15, p. 152127, 2018.

- [6] M. R. Azghadi, B. Linares-Barranco, D. Abbott, and P. H. Leong, “A hybrid cmos-memristor neuromorphic synapse,” IEEE transactions on biomedical circuits and systems, vol. 11, no. 2, pp. 434–445, 2016.

- [7] S. Ambrogio, S. Balatti, V. Milo, R. Carboni, Z.-Q. Wang, A. Calderoni, N. Ramaswamy, and D. Ielmini, “Neuromorphic learning and recognition with one-transistor-one-resistor synapses and bistable metal oxide rram,” IEEE Transactions on Electron Devices, vol. 63, no. 4, pp. 1508–1515, 2016.

- [8] S. Kim, M. Ishii, S. Lewis, T. Perri, M. BrightSky, W. Kim, R. Jordan, G. Burr, N. Sosa, A. Ray et al., “Nvm neuromorphic core with 64k-cell (256-by-256) phase change memory synaptic array with on-chip neuron circuits for continuous in-situ learning,” in 2015 IEEE international electron devices meeting (IEDM). IEEE, 2015, pp. 17–1.

- [9] B. Rajendran and F. Alibart, “Neuromorphic computing based on emerging memory technologies,” IEEE Journal on Emerging and Selected Topics in Circuits and Systems, vol. 6, no. 2, pp. 198–211, 2016.

- [10] S. Dutta, S. A. Siddiqui, F. Büttner, L. Liu, C. A. Ross, and M. A. Baldo, “A logic-in-memory design with 3-terminal magnetic tunnel junction function evaluators for convolutional neural networks,” in 2017 IEEE/ACM International Symposium on Nanoscale Architectures (NANOARCH). IEEE, 2017, pp. 83–88.

- [11] W. H. Brigner, N. Hassan, C. H. Bennett, M. J. Marinella, F. Garcia-Sanchez, J. A. C. Incornvia, and J. S. Friedman, “CMOS-free multilayer perceptron enabled by four-terminal MTJ device,” Government Microcircuit Applications & Critical Technology Conference, March 2020.

- [12] W. H. Brigner, N. Hassan, L. Jiang-Wei, X. Hu, D. Saha, C. H. Bennett, M. J. Marinella, J. A. C. Incorvia, F. Garcia-Sanchez, and J. S. Friedman, “Shape-based magnetic domain wall drift for an artificial spintronic leaky integrate-and-fire neuron,” arXiv preprint arXiv:1905.05485, 2019.

- [13] W. H. Brigner, X. Hu, N. Hassan, C. H. Bennett, J. A. C. Incorvia, F. Garcia-Sanchez, and J. S. Friedman, “Graded-anisotropy-induced magnetic domain wall drift for an artificial spintronic leaky integrate-and-fire neuron,” IEEE Journal on Exploratory Solid-State Computational Devices and Circuits, vol. 5, no. 1, pp. 19–24, 2019.

- [14] T. Masquelier and S. J. Thorpe, “Unsupervised learning of visual features through spike timing dependent plasticity,” PLoS computational biology, vol. 3, no. 2, p. e31, 2007.

- [15] A. Sengupta, Y. Shim, and K. Roy, “Proposal for an all-spin artificial neural network: Emulating neural and synaptic functionalities through domain wall motion in ferromagnets,” IEEE Transactions on Biomedical Circuits and Systems, vol. 10, no. 6, pp. 1152–1160, 2016.

- [16] C. H. Bennett, N. Hassan, X. Hu, J. A. C. Incornvia, J. S. Friedman, and M. J. Marinella, “Semi-supervised learning and inference in domain-wall magnetic tunnel junction (DW-MTJ) neural networks,” in Spintronics XII, vol. 11090. International Society for Optics and Photonics, 2019, p. 110903I.

- [17] Y. Jeong, J. Lee, J. Moon, J. H. Shin, and W. D. Lu, “K-means data clustering with memristor networks,” Nano letters, vol. 18, no. 7, pp. 4447–4453, 2018.

- [18] A. K. Dubey, U. Gupta, and S. Jain, “Analysis of k-means clustering approach on the breast cancer wisconsin dataset,” International journal of computer assisted radiology and surgery, vol. 11, no. 11, pp. 2033–2047, 2016.

- [19] A. Tavanaei and A. S. Maida, “Training a hidden markov model with a bayesian spiking neural network,” Journal of Signal Processing Systems, vol. 90, no. 2, pp. 211–220, 2018.