Unsupervised Deformable Image Registration for Respiratory Motion Compensation in Ultrasound Images

Robotics Institute, Carnegie Mellon University, Pittsburgh, PA, USA. (abhiman2, aorekhov, choset, jgaleotti)@andrew.cmu.edu

Abstract

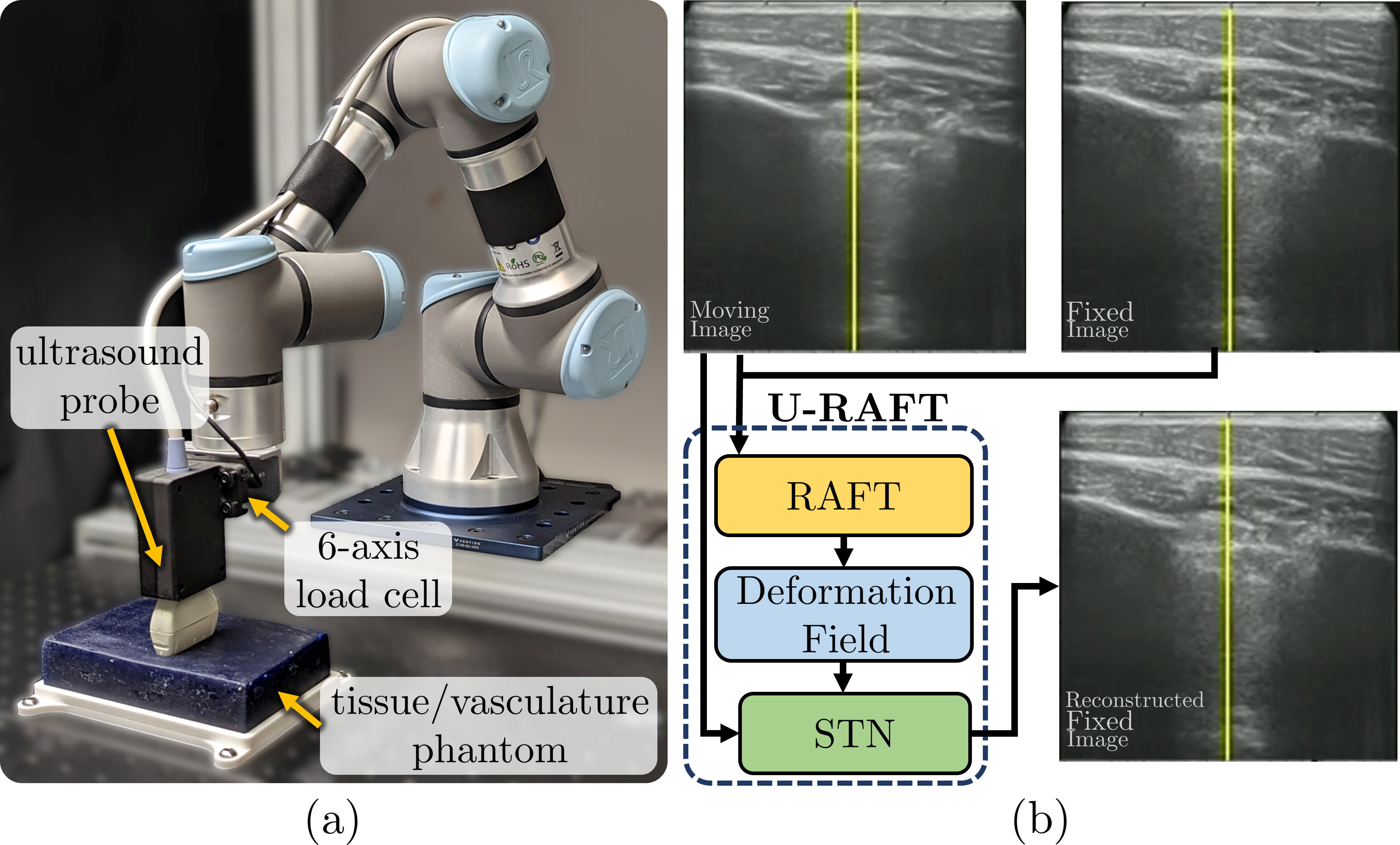

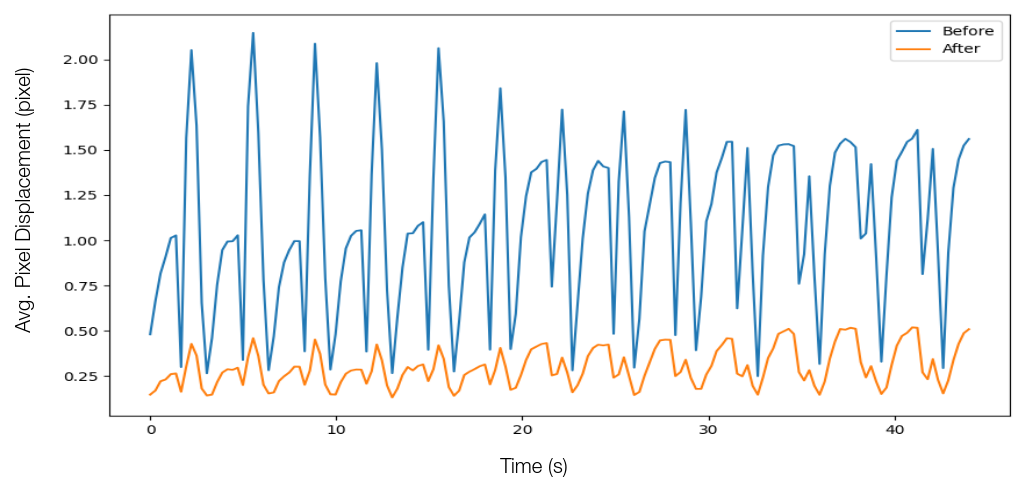

In this paper, we present a novel deep-learning model for deformable registration of ultrasound images and an unsupervised approach to training this model. Our network employs recurrent all-pairs field transforms (RAFT) and a spatial transformer network (STN) to generate displacement fields at online rates (30 Hz) and accurately track pixel movement. We call our approach unsupervised recurrent all-pairs field transforms (U-RAFT). In this work, we use U-RAFT to track pixels across a sequence of lung ultrasound images and cancel out respiratory motion. We demonstrate our method on in-vivo porcine lung videos, where our respiratory motion compensation strategy reduces average pixel movement by 76%. We believe U-RAFT is a promising tool for compensating for different kinds of motion, such as respiration and heartbeat, in ultrasound images of deformable tissue.

I Introduction

Ultrasound is a widely used modality for medical imaging due to its safety, lack of radiation exposure, and non-invasive nature. With high spatial resolution and real-time imaging capabilities, it can effectively locate malignant tissues for cancer treatment. However, in cases where the tumor is located in the lung or liver, respiratory motion can affect the accuracy of tumor localization. This can result in the exposure of healthy tissues to high levels of radiation, leading to adverse effects. Existing methods like gating and breath-hold can help with tumor localization, but they require significant patient cooperation and setup time. Accurately tracking pixels under respiratory motion and canceling the motion remains a challenging problem.

One approach to respiratory motion compensation is to use deformable registration. Deformable registration is the problem of aligning a pair of images, one referred to as the fixed image and the other as the moving image, where the two images show the same anatomy but exhibit displacement between them. For respiratory motion compensation, we can register a fixed image against the images acquired during the respiratory cycle to track pixels and compensate for their motion.

Previous approaches to respiratory motion compensation have taken several forms: [1] used independent component analysis to estimate respiratory kinetics, [2] minimized the effects of respiration by manually selecting the reference position of the diaphragm, and [3] studied the respiratory motion using principal component analysis. However, all these methods treat respiration as a rigid process, which causes drift when tracking respiratory motion. To address this limitation, our method treats respiratory motion compensation as a non-linear/deformable registration problem.

To compensate for respiratory motion, here we propose a deep learning-based deformable registration approach called Unsupervised-Recurrent All-Pairs Field Transforms (U-RAFT), where we learn to predict the movement of the pixels in an unsupervised manner.

In Section II, we present the network architecture of U-RAFT and its use for analyzing and compensating for breathing motion. In Section III, we present experimental results using U-RAFT on videos collected from live pigs. Section IV presents our conclusions and a discussion of future directions.

II Approach

This section discusses the network architecture and loss function to train the network for predicting the deformation field (DF) and the use of U-RAFT for tracking pixels to capture and compensate for respiratory motion.

II-A U-RAFT network architecture and loss function

Let $I_f$ and $I_m$ be the fixed and moving ultrasound images, respectively, from the same respiratory cycle. We denote a displacement field (DF) as $D = f_\theta(I_f, I_m)$, where $f$ is the function we seek to model with our network and the subscript $\theta$ denotes RAFT’s [4] network parameters. RAFT is a state-of-the-art optical flow estimation network, but no prior work has shown the application of RAFT to ultrasound images, which are inherently noisier than RGB images [5]. RAFT also needs labelled ground-truth displacement fields for training. Acquiring ground truth for ultrasound images is time-consuming and labor-intensive, so we seek to make the training unsupervised. We do this by passing the output of RAFT through a Spatial Transformer Network (STN) [6] to generate a reconstructed deformed image $I_d$. We then use a cyclic loss function, denoted as $\mathcal{L}_c(\cdot)$, which uses multi-scale structural similarity (MS-SSIM) to compare each reconstructed image with the image it is meant to match. We also add a smoothness loss term to regularize the flow prediction.
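As a rough illustration of the unsupervised objective described above, the following NumPy sketch warps an image with a dense displacement field (the role the STN plays) and combines a two-way image dissimilarity with a smoothness penalty. It is not the authors' implementation: the function names, the weight `lam`, the direction conventions of the two flows, and the finite-difference smoothness term are all assumptions, and mean squared error stands in for MS-SSIM to keep the example dependency-free.

```python
import numpy as np


def warp_bilinear(image, flow):
    """Warp a 2-D image (H, W) with a displacement field flow (H, W, 2).

    flow[..., 0] is the x (column) displacement and flow[..., 1] the y (row)
    displacement. Output pixel (y, x) samples the input at (y + dy, x + dx),
    with sampling locations clamped to the image border.
    """
    h, w = image.shape
    ys, xs = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")
    x = np.clip(xs + flow[..., 0], 0, w - 1)
    y = np.clip(ys + flow[..., 1], 0, h - 1)
    x0 = np.floor(x).astype(int)
    y0 = np.floor(y).astype(int)
    x1 = np.minimum(x0 + 1, w - 1)
    y1 = np.minimum(y0 + 1, h - 1)
    wx = x - x0
    wy = y - y0
    # Bilinear interpolation between the four neighbouring pixels.
    top = (1 - wx) * image[y0, x0] + wx * image[y0, x1]
    bottom = (1 - wx) * image[y1, x0] + wx * image[y1, x1]
    return (1 - wy) * top + wy * bottom


def smoothness_loss(flow):
    """Mean squared forward difference of the flow along both image axes."""
    dy = flow[1:, :, :] - flow[:-1, :, :]
    dx = flow[:, 1:, :] - flow[:, :-1, :]
    return float(np.mean(dx ** 2) + np.mean(dy ** 2))


def mse(a, b):
    """Stand-in dissimilarity; the paper uses MS-SSIM instead."""
    return float(np.mean((a - b) ** 2))


def cyclic_loss(fixed, moving, flow_fwd, flow_bwd, dissimilarity=mse, lam=0.1):
    """Two-way reconstruction loss plus smoothness (hypothetical weighting).

    Assumption: flow_fwd warps `moving` toward `fixed`, and flow_bwd warps
    `fixed` toward `moving`.
    """
    warped_moving = warp_bilinear(moving, flow_fwd)
    warped_fixed = warp_bilinear(fixed, flow_bwd)
    data = dissimilarity(warped_moving, fixed) + dissimilarity(warped_fixed, moving)
    smooth = smoothness_loss(flow_fwd) + smoothness_loss(flow_bwd)
    return data + lam * smooth


# Toy usage: a horizontal intensity ramp and a copy shifted by one pixel.
fixed = np.tile(np.arange(8.0), (6, 1))
moving = fixed - 1.0
flow_fwd = np.zeros((6, 8, 2))
flow_fwd[..., 0] = 1.0   # sample one pixel to the right
flow_bwd = -flow_fwd     # inverse of a constant shift
print("loss with correct flows:", cyclic_loss(fixed, moving, flow_fwd, flow_bwd))
print("loss with zero flows:   ", cyclic_loss(fixed, moving, 0 * flow_fwd, 0 * flow_bwd))
```

In a training setting the same structure would be written with differentiable operations so that gradients of the loss reach the network parameters; the NumPy version only shows how the terms fit together. The loss with the correct flows is small but nonzero because samples that fall outside the image are clamped to the border.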

II-B Respiratory compensation using U-RAFT

We use U-RAFT to track the displacement of every pixel in the video sequence and thereby capture the periodic movement of the tissue during respiratory motion. The first frame in the video sequence is the fixed image $I_f$, and the remaining frames are the moving images $I_m^t$. For the $t$-th frame in the video sequence, we calculate the net displacement of the pixel at location $(x, y)$ as $d_t(x, y) = \lVert D_t(x, y) \rVert$, where $D_t$ is the displacement field predicted by U-RAFT between $I_f$ and $I_m^t$ and $\lVert \cdot \rVert$ denotes the vector norm. We then pass the sequence of moving images to the STN along with $D_t$ to retrieve the static sequence of images. Furthermore, we compute the Fast Fourier Transform (FFT) of $d_t(x, y)$ over time to calculate the per-pixel displacement frequency.
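The displacement-magnitude and FFT steps above can be sketched as follows. This is a minimal example under stated assumptions: the helper names are hypothetical, the Euclidean norm is assumed for the per-pixel magnitude, and the displacement fields are synthetic (a single pixel oscillating at 0.3 Hz, sampled at 30 frames per second) rather than network outputs.

```python
import numpy as np


def net_displacement(flows):
    """Per-pixel displacement magnitude for each frame.

    flows has shape (T, H, W, 2); the result has shape (T, H, W).
    Assumption: the Euclidean norm of the 2-D displacement vector is used.
    """
    return np.linalg.norm(flows, axis=-1)


def dominant_frequency_hz(signal, fps):
    """Frequency (Hz) of the largest non-DC peak in the spectrum of a 1-D signal."""
    signal = np.asarray(signal, dtype=float)
    spectrum = np.abs(np.fft.rfft(signal - signal.mean()))
    freqs = np.fft.rfftfreq(signal.size, d=1.0 / fps)
    # Skip bin 0 (DC) so that a constant offset cannot win.
    return float(freqs[1:][np.argmax(spectrum[1:])])


# Synthetic example: one pixel moving away from the reference frame and back.
fps = 30.0
t = np.arange(300) / fps                       # 10 seconds of video
flows = np.zeros((t.size, 1, 1, 2))
flows[:, 0, 0, 1] = 2.0 * (1.0 - np.cos(2.0 * np.pi * 0.3 * t))

d = net_displacement(flows)                    # shape (300, 1, 1)
freq = dominant_frequency_hz(d[:, 0, 0], fps)
print(f"dominant frequency: {freq:.2f} Hz = {60.0 * freq:.0f} cycles per minute")
```

Because the magnitude discards the sign of the motion, the choice of reference frame matters: if the tissue passed through its reference position in mid-cycle, the magnitude signal would peak at twice the breathing frequency. The synthetic signal here starts at one extreme of the cycle, so the peak sits at the breathing frequency itself.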

III Results

III-A Experimental setup and training details

We collected ultrasound images from three live anesthetized pigs in a controlled lab setting under the supervision of clinicians. Vascular ultrasound images from two of the pigs were used for training U-RAFT, while lung ultrasound images from the third pig were used for testing. A robotic ultrasound system was used for data collection, comprising a UR3e manipulator, a Fukuda Denshi portable point-of-care ultrasound scanner, and a six-axis force/torque sensor. The training dataset was collected while the robot palpated the tissue with a sinusoidal force profile, with a minimum and maximum force of 2 N and 10 N, respectively, while the testing dataset was collected with the ultrasound probe held static.

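Table I: Mean pixel displacement over all frames before and after respiratory compensation.

| Dataset | Mean displacement before compensation | Mean displacement after compensation |
|---|---|---|
| Pig 1 | 2.09 | 0.32 |
| Pig 2 | 2.32 | 0.65 |
| Pig 3 | 1.75 | 0.49 |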

III-B Analyzing and canceling respiratory motion with U-RAFT

Table I provides the mean displacement of pixels over all frames before and after applying respiratory compensation. Figure 2 shows the net displacement of one pixel near a critical landmark over the whole video sequence, before and after compensation. Furthermore, we calculate the respiration rate, via the FFT, by tracking pixels near the rib cage in the ultrasound images. The respiration rate estimated in this way is consistent with the breathing rate recorded during the experiment.
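As a quick arithmetic check, the values in Table I can be related to the 76% reduction quoted in the abstract. The sketch below assumes that the two numeric columns are the mean displacement before and after compensation, and that the headline figure is the mean of the per-dataset relative reductions; the paper does not state how the average was formed, so this is one plausible reading.

```python
# Mean pixel displacement (before, after) compensation, copied from Table I.
table_1 = {
    "Pig 1": (2.09, 0.32),
    "Pig 2": (2.32, 0.65),
    "Pig 3": (1.75, 0.49),
}


def percent_reduction(before, after):
    """Relative reduction in displacement, in percent."""
    return 100.0 * (before - after) / before


reductions = {name: percent_reduction(b, a) for name, (b, a) in table_1.items()}
mean_reduction = sum(reductions.values()) / len(reductions)

for name, value in reductions.items():
    print(f"{name}: {value:.1f}% reduction")
print(f"Mean over datasets: {mean_reduction:.1f}%")
```

Under these assumptions the per-dataset reductions are roughly 85%, 72%, and 72%, and their mean rounds to 76%.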


IV Conclusions and Future Work

In this work, we proposed a new deep-learning model for deformable registration of ultrasound images, which we call U-RAFT. The model enables accurate pixel tracking at online rates, which makes it suitable for compensating for tissue motion, such as motion due to respiration. We demonstrated the effectiveness of the proposed approach through experiments on multiple in-vivo porcine lung videos, where it achieved a significant reduction in pixel movement and the respiration rate could be estimated from the images alone. These results suggest that the proposed model has potential for helping interpret ultrasound images in robot-assisted medical interventions.

References

- [1] G. Renault, F. Tranquart, V. Perlbarg, A. Bleuzen, A. Herment, and F. Frouin, “A posteriori respiratory gating in contrast ultrasound for assessment of hepatic perfusion,” Physics in Medicine & Biology, vol. 50, no. 19, p. 4465, 2005.

- [2] M. Averkiou, M. Lampaskis, K. Kyriakopoulou, D. Skarlos, G. Klouvas, C. Strouthos, and E. Leen, “Quantification of tumor microvascularity with respiratory gated contrast enhanced ultrasound for monitoring therapy,” Ultrasound in Medicine & Biology, vol. 36, no. 1, pp. 68–77, 2010.

- [3] S. Mulé, N. Kachenoura, O. Lucidarme, A. De Oliveira, C. Pellot-Barakat, A. Herment, and F. Frouin, “An automatic respiratory gating method for the improvement of microcirculation evaluation: application to contrast-enhanced ultrasound studies of focal liver lesions,” Physics in Medicine & Biology, vol. 56, no. 16, p. 5153, 2011.

- [4] Z. Teed and J. Deng, “RAFT: Recurrent all-pairs field transforms for optical flow,” in Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part II 16. Springer, 2020, pp. 402–419.

- [5] C. Che, T. S. Mathai, and J. Galeotti, “Ultrasound registration: A review,” Methods, vol. 115, pp. 128–143, 2017.

- [6] M. Jaderberg, K. Simonyan, A. Zisserman, and K. Kavukcuoglu, “Spatial transformer networks,” in Advances in Neural Information Processing Systems, C. Cortes, N. Lawrence, D. Lee, M. Sugiyama, and R. Garnett, Eds., vol. 28. Curran Associates, Inc., 2015.