Unsupervised Domain Adaptive Graph Classification

Abstract

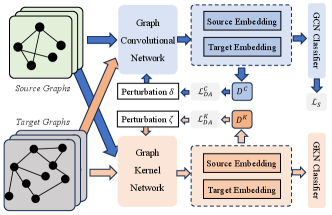

Despite the remarkable accomplishments of graph neural networks (GNNs), they typically rely on task-specific labels, posing potential challenges in terms of their acquisition. Existing work have been made to address this issue through the lens of unsupervised domain adaptation, wherein labeled source graphs are utilized to enhance the learning process for target data. However, the simultaneous exploration of graph topology and reduction of domain disparities remains a substantial hurdle. In this paper, we introduce the Dual Adversarial Graph Representation Learning (DAGRL), which explore the graph topology from dual branches and mitigate domain discrepancies via dual adversarial learning. Our method encompasses a dual-pronged structure, consisting of a graph convolutional network branch and a graph kernel branch, which enables us to capture graph semantics from both implicit and explicit perspectives. Moreover, our approach incorporates adaptive perturbations into the dual branches, which align the source and target distribution to address domain discrepancies. Extensive experiments on a wild range graph classification datasets demonstrate the effectiveness of our proposed method.

Index Terms— Adversarial Learning, Dual Graph Representation Learning, Unsupervised Domain Adaptive Learning

1 Introduction

Graph neural networks (GNNs) have achieved remarkable accomplishments in various domains such as social network analysis [1, 2], time series prediction [3, 4], and protein property prediction [5, 6]. These networks leverage the rich relational information present in graph-structured data to make accurate predictions and learn meaningful representations. However, the success of GNNs often depends on the task-specific labels, which can be costly and slow to obtain.

To address the challenge of label acquisition, researchers have explored unsupervised domain adaptation techniques for GNNs [7, 8, 9]. These techniques aim to transfer knowledge learned from labeled source graphs to improve the learning process for target data, which lacks labeled information. By aligning the distribution of source and target data, unsupervised domain adaptation enabling the application of GNNs in scenarios where labeled target data is scarce.

Existing work on unsupervised domain adaptation for GNNs faces significant challenges. The first is how to efficiently extract source and target topological features with limited labeled data. Simply utilizing implicit topological information is not enough for predicting unlabeled target graphs. The second challenge is the domain discrepancy. The model trained on the source domain cannot directly apply to the target domain with different data distributions. Moreover, the extracted topological information may interfere with the domain alignment. Therefore, a third challenge is how to extract graph topology exploration and reduce domain discrepancy alternately.

In this paper, we propose a novel approach called Dual Adversarial Graph Representation Learning (DAGRL) to tackle these challenges. DAGRL introduces a dual-brunch graph structure, comprising a graph convolutional network branch and a graph kernel branch. This dual-branch architecture enables us to capture graph semantics from both implicit and explicit perspectives, leveraging both the local neighborhood information and global structural properties. Moreover, our method incorporates adaptive perturbations into the dual branches to align the source and target distributions and address domain discrepancies. By perturbing the graph representations in a controlled manner, DAGRL encourages the network to learn robust and domain-invariant features. This adversarial learning framework allows us to jointly optimize the graph topology exploration and domain adaptation iteratively, resulting in improved representation learning for target graphs. To evaluate the effectiveness of our proposed DAGRL method, we conduct extensive experiments on a wide range of graph classification datasets. The experimental results demonstrate the superiority of our approach compared to existing state-of-the-art methods, highlighting its potential to enhance graph representation learning in various domains. The main contributions can be summarized as follows:

-

•

We propose a novel technique DAGRL for graph classification that adapts to different domains. DAGRL contains a graph convolutional network branch and a graph kernel network branch to capture topological information from different perspectives.

-

•

We introduce adaptive perturbations that align the source and target domains by perturbing the graph representations in a controlled manner, reducing domain discrepancy. Besides, we present an adversarial learning framework that alternately optimizes graph topology exploration and domain adaptation, improving the representation learning performance for target graphs.

-

•

Extensive experiments on various graph classification datasets demonstrate the effectiveness of the proposed DAGRL.

2 METHODOLOGY

2.1 Problem Definition

A graph is denoted as , where is the set of nodes and is the set of edges. The graph node feature is , each row represents the feature vector of node and is the dimension of node features, is the number of nodes. In our settings, we are given a labeled source domain containing labeled samples and an unlabeled target domain with examples. and sharing the same label space but have distinct data distributions in the graph space. Our purpose is to train the graph classification model on the labeled and unlabeled to obtain the competitive result on the target domain.

2.2 Dual Graph Branches

Graph Convolutional Network Branch. We use a graph convolutional network to implicitly capture the topology of the graph. For each node, we combine the embeddings of its neighbors from the previous layer. Then we update the node embedding by merging it with the combined neighbor embedding. The embedding of node at layer is :

where denotes the neighbors of . and denote the aggregation and combination operations parameterized by at the -th layer, respectively. Then, we utilize the function to summarize the node representations, and introduce a multilayer perception (MLP) classifier for final prediction:

| (1) |

where denotes the graph-level representation and is final prediction.

Graph Kernel Network Branch. Given two graph samples and , graph kernels calculate their similarity by comparing their local-substructure using a kernel function. In formulation,

| (2) |

where represents the local substructure centered at node and is a pre-defined similarity measurement. We omit and leave in Eq. 2 for simplicity.

In this part, we apply the Weisfeiler-Lehmah (WL) Subtree Kernels as the default kernel branch. WL subtree kernels compare all subtree patterns with limited depth rooted at every node. Given the maximum depth , we have:

| (3) | ||||

where is derived by counting matched subtree pairs of depth rooted at node and , respectively. We would assign the source label to the similar target graphs.

| Methods | M0M1 | M1M0 | M0M2 | M2M0 | M0M3 | M3M0 | M1M2 | M2M1 | M1M3 | M3M1 | M2M3 | M3M2 | Avg. |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| WL subtree | 74.9 | 74.8 | 67.3 | 69.9 | 57.8 | 57.9 | 73.7 | 80.2 | 60.0 | 57.9 | 70.2 | 73.1 | 68.1 |

| GCN | 71.1 | 70.4 | 62.7 | 69.0 | 57.7 | 59.6 | 68.8 | 74.2 | 53.6 | 63.3 | 65.8 | 74.5 | 65.9 |

| GIN | 72.3 | 68.5 | 64.1 | 72.1 | 56.6 | 61.1 | 67.4 | 74.4 | 55.9 | 67.3 | 62.8 | 73.0 | 66.3 |

| CIN | 66.8 | 69.4 | 66.8 | 60.5 | 53.5 | 54.2 | 57.8 | 69.8 | 55.3 | 74.0 | 58.9 | 59.5 | 62.2 |

| GMT | 73.6 | 75.8 | 65.6 | 73.0 | 56.7 | 54.4 | 72.8 | 77.8 | 62.0 | 50.6 | 64.0 | 63.3 | 65.8 |

| CDAN | 73.8 | 74.1 | 68.9 | 71.4 | 57.9 | 59.6 | 70.0 | 74.1 | 60.4 | 67.1 | 59.2 | 63.6 | 66.7 |

| ToAlign | 74.0 | 72.7 | 69.1 | 65.2 | 54.7 | 73.1 | 71.7 | 77.2 | 58.7 | 73.1 | 61.5 | 62.2 | 67.8 |

| MetaAlign | 66.7 | 51.4 | 57.0 | 51.4 | 46.4 | 51.4 | 57.0 | 66.7 | 46.4 | 66.7 | 46.4 | 57.0 | 55.4 |

| DEAL | 76.3 | 72.6 | 69.8 | 73.3 | 58.3 | 71.2 | 77.9 | 80.8 | 64.1 | 74.1 | 70.6 | 74.9 | 72.0 |

| CoCo | 77.7 | 76.6 | 73.3 | 74.5 | 66.6 | 74.3 | 77.3 | 80.8 | 67.4 | 74.1 | 68.9 | 77.5 | 74.1 |

| DAGRL | 77.9 | 76.8 | 72.8 | 75.3 | 66.7 | 75.4 | 77.8 | 81.0 | 67.7 | 75.3 | 71.1 | 77.7 | 74.6 |

| Methods | T0T1 | T1T0 | T0T2 | T2T0 | T0T3 | T3T0 | T1T2 | T2T1 | T1T3 | T3T1 | T2T3 | T3T2 | Avg. |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| WL subtree | 65.3 | 51.1 | 69.6 | 52.8 | 53.1 | 54.4 | 71.8 | 65.4 | 60.3 | 61.9 | 57.4 | 76.3 | 61.6 |

| GCN | 64.2 | 50.3 | 67.9 | 50.4 | 52.2 | 53.8 | 68.7 | 61.9 | 59.2 | 51.4 | 54.9 | 76.3 | 59.3 |

| GIN | 67.8 | 51.0 | 77.5 | 54.3 | 56.8 | 54.5 | 78.3 | 63.7 | 56.8 | 53.3 | 56.8 | 77.1 | 62.3 |

| CIN | 67.8 | 50.3 | 78.3 | 54.5 | 56.8 | 54.5 | 78.3 | 67.8 | 59.0 | 67.8 | 56.8 | 78.3 | 64.2 |

| GMT | 67.8 | 50.0 | 78.4 | 50.1 | 56.8 | 50.7 | 78.3 | 67.8 | 56.8 | 67.8 | 56.4 | 78.1 | 63.3 |

| CDAN | 69.9 | 55.2 | 78.3 | 56.0 | 59.5 | 56.6 | 78.3 | 68.5 | 61.7 | 68.1 | 61.0 | 78.3 | 66.0 |

| ToAlign | 68.2 | 58.5 | 78.4 | 58.8 | 58.5 | 53.8 | 78.8 | 67.1 | 64.4 | 68.8 | 57.9 | 78.4 | 66.0 |

| MetaAlign | 65.7 | 57.5 | 78.0 | 58.5 | 63.9 | 52.2 | 78.8 | 67.1 | 62.3 | 67.5 | 56.8 | 78.4 | 65.6 |

| DEAL | 73.9 | 59.4 | 68.2 | 58.2 | 53.8 | 58.7 | 69.2 | 66.2 | 63.5 | 67.4 | 61.1 | 77.8 | 64.8 |

| CoCo | 69.9 | 59.8 | 78.8 | 59.0 | 62.3 | 59.0 | 78.4 | 66.8 | 65.0 | 68.8 | 61.2 | 78.4 | 67.3 |

| DAGRL | 74.2 | 60.1 | 78.8 | 59.3 | 62.7 | 59.4 | 78.1 | 67.2 | 65.7 | 69.3 | 62.8 | 79.2 | 68.1 |

2.3 Dual Adversarial Perturbation Learning

In this part, our goal is to use perturbations on source graphs to mitigate the impact of domain inconsistencies on feature representations with target semantics. We employ adversarial training to identify promising perturbation directions. Taking the GCN branch as an example, we obtain feature representations and label predictions for both the source and target domains. We train a domain discriminator on each branch to differentiate between the two domains. For each source graph , we introduce noise to the node embedding . The direction of perturbation is determined through a minimax optimization process, and is updated based on the gradient descent direction for each source graph .

| (4) |

where is the parameters of the domain discriminator and is the maximum of perturbation size. is the gradient of the loss for with respect to the perturbation . Similarity, we can get the perturbation on the GKN branch by optimize the minimax objective, i.e., .

2.4 Learning Framework

The primary training objective of DAGRL combines adversarial perturbation loss and target data classification loss. Additionally, minimizing the expected source error for labeled source samples is essential.

| (5) |

where denotes the representations of source data under perturbations. Consequently, to update the network parameters, we minimize the ultimate objective as follows:

| (6) |

where and are hyper-parameters to balance the domain adversarial loss and classification loss. Meanwhile, we need to update the perturbations of source samples, i.e., and , and then update the model parameters interactively.

| Methods | M0M1 | M1M0 | M0M2 | M2M0 | M0M3 | M3M0 | M1M2 | M2M1 | M1M3 | M3M1 | M2M3 | M3M2 | Avg. |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| DAGRL/P1 | 76.3 | 72.6 | 64.7 | 73.2 | 64.1 | 73.3 | 76.4 | 78.6 | 63.7 | 73.4 | 68.8 | 74.6 | 71.6 |

| DAGRL/P2 | 76.7 | 73.9 | 65.4 | 73.8 | 65.2 | 73.4 | 75.9 | 78.1 | 64.5 | 74.4 | 67.2 | 75.9 | 72.0 |

| DAGRL-GIN | 77.0 | 74.2 | 64.6 | 73.3 | 64.3 | 74.1 | 76.3 | 79.5 | 66.8 | 75.2 | 68.7 | 76.0 | 72.5 |

| DAGRL-GKN | 76.8 | 73.7 | 65.3 | 74.1 | 65.2 | 74.7 | 76.7 | 78.6 | 66.4 | 74.3 | 67.7 | 77.5 | 72.6 |

| DAGRL | 77.9 | 76.8 | 72.8 | 75.3 | 66.7 | 75.4 | 77.8 | 81.0 | 67.7 | 75.3 | 71.1 | 77.7 | 74.6 |

3 Experiments

3.1 Datasets and Baselines

Datasets. To evaluate the proposed method’s effectiveness, we conducted experiments using our method on several real-world datasets: Mutagenicity (M) [10], and Tox21 111https://tripod.nih.gov/tox21/challenge/data.jsp. (T) from the TUDataset [11]. Mutagenicity dataset consists of 4337 molecular structures and their corresponding Ames test data. Tox21 dataset is used to assess the predictive ability of models in detecting compound interferences. To address domain distribution variations within each dataset, we divided them into four sub-datasets: 0, 1, 2, and 3 ( represents each respective dataset) based on edge density [8].

Baselines. To evaluate the effectiveness of our method, we compare the proposed method with a large number of state-of-the-art methods, including five graph approaches (WL subtree [12], GCN [13], GIN [14], CIN [15] and GMT [16]), and three recent domain adaptation methods (CDAN [17], ToAlign [18], MetaAlign [19]) and two graph domain adaptation methods (DEAL [7], CoCo [8]).

3.2 Implementation Details

In our implementation, a two-layer GIN [14] is employed in the GCN branch, while a two-layer network with the Weisfeiler-Lehman (WL) kernel [12] is used in the GKN branch. The Adam optimizer with a learning rate of is used by default. Both the embedding dimension of hidden layers and the batch size are set to 64.

3.3 Performance Comparison

Table 1 and 2 display the performance of graph classification in different unsupervised domain adaptation scenarios. The results indicate the following observations: 1) Domain adaptation methods outperform graph kernel and GNN methods, suggesting that current graph classification models lack transfer learning capabilities. Therefore, an effective domain adaptive framework is crucial for graph classification. 2) While domain adaptation methods achieve competitive results in simple transfer tasks, they struggle to make significant progress in challenging transfer tasks compared to GIN. This difficulty is attributed to the complexity of acquiring graph representations, making direct application of current domain adaptation approaches to GCNs unwise. 3) DAGRL achieves superior performance compared to all baselines in most cases. This improvement is attributed to two key factors: (i) Dual branches (GCN and GKN) enhances graph representation in situations with limited labels. (ii) Adversarial perturbation learning facilitates effective domain alignment.

3.4 Ablation Study

To evaluate the impact of each component in our DAGRL, we introduce several model variants as follows: 1) DAGRL/P1: It removes the perturbation on the GCN branch; 2) DAGRL/P2: It removes the perturbation on the GKN branch; 3) DAGRL-GIN: It uses two different GINs to generate graph representations; 4) DAGRL-GKN: It uses two different GKN to generate graph representations.

We conducted performance comparisons on Mutagenicity, and the results are presented in Table 3. Based on the observations from the table, the following findings can be summarized: 1) DAGRL/P1 and DAGRL/P2 exhibit worse performance compared to DAGRL. This confirms that adaptive adversarial perturbation learning is effective in achieving domain alignment. 2) Both DAGRL-GIN and DAGRL-GKN produce similar results but perform worse than DAGRL. This suggests that relying solely on ensemble learning in either implicit or explicit manner does not significantly enhance graph representation learning when labels are scarce.

4 Conclusion

We introduce the practical problem of unsupervised domain adaptive graph classification named DAGRL. DAGRL is proposed with two branches, i.e., a graph convolutional network branch and a graph kernel network branch, which explores graph topological information in implicit and explicit manners, respectively. Then, we introduce the dual adaptive adversarial perturbation learning to minimize the domain discrepancy. Throughout the training process, we iteratively update the perturbation direction and model parameters, enabling us to align domain distributions and learn graph representations more accurately. Extensive experiments valdate the effectiveness of the proposed DAGRL.

References

- [1] H. Chen, H. Yin, X. Sun, T. Chen, B. Gabrys, and K. Musial, “Multi-level graph convolutional networks for cross-platform anchor link prediction,” in KDD, 2020, pp. 1503–1511.

- [2] F. Gao, G. Wolf, and M. Hirn, “Geometric scattering for graph data analysis,” in ICML, 2019, pp. 2122–2131.

- [3] N. Yin, L. Shen, H. Xiong, B. Gu, C. Chen, X.-S. Hua, S. Liu, and X. Luo, “Messages are never propagated alone: Collaborative hypergraph neural network for time-series forecasting,” IEEE Transactions on Pattern Analysis & Machine Intelligence, no. 01, pp. 1–15, 2023.

- [4] N. Yin, F. Feng, Z. Luo, X. Zhang, W. Wang, X. Luo, C. Chen, and X.-S. Hua, “Dynamic hypergraph convolutional network,” in 2022 IEEE 38th International Conference on Data Engineering (ICDE). IEEE, 2022, pp. 1621–1634.

- [5] N. Yin, L. Shen, M. Wang, X. Luo, Z. Luo, and D. Tao, “Omg: Towards effective graph classification against label noise,” TKDE, 2023.

- [6] N. Yin and Z. Luo, “Generic structure extraction with bi-level optimization for graph structure learning,” Entropy, vol. 24, no. 9, p. 1228, 2022.

- [7] N. Yin, L. Shen, B. Li, M. Wang, X. Luo, C. Chen, Z. Luo, and X.-S. Hua, “Deal: An unsupervised domain adaptive framework for graph-level classification,” in ACMMM, 2022, pp. 3470–3479.

- [8] N. Yin, L. Shen, M. Wang, L. Lan, Z. Ma, C. Chen, X.-S. Hua, and X. Luo, “Coco: A coupled contrastive framework for unsupervised domain adaptive graph classification,” in ICML, 2023.

- [9] J. Pang, Z. Wang, J. Tang, M. Xiao, and N. Yin, “Sa-gda: Spectral augmentation for graph domain adaptation,” in ACMMM, 2023.

- [10] J. Kazius, R. McGuire, and R. Bursi, “Derivation and validation of toxicophores for mutagenicity prediction,” Journal of medicinal chemistry, vol. 48, no. 1, pp. 312–320, 2005.

- [11] C. Morris, N. M. Kriege, F. Bause, K. Kersting, P. Mutzel, and M. Neumann, “Tudataset: A collection of benchmark datasets for learning with graphs,” in ICMLW, 2020.

- [12] N. Shervashidze, P. Schweitzer, E. J. Van Leeuwen, K. Mehlhorn, and K. M. Borgwardt, “Weisfeiler-lehman graph kernels,” Journal of Machine Learning Research, vol. 12, no. 9, pp. 2539–2561, 2011.

- [13] T. N. Kipf and M. Welling, “Semi-supervised classification with graph convolutional networks,” in ICLR, 2017.

- [14] K. Xu, W. Hu, J. Leskovec, and S. Jegelka, “How powerful are graph neural networks?” in ICLR, 2019.

- [15] C. Bodnar, F. Frasca, N. Otter, Y. G. Wang, P. Liò, G. F. Montufar, and M. Bronstein, “Weisfeiler and lehman go cellular: Cw networks,” in NeurIPS, 2021, pp. 2625–2640.

- [16] J. Baek, M. Kang, and S. J. Hwang, “Accurate learning of graph representations with graph multiset pooling,” in ICLR, 2021.

- [17] M. Long, Z. Cao, J. Wang, and M. I. Jordan, “Conditional adversarial domain adaptation,” 2018, pp. 1647–1657.

- [18] G. Wei, C. Lan, W. Zeng, Z. Zhang, and Z. Chen, “Toalign: Task-oriented alignment for unsupervised domain adaptation,” in NeurIPS, 2021, pp. 13 834–13 846.

- [19] G. Wei, C. Lan, W. Zeng, and Z. Chen, “Metaalign: Coordinating domain alignment and classification for unsupervised domain adaptation,” in CVPR, 2021, pp. 16 643–16 653.