Unsupervised Learning of Debiased Representations with Pseudo-Attributes

Abstract

Dataset bias is a critical challenge in machine learning since it often leads to a negative impact on a model due to the unintended decision rules captured by spurious correlations. Although existing works often handle this issue based on human supervision, the availability of the proper annotations is impractical and even unrealistic. To better tackle the limitation, we propose a simple but effective unsupervised debiasing technique. Specifically, we first identify pseudo-attributes based on the results from clustering performed in the feature embedding space even without an explicit bias attribute supervision. Then, we employ a novel cluster-wise reweighting scheme to learn debiased representation; the proposed method prevents minority groups from being discounted for minimizing the overall loss, which is desirable for worst-case generalization. The extensive experiments demonstrate the outstanding performance of our approach on multiple standard benchmarks, even achieving the competitive accuracy to the supervised counterpart. The source code is available at our project page111https://github.com/skynbe/pseudo-attributes.

1 Introduction

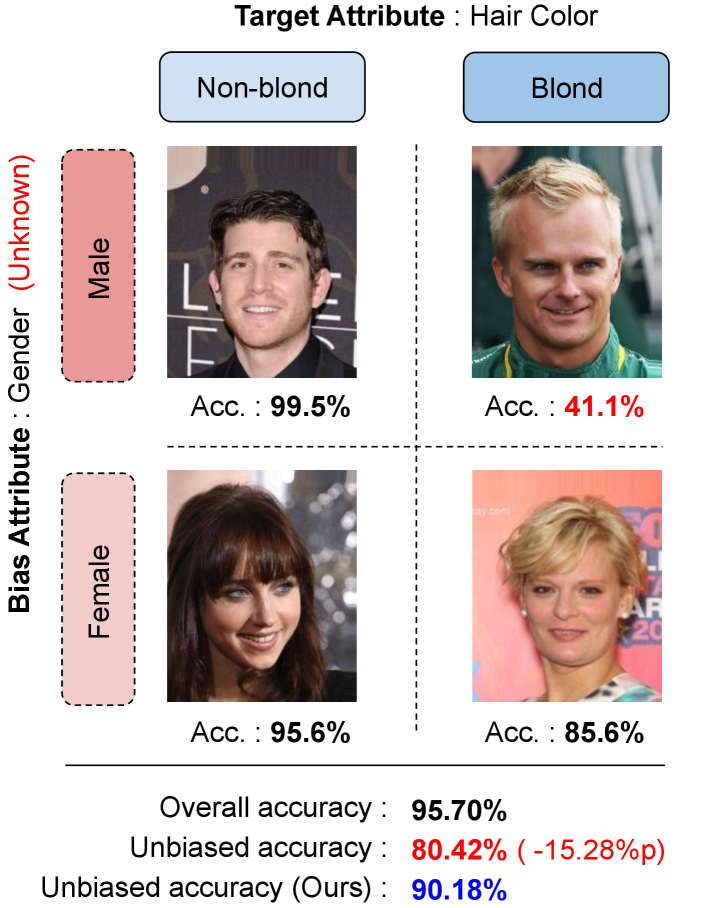

Deep neural networks have achieved impressive performance by minimizing the average loss on training datasets. Although we typically adopt the empirical risk minimization framework as a training objective, it is sometimes problematic due to dataset bias leading to significant degradation of worse-case generalization performance as discussed in [2, 37, 18, 12, 38]. This is because models do not always learn what we expect, but, to the contrary, rather capture unintended decision rules from spurious correlations. For example, on the Colored MNIST dataset [21, 25, 1], where each digit is highly correlated to a certain color, a network often learns the color patterns in images, not the digit information, while ignoring few conflicting samples. Such an unintended rule works well on most of the training samples but incurs unexpected worst-case errors for the examples in minority groups, which makes the model unable to generalize on test environments with distribution shifts or robustness constraints. Figure 1 illustrates the problem that we mainly deal with in this paper.

To mitigate the bias issue, learning debiased representations from a biased dataset has received growing attention from machine learning community [21, 34, 26, 28, 14, 1, 3]. However, most previous works rely on the explicit supervision or prior knowledge under the assumption of the presence of dataset bias. This setting is problematic because it is challenging to identify what kinds of bias exist and which attributes involve spurious correlations without thorough analysis of model and dataset. Note that, even with the information about dataset bias, the relevant annotations over all training examples is challenging. Contrary to the supervised approaches, [25, 30] tackle a more challenging setting, where the bias information is unavailable, via failure-based learning or subgroup-based penalizing.

This paper presents a simple but effective unsupervised debiasing technique via feature clustering and cluster re-weighting. We first observe that the examples with the same label for a certain attribute other than the target attribute tend to have similar representations in the feature space by the model trained sufficiently. Based on this observation, we estimate bias pseudo-attributes in an unsupervised manner from the clustering results within each class. To exploit the bias pseudo-attributes for learning debiased representations, we introduce a reweighting scheme for the corresponding clusters, where each cluster has an importance weight depending on its size and task-specific accuracy. This strategy encourages the minority clusters to participate in the optimization process actively, which is critical to improving worst-group generalization. Despite its simplicity, our method turns out to be effective for debiasing without any supervision of bias information; it is even comparable to the supervised debiasing method. The main contributions of our work are summarized below:

-

We propose a simple but effective unsupervised debiasing approach, which requires no explicit supervision about spurious correlations across attributes.

-

We introduce a technique to learn debiased representations by identifying bias pseudo-attributes via clustering and reweighting the corresponding clusters based on both their size and target loss.

-

We provide extensive experimental results and achieve outstanding performance in terms of unbiased and worst-group accuracies, which are even as competitive as supervised debiasing methods.

2 Related Work

2.1 Bias in computer vision tasks

Real-world datasets are inevitably biased due to their insufficiently controlled collection process, and consequently, deep neural networks often capture unintended correlations between the true labels and the spuriously correlated ones. Measuring and mitigating the potential risks posed by dataset or algorithmic bias has been extensively investigated in various computer vision tasks [6, 31, 21, 16, 33, 3, 36]. For example, VQA models frequently exploits statistical regularities between answer occurrences and patterns of questions while ignoring visual information [3, 7]. Semantic segmentation models typically take advantage of scene context for pixel-level predictions of semantic labels [6]. To prevent using undesirable correlation in biased datasets, existing approaches often rely on human supervision for bias annotations and present several technical directions such as data augmentation [13, 39], model ensembles [3, 7], and statistical regularizations [1]. Such supervised debiasing techniques have been applied to various computer vision tasks by exploiting known application-specific bias information, including uni-modality of the dataset in visual question answering [3], stereotype textures in image recognition [13], temporal invariance in action recognition [20], and demographic information in facial image recognition [26, 39, 35].

2.2 Handling distribution shifts

Distribution shift has recently emerged as a critical challenge in machine learning, where the goal of the optimization is to learn a robust model in a test environment with a different distribution. Distributionally robust optimization (DRO) [2, 11, 9, 17] has been proposed to improve the worst-case generalization performance over a family of target distributions, and has provided theoretical background of Group DRO [26] and its variation [30]. However, the objective of DRO often leads to an overly conservative model and results in performance degeneration on unseen environments [10, 15]. To relax the constraints for the uncertainty set of test distributions, some approaches pose additional assumptions. For instance, the dataset consists of multiple groups with the shared properties and the uncertainty set is represented by a mixture of these groups. This assumption is also used in robust federated learning [24, 19], algorithmic fairness [37, 5, 8], and domain generalization [18, 4, 29]. Our framework also takes advantage of this assumption but does not rely on the supervision of group information.

2.3 Debiasing via loss-based reweighting

There exist several generic debiasing techniques via sample reweighting based on observed task-specific losses under the supervised environment [26] or the unsupervised setting [25, 30]. Group DRO [26] exploits the group information specified by the bias attributes and aims to improve the worst-group generalization performance. On the other hand, Nam et al. [25] employ the difference between the generalized and standard cross-entropy loss to capture the bias-alignment for sample reweighting while Sohoni et al. [30] estimate subclass labels via clustering and utilize the information for distributionally robust optimization to mitigate hidden stratification. Although the unsupervised approaches work well in small and artificial datasets such as MNIST, their performance improvement becomes marginal in real-world datasets including CelebA. Our framework also belongs to unsupervised methods that do not rely on the bias information to learn debiased representations.

3 Method

This section presents our debiasing technique via bias pseudo-attribute estimation and sample reweighting.

3.1 Preliminaries

Let an example be associated with a set of attributes . The goal of our model is to predict a target attribute by estimating the intended causation , which does not involve any undesirable correlation to other latent attributes, i.e., , . On the other hand, spurious correlation indicates strong coincidence between two attributes ; the conditional entropy is close to zero and there exists no causal relationship between them. A machine learning algorithm is considered biased if a certain attribute has a spurious correlation with the target attribute and affects the prediction, i.e., . Our approach performs debiasing by estimating groups in the dataset without supervision, where the group is defined by a pair of target and bias attributes, e.g., .

3.2 Observation

If a bias attribute is highly correlated to a target attribute while being easy to learn, the model may ignore few conflicting examples and learn its decision rule based on the bias attributes with spurious correlations to maximize accuracy [25, 27]. To prevent this undesirable situation, a simple group upweighting or resampling strategies [27] are known to be effective while they work poorly in realistic scenarios, where the bias information is unknown during training.

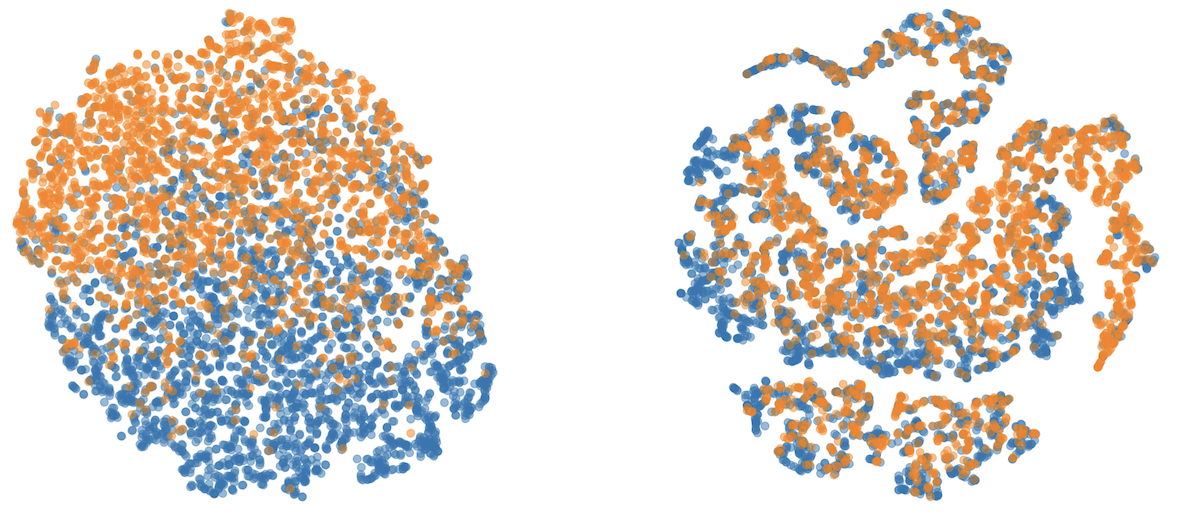

To overcome this challenge, from our intuition, we analyze the feature semantics over the target and bias attributes. We first naïvely train a base model on the CelebA dataset to classify hair color, and visualize the representation of the examples after convergence with a sufficient number of epochs (). We select gender as a bias attribute, but do not utilize any information of the bias attribute during training. It turns out that, even without using the bias information during training, the examples drawn from certain groups, which are given by a combination of hair color and gender attribute values in this case, e.g., (male, non-blonde) and (female, blonde), are located closely in the feature space. This observation implies that it is possible to identify bias pseudo-attributes by taking advantage of the embedding results even without attribute supervisions. Our unsupervised debiasing framework is based on the capability to identify the bias pseudo-attributes via clustering.

3.3 Formulation

Suppose that training examples, , are drawn from a certain distribution . Given a loss function and model parameters , the objective of the empirical risk minimization (ERM) is to optimize the following expected loss:

| (1) |

where is the empirical distribution over training data that approximates the true distribution . Although ERM generally works well, it tends to ignore examples in minority groups that conflict with bias attributes and implicitly assumes the consistency of the underlying distributions for training and testing data. Consequently, the approach often leads to high unbiased and worst-group test error [9, 26].

Several distributionally robust optimization (DRO) techniques [2, 9, 17] can be employed to tackle the dataset bias and distribution shift problems and maximize unbiased generalization accuracy. They consider a particular uncertainty set , which is close to the training distribution , e.g., , where indicates an -divergence function222Let and be probability distributions over a space , then f-divergence is .. To minimize the worst-case loss over the uncertainty distribution set , DRO optimizes

| (2) |

However, this objective is overly pessimistic and makes the model consider the implausible worst cases [10, 15].

The group distributionally robust optimization, referred to as group DRO [26] creates more realistic sets of possible test distributions by leveraging the prior knowledge of group information. They assumes the training distribution is a mixture of groups, , which is given by

| (3) |

where and is a -dimensional simplex. Then the uncertainty set is defined by a set of all possible mixtures of these groups, i.e., . Because is a simplex, its optimum is achieved at a vertex, thus minimizing the worst-case risk of is equivalent to

| (4) |

Different from the group DRO setting, we do not know the group assignment for each training example. Instead, we use the bias pseudo-attribute information, obtained by any clustering algorithm in the feature embedding space, to define groups. Note that the clustering is performed with the representations given by the base model trained without debiasing, which is parameterized by . Our goal is to alleviate dataset bias and maximize unbiased accuracy, and we need to consider all groups fairly for optimization. To this end, we assign a proper importance weight, , to the cluster, where , and the final objective of our framework is given by minimizing a weighted empirical risk as follows:

| (5) |

where denotes the cluster membership of an example . The details of the weight assignment method will be discussed next.

3.4 Sample weighting with bias pseudo-attributes

Based on our observation described in Section 3.2, we first cluster training examples in each class on the feature embedding space defined by the base model optimized sufficiently, e.g., for 100 epochs using the standard classification loss. We suppose that each cluster corresponds to a bias pseudo-attribute. Among all clusters, we focus on the examples in the minority clusters, especially when they have high average losses. A common failure case in the presence of dataset bias is incurred by ignoring specific subpopulation groups for minimizing the overall training loss, and minority clusters are prone to be ignored due to their sizes. The problematic cases among the clusters are the ones that contain many bias-conflicting examples, having high losses, and thus result in poor worst-case errors. If the minority clusters consist of mostly bias-aligned samples, they will apparently achieve high classification accuracy.

Therefore, to handle dataset bias issue, we should consider both scale and average difficulty (loss) of each cluster, unlike group DRO [26] and George [30], which focus only on the average loss. We calculate the importance weight of each cluster by our reweighting scheme to train the target model, which is given by

| (6) |

where and indicates the parameters of the final and base models, respectively, is a cluster membership function, and is an indicator function. Note that denotes the sample distribution of the cluster and is the number of samples in the cluster, where .

3.5 Algorithm procedure

Algorithm 1 presents the optimization procedure of the proposed framework. We first näively train a baseline network (line 4-7), parameterized by . Then, we cluster all training examples based on the features extracted from the network to obtain the membership distribution and the size of cluster (line 8-11). Based on the cluster assignments, we calculate the importance weight of each cluster using the target model, parameterized by , where the weight is updated by exponential moving average at each iteration (line 15). We finally use the normalized importance weight of each sample over a mini-batch to train the target model (line 18-19).

| Unbiased accuracy (%) | Worst-group accuracy (%) | ||||||||||

| \cdashline3-12 | Unsupervised | Supervised | Unsupervised | Supervised | |||||||

| Target | Bias | Base | LfF | LfF∗ | BPA (ours) | Group DRO | Base | LfF | LfF∗ | BPA (ours) | Group DRO |

| Blond Hair | Gender | 80.42 | 59.46 | 84.89 | 90.18 | 91.39 | 41.02 | 34.23 | 57.96 | 82.54 | 87.86 |

| Heavy Makeup | Gender | 71.19 | 56.34 | 71.85 | 73.78 | 72.70 | 17.35 | 30.81 | 23.87 | 39.84 | 21.36 |

| Pale Skin | Gender | 71.50 | 78.69 | 75.23 | 90.06 | 90.55 | 36.64 | 57.38 | 43.26 | 88.60 | 87.68 |

| Wearing Lipstick | Gender | 73.90 | 53.79 | 73.84 | 78.28 | 78.26 | 31.38 | 25.52 | 31.92 | 46.52 | 46.08 |

| Young | Gender | 78.19 | 45.99 | 79.58 | 82.27 | 82.40 | 52.79 | 0.34 | 57.79 | 74.33 | 76.29 |

| Double Chin | Gender | 64.61 | 65.46 | 68.47 | 82.92 | 83.19 | 21.33 | 28.19 | 28.24 | 67.78 | 72.94 |

| Chubby | Gender | 67.42 | 60.03 | 71.56 | 83.88 | 81.90 | 24.30 | 7.60 | 34.09 | 72.32 | 72.64 |

| Wearing Hat | Gender | 93.53 | 84.56 | 94.81 | 96.80 | 96.84 | 85.12 | 69.06 | 88.31 | 94.94 | 94.67 |

| Oval Face | Gender | 62.70 | 57.64 | 62.30 | 67.18 | 65.40 | 29.15 | 7.40 | 36.00 | 55.78 | 56.84 |

| Pointy Nose | Gender | 62.10 | 42.20 | 63.83 | 68.90 | 70.71 | 25.80 | 1.05 | 38.04 | 52.48 | 63.76 |

| Straight Hair | Gender | 70.28 | 39.57 | 72.84 | 79.18 | 77.04 | 47.82 | 1.95 | 58.53 | 72.09 | 66.10 |

| Blurry | Gender | 73.05 | 76.70 | 77.52 | 88.93 | 87.05 | 45.68 | 43.81 | 52.35 | 84.10 | 82.06 |

| Narrow Eyes | Gender | 63.18 | 68.53 | 67.77 | 76.39 | 76.72 | 27.01 | 31.81 | 38.53 | 73.24 | 71.47 |

| Arched Eyebrows | Gender | 69.72 | 56.17 | 71.87 | 74.77 | 78.30 | 34.76 | 26.21 | 44.97 | 54.36 | 69.44 |

| Bags Under Eyes | Gender | 69.47 | 44.61 | 71.86 | 77.84 | 75.88 | 41.65 | 0.06 | 49.10 | 62.55 | 63.34 |

| Bangs | Gender | 89.04 | 41.41 | 89.04 | 93.94 | 94.45 | 76.91 | 3.18 | 82.37 | 92.21 | 92.12 |

| Big Lips | Gender | 60.87 | 46.74 | 62.15 | 66.50 | 63.70 | 30.85 | 31.44 | 38.54 | 56.99 | 47.55 |

| No Beard | Gender | 73.11 | 60.12 | 73.13 | 79.58 | 77.86 | 13.30 | 11.92 | 20.00 | 40.00 | 36.70 |

| Receding Hairline | Gender | 69.72 | 70.57 | 74.58 | 84.95 | 85.15 | 35.69 | 32.10 | 45.53 | 79.11 | 79.12 |

| Wavy Hair | Gender | 73.10 | 48.00 | 74.53 | 79.89 | 79.65 | 38.01 | 0.06 | 45.24 | 65.74 | 66.79 |

| Wearing Earrings | Gender | 72.17 | 59.35 | 74.17 | 84.57 | 83.50 | 26.26 | 0.10 | 32.95 | 72.81 | 75.24 |

| Wearing Necklace | Gender | 55.04 | 58.64 | 57.21 | 68.96 | 62.89 | 2.72 | 0.22 | 6.67 | 41.93 | 24.34 |

| Average | Gender | 72.67 | 58.65 | 74.87 | 81.74 | 80.87 | 39.91 | 21.91 | 47.88 | 69.84 | 69.68 |

4 Experiments

| Unbiased accuracy (%) | Worst-group accuracy (%) | ||||||||||

| \cdashline3-12 | Unsupervised | Supervised | Unsupervised | Supervised | |||||||

| Target | Bias | Base | LfF | LfF∗ | BPA (ours) | Group DRO | Base | LfF | LfF∗ | BPA (ours) | Group DRO |

| Object | Place | 84.63 | 85.48 | 84.57 | 87.05 | 88.99 | 62.39 | 68.02 | 61.68 | 71.39 | 80.82 |

| Place | Object | 87.99 | 85.77 | 85.05 | 88.44 | 89.20 | 73.34 | 62.37 | 60.00 | 79.16 | 85.27 |

| Unsupervised | Supervised | ||||

|---|---|---|---|---|---|

| Target | Bias | Baseline | LfF | BPA (ours) | Group DRO |

| Digit | Color | 74.48 | 85.15 | 85.26 | 85.88 |

| Color | Digit | 99.95 | 99.91 | 99.82 | 98.96 |

4.1 Dataset

CelebA [22] is a large-scale face dataset for face image recognition, containing 40 attributes for each image. Following the previous works [26, 25], we set hair color and heavy makeup as the target attribute. Note that gender attribute is spuriously correlated to the two attributes and employed as the bias attribute for worst-group accuracy evaluation in our experiment. For more comprehensive results, we also consider the other 32 attributes as the target attributes.

Waterbirds [26] is a synthesized dataset with 4,795 training examples, created by combining birds photographs from the Caltech-UCSD Birds-200-2011 (CUB) dataset [32] and the background images in the Places dataset [40]. There exist two attributes in the dataset; one is the type of a bird, {waterbird, landbird}, which is the target attribute, and the other is the background place, {water, land}.

The Colored-MNIST dataset [21, 25, 1] is an extension of MNIST with the color attributes, where each digit is highly correlated to a certain color. There are 60K training examples and 10K test images, where the ratio of bias-aligned samples333It denotes the samples that can be correctly classified by using the bias attribute (color). is 95%. We follow the protocol employed in [25] for the experiment.

4.2 Implementation details

For CelebA and Waterbirds, we use a ResNet-18 as our backbone network, which is pretrained on ImageNet. We train both the base and target models using the Adam optimizer with a learning rate of , a batch size of 256, and a weight decay rate of 0.01. For the Colored MNIST dataset, we adopt a multi-layer perceptron with three hidden layers, each of which has 100 hidden units. We also employ the same Adam optimizer with a learning rate of . We train the models for 100 epochs for all experiments, and decay the learning rate using the cosine annealing [23].

For clustering, we extract features from a separately trained base network with the standard classification loss and perform -means clustering with in all experiments. The cluster weight of the cluster, , is updated by exponential moving average at each iteration with a momentum of 0.3. All the results reported in our paper are obtained from the average of three runs.

4.3 Evaluation protocol

To evaluate the unbiased accuracy with an imbalanced evaluation set, we measure the accuracy of each group , , defined by a pair of target and bias attribute values. We finally report the average accuracy of each group and worst-group accuracy among all groups as in [25].

4.4 Results

We present our main results on the standard benchmarks including CelebA, WaterBirds, and Colored-MNIST. In the rest of this section, our method is denoted by BPA, which stands for bias pseudo-attribute.

CelebA

Before evaluating our frameworks, we first thoroughly analyze the CelebA dataset in terms of algorithmic bias among the attributes. There are a total of 40 attributes in the CelebA dataset. The bias attribute is fixed to gender, and we analyze its relation to the attributes of the target candidates given by the rest of 39 attributes. We accept the target attributes if the smallest group given by its combinations with the bias attribute in the test split contains at least 10 examples for statistical stability444The removed target attributes are 5 o’clock shadow, bald, rosy cheeks, sideburns, goatee, mustache, and wearing necktie.. We suppose that the spurious correlation exists between target and bias attributes when a baseline model gives a large performance gap between its overall accuracy and unbiased accuracy (e.g., 5% points). We found that 26 out of 32 attributes have spurious correlation to gender, and report the results for the attributes. See our supplementary files for more detailed analysis.

Table 1 presents the experimental results of the proposed algorithm on the CelebA dataset, in comparison to the existing methods as well as the baseline model. Our model significantly outperforms the baseline and LfF [25] for all target attributes in terms of both unbiased and worst-group accuracies. Note that our model is almost competitive to a supervised approach, Group DRO [26], without the explicit bias information. On the other hand, we observe that training the model with LfF deteriorates performance even compared to the baseline. This is because it fixes the feature extractor and only trains its classification layer at the end555https://github.com/alinlab/LfF. To conduct a meaningful comparison with the stable version of LfF, we first train the baseline model used in our experiment for 100 epochs and then fine-tune the classification layer only using the LfF algorithm; this revised version is referred to as LfF∗ in the rest of this section. Although the performance of LfF∗ is stable, the improvement by debiasing is still limited compared to Group DRO and our approach. Additional experimental results for other bias attributes are provided in our supplementary documents.

| Unbiased accuracy (%) | Worst-group accuracy (%) | ||||||||||

| \cdashline3-12 | Unsupervised | Supervised | Unsupervised | Supervised | |||||||

| Target | Bias | Base | LfF | LfF∗ | BPA (ours) | Group DRO | Base | LfF | LfF∗ | BPA (ours) | Group DRO |

| Attractive | Gender | 76.05 | 30.18 | 75.97 | 77.90 | 78.35 | 63.61 | 6.09 | 64.78 | 65.20 | 66.30 |

| Smiling | Gender | 91.66 | 74.62 | 91.20 | 92.08 | 91.64 | 88.49 | 60.09 | 88.65 | 90.06 | 88.48 |

| Mouth Open | Gender | 93.10 | 81.85 | 92.96 | 93.45 | 93.64 | 91.52 | 66.92 | 92.44 | 92.27 | 91.69 |

| High Cheekbones | Gender | 83.44 | 48.40 | 83.70 | 84.93 | 84.52 | 70.49 | 7.92 | 73.56 | 78.56 | 78.37 |

| Eyeglasses | Gender | 98.20 | 85.47 | 98.38 | 98.39 | 98.65 | 96.24 | 76.89 | 96.85 | 97.22 | 97.64 |

| Black Hair | Gender | 84.92 | 61.00 | 85.19 | 86.57 | 86.76 | 75.47 | 22.04 | 75.69 | 81.28 | 80.67 |

| Average | Gender | 87.90 | 63.59 | 87.92 | 88.89 | 88.93 | 80.97 | 39.51 | 82.29 | 84.10 | 83.86 |

Waterbirds

We also evaluate our model on the Waterbirds dataset and present the results in Table 2. As in the CelebA dataset, our model achieves the best accuracies among the unsupervised methods in terms of both the unbiased and worst-group accuracies, and presents comparable results to the supervised method [26]. Our successful results on Waterbirds imply that the proposed method is robust to small-scale datasets as well.

Colored-MNIST

Table 3 shows that our model achieves consistent accuracy in the digit classification with color bias. Besides, the color classification performance, where the algorithmic bias does not exist, turns out to be also competitive to the baseline, while the supervised approach is not good at this setting.

| Target | Blond Hair | Blurry | ||||||

| Unsupervised | Supervised | Unsupervised | Supervised | |||||

| Biases | Baseline | LfF∗ | BPA (ours) | Group DRO | Baseline | LfF∗ | BPA (ours) | Group DRO |

| Gender | 80.42 | 84.89 | 90.18 | 91.39 | 73.05 | 76.70 | 88.93 | 87.05 |

| Gender, Heavy Makeup | 83.64 | 88.82 | 91.90 | 81.09 | 75.37 | 79.55 | 89.09 | 72.17 |

| Gender, Wearing Lipstick | 80.34 | 84.13 | 91.63 | 85.93 | 79.88 | 83.21 | 89.79 | 79.88 |

| Gender, Young | 78.39 | 81.21 | 89.05 | 87.96 | 72.97 | 77.77 | 88.66 | 85.39 |

| Gender, No Beard | 79.50 | 82.51 | 89.92 | 85.01 | 78.91 | 79.84 | 84.06 | 81.07 |

| Gender, Wearing Necklace | 79.25 | 81.03 | 92.62 | 92.26 | 71.80 | 78.07 | 89.60 | 85.33 |

| Gender, Big Nose | 81.18 | 84.10 | 90.58 | 90.83 | 71.89 | 77.11 | 88.57 | 87.11 |

| Gender, Smiling | 79.75 | 82.91 | 89.85 | 91.73 | 73.31 | 78.04 | 89.32 | 87.87 |

| Average | 80.29 1.71 | 83.53 2.64 | 90.79 1.29 | 87.83 4.10 | 74.88 3.32 | 79.08 2.06 | 88.44 1.98 | 82.69 5.50 |

4.5 Analysis

Results with no algorithmic bias

We test our algorithm on unbiased datasets to make sure that it is dependable on the cases without algorithmic bias. The unbiased setting is defined by the configuration that a baseline model involves a marginal difference between its overall accuracy and unbiased accuracy (e.g., % points). Similar to Table 1, we identify a subset of target attributes in CelebA, which is not spuriously correlated to gender; there exist 6 out of 32 attributes. Table 4 illustrates the results of the 6 target attributes, where the accuracy of our approach is most consistent among the 4 methods. This implies that our framework can be incorporated into the existing recognition models directly, without knowing the presence of dataset bias. Note that color classification with digit bias on Colored-MNIST or background place classification with object bias on Waterbirds are also qualified as unbiased settings, where our model gives consistent results.

Multiple bias attributes

Thanks to the unsupervised nature of our method, we can simply evaluate our model on multi-bias scenarios, where multiple bias attributes exist in the dataset, without modification. Table 5 presents the unbiased results with multiple bias attributes using our method and Group DRO [26], where we additionally report the average and standard deviation over all bias sets to compare the overall effectiveness and robustness. We also present the results of LfF∗, a variant of LfF [25], introduced in Table 1. When trained on multiple bias attributes, the accuracy of Group DRO is sensitive to bias sets while our method achieves stable and superior results for a variety of sets. Note also that our model is applicable to any bias sets without additional fine-tuning while a supervised method should separately train their model for each set.

Ablative results on importance weighting

| Scale | Loss | Unbiased Acc. | Worst-group Acc. |

|---|---|---|---|

| 80.42 | 41.02 | ||

| ✓ | 83.86 | 57.44 | |

| ✓ | 89.08 | 76.55 | |

| ✓ | ✓ | 90.18 | 82.54 |

We perform the ablative experiments on the CelebA dataset to analyze the effectiveness of our cluster weighting strategy. Table 6 presents the results when only one of the scale and the average loss , respectively, are taken into account to compute for the cluster in Eq. (3.4). Note that our ablative model with the loss factor only is closely related to [30]. Table 6 also shows that combining both terms plays a crucial role for learning debiased representations while the scale factor is clearly more important.

| Target | Baseline | BPA (ours) | Group DRO |

|---|---|---|---|

| Blond Hair | 79.13 2.72 | 90.16 3.19 | 90.82 2.76 |

| Heavy Makeup | 70.26 3.84 | 73.52 3.86 | 71.57 4.33 |

| Young | 77.56 1.80 | 81.31 2.31 | 80.56 2.09 |

| Double Chin | 62.56 2.22 | 81.71 3.61 | 78.46 3.37 |

| Chubby | 67.80 2.77 | 82.36 3.55 | 79.91 3.43 |

| Wearing Hat | 90.80 4.01 | 95.11 3.10 | 94.71 3.76 |

| Oval Face | 61.77 1.80 | 66.63 1.63 | 65.35 1.45 |

| Pointy Nose | 63.96 1.42 | 70.53 1.52 | 70.16 1.45 |

Unspecified group shifts

To verify the robustness in another realistic scenario, we test unspecified group shifts, where the group information at test time is not fully provided during training. The bias attribute specified during training, which is exploited by group DRO, is fixed to gender. To evaluate the performance in this setting, we measure a set of unbiased accuracies corresponding to the combinations of the specified bias attribute, gender, and each of 25 unspecified bias attributes except the target attribute666There exist 26 (out of 39) valid attributes as described in Section 4.4.. Note that the unspecified bias attributes are not introduced during training but used to define groups at test time. Table 7 clearly shows that our model outperforms Group DRO in the bias-unspecified setting, where we only report the average and standard deviation of the set of unbiased accuracies due to space limitation. This implies that, although Group DRO handles group shifts well within the simplex of the specified group distributions, it suffers from worst-case generalization for unspecified group shifts. Note that the proposed approach is free from the issue because it does not use any information about bias for training.

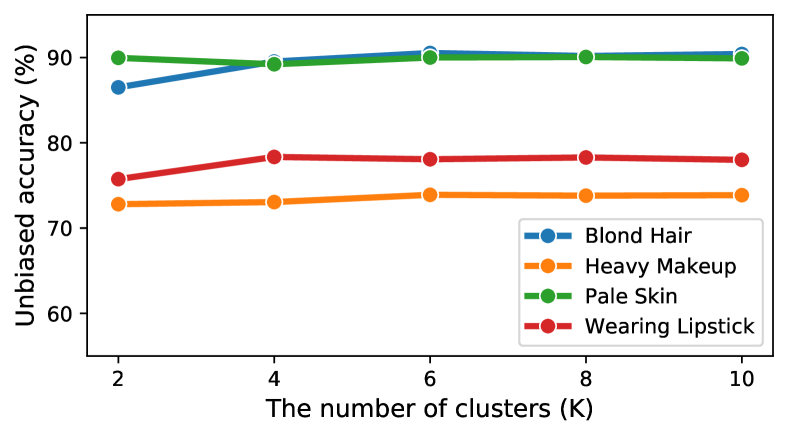

Sensitivity analysis on the number of clusters

We conduct ablation study on the number of groups for clustering on the feature embedding space to obtain bias pseudo-attributes on the CelebA dataset. We set gender as the bias attribute and evaluate the unbiased accuracies for several target attributes. Figure 2 presents that the results are stable over a wide range of the number of clusters and the accuracy is saturated when .

Feature visualization

Figure 3 illustrates the t-SNE results of instance embeddings for the baseline model (left) and ours (right) on the CelebA dataset for the blond hair attribute classification, where we visualize only negative (blond hair = false) examples for an effective visualization. Blue and orange colors indicate values—female and male, respectively—of the bias attribute, gender. We observe that our model helps to mix the examples in different groups within the same class, which is desirable for debiasing.

5 Conclusion

We presented a generic unsupervised debiasing framework using pseudo-attribute. We observed that the examples sampled from the same groups are closely located in the feature embedding space. Based on our empirical observation, we claim that it is possible to identify the pseudo-attributes by taking advantage of the embedding results even without the attribute supervision. Inspired by this fact, we introduced a novel cluster-based weighting strategy for learning debiased representations. We demonstrated the effectiveness of our method on multiple standard benchmarks, which is even as competitive as the supervised debiasing method, group DRO. We also conducted a thorough analysis of our framework in many realistic scenarios, where our model provides substantial gains consistently.

Potential societal impact and limitation

Machine learning models typically focus on performance improvement unconditionally. Hence, it is often exposed to the risk caused by dataset and/or algorithmic bias, which need to be carefully addressed for enhancing reliability and robust of models. This research contributes to a positive societal impact from this point of view. Although the proposed algorithm turns out to be effective for bias identification, there may be blind spots due to unexplored types of bias. Therefore, we believe that the identification of hidden and unobservable biases without prior knowledge is a promising research direction.

Acknowledgments

This work was partly supported by the IITP grants [2021-0-02068, Artificial Intelligence Innovation Hub; 2021-0-01343, Artificial Intelligence Graduate School Program (Seoul National University)] and the National Research Foundation of Korea (NRF) grant [2022R1A2C3012210] funded by the Korean government (MSIT) and by Samsung Electronics Co., Ltd. [IO210917-08957-01].

References

- [1] Hyojin Bahng, Sanghyuk Chun, Sangdoo Yun, Jaegul Choo, and Seong Joon Oh. Learning de-biased representations with biased representations. In ICML, 2020.

- [2] Aharon Ben-Tal, Dick Den Hertog, Anja De Waegenaere, Bertrand Melenberg, and Gijs Rennen. Robust solutions of optimization problems affected by uncertain probabilities. Management Science, 59(2):341–357, 2013.

- [3] Remi Cadene, Corentin Dancette, Matthieu Cord, Devi Parikh, et al. Rubi: Reducing unimodal biases for visual question answering. In NeurIPS, 2019.

- [4] Fabio M Carlucci, Antonio D’Innocente, Silvia Bucci, Barbara Caputo, and Tatiana Tommasi. Domain generalization by solving jigsaw puzzles. In CVPR, 2019.

- [5] Alexandra Chouldechova and Aaron Roth. The frontiers of fairness in machine learning. arXiv preprint arXiv:1810.08810, 2018.

- [6] Sanghyeok Chu, Dongwan Kim, and Bohyung Han. Learning debiased and disentangled representations for semantic segmentation. In NeurIPS, 2021.

- [7] Christopher Clark, Mark Yatskar, and Luke Zettlemoyer. Don’t take the easy way out: Ensemble based methods for avoiding known dataset biases. In EMNLP, 2019.

- [8] Michele Donini, Luca Oneto, Shai Ben-David, John S Shawe-Taylor, and Massimiliano Pontil. Empirical risk minimization under fairness constraints. In NIPS, 2018.

- [9] John Duchi, Peter Glynn, and Hongseok Namkoong. Statistics of robust optimization: A generalized empirical likelihood approach. arXiv preprint arXiv:1610.03425, 2016.

- [10] John Duchi, Tatsunori Hashimoto, and Hongseok Namkoong. Distributionally robust losses for latent covariate mixtures. arXiv preprint arXiv:2007.13982, 2020.

- [11] Rui Gao, Xi Chen, and Anton J Kleywegt. Wasserstein distributional robustness and regularization in statistical learning. arXiv preprint arXiv:1712.06050, 2017.

- [12] Robert Geirhos, Jörn-Henrik Jacobsen, Claudio Michaelis, Richard Zemel, Wieland Brendel, Matthias Bethge, and Felix A Wichmann. Shortcut learning in deep neural networks. arXiv preprint arXiv:2004.07780, 2020.

- [13] Robert Geirhos, Patricia Rubisch, Claudio Michaelis, Matthias Bethge, Felix A Wichmann, and Wieland Brendel. Imagenet-trained cnns are biased towards texture; increasing shape bias improves accuracy and robustness. In ICLR, 2019.

- [14] Lisa Anne Hendricks, Kaylee Burns, Kate Saenko, Trevor Darrell, and Anna Rohrbach. Women also snowboard: Overcoming bias in captioning models. In ECCV, 2018.

- [15] Weihua Hu, Gang Niu, Issei Sato, and Masashi Sugiyama. Does distributionally robust supervised learning give robust classifiers? In ICML, 2018.

- [16] Justin Johnson, Bharath Hariharan, Laurens van der Maaten, Li Fei-Fei, C Lawrence Zitnick, and Ross Girshick. Clevr: A diagnostic dataset for compositional language and elementary visual reasoning. In CVPR, 2017.

- [17] Johannes Kirschner, Ilija Bogunovic, Stefanie Jegelka, and Andreas Krause. Distributionally robust bayesian optimization. In AISTATS, 2020.

- [18] Da Li, Yongxin Yang, Yi-Zhe Song, and Timothy M Hospedales. Deeper, broader and artier domain generalization. In ICCV, 2017.

- [19] Tian Li, Maziar Sanjabi, Ahmad Beirami, and Virginia Smith. Fair resource allocation in federated learning. arXiv preprint arXiv:1905.10497, 2019.

- [20] Yingwei Li, Yi Li, and Nuno Vasconcelos. Resound: Towards action recognition without representation bias. In ECCV, 2018.

- [21] Yi Li and Nuno Vasconcelos. Repair: Removing representation bias by dataset resampling. In CVPR, 2019.

- [22] Ziwei Liu, Ping Luo, Xiaogang Wang, and Xiaoou Tang. Deep learning face attributes in the wild. In ICCV, 2015.

- [23] Ilya Loshchilov and Frank Hutter. Sgdr: Stochastic gradient descent with warm restarts. In ICLR, 2017.

- [24] Mehryar Mohri, Gary Sivek, and Ananda Theertha Suresh. Agnostic federated learning. arXiv preprint arXiv:1902.00146, 2019.

- [25] Junhyun Nam, Hyuntak Cha, Sungsoo Ahn, Jaeho Lee, and Jinwoo Shin. Learning from failure: Training debiased classifier from biased classifier. In NeurIPS, 2020.

- [26] Shiori Sagawa, Pang Wei Koh, Tatsunori B Hashimoto, and Percy Liang. Distributionally robust neural networks for group shifts: On the importance of regularization for worst-case generalization. In ICLR, 2020.

- [27] Shiori Sagawa, Aditi Raghunathan, Pang Wei Koh, and Percy Liang. An investigation of why overparameterization exacerbates spurious correlations. In ICML, 2020.

- [28] Seonguk Seo, Joon-Young Lee, and Bohyung Han. Information-theoretic bias reduction via causal view of spurious correlation. In AAAI, 2022.

- [29] Seonguk Seo, Yumin Suh, Dongwan Kim, Geeho Kim, Jongwoo Han, and Bohyung Han. Learning to optimize domain specific normalization for domain generalization. In ECCV, 2020.

- [30] Nimit Sohoni, Jared Dunnmon, Geoffrey Angus, Albert Gu, and Christopher Ré. No subclass left behind: Fine-grained robustness in coarse-grained classification problems. In NeurIPS, 2020.

- [31] Antonio Torralba and Alexei A Efros. Unbiased look at dataset bias. In CVPR, 2011.

- [32] Catherine Wah, Steve Branson, Peter Welinder, Pietro Perona, and Serge Belongie. The caltech-ucsd birds-200-2011 dataset. 2011.

- [33] Angelina Wang, Arvind Narayanan, and Olga Russakovsky. REVISE: A tool for measuring and mitigating bias in visual datasets. In ECCV, 2020.

- [34] Haohan Wang, Zexue He, Zachary L. Lipton, and Eric P. Xing. Learning robust representations by projecting superficial statistics out. In ICLR, 2019.

- [35] Mei Wang, Weihong Deng, Jiani Hu, Xunqiang Tao, and Yaohai Huang. Racial faces in the wild: Reducing racial bias by information maximization adaptation network. In ICCV, 2019.

- [36] Tianlu Wang, Jieyu Zhao, Mark Yatskar, Kai-Wei Chang, and Vicente Ordonez. Balanced datasets are not enough: Estimating and mitigating gender bias in deep image representations. In ICCV, 2019.

- [37] Rich Zemel, Yu Wu, Kevin Swersky, Toni Pitassi, and Cynthia Dwork. Learning fair representations. In ICML, 2013.

- [38] Marvin Zhang, Henrik Marklund, Abhishek Gupta, Sergey Levine, and Chelsea Finn. Adaptive risk minimization: A meta-learning approach for tackling group shift. arXiv preprint arXiv:2007.02931, 2020.

- [39] Yi Zhang and Jitao Sang. Towards accuracy-fairness paradox: Adversarial example-based data augmentation for visual debiasing. arXiv preprint arXiv:2007.13632, 2020.

- [40] Bolei Zhou, Agata Lapedriza, Aditya Khosla, Aude Oliva, and Antonio Torralba. Places: A 10 million image database for scene recognition. TPAMI, 40(6):1452–1464, 2017.

A Full experimental results

Table A and B present the full results of Table 1 in the main paper, including George [30] and a class weighting method. George [30] is closely related to our ablative model with sample weighting based on its loss, which is shown in Table 6 of the main paper, while class weighting approach adjusts the weight of each example depending on the associated class scale (size) to mitigate the class imbalance issue. We also report the gap between the overall accuracy and the unbiased accuracy of the baseline model to present the degree of algorithmic bias for each target attribute with gender bias. Bold and underline fonts indicate the first and second place among the compared approaches, respectively. The proposed approach achieves outstanding performance compared to all other unsupervised methods, and is even as competitive as the supervised counterpart [26]. Also, it is surprising that the class weighting method is superior to existing unsupervised debiasing methods including LfF [25] and George [30]. We run all experimental three times and compute average accuracies and their standard deviations.

| Unsupervised | Supervised | |||||||

|---|---|---|---|---|---|---|---|---|

| Target | Gap (%p) | Overall | Baseline | LfF [25] | George [30] | Class weighting | Ours | Group DRO [26] |

| Blond Hair | -15.28 | 95.70 | 80.42 0.51 | 84.89 0.14 | 83.13 1.86 | 83.35 0.85 | 90.18 0.23 | 91.39 0.27 |

| Heavy Makeup | -19.63 | 90.82 | 71.19 0.37 | 71.85 0.17 | 70.91 0.77 | 71.74 0.83 | 73.78 0.25 | 72.70 0.71 |

| Pale Skin | -25.25 | 96.75 | 71.50 1.60 | 75.23 0.74 | 78.22 3.75 | 90.02 0.56 | 90.06 0.75 | 90.55 0.84 |

| Wearing Lipstick | -18.70 | 92.60 | 73.90 0.53 | 73.84 0.05 | 78.05 0.98 | 72.89 1.28 | 78.28 0.88 | 78.26 2.73 |

| Young | -9.30 | 87.49 | 78.19 0.39 | 79.58 0.14 | 80.79 0.20 | 82.13 0.82 | 82.27 0.65 | 82.40 0.48 |

| Double Chin | -31.32 | 95.93 | 64.61 0.82 | 68.47 0.22 | 76.23 0.11 | 82.13 1.43 | 82.92 0.54 | 83.19 1.11 |

| Chubby | -27.97 | 95.39 | 67.42 0.95 | 71.56 0.52 | 74.88 1.91 | 79.64 0.56 | 83.88 0.36 | 81.90 0.20 |

| Wearing Hat | -5.57 | 99.10 | 93.53 0.37 | 94.81 0.15 | 95.72 0.71 | 96.16 0.50 | 96.80 0.26 | 96.84 0.46 |

| Oval Face | -10.40 | 73.10 | 62.70 0.62 | 62.30 0.21 | 65.16 0.23 | 65.13 1.05 | 67.18 0.82 | 65.40 0.14 |

| Pointy Nose | -11.81 | 73.91 | 62.10 0.74 | 63.83 0.28 | 61.68 1.59 | 66.82 2.76 | 68.90 0.90 | 70.71 0.28 |

| Straight Hair | -12.24 | 82.52 | 70.28 1.06 | 72.84 0.12 | 77.80 0.19 | 77.46 0.70 | 79.18 0.38 | 77.04 0.70 |

| Blurry | -22.98 | 96.03 | 73.05 1.28 | 77.52 0.45 | 81.28 0.28 | 87.75 0.87 | 88.93 0.32 | 87.05 0.90 |

| Narrow Eyes | -23.29 | 86.47 | 63.18 1.05 | 67.77 0.08 | 68.03 0.11 | 70.99 0.60 | 76.39 0.64 | 76.72 1.98 |

| Arched Eyebrows | -12.09 | 81.81 | 69.72 0.37 | 71.87 0.10 | 73.25 0.29 | 75.58 1.13 | 74.77 0.69 | 78.30 1.79 |

| Bags Under Eyes | -14.16 | 83.63 | 69.47 0.57 | 71.86 0.05 | 74.81 0.38 | 76.36 1.05 | 77.84 1.14 | 75.88 1.18 |

| Bangs | -6.37 | 95.41 | 89.04 0.47 | 89.04 0.50 | 92.62 0.12 | 93.09 0.29 | 93.94 0.57 | 94.45 0.17 |

| Big Lips | -8.99 | 69.86 | 60.87 0.58 | 62.15 0.06 | 64.99 0.13 | 63.74 0.56 | 66.50 0.24 | 63.70 0.44 |

| No Beard | -22.73 | 95.84 | 73.11 0.90 | 73.13 0.89 | 77.90 0.20 | 77.83 2.29 | 79.58 0.14 | 77.86 1.35 |

| Receding Hairline | -23.31 | 93.03 | 69.72 0.78 | 74.58 0.21 | 78.86 0.40 | 82.97 0.97 | 84.95 0.49 | 85.15 1.31 |

| Wavy Hair | -9.19 | 82.29 | 73.10 0.56 | 74.53 0.17 | 77.39 0.15 | 76.50 0.65 | 79.89 0.71 | 79.65 0.63 |

| Wearing Earrings | -17.18 | 89.35 | 72.17 0.91 | 74.17 0.33 | 80.65 0.04 | 78.65 0.28 | 84.57 0.69 | 83.50 0.63 |

| Wearing Necklace | -30.73 | 85.77 | 55.04 0.59 | 57.21 0.76 | 58.79 0.10 | 67.05 1.37 | 68.96 0.12 | 62.89 3.69 |

| Big Nose | -14.74 | 82.44 | 67.70 1.11 | 69.75 0.03 | 71.85 0.18 | 70.52 1.02 | 74.21 0.43 | 73.73 0.27 |

| Brown Hair | -8.88 | 86.95 | 78.07 0.87 | 78.93 1.24 | 83.07 0.07 | 83.12 0.38 | 83.83 0.66 | 84.87 0.07 |

| Bushy Eyebrows | -17.02 | 91.44 | 74.42 0.91 | 75.20 0.34 | 80.99 0.32 | 82.73 1.21 | 85.02 0.02 | 85.43 0.19 |

| Gray Hair | -20.54 | 98.01 | 77.47 0.67 | 80.09 0.21 | 86.10 1.18 | 90.12 1.12 | 91.80 0.22 | 92.52 0.14 |

| Average | -16.91 | 88.52 | 71.61 | 73.73 | 76.66 | 78.63 | 80.93 | 80.46 |

| Unsupervised | Supervised | |||||||

|---|---|---|---|---|---|---|---|---|

| Target | Gap (%p) | Overall | Baseline | LfF [25] | George [30] | Class weighting | Ours | Group DRO [26] |

| Blond Hair | -54.68 | 95.70 | 41.02 1.96 | 57.96 2.00 | 65.45 15.52 | 53.58 3.10 | 82.54 1.22 | 87.86 0.10 |

| Heavy Makeup | -73.47 | 90.82 | 17.35 4.60 | 23.87 2.79 | 9.09 1.24 | 28.86 11.91 | 39.84 2.28 | 21.36 1.36 |

| Pale Skin | -60.11 | 96.75 | 36.64 3.53 | 43.26 1.40 | 62.03 16.50 | 85.42 1.70 | 88.60 1.48 | 87.68 2.37 |

| Wearing Lipstick | -61.22 | 92.60 | 31.38 4.27 | 31.92 0.02 | 51.04 2.59 | 27.68 3.45 | 46.52 1.62 | 46.08 5.57 |

| Young | -34.70 | 87.49 | 52.79 1.45 | 57.79 0.84 | 65.12 0.88 | 71.43 1.75 | 74.33 0.70 | 76.29 1.96 |

| Double Chin | -74.60 | 95.93 | 21.33 2.24 | 28.24 0.46 | 50.00 0.41 | 62.43 4.71 | 67.78 0.91 | 72.94 1.14 |

| Chubby | -71.09 | 95.39 | 24.30 3.73 | 34.09 0.90 | 58.01 11.04 | 52.76 2.59 | 72.32 0.93 | 72.64 1.70 |

| Wearing Hat | -13.98 | 99.10 | 85.12 0.31 | 88.31 0.12 | 92.93 0.76 | 93.61 0.32 | 94.94 0.19 | 94.67 0.41 |

| Oval Face | -43.95 | 73.10 | 29.15 2.76 | 36.00 1.46 | 38.01 2.63 | 43.52 6.37 | 55.78 0.94 | 56.84 1.83 |

| Pointy Nose | -48.11 | 73.91 | 25.80 4.03 | 38.04 1.49 | 22.63 3.67 | 47.46 3.75 | 52.48 0.52 | 63.76 2.80 |

| Straight Hair | -34.70 | 82.52 | 47.82 6.75 | 58.53 1.61 | 69.23 1.24 | 68.97 1.15 | 72.09 0.76 | 66.10 3.56 |

| Blurry | -50.35 | 96.03 | 45.68 3.98 | 52.35 1.18 | 62.23 1.58 | 82.30 3.05 | 84.10 0.73 | 82.06 2.27 |

| Narrow Eyes | -59.46 | 86.47 | 27.01 1.30 | 38.53 0.44 | 35.16 1.14 | 52.62 4.11 | 73.24 0.88 | 71.47 3.72 |

| Arched Eyebrows | -47.05 | 81.81 | 34.76 1.86 | 44.97 0.46 | 45.64 1.21 | 52.94 5.28 | 54.36 1.37 | 69.44 5.44 |

| Bags Under Eyes | -41.98 | 83.63 | 41.65 1.01 | 49.10 0.49 | 56.28 2.11 | 59.77 8.13 | 62.55 0.90 | 63.34 3.02 |

| Bangs | -18.50 | 95.41 | 76.91 3.27 | 82.37 0.52 | 85.90 0.24 | 87.91 1.80 | 92.21 1.24 | 92.12 1.03 |

| Big Lips | -39.01 | 69.86 | 30.85 0.62 | 38.54 0.18 | 44.51 0.83 | 43.16 5.62 | 56.99 3.05 | 47.55 1.03 |

| No Beard | -82.54 | 95.84 | 13.30 3.87 | 20.00 0.00 | 33.33 5.77 | 30.00 10.00 | 40.00 0.00 | 36.70 5.10 |

| Receding Hairline | -57.34 | 93.03 | 35.69 0.35 | 45.53 0.55 | 57.30 0.90 | 72.14 2.56 | 79.12 1.91 | 79.12 2.11 |

| Wavy Hair | -44.28 | 82.29 | 38.01 0.85 | 45.24 0.83 | 53.17 0.43 | 49.69 4.65 | 65.74 1.13 | 66.79 1.62 |

| Wearing Earrings | -63.09 | 89.35 | 26.26 4.14 | 32.95 1.31 | 52.74 1.10 | 47.18 4.08 | 72.81 1.50 | 75.24 2.10 |

| Wearing Necklace | -83.05 | 85.77 | 2.72 0.83 | 6.67 2.07 | 13.82 0.41 | 30.36 3.36 | 41.93 2.47 | 24.34 7.81 |

| Big Nose | -49.25 | 82.44 | 33.19 3.97 | 45.30 0.50 | 46.22 0.41 | 49.56 4.79 | 63.00 4.27 | 65.08 1.17 |

| Brown Hair | -27.37 | 86.95 | 59.58 2.55 | 60.68 3.62 | 73.20 0.88 | 70.91 3.09 | 71.50 0.97 | 78.92 1.61 |

| Bushy Eyebrows | -54.30 | 91.44 | 37.14 2.54 | 52.67 3.14 | 56.08 0.97 | 66.92 6.98 | 74.08 0.75 | 81.56 3.24 |

| Gray Hair | -55.52 | 98.01 | 42.49 1.86 | 48.46 1.09 | 67.23 2.75 | 80.00 3.78 | 83.03 1.37 | 88.55 1.85 |

| Average | 51.68 | 88.79 | 36.84 | 44.67 | 50.39 | 58.00 | 67.76 | 68.02 |

B Additional Analysis

Unbiased results with various bias attributes

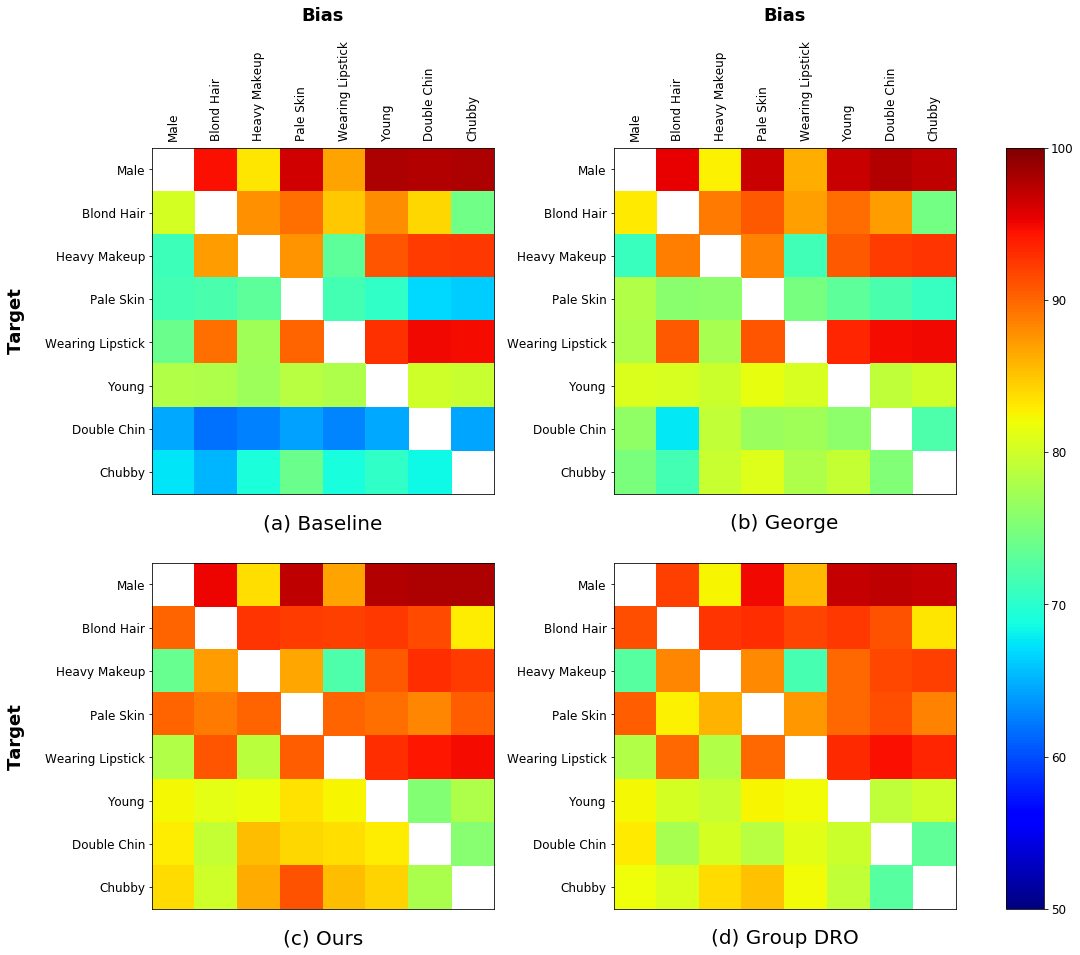

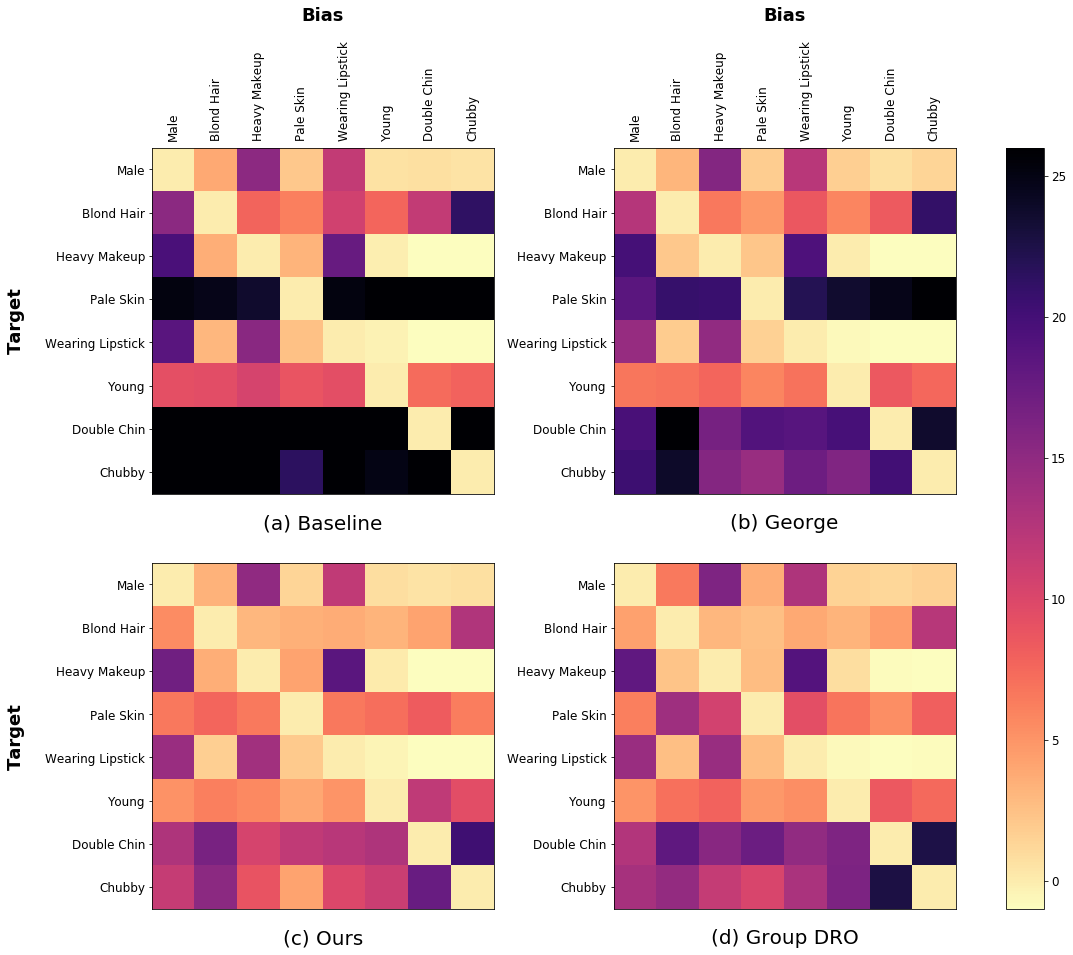

To make our study more comprehensive, we also evaluate our model with various bias attributes, in addition to Male (gender), on the CelebA dataset. Specifically, we select 8 attributes777The selected attributes are male, blond hair, heavy makeup, pale skin, wearing lipstick, young, double chin and chubby. and test our model with all possible (target, bias) pairs among the attributes. Figure A visualizes the experimental results with different methods, including baseline, George [30], group DRO [26] and our approach, in terms of unbiased accuracy (%). The columns and rows denote bias and target attributes, respectively. As shown in the figure, our model improves unbiased accuracies substantially for various bias attributes, which outperforms baseline and George [30] and is even as competitive as group DRO [26].

Algorithmic bias with various bias attributes

In Figure B, we visualize the performance gap between overall accuracy and unbiased accuracy for each method to analyze the degree of algorithmic bias between target and bias attributes. We use the same experimental setting with Figure A. The larger the performance gap, the more severe the algorithmic bias. This implies that, based on the performance gap from Figure B (a), we can measure the existence of algorithmic bias on the CelebA dataset, e.g., the target attribute Heavy Makeup is spuriously correlated to Male and Wearing Lipstick biases while not to Young, Double Chin and Chubby biases.888As in the main paper, we suppose that the algorithmic bias exists between target and bias attributes when a baseline model gives a large performance gap between its overall accuracy and unbiased accuracy (e.g., 5% points). As shown in the figure, even with the same target attribute, the gap varies largely depending on bias attributes. We also observe that the algorithmic bias does not exist symmetrically, e.g., the target attribute Chubby is spuriously correlated to Heavy Makeup bias, not vice versa. Compared to the baseline, all methods reduce the algorithmic bias while our framework is more effective than George [30] and as competitive as group DRO [26].

Multi-target classification

We tested our framework with another setting, called multi-target classification, where a single backbone model adopts multiple classification heads. To this end, we attached multiple linear classification layers, which correspond to individual targets, respectively, to a shared feature extractor. For evaluation, we calculate unbiased accuracy for each target attribute separately, where the bias attribute is fixed to gender. Table C presents the multi-target classification results with several target attribute pairs, where our model achieves consistently better results than the compared unsupervised method in terms of unbiased accuracy, while it is as competitive as group DRO [26].

| Unsupervised | Supervised | |||

| Targets | Baseline | George [30] | Ours | Group DRO [26] |

| Blond Hair / Heavy Makeup | 78.92 / 71.46 | 83.06 / 70.99 | 89.78 / 72.25 | 90.38 / 70.94 |

| Blond Hair / Wearing Lipstick | 80.76 / 71.91 | 82.08 / 73.06 | 89.09 / 77.34 | 88.86 / 78.45 |

| Straight Hair / Oval Face | 69.93 / 60.84 | 76.77 / 63.84 | 78.33 / 64.85 | 76.38 / 64.77 |

| Straight Hair / Big Lips | 70.05 / 60.14 | 69.73 / 63.22 | 76.03 / 66.39 | 77.06 / 64.07 |

| Blurry / Pale Skin | 73.89 / 68.18 | 79.51 / 79.45 | 87.35 / 89.28 | 87.82 / 85.91 |

| Blurry / Young | 76.48 / 77.82 | 74.66 / 77.16 | 88.64 / 82.79 | 88.57 / 82.14 |