Upvotes? Downvotes? No Votes? Understanding the relationship between reaction mechanisms and political discourse on Reddit

Abstract.

A significant share of political discourse occurs online on social media platforms. Policymakers and researchers try to understand the role of social media design in shaping the quality of political discourse around the globe. In the past decades, scholarship on political discourse theory has produced distinct characteristics of different types of prominent political rhetoric such as deliberative, civic, or demagogic discourse. This study investigates the relationship between social media reaction mechanisms (i.e., upvotes, downvotes) and political rhetoric in user discussions by engaging in an in-depth conceptual analysis of political discourse theory. First, we analyze 155 million user comments in 55 political subforums on Reddit between 2010 and 2018 to explore whether users’ style of political discussion aligns with the essential components of deliberative, civic, and demagogic discourse. Second, we perform a quantitative study that combines confirmatory factor analysis with difference in differences models to explore whether different reaction mechanism schemes (e.g., upvotes only, upvotes and downvotes, no reaction mechanisms) correspond with political user discussion that is more or less characteristic of deliberative, civic, or demagogic discourse. We produce three main takeaways. First, despite being “ideal constructs of political rhetoric,” we find that political discourse theories describe political discussions on Reddit to a large extent. Second, we find that discussions in subforums with only upvotes, or both up- and downvotes are associated with user discourse that is more deliberative and civic. Third, and perhaps most strikingly, social media discussions are most demagogic in subreddits with no reaction mechanisms at all.
These findings offer valuable contributions for ongoing policy discussions on the relationship between social media interface design and respectful political discussion among users. Our source code is available for public use at https://github.com/civicmachines/political_discourse_reaction_design

1. Introduction

Political exchange among citizens occurs largely on social media platforms. Platforms have become the “de facto public sphere” (Tufekci, 2017) to discuss political topics (Stier et al., 2018), perform political campaigning (Kreiss and McGregor, 2018), and communicate important messages for the pursuit of social causes and protests (Jackson et al., 2020). However, they have also become common places for users to engage in hateful (Mathew et al., 2019) and low-credibility political rhetoric (Vosoughi et al., 2018). Social media platforms are not simply digital representations of offline political activity. They are key spaces for articulation, organization, and implementation of political action (Burrell and Fourcade, 2021). Indeed, they are not intermediaries of communication processes, but function as their curators (Gillespie, 2017). When billions of people interact in a common space, a platform’s design has enormous power over the production, mediation, and dissemination of user discussions. A platform’s choice of communication reaction mechanisms (i.e., “likes,” “upvotes/downvotes” etc.) and its recommendation algorithms critically influence the nature of user interactions on the platform. On the one hand, such reaction mechanisms – as means of evaluation – condition what type of information users produce, and, on the other hand, recommendation algorithms determine what information users will come to interact with (Papacharissi, 2010; Lazer, 2015; Bond et al., 2012; Meta, 2021).

Prior research studies illustrate that social media platforms have transformative effects on political communication, although there are intense debates about whether and how design features influence the quality of discourse among users (Tucker et al., 2018, 2017; Margetts, 2018; Bail et al., 2018; Barberá, 2014). Scholarship on political rhetoric has proposed a vast set of rhetoric devices that are essential to the corresponding modes of political discourse (Ayers, 2013; Monnoyer-Smith and Wojcik, 2012; Engesser et al., 2017; Kushin and Kitchener, 2009; Jennings et al., 2021).

In this research, we first explore to what extent essential components of political discourse theories characterize political discussions on Reddit. Second, we study how specific digital reaction mechanisms on platforms (such as liking, voting, retweeting) that navigate user feedback on content (Dellarocas, 2003) and optimize platforms’ recommendation systems (Covington et al., 2016; TikTok, 2020) impact the prevalence of specific types of political discourse (Halpern and Gibbs, 2013). To this end, we analyze an extensive dataset of political discussions on Reddit against the theoretical framework of three prominent political discourse theories: deliberative, civic, and demagogic discourse. Finally, we answer the following research questions.

- RQ1: To what extent can essential rhetoric components of deliberative, civic, and demagogic discourse describe users’ political discussions on Reddit?
- RQ2: Are different reaction mechanisms (i.e., upvotes, downvotes, no votes) associated with different rhetoric components of political discourse in political discussions on Reddit?

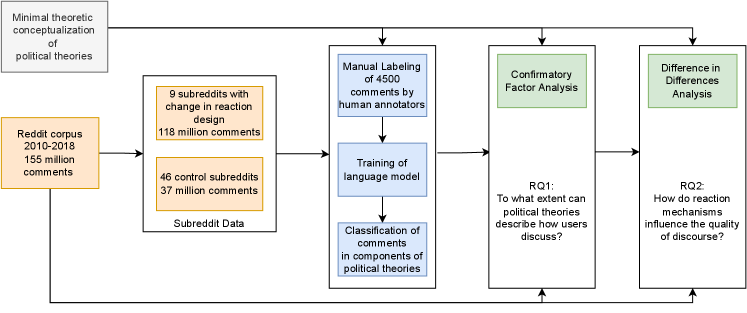

Our study investigates to what extent the essential components of prominent political discourse theories resurface in the political discussions of millions of users on Reddit. That is, do people’s political conversations on Reddit incorporate the rhetoric components suggested by deliberative, civic, and demagogic discourse theory (RQ1)? We then test whether the existence of specific reaction mechanisms (i.e., upvotes, downvotes, no votes) correlates with deliberative, civic, and demagogic rhetoric components in political discussions on Reddit (RQ2). Combining an in-depth account of political discourse theories with a comprehensive, data-driven analysis of social media user discussions is necessary to best inform policy debates on the role of platform reaction mechanisms in creating more civil, respectful, and just user discussion. Figure 1 shows an overview of the entire study.

2. Background & related work

2.1. Understanding political discourse theories

Political theorists and scientists develop analytic frames to understand how people speak when they discuss topics of political relevance (Hicks, 2002; McCoy and Scully, 2002; Bohman and Rehg, 2017; Dahlgren, 2006). In the last century, this line of scholarship has advanced prominent conceptions of political rhetoric, including the ones we study in this work (civic, deliberative, demagogic rhetoric). Deliberative discourse requires the giving and receiving of reasons when discussing propositions (Bohman and Rehg, 2017). In contrast, the rhetoric elements of civic discourse are less constrained by rationalization (Barber, 1989). Demagogic speech tends to oversimplify complex societal issues (Levinger, 2017). We provide an in-depth discussion of these three discourse theories in Section 3.

Nonetheless, defining the exact demarcation lines between political discourse theories remains a contested terrain. Political discourse theories are ideal constructs and as such consist of constitutive components that together ought to represent deliberative, civic, and demagogic discourse. Through an in-depth engagement with scholarship on these three political discourse theories, we carve out their essential rhetoric components and explore to what extent these rhetoric components can describe people’s discussions of political topics on Reddit. We note that our analysis of these political discourse theories (and their subsequent application to social media user comments) necessarily falls back on our interpretation of the literature on political discourse theories. Clarifying our disciplinary backgrounds, our research team consists of political data scientists, philosophers, and computer scientists. While this allowed us to engage in recurring multidisciplinary discussion that mitigated possible biasing effects resulting from a discipline-specific reading of the literature, we wish to highlight that our interpretation of political discourse theories does not claim generalizability or, consequently, perfect external validity. Rather, our goal is to contribute to an ongoing policy discussion and we hope to encourage other scholars to replicate or otherwise perform similar research studies that attempt to bridge contested concepts of political discourse theories with empirical and quantitative analyses of social media discussions.

2.2. Political discourse & social media

Our study builds on and significantly extends research studies that have performed first steps towards understanding social media users’ political rhetoric. For example, using quantitative interviewing, Semaan et al. (Semaan et al., 2014) investigated whether social media users’ interactions could be characterized by deliberative and civic agency. They described deliberation as the presence of reasoned and respectful discussions and civic agency as the ability to interact and participate in the public sphere. Both Friess et al. (Friess et al., 2021) and Wright et al. (Wright and Street, 2007) developed coding schemes for labeling user content as deliberative based on features such as rationality and constructiveness. Guimaraes et al. (Guimaraes et al., 2019) formulated the conversational archetypes “harmony”, “discrepancy”, “disruption”, and “dispute” to describe online political discourse. Lee et al. (Lee and Hsieh, 2013) connected user behavior on social media such as debating, posting or forwarding news, to features of civic engagement. Connecting online and offline behavior, Hampton et al. (Hampton et al., 2017) investigated the association of social media usage with the level of offline deliberation, which they defined as the propensity to discuss political issues with others. Evidently, prior research that investigates political rhetoric on social media has only used basic conceptualizations of political discourse theories. We see this as an opportunity to perform a more in-depth engagement with scholarship on deliberative, civic, and demagogic discourse theories to understand the extent to which their essential components map to political discussions on Reddit.

2.3. Reaction mechanisms, digital environments, & political discourse

Our study also explores whether specific digital reaction mechanisms on Reddit (i.e., upvoting and downvoting) relate to the way users talk about political topics on social media. Previous studies have extensively analyzed how specific platform design features impact how users communicate with each other on the platform.

2.3.1. Platform design and content structure

Focusing on design features that explicitly structure discussions, Rho et al. (Rho and Mazmanian, 2020) showed that the design input of political hashtags on social media influenced the deliberative quality of online discussions, causing an increase in emotional and more black-and-white rhetoric. Kang et al. discuss various policy changes on the South Korean platform Naver, including the removal of negative emoticons, that aimed to reduce the amount of abusive and offensive comments (Kang et al., 2022). Kriplean et al. (Kriplean et al., 2012) built a platform called ConsiderIt that helped users understand previous user posts. By deploying list designs, the platform encouraged users to formulate pros and cons, leading to a higher level of deliberation. Furthermore, Aragón et al. (Aragón et al., 2017) showed that changing a linear to a hierarchical interface design increased social reciprocity on Menéame, a popular Spanish social news platform. In their study, Wijenayake et al. (Wijenayake et al., 2020) manipulated user interactivity and response visibility in an online environment and found that these variables influence the level of conformity of users. Seering et al. found that presenting CAPTCHAs with positive stimuli to users leads them to externalize more positivity of tone and analytical complexity in their arguments (Seering et al., 2019). Liang (Liang, 2017) found that the maximum depth of a Reddit thread, and consequently of the respective discussions, was positively related to its rating (the difference between upvotes and downvotes). In addition, Gilbert (Gilbert, 2013) showed that users’ tendency to focus on submissions that have a higher rating on the platform resulted in an incidental “filtering” of information that would otherwise be of interest to them.

2.3.2. Reaction mechanisms and user behavior

Besides design interventions that structure the content in a digital environment, many research studies have shown that reaction mechanisms have an impact on how users behave. Cheng et al. (Cheng et al., 2014) found that a higher number of downvotes across multiple social media platforms resulted in worsening the quality of discourse, while a higher count of positive reactions did not improve discourse significantly. Khern-am-nuai et al. (Khern-am-nuai et al., 2020) showed that after removing downvoting in a popular public forum, the number of both posts and replies significantly increased. Furthermore, they found a decrease in toxicity and an increase in the diversity of replies. Shmargad et al. (Shmargad et al., 2021) demonstrated that upvoting incivility incentivized users to generate more toxic content. In a field experiment, Matias et al. (Matias and Mou, 2018; Likeafox, 2018) showed that hiding downvotes increased the percentage of commenters who had not been vocal on political subreddits before. On Twitter, Adelani et al. (Adelani et al., 2020) demonstrated that user feedback expressed as likes and retweets significantly affected topic continuation in discussions. Stroud et al. (Stroud et al., 2017) concluded that the type of feedback that users gave by pressing a button (recommend, like, respect) altered the frequency and the scope of its use (see also (Sumner et al., 2020)). Focusing on Reddit, Graham et al. (Graham and Rodriguez, 2021) found that users rarely use the voting reaction mechanisms as community guidelines dictate. Generalizing, Hayes et al. (Hayes et al., 2016) found that users interpreted and applied the same reaction mechanism differently, depending on system, social, and structural factors.

Taken together, these studies underline that reaction mechanisms exert significant influence on user discourse in public online spheres.

2.3.3. Reaction mechanisms and recommender systems

In addition to the direct impact of reaction mechanisms on social media discourse, reaction mechanism designs may have additional indirect consequences since user reactions are often used as signals in training data for recommendation algorithms, such as those used to order news feeds. Both existing (TikTok, 2020) and proposed recommender systems (Babaei et al., 2018; Celis et al., 2019; Bountouridis et al., 2019) take different forms of user feedback as input to suggest content, be that likes, retweets, or other actions facilitated by reaction mechanisms. Such feedback does not always represent explicit user preferences about content, but rather is used as a way to overcome training issues of recommender systems. It is also useful for suggesting content that will keep users engaged, regardless of potential “spill over effects”, i.e., users externalizing further unforeseen behaviors (Zhao et al., 2018; Amatriain et al., 2009; Adomavicius et al., 2013; Hu et al., 2008).

Although user reactions can serve as a proxy, albeit imperfect, for user preferences where ground truth about these preferences is not available, the use of such proxies in training recommendation algorithms can also have undesirable effects. Prior research studies demonstrate that recommender algorithms’ suggestions correlate with user radicalization (Ribeiro et al., 2020), discriminate against users and social groups (Guo et al., 2021), and replicate political bias in discussions (Papakyriakopoulos et al., 2020; Huszár et al., 2022). However, only few studies bridge between political discourse theories, design, and engineering (Hampton et al., 2017; Lucherini et al., 2021) to produce a better understanding of these phenomena.

2.3.4. Reaction mechanisms as technical features

Before we engage in the theoretical discussion on the three political discourse theories, we need to point out the important conceptual distinction between reaction mechanisms as technical features of a platform and platform affordances as relational, community-specific behaviors that result from interacting with reaction mechanisms in a non-deterministic manner (Boyd, 2010; Treem and Leonardi, 2013; Evans et al., 2017). The technical features of digital artifacts (e.g., the downvoting functionality) are exactly the same to each user. However, different users may perceive and use such technical features differently resulting in the different interaction affordances that a common technical artifact provides to users. The key takeaway from this conceptual distinction is that the technical features of an artifact do not necessarily determine how users relate to and use the artifact. Thus, in our study, we refer to upvoting and downvoting as reaction mechanisms because we do not explore how specific online communities and cultures differ in the perception, use, and interactions with such reaction mechanisms.

3. Minimal Conceptualization of political discourse theories

This study investigates essential rhetoric components of deliberation, civic engagement, and demagoguery. Foreshadowing stark differences, demagoguery oversimplifies complex societal issues (Gustainis, 1990; Roberts-Miller, 2005). Demagoguery’s rhetoric polarization lays the groundwork for action-based political mobilization: you are either with “us” or with “them” (Hogan and Tell, 2006; Levinger, 2017). Civic engagement interactions are unstructured and characterized by multiple forms of communication and action, with its discourse being frequently characterized as “messy conversation” that facilitates participation (Dahlgren, 2006). Civic engagement interactions aim to be inclusive – a key goal of civic engagement movements (McCoy and Scully, 2002). Deliberation, from a Habermasian perspective, requires intersubjective propositional knowledge between conversation members (Bohman and Rehg, 2017). That is, knowledge-claims must fulfill standards of intersubjectivity: ideally, all discussants are able to relate to the beliefs that underlie a proposition. Propositions must be grounded in logical plausibility, factual correctness, and communal narratives. Discussants’ claims must have pragmatic value (i.e., “propositionality”) in order to support the group’s goal of reaching an understanding. Only when everyone can relate to the proposed statements can deliberative rhetoric help solve a social, civil, or communal issue that is important to the group (Hicks, 2002).

For our analysis of social media comments, we cannot measure whether a particular social media interaction leads to (or otherwise supports) specific offline actions. Our primary analysis seeks to investigate only the rhetoric components of these three political practices, that is, at the textual level of individual social media comments. We collect and analyze user comments from 55 political subreddits posted between 2010 and 2018. We presuppose a “minimal conceptualization” of demagogic, civic, and deliberative discourse. We here highlight this minimal conceptualization because we do not wish to suggest that an analysis of a textual corpus can fully account for the theoretical and practical complexities attributable to the theories. Nonetheless, it uncovers the extent to which the discursive elements of these theories manifest themselves in the political discussion on Reddit.

In the following section, we outline the conceptual differences and commonalities of demagogic, civic, and deliberative discourse. This conceptual analysis serves to carve out the essential components of the three political discourse theories that we will use for data annotation and, eventually, classification of social media comments using the language model XLnet (Yang et al., 2019).
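To make the idea of a multilabel classification target concrete, the following is a minimal sketch of how a comment's annotated rhetoric components could be turned into a multi-hot vector. It is an illustration only: the component names are taken from Table 1, but the list is an incomplete subset of the study's label set, and `to_multi_hot` is a hypothetical helper that is not claimed to be part of the authors' pipeline.

```python
# Illustrative subset of the rhetoric components named in Table 1.
# This is NOT the study's complete label set.
COMPONENTS = [
    "fact-related argument",
    "structured argument",
    "counterargument",
    "empathy/reciprocity",
    "emotional language",
    "we vs. them",
    "generalized call for action",
    "situational call for action",
]


def to_multi_hot(labels, components=COMPONENTS):
    """Return a 0/1 vector with one position per component (hypothetical helper)."""
    present = set(labels)
    unknown = present - set(components)
    if unknown:
        raise ValueError(f"unknown component(s): {sorted(unknown)}")
    return [1 if component in present else 0 for component in components]


# Hypothetical annotation: one comment may carry several components at once,
# which is what distinguishes multilabel from single-label classification.
example = to_multi_hot(["counterargument", "emotional language"])
print(example)
```

A classifier for this task would then predict one independent probability per position, so that a comment can be assigned zero, one, or several components.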

Table 1 highlights the key rhetoric components we develop for the labeling of social media comments and whether they are (indicated by a +) or are not (indicated by a -) part of demagoguery, civic engagement, or deliberation. In Appendix B, we describe and justify the rhetoric components in more detail and provide further examples of social media comments. Finally, Appendix D, Table 5 offers further explanations of the labels.

3.1. Demagogic discourse

Training set example comment: “You socialist guys sound like superior human beings. I’m really impressed.”

Demagogic discourse oversimplifies, distorts, or exaggerates complex societal challenges and has little regard for the truthfulness of propositions (Gustainis, 1990; Roberts-Miller, 2005). In offering simple solutions that often entail “pseudo-reasoning” (Gustainis, 1990), demagogic statements are difficult to falsify if not even impervious and unresponsive to opposing arguments (Roberts-Miller, 2005). Not only does this impede a constructive exchange of propositions but, for the demagogue, it renders perspective-taking of opposing positions irrelevant. The oversimplification of complex social phenomena results in a polarization that facilitates political mobilization (Levinger, 2017). Demagogic talk aims to contrast social groups, highlighting apparent identity differences and putting them in competition with each other. It promises to care for the needs of “ordinary” people, creating an ethos around the often hateful division into laypeople and experts, elites and the forgotten, or poor and rich (Roberts-Miller, 2005, 2020). The disregard for truthfulness and the evocation of a collective identity based on hate, fear-mongering, and scapegoating means that demagogic rhetoric necessarily contains emotional linguistic components (Gustainis, 1990). For example, it typically expresses fear of outsiders and hatred against elites (Roberts-Miller, 2020; Hogan and Tell, 2006).

Finally, demagogic rhetoric often speaks of a “movement” without specifying the particulars of its policy-making goals. As Levinger (2017) states, such movements rely on general messages that tend to revolve around themes such as “love for the country, its glorious past, degraded present, and utopian future.” (Levinger, 2017). In contrast to civic and deliberative discourse, scholarship on demagogic rhetoric largely agrees on its constitutive components. Its essential rhetoric devices are easier to pinpoint. It is important to note that demagogic political rhetoric and practice exist in many parts of the political spectrum and there are prominent cases for both left-leaning and right-leaning demagogues. Consequently, we do not argue (or implicitly suggest) that all demagogic rhetoric is necessarily right-wing or nationalist only. Demagoguery is an alienating discourse that appeals to the fancies and preconceptions of “ordinary” people, and it flourishes under different conditions and circumstances.

3.2. Civic discourse

Training set example comment: “Maybe we should look into why we’re having more wildfires and address the issues that are causing that.”

Civic engagement aims to mobilize people to solve a commonly defined social or political issue. It allows for speech that remains unconstrained by “overrationalization” (McCoy and Scully, 2002; Barber, 1989). Several authors suggest that civic discourse considers rationality, neutrality, and a lack of emotional talk as hindrances for multiple forms of speech (Dahlgren, 2006; Barber, 1989). “Norms of deliberation” and their associated speaking styles represent social privilege that can have a silencing effect for some participants (Young, 2001). However, the relationship between civic engagement and deliberation is not as clear cut. Some perspectives (for example, (Adler and Goggin, 2005)) claim that civic engagement requires at least some deliberation to enable discussants to work towards a public goal. It needs to connect personal experience with public issues and thus encourages personal anecdotes, storytelling, or brainstorming (McCoy and Scully, 2002). While civic engagement does not place priority on how participants formulate an argument and how well supported arguments are by evidence, it would be wrong to assume that the telling of personal experiences by participants does not contain any truthfulness. Indeed, if such personal anecdotes had no epistemic validity in the life of the community, then they could not produce a sense of connection and interrelatedness that is pivotal for civic engagement (Diller, 2001).

Furthermore, civic discourse presupposes a struggle or conflict for what a group considers to be a valuable civic goal. This requires discussants to listen to each other, take perspective, and critically analyze opposing arguments. As an inherently social practice, civic discourse represents a group’s struggle to define political problems, draw up potential solutions, and mark out specific actions. This often involves intense exchange between engaged citizens that differ on and share perspectives on a single matter. In civic engagement, interactions are both collaborative and competitive.

Successful public engagement processes evolve around a narrative of unity (Hauser and Grim, 2004). In comparison to political parties and trade unions, mobilization in civic engagement is oriented toward well-defined civil issues and causes (Loader et al., 2014). Online, the use of specific hashtagging, e.g., #ferguson or #policebrutality, creates a sense of a collective that often clearly demarcates “who is with us” and “who is not” (Bonilla and Rosa, 2015). Nonetheless, in civic discourse, a collective identity is built around a well-formulated cause. This stands in contrast to demagogic rhetoric that typically affirms a group’s identity through the degradation of another group (Levinger, 2017). Similarly, civic engagement addresses common concerns of a community. Participation aims at specific, practical, and do-able solutions (Dahlgren, 2015), often externalized by protest movements calling for social action and change. This further differentiates civic from demagogic discourse, as the latter relies on more abstract and general messages promoted by social groups (Levinger, 2017). Overall, civic discourse is an identity-based discourse whose success depends on a high level of empathy and practical, action-oriented incentives.

3.3. Deliberative discourse

Training set example comment: “I think those jobs should have a union, I’m in a white-collar union myself (as a teacher), and I have no idea why the private white collar sector shouldn’t. I see corporations as an equal negotiation between management, shareholders, and employees, and all three should have a roughly equal stake, and that’s only possible through unionization.”

Different from demagoguery and civic engagement, deliberation rests on the ideal of reasoning, truth, and truthfulness (Bohman and Rehg, 2017; Hicks, 2002; Young, 2001; Halpern and Gibbs, 2013). Its rhetoric devices consist of logical reasoning and argumentation (Halpern and Gibbs, 2013).

While public reasoning does not (and cannot) fulfill the demands of scientific proof, “(it) should not contradict the claims supported by the best available evidence” (Hicks, 2002): evidence that is publicly available and comprehensible for citizens. Besides drawing on the best available evidence, deliberative reasoning requires interaction that presupposes motivated participants that are able to provide justifications for their assertions (Young, 2001). Deliberative discussions aim to follow a particular structuring order. After rounds of debates, some members of the group may summarize others’ claims and hence evaluate the considerations that speak in favor or against the presented propositions (Halpern and Gibbs, 2013).

Deliberative discourse works in the service of accomplishing a public goal that, eventually, should help improve participants’ lives. Communicative practices allow for and even encourage criticism of other participants’ arguments. However, counter argumentation is only legitimate when it rests on the premises and standards of public reasoning. Otherwise it “transgress(es) the limits of civility” (Hicks, 2002). In deliberative discourse, the ideal of reasoning is intimately connected to the moral principles of respect, equality, and trust (Markovits, 2006; Bohman and Rehg, 2017). Such moral principles are often used to argue that deliberation is inclusive, a claim that has been met with scepticism by some authors (Barber, 1989; Young, 2001).

Deliberative discourse, in contrast to demagogic and civic discourse, does not typically put emphasis on a collective identity. Rather, it presupposes that participants can move beyond their own interests and agree to work toward a common goal. Participants eventually give up their own interest for the sake of a collective identity in civic discourse. In contrast, in deliberative discourse, rhetoric demands for reasoning and truthfulness are supposed to put strong normative pressure on discussants’ interactions. Hicks (2002) states that “citizens agree to justify their political proposals…because they agree to propose and abide by the terms of fair cooperation…they will accept the results of public deliberation as binding and agree to abide by those results even at the costs of their own interests.” (Hicks, 2002). Consequently, deliberative discourse is strongly based on the factuality of content, argumentative completeness, and respect of other discussants.

3.4. Mapping theories to essential components

Our previous discussion demonstrates some of the conceptual plurality inherent to different political discourse theories. After an in-depth engagement with and critical reading of the literature, and several subsequent rounds of discussion among co-authors, we argue that there is sufficient agreement among scholars on the essential components of each type of political discourse to train a classifier that is able to discriminate among them. Developing the corresponding set of labels was a cyclical rather than a linear process. After critical engagement with the cited literature on the three political discourse theories, two co-authors separately developed codes based on their analysis of the most essential components of each political discourse theory. Then, they compared the created categories and, with the aid of a third co-author, agreed on an initial set of components. Through multiple rounds of discussion, two co-authors assigned the essential components to each of the three discourse theories. This process led to further discussion on the definitional scope of the components. Thus, through multiple rounds of discussion between three co-authors, going back and forth between the choices of essential components and their assignment to the three discourse theories, we finally agreed on thirteen essential components that could be operationalized in a multilabel classification task (see final set of components together with their definitions in Appendix B & Table 5). We document disagreement among co-authors on the definition and assignment of some of the components in Appendix B (see Fact-related argument in Appendix B.3 and Identity Labels in Appendix B.8). Table 1 presents an overview of the essential components and how we assigned them to each political discourse theory for our multilabel classification task.
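The signed assignment of components to theories can also be written down as a small lookup structure. The sketch below encodes an excerpt of Table 1 (rows not listed are omitted) and computes a naive signed count per theory for a set of component labels. The `alignment` function and its scoring rule are assumptions made purely for illustration; they are not the statistical models used in this study.

```python
# Excerpt of Table 1: +1 means the component is part of the theory,
# -1 means it is not part of the theory. Rows not listed here are omitted.
TABLE_1_EXCERPT = {
    "deliberative": {
        "fact-related argument": +1,
        "structured argument": +1,
        "counterargument": +1,
        "empathy/reciprocity": +1,
        "we vs. them": -1,
        "generalized call for action": -1,
        "who instead of what": -1,
        "emotional language": -1,
    },
    "civic": {
        "situational call for action": +1,
        "we vs. them": +1,
        "counterargument": +1,
        "empathy/reciprocity": +1,
        "emotional language": +1,
        "collective rhetoric": +1,
        "fact-related argument": -1,
        "structured argument": -1,
        "generalized call for action": -1,
    },
    "demagogic": {
        "you in the epicenter": +1,
        "we vs. them": +1,
        "generalized call for action": +1,
        "fact-related argument": -1,
        "structured argument": -1,
        "empathy/reciprocity": -1,
    },
}


def alignment(labels, table=TABLE_1_EXCERPT):
    """Hypothetical score: sum of the signs of the components a comment carries.

    Components that a theory does not list contribute 0 to that theory.
    """
    unique_labels = set(labels)
    return {
        theory: sum(signs.get(label, 0) for label in unique_labels)
        for theory, signs in table.items()
    }


# A comment labeled with polarizing, generalized mobilization rhetoric.
scores = alignment(["we vs. them", "generalized call for action"])
print(scores)
```

Note that the same component can count for one theory and against another (for example, "we vs. them" is part of civic and demagogic discourse but not of deliberative discourse), which is why the mapping is kept per theory rather than as a single list.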

| Deliberative discourse | |
|---|---|
| Argument is part of theory (+) | Argument is not part of theory (-) |
| fact-related argument (Hicks, 2002; Markovits, 2006; Gastil, 2000; Bohman and Rehg, 2017; Black et al., 2011) | we vs. them (Bohman and Rehg, 2017) |
| structured argument (Hicks, 2002; Markovits, 2006; Black et al., 2011; Robertson et al., 2010) | generalized call for action (Yang et al., 2019; Hicks, 2002; Markovits, 2006) |
| counterargument (Bohman and Rehg, 2017; Halpern and Gibbs, 2013) | who instead of what (Bohman and Rehg, 2017; Hicks, 2002) |
| empathy/reciprocity (Bohman and Rehg, 2017; Young, 2001) | emotional language (Hicks, 2002; Halpern and Gibbs, 2013; Bohman and Rehg, 2017) |
| | unsupported argument (Hicks, 2002; Halpern and Gibbs, 2013; Bohman and Rehg, 2017) |

| Civic discourse | |
|---|---|
| Argument is part of theory (+) | Argument is not part of theory (-) |
| situational call for action (Adler and Goggin, 2005; Dahlgren, 2006; Bonilla and Rosa, 2015; Skoric et al., 2016) | fact-related argument (Barber, 1989; McCoy and Scully, 2002; Dahlgren, 2006) |
| we vs. them (Loader et al., 2014; McCoy and Scully, 2002; Dahlgren, 2006; Bonilla and Rosa, 2015) | structured argument (Barber, 1989; McCoy and Scully, 2002; Dahlgren, 2006) |
| counterargument (Barber, 1989; McCoy and Scully, 2002) | generalized call for action (Adler and Goggin, 2005; Dahlgren, 2006) |
| empathy/reciprocity (Young, 2001; Dahlgren, 2006; McCoy and Scully, 2002; Barber, 1989) | |
| emotional language (Young, 2001; Dahlgren, 2006; McCoy and Scully, 2002; Barber, 1989) | |
| collective rhetoric (McCoy and Scully, 2002; Dahlgren, 2006; Bonilla and Rosa, 2015) | |

| Demagogic discourse | |
|---|---|
| Argument is part of theory (+) | Argument is not part of theory (-) |
| you in the epicenter (Levinger, 2017; Gustainis, 1990) | fact-related argument (Gustainis, 1990; Roberts-Miller, 2005, 2020) |
| we vs. them (Hogan and Tell, 2006; Roberts-Miller, 2020) | structured argument (Gustainis, 1990; Roberts-Miller, 2005, 2020) |
| generalized call for action (Roberts-Miller, 2020; Hogan and Tell, 2006) | empathy/reciprocity (Levinger, 2017; Gustainis, 1990) |
| who instead of what (Gustainis, 1990) | counterargument (Roberts-Miller, 2020, 2005) |
| emotional language (Roberts-Miller, 2005; Gustainis, 1990) | |
| unsupported argument (Roberts-Miller, 2005; Gustainis, 1990) | |
| collective rhetoric (Roberts-Miller, 2020; Hogan and Tell, 2006; Gustainis, 1990) | |

Table 1. Essential components of political discourse

First, demagogic discourse is represented by the presence of collective, emotional and “we vs. them” rhetoric, unsupported arguments (including those that prime identity and group membership), and calls for generalized abstract action. It does not include fact-related, structured and empathetic arguments. The label “collective rhetoric” classified comments that emphasize a collective identity around words such as “we” or “our” in a way that promotes group membership.

Second, in civic discourse, the essential components include emotional, collective, and “we vs. them” rhetoric, together with counterarguments and statements that call for situational action. In contrast, civic discourse explicitly excludes fact-related arguments, structured arguments, and calls for generalized abstract action. The label “we vs. them” classified user comments that contrasted, discriminated against, or degraded another group with the purpose of consolidating the identity of the user’s group. In contrast to the concept “generalized call for action”, “situational call for action” included comments that described a specific policy goal.

Third, deliberative discourse includes fact-related and structured arguments, counterargument statements, the presence of empathy/reciprocity in user comments, as well as the explicit absence of rhetoric that does not provide any evidence (unsupported statements or statements that focus on who is doing something instead of what is happening, emotional language, and generalized abstract calls for action). The concept “fact-related argument” consisted of two types of justifications: empirical and reasoned justifications. When supporting a claim, empirical justifications provided either a direct reference to other sources (e.g., in the form of links or article references) or referred to personal experiences and anecdotes relevant for the overall claim. With the label “empathy/reciprocity” we classified comments that explicitly acknowledged another user’s perspective, claim, or proposition. The identity label “who instead of what” classified comments that referred to a person or social group without specifying any of their behavior or action. In comments with the label “generalized call for action” users stated an explicit need for policy change without providing any justification.

A more detailed description and justification of all argument types, together with examples and explanations for the generation of the minimal conceptualization can be found in Appendix B. Next, we use these essential components as labels for the classification of comments on Reddit to quantify the prevalence of the political discourse theories on the platform.

4. Data & Methods

4.1. Data Collection

To understand whether and to what extent essential components of political discourse theories characterize political discussions on social media, we collected a large volume of user comments from Reddit. We decided to conduct our study on Reddit for three reasons. First, Reddit offers free full access to historical data of the platform. Hence, we were able to perform a large-scale data analysis at the level of an entire ecosystem (Zuckerman, 2021). Second, Reddit discussions are hosted in separate message boards (i.e., subreddits), with each of them having a specific topic of discussion. This allowed us to focus on subreddits with discussions of political topics. Third, Reddit enables moderators of each subreddit to customize their subreddit’s user interface, allowing them to select which reaction mechanisms (upvote, downvote, or both) are available to users. Therefore, Reddit provided an ideal ecosystem to perform our analysis.

To create our pool of Reddit comments, we first generated a list of 55 political subreddits, nine of which changed their available reaction mechanisms at some point (retrieved from (Foontum, 2020)). Then, we used the pushshift API (Baumgartner et al., 2020) to extract all comments created in these subreddits between January 1, 2010 and April 1, 2018. We chose this time frame since many of the subreddits in our sample were created around 2010. Furthermore, moderators have the ability to customize the old Reddit interface, which stopped being the default interface on April 2, 2018 (Liao, 2018). Overall, we collected 155 million comments created during this period. We used the Wayback Machine (archive, 2022) to extract the intervals at which each subreddit introduced a change in their available reaction mechanisms. Table 6 in Appendix D offers an overview of the subreddits used in the study, and Table 7 in Appendix D indicates the date when nine of the political subreddits changed their available reaction mechanisms.

| Label | | | |
|---|---|---|---|
| You in the epicenter | 300 | 0.82 | 0.86 |
| We vs. them | 366 | 0.68 | 0.79 |
| Generalised call for action | 371 | 0.87 | 0.90 |
| Situational call for action | 304 | 0.96 | 0.96 |
| Who instead of what | 345 | 0.87 | 0.90 |
| Fact-related argument | 1307 | 0.89 | 0.78 |
| Structured argument | 1124 | 0.90 | 0.88 |
| Counter-argument structure | 563 | 0.91 | 0.83 |
| Empathy/reciprocity | 329 | 0.90 | 0.86 |
| Emotional language | 438 | 0.74 | 0.77 |
| Collective rhetoric | 469 | 0.81 | 0.84 |
| Unsupported argument | 422 | 0.67 | 0.76 |
| Other | 874 | 0.91 | 0.84 |

4.2. Annotation & model training

To map our minimal conceptualization of deliberative, demagogic, and civic discourse to discussions on Reddit, we labeled a set of comments from our Reddit corpus and trained a large language model in a multilabel classification task. Two coders labeled 4,500 unique comments with at least one of the thirteen types of essential components extracted from our theoretical analysis (see label development documentation in Section 3.4 and Appendix B). To ensure intercoder reliability and the representativeness of the sample, we performed the following procedures. First, coders discussed the developed minimal theoretic conceptualization, reviewed predefined examples for each class, and resolved any questions about the nature of the classes. Then, both coders labeled the same set of 100 random comments from the corpus, yielding a Krippendorff’s alpha of 0.7. After discussing prevailing differences in the coding tactics, coders adjusted their coding to conform more closely to the theoretic framework. Coders then relabeled the same set of comments, yielding an intercoder reliability of 0.75. This was higher than the expected minimum for coding complicated language tasks in the literature (>0.6; see, e.g., (Sap et al., 2019; Morstatter et al., 2018; Daxenberger and Gurevych, 2012; Amidei et al., 2019; Engelmann et al., 2022; Ullstein et al., 2022)). Since coders’ labeling practices were robust, they proceeded with the selection and labeling of further comments. Specifically, an initial sample of 1,650 comments was labeled, containing 30 comments from each subreddit in the dataset. Next, a second batch of 1,000 comments was annotated, which we randomly selected by stratified sampling among subreddits. To assess how many comments were necessary for an accurate classifier, we trained a preliminary language model on these 2,750 comments, finding that reliable prediction for each class required at least 300 observations. To satisfy this condition, we continued labeling comments by stratified random sampling until an annotated sample of 4,500 comments was produced.
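The reliability check above can be illustrated with a minimal sketch of Krippendorff’s alpha for two coders and nominal data. The text does not state how alpha was aggregated over the thirteen labels, so the sketch assumes the simplest case: one binary judgment (label present or absent) per comment, with no missing ratings. The function name and the toy ratings are hypothetical.

```python
from collections import Counter
from itertools import permutations


def krippendorff_alpha_nominal(coder_a, coder_b):
    """Krippendorff's alpha for two coders, nominal data, no missing values."""
    if len(coder_a) != len(coder_b):
        raise ValueError("both coders must rate the same units")
    # Coincidence matrix: every unit contributes the ordered pairs (a, b) and (b, a).
    coincidences = Counter()
    for a, b in zip(coder_a, coder_b):
        coincidences[(a, b)] += 1
        coincidences[(b, a)] += 1
    # Marginal totals per category.
    totals = Counter()
    for (category, _), count in coincidences.items():
        totals[category] += count
    n = sum(totals.values())  # number of pairable values (two per unit)
    observed = sum(count for (c, k), count in coincidences.items() if c != k)
    expected = sum(totals[c] * totals[k] for c, k in permutations(totals, 2))
    if expected == 0:
        return float("nan")  # alpha is undefined when only one category occurs
    return 1.0 - (n - 1) * observed / expected


# Toy example (hypothetical ratings): 1 = label present, 0 = label absent.
alpha = krippendorff_alpha_nominal([0, 0, 1, 1], [0, 0, 1, 0])
print(round(alpha, 3))
```

With thirteen labels, one option would be to compute alpha per label and report the mean; whether the reported 0.7 and 0.75 were obtained that way is not specified in the text.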

For the final model, we split the corpus of 4,500 comments into an 80-20 train/test set. We kept the capitalization and punctuation of comments in their original form and removed quoted content whenever a user was quoting another user. To train our model, we used the large language model XLNet (Yang et al., 2019). XLNet is an architecture that combines transformers and auto-regressive modeling. We selected XLNet over other commonly used language models such as BERT (Devlin et al., 2018) because it still holds the top performance in multiple text classification benchmarks (e.g., first place in Amazon-5, Amazon-2, DBpedia, Yelp-2, AG News, second place in IMDb, Yelp-5) (with Code, 2022). We applied a warm-up initialization of 0.1, a learning rate of 3e-5, and a maximum sequence length of 100 words. Our final model resulted in a label ranking average precision score of 0.91, while all class-specific F1 scores were higher than 0.87. To ensure the robustness of our model, we additionally created an evaluation set of 200 comments, in which each class of the dataset appeared at least ten times. On the evaluation set, the model achieved an accuracy of 0.72 and a label ranking average precision score of 0.85, while all class-specific F1 scores were higher than 0.76 (Figure 2). Given the obtained model accuracy, we then analyzed a total of 155 million comments in our corpus.
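For readers unfamiliar with the label ranking average precision (LRAP) score reported above, the following sketch implements the usual definition of the metric in plain Python (the same definition scikit-learn documents for `label_ranking_average_precision_score`). It is an illustration only and not the evaluation code used in the study; the toy labels and scores are hypothetical.

```python
def label_ranking_average_precision(y_true, y_score):
    """Mean over samples of the average precision of each true label's rank."""
    total = 0.0
    for truth, scores in zip(y_true, y_score):
        relevant = [j for j, flag in enumerate(truth) if flag]
        # Convention: a sample with no true labels, or only true labels, scores 1.
        if not relevant or len(relevant) == len(truth):
            total += 1.0
            continue
        sample_score = 0.0
        for j in relevant:
            # Rank of label j: number of labels scored at least as high.
            rank = sum(1 for s in scores if s >= scores[j])
            # Number of true labels among those ranked at or above label j.
            hits = sum(1 for k in relevant if scores[k] >= scores[j])
            sample_score += hits / rank
        total += sample_score / len(relevant)
    return total / len(y_true)


# Two comments, three candidate labels each (hypothetical values).
y_true = [[1, 0, 0], [0, 0, 1]]
y_score = [[0.75, 0.5, 1.0], [1.0, 0.2, 0.1]]
print(label_ranking_average_precision(y_true, y_score))
```

A score of 1 means that, for every comment, all true labels are ranked above all false ones, which is why the metric suits a multilabel task in which a comment can carry several rhetoric components at once.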

5. Answering RQ 1: To what extent can essential rhetoric components of deliberative, civic, and demagogic discourse describe users’ political discussions on Reddit?

5.1. Confirmatory factor analysis

To understand to what extent the essential components of deliberation, civic discourse, and demagoguery characterize social media discussions, we conducted a confirmatory factor analysis (CFA). CFA is generally used to test hypotheses about plausible model structures (Bollen, 1989), and has commonly been used to model different types of data, from survey information to time-series (Bollen and Curran, 2006). Until now, the application of CFA in NLP-driven questions has been limited (e.g., (Park et al., 2017)), and our study serves as an inspiration for exploiting its capabilities, but also understanding its limits in machine-learning based research. Factor analysis allowed us to mathematically represent the political theories as latent unobserved variables (factors) as described by a set of observed variables (items). The observed variables corresponded to the different rhetoric components as predicted by the language model. We chose CFA rather than exploratory factor analysis (EFA) since we were investigating whether a specific conceptualization of discourse theories empirically characterized social media discussions, and not which general argument structures were best described by our data. With CFA, we could construct structures of variables that complied with the minimal conceptualizations of political theories and by assessing the quality of model-fit, we explored to what extent political theories can describe how users discuss political topics on Reddit. Furthermore, we quantified which arguments were empirically associated with which theories and their corresponding magnitude of importance.

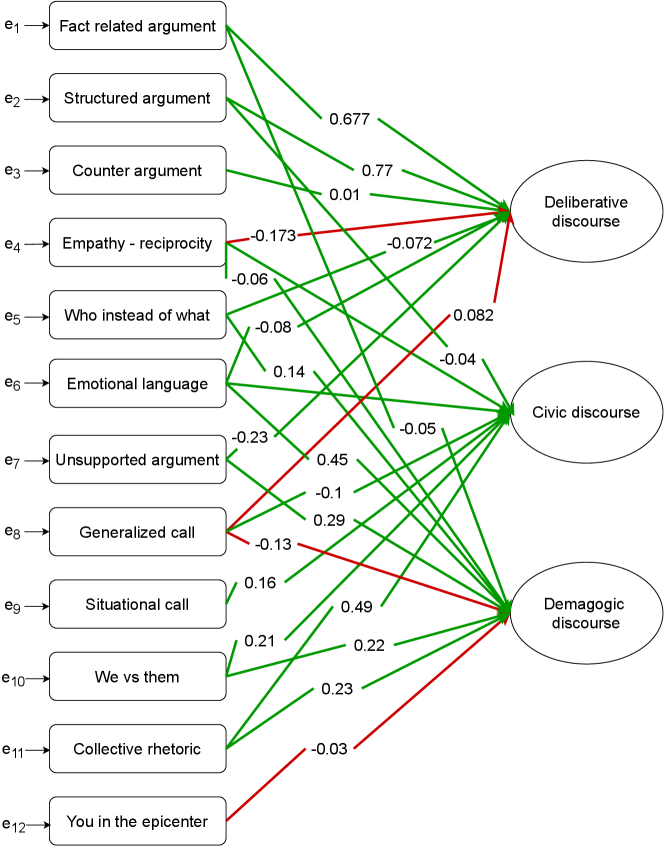

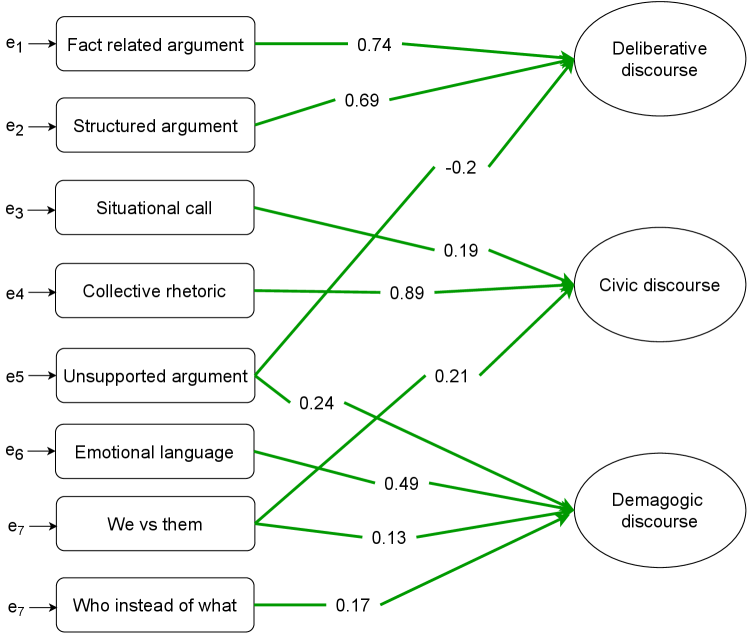

We created CFA models in which each political theory was represented as a function of all or a subset of the arguments that compose it, following the minimal conceptualization we performed (see Table 1). We did so by applying the algorithm depicted in Algorithm 1. We initiated a null model in which the latent factors are not loaded by any variable, and by iterating over the list of argumentations, we added them to the equation of theories that are associated with them. An argumentation was associated with a specific theory if, according to the theory, it explicitly appears in it or is absent from it. For example, both emotional rhetoric and structured argument are associated with demagogic discourse, because the theory dictates that emotional language is its constitutive element, but also that well-formed arguments are absent from it. Therefore, we hypothesized a positive loading of the emotional rhetoric item and a negative loading of the structured argument item on the factor of demagogic rhetoric. In contrast, counterargument structure is not associated with demagogic rhetoric according to the theories we engaged with and hence we did not load it on the factor at all. For each addition of an argumentation, we calculated the CFA model and stored its Comparative Fit Index (CFI) score (Bentler and Bonett, 1980) as a metric for model fit. After finishing the process, we selected two models. We kept the model with the highest CFI that converged without errors, and the model with the most loaded arguments that converged without errors. The first model revealed those elements of the theories that were used in discussions on Reddit, while the second described how well the closest empirical model to the theories described political discussions on Reddit.
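The iterative procedure described above can be sketched as follows. This is a minimal illustration under stated assumptions and not a reproduction of Algorithm 1: additions are assumed to be cumulative, the model is rendered in lavaan-style syntax (`factor =~ item1 + item2`), and the actual CFA estimation is hidden behind a caller-supplied `fit` function. The names `build_models`, `to_syntax` and `toy_fit`, the item names, and the toy association list are all hypothetical, and `toy_fit` has no statistical meaning.

```python
from typing import Callable, Dict, List, Tuple

Model = Dict[str, List[str]]  # latent factor -> items loaded on it


def to_syntax(model: Model) -> str:
    """Render a model as lavaan-style measurement equations."""
    return "\n".join(
        f"{factor} =~ {' + '.join(items)}" for factor, items in model.items() if items
    )


def build_models(
    associations: Dict[str, List[str]],
    fit: Callable[[Model], Tuple[bool, float]],
) -> Tuple[Model, Model]:
    """Add items one by one, refit, and keep the best and most expansive models."""
    model: Model = {}  # null model: no item loads on any factor
    history: List[Tuple[Model, float]] = []
    for item, factors in associations.items():
        for factor in factors:
            model.setdefault(factor, []).append(item)
        snapshot = {f: list(items) for f, items in model.items()}
        converged, cfi = fit(snapshot)
        if converged:  # only models that converge without errors are candidates
            history.append((snapshot, cfi))
    if not history:
        raise RuntimeError("no model converged")
    best = max(history, key=lambda entry: entry[1])[0]
    expansive = max(history, key=lambda e: sum(len(v) for v in e[0].values()))[0]
    return best, expansive


def toy_fit(model: Model) -> Tuple[bool, float]:
    """Stand-in for a real CFA fit: returns (converged, CFI) from loading counts."""
    n_loadings = sum(len(items) for items in model.values())
    return n_loadings != 9, 1.0 - 0.01 * abs(n_loadings - 6)


associations = {
    "fact_related": ["deliberative", "civic", "demagogic"],
    "structured": ["deliberative", "civic", "demagogic"],
    "collective": ["civic", "demagogic"],
    "situational_call": ["civic"],
}
best, expansive = build_models(associations, toy_fit)
print(to_syntax(best))
```

In practice, `fit` would wrap a structural equation modeling package and return the estimated CFI together with a convergence flag; the selection logic stays the same.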

For all models we created, we allowed factors to correlate since discourse theories shared commonalities and differences in their essential components. Thus, we also included cross-loadings in our models. Moreover, we did not use a cut-off when evaluating the magnitude of factor loadings. Studies suggest using a cut-off given a small sample size (Guadagnoli and Velicer, 1988; Stevens, 2012) (e.g., less than 1000 observations) to avoid type I or type II errors. In our case, the sample size was on the order of millions, rendering all detected associations statistically significant. Therefore, our criterion for evaluating a factor loading was theory-driven only. Each discourse theory was described by at least nine elementary arguments, and a user comment would rarely include more than two argumentation types at the same time, as most comments on Reddit do not exceed two to three sentences. Thus, besides dominant loadings with high coefficients, we did not automatically reject loadings with low values (even <0.2), as even arguments appearing sparsely could be plausible and theory-conforming. A simulation that illustrates this case can be found in Appendix C. In contrast, our model selection process was primarily informed by overall model fit, as adding variables that did not comply with the model structure would result in lower values of CFI and TLI.

Next, after selecting the best and most expansive model, and by drawing from the developed theoretic framework, we sought to answer whether political discourse theories could describe how people talked on political subreddits between 2010-2018.

5.2. Results for RQ1

The CFA results show that the discourse theories characterize political discussions on Reddit, aligning to a large extent with the conceptualizations of political theorists. Deliberative, civic, and demagogic discourse were present in the subreddits we studied, with user comments containing rhetoric components belonging to the theories. Table 4 presents which items (arguments) loaded on each theory for the best and most expansive model (see also the diagrams in Figures 3 and 4 in the Appendix), while Table 3 provides the corresponding goodness-of-fit metrics.

| | Best model | Expansive model |
|---|---|---|
| CFI | 0.97 | 0.85 |
| | 0.95 | 0.74 |
| RMSEA | 0.027 | 0.044 |
| | 0.016 | 0.028 |

The best model showed a very good fit (CFI > 0.95) and contained at least three items for each discourse construct. When users performed deliberative discussions, they created fact-related and structured arguments, with the variables’ loadings being 0.743 and 0.691, respectively. Furthermore, they avoided generating unsupported statements (-0.202). Civic discourse on Reddit was characterized mostly through statements of collective rhetoric (0.891), together with statements calling for situational action and “we vs. them” statements.

As expected, “we vs. them” rhetoric was also present in users’ demagogic discussions, although the strongest argumentation type in them was the generation of emotional comments (0.485), followed by unsupported arguments and “who instead of what” rhetoric. Factors covaried pairwise (demagogic and civic, civic and deliberative, demagogic and deliberative), as discourses shared specific argumentation types.

The expansive model included 24 out of the 28 theoretical item-factor associations. Again, factors correlated pairwise (demagogic and civic, civic and deliberative, demagogic and deliberative). Nonetheless, the model’s fit was borderline, since RMSEA was acceptable (0.044, lower than 0.05), but CFI was not (0.85, lower than the generally recommended threshold of 0.9) (Xia and Yang, 2019). The additional variables that were not included in the best model generally had weak associations with the constructs, except in four cases. For civic discourse, “empathy/reciprocity” was associated with the construct, while collective rhetoric loaded on demagogic discourse. These associations complied with the theoretical conceptions of the theories. In contrast, empathy/reciprocity and generalised call for action loaded on deliberative and demagogic discourse, respectively. This contradicted the theoretical conceptualization of these two discourse theories.

| Deliberative discourse | Values |
|---|---|
| Theory | +, +, +, +, -, -, -, -, - |
| Expansive model | 0.677, 0.7772, 0.005, -0.173, 0.082, -0.072, -0.083, -0.225 |
| Best model | 0.743, 0.691, -0.202 |

| Civic discourse | Values |
|---|---|
| Theory | +, +, +, +, +, +, -, -, - |
| Expansive model | 0.162, 0.208, 0.2347, 0.062, 0.494, -0.037, -0.098 |
| Best model | 0.194, 0.211, 0.891 |

| Demagogic discourse | Values |
|---|---|
| Theory | +, +, +, +, +, +, +, -, -, - |
| Expansive model | -0.025, 0.223, -0.132, 0.142, 0.453, 0.291, 0.227, -0.052, -0.063 |
| Best model | 0.133, 0.165, 0.485, 0.235 |

The CFA results reveal a clear connection between theory and social media discussions, and also show which key rhetoric components of deliberative, civic, and demagogic discourse describe user interactions in our sample (RQ1). Although user comments included central features of each discourse, such as fact-related and structured arguments in deliberation, collective rhetoric in civic discourse, and unsupported, emotional, and identity-related (“we vs. them”) statements for demagogic discourse, other properties ascribed by theorists to each discourse did not empirically connect to the latent constructs. Nonetheless, there is sufficient overlap between theoretical conceptions of political discourse and discussions taking place on social media, which also allows us to answer RQ2: whether a specific part of the platform’s digital environment, its available reaction mechanisms, relates to the prevalence of the above political discourse types.

6. Answering RQ 2: Are different reaction mechanisms (i.e., upvotes, downvotes, no votes) associated with different rhetoric components of political discourse in political discussions on Reddit?

6.1. Difference in Differences Analysis (DID)

On Reddit, subreddit moderators are free to customize the user interface. In particular, moderators can determine the types of reaction mechanisms (upvote, downvote) that subreddit members can use for interacting with other members. This creates a rich pool of behavioral data that describe political discourse dynamics in the presence or absence of different reaction mechanisms. To assess this relationship, we created a quantitative model based on a difference in differences (DID) analysis that compares how specific interventions, i.e., the change of available reaction mechanisms by moderators within subreddits, relate to changes in the type of political discourse among users. In general, DID analysis attempts to measure the effects of a sudden change in the environment, policy, or general treatment on a group of individuals or entities (Goodman-Bacon, 2021). It evaluates how the time-series or observations of a treatment group suddenly change based on a specific intervention, compared to a control group that is not subjected to the treatment. DID largely assumes that in the absence of treatment, the average outcomes for treated and comparison groups would have followed parallel paths over time, without any significant variation (Callaway and Sant’Anna, 2021). Therefore, any difference between treatment and control after the intervention can be attributed to the intervention itself, revealing causal relations. In our case, although we apply DID and fulfill the parallel-trends assumption in pre-treatment periods, we are still careful in reporting our results, which we treat as mainly observational. The detected associations relate only to the specific social media platform and time periods; generating generalizable knowledge requires more detailed experimentation and further research.
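The core DID logic can be illustrated with the canonical two-group, two-period estimator. This is a simplified sketch and not the regression model used in this study, which additionally includes subreddit intercepts, year effects, nest level, and moderation controls. The function name and the toy factor scores are hypothetical.

```python
from statistics import mean


def did_estimate(treat_pre, treat_post, control_pre, control_post):
    """Two-by-two difference in differences on group means.

    The change in the control group stands in for what would have happened
    to the treated group without the intervention (parallel-trends assumption).
    """
    treated_change = mean(treat_post) - mean(treat_pre)
    control_change = mean(control_post) - mean(control_pre)
    return treated_change - control_change


# Hypothetical discourse factor scores before and after a reaction-mechanism change.
effect = did_estimate(
    treat_pre=[0.1, 0.3],
    treat_post=[0.5, 0.7],
    control_pre=[0.0, 0.2],
    control_post=[0.2, 0.4],
)
print(round(effect, 3))
```

Subtracting the control group's change removes shifts that affect both groups alike, such as platform-wide trends, so that only the additional change in the treated group remains.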

To evaluate changes in reaction mechanisms, we selected posts from “treatment” subreddits that underwent a change in a reaction mechanism. We defined the “baseline” period as the period of time in which the subreddit operated with the default reaction mechanism (i.e., both up and down votes were available) and the “intervention” period as the period of time after the change in reaction mechanism was implemented. In our data, all subreddits started with the same default reaction mechanisms. Then, moderators had the option to change the reaction mechanism. As a result, any change in political discourse between the baseline and intervention period can reflect a maturation effect (Campbell and Stanley, 2015) rather than an effect of the intervention. To account for this possibility (and besides controlling for time), for each treatment subreddit, we also created a matched “control” sample from a subreddit that did not undergo a change in reaction mechanism but that otherwise had the same characteristics as the treatment subreddits during their baseline periods.

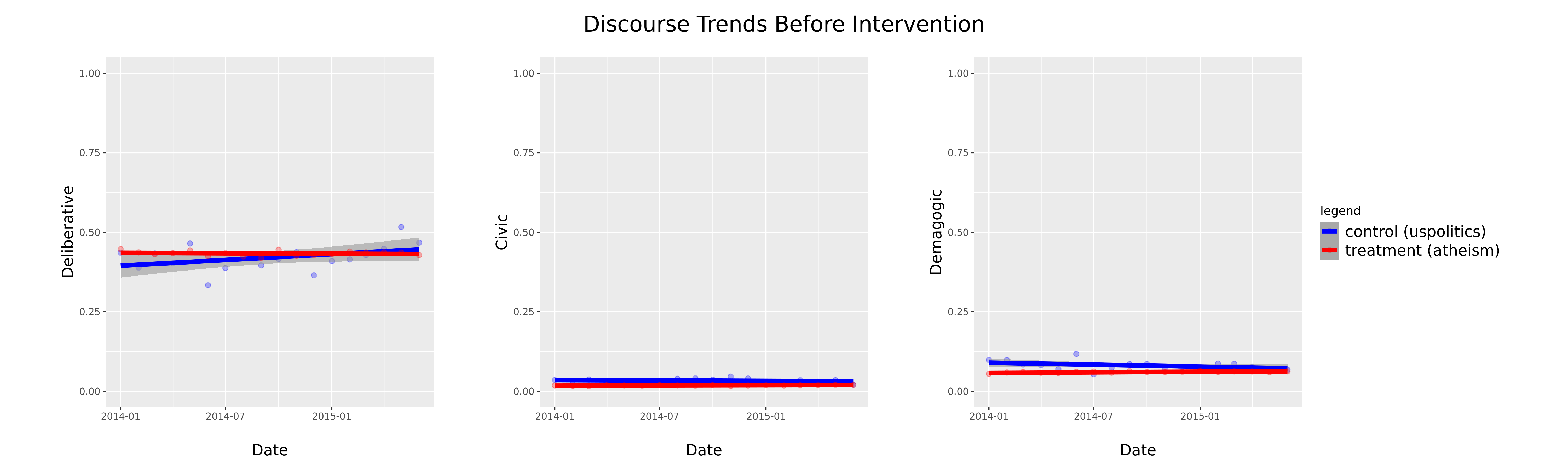

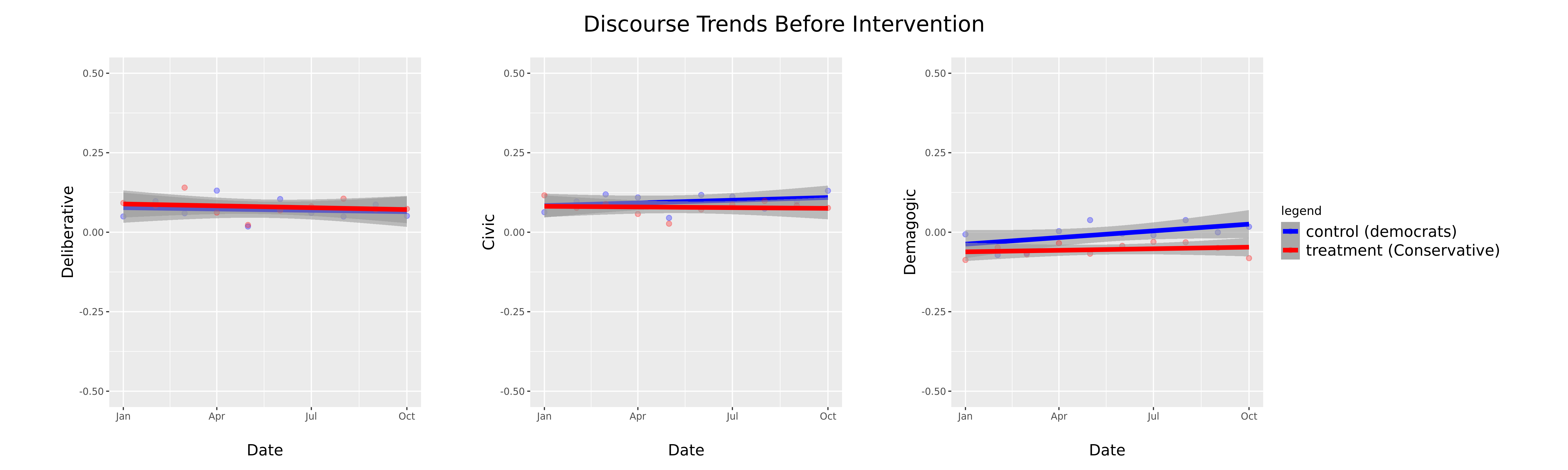

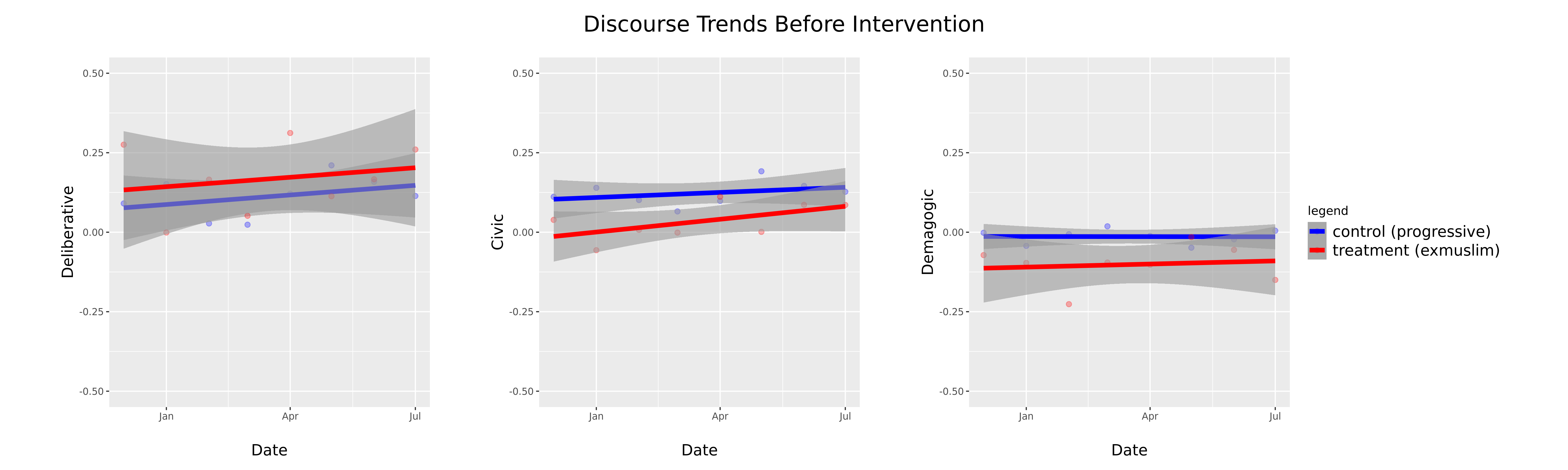

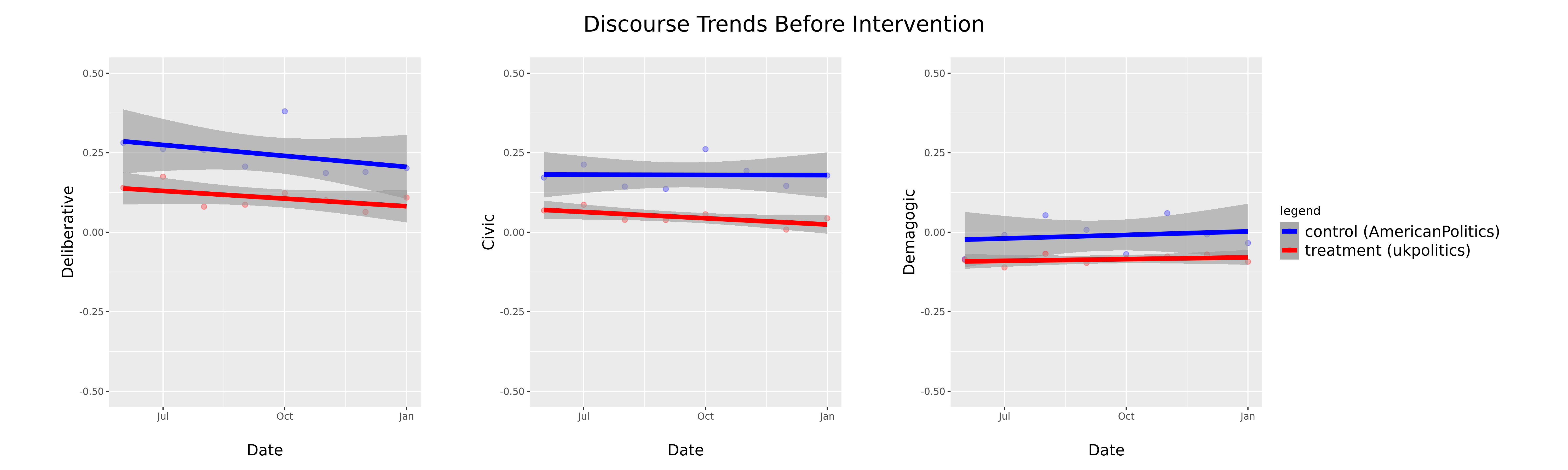

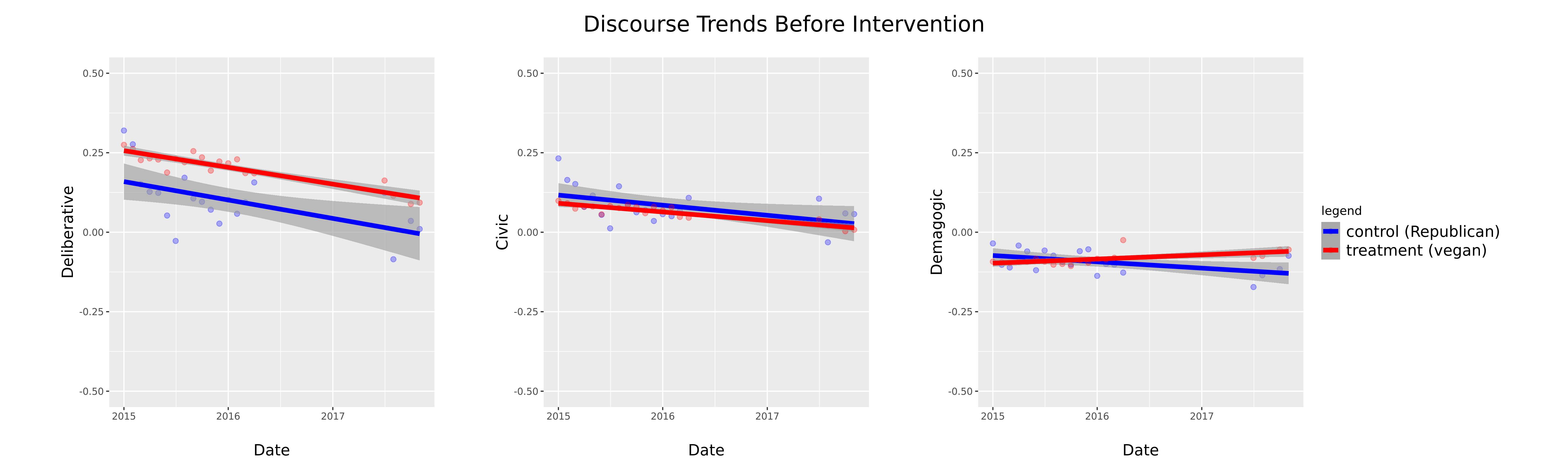

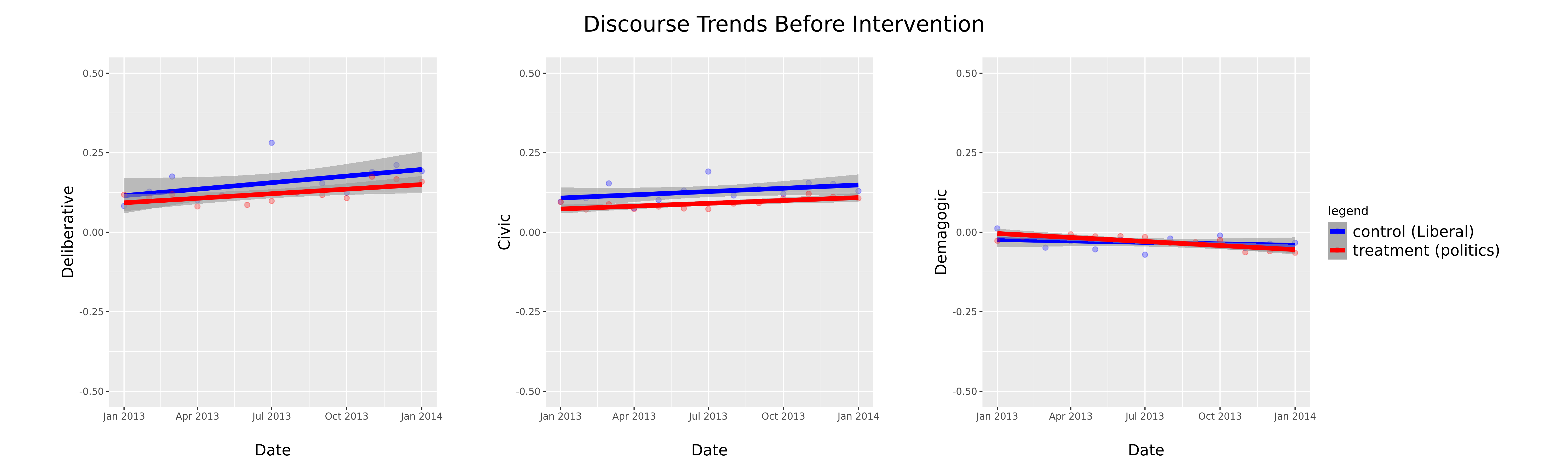

We first selected all posts from subreddits that did not undergo a mechanism change but that were posted within the baseline or intervention period for each individual treatment subreddit. By aligning posts made during the same time window for the treatment and control subreddits, we could control for any overarching maturation effects that might have similarly affected posts in both the treatment and control subreddits (e.g., changes in world politics). We then calculated the Pearson correlation between the average discourse elements’ scores for posts made during each treatment subreddit’s baseline period and the average discourse elements’ scores for posts from each potential control subreddit made during that same period. For each treatment subreddit, we then selected the control subreddit for sampling that had: 1) a large number of posts during both the baseline and intervention period for the matched treatment subreddit; and 2) a high correlation between average discourse elements’ scores during the treatment subreddit’s baseline period. A high correlation coefficient between control and treatment subreddits ensured that the “parallel trend” assumption of the DID design was fulfilled. The extracted treatment and control subreddits are presented in Table 8, together with exemplary diagnostic plots in Appendix F. These plots verified that the matched time-series were similar in levels and in trends, as advised by Kahn-Lang and Lang (Kahn-Lang and Lang, 2020), who argued for a more careful selection of control and treatment groups.
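The matching step described above can be sketched as follows. The sketch assumes that each subreddit is summarized by a vector of average discourse-element scores over the treatment subreddit's baseline period, and that “a large number of posts” is operationalized as a minimum count. The threshold `min_posts`, the subreddit names, and all numbers are hypothetical.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sd_x = sqrt(sum((a - mean_x) ** 2 for a in x))
    sd_y = sqrt(sum((b - mean_y) ** 2 for b in y))
    if sd_x == 0 or sd_y == 0:
        return 0.0  # a constant profile carries no information for matching
    return cov / (sd_x * sd_y)


def pick_control(treatment_profile, candidates, min_posts):
    """Return (name, correlation) of the best-matching eligible control subreddit.

    candidates maps a subreddit name to a tuple
    (baseline profile, posts in baseline period, posts in intervention period).
    """
    scored = [
        (pearson(treatment_profile, profile), name)
        for name, (profile, n_base, n_interv) in candidates.items()
        if n_base >= min_posts and n_interv >= min_posts
    ]
    if not scored:
        raise ValueError("no candidate has enough posts in both periods")
    correlation, name = max(scored)
    return name, correlation


treatment = [0.47, 0.11, 0.03, 0.20]
candidates = {
    "r/candidate_a": ([0.45, 0.12, 0.04, 0.22], 5000, 6000),
    "r/candidate_b": ([0.10, 0.40, 0.30, 0.05], 9000, 9000),
    "r/candidate_c": ([0.47, 0.11, 0.03, 0.20], 50, 40),  # too few posts
}
print(pick_control(treatment, candidates, min_posts=1000))
```

Filtering on volume before ranking by correlation keeps a sparsely populated subreddit from being selected merely because a handful of posts happen to mirror the treatment profile.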

To further control for other factors that could plausibly affect the outcome, we again used the Wayback Machine (archive, 2022) to extract the different moderation rules that existed in each subreddit during the investigated period. Since we analyzed how people discuss within each community, we controlled for further factors that could have influenced interactions (Perrault and Zhang, 2019; Andalibi et al., 2016). We created six variables that represent different moderation rules that could be associated with how user discourse takes place (Matias, 2019). Anonymity describes whether a user’s real identity should remain hidden in a subreddit. No troll encompasses guidelines that explicitly prohibit trolling or spamming behavior, i.e., the usage of inflammatory language or the repeated posting of nonconstructive information, which can make discourse less deliberative. No hate-speech forbids the usage of offensive and hateful speech towards individuals and social groups, which generally leads to emotional and ungrounded rhetoric. Civility encompasses direct prompts in the guidelines to use civil language. Deliberation includes moderation rules that promote evidence-based arguments and multi-perspective discussions. Finally, in-group marks subreddits that focus only on the perspective of one social group (e.g., r/vegan, r/enoughtrumpspam) and explicitly mention in their guidelines that other opinions about an issue will not be tolerated, potentially leading to more “we vs. them” rhetoric and fewer counterargument structures. These variables serve as proxies of either the implementation of rules that explicitly aimed to alter the nature of discourse (Saha et al., 2020; Birman, 2018; Dosono and Semaan, 2019) or rules that were implemented because of abrupt incidents and patterns in user dynamics that moderators wanted to control (Thach et al., 2022; Squirrell, 2019; Kiene et al., 2016). Besides these moderation rules, we also used the “nest level” of a comment as a control. The nest level quantifies how deep a comment appeared in a specific discussion.

We detected three main interventions in subreddits. In the first, a set of subreddits changed the baseline reaction mechanisms (up/down votes) to “only upvoting” (intervention A). In the second, a further set of subreddits changed “only upvoting” to “no voting”, i.e., the absence of available reactions (intervention B). In the third, a set of subreddits changed directly from the baseline to “no voting” (intervention C). Therefore, we created three different DID models, each including the “treatment” subreddits together with their matched “control” subreddits, of the general form:

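$$Y^{d}_{c,s,n,i,t} = \alpha^{d}_{s} + \beta^{d}_{i}\, I_{i} + \gamma^{d}\, n + \delta^{d}_{t} + \sum_{m=1}^{6} \theta^{d}_{m}\, M_{m} + \varepsilon_{c} \qquad (1)$$

where $Y^{d}_{c,s,n,i,t}$ is the value of the latent variable for discourse $d$ as predicted by the best CFA model for a specific comment $c$ that belongs to subreddit $s$ and has the nest level $n$, given the presence (absence) of intervention $i$ at a specific year $t$. $\alpha^{d}_{s}$ corresponds to the intercept for each discourse $d$ at subreddit $s$, $\beta^{d}_{i}$ to the relationship size between intervention $i$ and discourse type $d$, $\gamma^{d}$ to the relationship between a comment’s nest level and the prevalence of discourse $d$, $\delta^{d}_{t}$ to the general discourse prevalence at a specific year, and $\theta^{d}_{m}$ to the estimator describing the association between the six moderation types $m$ (with indicators $M_{m}$) and each discourse $d$; $\varepsilon_{c}$ is the error term. For each of the three models, $I_{i}$ represents a change of the corresponding reaction mechanisms for the “treatment” subreddits.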

This model structure is essentially based on Chiou and Tucker (Chiou and Tucker, 2018), who measured the effects of social media platform interventions on advertising and user sharing behavior. It allows us to take the subreddit’s discourse heterogeneity into account, ensuring that the results are not driven by the subreddit with the largest number of observations, while controlling for each subreddit’s specific effects and time effects. In our model, we exploit the fact that the change in reaction mechanisms was not caused by the political discourse taking place in user discussions. Reaction design interventions were related to how users misused the buttons (i.e., they used them to declare content preference over content fit in the subreddit, violating the unofficial reddit rules) (Yarosh, 2017). They also corresponded to moderators’ theoretic conceptions about whether the logic of up- or downvoting conformed to the scope of the subreddit (Users, 2022), hence being unrelated to whether users generated language containing specific rhetoric components. Overall, the models for interventions A (up/downvotes to only upvotes), B (upvotes to no votes) and C (up/downvotes to no votes) included 48, 65, and 13 million observations, respectively. Given the large number of observations, we created 100 mutually exclusive stratified samples for each case, on which we ran the models, and calculated the corresponding mean values by bootstrapping. We ran models on a random sample of 1% of the observations, and extracted standard errors clustered by subreddit, in order to avoid issues caused by the non-independence of observations within each subreddit (see e.g., (Bertrand et al., 2004)).
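The sampling scheme above can be sketched in a few lines. The sketch assumes that stratification is by subreddit and that the per-sample estimates are combined by a simple mean; the bootstrap and clustered standard errors are not reproduced. The function names and the toy data are hypothetical.

```python
import random
from collections import defaultdict
from statistics import mean


def stratified_partitions(observations, key, k, seed=0):
    """Split observations into k disjoint samples that preserve stratum shares."""
    rng = random.Random(seed)
    strata = defaultdict(list)
    for obs in observations:
        strata[key(obs)].append(obs)
    parts = [[] for _ in range(k)]
    offset = 0
    for name in sorted(strata):
        members = strata[name]
        rng.shuffle(members)
        # Deal members round-robin so every sample gets its share of the stratum.
        for i, obs in enumerate(members):
            parts[(offset + i) % k].append(obs)
        offset += len(members)
    return parts


def mean_estimate(parts, estimator):
    """Run an estimator on every sample and average the results."""
    return mean(estimator(part) for part in parts)


# Toy data: (subreddit, discourse score) pairs with hypothetical values.
observations = [("r/a", i) for i in range(40)] + [("r/b", i) for i in range(20)]
parts = stratified_partitions(observations, key=lambda obs: obs[0], k=4)
print([len(part) for part in parts])
print(mean_estimate(parts, lambda part: mean(score for _, score in part)))
```

With k = 100, each sample holds roughly 1% of the observations, and because the samples are disjoint, no comment contributes to more than one model run.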

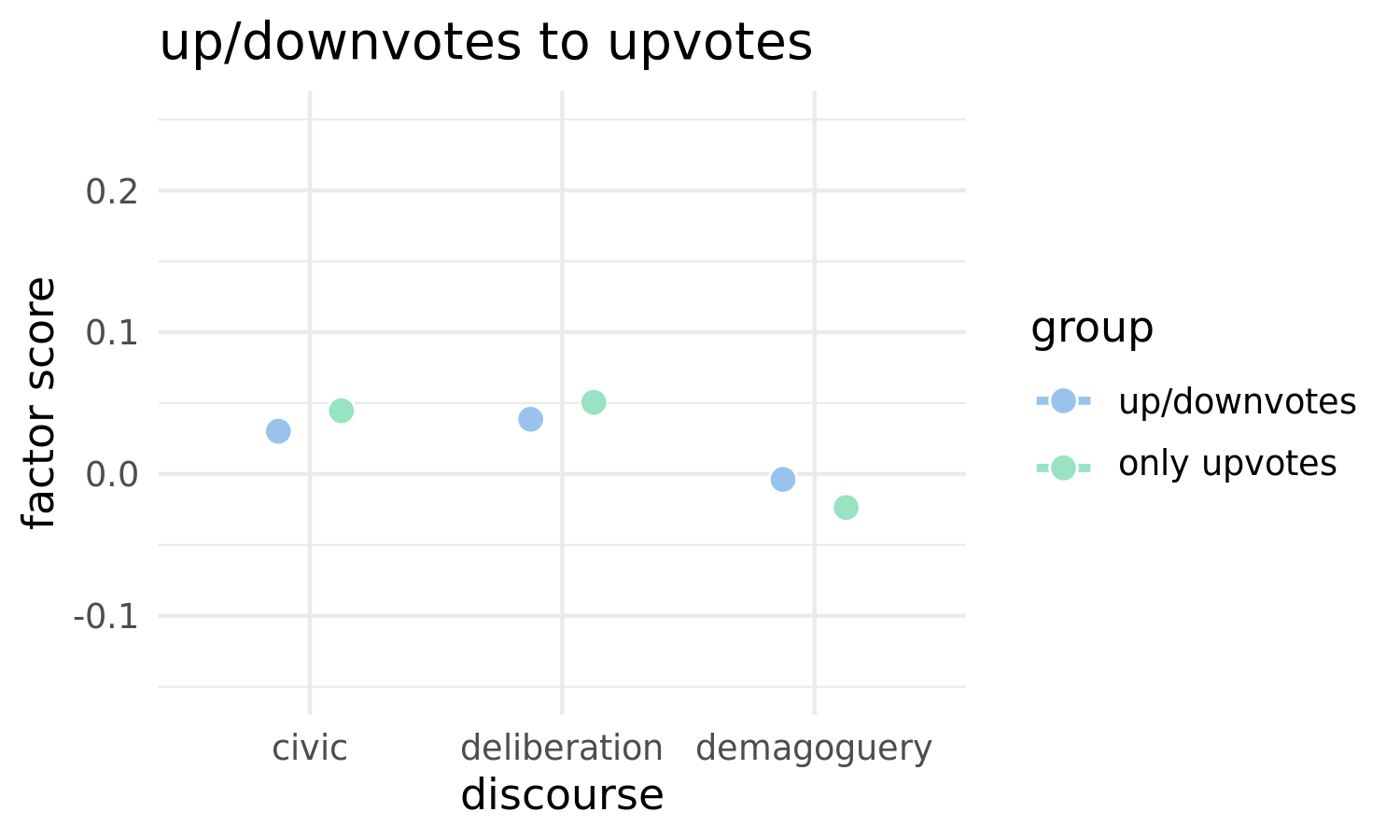

6.2. Results for RQ2

The DID analysis showed that different reaction mechanisms corresponded to different forms of political discourse that users engaged with. Here, two findings are particularly relevant: First, our analysis demonstrates that subreddits with upvoting only had an increased prevalence of deliberative and civic discourse. Second, we find that no voting at all, the complete absence of reaction mechanisms, was associated with the highest levels of demagogic rhetoric.

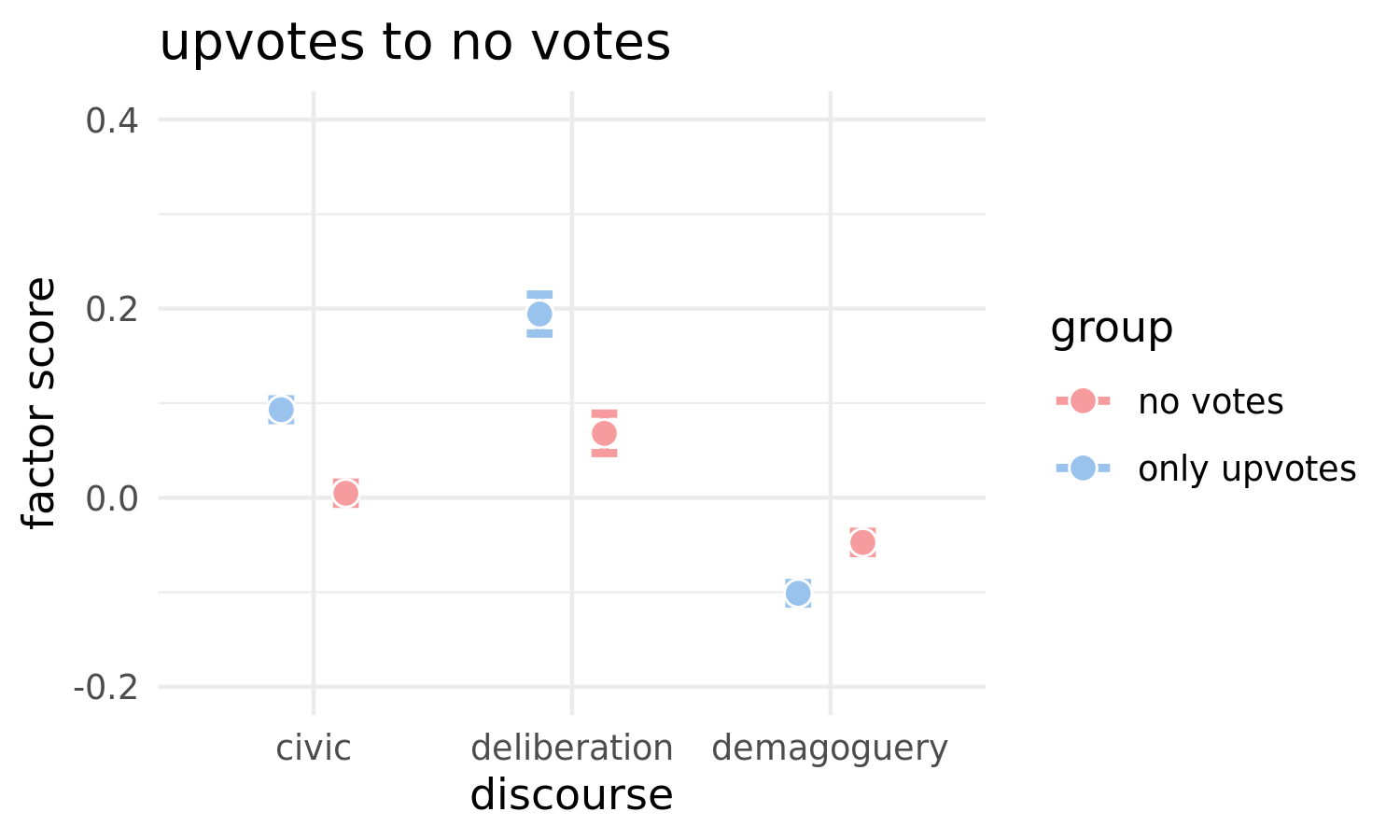

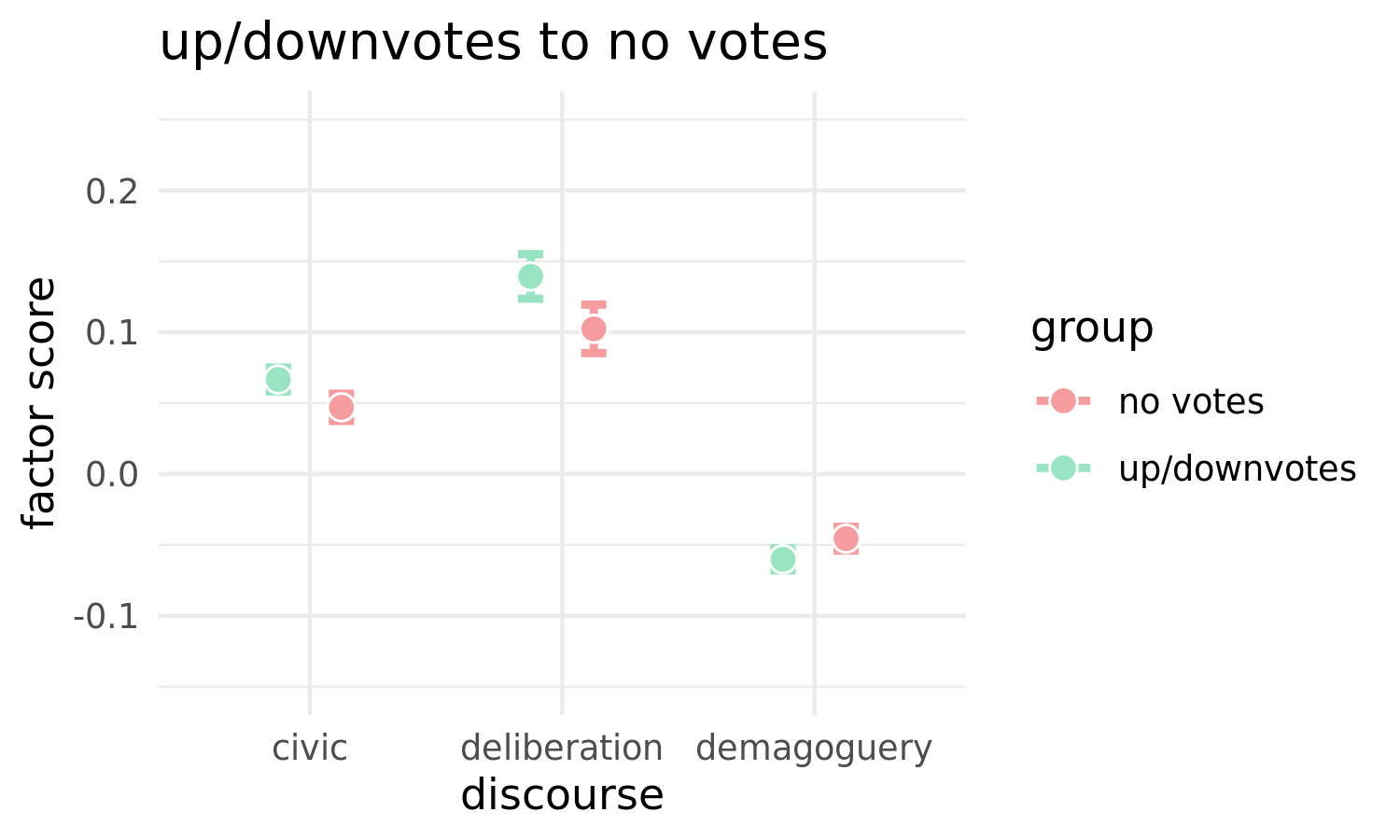

Figure 2 presents how changes in reaction mechanisms related to civic, demagogic, and deliberative discourse in each of the three distinct cases. Since we used the predicted scores for each discourse by the CFA model, which set factor variance to unity, the results are not comparable between discourses and interventions. Instead, we can assess the relative change within a discourse, given factor scores before and after an intervention took place.

For subreddits where moderators decided to hide downvoting but keep upvoting (r/Conservative, r/EnoughTrumpSpam, r/GenderCritical, r/atheism, r/politics, r/exmuslim, r/ukpolitics), the prevalence of civic and deliberative discourse increased on average 0.47 and 0.30 times respectively, while demagogic discourse decreased 1.68 times. For example, unsupported arguments on r/politics decreased from 11% to 9%, while fact-related and structured arguments increased from 47% to 49%. Removal of downvoting was associated with a significant reduction of demagogic rhetoric. An inverse relationship was observed when a subset of these subreddits subsequently decided to further remove the upvoting mechanism as well (r/Conservative, r/EnoughTrumpSpam, r/GenderCritical, r/atheism, r/politics, r/exmuslim). In this case, civic and deliberative discourse decreased 0.94 times and 0.64 times respectively, while demagogic discourse increased 0.53 times. For example, the discourse on r/Conservative contained 23% more arguments that belong to demagoguery (13% with only upvote, 16% with no reaction mechanism). In contrast, collective rhetoric, which belongs to civic discourse, decreased from 3.3% to 3.1%.

In subreddit interfaces without reaction mechanisms, the discourse became significantly more demagogic compared to an environment with only upvoting. Moreover, subreddits that immediately removed all available voting mechanisms from the baseline state (r/unpopularopinion, r/vegan) encountered a decrease in civic and deliberative discourse by 0.27 and 0.34 times, respectively. They also faced an increase in demagogic discourse by 0.22 times. Again, comparing up- and downvoting to no voting in subreddits, no voting was associated with a significantly lower level of constructive dialogue. For example, the discourse on r/politics contained 15% fewer fact-related and structured arguments (47% of comments contained one of the two arguments when both votes were available, but only 40% afterwards). In contrast, the usage of unsupported arguments increased by 17% (5.8% with both reaction mechanisms, 7% with no reaction mechanism). These raw percentage changes in specific arguments help illustrate variations in the discourse taking place, although they are not controlled for time and community-specific effects, which the DID model accounts for. For example, there was a declining trend in deliberative discourse over time across all subreddits, which partially masks the difference between the levels of deliberative discourse in environments having up/downvotes and only upvotes, since the change from up/downvotes to another reaction mechanism structure always appeared later in time.
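The descriptive examples above report relative changes alongside raw shares, so it helps to keep relative change and percentage-point difference apart. The following minimal sketch recomputes two of the reported examples (13% to 16% on r/Conservative, 47% to 40% on r/politics). The function names are hypothetical, and the calculation is plain arithmetic on the rounded shares quoted in the text, not on the underlying data.

```python
def relative_change(before, after):
    """Change expressed as a fraction of the starting share."""
    return (after - before) / before


def point_change(before, after):
    """Change expressed in percentage points."""
    return after - before


# Demagogic arguments on r/Conservative: 13% (only upvotes) -> 16% (no votes).
demagogic_rel = relative_change(13.0, 16.0)
demagogic_pts = point_change(13.0, 16.0)

# Fact-related and structured arguments on r/politics: 47% -> 40%.
structured_rel = relative_change(47.0, 40.0)
structured_pts = point_change(47.0, 40.0)

print(f"{demagogic_rel:+.1%} ({demagogic_pts:+.1f} points)")    # +23.1% (+3.0 points)
print(f"{structured_rel:+.1%} ({structured_pts:+.1f} points)")  # -14.9% (-7.0 points)
```

A three-point rise from a low base is a larger relative change than a seven-point drop from a high base, which is why the two measures should not be read interchangeably.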

These results offer a clear answer to RQ2: Changes in reaction mechanisms corresponded to changes in the nature of the political discourse taking place in political subreddits between 2010 and 2018. Only upvoting was associated with more civic and deliberative discourse, while the presence of downvoting was associated with a decrease in their prevalence. Furthermore, the complete absence of any voting mechanism was associated with the lowest level of civic and deliberative discourse. We observed the inverse pattern for demagoguery: upvoting only was least associated with this discourse, while downvotes and no votes were associated with significantly increased demagogic rhetoric. Although our analysis did not focus on understanding why these effects appeared in Reddit discussions, our findings correspond to previous studies showing that the absence of negative reinforcement (downvoting) improved the quality of discussions (Khern-am nuai et al., 2020), and that the type and magnitude of feedback users receive influences the content they subsequently generate (Adelani et al., 2020).

7. Discussion

Our analyses shed light on how users discuss political topics on Reddit through the lens of three prominent political discourse theories. When users employed deliberative rhetoric, their interactions were fact-related and structured and lacked statements without evidence. However, these interactions did not recognize the perspectives of other authors (empathy/reciprocity) or provide counterarguments.

When the discourse contained civic features, users underlined a collective identity and made calls for situational actions. Nevertheless, there was no significant use of emotional or unstructured/nonfactual language as suggested by civic discourse scholars.

When the discourse was demagogic, users mostly engaged in emotional language and nonfactual arguments, but they did not make calls for generalized arguments or focus on the importance of the “people” as suggested by theorists. These elements of discourse show both that discussions on Reddit differ from theoretical conceptions and from how these discourse types might be deployed in other environments or by other discussants. For example, politicians on social media, when creating demagogic statements, often use “you in the epicenter” arguments (Bobba, 2019), which we did not find for Reddit users in our analysis.

Focusing on the overlap between theories and empirical data, we detected core elements of the theories in our sample discussions and used them to evaluate political discourse. Simultaneously, we located specific divergences that raise concerns as to whether normative theoretical conceptions can actually be translated into discursive practices. These questions necessitate a further systematic analysis and measurement of theories in different environments and conditions, which we did not perform. Nonetheless, our study emphasizes the relation between theory and political discussions on social media. It further serves as a proof of concept for how to measure political communication through the lens of political theories and recursively verify, falsify, or reevaluate the use of existing political theories when evaluating political communication. We believe that this is a valuable contribution to the field, since the relationship between social media and democracy and its core values is under heightened scrutiny. For example, our study informs discussions in political discourse theory that seek to understand whether political discourse on social media is essentially distinct from political discussions “offline”.

Moreover, our study produces an understanding of the relationship between different reaction mechanisms and the type of political discourse users engage in. We found that having “only upvotes” as the reaction mechanism was the most beneficial for the prevalence of deliberative and civic discourse, while the absence of voting favored the generation of discussions with more demagogic rhetoric. These results contribute to an ongoing debate on how to design social media platforms. For example, YouTube experimented with the removal of downvoting from its platform (YouTube, 2021), while Twitter incorporated downvoting in its user interface (Hunter, 2022).

Based on our findings, it seems that up- and downvoting is associated with an increase of demagogic discourse compared to only upvoting, and we hope that our findings can be taken into consideration when making such decisions in the future. Although we controlled for multiple factors and based our modeling decisions on prior scientific work, additional experiments and studies are needed to further validate whether results support the existence of a causal relationship. A reaction mechanism can relate to discussions in different ways, many of which we did not analyze in our study (e.g., prevalence of hate speech (Sasse et al., 2022; Cypris et al., 2022) or user participation (Engelmann et al., 2018)). Reaction mechanisms can also play a variable role given different user demographics and the nature of the platform. Hence, we do not claim that our results are necessarily generalizable, and we argue that further research studies should continue to systematize knowledge to provide policy recommendations for designing social media platforms that promote democratic values.

As we pointed out in Section 2.3, our investigation concentrated on reaction mechanisms rather than affordances. Future research should further investigate the relationship between community-specific uses of reaction mechanisms (i.e., as affordances) and political rhetoric among social media users. Users do not evaluate reaction mechanisms simply by their technical functionality. On Reddit, voting is inseparable from the culture of the platform: users disregard platform rules by making and enforcing their own rules and norms, and voting is used to point out who and what is “right”, to assign and recognize social status, and to negotiate meaning and ethical norms (Graham and Rodriguez, 2021). While reaction mechanisms create the propensity to behave in specific ways, which behaviors come to dominate interactions depends on complex social dynamics in online communities that are difficult to control for. It is therefore no coincidence that the same reaction mechanisms can have varying effects in different digital environments and can also affect different dimensions of user behavior (e.g., the content of posts, the frequency of posting, how long users will remain in a community, etc.) (Cheng et al., 2014; Khern-am nuai et al., 2020). This social dimension of reaction mechanisms can already be recognized when evaluating the reasons why moderators on specific subreddits decide to change reaction mechanisms. For example, in our own analysis, we found that the exmuslim subreddit deactivated downvotes because of button abuse and because their usage did not conform to the general rules of Reddit, while other subreddits did not have a downvote in order to create a “safe space” for their members. These discrepancies reveal the relationship between the social dimensions of platforms and their technical design, which cannot be neglected when integrating or evaluating design features on platforms.

Building on this sociotechnical perspective, future research should focus on understanding why reaction mechanisms relate in specific ways to positive and negative feedback from a democratic perspective and how this can ideally be integrated in platform design. Properties such as platform objective (political vs. non-political topics, humor vs. deliberation) and user group properties (e.g., age, gender, political background) can interact in unforeseen ways with design features, affecting user discussions and behaviors (see, for example, political identity induced differences in content moderation effects (Papakyriakopoulos and Goodman, 2022)). Furthermore, what is democratically valuable is contestable, and even the evaluation of specific effects of reaction mechanisms is not clear-cut. For example, expressing emotion might be detrimental for deliberative discourse, but empowering from a civic perspective; therefore, an increase or decrease because of technical design might be favored in some cases and not in others. Especially since reaction mechanisms are fundamental data inputs for recommendation systems that distribute and shape the flow of information between users, it is important to quantify the biases, issues, and effects that reaction mechanisms bring to digital ecosystems. We argue that the methodologies proposed in this paper can contribute towards that end, as they are able to directly associate political theories with empirical user behavior, which can inform policy decisions based on more scientifically and theoretically grounded democratic frameworks. Furthermore, we hope that researchers advance our research project and its proposed methodologies to better understand how to combine machine learning tools with classical statistics.

8. Conclusion

Our study introduced a framework for evaluating the empirical manifestation of political theories on social media. We performed a comparative analysis of three prominent theories of political discourse, i.e., deliberative, civic, and demagogic. We produced a set of constitutive rhetoric elements that we referred to as a “minimal conceptualization” of these three discourse theories. We then used multilabel classification to explore the extent to which these rhetoric components characterize 155 million user comments across 55 political message boards on Reddit. We found that essential components of the three discourse theories indeed characterize political user discussion. Nonetheless, we also found that specific theoretically defined elements of discourses did not resurface in the political discussions on Reddit. Over a time span of eight years (2010-2018), we created a quantitative setup to identify changes in political discourse as a function of introducing or removing upvotes, downvotes, or both. We showed that changes in social media reaction mechanisms were associated with changes in the nature of political discourse. Interfaces with upvoting only were associated with the highest level of civic and deliberative discourse, while the absence of any reaction mechanisms showed the strongest manifestation of demagogic rhetoric. We believe that these results are valuable contributions to ongoing policy and research discussions on platform design and its important role in supporting more civil interaction on social media.

Acknowledgements.

This study was funded in part by the Princeton Center for Information Technology Policy and supported by a Princeton Data Driven Social Science Initiative Grant. We gratefully acknowledge further financial support from the Schmidt DataX Fund at Princeton University made possible through a major gift from the Schmidt Futures Foundation. We thank Arvind Narayanan for his conceptual support in the first stages of the study, Aaron Snoswell and Oscar Torres-Reyna for their methodological advice, and Andrés Monroy-Hernández, Jens Grossklags & Michael Zoorob for their feedback on the final manuscript. We are further grateful for the constructive feedback received from the Princeton Center for Information Technology fellows meeting and the Work in Progress reading group, and the MPSA 2022 Panel on Social Media Ecosystems and Their Effects Across Platforms.

References

- (1)