UrbanVR: An immersive analytics system for context-aware urban design

Abstract

Urban design is a highly visual discipline that requires visualization for informed decision making. However, traditional urban design tools are mostly limited to representations on 2D displays that lack intuitive awareness. The popularity of head-mounted displays (HMDs) promotes a promising alternative with consumer-grade 3D displays. We introduce UrbanVR, an immersive analytics system with effective visualization and interaction techniques, to enable architects to assess designs in a virtual reality (VR) environment. Specifically, UrbanVR incorporates 1) a customized parallel coordinates plot (PCP) design to facilitate quantitative assessment of high-dimensional design metrics, 2) a series of egocentric interactions, including gesture interactions and handle-bar metaphors, to facilitate user interactions, and 3) a viewpoint optimization algorithm to help users explore both the PCP for quantitative analysis and objects of interest for context awareness. The effectiveness and feasibility of the system are validated through quantitative user studies and qualitative expert feedback.

Keywords: Immersive analytics, urban design, virtual PCP, viewport optimization, gesture interaction

Wei Zeng (wei.zeng@siat.ac.cn)

1 Introduction

Visualization plays a key role in supporting informed discussions among stakeholders in an urban design process: For designers, the design process comprises visual representations in practically all stages and for most aspects from ideation and specification to analysis and communication [1]; For decision makers, visualizations help to understand implications of the design [2]. Specifically, the process of designing a city’s development site is rather tedious: designers need to come up with several design options, then analyze them against key performance indicators, and finally select one. The process requires effective analysis and visualization tools.

Urban environments are composed of buildings and various surroundings, which can be naturally represented as 3D models. As such, many 3D visual analytics systems have been developed for a variety of urban design applications, including cityscape and visibility analysis [3, 4], flood management [5, 6], vitality improvement [7], and recycling [8]. Yet, most of them are developed for desktop displays, which lack spatial presence that is the sense of “being there” in the world depicted by the virtual environment [9]. The 3D nature of urban environments calls for immersive analytics tools that can provide vivid presence of the urban environment beyond the desktop.

The advance of affordable, consumer head-mounted displays (HMDs), such as the HTC Vive, has revived the fields of VR and immersive analytics. Recently, there has been a growing interest in utilizing VR technology for urban planning, which can improve design efficiency and facilitate public engagement [10, 11]. Nevertheless, developing such an immersive analytics system is challenging: First, the system needs to present the urban environment (3D spatial information) together with mostly quantitative analysis data (abstract information) that are typically high-dimensional. It remains challenging to display both spatial and abstract information in the virtual environment [12]. Second, conventional interactions for desktop displays, such as mouse and keyboard, are infeasible for VR. New ways to interact with immersive analytics systems are required [13].

We present UrbanVR, an immersive analytics system that integrates advanced analytics and visualization techniques to support the decision-making process in site development in a VR environment. UrbanVR caters to various analytical tasks that are feasible for analysis and visualization in VR, identified from semi-structured interviews with a collaborating architect (Sect. 3). We then focus on visualization and interaction design for supporting site development in a virtual environment. Specifically, we design a parallel coordinates plot (PCP) that can be spatially situated with 3D physical objects, to facilitate quantitative assessment of high-dimensional analysis metrics (Sect. 4.2). Next, we integrate a series of egocentric interactions, including gesture interactions and handle bar metaphors, to facilitate user interactions in VR (Sect. 4.3). We further develop a viewpoint optimization algorithm to mitigate occlusions and help users explore spatial and abstract information (Sect. 4.4). A quantitative user study demonstrates the effectiveness of these interactions, and qualitative feedback from domain experts confirms the applicability of UrbanVR in supporting shading and visibility analysis (Sect. 5).

The primary contributions of our work include:

1. UrbanVR is an immersive analytics system with a 3D visualization of an urban site for context-aware exploration, and a customized parallel coordinate plot (PCP) for quantitative analysis in VR.

2. UrbanVR integrates viewport optimization and various egocentric interactions such as gesture interactions and handle-bar metaphors, to facilitate user exploration in VR.

3. A quantitative user study together with qualitative expert interviews demonstrate the effectiveness and applicability of UrbanVR in supporting urban design in virtual reality.

2 Related Work

This section summarizes previous studies closely related to our work in the following categories.

Visual Analytics for Urban Data. Nowadays, big urban data are becoming ubiquitously available, which has promoted the development of evidence-based urban design. Meanwhile, visualization has been recognized as an effective means for communication and analysis. As such, many visual analytics systems have been developed to support various urban design applications, like transportation planning [14] and environment assessment [15]. Most of these systems utilize 2D maps for visualizing big urban data [16], which omits 3D buildings and surroundings in an urban site. Recent years have witnessed some visual analytics systems for urban data in 3D. For instance, VitalVizor [7] arranges a 3D visualization of physical entities and a 2D representation of quantitative metrics side-by-side for urban vitality analysis. Closely related to this work, Urbane [3], Vis-A-Ware [4], and Shadow Profiler [17] provide 3D visualizations for comparing effects of new buildings on landmark visibility, sky exposure, and shading. Our work also caters to landmark visibility and shadow analysis. But instead of presenting 3D spatial information on 2D displays, we render 3D visualizations in a VR environment, to enable a more vivid experience.

Immersive Analytics. VR HMDs provide an alternative to 2D displays for data visualization in an immersive environment. Immersive visualization is naturally appropriate for spatial data, e.g., scientific data [18]. Advances of technical features of VR HMDs including higher resolution and lower latency tracking, promote a wide adoption of consumer-grade HMDs for data visualization and analytics in VR, i.e., immersive analytics [19].

Nevertheless, there are many challenges when developing immersive analytics systems. The challenges can be grouped into four topics: spatially situated data visualization, collaborative analytics, interacting with immersive analytics systems, and user scenarios [13]. Recently, many immersive visualization techniques for displaying abstract information have been developed and evaluated, such as DXR toolkit [20], glyph visualization [21], flow maps [22], heatmaps [23, 24], and tilt map [25]. These techniques provide expressive visualizations for building immersive analytics. For example, DXR toolkit [20] provides a library of pre-defined visualizations such as scatterplots, bar charts, and flow visualizations. Yet, these visualizations are infeasible for displaying the high-dimensional shadow and visibility analysis metrics. As such, we design a customized PCP that can be overlaid on top of a building, to address the challenge of spatially situated data visualization.

Interaction Design. Interactivity is one of the key elements of a vivid experience in VR [26]. Advanced interaction techniques are required for simultaneous exploration of 3D urban context and abstract analysis metrics. Egocentric interaction that can embed users in VR is the most common approach for immersive visualizations [27]. We design interactions as follows to facilitate interaction with immersive analytics systems.

1. Direct Manipulation. VR HMDs block users’ vision of the real environment, thus traditional interactions (e.g., mouse and keyboard) for 2D displays are not applicable anymore. On the other hand, mid-air gestural interaction can mimic the physical actions we make in the real world, which has been studied as a promising approach to 3D manipulation [28]. For example, Huang et al. [29] developed a gesture system for abstract graph visualization in VR. Moreover, virtual hand metaphors have been studied for enhancing 3D manipulation, for both multi-touch displays [30] and mid-air [31]. In line with these studies, this work presents a systematic categorization and development of gesture interactions required for immersive urban development, which will allow users to manipulate 3D urban context and interact with abstract analysis metrics.

2. Viewpoint Motion Control. Occlusion is considered a weakness of 3D visualization [32]. In the development stage, we observed that users often spend much time changing their viewpoint when manipulating 3D physical objects, to mitigate the occlusion problem. To facilitate the navigation process, Ragan et al. [33] designed a head rotation amplification technique that maps the user’s physical head rotation to a scaled virtual rotation. Alternatively, viewpoint optimization techniques have been proven effective by extensive studies in visualization [34] and computer graphics [35]. A principal metric for these methods is view complexity [36], and the metrics are expanded with visual saliency, view stability, and view goodness [37]. As such, we develop an automatic approach for viewpoint optimization to reduce navigation time.

3 Overview

In this section, we discuss domain problems derived from a collaborating architect (Sect. 3.1), followed by a summary of distilled analytical tasks (Sect. 3.2).

3.1 Domain Requirements

In a six-month collaboration period, we worked closely with an architect having over 10 years of experience in urban design. The architect is often asked to propose building development schemes for a development site, which mainly involves considerations of the following entities.

1. Static environment: Physical entities surrounding the development site. The entities cannot be modified.

2. Building candidates: The buildings that are proposed by architects to be placed on the development site. Their sizes and orientations can be modified.

3. Landmark: A physical entity in the development site that is easily seen and recognized.

Quantitative evaluation of the building candidates needs to be carried out, in order to meet the evidence-based design principle. Many evaluation criteria have been proposed, such as visibility, accessibility, and openness. After evaluation, the building candidates and corresponding evaluation criteria will be demonstrated to stakeholders.

1. Visualization Requirements. The expert showed us existing tools for site development, and pointed out the lack of intuitive visualizations. He expressed a strong desire to utilize emerging VR HMDs, as stakeholders are usually impressed with new technologies. The visualization should include both 3D geometries of the development site, and abstract information of analysis results.

2. Analytics Requirements. Together with the expert, we identified two key criteria that should be carefully evaluated in site development: visibility and shadow. Visibility analysis is applicable to a landmark, while shadow analysis is applicable to the static environment. Both criteria are preferably visualized in an immersive environment.

3. Interaction Requirements. Architects working with city planning and development often manually manipulate physical models with their hands. As such, the architects prefer to interact with virtual objects using gestural interactions, instead of HMD-coupled controllers that they are not familiar with. The collaborating architect also suggested supporting system exploration without body movement to reduce the risk of falling, stumbling over cables, or inducing motion sickness.

3.2 Task Abstraction

The overall goal of this work is to develop an immersive analytics system for assessing site development. Based on the domain requirements, we follow the nested model for visualization design and validation [38], and compile a list of analytical tasks in terms of data/operation abstraction design, and encoding/interaction technique design:

1. T.1: Quantitative Analysis. The system should provide quantitative analysis of the identified criteria, i.e., visibility (T.1.1) and shadow (T.1.2). Efficient computation algorithms should be developed such that the analyses can be performed interactively.

2. T.2: Multi-Perspective Visualization. The system should present multi-perspective information in an immersive environment. First, a 3D view of the urban area should be provided to enable context-aware exploration (T.2.1). Second, an analytics view should be presented to visualize quantitative results (T.2.2).

3. T.3: Effective Interaction. The system should be coupled with a robust gesture interaction system (T.3.1). Actions on virtual objects should be directly visible to the users (T.3.2). Lastly, the system should provide optimal viewpoints to reduce body movement (T.3.3).

4 UrbanVR System

The UrbanVR system consists of three components: data analysis (Sect. 4.1), visualization design (Sect. 4.2), and interaction design (Sect. 4.3).

4.1 Data Analysis

The analysis component supports the quantitative analysis task (T.1). Here, both shadow and visibility measurements are calculated using GPU-accelerated image processing methods. The analyses include the following steps:

-

1.

Viewpoints Generation. Visibility and shadow are measured for a landmark and static environment, respectively. Both measurements are performed along a path of viewpoints in accordance to real-world scenarios, e.g., a city tourist tour and sun movement over one day. Specifically, viewpoints for visibility analysis are manually specified by the collaborating architect, which simulates a popular route in the urban area. Viewpoints for shadow analysis are automatically generated using a solar position algorithm111https://midcdmz.nrel.gov/spa/.

-

2.

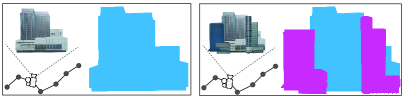

Image Rendering. For each viewpoint, two high-resolution images are rendered. First, we create a frame buffer object (FBO) denoted as FBO-1, and render only the target in blue color to FBO-1 (Fig. 1(left)). Then, we create FBO-2 and render the entire urban scene to it, with the target rendered in blue color and other models in red color (Fig. 1(right)). For both images, the camera is directed towards the center of the target.

-

3.

Pixel Counting. We can then extract the amount of blue pixels, denoted as and in FBO-1 and FBO-2, respectively. Then we compute visibility as . For shadow analysis, a building candidate produces shadows on the static environment. Thus, the shadow brought in by a building candidate is computed as . To accelerate the computation, we develop a parallel computing method that divides each image into grids and counts blue pixels in each grid using GPU.
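The pixel-counting step can be illustrated with a minimal CPU sketch. It assumes that visibility is the ratio of blue pixels in the full-scene image (FBO-2) to blue pixels in the target-only image (FBO-1); the exact formula is not legible in this text, so this ratio is an assumption. The names `count_blue`, `visibility`, and the `tile` parameter are hypothetical, and the sequential per-tile loop only stands in for the per-grid GPU counting described above.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]   # (R, G, B), each 0-255
Image = List[List[Pixel]]      # rows of pixels

BLUE: Pixel = (0, 0, 255)      # assumed exact color used for the target


def count_blue_in_tile(image: Image, r0: int, r1: int, c0: int, c1: int) -> int:
    """Count blue pixels inside one grid cell [r0, r1) x [c0, c1)."""
    return sum(1 for r in range(r0, r1) for c in range(c0, c1)
               if image[r][c] == BLUE)


def count_blue(image: Image, tile: int = 2) -> int:
    """Split the image into tiles and sum the per-tile counts.

    On a GPU each tile would be counted in parallel; here they are
    visited one after another.
    """
    rows, cols = len(image), len(image[0])
    total = 0
    for r0 in range(0, rows, tile):
        for c0 in range(0, cols, tile):
            total += count_blue_in_tile(image, r0, min(r0 + tile, rows),
                                        c0, min(c0 + tile, cols))
    return total


def visibility(target_only: Image, full_scene: Image) -> float:
    """Assumed definition: visible share of the target's pixels."""
    n_target = count_blue(target_only)
    if n_target == 0:
        return 0.0             # target is outside the view entirely
    return count_blue(full_scene) / n_target
```

A value of 1.0 then means the target is fully visible from the viewpoint, and smaller values mean that other models cover part of it.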

An alternative approach to the above described pixel counting is to directly clip other models when rendering the target, which could be even faster. Yet the image processing method can be generalized to other urban design scenarios, such as isovist analysis that measures the volume of space visible from a given point in space.

At each viewpoint, we precompute these measurements for each building at six orientations, spaced at a fixed angular increment, and at three scales. In this way, we generate a total of 18 (6 orientations × 3 scales) design variations for each building candidate. The shadows from 8:00 to 18:00 for each design are measured every 30 minutes. Our system loads the precomputed results for visualization. At runtime, our system also allows users to manipulate a building candidate, e.g., to scale the building up or change its orientation. After the interaction, the system recomputes the measurements in the background. With the GPU-accelerated image processing method and this background computing approach, our system achieves interactive frame rates at runtime. Note that visibility analysis is an approximation of the stereographic projection of a landmark onto VR glasses. This approximation is acceptable since the distance between human eyes is much smaller than the viewpoint sampling step, which is about 20 meters in our work.
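The size of the precomputed table follows directly from these numbers. The sketch below enumerates the design variations and the shadow sampling times; it assumes that both 8:00 and 18:00 are sampled, and the function names are hypothetical. Orientations and scales are represented by indices only, since the concrete angles and scale factors are not given here.

```python
from itertools import product
from typing import List, Tuple

N_ORIENTATIONS = 6   # from the text: 6 orientations
N_SCALES = 3         # from the text: 3 scales


def design_variations() -> List[Tuple[int, int]]:
    """All (orientation index, scale index) pairs for one building candidate."""
    return list(product(range(N_ORIENTATIONS), range(N_SCALES)))


def shadow_sample_times(start_hour: int = 8, end_hour: int = 18,
                        step_minutes: int = 30) -> List[str]:
    """Sampling times as HH:MM strings, assuming both end points are included."""
    times = []
    minute = start_hour * 60
    while minute <= end_hour * 60:
        times.append(f"{minute // 60:02d}:{minute % 60:02d}")
        minute += step_minutes
    return times
```

Under these assumptions each building candidate has 18 design variations and 21 shadow samples per variation, i.e., 378 shadow measurements to precompute.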

4.2 Visualization Design

The visualization component is designed to support the multi-perspective visualization task (T.2). The component mainly consists of two closely linked views:

Physical View

The view presents 3D visualization of an urban area in VR to enable urban context awareness (T.2.1). We employ Unity3D’s built-in VR rendering framework to generate the view. All rendering features are enabled and adjusted to optimal settings, and our system is able to run at interactive frame rates. Selection, manipulation, and navigation operations are enabled in the view through a gesture interaction system described below. Besides, the view also supports animation, which is integrated to facilitate users’ understanding of shadow and visibility. For shadow analysis in animation mode, the user’s position will be moved to the optimal viewpoint for the building candidate, and the system’s lighting direction will change over time according to the sun movement. For visibility analysis, the user’s position moves along the architect’s defined path while keeping the target building in view.

Analytics View

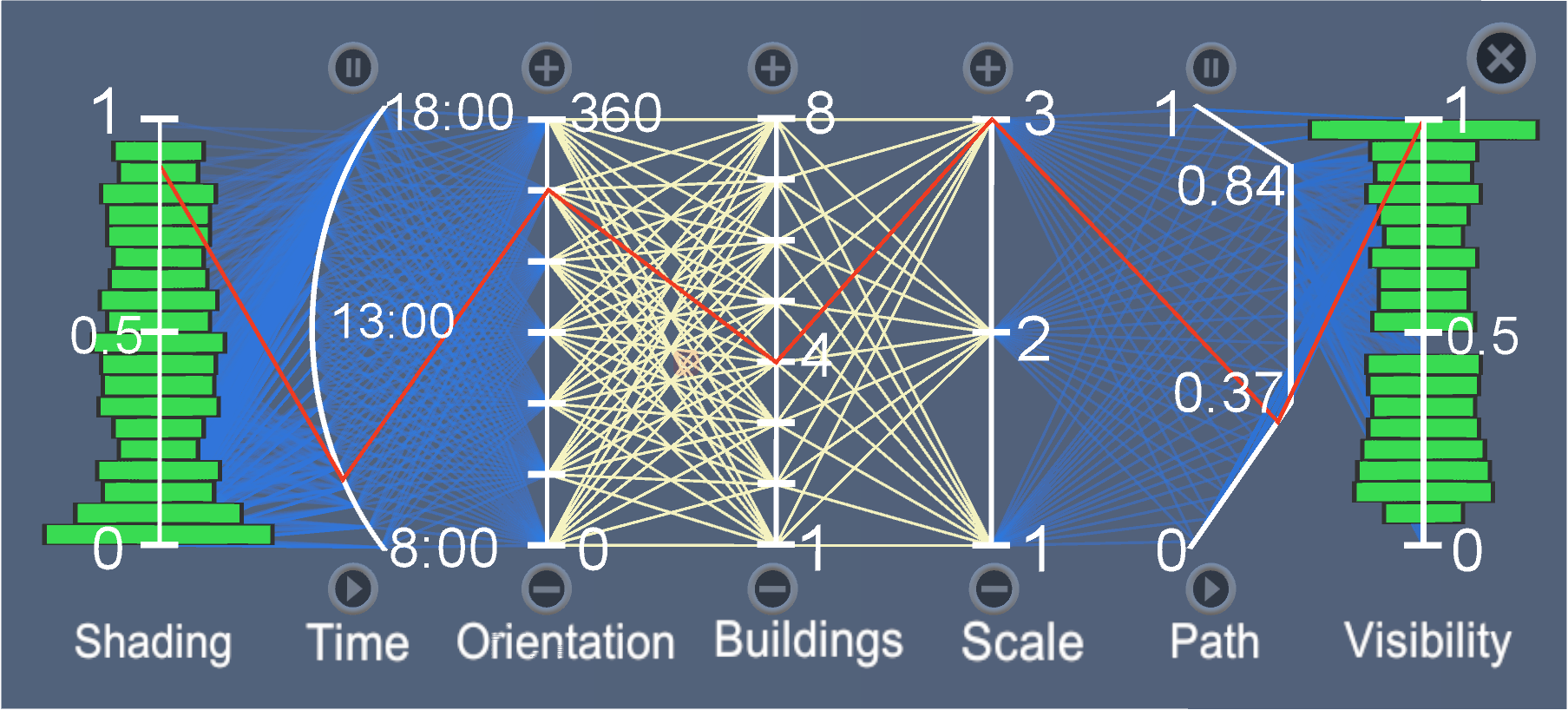

The view supports visualization of quantitative analysis results (T.2.2), and is mainly made up of a parallel coordinates plot (PCP) as shown in Fig. 2. The PCP consists of seven axes, where the middle three correspond to buildings, orientations, and scales of building candidates, the left two correspond to time and shading values for shadow analysis, and the right two correspond to path and visibility results for visibility analysis, respectively. We bend the time axis into an arc to hint at sunrise and sunset over time, and arrange the path axis according to the street topology of the path [39]. Since architects would like to first compare building candidates, we integrate bar charts on the left- and right-most axes to indicate shading and visibility distributions for all orientations and scales of a specific candidate. If the candidate’s orientation and scale are further specified, a red line is added in the PCP to show shading and visibility values at a certain time and path position.

Besides PCP, various buttons are integrated in the Analytics View. For the middle three axes, plus and minus buttons are placed at the top and bottom, respectively. Users can click the buttons to select a different design, i.e., to change building candidate, orientation, or scale. For time and path axes, start and stop buttons are placed at the top and bottom to control animation. The view is always placed on top of the development site, and if a design is selected, the view will be moved up to the top of the candidate building.

4.3 Interaction Design

The specific interaction requirements raised by the architect bring research challenges for interaction design. To support efficient exploration (T.3), we develop a series of egocentric interactions, including a robust gesture interaction system and an intuitive handle bar metaphor.

4.3.1 Gesture Interaction System

To cater to the requirement of efficient and natural interactions, we decided to implement an interaction system based on hand gestures. We start with a careful study of required operations and raw input gestures.

Required Operations

We summarize required operations into the following categories.

1. Selection. Users can select an object in the virtual environment, e.g., to select a development site, or to select a building candidate.

2. Manipulation. After selecting a building candidate, users can manipulate it, including translating its position, rotating its orientation, and scaling its size.

3. Navigation. As requested by the architect, our system should allow users to explore the urban area in the virtual space while keeping their body stationary in the real world. We opt for seated user postures with gestural interactions for users to navigate the urban scene, which allow users to sit comfortably and suit most people [40, 41]. We define two types of navigation operations in our system: pan in x-, y- and z-dimensions, and rotate around the y-axis (Unity3D uses a y-up coordinate system).

Raw Input Gestures

Our system detects hand gestures using a Leap Motion device, which is attached to the front of the HMD. When a hand is detected, the device captures various motion tracking data about hands and fingers, including palm position and orientation, fingertip positions and directions, etc. Before deciding which gestures to use, we first tested different kinds of gestures, including index finger and thumb up, five fingers open, and fist. These gestures were tested with both right and left hands, from different distances, and in different orientations. We identified the three most recognizable and stable gestures, i.e., index finger up, five fingers open, and fist. We denote them as Point, Open, and Close, respectively.

Gesture Interaction Design

When one of the three gestures is recognized at a given time, our system models the gesture by three attributes, Status, P, and O, recorded separately for the left and the right hand, where Status is one of Point, Open, or Close. P refers to the gesture position in the virtual space, which is set to the index finger’s tip position for Point and the palm position for Open and Close. O refers to the orientation of the gesture, which is taken as the palm orientation. Orientation is a necessary attribute in our system, as we use orientation stability to classify consecutive gestures belonging to the same operation. Both P and O are 3D vectors.

We map the raw gestures to interactions as follows.

-

1.

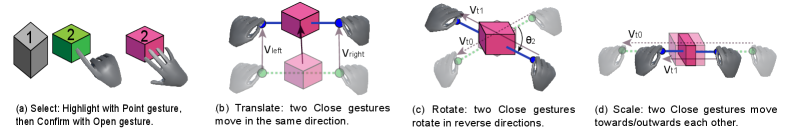

Select. Humans naturally select an object by putting up index finger and pointing at the object. We modify this approach to a two-step operation in our system. First, when a Point gesture is detected, our system measures distances from all interactive objects (including building candidates, development sites, and buttons) to the gesture’s position. The closest object with distance less than a threshold will be highlighted. Second, users confirm the selection by opening up the palm, as shown in Fig. 3(a).

-

2.

Manipulate. After an object is selected, users can manipulate it with translate, rotate, and scale operations. These operations also work in two steps. First, left- and right-hand Close gestures need to be positioned besides the object at time , and our system records the gestures as and , respectively. Second, the gestures will move to other positions at time , recorded as and . Corresponding P of these gestures are used to generate two vectors in 3D space:

(1) representing movements of left and right hands.

Translate: Next, we measure the angle between and . If is less than , we consider the interaction as Translate. A translation corresponding to will be applied to position of the object. If the condition is not met, we measure two more vectors:

(2) Rotate: If is above , we consider the interaction as Rotate. Buildings can only be rotated around the y-axis. Hence we measure the angle of mapped on XZ plane and rotate the building accordingly.

Scale: If is less than , we consider the interaction as Scale. The operation is applicable to an object in x-, y-, and z-dimensions. Scaling factor is proportional to , and divided in x-, y-, and z-dimensions. Notice that scaling is only applicable to a selected virtual object, but not to the whole scene. This is because we would like to maintain the relative proportion between the virtual body and the environment.

-

3.

Navigate. UrbanVR system supports map navigation by camera panning and tilting. The operations are implemented similarly to Translate and Rotate manipulation operations. The differences include: first, Open gesture is used instead of Close gesture; and second, no object needs to be selected first.
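The decision logic for the manipulation operations can be sketched as follows. This is an illustration under assumptions, not the system's implementation: the two extra vectors are assumed to be the left-to-right hand vectors before and after the movement, and both threshold values are hypothetical placeholders, since the actual vectors and thresholds are not legible in this text.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]

TRANSLATE_MAX_ANGLE = 30.0   # degrees, hypothetical threshold
ROTATE_MIN_ANGLE = 15.0      # degrees, hypothetical threshold


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def norm(a: Vec3) -> float:
    return math.sqrt(sum(x * x for x in a))


def angle_deg(a: Vec3, b: Vec3) -> float:
    """Angle between two vectors in degrees; 0 if either vector has zero length."""
    na, nb = norm(a), norm(b)
    if na == 0.0 or nb == 0.0:
        return 0.0
    cos = sum(x * y for x, y in zip(a, b)) / (na * nb)
    return math.degrees(math.acos(max(-1.0, min(1.0, cos))))


def classify(left0: Vec3, right0: Vec3, left1: Vec3, right1: Vec3) -> str:
    """Classify a two-hand movement as 'translate', 'rotate', or 'scale'."""
    # Movement of each hand between the initial and the moved gesture.
    move_left, move_right = sub(left1, left0), sub(right1, right0)
    if angle_deg(move_left, move_right) < TRANSLATE_MAX_ANGLE:
        return "translate"   # both hands move in roughly the same direction
    # Assumed second pair: the hand-to-hand vector before and after.
    bar0, bar1 = sub(right0, left0), sub(right1, left1)
    if angle_deg(bar0, bar1) > ROTATE_MIN_ANGLE:
        return "rotate"      # the line between the hands has turned
    return "scale"           # hands moved apart or together along one line
```

For example, moving both hands upward by the same amount is classified as a translation, while pulling the hands apart along the line that connects them is classified as scaling.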

4.3.2 Handle Bar Metaphor

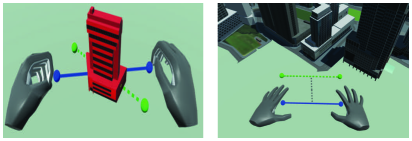

Continuous visual representations of user operations are necessary for designing effective interactions. Besides choosing the three most accurate gestures, we further improve user interactions through a virtual handle bar.

Handle bar metaphor was proven effective for manipulating virtual objects with mid-air gesture interactions [31]. Our system adapts this approach: when users are manipulating building candidates or navigating the map, a green and blue handle bar are presented for the initial and moved gesture positions, respectively. First, our system detects if two-hand Open or Close gestures can be detected for 100 consecutive frames (about one second). Once the first step succeeds, a green handle bar with two balls at both ends and a linking dashed line will be drawn to represent the initial gesture positions. Next, the system detects if follow-up gestures can make up a manipulation or navigation operation, as described in the above section. If an operation is matched, a blue handle bar with a solid connecting line will be presented at the moved gesture positions. Specifically, for Translate operations, a gray dashed line connecting middle points of the two handle bars is drawn as well. Fig. 4 presents examples of handle bar metaphors representing building rotation (left), and map navigation (right).
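The first step, waiting until a two-hand gesture has been held for 100 consecutive frames, can be sketched as a small per-frame counter. The class name `HoldDetector` and its interface are hypothetical; the gesture statuses are assumed to arrive once per frame as strings, with `None` for a hand that is not detected.

```python
from typing import Optional


class HoldDetector:
    """Reports when both hands show the same Open or Close gesture
    for a required number of consecutive frames."""

    def __init__(self, required_frames: int = 100) -> None:
        self.required_frames = required_frames
        self.current: Optional[str] = None   # gesture currently being held
        self.count = 0                       # consecutive frames so far

    def update(self, left: Optional[str], right: Optional[str]) -> bool:
        """Feed one frame of gesture statuses; True once the hold is long enough."""
        same = left is not None and left == right and left in ("Open", "Close")
        if same and left == self.current:
            self.count += 1
        elif same:
            # A new two-hand gesture starts; restart the count at one frame.
            self.current, self.count = left, 1
        else:
            # Hands disagree or show another gesture; reset.
            self.current, self.count = None, 0
        return self.count >= self.required_frames
```

Once `update` returns `True`, the green handle bar for the initial gesture positions would be drawn, and the follow-up gestures can be matched against the manipulation and navigation operations.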

4.4 Viewpoint Optimization

The complex urban scene can easily cause occlusion that hinders users’ exploration. Making occluders semi-transparent is not a good solution, since the buildings are coupled with high-resolution textures, and translucency of the textures would impair users’ perception of the PCP. Alternatively, users can use the gesture interactions described above to navigate the map. However, map navigation is relatively slow, and it usually takes a long time to find a good viewpoint. This motivates us to develop a method that can help users automatically find an optimal viewpoint.

Specifically, this work optimizes the camera position that generates maximum view goodness for a target. The maximum view goodness is defined on the following requirements:

1. R1: The distance and viewing angle to the target shall be neither so close that the whole target cannot be seen, nor so far away that details of the target are lost.

2. R2: Occlusion of the target shall be minimized. Specifically, the camera shall not fall inside any building (R2.1), and there shall be minimum occluders in between the camera and the target (R2.2).

Next, we formulate these requirements as an energy function that can be feasibly solved with optimization algorithms.

Problem Definition

In our setup, viewpoint optimization is applied to a target, e.g., the development site or a building candidate. We adapt a LoD simplification approach that first simplifies LoD3 buildings in an urban scene into LoD1 cuboids [42], and then maximizes the visibility for the target. The target can be represented as a set of cuboids. The target can be occluded by other buildings, and we represent these occluding buildings as cuboids in the same way. Since the field of view (FOV) is fixed by the HMD, we can only change the viewpoint position and view direction. We further simplify the problem by always pointing the view ray to the center of the target. Thus, we define the viewpoint optimization problem as:

given the cuboids of a target and the cuboids of occluding buildings, find an optimal position P for observation that generates maximum view goodness of the target.

Energy Function

To solve the problem, we introduce a constraint to optimize P to the target distance (R1), a constraint to avoid P inside occluding buildings (R2.1), and a constraint to minimize view occlusion of the target (R2.2). We formulate these constraints into different energy terms and assemble the energy terms into an energy function.

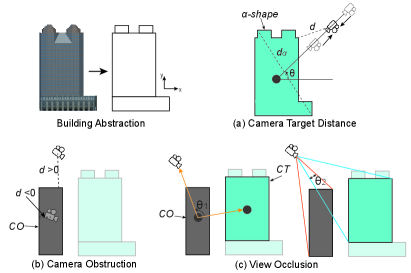

Fig. 5 presents an illustration of these energy constraints of one building projected onto the XY plane. We further repeat projections and measurements on XZ and YZ planes, and combine results from all three planes together. Detailed procedures are described as follows.

1. Camera Target Distance. After projection, we obtain a list of vertices in the XY plane from the target cuboids. We extract a closed polygon, i.e., an α-shape, from these vertices, which is the boundary of the target projected onto the XY plane. The maximum diagonal distance of the α-shape is calculated. Then we use a point-to-polygon distance function, which measures the distance from the projected viewpoint to the α-shape. The distance is negative if the point falls inside the closed polygon. To allow arbitrary viewing angles, we introduce an angle term that measures the elevation angle of the viewpoint over the horizon, from the center of the α-shape to the viewpoint. Thus, we create the sub-energy term on the XY plane (Eq. 3), in which the distance term and the angle term each carry a weight. The preferred distance range and the preferred range of view directions are set empirically. The energy term is the sum of all sub-energy terms on each plane.

2. Camera Obstruction. After projecting the occluding buildings onto the XY plane, we obtain a set of polygons. For each polygon, we measure its distance to the projected viewpoint. The sub-energy term is constructed from these distances (Eq. 4). To make sure the term becomes large when P falls inside any polygon, the per-polygon penalty is modeled as in Eq. 5.

3. View Occlusion. The cuboids are projected onto the XY plane, and corresponding polygons are generated. For each polygon, we measure how much another polygon can occlude it. The measurement is calculated as follows:

3.1 Generate a vector from the center of the first polygon to the center of the second polygon, and a second vector connecting the viewpoint and the center of the first polygon.

3.2 Measure the angle between the two vectors. A larger angle means the second polygon more likely lies in between the viewpoint and the first polygon.

3.3 Generate vectors from the viewpoint to all vertices of the first polygon, and extract the two vectors with maximum and minimum angles. These vectors represent the upper- and lower-bound view angles for the polygon, respectively. In the same way, extract the bounds for the second polygon.

3.4 Lastly, we measure the intersection angle between the two view-angle ranges. A larger intersection angle means more occlusion.

We construct the energy term as in Eq. 6. The energy term is the sum of all sub-energy terms on each plane.
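The last two sub-steps of the view occlusion measurement can be sketched in 2D as an overlap of angular intervals. This is a minimal sketch under assumptions: the vectors are taken from the projected viewpoint to the polygon vertices, angles are measured with `atan2` against the positive x-axis, and neither polygon is assumed to straddle the ±180° wrap-around as seen from the viewpoint. The function names are hypothetical, and the check of whether the occluder actually lies in front of the target (sub-steps 3.1 and 3.2) is left out.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def angular_span(viewpoint: Point, polygon: List[Point]) -> Tuple[float, float]:
    """Lower and upper view-angle bounds (radians) of a polygon seen from viewpoint."""
    angles = [math.atan2(y - viewpoint[1], x - viewpoint[0]) for x, y in polygon]
    return min(angles), max(angles)


def overlap_angle(viewpoint: Point, target: List[Point],
                  occluder: List[Point]) -> float:
    """Size (radians) of the intersection of the two view-angle ranges."""
    t_lo, t_hi = angular_span(viewpoint, target)
    o_lo, o_hi = angular_span(viewpoint, occluder)
    # Two intervals overlap by min(upper bounds) - max(lower bounds), if positive.
    return max(0.0, min(t_hi, o_hi) - max(t_lo, o_lo))
```

A result of zero means that the two polygons occupy disjoint view-angle ranges from this viewpoint, so the second polygon cannot occlude the first in this projection.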

With these terms defined, we can model the problem as minimization of the energy in Eq. 7, which combines the three energy terms with one weight each. The weights are set empirically. The weight for camera obstruction is the largest, to prevent the camera from moving inside a physical object, and the weight for view occlusion is larger than the weight for camera target distance, to minimize view occlusion while preventing the camera from moving too far away.
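Both the camera target distance and the camera obstruction terms rely on a point-to-polygon distance that is negative inside the polygon. A minimal 2D sketch of such a signed distance is given below; it uses ray casting for the inside test and the nearest edge for the magnitude, which is one common way to compute it and is not confirmed to be the method used in UrbanVR. The function names are hypothetical.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def _segment_distance(p: Point, a: Point, b: Point) -> float:
    """Distance from point p to the segment a-b."""
    dx, dy = b[0] - a[0], b[1] - a[1]
    length_sq = dx * dx + dy * dy
    if length_sq == 0.0:
        t = 0.0
    else:
        t = ((p[0] - a[0]) * dx + (p[1] - a[1]) * dy) / length_sq
        t = max(0.0, min(1.0, t))            # clamp to the segment
    return math.hypot(p[0] - (a[0] + t * dx), p[1] - (a[1] + t * dy))


def _inside(p: Point, polygon: List[Point]) -> bool:
    """Ray-casting test: count edge crossings of a ray going in +x direction."""
    inside = False
    n = len(polygon)
    for i in range(n):
        (x1, y1), (x2, y2) = polygon[i], polygon[(i + 1) % n]
        if (y1 > p[1]) != (y2 > p[1]):
            x_cross = x1 + (p[1] - y1) * (x2 - x1) / (y2 - y1)
            if p[0] < x_cross:
                inside = not inside
    return inside


def signed_distance(p: Point, polygon: List[Point]) -> float:
    """Distance to the polygon boundary, negative if p lies inside."""
    n = len(polygon)
    d = min(_segment_distance(p, polygon[i], polygon[(i + 1) % n])
            for i in range(n))
    return -d if _inside(p, polygon) else d
```

With such a function, a penalty can grow as the signed distance to an occluding polygon becomes negative, which matches the stated requirement that the obstruction term becomes large when P falls inside a building.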

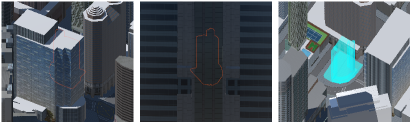

Fig. 6 illustrates the effects of different energy terms on viewport optimization. On the left, we adopt the energy function based on default weights for camera target distance (E1) and camera obstruction (E2), whilst the weight for view occlusion (E3) is set to zero. The target building (red outline) is obscured by a surrounding building. In the middle, we adopt the energy function based on default weights for E1 and E3, whilst the weight for E2 is set to zero. The camera is now positioned inside a building that blocks the target building. The full energy function based on E1 + E2 + E3 addresses these issues and leads to the optimal viewport shown on the right. Note that we omit the result of the energy function based on E2 + E3, since the camera would be located far away from the target.

4.5 System Implementation

UrbanVR is implemented in Unity3D. The input models contain 3D geometry information, including geo-positions in the WGS-84 coordinate system [43] and a third dimension for height. All building models have high-resolution textures, making them suitable for immersive visualization. This, however, also increases computation costs when users interact with our system. To accelerate computation, we pre-process all building models in LOD3 by abstracting each model into up to five cuboids in LOD1 [42]. The cuboids act as bounding boxes in 3D space for a model. When the system starts, each model is loaded with its corresponding cuboids, which are used in viewpoint optimization and gesture interactions.

The viewpoint optimization energy (Equation 7) is a continuously differentiable function, which suits many optimization algorithms. This work employs a Quasi-Newton method. In each iteration, the method finds the gradient and the step length to the next position. The process stops when either a local minimum is found or the maximum number of iterations (1000 in our case) is reached. To find multiple local minima, we start 10 parallel computation processes from 10 different initial positions, making full use of the computing resources. The initial positions are uniformly sampled on the circle that is at a distance of (defined in energy term ) from the center of the target building, and forms a 60 degree angle with the ground above the map. We also accelerate the optimization process by considering only the buildings near the target building. Specifically, we exclude buildings whose distances are more than from the target building. After all processes are finished, we combine all candidates and choose the best one.
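
The multi-start strategy described above can be sketched as follows. This is an illustrative sketch under stated assumptions, not the system's code: the z axis is taken as "up", the starts are run sequentially rather than in parallel, and a plain gradient descent with a backtracking line search stands in for the Quasi-Newton method. All function names and the toy energy are hypothetical.

```python
import math


def initial_positions(center, distance, count=10, elevation_deg=60.0):
    """Sample `count` start positions uniformly on a circle whose points lie
    at `distance` from `center` and at `elevation_deg` above the ground."""
    cx, cy, cz = center
    elev = math.radians(elevation_deg)
    radius = distance * math.cos(elev)   # horizontal radius of the circle
    height = distance * math.sin(elev)   # height of the circle above center
    return [(cx + radius * math.cos(2.0 * math.pi * k / count),
             cy + radius * math.sin(2.0 * math.pi * k / count),
             cz + height) for k in range(count)]


def numeric_gradient(energy, p, eps=1e-6):
    """Central-difference gradient of `energy` at point `p`."""
    grad = []
    for i in range(len(p)):
        hi, lo = list(p), list(p)
        hi[i] += eps
        lo[i] -= eps
        grad.append((energy(hi) - energy(lo)) / (2.0 * eps))
    return grad


def local_minimize(energy, start, max_iter=1000, tol=1e-6):
    """Gradient descent with backtracking (stand-in for a Quasi-Newton step)."""
    p, fp = list(start), energy(start)
    for _ in range(max_iter):
        g = numeric_gradient(energy, p)
        g2 = sum(gi * gi for gi in g)
        if math.sqrt(g2) < tol:
            break                         # local minimum reached
        step = 1.0
        while step > 1e-12:
            q = [pi - step * gi for pi, gi in zip(p, g)]
            fq = energy(q)
            if fq <= fp - 1e-4 * step * g2:   # sufficient decrease
                break
            step *= 0.5
        else:
            break                         # no improving step found
        p, fp = q, fq
    return p, fp


def best_viewpoint(energy, center, distance):
    """Run a local search from every start and keep the lowest-energy result."""
    candidates = [local_minimize(energy, s)
                  for s in initial_positions(center, distance)]
    return min(candidates, key=lambda c: c[1])


# Toy energy with a single minimum, used only to exercise the sketch.
toy_energy = lambda p: (p[0] - 3.0) ** 2 + (p[1] + 2.0) ** 2 + (p[2] - 5.0) ** 2
print(best_viewpoint(toy_energy, (0.0, 0.0, 0.0), 10.0))
```

Because each start is independent, the list comprehension in `best_viewpoint` is the natural place to distribute the runs across processes.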

5 Evaluation

UrbanVR is evaluated from two perspectives. First, a quantitative user study is conducted to assess the performance of egocentric interactions and viewport optimization in accomplishing the task of manipulating a selected building to match the target building. Second, qualitative expert feedback associated with a case study in Singapore is collected to evaluate applicability.

5.1 User Study

Experiment Design

To evaluate the egocentric interactions, we design a within-subjects experiment with 12 conditions: 4 interaction modes × 3 scene complexities. Each participant completed every condition. For each condition, a target building colored in translucent cyan is positioned in a development site. Participants are asked to select one from multiple building candidates. The selected candidate differs from the target building in position, orientation, and scale. Completion time is recorded and used as the evaluation criterion.

-

1.

Interaction Mode. The gesture system provides fundamental interactions for UrbanVR, while viewpoint optimization and handle bar are complementary for improving user experience. We would like to first test if gestures alone work, and then verify if the other two can facilitate user exploration. Hence, we design four modes of interaction:

-

1..1

C1: Gesture only (Ge).

-

1..2

C2: Gesture and viewpoint optimization (Ge+VO).

-

1..3

C3: Gesture and handle bar (Ge+HB).

-

1..4

C4: Gesture, viewpoint optimization, and handle bar together (Ge+VO+HB).

-

1..1

-

2.

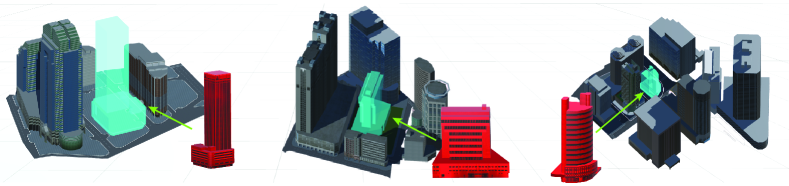

Scene Complexity. In reality, surroundings of a development site can be sparse or dense. High buildings may occlude users’ view of the site, so more interactions are needed to avoid occlusion. This causes more exploration difficulties. As illustrated in Fig. 7, we design three scenes with different complexities:

-

2..1

S1: Simple. The surrounding consists of only two buildings; Fig. 7 (left).

-

2..2

S2: Moderate. The site is surrounded by six sparsely located buildings. Two of them are relatively low height on one side, from where the space can be viewed without occlusion; Fig. 7 (middle).

-

2..3

S3: Complex. The surrounding consists of eight densely located buildings. All buildings are higher than the target building, thus the space is occluded from almost all viewpoints; Fig. 7 (right).

-

2..1

Participants

We recruited 15 participants, 10 males and five females. Twelve of them are graduate students, and the others are research staff. The ages of the participants range between 20 and 30 years. Two participants had played VR games using the HTC Vive controllers. No participant had experience with gesture interactions in VR before the study. Three participants have a background in architecture, while the others have no knowledge of urban design.

Apparatus and Implementation

The UrbanVR system was implemented in Unity3D. The experiments were conducted on a desktop PC with 12 Intel(R) Core(TM) i7-6800K CPU @ 3.4GHz, 32GB memory, and a GeForce GTX1080 Ti graphics card. The VR environment ran on an HTC Vive VR HMD, and the hand gestures were captured using a Leap Motion attached to the front of the HMD.

Procedure

The studies are performed in the order of introduction, training, experiment, and questionnaire. First, we present a 5-min introduction to the interactions, followed by a 10-min training session to make sure all participants are familiar with the interactions. Then, the experiment starts, and the completion time for each experiment condition is recorded. In the end, feedback is collected. The questions include whether they had experience with gesture interactions in VR, whether they felt dizzy, whether they think the gestures are natural, and suggestions for improvement.

In each experiment condition, the starting viewpoint is positioned on top of the urban scene. Participants are asked to complete the task requiring the following operations:

1. Navigate to the site. This can be done either through gesture-based map navigation, or through viewpoint optimization by selecting the site.

2. Select a building. Participants can open a menu through the left-hand Open gesture, and eight building candidates will be presented at the left-hand position. Participants can then select a building that matches the target through right-hand Selection. The selected building will be placed beside the menu.

3. Manipulate the selected building to match the target. The position, orientation, and size of the selected building differ from the target, so participants need to manipulate the selected building until it matches.

All operations are completed while the participants are sitting in a chair. This process is repeated 12 times in total (4 interaction combinations × 3 scene complexities) for each participant. The participants are asked to take a 3-minute break every 10 minutes, and a 5-minute break after finishing a task. The sequence of interaction combinations is pseudo-randomly assigned to each participant in order to suppress learning effects gained from previous assignments. Each participant was compensated with SGD $40.00 (USD $30) after the study.

Hypotheses

We postulate the following hypotheses:

- H1. Gesture only (C1) is the slowest interaction technique.

- H2. Viewpoint optimization (C2) can facilitate gesture only (C1) interaction, i.e., time(C1) > time(C2).

- H3. Handle bar metaphor (C3) can facilitate gesture only (C1) interaction, i.e., time(C1) > time(C3).

- H4. All techniques together (C4) is the fastest technique, i.e., time(C2) > time(C4) and time(C3) > time(C4).

Experiment Results

15 experiment results are generated for each experiment condition. We first test the results in each condition for normality using the Shapiro-Wilk test. The test shows that the results for the C2 conditions do not follow a normal distribution, while the results for the other conditions are consistent with a normal distribution at the tested significance level.

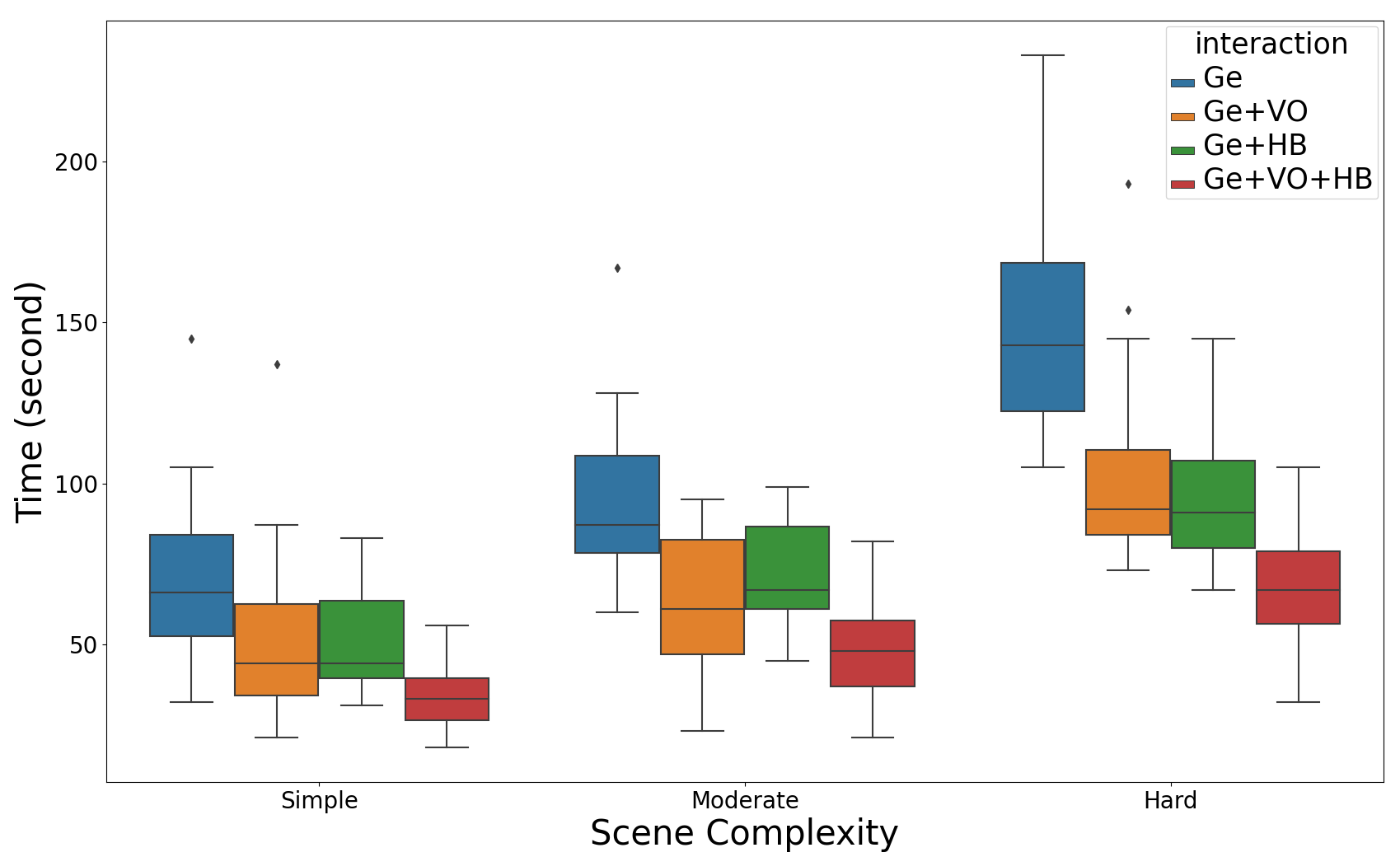

Prerequisites for computing ANOVA are fulfilled for H1, H3 and H4. We perform a two-way ANOVA (3 interaction combinations × 3 scene complexities) on them. Significant effects of interactions on completion time are observed. Scene complexity also has a significant main effect on completion time. We use the Kruskal-Wallis test [44] to evaluate H2. The result shows that viewpoint optimization has a significant effect on completion time.
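
For readers unfamiliar with the Kruskal-Wallis test, the statistic can be computed as in the sketch below. It is a generic, self-contained illustration with tie correction; the helper names are hypothetical, the closed-form p-value applies only to the two-group case (one degree of freedom), and the completion times are synthetic placeholders, not the study's data.

```python
import math


def kruskal_wallis_h(*groups):
    """Kruskal-Wallis H statistic with tie correction for two or more groups."""
    pooled = sorted((value, g) for g, group in enumerate(groups) for value in group)
    n_total = len(pooled)
    rank_sums = [0.0] * len(groups)
    tie_term = 0.0
    i = 0
    while i < n_total:
        j = i
        while j < n_total and pooled[j][0] == pooled[i][0]:
            j += 1
        avg_rank = (i + 1 + j) / 2.0      # mean of the 1-based ranks i+1 .. j
        for k in range(i, j):
            rank_sums[pooled[k][1]] += avg_rank
        t = j - i
        tie_term += t ** 3 - t
        i = j
    h = (12.0 / (n_total * (n_total + 1))
         * sum(r * r / len(g) for r, g in zip(rank_sums, groups))
         - 3.0 * (n_total + 1))
    return h / (1.0 - tie_term / (n_total ** 3 - n_total))


def p_value_two_groups(h):
    """Chi-square survival function with 1 degree of freedom (two groups only)."""
    return math.erfc(math.sqrt(h / 2.0))


# Synthetic completion times in seconds for two hypothetical conditions.
times_a = [95.0, 110.0, 102.0, 130.0, 121.0]
times_b = [70.0, 82.0, 66.0, 91.0, 77.0]
h = kruskal_wallis_h(times_a, times_b)
print(h, p_value_two_groups(h))
```

With more than two groups, the p-value comes from a chi-square distribution with one fewer degrees of freedom than the number of groups, which requires a statistics library or table.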

We also perform post hoc comparisons of completion times among the interaction combinations. The results are shown in Fig. 8. C1 is on average more than 33s slower than C3, while C3 is on average 23s slower than C4; C1 is on average more than 32s slower than C2, while C2 is on average 24s slower than C4. The results confirm H1, H2, H3, and H4. A more detailed per-scene analysis shows that C1 is slower than C3, while C3 is slower than C4, and that C1 is slower than C2, while C2 is slower than C4, in each of S1, S2, and S3. We use False Discovery Rate [45] () to correct the above comparisons. The results suggest that for more complicated scenes, the handle bar metaphor and viewpoint optimization techniques make interactive VR exploration more efficient.
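
The False Discovery Rate correction of Benjamini and Hochberg [45] can be sketched as a step-up procedure over the sorted p-values. The code below is a generic illustration: the function name is hypothetical, the level `q = 0.05` is an assumed default rather than the level used in the study, and the p-values are made up for demonstration.

```python
def benjamini_hochberg(p_values, q=0.05):
    """Return a list of booleans marking which hypotheses are rejected
    under the Benjamini-Hochberg step-up procedure at FDR level `q`."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    # Largest rank k such that p_(k) <= k * q / m.
    k_max = 0
    for rank, i in enumerate(order, start=1):
        if p_values[i] <= rank * q / m:
            k_max = rank
    rejected = [False] * m
    for rank, i in enumerate(order, start=1):
        if rank <= k_max:
            rejected[i] = True
    return rejected


# Made-up p-values for four pairwise comparisons.
print(benjamini_hochberg([0.01, 0.04, 0.03, 0.20]))
```

All hypotheses up to the largest rank that passes its threshold are rejected, even if an earlier one misses its own threshold; this is what distinguishes the step-up procedure from a per-test cutoff.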

User Feedback

All participants finished the experiment in 1.5 hours. No one felt dizzy with our system in 10-min tests, but one reported slightly dry eyes. All participants agreed that the gestures are natural and easy to use, and that viewpoint optimization and the handle bar are quite helpful. Three participants especially liked the viewpoint optimization, as they easily got bored and tired when navigating the map using gestures. “Viewpoint optimization is really cool. I think too much navigation just makes users feel bored.” There is also some negative feedback. Two participants felt the HMD resolution is not high enough, which reduces the immersive feeling. One participant was not able to manipulate the building precisely, even with the handle bar. It took her a long time to complete the task.

5.2 Expert Feedback

To evaluate the applicability of UrbanVR in urban design, we further conducted expert interviews with two independent architects (denoted as EA and EB) other than our collaborating architect. EA is a registered architect in Germany with more than 15 years of experience, while EB has about five years of experience. In the interviews, we started by explaining the tasks, visual interfaces, and interaction designs, and demonstrated a case study of how UrbanVR can be utilized in a real-world scenario in Singapore. The following data were received from the collaborating architect.

1. 3D models of a central business district in Singapore. The district is about 1 km long and approximately 0.5 km wide. The models include about 50 buildings, and a number of other objects, such as roads and street furniture.

2. Eight building models that are used as building candidates.

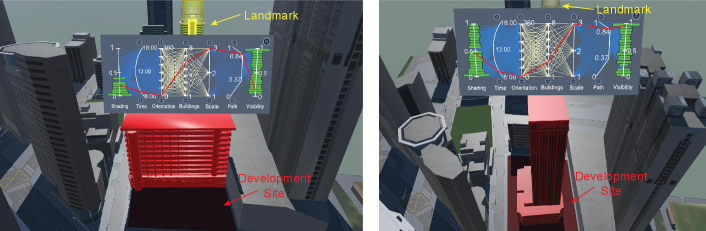

In the study, we first specify a development site and a landmark building at the back for visibility analysis. Visibility and shadow analysis results are precomputed for the building candidates. Next, we select candidates and evaluate their visibility and shading impacts. Two candidates are compared in Fig. 9.

1. The left figure presents a low and wide candidate. From the bar chart on the shading axis, we can see that the shading values are concentrated in [0, 0.5], indicating that the candidate casts little shadow on the surroundings. From the bar chart on the visibility axis, the values are approximately evenly distributed in [0, 1], except for a high concentration at 1. If we further specify the view position at a street corner (the turning point on the path axis), we can see that the visibility is low, which indicates that the candidate occludes much of the landmark at the corner.

2. A tall and thin candidate is shown in the right figure. In comparison with Fig. 9 (left), the bar distribution on the shading axis shows relatively more values over 0.5, and visibility values are more concentrated at 1. This indicates that the candidate generates more shading while affecting visibility less than the left one. In addition, the red line indicates that the candidate does not occlude the landmark at the corner.

After the demonstration, the experts were asked to explore the system by themselves for about 20 minutes. Both of them repeated the case study scenario successfully. Feedback about the system was collected and is summarized below.

Feasibility

The experts agreed that VR is a new technology that is certainly worth exploring for urban design. Both experts appreciated our work in applying immersive analytics to urban design. They know that some planning teams are exploring VR and AR technologies. However, these works are more “focusing on demonstrations and lack analysis”, according to EA. In comparison, our system integrates visualization and analysis well. EB also commented that UrbanVR provides “immersive feelings of an urban design, and more importantly, being able to modify the plan and provide quantitative results”.

Visual Design

Neither expert experienced motion sickness with the visualization. They are familiar with the urban scene studied in the work, yet they did not expect to get much more immersive feelings in VR than in desktop 3D visualizations. “The animation is very vivid, especially when navigating on the path” in the visibility analysis, commented EB. The experts had some difficulty in understanding the PCP in the beginning. EA thought some simple line charts would be more effective. He was persuaded after we explained that too many line charts would be needed to represent all the visibility and shadow analysis results. They liked the idea of bending the time axis into a curve, and arranging the path axis in the same topology as the streets. “These small adjustments give me strong visual cues of reality”, commented EB. The study clearly reveals that for the development site, shading is determined by building heights, while visibility is mainly affected by building width. The collaborating architect appreciated this finding and praised the bar chart design on the shading and visibility axes.

Interaction Design

Both experts got used to the gesture interactions quickly. They tried both building manipulation and map navigation using gestures, and felt the gestures are easy to use in VR. EA especially liked the handle bar metaphor, which “helps a lot when manipulating the buildings”. In the beginning, the experts did not realize that when visualizing the case study, the viewpoint was optimized. After the demonstration, they liked the idea and felt it is necessary, as EA felt “the HMD is too heavy - not suitable for long-time wearing”.

Limitations

The experts pointed out two major limitations in our system. First, the experts felt the “gestures are not comparable with mouse regarding accuracy”, even though we have provided handle bars for visual feedback. Nevertheless, they liked gestural interactions because hand gestures are natural, and they encouraged us to further improve the accuracy. Second, the experts would like to see more analysis features integrated into our system, such as building functionality, street accessibility, transportation mobility, etc. The current analyses do not cover all the evaluation criteria they need.

6 Conclusion, Discussion, and Future Work

We have presented UrbanVR, an immersive analytics system that can facilitate site development. UrbanVR integrates a GPU-based image processing method to support quantitative analysis, a customized PCP design in VR to present analysis results, and immersive visualization of an urban site. In comparison to similar tools on the desktop (e.g., [3, 4, 17]), the main advantage of UrbanVR is that the immersive environment gives users the feeling of spatial presence of “being there”. This is especially appreciated for context-aware urban design, as commented by the experts. Nevertheless, the immersive environment also makes user interaction more difficult. We develop several egocentric interactions, including gesture interactions, viewpoint optimization, and handle bar metaphors, to facilitate user interactions. The results of the user study show that viewpoint optimization and the handle bar metaphor can improve gesture interactions, especially for more complex urban scenes. There is an emerging trend for immersive data analysis in various application domains. In addition to general immersive visualizations, customized designs that can fulfill specific analytical tasks are also in high demand.

Discussion

There is a strong desire for immersive analytics systems that can facilitate urban design using personalized HMDs. Examples can be found in both virtual (e.g. [10, 11]) and augmented reality (e.g. [46, 47]) environments. However, challenges exist in every stage of system development, from task characterization to data analysis to visualization and interaction designs. Through the development of UrbanVR, we gained useful experience. First, a close collaboration with domain experts is necessary from the beginning, and iterative feedback from domain experts can help reduce unnecessary effort. Second, integrating advanced techniques across the visualization, graphics, and HCI fields can greatly improve the usability of the system.

Currently, limited user interactions are supported by UrbanVR. In the gesture interaction design (Sect. 4.3.1), we do not implement scene scaling, but only provide camera panning. Even though both interactions can make the scene look bigger or smaller, the experience they bring to the user is completely different in the VR environment, and this affects user behavior [48, 49]. Besides, more advanced interactions for exploring analysis results, such as filtering and reconfiguring the PCP [50], are missing. This limits the analysis functionality of our system. For instance, the collaborating architect would like our system to filter and sort designs according to specific criteria. UrbanVR will be more useful if such features are integrated. A main obstacle is that the raw gesture detection provided by Leap Motion is not accurate enough. We look forward to more advanced interaction paradigms, such as hybrid interactions that combine gestural and controller-based interactions [28], and more accurate interaction algorithms, such as deep learning techniques [51], in the near future. With more accurate and robust gesture interactions, we can further improve the interface design, following the guidelines for interface design for virtual reality [52].

For the moment, we integrate viewpoint optimization and the handle bar metaphor to improve gesture interactions. The user study shows that viewport optimization (C2), handle bar (C3), and handle bar + viewpoint optimization (C4) facilitate gesture interaction in terms of completion time. The tasks require users to match a building’s position, size, and orientation with a target building. Hence, the matching can also be considered an accuracy test, and in this respect the handle bar also improves the accuracy of gesture interactions. Results for viewpoint optimization as a supplement to gesture interactions are not normally distributed. This may be because the participants are not familiar with viewpoint optimization. More practice trials may reduce the participants’ cognitive bias toward viewpoint optimization.

Besides viewpoint optimization, another popular occlusion minimization and view enhancement method is , which has also been well-studied in the visualization and graphics communities. Recently, a study applied this method to urban scenes [53]. The method allows buildings to be shifted and scaled, and thus should generate views with less occlusion than our approach. However, the distortion of the urban scene may increase the burden of mentally mapping the virtual world to reality. Considering the benefits and drawbacks, it is worth another study to compare the effectiveness of these methods for immersive analytics.

Future Work

There are several directions for future work. First, we would like to implement the technique in VR, and compare it with the viewpoint optimization method to check which one is more effective. In addition, we will continue collaborating with domain experts, to find more problems and data that are suitable for immersive analytics. To facilitate applicability of UrbanVR, we plan to add support for common data formats of urban planning, and integrate an export function that allows users to export their designs, in the near future. Nevertheless, the system is expected to encounter scalability issues when the tasks become complex and data become diverse. This will bring in new research challenges and opportunities for immersive analytics.

Acknowledgments

This work is supported partially by National Natural Science Foundation of China (62025207) and Guangdong Basic and Applied Basic Research Foundation (2021A1515011700).

References

- Burkhard et al. [2007] Burkhard, RA, Andrienko, G, Andrienko, N, Dykes, J, Koutamanis, A, Kienreich, W, et al. Visualization summit 2007: ten research goals for 2010. Information Visualization 2007;6(3):169–188.

- Delaney [2000] Delaney, B. Visualization in urban planning: they didn’t build LA in a day. IEEE Computer Graphics and Applications 2000;20(3):10–16.

- Ferreira et al. [2015] Ferreira, N, Lage, M, Doraiswamy, H, Vo, H, Wilson, L, Werner, H, et al. Urbane: A 3D framework to support data driven decision making in urban development. In: Proceedings of IEEE Conference on Visual Analytics Science and Technology (VAST). 2015, p. 97–104.

- Ortner et al. [2017] Ortner, T, Sorger, J, Steinlechner, H, Hesina, G, Piringer, H, Gröller, E. Vis-A-Ware: Integrating spatial and non-spatial visualization for visibility-aware urban planning. IEEE Transactions on Visualization and Computer Graphics 2017;23(2):1139–1151.

- Waser et al. [2014] Waser, J, Konev, A, Sadransky, B, Horváth, Z, Ribicic, H, Carnecky, R, et al. Many plans: Multidimensional ensembles for visual decision support in flood management. Computer Graphics Forum 2014;33(3):281–290.

- Cornel et al. [2015] Cornel, D, Konev, A, Sadransky, B, Horvath, Z, Gröller, E, Waser, J. Visualization of object-centered vulnerability to possible flood hazards. Computer Graphics Forum 2015;34(3).

- Zeng and Ye [2018] Zeng, W, Ye, Y. VitalVizor: A visual analytics system for studying urban vitality. IEEE Computer Graphics and Applications 2018;38(5):38–53.

- von Richthofen et al. [2017] von Richthofen, A, Zeng, W, Asada, S, Burkhard, R, Heisel, F, Mueller Arisona, S, et al. Urban mining: Visualizing the availability of construction materials for re-use in future cities. In: Proceedings of International Conference on Information Visualisation. 2017, p. 306 – 311.

- Slater [1999] Slater, M. Measuring presence: A response to the Witmer and Singer presence questionnaire. Presence: Teleoper Virtual Environ 1999;8(5):560–565.

- Liu [2020] Liu, X. Three-dimensional visualized urban landscape planning and design based on virtual reality technology. IEEE Access 2020;8:149510–149521.

- Schrom-Feiertag et al. [2020] Schrom-Feiertag, H, Stubenschrott, M, Regal, G, Matyus, T, Seer, S. An interactive and responsive virtual reality environment for participatory urban planning. In: Proceedings of the Symposium on Simulation for Architecture and Urban Design (SimAUD). 2020, p. 119–125.

- Chen et al. [2017] Chen, Z, Wang, Y, Sun, T, Gao, X, Chen, W, Pan, Z, et al. Exploring the design space of immersive urban analytics. Visual Informatics 2017;1(2):132–142.

- Ens et al. [2021] Ens, B, Bach, B, Cordeil, M, Engelke, U, Serrano, M, Willett, W, et al. Grand challenges in immersive analytics. In: Proceedings of the CHI Conference on Human Factors in Computing Systems. Association for Computing Machinery; 2021, p. 1–17.

- Zeng et al. [2014] Zeng, W, Fu, CW, Mueller Arisona, S, Erath, A, Qu, H. Visualizing mobility of public transportation system. IEEE Transactions on Visualization and Computer Graphics 2014;20(12):1833–1842.

- Shen et al. [2018] Shen, Q, Zeng, W, Ye, Y, Mueller Arisona, S, Schubiger, S, Burkhard, R, et al. StreetVizor: Visual exploration of human-scale urban forms based on street views. IEEE Transactions on Visualization and Computer Graphics 2018;24(1):1004 – 1013.

- Zheng et al. [2016] Zheng, Y, Wu, W, Chen, Y, Qu, H, Ni, LM. Visual analytics in urban computing: An overview. IEEE Transactions on Big Data 2016;2(3):276–296.

- Miranda et al. [2019] Miranda, F, Doraiswamy, H, Lage, M, Wilson, L, Hsieh, M, Silva, CT. Shadow accrual maps: Efficient accumulation of city-scale shadows over time. IEEE Transactions on Visualization and Computer Graphics 2019;25(3):1559–1574.

- Bryson [1996] Bryson, S. Virtual reality in scientific visualization. Communications of the ACM 1996;39(5):62–71.

- Dwyer et al. [2018] Dwyer, T, Marriott, K, Isenberg, T, Klein, K, Riche, N, Schreiber, F, et al. Immersive Analytics: An Introduction. Springer International Publishing; 2018, p. 1–23.

- Sicat et al. [2019] Sicat, R, Li, J, Choi, J, Cordeil, M, Jeong, WK, Bach, B, et al. DXR: A toolkit for building immersive data visualizations. IEEE Transactions on Visualization and Computer Graphics 2019;25(1):715–725.

- Chen et al. [2019] Chen, Z, Su, Y, Wang, Y, Wang, Q, Qu, H, Wu, Y. MARVisT: Authoring glyph-based visualization in mobile augmented reality. IEEE Transactions on Visualization and Computer Graphics 2019;26(8):2645 – 2658.

- Yang et al. [2019] Yang, Y, Dwyer, T, Jenny, B, Marriott, K, Cordeil, M, Chen, H. Origin-destination flow maps in immersive environments. IEEE Transactions on Visualization and Computer Graphics 2019;25(1):693–703.

- Perhac et al. [2017] Perhac, J, Zeng, W, Asada, S, Burkhard, R, Mueller Arisona, S, Schubiger, S, et al. Urban fusion: Visualizing urban data fused with social feeds via a game engine. In: Proceedings of International Conference on Information Visualisation. 2017, p. 312–317.

- Kraus et al. [2020] Kraus, M, Angerbauer, K, Buchmüller, J, Schweitzer, D, Keim, DA, Sedlmair, M, et al. Assessing 2d and 3d heatmaps for comparative analysis: An empirical study. In: Proceedings of the CHI Conference on Human Factors in Computing Systems. Association for Computing Machinery; 2020, p. 1–14.

- Yang et al. [2020] Yang, Y, Dwyer, T, Marriott, K, Jenny, B, Goodwin, S. Tilt map: Interactive transitions between choropleth map, prism map and bar chart in immersive environments. IEEE Transactions on Visualization and Computer Graphics 2020;:1–1.

- Sherman and Craig [2003] Sherman, WR, Craig, AB. Interacting with the virtual world. In: Understanding Virtual Reality. Morgan Kaufmann; 2003, p. 283–378.

- Poupyrev et al. [1998] Poupyrev, I, Ichikawa, T, Weghorst, S, Billinghurst, M. Egocentric object manipulation in virtual environments: Empirical evaluation of interaction techniques. Computer Graphics Forum 1998;17(3):41–52.

- Besançon et al. [2021] Besançon, L, Ynnerman, A, Keefe, DF, Yu, L, Isenberg, T. The state of the art of spatial interfaces for 3d visualization. Computer Graphics Forum 2021;40(1):293–326.

- Huang et al. [2017] Huang, YJ, Fujiwara, T, Lin, YX, Lin, WC, Ma, KL. A gesture system for graph visualization in virtual reality environments. In: Proceedings of IEEE Pacific Visualization Symposium (PacificVis). 2017, p. 41–45.

- Sun et al. [2013] Sun, Q, Lin, J, Fu, CW, Kaijima, S, He, Y. A multi-touch interface for fast architectural sketching and massing. In: Proceedings of SIGCHI Conference on Human Factors in Computing Systems. 2013, p. 247–256.

- Song et al. [2012] Song, P, Goh, WB, Hutama, W, Fu, CW, Liu, X. A handle bar metaphor for virtual object manipulation with mid-air interaction. In: Proceedings of the SIGCHI Conference on Human Factors in Computing Systems. 2012, p. 1297–1306.

- Teyseyre and Campo [2009] Teyseyre, AR, Campo, MR. An overview of 3D software visualization. IEEE Transactions on Visualization and Computer Graphics 2009;15(1):87–105.

- Ragan et al. [2017] Ragan, ED, Scerbo, S, Bacim, F, Bowman, DA. Amplified head rotation in virtual reality and the effects on 3D search, training transfer, and spatial orientation. IEEE Transactions on Visualization and Computer Graphics 2017;23(8):1880–1895.

- Bordoloi and Shen [2005] Bordoloi, UD, Shen, H. View selection for volume rendering. In: Proceedings of IEEE Visualization. 2005, p. 487–494.

- Andújar et al. [2004] Andújar, C, Vázquez, P, Fairén, M. Way-Finder: guided tours through complex walkthrough models. Computer Graphics Forum 2004;23(3):499–508.

- Plemenos et al. [2004] Plemenos, D, Sbert, M, Feixas, M. On viewpoint complexity of 3D scenes. In: International Conference GraphiCon. GraphiCon; 2004, p. 24–31.

- Christie et al. [2008] Christie, M, Olivier, P, Normand, JM. Camera control in computer graphics. Computer Graphics Forum 2008;27(8):2197–2218.

- Munzner [2009] Munzner, T. A nested model for visualization design and validation. IEEE Transactions on Visualization and Computer Graphics 2009;15(6):921–928.

- Shen et al. [2018] Shen, Q, Zeng, W, Ye, Y, Arisona, SM, Schubiger, S, Burkhard, R, et al. StreetVizor: Visual exploration of human-scale urban forms based on street views. IEEE Transactions on Visualization and Computer Graphics 2018;24(1):1004–1013.

- Zielasko et al. [2016] Zielasko, D, Horn, S, Freitag, S, Weyers, B, Kuhlen, TW. Evaluation of hands-free hmd-based navigation techniques for immersive data analysis. In: IEEE Symposium on 3D User Interfaces. 2016, p. 113–119.

- Zielasko and Riecke [2020] Zielasko, D, Riecke, BE. Sitting vs. standing in VR: Towards a systematic classification of challenges and (dis)advantages. In: Proceedings of IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW). 2020, p. 297–298.

- Verdie et al. [2015] Verdie, Y, Lafarge, F, Alliez, P. LOD generation for urban scenes. ACM Transactions on Graphics 2015;34(3):30:1–14.

- Defense Mapping Agency [1987] Defense Mapping Agency, . Department of defense world geodetic system 1984: its definition and relationships with local geodetic systems. Tech. Rep.; 1987.

- Kruskal-Wallis test [2008] Kruskal-Wallis test. In: The Concise Encyclopedia of Statistics. New York, NY: Springer New York; 2008, p. 288–290.

- Benjamini and Hochberg [1995] Benjamini, Y, Hochberg, Y. Controlling the false discovery rate: A practical and powerful approach to multiple testing. Journal of the Royal Statistical Society: Series B (Methodological) 1995;57(1):289–300.

- Noyman et al. [2019] Noyman, A, Sakai, Y, Larson, K. CityScopeAR: urban design and crowdsourced engagement platform. arXiv:1907.08586 2019.

- Noyman and Larson [2020] Noyman, A, Larson, K. DeepScope: HCI platform for generative cityscape visualization. In: Extended Abstracts of the CHI Conference on Human Factors in Computing Systems. 2020, p. 1–9.

- Zhang [2009] Zhang, X. Multiscale traveling: crossing the boundary between space and scale. Virtual Reality 2009;13(2):101.

- Langbehn et al. [2016] Langbehn, E, Bruder, G, Steinicke, F. Scale matters! analysis of dominant scale estimation in the presence of conflicting cues in multi-scale collaborative virtual environments. In: Proceedings of IEEE Symposium on 3D User Interfaces (3DUI). 2016, p. 211–220.

- Yi et al. [2007] Yi, JS, Kang, YA, Stasko, J, Jacko, JA. Toward a deeper understanding of the role of interaction in information visualization. IEEE Transactions on Visualization and Computer Graphics 2007;13(6):1224–1231.

- Chen et al. [2020] Chen, Z, Zeng, W, Yang, Z, Yu, L, Fu, CW, Qu, H. LassoNet: Deep lasso-selection of 3D point clouds. IEEE Transactions on Visualization and Computer Graphics 2020;26(1):195–204.

- Bowman and Hodges [1999] Bowman, DA, Hodges, LF. Formalizing the design, evaluation, and application of interaction techniques for immersive virtual environments. Journal of Visual Languages & Computing 1999;10(1):37–53.

- Deng et al. [2016] Deng, H, Zhang, L, Mao, X, Qu, H. Interactive urban context-aware visualization via multiple disocclusion operators. IEEE Transactions on Visualization and Computer Graphics 2016;22(7):1862–1874.