Variable-Length Sparse Feedback Codes for Point-to-Point, Multiple Access, and Random Access Channels

Abstract

This paper investigates variable-length stop-feedback codes for memoryless channels in point-to-point, multiple access, and random access communication scenarios. The proposed codes employ $L$ decoding times $n_1, n_2, \dots, n_L$ for the point-to-point and multiple access channels and $KL + 1$ decoding times for the random access channel with at most $K$ active transmitters. In the point-to-point and multiple access channels, the decoder uses the observed channel outputs to decide whether to decode at each of the allowed decoding times $n_1, \dots, n_L$, at each time telling the encoder whether or not to stop transmitting using a single bit of feedback. In the random access scenario, the decoder estimates the number of active transmitters at time $n_0$ and then chooses among decoding times $n_{k,1}, \dots, n_{k,L}$ if it believes that there are $k$ active transmitters. In all cases, the choice of allowed decoding times is part of the code design; given a fixed value of $L$, the allowed decoding times are chosen to minimize the expected decoding time for a given codebook size and target average error probability. The number $L$ in each scenario is assumed to be constant even when the blocklength is allowed to grow; the resulting code therefore requires only sparse feedback. The central results are asymptotic approximations of achievable rates as a function of the error probability, the expected decoding time, and the number of decoding times. A converse for variable-length stop-feedback codes with uniformly-spaced decoding times is included for the point-to-point channel.

Index Terms:

Variable-length coding, multiple-access, random-access, feedback codes, sparse feedback, second-order analysis, channel dispersion, moderate deviations, sequential hypothesis testing.

I Introduction

Although feedback does not increase the capacity of memoryless, point-to-point channels (PPCs) [3], feedback can simplify coding schemes and improve the speed of approach to capacity with blocklength. Examples that demonstrate this effect include Horstein’s scheme for the binary symmetric channel (BSC) [4] and Schalkwijk and Kailath’s scheme for the Gaussian channel [5], both of which leverage full channel feedback to simplify coding in the fixed-length regime. Wagner et al. [6] show that feedback improves the second-order term in the achievable rate as a function of blocklength for fixed-rate coding over discrete, memoryless, point-to-point channels (DM-PPCs) that have multiple capacity-achieving input distributions giving distinct dispersions.

I-A Literature Review on Variable-Length Feedback Codes

The benefits of feedback increase for codes with multiple decoding times (called variable-length or rateless codes). In [7], Burnashev shows that feedback significantly improves the optimal error exponent of variable-length codes for DM-PPCs. In [8], Polyanskiy et al. extend the work of Burnashev to the finite-length regime with non-vanishing error probabilities, introducing variable-length feedback (VLF) codes and deriving achievability and converse bounds on their performance. Tchamkerten and Telatar [9] show that Burnashev’s optimal error exponent is achieved for a family of BSCs and Z channels, where the crossover probability of the channel is unknown. For the BSC, Naghshvar et al. [10] propose a VLF coding scheme with a novel encoder called the small-enough-difference (SED) encoder and derive a non-asymptotic achievability bound. Their scheme is an alternative to Burnashev’s scheme to achieve the optimal error exponent. Yang et al. [11] extend the SED encoder to the binary asymmetric channel, of which the BSC is a special case, and derive refined non-asymptotic achievability bounds for the binary asymmetric channel. Guo and Kostina [12] propose an instantaneous SED code for a source whose symbols progressively arrive at the encoder in real time.

The feedback in VLF codes can be limited in its amount and frequency. Here, the amount refers to how much feedback is sent from the receiver at each time feedback is available; the frequency refers to how many times feedback is available throughout the communication epoch. The extreme cases in the frequency are no feedback and feedback after every channel use. The extreme cases in the amount are full feedback and stop feedback. With full feedback, at time $n$, the receiver sends all symbols received until that time, $Y^n$, which can be used by the transmitter to encode the $(n+1)$-th symbol. With stop feedback, the receiver sends a single bit of feedback to inform the transmitter whether or not to stop transmitting. Unlike full-feedback codes, variable-length stop-feedback (VLSF) codes employ codewords that are fixed when the code is designed; that is, feedback affects how much of a codeword is sent but does not affect the codeword’s value.

In [8], Polyanskiy et al. define VLSF codes with feedback after every channel use. The result in [8, Th. 2] shows that variable-length coding improves the first-order term in the asymptotic expansion of the maximum achievable message set size from $NC$ to $\frac{NC}{1-\epsilon}$, where $C$ is the capacity of the DM-PPC, $N$ is the average decoding time (averaging is with respect to both the random message and the random noise), and $\epsilon$ is the average error probability. The second-order term achievable for VLF codes is $O(\log N)$, which means that VLF codes have zero dispersion and that the convergence to the capacity is much faster than that achieved by fixed-length codes [13, 14]. In [15], Altuğ et al. modify the VLSF coding paradigm by replacing the average decoding time constraint with a constraint on the probability that the decoding time exceeds a target value; the benefit in the first-order term does not appear under this probabilistic delay constraint, and the dispersion is no longer zero. A VLSF scenario with noisy feedback and a finite largest available decoding time is studied in [16]. For VLSF codes, Forney [17] shows an achievable error exponent that is strictly better than that of fixed-length, no-feedback codes and is strictly worse than Burnashev’s error exponent for variable-length full-feedback codes. Ginzach et al. [18] derive the exact error exponent of VLSF codes for the BSC.

Bounds on the performance of VLSF codes that allow feedback after every channel use are derived for several network communication problems. Truong and Tan [19, 20] extend the results from [8] to the Gaussian multiple access channel (MAC) under an average power constraint. Trillingsgaard et al. [21] study the VLSF scenario where a common message is transmitted across a $K$-user discrete memoryless broadcast channel. Heidari et al. [22] extend Burnashev’s work from the DM-PPC to the DM-MAC, deriving lower and upper bounds on the error exponents of VLF codes for the DM-MAC. Bounds on the performance of VLSF codes for the DM-MAC with an unbounded number of decoding times appear in [23]. The achievability bounds for the $K$-transmitter MAC in [20] and [23] employ simultaneous information density threshold rules.

While high rates of feedback are impractical for many applications (especially wireless applications on half-duplex devices), most prior work on VLSF codes (e.g., [8, 15, 19, 20, 21, 22, 23]) considers the densest feedback possible, using feedback at every time step before decoding, up to the largest blocklength used by a given VLSF code. To consider more limited feedback scenarios, let $L$ denote the number of potential decoding times in a VLSF code, a number that we assume to be independent of the blocklength. We further assume that feedback is available only at the decoding times $n_1 < n_2 < \dots < n_L$, which are fixed in the code design and known by the transmitter and receiver before the start of transmission. In [24], Kim et al. choose the decoding time for each message from the set $\{d, 2d, \dots, Ld\}$ for some positive integers $d$ and $L$. In [25], Williamson et al. numerically optimize the values of $L$ decoding times and employ punctured convolutional codes and a Viterbi algorithm. In [26], Vakilinia et al. introduce a sequential differential optimization (SDO) algorithm to optimize the choices of the potential decoding times $n_1, \dots, n_L$, approximating the random decoding time $\tau$ by a Gaussian random variable. Vakilinia et al. apply the SDO algorithm to non-binary low-density parity-check codes over binary-input, additive white Gaussian channels; the mean and variance of $\tau$ are determined through simulation. Heidarzadeh et al. [27] extend [26] to account for the feedback rate and apply the SDO algorithm to random linear codes over the binary erasure channel. In [28], we develop a communication strategy for a random access scenario with a total of $K$ transmitters; in this scenario, neither the transmitters nor the receiver knows the set of active transmitters, which can vary from one epoch to the next. The code in [28] is a VLSF code with decoding times $n_0 < n_1 < \dots < n_K$. At each time $n_k$, the decoder decodes only if it decides that $k$ out of the $K$ transmitters are active.
It informs the transmitters about its decision by sending a one-bit signal at each time $n_k$ until the time at which it decodes. We show that our random access channel (RAC) code with sparse stop feedback achieves performance identical in the capacity and dispersion terms to that of the best-known code without feedback for a MAC in which the set of active transmitters is known a priori. An extension of [28] to low-density parity-check codes appears in [29]. Building upon an earlier version of the present paper [1], Yang et al. [30] construct an integer program to minimize the upper bound on the average blocklength subject to constraints on the average error probability and the minimum gap between consecutive decoding times. By employing a combination of the Edgeworth expansion [31, Sec. XVI.4] and the Petrov expansion (Lemma 2), that paper develops an approximation to the cumulative distribution function of the information density random variable $\imath(X^n; Y^n)$; a numerical comparison of this approximation with the empirical cumulative distribution function shows that the approximation is tight even for small values of $n$. Their analysis uses this tight approximation to numerically evaluate the non-asymptotic achievability bound (Theorem 1, below) for the BSC, binary erasure channel, and binary-input Gaussian PPC for the values of $L$ considered there. The resulting performance closely approaches Polyanskiy’s VLSF achievability bound [8] with a relatively small $L$. For the binary erasure channel, [30] also proposes a new zero-error code that employs systematic transmission followed by random linear fountain coding; the proposed code outperforms Polyanskiy’s achievability bound.

Sparse feedback is known to achieve the optimal error exponent for VLF codes. Yamamoto and Itoh [32] construct a two-phase scheme that achieves Burnashev’s optimal error exponent [7]. Although their scheme allows an unlimited number of feedback instances and decoding times, it is sparse in the sense that feedback is available only at times for some and integer . Lalitha and Javidi [33] show that Burnashev’s optimal error exponent can be achieved by only decoding times by truncating the Yamamoto–Itoh scheme.

Decoding for VLSF codes can be accomplished by running a sequential hypothesis test (SHT) on each possible message. At each of an increasing sequence of stopping times, the SHT compares a hypothesis corresponding to a particular transmitted message to the hypothesis corresponding to the marginal distribution of the channel output. In [34], Berlin et al. derive a bound on the average stopping time of an SHT. They then use this bound to derive a non-asymptotic converse bound for VLF codes. This result is an alternative proof of the converse for Burnashev’s error exponent [7].

I-B Contributions of This Work

Like [26, 25, 27], this paper studies VLSF codes under a finite constraint $L$ on the number of decoding times. While [26, 25, 27] focus on practical coding and performance, our goal is to derive new achievability bounds on the asymptotic rate achievable by VLSF codes between $L = 1$ (the fixed-length regime analyzed in [13, 35]) and $L = \infty$ (the classical variable-length regime defined in [8, Def. 1], where all decoding times $1, 2, \dots$ are available).

Our contributions are summarized as follows.

1. We derive second-order achievability bounds for VLSF codes over DM-PPCs, DM-MACs, DM-RACs, and the Gaussian PPC with maximal power constraints. These bounds are presented in Theorems 2, 5, 6, and 7, respectively. In our analysis for each problem, we consider the asymptotic regime where the number of decoding times $L$ is fixed while the average decoding time $N$ grows without bound, i.e., $L = O(1)$ with respect to $N$. Each of our asymptotic bounds follows from the corresponding non-asymptotic bound that employs an information-density threshold rule with a stop-at-time-zero procedure. Asymptotically optimizing the values of the decoding times yields the given results. By viewing the proposed decoder as a special case of SHT-based decoders, we show a more general non-asymptotic achievability bound; Theorem 8 employs an arbitrary SHT to decide whether a message is transmitted.

2. Linking the error probability of any given VLSF code to that of an SHT, in Theorem 9, we prove a converse bound in the spirit of the meta-converse bound from [13, Th. 27]. Analyzing the new bound with infinitely many uniformly-spaced decoding times over Cover–Thomas symmetric channels, in Theorem 3, we prove a converse bound for VLSF codes; the resulting bound is tight up to its second-order term. Unfortunately, since analyzing our meta-converse bound is challenging in the general case of an arbitrary DM-PPC and an arbitrary number of decoding times (see [36, Th. 3.2.3] for the structure of optimal SHTs with finitely many decoding times), whether or not the second-order term is tight in the general case remains an open question.

| Number of decoding times | Channel type | First-order term | Second-order term: lower bound | Second-order term: upper bound |
|---|---|---|---|---|
| | DM-PPC | | [13] | [13] |
| | DM-PPC | | Theorem 2 | [8] |
| | DM-PPC | | [8] | [8] |
| | DM-MAC | | [37] | [38] |
| | DM-MAC | | Theorem 5 | [20] |
| | DM-MAC | | eq. (44) | [20] |
| | | | [20] | [20] |
| | DM-RAC | | [28] | [38] |
| | | | [39] | [38] |
| | DM-RAC | | Theorem 6 | [20] |
| | | | Theorem 7 | [19] |
| | | | [19] | [19] |

Below, we detail these contributions. Our main result shows that for VLSF codes with $L = O(1)$ decoding times over a DM-PPC, a message set size $M$ satisfying

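$$\log M = \frac{NC}{1-\epsilon} - \sqrt{N \log_{(L-1)}(N) \frac{V}{1-\epsilon}} + O\left(\sqrt{\frac{N}{\log_{(L-1)}(N)}}\right) \qquad (1)$$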

is achievable. Here $\log_{(L)}(\cdot)$ denotes the $L$-fold nested logarithm, $N$ is the average decoding time, $\epsilon$ is the average error probability, and $C$ and $V$ are the capacity and dispersion of the DM-PPC, respectively. Similar formulas arise for the DM-MAC and DM-RAC, where $C$ and $V$ are replaced by the sum-rate mutual information and the sum-rate dispersion. The speed of convergence to $\frac{C}{1-\epsilon}$ depends on $L$. It is slower than the convergence to $C$ in the fixed-length scenario, which has second-order term $O(\sqrt{N})$ [13]. The case $L = 2$ in (1) recovers the rate of convergence for the variable-length scenario without feedback, which has second-order term $O(\sqrt{N \log N})$ [8, Proof of Th. 1]; that rate is achieved with $n_1 = 0$. The nested logarithm term in (1) arises because after writing the average decoding time as , the decoding time choices in (22), below, satisfy for , making the effect of each decoding time on the average decoding time asymptotically similar. We then use the SDO algorithm introduced in [26] to show that our particular choice of $n_1, \dots, n_L$ is second-order optimal (see Appendix B.II). Despite the order-wise dependence of the rate of convergence on $L$, (1) grows so slowly with $L$ that it suggests little benefit to choosing a large $L$. For example, when , behaves very similarly to for practical values of (e.g., ). Note, however, that the given achievability result provides only a lower bound on the benefit of increasing $L$; bounding the benefit from above requires a converse result. Nevertheless, the numerical results in [30] support the conclusion, drawn from the asymptotic achievability bound (1), that the improvement achievable by going from $L$ to $L + 1$ decoding times diminishes as $L$ increases.
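The following Python sketch evaluates the first two terms of (1), with the $O(\cdot)$ remainder dropped, to show how weakly they depend on $L$. It assumes that (1) reads $\log M \approx \frac{NC}{1-\epsilon} - \sqrt{N \log_{(L-1)}(N) \frac{V}{1-\epsilon}}$. It also assumes that $\log_{(L)}$ is the recursively defined nested natural logarithm. The function names, the BSC with crossover probability 0.11, $N = 1000$, and $\epsilon = 10^{-3}$ are illustrative choices, not values taken from this paper.

```python
import math


def nested_log(L: int, x: float) -> float:
    """L-fold nested natural log: log_(1)(x) = log x, log_(L)(x) = log(log_(L-1)(x)).

    Raises ValueError when (L, x) lies outside the domain of the recursion.
    """
    if L < 1:
        raise ValueError("L must be a positive integer")
    for _ in range(L):
        if x <= 0:
            raise ValueError("log_(L)(x) is undefined for this (L, x) pair")
        x = math.log(x)
    return x


def approx_log_m(N: float, eps: float, C: float, V: float, L: int) -> float:
    """First two terms of the assumed form of (1); the O(.) remainder is dropped.

    Requires L >= 2 and log_(L-1)(N) > 0 so that the square root is real.
    """
    penalty = nested_log(L - 1, N)
    if penalty <= 0:
        raise ValueError("N is too small for this L")
    return N * C / (1 - eps) - math.sqrt(N * penalty * V / (1 - eps))


# Illustrative BSC with crossover probability 0.11 (capacity and dispersion in nats).
p = 0.11
C = math.log(2) + p * math.log(p) + (1 - p) * math.log(1 - p)
V = p * (1 - p) * math.log((1 - p) / p) ** 2
for L in (2, 3, 4):
    print(L, round(nested_log(L - 1, 1000), 3), round(approx_log_m(1000, 1e-3, C, V, L), 1))
```

At $N = 1000$, the factor $\log_{(L-1)}(N)$ is about 6.91, 1.93, and 0.66 for $L = 2, 3, 4$. The next iterate, $\log_{(4)}(1000)$, is already negative, so the sketch rejects $L = 5$ at this $N$. This reflects that (1) is an asymptotic statement.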

For the PPC and MAC, the feedback rate of our code is if the decoding time is ; for the RAC, that rate becomes if the decoding time is . In both cases, our feedback rate approaches 0 as the decoding time grows. In contrast, VLSF codes such as those in [8, 17] use a feedback rate of 1 bit per channel use. In VLSF codes for the RAC, the decoder decodes at one of the $L$ available times $n_{k,1}, \dots, n_{k,L}$ if it estimates that the number of active transmitters is $k$; we reserve a single decoding time for the possibility that no transmitters are active. Theorem 6 extends the RAC code in [28] from $L = 1$ to any $L$.

The converse result in Theorem 3 shows that in order to achieve (1) with evenly spaced decoding times, one needs at least decoding times. In contrast, our optimized codes achieve (1) with a finite $L$ that does not grow with the average decoding time $N$, which highlights the importance of optimizing the values of decoding times in a VLSF code.

Table I summarizes the literature on VLSF codes and the new results from this work, showing how they vary with the number of decoding times and the channel type.

In what follows, Section II gives notation and definitions. Sections III–VI introduce variable-length sparse stop-feedback codes for the DM-PPC, DM-MAC, DM-RAC, and the Gaussian PPC, respectively, and present our main theorems for those channel models; Section VII concludes the paper. The proofs appear in the Appendix.

II Preliminaries

II-A Notation

For any positive integers and , , , and . The collection of length- vectors from the transmitter index set is denoted by ; we drop the superscript if , i.e., . The collection of non-empty strict subsets of a set is denoted by . All-zero and all-one vectors are denoted by $\mathbf{0}$ and $\mathbf{1}$, respectively; dimension is determined from the context. The sets of positive integers and non-negative integers are denoted by and , respectively. We write if there exists a permutation of such that , and if such a permutation does not exist. The identity matrix of dimension $n$ is denoted by $\mathsf{I}_n$. The Euclidean norm of vector $\mathbf{x}$ is denoted by $\|\mathbf{x}\|$. Unless specified otherwise, all logarithms and exponents have base $e$. Information is measured in nats. The standard , , and notations are defined as if , if , and if . The distribution of a random variable $X$ is denoted by $P_X$; $\mathcal{N}(\boldsymbol{\mu}, \mathsf{\Sigma})$ denotes the Gaussian distribution with mean $\boldsymbol{\mu}$ and covariance matrix $\mathsf{\Sigma}$, $Q(\cdot)$ represents the complementary standard Gaussian cumulative distribution function $Q(x) \triangleq \frac{1}{\sqrt{2\pi}} \int_x^{\infty} \exp\left(-\frac{t^2}{2}\right) dt$, and $Q^{-1}(\cdot)$ is its functional inverse. We define the nested logarithm function

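$$\log_{(L)}(x) \triangleq \begin{cases} \log(x) & \text{if } L = 1,\ x > 0, \\ \log\left(\log_{(L-1)}(x)\right) & \text{if } L > 1,\ \log_{(L-1)}(x) > 0, \end{cases} \qquad (2)$$

and $\log_{(L)}(x)$ is undefined for all other pairs $(L, x)$.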

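We denote the Radon–Nikodym derivative of distribution $P$ with respect to distribution $Q$ by $\frac{dP}{dQ}$. We denote the relative entropy and relative entropy variance between $P$ and $Q$ by $D(P\|Q) \triangleq \mathbb{E}\left[\log \frac{dP}{dQ}(X)\right]$ and $V(P\|Q) \triangleq \mathrm{Var}\left[\log \frac{dP}{dQ}(X)\right]$, respectively, where $X \sim P$. The $\sigma$-algebra generated by random variable $X$ is denoted by $\sigma(X)$. A random variable $X$ is called arithmetic if there exists some $d > 0$ such that $\mathbb{P}[X \in d\mathbb{Z}] = 1$. The largest $d$ that satisfies this condition is called the span. If such a $d$ does not exist, then the random variable is non-arithmetic. Denote and for any random variable .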

II-B Discrete Memoryless Channel and Information Density

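A DM-PPC is defined by the triple $(\mathcal{X}, P_{Y|X}, \mathcal{Y})$, where $\mathcal{X}$ is the finite input alphabet, $P_{Y|X}$ is the channel transition kernel, and $\mathcal{Y}$ is the finite output alphabet. The $n$-letter input-output relationship of the channel is given by $P_{Y^n|X^n}(y^n|x^n) = \prod_{i=1}^{n} P_{Y|X}(y_i|x_i)$ for all $n$, $x^n \in \mathcal{X}^n$, and $y^n \in \mathcal{Y}^n$.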

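The $n$-letter information density of a channel $P_{Y^n|X^n}$ under input distribution $P_{X^n}$ is defined as

$$\imath(x^n; y^n) \triangleq \log \frac{P_{Y^n|X^n}(y^n|x^n)}{P_{Y^n}(y^n)}, \qquad (3)$$

where $P_{Y^n}$ is the marginal of $P_{X^n} \times P_{Y^n|X^n}$. If the inputs $X^n$ are independently and identically distributed (i.i.d.) according to $P_X$, then

$$\imath(x^n; y^n) = \sum_{i=1}^{n} \imath(x_i; y_i), \qquad (4)$$

where the single-letter information density is given by

$$\imath(x; y) \triangleq \log \frac{P_{Y|X}(y|x)}{P_Y(y)}. \qquad (5)$$

The mutual information and dispersion are defined as

$$I(P_X) \triangleq \mathbb{E}[\imath(X; Y)], \qquad (6)$$

$$V(P_X) \triangleq \mathrm{Var}[\imath(X; Y)], \qquad (7)$$

respectively, where $(X, Y) \sim P_X \times P_{Y|X}$.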

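Let $\mathcal{P}$ denote all distributions on the alphabet $\mathcal{X}$. The capacity of the DM-PPC is

$$C \triangleq \max_{P_X \in \mathcal{P}} I(P_X), \qquad (8)$$

and the dispersion of the DM-PPC is

$$V \triangleq \min_{P_X \in \mathcal{P} \colon I(P_X) = C} V(P_X). \qquad (9)$$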
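To make (5)–(9) concrete, the Python sketch below computes $I(P_X)$ and $V(P_X)$ for a finite channel matrix. It also approximates the maximization in (8) for binary-input channels by a grid search. It assumes that (7) is the variance of the single-letter information density under $P_X \times P_{Y|X}$. For capacity-achieving inputs this coincides with the conditional-variance form, because the conditional mean of $\imath(x; Y)$ equals $C$ for every input in the support. The function names and example channels are hypothetical.

```python
import math


def info_stats(P_X, W):
    """Return (I(P_X), V(P_X)) in nats for a DMC with W[x][y] = P_{Y|X}(y|x).

    V(P_X) is taken to be the variance of the single-letter information
    density i(X;Y) under P_X x P_{Y|X} (an assumption about the form of (7)).
    """
    n_out = len(W[0])
    # Output marginal P_Y(y) = sum_x P_X(x) P_{Y|X}(y|x).
    P_Y = [sum(P_X[x] * W[x][y] for x in range(len(P_X))) for y in range(n_out)]
    mean = second = 0.0
    for x, px in enumerate(P_X):
        for y in range(n_out):
            pxy = px * W[x][y]
            if pxy > 0:
                dens = math.log(W[x][y] / P_Y[y])  # single-letter information density (5)
                mean += pxy * dens
                second += pxy * dens * dens
    return mean, second - mean ** 2


def binary_input_capacity(W, grid=10000):
    """Approximate (8) for a binary-input DMC by a grid search over P_X = (1 - q, q).

    Returns (approximate capacity in nats, maximizing q).
    """
    return max((info_stats([1 - q, q], W)[0], q) for q in (k / grid for k in range(grid + 1)))


p = 0.11
bsc = [[1 - p, p], [p, 1 - p]]
I_bsc, V_bsc = info_stats([0.5, 0.5], bsc)   # the uniform input achieves capacity on the BSC
z_channel = [[1.0, 0.0], [0.5, 0.5]]          # Z channel: input 1 is received as 0 w.p. 1/2
C_z, q_z = binary_input_capacity(z_channel)
```

For the BSC, the results match the closed forms $C = \log 2 - h(p)$ and $V = p(1-p)\log^2\frac{1-p}{p}$. For the Z channel, the maximizing input in (8) is not uniform. The grid search is only a simple stand-in for a proper optimizer such as the Blahut–Arimoto algorithm.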

III VLSF Codes for the DM-PPC

III-A VLSF Codes with Decoding Times

We consider VLSF codes with a finite number $L$ of potential decoding times $n_1 < n_2 < \dots < n_L$ over a DM-PPC. The receiver chooses to end the transmission at the first time $n_\ell$ that it is ready to decode. The transmitter learns of the receiver’s decision via a single bit of feedback at each of times $n_1, \dots, n_{L-1}$. Feedback bit “0” at time $n_\ell$ means that the receiver is not yet ready to decode, and transmission should continue; feedback bit “1” means that the receiver can decode at time $n_\ell$, which signals the transmitter to stop. Using this feedback, the transmitter and the receiver are synchronized and aware of the current state of the transmission at all times. Since $n_L$ is the last decoding time available, the receiver always makes a final decision if time $n_L$ is reached. Unlike [7, 32, 25], we do not allow re-transmission of the message after time $n_L$. Since the transmitter and the receiver both know the values of decoding times, the receiver does not need to send feedback at the last available time $n_L$. We assume that the transmitter and the receiver know the channel transition kernel $P_{Y|X}$. We employ average decoding time and average error probability constraints. Definition 1, below, formalizes our code description.

Definition 1

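Fix $\epsilon \in (0, 1)$, positive integers $L$ and $M$, and a positive scalar $N$. An $(N, L, M, \epsilon)$-VLSF code for the DM-PPC comprises

1. non-negative integer-valued decoding times $n_1 < n_2 < \dots < n_L$,

2. a finite alphabet $\mathcal{U}$ and a probability distribution $P_U$ on $\mathcal{U}$ defining a common randomness random variable $U$ that is revealed to both the transmitter and the receiver before the start of the transmission (the realization of $U$ specifies the codebook),

3. an encoding function $\mathsf{f}_n \colon \mathcal{U} \times [M] \to \mathcal{X}$, for each $n = 1, \dots, n_L$, that assigns a codeword

$$\mathsf{f}(u, m)^{n_L} \triangleq (\mathsf{f}_1(u, m), \dots, \mathsf{f}_{n_L}(u, m)) \qquad (10)$$

to each message $m \in [M]$ and common randomness instance $u \in \mathcal{U}$,

4. a non-negative integer-valued random stopping time $\tau \in \{n_1, \dots, n_L\}$ for the filtration generated by $\{U, Y^{n_\ell}\}_{\ell=1}^{L}$ that satisfies an average decoding time constraint

$$\mathbb{E}[\tau] \leq N, \qquad (11)$$

5. and a decoding function $\mathsf{g}_{n_\ell} \colon \mathcal{U} \times \mathcal{Y}^{n_\ell} \to [M] \cup \{\mathsf{e}\}$ for each $\ell \in [L]$ (where $\mathsf{e}$ is the erasure symbol used to indicate that the receiver is not ready to decode), satisfying an average error probability constraint

$$\mathbb{P}[\mathsf{g}_{\tau}(U, Y^{\tau}) \neq W] \leq \epsilon, \qquad (12)$$

where the message $W$ is equiprobably distributed on the set $[M]$, and $X^{\tau} = \mathsf{f}(U, W)^{\tau}$.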

Note that Definition 1 with $L = 1$ recovers the fixed-length no-feedback codes in [13]. As in [8, 28, 21], we need common randomness here because the traditional random-coding argument does not prove the existence of a single (deterministic) code that simultaneously satisfies conditions (11) and (12). Therefore, randomized codes are necessary for our achievability argument; here, suffices [28, Appendix D].

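We define the maximum achievable message set size $M^*_L(N, \epsilon)$ with $L$ decoding times, average decoding time $N$, and average error probability $\epsilon$ as

$$M^*_L(N, \epsilon) \triangleq \max\{M \colon \text{an } (N, L, M, \epsilon)\text{-VLSF code exists}\}. \qquad (13)$$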

The maximum achievable message set size for VLSF codes with decoding times that are restricted to belong to a subset is denoted by .

III-B Related Work

The following discussion summarizes prior asymptotic expansions of the maximum achievable message set size for the DM-PPC.

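a) $L = 1$: For $V > 0$ and $\epsilon \in (0, \frac{1}{2})$, Polyanskiy et al. [13, Th. 49] show that

$$\log M^*_1(N, \epsilon) = NC - \sqrt{NV}\, Q^{-1}(\epsilon) + O(\log N). \qquad (14)$$

For $\epsilon \in (\frac{1}{2}, 1)$, the dispersion $V$ in (9) is replaced by the maximum dispersion $V_{\max} \triangleq \max_{P_X \in \mathcal{P} \colon I(P_X) = C} V(P_X)$. The $O(\log N)$ term is lower bounded by $O(1)$ and upper bounded by $\frac{1}{2}\log N + O(1)$. For nonsingular DM-PPCs, i.e., the channels that satisfy $\mathrm{Var}[\imath(X; Y) \mid Y] > 0$ for the distributions that achieve the capacity $C$ and the dispersion $V$, the $O(\log N)$ term is equal to $\frac{1}{2}\log N + O(1)$ [40]. Moulin [41] derives lower and upper bounds on the $O(1)$ term in the asymptotic expansion when the channel is nonsingular with non-lattice information density.

b) $L = \infty$: For VLSF codes with $L = \infty$, Polyanskiy et al. [8, Th. 2] show that for $\epsilon \in (0, 1)$,

$$\log M^*_\infty(N, \epsilon) \geq \frac{NC}{1-\epsilon} - \log N + O(1), \qquad (15)$$

$$\log M^*_\infty(N, \epsilon) \leq \frac{NC + h(\epsilon)}{1-\epsilon}, \qquad (16)$$

where $h(\cdot)$ is the binary entropy function (in nats). The bounds in (15)–(16) indicate that the $\epsilon$-capacity (the first-order achievable term) is

$$\lim_{N \to \infty} \frac{1}{N} \log M^*_\infty(N, \epsilon) = \frac{C}{1-\epsilon}. \qquad (17)$$

The achievable dispersion term is zero, i.e., the second-order term in the fundamental limit in (15)–(16) is $O(\log N)$.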

III-C Our Achievability Bounds

Theorem 1, below, is our non-asymptotic achievability bound for VLSF codes with decoding times.

Theorem 1

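Fix a constant $\gamma$, decoding times $n_1 < \dots < n_L$, and a positive integer $M$. For any positive number $N$ and $\epsilon \in (0, 1)$, there exists an $(N, L, M, \epsilon)$-VLSF code for the DM-PPC with

$$\epsilon \leq \mathbb{P}[\imath(X^{n_L}; Y^{n_L}) < \gamma] + (M - 1)\exp(-\gamma), \qquad (18)$$

$$N \leq n_1 + \sum_{\ell=1}^{L-1} (n_{\ell+1} - n_\ell)\, \mathbb{P}[\imath(X^{n_\ell}; Y^{n_\ell}) < \gamma], \qquad (19)$$

where $P_{X^{n_L}}$ is a product of distributions of $L$ sub-vectors of lengths $n_\ell - n_{\ell-1}$, $\ell \in [L]$, i.e.,

$$P_{X^{n_L}} = \prod_{\ell=1}^{L} P_{X_{n_{\ell-1}+1}^{n_\ell}}, \qquad (20)$$

where $n_0 = 0$.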

Proof:

Polyanskiy et al. [13] interpret the information-density threshold test for a fixed-length code as a collection of hypothesis tests aimed at determining whether the channel output is or is not dependent on a given codeword. In our coding scheme, we use SHTs in a similar way. The strategy is as follows.

The VLSF decoder at each time $n_\ell$ runs $M$ SHTs, each between a hypothesis $H_0$ that the channel output results from transmission of the $m$-th codeword, $m \in [M]$, and the hypothesis $H_1$ that the channel output is drawn from the unconditional channel output distribution. The former indicates that the decoder hypothesizes that message $m$ is the sent message. The latter indicates that the decoder hypothesizes that message $m$ has not been sent and thus can be removed from the list of possible messages to decode. Transmission stops at the first time $n_\ell$ that hypothesis $H_0$ is accepted for some message $m$ or the first time that hypothesis $H_1$ is accepted for all $m \in [M]$. If the latter happens, decoding fails and we declare an error. Transmission continues as long as one of the SHTs has accepted neither $H_0$ nor $H_1$. If $H_0$ is declared for multiple messages at the same decoding time, then we stop and declare an error. Since $n_L$ is the last available decoding time, the SHTs are forced to decide between $H_0$ and $H_1$ at time $n_L$. Once $H_0$ or $H_1$ is decided for some message, the decision cannot be reversed at a later time.

The optimal SHT has the form of a two-sided information density threshold rule, where the thresholds depend on the individual decision times [36, Th. 3.2.3]. To simplify the analysis, we employ sub-optimal SHTs. In these tests, the upper threshold is set to a value $\gamma$ that is independent of the decoding times, and the lower thresholds are set to $-\infty$ for $\ell < L$ and to $\gamma$ for $\ell = L$. That is, we declare $H_1$ for a message if and only if the corresponding information density never reaches $\gamma$ at any of decoding times $n_1, \dots, n_L$. Theorem 1 analyzes the error probability and the average decoding time of the sub-optimal SHT-based decoder above. It extends the achievability bound in [8, Th. 3], which considers $L = \infty$, to the scenario where only a finite number of decoding times is allowed. The bound on the average decoding time (19) is obtained by expressing the bound on the average decoding time in [8, eq. (27)] using the fact that the stopping time is in $\{n_1, \dots, n_L\}$. When we compare Theorem 1 with [8, Th. 3], we see that the error probability bound in (18) has an extra term $\mathbb{P}[\imath(X^{n_L}; Y^{n_L}) < \gamma]$. This term appears since transmission always stops at or before time $n_L$.
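The stopping and decoding logic of the simplified SHTs can be sketched in Python. The sketch uses an upper threshold $\gamma$ at every decoding time and a lower threshold of $-\infty$ before $n_L$ that rises to $\gamma$ at $n_L$. It assumes that the accumulated information densities of all $M$ candidate codewords at each decoding time have already been computed; that step is outside the sketch. The function name and the list-of-lists input layout are hypothetical.

```python
from typing import List, Optional, Tuple


def threshold_decoder(densities: List[List[float]], gamma: float) -> Tuple[int, Optional[int]]:
    """Simplified SHT-based stopping rule over decoding times n_1, ..., n_L.

    densities[l][m] is the accumulated information density of message m at
    the (l+1)-th decoding time. Returns (index of the stopping decoding time,
    decoded message), where None stands for a declared error.
    """
    L = len(densities)
    for l, row in enumerate(densities):
        # Upper threshold gamma: messages whose density reached it accept H_0.
        hits = [m for m, d in enumerate(row) if d >= gamma]
        if len(hits) == 1:
            return l, hits[0]   # stop (feedback bit "1") and decode the unique candidate
        if len(hits) > 1:
            return l, None      # H_0 accepted for several messages at once: error
        # The lower threshold is -inf before n_L, so no message accepts H_1 early.
    # At n_L the lower threshold equals gamma: every message accepts H_1, which is an error.
    return L - 1, None


# Hypothetical densities for M = 3 messages at L = 3 decoding times.
example = [[0.4, -1.2, 0.9], [1.1, -2.0, 3.5], [2.0, -2.5, 6.0]]
print(threshold_decoder(example, gamma=3.0))
```

Because no message can be discarded before $n_L$, the only early exits occur when some density crosses $\gamma$. This is why the analysis only needs the crossing probabilities of the true and the incorrect codewords.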

Theorem 1 is related to [24, Lemma 1], which similarly treats $L < \infty$ but requires $n_\ell = \ell d$ for some constant $d$, and to [25, Cor. 2], where the transmitter retransmits the message if decoding attempts at times $n_1, \dots, n_L$ are unsuccessful.

See Appendix A for the proof details.

∎
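As a numerical illustration of Theorem 1, the Python sketch below evaluates the right-hand sides of (18) and (19) exactly for a BSC with i.i.d. uniform inputs. Such inputs form a product distribution, so (20) holds, and they achieve capacity on the BSC. The sketch assumes that the bounds read $\epsilon \leq \mathbb{P}[\imath(X^{n_L}; Y^{n_L}) < \gamma] + (M-1)e^{-\gamma}$ and $N \leq n_1 + \sum_{\ell=1}^{L-1}(n_{\ell+1} - n_\ell)\,\mathbb{P}[\imath(X^{n_\ell}; Y^{n_\ell}) < \gamma]$. The function names, the decoding times, $M$, and $\gamma$ in the usage line are arbitrary illustrative values.

```python
import math


def bsc_tail(n, p, gamma):
    """Exact P[i(X^n;Y^n) < gamma] for a BSC(p), 0 < p < 1/2, with i.i.d. uniform inputs.

    With uniform inputs, i(X^n;Y^n) = (n - k) log(2(1 - p)) + k log(2p),
    where k ~ Binomial(n, p) counts the bit flips.
    """
    total = 0.0
    for k in range(n + 1):
        dens = (n - k) * math.log(2 * (1 - p)) + k * math.log(2 * p)
        if dens < gamma:
            # Binomial pmf in the log domain to avoid overflow for large n.
            log_pmf = (math.lgamma(n + 1) - math.lgamma(k + 1) - math.lgamma(n - k + 1)
                       + k * math.log(p) + (n - k) * math.log(1 - p))
            total += math.exp(log_pmf)
    return total


def theorem1_bounds(times, M, gamma, p):
    """Assumed right-hand sides of (18) and (19) for a BSC(p).

    times: increasing decoding times n_1 < ... < n_L.
    """
    eps = bsc_tail(times[-1], p, gamma) + (M - 1) * math.exp(-gamma)
    N = times[0] + sum((times[l + 1] - times[l]) * bsc_tail(times[l], p, gamma)
                       for l in range(len(times) - 1))
    return eps, N


# Illustrative parameters: 2^20 messages and gamma = log M + log(1e3).
M = 2 ** 20
gamma = math.log(M) + math.log(1e3)
print(theorem1_bounds([100, 200, 300], M, gamma, 0.11))
```

Choosing $\gamma = \log M + \log(1/\delta)$ keeps the second term of (18) below $\delta$. The decoding times then trade the first term of (18) against the average decoding time in (19). Exact binomial sums replace the moderate-deviations estimates used in the asymptotic analysis.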

Theorem 2, stated next, is our second-order achievability bound for VLSF codes with $L$ decoding times over the DM-PPC. The proof of Theorem 2 builds upon the non-asymptotic bound in Theorem 1.

Theorem 2

Proof:

Inspired by [8, Th. 2], the proof employs a time-sharing strategy between an -VLSF code whose smallest decoding time is nonzero and a simple “stop-at-time-zero” procedure that does not involve any code and decodes an error at time 0. Specifically, we set the VLSF code as the one that achieves the bound in Theorem 1, and we use the VLSF code and the stop-at-time-zero procedure with probabilities and , respectively, where and satisfy

| (23) | ||||

| (24) |

The error probability of the resulting code is bounded by , and the average decoding time is

| (25) |

For the scenario where , we again use time-sharing with the stop-at-time-zero procedure in the achievability bound in [8, Th. 2] with instead of (23). In the asymptotic regime , the choice in (23) results in a better second-order term than that achieved by .

In the analysis of Theorem 1, we need to bound the probability $\mathbb{P}[\imath(X^{n_L}; Y^{n_L}) < \gamma]$. Since this probability decays sub-exponentially to zero due to (23), we use a moderate deviations result from [42, Ch. 8] to bound it. Such a tool was not needed in the proof of [8, Th. 2] for $L = \infty$. When $L = \infty$, the term $\mathbb{P}[\imath(X^{n_L}; Y^{n_L}) < \gamma]$ disappears from (18), and the average decoding time is bounded via martingale analysis instead of (19). Finally, we apply Karush–Kuhn–Tucker conditions to show that the decoding times in (22) yield a value of $\log M$ that is the maximal value achievable by the non-asymptotic bound up to terms of order $O\left(\sqrt{N / \log_{(L-1)}(N)}\right)$. The details of the proof appear in Appendix B. ∎
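The time-sharing step in the proof sketch reduces to simple arithmetic. With probability $p$ the receiver stops at time zero and declares an error. Otherwise it runs a VLSF code with error probability $\epsilon'$ and average decoding time $N'$. The overall error is $p + (1-p)\epsilon'$ and the average decoding time is $(1-p)N'$. The Python sketch below solves for $p$; the names $p$, $\epsilon'$, $N'$ and the function name are hypothetical labels, since the exact notation of (23)–(25) is not reproduced here.

```python
def time_share(eps_target, eps_code, N_code):
    """Mix a VLSF code with the stop-at-time-zero procedure.

    With probability p the receiver stops at time 0 and declares an error;
    otherwise it runs a VLSF code with error eps_code and average decoding
    time N_code. p is chosen so that p + (1 - p) * eps_code == eps_target.
    Returns (p, resulting average decoding time (1 - p) * N_code).
    """
    if not 0.0 <= eps_code <= eps_target < 1.0:
        raise ValueError("need 0 <= eps_code <= eps_target < 1")
    p = (eps_target - eps_code) / (1.0 - eps_code)
    return p, (1.0 - p) * N_code


p, N = time_share(eps_target=0.1, eps_code=0.01, N_code=1000.0)
print(p, N)
```

Since $1 - p = \frac{1-\epsilon}{1-\epsilon'}$, a code with $\log M \approx N'C$ yields $\log M \approx \frac{NC}{1-\epsilon}(1 - \epsilon')$. This tends to the first-order term $\frac{NC}{1-\epsilon}$ as $\epsilon' \to 0$.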

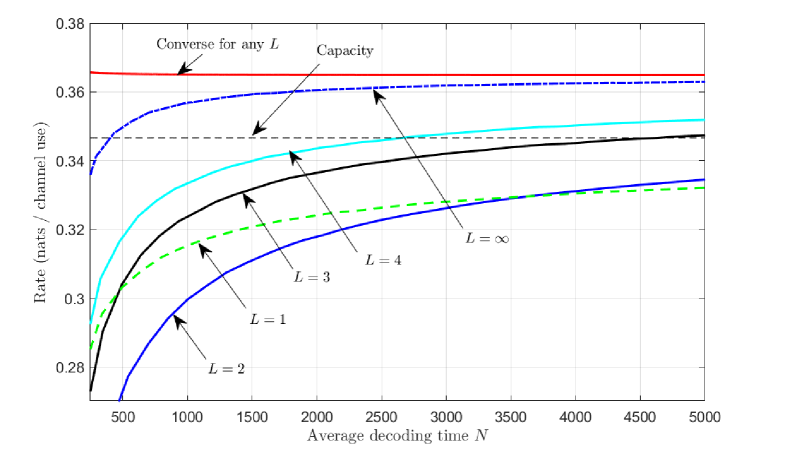

The non-asymptotic achievability bounds obtained from the coding scheme described in the proof sketch of Theorem 2 are illustrated for the BSC in Fig. 1. For $1 < L < \infty$, the decoding times are chosen as described in (22) with the lower-order term ignored, and the error probability of the VLSF code used together with the stop-at-time-zero procedure is replaced with the right-hand side of (18). For $L = 1$, Fig. 1 shows the random coding union bound in [13, Th. 16], which is a non-asymptotic achievability bound for fixed-length no-feedback codes. For $L = \infty$, Fig. 1 shows the non-asymptotic bound in [8, eq. (102)]. The curves for and cross because the choice of decoding times in (22) requires and is optimal only as $N \to \infty$. In [30], Yang et al. construct a computationally intensive integer program for the numerical optimization of the decoding times for finite $N$. If such a precise optimization is desired, our approximate decoding times in (22) can be used as starting points for that integer program.

Replacing the information-density-based decoding rule in the proof sketch with the optimal SHT would improve the performance achieved on the right-hand side of (21) by only .

Since any $(N, L, M, \epsilon)$-VLSF code is also an $(N, \infty, M, \epsilon)$-VLSF code, (16) provides an upper bound on $\log M^*_L(N, \epsilon)$ for an arbitrary $L$. The order of the second-order term, $\sqrt{N \log_{(L-1)}(N)}$, depends on the number of decoding times $L$. The larger $L$ is, the faster the achievable rate converges to the capacity. However, the dependence on $L$ is weak since $\log_{(L-1)}(N)$ grows very slowly in $N$ even if $L$ is small. For example, for and , . For a finite $L$, this bound falls short of the achievability bound in (15) achievable with $L = \infty$. Whether the second-order term achieved in Theorem 2 is tight remains an open problem.

The following theorem gives achievability and converse bounds for VLSF codes with decoding times uniformly spaced as .

Theorem 3

Fix . Let with , and let be any DM-PPC. Then, it holds that

| (26) |

If the DM-PPC is a Cover–Thomas symmetric DM-PPC [43, p. 190], i.e., the rows (and, respectively, the columns) of the transition probability matrix are permutations of each other, then

| (27) |

Proof:

The achievability bound (26) employs the sub-optimal SHT in the proof sketch of Theorem 2. To prove the converse in (27), we first derive the meta-converse bound for VLSF codes in Theorem 9, in Appendix C below. The meta-converse bound in Theorem 9 bounds the error probability of any given VLSF code from below by the minimum achievable type-II error probability of the corresponding SHT. It is an extension and a tightening of Polyanskiy et al.’s converse in (16): for $L = \infty$, weakening it by applying a loose bound on the performance of SHTs from [36, Th. 3.2.2] recovers (16). The Cover–Thomas symmetry assumption allows us to circumvent the maximization of that minimum type-II error probability over codes, since the distribution of the log-likelihood ratio is the same regardless of the channel input for that channel class. In both bounds in (26)–(27), we use the expansions for the average stopping time and the type-II error probability from [36, Ch. 2–3]. See Appendix C for details. ∎

Theorem 3 establishes that when , the second-order term of the logarithm of maximum achievable message set size among VLSF codes with uniformly spaced decoding times is . Theorem 3 implies that in order to achieve the same performance as achieved in (21) with decoding times, one needs on average uniformly spaced stop-feedback instances, suggesting that the optimization of available decoding times considered in Theorem 2 is crucial for attaining the second-order term in (21).

The case where is not as interesting as the case where , since analyzing Theorem 9 using the Chernoff bound would yield a bound on the probability that the optimal SHT makes a decision at times other than and . Since that probability decays exponentially with , the scenario where is unbounded and is asymptotically equivalent to . For example, for for some , the right-hand side of (21) is tight up to the second-order term.

IV VLSF Codes for the DM-MAC

We begin by introducing the definitions used for the multi-transmitter setting.

IV-A Definitions

A -transmitter DM-MAC is defined by a triple , where is the finite input alphabet for transmitter , is the finite output alphabet of the channel, and is the channel transition probability.

In what follows, the subscript and superscript indicate the corresponding transmitter indices and the codeword lengths, respectively. Let denote the marginal output distribution induced by the input distribution . The unconditional and conditional information densities are defined for each non-empty as

| (28) | ||||

| (29) |

where . Note that in (28)–(29), the information density functions depend on the transmitter set unless further symmetry conditions are assumed (e.g., in some cases we assume that the components of are i.i.d., and is invariant to permutations of the inputs ).

The corresponding mutual informations under the input distribution and the channel transition probability are defined as

| (30) | ||||

| (31) |

The dispersions are defined as

| (32) | ||||

| (33) |
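Because the formulas (28)–(33) are not restated in this section, the sketch below computes, for a hypothetical two-transmitter noiseless binary adder MAC (Y = X_1 + X_2) with i.i.d. uniform inputs, the mean and variance of the information density log P(Y | X) / P(Y | X_{A^c}) for every non-empty transmitter set A. We assume the mutual informations in (30)–(31) are these means and take the unconditional variance as the dispersion; whether that matches (32)–(33) exactly is an assumption. All names are ours.

```python
import itertools
import math
from typing import Callable, Dict, Sequence, Tuple

Channel = Callable[[Tuple[int, ...]], Dict[int, float]]


def adder_mac(x: Tuple[int, ...]) -> Dict[int, float]:
    """Noiseless adder MAC: the output is the sum of the binary inputs."""
    return {sum(x): 1.0}


def info_density_stats(W: Channel, p_x: Dict[int, float], K: int, A: Sequence[int]):
    """Mean and variance (in bits) of log2 W(y|x) / P(y | x_{A^c})
    for independent inputs, each distributed as p_x."""
    alphabet = list(p_x)
    mean = second = 0.0
    for x in itertools.product(alphabet, repeat=K):
        px = math.prod(p_x[s] for s in x)
        for y, w in W(x).items():
            if px == 0.0 or w == 0.0:
                continue
            # P(y | x_{A^c}): average W over the inputs of the transmitters in A
            p_cond = 0.0
            for xa in itertools.product(alphabet, repeat=len(A)):
                xx = list(x)
                for k, s in zip(A, xa):
                    xx[k] = s
                p_cond += math.prod(p_x[s] for s in xa) * W(tuple(xx)).get(y, 0.0)
            i = math.log2(w / p_cond)
            mean += px * w * i
            second += px * w * i * i
    return mean, second - mean**2


uniform = {0: 0.5, 1: 0.5}
for size in (1, 2):
    for A in itertools.combinations(range(2), size):
        I, V = info_density_stats(adder_mac, uniform, 2, A)
        print(A, f"I = {I:.3f} bits, V = {V:.3f} bits^2")
```

For this channel the single-transmitter information densities are constant (zero variance), while the sum-rate density varies with the output, so the sum-rate set is the only one with positive dispersion.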

For brevity, we define

| (34) | ||||

| (35) |

A VLSF code for the MAC with transmitters is defined similarly to the VLSF code for the PPC.

Definition 2

Fix , , and positive integers . An -VLSF code for the MAC comprises

1. non-negative integer-valued decoding times ,
2. finite alphabets , , defining common randomness random variables ,
3. sequences of encoding functions , ,
4. a stopping time for the filtration generated by , satisfying an average decoding time constraint (11), and
5. decoding functions for , satisfying an average error probability constraint

   | (36) |

   where the independent messages are uniformly distributed on the sets , respectively.

IV-B Our Achievability Bounds

Our main results are second-order achievability bounds for the rates approaching a point on the sum-rate boundary of the MAC achievable region expanded by a factor of .

Theorem 4, below, is a non-asymptotic achievability bound for any DM-MAC with transmitters and decoding times.

Theorem 4

Fix constants , , for , integers , and distributions , . For any DM-MAC with transmitters , there exists an -VLSF code with

| (37) | |||

| (38) | |||

| (39) | |||

| (40) | |||

| (41) |

Proof:

The proof of Theorem 4 uses a random coding argument that employs independent codebook ensembles, each with distribution , . The receiver employs decoders that operate by comparing an information density for each possible transmitted codeword set to a threshold. At time , decoder computes the information densities ; if there exists a unique message vector satisfying , then the receiver decodes to the message vector ; if there exist multiple such message vectors, then the receiver stops the transmission and declares an error. If no such message vector exists at time , then the receiver emits output and passes the decoding time without decoding if , and declares an error if . The term (37) bounds the probability that the information density corresponding to the true messages is below the threshold for all decoding times; (38) bounds the probability that all messages are decoded incorrectly; and (39)–(40) bound the probability that the messages from the transmitter index set are decoded incorrectly while the messages from the index set are decoded correctly. The proof of Theorem 4 appears in Appendix D. ∎
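The stopping and decision logic described in the proof of Theorem 4 can be sketched as follows. The information densities are taken as given inputs (how they are computed from the codebooks and the channel outputs is abstracted away), and the function name, argument layout, and `ERROR` marker are hypothetical.

```python
from typing import Dict, Hashable, Sequence, Tuple, Union

ERROR = "error"  # marker for a declared decoding error


def single_threshold_decode(
    times: Sequence[int],
    densities: Sequence[Dict[Hashable, float]],
    gamma: float,
) -> Tuple[int, Union[Hashable, str]]:
    """densities[i] maps each candidate message vector to its information density at times[i].

    At each decoding time: decode if exactly one message vector reaches the threshold,
    stop with an error if several do, and otherwise wait (erasure) until the last time,
    where finding no candidate is also an error.
    """
    for n, dens in zip(times, densities):
        passing = [m for m, value in dens.items() if value >= gamma]
        if len(passing) == 1:
            return n, passing[0]
        if len(passing) > 1:
            return n, ERROR
        # no candidate passed: emit an erasure and continue to the next decoding time
    return times[-1], ERROR


times = [10, 20, 40]
densities = [
    {(1, 1): 1.0, (1, 2): 0.5},
    {(1, 1): 5.2, (1, 2): 2.0},
    {(1, 1): 9.0, (1, 2): 3.0},
]
print(single_threshold_decode(times, densities, gamma=5.0))  # (20, (1, 1))
```

Lowering the threshold to 1.5 makes both candidates pass at time 20, and the decoder then stops with an error, as in the rule above.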

Theorem 5, below, is a second-order achievability bound in the asymptotic regime for any DM-MAC. It follows from an application of Theorem 4.

Theorem 5

Fix , an integer , and distributions , . For any -transmitter DM-MAC , there exists a -tuple and an -VLSF code satisfying

| (42) |

Proof:

See Appendix D. ∎

In the application of Theorem 4 to prove Theorem 5, we choose the parameters and so that the terms in (39)–(40) decay exponentially with , making them negligible compared to (37) and (38). Between (37) and (38), the term (37) is dominant when does not grow with , and (38) is dominant when grows linearly with .

Like the single-threshold rule from [28] for the RAC, the single-threshold rule employed in the proof of Theorem 4 differs from the decoding rules employed in [20] for VLSF codes over the Gaussian MAC with expected power constraints and in [23] for the DM-MAC. In both [20] and [23], , and the decoder employs simultaneous threshold rules for each of the boundaries that define the achievable region of the MAC with transmitters. Those rules fix thresholds , , and decode messages if for all , the codeword for satisfies

| (43) |

for some , . Our decoder can be viewed as a special case of (43) obtained by setting for .

Analyzing Theorem 4 in the asymptotic regime , we determine that there exists a -tuple and an -VLSF code satisfying

| (44) |

Both (42) and (44) are achieved at rate points that approach a point on the sum-rate boundary of the -MAC achievable region expanded by a factor of .

For any VLSF code, the case can be treated as regardless of the number of transmitters: if we truncate an infinite-length code at time , then, by the Chernoff bound, the resulting penalty added to the error probability decays exponentially with , and its effect in (44) is . See Appendix D.III for the proof of (44).

For , Trillingsgaard and Popovski [23] numerically evaluate their non-asymptotic achievability bound for a DM-MAC while Truong and Tan [20] provide an achievability bound with second-order term for the Gaussian MAC with average power constraints. Applying our single-threshold rule and analysis to the Gaussian MAC with average power constraints improves the second-order term in [20] from to for all non-corner points in the achievable region. The main challenge in [20] is to derive a tight bound on the expected value of the maximum over of stopping times for the corresponding threshold rules in (43). In our analysis, we avoid that challenge by employing a single-threshold decoder whose average decoding time is bounded by .

Under the same model and assumptions on , to achieve non-corner rate points that do not lie on the sum-rate boundary, we modify our single-threshold rule to (43), where is the transmitter index set corresponding to the capacity region’s active sum-rate bound at the (non-corner) point of interest. Following steps similar to those in the proof of (44) yields the second-order term for those points as well. For corner points, more than one boundary is active (the capacity region of a -transmitter MAC is the region bounded by planes, and, by definition of a corner point, at least two of the inequalities corresponding to these planes are active there); therefore, more than one threshold rule in (43) is needed at the decoder. In this case, again for , [20] proves an achievability bound with a second-order term . Whether this bound can be improved to as in (44) remains an open problem.

V VLSF Codes for the DM-RAC with at Most Transmitters

Definition 3 (Yavas et al. [28, eq. (1)])

A permutation-invariant, reducible DM-RAC for the maximal number of transmitters is defined by a family of DM-MACs , where the -th DM-MAC defines the channel for active transmitters.

By assumption, each of the DM-MACs satisfies the permutation-invariance condition

| (45) |

for all permutations of , and , and the reducibility condition

| (46) |

for all , , and , where specifies a unique “silence” symbol that is transmitted when a transmitter is silent.

The permutation-invariance (45) and reducibility (46) conditions simplify the presentation and enable us to show, using a single-threshold rule at the decoder [28], that the symmetrical rate point at which the code operates lies on the sum-rate boundary of each of the underlying DM-MACs.

The VLSF RAC code defined here combines our rateless communication strategy from [28] with the sparse feedback VLSF PPC and MAC codes with optimized average decoding times described above. Specifically, the decoder estimates the value of at time . If the estimate is not zero, it decodes at one of the decoding times (rather than just the single time used in [28, 39]). For every , the locations of the decoding times are optimized to attain the minimum average decoding delay. As in [28], we do not assume any probability distribution on the user activity pattern. We seek instead to optimize the rate-reliability trade-off simultaneously for all possible activity patterns. (By the permutation-invariance assumption, there are only distinguishable activity patterns to consider here, indexed by the number of active transmitters.) If the decoder concludes that no transmitters are active, then it ends the transmission at time , decoding no messages. At each time , , the receiver broadcasts “0” to the transmitters, signaling that they should continue to transmit. At time , the receiver broadcasts feedback bit “1” to the transmitters if it is able to decode messages; otherwise, it outputs an erasure symbol “” and sends feedback bit “0”, again signaling that decoding has not occurred and transmission should continue.

As in [28, 39], we assume that the transmitters know nothing about the set except their own membership and the receiver’s feedback at potential decoding times. We employ identical encoding [44], that is, all transmitters use the same codebook. This implies that the RAC code operates at the symmetrical rate point, i.e., for . As in [44, 28], the decoder is required to decode the list of messages transmitted by the active transmitters but not the identities of these transmitters.

To deal with the scenario where the number of transmitters in the RAC grows linearly with the blocklength, i.e., , [44] employs the per-user error probability (PUPE) constraint rather than the joint error probability used here and in the analysis of the MAC (e.g., [20, 28, 39]). The PUPE is a weaker error probability constraint since, under PUPE, an error in decoding one transmitter’s message is not counted as an error for every other transmitter. In [28], it is shown that when , the PUPE and joint error probability constraints have the same second-order performance for random access coding. As a result, there is no advantage to using PUPE rather than the more stringent joint error criterion when . Therefore, we employ the joint error probability constraint throughout.
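The difference between the two error criteria can be made concrete with a small computation over decoded lists. In the sketch below (a hypothetical helper, not from the paper), each trial records the transmitted messages and the decoded list. Because the RAC decoder outputs a list rather than transmitter identities, a joint error is counted when the decoded multiset differs from the transmitted one. The per-user error counts the fraction of transmitted messages that are missing from the decoded list. In every trial the per-user error is at most the joint-error indicator, so PUPE never exceeds the joint error probability.

```python
from collections import Counter
from typing import Sequence, Tuple


def joint_and_per_user_error(
    true_lists: Sequence[Sequence[int]], decoded_lists: Sequence[Sequence[int]]
) -> Tuple[float, float]:
    """Return (joint error rate, per-user error rate) over a batch of trials."""
    trials = len(true_lists)
    joint = 0
    per_user = 0.0
    for sent, decoded in zip(true_lists, decoded_lists):
        # joint criterion: any discrepancy between the sent and decoded multisets
        joint += Counter(sent) != Counter(decoded)
        # per-user criterion: fraction of sent messages absent from the decoded list
        per_user += sum(m not in decoded for m in sent) / len(sent)
    return joint / trials, per_user / trials


sent = [(3, 5), (3, 5), (2, 7), (1, 4)]
decoded = [(5, 3), (3, 6), (0, 0), (1, 4)]
print(joint_and_per_user_error(sent, decoded))  # (0.5, 0.375)
```

The first trial shows that the order of the decoded list is irrelevant, and the second shows a trial that counts fully under the joint criterion but only half under PUPE.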

We formally define VLSF codes for the RAC as follows.

Definition 4

Fix and . An -VLSF code with identical encoders comprises

1. a set of integers (without loss of generality, we assume that is the largest available decoding time),
2. a common randomness random variable on an alphabet ,
3. a sequence of encoding functions , , defining length- codewords,
4. non-negative integer-valued random stopping times for the filtration generated by , satisfying

   | (47) |

   if transmitters are active, and
5. decoding functions and , and , satisfying an average error probability constraint

   | (48) |

   when messages are transmitted, where are independent and equiprobable on the set , and

   | (49) |

   when no transmitters are active.

To guarantee that the symmetrical rate point arising from identical encoding lies on the sum-rate boundary for all , following [28], we assume that there exists an input distribution that satisfies the interference assumptions

| (50) |

Permutation-invariance (45), reducibility (46), and interference (50) together imply that the mutual information per transmitter, , strictly decreases with increasing (see [28, Lemma 1]). This property guarantees the existence of decoding times satisfying for any and .

In order to be able to detect the number of active transmitters using the received symbols but not the codewords themselves, we require that the input distribution satisfies the distinguishability assumption

| (51) |

where is the marginal output distribution under the -transmitter DM-MAC with input distribution .

An example of a permutation-invariant and reducible DM-RAC that satisfies interference (50) and distinguishability (51) assumptions is the adder-erasure RAC in [45, 28]

| (52) |

where , , and .
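The channel (52) is not restated in this section, so as a simpler hypothetical stand-in we use the noiseless binary adder RAC: Y = X_1 + ... + X_k with i.i.d. Bernoulli(1/2) inputs and silence symbol 0, which makes the family reducible. Since this channel is deterministic, the k-transmitter sum-rate mutual information equals H(Y), the entropy of a Binomial(k, 1/2) law. The sketch checks that the per-transmitter rate strictly decreases in k, in the spirit of (50), and that the output marginals for different k are pairwise distinct (positive total variation distance), in the spirit of (51). `K_MAX` and all function names are hypothetical.

```python
from itertools import combinations
from math import comb, log2
from typing import List


def output_marginal(k: int, p: float = 0.5) -> List[float]:
    """Law of Y = X_1 + ... + X_k for i.i.d. Bernoulli(p) inputs (k = 0 gives Y = 0)."""
    return [comb(k, y) * p**y * (1 - p) ** (k - y) for y in range(k + 1)]


def entropy_bits(pmf: List[float]) -> float:
    return -sum(q * log2(q) for q in pmf if q > 0)


def tv_distance(P: List[float], Q: List[float]) -> float:
    """Total variation distance between pmfs on {0, 1, ...} (shorter one zero-padded)."""
    n = max(len(P), len(Q))
    P = P + [0.0] * (n - len(P))
    Q = Q + [0.0] * (n - len(Q))
    return 0.5 * sum(abs(a - b) for a, b in zip(P, Q))


K_MAX = 5  # hypothetical maximal number of transmitters
marginals = [output_marginal(k) for k in range(K_MAX + 1)]
# deterministic channel: I(X_1, ..., X_k; Y) = H(Y)
per_user_rate = [entropy_bits(marginals[k]) / k for k in range(1, K_MAX + 1)]
min_tv = min(tv_distance(marginals[j], marginals[k]) for j, k in combinations(range(K_MAX + 1), 2))
print([round(r, 3) for r in per_user_rate], round(min_tv, 3))
```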

Theorem 6

Proof:

The coding strategy to prove Theorem 6 is as follows. The decoder applies a -ary hypothesis test to the output sequence to obtain an estimate of the number of active transmitters . If the hypothesis test declares that , then the receiver stops the transmission at time , decoding no messages. If , then the receiver decodes messages at one of the times using the VLSF code in Theorem 5 for the -transmitter DM-MAC with decoding times. If the receiver decodes at time , then it sends feedback bit ‘0’ at all previous decoding times and feedback bit ‘1’ at time . Alternatively, the receiver can send its estimate using bits at time , informing the transmitters that it will decode at some time ; in this case, the number of feedback bits decreases from the worst case that results from the strategy described above. The details of the proof appear in Appendix E. ∎
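The first two steps of this strategy, estimating the number of active transmitters and scheduling the stop-feedback bits, can be sketched as follows. For illustration we assume a maximum-likelihood test that treats the first outputs as i.i.d. with the k-transmitter output marginal. The marginals below are hypothetical ones for a noiseless binary adder channel (Binomial(k, 1/2)), not those of the channel (52), and the helper names are ours.

```python
import math
from typing import List, Sequence


def estimate_num_active(y: Sequence[int], marginals: Sequence[Sequence[float]]) -> int:
    """Maximum-likelihood estimate of k, treating y as i.i.d. with pmf marginals[k]."""
    best_k, best_ll = 0, -math.inf
    for k, pmf in enumerate(marginals):
        ll = 0.0
        for s in y:
            q = pmf[s] if s < len(pmf) else 0.0
            if q == 0.0:  # symbol impossible under hypothesis k
                ll = -math.inf
                break
            ll += math.log(q)
        if ll > best_ll:
            best_k, best_ll = k, ll
    return best_k


def stop_feedback(decode_index: int) -> List[int]:
    """Feedback bits at the decoding times for the estimated k:
    0 (keep transmitting) before the decoding time with index decode_index, 1 at it."""
    return [0] * decode_index + [1]


# hypothetical noiseless-adder marginals P_{Y_k} = Binomial(k, 1/2), k = 0, ..., 3
marginals = [[1.0], [0.5, 0.5], [0.25, 0.5, 0.25], [0.125, 0.375, 0.375, 0.125]]
y = [0, 1, 2, 1, 1, 0, 2, 1]
print(estimate_num_active(y, marginals), stop_feedback(2))  # 2 [0, 0, 1]
```

Hypotheses under which an observed symbol is impossible are ruled out immediately; here the output 2 excludes k = 0 and k = 1, and the likelihood then favors k = 2 over k = 3.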

VI VLSF Codes for the Gaussian PPC with Maximal Power Constraints

VI-A Gaussian PPC

The output of a memoryless Gaussian PPC of blocklength in response to the input is

| (55) |

where are drawn i.i.d. from , independent of .

The channel’s capacity and dispersion are

| (56) | ||||

| (57) |
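For reference, the sketch below evaluates the standard expressions for the capacity and dispersion of this channel under the assumption of unit noise variance and power P (as in [13]): C(P) = ½ log(1 + P) and V(P) = P(P + 2) / (2(P + 1)²), both in nats. The unit-variance normalization is our assumption. As a usage example, it also evaluates the fixed-length normal approximation nC − √(nV) Q⁻¹(ε) + ½ log n with the O(1) term dropped; we take this to be the form of the expansion for the maximal power constraint without feedback discussed in item a) below.

```python
import math
from statistics import NormalDist


def gaussian_capacity(P: float) -> float:
    """Capacity in nats per channel use, assuming unit noise variance."""
    return 0.5 * math.log1p(P)


def gaussian_dispersion(P: float) -> float:
    """Dispersion in nats^2 per channel use, assuming unit noise variance."""
    return P * (P + 2) / (2 * (P + 1) ** 2)


def normal_approximation(n: int, P: float, eps: float) -> float:
    """n C - sqrt(n V) Q^{-1}(eps) + (1/2) log n, in nats (O(1) term dropped)."""
    q_inv = NormalDist().inv_cdf(1 - eps)  # Q^{-1}(eps)
    return (n * gaussian_capacity(P)
            - math.sqrt(n * gaussian_dispersion(P)) * q_inv
            + 0.5 * math.log(n))


print(gaussian_capacity(1.0), gaussian_dispersion(1.0))  # capacity and dispersion at P = 1
print(normal_approximation(500, 1.0, 1e-3) / 500)        # approximate rate, nats per channel use
```

As P grows, the dispersion approaches 1/2 nat², while the capacity keeps growing logarithmically.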

VI-B Related Work on the Gaussian PPC

We first introduce the maximal and average power constraints on VLSF codes for the PPC. Given a VLSF code with decoding times , the maximal power constraint requires that the length- prefixes, , of each codeword all satisfy a power constraint , i.e.,

| (58) |

The average power constraint on the length- codewords, as defined by [20, Def. 1], is

| (59) |

The definitions of and -VLSF codes for the Gaussian PPC are similar to Definition 1 with the addition of maximal (58) and average (59) power constraints, respectively. Similar to (13), (resp. ) denotes the maximum achievable message set size with decoding times, average decoding time , average error probability , and maximal (resp. average) power constraint .
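A minimal check of the maximal power constraint follows. It assumes that (58) requires ‖x^{n_i}‖² ≤ n_i P for every available decoding time n_i, i.e., the prefix-energy form described above; the helper name is hypothetical. The example shows why the constraint concerns prefixes: a codeword can meet the power budget at its full length while violating it at an earlier decoding time.

```python
from typing import Sequence


def satisfies_maximal_power(
    codeword: Sequence[float], decoding_times: Sequence[int], P: float, tol: float = 1e-9
) -> bool:
    """True if every prefix ending at a decoding time n has energy at most n * P."""
    energy, t = 0.0, 0
    for n in sorted(decoding_times):
        while t < n:  # accumulate the energy of the symbols up to time n
            energy += codeword[t] ** 2
            t += 1
        if energy > n * P + tol:
            return False
    return True


times = [1, 2, 4]
print(satisfies_maximal_power([2.0, 0.0, 0.0, 0.0], times, P=1.0))   # False: the length-1 prefix has energy 4
print(satisfies_maximal_power([1.0, -1.0, 1.0, -1.0], times, P=1.0))  # True
```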

In the following, we discuss prior asymptotic expansions of and for the Gaussian PPC, where .

a) : For , , and , Tan and Tomamichel [35, Th. 1] and Polyanskiy et al. [13, Th. 54] show that

   | (60) |

   The converse for (60) is derived in [13, Th. 54] and the achievability in [35, Th. 1]. The achievability scheme in [35, Th. 1] generates i.i.d. codewords uniformly distributed on the -dimensional sphere with radius , and applies maximum likelihood (ML) decoding. These results imply that random codewords uniformly distributed on a sphere, together with ML decoding, are third-order optimal, meaning that the gap between the achievability and converse bounds in (60) is .

b) : For with an average power constraint, Yang et al. show in [46] that

   | (61) |

   Yang et al. use a power control argument to show the achievability of (61). They divide the messages into disjoint sets and , where . For the messages in , they use an -VLSF code with a single decoding time . The codewords are generated i.i.d. uniformly on the sphere with center at 0 and radius . The messages in are assigned the all-zero codeword. The converse for (61) follows from an application of the meta-converse [13, Th. 26].

c) : For VLSF codes with and average power constraint (59), Truong and Tan show in [19, Th. 1] that for and ,

   | (62) |

   | (63) |

   where is the binary entropy function. The results in (62)–(63) are analogous to the fundamental limits for DM-PPCs (15)–(16) and follow from arguments similar to those in [8]. Since the information density for the Gaussian channel is unbounded, bounding the expected value of the decoding time in the proof of [19, Th. 1] requires different techniques from those applicable to DM-PPCs [8].

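| Code type | Feedback / decoding times | Power constraint | First-order term | Second-order term: lower bound | Second-order term: upper bound |
|---|---|---|---|---|---|
| Fixed-length | No feedback | Max. power | | ([35, 13]) | ([13]) |
| Fixed-length | No feedback | Ave. power | | ([46]) | ([46]) |
| Fixed-length | Feedback | Max. power | | ([35, 13]) | ([14]) |
| Fixed-length | Feedback | Ave. power | | ([47]) | ([47]) |
| Variable-length | Finitely many decoding times | Max. power | | (Theorem 7) | ([19]) |
| Variable-length | Finitely many decoding times | Ave. power | | (Theorem 7) | ([19]) |
| Variable-length | Unlimited decoding times | Max. power | | ([1]) | ([19]) |
| Variable-length | Unlimited decoding times | Ave. power | | ([19]) | ([19]) |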

Table II combines the summary from [47, Table I] with the corresponding results for to summarize the performance of VLSF codes for the Gaussian channel in different communication scenarios.

VI-C Main Result

The theorem below is our main result for the Gaussian PPC under the maximal power constraint (58).

Theorem 7

Proof:

See Appendix F. ∎

Note that the achievability bound in Theorem 7 has the same form as the one in Theorem 2 with and replaced with the Gaussian capacity and the Gaussian dispersion , respectively. The bound in (64) holds for the average power constraint as well since any code that satisfies the maximal power constraint also satisfies the average power constraint.

From Shannon’s work in [48], it is known that for the Gaussian channel with a maximal power constraint, drawing i.i.d. Gaussian codewords yields a performance inferior to that achieved by the uniform distribution on the power sphere. As a result, almost all tight achievability bounds for the Gaussian channel in the fixed-length regime under a variety of settings (e.g., all four combinations of the maximal/average power constraint and feedback/no feedback [35, 46, 14, 47] in Table II) employ random codewords drawn uniformly at random on the power sphere. A notable exception is Truong and Tan’s result in (62) [19, Th. 1], which considers VLSF codes with an average power constraint; that result employs i.i.d. Gaussian inputs. The Gaussian distribution works in this scenario because when , the usually dominant term in (18) disappears. The second term in (18) is not affected by the input distribution. Unfortunately, the approach from [19, Th. 1] does not work here since drawing codewords i.i.d. satisfies the average power constraint (59) but not the maximal power constraint (58). When and the probability dominates, using i.i.d. inputs achieves a worse second-order term in the asymptotic expansion (64) of the maximum achievable message set size. For the case , we draw codewords according to the rule that the sub-codewords indexed from to are drawn uniformly on the -dimensional sphere of radius for , independently of each other. Note that this input distribution is dispersion-achieving for the fixed-length no-feedback case, i.e., [13], and is superior to choosing codewords i.i.d. , even under the average power constraint. In particular, i.i.d. inputs achieve (21), where the dispersion is replaced by the variance of when ; here is greater than the dispersion for all (see [49, eq. (2.56)]). Whether or not our input distribution is optimal in the second-order term remains an open question.
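The input distribution described above can be sketched as follows. Each sub-codeword between consecutive decoding times is drawn uniformly on a sphere by normalizing a standard Gaussian vector (a standard way to sample uniformly on a sphere) and is then scaled to radius √((n_i − n_{i−1}) P). By construction, every prefix ending at a decoding time has energy exactly n_i P, so the maximal power constraint holds with equality at each decoding time. The decoding times, P, seed, and function names are hypothetical.

```python
import math
import random
from typing import List, Sequence


def sphere_vector(d: int, radius: float, rng: random.Random) -> List[float]:
    """Uniform sample on the d-dimensional sphere of the given radius."""
    v = [rng.gauss(0.0, 1.0) for _ in range(d)]
    norm = math.sqrt(sum(c * c for c in v))
    return [radius * c / norm for c in v]


def segmented_sphere_codeword(
    decoding_times: Sequence[int], P: float, rng: random.Random
) -> List[float]:
    """Concatenate independent sphere samples, one per gap between decoding times."""
    x: List[float] = []
    prev = 0
    for n in decoding_times:
        d = n - prev
        if d > 0:
            x.extend(sphere_vector(d, math.sqrt(d * P), rng))
        prev = n
    return x


def prefix_energies(x: Sequence[float], decoding_times: Sequence[int]) -> List[float]:
    return [sum(c * c for c in x[:n]) for n in decoding_times]


rng = random.Random(0)
times = [16, 48, 128]  # hypothetical decoding times
x = segmented_sphere_codeword(times, P=2.0, rng=rng)
print(prefix_energies(x, times))  # each entry equals n_i * P up to floating-point rounding
```

By contrast, an i.i.d. Gaussian codeword meets the power budget only on average, so some of its prefixes would exceed n_i P.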

VII Conclusions

This paper investigates the maximum achievable message set size for sparse VLSF codes over the DM-PPC (Theorem 2), DM-MAC (Theorem 5), DM-RAC (Theorem 6), and Gaussian PPC (Theorem 7) in the asymptotic regime where the number of decoding times is constant as the average decoding time grows without bound. Under our second-order achievability bounds, the performance improvement due to adding more decoding time opportunities to our code quickly diminishes as increases. For example, for the BSC with crossover probability 0.11, at average decoding time , our VLSF coding bound with only decoding times achieves 96% of the rate of Polyanskiy et al.’s VLSF coding bound for . Incremental redundancy automatic repeat request codes, which are some of the most common feedback codes, employ only a small number of decoding times and stop feedback. Our analysis shows that such a code design is not only practical but also has performance competitive with the best known dense feedback codes.

In all channel types considered, the first-order term in our achievability bounds is , where is the average decoding time, is the error probability, and is the capacity (or the sum-rate capacity in the multi-transmitter case), and the second-order term is . For DM-PPCs, there is a mismatch between the second-order term of our achievability bound for VLSF codes with decoding times (Theorem 2) and the second-order term of the best known converse bound (16); the latter applies to , and therefore to any . Towards closing the gap between the achievability and converse bounds, in Theorem 9 in Appendix C, below, we derive a non-asymptotic converse bound that links the error probability of a VLSF code with the minimum achievable type-II error probability of an SHT. However, since the threshold values of the optimal SHT with decoding times do not have a closed-form expression [36, pp. 153-154], analyzing the non-asymptotic converse bound in Theorem 9 is a difficult task. Whether the second-order term in Theorem 2 is optimal is a question left to future work.

Appendix A Proof of Theorem 1

In this section, we derive an achievability bound based on a general SHT, which we use to prove Theorem 1.

A.I A General SHT-based Achievability Bound

A.I1 SHT definitions

We begin by formally defining an SHT. We extend the definition in [36, Ch. 3] to allow non-i.i.d. distributions and finitely many testing times. Let be the observed sequence. Consider two hypotheses for the distribution of

| (66) | ||||

| (67) |

where and are distributions on a common alphabet . Let be the set of times that the hypothesis is tested. Let denote the marginal distribution of the first symbols in , . At time , we either decide , , or we wait until the next available time in . Let be a stopping time adapted to the filtration . Let be a -valued, -measurable function. An SHT is a triple , where is called the decision rule, is called the stopping time, and is the set of available decision times. Type-I and type-II error probabilities are defined as

| (68) | ||||

| (69) |

Below, we derive an achievability bound using a general SHT.

A.I2 Achievability Bound

Given some input distribution , define the common randomness random variable on with the distribution

| (70) |

The realization of defines length- codewords . Denote the set of available decoding times by

| (71) |

Let be copies of an SHT that distinguishes between the hypotheses

| (72) | ||||

| (73) |

for each message , where the type-I and type-II error probabilities are and , respectively. Define for and ,

| (74) |

Theorem 8, below, is an achievability bound that employs an arbitrary SHT with decoding times.

Theorem 8

Proof:

We generate i.i.d. codewords according to (70). For each of messages, we run the hypothesis test given in (72)–(73). We decode at the earliest time at which one of the following events occurs:

• is declared for some message ,
• is declared for all .

The decoding output is if is declared for ; if more than one such exists or if no such exists, the decoder declares an error.

Mathematically, the random decoding time of this code is expressed as

| (77) |

Note that is bounded by by construction. The average decoding time bound in (76) immediately follows from (77). The decoder output is

| (78) |

Since the messages are equiprobable, without loss of generality, assume that message is transmitted. An error occurs if and only if is decided for or if is decided for some , giving

| (79) |

A.II Proof of Theorem 1

In addition to the random code design in (70), let satisfy (20). We here specify the stopping rule and the decision rule for the SHT in (72)–(73).

Define the information density for message and decoding time as

| (80) |

Note that is the log-likelihood ratio between the distributions in hypotheses and . We fix a threshold and construct the SHTs

| (81) | ||||

| (82) | ||||

| (83) |

for all , that is, we decide for message at the first time that passes ; if this never happens for , then we decide for . Without loss of generality, assume that message 1 is transmitted.
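The threshold SHTs in (81)–(83), combined with the decoding rule of Theorem 8, can be simulated for a hypothetical example. We use a BSC with crossover probability p and uniform i.i.d. binary codewords, so the output distribution is uniform and the per-symbol information density is ln(2(1 − p)) on a match and ln(2p) on a mismatch. The other settings are M = 16 messages, four decoding times, and threshold γ = ln M + 3 nats. The decoder stops at the first decoding time at which some message's information density reaches γ. It decodes if exactly one message does, and declares an error if several do or if none does by the last decoding time. All parameter values and names are our choices, not the paper's.

```python
import math
import random
from typing import Optional, Sequence, Tuple


def decode(codebook: Sequence[Sequence[int]], y: Sequence[int], times: Sequence[int],
           gamma: float, p: float) -> Tuple[int, Optional[int]]:
    """Return (stopping time, decoded message, or None for a declared error)."""
    match, mismatch = math.log(2 * (1 - p)), math.log(2 * p)
    dens = [0.0] * len(codebook)  # running information density of each message
    t = 0
    for n in times:
        while t < n:
            for m, c in enumerate(codebook):
                dens[m] += match if c[t] == y[t] else mismatch
            t += 1
        passing = [m for m, d in enumerate(dens) if d >= gamma]
        if passing:  # H1 declared for at least one message: stop
            return n, passing[0] if len(passing) == 1 else None
    return times[-1], None  # H0 declared for every message: error


def simulate(trials: int = 500, M: int = 16, p: float = 0.11,
             times: Sequence[int] = (20, 40, 80, 160), seed: int = 1) -> Tuple[float, float]:
    rng = random.Random(seed)
    gamma = math.log(M) + 3.0
    errors, total_time = 0, 0
    for _ in range(trials):
        # fresh random codebook per trial (random-coding ensemble); message 0 is sent
        codebook = [[rng.getrandbits(1) for _ in range(times[-1])] for _ in range(M)]
        y = [b ^ (rng.random() < p) for b in codebook[0]]
        n, m_hat = decode(codebook, y, times, gamma, p)
        errors += m_hat != 0
        total_time += n
    return errors / trials, total_time / trials


if __name__ == "__main__":
    err, avg_time = simulate()
    print(f"error rate {err:.3f}, average decoding time {avg_time:.1f}")
```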

We bound the type-I error probability of the given SHT as

| (89) | ||||

| (90) | ||||

| (91) | ||||

| (92) |

where (91) uses the definition of the decision rule (83). The type-II error probability is bounded as

| (93) | ||||

| (94) | ||||

| (95) | ||||

| (96) | ||||

| (97) |

where (95) follows from changing measure from to . Equality (96) uses the same arguments as in [8, eq. (111)-(118)] and the fact that is a martingale due to the product distribution in (20). Inequality (97) follows from the definition of in (81). Applying (75) together with (92) and (97) proves (18).
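The type-II bound can be checked numerically. Consider a hypothetical BSC with crossover probability p and uniform i.i.d. binary codewords. Under H0 the codeword is independent of the output, so each step of the information density adds ln(2(1 − p)) or ln(2p) with probability 1/2 each. Its exponential is then a nonnegative mean-one martingale, and the probability that the density reaches γ at any decoding time is at most exp(−γ). The Monte Carlo sketch below estimates that probability; p, γ, the decoding times, the seed, and the function name are our choices.

```python
import math
import random
from typing import Sequence


def crossing_probability(p: float, gamma: float, times: Sequence[int],
                         trials: int = 10_000, seed: int = 7) -> float:
    """Estimate P[density >= gamma at some decoding time] when the codeword is
    independent of the channel output."""
    rng = random.Random(seed)
    match, mismatch = math.log(2 * (1 - p)), math.log(2 * p)
    checkpoints = set(times)
    hits = 0
    for _ in range(trials):
        dens = 0.0
        for t in range(1, times[-1] + 1):
            # an independent uniform codeword symbol matches the output w.p. 1/2
            dens += match if rng.random() < 0.5 else mismatch
            if t in checkpoints and dens >= gamma:
                hits += 1
                break
    return hits / trials


if __name__ == "__main__":
    gamma = 3.0
    est = crossing_probability(0.11, gamma, (10, 20, 40, 80))
    print(f"estimate {est:.4f} vs bound exp(-gamma) = {math.exp(-gamma):.4f}")
```

The estimate typically falls well below the bound, because the density overshoots the threshold and drifts downward under H0.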

In his analysis of the error exponent regime, Forney [17] uses a slightly different threshold rule than the one in (81). Specifically, he uses a maximum a posteriori threshold rule, which can also be written as

| (98) |

whose denominator is the output distribution induced by the code rather than by the random codeword distribution .

Appendix B Proof of Theorem 2

The proof uses an idea that is similar to that in [13, Th. 2], which combines the achievability bound of a VLSF code with a sub-exponentially decaying error probability with the stop-at-time-zero procedure. The difference is that we set the sub-exponentially decaying error probability as while [13, Th. 2] sets it to . This modification yields a better second-order term for finite .

Inverting (25), we get

| (99) |

Next, we particularize the decision rules in the SHT at times to the information density threshold rule. Lemma 1, below, is an achievability bound for an -VLSF code that employs the information density threshold rule with the optimized decoding times and the threshold value.

Lemma 1

Proof:

We use the average decoding time and average error probability of a VLSF code in Lemma 1 in place of and in (23). By Lemma 1, there exists an -VLSF code with

| (102) |

Plugging (99) into (102) and applying the necessary Taylor series expansions complete the proof.

Lemma 1 is an achievability bound in the moderate deviations regime since the error probability decays sub-exponentially to zero. The fixed-length scenario in Lemma 1, i.e., , is recovered by [50], which investigates the moderate deviations regime in channel coding. A comparison between the right-hand side of (100) and [50, Th. 2] highlights the benefit of using VLSF codes in the moderate deviations regime. The second-order rate achieved by a VLSF code with decoding times, average decoding time , and error probability is achieved by a fixed-length code with blocklength and error probability .

In the remainder of the appendix, we prove Lemma 1 and show the second-order optimality of the parameters used in the proof of Theorem 2 including the decoding times set in (22).

B.I Proof of Lemma 1

We first present two lemmas used in the proof of Lemma 1 (step 1). We then choose the distribution of the random codewords (step 2) and the parameters in Theorem 1 (step 3). Finally, we analyze the bounds in Theorem 1 using the supporting lemmas (step 4).

B.I1 Supporting lemmas

Lemma 2, below, is a moderate deviations result that bounds the probability that a sum of zero-mean i.i.d. random variables is above a function of that grows at most as quickly as .

Lemma 2 (Petrov [42, Ch. 8, Th. 4])

Let be i.i.d. random variables. Let , , and . Suppose that the moment generating function is finite in a neighborhood of . (This condition is known as Cramér’s condition.) Let . As , it holds that

| (103) |

Lemma 3, below, gives the asymptotic expansion of the root of an equation. We use Lemma 3 to find the asymptotic expansion for the gap between two consecutive decoding times and .

Lemma 3

Let be a differentiable increasing function that satisfies as . Suppose that

| (104) |

Then, as it holds that

| (105) |

Proof:

Define the function . Applying Newton’s method with the starting point yields

| (106) | ||||

| (107) | ||||

| (108) |

Recall that by assumption. Equality (108) follows from the Taylor series expansion of the function around . Let

| (109) |

From Taylor’s theorem, it follows that

| (110) |

for some . Therefore, , and . Putting (109)–(110) in (104), we see that is a solution to the equality in (104).

∎
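Statements (104)–(105) are not restated in this section, so the sketch below illustrates only the generic technique used in the proof: when the perturbing function grows sublinearly, one Newton step from a well-chosen starting point already lands very close to the root. As a hypothetical stand-in for (104), we solve x + f(x) = y with f(x) = √(x ln x), which is increasing with f′(x) → 0. We compare a single Newton step from x₀ = y with a root computed by bisection. All names are ours.

```python
import math


def f(x: float) -> float:
    return math.sqrt(x * math.log(x))


def f_prime(x: float) -> float:
    return (math.log(x) + 1) / (2 * f(x))


def newton_one_step(y: float) -> float:
    """One Newton step for g(x) = x + f(x) - y starting from x0 = y."""
    return y - f(y) / (1 + f_prime(y))


def bisection_root(y: float, lo: float = 2.0, iters: int = 200) -> float:
    """Root of x + f(x) = y on [lo, y]; g(x) = x + f(x) - y is increasing there."""
    hi = y
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if mid + f(mid) < y:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


if __name__ == "__main__":
    y = 1e6
    x1, root = newton_one_step(y), bisection_root(y)
    print(f"f(y) = {f(y):.1f}, Newton step error = {abs(x1 - root):.2e}")
```

The error of the single Newton step is orders of magnitude smaller than the correction f(y) itself, which mirrors the role of the Taylor remainder in the proof.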

B.I2 Random encoder design

We set the distribution of the random codewords as the product of ’s, where is the capacity-achieving distribution with minimum dispersion, i.e.,

| (111) | ||||

| (112) |
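As a concrete instance of (111)–(112), consider a BSC with crossover probability p ∈ (0, 1/2). Its uniform input is the unique capacity-achieving distribution, so it is also the minimum-dispersion one. The sketch below evaluates the standard closed forms C = 1 − h(p) and V = p(1 − p) log₂²((1 − p)/p), in bits, and cross-checks them against the mean and variance of the information density. The value p = 0.11 matches the example in the conclusions; the function names are ours.

```python
import math
from typing import Tuple


def bsc_capacity_dispersion(p: float) -> Tuple[float, float]:
    """Capacity (bits) and dispersion (bits^2) of a BSC(p) with uniform input."""
    h = -p * math.log2(p) - (1 - p) * math.log2(1 - p)
    return 1 - h, p * (1 - p) * math.log2((1 - p) / p) ** 2


def info_density_moments(p: float) -> Tuple[float, float]:
    """Mean and variance of log2 W(Y|X)/P_Y(Y): log2(2(1-p)) w.p. 1-p, log2(2p) w.p. p."""
    a, b = math.log2(2 * (1 - p)), math.log2(2 * p)
    mean = (1 - p) * a + p * b
    return mean, (1 - p) * a * a + p * b * b - mean**2


C, V = bsc_capacity_dispersion(0.11)
print(f"C = {C:.4f} bits, V = {V:.4f} bits^2")
```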

B.I3 Choosing the threshold and decoding times

We choose so that the equalities

| (113) |

hold for all . This choice minimizes our upper bound (19) on the average decoding time up to the second-order term in the asymptotic expansion. See Appendix B.II for the proof. Applying Lemma 3 with

| (114) | ||||

| (115) | ||||

| (116) |

for , gives the following gaps between consecutive decoding times

| (117) |

B.I4 Analyzing the bounds in Theorem 1

The information density of a DM-PPC is a bounded random variable. Therefore, satisfies Cramér’s condition (see Lemma 2). (Cramér’s condition is the bottleneck that determines whether our proof technique applies to a specific DM-PPC. For DM-PPCs with infinite input or output alphabets, Cramér’s condition may or may not be satisfied; our proof technique applies to any DM-PPC for which the information density satisfies Cramér’s condition.) For each , applying Lemma 2 with satisfying (113) gives

| (119) | |||||

for , where

| (120) |

and (119) follows from the Taylor series expansion as , and the well-known bound (e.g., [42, Ch. 8, eq. (2.46)])

| (121) |
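The bound (121) is not restated in this section. A standard Gaussian tail bound of this kind is the Mills-ratio sandwich x φ(x)/(1 + x²) ≤ Q(x) ≤ φ(x)/x for x > 0, where φ is the standard normal density and Q is the complementary CDF; we do not claim that it is exactly (121). The sketch below checks it numerically using the complementary error function.

```python
import math


def Q(x: float) -> float:
    """Standard normal complementary CDF."""
    return 0.5 * math.erfc(x / math.sqrt(2))


def phi(x: float) -> float:
    """Standard normal density."""
    return math.exp(-x * x / 2) / math.sqrt(2 * math.pi)


for x in (0.5, 1.0, 2.0, 4.0, 8.0):
    lower, upper = x * phi(x) / (1 + x * x), phi(x) / x
    print(f"x={x}: {lower:.3e} <= {Q(x):.3e} <= {upper:.3e}")
```

Both bounds become tight in ratio as x grows, which is why such bounds suffice for moderate-deviation expansions.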

For , Lemma 2 gives

| (122) | |||||

By Theorem 1, there exists a VLSF code with decoding times such that the expected decoding time is bounded as

| (123) |

By (117), we have for . Multiplying these asymptotic equations, we get

| (124) |

for . Plugging (117), (119), and (124) into (123), we get

| (125) |

Applying Lemma 3 to (125), we get

| (126) |

Comparing (126) and (117), we observe that for large enough,

| (127) |

Further, from (113) and (125), we have

| (128) |

Finally, we set message set size such that

| (129) |

Plugging (122) and (129) into (18), we bound the error probability as

| (130) | |||||

| (131) | |||||

| (132) |

where (132) follows from (127). Inequality (132) implies that the error probability is bounded by for large enough. Plugging (126) and (129) into (113) with , we conclude that there exists an -VLSF code with

| (133) |

which completes the proof.

B.II Second-order optimality of the decoding times in Theorem 2

From the code construction described in Theorems 1–2, the average decoding time is

| (134) |

where

| (135) | ||||

| (136) |

We here show that, given a fixed , the parameters chosen according to (113) and (129) (and also the error value chosen in (23), since is a function of ) minimize the average decoding time in (134) in the sense that the second-order expansion of in terms of is maximized. That is, our parameter choice optimizes our bound within our code construction.
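Average decoding times over a finite set of decoding times can be computed with a generic tail-sum identity. If a stopping time τ takes values only in n₁ < ⋯ < n_N, then E[τ] = n₁ + Σ_{i=1}^{N−1} (n_{i+1} − n_i) P[τ > n_i]. We do not claim that (134) is written exactly this way. The sketch below only verifies the identity for a hypothetical law of τ; the function names are ours.

```python
from typing import Sequence


def mean_direct(times: Sequence[int], pmf: Sequence[float]) -> float:
    return sum(n * q for n, q in zip(times, pmf))


def mean_tail_sum(times: Sequence[int], pmf: Sequence[float]) -> float:
    """n_1 + sum_i (n_{i+1} - n_i) P[tau > n_i] for tau supported on times."""
    total = float(times[0])
    survival = 1.0
    for i in range(len(times) - 1):
        survival -= pmf[i]  # P[tau > n_i]
        total += (times[i + 1] - times[i]) * survival
    return total


times = [10, 20, 50]   # hypothetical decoding times
pmf = [0.5, 0.3, 0.2]  # hypothetical law of the decoding time
print(mean_direct(times, pmf), mean_tail_sum(times, pmf))
```

The tail-sum form is the convenient one for optimization, because moving a single decoding time n_i affects only the two terms that involve it.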

B.II1 Optimality of

We first set and to satisfy the equations

| (137) | ||||

| (138) |

and optimize the values of under (137)–(138). Section B.II2 proves the optimality of the choices in (137)–(138).

Next, we define the functions

| (140) | ||||

| (141) | ||||

| (142) |

Assume that is such that , and . Then by Lemma 2, , which is a piecewise constant function of , is approximated by the differentiable function as

| (143) |

Taylor series expansions give

| (144) | ||||

| (145) | ||||

| (146) |

Let denote the solution to the optimization problem in (139) with replaced by . Then must satisfy the Karush-Kuhn-Tucker conditions , giving

| (147) | ||||

| (148) |

for .

Let be the decoding times chosen in (113). We evaluate

| (149) | ||||

| (150) | ||||

| (151) | ||||

| (152) |

Our goal is to find a vector such that

| (154) |

Assume that . Plugging into (144)–(146) and using the Taylor series expansion of , we get

| (155) | ||||

| (156) |

Using (155)–(156) and substituting into (147)–(148), we solve (154) as

| (157) | ||||

| (158) | ||||

| (159) |

for . Hence, satisfies the optimality criterion, and .

It remains only to evaluate the gap . We have

| (160) | |||

| (161) | |||

| (162) |

where is a positive constant. From the relationship between and in (124) and the equality (162), we get

| (163) |

Plugging (163) into our VLSF achievability bound (133) gives

| (164) |

Comparing (164) and (133), we see that the decoding times chosen in (113) achieve the optimal second-order term in the asymptotic expansion of the maximum achievable message set size within our code construction. Moreover, the third-order terms in (164) and (133) are of the same order.

The method of approximating the probability , which is an upper bound for (see (86)), by a differentiable function was introduced in [26, Sec. III] for the optimization problem in (139). In [26], Vakilinia et al. approximate the distribution of the random stopping time by the Gaussian distribution, where and are found empirically. They derive the Karush-Kuhn-Tucker conditions in (147)–(148); the resulting numerical procedure is known as the SDO algorithm. A similar analysis appears in [27] for the binary erasure channel. The analyses in [26, 27] use the SDO algorithm to solve (147)–(148) numerically for fixed , , and . Unlike [26, 27], we find the analytic solution to (147)–(148) as the decoding times approach infinity, and we derive the achievable rate in Theorem 2 as a function of .
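A simplified numerical sketch in the spirit of the SDO approach of [26] follows; it is not the exact procedure of [26] and not the analytic solution derived above. It assumes, for illustration only, that the stopping time tau is Gaussian with hypothetical mean mu and standard deviation sigma (CDF F, density f), that the objective is the tail-sum expected decoding time n_1 + sum_i (n_{i+1} - n_i)(1 - F(n_i)), and that the last decoding time must satisfy P[tau > n_L] <= delta. Setting the derivative of this objective with respect to each n_i, i < L, to zero gives the recursion n_{i+1} = n_i + (F(n_i) - F(n_{i-1})) / f(n_i) with F(n_0) := 0; the sketch generates the remaining times from n_1 with this recursion and grid-searches over n_1. All function names are hypothetical.

```python
import math


def gaussian_cdf(x, mu, sigma):
    return 0.5 * math.erfc(-(x - mu) / (sigma * math.sqrt(2.0)))


def gaussian_pdf(x, mu, sigma):
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))


def expected_time(times, mu, sigma):
    """Tail-sum objective n_1 + sum_i (n_{i+1} - n_i) * (1 - F(n_i))."""
    e = times[0]
    for a, b in zip(times, times[1:]):
        e += (b - a) * (1.0 - gaussian_cdf(a, mu, sigma))
    return e


def times_from_first(n1, num_times, mu, sigma):
    """Stationarity recursion n_{i+1} = n_i + (F(n_i) - F(n_{i-1})) / f(n_i),
    with F(n_0) := 0. Returns None if the recursion leaves the numerically
    usable range (density underflow or a non-finite time)."""
    times, prev_cdf = [n1], 0.0
    for _ in range(num_times - 1):
        n = times[-1]
        dens = gaussian_pdf(n, mu, sigma)
        if dens == 0.0:
            return None
        cdf = gaussian_cdf(n, mu, sigma)
        nxt = n + (cdf - prev_cdf) / dens
        if not math.isfinite(nxt):
            return None
        times.append(nxt)
        prev_cdf = cdf
    return times


def sdo_like_search(num_times, mu, sigma, delta, step=0.5):
    """Grid search over n_1 in [mu - 3 sigma, mu + 3 sigma]; keep candidates with
    P[tau > n_L] <= delta and return (objective, times) of the best one."""
    best = None
    lo = mu - 3.0 * sigma
    for k in range(int(6.0 * sigma / step) + 1):
        times = times_from_first(lo + k * step, num_times, mu, sigma)
        if times is None or 1.0 - gaussian_cdf(times[-1], mu, sigma) > delta:
            continue
        e = expected_time(times, mu, sigma)
        if math.isfinite(e) and (best is None or e < best[0]):
            best = (e, times)
    return best


print(sdo_like_search(num_times=4, mu=100.0, sigma=10.0, delta=1e-3))
```

The grid search over n_1 replaces a one-dimensional root-finding step for simplicity; the last time n_L it produces can be far in the tail, reflecting that only the constraint P[tau > n_L] <= delta pins it down in this toy setup.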

B.II2 Optimality of and

Let be the solution to in (134). Section B.II1 finds the values of that minimize . Minimizing also minimizes in (134) since depends only on and , and is a constant. Therefore, to minimize , it only remains to find such that

| (165) | ||||

| (166) |

Consider the case where . Solving (165) and (166) using (147)–(152) gives

| (167) | ||||

| (168) |

where is a positive constant. Solving (167)–(168) simultaneously, we obtain

| (169) |

Plugging (167) and (169) into (136), we get

| (170) |

where is a constant. Let be the parameters chosen in (113) and (129). Note that is order-wise different from in (23). Following steps similar to (160)–(162), we compute

| (171) |

Plugging (171) into (21) gives

| (172) |

Comparing (21) and (172), we see that although (23) and (170) are different, the parameters chosen in (113) and (129) have the same second-order term in the asymptotic expansion of the maximum achievable message set size as the parameters that minimize the average decoding time in the achievability bound in Theorem 1.

Appendix C Proof of Theorem 3

Let and be two distributions. Let be the log-likelihood ratio, and let

| (174) |

where ’s are i.i.d. and have the same distribution as . For , we denote the probability measures and expectations under distribution by and , respectively. Given a threshold , define the stopping time

| (175) |

and the overshoot

| (176) |

The following lemma from [36], which gives refined asymptotics for the stopping time , is the main tool in the proof of our bounds.

Lemma 4 ([36, Cor. 2.3.1, Th. 2.3.3, Th. 2.5.3, Lemma 3.1.1])

Suppose that , and is non-arithmetic. Then, as , it holds that

| (177) | ||||

| (178) |

and

| (179) | ||||

| (180) | ||||

| (181) |
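Expansions of the type stated in Lemma 4 rest on the exact relation E[S_tau] = D E[tau] (Wald's identity), where D is the mean of each summand, which ties the expected stopping time to the expected overshoot: E[tau] = (gamma + E[overshoot]) / D. The Monte Carlo sketch below checks this relation under assumptions that are illustrative only: tau(gamma) is taken to be the first n with S_n >= gamma and the overshoot to be S_tau - gamma (the usual one-sided form; (175)–(176) are not reproduced here), and the summands are the log-likelihood ratio of N(mu, 1) versus N(0, 1) under N(mu, 1), which is Gaussian with mean mu^2/2 and variance mu^2 and hence non-arithmetic. The parameters gamma, mu, and the function names are hypothetical.

```python
import random


def first_passage(gamma, drift, std, rng):
    """tau = first n >= 1 with S_n >= gamma (gamma > 0 assumed); returns
    (tau, overshoot S_tau - gamma) for i.i.d. N(drift, std^2) increments."""
    s, n = 0.0, 0
    while s < gamma:
        s += rng.gauss(drift, std)
        n += 1
    return n, s - gamma


def wald_check(gamma=10.0, mu=1.0, trials=20000, seed=7):
    # LLR of N(mu, 1) versus N(0, 1) under N(mu, 1): mean mu^2 / 2, variance mu^2.
    drift, std = mu * mu / 2, abs(mu)
    rng = random.Random(seed)
    runs = [first_passage(gamma, drift, std, rng) for _ in range(trials)]
    mean_tau = sum(t for t, _ in runs) / trials
    mean_over = sum(o for _, o in runs) / trials
    # Wald's identity predicts E[tau] = (gamma + E[overshoot]) / drift.
    return mean_tau, (gamma + mean_over) / drift


print(wald_check())
```

The two returned numbers differ only by Monte Carlo noise; the overshoot term is what separates E[tau] from the first-order approximation gamma / D.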

C.I Achievability Proof

Let be a capacity-achieving input distribution of the DM-PPC. Define the hypotheses

| (182) | ||||

| (183) |

and the random variables

| (184) | ||||

| (185) |

for . Note that under , . Here, we use in place of in (174). Define

| (186) |

and

| (187) | ||||

| (188) |

We employ the stop-at-time-zero procedure described in the proof sketch of Theorem 2 with and the information density threshold rule (80)–(83) from the proof of Theorem 1, where the threshold is set to . Here, is as in (175). We set and so that

| (189) |

where the inequality follows from (180). Following steps identical to (99), and noting that , we get

| (190) |

and the average error probability of the code is bounded by .
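The bookkeeping of the stop-at-time-zero procedure reduces to a few lines of arithmetic. The sketch below assumes, for illustration, that with probability p the code stops at time 0 and this event is counted as an error, and that otherwise an inner code with error probability eps_inner and expected decoding time n_inner is used. The overall error probability is then at most p + (1 - p) eps_inner and the expected decoding time is (1 - p) n_inner; choosing p = (eps_target - eps_inner) / (1 - eps_inner) makes the error bound equal eps_target. The function name and parameters are hypothetical.

```python
def stop_at_time_zero(eps_target, eps_inner, n_inner):
    """Return (p, overall error bound, expected decoding time), assuming a
    stop at time 0 with probability p is always counted as an error."""
    if not 0 <= eps_inner <= eps_target < 1:
        raise ValueError("need 0 <= eps_inner <= eps_target < 1")
    p = (eps_target - eps_inner) / (1 - eps_inner)
    err = p + (1 - p) * eps_inner          # equals eps_target algebraically
    return p, err, (1 - p) * n_inner       # expected time shrinks by (1 - p)


print(stop_at_time_zero(0.1, 0.001, 500.0))
```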

To evaluate , we use Lemma 4 with in place of . A straightforward calculation yields

| (191) | ||||

| (192) |

Next, we have that

| (193) |

where . Applying the saddlepoint approximation (e.g., [51, eq. (1.2)]) to , we get

| (194) |

where and are bounded from below by a positive constant for all . Applying Laplace's integral [51, eq. (2.5)] to (194), we get

| (195) |

for all , where each is a positive constant depending on . Putting (192) and (195) into (178) and (188), we get

| (196) |

| (197) | ||||

| (198) |
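Laplace's method, used above to pass from (194) to (195), approximates an integral of exp(n g(x)) by exp(n g(x_0)) sqrt(2 pi / (n |g''(x_0)|)), where x_0 is the interior maximizer of g. The sketch below does not reproduce (194); it applies the method to a toy integral, the integral over (0, infinity) of exp(n (log x - x)), whose exact value is Gamma(n + 1) / n^(n + 1). For this example the ratio of the exact value to the approximation is exactly the Stirling ratio n! / (sqrt(2 pi n) (n/e)^n), so it approaches 1 as n grows. The function names are hypothetical.

```python
import math


def laplace_approx_log(n):
    """Log of Laplace's approximation to I(n) = int_0^inf exp(n (log x - x)) dx.
    g(x) = log x - x is maximized at x0 = 1 with g(1) = -1 and g''(1) = -1,
    so I(n) ~ exp(-n) * sqrt(2 * pi / n)."""
    return -n + 0.5 * math.log(2 * math.pi / n)


def exact_log(n):
    """Exact value: I(n) = Gamma(n + 1) / n**(n + 1), computed in log scale."""
    return math.lgamma(n + 1) - (n + 1) * math.log(n)


for n in (10, 100, 1000):
    print(n, math.exp(exact_log(n) - laplace_approx_log(n)))
```

Working in log scale with `math.lgamma` avoids overflow of n! for large n; the printed ratios decrease toward 1, roughly like 1 + 1/(12 n).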

C.II Converse Proof

Recall from Appendix A.I1 the definition of an SHT that tests the hypotheses

| (199) | ||||

| (200) |

where and are distributions on a common alphabet . Here, denotes a vector of infinite length whose joint distribution is either or , neither of which needs to be a product distribution. We define the minimum achievable type-II error probability, subject to a type-I error probability bound and a maximal expected decoding time constraint, with decision times restricted to the set as

| (201) |

which is the SHT version of the -function defined for the fixed-length binary hypothesis test [13].
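For comparison with the SHT version in (201), the fixed-length β function of [13] can be computed exactly on finite alphabets. In the convention of [13], beta_alpha(P, Q) is the smallest Q-probability of deciding for P over randomized tests whose P-probability of deciding for P is at least alpha. By the Neyman-Pearson lemma, an optimal test includes outcomes in decreasing order of the likelihood ratio P/Q and randomizes on the last outcome it needs. The sketch below (function name hypothetical) implements this for distributions given as Python lists.

```python
def beta_alpha(alpha, p, q):
    """min Q[T = 1] over randomized tests T with P[T = 1] >= alpha, for finite
    distributions p and q given as lists of probabilities on the same alphabet."""
    # Neyman-Pearson: sort outcomes by likelihood ratio p/q, largest first.
    order = sorted(range(len(p)),
                   key=lambda i: float("inf") if q[i] == 0 else p[i] / q[i],
                   reverse=True)
    need, beta = alpha, 0.0
    for i in order:
        if need <= 0:
            break
        if p[i] == 0:
            continue                      # no P-mass: cannot help meet alpha
        take = min(1.0, need / p[i])      # fraction of this outcome to include
        beta += take * q[i]
        need -= take * p[i]
    return beta


print(beta_alpha(0.75, [0.5, 0.5], [0.9, 0.1]))
```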

The following theorem extends the meta-converse bound [13, Th. 27], a fundamental tool for proving converse results in fixed-length channel coding without feedback and in many other applications.

Theorem 9

Fix any set , a real number , and a DM-PPC . Then, it holds that

| (202) |

Proof:

The proof is similar to that in [13]. Let denote a message equiprobably distributed on , and let be its reconstruction. Given any VLSF code with the set of available decoding times , average decoding time , error probability , and codebook size , let denote the input distribution induced by the code’s codebook. The code operation creates a Markov chain . Although full feedback breaks this Markov chain, stop feedback does not, since the channel inputs are conditionally independent of the channel outputs given the message . Fix an arbitrary output distribution , and consider the SHT

| (203) | ||||

| (204) |

with a test , where are generated by the (potentially random) encoder-decoder pair of the VLSF code. The type-I and type-II error probabilities of this code-induced SHT are

| (205) | ||||

| (206) |

where (206) follows since the sequence is independent of under . The stopping time of this SHT under or is bounded by by the definition of a VLSF code. Since the error probabilities in (205)–(206) cannot be smaller than those of the optimal SHT, it holds that

| (207) | |||

| (208) | |||

| (209) |

where (208) follows since the choice is arbitrary. ∎

To prove (27), we apply Theorem 9 and get

| (210) |

where is the capacity-achieving output distribution, and . The reduction from Theorem 9 to (210) follows since has the same distribution for all for Cover–Thomas symmetric channels [43, p. 190]. In the remainder of the proof, we derive an upper bound for the right-hand side of (210).

Consider any SHT with and . Our definition in (201) differs slightly from the classical SHT definition in [52] since it allows a decision to be made at time 0. Notice that at time 0, any test has three choices: decide , decide , or start taking samples. Once the test decides to start taking samples, the remainder of the procedure is a classical SHT. Hence, any test satisfies

| (211) | ||||

| (212) |

where at time 0, the test decides with probability , and and are the type-I and type-II error probabilities conditioned on the event that the test decides to take samples at time 0, which occurs with probability .

Let denote the average stopping time of the test with error probabilities . We have

| (213) | ||||

| (214) | ||||

| (215) | ||||

| (216) |

since decays exponentially with .

The following argument is similar to that in [53, Sec. V-C]. Fix an arbitrary and set the thresholds

| (217) | ||||

| (218) |

where is arbitrary, and let be the SPRT associated with the thresholds , with type-I and type-II error probabilities and .
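A two-threshold SPRT of the kind used in this argument can be simulated directly. The sketch assumes, for illustration only, P0 = N(mu, 1) versus P1 = N(0, 1) with hypothetical mu and thresholds a, b > 0, and the orientation "decide H0 once S_n >= b, decide H1 once S_n <= -a" for the log-likelihood ratio S_n = log dP0/dP1; these sign conventions need not match (217)–(218). Wald's change-of-measure argument gives the exact inequalities alpha <= e^{-a} (1 - beta) and beta <= e^{-b} (1 - alpha) for the type-I error alpha = P0[decide H1] and the type-II error beta = P1[decide H0]; the empirical rates should respect these up to Monte Carlo noise, and overshoot typically makes them strictly smaller. Function names are hypothetical.

```python
import math
import random


def sprt(a, b, mu, under_p0, rng, max_steps=10_000):
    """Two-threshold SPRT for P0 = N(mu, 1) versus P1 = N(0, 1).
    Decide H0 (return 0) once S_n >= b, decide H1 (return 1) once S_n <= -a."""
    s = 0.0
    for n in range(1, max_steps + 1):
        y = rng.gauss(mu if under_p0 else 0.0, 1.0)
        s += mu * y - mu * mu / 2          # log N(y; mu, 1) - log N(y; 0, 1)
        if s >= b:
            return 0, n
        if s <= -a:
            return 1, n
    raise RuntimeError("SPRT did not stop within max_steps")


def error_rates(a=3.0, b=3.0, mu=0.5, trials=10000, seed=3):
    """Empirical type-I error P0[decide H1] and type-II error P1[decide H0]."""
    rng = random.Random(seed)
    alpha = sum(sprt(a, b, mu, True, rng)[0] == 1 for _ in range(trials)) / trials
    beta = sum(sprt(a, b, mu, False, rng)[0] == 0 for _ in range(trials)) / trials
    return alpha, beta


def wald_bounds(a, b):
    """Upper bounds e^{-a} on alpha and e^{-b} on beta from Wald's inequalities."""
    return math.exp(-a), math.exp(-b)


print(error_rates(), wald_bounds(3.0, 3.0))
```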

Applying [36, eq. (3.56)] to (196), we get

| (219) | ||||

| (220) |

| (221) | ||||

| (222) |

Letting , it follows from (213)–(216) and (221)–(222) that

| (223) | ||||

| (224) |

for large enough . Using Wald and Wolfowitz’s SPRT optimality result [54], we get

| (225) | ||||

| (226) |

It now remains only to lower-bound . Applying [36, Th. 3.1.2, 3.1.3] and (181) gives

| (227) |

where

| (228) |

and is as in (186). Since is a sum of i.i.d. random variables with non-zero mean, the Chernoff bound implies that each of the probabilities in (228) decays exponentially with . Thus,

| (229) |

| (230) | |||

| (231) |

where (231) follows from (211). Inequalities (212), (226), and (231) imply (27).
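The Chernoff-bound step used for (228) can be illustrated numerically. In the sketch below, which does not reproduce (228), S_n is a sum of n i.i.d. Gaussian terms with a hypothetical positive mean drift and variance var. The Chernoff bound P[S_n <= 0] <= min over lambda > 0 of (E[exp(-lambda Z)])^n evaluates to exp(-n drift^2 / (2 var)), which decays exponentially in n; the sketch minimizes over a grid of lambda values and compares the result with the exact Gaussian tail. Function names are hypothetical.

```python
import math


def chernoff_bound(n, drift, var, grid=2000):
    """Chernoff bound on P[S_n <= 0] for S_n a sum of n i.i.d. N(drift, var)
    terms with drift > 0, using log E[exp(-lam Z)] = -lam*drift + lam^2*var/2."""
    best = 0.0  # exponent at lam = 0 (trivial bound 1)
    for k in range(1, grid + 1):
        lam = k * (2 * drift / var) / grid   # search lam in (0, 2*drift/var]
        best = min(best, -lam * drift + lam * lam * var / 2)
    return math.exp(n * best)


def exact_tail(n, drift, var):
    """P[S_n <= 0] = Phi(-n*drift / sqrt(n*var)) for Gaussian summands."""
    z = n * drift / math.sqrt(n * var)
    return 0.5 * math.erfc(z / math.sqrt(2))


for n in (10, 50, 100):
    print(n, exact_tail(n, 0.5, 1.0), chernoff_bound(n, 0.5, 1.0))
```

A grid minimum can only be larger than the true minimum over lambda, so the computed value is still a valid upper bound; here the grid contains the optimizer lambda = drift / var, so the exponent is exactly drift^2 / (2 var).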

Appendix D Proofs for the DM-MAC

In this section, we prove our main results for the DM-MAC, beginning with Theorem 4, which is used to prove Theorem 5.

D.I Proof of Theorem 4

For each transmitter , , we generate -dimensional codewords i.i.d. from . Codewords for distinct transmitters are drawn independently. Denote the codeword for transmitter and message by for and . The proof extends to the DM-MAC the DM-PPC achievability bound in Theorem 1, which is based on a sub-optimal SHT. Below, we explain the differences.

Without loss of generality, assume that is transmitted. The hypothesis test in (72)–(73) is replaced by

| (232) | ||||

| (233) |

which is run for every message tuple . The information density (80), the stopping times (81)–(82), and the decision rule (83) are extended to the DM-MAC as

| (234) | ||||

| (235) | ||||

| (236) | ||||

| (237) |

for every message tuple and decoding time . For brevity, let be drawn i.i.d. according to the joint distribution

| (238) |
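For intuition about the information-density threshold rule in (234)–(237), consider a hypothetical example not taken from the text: the noiseless two-user binary adder MAC Y = X1 + X2 with i.i.d. equiprobable inputs. Because this channel is deterministic, the joint information density of the true message tuple is log2(1 / P_Y(y)), so each symbol contributes 1 or 2 bits and the mean contribution is H(Y) = 1.5 bits. The sketch accumulates this information density for the true tuple until it crosses a hypothetical threshold gamma. It illustrates only the full-tuple information density; any sub-tuple densities used by the code construction are not modeled, and the function names are hypothetical.

```python
import math
import random

# Output law of Y = X1 + X2 for i.i.d. equiprobable binary inputs (assumption).
P_Y = {0: 0.25, 1: 0.5, 2: 0.25}


def info_density(x1, x2, y):
    """log2 P(y | x1, x2) / P(y) for the noiseless binary adder MAC."""
    return -math.log2(P_Y[y]) if y == x1 + x2 else -math.inf


def crossing_time(gamma, n_max, rng):
    """First n with sum_{t <= n} i(x1_t, x2_t; y_t) >= gamma for the true tuple,
    or None if the threshold is not reached by n_max."""
    s = 0.0
    for n in range(1, n_max + 1):
        x1, x2 = rng.randint(0, 1), rng.randint(0, 1)
        s += info_density(x1, x2, x1 + x2)
        if s >= gamma:
            return n
    return None


print(crossing_time(30.0, 100, random.Random(5)))
```

Since each symbol adds between 1 and 2 bits, a threshold of 30 bits is always crossed between symbols 15 and 30, close to gamma divided by the 1.5-bit sum rate on average.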

Error probability analysis: The following error analysis extends the PPC bounds in (79) and (89)–(97) to the DM-MAC.

In the analysis below, for brevity, we write to denote that for . The error probability is bounded as

| (239) | ||||

| (240) | ||||

| (241) |

where (240)–(241) apply the union bound to separate the probabilities of the following error events:

1. the information density of the true message tuple does not satisfy the threshold test for any available decoding time;
2. the information density of a message tuple in which all messages are incorrect satisfies the threshold test for some decoding time;
3. the information density of a message tuple in which the messages from some transmitters are correct and the messages from the other transmitters are incorrect satisfies the threshold test for some decoding time.
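The union bound over error events 2 and 3 is organized by the set of transmitters whose messages are correct. The short sketch below, with hypothetical message set sizes, counts for a fixed true tuple how many incorrect message tuples are correct exactly on each proper subset S of transmitters; that number is the product of (M_k - 1) over the transmitters k not in S. The empty set S corresponds to event 2 and each nonempty proper S to event 3, and summing over all proper subsets recovers all M_1 * ... * M_K - 1 incorrect tuples. The function name is hypothetical.

```python
from itertools import combinations
from math import prod


def incorrect_tuples_by_correct_set(sizes):
    """Map each proper subset S of transmitters (0-indexed tuples) to the number
    of message tuples that agree with the true tuple exactly on S."""
    k = len(sizes)
    counts = {}
    for r in range(k):                      # |S| < K: at least one wrong message
        for s in combinations(range(k), r):
            counts[s] = prod(sizes[j] - 1 for j in range(k) if j not in s)
    return counts


print(incorrect_tuples_by_correct_set([4, 3, 5]))
```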

For the cases where at least one message is decoded correctly, we delay the application of the union bound. Let be the set of transmitters whose messages are decoded correctly. Define

| (244) | ||||

| (245) |

We first bound the probability term in (241) by applying the union bound over the possible sets of transmitters whose messages are decoded correctly, which gives

| (246) | |||

| (247) |

where refers to the random sample from the codebooks of transmitter set , independent of the codewords transmitted by the transmitters and the received output .

We bound the right-hand side of (247) using the same method as in [28, eq. (65)–(66)]. This step is crucial for enabling the single-threshold rule for rate vectors approaching a point on the sum-rate boundary. Fix an arbitrary . Define the two events

| (248) | ||||

| (249) |

Define the threshold

| (250) |

We have

| (251) | |||

| (252) | |||

| (253) | |||

| (254) |

where inequality (252) uses the chain rule for mutual information, (253) applies the union bound, and (254) follows from [20, eq. (88)].

D.II Proof of Theorem 5

We employ the stop-at-time-zero procedure in the proof sketch of Theorem 2 with . Therefore, we first show that there exists an -VLSF code satisfying

| (255) |

We set the parameters

| (256) | ||||

| (257) | ||||

| (258) |

Note that ’s are bounded below by a positive constant for rate points lying on the sum-rate boundary.

By Theorem 4, there exists a VLSF code with decoding times such that the average decoding time is bounded as

| (259) |