Variational Bayes Inference for Data Detection in Cell-Free Massive MIMO

Abstract

Cell-free massive MIMO is a promising technology for beyond-5G networks. Through the deployment of many cooperating access points (AP), the technology can significantly enhance user coverage and spectral efficiency compared to traditional cellular systems. Since the APs are distributed over a large area, the level of favorable propagation in cell-free massive MIMO is less than the one in colocated massive MIMO. As a result, the current linear processing schemes are not close to the optimal ones when the number of AP antennas is not very large. The aim of this paper is to develop nonlinear variational Bayes (VB) methods for data detection in cell-free massive MIMO systems. Contrary to existing work in the literature, which only attained point estimates of the transmit data symbols, the proposed methods aim to obtain the posterior distribution and the Bayes estimate of the data symbols. We develop the VB methods accordingly to the levels of cooperation among the APs. Simulation results show significant performance advantages of the developed VB methods over the linear processing techniques.

Index Terms:

Cell-free, inference, massive MIMO, variational Bayes.I Introduction

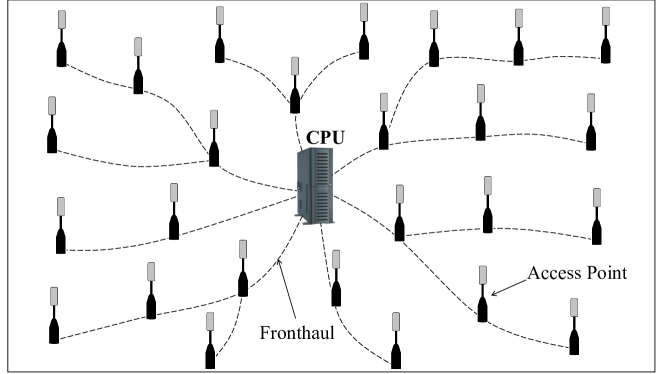

Cell-free massive multiple-input multiple-output (MIMO) is considered as a promising technology for powering beyond-5G networks. The key idea of a cell-free massive MIMO system is to distributively deploy a large number of access points (APs) coherently serving all users in the system. As illustrated in Fig. 1, the APs in a cell-free system can be randomly located all over the coverage area and are connected to one or several central processing units (CPUs). Due to this distributed deployment, any user is highly likely to be close to at least one AP. A cell-free system can effectively resolve the poor coverage issue in cell-edge areas of conventional cellular systems [1, 2, 3]. In addition, a cell-free system enables different levels of cooperation among the APs with certain levels of joint signal processing at the CPU, ranging from fully centralized processing (Level 4), to partially distributed processing (Levels 3 and 2), and to a fully distributed processing (Level 1) [4]. Joint signal processing at the system’s CPU allows a cell-free system to better address the inter-cell interference, which becomes more severe in cellular systems with small cell deployments. Therefore, cell-free massive MIMO systems can offer significant enhancements in user coverage and energy efficiency compared to traditional cellular systems [1, 5, 4].

The majority of existing research on uplink cell-free massive MIMO has focused on spectral and energy efficiency analysis with linear signal processing methods, such as maximum-ratio combining (MRC) [1], zero-forcing (ZF) [1], and linear minimum mean-squared error (LMMSE) [4]. While such approaches have relatively low complexity, linear methods do not perform well in systems with low level of favorable propagation (e.g. when the number of AP antennas is small or is not much larger than the number of UEs, or the channels are highly correlated). Nonlinear signal processing is thus a promising alternative approach that can offer higher spectral efficiency [4] or lower bit error rate (BER) [6]. The recent work in [6] proposed a nonlinear optimization-based algorithm for joint channel estimation and data detection in cell-free massive MIMO. However, the approach in [6] can only provide point estimates of the data symbols of interest. Different from these papers, the focus of this paper is on devising efficient algorithms to obtain Bayesian estimates of the data symbols. Unfortunately, realizing the exact posterior distributions of the data symbols is intractable, even in a conventional single cell MIMO system. We, therefore, develop variational Bayes (VB) inference methods for approximating intractable posterior distributions of data symbols, which are then used to detect the symbols. We investigate the VB methods for joint data detection with fully centralized processing at the CPU, as well as for distributed data detection at the APs. For fully centralized processing, we assume that full knowledge of the channel state information (CSI) is available at the CPU. Likewise, for distributed processing at each AP, we assume that CSI knowledge for the channel from the users to that AP is locally available. Simulation results show significant performance advantages of the developed VB methods over the LMMSE processing techniques in [4].

Notation: Upper-case and lower-case boldface letters denote matrices and column vectors, respectively. The transpose and conjugate transpose are denoted by and , respectively. represents a complex Gaussian random vector with mean and covariance matrix ; denotes the probability distribution function (PDF) of a length- random vector . and are the mean and the variance of with respect to its distribution ; and denote the mean and variance of with respect to a variational distribution .

II System Model

We consider an uplink cell-free massive MIMO system with distributed APs, each equipped with antennas, serving randomly located single-antenna users. It is assumed that . Denote as the uplink channel from the -th user and the -th AP and . We assume a block Rayleigh fading scenario in which the channel remains constant for time slots and is normally distributed as . Here, is the large-scale fading coefficient and is the normalized spatial correlation matrix whose diagonal elements equal to one. Due to the random user deployment, the large-scale fading coefficient is different from one user to another user, resulting in a non-i.i.d. channel matrix . We assume that the channel vectors are independent of each other for each user-AP pair.

Let be the transmitted symbol vector at time slot , in which the transmitted symbol from the -th user is drawn from a complex-valued discrete constellation such that and . The prior distribution of is thus given by

| (1) |

where corresponds to the known prior probability of the constellation point . The received signal vector at the -th AP can be modeled as

| (2) |

where is the noise vector whose elements are independent and identically distributed (i.i.d.) as . The interest of this paper is to obtain an estimated of from multiple observed signal vectors ’s across the distributed APs with minimum mean squared detection error .

III Four Levels of Cell-Free Massive MIMO Signal Processing Using LMMSE Filtering

To frame the discussion on the developed VB methods, we revisit the 4 levels of signal processing in cell-free systems using LMMSE filtering as studied in [4]. Since the processing is based on a per time slot basis, without loss of generality, we drop the time index .

III-A Level 4: Fully Centralized Processing

At this level, the APs do not process their received signals. Instead, the received signals are forwarded to the CPU for fully centralized processing, including the data detection task. The signals forwarded from the APs can be stacked into

| (3) |

where , , and . The processing for cell-free massive MIMO in this level is similar to the processing at a conventional co-located MIMO receiver. The CPU detects using the received signal vector and the channel matrix . Among the linear detectors, the LMMSE detector maximizes the signal-to-interference-and-noise ratio (SINR) and also achieves the best detection performance [4]. With the full knowledge of , the LMMSE estimate is formed as

| (4) |

which is then element-wise projected onto . We note that the LMMSE filter in the presented form requires the inverse of a -dimensional matrix.

III-B Level 3: Local Processing & Large-Scale Fading Decoding

At this level, each AP pre-processes its received signal by computing a local estimate of that are forwarded to the CPU for final decoding [4]. Assuming full knowledge of channel matrix at the -th AP, the local LMMSE estimate of can be found as

| (5) |

We note that the LMMSE filter in this presented form requires the inverse of a -dimensional matrix. The CPU then can linearly combine the local estimates to obtain the estimate

| (6) |

which is eventually used to decode . Here, the weighting coefficient vector relies only on channel statistics and can be optimized by the CPU. This combining method is also known as the large-scale fading decoding (LSFB) strategy in the context of cellular massive MIMO. We note that no instantaneous CSI of any channel is required at the CPU.

III-C Level 2: Local Processing & Simple Centralized Decoding

At this level, the CPU forms an estimate of by simply taking the average of the local estimates [4]. This yield an estimate as

| (7) |

We note that no statistical parameters of CSI are needed at the CPU at this level of centralized signal processing.

III-D Level 1: Small-Cell Network

At this level, each user signal is decoded by only one AP that gives the highest spectral efficiency to the user, i.e., the highest SINR [4]. LMMSE filtering can be applied to obtain the local estimate of the user signal. Since only one estimate per user is forwarded to the CPU, no centralizing decoding is required.

IV Variational Bayes for Cell-Free Detection

In this paper, we focus on developing VB-based methods for data detection in cell-free massive MIMO systems that require certain levels of centralized processing, i.e., Levels 4, 3, and 2. For Level 4 processing, we assume that the symbol vectors are estimated independently at each time slot. However, for Levels 3 and 2 processing, we assume that the symbol vectors are first estimated locally over the whole fading block. As explained later in the section, this method of processing helps reduce the amount of signaling to the CPU, where the local estimates are aggregated to obtain the final estimate.

IV-A Background on VB

We first present the background on VB for approximate inference that will be exploited for solving the data detection in cell-free systems. VB inference is a powerful framework from machine learning that approximates intractable posterior distributions of latent variables with a known family of simpler distributions through optimization. The goal of VB inference is to find an approximation for a computationally intractable posterior distribution given a probabilistic model that specifies the joint distribution , where represents the set of all observed variables and is a set of latent variables and parameters. The VB inference method aims at finding a density function with its own setting of variational parameters within a family of density functions that makes close to the posterior distribution of interest . VB inference amounts to solving the following optimization problem:

| (8) |

where is the Kullback-Leibler (KL) divergence from to . Minimizing the KL divergence is equivalent to maximizing the evidence lower bound () [7], which is defined as

| (9) |

The maximum of occurs when . Since working with the true posterior distribution is often intractable, it is more convenient to consider a restricted family of distributions . Among VB inference methods, the mean-field approximation enables efficient optimization of the variational distribution over a partition of the latent variables, while keeping the variational distributions over other partitions fixed [7]. The mean-field variational family is constructed such that

| (10) |

where the latent variables are mutually independent and each is governed by a distinct factor in the variational density. Among all mean-field distributions , the general expression for the optimal solution of the variational density that maximizes the ELBO can be obtained as [7]

| (11) |

where denotes the expectation with respect to all latent variables except using the currently fixed variational density . By iterating the update of sequentially over all , the objective function can be monotonically improved. This is the basis behind the coordinate ascent variational inference algorithm, which guarantees convergence to at least a local optimum of [7, 8]. To this send, we examine how the mean-field VB framework can be exploited for data detection at different levels of cooperation in a cell-free system.

IV-B Level 4: Fully Centralized Processing

At this level, the signals forwarded from the APs can be stacked into a single large-scale MIMO system as being shown in (3). In a recent work [9], we developed several VB-based methods for MIMO data detection. Among them, the LMMSE-VB algorithm showed superior performance in MIMO systems with non-i.i.d. channels. Certainly, the algorithm can be adopted for data detection in cell-free systems with fully centralized processing. In the following, we present key operations in the algorithm. For details of the algorithm, we refer the readers to [9].

The LMMSE-VB algorithm floats the background noise covariance matrix as an unknown random variable, instead of treating the noise’s variance as known. The postulated noise covariance matrix is estimated by the algorithm itself. For ease of computation, we use to denote the precision matrix and assume a conjugate prior complex Wishart distribution for , where is the scale matrix and indicates the degrees of freedom. The PDF of satisfies

| (12) |

The joint distribution can be factored as

| (13) |

where . Given the observation , we aim at obtaining the mean-field variational distribution such that

| (14) |

The optimization of is executed by iteratively updating and as follows.

a) Updating . The variational distribution is obtained by expanding the conditional in (13) and taking the expectation with respect to all latent variables except using the variational distribution :

| (15) |

where is a linear estimate of that is defined as

| (16) |

It is observed in (15) that with can be interpreted as the likelihood function . In other words, the mean-field VB approximation decouples the linear MIMO system into parallel AWGN channels .

The variational distribution is realized by normalizing . The variational mean and variance are then computed accordingly.

b) Updating . The variational distribution is obtained by taking the expectation of the conditional in (13) with respect to :

| (17) |

The variational distribution is also complex Wishart with degrees of freedom [9]. The variational mean can be computed accordingly. In [9], we also proposed to use the estimator

| (18) |

where .

By iteratively optimizing and via the updates of and , we obtain the CAVI algorithm for estimating and the precision matrix . We refer to this scheme as the LMMSE-VB algorithm since resembles an LMMSE estimate of due to the cancellation of the inter-user interference and the whitening with the postulated noise covariance matrix .

IV-C Level 3: Local Processing & Nonlinear Decoding

At this level, our proposed VB-based method involves two operations: 1) Executing the LMMSE-VB algorithm independently at each AP to compute local estimates of and 2) Aggregating the local estimates at the CPU for joint nonlinear decoding of . However, we make a minor modification to the LMMSE-VB algorithm which allow it to operate over the whole block of time slots.

IV-C1 AP Processing

The signal processing at an AP, say the -th AP, is to generate a coarse estimate of , from the observation . We treat the background noise covariance matrix at the -th AP as an unknown random variable. The postulated noise matrix has to be estimated as well. We denote the precision matrix , , and . The joint distribution can be factorized as

| (19) |

where with . Given the observation , we aim at obtaining the mean-field variational distribution such that

| (20) |

The optimization of is executed by iteratively updating and as follows.

a) Update : The variational distribution is obtained by expanding the conditional in (19) and taking the expectation with respect to all latent variables except using the variational distribution :

| (21) |

where

| (22) | |||||

It is observed in (IV-C1) that with can be interpreted as the likelihood function . In this case, the mean-field VB approximation decouples the uplink MIMO channel to the -th AP into parallel AWGN channels . It is also observed that is the local LMMSE estimate of , while the variance indicates the reliability of this estimate.

The variational distribution is realized by normalizing . The variational mean and variance can be computed accordingly. Hereafter, we use instead of or to indicate the nonlinear MMSE estimate of at the -th AP.

b) Update : The variational distribution is obtained by taking the expectation of the conditional in (19) with respect to :

| (23) |

| (24) |

Assuming a conjugate prior complex Wishart distributed for , the variational distribution is also complex Wishart with degrees of freedom. The variational mean is given in (24), where .

The LMMSE-VB algorithm is executed at the -th AP by iteratively optimizing and via the updates of and . The -th AP then sends the LMMSE estimate and the variance to the CPU for centralized decoding. By pre-processing the whole block of time slots, is sent only once for each channel realization. In contrast, if the LMMSE-VB algorithm is executed on a per time slot basis, the variance of the LMMSE estimate has to be computed and sent for each time slot.

IV-C2 CPU Processing

After collecting the local estimates and the variance from the APs, the CPU can proceed to decode each of the symbols independently. Since , an approximate posterior distribution can be easily derived. The MAP estimate of is obtained as

| (25) |

We note that the above nonlinear combination of local estimates and reliability information is significantly different from the linear combination of local estimates in (6).

IV-D Level 2: Local Processing & Simple Linear Combining

At this level, only local estimates are fed back to the CPU. The LMMSE-VB mentioned in Level 3 signal processing can be used to generate the coarse local estimates. However, the local nonlinear MMSE estimates is sent, instead of the LMMSE estimate and the variance . We note that can be computed using and , but not the reverse.

A simple estimate of can be obtained by simply taking the average of all the estimates as

| (26) |

The final detected symbol of is the constellation point that is closest to .

V Numerical Results

This section presents the numerical results comparing the developed VB-based methods for data detection in cell-free systems with the LMMSE filtering methods in [4]. We use a simulation setting and a channel model in urban environments similar to the work in [4]. In particular, a network area of km is considered where the APs are deployed on a square grid and users are randomly distributed. The large-scale fading coefficient of the channel between user- and AP- (in dB) is given as

| (27) |

where (in m) is the distance between user- and AP- and is the shadow fading. The correlation between the shadowing terms from an AP to different users is modeled as

| (28) |

where (in m) is the distance between user- and user-. Receive antennas at each AP are arranged in a uniform linear array with half-wavelength spacing. For spatial correlation, we use the Gaussian local scattering model with a angular standard deviation [10]. We set the noise as and vary the transmit power of users.

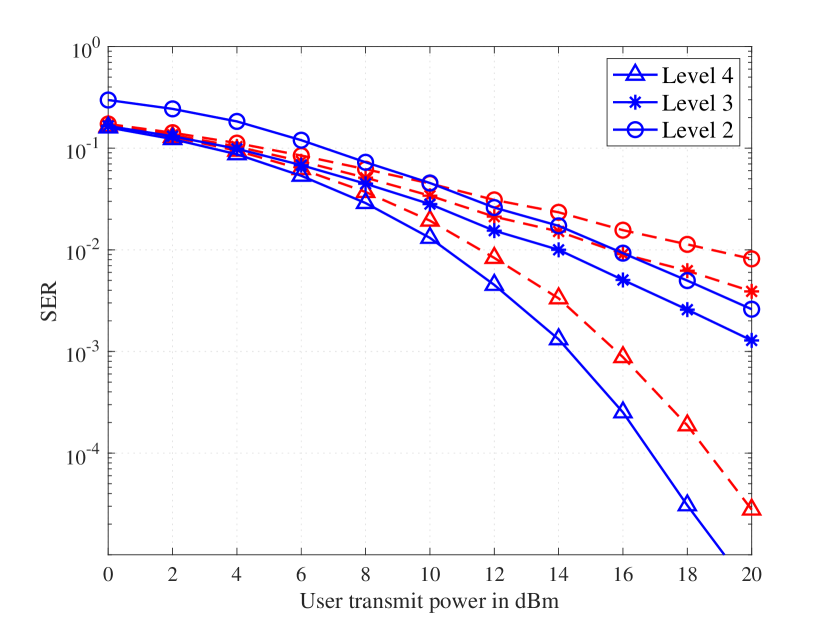

In this work, we compare different data detection methods assuming perfect CSI and QPSK signalling. We assume that each AP is equipped with antennas, i.e., . Fig. 2 presents the symbol error rate (SER) performance of the two types of methods in a relatively small setting of cell-free systems with and . As the user transmit power is increased, the VB-based methods attain much lower SER than the MMSE filtering methods. Up to -dB gain is observed at Level 4 and -dB gain is observed at Level 3 and 2.

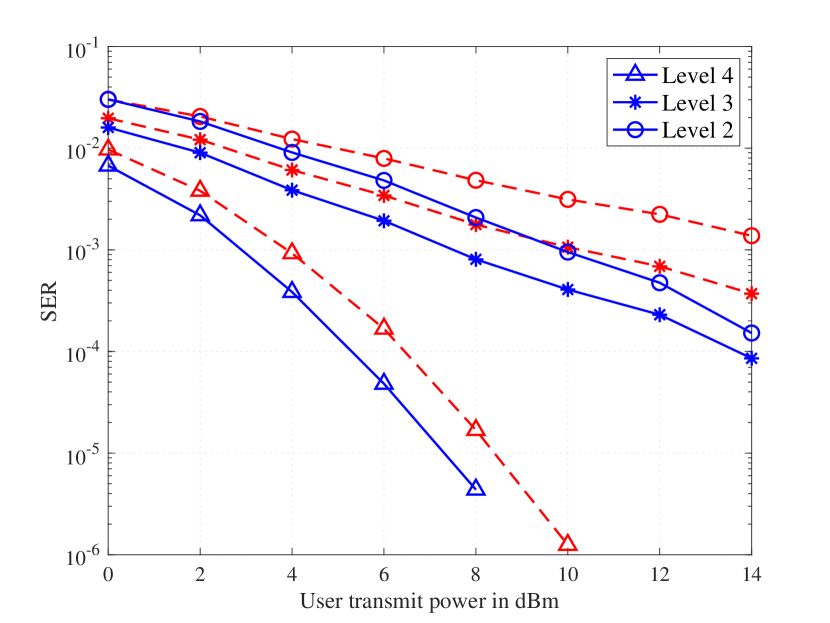

Fig. 3 presents the SER performance a cell-free system with and . The figure clearly indicates the superior performance of the proposed VB-based methods over the MMSE filtering methods. It is also observed from both figures that the more centralized signal processing is carried at the CPU, the better SER performance can be achieved, especially in systems with a large number of users, e.g., .

VI Conclusion

In this paper, we have proposed the VB-based methods for data detection in cell-free systems at three different levels of AP cooperation. The proposed methods can achieve much lower SER than the linear MMSE signal processing methods. We note that the presented study only considers the case of perfect CSI available at the CPU (for Level 4) and at the APs (for Levels 3 and 2). As an extension of this paper, we are developing novel VB-based methods for data detection with imperfect CSI and joint channel estimation and data detection in cell-free systems.

Acknowledgment

This work was supported by the U.S. National Science Foundation under Grants ECCS-2146436 and CCF-2225576.

References

- [1] H. Q. Ngo, A. Ashikhmin, H. Yang, E. G. Larsson, and T. L. Marzetta, “Cell-free massive MIMO versus small cells,” IEEE Trans. Wireless Commun., vol. 16, no. 3, pp. 1834–1850, Mar. 2017.

- [2] G. Interdonato, E. Björnson, H. Quoc Ngo, P. Frenger, and E. G. Larsson, EURASIP J. Wireless Commun. and Networking, 2019. [Online]. Available: https://doi.org/10.1186/s13638-019-1507-0

- [3] E. Björnson and L. Sanguinetti, “Scalable cell-free massive MIMO systems,” IEEE Trans. Commun., vol. 68, no. 7, pp. 4247–4261, July 2020.

- [4] ——, “Making cell-free massive MIMO competitive with MMSE processing and centralized implementation,” IEEE Trans. Wireless Commun., vol. 19, no. 1, pp. 77–90, Jan. 2020.

- [5] E. Nayebi, A. Ashikhmin, T. L. Marzetta, H. Yang, and B. D. Rao, “Precoding and power optimization in cell-free massive MIMO systems,” IEEE Trans. Wireless Commun., vol. 16, no. 7, pp. 4445–4459, July 2017.

- [6] H. Song, T. Goldstein, X. You, C. Zhang, O. Tirkkonen, and C. Studer, “Joint channel estimation and data detection in cell-free massive MU-MIMO systems,” IEEE Trans. Wireless Commun. (Early Access), 2021.

- [7] C. M. Bishop, Pattern Recognition and Machine Learning. Springer, 2006.

- [8] M. J. Wainwright and M. I. Jordan, Graphical models, exponential families, and variational inference. Now Publishers Inc, 2008.

- [9] D. H. N. Nguyen, I. Atzeni, A. Tölli, and A. L. Swindlehurst, “A variational Bayesian perspective on massive MIMO detection,” 2022. [Online]. Available: http://engineering.sdsu.edu/ñguyen/downloads/VB_for_MIMO_detection.pdf

- [10] E. Björnson, J. Hoydis, and L. Sanguinetti, “Massive MIMO networks: Spectral, energy, and hardware efficiency,” Foundations and Trends in Signal Processing, vol. 11, no. 3-4, pp. 154–655, 2017.