Variational Imbalanced Regression: Fair Uncertainty Quantification via Probabilistic Smoothing

Abstract

Existing regression models tend to fall short in both accuracy and uncertainty estimation when the label distribution is imbalanced. In this paper, we propose a probabilistic deep learning model, dubbed variational imbalanced regression (VIR), which not only performs well in imbalanced regression but naturally produces reasonable uncertainty estimation as a byproduct. Different from typical variational autoencoders assuming I.I.D. representations (a data point’s representation is not directly affected by other data points), our VIR borrows data with similar regression labels to compute the latent representation’s variational distribution; furthermore, different from deterministic regression models producing point estimates, VIR predicts the entire normal-inverse-gamma distributions and modulates the associated conjugate distributions to impose probabilistic reweighting on the imbalanced data, thereby providing better uncertainty estimation. Experiments in several real-world datasets show that our VIR can outperform state-of-the-art imbalanced regression models in terms of both accuracy and uncertainty estimation. Code will soon be available at https://github.com/Wang-ML-Lab/variational-imbalanced-regression.

1 Introduction

Deep regression models are currently the state of the art in making predictions in a continuous label space and have a wide range of successful applications in computer vision [50], natural language processing [22], healthcare [43, 45], recommender systems [16, 42], etc. However, these models fail however when the label distribution in training data is imbalanced. For example, in visual age estimation [30], where a model infers the age of a person given her visual appearance, models are typically trained on imbalanced datasets with overwhelmingly more images of younger adults, leading to poor regression accuracy for images of children or elderly people [48, 49]. Such unreliability in imbalanced regression settings motivates the need for both improving performance for the minority in the presence of imbalanced data and, more importantly, providing reasonable uncertainty estimation to inform practitioners on how reliable the predictions are (especially for the minority where accuracy is lower).

Existing methods for deep imbalanced regression (DIR) only focus on improving the accuracy of deep regression models by smoothing the label distribution and reweighting data with different labels [48, 49]. On the other hand, methods that provide uncertainty estimation for deep regression models operates under the balance-data assumption and therefore do not work well in the imbalanced setting [1, 8, 29].

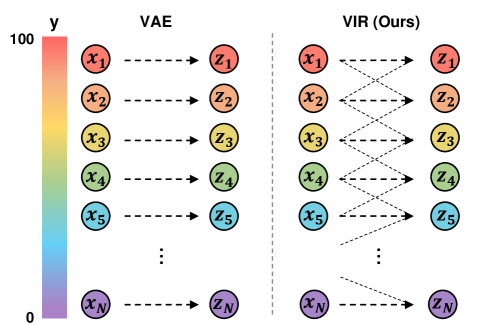

To simultaneously cover these two desiderata, we propose a probabilistic deep imbalanced regression model, dubbed variational imbalanced regression (VIR). Different from typical variational autoencoders assuming I.I.D. representations (a data point’s representation is not directly affected by other data points), our VIR assumes Neighboring and Identically Distributed (N.I.D.) and borrows data with similar regression labels to compute the latent representation’s variational distribution. Specifically, VIR first encodes a data point into a probabilistic representation and then mix it with neighboring representations (i.e., representations from data with similar regression labels) to produce its final probabilistic representation; VIR is therefore particularly useful for minority data as it can borrow probabilistic representations from data with similar labels (and naturally weigh them using our probabilistic model) to counteract data sparsity. Furthermore, different from deterministic regression models producing point estimates, VIR predicts the entire Normal Inverse Gamma (NIG) distributions and modulates the associated conjugate distributions by the importance weight computed from the smoothed label distribution to impose probabilistic reweighting on the imbalanced data. This allows the negative log likelihood to naturally put more focus on the minority data, thereby balancing the accuracy for data with different regression labels. Our VIR framework is compatible with any deep regression models and can be trained end to end.

We summarize our contributions as below:

-

•

We identify the problem of probabilistic deep imbalanced regression as well as two desiderata, balanced accuracy and uncertainty estimation, for the problem.

-

•

We propose VIR to simultaneously cover these two desiderata and achieve state-of-the-art performance compared to existing methods.

-

•

As a byproduct, we also provide strong baselines for benchmarking high-quality uncertainty estimation and promising prediction performance on imbalanced datasets.

2 Related Work

Variational Autoencoder. Variational autoencoder (VAE) [25] is an unsupervised learning model that aims to infer probabilistic representations from data. However, as shown in Figure 1, VAE typically assumes I.I.D. representations, where a data point’s representation is not directly affected by other data points. In contrast, our VIR borrows data with similar regression labels to compute the latent representation’s variational distribution.

Imbalanced Regression. Imbalanced regression is under-explored in the machine learning community. Most existing methods for imbalanced regression are direct extensions of the SMOTE algorithm [9], a commonly used algorithm for imbalanced classification, where data from the minority classes is over-sampled. These algorithms usually synthesize augmented data for the minority regression labels by either interpolating both inputs and labels [40] or adding Gaussian noise [5, 6] (more discussion on augmentation-based methods in the Appendix).

Such algorithms fail to measure the distance in continuous label space and fall short in handling high-dimensional data (e.g., images and text). Recently, DIR [49] addresses these issues by applying kernel density estimation to smooth and reweight data on the continuous label distribution, achieving state-of-the-art performance. However, DIR only focuses on improving the accuracy, especially for the data with minority labels, and therefore does not provide uncertainty estimation, which is crucial to assess the predictions’ reliability. [32] focuses on re-balancing the mean squared error (MSE) loss for imbalanced regression, and [13] introduces ranking similarity for improving deep imbalanced regression. In contrast, our VIR provides a principled probabilistic approach to simultaneously achieve these two desiderata, not only improving upon DIR in terms of performance but also producing reasonable uncertainty estimation as a much-needed byproduct to assess model reliability. There is also related work on imbalanced classification [11], which is related to our work but focusing on classification rather than regression.

Uncertainty Estimation in Regression. There has been renewed interest in uncertainty estimation in the context of deep regression models [1, 12, 18, 23, 26, 29, 36, 37, 38, 51]. Most existing methods directly predict the variance of the output distribution as the estimated uncertainty [1, 23, 52], rely on post-hoc confidence interval calibration [26, 37, 51], or using Bayesian neural networks [44, 46, 47]; there are also training-free approaches, such as Infer Noise and Infer Dropout [29], which produce multiple predictions from different perturbed neurons and compute their variance as uncertainty estimation. Closest to our work is Deep Evidential Regression (DER) [1], which attempts to estimate both aleatoric and epistemic uncertainty [20, 23] on regression tasks by training the neural networks to directly infer the parameters of the evidential distribution, thereby producing uncertainty measures. DER [1] is designed for the data-rich regime and therefore fails to reasonably estimate the uncertainty if the data is imbalanced; for data with minority labels, DER [1] tends produce unstable distribution parameters, leading to poor uncertainty estimation (as shown in Sec. 5). In contrast, our proposed VIR explicitly handles data imbalance in the continuous label space to avoid such instability; VIR does so by modulating both the representations and the output conjugate distribution parameters according to the imbalanced label distribution, allowing training/inference to proceed as if the data is balance and leading to better performance as well as uncertainty estimation (as shown in Sec. 5).

3 Method

In this section we introduce the notation and problem setting, provide an overview of our VIR, and then describe details on each of VIR’s key components.

3.1 Notation and Problem Setting

Assuming an imbalanced dataset in continuous space where is the total number of data points, is the input, and is the corresponding label from a continuous label space . In practice, is partitioned into B equal-interval bins , with slight notation overload. To directly compare with baselines, we use the same grouping index for target value as in [49].

We denote representations as , and use to denote the probabilistic representations for input generated by a probabilistic encoder parameterized by ; furthermore, we denote as the mean of representation in each bin, i.e., for a bin with data points. Similarly we use to denote the mean and variance of the predictive distribution generated by a probabilistic predictor .

3.2 Method Overview

In order to achieve both desiderata in probabilistic deep imbalanced regression (i.e., performance improvement and uncertainty estimation), our proposed variational imbalanced regression (VIR) operates on both the encoder and the predictor .

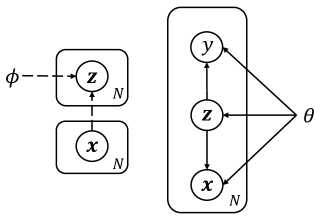

Typical VAE [25] lower-bounds input ’s marginal likelihood; in contrast, VIR lower-bounds the marginal likelihood of input and labels :

Note that our variational distribution (1) does not condition on labels , since the task is to predict and (2) conditions on all (neighboring) inputs rather than just . The second term is VIR’s evidence lower bound (ELBO), which is defined as:

| (1) |

where the is the standard Gaussian prior , following typical VAE [25], and the expectation is taken over , which infers by borrowing data with similar regression labels to produce the balanced probabilistic representations, which is beneficial especially for the minority (see Sec. 3.3 for details).

Different from typical regression models which produce only point estimates for , our VIR’s predictor, , directly produces the parameters of the entire NIG distribution for and further imposes probabilistic reweighting on the imbalanced data, thereby producing balanced predictive distributions (more details in Sec. 3.4).

3.3 Constructing

To cover both desiderata, one needs to (1) produce balanced representations to improve performance for the data with minority labels and (2) produce probabilistic representations to naturally obtain reasonable uncertainty estimation for each model prediction. To learn such balanced probabilistic representations, we construct the encoder of our VIR (i.e., ) by (1) first encoding a data point into a probabilistic representation, (2) computing probabilistic statistics from neighboring representations (i.e., representations from data with similar regression labels), and (3) producing the final representations via probabilistic whitening and recoloring using the obtained statistics.

Intuition on Using Probabilistic Representation. DIR uses deterministic representations, with one vector as the final representation for each data point. In contrast, our VIR uses probabilistic representations, with one vector as the mean of the representation and another vector as the variance of the representation. Such dual representation is more robust to noise and therefore leads to better prediction performance. Therefore, We first encode each data point into a probabilistic representation. Note that this is in contrast to existing work [49] that uses deterministic representations. We assume that each encoding is a Gaussian distribution with parameters , which are generated from the last layer in the deep neural network.

From I.I.D. to Neighboring and Identically Distributed (N.I.D.). Typical VAE [25] is an unsupervised learning model that aims to learn a variational representation from latent space to reconstruct the original inputs under the I.I.D. assumption; that is, in VAE, the latent value (i.e., ) is generated from its own input . This I.I.D. assumption works well for data with majority labels, but significantly harms performance for data with minority labels. To address this problem, we replace the I.I.D. assumption with the N.I.D. assumption; specifically, VIR’s variational latent representations still follow Gaussian distributions (i.e., ), but these distributions will be first calibrated using data with neighboring labels. For a data point where is in the ’th bin, i.e., , we compute with the following four steps.

| (1) Mean and Covariance of Initial : | |||

| (2) Statistics of Bin ’s Statistics: | |||

| (3) Smoothed Statistics of Bin ’s Statistics: | |||

| (4) Mean and Covariance of Final : |

where the details of functions , , , and are described below.

(1) Function : From Deterministic to Probabilistic Statistics. Different from deterministic statistics in [49], our VIR’s encoder uses probabilistic statistics, i.e., statistics of statistics. Specifically, VIR treats as a distribution with the mean and covariance rather than a deterministic vector.

As a result, all the deterministic statistics for bin , , , , and are replaced by distributions with the means and covariances, , , , and , respectively (more details in the following three paragraphs on , , and ).

(2) Function : Statistics of the Current Bin ’s Statistics. In VIR, the deterministic overall mean for bin (with data points), , becomes the probabilistic overall mean, i.e., a distribution of with the mean and covariance (assuming diagonal covariance) as follows:

Similarly, the deterministic overall covariance for bin , , becomes the probabilistic overall covariance, i.e., a matrix-variate distribution [15] with the mean:

since and . Note that the covariance of , i.e., , involves computing the fourth-order moments, which is computationally prohibitive. Therefore in practice, we directly set to zero for simplicity; empirically we observe that such simplified treatment already achieves promising performance improvement upon the state of the art. More discussions on the idea of the hierarchical structure of the statistics of statistics for smoothing are in the Appendix.

(3) Function : Neighboring Data and Smoothed Statistics. Next, we can borrow data from neighboring label bins to compute the smoothed statistics of the current bin by applying a symmetric kernel (e.g., Gaussian, Laplacian, and Triangular kernels). Specifically, the probabilistic smoothed mean and covariance are (assuming diagonal covariance):

(4) Function : Probabilistic Whitening and Recoloring. We develop a probabilistic version of the whitening and re-coloring procedure in [39, 49]. Specifically, we produce the final probabilistic representation for each data point as:

| (2) |

During training, we keep updating the probabilistic overall statistics, , and the probabilistic smoothed statistics, , across different epochs. The probabilistic representation are then re-parameterized [25] into the final representation , and passed into the final layer (discussed in Sec. 3.4) to generate the prediction and uncertainty estimation. Note that the computation of statistics from multiple ’s is only needed during training. During testing, VIR directly uses these statistics and therefore does not need to re-compute them.

3.4 Constructing

Our VIR’s predictor predicts both the mean and variance for by first predicting the NIG distribution and then marginalizing out the latent variables. It is motivated by the following observations on label distribution smoothing (LDS) in [49] and deep evidental regression (DER) in [1], as well as intuitions on effective counts in conjugate distributions.

LDS’s Limitations in Our Probabilistic Imbalanced Regression Setting. The motivation of LDS [49] is that the empirical label distribution can not reflect the real label distribution in an imbalanced dataset with a continuous label space; consequently, reweighting methods for imbalanced regression fail due to these inaccurate label densities. By applying a smoothing kernel on the empirical label distribution, LDS tries to recover the effective label distribution, with which reweighting methods can obtain ‘better’ weights to improve imbalanced regression. However, in our probabilistic imbalanced regression, one needs to consider both (1) prediction accuracy for the data with minority labels and (2) uncertainty estimation for each model. Unfortunately, LDS only focuses on improving the accuracy, especially for the data with minority labels, and therefore does not provide uncertainty estimation, which is crucial to assess the predictions’ reliability.

DER’s Limitations in Our Probabilistic Imbalanced Regression Setting. In DER [1], the predicted labels with their corresponding uncertainties are represented by the approximate posterior parameters of the NIG distribution . A DER model is trained via minimizing the negative log-likelihood (NLL) of a Student-t distribution:

| (3) |

where . It is therefore nontrivial to properly incorporate a reweighting mechanism into the NLL. One straightforward approach is to directly reweight for different data points . However, this contradicts the formulation of NIG and often leads to poor performance, as we verify in Sec. 5.

Intuition of Pseudo-Counts for VIR. To properly incorporate different reweighting methods, our VIR relies on the intuition of pseudo-counts (pseudo-observations) in conjugate distributions [4]. Assuming Gaussian likelihood, the conjugate distributions would be an NIG distribution [4], i.e., , which means:

where is an inverse gamma distribution. With an NIG prior distribution , the posterior distribution of the NIG after observing real data points are:

| (4) |

where and . Here and can be interpreted as virtual observations, i.e., pseudo-counts or pseudo-observations that contribute to the posterior distribution. Overall, the mean of posterior distribution above can be interpreted as an estimation from observations, with virtual observations and real observations. Similarly, the variance can be interpreted an estimation from observations. This intuition is crucial in developing our VIR’s predictor.

From Pseudo-Counts to Balanced Predictive Distributions. Based on the intuition above, we construct our predictor (i.e., ) by (1) generating the parameters in the posterior distribution of NIG, (2) computing re-weighted parameters by imposing the importance weights obtained from LDS, and (3) producing the final prediction with corresponding uncertainty estimation.

Based on Eqn. 4, we feed the final representation generated from the Sec. 3.3 (Eqn. 2) into a linear layer to output the intermediate parameters for data point :

We then apply the importance weights calculated from the smoothed label distribution to the pseudo-count to produce the re-weighted parameters of posterior distribution of NIG, where denotes the marginal distribution of . Along with the pre-defined prior parameters , we are able to compute the parameters of posterior distribution for :

Based on the NIG posterior distribution, we can then compute final prediction and uncertainty estimation as

We use an objective function similar to Eqn. 3, but with different definitions of , to optimize our VIR model:

| (5) |

where . Note that is part of the ELBO in Eqn. 1. Similar to [1], we use an additional regularization term to achieve better accuracy :

and together constitute the objective function for learning the predictor .

Table 1: Accuracy on AgeDB-DIR. Metrics MAE GM Shot all many medium few all many medium few Vanilla [49] 7.77 6.62 9.55 13.67 5.05 4.23 7.01 10.75 VAE [25] 7.63 6.58 9.21 13.45 4.86 4.11 6.61 10.24 Deep Ens. [27] 7.73 6.62 9.37 13.90 4.87 4.37 6.50 11.35 Infer Noise [29] 8.53 7.62 9.73 13.82 5.57 4.95 6.58 10.86 SmoteR [40] 8.16 7.39 8.65 12.28 5.21 4.65 5.69 8.49 SMOGN [5] 8.26 7.64 9.01 12.09 5.36 4.9 6.19 8.44 SQInv [49] 7.81 7.16 8.80 11.2 4.99 4.57 5.73 7.77 DER [1] 8.09 7.31 8.99 12.66 5.19 4.59 6.43 10.49 LDS [49] 7.67 6.98 8.86 10.89 4.85 4.39 5.8 7.45 FDS [49] 7.69 7.10 8.86 9.98 4.83 4.41 5.97 6.29 LDS + FDS [49] 7.55 7.01 8.24 10.79 4.72 4.36 5.45 6.79 RANKSIM [13] 7.02 6.49 7.84 9.68 4.53 4.13 5.37 6.89 LDS + FDS + DER [1] 8.18 7.44 9.52 11.45 5.30 4.75 6.74 7.68 VIR (Ours) 6.99 6.39 7.47 9.51 4.41 4.07 5.05 6.23 Ours vs. Vanilla +0.78 +0.23 +2.08 +4.16 +0.64 +0.16 +1.96 +4.52 Ours vs. Infer Noise +1.54 +1.23 +2.26 +4.31 +1.16 +0.88 +1.53 +4.63 Ours vs. DER +1.10 +0.92 +1.52 +3.15 +0.78 +0.52 +1.38 +4.26 Ours vs. LDS + FDS +0.56 +0.62 +0.77 +1.28 +0.31 +0.29 +0.40 +0.56 Ours vs. RANKSIM +0.03 +0.10 +0.37 +0.17 +0.12 +0.06 +0.32 +0.66

Table 2: Accuracy on IW-DIR. Metrics MAE GM Shot All Many Medium Few All Many Medium Few Vanilla [49] 8.06 7.23 15.12 26.33 4.57 4.17 10.59 20.46 VAE [25] 8.04 7.20 15.05 26.30 4.57 4.22 10.56 20.72 Deep Ens. [27] 8.08 7.31 15.09 26.47 4.59 4.26 10.61 21.13 Infer Noise [29] 8.11 7.36 15.23 26.29 4.68 4.33 10.65 20.31 SmoteR [40] 8.14 7.42 14.15 25.28 4.64 4.30 9.05 19.46 SMOGN [5] 8.03 7.30 14.02 25.93 4.63 4.30 8.74 20.12 SQInv [49] 7.87 7.24 12.44 22.76 4.47 4.22 7.25 15.10 DER [1] 7.85 7.18 13.35 24.12 4.47 4.18 8.18 15.18 LDS [49] 7.83 7.31 12.43 22.51 4.42 4.19 7.00 13.94 FDS [49] 7.83 7.23 12.60 22.37 4.42 4.20 6.93 13.48 LDS + FDS [49] 7.78 7.20 12.61 22.19 4.37 4.12 7.39 12.61 RANKSIM [13] 7.50 6.93 12.09 21.68 4.19 3.97 6.65 13.28 LDS + FDS + DER [1] 7.24 6.64 11.87 23.44 3.93 3.69 6.64 16.00 VIR (Ours) 7.19 6.56 11.81 20.96 3.85 3.63 6.51 12.23 Ours vs. Vanilla +0.87 +0.67 +3.31 +5.37 +0.72 +0.54 +4.08 +8.23 Ours vs. Infer Noise +0.92 +0.80 +3.42 +5.33 +0.83 +0.70 +4.14 +8.08 Ours vs. DER +0.66 +0.62 +1.54 +3.16 +0.62 +0.55 +1.67 +2.95 Ours vs. LDS + FDS +0.59 +0.64 +0.8 +1.23 +0.52 +0.49 +0.88 +0.38 Ours vs. RANKSIM +0.31 +0.37 +0.28 +0.72 +0.34 +0.34 +0.14 +1.05

3.5 Final Objective Function

4 Theory

Notation. As mentioned in Sec. 3.1, we partitioned into equal-interval bins (denote the set of bins as ), and are sampled from the label space . In addition, We use the binary set to represent the label distribution (frequency) for each bin , i.e., . We also use the binary set to represent whether the data point is observed (i.e., , and ), where represents the bin which belongs to. For each bin , we denote the associated set of data points as

When the imbalanced dataset is partially observed, we denote the observation set as:

Definition 4.1 (Expectation over Observability ).

We define the expectation over the observability variable as .

Definition 4.2 (True Risk).

Based on the previous definitions, the true risk is defined as:

where refers to some loss function (e.g. MAE, MSE). In this paper, we assume the loss is upper bounded by , i.e., .

Below we define the Naive Estimator.

Definition 4.3 (Naive Estimator).

Given the observation set, the Naive Estimator is defined as:

It is easy to verify that the expectation of this naive estimator is not equal to the true risk, as .

Considering an imbalanced dataset as a subset of observations from a balanced one, we contrast it with the Inverse Propensity Score (IPS) estimator [34].

Definition 4.4 (Inverse Propensity Score Estimator).

The inverse propensity score (IPS) estimator (an unbiased estimator) is defined as

The IPS estimator is an unbiased estimator, as we can verify by taking the expectation value over the observation set:

Finally, we define our VIR/DIR estimator below.

Definition 4.5 (VIR Estimator).

The VIR estimator, denoted by , is defined as:

| (6) |

where represents the smoothed label distribution used in our VIR’s objective function. It is important to note that our VIR estimator is biased.

For multiple predictions, we select the “best” estimator according to the following definition.

Definition 4.6 (Empirical Risk Minimizer).

For a given hypothesis space of predictions , the Empirical Risk Minimization (ERM) identifies the prediction as

With all the aforementioned definitions, we can derive the generalization bound for the VIR estimator.

Theorem 4.1 (Generalization Bound of VIR).

In imbalanced regression with bins , for any finite hypothesis space of predictions , the transductive prediction error of the empirical risk minimizer using the VIR estimator with estimated propensities () and given training observations from with propensities , is bounded by:

| (7) |

Remark. The naive estimator (i.e., Definition 4.3) has large bias and large variance. If one directly uses the original label distribution in the training objective (i.e., Definition 4.4), i.e., , the “bias” term will be . However, the “variance” term will be extremely large for minority data because is very close to . In contrast, under VIR’s N.I.D., used in the training objective function will be smoothed. Therefore, the minority data’s label density will be smoothed out by its neighbors and becomes larger (compared to the original ), leading to smaller “variance” in the generalization error bound. Note that , VIR (with N.I.D.) essentially increases bias, but significantly reduces its variance in the imbalanced setting, thereby leading to a lower generalization error.

5 Experiments

Datasets. We evaluate our methods in terms of prediction accuracy and uncertainty estimation on four imbalanced datasets111Among the five datasets proposed in [49], only four of them are publicly available., AgeDB-DIR [30], IMDB-WIKI-DIR [33], STS-B-DIR [7], and NYUD2-DIR [35]. Due to page limit, results for NYUD2-DIR [35] are moved to the Appendix. We follow the preprocessing procedures in DIR [49]. Details for each datasets are in the Appendix, and please refer to [49] for details on label density distributions and levels of imbalance.

Baselines. We use ResNet-50 [17] (for AgeDB-DIR and IMDB-WIKI-DIR) and BiLSTM [19] (for STS-B-DIR) as our backbone networks, and more details for baseline are in the Appendix. we describe the baselines below.

-

•

Vanilla: We use the term VANILLA to denote a plain model without adding any approaches.

- •

-

•

Cost-Sensitive Reweighting: As shown in DIR [49], the square-root weighting variant (SQINV) baseline (i.e., ) always outperforms Vanilla. Therefore, for fair comparison, all our experiments (for both baselines and VIR) use SQINV weighting.

Evaluation Metrics. We follow the evaluation metrics in [49] to evaluate the accuracy of our proposed methods; these include Mean Absolute Error (MAE), Mean Squared Error (MSE). Furthermore, for AgeDB-DIR and IMDB-WIKI-DIR, we use Geometric Mean (GM) to evaluate the accuracy; for STS-B-DIR, we use Pearson correlation and Spearman correlation. We use typical evaluation metrics for uncertainty estimation in regression problems to evaluate our produced uncertainty estimation; these include Negative Log Likelihood (NLL), Area Under Sparsification Error (AUSE). Eqn. 5 shows the formula for NLL, and more details regarding to AUSE can be found in [21]. We also include calibrated uncertainty results for VIR in the Appendix.

| Metrics | MSE | Pearson | ||||||

|---|---|---|---|---|---|---|---|---|

| Shot | All | Many | Medium | Few | All | Many | Medium | Few |

| Vanilla [49] | 0.974 | 0.851 | 1.520 | 0.984 | 0.742 | 0.720 | 0.627 | 0.752 |

| VAE [25] | 0.968 | 0.833 | 1.511 | 1.102 | 0.751 | 0.724 | 0.621 | 0.749 |

| Deep Ens. [27] | 0.972 | 0.846 | 1.496 | 1.032 | 0.746 | 0.723 | 0.619 | 0.750 |

| Infer Noise [29] | 0.954 | 0.980 | 1.408 | 0.967 | 0.747 | 0.711 | 0.631 | 0.756 |

| SmoteR [40] | 1.046 | 0.924 | 1.542 | 1.154 | 0.726 | 0.693 | 0.653 | 0.706 |

| SMOGN [5] | 0.990 | 0.896 | 1.327 | 1.175 | 0.732 | 0.704 | 0.655 | 0.692 |

| Inv [49] | 1.005 | 0.894 | 1.482 | 1.046 | 0.728 | 0.703 | 0.625 | 0.732 |

| DER [1] | 1.001 | 0.912 | 1.368 | 1.055 | 0.732 | 0.711 | 0.646 | 0.742 |

| LDS [49] | 0.914 | 0.819 | 1.319 | 0.955 | 0.756 | 0.734 | 0.638 | 0.762 |

| FDS [49] | 0.927 | 0.851 | 1.225 | 1.012 | 0.750 | 0.724 | 0.667 | 0.742 |

| LDS + FDS [49] | 0.907 | 0.802 | 1.363 | 0.942 | 0.760 | 0.740 | 0.652 | 0.766 |

| RANKSIM [13] | 0.903 | 0.908 | 0.911 | 0.804 | 0.758 | 0.706 | 0.690 | 0.827 |

| LDS + FDS + DER [1] | 1.007 | 0.880 | 1.535 | 1.086 | 0.729 | 0.714 | 0.635 | 0.731 |

| VIR (Ours) | 0.892 | 0.795 | 0.899 | 0.781 | 0.776 | 0.752 | 0.696 | 0.845 |

| Ours vs. Vanilla | +0.082 | +0.056 | +0.621 | +0.203 | +0.034 | +0.032 | +0.069 | +0.093 |

| Ours vs. Infer Noise | +0.062 | +0.185 | +0.509 | +0.186 | +0.029 | +0.041 | +0.065 | +0.089 |

| Ours vs. DER | +0.109 | +0.117 | +0.469 | +0.274 | +0.044 | +0.041 | +0.050 | +0.103 |

| Ours vs. LDS + FDS | +0.015 | +0.007 | +0.464 | +0.161 | +0.016 | +0.012 | +0.044 | +0.079 |

| Ours vs. RANKSIM | +0.011 | +0.113 | +0.012 | +0.023 | +0.018 | +0.046 | +0.006 | +0.018 |

Evaluation Process. Following [28, 49], for a data sample with its label which falls into the target bins , we divide the label space into three disjoint subsets: many-shot region , medium-shot region , and few-shot region , where denotes the cardinality of the set. We report results on the overall test set and these subsets with the accuracy metrics and uncertainty metrics discussed above.

Implementation Details. We conducted five separate trials for our method using different random seeds. The error bars and other implementation details are included in the Appendix.

5.1 Imbalanced Regression Accuracy

We report the accuracy of different methods in Table 2, Table 2, and Table 3 for AgeDB-DIR, IMDB-WIKI-DIR and STS-B-DIR, respectively. In all the tables, we can observe that our VIR consistently outperforms all baselines in all metrics.

As shown in the last four rows of all three tables, our proposed VIR compares favorably against strong baselines including DIR variants [49] and DER [1], Infer Noise [29], and RankSim [13], especially on the imbalanced data samples (i.e., in the few-shot columns). Notably, VIR improves upon the state-of-the-art method RankSim by and on AgeDB-DIR and IMDB-WIKI-DIR, respectively, in terms of few-shot GM. This verifies the effectiveness of our methods in terms of overall performance. More accuracy results on different metrics are included in the Appendix. Besides the main results, we also include ablation studies for VIR in the Appendix, showing the effectiveness of VIR’s encoder and predictor.

5.2 Imbalanced Regression Uncertainty Estimation

Different from DIR [49] which only focuses on accuracy, we create a new benchmark for uncertainty estimation in imbalanced regression. Table 5, Table 5, and Table 6 show the results on uncertainty estimation for three datasets AgeDB-DIR, IMDB-WIKI-DIR, and STS-B-DIR, respectively. Note that most baselines from Table 2, Table 2, and Table 3 are deterministic methods (as opposed to probabilistic methods like ours) and cannot provide uncertainty estimation; therefore they are not applicable here. To show the superiority of our VIR model, we create a strongest baseline by concatenating the DIR variants (LDS + FDS) with the DER [1].

Results show that our VIR consistently outperforms all baselines across different metrics, especially in the few-shot metrics. Note that our proposed methods mainly focus on the imbalanced setting, and therefore naturally places more emphasis on the few-shot metrics. Notably, on AgeDB-DIR, IMDB-WIKI-DIR, and STS-B-DIR, our VIR improves upon the strongest baselines, by in terms of few-shot AUSE.

| Metrics | NLL | AUSE | ||||||

|---|---|---|---|---|---|---|---|---|

| Shot | All | Many | Med | Few | All | Many | Med | Few |

| Deep Ens. [27] | 5.311 | 4.031 | 6.726 | 8.523 | 0.541 | 0.626 | 0.466 | 0.483 |

| Infer Noise [29] | 4.616 | 4.413 | 4.866 | 5.842 | 0.465 | 0.458 | 0.457 | 0.496 |

| DER [1] | 3.918 | 3.741 | 3.919 | 4.432 | 0.523 | 0.464 | 0.449 | 0.486 |

| LDS + FDS + DER [1] | 3.787 | 3.689 | 3.912 | 4.234 | 0.451 | 0.460 | 0.399 | 0.565 |

| VIR (Ours) | 3.703 | 3.598 | 3.805 | 4.196 | 0.434 | 0.456 | 0.324 | 0.414 |

| Ours vs. DER | +0.215 | +0.143 | +0.114 | +0.236 | +0.089 | +0.008 | +0.125 | +0.072 |

| Ours vs. LDS + FDS + DER | +0.084 | +0.091 | +0.107 | +0.038 | +0.017 | +0.004 | +0.075 | +0.151 |

| Metrics | NLL | AUSE | ||||||

|---|---|---|---|---|---|---|---|---|

| Shot | All | Many | Medium | Few | All | Many | Medium | Few |

| Deep Ens. [27] | 5.219 | 4.102 | 7.123 | 8.852 | 0.846 | 0.862 | 0.745 | 0.718 |

| Infer Noise [29] | 4.231 | 4.078 | 5.326 | 8.292 | 0.732 | 0.728 | 0.561 | 0.478 |

| DER [1] | 3.850 | 3.699 | 4.997 | 6.638 | 0.813 | 0.802 | 0.650 | 0.541 |

| LDS + FDS + DER [1] | 3.683 | 3.602 | 4.391 | 5.697 | 0.784 | 0.670 | 0.459 | 0.483 |

| VIR (Ours) | 3.651 | 3.579 | 4.296 | 5.518 | 0.634 | 0.649 | 0.434 | 0.379 |

| Ours vs. DER | +0.199 | +0.120 | +0.701 | +1.120 | +0.179 | +0.153 | +0.216 | +0.162 |

| Ours vs. LDS + FDS + DER | +0.032 | +0.023 | +0.095 | +0.179 | +0.150 | +0.021 | +0.025 | +0.104 |

| Metrics | NLL | AUSE | ||||||

|---|---|---|---|---|---|---|---|---|

| Shot | All | Many | Medium | Few | All | Many | Medium | Few |

| Deep Ens. [27] | 3.913 | 3.911 | 4.223 | 4.106 | 0.709 | 0.621 | 0.676 | 0.663 |

| Infer Noise [29] | 3.748 | 3.753 | 3.755 | 3.688 | 0.673 | 0.631 | 0.644 | 0.639 |

| DER [1] | 2.667 | 2.601 | 3.013 | 2.401 | 0.682 | 0.583 | 0.613 | 0.624 |

| LDS + FDS + DER [1] | 2.561 | 2.514 | 2.880 | 2.358 | 0.672 | 0.581 | 0.609 | 0.615 |

| VIR (Ours) | 1.996 | 1.810 | 2.754 | 2.152 | 0.591 | 0.575 | 0.602 | 0.510 |

| Ours vs. DER | +0.671 | +0.791 | +0.259 | +0.249 | +0.091 | +0.008 | +0.011 | +0.114 |

| Ours vs. LDS + FDS + DER | +0.565 | +0.704 | +0.126 | +0.206 | +0.081 | +0.006 | +0.007 | +0.105 |

5.3 Limitations

Although our methods successfully improve both accuracy and uncertainty estimation on imbalanced regression, there are still several limitations. Exactly computing variance of the variances in Sec. 3.3 is challenging; we therefore resort to fixed variance as an approximation. Developing more accurate and efficient approximations would also be interesting future work.

6 Conclusion

We identify the problem of probabilistic deep imbalanced regression, which aims to both improve accuracy and obtain reasonable uncertainty estimation in imbalanced regression. We propose VIR, which can use any deep regression models as backbone networks. VIR borrows data with similar regression labels to produce the probabilistic representations and modulates the conjugate distributions to impose probabilistic reweighting on imbalanced data. Furthermore, we create new benchmarks with strong baselines for uncertainty estimation on imbalanced regression. Experiments show that our methods outperform state-of-the-art imbalanced regression models in terms of both accuracy and uncertainty estimation. Future work may include (1) improving VIR by better approximating variance of the variances in probability distributions, and (2) developing novel approaches that can achieve stable performance even on imbalanced data with limited sample size, and (3) exploring techniques such as mixture density networks [3] to enable multi-modality in the latent distribution, thereby further improving the performance.

Acknowledgement

The authors thank Pei Wu for help with figures, the reviewers/AC for the constructive comments to improve the paper, and Amazon Web Service for providing cloud computing credit. Most work is done when ZW is a master student at UMich. HW is partially supported by NSF Grant IIS-2127918 and an Amazon Faculty Research Award. The views and conclusions contained herein are those of the authors and should not be interpreted as necessarily representing the official policies, either expressed or implied, of the sponsors.

References

- [1] A. Amini, W. Schwarting, A. Soleimany, and D. Rus. Deep evidential regression. Advances in Neural Information Processing Systems, 33:14927–14937, 2020.

- [2] J. Bergstra and Y. Bengio. Random search for hyper-parameter optimization. Journal of machine learning research, 13(2), 2012.

- [3] C. M. Bishop. Mixture density networks. 1994.

- [4] C. M. Bishop. Pattern recognition and machine learning. springer, 2006.

- [5] P. Branco, L. Torgo, and R. P. Ribeiro. Smogn: a pre-processing approach for imbalanced regression. In First international workshop on learning with imbalanced domains: Theory and applications, pages 36–50. PMLR, 2017.

- [6] P. Branco, L. Torgo, and R. P. Ribeiro. Rebagg: Resampled bagging for imbalanced regression. In Second International Workshop on Learning with Imbalanced Domains: Theory and Applications, pages 67–81. PMLR, 2018.

- [7] D. Cer, M. Diab, E. Agirre, I. Lopez-Gazpio, and L. Specia. Semeval-2017 task 1: Semantic textual similarity multilingual and crosslingual focused evaluation. In Proceedings of the 11th International Workshop on Semantic Evaluation, pages 1–14, 2017.

- [8] B. Charpentier, O. Borchert, D. Zügner, S. Geisler, and S. Günnemann. Natural posterior network: Deep bayesian predictive uncertainty for exponential family distributions. In The Tenth International Conference on Learning Representations, ICLR 2022, Virtual Event, April 25-29, 2022. OpenReview.net, 2022.

- [9] N. V. Chawla, K. W. Bowyer, L. O. Hall, and W. P. Kegelmeyer. Smote: synthetic minority over-sampling technique. Journal of artificial intelligence research, 16:321–357, 2002.

- [10] T. Chen, S. Kornblith, M. Norouzi, and G. Hinton. A simple framework for contrastive learning of visual representations. In International conference on machine learning, pages 1597–1607. PMLR, 2020.

- [11] Z. Deng, H. Liu, Y. Wang, C. Wang, Z. Yu, and X. Sun. PML: progressive margin loss for long-tailed age classification. In IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2021, virtual, June 19-25, 2021, 2021.

- [12] Y. Gal and Z. Ghahramani. Dropout as a bayesian approximation: Representing model uncertainty in deep learning. In Proceedings of the 33nd International Conference on Machine Learning, ICML 2016, New York City, NY, USA, June 19-24, 2016, volume 48 of JMLR Workshop and Conference Proceedings, pages 1050–1059. JMLR.org, 2016.

- [13] Y. Gong, G. Mori, and F. Tung. Ranksim: Ranking similarity regularization for deep imbalanced regression. In International Conference on Machine Learning, ICML 2022, 17-23 July 2022, Baltimore, Maryland, USA, volume 162 of Proceedings of Machine Learning Research, pages 7634–7649. PMLR, 2022.

- [14] C. Guo, G. Pleiss, Y. Sun, and K. Q. Weinberger. On calibration of modern neural networks. In International conference on machine learning, pages 1321–1330. PMLR, 2017.

- [15] A. K. Gupta and D. K. Nagar. Matrix variate distributions, volume 104. CRC Press, 2018.

- [16] S. Gupta, H. Wang, Z. Lipton, and Y. Wang. Correcting exposure bias for link recommendation. In ICML, 2021.

- [17] K. He, X. Zhang, S. Ren, and J. Sun. Deep residual learning for image recognition. In 2016 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2016, Las Vegas, NV, USA, June 27-30, 2016, pages 770–778. IEEE Computer Society, 2016.

- [18] J. Heiss, J. Weissteiner, H. Wutte, S. Seuken, and J. Teichmann. Nomu: Neural optimization-based model uncertainty. arXiv preprint arXiv:2102.13640, 2021.

- [19] Z. Huang, W. Xu, and K. Yu. Bidirectional LSTM-CRF models for sequence tagging. CoRR, 2015.

- [20] E. Hüllermeier and W. Waegeman. Aleatoric and epistemic uncertainty in machine learning: A tutorial introduction. CoRR, abs/1910.09457, 2019.

- [21] E. Ilg, Ö. cCiccek, S. Galesso, A. Klein, O. Makansi, F. Hutter, and T. Brox. Uncertainty estimates and multi-hypotheses networks for optical flow. In Computer Vision - ECCV 2018 - 15th European Conference, Munich, Germany, September 8-14, 2018, Proceedings, Part VII, volume 11211 of Lecture Notes in Computer Science, pages 677–693. Springer, 2018.

- [22] H. Jiang, P. He, W. Chen, X. Liu, J. Gao, and T. Zhao. SMART: robust and efficient fine-tuning for pre-trained natural language models through principled regularized optimization. In D. Jurafsky, J. Chai, N. Schluter, and J. R. Tetreault, editors, Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, ACL 2020, Online, July 5-10, 2020, pages 2177–2190. Association for Computational Linguistics, 2020.

- [23] A. Kendall and Y. Gal. What uncertainties do we need in bayesian deep learning for computer vision? arXiv preprint arXiv:1703.04977, 2017.

- [24] D. P. Kingma and J. Ba. Adam: A method for stochastic optimization. In 3rd International Conference on Learning Representations, ICLR 2015, San Diego, CA, USA, May 7-9, 2015, Conference Track Proceedings, 2015.

- [25] D. P. Kingma and M. Welling. Auto-encoding variational bayes. In 2nd International Conference on Learning Representations, ICLR 2014, Banff, AB, Canada, April 14-16, 2014, Conference Track Proceedings, 2014.

- [26] V. Kuleshov, N. Fenner, and S. Ermon. Accurate uncertainties for deep learning using calibrated regression. In International Conference on Machine Learning, pages 2796–2804. PMLR, 2018.

- [27] B. Lakshminarayanan, A. Pritzel, and C. Blundell. Simple and scalable predictive uncertainty estimation using deep ensembles. In Advances in Neural Information Processing Systems 30: Annual Conference on Neural Information Processing Systems 2017, December 4-9, 2017, Long Beach, CA, USA, pages 6402–6413, 2017.

- [28] Z. Liu, Z. Miao, X. Zhan, J. Wang, B. Gong, and S. X. Yu. Large-scale long-tailed recognition in an open world. In IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2019, Long Beach, CA, USA, June 16-20, 2019, pages 2537–2546. Computer Vision Foundation / IEEE, 2019.

- [29] L. Mi, H. Wang, Y. Tian, and N. Shavit. Training-free uncertainty estimation for neural networks. In AAAI, 2022.

- [30] S. Moschoglou, A. Papaioannou, C. Sagonas, J. Deng, I. Kotsia, and S. Zafeiriou. Agedb: The first manually collected, in-the-wild age database. In 2017 IEEE Conference on Computer Vision and Pattern Recognition Workshops, CVPR Workshops 2017, Honolulu, HI, USA, July 21-26, 2017, pages 1997–2005, 2017.

- [31] J. Pennington, R. Socher, and C. D. Manning. Glove: Global vectors for word representation. In Proceedings of the 2014 conference on empirical methods in natural language processing (EMNLP), pages 1532–1543, 2014.

- [32] J. Ren, M. Zhang, C. Yu, and Z. Liu. Balanced mse for imbalanced visual regression. In IEEE/CVF Conference on Computer Vision and Pattern Recognition, CVPR 2022, New Orleans, LA, USA, June 18-24, 2022, pages 7916–7925. IEEE, 2022.

- [33] R. Rothe, R. Timofte, and L. V. Gool. Deep expectation of real and apparent age from a single image without facial landmarks. Int. J. Comput. Vis., 126(2-4):144–157, 2018.

- [34] T. Schnabel, A. Swaminathan, A. Singh, N. Chandak, and T. Joachims. Recommendations as treatments: Debiasing learning and evaluation. In international conference on machine learning, pages 1670–1679. PMLR, 2016.

- [35] N. Silberman, D. Hoiem, P. Kohli, and R. Fergus. Indoor segmentation and support inference from rgbd images. ECCV (5), 7576:746–760, 2012.

- [36] J. Snoek, Y. Ovadia, E. Fertig, B. Lakshminarayanan, S. Nowozin, D. Sculley, J. V. Dillon, J. Ren, and Z. Nado. Can you trust your model’s uncertainty? evaluating predictive uncertainty under dataset shift. In Advances in Neural Information Processing Systems 32: Annual Conference on Neural Information Processing Systems 2019, NeurIPS 2019, December 8-14, 2019, Vancouver, BC, Canada, pages 13969–13980, 2019.

- [37] H. Song, T. Diethe, M. Kull, and P. Flach. Distribution calibration for regression. In International Conference on Machine Learning, pages 5897–5906. PMLR, 2019.

- [38] M. Stadler, B. Charpentier, S. Geisler, D. Zügner, and S. Günnemann. Graph posterior network: Bayesian predictive uncertainty for node classification. In Advances in Neural Information Processing Systems 34: Annual Conference on Neural Information Processing Systems 2021, NeurIPS 2021, December 6-14, 2021, virtual, pages 18033–18048, 2021.

- [39] B. Sun, J. Feng, and K. Saenko. Return of frustratingly easy domain adaptation. In Proceedings of the Thirtieth AAAI Conference on Artificial Intelligence, February 12-17, 2016, Phoenix, Arizona, USA, pages 2058–2065. AAAI Press, 2016.

- [40] L. Torgo, R. P. Ribeiro, B. Pfahringer, and P. Branco. SMOTE for regression. In L. Correia, L. P. Reis, and J. Cascalho, editors, Progress in Artificial Intelligence - 16th Portuguese Conference on Artificial Intelligence, EPIA 2013, Angra do Heroísmo, Azores, Portugal, September 9-12, 2013. Proceedings, volume 8154 of Lecture Notes in Computer Science, pages 378–389. Springer, 2013.

- [41] A. Wang, A. Singh, J. Michael, F. Hill, O. Levy, and S. R. Bowman. Glue: A multi-task benchmark and analysis platform for natural language understanding. EMNLP 2018, page 353, 2018.

- [42] H. Wang, Y. Ma, H. Ding, and Y. Wang. Context uncertainty in contextual bandits with applications to recommender systems. In AAAI, 2022.

- [43] H. Wang, C. Mao, H. He, M. Zhao, T. S. Jaakkola, and D. Katabi. Bidirectional inference networks: A class of deep bayesian networks for health profiling. In AAAI, volume 33, pages 766–773, 2019.

- [44] H. Wang, S. Xingjian, and D.-Y. Yeung. Natural-parameter networks: A class of probabilistic neural networks. In NIPS, pages 118–126, 2016.

- [45] H. Wang and J. Yan. Self-interpretable time series prediction with counterfactual explanations. In ICML, 2023.

- [46] H. Wang and D.-Y. Yeung. Towards bayesian deep learning: A framework and some existing methods. TDKE, 28(12):3395–3408, 2016.

- [47] H. Wang and D.-Y. Yeung. A survey on bayesian deep learning. CSUR, 53(5):1–37, 2020.

- [48] Y. Yang, H. Wang, and D. Katabi. On multi-domain long-tailed recognition, generalization and beyond. In ECCV, 2022.

- [49] Y. Yang, K. Zha, Y. Chen, H. Wang, and D. Katabi. Delving into deep imbalanced regression. In International Conference on Machine Learning, pages 11842–11851. PMLR, 2021.

- [50] W. Yin, J. Zhang, O. Wang, S. Niklaus, L. Mai, S. Chen, and C. Shen. Learning to recover 3d scene shape from a single image. In IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2021, virtual, June 19-25, 2021, pages 204–213. Computer Vision Foundation / IEEE, 2021.

- [51] E. Zelikman, C. Healy, S. Zhou, and A. Avati. Crude: calibrating regression uncertainty distributions empirically. arXiv preprint arXiv:2005.12496, 2020.

- [52] Z. Zhang, A. Romero, M. J. Muckley, P. Vincent, L. Yang, and M. Drozdzal. Reducing uncertainty in undersampled mri reconstruction with active acquisition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 2049–2058, 2019.

Appendix A Theory

Notation. As defined in Sec.3 in main paper, we partitioned into equal-interval bins (denote the set of bins as ), and are sampled from the label space . In addition, We denote the binary set as the label distribution (frequency) for each bin, i.e., . We also denote the binary set to represent whether the data are observed (i.e., , and ), where represents the bin which belongs to. For each bin , we denote the global set of samples as

When the imbalanced dataset is partially observed, we denote the observation set as:

Definition A.1 (Expectation over Observability ).

We define the expectation over the observability variable as

Definition A.2 (True Risk).

Based on the previous definitions, the true risk for our model is defined as:

where refers to some loss function (e.g. MAE, MSE). In this paper we assume these loss is upper bounded by , i.e., .

Then in next step we define the Naive Estimator.

Definition A.3 (Naive Estimator).

Given the observation set, the Naive Estimator is defined as:

It is easy to verify that the expectation of this naive estimator is not equal to the true risk, as .

Considering an imbalanced dataset as a subset of observations from a balanced one, we contrast it with the Inverse Propensity Score (IPS) estimator [34], underscoring the superiorities of our approach.

Definition A.4 (Inverse Propensity Score Estimator).

The inverse propensity score (IPS) estimator (an unbiased estimator) is defined as

IPS estimator is an unbiased estimator, as we can verify by taking the expectation value over the observation set:

Finally, we define our VIR/DIR estimator below.

Definition A.5 (VIR Estimator).

The VIR estimator, denoted by , is defined as:

| (8) |

where represents the smoothed label distribution utilized in our VIR’s objective function (see Eqn.5 in the main paper). It’s important to note that our VIR estimator is biased.

For multiple predictions, we select the “best” estimator according to the following definition.

Definition A.6 (Empirical Risk Minimizer).

For a given hypothesis space of predictions , the Empirical Risk Minimization (ERM) identifies the prediction as

Lemma A.1 (Tail Bound for VIR Estimator).

For any given and , with probability , the VIR estimator does not deviate from its expectation by more than

Proof.

For independent bounded random variables that takes values in intervals of sizes with probability , and for any ,

Consider the error term for each bin in as . Using Hoeffding’s inequality, define and . Then, by setting , we are then able to show that:

We can then solve for , completing the proof. ∎

With all the aforementioned definitions, we can derive the generalization bound for the VIR estimator.

Theorem A.1 (Generalization Bound of VIR).

In imbalanced regression with bins , for any finite hypothesis space of predictions , the transductive prediction error of the empirical risk minimizer using the VIR estimator with estimated propensities () and given training observations from with propensities , is bounded by:

| (9) |

Proof.

We first re-state the generalization bound for our VIR estimator: with probability , we have

We start to prove it from the LHS:

Below we derive each term:

Variance Term. With probability , the variance term is derived as

| (10) | ||||

| (11) | ||||

where inequality (10) is by Boole’s inequality (Union bound), and inequality (11) holds by Lemma A.1. Then, by solving for , we can derive Variance Term that with probability ,

Bias Term. By definition, we can derive:

concluding the proof for the Bias Term, hence completing the proof for the whole generalization bound. ∎

| Metrics | MSE | MAE | GM | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Shot | All | Many | Medium | Few | All | Many | Medium | Few | All | Many | Medium | Few |

| Vanilla [49] | 101.60 | 78.40 | 138.52 | 253.74 | 7.77 | 6.62 | 9.55 | 13.67 | 5.05 | 4.23 | 7.01 | 10.75 |

| VAE [25] | 99.85 | 78.86 | 130.59 | 223.09 | 7.63 | 6.58 | 9.21 | 13.45 | 4.86 | 4.11 | 6.61 | 10.24 |

| Deep Ensemble [27] | 100.94 | 79.3 | 129.95 | 249.18 | 7.73 | 6.62 | 9.37 | 13.90 | 4.87 | 4.37 | 6.50 | 11.35 |

| Infer Noise [29] | 119.46 | 95.02 | 149.84 | 266.29 | 8.53 | 7.62 | 9.73 | 13.82 | 5.57 | 4.95 | 6.58 | 10.86 |

| SmoteR [40] | 114.34 | 93.35 | 129.89 | 244.57 | 8.16 | 7.39 | 8.65 | 12.28 | 5.21 | 4.65 | 5.69 | 8.49 |

| SMOGN [5] | 117.29 | 101.36 | 133.86 | 232.90 | 8.26 | 7.64 | 9.01 | 12.09 | 5.36 | 4.9 | 6.19 | 8.44 |

| SQInv [49] | 105.14 | 87.21 | 127.66 | 212.30 | 7.81 | 7.16 | 8.80 | 11.2 | 4.99 | 4.57 | 5.73 | 7.77 |

| DER [1] | 106.77 | 91.29 | 122.43 | 209.69 | 8.09 | 7.31 | 8.99 | 12.66 | 5.19 | 4.59 | 6.43 | 10.49 |

| LDS [49] | 102.22 | 83.62 | 128.73 | 204.64 | 7.67 | 6.98 | 8.86 | 10.89 | 4.85 | 4.39 | 5.8 | 7.45 |

| FDS [49] | 101.67 | 86.49 | 129.61 | 167.75 | 7.69 | 7.10 | 8.86 | 9.98 | 4.83 | 4.41 | 5.97 | 6.29 |

| LDS + FDS [49] | 99.46 | 84.10 | 112.20 | 209.27 | 7.55 | 7.01 | 8.24 | 10.79 | 4.72 | 4.36 | 5.45 | 6.79 |

| RANKSIM [13] | 83.51 | 71.99 | 99.14 | 149.05 | 7.02 | 6.49 | 7.84 | 9.68 | 4.53 | 4.13 | 5.37 | 6.89 |

| LDS + FDS + DER [1] | 112.62 | 94.21 | 140.03 | 210.72 | 8.18 | 7.44 | 9.52 | 11.45 | 5.30 | 4.75 | 6.74 | 7.68 |

| VIR (Ours) | 81.760.10 | 70.610.05 | 91.471.50 | 142.362.10 | 6.99 0.02 | 6.390.02 | 7.470.04 | 9.510.06 | 4.410.03 | 4.070.02 | 5.050.03 | 6.230.05 |

| Ours vs. Vanilla | +19.84 | +7.79 | +47.05 | +111.38 | +0.78 | +0.23 | +2.08 | +4.16 | +0.64 | +0.16 | +1.96 | +4.52 |

| Ours vs. Infer Noise | +37.70 | +24.41 | +58.37 | +123.93 | +1.54 | +1.23 | +2.26 | +4.31 | +1.16 | +0.88 | +1.53 | +4.63 |

| Ours vs. DER | +25.01 | +20.68 | +30.96 | +67.33 | +1.10 | +0.92 | +1.52 | +3.15 | +0.78 | +0.52 | +1.38 | +4.26 |

| Ours vs. LDS + FDS | +17.70 | +13.49 | +20.73 | +66.91 | +0.56 | +0.62 | +0.77 | +1.28 | +0.31 | +0.29 | +0.40 | +0.56 |

| Ours vs. RANKSIM | +1.75 | +1.38 | +7.67 | +6.69 | +0.03 | +0.10 | +0.37 | +0.17 | +0.12 | +0.06 | +0.32 | +0.66 |

| Metrics | MSE | MAE | GM | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Shot | All | Many | Medium | Few | All | Many | Medium | Few | All | Many | Medium | Few |

| Vanilla [49] | 138.06 | 108.70 | 366.09 | 964.92 | 8.06 | 7.23 | 15.12 | 26.33 | 4.57 | 4.17 | 10.59 | 20.46 |

| VAE [25] | 137.98 | 108.62 | 361.74 | 964.87 | 8.04 | 7.20 | 15.05 | 26.30 | 4.57 | 4.22 | 10.56 | 20.72 |

| Deep Ensemble [27] | 138.02 | 108.83 | 365.76 | 962.88 | 8.08 | 7.31 | 15.09 | 26.47 | 4.59 | 4.26 | 10.61 | 21.13 |

| Infer Noise [29] | 143.62 | 112.26 | 373.19 | 961.97 | 8.11 | 7.36 | 15.23 | 26.29 | 4.68 | 4.33 | 10.65 | 20.31 |

| SmoteR [40] | 138.75 | 111.55 | 346.09 | 935.89 | 8.14 | 7.42 | 14.15 | 25.28 | 4.64 | 4.30 | 9.05 | 19.46 |

| SMOGN [5] | 136.09 | 109.15 | 339.09 | 944.20 | 8.03 | 7.30 | 14.02 | 25.93 | 4.63 | 4.30 | 8.74 | 20.12 |

| SQInv [49] | 134.36 | 111.23 | 308.63 | 834.08 | 7.87 | 7.24 | 12.44 | 22.76 | 4.47 | 4.22 | 7.25 | 15.10 |

| DER [1] | 133.81 | 107.51 | 332.90 | 916.18 | 7.85 | 7.18 | 13.35 | 24.12 | 4.47 | 4.18 | 8.18 | 15.18 |

| LDS [49] | 131.65 | 109.04 | 298.98 | 829.35 | 7.83 | 7.31 | 12.43 | 22.51 | 4.42 | 4.19 | 7.00 | 13.94 |

| FDS [49] | 132.64 | 109.28 | 311.35 | 851.06 | 7.83 | 7.23 | 12.60 | 22.37 | 4.42 | 4.20 | 6.93 | 13.48 |

| LDS + FDS [49] | 129.35 | 106.52 | 311.49 | 811.82 | 7.78 | 7.20 | 12.61 | 22.19 | 4.37 | 4.12 | 7.39 | 12.61 |

| RANKSIM [13] | 125.30 | 102.68 | 299.10 | 777.48 | 7.50 | 6.93 | 12.09 | 21.68 | 4.19 | 3.97 | 6.65 | 13.28 |

| LDS + FDS + DER [1] | 120.86 | 97.75 | 297.64 | 873.10 | 7.24 | 6.64 | 11.87 | 23.44 | 3.93 | 3.69 | 6.64 | 16.00 |

| VIR (Ours) | 118.941.10 | 96.100.80 | 295.791.20 | 771.473.10 | 7.190.03 | 6.560.03 | 11.810.04 | 20.960.05 | 3.850.04 | 3.630.05 | 6.510.03 | 12.230.03 |

| Ours vs. Vanilla | +19.12 | +12.6 | +70.3 | +193.45 | +0.87 | +0.67 | +3.31 | +5.37 | +0.72 | +0.54 | +4.08 | +8.23 |

| Ours vs. Infer Noise | +24.68 | +16.16 | +77.40 | +190.50 | +0.92 | +0.80 | +3.42 | +5.33 | +0.83 | +0.70 | +4.14 | +8.08 |

| Ours vs. DER | +14.87 | +11.41 | +37.11 | +144.71 | +0.66 | +0.62 | +1.54 | +3.16 | +0.62 | +0.55 | +1.67 | +2.95 |

| Ours vs. LDS + FDS | +10.41 | +10.42 | +15.7 | +40.35 | +0.59 | +0.64 | +0.8 | +1.23 | +0.52 | +0.49 | +0.88 | +0.38 |

| Ours vs. RANKSIM | +6.36 | +6.58 | +3.31 | +6.01 | +0.31 | +0.37 | +0.28 | +0.72 | +0.34 | +0.34 | +0.14 | +1.05 |

| Metrics | MSE | MAE | Pearson | Spearman | ||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Shot | All | Many | Medium | Few | All | Many | Medium | Few | All | Many | Medium | Few | All | Many | Medium | Few |

| Vanilla [49] | 0.974 | 0.851 | 1.520 | 0.984 | 0.794 | 0.740 | 1.043 | 0.771 | 0.742 | 0.720 | 0.627 | 0.752 | 0.744 | 0.688 | 0.505 | 0.750 |

| VAE [25] | 0.968 | 0.833 | 1.511 | 1.102 | 0.782 | 0.721 | 1.040 | 0.767 | 0.751 | 0.724 | 0.621 | 0.749 | 0.752 | 0.674 | 0.501 | 0.743 |

| Deep Ensemble [27] | 0.972 | 0.846 | 1.496 | 1.032 | 0.791 | 0.723 | 1.096 | 0.792 | 0.746 | 0.723 | 0.619 | 0.750 | 0.741 | 0.689 | 0.501 | 0.746 |

| Infer Noise [29] | 0.954 | 0.980 | 1.408 | 0.967 | 0.795 | 0.745 | 0.977 | 0.741 | 0.747 | 0.711 | 0.631 | 0.756 | 0.742 | 0.681 | 0.508 | 0.753 |

| SmoteR [40] | 1.046 | 0.924 | 1.542 | 1.154 | 0.834 | 0.782 | 1.052 | 0.861 | 0.726 | 0.693 | 0.653 | 0.706 | 0.726 | 0.656 | 0.556 | 0.691 |

| SMOGN [5] | 0.990 | 0.896 | 1.327 | 1.175 | 0.798 | 0.755 | 0.967 | 0.848 | 0.732 | 0.704 | 0.655 | 0.692 | 0.732 | 0.670 | 0.551 | 0.670 |

| Inv [49] | 1.005 | 0.894 | 1.482 | 1.046 | 0.805 | 0.761 | 1.016 | 0.780 | 0.728 | 0.703 | 0.625 | 0.732 | 0.731 | 0.672 | 0.541 | 0.714 |

| DER [1] | 1.001 | 0.912 | 1.368 | 1.055 | 0.812 | 0.772 | 0.989 | 0.809 | 0.732 | 0.711 | 0.646 | 0.742 | 0.731 | 0.672 | 0.519 | 0.739 |

| LDS [49] | 0.914 | 0.819 | 1.319 | 0.955 | 0.773 | 0.729 | 0.970 | 0.772 | 0.756 | 0.734 | 0.638 | 0.762 | 0.761 | 0.704 | 0.556 | 0.743 |

| FDS [49] | 0.927 | 0.851 | 1.225 | 1.012 | 0.771 | 0.740 | 0.914 | 0.756 | 0.750 | 0.724 | 0.667 | 0.742 | 0.752 | 0.692 | 0.552 | 0.748 |

| LDS + FDS [49] | 0.907 | 0.802 | 1.363 | 0.942 | 0.766 | 0.718 | 0.986 | 0.755 | 0.760 | 0.740 | 0.652 | 0.766 | 0.764 | 0.707 | 0.549 | 0.749 |

| RANKSIM [13] | 0.903 | 0.908 | 0.911 | 0.804 | 0.761 | 0.759 | 0.786 | 0.712 | 0.758 | 0.706 | 0.690 | 0.827 | 0.758 | 0.673 | 0.493 | 0.849 |

| LDS + FDS + DER [1] | 1.007 | 0.880 | 1.535 | 1.086 | 0.812 | 0.757 | 1.046 | 0.842 | 0.729 | 0.714 | 0.635 | 0.731 | 0.730 | 0.680 | 0.526 | 0.699 |

| VIR (Ours) | 0.8920.002 | 0.7950.002 | 0.8990.002 | 0.7810.003 | 0.7400.002 | 0.7060.001 | 0.7790.002 | 0.7080.002 | 0.7760.004 | 0.7520.003 | 0.6960.005 | 0.8450.006 | 0.7750.003 | 0.7160.003 | 0.5860.005 | 0.8610.007 |

| Ours vs. Vanilla | +0.082 | +0.056 | +0.621 | +0.203 | +0.054 | +0.034 | +0.264 | +0.063 | +0.034 | +0.032 | +0.069 | +0.093 | +0.031 | +0.028 | +0.081 | +0.111 |

| Ours vs. Infer Noise | +0.062 | +0.185 | +0.509 | +0.186 | +0.055 | +0.039 | +0.198 | +0.033 | +0.029 | +0.041 | +0.065 | +0.089 | +0.033 | +0.035 | +0.078 | +0.108 |

| Ours vs. DER | +0.109 | +0.117 | +0.469 | +0.274 | +0.072 | +0.066 | +0.210 | +0.101 | +0.044 | +0.041 | +0.050 | +0.103 | +0.044 | +0.044 | +0.067 | +0.122 |

| Ours vs. LDS + FDS | +0.015 | +0.007 | +0.464 | +0.161 | +0.026 | +0.012 | +0.207 | +0.047 | +0.016 | +0.012 | +0.044 | +0.079 | +0.011 | +0.009 | +0.037 | +0.112 |

| Ours vs. RANKSIM | +0.011 | +0.113 | +0.012 | +0.023 | +0.021 | +0.053 | +0.007 | +0.004 | +0.018 | +0.046 | +0.006 | +0.018 | +0.017 | +0.043 | +0.093 | +0.012 |

| Metrics | RMSE | |||||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Shot | All | Many | Medium | Few | All | Many | Medium | Few | All | Many | Medium | Few | All | Many | Medium | Few | All | Many | Medium | Few |

| Vanilla [49] | 1.477 | 0.591 | 0.952 | 2.123 | 0.086 | 0.066 | 0.082 | 0.107 | 0.677 | 0.777 | 0.693 | 0.570 | 0.899 | 0.956 | 0.906 | 0.840 | 0.969 | 0.990 | 0.975 | 0.946 |

| VAE [25] | 1.483 | 0.596 | 0.949 | 2.131 | 0.084 | 0.062 | 0.079 | 0.110 | 0.675 | 0.774 | 0.693 | 0.575 | 0.894 | 0.951 | 0.906 | 0.846 | 0.963 | 0.982 | 0.976 | 0.951 |

| Deep Ensemble [27] | 1.479 | 0.595 | 0.954 | 2.126 | 0.091 | 0.067 | 0.082 | 0.109 | 0.678 | 0.782 | 0.702 | 0.583 | 0.906 | 0.961 | 0.912 | 0.851 | 0.972 | 0.993 | 0.981 | 0.956 |

| Infer Noise [29] | 1.480 | 0.594 | 0.959 | 2.125 | 0.088 | 0.069 | 0.089 | 0.111 | 0.672 | 0.768 | 0.688 | 0.566 | 0.894 | 0.949 | 0.902 | 0.834 | 0.963 | 0.983 | 0.970 | 0.941 |

| DER [1] | 1.483 | 0.615 | 0.961 | 2.142 | 0.098 | 0.089 | 0.091 | 0.110 | 0.597 | 0.647 | 0.657 | 0.525 | 0.880 | 0.904 | 0.894 | 0.851 | 0.964 | 0.974 | 0.959 | 0.955 |

| LDS [49] | 1.387 | 0.671 | 0.913 | 1.954 | 0.086 | 0.079 | 0.079 | 0.097 | 0.672 | 0.701 | 0.706 | 0.630 | 0.907 | 0.932 | 0.929 | 0.875 | 0.976 | 0.984 | 0.982 | 0.964 |

| FDS [49] | 1.442 | 0.615 | 0.940 | 2.059 | 0.084 | 0.069 | 0.080 | 0.101 | 0.681 | 0.760 | 0.695 | 0.596 | 0.903 | 0.952 | 0.918 | 0.849 | 0.975 | 0.989 | 0.976 | 0.960 |

| LDS + FDS [49] | 1.338 | 0.670 | 0.851 | 1.880 | 0.080 | 0.074 | 0.070 | 0.090 | 0.705 | 0.730 | 0.764 | 0.655 | 0.916 | 0.939 | 0.941 | 0.884 | 0.979 | 0.984 | 0.983 | 0.971 |

| LDS + FDS + DER [1] | 1.426 | 0.703 | 0.906 | 1.918 | 0.092 | 0.081 | 0.088 | 0.098 | 0.676 | 0.677 | 0.754 | 0.621 | 0.889 | 0.912 | 0.899 | 0.862 | 0.964 | 0.976 | 0.969 | 0.958 |

| VIR (Ours) | 1.305 | 0.589 | 0.831 | 1.749 | 0.075 | 0.060 | 0.064 | 0.082 | 0.722 | 0.781 | 0.793 | 0.688 | 0.929 | 0.966 | 0.961 | 0.910 | 0.985 | 0.993 | 0.989 | 0.979 |

| Ours vs. Vanilla | +0.172 | +0.002 | +0.121 | +0.374 | +0.011 | +0.006 | +0.018 | +0.025 | +0.045 | +0.004 | +0.100 | +0.118 | +0.003 | +0.010 | +0.055 | +0.070 | +0.016 | +0.003 | +0.014 | +0.033 |

| Ours vs. Infer Noise | +0.175 | +0.005 | +0.128 | +0.376 | +0.013 | +0.009 | +0.025 | +0.029 | +0.050 | +0.013 | +0.105 | +0.122 | +0.035 | +0.017 | +0.059 | +0.076 | +0.022 | +0.010 | +0.019 | +0.038 |

| Ours vs. DER | +0.178 | +0.026 | +0.130 | +0.393 | +0.023 | +0.029 | +0.027 | +0.028 | +0.125 | +0.134 | +0.136 | +0.163 | +0.049 | +0.062 | +0.067 | +0.059 | +0.021 | +0.019 | +0.030 | +0.024 |

| Ours vs. LDS + FDS | +0.033 | +0.081 | +0.020 | +0.131 | +0.005 | +0.014 | +0.006 | +0.008 | +0.017 | +0.051 | +0.029 | +0.033 | +0.013 | +0.027 | +0.020 | +0.026 | +0.006 | +0.009 | +0.006 | +0.008 |

| Metrics | NLL | AUSE | ||||||

|---|---|---|---|---|---|---|---|---|

| Shot | All | Many | Medium | Few | All | Many | Medium | Few |

| Deep Ensemble [27] | 5.054 | 3.640 | 3.856 | 5.335 | 0.782 | 0.658 | 0.604 | 0.583 |

| Infer Noise [29] | 4.542 | 3.120 | 3.634 | 5.028 | 0.764 | 0.643 | 0.566 | 0.408 |

| DER [1] | 4.169 | 2.913 | 3.011 | 4.777 | 0.713 | 0.623 | 0.535 | 0.382 |

| LDS + FDS + DER [1] | 4.175 | 2.987 | 2.976 | 4.686 | 0.715 | 0.629 | 0.511 | 0.366 |

| VIR (Ours) | 3.866 | 2.815 | 2.727 | 4.113 | 0.690 | 0.603 | 0.493 | 0.335 |

| Ours vs. DER | +0.303 | +0.098 | +0.284 | +0.664 | +0.023 | +0.020 | +0.042 | +0.047 |

| Ours vs. LDS + FDS + DER | +0.309 | +0.172 | +0.249 | +0.573 | +0.025 | +0.026 | +0.018 | +0.031 |

| Metrics | MSE | MAE | NLL | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Shot | All | Many | Medium | Few | All | Many | Medium | Few | All | Many | Medium | Few |

| 104.31 | 91.01 | 116.43 | 196.35 | 7.88 | 7.38 | 8.42 | 11.13 | 3.827 | 3.733 | 4.140 | 4.407 | |

| 104.10 | 87.28 | 128.26 | 196.12 | 7.83 | 7.21 | 8.81 | 10.89 | 3.848 | 3.738 | 4.041 | 4.356 | |

| 86.28 | 76.87 | 101.57 | 132.90 | 7.19 | 6.75 | 7.97 | 9.19 | 3.785 | 3.694 | 3.963 | 4.151 | |

| 86.86 | 76.58 | 99.95 | 147.82 | 7.12 | 6.69 | 7.72 | 9.59 | 3.887 | 3.797 | 4.007 | 4.401 | |

| 87.25 | 74.13 | 104.78 | 162.64 | 7.13 | 6.64 | 7.92 | 9.63 | 3.980 | 3.868 | 4.161 | 4.546 | |

| Metrics | Bins | MSE | MAE | GM | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Shot | # | All | Many | Med. | Few | All | Many | Med. | Few | All | Many | Med. | Few |

| Ranksim | 100 | 83.51 | 71.99 | 99.14 | 149.05 | 7.02 | 6.49 | 7.84 | 9.68 | 4.53 | 4.13 | 5.37 | 6.89 |

| VIR (Ours) | 100 | 81.76 | 70.61 | 91.47 | 142.36 | 6.99 | 6.39 | 7.47 | 9.51 | 4.41 | 4.07 | 5.05 | 6.23 |

| Ranksim | 33 | 109.45 | 91.78 | 128.10 | 187.13 | 7.46 | 6.94 | 8.42 | 10.66 | 5.13 | 4.70 | 5.23 | 8.21 |

| VIR (Ours) | 33 | 84.77 | 77.29 | 95.66 | 125.33 | 7.01 | 6.70 | 7.45 | 8.74 | 4.36 | 4.20 | 4.73 | 4.94 |

| Ranksim | 20 | 98.71 | 84.38 | 107.89 | 171.04 | 7.32 | 6.78 | 8.35 | 10.57 | 5.33 | 4.51 | 5.69 | 7.92 |

| VIR (Ours) | 20 | 84.05 | 72.12 | 100.49 | 151.25 | 7.06 | 6.50 | 7.90 | 10.06 | 4.49 | 4.05 | 5.34 | 7.28 |

Appendix B Details for Experiments

Datasets. In this work, we evaluate our methods in terms of prediction accuracy and uncertainty estimation on four imbalanced datasets222Among the five datasets proposed in [49], only four of them are publicly available., AgeDB [30], IMDB-WIKI [33], STS-B [7], and NYUD2-DIR [35]. Due to page limit, the results for NYUD2-DIR [35] are in the supplementary. We follow the preprocessing procedures in DIR [49]. Details for each datasets are to the Supplement, and details for label density distributions and levels of imbalance are discussed in DIR [49].

-

•

AgeDB-DIR: We use AgeDB-DIR constructed in DIR [49], which contains 12.2K images for training and 2.1K images for validation and testing. The maximum age in this dataset is 101 and the minimum age is 0, and the number of images per bin varies between 1 and 353.

-

•

IW-DIR: We use IMDB-WIKI-DIR (IW-DIR) constructed in DIR [49], which contains 191.5K training images and 11.0K validation and testing images. The maximum age is 186 and minimum age is 0; the maximum bin density is 7149, and minimum bin density is 1.

-

•

STS-B-DIR: We use STS-B-DIR constructed in DIR [49], which contains 5.2K pairs of training sentences and 1.0K pairs for validation and testing. This dataset is a collection of sentence pairs generated from news headlines, video captions, etc. Each pair is annotated by multiple annotators with a similarity score between 0 and 5.

-

•

NYUD2-DIR: We use NYUD2-DIR constructed in DIR [49], which contains 50K images for training and 654 images for testing, and to make the test set balanced 9357 test pixels for each bin are randomly selected. The depth maps have an upper bound of 10 meters, and we set the bin length as 0.1 meter.

Baselines. We use ResNet-50 [17] (for AgeDB-DIR, IMDB-WIKI-DIR and NYUD2-DIR) and BiLSTM [19] (for STS-B-DIR) as our backbone networks, and moredetails for baseline are in the supplement. we describe the baselines below.

-

•

Vanilla: We use the term VANILLA to denote a plain model without adding any approaches.

- •

-

•

Cost-Sensitive Reweighting: As shown in DIR [49], the square-root weighting variant (SQINV) baseline (i.e. ) always outperforms Vanilla. Therefore, for simplicity and fair comparison, all our experiments (for both baselines and VIR) use SQINV weighting. To use SQINV in VIR, one simply needs to use the symmetric kernel described in the Method section of the main paper. To use SQINV in DER, we replace the final layer in DIR [49] with the DER layer [1] to produce the predictive distributions.

Evaluation Process. Following [28, 49], for a data sample with its label which falls into the target bins , we divide the label space into three disjoint subsets: many-shot region , medium-shot region , and few-shot region , where denotes the cardinality of the set. We report results on the overall test set and these subsets with the accuracy metrics discussed above.

Implementation Details. We use ResNet-50 [17] for all experiments in AgeDB-DIR and IMDB-WIKI-DIR. For all the experiments in STS-B-DIR, we use 300-dimensional GloVe word embeddings (840B Common Crawl version) [31] (following [41]) and a two-layer, 1500-dimensional (per direction) BiLSTM [19] with max pooling to encode the paired sentences into independent vectors and , and then pass to a regressor. We use the Adam optimizer [24] to train all models for 100 epochs, with same learning rate and decay by 0.1 and the 60-th and 90-th epoch, respectively. In order to determine the optimal batch size for training, we try different batch sizes and corroborate the conclusion from [49], i.e., the optimal batch size is 256 when other hyperparameters are fixed. Therefore, we stick to the batch size of for all the experiments in the paper. We also use the same configuration as in DIR [49] for other hyperparameters.

We use PyTorch to implement our method. For fair comparison, we implemented a PyTorch version for the official TensorFlow implementation of DER[1]. To make sure we can obtain the reasonable uncertainty estimations, we restrict the range for to instead of in DER. Besides, in the activation function SoftPlus, we set the hyperparameter beta to 0.1. As discussed in the main paper, we implement a layer which produces the parameters . We assign as the minimum number for , and use the same hyperparameter settings for activation function for DER layer.

To search for a combination hyperparameters of prior distribution for NIG, we combine grid search method and random search method [2] to select the best hyperparameters. We first intuitively assign a value and a proper range with some step sizes which correspond to the hyperparameters, then, we apply grid search to search for the best combination for the hyperparameters on prior distributions. After locating a smaller range for each hyperparameters, we use random search to search for better combinations, if it exists. In the end, we find our best hyperparameter combinations for NIG prior distributions.

Appendix C Complete Results

We include the complete results for all the experiments in AgeDB-DIR, IMDB-WIKI-DIR, STS-B-DIR and NYUD2-DIR in Table 7, Table 8, Table 9 and Table 10. These results demonstrate the superiority of our methods. Note that we did not select to report the baseline for DIR + DEEP ENS. since in DER paper [1], it has been showed that DER is better than Deep Ensemble method, therefore we select to report the baseline DIR+DER rather than DIR + DEEP ENS..

Appendix D Discussions

D.1 Why We Need Bins

Throughout our method, we need to compute the statistics (i.e., the mean and variance) and the "statistics of statistics" of data points (Line 164-165); computing these statistics (e.g., the mean) requires a group of data points. Therefore, we need to partition the continuous label size into bins. For example, in the equations from Line 176-177, e.g., , we need to compute the statistics of bin , which contains data points in the bin.

It is also worth noting in the extreme case where (i) each data point has a different label and (ii) we use a very small bin size, each bin will then contain exactly only one data point.

D.2 Equal-Interval Bins versus Equal-Size Bins

Note that since our smoothing kernel function is based on labels (i.e., ), it is more reasonable to use equal-interval bins rather than equal-size bins.

-

•

For example, if we use the equal-interval bins , VIR will naturally compute for and .

-

•

In contrast, if we use equal-size bins, VIR may end up with large intervals and may lead to inaccurate kernel values for . To see this, consider a case where equal-size bins are ; the kernel value between bins and is , which is very inaccurate since is very far away from the mean of the bin (i.e., ). Using small and equal-interval bins can naturally address such issues.

D.3 The Number of Bins

Our preliminary results indicate that the performance of our VIR remains consistent regardless of the number of bins, as shown in the Sec. E.3 of the Supplement. Thus in our paper, we chose to use the same number of bins as the imbalanced regression literature [13, 49] for fair comparison with prior work. For example, in the AgeDB dataset where the regression labels are people’s "age" in the range of 0 99, we use 100 bins, with each year as one bin.

D.4 Reweighting Methods and Stronger Augmentations

Our method focus on reweighting methods, and using augmentation (e.g., the SimCLR pipeline [10]) is an orthogonal direction to our work. However, we expect that data augmentation could further improve our VIR’s performance. This is because one could perform data augmentation only on minority data to improve accuracy in the minority group, but this is sub-optimal; the reason is that one could potentially further perform data augmentation on majority data to improve accuracy in the majority group without sacrificing too much accuracy in the minority group. However, performing data augmentation on both minority and majority groups does not transform an imbalanced dataset to an balanced dataset. This is why our VIR is still necessary; VIR could be used on top of any data augmentation techniques to address the imbalance issue and further improve accuracy.

D.5 Discussion on I.I.D. and N.I.D. Assumptions

Generalization Error, Bias, and Variance. We could analyze the generalization error of our VIR by bounding the generalization with the sum of three terms: (a) the bias of our estimator, (2) the variance of our estimator, (3) model complexity. Essentially VIR uses the N.I.D. assumption increases our estimator’s bias, but significantly reduces its variance in the imbalanced setting. Since the model complexity is kept the same (using the same backbone neural network) as the baselines, N.I.D. will lead to a lower generalization error.

Variance of Estimators in Imbalanced Settings. In the imbalanced setting, one typically use inverse weighting (i.e., the IPS estimator in Definition A.4) to produced an unbiased estimator (i.e., making the first term of the aforementioned bound zero). However, for data with extremely low density, its inverse would be extremely large, therefore leading to a very large variance for the estimator. Our VIR replaces I.I.D. with N.I.D. to “smooth out” such singularity, and therefore significantly lowers the variance of the estimator (i.e., making the second term of the aforementioned bound smaller), and ultimately lowers the generalization error.

D.6 Why We Need Statistics of Statistics for Smoothing

Compared with DIR [49], which only considers the statistics for deterministic representations, our VIR considers the statistics of statistics for probabilistic representations, this is because the requirement to perform feature smoothing to get the representation necessitates the computation of mean and variance of ’s neighboring data (i.e., data with neighboring labels). Here contains the statistics of neighboring data. In contrast, our VIR also needs to generate uncertainty estimation, which requires a stochastic representation for , e.g., the mean and variance of (note that itself is already a form of statistics). This motivates the hierarchical structure of the statistics of statistics. Here the variance measures the uncertainty of the representation.

D.7 The Choice of Kernel Function

The DIR paper shows that a simple Gaussian kernel with inverse square-root weighting (i.e., SQINV) achieves the best performance. Therefore, we use exactly the same parameter configuration as the DIR paper [49]. Specifically, we set ; for label in bin , we define neighboring labels as labels such that , i.e., contains bins. For example, if , its neighboring labels are , , , , and .

Besides, our preliminary results also show that the performance is not very sensitive to the choice of kernels, as long as the kernel reflects the distance between and , i.e., larger distance between and leads to smaller .

D.8 Why VIR Solves the Imbalanced Regression Problem

Our training objective function (Eqn.5 in the main paper) is the negative log likelihood for the Normal Inverse Gaussian (NIG) distribution, and each posterior parameter () of the NIG distribution is reweighted by importance weights, thereby assigning higher weights to minority data during training and allowing minority data points to benefit more from their neighboring information.

Take as an example. Assume a minority data point that belongs to bin , i.e., its label . Note that there is a loss term in Eqn.5, where is the model prediction, is the label, and is the importance weight for this data point.

Here where represents the pseudo-count for the NIG distribution. Since is a minority data point, data from its neighboring bins has smaller frequency and therefore smaller , leading to a larger importance weight for this minority data point in Eqn.5.

This allows VIR to naturally put more focus on the minority data, thereby alleviating the imbalance problem.

D.9 Difference from DIR, VAE and DER

From a technical perspective, VIR is substantially different from any combinations of DIR [49], VAE [25], and DER [1]. Specifically,

-

•

VIR is a deep generative model to define how imbalanced data are generated, which is learned by a principled variational inference algorithm. In contrast, DIR is a simply discriminative model (without any principled generative model formulation) that directly predict the labels from input. It is more prone to overfitting.

-

•

DIR uses deterministic representations, with one vector as the final representation for each data point. In contrast, our VIR uses probabilistic representations, with one vector as the mean of the representation and another vector as the variance of the representation. Such dual representation is more robust to noise and therefore leads to better prediction performance.

-

•

DIR is a deterministic model, while our VIR is a Bayesian model. Essentially VIR is equivalent to sampling infinitely many predictions for each input data point and averaging these predictions. Therefore intuitively it makes sense that VIR could lead to better prediction performance.

-

•

Different from VAE and DIR, VIR introduces a reweighting mechanism naturally through the pseudo-count formulation in the NIG distribution (discussed in the paragraphs Intuition of Pseudo-Counts for VIR and From Pseudo-Counts to Balanced Predictive Distribution in the paper). Note that such a reweighting mechanism is more natural and powerful than DIR since it is rooted in the probabilistic formulation.

-

•