VectorFlow: Combining Images and Vectors for Traffic Occupancy and Flow Prediction

Abstract

Predicting future behaviors of road agents is a key task in autonomous driving. While existing models have demonstrated great success in predicting marginal agent future behaviors, it remains a challenge to efficiently predict consistent joint behaviors of multiple agents. Recently, the occupancy flow fields representation was proposed to represent joint future states of road agents through a combination of occupancy grid and flow, which supports efficient and consistent joint predictions. In this work, we propose a novel occupancy flow fields predictor to produce accurate occupancy and flow predictions, by combining the power of an image encoder that learns features from a rasterized traffic image and a vector encoder that captures information of continuous agent trajectories and map states. The two encoded features are fused by multiple attention modules before generating final predictions. Our simple but effective model ranks 3rd place on the Waymo Open Dataset Occupancy and Flow Prediction Challenge, and achieves the best performance in the occluded occupancy and flow prediction task.

1 Introduction

Behavior prediction is a key task in autonomous driving, as it enables autonomous vehicles to interact with other road agents in crowded scenes. Many existing work focuses on predicting accurate marginal agent trajectories [1, 2, 3, 4], yet they do not account for agent interactions in the future and could lead to inconsistent predictions [5]. On the other hand, predicting joint trajectories of multiple agents remains an open challenge, as the number of future trajectory combinations grows exponentially with the number of agents. Despite recent progress toward joint trajectory prediction [5, 6], it remains an open question on predicting joint behaviors in an efficient and scalable way to meet the latency requirements for real-time deployment in crowded urban scenes [7].

Recently, the occupancy flow fields representation [8] was introduced to overcome the shortcomings of the traditional trajectory representation for behavior prediction of multiple agents. This new representation is a spatio-temporal grid, where each grid cell includes i) the probability of the cell occupied by any agent and ii) the flow representing the motion of the agents that occupy the cell. It offers better efficiency and scalability, as the computational complexity of predicting occupancy flow fields is independent of the number of road agents in the scene [7].

In this work, we propose a novel occupancy flow fields predictor by exploring the benefit of combining vectorized and rasterized representations as the input to the predictor. Both representations have demonstrated great success in trajectory prediction benchmarks, yet few models combine them together. Our proposed model, VectorFlow, is a simple but effective approach that fuses both vectorized and rasterized representations of traffic context through attention to predict occupancy flow fields for both observed agents and occluded agents.

Experimental results show that VectorFlow achieves state-of-the-art performance in the occluded occupancy and flow prediction task, and ranks 3rd place on the Waymo Open Dataset Occupancy and Flow Prediction Challenge.

2 Problem Formulation

In this work, we use the following problem setup111More detail on the data format can be found at: https://waymo.com/open/challenges/2022/occupancy-flow-prediction-challenge/: Given one-second history of traffic agents in a scene and the scene context such as map coordinates [9], the objective is to predict i) future observed occupancy, ii) future occluded occupancy, and iii) future flow of all the vehicles in a scene over the next 8 waypoints, where each waypoint covers a one-second interval.

2.1 Data Processing

We process the input into a rasterized image and a set of vectors. To obtain the image, we create a rasterized grid at each time step in the past given the observed agent trajectories and map data, with respect to the local coordinate of the self-driving car (SDC)222More detail can be found at https://github.com/waymo-research/waymo-open-dataset/blob/master/tutorial/tutorial_occupancy_flow.ipynb. To obtain the vectorized input that is consistent with the rasterized image, we follow the same transformation by rotating and shifting the input agent and map coordinates with respect to SDC’s local view.

3 Model

In this section, we provide a brief overview of VectorFlow, which adopts a standard encoder-decoder architecture. More implementation detail can be found in Sec. 4.

3.1 Encoder

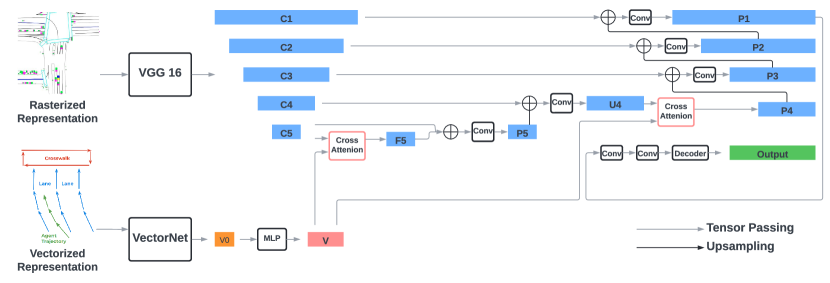

The encoder includes two parts: a VGG-16 model [10] that encodes the rasterized representation, and a VectorNet [1] model that encodes the vectorized representation. We fuse the vectorized features with the features of the last two stages of VGG-16 by cross-attention modules. The fused features are upsampled to the original resolution as the input rasterized features by an FPN-style network. More detail can be found in Fig. 1 and Sec. 4.3.

3.2 Decoder

The decoder is a single 2D convolution layer that maps the output of the encoder to the occupancy flow fields prediction, which includes a sequence of 8 grid maps representing the predicted occupancy and flow at each time step over the next 8 seconds.

3.3 Loss

We follow the loss function as in [8], including a cross entropy loss on observed occupancy prediction , a cross entropy loss on occluded occupancy prediction , and an L2 loss on flow prediction . The total loss is:

| (1) |

4 Experiment

4.1 Dataset

We train, evaluate, and test our model on the Waymo Open Motion Dataset (WOMD) based on the standard split and the filtered scenarios.

4.2 Metrics

We follow the metrics proposed by [8], which include AUC and Soft IoU for observed occupancy, occluded occupancy, and flow-grounded occupancy, as well as the end-point error (EPE) that measures the error of flow prediction.

4.3 Model Detail

Our model is illustrated in Fig 1. We use the standard VGG-16 model from torchvision.models as our rasterized encoder, and follow the implementations of VectorNet as in [2]333Code available at https://github.com/Tsinghua-MARS-Lab/DenseTNT. The input to the VectorNet includes i) a set of road element vectors with a shape of , where is the batch size, is the maximum number of road element vectors, and the last dimension of 9 represents the positions () and headings ( of two end points in each vector and the vector id; ii) a set of agent vectors with a shape of , including the vectors of up to 128 agents in a scene, where each agent has 10 vectors from the observed positions. We follow VectorNet by first running a local graph over each traffic element based on their ids and second running a global graph over all local features to obtain a vectorized feature with a shape of , where is the total number of traffic elements, including road elements and agents. We further quadrupled the size of the feature through an MLP layer to obtain a final vectorized feature with a shape of , so that its feature size is consistent with the channel size of the image feature, as discussed in the following paragraph.

We denote the output features of each VGG stage as , and they have strides of pixels with respect to the input image and a hidden dimension of 512. The vectorized feature is fused with the rasterized image feature with a shape of by a cross-attention module to obtain with the same shape. The query term of the cross-attention is the image feature flattened into with 256 tokens, and the key and value term is the vectorized feature with tokens. We then concatenate and in the channel dimension and pass it through two conv layers to obtain with a shape of . is upsampled and concatenated with () by an FPN-style upsampling module to generate with the same shape as . Next, we perform another round of fusion between and to obtain () following the same procedure, including cross attention. At the end, will be gradually upsampled by the FPN-style network and concatenated with to generate with a shape of . We pass through two conv layers to obtain the final output feature with a shape of .

The decoder is a single 2D convolution layer with an input channel size of 128 and an output channel size of 32 (8 waypoints 4 output dimensions).

| Observed | Occluded | Flow-Grounded | |||||

| Model | AUC | Soft IoU | AUC | Soft IoU | AUC | Soft IoU | EPE |

| HorizonOccFlowPred. | 0.803 | 0.235 | 0.165 | 0.017 | 0.839 | 0.633 | 3.672 |

| Look Around | 0.801 | 0.234 | 0.139 | 0.029 | 0.825 | 0.549 | 2.619 |

| Temporal Query | 0.757 | 0.393 | 0.171 | 0.040 | 0.778 | 0.465 | 3.308 |

| STrajNet | 0.751 | 0.482 | 0.161 | 0.018 | 0.777 | 0.555 | 3.587 |

| 3D-STCNN | 0.691 | 0.412 | 0.115 | 0.021 | 0.733 | 0.468 | 4.181 |

| Motionnet | 0.694 | 0.411 | 0.141 | 0.031 | 0.732 | 0.469 | 4.275 |

| FTLS | 0.618 | 0.318 | 0.085 | 0.019 | 0.689 | 0.431 | 9.612 |

| OccFlowNet | 0.667 | 0.391 | 0.111 | 0.026 | 0.678 | 0.443 | 6.636 |

| VectorFlow | 0.755 | 0.488 | 0.174 | 0.045 | 0.767 | 0.530 | 3.583 |

| Observed | Occluded | Flow-Grounded | |||||

| Model | AUC | Soft IoU | AUC | Soft IoU | AUC | Soft IoU | EPE |

| VectorFlow (VGG-only) | 0.746 | 0.468 | 0.139 | 0.034 | 0.755 | 0.520 | 3.713 |

| VectorFlow | 0.760 | 0.490 | 0.173 | 0.050 | 0.761 | 0.524 | 3.603 |

4.4 Training Detail

We train our model on the full training set of WOMD with a batch size of 32 for 16 epochs on 8 Nvidia A10 GPUs. We use an Adam optimizer and a learning rate scheduler that decays the learning rate by 50% every 5 epochs, with an initial value of 1e-3. The loss coefficients are , and , as customary in [8].

4.5 Results

We present the results of our model and other entries in the Waymo Challenge in Table 1. Our model achieves the best performance in three metrics, including AUC and Soft IoU scores for occluded occupancy predictions, and Soft IoU score for observed occupancy predictions. The gap in AUC scores is partially due to the choice of our loss function, compared to other entries that use a focal loss. Our model ranks 3rd place on the Waymo Open Dataset Occupancy and Flow Prediction Challenge.

Furthermore, we compare the performance of our model with a variant that only includes the VGG encoder. The results in Table 2 show that fusion helps improve the prediction performance, especially in occluded metrics. More specifically, the Occluded AUC and Occluded Soft IoU scores improve by 24.46% and 47.06%, respectively.

5 Conclusion

In this work, we present a simple but effective occupancy and flow predictor that efficiently generates joint agent behaviors as occupancy flow fields. Our predictor VectorFlow fuses two representations commonly used in trajectory prediction, vectorized representation and rasterized representation, through multiple attention modules. It achieves the state-of-the-art performance on the Waymo Open Dataset Occupancy and Flow Prediction Challenge.

References

- Gao et al. [2020] J. Gao, C. Sun, H. Zhao, Y. Shen, D. Anguelov, C. Li, and C. Schmid. VectorNet: Encoding hd maps and agent dynamics from vectorized representation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 11525–11533, 2020.

- Gu et al. [2021] J. Gu, C. Sun, and H. Zhao. DenseTNT: End-to-end trajectory prediction from dense goal sets. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 15303–15312, 2021.

- Varadarajan et al. [2022] B. Varadarajan, A. Hefny, A. Srivastava, K. S. Refaat, N. Nayakanti, A. Cornman, K. Chen, B. Douillard, C. P. Lam, D. Anguelov, et al. Multipath++: Efficient information fusion and trajectory aggregation for behavior prediction. In 2022 International Conference on Robotics and Automation (ICRA), pages 7814–7821. IEEE, 2022.

- Huang et al. [2022] X. Huang, G. Rosman, I. Gilitschenski, A. Jasour, S. G. McGill, J. J. Leonard, and B. C. Williams. HYPER: Learned hybrid trajectory prediction via factored inference and adaptive sampling. In 2022 International Conference on Robotics and Automation (ICRA), pages 2906–2912. IEEE, 2022.

- Sun et al. [2022] Q. Sun, X. Huang, J. Gu, B. C. Williams, and H. Zhao. M2I: From factored marginal trajectory prediction to interactive prediction. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 6543–6552, 2022.

- Ngiam et al. [2021] J. Ngiam, V. Vasudevan, B. Caine, Z. Zhang, H.-T. L. Chiang, J. Ling, R. Roelofs, A. Bewley, C. Liu, A. Venugopal, et al. Scene Transformer: A unified architecture for predicting future trajectories of multiple agents. In International Conference on Learning Representations, 2021.

- Kim et al. [2022] J. Kim, R. Mahjourian, S. Ettinger, M. Bansal, B. White, B. Sapp, and D. Anguelov. StopNet: Scalable trajectory and occupancy prediction for urban autonomous driving. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022.

- Mahjourian et al. [2022] R. Mahjourian, J. Kim, Y. Chai, M. Tan, B. Sapp, and D. Anguelov. Occupancy flow fields for motion forecasting in autonomous driving. IEEE Robotics and Automation Letters, 7(2):5639–5646, 2022.

- Ettinger et al. [2021] S. Ettinger, S. Cheng, B. Caine, C. Liu, H. Zhao, S. Pradhan, Y. Chai, B. Sapp, C. R. Qi, Y. Zhou, et al. Large scale interactive motion forecasting for autonomous driving: The waymo open motion dataset. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 9710–9719, 2021.

- Simonyan and Zisserman [2014] K. Simonyan and A. Zisserman. Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556, 2014.