Virtual Reality in Metaverse over Wireless Networks with User-centered Deep Reinforcement Learning

Abstract

The Metaverse and its promises are fast becoming reality as maturing technologies are empowering the different facets. One of the highlights of the Metaverse is that it offers the possibility for highly immersive and interactive socialization. Virtual reality (VR) technologies are the backbone for the virtual universe within the Metaverse as they enable a hyper-realistic and immersive experience, and especially so in the context of socialization. As the virtual world 3D scenes to be rendered are of high resolution and frame rate, these scenes will be offloaded to an edge server for computation. Besides, the metaverse is user-center by design, and human users are always the core. In this work, we introduce a multi-user VR computation offloading over wireless communication scenario. In addition, we devised a novel user-centered deep reinforcement learning approach to find a near-optimal solution. Extensive experiments demonstrate that our approach can lead to remarkable results under various requirements and constraints.

Index Terms:

Metaverse, computation offloading, reinforcement learning, wireless networksI Introduction

Background. Maturing technologies in areas such as 6G wireless networks [1] and high-performance extended reality (XR) technology [2] has empowered the developments of the Metaverse [3]. One of the key developments of the Metaverse is highly interactive and immersive socialization. Users can interact with one another via full-body avatars, improving the overall socialization experience.

Motivation. Virtual Reality is a key feature of an immersive Metaverse socialization experience. Compared to traditional two-dimensional images, generating panoramic images for the VR experience is computationally intensive. However, the rendering and computation of scenes of high resolution and frame rate are still not feasible on existing VR devices, due to the lack of local device computing power. A feasible solution to powering an immersive socialization experience on VR devices is through computation offloading [4]. In addition, the metaverse is a user-centric application by design, and we need to place the user experience at the core of the network design [5]. Therefore, we have to consider a multi-user socialization scenario, in which each user has a different purpose of use and requirements. This propels us to seek a more user-centered and oriented solution.

Related work. In recent years, VR services over wireless communication have been thoroughly studied in many previous works. Chen et al. studied the quality of service of a VR service over wireless communication using an echo state network [6]. However, none of the previous works considered the varying purpose of use and requirements between users. Although MEC-based VR services are thoroughly studied, few of them considered a sequential scenario over wireless communication. Machine-learning-based approaches have been widely adopted to tackle wireless communication challenges [7, 8], and DRL has been proven to achieve excellent performance. Meng et al. [9] addressed the synchronization between physical objects and the digital models in Metvaerse with deep reinforcement learning (DRL). This is due to the ability of DRL agents to explore and exploit in self-defined environments[10]. However, there are no existing works which has designed a user-centered and user-oriented DRL method.

Approach. This paper proposes a novel multi-user VR model in a downlink Non-Orthogonal Multiple Access (NOMA) system [11]. We designed a novel DRL algorithm that considers the varying purpose of use and requirements of the users. We re-design the Proximal Policy Optimization (PPO) algorithm [12] with a reward decomposition structure.

Contributions. Our contributions are as follows:

-

•

User-centered Computation Offloading VR Formulation: We study the user-centered Metaverse computation offloading over the wireless network, designing a multi-user scenario where a Virtual Service Provided (VSP) assists users in generating reality-assisted virtual environments.

-

•

HRPPO: We crafted a novel DRL algorithm Hybrid Reward PPO (HRPPO) to tackle the proposed channel allocation problem. The HRPPO is imbued with the hybrid reward architecture (HRA), enabling it to have a more user-centred perspective.

-

•

DRL Scenario Design: The design of the three core DRL elements: state, action, and reward are explained in detail. Extensive experiments demonstrate the effectiveness of our method.

The rest of the paper is organized as follows. Section II introduces our system model. Then Section III and IV proposes our deep reinforcement learning approach and settings. In Section V-A, extensive experiments are performed, and various methods are compared to show the prowess of our strategy. Section VI concludes the paper.

II System model

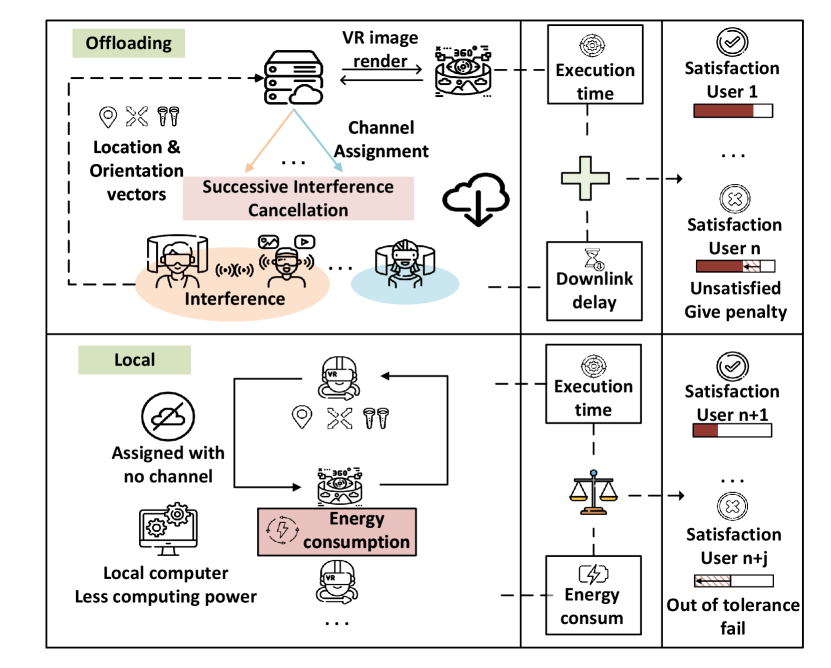

Consider a multi-user wireless downlink transmission in an indoor environment, in which one second is divided into time steps. To ensure a smooth experience, we consider a slotted structure with the clock signal sent by the server for synchronization, and each slot contains one high resolution frame transmission, and the duration for each time slot is (). In each time step, a sequence of varying resolution 3D scenes is generated by the VSP and sent to VR device users (VU) with distinct characteristics (e.g., Computation capability) and different requirements (e.g., Tolerant delay). Each user is selected for either (1) Computation offloading by offloading the tracking vectors [13] to the virtual service provider (VSP) for scene rendering, or (2) Local computing by receiving scenes (tracking vectors) from others and render scenes locally with a lower computation capability and at the expense of energy consumption. If a user is selected for computation offloading, the VSP will generate the frame and send it back to them via a set of channels ,

Each user can accept virtual scene frame rates as low as a minimum tolerable frames per second (FPS) , which is the number of successfully received frames in a second. Considering that the tracking vectors are relatively very small [13], we assume that the vectors are transmitted with dedicated channels between VSP-VU and VU-VU, and neglect the overhead.

We use to denote the selection of downlink channel arrangement and inherently, the computing method (VSP or local computation). indicates VU is arranged to channel at time step , and means user needs to generate locally.

Thus, it is imperative to devise a comprehensive algorithm that takes into account the varying satisfaction threshold and requirements of the users. In the next section, we explain the computation offloading and local computing models in detail. The system model is shown in Fig.1.

II-A Computation offloading model

We first introduce the computation offloading model based on the wireless cellular network. The server VSP will manage the downlink channels of all VUs . And then, the server VSP Furthermore, we denote () as the size of the virtual scene frame at time step that needs to be transmitted to user .

We adopt the Non-Orthogonal Multiple Access (NOMA) system as this work’s propagation model. In NOMA system, several users can be multiplexed on one channel by the successive interference cancellation (SIC) and superposition coding, and the received signals of VUs in channel are sorted in ascending order: [11]. In this paper, we assume the decoders of the VUs can recover the signals from each channel through SIC. We denote as the channel gain between the VSP and the user allocated to channel at time step (iteration) . The Downlink rate can be expressed as [11]:

| (1) |

denotes the transmission power of each VU’s device. Note that transmission power is not time-related in our scenario. denotes the channel gain between VU and VSP in channel , with , , being the Rayleigh fading parameter, the distance between VU and VSP, and the path loss exponent, respectively. is the bandwidth of each channel, and denotes the background noise. Accordingly, the total delay of each frame in time step is divided into (1) Execution time and (2) downlink transmission time:

| (2) |

where is the computation capability of VSP, and is the required number of cycles per bit of this frame [14].

II-B Local computing model

When VU is not allocated a channel, it needs to generate the virtual world frames locally at the expense of energy consumption. Let be the computation capability of VU , and it varies across VUs. Adopting the model from [15], the energy per cycle can be expressed as . Therefore, the overhead of local computing in terms of execution delay and energy can be derived as:

| (3) | |||

| (4) |

where is the weighting parameter of energy for VU . The battery state of each VU can be different, then, we assume that is closer to with a higher battery.

II-C Problem formulation

With the slotted structure, we set the VUs’ maximum tolerable delay to be for every frame to be the problem constraint. Different users have different purpose of use (video games, group chat, etc.). Thus, they also have varying expectations of satisfactory number of frames per second . We set the tolerable frame transmission failure count of VU as . Initially, the tolerable frame transmission failure count of VU is defined as . For each successive frame, the delay in exceedance of tolerable threshold leads to a decrease in VU’s remaining tolerable count: , where

| (5) |

Our goal is to find the near-optimal channel arrangement for the transmission of frames, to minimize the total frame transmission failure count and VU device energy consumption.

| (6) | ||||

| (7) | ||||

| (8) |

The are the weighting parameters of delay and energy. Constraint ensures that the frame transmission failure count of each user is within their tolerable limit. Constraint is our integer optimization variable which denotes the computing method and channel assignment for each user at every time step.

This formulated problem is sequential, where the remaining tolerable frame transmission failure count of each user changes over time, and influences the following states. Thus, convex optimization methods are unsuitable for our proposed problem due to the huge space of integer variables and daunting computational complexity. Also, as the problem contains too many random variables, model-based RL approaches which require transition probabilities are infeasible techniques to tackle our proposed problem. We next introduce our deep RL environment settings according to the formulated problem.

III Deep reinforcement learning setting

For a reinforcement learning environment (problem), the most important components are (1) State: the key factors for an agent to make a decision. (2) Action: the operation decided by an agent to interact with the environment. (3) Reward: the feedback for Agent to evaluate the action under this state. Thus, we expound on these three components next.

III-A State

We included the following attributes into the state: (1) Each VU’s virtual world frame size: . (2) Each VU’s remaining tolerable frame transmission failure count: . (3) The channel gain of each VU: . (4) The remaining number of frames to be transmitted at each time step: .

III-B Action

The discrete action channel assignment to each VU is:

| (9) | |||

| (10) |

In practice, we use a tuple in which there are elements corresponding to users and each element can take values, which corresponds to the number of channels; plus 1 for a user being assigned to perform local computing. However, we need to encode the discrete actions as discrete numbers to be evaluated by the neural network. The encoding method is shown in Fig. 2.

III-C Reward

As the main objective is to minimize the frame transmission failure counts and energy consumption, the overall reward for each VU contains: (1) a penalty for every frame transmission failure and (2) a weighted reward for energy consumption corresponding to VU’s battery life. To implement the tolerance constraint , we give (3) a huge penalty corresponding to the number of frames left to be transmitted when any VU’s remaining tolerable frame transmission failure count is . In the circumstance of (3), the episode ends immediately.

IV Deep Reinforcement learning Approach

Our proposed Hybrid Reward Proximal Policy Optimization (HRPPO) is based on Proximal Policy Optimization (PPO) algorithm, which is considered as the state-of-art RL algorithm [12]. HRPPO is inspired by the Hybrid Reward Architecture (HRA) [16]. Thus, PPO and HRA preliminaries will first be introduced. We will then explain the HRPPO.

IV-A Preliminary

IV-A1 Proximal Policy Optimization (PPO)

As we emphasize on developing a user-centred model which considers VUs’ varying purpose of use and requirements, policy stability is essential. Proximal Policy Optimization (PPO) by openAI [12] is an enhancement of the traditional policy gradient algorithm. PPO has better sample efficiency by using a separate policy for sampling, and is more stable by embedding policy constraint.

In summary, PPO has two main characteristics in its policy network (Actor): (1) Increased sample efficiency. PPO uses a separate policy for sampling trajectories (during training) and evaluating (during evaluation) to increase sample efficiency as well. Here we use as the evaluating policy and as the data sampling policy. As we use the to sample data for training, the expectation can be rewritten as:

| (11) |

(2) Policy constraint. After switching the data sampling policy from to , an issue still remains. Although in the equation (11), they have the similar expectation value of their objective functions, their variances are starkly distinct. Therefore, a KL-divergence penalty can be added as a constraint to the reward formulation to constrain the distances. However, the KL divergence is impractical to calculate in practice as this constraint is imposed on every observation. Thus, we rewrite the objective function as: [12], where

| (12) |

And . The problem is solved by gradient ascent, therefore, the gradient can be written as:

| (13) |

In terms of the value network (Critic), PPO uses identical Critic as per other Actor-Critic algorithms; and the loss function can be formulated in [12] as:

| (14) |

is the widely used state-value function [17], which is estimated by a learned critic network with parameter . We update by minimizing the , and the parameter of the target state-value function periodically with . Using target value is a prevailing trick in RL, which has been used in many algorithms [17].

IV-A2 Hybrid Reward Architecture (HRA)

High-dimensional objective functions are common in communication problems, especially for multi-user scenarios, since we usually need to consider multiple factors and distinct requirements of different users. This issue of using RL to solve a high dimensional objective function was first studied in [16]. In their work, they proposed the HRA structure for Deep Q-learning (DQN) which aims to decompose high-dimensional objective functions into several simpler objective functions. HRA has remarkable performance in handling high-dimensional objectives, which serves as the inspiration for our work.

IV-B HRPPO

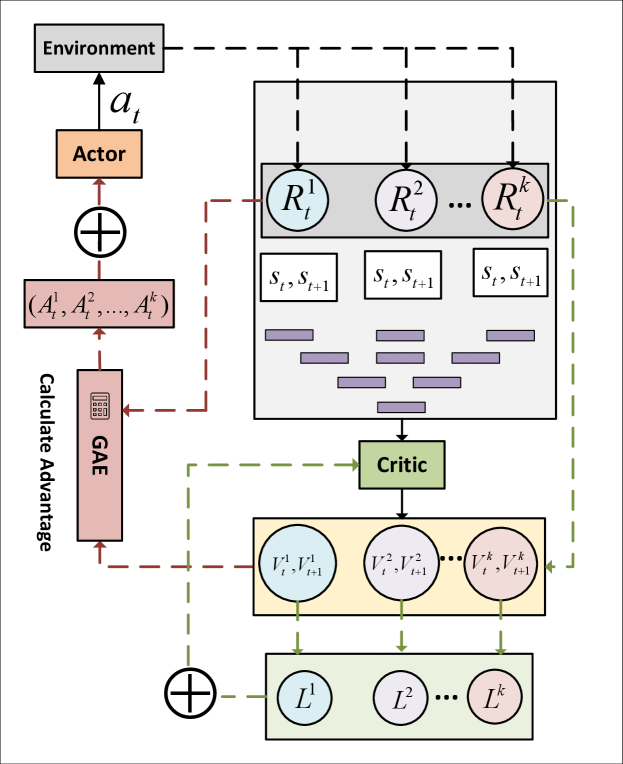

In contrast to decomposing the overall reward into separate sub-goal rewards as done in [16], we built a user-centered reward decomposition architecture as an extension to PPO, Hybrid Reward PPO (HRPPO), which takes in the rewards of different users and calculates the actions-values separately. In other words, we give the network a view of the state-value of each user, instead of merely evaluating the overall value of an action based on an overall state-value.

Function process: In each episode, when the current transmission is accomplished with the selected action , the environment will issue rewards as feedback to different VUs. These rewards along with their corresponding states and next iteration state, will be sent to the Critic to generate the state-values , representing the state-value of each VU. The state-value is then used to calculate the advantages and losses for each VU. The above-mentioned process is illustrated in Fig. 3.

Update function: In equation (13), we established the policy gradient for PPO Actor, and in HRPPO we have the gradient as:

| (15) |

where denotes the advantages of different VUs. The generalized advantage estimation (GAE) [18] is chosen as the advantage function:

| (16) | |||

| (17) |

specifies the length of the given trajectory segment, specifies the discount factor, and denotes the GAE parameter. In terms of Critic loss, the equation (14) is formatted into:

| (18) |

Similar to the renowned centralized training decentralized execution (CTDE) framework [19], the also uses centralized training with equation (18). Therefore, the training time will not scale with the number of users.

IV-B1 Baselines

We also implement some of the most renowned RL algorithms that are capable of tackling problems with a discrete action space.

-

•

HRDQN. We implemented the hybrid reward DQN following the structure of HRA [16].

-

•

PPO. The traditional PPO is used as a baseline. The sum of all users’ rewards is selected as the global reward.

-

•

Random. The random Agent selects actions randomly, which represents the system performance if no channel resource allocation is performed.

IV-C Metrics

We introduce a set of metrics (apart from RL rewards) to evaluate the effectiveness of our proposed methods.

-

•

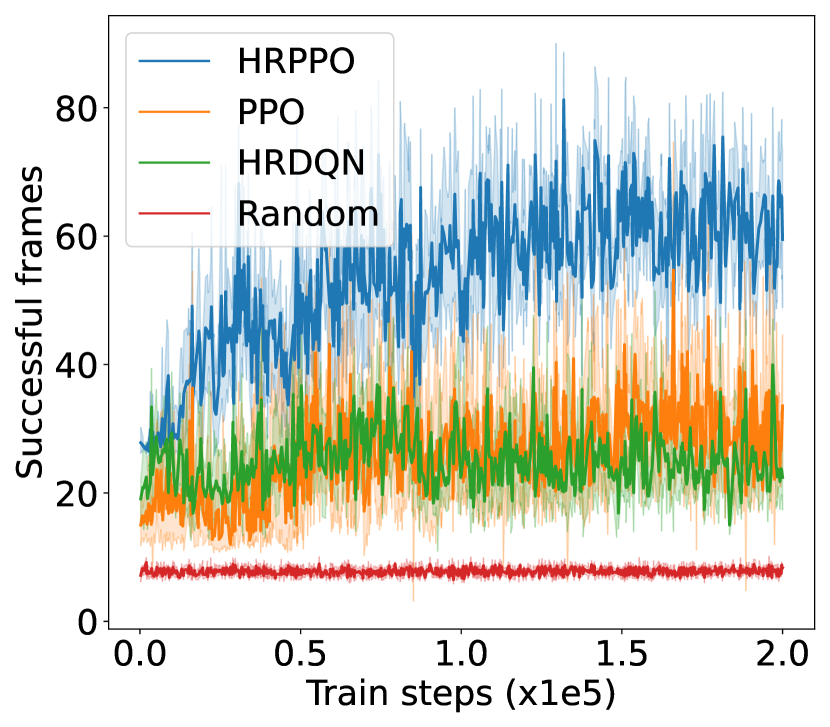

Successful frames. The number of successful frames among total frames determines the Frame Rate per Second (FPS) of the virtual world scenes, and hence fluidity of the Metaverse VR experience.

-

•

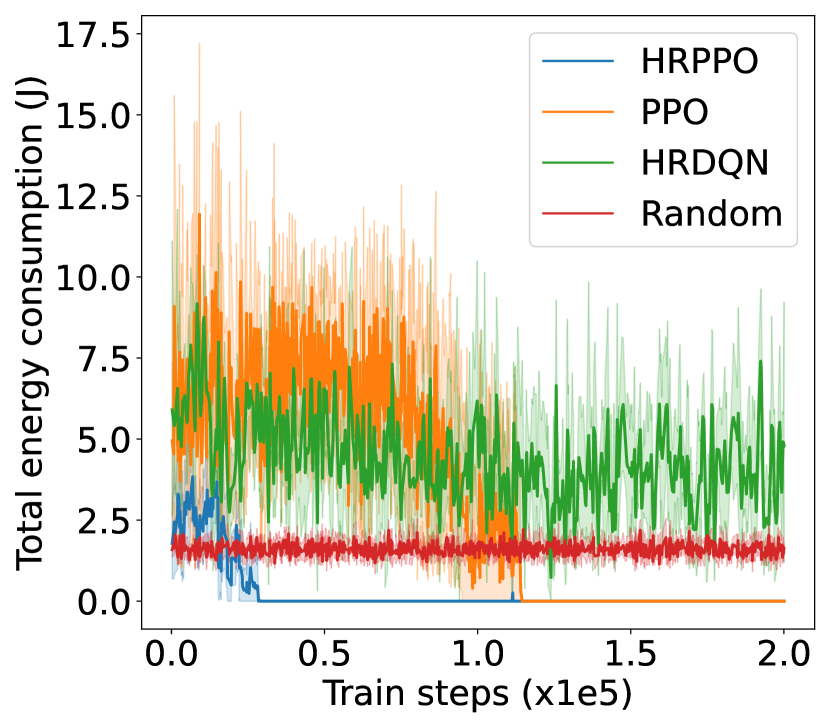

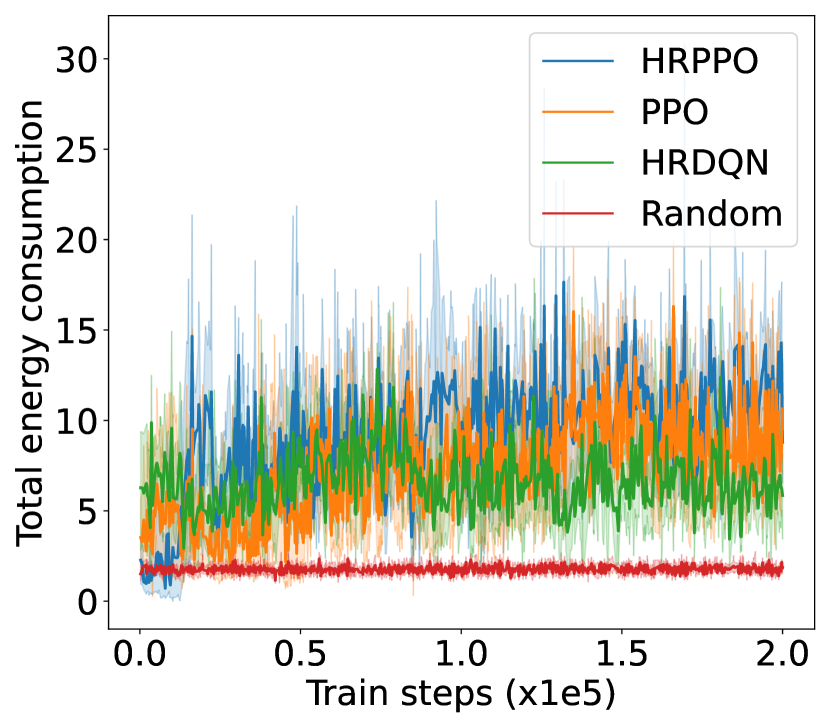

Energy consumption. We illustrate the total energy consumption in each episode. Lower energy consumption signifies a more effective use of channel resources.

-

•

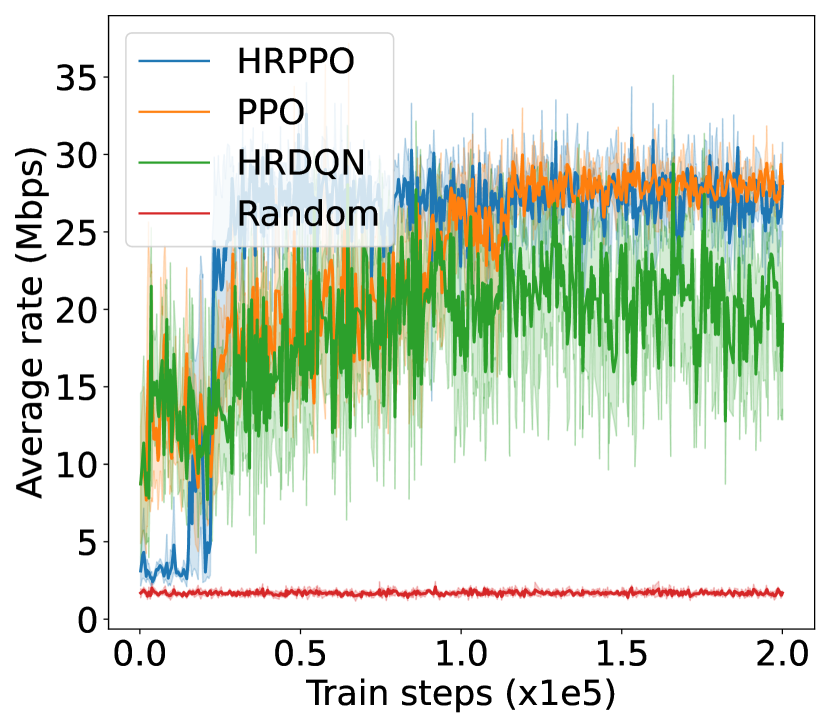

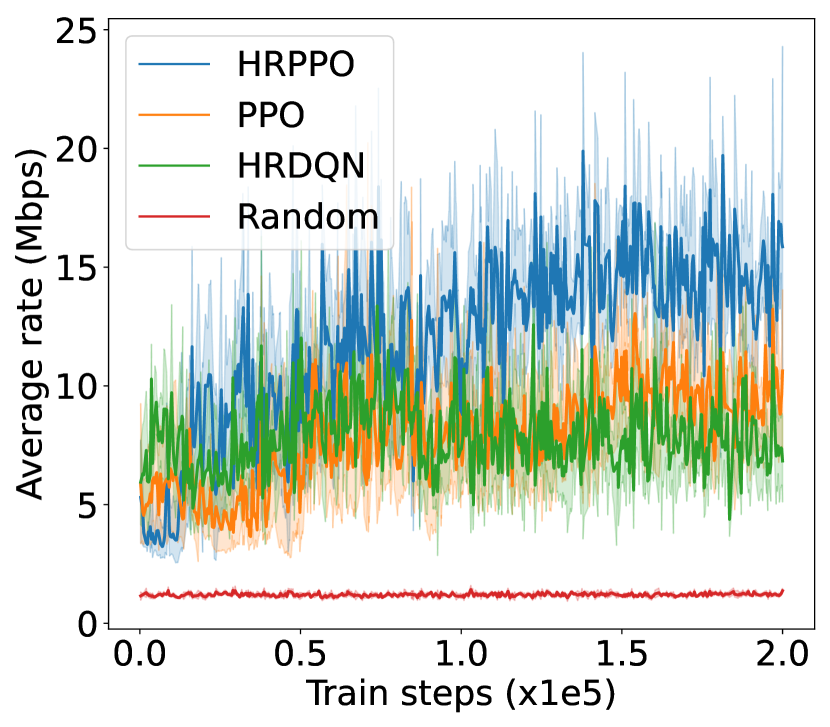

Average rate. The average downlink transmission rate of all VUs and frames in each episode is shown to evaluate the trained policy. A higher average rate indicates better allocation of channel resources.

V Experiment results

V-A Numerical Setting

Consider a indoor space where multiple VUs are distributed uniformly across the space. We set the number of channels to be in each experiment configuration, and the number of VUs to be from to across the different experiment configurations. The maximum resolution of one frame is 2k () and the minimum is 1080p (). Each pixel is stored in 16 bits [20] and the factor of compression is 150 [13]. We randomize the data size of one frame to take values from a uniform distribution in which . The flashed rate, frames in one second is taken to be 90, which is considered the best rate for VR [13] applications. The bandwidth of each channel is set to kHZ. The required successful frame transmission count is uniformly selected from , which is higher than the acceptable of [13]. In terms of channel gain, the small-scale fading follows the Rayleigh distribution and is the path loss exponent. For all experiments, we use steps for training, and the evaluation interval is set to be training steps. As there are several random variables in our environment, all experiments are conducted under global random seeds from 0-10, and the error bands are drawn to better illustrate the model performances.

V-B Result analysis

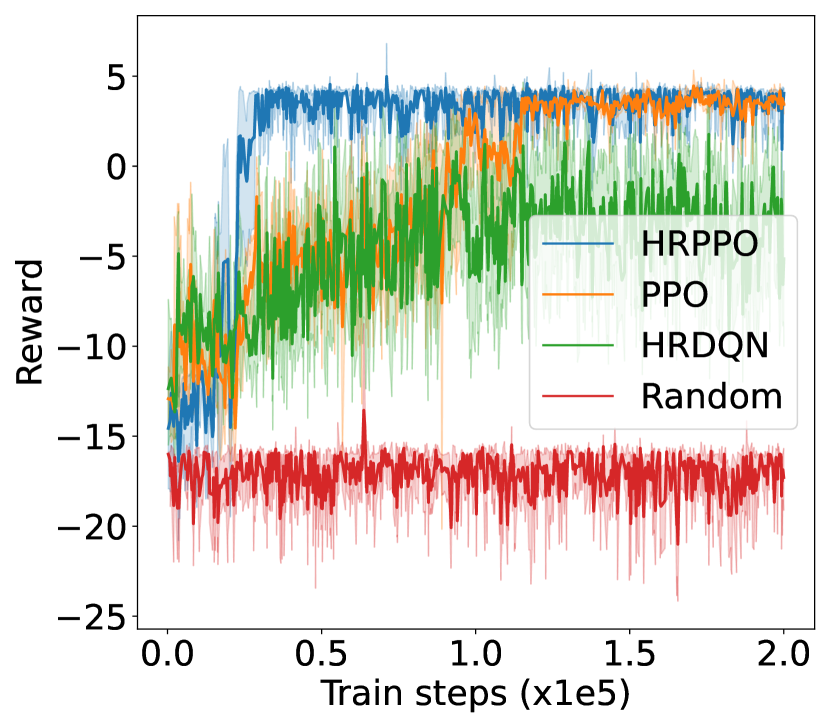

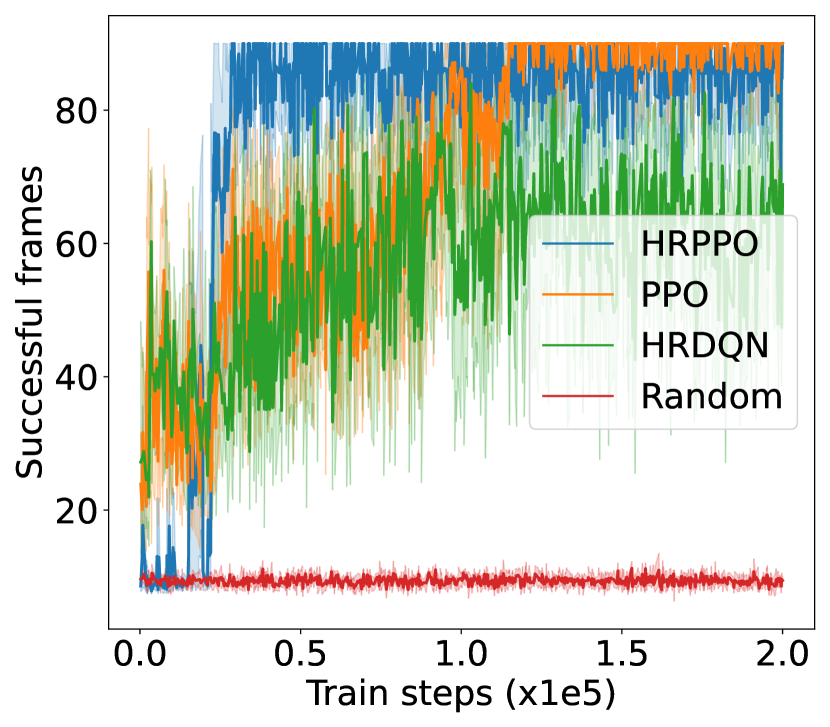

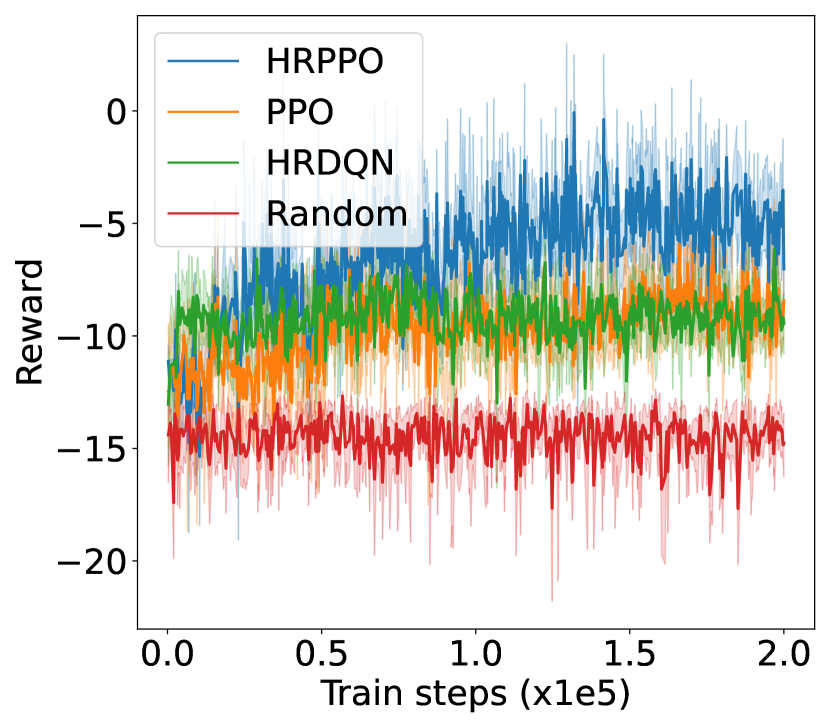

We first illustrate the performances of the different models against different metrics in two experimental configurations (shown in Fig. 4): one with 6 VUs and the other with 8 VUs. We then show the overall results for each experimental configurations in Table I. Results in Table I are taken from the average of the final steps.

The training reward, successful frame transmission counts, and average downlink transmission rate show an overall upward trend as training progresses. When pitted against these metrics, HRPPO performed the best out of the tested baseline algorithms. In the experimental setting with VUs, although PPO and HRPPO are able to attain similar peak rewards towards later training stages, HRPPO converges in half the number of training steps taken for PPO to achieve convergence. In the experimental setting with VUs, HRPPO obtains a much higher final reward when compared to PPO. Both HRPPO and PPO achieved higher rewards than HRDQN, and performed better in each metric. HRPPO and PPOs’ performance superiority can be attributed to the PPO’s policy KL penalty and higher sample efficiency. However, HRDQN and PPO fail to find a good solution in more complicated scenarios. In the VU experimental setting, both HRPPO and PPO are able to allocate VUs to a VSP channel for computation offloading in each round, and this is reflected in zero energy spent on local device computation. However, in the experimental setting with VUs, there is insufficient channel resources and all of the three algorithms learn strategies to increase transmission rate and avoid frame rate decrement, by allocating some VUs to perform local computation, which increases energy consumption.

The complete results in Table. I shows that HRPPO obtains the best performance for almost every metric under every scenario. This demonstrates that decomposing the reward and using sum-losses which provides a user-centered view to the RL agent, is good approach to tackling a multi-user computation offloading problem.

| VU number | Reward | Successful frames | Energy consumption(J) | Average rate (Mbps) |

| HRPPO | ||||

| 4.66 | 88.44 | 0 | 28.33 | |

| 3.26 | 88.35 | 0 | 27.56 | |

| -2.21 | 83.67 | 7.05 | 19.90 | |

| -5.15 | 65.69 | 15.02 | ||

| PPO | ||||

| 0 | ||||

| 0 | ||||

| 9.12 | ||||

| HRDQN | ||||

VI conclusion

In this paper, we study a multi-user VR in the Metaverse mobile edge computing over wireless networks scenario. Multiple users with varying requirements are considered, and a novel user-centered RL algorithm HRPPO is designed to tackle it. Extensive experiment results show that HRPPO has the quickest convergence and achieves the highest reward, which is higher than the traditional PPO. In the future, we will continue to optimize the power allocation to seek more optimal solutions to our proposed problems.

Acknowledgement

This research is partly supported by Singapore Ministry of Education Academic Research Fund under Grant Tier 1 RG90/22, RG97/20, Grant Tier 1 RG24/20 and Grant Tier 2 MOE2019-T2-1-176; partly by the NTU-Wallenberg AI, Autonomous Systems and Software Program (WASP) Project.

References

- [1] K. B. Letaief, W. Chen, Y. Shi, J. Zhang, and Y.-J. A. Zhang, “The roadmap to 6G: AI empowered wireless networks,” IEEE Communications Magazine, vol. 57, no. 8, pp. 84–90, 2019.

- [2] I. F. Akyildiz and H. Guo, “Wireless extended reality (xr): Challenges and new research directions,” ITU J. Future Evol. Technol, 2022.

- [3] Y. Wang, Z. Su, N. Zhang, R. Xing, D. Liu, T. H. Luan, and X. Shen, “A survey on metaverse: Fundamentals, security, and privacy,” IEEE Communications Surveys & Tutorials, 2022.

- [4] Y. Mao, C. You, J. Zhang, K. Huang, and K. B. Letaief, “A survey on mobile edge computing: The communication perspective,” IEEE Communications Surveys & Tutorials, 2017.

- [5] L.-H. Lee, T. Braud, P. Zhou, L. Wang, D. Xu, Z. Lin, A. Kumar, C. Bermejo, and P. Hui, “All one needs to know about Metaverse: A complete survey on technological singularity, virtual ecosystem, and research agenda,” arXiv preprint arXiv:2110.05352, 2021.

- [6] M. Chen, W. Saad, and C. Yin, “Virtual reality over wireless networks: Quality-of-service model and learning-based resource management,” IEEE Transactions on Communications, 2018.

- [7] G. Aceto, D. Ciuonzo, A. Montieri, and A. Pescapé, “Mobile encrypted traffic classification using deep learning: Experimental evaluation, lessons learned, and challenges,” IEEE Transactions on Network and Service Management, 2019.

- [8] Y. Lu, X. Huang, K. Zhang, S. Maharjan, and Y. Zhang, “Blockchain empowered asynchronous federated learning for secure data sharing in internet of vehicles,” IEEE Transactions on Vehicular Technology, 2020.

- [9] Z. Meng, C. She, G. Zhao, and D. De Martini, “Sampling, communication, and prediction co-design for synchronizing the real-world device and digital model in metaverse,” IEEE Journal on Selected Areas in Communications, 2022.

- [10] N. C. Luong, D. T. Hoang, S. Gong, D. Niyato, P. Wang, Y.-C. Liang, and D. I. Kim, “Applications of deep reinforcement learning in communications and networking: A survey,” IEEE Communications Surveys & Tutorials, vol. 21, no. 4, pp. 3133–3174, 2019.

- [11] L. Dai, B. Wang, Z. Ding, Z. Wang, S. Chen, and L. Hanzo, “A survey of non-orthogonal multiple access for 5G,” IEEE Communications Surveys & Tutorials, vol. 20, no. 3, pp. 2294–2323, 2018.

- [12] J. Schulman, F. Wolski, P. Dhariwal, A. Radford, and O. Klimov, “Proximal policy optimization algorithms,” arXiv preprint:1707.06347, 2017.

- [13] E. Bastug, M. Bennis, M. Médard, and M. Debbah, “Toward interconnected virtual reality: Opportunities, challenges, and enablers,” IEEE Communications Magazine, vol. 55, no. 6, pp. 110–117, 2017.

- [14] C. You, Y. Zeng, R. Zhang, and K. Huang, “Asynchronous mobile-edge computation offloading: Energy-efficient resource management,” IEEE Transactions on Wireless Communications, 2018.

- [15] Y. Wen, W. Zhang, and H. Luo, “Energy-optimal mobile application execution: Taming resource-poor mobile devices with cloud clones,” in 2012 proceedings IEEE Infocom. IEEE, 2012, pp. 2716–2720.

- [16] H. Van Seijen, M. Fatemi, J. Romoff, R. Laroche, T. Barnes, and J. Tsang, “Hybrid reward architecture for reinforcement learning,” Advances in Neural Information Processing Systems, vol. 30, 2017.

- [17] R. S. Sutton and A. G. Barto, Reinforcement learning: An introduction. MIT press, 2018.

- [18] J. Schulman, P. Moritz, S. Levine, M. Jordan, and P. Abbeel, “High-dimensional continuous control using generalized advantage estimation,” arXiv preprint arXiv:1506.02438, 2015.

- [19] P. K. Sharma, R. Fernandez, E. Zaroukian, M. Dorothy, A. Basak, and D. E. Asher, “Survey of recent multi-agent reinforcement learning algorithms utilizing centralized training,” in Artificial Intelligence and Machine Learning for Multi-Domain Operations Applications III. SPIE.

- [20] M. Quest, “Mobile virtual reality media overview.” [Online]. Available: https://developer.oculus.com/documentation/mobilesdk/latest/concepts/mobile-media-overview/