WaDeNet: Wavelet Decomposition based CNN for Speech Processing

Abstract

Existing speech processing systems consist of different modules, individually optimized for a specific task such as acoustic modelling or feature extraction. In addition to not assuring optimality of the system, the disjoint nature of current speech processing systems make them unsuitable for ubiquitous health applications. We propose WaDeNet, an end-to-end model for mobile speech processing. In order to incorporate spectral features, WaDeNet embeds wavelet decomposition of the speech signal within the architecture. This allows WaDeNet to learn from spectral features in an end-to-end manner, thus alleviating the need for feature extraction and successive modules that are currently present in speech processing systems. WaDeNet outperforms the current state of the art in datasets that involve speech for mobile health applications such as non-invasive emotion recognition. WaDeNet achieves an average increase in accuracy of 6.36% when compared to the existing state of the art models. Additionally, WaDeNet is considerably lighter than a simple CNNs with a similar architecture.

Index Terms— Wavelet Decomposition, Speech Processing, End-to-End Deep Learning, CNNs

1 Introduction

A substantial part of current speech modelling systems is feature extraction [1, 2]. However, the advent of Deep Learning (DL) has caused a significant paradigm shift in how these signals are modelled. Over the last 3 decades, advanced “hand-crafted” transformations such as the Mel-frequency cepstral coefficients (MFCC) were used owing to a rise in accuracy in their use with Hidden Markov Model (HMM) systems [3]. However, these transformations caused a loss of information. DL models sought to make up for this loss by learning naive spectral [4] or waveform [5] features from the speech signal thus reducing the dependency on hand-crafted features. In fact, Deng et al. [6] showed that DL models benefit from using simpler spectrograms over manually extracted MFCCs. Additionally, when compared to complex features, raw spectral features preserve more information, making them suitable for handling large variability across users (speaking styles, accents, etc.).

Current systems for speech processing contain different modules that perform different tasks, such as an acoustic module and a classifier [7]. Every module has its own training process that optimizes a different objective function, each differing from the true evaluation criteria. Thus, global optimality of the entire system is not guaranteed in spite of module optimality [8]. Additionally, development of these systems require extensive hyper parameter tuning by experts [9]. These shortcomings curb the ability of current systems to be deployed in a mobile environment, ushering in the need for a powerful end-to-end model.

Keeping in mind these disadvantages, we propose an end-to-end Deep Learning model for speech processing. Due to its end-to-end nature, these models find use in mobile health applications such as outpatient telemetry and ubiquitous affective computing tasks. We chose to perform our experiments on tasks involving speech towards emotion recognition and pervasive pathological voice detection, since they are important mobile affective computing tasks. The nature of this task makes it appropriate for our experiments since it demands the ability of the solution to be run on edge devices.

2 Methodology

In an attempt to provide a complete end-to-end solution, an architecture that learns to derive features and suitably learn to classify from these features is to be realised. To this end, a convolutional feature extractor followed by a series of fully connected layers for classification is proposed. Convolutional Neural Network (CNN) architectures have shown success as temporal feature extractors in speech processing tasks [10]. By connecting the feature extractor and fully connected layers, and training the resultant neural network, an end-to-end pipeline is achieved. This eliminates the need for feature extraction via complex signal processing techniques.

For our experiments we used two architectures – a naive 1D CNN (Naive CNN) and an enhanced CNN incorporating wavelet decomposition. Both CNNs contain the same fully connected layers but vary in the feature extractor. Their architectures are explained in succeeding sections.

2.1 Naive CNN

The Naive CNN maps each window of the speech signal directly to its corresponding class. The components of the architecture are as follows:

-

1.

Convolutional Block: Each Convolutional Block consists of a Convolutional Layer111All convolutions refer to one dimensional convolutions unless specified. of kernel size , followed by Batch Normalization and ReLU activation, succeeded by another Convolutional Layer (kernel size = ), Batch Normalization and ReLU activation. The first convolution doubles the number of features and maintains the same length of the input feature map while the second convolutional layer maintains the same number of features and divides the length by half. Effectively, for an input feature map of dimension the dimension of the output feature map of the block is .

-

2.

Fully Connected Block: This block consists of a series of Fully Connected Layers, each being succeeded by ReLU activation and a dropout of probability 0.5 to prevent overfitting. Depending on the complexity of the task, the number of activation units and number of layers is empirically altered.

The Naive CNN comprises of a Convolutional Blocks followed by a Fully Connected Block. The output feature map of the Convolutional Block has dimensions where is the number of output channels of the first Convolutional Block and is the length of the input. The last Convolutional Block’s output feature map is flattened before passing it on to the Fully Connected Block. Softmax activation is applied to the output of the last layer of the Fully Connected Block.

| Dataset | Model | Accuracy | F1 Score | Model Parameters |

| EmoDB | Naive CNN | 0.843 | 0.829 | 172,368,455 |

| WaDeNet | 0.934 | 0.928 | 47,156,391 | |

| RAVDESS | Naive CNN | 0.497 | 0.484 | 173,955,656 |

| WaDeNet | 0.872 | 0.871 | 47,156,904 | |

| VOICED | Naive CNN | 0.961 | 0.947 | 90,057,282 |

| WaDeNet | 0.998 | 0.997 | 26,182,306 | |

| TESS | Naive CNN | 0.961 | 0.962 | 173,953,607 |

| WaDeNet | 0.982 | 0.982 | 47,156,391 |

2.2 Wavelet Decomposition Net (WaDeNet)

From the analysis of the architecture of the Naive CNN it is clear that there are some shortcomings. Firstly, increasing the depth of this network for better information capture will possibly introduce the problem of vanishing gradients. Secondly, the frequency composition of each window, which is vital for speech processing, is unknown to the model.

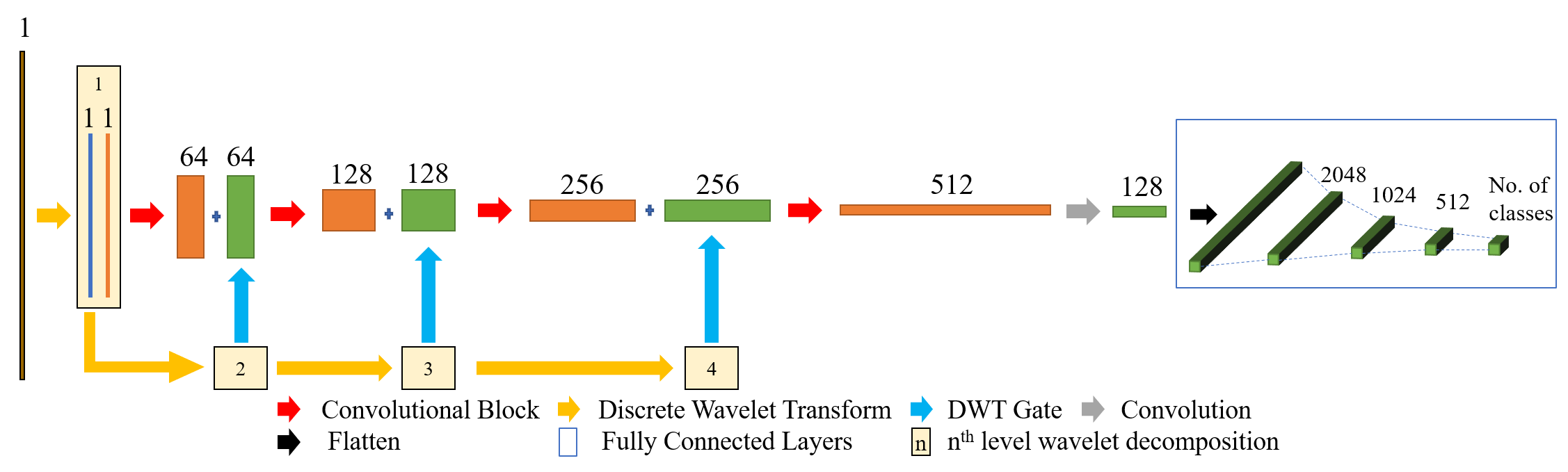

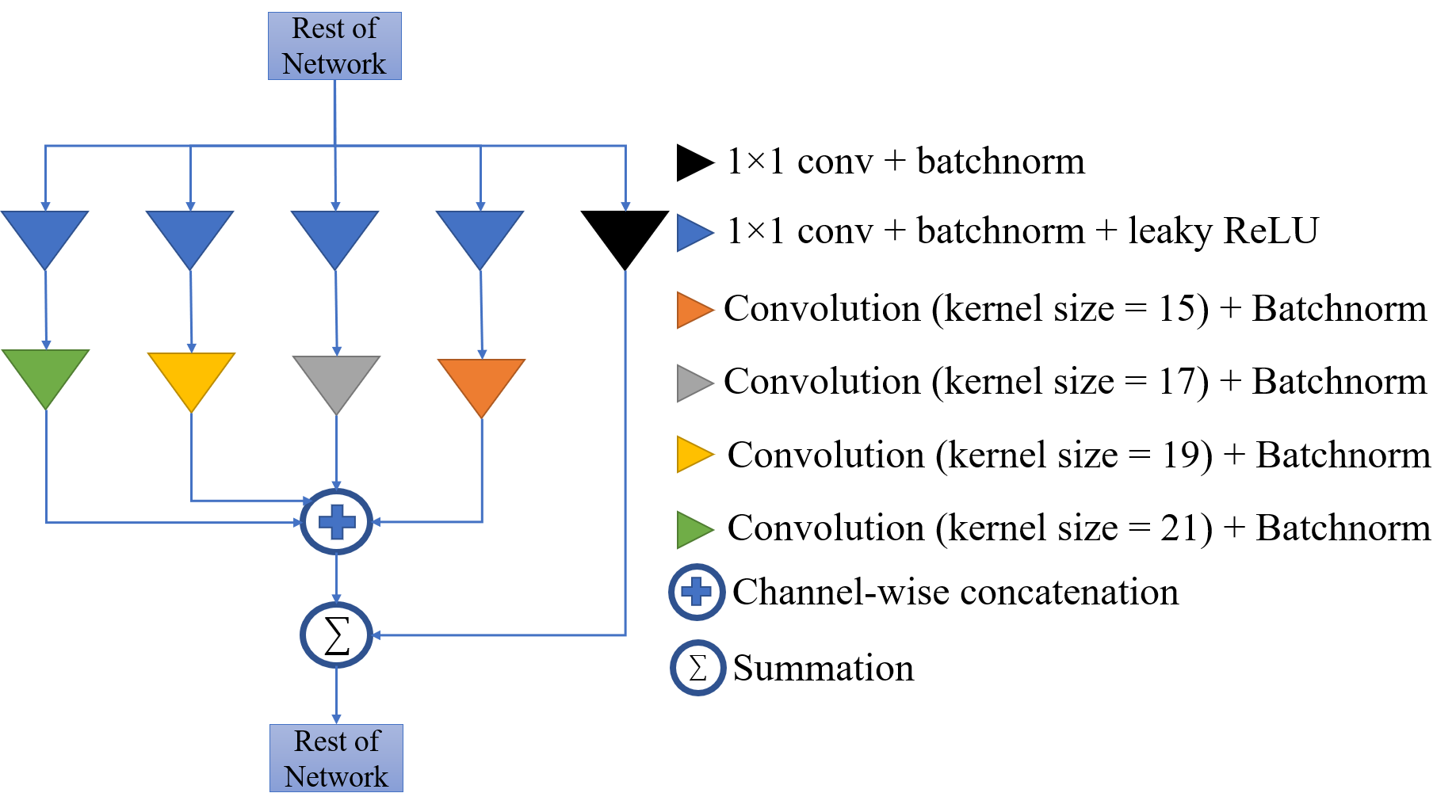

In an effort to address these drawbacks, we propose Wavelet Decomposition Net (WaDeNet), an enhancement of the Naive CNN. By inserting an Inception-Residual Block at the end of each Convolutional Block, WaDeNet has an increased network depth while alleviating vanishing gradients. The Inception-Residual Block consists of multiple convolutions with different kernel sizes performed in parallel as shown in Fig. 2. Following this, channel wise concatenation of these representations is done.

The second shortcoming is countered by introducing frequency information into the model by incorporating a multiresolutional representation. This is achieved by embedding the Wavelet Transform into the architecture, which is well experimented with for image reconstruction [11, 12, 13]. As shown in Fig 1, a representation of a particular order of the Discrete Wavelet Transformation (DWT) of the signal is concatenated with the output feature map of the preceeding Convolutional Block () and passed on to the succeeding Convolutional Block (). The Haar wavelet was used for the decomposition of the signal. Each decomposition is passed to a which consists of a series of Convolutions that produces the representation. Thus for a total of Convolutional Blocks there are levels of wavelet decomposition.

This approach is fundamentally different from using signal processing techniques to extract features that consist of both time and frequency information followed by training a classifier using these features. By incorporating the DWT into the network, the temporal information of the signal is not only retained but also learnt on in an end-to-end manner. Additionally, through feature map concatenation, the number of parameters is drastically reduced while improving performance, as discussed in Section 3.3. This makes it suitable for mobile health applications such has emotion and stress monitoring.

3 Experiments and Results

3.1 Dataset and Preproccesing

We run our experiments on popular datasets which consist of speech data suitable for mobile health applications such as emotion recognition and pathological voice recognition. The datasets and their respective descriptions are as follows:

-

1.

EmoDB [14]: This database consists of 10 actors who simulate 7 different emotions (anger, disgust, fear, happiness, pleasant surprise, sadness, and neutral) by uttering sentences used in everyday communication. There are 535 utterances in the dataset, amounting to 1487 seconds and an average utterance length of about 2.77 seconds.

-

2.

RAVDESS [15]: The Ryerson Audio-Visual Database of Emotional Speech and Song (RAVDESS) consists of 12 male and 12 female actors speaking each expression in a North American Accent. The actors speak each statement with 8 different emotions - anger, calm, disgust, fear, happiness, sadness, surprise, neutral - at two levels of intensities, except when speaking in a neutral manner.

-

3.

VOICED [16]: The VOice ICar fEDerico II Database (VOICED) contains 208 voice samples of adults between the age 18 and 70, of which 150 are pathological and the rest healthy. Each sample is consists of uninterrupted vocalization of the vowel ’a’ for 5 seconds.

-

4.

TESS [17]: The Toronto Emotional Speech Set (TESS) consists of 200 words spoken by two female actors. Each of the voice samples emulate 7 different emotions namely anger, disgust, fear, happiness, pleasant surprise, sadness, and neutral.

The voice samples are segmented into windows of 320 ms each with a 75% overlap. Each window is labelled depending on the dataset. For instance, a window in the EmoDB dataset is labelled with the corresponding emotion.

3.2 Implementation

For our experiments, , and for each convolution was chosen. Stochastic Gradient Descent with an initial learning rate of 0.001 was used to optimize the weights of the model by minimizing the categorical cross entropy. WaDeNet was trained for 150 epochs on 60% of the data with a learning rate scheduler which reduced the learning rate by a factor of 10 after 50 epochs. These constants were empirically decided after extensive experimentation.

3.3 Baseline Comparison

We compare the performance of the Naive CNN and WadeNet on the datasets discussed in the previous section. Since the F1 score is a more reliable metric for multi-class classification problems, we report it in addition to the overall accuracy of the model’s performance on the test data (20% of the total data).

It is evident that the absence of spectral features translates to a subpar performance as evident from the performance of the Naive CNN (Table 1). In contrast, WaDeNet outperforms the Naive CNN with an average increase in accuracy and F1 score of 13.1% and 13.9% respectively. Additionally, WaDeNet has a maximum improvement up to 38.7% in the F1 score on the RAVDESS dataset.

Furthermore, WaDeNet is considerably lighter than the Naive CNN, with an average reduction of 72.26% in the number of model parameters.

3.4 Benchmarking

In order to validate WaDeNet, we evaluate WaDeNet’s performance against existing research work that portray state of the art performance on the datasets considered. The experimental conditions for each benchmark were replicated as specified in the respective work. As seen in Table 2, WaDeNet performs considerably better than the current state of the art models across all the datasets, with an average increase in accuracy of 6.36%, with a maximum of 11.4% on the RAVDESS dataset.

4 Conclusion and Future Work

In this paper we propose WaDeNet, an end-to-end architecture capable of learning spectral information through Wavelet Decomposition from a raw speech signal. WaDeNet outperforms the Naive CNN with the same number of fully connected layers with a 72.26% reduction in model parameters. Moreover, WaDeNet also beats the current state of the art accuracy on all the datasets considered in Section 3.1. Since WaDeNet is end-to-end, it does not require disjoint training processes of different modules. This advantage combined with the benefits of a light model makes it suitable for mobile speech processing applications.

Additionally, we intend to perform quantization and replace standard convolutions with mobile convolutions to further optimize the model for mobile deployment.

References

- [1] Douglas O’Shaughnessy, “Linear predictive coding,” IEEE potentials, vol. 7, no. 1, pp. 29–32, 1988.

- [2] Hynek Hermansky, B Hanson, and Hisashi Wakita, “Perceptually based linear predictive analysis of speech,” in ICASSP’85. IEEE International Conference on Acoustics, Speech, and Signal Processing. IEEE, 1985, vol. 10, pp. 509–512.

- [3] Lindasalwa Muda, Mumtaj Begam, and Irraivan Elamvazuthi, “Voice recognition algorithms using mel frequency cepstral coefficient (mfcc) and dynamic time warping (dtw) techniques,” arXiv preprint arXiv:1003.4083, 2010.

- [4] Hynek Hermansky, “Speech recognition from spectral dynamics,” Sadhana, vol. 36, no. 5, pp. 729–744, 2011.

- [5] Hamid Sheikhzadeh and Li Deng, “Waveform-based speech recognition using hidden filter models: Parameter selection and sensitivity to power normalization,” IEEE Transactions on Speech and Audio Processing, vol. 2, no. 1, pp. 80–89, 1994.

- [6] Li Deng, Michael L Seltzer, Dong Yu, Alex Acero, Abdel-rahman Mohamed, and Geoff Hinton, “Binary coding of speech spectrograms using a deep auto-encoder,” in Eleventh Annual Conference of the International Speech Communication Association, 2010.

- [7] Dong Wang, Xiaodong Wang, and Shaohe Lv, “An overview of end-to-end automatic speech recognition,” Symmetry, vol. 11, no. 8, pp. 1018, 2019.

- [8] Ying Zhang, Mohammad Pezeshki, Philémon Brakel, Saizheng Zhang, Cesar Laurent Yoshua Bengio, and Aaron Courville, “Towards end-to-end speech recognition with deep convolutional neural networks,” arXiv preprint arXiv:1701.02720, 2017.

- [9] Yajie Miao, Mohammad Gowayyed, and Florian Metze, “Eesen: End-to-end speech recognition using deep rnn models and wfst-based decoding,” in 2015 IEEE Workshop on Automatic Speech Recognition and Understanding (ASRU). IEEE, 2015, pp. 167–174.

- [10] O. Abdel-Hamid, A. Mohamed, H. Jiang, and G. Penn, “Applying convolutional neural networks concepts to hybrid nn-hmm model for speech recognition,” in 2012 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 2012, pp. 4277–4280.

- [11] Pengju Liu, Hongzhi Zhang, Wei Lian, and Wangmeng Zuo, “Multi-level wavelet convolutional neural networks,” IEEE Access, vol. 7, pp. 74973–74985, 2019.

- [12] Shin Fujieda, Kohei Takayama, and Toshiya Hachisuka, “Wavelet convolutional neural networks,” arXiv preprint arXiv:1805.08620, 2018.

- [13] Sriprabha Ramanarayanan, Balamurali Murugesan, Keerthi Ram, and Mohanasankar Sivaprakasam, “Dc-wcnn: A deep cascade of wavelet based convolutional neural networks for mr image reconstruction,” in 2020 IEEE 17th International Symposium on Biomedical Imaging (ISBI). IEEE, 2020, pp. 1069–1073.

- [14] Felix Burkhardt, Astrid Paeschke, Miriam Rolfes, Walter F Sendlmeier, and Benjamin Weiss, “A database of german emotional speech,” in Ninth European Conference on Speech Communication and Technology, 2005.

- [15] Steven R Livingstone and Frank A Russo, “The ryerson audio-visual database of emotional speech and song (ravdess): A dynamic, multimodal set of facial and vocal expressions in north american english,” PloS one, vol. 13, no. 5, pp. e0196391, 2018.

- [16] Ugo Cesari, Giuseppe De Pietro, Elio Marciano, Ciro Niri, Giovanna Sannino, and Laura Verde, “A new database of healthy and pathological voices,” Computers & Electrical Engineering, vol. 68, pp. 310–321, 2018.

- [17] M. Kathleen Pichora-Fuller and Kate Dupuis, “Toronto emotional speech set (TESS),” 2020.

- [18] P. Nantasri, E. Phaisangittisagul, J. Karnjana, S. Boonkla, S. Keerativittayanun, A. Rugchatjaroen, S. Usanavasin, and T. Shinozaki, “A light-weight artificial neural network for speech emotion recognition using average values of mfccs and their derivatives,” in 2020 17th International Conference on Electrical Engineering/Electronics, Computer, Telecommunications and Information Technology (ECTI-CON), 2020, pp. 41–44.

- [19] Anjali Bhavan, Pankaj Chauhan, Rajiv Ratn Shah, et al., “Bagged support vector machines for emotion recognition from speech,” Knowledge-Based Systems, vol. 184, pp. 104886, 2019.

- [20] Lili Chen and Junjiang Chen, “Deep neural network for automatic classification of pathological voice signals,” Journal of Voice, 2020.