Wasserstein Generative Learning of Conditional Distribution

Abstract

Conditional distribution is a fundamental quantity for describing the relationship between a response and a predictor. We propose a Wasserstein generative approach to learning a conditional distribution. The proposed approach uses a conditional generator to transform a known distribution to the target conditional distribution. The conditional generator is estimated by matching a joint distribution involving the conditional generator and the target joint distribution, using the Wasserstein distance as the discrepancy measure for these joint distributions. We establish a non-asymptotic error bound for the conditional sampling distribution generated by the proposed method and show that the method is able to mitigate the curse of dimensionality, assuming that the data distribution is supported on a lower-dimensional set. We conduct numerical experiments to validate the proposed method and illustrate its applications to conditional sample generation, nonparametric conditional density estimation, prediction uncertainty quantification, bivariate response data, image reconstruction and image generation.

Keywords: Conditional distribution; Generative learning; Neural Networks; Non-asymptotic error bounds; Nonparametric estimation.

1 Introduction

Conditional distribution is a fundamental quantity for measuring how a response variable $Y$ depends on a predictor $X$. Unlike regression methods that only model certain aspects of the relationship between $X$ and $Y$, such as the conditional mean, the conditional distribution provides a complete description of the relationship. In this paper, we propose a nonparametric generative approach to learning a conditional distribution. This approach uses a function, which we shall refer to as a conditional generator, that transforms a known reference distribution to the target conditional distribution. The conditional generator is estimated by matching the joint distribution of the predictor and the conditional generator output with the joint distribution of the predictor and the response. We use the Wasserstein distance as the discrepancy measure for matching these joint distributions.

There is a vast literature on nonparametric conditional density estimation. Many existing methods use smoothing techniques, including kernel smoothing and local polynomials (Rosenblatt, 1969; Scott, 1992; Fan et al., 1996; Hyndman et al., 1996; Hall and Yao, 2005; Bott and Kohler, 2017). Basis expansion methods have also been developed for nonparametric conditional density estimation (Izbicki and Lee, 2016; Izbicki et al., 2017). A common feature of these methods is that they seek to estimate the functional form of the conditional density. However, these methods do not work well with high-dimensional complex data. In addition, most existing methods only consider the case when the response is a scalar and are not applicable when $Y$ is a high-dimensional response vector.

The proposed approach is inspired by generative adversarial networks (GAN) (Goodfellow et al., 2014) and Wasserstein GAN (WGAN) (Arjovsky et al., 2017). These methods were developed to learn high-dimensional (unconditional) distributions nonparametrically. Instead of estimating the functional forms of density functions, GAN and WGAN start from a known reference distribution and use a function that pushes the reference distribution to the data distribution. In practice, this function, often referred to as a generator, is usually parameterized using deep neural networks. In GAN and WGAN, it is only necessary to estimate a single generator function for sampling from an (unconditional) distribution. However, to sample from a conditional distribution, the generator function necessarily depends on the given value of $X$ to be conditioned on. Since it is difficult to estimate a separate generator function for every possible value of $X$, an effective approach is to formulate the generator function as a map from the product of the reference space and the space of $X$ to the space of $Y$. The existence of such a map is guaranteed by the noise-outsourcing lemma in probability theory (Kallenberg, 2002).

Several authors have generalized GANs to the setting of learning a conditional distribution. Mirza and Osindero (2014) proposed conditional generative adversarial networks (cGAN). Like GAN, cGAN solves a two-player minimax game using an objective function of the same form as that of the original GAN (Goodfellow et al., 2014). Kovachki et al. (2021) proposed a conditional sampling approach with monotone GANs. A limitation of this approach is that it requires the dimension of the reference distribution to be the same as the dimension of $Y$. In high-dimensional settings, this does not allow the exploitation of a possible low-dimensional latent structure in the data. Zhou et al. (2021) proposed a generative approach to conditional sampling based on the noise-outsourcing lemma and distribution matching, using the Kullback-Leibler divergence to match the generator distribution and the data distribution. They established consistency of the conditional sampler with respect to the total variation distance, with the help of Pinsker's inequality, which bounds the total variation distance via the Kullback-Leibler divergence (Tsybakov, 2008). However, it is difficult to establish the convergence rate of the conditional sampling distribution with the Kullback-Leibler divergence.

Although the Kullback-Leibler divergence is an attractive discrepancy measure for distributions, it has some drawbacks in the present setting (Arjovsky et al., 2017). First, the Kullback-Leibler divergence is a strong divergence measure; for example, it is stronger than the Jensen-Shannon divergence and the total variation distance. Weak convergence of distributions does not imply their convergence in Kullback-Leibler divergence. Second, in high-dimensional problems, we are often interested in learning distributions with a low-dimensional latent structure, whose density functions may not exist. In this case, it is not sensible to use the Kullback-Leibler divergence. In contrast, the 1-Wasserstein distance metrizes weak convergence (together with convergence of first moments) on the space of probability distributions with finite first moment. This enables us to obtain non-asymptotic error bounds and the convergence rate of the proposed method; see Theorems 3.1 and 3.2 in Section 3.1. Since the computation of the proposed method does not involve density functions, we can use it to learn distributions without a density function, such as distributions supported on a set with a lower intrinsic dimension than the ambient dimension. We show that the proposed method has an improved convergence rate under a low-dimensional support assumption and can mitigate the curse of dimensionality; see Theorem 3.4 in Section 3.2.

The proposed method for learning a conditional distribution has several appealing properties compared with the standard conditional density estimation methods. First, the proposed method can use a reference distribution with a lower dimension than that of the target distribution; therefore, it can learn conditional distributions with a lower-dimensional latent structure by using a low-dimensional reference distribution. Second, there is no restriction on the dimensionality of the response variable, while the standard methods typically only consider the case of a scalar response variable. Third, the proposed method allows continuous, discrete and mixed types of predictors and responses, while the smoothing and basis expansion methods only deal with continuous-type variables. Finally, since the computation of the Wasserstein distance does not involve density functions, it can be used as a loss function for learning distributions without a density function, such as distributions supported on a set with a lower intrinsic dimension than the ambient dimension. Also, by using the Wasserstein distance, we are able to establish a non-asymptotic error bound for the generated sampling distribution. To the best of our knowledge, this is the first error bound result in the context of conditional generative learning.

The remainder of this paper is organized as follows. In Section 2 we present the proposed method. In Section 3 we establish non-asymptotic error bounds, in terms of the Dudley metric, for the sampling distribution generated by the proposed method. We also show that the proposed method is able to mitigate the curse of dimensionality under the assumption that the joint distribution of $(X, Y)$ is supported on a set with a low Minkowski dimension. In Section 4 we describe the implementation of the proposed method. In Section 5 we conduct numerical experiments to validate the proposed method and illustrate its applications in conditional sample generation, nonparametric conditional density estimation, visualization of multivariate data, image generation and image reconstruction. Concluding remarks are given in Section 6. Additional numerical results and technical details are given in the appendices.

2 Method

We first describe an approach to representing a conditional distribution via a conditional generator function based on the noise-outsourcing lemma. We then describe the proposed method based on matching distributions using the Wasserstein metric.

2.1 Conditional sampling based on noise outsourcing

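Consider a pair of random vectors $(X, Y)$, where $X \in \mathcal{X} \subseteq \mathbb{R}^d$ and $Y \in \mathcal{Y} \subseteq \mathbb{R}^q$. Suppose $(X, Y) \sim P_{X,Y}$ with marginal distributions $P_X$ and $P_Y$. Denote the conditional distribution of $Y$ given $X$ by $P_{Y|X}$. For a given value $x$ of $X$, we also write the conditional distribution as $P_{Y|X=x}$. For regression problems, $\mathcal{Y} = \mathbb{R}^q$ with $q \ge 1$; for classification problems, $\mathcal{Y}$ is a set of finitely many labels. We assume $\mathcal{X} \subseteq \mathbb{R}^d$ with $d \ge 1$. The random vectors $X$ and $Y$ can contain both continuous and categorical components. Let $\eta \in \mathbb{R}^m$ be a random vector independent of $X$ with a known distribution $P_\eta$ that is easy to sample from, such as a normal distribution.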

We are interested in finding a function $G: \mathbb{R}^m \times \mathcal{X} \to \mathcal{Y}$ such that the conditional distribution of $G(\eta, X)$ given $X = x$ equals the conditional distribution of $Y$ given $X = x$. Since $\eta$ is independent of $X$, this is equivalent to finding a $G$ such that

$$G(\eta, x) \sim P_{Y|X=x}, \quad x \in \mathcal{X}. \tag{2.1}$$

Therefore, to sample from the conditional distribution $P_{Y|X=x}$, we can first sample $\eta \sim P_\eta$ and calculate $G(\eta, x)$; the resulting $G(\eta, x)$ is a sample from $P_{Y|X=x}$. Because of this property, we shall refer to $G$ as a conditional generator. This approach has also been used in Zhou et al. (2021). The existence of such a $G$ is guaranteed by the noise-outsourcing lemma (Theorem 5.10 in Kallenberg (2002)). For ease of reference, we state it here with a slight modification.

Lemma 2.1.

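(Noise-outsourcing lemma). Suppose $\mathcal{Y}$ is a standard Borel space. Then there exist a random vector $\eta \sim N(0, I_m)$ for a given $m \ge 1$ and a Borel-measurable function $G: \mathbb{R}^m \times \mathcal{X} \to \mathcal{Y}$ such that $\eta$ is independent of $X$ and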

$$(X, Y) = (X, G(\eta, X)) \quad \text{almost surely}. \tag{2.2}$$

In Lemma 2.1, the noise distribution is taken to be $N(0, I_m)$ with $m \ge 1$, since it is convenient to generate random numbers from a standard normal distribution. This version is a simple consequence of the original noise-outsourcing lemma, which uses the uniform distribution on $[0, 1]$ for the noise distribution. The dimension $m$ of the noise vector $\eta$ does not need to be the same as $q$, the dimension of $Y$.

2.2 Wasserstein generative conditional sampler

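Let $\mathcal{Z}$ be a subset of $\mathbb{R}^k$ on which the measures we consider are defined. Let $\mathcal{P}^1(\mathcal{Z})$ be the set of Borel probability measures on $\mathcal{Z}$ with finite first moment, that is, the set of probability measures $\mu$ on $\mathcal{Z}$ such that $\int_{\mathcal{Z}} \|z\|_1 \, d\mu(z) < \infty$, where $\|\cdot\|_1$ denotes the 1-norm in $\mathbb{R}^k$. The 1-Wasserstein metric between $\mu, \nu \in \mathcal{P}^1(\mathcal{Z})$ is defined as

$$W_1(\mu, \nu) = \inf_{\gamma \in \Gamma(\mu, \nu)} \int_{\mathcal{Z} \times \mathcal{Z}} \|u - v\|_1 \, d\gamma(u, v), \tag{2.3}$$

where $\Gamma(\mu, \nu)$ is the set of joint probability distributions with marginals $\mu$ and $\nu$. The 1-Wasserstein metric is also known as the earth mover's distance. A computationally more convenient form of the 1-Wasserstein metric is the Kantorovich-Rubinstein dual (Villani, 2008),

$$W_1(\mu, \nu) = \sup_{f \in \mathcal{F}^1} \left\{ \mathbb{E}_{U \sim \mu} f(U) - \mathbb{E}_{V \sim \nu} f(V) \right\}, \tag{2.4}$$

where $\mathcal{F}^1$ is the 1-Lipschitz class,

$$\mathcal{F}^1 = \left\{ f: \mathcal{Z} \to \mathbb{R} : |f(z_1) - f(z_2)| \le \|z_1 - z_2\|_1, \ z_1, z_2 \in \mathcal{Z} \right\}. \tag{2.5}$$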

When only random samples from $\mu$ and $\nu$ are available, as is the case in practice, an empirical version of (2.4) is obtained by replacing the expectations with sample averages.
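As a small illustration of an empirical version, in one dimension the 1-Wasserstein distance between two equal-size samples has a closed form: the optimal coupling in (2.3) pairs order statistics, so $W_1$ is the mean absolute difference between the sorted samples. The NumPy sketch below assumes scalar data and equal sample sizes, and the function name `empirical_w1_1d` is hypothetical. In higher dimensions no such closed form exists, which is one reason the supremum in (2.4) is instead approximated with a discriminator network.

```python
import numpy as np

def empirical_w1_1d(u, v):
    """1-Wasserstein distance between two equal-size one-dimensional samples.

    In one dimension the optimal coupling pairs the i-th smallest point of
    one sample with the i-th smallest point of the other.
    """
    u = np.sort(np.asarray(u, dtype=float))
    v = np.sort(np.asarray(v, dtype=float))
    if u.shape != v.shape:
        raise ValueError("this sketch assumes samples of equal size")
    return float(np.mean(np.abs(u - v)))

rng = np.random.default_rng(0)
a = rng.normal(0.0, 1.0, size=5000)
b = rng.normal(0.5, 1.0, size=5000)   # same shape, location shifted by 0.5
print(empirical_w1_1d(a, b))
```

For two normal distributions that differ only by a location shift of 0.5, the population $W_1$ equals 0.5, so the printed value should be close to that.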

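We apply the 1-Wasserstein metric (2.4) to the present conditional generative learning problem, that is, we seek a conditional generator function $G$ satisfying (2.1). The basic idea is to formulate this problem as a minimization problem based on the 1-Wasserstein metric. By Lemma 2.1, we can find a conditional generator by matching the joint distributions $P_{X, G(\eta, X)}$ and $P_{X,Y}$. By (2.4), the 1-Wasserstein distance between $P_{X, G(\eta, X)}$ and $P_{X,Y}$ is

$$W_1(P_{X, G(\eta, X)}, P_{X,Y}) = \sup_{f \in \mathcal{F}^1} \left\{ \mathbb{E} f(X, G(\eta, X)) - \mathbb{E} f(X, Y) \right\}.$$

We have $W_1(P_{X, G(\eta, X)}, P_{X,Y}) \ge 0$ for every measurable $G$, and $W_1(P_{X, G(\eta, X)}, P_{X,Y}) = 0$ if and only if $P_{X, G(\eta, X)} = P_{X,Y}$. Therefore, a necessary and sufficient condition for

$$(X, G(\eta, X)) \sim (X, Y)$$

is $W_1(P_{X, G(\eta, X)}, P_{X,Y}) = 0$, which implies $G(\eta, x) \sim P_{Y|X=x}$, $x \in \mathcal{X}$. It follows that the problem of finding the conditional generator can be formulated as the minimax problem:

$$G^* \in \arg\min_{G} \sup_{D \in \mathcal{F}^1} \mathcal{L}(G, D),$$

where

$$\mathcal{L}(G, D) = \mathbb{E}\, D(X, G(\eta, X)) - \mathbb{E}\, D(X, Y). \tag{2.6}$$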

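Let $\{(X_i, Y_i)\}_{i=1}^n$ be a random sample from $P_{X,Y}$ and let $\{\eta_i\}_{i=1}^n$ be independently generated from $P_\eta$. The empirical version of $\mathcal{L}(G, D)$ based on $\{(X_i, Y_i, \eta_i)\}_{i=1}^n$ is

$$\widehat{\mathcal{L}}(G, D) = \frac{1}{n} \sum_{i=1}^n \left\{ D(X_i, G(\eta_i, X_i)) - D(X_i, Y_i) \right\}. \tag{2.7}$$

We use a feedforward neural network $G_\theta$ with parameter $\theta$ for estimating the conditional generator $G$ and a second network $D_\phi$ with parameter $\phi$ for estimating the discriminator $D$. We refer to Goodfellow et al. (2016) for a detailed description of neural network functions. We estimate $\theta$ and $\phi$ by solving the minimax problem:

$$(\hat{\theta}, \hat{\phi}) = \arg\min_{\theta} \arg\max_{\phi} \widehat{\mathcal{L}}(G_\theta, D_\phi). \tag{2.8}$$

The estimated conditional generator is $\hat{G} = G_{\hat{\theta}}$ and the estimated discriminator is $\hat{D} = D_{\hat{\phi}}$.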
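The following sketch evaluates the empirical objective (2.7) for plain Python functions standing in for $G_\theta$ and $D_\phi$. The toy data model $Y = 2X + 0.1\varepsilon$, the two generators and the critic $D(x, y) = -\|y\|_1$ (which is 1-Lipschitz with respect to the 1-norm of $(x, y)$) are hypothetical choices for illustration only; the proposed method uses neural networks and solves (2.8) by alternating gradient updates.

```python
import numpy as np

def empirical_objective(G, D, x, y, eta):
    """Empirical objective (2.7): average of D(X_i, G(eta_i, X_i)) - D(X_i, Y_i)."""
    return float(np.mean(D(x, G(eta, x)) - D(x, y)))

def D_abs(x, y):
    # Hypothetical critic D(x, y) = -||y||_1, 1-Lipschitz w.r.t. the 1-norm of (x, y).
    return -np.sum(np.abs(y), axis=1)

def G_wrong(eta, x):
    return x + 0.1 * eta          # hypothetical generator with the wrong slope

def G_right(eta, x):
    return 2.0 * x + 0.1 * eta    # satisfies (2.1) for the toy model below

rng = np.random.default_rng(1)
n = 2000
x = rng.uniform(-1.0, 1.0, size=(n, 1))
y = 2.0 * x + 0.1 * rng.normal(size=(n, 1))   # toy data: Y = 2X + noise
eta = rng.normal(size=(n, 1))                  # reference noise, independent of X

value_wrong = empirical_objective(G_wrong, D_abs, x, y, eta)
value_right = empirical_objective(G_right, D_abs, x, y, eta)
print(value_wrong, value_right)   # clearly positive vs. near zero
```

Because any single 1-Lipschitz $D$ gives a lower bound on the supremum in the minimax problem, a clearly positive value shows that `G_wrong` does not reproduce the joint distribution. A value near zero for one fixed $D$, as for `G_right`, is consistent with a match but does not establish it; the maximization over $D$ is what makes the criterion sharp.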

We show below that, for $x \in \mathcal{X}$, $\hat{G}(\eta, x)$ converges in distribution to the conditional distribution $P_{Y|X=x}$. Therefore, we can use $\hat{G}$ to learn this conditional distribution. Specifically, for an integer $J$, we generate a random sample $\{\eta_j\}_{j=1}^J$ from the reference distribution and calculate $\{\hat{G}(\eta_j, x)\}_{j=1}^J$, which are approximately distributed as $P_{Y|X=x}$. Since generating random samples from $P_\eta$ and calculating $\hat{G}(\eta_j, x)$ are inexpensive, the computational cost is low once $\hat{G}$ is obtained. For $q = 1$, the sample quantiles of $\{\hat{G}(\eta_j, x)\}_{j=1}^J$ immediately give estimated conditional quantiles of $Y$ given $X = x$. Moreover, for any function $g$, the noise-outsourcing lemma gives $\mathbb{E}[g(Y) \mid X = x] = \mathbb{E}_{\eta}\, g(G(\eta, x))$. We can estimate this conditional expectation by $J^{-1} \sum_{j=1}^J g(\hat{G}(\eta_j, x))$. In particular, the conditional mean and conditional variance of $Y$ can be estimated in a straightforward way. In Section 5 below, we illustrate this approach with a range of examples.
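The Monte Carlo recipe above can be sketched as follows. To make the output checkable, a known generator $G(\eta, x) = \sin(x) + (0.5 + 0.5|x|)\,\eta$ with $\eta \sim N(0, 1)$ stands in for $\hat{G}$; this toy model and the helper names are assumptions for illustration, not part of the proposed method. Under this model, $Y \mid X = x$ is normal with mean $\sin(x)$ and standard deviation $0.5 + 0.5|x|$.

```python
import numpy as np
from statistics import NormalDist

def G_hat(eta, x):
    # Hypothetical stand-in for an estimated conditional generator.
    return np.sin(x) + (0.5 + 0.5 * np.abs(x)) * eta

def conditional_summaries(G, x, J=100_000, probs=(0.05, 0.5, 0.95), seed=0):
    """Monte Carlo estimates of the conditional mean, SD and quantiles of Y given X = x."""
    rng = np.random.default_rng(seed)
    eta = rng.normal(size=J)          # eta_1, ..., eta_J from the reference distribution
    samples = G(eta, x)               # approximately distributed as P_{Y|X=x}
    return samples.mean(), samples.std(ddof=1), np.quantile(samples, probs)

x0 = 1.0
mean, sd, q = conditional_summaries(G_hat, x0)
true_q = [np.sin(x0) + (0.5 + 0.5 * abs(x0)) * NormalDist().inv_cdf(p)
          for p in (0.05, 0.5, 0.95)]
print(mean, np.sin(x0))   # estimated vs. true conditional mean
print(sd, 1.0)            # estimated vs. true conditional SD
print(q, true_q)          # estimated vs. true conditional quantiles
```

Replacing `samples.mean()` with the average of `g(samples)` gives the estimate of $\mathbb{E}[g(Y) \mid X = x]$ for any other function $g$.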

3 Non-asymptotic error analysis

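We establish non-asymptotic error bounds for the proposed method in terms of the integral probability metric (Müller, 1997)

$$d_{\mathcal{F}_B^1}(\mu, \nu) = \sup_{f \in \mathcal{F}_B^1} \left\{ \mathbb{E}_{U \sim \mu} f(U) - \mathbb{E}_{V \sim \nu} f(V) \right\},$$

where $\mathcal{F}_B^1$ is the uniformly bounded 1-Lipschitz function class,

$$\mathcal{F}_B^1 = \left\{ f: \mathbb{R}^{d+q} \to \mathbb{R} : |f(z_1) - f(z_2)| \le \|z_1 - z_2\|, \ \|f\|_\infty \le B \right\},$$

for some constant $0 < B < \infty$. The metric $d_{\mathcal{F}_B^1}$ is also known as the bounded Lipschitz metric ($d_{BL}$), which metrizes the weak topology on the space of probability distributions. If the distributions have bounded support, then $d_{\mathcal{F}_B^1}$ is essentially the same as the 1-Wasserstein distance.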

Let $Z = (X, Y)$. We make the following assumptions.

Assumption 1.

For some , satisfies the first moment tail condition

Assumption 2.

The noise distribution $P_\eta$ is absolutely continuous with respect to the Lebesgue measure.

These are two mild assumptions. Assumption 1 is a technical condition for dealing with the case when the support of $Z$ is an unbounded subset of $\mathbb{R}^{d+q}$. It can be shown that Assumption 1 is equivalent to When $Z$ has a bounded support, Assumption 1 is automatically satisfied. Moreover, this assumption is satisfied if $Z$ is subgaussian. Assumption 2 is satisfied by commonly used reference distributions such as the normal distribution.

For the generator network $G_\theta$, we require that

| (3.1) |

This requirement can be met by adding a clipping layer after the original output layer of the network,

where the clipping operation truncates each component of the output. We truncate the value of $G_\theta$ to a cube that grows with the sample size $n$, so that the evaluation functions only need to be considered on this cube. This restricts the evaluation function class to a bounded domain.
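A componentwise clipping layer of this kind can be sketched as a wrapper around any generator. The bound `c` below is a hypothetical parameter, and the wrapper names are illustrative; the specific cube used in the analysis, which grows with $n$, is not reproduced here.

```python
import numpy as np

def clip_output(raw_output, c):
    """Componentwise truncation: a if |a| <= c, otherwise c * sign(a)."""
    return np.clip(raw_output, -c, c)

def clipped_generator(base_generator, c):
    """Wrap a generator so that every output component lies in [-c, c]."""
    def G(eta, x):
        return clip_output(base_generator(eta, x), c)
    return G

# Usage with a hypothetical, unbounded base generator.
G = clipped_generator(lambda eta, x: 10.0 * eta + x, c=3.0)
out = G(np.array([[-1.0], [0.05], [2.0]]), np.zeros((3, 1)))
print(out.ravel())   # every component now lies in [-3, 3]
```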

3.1 Non-asymptotic error bound

Let $D_\phi$ be a feedforward ReLU discriminator network and $G_\theta$ a feedforward ReLU generator network, each with a given depth and width. Denote the joint distribution of $(X, \hat{G}(\eta, X))$ by $P_{X, \hat{G}}$.

Theorem 3.1.

When $Z$ has a bounded support, we can drop the logarithmic factor in the error bound.

Theorem 3.2.

Suppose that is supported on for . Let of and of be specified such that and for some constants . Let the output of be on . Then, under Assumption 2, we have

where is a constant independent of .

The error bound for the conditional distribution follows as a corollary.

Corollary 3.3.

Under the same assumptions and conditions as in Theorem 3.2, we have

where is a constant independent of .

The proofs of Theorems 3.1 and 3.2 and Corollary 3.3 are given in the supplementary material. Assumption 1 only concerns the first moment tail of the joint distribution of $(X, Y)$. Assumption 2 requires the noise distribution to be absolutely continuous with respect to the Lebesgue measure. These assumptions are easily satisfied in practice. Moreover, we have made explicit how the prefactor in the error bound depends on the dimension of $Z$. This is useful in high-dimensional settings, since the dependence of the prefactor on the dimension plays an important role in determining the quality of the bound. Here the prefactors in the error bounds depend on the dimensions $d$ and $q$ through . The convergence rate is .

Unfortunately, these results suffer from the curse of dimensionality in the sense that the quality of the error bound deteriorates quickly when $d + q$ becomes large. It is generally not possible to avoid the curse of dimensionality without any conditions on the distribution of $(X, Y)$. Detailed discussions of this problem in the context of nonparametric regression using neural networks can be found in Bauer and Kohler (2019), Schmidt-Hieber (2020), Jiao et al. (2021) and the references therein. In particular, Jiao et al. (2021) also provided an analysis of how the prefactor depends on the dimension of the predictor. To mitigate the curse of dimensionality, certain assumptions on the distribution of $(X, Y)$ are needed. We consider one such assumption below.

3.2 Mitigating the curse of dimensionality

If the joint distribution of $(X, Y)$ is supported on a low-dimensional set, we can improve the convergence rate substantially. Low-dimensional structure of complex data has frequently been observed in image analysis, computer vision and natural language processing, so this is often a reasonable assumption. We use the Minkowski dimension as a measure of the dimensionality of a set (Bishop and Peres, 2016).

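Let $A$ be a subset of $\mathbb{R}^{d+q}$. For any $\epsilon > 0$, the covering number $\mathcal{N}(\epsilon, A)$ is the minimum number of balls with radius $\epsilon$ needed to cover $A$ (Van der Vaart and Wellner, 1996). The upper and lower Minkowski dimensions of $A$ are defined respectively as

$$\overline{\dim}_M(A) = \limsup_{\epsilon \to 0} \frac{\log \mathcal{N}(\epsilon, A)}{-\log \epsilon}, \qquad \underline{\dim}_M(A) = \liminf_{\epsilon \to 0} \frac{\log \mathcal{N}(\epsilon, A)}{-\log \epsilon}.$$

If $\overline{\dim}_M(A) = \underline{\dim}_M(A) = \dim_M(A)$, then $\dim_M(A)$ is called the Minkowski dimension or the metric dimension of $A$.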
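In practice the covering number is often approximated by box counting: count the grid cells of side $\epsilon$ that contain points of the set, and read off the slope of $\log \mathcal{N}$ against $\log(1/\epsilon)$. The sketch below uses grid boxes instead of balls, which changes the covering number only by constant factors and so gives the same dimension; the point sets and function names are hypothetical. It recovers intrinsic dimensions 1 and 2 for a segment and a flat square, both embedded in the ambient space $\mathbb{R}^3$.

```python
import numpy as np

def box_count(points, eps):
    """Number of grid cells of side eps that contain at least one point."""
    cells = np.floor(points / eps).astype(np.int64)
    return len(np.unique(cells, axis=0))

def box_counting_dimension(points, ks):
    """Least-squares slope of log N(eps) on log(1/eps), with eps = 2**-k for k in ks."""
    eps = 2.0 ** -np.asarray(list(ks), dtype=float)
    counts = np.array([box_count(points, e) for e in eps])
    slope, _ = np.polyfit(np.log(1.0 / eps), np.log(counts), 1)
    return slope

# A line segment in R^3 (intrinsic dimension 1).
t = np.linspace(0.0, 1.0, 100_000, endpoint=False)
segment = np.column_stack([t, 0.5 * t, 0.25 * t])

# A flat square in R^3 (intrinsic dimension 2).
u = np.linspace(0.0, 1.0, 256, endpoint=False)
uu, vv = np.meshgrid(u, u)
square = np.column_stack([uu.ravel(), vv.ravel(), np.zeros(uu.size)])

dim_segment = box_counting_dimension(segment, range(2, 8))
dim_square = box_counting_dimension(square, range(2, 7))
print(dim_segment, dim_square)   # close to 1 and 2, not the ambient dimension 3
```

The range of scales must stay coarse relative to the point spacing; at scales finer than the sampling resolution every point occupies its own box and the estimate breaks down.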

The Minkowski dimension measures how fast the covering number of a set grows as the radius of the covering balls goes to 0. When $A$ is a smooth manifold, its Minkowski dimension equals its topological dimension, characterized by the local homeomorphisms. The Minkowski dimension can also be used to measure the dimensionality of highly irregular sets, such as fractals (Falconer, 2004). Nakada and Imaizumi (2020) and Jiao et al. (2021) have shown that deep neural networks can adapt to the low-dimensional structure of the data and mitigate the curse of dimensionality in nonparametric regression. We show that similar results can be obtained for the proposed method if the data distribution is supported on a set with low Minkowski dimension.

Assumption 3.

Suppose is supported on a bounded set and .

Theorem 3.4.

In comparison with Theorem 3.2, where the rate of convergence depends on the ambient dimension $d + q$, the convergence rate in Theorem 3.4 is determined by the Minkowski dimension of the support of $Z$. Therefore, the assumption of a low Minkowski dimension on the support of the data distribution alleviates the curse of dimensionality. However, unless better error bounds are available for approximating Lipschitz functions on sets of low Minkowski dimension with neural networks, the prefactor in Theorem 3.4 still depends on the ambient dimension $d + q$: even though the convergence rate depends only on the Minkowski dimension, the prefactor does not.

4 Implementation

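The estimator $(\hat{\theta}, \hat{\phi})$ of the neural network parameters is the solution to the minimax problem

$$(\hat{\theta}, \hat{\phi}) = \arg\min_{\theta} \arg\max_{\phi:\, D_\phi \in \mathcal{F}^1} \frac{1}{n} \sum_{i=1}^n \left\{ D_\phi(X_i, G_\theta(\eta_i, X_i)) - D_\phi(X_i, Y_i) \right\}. \tag{4.1}$$

We use the gradient penalty algorithm to impose the constraint that the discriminator belongs to the class of 1-Lipschitz functions (Gulrajani et al., 2017). The minimax problem (4.1) is solved by updating $\phi$ and $\theta$ alternately as follows: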

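(a) Fix $\theta$ and update the discriminator by maximizing the empirical objective function

$$\widehat{\mathcal{L}}_D(\phi) = \frac{1}{n} \sum_{i=1}^n D_\phi(X_i, G_\theta(\eta_i, X_i)) - \frac{1}{n} \sum_{i=1}^n D_\phi(X_i, Y_i) - \lambda \frac{1}{n} \sum_{i=1}^n \left( \left\| \nabla_{(x, y)} D_\phi(X_i, \tilde{Y}_i) \right\|_2 - 1 \right)^2$$

with respect to $\phi$, where $\nabla_{(x,y)} D_\phi(X_i, \tilde{Y}_i)$ is the gradient of $D_\phi(x, y)$ with respect to $(x, y)$ evaluated at $(X_i, \tilde{Y}_i)$, $\tilde{Y}_i$ is a random interpolation between $Y_i$ and $G_\theta(\eta_i, X_i)$, and $\lambda > 0$ is a tuning parameter. The third term on the right side is the gradient penalty for the Lipschitz condition on the discriminator (Gulrajani et al., 2017).

(b) Fix $\phi$ and update the generator by minimizing the empirical objective function

$$\widehat{\mathcal{L}}_G(\theta) = \frac{1}{n} \sum_{i=1}^n D_\phi(X_i, G_\theta(\eta_i, X_i))$$

with respect to $\theta$.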
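The discriminator objective in step (a) can be sketched as follows. This is a minimal NumPy illustration, not the TensorFlow implementation: the one-hidden-layer critic, its weights, the penalty weight `lam=10.0` (the value used by Gulrajani et al., 2017) and the uniform interpolation scheme are assumptions, and the gradient is approximated by central finite differences where a real implementation would use automatic differentiation.

```python
import numpy as np

rng = np.random.default_rng(0)

def critic(x, y, params):
    """Hypothetical one-hidden-layer ReLU critic D_phi(x, y)."""
    W1, b1, w2 = params
    h = np.maximum(0.0, np.concatenate([x, y], axis=1) @ W1 + b1)
    return h @ w2

def grad_norm(x, y, params, h=1e-5):
    """Euclidean norm of the gradient of D_phi w.r.t. (x, y), by central differences."""
    z = np.concatenate([x, y], axis=1)
    d = x.shape[1]
    grads = np.zeros_like(z)
    for k in range(z.shape[1]):
        step = np.zeros(z.shape[1])
        step[k] = h
        zp, zm = z + step, z - step
        grads[:, k] = (critic(zp[:, :d], zp[:, d:], params)
                       - critic(zm[:, :d], zm[:, d:], params)) / (2.0 * h)
    return np.linalg.norm(grads, axis=1)

def critic_objective(x, y, y_fake, params, lam=10.0):
    """Step (a) objective: fake mean - real mean - lam * gradient penalty."""
    tau = rng.uniform(size=(x.shape[0], 1))
    y_tilde = tau * y + (1.0 - tau) * y_fake      # points between real and generated responses
    penalty = np.mean((grad_norm(x, y_tilde, params) - 1.0) ** 2)
    return (np.mean(critic(x, y_fake, params))
            - np.mean(critic(x, y, params))
            - lam * penalty)

# Usage with hypothetical weights; x and y are one-dimensional here.
W1 = np.array([[0.3, -0.2, 0.5, 0.1], [0.4, 0.6, -0.1, 0.2]])
b1 = np.full(4, 100.0)        # large bias keeps every ReLU active, so D is linear here
w2 = np.array([0.5, -0.25, 0.1, 0.2])
params = (W1, b1, w2)
x = np.linspace(-1.0, 1.0, 8).reshape(-1, 1)
y = np.linspace(0.0, 2.0, 8).reshape(-1, 1)
print(critic_objective(x, y, y + 1.0, params))
```

Step (b) would then minimize the average of `critic(x, G(eta, x), params)` over the generator parameters, since the second term of the objective does not depend on $\theta$.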

We implemented this algorithm in TensorFlow (Abadi et al., 2016).

We also implemented the weight clipping method for enforcing the Lipschitz condition on the discriminator (Arjovsky et al., 2017). With weight clipping, all weights in the discriminator are truncated to the interval $[-c, c]$, where $c$ is a small number. We found that the gradient penalty method is more stable and converges faster, so we only report numerical results based on the gradient penalty method below.

5 Numerical experiments

We carry out numerical experiments to evaluate the finite sample performance of the proposed method described in Section 2 and illustrate its applications using examples in conditional sample generation, nonparametric conditional density estimation, visualization of multivariate data, and image generation and reconstruction. Additional numerical results are provided in the supplementary material. For all the experiments below, the noise vector $\eta$ is generated from a standard multivariate normal distribution. We implemented the proposed method in TensorFlow (Abadi et al., 2016) and used the stochastic optimization algorithm Adam (Kingma and Ba, 2015) to train the neural networks.

5.1 Conditional sample generation: the two-moon dataset

We use the two-moon data example to illustrate the use of the proposed method for generating conditional samples. Let be the class label and let . The two-moon model is

| (5.1) |

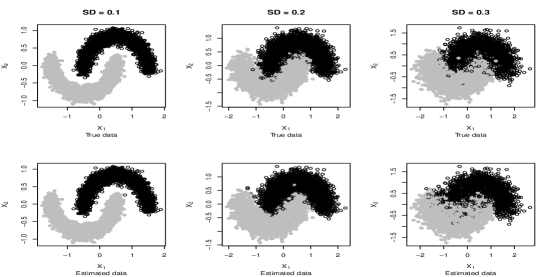

where , are independent and identically distributed as We generate three sets of random samples of size with for each class and and 0.3 from this model. The generated datasets are shown in the first row of Figure 1.

We use the simulated data to estimate the conditional generator, which is parameterized as a two-layer fully-connected network with 30 and 20 nodes. The discriminator is also a two-layer fully-connected network with 40 and 20 nodes. The noise vector $\eta$ is drawn from a standard normal distribution. The activation function for the hidden layers of the generator and the discriminator is ReLU. For the output layer of the generator, the activation function is the hyperbolic tangent function. The generated samples $\hat{G}(\eta_j, x)$ are shown in the second row of Figure 1. The scatter plots of the generated samples are similar to those of the simulated data, which provides a visual validation of the estimated conditional generator in this toy example.
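The sketch below generates two-moon data and passes noise and labels through an untrained generator with the architecture described above (hidden layers of 30 and 20 ReLU units, tanh output). Since the display for model (5.1) is not reproduced here, the data follow the common two-moon construction used, for example, by scikit-learn's `make_moons`, which may differ in detail from (5.1); the noise dimension `m = 2`, the class size and `sigma = 0.1` are likewise hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

def two_moons(n_per_class, sigma, rng):
    """Common two-moon construction; may differ in detail from model (5.1)."""
    t0 = rng.uniform(0.0, np.pi, n_per_class)
    t1 = rng.uniform(0.0, np.pi, n_per_class)
    upper = np.column_stack([np.cos(t0), np.sin(t0)])
    lower = np.column_stack([1.0 - np.cos(t1), 0.5 - np.sin(t1)])
    y = np.vstack([upper, lower]) + sigma * rng.normal(size=(2 * n_per_class, 2))
    x = np.repeat([0.0, 1.0], n_per_class).reshape(-1, 1)   # class label X
    return x, y

def init_mlp(sizes, rng):
    """Random (untrained) weights for a fully connected network."""
    return [(rng.normal(0.0, 1.0 / np.sqrt(a), size=(a, b)), np.zeros(b))
            for a, b in zip(sizes[:-1], sizes[1:])]

def generator(eta, x, layers):
    """G(eta, x): ReLU hidden layers followed by a tanh output layer."""
    h = np.concatenate([eta, x], axis=1)
    for W, b in layers[:-1]:
        h = np.maximum(0.0, h @ W + b)
    W, b = layers[-1]
    return np.tanh(h @ W + b)

m = 2                                        # hypothetical noise dimension
x, y = two_moons(500, 0.1, rng)
layers = init_mlp([m + 1, 30, 20, 2], rng)   # 30 and 20 hidden nodes, 2 outputs
samples = generator(rng.normal(size=(x.shape[0], m)), x, layers)
print(y.shape, samples.shape)
```

The random weights only demonstrate the input and output shapes; training would adjust them with the alternating updates of Section 4.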

5.2 Nonparametric conditional density estimation

We consider the problem of estimating the conditional mean and conditional standard deviation in nonparametric conditional density models. We compare the proposed Wasserstein generative conditional sampling method, referred to as WGCS in Table 1, with three existing conditional density estimation methods: the nearest neighbor kernel conditional density estimator (NNKCDE) (Dalmasso et al., 2020), the conditional kernel density estimator (CKDE), implemented in the R package np (Hall et al., 2004), and the basis expansion method FlexCode (Izbicki et al., 2017). We simulated data from the following three models:

• Model 1 (M1). A nonlinear model with an additive error term:

• Model 2 (M2). A model with a multiplicative non-Gaussian error term:

where

• Model 3 (M3). A mixture of two normal distributions:

where and is independent of .

In each model, the covariate vector $X$ is generated from . Thus the ambient dimension of $X$ is 100, but (M1) and (M2) only depend on the first 5 components of $X$, and (M3) only depends on the first 2 components of $X$. The sample size .

For the proposed method, the conditional generator is parameterized using a one-layer neural network in models (M1) and (M2), and by a two-layer fully connected neural network in (M3). The discriminator is parameterized using a two-layer fully connected neural network. The noise vector $\eta$ is drawn from a standard normal distribution.

For the conditional density estimation method NNKCDE, the tuning parameters are chosen using cross-validation. The bandwidth of the conditional kernel density estimator CKDE is determined by a standard rule-of-thumb formula, in which the bandwidth of the $j$th variable is proportional to a measure of spread of that variable and shrinks with the sample size at a rate determined by the order of the kernel and the dimension of $X$. The basis expansion method FlexCode uses a Fourier basis. The maximum number of basis functions is set to 40, and the actual number is selected using cross-validation.

| Model | Quantity | WGCS | NNKCDE | CKDE | FlexCode |
|---|---|---|---|---|---|
| M1 | Mean | 1.10(0.05) | 2.49(0.01) | 3.30(0.02) | 2.30(0.01) |
| M1 | SD | 0.24(0.04) | 0.43(0.01) | 0.59(0.01) | 1.06(0.08) |
| M2 | Mean | 3.71(0.23) | 6.09(0.07) | 66.76(2.06) | 10.20(0.33) |
| M2 | SD | 3.52(0.17) | 9.33(0.23) | 18.87(0.59) | 11.08(0.34) |
| M3 | Mean | 0.32(0.03) | 0.11(0.01) | 1.55(0.03) | 0.12(0.04) |
| M3 | SD | 0.10(0.01) | 0.36(0.01) | 0.51(0.01) | 0.33(0.01) |

We consider the mean squared error (MSE) of the estimated conditional mean $\hat{\mathbb{E}}(Y \mid X = x)$ and the estimated conditional standard deviation $\widehat{\mathrm{SD}}(Y \mid X = x)$, computed over a test data set $\{x_k\}_{k=1}^K$. For the proposed method, we first generate samples $\{\eta_j\}_{j=1}^J$ from the reference distribution and calculate the conditional samples $\{\hat{G}(\eta_j, x_k)\}_{j=1}^J$. The conditional mean is then estimated by the Monte Carlo average and the conditional standard deviation by the sample standard deviation of these conditional samples; for the other methods, both quantities are calculated by numerical integration of the estimated conditional density. The MSE of the estimated conditional mean is

$$\frac{1}{K} \sum_{k=1}^K \left\{ \hat{\mathbb{E}}(Y \mid X = x_k) - \mathbb{E}(Y \mid X = x_k) \right\}^2,$$

and, similarly, the MSE of the estimated conditional standard deviation is

$$\frac{1}{K} \sum_{k=1}^K \left\{ \widehat{\mathrm{SD}}(Y \mid X = x_k) - \mathrm{SD}(Y \mid X = x_k) \right\}^2.$$
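The MSE computation for the proposed method can be sketched in vectorized NumPy as follows. The generator `G_hat` is a hypothetical stand-in whose true conditional mean and standard deviation are known, and the test-set size and the value of $J$ are arbitrary choices rather than the ones used in the experiments.

```python
import numpy as np

def G_hat(eta, x):
    # Hypothetical stand-in for a trained generator; broadcasts over (K, J) arrays.
    return np.sin(x) + (0.5 + 0.5 * np.abs(x)) * eta

def mse_of_conditional_moments(G, x_test, true_mean, true_sd, J=5000, seed=0):
    """MSEs of Monte Carlo conditional mean and SD estimates over a test set."""
    rng = np.random.default_rng(seed)
    eta = rng.normal(size=(x_test.size, J))
    samples = G(eta, x_test[:, None])          # row k holds J draws given x_k
    mean_hat = samples.mean(axis=1)
    sd_hat = samples.std(axis=1, ddof=1)
    return np.mean((mean_hat - true_mean) ** 2), np.mean((sd_hat - true_sd) ** 2)

x_test = np.linspace(-2.0, 2.0, 200)
mse_mean, mse_sd = mse_of_conditional_moments(
    G_hat, x_test, np.sin(x_test), 0.5 + 0.5 * np.abs(x_test))
print(mse_mean, mse_sd)   # both small, since G_hat matches the true model here
```

With an exact generator, the remaining error is pure Monte Carlo error of order $1/J$; with an estimated $\hat{G}$ the MSE also reflects estimation error, which is what Table 1 measures.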

We repeat the simulations 10 times. The average MSEs and simulation standard errors are summarized in Table 1. Compared with NNKCDE, CKDE and FlexCode, WGCS has the smallest MSEs for estimating the conditional mean and conditional SD in most cases.

5.3 Prediction interval: the wine quality dataset

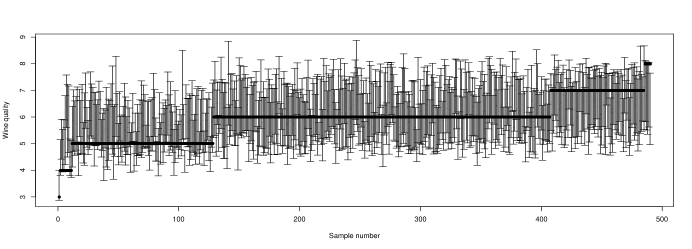

We use the wine quality dataset (Cortez et al., 2009) to illustrate the application of the proposed method to prediction interval construction. This dataset is available at the UCI Machine Learning Repository (https://archive.ics.uci.edu/ml/datasets/Wine+Quality). There are eleven physicochemical quantitative variables used as predictors: fixed acidity, volatile acidity, citric acid, residual sugar, chlorides, free sulfur dioxide, total sulfur dioxide, density, pH, sulphates and alcohol. The response is a sensory quantitative variable, quality, a score between 0 and 10. The goal is to build a prediction model for the wine quality score based on the eleven physicochemical variables. Such a model can be used to support oenologists' wine tasting evaluations and to improve wine production. We use the proposed method to learn the conditional distribution of quality given the eleven variables. An advantage of the proposed method over standard nonparametric regression is that it provides a prediction interval for the quality score, not just a point prediction.

The sample size of this dataset is 4,898. We use 90% of the samples as the training set and 10% as the test set. All variables are centered and standardized before training the model. In our proposed method, the conditional generator is a two-layer fully-connected feedforward network with 50 and 20 nodes, and the discriminator is a two-layer network with 50 and 25 nodes. The ReLU activation function is used in both networks. The noise vector $\eta$ is drawn from a standard normal distribution.

To examine the prediction performance of the proposed method, we construct 90% prediction intervals for the wine quality scores in the test set. The prediction intervals are shown in Figure 2, with the actual quality scores plotted as solid dots. The actual coverage over all 490 wines in the test set is 90.80%, close to the nominal level of 90%.
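Prediction intervals of this kind can be read off as quantiles of the generated conditional samples. The sketch below assumes equal-tailed intervals (the 5% and 95% quantiles), which the text does not specify, and uses a hypothetical toy generator together with simulated test responses so that the empirical coverage can be compared with the nominal 90% level.

```python
import numpy as np

def prediction_intervals(G, x_test, level=0.9, J=2000, seed=0):
    """Equal-tailed intervals from J generated samples per test point."""
    rng = np.random.default_rng(seed)
    samples = G(rng.normal(size=(x_test.size, J)), x_test[:, None])
    alpha = 1.0 - level
    lower = np.quantile(samples, alpha / 2, axis=1)
    upper = np.quantile(samples, 1.0 - alpha / 2, axis=1)
    return lower, upper

def empirical_coverage(y_test, lower, upper):
    """Fraction of test responses that fall inside their intervals."""
    return float(np.mean((y_test >= lower) & (y_test <= upper)))

def G_hat(eta, x):
    return np.sin(x) + (0.5 + 0.5 * np.abs(x)) * eta   # hypothetical generator

rng = np.random.default_rng(1)
x_test = rng.uniform(-2.0, 2.0, 2000)
y_test = np.sin(x_test) + (0.5 + 0.5 * np.abs(x_test)) * rng.normal(size=2000)
lower, upper = prediction_intervals(G_hat, x_test)
coverage = empirical_coverage(y_test, lower, upper)
print(coverage)   # close to the nominal 0.9
```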

5.4 Bivariate response: California housing data

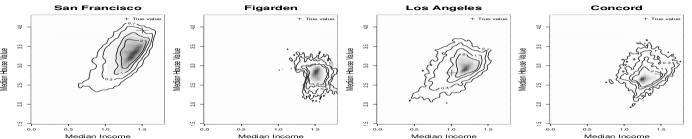

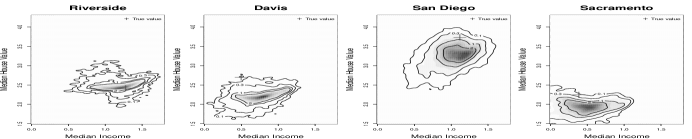

We use the California housing dataset to demonstrate that the generated conditional samples from the proposed method can be used for visualizing conditional distributions of bivariate response data. This dataset is available at the StatLib repository (http://lib.stat.cmu.edu/datasets/). It contains 20,640 observations on housing prices with 9 variables: median house value, median income, median house age, total rooms, total bedrooms, population, households, latitude and longitude. Each row of the data matrix represents a neighborhood block in California. We apply a logarithmic transformation to all variables except longitude, latitude and median house age. All columns of the data matrix are centered and standardized to have zero mean and unit variance. The generator is a two-layer fully-connected neural network with 60 and 25 nodes, and the discriminator is a two-layer network with 65 and 30 nodes. ReLU activation is used for the hidden layers. The random noise vector $\eta$ is drawn from a standard normal distribution.

We train the WGCS model with (median income, median house value) as the response vector and the other variables as the predictors. We use 18,576 observations (about 90% of the dataset) as the training set and the remaining 2,064 observations (about 10% of the dataset) as the test set.

Figure 3 shows contour plots of the conditional distributions of median income and median house value (on the logarithmic scale) for 8 individual blocks in the test set. The colored density represents the kernel density estimate based on samples generated by the proposed method. The blue cross, which marks the observed response, lies in the main body of each plot, showing that the proposed method provides reasonable predictions of median income and house value. We also see that big cities such as San Francisco and Los Angeles have higher house values with larger variations, while smaller cities such as Davis and Concord tend to have lower house values.
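The kernel density estimate behind such contour plots can be sketched with a product Gaussian kernel and Scott's rule bandwidths. The kernel and bandwidth choice, the correlated normal stand-in for the generated samples and the evaluation grid are all assumptions; the estimator used for Figure 3 is not specified in the text. The resulting grid of density values could be passed to a contour routine such as matplotlib's `contour`.

```python
import numpy as np

def kde_2d(samples, grid_x, grid_y):
    """Product-Gaussian KDE with Scott's rule bandwidths, evaluated on a grid."""
    n = samples.shape[0]
    h = samples.std(axis=0, ddof=1) * n ** (-1.0 / 6.0)   # Scott's rule for d = 2
    gx, gy = np.meshgrid(grid_x, grid_y, indexing="ij")
    ux = (gx[..., None] - samples[:, 0]) / h[0]
    uy = (gy[..., None] - samples[:, 1]) / h[1]
    kern = np.exp(-0.5 * (ux ** 2 + uy ** 2)) / (2.0 * np.pi * h[0] * h[1])
    return kern.mean(axis=-1)

rng = np.random.default_rng(0)
# Hypothetical stand-in for 500 generated responses G_hat(eta_j, x) for one block.
samples = rng.multivariate_normal([0.0, 0.0], [[1.0, 0.6], [0.6, 1.0]], size=500)
grid = np.linspace(-5.0, 5.0, 50)
density = kde_2d(samples, grid, grid)
total_mass = density.sum() * (grid[1] - grid[0]) ** 2
print(density.shape, total_mass)   # (50, 50) and roughly 1
```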

5.5 Image reconstruction: MNIST dataset

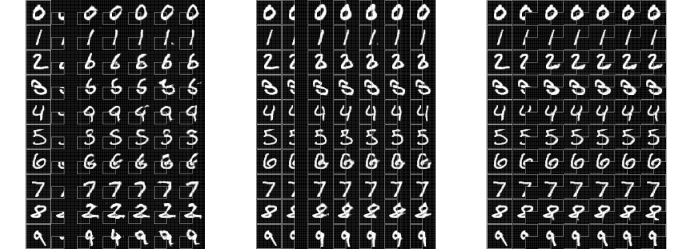

We now illustrate the application of the proposed method to high-dimensional data problems and demonstrate that it can easily handle models in which either or both of $X$ and $Y$ are high-dimensional. We use the MNIST handwritten digits dataset (LeCun and Cortes, 2010), which contains 60,000 images for training and 10,000 images for testing. The images are stored as $28 \times 28$ matrices with gray color intensity from 0 to 1. Each image is paired with a label in $\{0, 1, \ldots, 9\}$. We use WGCS to perform two tasks: generating images from labels and reconstructing the missing part of an image.

We illustrate the use of the proposed method for image reconstruction, when part of the image is missing, with the MNIST dataset. Suppose we only observe 1/4, 1/2 or 3/4 of an image and would like to reconstruct the missing part. For this problem, let $X$ be the observed part of the image and let $Y$ be the missing part. Our goal is to reconstruct $Y$ based on $X$. For the discriminator, we use two convolutional networks to process $X$ and $Y$ separately. The resulting feature maps are then concatenated and fed into another convolution layer and a fully-connected layer before the output. For the generator, the input is processed by a fully-connected layer followed by 3 deconvolution layers. The random noise vector $\eta$ is drawn from a standard normal distribution.
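The split of an image into an observed part $X$ and a missing part $Y$ can be sketched with boolean masks. Only the lower-left quadrant for the 1/4 case is suggested by the discussion of the digit "2" below; the layouts for the 1/2 and 3/4 cases, and the helper names, are hypothetical. Both parts are kept as zero-filled $28 \times 28$ arrays so that they can be fed to convolutional networks.

```python
import numpy as np

def observation_mask(fraction, size=28):
    """Boolean mask of observed pixels; the quadrant layout is a hypothetical choice."""
    half = size // 2
    mask = np.zeros((size, size), dtype=bool)
    if fraction == 0.25:
        mask[half:, :half] = True          # lower-left quadrant
    elif fraction == 0.5:
        mask[:, :half] = True              # left half
    elif fraction == 0.75:
        mask[:, :] = True
        mask[:half, half:] = False         # everything except the upper-right quadrant
    else:
        raise ValueError("fraction must be 0.25, 0.5 or 0.75")
    return mask

def split_image(image, fraction):
    """Return (X, Y) as zero-filled images: X keeps observed pixels, Y the missing ones."""
    mask = observation_mask(fraction, image.shape[0])
    return np.where(mask, image, 0.0), np.where(mask, 0.0, image)

img = np.random.default_rng(0).uniform(0.0, 1.0, size=(28, 28))  # stand-in for a digit
for f in (0.25, 0.5, 0.75):
    print(f, int(observation_mask(f).sum()))   # 196, 392 and 588 observed pixels
x_obs, y_missing = split_image(img, 0.5)
```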

In Figure 4, the three panels from left to right correspond to the situations when 1/4, 1/2 or 3/4 of an image is given, and the problem is to reconstruct the missing part of the image. In each panel, the first column contains the true images in the testing set; the second column shows the observed part of the image; the third to the seventh columns show the reconstructed images. The digits “0”, “1” and “7” are easy to reconstruct, even when only 1/4 of their images are given. For the other digits, if only 1/4 of their images are given, it is difficult to reconstruct them. However, as the given part increases from 1/4 to 1/2 and then 3/4 of the images, the proposed method is able to reconstruct the images correctly, and the reconstructed images become less variable and more similar to the true image. For example, for the digit “2”, if only the lower-left 1/4 of the image is given, the reconstructed images tend to be incorrect; the reconstruction is only successful when 3/4 of the image is given.

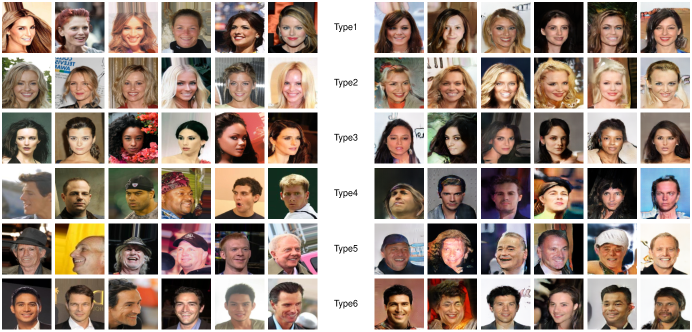

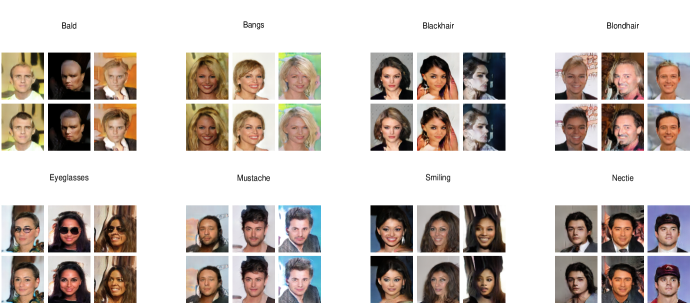

5.6 Image generation: CelebA dataset

We illustrate the application of the proposed method to image generation given label information with the CelebFaces Attributes (CelebA) dataset (Liu et al.,, 2015). This dataset contains more than 200K color celebrity images, each annotated with 40 attributes. Here we use 10 binary attributes: Gender, Young, Bald, Bangs, Blackhair, Blondhair, Eyeglasses, Mustache, Smiling and WearingNecktie. We take these attributes as the predictor, which is therefore a vector of 10 binary components. We consider six types of images; the attributes of these six types are shown in Table A.1. We use the aligned and cropped images, further resized to a common resolution, as our training set, and take these color images as the response. Therefore, the predictor is 10-dimensional and the response is the full resized color image.

| Attributes | Gender | Young | Black hair | Blond hair | Eyeglasses | Bald | Mustache | Smiling | Necktie | Bangs |
|---|---|---|---|---|---|---|---|---|---|---|
| Type 1 | F | Y | N | N | N | N | N | Y | N | N |
| Type 2 | F | Y | N | Y | N | N | N | Y | N | N |
| Type 3 | F | Y | Y | N | N | N | N | N | N | N |
| Type 4 | M | Y | N | N | N | N | N | N | N | N |
| Type 5 | M | N | N | N | N | N | N | Y | N | N |
| Type 6 | M | Y | Y | N | N | N | N | Y | N | N |

The architecture of the discriminator is specified as follows. The 10-dimensional binary attribute vector is first expanded into a tensor by replicating each component into a matrix of the same spatial size as the image. This tensor is then concatenated with the image along the last (channel) axis. The processed data are fed into 5 consecutive convolution layers, initialized from a truncated normal distribution, with 64, 128, 256, 512 and 1024 filters, respectively. Each convolution layer uses a leaky ReLU activation. The stride is 2 and the kernel size is 5. The output of the last convolution layer is flattened and connected to a dense layer with a single output unit.
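A minimal sketch of how the discriminator input described above can be assembled, written in plain Python with nested lists so that it has no dependencies. The 64 × 64 resolution in the usage example is a hypothetical value (the resized image size is not stated in this section), "same" padding is assumed for the convolutions, and the function names are illustrative.

```python
import math
from typing import List, Sequence

Tensor3 = List[List[List[float]]]  # height x width x channels


def tile_attributes(attrs: Sequence[float], height: int, width: int) -> Tensor3:
    """Replicate the attribute vector at every pixel: one constant channel per attribute."""
    return [[[float(a) for a in attrs] for _ in range(width)] for _ in range(height)]


def concat_channels(a: Tensor3, b: Tensor3) -> Tensor3:
    """Concatenate two height x width x C tensors along the last (channel) axis."""
    if len(a) != len(b) or len(a[0]) != len(b[0]):
        raise ValueError("spatial sizes must match")
    return [[pa + pb for pa, pb in zip(row_a, row_b)] for row_a, row_b in zip(a, b)]


def conv_output_size(size: int, n_layers: int = 5, stride: int = 2) -> int:
    """Spatial size after n_layers convolutions with 'same' padding (ceil(size / stride) each)."""
    for _ in range(n_layers):
        size = math.ceil(size / stride)
    return size


# Usage with a hypothetical 64 x 64 resolution and an arbitrary attribute vector.
H = W = 64
attrs = [1, 1, 0, 1, 0, 0, 0, 1, 0, 0]
image = [[[0.0, 0.0, 0.0] for _ in range(W)] for _ in range(H)]
disc_input = concat_channels(image, tile_attributes(attrs, H, W))
print(len(disc_input), len(disc_input[0]), len(disc_input[0][0]))  # 64 64 13
print(conv_output_size(H))  # 2
```

Tiling the attributes into constant channels lets every convolution filter see the conditioning information at every spatial location.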

The architecture of the conditional generator is as follows. The noise vector and the attribute vector are concatenated and fed into a dense layer with ReLU activation, whose output is reshaped into a three-dimensional tensor. This tensor then passes through 5 deconvolution layers, initialized from a truncated normal distribution, with 512, 256, 128, 64 and 3 filters, respectively. The intermediate deconvolution layers use ReLU activations and the last layer uses the hyperbolic tangent. The stride is 2 and the kernel size is 5. The optimizer is Adam, with a learning rate that decreases during training. The noise dimension is 100.
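Similarly, the generator input and the spatial sizes implied by five stride-2 deconvolution layers can be sketched as below. This is an illustration under assumptions: "same" padding, a hypothetical 64 × 64 output, and helper names of our own choosing; only the noise dimension of 100, the 10 attributes and the noise-then-attributes order come from the text. Under these assumptions the dense layer output would have to be reshaped to a 2 × 2 spatial grid, since each stride-2 layer doubles the height and width.

```python
import random
from typing import List, Sequence

NOISE_DIM = 100  # stated in the text
N_ATTRS = 10     # number of binary attributes used


def generator_input(attrs: Sequence[float], rng: random.Random) -> List[float]:
    """Concatenate a standard normal noise vector with the attribute vector."""
    if len(attrs) != N_ATTRS:
        raise ValueError(f"expected {N_ATTRS} attributes")
    noise = [rng.gauss(0.0, 1.0) for _ in range(NOISE_DIM)]
    return noise + [float(a) for a in attrs]


def deconv_output_size(size: int, n_layers: int = 5, stride: int = 2) -> int:
    """Spatial size after n_layers transposed convolutions with 'same' padding."""
    return size * stride ** n_layers


def seed_size_for(target: int, n_layers: int = 5, stride: int = 2) -> int:
    """Spatial size the dense-layer output must be reshaped to for a given output size."""
    factor = stride ** n_layers
    if target % factor:
        raise ValueError(f"target size {target} is not divisible by {factor}")
    return target // factor


z = generator_input([0, 1, 0, 0, 0, 0, 0, 1, 0, 0], random.Random(0))
print(len(z), seed_size_for(64), deconv_output_size(2))  # 110 2 64
```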

6 Discussion

We have proposed a Wasserstein conditional sampler, a generative approach to learning a conditional distribution. We have established the convergence rate of the sampling distribution of the proposed method and shown that the curse of dimensionality can be mitigated if the data distribution is supported on a lower-dimensional set. Our numerical experiments demonstrate that the proposed method performs well in a variety of settings, from standard conditional density estimation to more complex image generation and reconstruction problems.

In future work, it would be interesting to incorporate additional structure, such as sparsity and latent low-dimensional structure of the data, into the proposed method to better deal with the curse of dimensionality in high-dimensional settings.

Acknowledgements

The work of J. Huang is supported in part by the U.S. National Science Foundation grant DMS-1916199. The work of Y. Jiao is supported in part by the National Science Foundation of China (No. 11871474) and by the research fund of KLATASDSMOE of China.

References

- Abadi et al., (2016) Abadi, M., Barham, P., Chen, J., Chen, Z., Davis, A., Dean, J., Devin, M., Ghemawat, S., Irving, G., Isard, M., et al. (2016). Tensorflow: A system for large-scale machine learning. In 12th USENIX Symposium on Operating Systems Design and Implementation (OSDI 16), pages 265–283.

- Arjovsky et al., (2017) Arjovsky, M., Chintala, S., and Bottou, L. (2017). Wasserstein generative adversarial networks. In International Conference on Machine Learning.

- Bauer and Kohler, (2019) Bauer, B. and Kohler, M. (2019). On deep learning as a remedy for the curse of dimensionality in nonparametric regression. Ann. Statist., 47(4):2261–2285.

- Bishop and Peres, (2016) Bishop, C. J. and Peres, Y. (2016). Fractals in Probability and Analysis. Cambridge Studies in Advanced Mathematics. Cambridge University Press.

- Bott and Kohler, (2017) Bott, A.-K. and Kohler, M. (2017). Nonparametric estimation of a conditional density. Annals of the Institute of Statistical Mathematics, 69(1):189–214.

- Cortez et al., (2009) Cortez, P., Cerdeira, A., Almeida, F., Matos, T., and Reis, J. (2009). Modeling wine preferences by data mining from physicochemical properties. Decision Support Systems, 47(4):547–553.

- Dalmasso et al., (2020) Dalmasso, N., Pospisil, T., Lee, A. B., Izbicki, R., Freeman, P. E., and Malz, A. I. (2020). Conditional density estimation tools in python and R with applications to photometric redshifts and likelihood-free cosmological inference. Astronomy and Computing, page 100362.

- Dua and Graff, (2017) Dua, D. and Graff, C. (2017). UCI machine learning repository. http://archive.ics.uci.edu/ml.

- Falconer, (2004) Falconer, K. (2004). Fractal Geometry: Mathematical Foundations and Applications. John Wiley & Sons.

- Fan et al., (1996) Fan, J., Yao, Q., and Tong, H. (1996). Estimation of conditional densities and sensitivity measures in nonlinear dynamical systems. Biometrika, 83(1):189–206.

- Goodfellow et al., (2014) Goodfellow, I., Pouget-Abadie, J., Mirza, M., Xu, B., Warde-Farley, D., Ozair, S., Courville, A., and Bengio, Y. (2014). Generative adversarial nets. In Advances in Neural Information Processing Systems, volume 27, pages 2672–2680.

- Goodfellow et al., (2016) Goodfellow, I. J., Bengio, Y., and Courville, A. (2016). Deep Learning. The MIT Press, Cambridge, MA, USA. http://www.deeplearningbook.org.

- Gulrajani et al., (2017) Gulrajani, I., Ahmed, F., Arjovsky, M., Dumoulin, V., and Courville, A. (2017). Improved training of wasserstein gans. arXiv preprint arXiv:1704.00028.

- Hall et al., (2004) Hall, P., Racine, J., and Li, Q. (2004). Cross-validation and the estimation of conditional probability densities. Journal of the American Statistical Association, 99(468):1015–1026.

- Hall and Yao, (2005) Hall, P. and Yao, Q. (2005). Approximating conditional distribution functions using dimension reduction. Annals of Statistics, 33(3):1404–1421.

- Hyndman et al., (1996) Hyndman, R. J., Bashtannyk, D. M., and Grunwald, G. K. (1996). Estimating and visualizing conditional densities. Journal of Computational and Graphical Statistics, 5(4):315–336.

- Izbicki and Lee, (2016) Izbicki, R. and Lee, A. B. (2016). Nonparametric conditional density estimation in a high-dimensional regression setting. Journal of Computational and Graphical Statistics, 25(4):1297–1316.

- Izbicki et al., (2017) Izbicki, R., Lee, A. B., et al. (2017). Converting high-dimensional regression to high-dimensional conditional density estimation. Electronic Journal of Statistics, 11(2):2800–2831.

- Jiao et al., (2021) Jiao, Y., Shen, G., Lin, Y., and Huang, J. (2021). Deep nonparametric regression on approximately low-dimensional manifolds. arXiv 2104.06708.

- Kallenberg, (2002) Kallenberg, O. (2002). Foundations of Modern Probability. Springer-Verlag, New York, 2nd edition.

- Kingma and Ba, (2015) Kingma, D. P. and Ba, J. (2015). Adam: A method for stochastic optimization. In Proceedings of the 3rd International Conference on Learning Representations.

- Kovachki et al., (2021) Kovachki, N., Baptista, R., Hosseini, B., and Marzouk, Y. (2021). Conditional sampling with monotone gans. arXiv 2006.06755.

- LeCun and Cortes, (2010) LeCun, Y. and Cortes, C. (2010). MNIST handwritten digit database.

- Liu et al., (2015) Liu, Z., Luo, P., Wang, X., and Tang, X. (2015). Deep learning face attributes in the wild. In Proceedings of International Conference on Computer Vision (ICCV).

- Lu et al., (2020) Lu, J., Shen, Z., Yang, H., and Zhang, S. (2020). Deep network approximation for smooth functions. arXiv preprint arXiv:2001.03040.

- Lu and Lu, (2020) Lu, Y. and Lu, J. (2020). A universal approximation theorem of deep neural networks for expressing probability distributions. arXiv preprint arXiv:2004.08867.

- Lu et al., (2017) Lu, Z., Pu, H., Wang, F., Hu, Z., and Wang, L. (2017). The expressive power of neural networks: A view from the width. arXiv preprint arXiv:1709.02540.

- Mirza and Osindero, (2014) Mirza, M. and Osindero, S. (2014). Conditional generative adversarial nets. arXiv:1411.1784.

- Mohri et al., (2018) Mohri, M., Rostamizadeh, A., and Talwalkar, A. (2018). Foundations of Machine Learning. The MIT press.

- Montanelli and Du, (2019) Montanelli, H. and Du, Q. (2019). New error bounds for deep relu networks using sparse grids. SIAM Journal on Mathematics of Data Science, 1(1):78–92.

- Müller, (1997) Müller, A. (1997). Integral probability metrics and their generating classes of functions. Advances in Applied Probability, pages 429–443.

- Nakada and Imaizumi, (2020) Nakada, R. and Imaizumi, M. (2020). Adaptive approximation and generalization of deep neural network with intrinsic dimensionality. J. Mach. Learn. Res., 21:174–1.

- Petersen and Voigtlaender, (2018) Petersen, P. and Voigtlaender, F. (2018). Optimal approximation of piecewise smooth functions using deep relu neural networks. Neural Networks, 108:296–330.

- Rosenblatt, (1969) Rosenblatt, M. (1969). Conditional probability density and regression estimators. In Krishnaiah, P. R., editor, Multivariate Analysis II, pages 25–31. Academic Press, New York.

- Schmidt-Hieber, (2020) Schmidt-Hieber, J. (2020). Nonparametric regression using deep neural networks with ReLU activation function (with discussion). Ann. Statist., 48(4):1916–1921.

- Scott, (1992) Scott, D. W. (1992). Multivariate Density Estimation: Theory, Practice and Visualization. Wiley, New York.

- Shen et al., (2019a) Shen, Z., Yang, H., and Zhang, S. (2019a). Deep network approximation characterized by number of neurons. arXiv preprint arXiv:1906.05497.

- Shen et al., (2019b) Shen, Z., Yang, H., and Zhang, S. (2019b). Nonlinear approximation via compositions. Neural Networks, 119:74–84.

- Srebro and Sridharan, (2010) Srebro, N. and Sridharan, K. (2010). Note on refined Dudley integral covering number bound. Unpublished results. http://ttic.uchicago.edu/karthik/dudley.pdf.

- Suzuki, (2018) Suzuki, T. (2018). Adaptivity of deep relu network for learning in besov and mixed smooth besov spaces: optimal rate and curse of dimensionality. arXiv preprint arXiv:1810.08033.

- Tsybakov, (2008) Tsybakov, A. (2008). Introduction to Nonparametric Estimation. Springer Science & Business Media.

- Van der Vaart and Wellner, (1996) Van der Vaart, A. W. and Wellner, J. A. (1996). Weak Convergence and Empirical Processes: with Applications to Statistics. Springer, New York.

- Villani, (2008) Villani, C. (2008). Optimal Transport: Old and New. Springer Berlin Heidelberg.

- Yang et al., (2021) Yang, Y., Li, Z., and Wang, Y. (2021). On the capacity of deep generative networks for approximating distributions. arXiv preprint arXiv:2101.12353.

- Yarotsky, (2017) Yarotsky, D. (2017). Error bounds for approximations with deep relu networks. Neural Networks, 94:103–114.

- Yarotsky, (2018) Yarotsky, D. (2018). Optimal approximation of continuous functions by very deep relu networks. In Conference on Learning Theory, pages 639–649. PMLR.

- Zhou et al., (2021) Zhou, X., Jiao, Y., Liu, J., and Huang, J. (2021). A deep generative approach to conditional sampling. arXiv preprint arXiv:2110.10277.

Appendices

In the appendices, we include additional numerical results, proofs of the theorems stated in the paper and technical details.

Appendix A Additional numerical results

We carry out numerical experiments to evaluate the finite-sample performance of WGCS and illustrate its applications with examples in conditional sample generation, nonparametric conditional density estimation, visualization of multivariate data, and image generation and reconstruction. In all the experiments below, the noise vector is generated from a standard multivariate normal distribution. The discriminator is updated five times per update of the generator. We implemented WGCS in TensorFlow (Abadi et al.,, 2016) and used Adam (Kingma and Ba,, 2015), a stochastic gradient-based optimization algorithm, to train the neural networks.
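The alternating update schedule (five discriminator updates per generator update) can be sketched as follows. The two update callables are hypothetical placeholders for one Adam step on the discriminator and one on the generator; the sketch only shows the ordering of the updates, not the loss or the networks.

```python
from typing import Callable, List


def alternate_updates(n_generator_steps: int,
                      update_discriminator: Callable[[], None],
                      update_generator: Callable[[], None],
                      n_critic: int = 5) -> List[str]:
    """Run n_critic discriminator updates before each generator update.

    Returns the sequence of updates performed, for inspection.
    """
    log: List[str] = []
    for _ in range(n_generator_steps):
        for _ in range(n_critic):
            update_discriminator()  # placeholder for one optimizer step on the discriminator
            log.append("D")
        update_generator()  # placeholder for one optimizer step on the generator
        log.append("G")
    return log


# Usage with no-op placeholders:
log = alternate_updates(3, lambda: None, lambda: None)
print("".join(log))  # DDDDDGDDDDDGDDDDDG
```

Updating the discriminator more often keeps it closer to the inner maximization of the minimax problem before each generator step.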

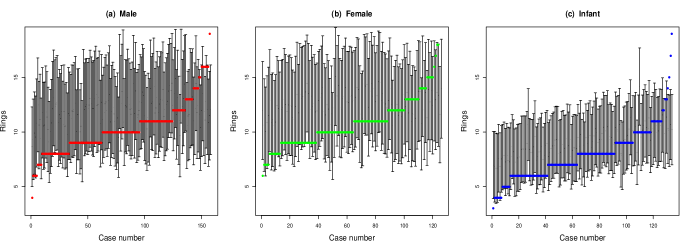

A.1 Conditional prediction: the abalone dataset

The abalone dataset is available from the UCI machine learning repository (Dua and Graff,, 2017). It contains the number of rings of each abalone and other physical measurements. The age of an abalone is determined by cutting the shell through the cone, staining it and counting the number of rings under a microscope, which is a time-consuming process. Other measurements, which are easier to obtain, are used to predict the number of rings, which determines the age. The dataset contains 9 variables: sex, length, diameter, height, whole weight, shucked weight, viscera weight, shell weight and rings. Except for the categorical variable sex, all the other variables are continuous. The variable sex codes three groups: female, male and infant, since the sex of an infant abalone is not known. We take rings as the response and the other eight variables as the covariate vector. The sample size is 4,177. We use 90% of the data for training and the remaining 10% as the test set.

The generator is a two-layer fully-connected network with 50 and 20 nodes and the discriminator is also a two-layer network with 50 and 25 nodes, both with ReLU activation function. The noise

To examine the prediction performance of the estimated conditional density, we construct a 90% prediction interval for the number of rings of each abalone in the testing set. The prediction intervals are shown in Figure A.1, with the observed numbers of rings plotted as solid dots. The actual coverage over all 418 cases in the testing set is 90.90%, close to the nominal level of 90%. The numbers of rings not covered by the prediction intervals are the largest ones in each group. This dataset was also analyzed in Zhou et al., (2021) using a generative method based on the Kullback-Leibler divergence, with similar results.
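One way to turn conditional samples into a 90% prediction interval is to take the empirical 5% and 95% quantiles of many generated responses at the same covariate value, and then to compute the coverage on the test set. The sketch below assumes this equal-tailed quantile construction; the toy sampler standing in for the trained conditional generator is hypothetical.

```python
import math
import random
from typing import List, Sequence, Tuple


def empirical_quantile(sorted_values: Sequence[float], q: float) -> float:
    """Linear-interpolation quantile of already-sorted values, for 0 <= q <= 1."""
    if not 0.0 <= q <= 1.0:
        raise ValueError("q must lie in [0, 1]")
    pos = q * (len(sorted_values) - 1)
    lo = math.floor(pos)
    hi = min(lo + 1, len(sorted_values) - 1)
    frac = pos - lo
    return sorted_values[lo] * (1 - frac) + sorted_values[hi] * frac


def prediction_interval(samples: Sequence[float], level: float = 0.9) -> Tuple[float, float]:
    """Equal-tailed interval from conditional samples drawn at one covariate value."""
    s = sorted(samples)
    alpha = 1.0 - level
    return empirical_quantile(s, alpha / 2), empirical_quantile(s, 1 - alpha / 2)


def coverage(intervals: Sequence[Tuple[float, float]], observed: Sequence[float]) -> float:
    """Fraction of observed responses that fall inside their intervals."""
    hits = sum(lo <= y <= hi for (lo, hi), y in zip(intervals, observed))
    return hits / len(observed)


rng = random.Random(0)


def toy_sampler(x: float, n: int) -> List[float]:
    # Hypothetical stand-in for the trained conditional generator: Y | X = x ~ N(x, 1).
    return [x + rng.gauss(0.0, 1.0) for _ in range(n)]


test_x = [rng.uniform(0.0, 10.0) for _ in range(200)]
test_y = [x + rng.gauss(0.0, 1.0) for x in test_x]
intervals = [prediction_interval(toy_sampler(x, 500), level=0.9) for x in test_x]
print(f"coverage: {coverage(intervals, test_y):.3f}")
```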

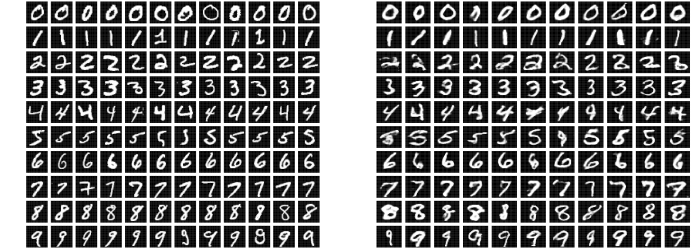

A.2 Image generation: MNIST dataset

We now illustrate the application of WGCS to high-dimensional data and demonstrate that it can easily handle models in which either or both of the predictor and the response are high-dimensional. We use the MNIST handwritten digits dataset (LeCun and Cortes,, 2010), which contains 60,000 images for training and 10,000 images for testing. The images are stored as 28 × 28 matrices with gray-scale intensities from 0 to 1. Each image is paired with a label in {0, 1, ..., 9}. We use WGCS to perform two tasks: generating images from labels and reconstructing the missing part of an image.

We generate images of handwritten digits given the label. In this problem, the predictor is a categorical variable representing the ten digits {0, 1, ..., 9}, and the response is the corresponding image. We use one-hot vectors of length 10 to represent these ten categories, so the predictor has dimension 10 and the response, a 28 × 28 matrix of intensity values, has dimension 28 × 28 = 784. For the discriminator, we use a convolutional neural network (CNN) with 3 convolution layers with 128, 256 and 256 filters to extract features of the image, and then concatenate these features with the label information (repeated 10 times to match the dimension of the features). The concatenated information is sent to a fully connected layer and then to the output layer. For the generator, we concatenate the label information with the random noise vector and feed the result to a CNN with 3 deconvolution layers with 256, 128 and 1 filters, respectively.
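The generator input for this task can be sketched as below: the label is one-hot encoded and concatenated with a standard normal noise vector, label first as described. The noise dimension is not stated in this paragraph, so `noise_dim` is a free, hypothetical parameter, and the function names are illustrative.

```python
import random
from typing import List

N_CLASSES = 10  # digits 0, 1, ..., 9


def one_hot(label: int, n_classes: int = N_CLASSES) -> List[float]:
    """Encode a digit label as a one-hot vector of length n_classes."""
    if not 0 <= label < n_classes:
        raise ValueError(f"label must be in 0..{n_classes - 1}")
    vec = [0.0] * n_classes
    vec[label] = 1.0
    return vec


def mnist_generator_input(label: int, noise_dim: int, rng: random.Random) -> List[float]:
    """Concatenate the one-hot label with standard normal noise (noise_dim is hypothetical)."""
    noise = [rng.gauss(0.0, 1.0) for _ in range(noise_dim)]
    return one_hot(label) + noise


v = mnist_generator_input(3, noise_dim=64, rng=random.Random(0))
print(len(v), v[:10])  # 74 [0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]
```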

Figure A.2 shows real images (left panel) and generated images (right panel). The generated images resemble the real ones and are hard to distinguish from them. The generated images also differ from one another, reflecting the random variation in the generating process.

A.3 Image generation given labels: CelebA dataset

We illustrate the application of WGCS to image generation with the CelebFaces Attributes (CelebA) dataset (Liu et al.,, 2015), a large-scale dataset containing more than 200K color celebrity images, each annotated with 40 attributes. Here we use 10 attributes as binary variables: Gender, Young, Bald, Bangs, Blackhair, Blondhair, Eyeglasses, Mustache, Smiling and WearingNecktie. We take these attributes as the predictor, which is therefore a vector of 10 binary components. We use the aligned and cropped images, further resized to a common resolution, as our training set, and take these color images as the response. Therefore, the predictor is 10-dimensional and the response is the full resized color image.

| Attributes | Gender | Young | Black hair | Blond hair | Eyeglasses | Bald | Mustache | Smiling | Necktie | Bangs |
|---|---|---|---|---|---|---|---|---|---|---|
| Type 1 | F | Y | N | N | N | N | N | Y | N | N |
| Type 2 | F | Y | N | Y | N | N | N | Y | N | N |
| Type 3 | F | Y | Y | N | N | N | N | N | N | N |
| Type 4 | M | Y | N | N | N | N | N | N | N | N |
| Type 5 | M | N | N | N | N | N | N | Y | N | N |
| Type 6 | M | Y | Y | N | N | N | N | Y | N | N |

The architecture of the discriminator is specified as follows. The 10-dimensional binary attribute vector is first expanded into a tensor by replicating each component into a matrix of the same spatial size as the image. This tensor is then concatenated with the image along the last (channel) axis. The processed data are fed into 5 consecutive convolution layers, initialized from a truncated normal distribution, with 64, 128, 256, 512 and 1024 filters, respectively. Each convolution layer uses a leaky ReLU activation. The stride is 2 and the kernel size is 5. The output of the last convolution layer is flattened and connected to a dense layer with a single output unit.

The architecture of the generator is as follows. The attribute vector and the noise vector are concatenated and fed into a dense layer with ReLU activation, whose output is reshaped into a three-dimensional tensor. This tensor then passes through 5 deconvolution layers, initialized from a truncated normal distribution, with 512, 256, 128, 64 and 3 filters, respectively. The intermediate deconvolution layers use ReLU activations and the last layer uses the hyperbolic tangent. The stride is 2 and the kernel size is 5. The optimizer is Adam, with a learning rate that decreases during training. The noise dimension is 100.

In Figure A.3, we present images generated given specific attributes; all the images in this figure are generated. Each panel contains three pairs of faces. The two faces in each pair are generated from the same input except for the attribute labeled above the panel, so they look alike apart from that attribute. For example, the first panel shows generated bald and non-bald faces: the first row contains bald faces and the second row contains non-bald faces.
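The pairing can be sketched as follows, assuming that both faces in a pair share one noise vector and all attributes other than the labeled one. The attribute ordering (taken from the list in the text), the choice to place the noise before the attributes, and the helper name are assumptions for illustration.

```python
import random
from typing import List, Sequence, Tuple

# Attribute order as listed in the text; the actual encoding order is an assumption.
ATTRIBUTES = ["Gender", "Young", "Bald", "Bangs", "Blackhair", "Blondhair",
              "Eyeglasses", "Mustache", "Smiling", "WearingNecktie"]


def paired_inputs(base: Sequence[int], attribute: str, noise_dim: int,
                  rng: random.Random) -> Tuple[List[float], List[float]]:
    """Two generator inputs sharing one noise draw; they differ only in `attribute` (1 vs 0)."""
    if len(base) != len(ATTRIBUTES):
        raise ValueError(f"expected {len(ATTRIBUTES)} attributes")
    idx = ATTRIBUTES.index(attribute)
    noise = [rng.gauss(0.0, 1.0) for _ in range(noise_dim)]  # shared by both members
    with_attr = [float(v) for v in base]
    without_attr = list(with_attr)
    with_attr[idx], without_attr[idx] = 1.0, 0.0
    return noise + with_attr, noise + without_attr


# Usage: a bald / non-bald pair with noise dimension 100, as stated for CelebA.
bald, not_bald = paired_inputs([0] * 10, "Bald", 100, random.Random(1))
print(sum(a != b for a, b in zip(bald, not_bald)))  # 1
```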

Appendix B A high-level description of the error analysis

Below we first present a high-level description of the error analysis. For the estimator of the conditional generator given in (2.8), we are interested in bounding the error . Our basic idea is to decompose this error into terms that are easier to analyze.

Let and be ghost samples that are independent of the original samples. Here we introduce ghost samples as a technical tool for bounding the stochastic error term defined below. The details are given in the proof of Lemma C.7 in the appendix. We consider based on the empirical version of that depends on the original samples given in (2.7) and based on the loss function of the ghost samples ,

Recall the error is defined by

We decompose it as follows:

where ,

| (B.1) |

and

| (B.2) |

By Lemma C.1, we have

where

| (B.3) |

and

| (B.4) |

By their definitions, we can see that and are approximation errors; and are stochastic errors.

We summarize the above derivation in the following lemma.

Lemma B.1.

Let be the minimax solution in (2.8). Then the bounded Lipschitz distance between and can be decomposed as follows.

| (B.5) |

Appendix C Supporting Lemmas and Proofs

In this section, we present supporting lemmas and prove Theorems 3.1, 3.2 and 3.4 and Corollary 3.3. We first prove the following lemma.

Lemma C.1.

For any symmetric function classes and , denote the approximation error as

then for any probability distributions and ,

This inequality can be extended to an empirical version by using empirical measures.

Proof.

By the definition of supremum, for any , there exists such that

where the last line is due to the definition of . ∎

C.1 An equivalent statement

We would like the functions in the evaluation class to be defined on a bounded domain, so that existing neural network approximation theorems can be applied to bound the approximation error. This motivates us to first show that, under Assumption 1, proving the desired convergence rate is equivalent to establishing the same convergence rate with the domain-restricted function class as the evaluation class.

Suppose Assumption 1 holds. By the Markov inequality we have

| (B.1) |

where . The bounded Lipschitz distance is defined as

| (B.2) |

The first term above can be decomposed as

For any and a fixed point , due to the Lipschitzness of , the second term above satisfies

where the second inequality is due to the Lipschitz continuity and boundedness of , and the last inequality is due to Assumption 1 and (B.1). The second term in (B.2) can be dealt with similarly, using Condition 3.1 for the network . Hence, restricting the evaluation class to does not affect the convergence rate in the main results, i.e., . Given this fact, to keep the notation simple, we denote as in the following sections.

C.2 Bounding the errors

We first state two lemmas for controlling the approximation error in (B.4). Let be the set of all continuous piecewise linear functions which have breakpoints only at and are constant on and . The following lemma is from Yang et al., (2021).

Lemma C.2.

Suppose that , and . Then for any , can be represented by a ReLU FNN with width and depth no larger than and , respectively.

If we choose , a simple calculation shows with and . This means that when the number of breakpoints is moderate relative to the network size, such piecewise linear functions are expressible by feedforward ReLU networks. The next result shows that we can use piecewise linear functions to push each to .

Lemma C.3.

Suppose that the probability measure supported on is absolutely continuous with respect to the Lebesgue measure, and that the probability measure is supported on . Let and be i.i.d. samples from and , respectively, for . Then there exists a generator ReLU FNN that maps to for all . Moreover, such a network can be obtained by properly specifying for some constant .

Proof.

By the absolute continuity of , all the are distinct almost surely. Without loss of generality, assume (otherwise we can reorder them). Let be any point between and for . We define the continuous piecewise linear function by

Then maps to for all . By Lemma C.2, if . Taking , a simple calculation shows for some constant . ∎
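A simple instance of such a map, assuming one-dimensional noise and vector-valued targets, interpolates linearly between the sorted noise points and stays constant outside their range. This differs from the construction in the proof above, which additionally introduces points between consecutive noise values; it is meant only to illustrate that a continuous piecewise linear function with finitely many breakpoints can send each noise sample to its target. The function name is hypothetical.

```python
import bisect
from typing import Callable, List, Sequence


def make_pushforward_map(z: Sequence[float],
                         y: Sequence[Sequence[float]]) -> Callable[[float], List[float]]:
    """Return a continuous piecewise linear phi: R -> R^q with phi(z_i) = y_i.

    Breakpoints are the sorted z_i; phi is constant left of the smallest and right
    of the largest z_i, so it belongs to the class of functions in Lemma C.2.
    """
    if len(z) != len(y) or not z:
        raise ValueError("z and y must be non-empty and of equal length")
    order = sorted(range(len(z)), key=lambda i: z[i])
    zs = [z[i] for i in order]
    ys = [list(y[i]) for i in order]
    if any(a == b for a, b in zip(zs, zs[1:])):
        raise ValueError("z values must be distinct (true a.s. for absolutely continuous laws)")

    def phi(t: float) -> List[float]:
        if t <= zs[0]:
            return list(ys[0])
        if t >= zs[-1]:
            return list(ys[-1])
        k = bisect.bisect_right(zs, t)  # zs[k - 1] <= t < zs[k]
        w = (t - zs[k - 1]) / (zs[k] - zs[k - 1])
        return [(1 - w) * a + w * b for a, b in zip(ys[k - 1], ys[k])]

    return phi


phi = make_pushforward_map([0.3, -1.2, 2.5], [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]])
print(phi(0.3), phi(-1.2), phi(2.5))  # [1.0, 2.0] [3.0, 4.0] [5.0, 6.0]
```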

We now characterize the distance between two joint distributions by the Integral Probability Metric (IPM, Müller, (1997)) with respect to the uniformly bounded 1-Lipschitz function class , which is defined as

This metric is also known as the bounded Lipschitz metric, and it metrizes the weak topology on the space of probability distributions. Let be the joint distribution of and be the joint distribution of . Our goal is to bound the error .
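Because the bounded Lipschitz metric is a supremum over a function class, evaluating the difference of means for any finite subfamily gives a lower bound on it. The sketch below does this for one-dimensional samples with the hypothetical test functions f_t(x) = 0.5 · clip(x − t, −1, 1), which are 0.5-Lipschitz and bounded by 0.5, and hence admissible whether the class is normalized by sup-norm at most 1 and Lipschitz constant at most 1, or by their sum at most 1. It is a diagnostic, not an estimator of the metric itself.

```python
from typing import Sequence


def _test_fn(x: float, t: float) -> float:
    # 0.5 * clip(x - t, -1, 1): 0.5-Lipschitz and bounded by 0.5, so it belongs to
    # the bounded 1-Lipschitz class under either common normalization.
    return 0.5 * max(-1.0, min(1.0, x - t))


def bl_lower_bound(xs: Sequence[float], ys: Sequence[float], grid: Sequence[float]) -> float:
    """Max over t in grid of |mean f_t(xs) - mean f_t(ys)|, a lower bound on d_BL."""
    best = 0.0
    for t in grid:
        mean_x = sum(_test_fn(x, t) for x in xs) / len(xs)
        mean_y = sum(_test_fn(y, t) for y in ys) / len(ys)
        best = max(best, abs(mean_x - mean_y))
    return best


print(bl_lower_bound([0.0], [10.0], [0.0]))  # 0.5
```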

We consider the error bound under this metric because the approximation errors would be unbounded without a uniform bound on the evaluation class . However, if has bounded support, then will be essentially .

For convenience, we restate Lemma B.1 below.

Lemma C.4.

Let be the minimax solution in (2.8). Then the bounded Lipschitz distance between and can be decomposed as follows.

Remark.

To our knowledge, all existing tools for controlling the approximation error require the approximated functions to be defined on a bounded domain. Hence, restricting the evaluation class to a cube is technically necessary for controlling . The use of a uniform bound in the proof also explains why we can only consider the bounded Lipschitz distance in the unbounded-support case. In the bounded-support case, this restriction is unnecessary and we are able to obtain results under the 1-Wasserstein distance.

C.3 Bounding the error terms

Bounding . The discriminator approximation error measures how well the discriminator neural network class approximates functions from the Lipschitz class . Intuitively, a larger discriminator architecture leads to a smaller . There has been much recent work on the approximation power of deep neural networks (Lu et al.,, 2020, 2017; Montanelli and Du,, 2019; Petersen and Voigtlaender,, 2018; Shen et al., 2019a, ; Shen et al., 2019b, ; Suzuki,, 2018; Yarotsky,, 2017, 2018), with results that are quantitative or non-quantitative, and asymptotic or non-asymptotic. The next lemma is a quantitative and non-asymptotic result from Shen et al., 2019a .

Lemma C.5 (Shen et al., 2019a Theorem 4.3).

Let be a Lipschitz continuous function defined on . For arbitrary , there exists a function implemented by a ReLU feedforward neural network with width no more than and depth no more than such that

When balancing the errors, we can let the discriminator structure be and so that is of the order , which is the same order as the statistical errors.

Bounding . The generator approximation error measures how well the generator class can realize the empirical version of the noise outsourcing lemma. If we can find a ReLU FNN such that for all , then . To simplify the problem, it suffices to find a ReLU FNN that maps all the to . If such a ReLU network exists, then the desired exists automatically by ignoring the input ’s. The existence of such a neural network is guaranteed by Lemma C.3, where the structure of the generator network is set as for some constant . Hence, by setting the generator network in this way, we can realize . Note that Lemma C.3 holds under the condition that the range of covers the support of . Since we imposed Condition 3.1, this is not always satisfied. However, Assumption 1 controls the probability of the bad set where , and we can show that the desired convergence rate is not affected by the bad set.

Bounding . The statistical error quantifies how close the empirical distribution and the true target are under the bounded Lipschitz distance. The following lemma is a standard result quantifying this distance.

Lemma C.6 (Lu and Lu, (2020) Proposition 3.1).

Assume that probability distribution on satisfies that , and let be its empirical distribution. Then

The finite moment condition is satisfied due to (B.1) and . Recall that , hence we have

| (B.3) |

Bounding . Similar to , the statistical error describes the distance between the distribution of and its empirical distribution. We need to introduce the empirical Rademacher complexity to quantify it. Define the empirical Rademacher complexity of function class as

where are i.i.d. Rademacher variables, i.e., uniformly distributed on {-1, 1}.
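For a finite function class, the empirical Rademacher complexity can be approximated by Monte Carlo over the Rademacher signs. The sketch assumes the definition without an absolute value, R̂(F) = E_ε sup_f (1/n) Σ_i ε_i f(x_i); the number of draws and the function name are illustrative choices.

```python
import random
from typing import Callable, List, Optional, Sequence


def empirical_rademacher(functions: Sequence[Callable[[float], float]],
                         xs: Sequence[float],
                         n_draws: int = 2000,
                         rng: Optional[random.Random] = None) -> float:
    """Monte Carlo estimate of E_eps[max_f (1/n) sum_i eps_i f(x_i)] for a finite class."""
    if rng is None:
        rng = random.Random(0)
    n = len(xs)
    values: List[List[float]] = [[f(x) for x in xs] for f in functions]  # f(x_i), precomputed
    total = 0.0
    for _ in range(n_draws):
        eps = [rng.choice((-1, 1)) for _ in range(n)]  # i.i.d. uniform on {-1, +1}
        total += max(sum(e * v for e, v in zip(eps, row)) / n for row in values)
    return total / n_draws


# The symmetric class {1, -1} on four points has exact complexity E|sum eps_i| / 4 = 0.375.
est = empirical_rademacher([lambda x: 1.0, lambda x: -1.0], [0.0] * 4, n_draws=5000)
print(round(est, 2))
```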

The next lemma bounds by the empirical Rademacher complexity using symmetrization and a chaining argument. We include the proof for completeness; it can also be found in Srebro and Sridharan, (2010).

Lemma C.7 (Random Covering Entropy Integral).

Assume . For any distribution and its empirical distribution , we have

| (B.4) |

Proof of Lemma C.7.

The first inequality in (B.4) can be proved by symmetrization. We have

where the first inequality is due to Jensen's inequality, and the third equality holds because has a symmetric distribution, which is why we introduce the ghost sample.

The second inequality in (B.4) can be proved by chaining. Let and for any let . For each , let be a -cover of w.r.t. such that . For each and , pick a function such that . Let and for any , we can express by chaining as

Denote . Hence for any , we can express the empirical Rademacher complexity as

where and the second-to-last inequality is due to the Cauchy–Schwarz inequality. The second term is now a sum of empirical Rademacher complexities of the function classes , . Note that

Massart’s lemma (Lemma C.9 below) states that if for any finite function class , , then we have

Applying Massart’s lemma to the function classes , , we get that for any ,

where the third inequality is due to . Now for any small we can choose such that . Hence,

Since is arbitrary, we can take w.r.t. to get

Now the result follows due to the fact that for any function class and samples,

This completes the proof of Lemma C.7. ∎

In , we used the discriminator network obtained from the ghost samples for the empirical distribution, because symmetrization requires the two distributions to be the same. In our setting, and do not have the same distribution, but and do. Recall that we have restricted to . Since , it now suffices to bound the covering number .

Lemma C.8 below provides an upper bound for the covering number of Lipschitz class. It is a direct corollary of (Van der Vaart and Wellner,, 1996, Theorem 2.7.1).

Lemma C.8.

Let be a bounded, convex subset of with nonempty interior. There exists a constant depending only on such that

for every , where is the 1-Lipschitz function class defined on , and is the Lebesgue measure of the set .

C.4 Proofs of the theorems

Proof of Theorem 3.1.

Proof of Theorem 3.2.

We now prove Corollary 3.3.

Proof of Corollary 3.3.

It suffices to show . By the definition of the Wasserstein distance, we have , where the infimum is taken over the set of all couplings of and . Adding a coordinate while preserving the norm, we have

Therefore,

where the last infimum is taken over the set of all couplings of and . ∎

Next, we prove Theorem 3.4.

Proof of Theorem 3.4.

Finally, we include Massart’s lemma for convenience.

Lemma C.9 (Massart’s Lemma).

Let be a finite set, with , then the following holds:

A proof of this lemma can be found in (Mohri et al.,, 2018, Theorem 3.3).
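Massart's lemma in its standard form (as in Mohri et al., 2018) states that for a finite set A ⊂ R^m with r = max over a in A of ||a||_2, E_σ[(1/m) sup_{a ∈ A} Σ_i σ_i a_i] ≤ r · sqrt(2 log|A|) / m. The short check below computes the left-hand side exactly, by enumerating all 2^m sign vectors for a small example, and compares it with the bound; the example sets and function names are illustrative.

```python
import itertools
import math
from typing import Sequence


def exact_rademacher(A: Sequence[Sequence[float]]) -> float:
    """E_sigma[(1/m) max_{a in A} sum_i sigma_i a_i], enumerating all 2^m sign vectors."""
    m = len(A[0])
    total = 0.0
    for signs in itertools.product((-1, 1), repeat=m):
        total += max(sum(s * a_i for s, a_i in zip(signs, a)) for a in A)
    return total / (m * 2 ** m)


def massart_bound(A: Sequence[Sequence[float]]) -> float:
    """Right-hand side r * sqrt(2 log|A|) / m of Massart's lemma."""
    m = len(A[0])
    r = max(math.sqrt(sum(a_i * a_i for a_i in a)) for a in A)
    return r * math.sqrt(2 * math.log(len(A))) / m


A = [[1.0, 1.0, 1.0, 1.0], [-1.0, -1.0, -1.0, -1.0]]
print(exact_rademacher(A), massart_bound(A))  # exact value 0.375; bound is about 0.589
```

Enumeration is exponential in m, so this is only a sanity check for tiny examples; the lemma itself is what makes the chaining argument in Lemma C.7 tractable.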