Watch Me Calibrate My Force-Sensing Shoes!

Abstract

This paper presents a novel method for smaller-sized humanoid robots to self-calibrate their foot force sensors. The method consists of two steps: 1. The robot is commanded to move along planned whole-body trajectories in different double support configurations. 2. The sensor parameters are determined by minimizing the error between the measured and modeled center of pressure (CoP) and ground reaction force (GRF) during the robot’s movement using optimization. This is the first proposed autonomous calibration method for foot force-sensing devices in smaller humanoid robots. Furthermore, we introduce a high-accuracy manual calibration method to establish CoP ground truth, which is used to validate the measured CoP using self-calibration. The results show that the self-calibration can accurately estimate CoP and GRF without any manual intervention. Our method is demonstrated using a NAO humanoid platform and our previously presented force-sensing shoes.

I Introduction

Foot force sensors are widely used in humanoid robots for measuring GRF and CoP to determine the stability of the system [1]. Many researchers have designed planning and control algorithms for humanoid robots that incorporate foot sensory feedback in dynamic walking [2], self-balancing [3], push-and-recovery [4], etc. In addition, foot force sensors are also applied to estimate the humanoid robot’s center of mass (CoM) [5], and external forces [6]. Recent studies also use foot force sensors to identify the mass properties of heavy objects in manipulation tasks of humanoid robots [7, 8].

Two common types of foot force sensors are used to measure CoP and GRF for humanoid robots. Commercial force/torque (F/T) sensors have been widely applied to larger-sized humanoid platforms [9, 10]. Such sensors are usually mounted between the robot’s ankle and foot. The GRF is directly measured from the sensor’s force outputs, and the CoP is obtained by solving torque balance [11]. The commercial F/T sensors, although accurate, are too expensive and heavy for smaller-sized humanoids [12]. By contrast, light-weight and low-cost force-sensing resistors (FSRs) are often adopted to construct the foot force-sensing modules by smaller-sized humanoids [13, 14]. In most foot designs, FSRs are distributed close to the foot’s boundary. The convex hull containing these FSRs is the allowable measurement area for CoP, also called the sensing polygon (often smaller than the robot’s support polygon) [15]. Since each FSR outputs 1-D force, the robot’s normal GRF is the total force output (see 1). The CoP is obtained by averaging the position of each sensor weighted by their corresponding force outputs (see 2). In practice, FSRs often suffer from resistance drift and hysteresis, and they are not suitable for accurate force measurement [16].

In the literature, there are three ways to enhance the measurement accuracy of the foot force-sensing devices constructed by FSRs for smaller humanoid robots.

1. Improving sensor’s quality. Researchers either developed their own light-weight and high-accuracy force/pressure sensors or customized the existing higher-quality commercial sensors into the foot design. For example, Shayan et al. modified barometric pressure sensors to replace the poor factory default FSRs in the NAO robot’s feet [17]. Kwon et al. developed a pressure-sensing foot using custom-fabricated polymer-based pressure sensors for a KIBO robot [18]. Our proposed force-sensing shoes [19] utilize light-weight single-axis load cells for force measurement.

2. Improving foot force-sensing module design. To improve the NAO robot’s poor foot design, where the FSRs incompletely contact the foot bottom plate, Almeida et al. proposed a two-layer force-sensing module design facilitating all the FSRs to engage [20]. Our proposed force-sensing shoe ensures all force sensors are effectively activated by directly cantilevering the shoe’s top plate on the force sensors [19]. Additionally, the authors in [21] add a soft sponge layer between the FSRs and the foot bottom plate to facilitate contact for a KHR-2HV robot.

3. Applying manual calibration. Manual calibration includes single sensor calibration and force-sensing module calibration. The former requires applying different reference forces to the sensor and then using linear or nonlinear regressions to map out the relationship between the sensor’s raw signal output and the applied force. The latter aims to improve the accuracy of a specific measured metric incorporating all sensor outputs, of which the most common one is the CoP. Our work [19] proposed a CoP manual calibration method, which applies known weights to designated positions on the top of the foot module and minimizes the error between the measured CoP and its ground truth over sensor parameters.

The above mentioned manual calibration methods need to be implemented frequently since force sensors drift as the environmental factors change. For example, a temperature change may lead to measurement offsets; Slight variations in the mechanical structure during usage, such as changes in screw tension and deformations of the mounting material, may affect the measurement accuracy. However, performing manual calibration is time-consuming and requires human intervention, making it impossible for robots to use accurate force sensory feedback in long-term autonomous tasks.

To equip the smaller humanoid robots with embodied intelligence to calibrate their foot sensors autonomously, we propose a novel self-calibration method. In our method, the robot is commanded to reach several sampled double-support configurations. After reaching each double support, the robot moves its body following planned trajectories. Then the optimal sensor parameters are determined by minimizing the error between the measured CoP, GRF, and their modeled references using nonlinear optimization. To our best knowledge, this is the first autonomous method for calibrating foot sensors for smaller-sized humanoid robots.

To validate the CoP measurement using the self-calibration approach, a manual calibration method is developed. This method incorporates force-plate calibration techniques, enabling high CoP measurement accuracy. The measured CoP using manual calibration parameters is considered ground truth to compare with the self-calibration result.

Both the self and the manual calibrations are individual novelties to enhance foot force-sensing performance for smaller humanoid robots. The former can be used for long-term autonomous tasks, and the latter can be applied to tasks requiring higher measurement accuracy. The two calibration methods are demonstrated using our previously presented force-sensing shoes [19] and a NAO robot. Experimental results show that our self-calibration method can accurately estimate CoP and GRF without human interference.

II Force-sensing shoe overview

This section gives a brief overview of our previously presented force-sensing shoes in [19].

II-A Design

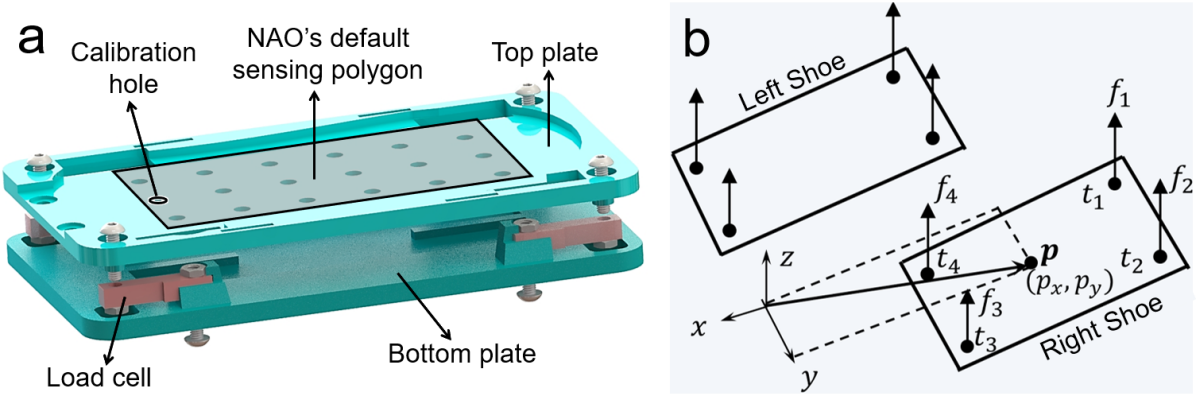

The force-sensing shoe design is shown in Fig. 1a. The shoe consists of two 3-D printed plates and measures forces using four 1-D load cells. One side of the load cell is cantilevered at the holders of the bottom plate, and its other side connects the corners of the top plate. Slightly different from the first version design in [19], the current design includes a six-by-three array of holes on the top plate, which are used for manual calibration (see III). These holes cover the sensing polygon constructed by the NAO’s factory default FSRs (grey area) [22]. This calibration area, slightly smaller than the shoe’s support polygon, is the allowable CoP region for the robot’s default functionalities for safety concerns.

II-B Sensing principle

The shoe measures GRF and CoP. The GRF, , is acquired by summing up all the force output (Fig. 1b):

| (1) |

The CoP, C, is obtained by averaging the 2-D position of each load cell in the spatial coordinate, , weighted by their corresponding force outputs, (Fig. 1b):

| (2) |

The sensing principle for single support can also be applied to double support by incorporating all eight sensors.

III Shoe Manual Calibration

This section introduces a manual calibration method for the shoe’s CoP measurement, including load-cell calibration and shoe calibration. The manual calibration method is used to establish CoP ground truth for evaluation of the self-calibration algorithm that will come later.

III-A Load cell calibration

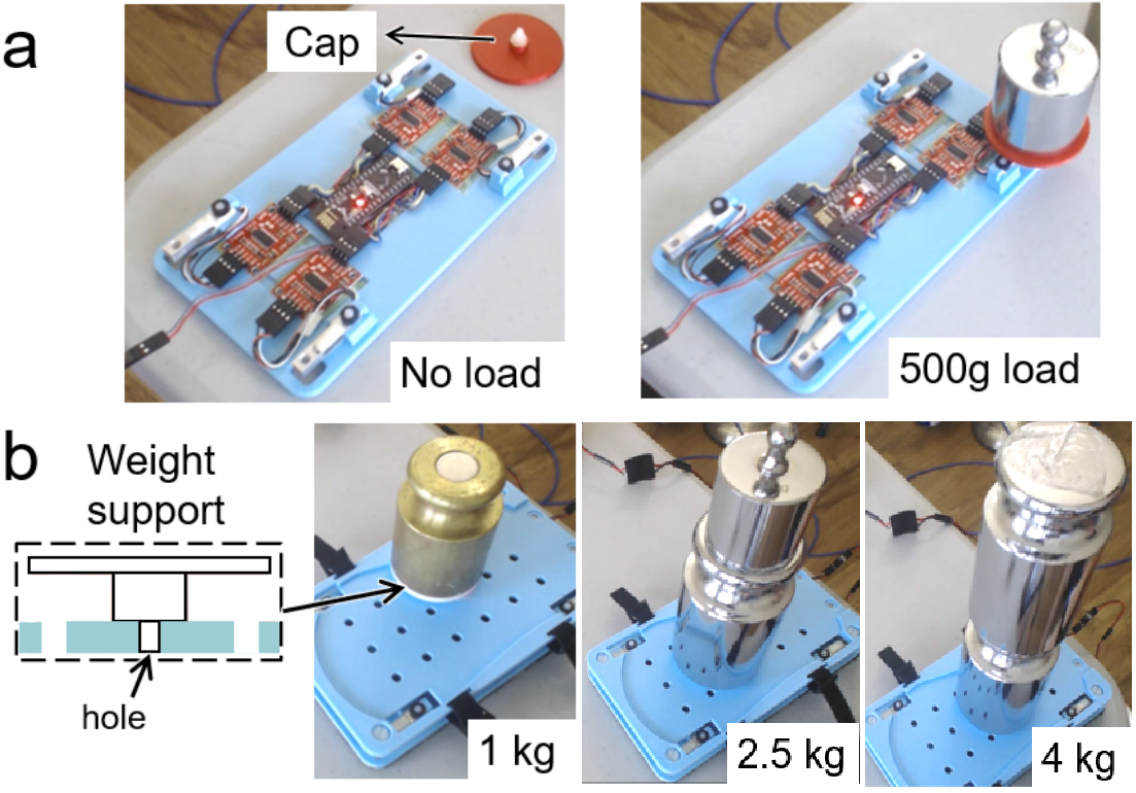

To calibrate each load cell, we first record its no-load voltage (Fig. 2a, left) and then record the updated voltage after applying a known weight to the sensor (Fig. 2a, right). Due to the load cell’s linear response between the voltage output and the applied force, the voltage to force ratio is . Then, the force output corresponding to a loaded voltage output is given by:

| (3) |

where and are the modified scaling factor and offset.

III-B Shoe calibration

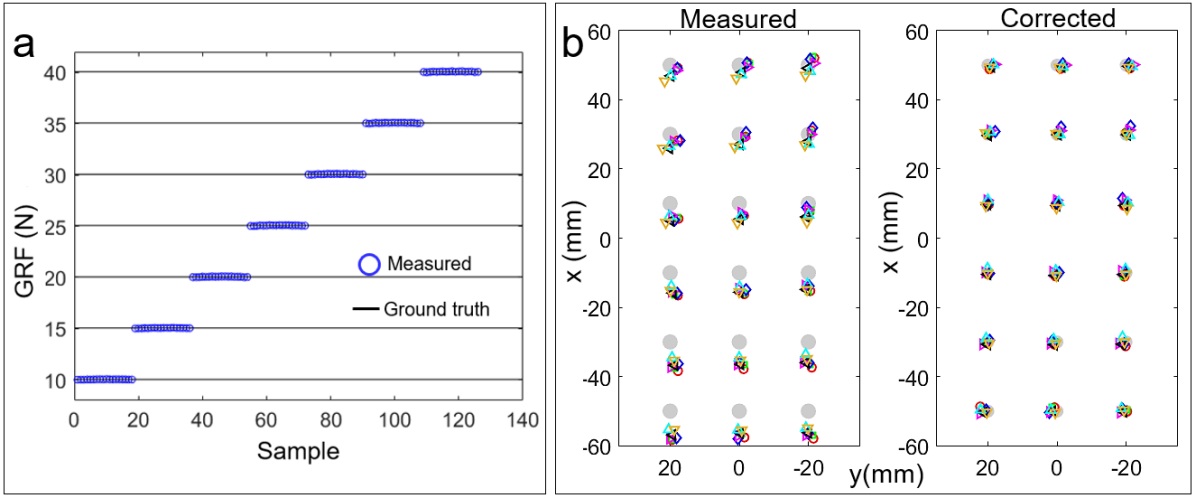

As introduced in [19], our force-sensing shoes possess high accuracy in GRF measurement (Fig. 3a) but low accuracy in CoP measurements (Fig. 3b, left). To improve the CoP measurement accuracy, we apply seven different weights (1, 1.5, 2, 2.5, 3, 3.5, 4kg masses of the robot’s mass) to the holes on the shoe’s top plate using a 3-D printed weight support (Fig. 2b shows three examples). Then, we utilize nonlinear least squares to minimize the error between measured CoPs and their corresponding ground truths, which are the known 2-D positions of the holes.

Inspired by the force plate calibration method introduced in [23], our manual calibration defines a corrected CoP, , by summing the measured CoP and a correction term :

| (4) |

The correction terms are formulated as:

| (5) |

which are designed first using a second-order polynomial of the measured CoP, , parameterized using , (). This part is used to improve the averaged measurement at each calibration hole. The correction terms also includes first-order terms of the force outputs parameterized using and , corresponding to each force output). This second part is for reducing the deviation at the same calibration spot for different applied weights. Eventually, nonlinear least squares is used to minimize the error between the corrected CoP, and the ground truth, :

| (6) |

where is the sample index, is the sample number, and in (5) are the optimization variables.

III-C Shoe measurement accuracy

Mean absolute error (MAE) is used to quantify the CoP and GRF accuracy. The MAE of the GRF, , is given by:

| (7) |

where is the measured GRF and is the calibration weight. The MAE of CoP, , is defined as:

| (8) |

where and are the measured CoP and the ground truth.

The example of GRF and CoP measurements of the left shoe are shown in Fig. 3. The MAEs of both shoes are listed in Table I. According to the results, the measured GRF (Fig. 3a, blue circles) almost lie perfectly on their corresponding ground truths (black lines) with only around 0.03N MAE (Table I); The corrected CoPs (Fig. 3b, right, colored markers) become much closer to their corresponding ground truth (gray circles) compared with the initial measurements (left, colored markers). The accuracy of the corrected CoPs is improved about five times with only around 1 mm MAE (Table I). The results indicate that our manual calibration enables high CoP measurement accuracy.

| Shoe | Measured GRF (N) | Measured CoP (mm) | Corrected CoP (mm) |

|---|---|---|---|

| Left | 0.02 0.01 | 4.53 1.89 | 1.07 0.62 |

| Right | 0.03 0.03 | 3.51 1.90 | 0.76 0.35 |

IV Shoe Self-Calibration

IV-A Method overview

When a humanoid robot moves slowly under a quasi-static assumption, its CoP equals the ground projection of its CoM [11]; Its normal GRF is simply the robot’s weight. Ideally, the measured CoP and normal GRF using force sensors should equal their corresponding modeled values. Therefore, our self-calibration method collects modeled CoP, GRF and their corresponding force sensor outputs by moving the robot along planned trajectories in different double support configurations. Then the sensor parameters can be solved by minimizing the error between the sensor measurements and their modeled references using optimization.

IV-B Data sampling strategy

In order to initiate the self-calibration, the robot needs to first reach different foot configurations. Two options are considered to sample these configurations: 1. The robot stands with single support and self-calibrate each shoe separately. 2. The robot stands with double support and self-calibrate both shoes together. The former is ideal since it calibrates only four sensors of one shoe, reducing the potential of over-fitting by limiting the dimension of the optimization space. However, this option requires the robot to move its body with only one foot supporting its weight, resulting in excessive torque for smaller-sized humanoid robots with under-powered motors. Practically, our NAO robot platform encountered serious ankle overheating issues standing in single support during our initial testing. Therefore, the double support option is chosen for safety concerns.

To prevent overfitting for calibrating eight sensors in both feet, we collect sensor voltage data covering a sufficient range. Additionally, we ensure a large sample size. Two strategies are implemented to realize the above sampling results. 1. We sample double supports with the position and orientation of one foot sufficiently far from the other (Section V-A). 2. We plan whole-body trajectory for each sampled double support such that the robot’s CoP covers different areas of the sensing polygon (Section V-B).

V Motion Planning

This section introduces the details for double support generation and whole-body motion planning.

V-A Double support configuration generation

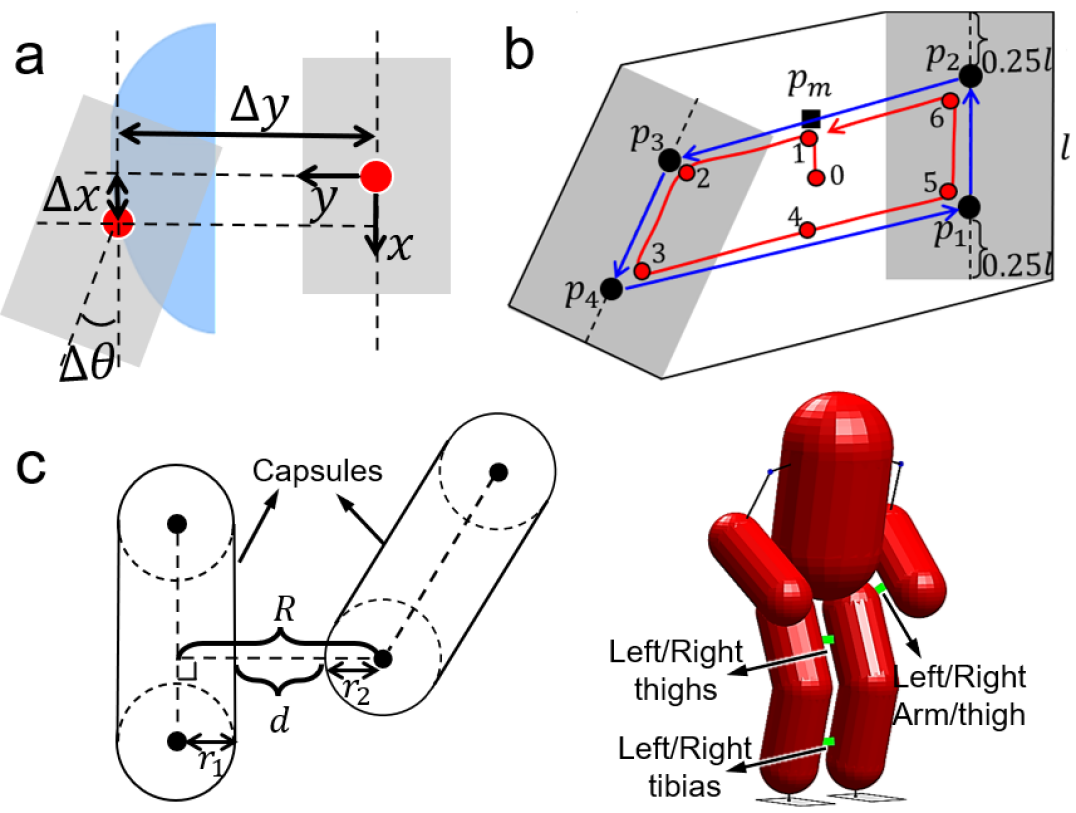

Each double support configuration is defined using the position and orientation of one shoe relative to another with three parameters: , (Fig. 4a). We first discretize these parameters in the robot’s factory allowable ranges (blue area) and then randomly sample in the discretized space. For each sampled double support configuration, a collision check is implemented to ensure no collision between the feet. The configuration distance between the feet of each sample must also be greater than a threshold, , designed as:

| (9) |

where and are the weights of the Cartesian distance and the relative orientation. Once the newly sampled double support passes both checks, it is stored for later execution.

V-B Whole-body trajectory generation

For each sampled double support, a whole-body trajectory is generated using trajectory optimization (Fig. 4b). First, four CoP landmarks are designed at the sensing polygon’s centerlines and are 0.25-length away from the sensing polygon’s front or rear edge (, is the foot length). Then a trajectory planner generates a series of joint angle vectors, enabling the modeled CoP (red curve) to move counter-clockwise along the polygon contour constructed by the landmarks (blue curve). Initially, the CoP is driven towards one of the midpoints of the polygon contour () that is closest to the robot’s initial CoP (point 0). The robot’s arms are fixed in the trajectory, as they have little impact on CoP.

We adopt the direct collocation technique [24] for trajectory optimization because it effectively solves trajectory including complex path constraints like our case. The optimization is formulated as:

| (10) | ||||

| subject to: | (11) | |||

| (12) | ||||

| (13) | ||||

| (14) | ||||

| (15) |

where the system states are a series of joint angle vectors: , and the transition vectors: . The transition vectors connect consecutive states along the trajectory (11). The cost function (10) penalizes the distance between the modeled CoP and the CoP landmark , driving the CoP trajectory towards the landmark. Moreover, the cost (10) minimizes the state transition difference to smooth the trajectory. Several path constraints are imposed: the stability constraint (12) confines the modeled CoP inside the sensing polygon of the double support. The joint limit constraint (13) determines the lower and upper bound of the states. The collision constraints (14) prevent leg collision. 3-D capsules are used to approximate the robot’s link geometry (Fig. 4c). The collision constraints set the minimal distance of the collision pairs (4c, right) larger than a smaller threshold . This minimal distance is calculated using the capsule centerline distance () to subtract the sum of their radii () (Fig. 4c, left). The foot transformation constraint (15) preserves the relative position and orientation between the feet for all the states in the trajectory. Finally, the optimization is solved using a nonlinear programming solver.

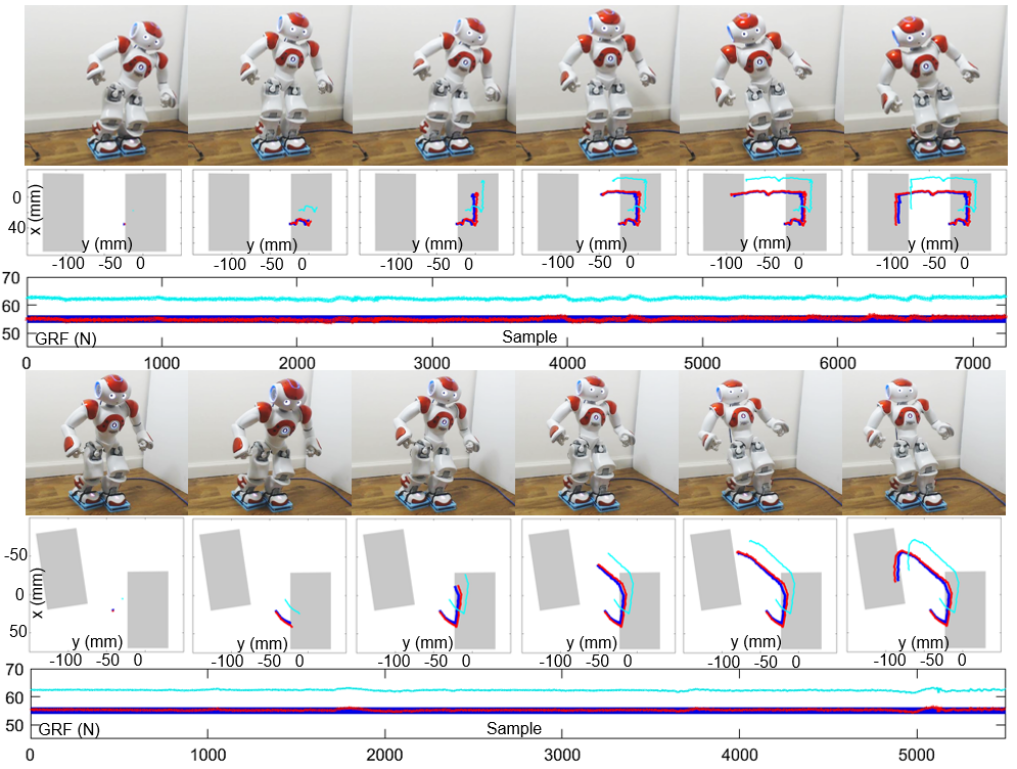

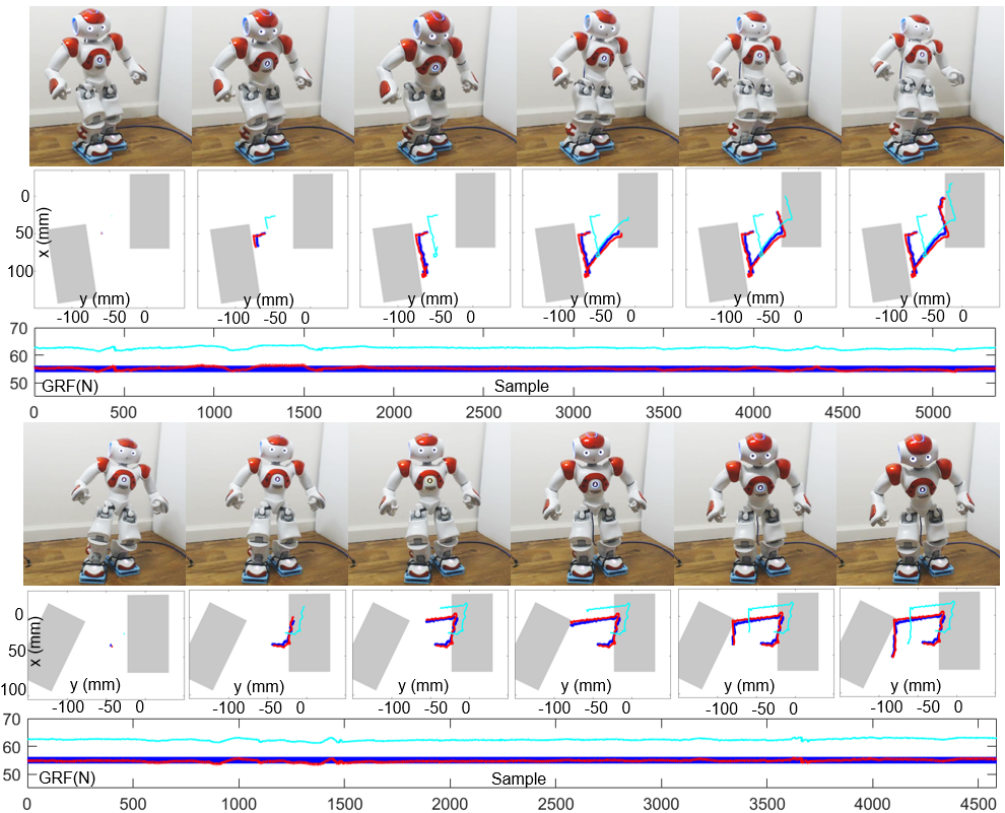

In practice, it is challenging for the solver to generate a complete trajectory directly connecting adjacent CoP landmarks in the sampled double supports (Fig. 4b). On the one hand, the convergence of the optimizer is sensitive to the number of states in the trajectory. On the other hand, a feasible trajectory may not exist once the robot’s configuration gets closer to its singularity, which occurs when the CoP is close to its landmark. To ensure our algorithm runs autonomously, the planner is implemented in a model predictive control scheme: the optimizer continuously generates a small portion of the trajectory ahead of time, moving the CoP towards its landmark. Once the CoP is close enough to the landmark, or the CoP trajectory cannot proceed more towards the landmark, a new landmark is updated. The detailed implementation of the planner is presented in Algorithm 1 and the snapshots of the generated whole-body trajectories are shown in Fig. 6 and 7.

VI Implementation Details

VI-A Data preparation

To implement the self-calibration algorithm, we sampled five different double supports and then generated their corresponding whole-body trajectories. Among these datasets, three are randomly chosen for training (Fig. 6). The other two (Fig. 7) are used for evaluation.

| data 1 | data 2 | data 3 | data 4 | data 5 | |

|---|---|---|---|---|---|

| GRF (N) | 0.27 0.22 | 0.27 0.20 | 0.12 0.16 | 0.25 0.21 | 0.31 0.16 |

| CoP (mm) | 2.98 1.62 | 2.72 1.37 | 2.20 1.19 | 2.50 1.71 | 2.01 1.56 |

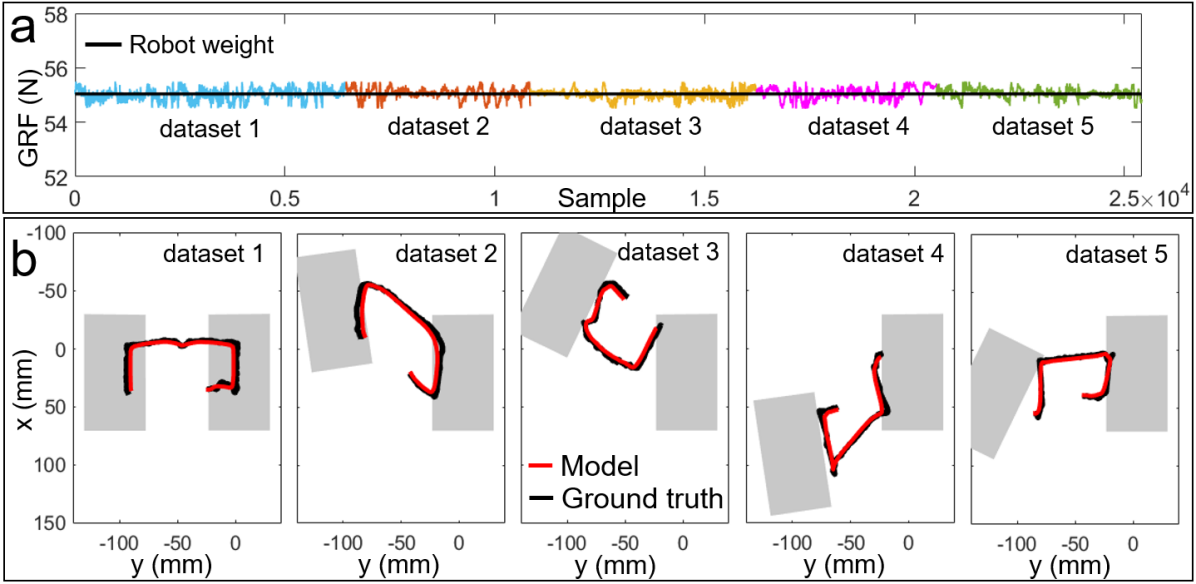

VI-B Modeling reliability

For the self-calibration to work effectively, two assumptions need to be validated. Assumption 1: The robot’s slow movement can be modeled as a quasi-static system. Assumption 2: The modeled CoP is sufficiently close to the ground truth. To test these two assumptions, we manually calibrated the shoes and use their high-accurate measurements (see Table I) as ground truths to evaluate the modeled results. For Assumption 1, we compared the measured normal GRF using manual calibration and the robot’s weight. For Assumption 2, we compared the modeled CoP and the measured CoP using manual calibration. The results show that the measured GRF (Fig. 5a, colored curves) slightly oscillates around the ground truth (black line) with only around 0.3 N MAE (Table II), indicating that the robot’s slow movement is close enough to quasi-static condition. In addition, the modeled CoP trajectories (Fig. 5b, red curves) are close to the measured references (black curves) with less than 3 mm MAE (Table II), indicating that the modeling is with decent fidelity. The experimental results prove that both assumptions are practically applicable.

VI-C Center of pressure modeling

Under quasi-static assumption, the modeled CoP equals the projection of the modeled CoM of the robot. With a priori known mass properties of the robot’s body components, the CoM can be modeled by solving whole body forward kinematics using joint angle data obtained by encoders.

VI-D Initial sensor parameters estimation

The self-calibration utilizes nonlinear least squares to determine the sensor parameters, requiring a reasonable initial guess. Since all load cells are of the same type, we apply the same initial guess (see III-A for load cell modeling) to all the sensors. Assuming the measured GRF and CoP using initial guess approximately equal their modeled references, the following equations hold:

| (16) |

The left side consists of the robot’s weight, , and the modeled CoP, . The right side consists of the measured GRF and CoP, where is the sensor index, and are the sensor voltage output and location. We can rearrange the equations to include more training samples:

| (17) |

where is the sample number. Therefore, the optimal initial guess can be solved by least squares regression.

VI-E Sensor parameter identification

VI-E1 Loadcell parameter identification

Given training samples consisting of sensor voltage outputs and their corresponding modeled GRFs and CoPs (Fig. 6), the load cells’ parameters are determined by minimizing the differences between these corresponding pairs using nonlinear least squares (NLS). The optimization variables are the parameters of the load cells’ scaling factors and offsets in (3). The NLS is formulated as:

| (18) | |||

where is the number of training samples, and are measured GRF and CoP; is the modeled CoP; is the robot’s weight; is the initial guess solved by (17). , and are the weights for the cost terms. The regulation term in the cost is designed to avoid overfitting.

VI-E2 CoP measurement calibration

Similar to the manual calibration method (see III-B), we further improve the CoP measurement by first defining a corrected CoP:

| (19) |

where , , and are the measured CoP and GRF using sensor parameters for the left and right feet solved by (18). and are the correction terms applied to the left and right feet defined in (5). Then we use NLS to minimize the error between corrected and modeled CoPs over the parameters of the correction terms:

| (20) |

where is the sample number, is the modeled CoP and are the parameters of the correction terms defined in (5).

VII Results

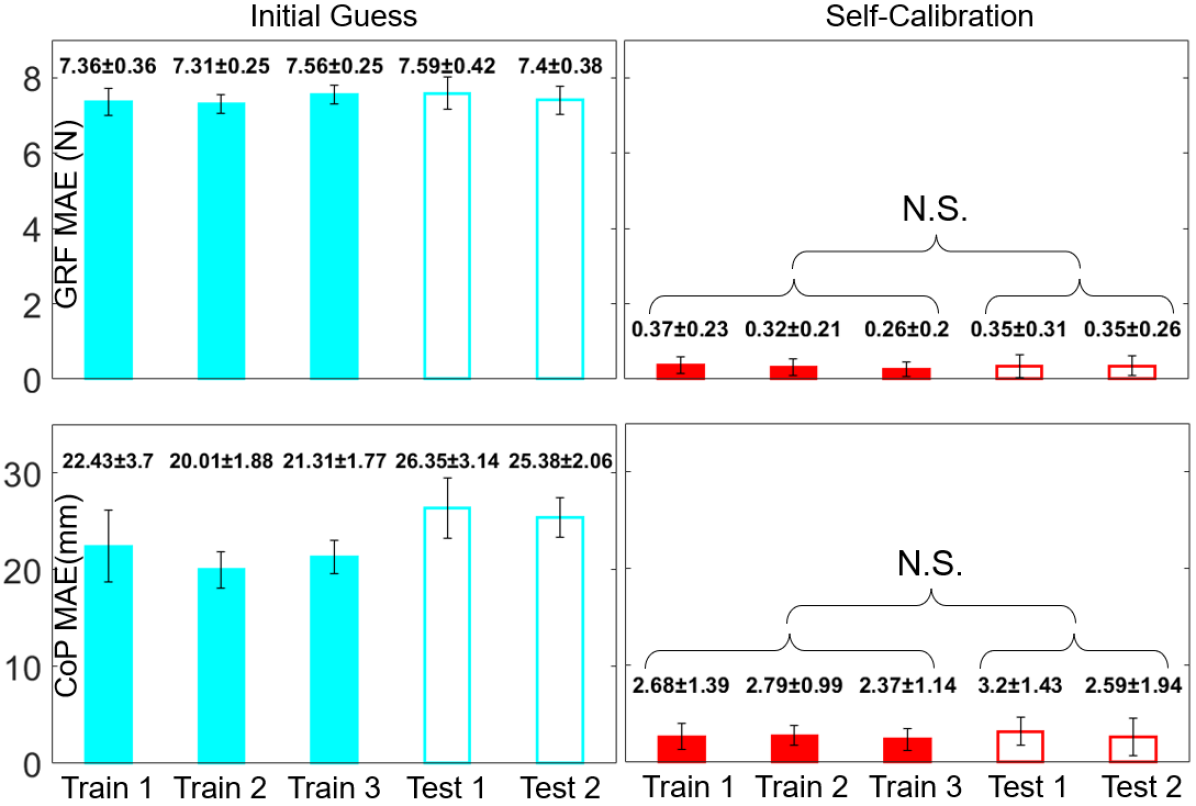

The shoe measurements using self-calibrated parameters and the corresponding ground truths acquired by manually calibrated parameters for the training and testing datasets are shown in Fig. 6 and 7. The MAE of the measurements using initial guess (Section VI-D) and the measurements using self-calibration are presented in Fig. 8. The results show that GRF and CoP measurements using initial guess (Fig. 6 and 7, teal lines) are far away from their ground truths (blue lines), with about 7.5 N MAE for GRF and 23 mm MAE for CoP (Fig. 8, left). By contrast, the measurement using self-calibration (Fig. 6 and 7, red lines) are much closer to their corresponding ground truths (blue lines) with only approximate 0.35 N MAE for GRF and 2.8 mm MAE for CoP. The self-calibration improves the measurements using initial guess by approximately ten times for CoP (Fig. 8, bottom) and 20 times for GRF (Fig. 8, top). In addition, statistical tests show that the training (Fig. 8, right, filled bars) and testing (empty bars) MAEs are not significantly different, indicating no overfitting takes place. The overall results demonstrate that our self-calibration approach can effectively determine sensor parameters without any manual intervention and without needing initial sensor information.

VIII Discussion

This letter presents a novel self-calibration method for humanoid robots to determine the sensor parameters of their foot force-sensing modules autonomously. Our method does not require any manual intervention. Therefore, this approach enables a calibration process without the need of detaching the device from the robot or disassembling the device. In addition, this method can also be applied for sensor correction in long-term autonomous tasks. Although the self-calibration approach is demonstrated on our force-sensing shoes designed for a NAO humanoid robot, it can theoretically be applied to other foot-sensing modules for both smaller and larger humanoids, using similar planning and optimization procedures as ours.

It is observable that our planned CoP trajectories in the training and testing datasets do not cover the edge of the sensing polygon (Fig. 6 and 7). This is due to the limitation of our smaller-sized robot platform. During our initial testing, we discovered that when the robot’s CoP is near the edge of the sensing polygon, its major supporting leg almost always suffered from excessive torque. Therefore, we designed CoP trajectory to not cover the edge of the sensing polygon for safety concerns.

Compared with the ground truth produced by manually calibrated sensor parameters (Table I), the measurement using self-calibrated parameters is slight off (Fig. 8, right), which is likely caused by the following issues: 1. the robot’s movement can never reach fully quasi-static since the acceleration always exists during the movement of the robot (Fig. 5a and Table II). 2. The modeled CoP does not perfectly equal the ground truth (Fig. 5b and Table II). These problems lead to fitting errors in the optimization process.

IX Acknowledgement

This work was supported by NUS Startup grants R-265-000-665-133, R-265-000-665-731, Faculty Board account C-265-000-071-001, and the National Research Foundation, Singapore under its Medium Sized Center for Advanced Robotics Technology Innovation R-261-521-002-592.

References

- [1] M. Vukobratović and B. Borovac, “Zero-moment point—thirty five years of its life,” International journal of humanoid robotics, vol. 1, no. 01, pp. 157–173, 2004.

- [2] S. Tsuichihara, M. Koeda, S. Sugiyama, and T. Yoshikawa, “A sliding walk method for humanoid robots using zmp feedback control,” in 2011 IEEE International Conference on Robotics and Biomimetics. IEEE, 2011, pp. 275–280.

- [3] S. Nakaura, M. Sampei et al., “Balance control analysis of humanoid robot based on zmp feedback control,” in IEEE/RSJ International Conference on Intelligent Robots and Systems, vol. 3. IEEE, 2002, pp. 2437–2442.

- [4] P. Ghassemi, M. T. Masouleh, and A. Kalhor, “Push recovery for nao humanoid robot,” in 2014 Second RSI/ISM International Conference on Robotics and Mechatronics (ICRoM). IEEE, 2014, pp. 035–040.

- [5] L. Hawley and W. Suleiman, “External force observer for medium-sized humanoid robots,” in 2016 IEEE-RAS 16th International Conference on Humanoid Robots (Humanoids). IEEE, 2016, pp. 366–371.

- [6] S. Piperakis, M. Koskinopoulou, and P. Trahanias, “Nonlinear state estimation for humanoid robot walking,” IEEE Robotics and Automation Letters, vol. 3, no. 4, pp. 3347–3354, 2018.

- [7] Y. Han, R. Li, and G. S. Chirikjian, “Can i lift it? humanoid robot reasoning about the feasibility of lifting a heavy box with unknown physical properties,” in 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, 2020, pp. 3877–3883.

- [8] R. Shigematsu, S. Komatsu, Y. Kakiuchi, K. Okada, and M. Inaba, “Lifting and carrying an object of unknown mass properties and friction on the head by a humanoid robot,” in 2018 IEEE-RAS 18th International Conference on Humanoid Robots (Humanoids). IEEE, 2018, pp. 1–9.

- [9] T. Takenaka, “The control system for the honda humanoid robot,” Age and ageing, vol. 35, no. suppl_2, pp. ii24–ii26, 2006.

- [10] K. H. Koch, K. Mombaur, O. Stasse, and P. Soueres, “Optimization based exploitation of the ankle elasticity of hrp-2 for overstepping large obstacles,” in 2014 IEEE-RAS International Conference on Humanoid Robots. IEEE, 2014, pp. 733–740.

- [11] S. Kajita, H. Hirukawa, K. Harada, and K. Yokoi, Introduction to humanoid robotics. Springer, 2014, vol. 101.

- [12] Y. Fujimoto and A. Kawamura, “Attitude control experiments of biped walking robot based on environmental force interaction,” in AMC’98-Coimbra. 1998 5th International Workshop on Advanced Motion Control. Proceedings (Cat. No. 98TH8354). IEEE, 1998, pp. 70–75.

- [13] D. Gouaillier, V. Hugel, P. Blazevic, C. Kilner, J. Monceaux, P. Lafourcade, B. Marnier, J. Serre, and B. Maisonnier, “Mechatronic design of nao humanoid,” in 2009 IEEE International Conference on Robotics and Automation. IEEE, 2009, pp. 769–774.

- [14] Y. Takahashi, K. Nishiwaki, S. Kagami, H. Mizoguchi, and H. Inoue, “High-speed pressure sensor grid for humanoid robot foot,” in 2005 IEEE/RSJ International Conference on Intelligent Robots and Systems. IEEE, 2005, pp. 3909–3914.

- [15] B. J. Son, Y. S. Baek, and J. H. Kim, “Development of foot modules of an exoskeleton equipped with multiple sensors for detecting walking phase and intent,” in Applied Mechanics and Materials, vol. 752. Trans Tech Publ, 2015, pp. 1016–1021.

- [16] A. Hollinger and M. M. Wanderley, “Evaluation of commercial force-sensing resistors,” in Proceedings of the International Conference on New Interfaces for Musical Expression, Paris, France. Citeseer, 2006, pp. 4–8.

- [17] A. M. Shayan, A. Khazaei, A. Hamed, and M. T. Masouleh, “Design and development of a pressure-sensitive shoe platform for nao h25,” in 2019 7th International Conference on Robotics and Mechatronics (ICRoM). IEEE, 2019, pp. 223–228.

- [18] H.-J. Kwon, J.-H. Kim, D.-K. Kim, and Y.-H. Kwon, “Fabrication of four-point biped robot foot module based on contact-resistance force sensor and its evaluation,” Journal of mechanical science and technology, vol. 25, no. 2, p. 543, 2011.

- [19] Y. Han, R. Li, and G. S. Chirikjian, “Look at my new blue force-sensing shoes!” in 2021 IEEE International Conference on Robotics and Automation (ICRA). IEEE, 2021, pp. 2891–2896.

- [20] L. Almeida, V. Santos, and F. Silva, “A novel wireless instrumented shoe for ground reaction forces analysis in humanoids,” in 2018 IEEE International Conference on Autonomous Robot Systems and Competitions (ICARSC). IEEE, 2018, pp. 36–41.

- [21] K. Suwanratchatamanee, M. Matsumoto, and S. Hashimoto, “Haptic sensing foot system for humanoid robot and ground recognition with one-leg balance,” IEEE Transactions on Industrial Electronics, vol. 58, no. 8, pp. 3174–3186, 2009.

- [22] “Nao robot’s force sensing resistor introduction page,” http://doc.aldebaran.com/2-5/family/robots/fsr_robot.html?highlight=fsr.

- [23] G. J. Verkerke, A. Hof, W. Zijlstra, W. Ament, and G. Rakhorst, “Determining the centre of pressure during walking and running using an instrumented treadmill,” Journal of biomechanics, vol. 38, no. 9, pp. 1881–1885, 2005.

- [24] T. Tsang, D. Himmelblau, and T. F. Edgar, “Optimal control via collocation and non-linear programming,” International Journal of Control, vol. 21, no. 5, pp. 763–768, 1975.