WaterFlow: Heuristic Normalizing Flow for Underwater Image Enhancement and Beyond

Abstract.

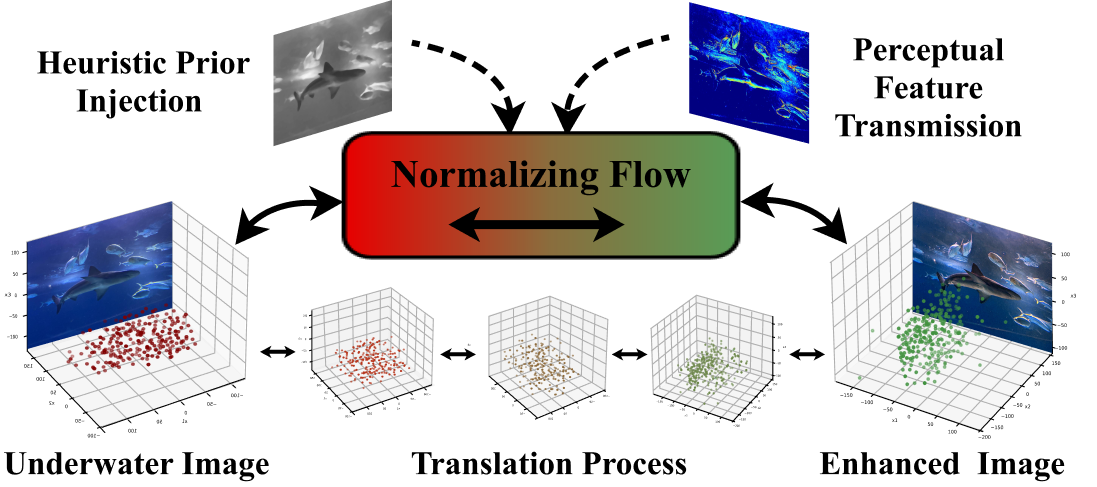

Underwater images suffer from light refraction and absorption, which impairs visibility and interferes with subsequent applications. Existing underwater image enhancement methods mainly focus on improving image quality while ignoring the effect on practical applications. To balance visual quality and applicability, we propose a heuristic normalizing flow for detection-driven underwater image enhancement, dubbed WaterFlow. Specifically, we first develop an invertible mapping to achieve the translation between the degraded image and its clear counterpart. Considering differentiability and interpretability, we incorporate a heuristic prior into the data-driven mapping procedure, where the ambient light and the medium transmission coefficient benefit credible generation. Furthermore, we introduce a detection perception module to transmit implicit semantic guidance into the enhancement procedure, so that the enhanced images hold more detection-favorable features and promote detection performance. Extensive experiments demonstrate the superiority of WaterFlow over state-of-the-art methods, both quantitatively and qualitatively.

1. Introduction

In recent years, there have been significant advances in underwater robots for exploration in various fields (Lin et al., 2020; Yoerger et al., 2021). However, underwater object detection, a critical component of underwater exploration tasks, still faces considerable difficulties. The images captured underwater often suffer from severe distortion due to the complex and variable underwater environment, leading to a significant reduction in image visibility. The performance of subsequent object detection applications is also significantly affected.

To mitigate the impact of image distortion on underwater object detection, existing methods typically employ underwater image enhancement as a preprocessing step for object detection (Chen et al., 2020), feeding the enhanced underwater images into the detection module as prospective guidance to achieve better detection accuracy. Traditional underwater enhancement methods estimate imaging parameters through the underwater degradation formula to obtain enhanced images. However, fixed imaging parameters may not fully capture the diverse and complex nature of real underwater environments. In recent years, deep learning has attracted wide attention, and learning-based methods (Li et al., 2021a; Jiang et al., 2022) have achieved good restoration results with manually designed networks trained end-to-end. However, image enhancement and object detection are usually regarded as two parallel, independent tasks. Enhancement results that focus solely on visual perception cannot fully capture the scene information required by subsequent detection algorithms. Moreover, the enhancement network may introduce uncertain interference that degrades detection performance.

In this paper, we propose a detection-driven heuristic normalizing flow for underwater image enhancement. We first develop a reversible translation framework based on normalizing flow to facilitate domain translation between degraded underwater images and their clear counterparts. Specifically, the reversible translation is established by synchronously optimizing the forward and reverse processes with shared parameters and bilateral constraints. The forward process maps the degraded image to the clear restored image by learning a nonlinear function, and the reverse process maps the clear image back to the degraded image through the inverse of that function. Then, considering differentiability and interpretability, we estimate the underwater imaging parameters using different modalities of knowledge and incorporate them as heuristic priors into the data-driven mapping process. Guided by the physical model of underwater imaging, the proposed method effectively prevents the introduction of noise interference and undesirable artifacts, thereby avoiding adverse impacts on subsequent detection tasks. To improve the adaptability of the enhanced results to subsequent detection tasks, we further introduce a Detection Perception Module. By propagating high-level perceptual features to the enhancement module, the enhanced images implicitly carry more semantic information beneficial to subsequent detection while remaining visually pleasing.

In summary, our contributions are as follows:

- We apply normalizing flow to the underwater image enhancement task, realizing an invertible mapping between the degraded image and its clear counterpart.
- We incorporate a heuristic prior into the data-driven mapping process, which improves the interpretability of the whole enhancement framework and allows it to be applied widely across real underwater scenes.
- We propose a Detection Perception Module, which transmits high-level latent perceptual information to retain and extract detection-oriented semantic information.
- Qualitative and quantitative results demonstrate that the proposed WaterFlow recovers the intrinsic scene clearly and is more conducive to subsequent detection.

2. RELATED WORK

2.1. Underwater Image Enhancement

In recent years, numerous underwater image enhancement methods have been proposed. Early traditional methods often adjust the pixel distribution of different color channels to weaken the degradation of natural light underwater. Ghani et al. (Ghani and Isa, 2015) stretched the histograms of the red and blue channels of the underwater image upward and downward, respectively, according to the Rayleigh distribution. Li et al. (Li et al., 2016) restored the blue-green channels using the dark channel prior and then corrected the red channel based on the Gray-World assumption. Although these methods can compensate for the fading of light in different color channels, the lack of guidance from physical models makes them prone to introducing artificial colors. Therefore, model-based methods (Li et al., 2017; Wang et al., 2017; Peng et al., 2018; Yang et al., 2023) are also widely used, improving interpretability by incorporating domain-specific prior knowledge.

In recent years, many deep learning based methods have been proposed. Li et al. (Li et al., 2021a) introduced multiple color spaces into a transmission-guided network to reduce the influence of color casts on underwater images. Mu et al. (Mu et al., 2022) introduced a bi-level model that hierarchically incorporates various knowledge modalities to enhance the quality of underwater images. Jiang et al. (Jiang et al., 2022) proposed a perceptual adversarial mechanism and introduced a global module to narrow the gap between enhanced images and reference images. Huang et al. (Huang et al., 2023) proposed a semi-supervised framework based on the mean-teacher approach to improve the generalization ability of the enhancement model on real-world data.

2.2. Normalizing Flow

A normalizing flow transforms a complicated probability distribution into a simple one, typically a Gaussian, through a sequence of bijective, differentiable mappings. Normalizing flows have received far less attention than GANs and VAEs because their invertible architectures require careful structural design and a large amount of GPU memory for the reversible computations. Many methods for accelerating the computation have been proposed in recent years. Dinh et al. (Dinh et al., 2014) proposed the additive coupling layer, which makes the determinant of the Jacobian matrix easy to compute. To increase the log-likelihood, Kingma et al. (Kingma and Dhariwal, 2018) developed actnorm to normalize each channel of the input features, and an invertible 1×1 convolution to replace the fixed permutation of (Dinh et al., 2014). Conditional normalizing flows were introduced to increase the expressiveness and flexibility of normalizing flows and have been widely used in vision tasks (Lugmayr et al., 2020; An et al., 2021; Abdal et al., 2021; Li et al., 2021b; Wang et al., 2022; Jiang et al., 2023b). However, there is little precedent for adopting flow-based methods to solve the ill-posed underwater image enhancement problem.
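As background for the paragraph above, the standard change-of-variables identity behind normalizing flows and the additive coupling layer of Dinh et al. (2014) are written out below. These are textbook forms, not WaterFlow's own equations; $m$ denotes an arbitrary (not necessarily invertible) network and $K$ the number of flow steps.

```latex
% Flow f = f_K \circ \dots \circ f_1 with h_0 = x and h_K = z = f(x)
\log p_X(x) = \log p_Z\big(f(x)\big) + \sum_{k=1}^{K} \log \left| \det \frac{\partial h_k}{\partial h_{k-1}} \right|

% Additive coupling (Dinh et al., 2014): split h into (h^a, h^b)
y^a = h^a, \qquad y^b = h^b + m(h^a)
% Inverse: h^a = y^a, h^b = y^b - m(y^a).
% The Jacobian is unit lower-triangular, so \log|\det| = 0 for this layer.
```

Because the determinant of each coupling layer is trivial, the log-likelihood can be evaluated exactly without inverting or differentiating $m$ itself.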

2.3. Object Detection

In recent years, the rapid development of deep learning has significantly improved object detection. Existing methods are generally divided into one-stage and two-stage methods. Two-stage methods (Girshick et al., 2014; Ren et al., 2015; He et al., 2017; Sun et al., 2021; Cheng et al., 2022) first extract region-of-interest proposals and then classify them with a neural network. By contrast, one-stage methods (Redmon and Farhadi, 2018; Kim and Lee, 2020; Feng et al., 2021; Xie et al., 2022) directly predict class labels and bounding boxes.

Current detectors achieve good performance on in-air images. However, the scene blur and light imbalance of underwater images greatly reduce their effectiveness. Existing studies (Liu et al., 2021, 2022a; Ma et al., 2022; Liu et al., 2023b, a) have used low-level visual enhancement modules as preprocessing steps for detection tasks. However, existing underwater enhancement networks often contribute little to the detector, because they ignore position and semantic information while restoring the contrast of the underwater image.

3. The Proposed Method

Existing deep learning based underwater image enhancement methods rely on abundant training data for robust and effective performance. However, ignoring physical priors in the learning process introduces noise interference and undesirable artifacts, further degrading visibility and undermining subsequent detection performance. To overcome these limitations, we propose a detection-driven heuristic normalizing flow network for underwater image enhancement. Specifically, to reduce the dependence on training data, we develop a normalizing flow based reversible translation framework to achieve domain translation between degraded underwater images and their clear counterparts. Heuristic model constraints that incorporate gradient and depth information are embedded into the reversible procedure, making the reversible results more accurate and reliable. Moreover, to improve the applicability of the enhanced results to the subsequent object detection task, we propose a Detection Perception Module that feeds back the performance of the enhanced results in detection, allowing the enhancement module to learn more detection-favorable implicit features. Each module is described in detail below.

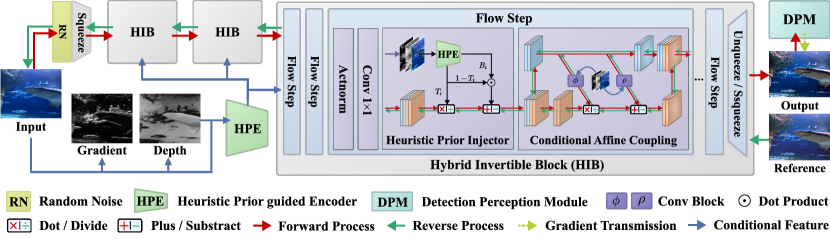

3.1. Hybrid Invertible Block

The Hybrid Invertible Block (HIB) is the main component of the heuristic normalizing flow. It integrates the heuristic prior into the data-driven network to build an invertible mapping between the underwater image and its clear counterpart. As illustrated in Fig. 2, underwater image restoration and underwater image degradation are combined in an invertible manner during training. In the forward process, the underwater image is squeezed and passed through multiple invertible blocks to generate the enhanced image. In the reverse process, clear images are passed inversely through the same invertible blocks, with shared parameters, to generate degraded images.

The Hybrid Invertible Block consists of the following parts: Actnorm (Kingma and Dhariwal, 2018), invertible 1×1 convolution (Kingma and Dhariwal, 2018), Heuristic Prior Injector, Conditional Affine Coupling (Li et al., 2021b) and the squeeze operation.

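Actnorm (Kingma and Dhariwal, 2018) is a normalization layer that applies a per-channel scale and bias, initialized so that the output activations have zero mean and unit variance. The invertible 1×1 convolution (Kingma and Dhariwal, 2018) multiplies an input tensor of size $h \times w \times c$ by a $c \times c$ weight matrix $\mathbf{W}$, which makes both the Jacobian log-determinant, $h \cdot w \cdot \log\lvert\det \mathbf{W}\rvert$, and the network inversion easy to compute.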
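A minimal NumPy sketch of the two Glow layers described above (Kingma and Dhariwal, 2018) follows. It is illustrative only, not the WaterFlow implementation: tensors are assumed to be laid out as (N, C, H, W), ActNorm uses data-dependent initialization on its first call, and the 1×1 convolution is initialized with a random orthogonal matrix.

```python
import numpy as np


class ActNorm:
    """Glow-style per-channel affine layer with data-dependent initialisation."""

    def __init__(self):
        self.scale = None
        self.bias = None

    def forward(self, x):
        # x: (N, C, H, W). On the first call, pick bias/scale so that this
        # batch comes out with zero mean and unit variance per channel.
        if self.scale is None:
            self.bias = -x.mean(axis=(0, 2, 3), keepdims=True)
            self.scale = 1.0 / (x.std(axis=(0, 2, 3), keepdims=True) + 1e-6)
        y = (x + self.bias) * self.scale
        h, w = x.shape[2:]
        logdet = h * w * np.sum(np.log(np.abs(self.scale)))  # per-sample log|det J|
        return y, logdet

    def inverse(self, y):
        return y / self.scale - self.bias


class InvConv1x1:
    """Invertible 1x1 convolution: the same C x C matrix W applied at every pixel."""

    def __init__(self, channels, seed=0):
        rng = np.random.default_rng(seed)
        # Glow initialises W as a random rotation (orthogonal) matrix.
        self.weight, _ = np.linalg.qr(rng.standard_normal((channels, channels)))

    def forward(self, x):
        y = np.einsum("ij,njhw->nihw", self.weight, x)
        h, w = x.shape[2:]
        logdet = h * w * np.linalg.slogdet(self.weight)[1]  # h * w * log|det W|
        return y, logdet

    def inverse(self, y):
        return np.einsum("ij,njhw->nihw", np.linalg.inv(self.weight), y)


# Usage: a random 12-channel feature map passes through both layers and back.
x = np.random.default_rng(1).standard_normal((2, 12, 8, 8)) * 3.0 + 5.0
act, conv = ActNorm(), InvConv1x1(12)
y, logdet_act = act.forward(x)
z, logdet_conv = conv.forward(y)
x_rec = act.inverse(conv.inverse(z))
```

Because the same scale, bias and matrix are reused in `inverse`, the reverse pass reconstructs the input exactly, which is the property that forward/reverse training with shared parameters relies on.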

Heuristic Prior Injector was designed to better characterize the underwater imaging model by embedding a heuristic prior, which will be introduced in detail in section 3.2.

Conditional Affine Coupling (Li et al., 2021b) realizes a reversible transformation between input and output that is conditioned on auxiliary information. We modify this formulation to adapt it to the underwater image enhancement framework, as described below:

| (1) | ||||

In Eq. (1), features are merged by a concatenation operation, the remaining operators denote convolutional networks, the index denotes the flow step, and the heuristic terms are explained in detail in Section 3.2. The squeeze operation converts an image of size $H \times W \times C$ into a tensor of size $\frac{H}{2} \times \frac{W}{2} \times 4C$, and unsqueeze is its inverse. By combining these operations, the proposed architecture attains an accurate mapping between the underwater image and its clear counterpart while maintaining reversibility.
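Since Eq. (1) did not survive extraction, the sketch below shows the squeeze/unsqueeze pair and a generic conditional affine coupling step rather than the authors' exact formulation. `toy_net` is a hypothetical stand-in for the convolutional sub-networks, and `cond` stands for whatever conditioning features are supplied; the point is that the transformed half can be inverted because the untouched half and the condition regenerate the same scale and shift.

```python
import numpy as np


def squeeze(x):
    """(N, C, H, W) -> (N, 4C, H/2, W/2): fold every 2x2 spatial patch into channels."""
    n, c, h, w = x.shape
    x = x.reshape(n, c, h // 2, 2, w // 2, 2)
    return x.transpose(0, 1, 3, 5, 2, 4).reshape(n, 4 * c, h // 2, w // 2)


def unsqueeze(x):
    """Exact inverse of squeeze: (N, 4C, H/2, W/2) -> (N, C, H, W)."""
    n, c4, h2, w2 = x.shape
    x = x.reshape(n, c4 // 4, 2, 2, h2, w2)
    return x.transpose(0, 1, 4, 2, 5, 3).reshape(n, c4 // 4, 2 * h2, 2 * w2)


def toy_net(inp, out_channels, seed):
    """Hypothetical stand-in for a small CNN: fixed random 1x1 linear map + tanh."""
    w = np.random.default_rng(seed).standard_normal((out_channels, inp.shape[1])) * 0.1
    return np.tanh(np.einsum("ij,njhw->nihw", w, inp))


def coupling_forward(x, cond):
    """Keep x_a, transform x_b with a scale/shift predicted from (x_a, cond)."""
    xa, xb = np.split(x, 2, axis=1)
    h = np.concatenate([xa, cond], axis=1)
    log_s = toy_net(h, xb.shape[1], seed=1)
    shift = toy_net(h, xb.shape[1], seed=2)
    yb = xb * np.exp(log_s) + shift
    logdet = log_s.sum(axis=(1, 2, 3))  # log|det J| is the sum of the log-scales
    return np.concatenate([xa, yb], axis=1), logdet


def coupling_inverse(y, cond):
    """x_a = y_a is unchanged, so the same scale/shift can be recomputed and undone."""
    ya, yb = np.split(y, 2, axis=1)
    h = np.concatenate([ya, cond], axis=1)
    log_s = toy_net(h, yb.shape[1], seed=1)
    shift = toy_net(h, yb.shape[1], seed=2)
    return np.concatenate([ya, (yb - shift) * np.exp(-log_s)], axis=1)


# Usage: squeeze an RGB image, couple it with hypothetical conditional features, invert.
rng = np.random.default_rng(0)
img = rng.random((1, 3, 8, 8))
x = squeeze(img)                  # shape (1, 12, 4, 4)
cond = rng.random((1, 6, 4, 4))   # hypothetical conditioning features
y, logdet = coupling_forward(x, cond)
rec = unsqueeze(coupling_inverse(y, cond))
```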

3.2. Heuristic Prior Injector

The Heuristic Prior guided Injector (HPI) is designed to incorporate the estimated physical imaging parameters into the invertible block. According to the underwater image formation model (Drews et al., 2013; Chiang and Chen, 2011), the enhanced image can be represented as:

$$J_c(x) = \frac{I_c(x) - A_c\,\big(1 - t_c(x)\big)}{t_c(x)}, \qquad c \in \{r, g, b\} \qquad (2)$$

where $I_c(x)$ and $J_c(x)$ denote the underwater image captured by the sensor and the enhanced image at pixel $x$, respectively, and $c$ indexes the color channels. $A_c$ and $t_c(x)$ denote the ambient light and the medium transmission coefficient. According to the Beer-Lambert law (Bouguer, 1729), $t_c(x)$ can also be written as $t_c(x) = e^{-\beta_c d(x)}$, where $d(x)$ is the scene depth and $\beta_c$ is the attenuation coefficient.
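To make the formation model concrete, the sketch below degrades a clear image with $I_c = J_c t_c + A_c(1 - t_c)$ and then inverts it with Eq. (2). The attenuation coefficients, ambient light and the `t_min` clip are hypothetical values chosen for illustration. The last function uses the algebraically equivalent form $J_c = (I_c - A_c)\,t_c^{-1} + A_c$, which replaces the division by a multiplication with the reciprocal transmission; this is one way to read why the HPE estimates a reciprocal term, not a statement of the paper's Eq. (4).

```python
import numpy as np


def transmission(depth, beta):
    """Beer-Lambert law: t_c(x) = exp(-beta_c * d(x)). depth: (H, W), beta: (3,) -> (H, W, 3)."""
    return np.exp(-depth[..., None] * beta)


def degrade(J, A, t):
    """Forward formation model: I_c = J_c * t_c + A_c * (1 - t_c)."""
    return J * t + A * (1.0 - t)


def restore(I, A, t, t_min=0.1):
    """Eq. (2): J_c = (I_c - A_c * (1 - t_c)) / t_c; t is clipped to avoid amplifying noise."""
    t = np.clip(t, t_min, 1.0)
    return (I - A * (1.0 - t)) / t


def restore_reciprocal(I, A, T):
    """Same restoration written with T = 1 / t: J_c = (I_c - A_c) * T_c + A_c (no division)."""
    return (I - A) * T + A


# Usage with illustrative (not calibrated) coefficients: red attenuates fastest.
rng = np.random.default_rng(0)
J = rng.random((4, 4, 3))                    # clear scene radiance in [0, 1]
depth = rng.uniform(0.5, 3.0, size=(4, 4))   # scene depth, arbitrary units
beta = np.array([0.6, 0.15, 0.1])            # hypothetical attenuation for R, G, B
A = np.array([0.1, 0.6, 0.7])                # hypothetical blue-green ambient light
t = transmission(depth, beta)
I = degrade(J, A, t)
J_hat = restore(I, A, t)
J_hat_rec = restore_reciprocal(I, A, 1.0 / t)
```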

The gradient map provides information about the edges and contrast in the image, which can be used to estimate the scattering of light by the atmosphere and by fine impurities in the water. The depth map, in turn, provides information about the attenuation of light along its propagation path, which is a key factor in the Beer-Lambert law. Inspired by (Peng et al., 2018), we first estimate the depth map and the gradient map from the underwater image as auxiliary information.

Then, we concatenate them with the underwater image as input to the Heuristic Prior guided Encoder (HPE), which progressively estimates the imaging parameters by extracting depth, gradient and color information from the underwater image. The equation can be written as:

| (3) |

where the underwater image is concatenated with its depth and gradient maps, and the encoder output is split into the ambient light and a transmission term that is the reciprocal of the medium transmission coefficient. The detailed architecture of the HPE is given in the appendix due to limited space. After estimating these two parameters, we insert them into the HIB as heuristic information. The operation can be formulated as:

| (4) | ||||

where the operation acts on the intermediate feature of each flow step and $\odot$ denotes the element-wise (dot) product. By introducing a heuristic prior based on the underwater image formation model into the invertible blocks, the proposed method generates enhanced images that are better suited to realistic underwater scenes.
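The HPE inputs and outputs described in this section can be sketched as follows. The paper does not specify how the depth and gradient maps are computed or how the encoder output is parameterized, so every choice here is an assumption: the gradient map is the finite-difference gradient magnitude of the BT.601 luma, the depth map is a placeholder array, the encoder itself is replaced by random numbers, and the hypothetical `split_params` maps a 6-channel output to a per-channel ambient light `A` in (0, 1) and a reciprocal transmission `T` greater than 1.

```python
import numpy as np


def gradient_map(img):
    """Gradient magnitude of the luma of an (H, W, 3) image (one possible gradient prior)."""
    gray = img @ np.array([0.299, 0.587, 0.114])  # ITU-R BT.601 luma weights
    gy, gx = np.gradient(gray)                     # central differences along rows, columns
    return np.sqrt(gx ** 2 + gy ** 2)


def hpe_input(img, depth):
    """Concatenate colour (3), depth (1) and gradient (1) channels -> (H, W, 5)."""
    return np.concatenate([img, depth[..., None], gradient_map(img)[..., None]], axis=-1)


def split_params(encoder_out):
    """Split a hypothetical 6-channel encoder output into ambient light A and T = 1 / t."""
    a_raw, t_raw = np.split(encoder_out, 2, axis=-1)      # (H, W, 3) each
    A = 1.0 / (1.0 + np.exp(-a_raw.mean(axis=(0, 1))))   # sigmoid: one value in (0, 1) per channel
    T = 1.0 + np.log1p(np.exp(t_raw))                     # 1 + softplus > 1, so t = 1 / T is in (0, 1)
    return A, T


# Usage: a horizontal ramp has a constant gradient of 0.1 per pixel.
H, W = 6, 8
ramp = np.broadcast_to(0.1 * np.arange(W)[None, :, None], (H, W, 3)).copy()
depth = np.random.default_rng(0).uniform(0.5, 3.0, size=(H, W))  # placeholder depth map
features = hpe_input(ramp, depth)
fake_out = np.random.default_rng(1).standard_normal((H, W, 6))   # stands in for the encoder
A, T = split_params(fake_out)
```

Keeping `T` above 1 guarantees that the implied transmission stays in (0, 1), so the multiply-add restoration never divides by a vanishing transmission.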

3.3. Detection Perception Module

Object detection aims to acquire the location and category of each object. Hence, detection performance should improve if the enhanced image retains richer semantic features and object localization information. As the perceptual loss (Johnson et al., 2016) shows, deep neural networks designed for high-level vision tasks retain semantic information and can describe latent features. Therefore, to make the enhanced images more effective for underwater object detection, we propose a Detection Perception Module (DPM) that helps the enhancement module preserve and extract detection-oriented perceptual features.

Training proceeds in stages. We first use the enhancement module as the data preprocessing stage of underwater object detection: the enhanced images are fed directly into the DPM, which is trained separately for detection. We then jointly optimize the pre-trained DPM and the enhancement module on the respective benchmarks. Specifically, the underwater image is first passed through the enhancement module to obtain enhanced data, which is then fed into the detection module; the high-level implicit perceptual features extracted by the detection network are transmitted back to the enhancement module, guiding the visual enhancement toward results that are more conducive to subsequent object detection.

3.4. Loss Function

Contrastive learning has been applied to multiple low-level vision tasks (Liu et al., 2022b, c, 2023c; Jiang et al., 2023a). We introduce contrastive learning to improve the quality of enhanced images by pulling them closer to in-air images and pushing them away from underwater images. We designate the reference image as the positive sample and the original underwater image as the negative sample. VGG19 (Simonyan and Zisserman, 2014) is used to extract the latent features of the enhanced images. The contrastive loss is expressed as follows:

| (5) |

where the features are taken from several layers of VGG19 (Simonyan and Zisserman, 2014), each weighted by a layer-specific coefficient, and the output of the proposed enhancement network, computed from the underwater image, is compared with the reference image (positive sample) and the underwater image (negative sample).
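The exact form of Eq. (5) was lost in extraction. A common form of this kind of contrastive regularization, popularized for image dehazing by AECR-Net, is the ratio $\sum_i w_i \lVert \phi_i(J) - \phi_i(f(I)) \rVert_1 / \lVert \phi_i(I) - \phi_i(f(I)) \rVert_1$, and the sketch below implements that form under the assumption that Eq. (5) matches it. To stay self-contained, VGG19 layers are replaced by hypothetical average-pooling feature extractors, and the layer weights are placeholders.

```python
import numpy as np


def l1(a, b):
    return float(np.mean(np.abs(a - b)))


def avg_pool(k):
    """Hypothetical stand-in for one VGG19 layer: k x k average pooling of an (H, W, C) array."""
    def phi(x):
        h, w, c = x.shape
        x = x[: h - h % k, : w - w % k]
        return x.reshape(h // k, k, w // k, k, c).mean(axis=(1, 3))
    return phi


def contrastive_loss(out, ref, under, extractors, weights, eps=1e-7):
    """sum_i w_i * L1(phi_i(ref), phi_i(out)) / L1(phi_i(under), phi_i(out)).

    The numerator pulls the output towards the reference (positive sample);
    the denominator pushes it away from the underwater input (negative sample).
    """
    loss = 0.0
    for phi, w in zip(extractors, weights):
        f_out = phi(out)
        loss += w * l1(phi(ref), f_out) / (l1(phi(under), f_out) + eps)
    return loss


# Usage with toy data: a perfect output scores 0, copying the input scores very high.
rng = np.random.default_rng(0)
ref = rng.random((16, 16, 3))
under = ref * np.array([0.3, 0.8, 0.9])  # crude colour cast as the "underwater" image
extractors = [avg_pool(1), avg_pool(2), avg_pool(4)]
weights = [1 / 32, 1 / 16, 1 / 8]         # hypothetical per-layer weights
perfect = contrastive_loss(ref, ref, under, extractors, weights)
copy_input = contrastive_loss(under, ref, under, extractors, weights)
```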

We additionally incorporate the style loss (Deng et al., 2022) to make the generated output closer to the style pattern of the reference image. The definition of style loss function is described as follows:

| (6) | ||||

where the two statistics are the mean and variance of the image features, and the sum runs over the chosen number of layers.
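A sketch of a mean/variance style loss in the spirit of Eq. (6), whose exact form was lost in extraction, is given below. It compares channel-wise statistics of features from the output and the reference over several layers. The feature extractors are hypothetical stand-ins, and the spread statistic is taken as the standard deviation (the square root of the variance), as is common in AdaIN-style losses; both are assumptions.

```python
import numpy as np


def channel_stats(feat, eps=1e-5):
    """Channel-wise mean and standard deviation of an (H, W, C) feature map."""
    mu = feat.mean(axis=(0, 1))
    sigma = np.sqrt(feat.var(axis=(0, 1)) + eps)
    return mu, sigma


def style_loss(out, ref, extractors):
    """Sum over layers of ||mu(out) - mu(ref)||_2 + ||sigma(out) - sigma(ref)||_2."""
    loss = 0.0
    for phi in extractors:
        mu_o, sd_o = channel_stats(phi(out))
        mu_r, sd_r = channel_stats(phi(ref))
        loss += np.linalg.norm(mu_o - mu_r) + np.linalg.norm(sd_o - sd_r)
    return float(loss)


# Hypothetical "layers": the raw image and a 2x-subsampled copy.
extractors = [lambda x: x, lambda x: x[::2, ::2]]
ref = np.random.default_rng(0).random((8, 8, 3))
same = style_loss(ref, ref, extractors)
shifted = style_loss(ref + 0.2, ref, extractors)  # only the channel means change
```

A uniform brightness shift of 0.2 changes only the means, so each layer contributes $0.2\sqrt{3}$ and the spread term stays at zero.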

The detection-driven loss combines a localization loss and a classification loss, as follows:

| (7) |

where the classification loss minimizes the discrepancy between the predicted and ground-truth categories, and the localization loss reduces the position difference between the predicted and ground-truth boxes. We employ the Focal loss (Lin et al., 2017) and the GIoU loss (Rezatofighi et al., 2019) as the classification loss and the localization loss, respectively.
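The two detection terms named above can be sketched in plain Python for single boxes and single binary predictions. Boxes are assumed to be (x1, y1, x2, y2); α = 0.25 and γ = 2 are the defaults suggested by Lin et al. (2017), not values reported for WaterFlow, and how Eq. (7) weights the two terms is not recoverable here.

```python
import math


def box_area(b):
    """Area of an (x1, y1, x2, y2) box; empty boxes have zero area."""
    return max(0.0, b[2] - b[0]) * max(0.0, b[3] - b[1])


def giou_loss(a, b):
    """Localization loss 1 - GIoU(a, b) (Rezatofighi et al., 2019) for axis-aligned boxes."""
    inter = box_area((max(a[0], b[0]), max(a[1], b[1]), min(a[2], b[2]), min(a[3], b[3])))
    union = box_area(a) + box_area(b) - inter
    hull = box_area((min(a[0], b[0]), min(a[1], b[1]), max(a[2], b[2]), max(a[3], b[3])))
    giou = inter / union - (hull - union) / hull
    return 1.0 - giou


def focal_loss(p, y, alpha=0.25, gamma=2.0, eps=1e-12):
    """Binary focal loss (Lin et al., 2017): -alpha_t * (1 - p_t)^gamma * log(p_t)."""
    p_t = p if y == 1 else 1.0 - p
    alpha_t = alpha if y == 1 else 1.0 - alpha
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(max(p_t, eps))


# Usage: identical boxes give zero localization loss; disjoint boxes give more than 1.
same_box = giou_loss((0, 0, 1, 1), (0, 0, 1, 1))
disjoint = giou_loss((0, 0, 1, 1), (2, 0, 3, 1))  # IoU = 0, union = 2, hull = 3
easy, hard = focal_loss(0.9, 1), focal_loss(0.1, 1)
```

Unlike plain IoU, GIoU still yields a useful signal for non-overlapping boxes through the hull term, and the focal factor $(1 - p_t)^\gamma$ down-weights easy, confidently classified examples.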

Incorporating the reverse mapping process can impose a regularizing constraint on the degraded image, thereby improving the forward mapping (Guo et al., 2020). Therefore, an L1 loss is used as a bilateral constraint that pulls the outputs of the forward and reverse processes toward the reference image and the underwater image, respectively. The formula can be described as:

| (8) |

where the reverse term is obtained by applying the inverse transformation of the enhancement network to the reference image. The total training loss is therefore expressed as follows:

| (9) |
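A sketch of the bilateral L1 constraint and of a weighted total loss follows. Writing the forward mapping as f and its reverse as f⁻¹ is our notation, the dictionary keys are hypothetical labels for the contrastive, style, detection and bilateral terms described in this section, and the loss values and weights are placeholders, not the paper's settings.

```python
import numpy as np


def bilateral_l1(forward_out, reference, reverse_out, underwater):
    """||f(I) - J_ref||_1 + ||f^-1(J_ref) - I||_1, each averaged over pixels."""
    return float(np.mean(np.abs(forward_out - reference))
                 + np.mean(np.abs(reverse_out - underwater)))


def total_loss(terms, weights):
    """Weighted sum of named loss terms; the weight values are placeholders."""
    return sum(weights[name] * value for name, value in terms.items())


# Usage with made-up loss values and placeholder weights.
img = np.zeros((4, 4, 3))
bi = bilateral_l1(img + 0.1, img, img, img)  # only the forward branch is off, by 0.1
terms = {"con": 0.5, "style": 0.2, "det": 1.0, "bi": bi}
weights = {"con": 1.0, "style": 1.0, "det": 1.0, "bi": 1.0}
loss = total_loss(terms, weights)
```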

[Figure panels: Input, Water-Net, DLIFM, Ucolor, TOPAL, TACL, SemiUIR, Ours]

[Figure panels: Ground Truth, Water-Net, DLIFM, Ucolor, TOPAL, TACL, SemiUIR, Ours]

| Method | UIEBD UCIQE | UIEBD UIQM | UIEBD UICM | UIEBD PSNR (dB) | UIEBD SSIM | EUVP UCIQE | EUVP UIQM | EUVP UICM | U45 UCIQE | U45 UIQM | U45 UICM | UCCS UCIQE | UCCS UIQM | UCCS UICM |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Water-Net | 0.5856 | 1.8681 | -37.9812 | 18.8156 | 0.8257 | 0.5836 | 4.4419 | -17.2934 | 0.5680 | 4.3612 | -23.7057 | 0.5559 | 3.5007 | -14.0720 |
| DLIFM | 0.6095 | 1.9553 | -38.0444 | 20.0894 | 0.8565 | 0.6205 | 4.1051 | -26.4611 | 0.5880 | 4.2763 | -25.4130 | 0.5200 | 2.9137 | -25.6534 |
| Ucolor | 0.5542 | 1.6799 | -40.9628 | 19.7202 | 0.8273 | 0.5846 | 4.3043 | -19.4697 | 0.5641 | 4.3708 | -21.6621 | 0.5201 | 3.4145 | -34.9304 |
| TOPAL | 0.5646 | 2.2013 | -48.1846 | 19.1631 | 0.8043 | 0.6010 | 4.2689 | -21.6535 | 0.5524 | 3.9625 | -32.6012 | 0.4801 | 3.7760 | -23.3429 |
| TACL | 0.6128 | 2.2848 | -39.8192 | 21.4864 | 0.8398 | 0.5842 | 3.6547 | -42.8193 | 0.6223 | 4.4013 | -20.0128 | 0.5935 | 3.6249 | -18.3285 |
| SemiUIR | 0.6202 | 1.9916 | -40.0554 | 21.6818 | 0.8753 | 0.6175 | 4.4360 | -15.3805 | 0.6110 | 4.5201 | -18.7781 | 0.5538 | 3.7847 | -5.0835 |
| Ours | 0.6157 | 2.5968 | -35.1569 | 21.7420 | 0.8584 | 0.6397 | 4.4400 | -12.6861 | 0.6229 | 4.4129 | -13.6104 | 0.5569 | 3.9842 | -4.1614 |

[Figure panels: Input, three "w/o" loss-ablation variants, Ours]

[Figure panels: Input, w/o Color, w/o Grad, w/o Depth, Ours]

[Figure panels: Input and results with 1, 2, 3, 4 and 5 Hybrid Invertible Blocks]

4. Experiments

In this section, we evaluate the effectiveness of the proposed method through qualitative and quantitative comparisons. Specifically, the widely used UIEBD (Li et al., 2019a), EUVP (Islam et al., 2020), U45 (Li et al., 2019b) and UCCS (Liu et al., 2020) datasets are used to evaluate WaterFlow for underwater image enhancement. We compare it with six representative recent methods: Water-Net (Li et al., 2019a), DLIFM (Chen et al., 2021), Ucolor (Li et al., 2021a), TOPAL (Jiang et al., 2022), TACL (Liu et al., 2022b) and SemiUIR (Huang et al., 2023). Both subjective and objective results are analyzed. Underwater Color Image Quality Evaluation (UCIQE) (Yang and Sowmya, 2015), Underwater Image Quality Measure (UIQM) (Panetta et al., 2015) and Underwater Image Colorfulness Measure (UICM) (Panetta et al., 2015) are used as no-reference metrics; higher UCIQE, UIQM or UICM scores indicate better human visual perception. Peak Signal-to-Noise Ratio (PSNR) and Structural Similarity (SSIM) (Wang et al., 2004) are adopted as full-reference metrics; higher values indicate greater similarity between the result and the reference image in both content and structure. Furthermore, the UCCS (Liu et al., 2020) and Aquarium (Prytula, 2020) datasets are used to evaluate how well the proposed method supports subsequent detection tasks. The commonly used Average Precision (AP) is adopted as the detection metric, where a higher AP indicates better underwater object detection performance.
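For reference, PSNR as used in the full-reference evaluation can be computed as below. The sketch assumes images scaled to [0, 1] (pass `max_val=255` for 8-bit data); the no-reference metrics UCIQE, UIQM and UICM involve more elaborate color and contrast statistics and are not reproduced here.

```python
import numpy as np


def psnr(out, ref, max_val=1.0):
    """Peak signal-to-noise ratio in dB: 10 * log10(MAX^2 / MSE)."""
    out = np.asarray(out, dtype=np.float64)
    ref = np.asarray(ref, dtype=np.float64)
    mse = np.mean((out - ref) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_val ** 2 / mse))


# Usage: a uniform error of 0.1 gives MSE = 0.01, i.e. about 20 dB.
ref = np.zeros((4, 4, 3))
score = psnr(ref + 0.1, ref)
```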

4.1. Implementation Details

Our network is implemented in PyTorch and trained on an NVIDIA RTX 3090 GPU. We first randomly crop the UIEBD (Li et al., 2019a) dataset to the size of and use the cropped dataset as the training set for underwater image enhancement. The UCCS (Liu et al., 2020) and Aquarium (Prytula, 2020) datasets are used as the training sets for underwater object detection. We first train the heuristic normalizing flow and the Detection Perception Module separately for iterations. Then, we jointly train the pre-trained modules for iterations. During training, we use the Adam optimizer with a uniform learning rate of 1e-6 and a batch size of 2. , , , are set to , , , .

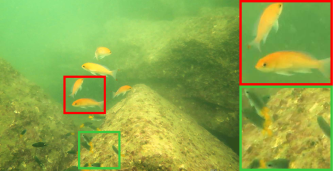

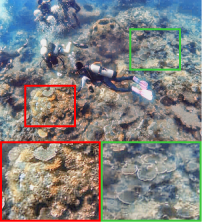

4.2. Qualitative Results

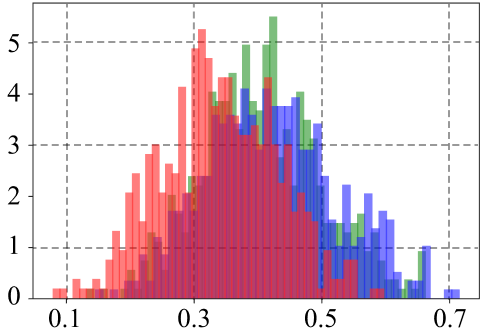

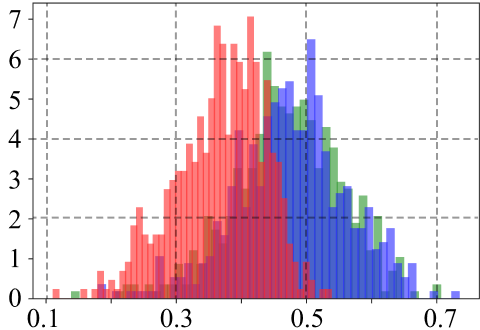

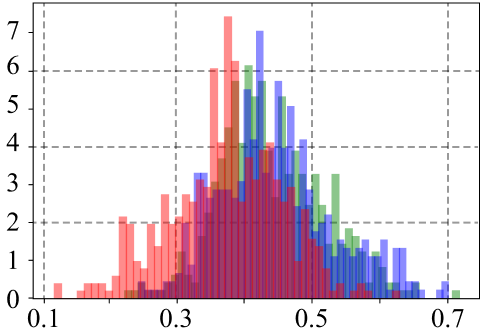

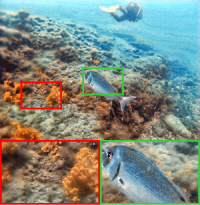

For visual comparison, Fig. 3 shows the enhancement results of all methods on the UIEBD dataset. Water-Net and Ucolor not only fail to recover the original reflection but also suffer from low contrast and saturation. DLIFM, TOPAL, TACL and SemiUIR have limited enhancement effects and still leave conspicuous color deviation in close-range scenes. Compared with these methods, the proposed method compensates for light degradation and rectifies the unexpected coloration. In addition, we show a pixel distribution similarity diagram for a particular region of the enhanced and reference images, in which the red and blue lines indicate the pixel distributions of the reference image and the enhanced image, respectively. The proposed method yields enhanced results closest to the reference image.

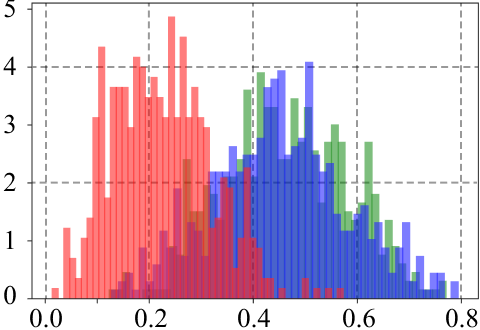

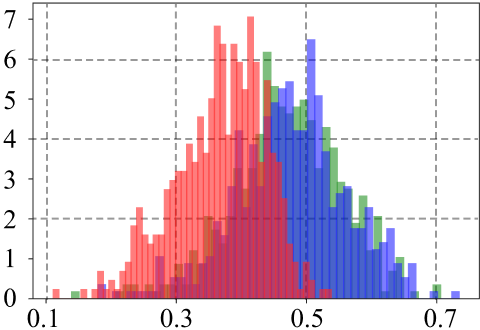

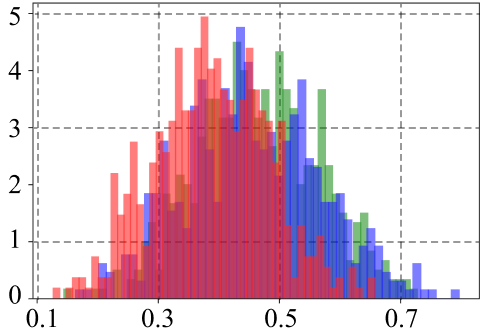

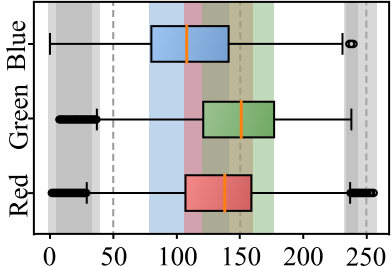

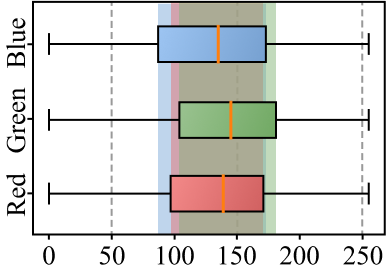

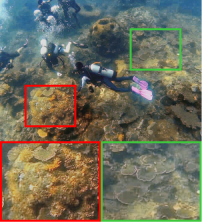

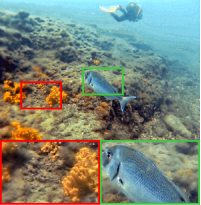

The comparison on the EUVP dataset is shown in Fig. 4. Ucolor and TOPAL introduce artifacts and unnatural colors. Water-Net, TACL and SemiUIR fail to alleviate the scattering of light underwater. Although DLIFM produces pleasing images, informative details are not recovered well. Compared with the other methods, the proposed method not only effectively corrects the color deviation but also restores the scene radiance and contrast of the image. Fig. 4 also shows the RGB color space distribution of the images obtained by the different methods. Overall, the proposed method more effectively compensates for the rapid attenuation of red wavelengths underwater, and its color space distribution most closely approximates that of in-air images.

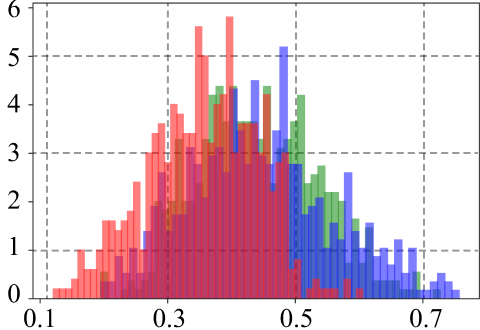

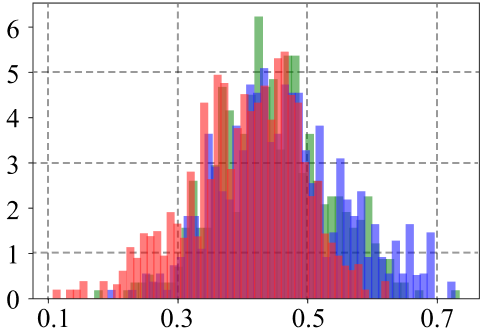

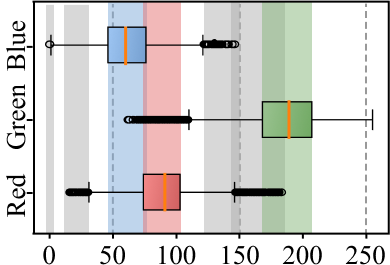

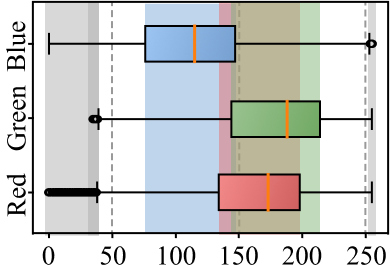

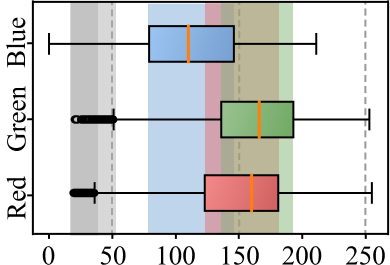

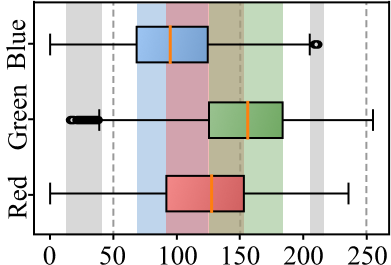

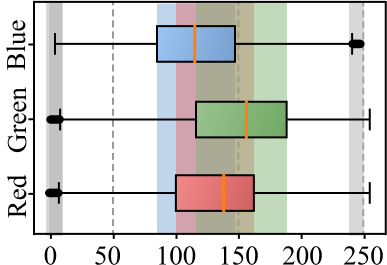

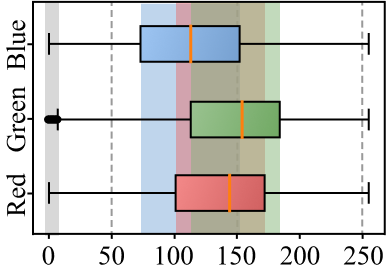

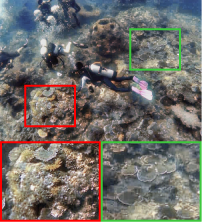

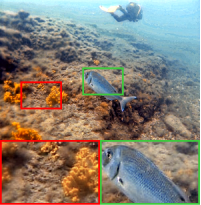

The qualitative comparison on the U45 dataset is shown in Fig. 5. In this acquisition environment, red and blue light clearly decay significantly more than green light. However, the compared methods show limited effectiveness in restoring the decayed blue light. A boxplot of the intensity distribution of the RGB color channels is placed below each image, with the horizontal axis representing pixel intensity. Semi-transparent rectangles illustrate the relationship between the color channels more clearly, and the gray rectangle marks the region where outliers appear. Compared with the proposed method, the other methods not only have a noticeably weaker ability to compensate for the attenuation of blue light but also introduce aberrant outliers, reducing the quality of the enhanced images.

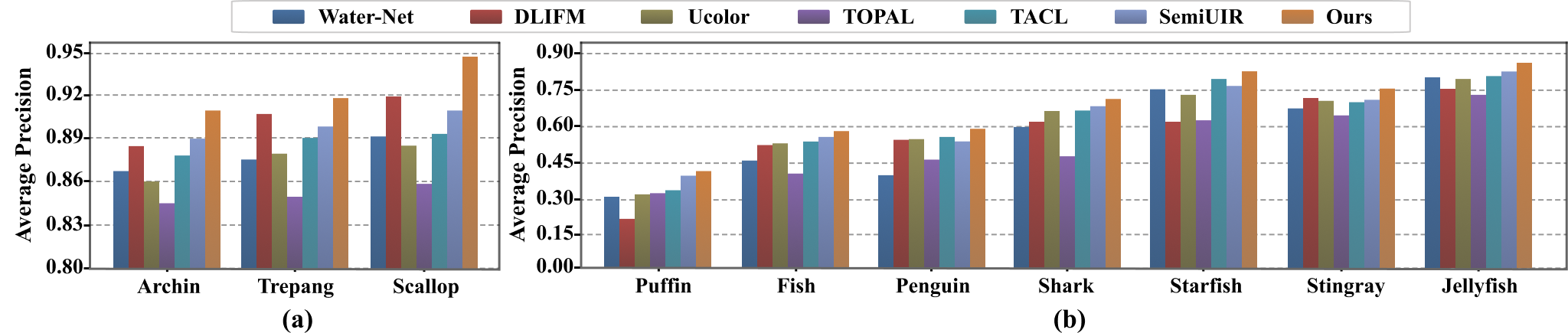

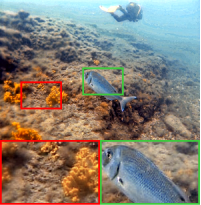

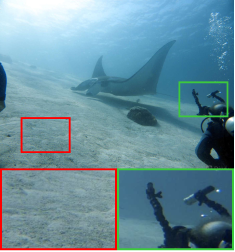

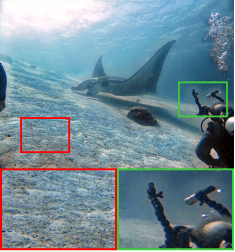

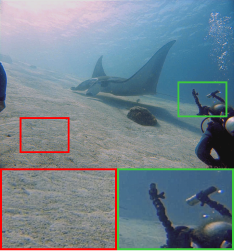

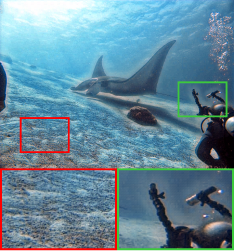

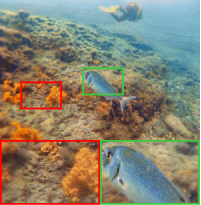

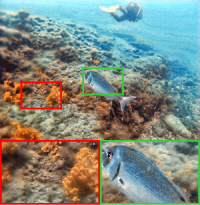

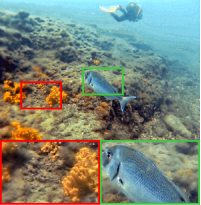

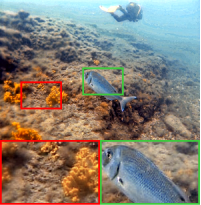

The visual comparison on the UCCS dataset is shown in Fig. 6. TOPAL fails to correct the color deviation of the image and suffers from low saturation. Water-Net, TACL and SemiUIR introduce unreasonable color artifacts. DLIFM and Ucolor ignore informative details, resulting in obscure reflections and low contrast. Compared with these methods, our method significantly restores the brightness and contrast of underwater scenes without introducing interference.

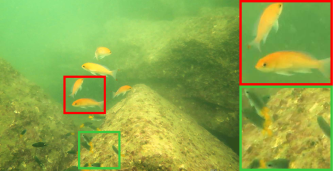

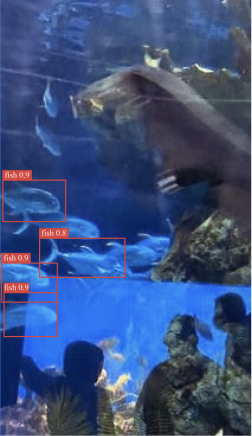

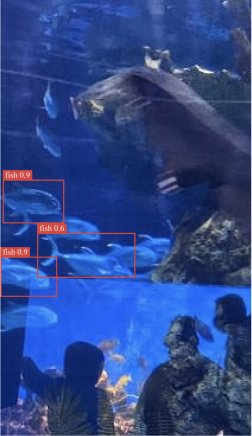

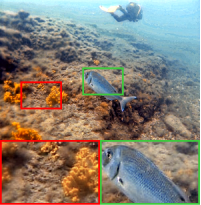

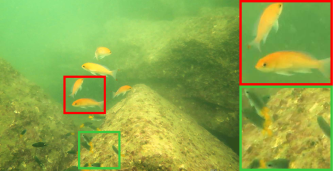

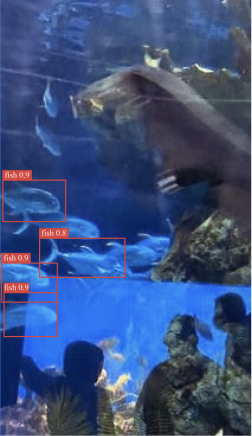

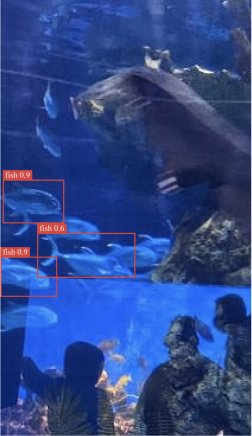

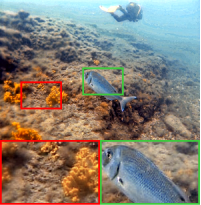

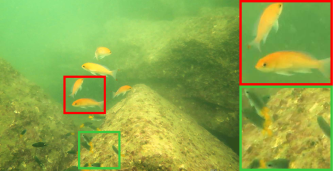

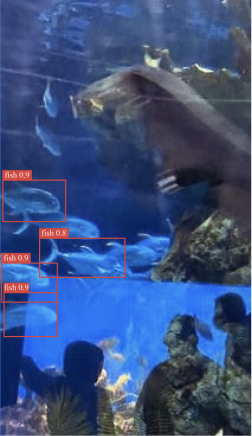

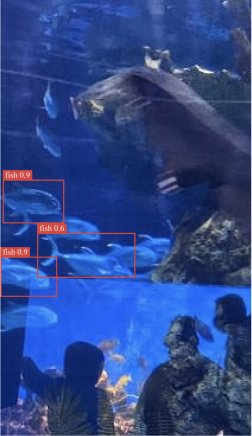

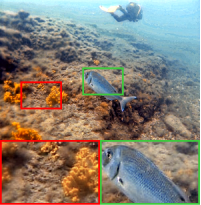

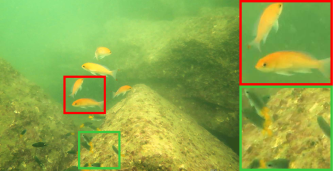

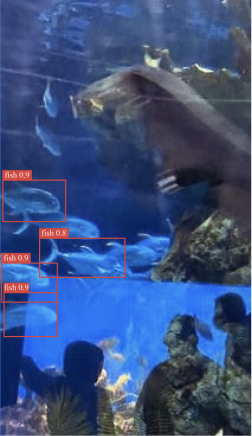

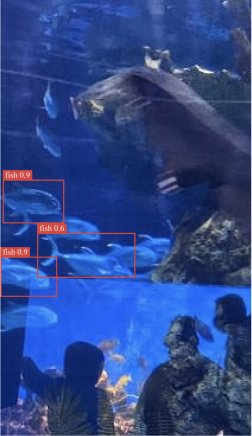

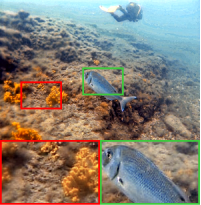

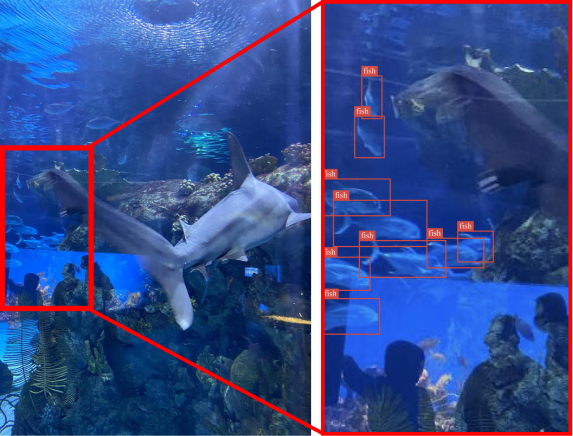

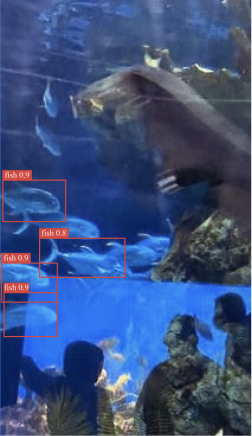

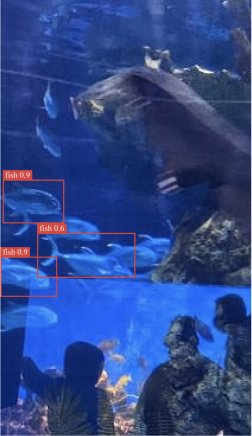

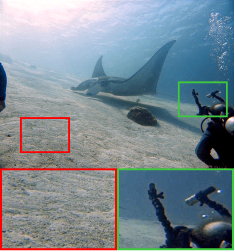

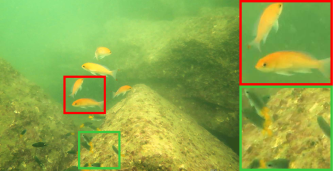

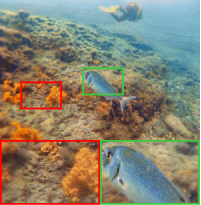

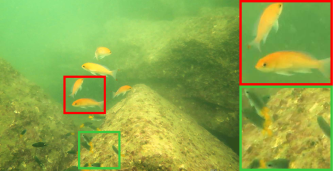

We use the same comparison methods to assess whether the proposed enhancement results implicitly contain more semantic features useful for subsequent detection tasks. The visual comparison on the UCCS dataset is shown in Fig. 7: the proposed method achieves higher detection confidence than the other methods and detects more trepangs and urchins. The qualitative comparison on the Aquarium dataset is shown in Fig. 8, which demonstrates the clear advantage of the proposed method in supporting subsequent detection tasks.

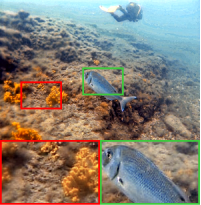

4.3. Quantitative Results

The quantitative results of representative underwater image enhancement methods are shown in Tab. 1. The proposed method outperforms all other methods in UICM on all four datasets and achieves competitive results in UCIQE and UIQM. However, as noted by (Berman et al., 2021; Huang et al., 2023), although both UCIQE and UIQM reflect the degree of restoration of underwater images, they are somewhat heuristic and have limited applicability for evaluating underwater image enhancement. We therefore also compare full-reference metrics on the UIEBD dataset, which provides reference images. The proposed method achieves the highest PSNR and is second only to SemiUIR on SSIM. However, as noted by (Li et al., 2021a), the reference images of UIEBD are produced by multiple enhancement methods, so some unreliable samples may affect the evaluation. Taking the no-reference and full-reference metrics together, our method performs favorably in underwater image enhancement, consistent with the qualitative results. The quantitative comparison for underwater object detection is shown in Fig. 9: the proposed method outperforms the other competitive methods in detection accuracy on both the UCCS and Aquarium datasets. This indicates that the proposed results not only achieve good visual enhancement but also contain more perceptual information that benefits subsequent detection tasks.

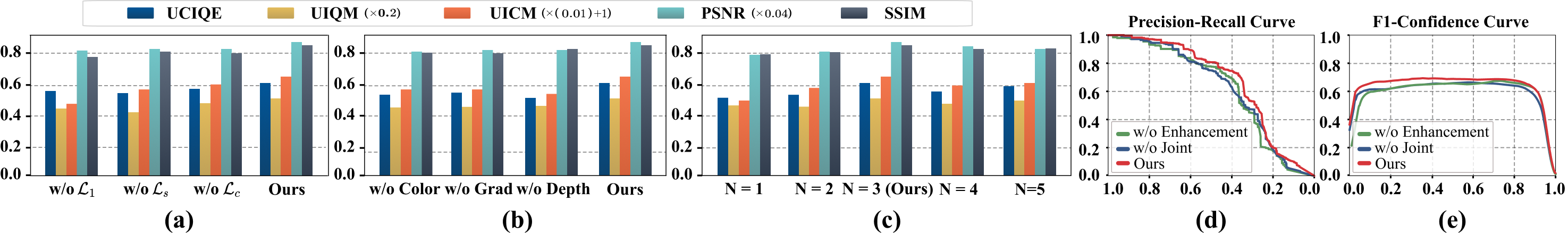

4.4. Ablation Study

4.4.1. Study on Loss Function

We examine the effectiveness of the different loss functions. The visual results are shown in Fig. 10. w/o and w/o make the images deviate from their intrinsic colors and introduce unpleasant artifacts. w/o still suffers from low contrast and clarity, although it improves the color balance of the image to a certain extent. Together with the quantitative comparison in (a) of Fig. 11, the full model achieves the best enhancement effect, which verifies the effectiveness of each loss function.

4.4.2. Study on Heuristic Prior guided Encoder

We conduct ablation experiments on the inputs of the HPE to verify the contribution of each input. The qualitative comparison is shown in Fig. 12. In the first example, unpleasant shadows are introduced when the color image or the depth map is not used as input, and the enhancement is clearly limited when the gradient map is not used. In the second example, the proposed method achieves the best enhancement, which not only restores the color of the ground but also significantly improves the clarity of the content in the red and green frames. The quantitative comparison in (b) of Fig. 11 shows that the proposed method achieves the best results on all benchmarks; removing any input noticeably degrades the enhanced image.

4.4.3. Study on Number of Hybrid Invertible Blocks

We conduct ablation experiments on the number of Hybrid Invertible Blocks. The visual comparison is illustrated in Fig. 13. When fewer than 3 blocks are used, the enhanced image suffers from local over-enhancement and introduces artifacts. The quantitative comparison is shown in (c) of Fig. 11. Considering the network parameters and computational cost, we use 3 blocks in our framework.

4.4.4. Study on Underwater Object Detection

To verify the effectiveness of the proposed method for underwater object detection, we first train the existing detection network directly on unenhanced underwater images, which measures the effect of enhanced data on detection. We also train the enhancement module and the Detection Perception Module without joint optimization, to test whether the DPM makes the enhanced data more conducive to subsequent detection tasks. The quantitative comparison is shown in (d) and (e) of Fig. 11, where w/o Enhancement and w/o Joint indicate, respectively, that the enhanced image is not used as a preprocessing stage and that the latent features are not transmitted from the DPM to the enhancement module. The proposed method achieves the highest detection performance, which demonstrates the effectiveness of the DPM.

5. Conclusion

In this paper, we propose WaterFlow, a detection-driven heuristic normalizing flow for underwater image enhancement. We first incorporate the underwater image restoration process into the normalizing flow to establish an invertible mapping between the degraded image and its clear counterpart through bilateral constraints. We then introduce the heuristic prior guided injector, which improves the representation ability of the enhancement network by progressively estimating the underwater imaging parameters. Finally, we introduce the detection perception module, which propagates high-level perceptual features into the enhancement model as gradient information to help generate detection-driven enhanced images. Extensive experiments on multiple benchmarks show that the proposed method not only achieves good visual enhancement but is also better suited to subsequent detection tasks.
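The heuristic prior rests on the physical relation between the degraded image, the ambient light, and the medium transmission. The exact formulation used by WaterFlow is not restated in this section, so the sketch below assumes the common simplified underwater formation model I = J·t + A·(1 − t), with per-channel transmission t = exp(−β·d) following Beer-Lambert attenuation (Bouguer, 1729). It only shows how, once A and t are known or estimated, the clear image J can be recovered in closed form. The function names, the clamp `t_min`, and all numeric values are illustrative assumptions, not the paper's implementation, which estimates these parameters progressively inside the network.

```python
import numpy as np


def transmission(depth, beta):
    """Per-channel medium transmission t = exp(-beta * d) (Beer-Lambert attenuation).

    depth: (H, W) scene distance; beta: (3,) attenuation coefficient per RGB channel.
    Returns t with shape (H, W, 3).
    """
    return np.exp(-depth[..., None] * np.asarray(beta, dtype=float))


def degrade(J, depth, beta, A):
    """Simplified underwater formation model: I = J * t + A * (1 - t)."""
    t = transmission(depth, beta)
    return J * t + np.asarray(A, dtype=float) * (1.0 - t)


def restore(I, t, A, t_min=0.1):
    """Closed-form inversion J = (I - A * (1 - t)) / t, given estimates of t and A.

    t is clamped from below so that near-zero transmission does not amplify noise.
    """
    t = np.maximum(t, t_min)
    A = np.asarray(A, dtype=float)
    return np.clip((I - A * (1.0 - t)) / t, 0.0, 1.0)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    J = rng.uniform(size=(4, 4, 3))   # clear image in [0, 1)
    depth = np.full((4, 4), 2.0)      # illustrative distance
    beta = [0.6, 0.15, 0.1]           # red attenuates fastest (illustrative)
    A = [0.1, 0.5, 0.6]               # bluish-green ambient light (illustrative)
    I = degrade(J, depth, beta, A)
    J_hat = restore(I, transmission(depth, beta), A)
    print("max restoration error:", np.abs(J_hat - J).max())
```

With the true parameters the inversion is exact; in practice A and t must be estimated, which is where a fixed hand-crafted estimate can fail and a learned, progressively refined estimate is meant to help.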

Acknowledgments

This work is partially supported by the National Key R&D Program of China (No. 2022YFA1004101) and the National Natural Science Foundation of China (No. U22B2052).

References


- Abdal et al. (2021) Rameen Abdal, Peihao Zhu, Niloy J Mitra, and Peter Wonka. 2021. StyleFlow: Attribute-conditioned exploration of StyleGAN-generated images using conditional continuous normalizing flows. ACM Transactions on Graphics (TOG) 40, 3 (2021), 1–21.

- An et al. (2021) Jie An, Siyu Huang, Yibing Song, Dejing Dou, Wei Liu, and Jiebo Luo. 2021. ArtFlow: Unbiased image style transfer via reversible neural flows. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 862–871.

- Berman et al. (2021) Dana Berman, Deborah Levy, Shai Avidan, and Tali Treibitz. 2021. Underwater Single Image Color Restoration Using Haze-Lines and a New Quantitative Dataset. IEEE Transactions on Pattern Analysis and Machine Intelligence 43, 8 (2021), 2822–2837. https://doi.org/10.1109/TPAMI.2020.2977624

- Bouguer (1729) Pierre Bouguer. 1729. Essai d’optique sur la gradation de la lumière. Claude Jombert.

- Chen et al. (2020) Long Chen, Zheheng Jiang, Lei Tong, Zhihua Liu, Aite Zhao, Qianni Zhang, Junyu Dong, and Huiyu Zhou. 2020. Perceptual underwater image enhancement with deep learning and physical priors. IEEE Transactions on Circuits and Systems for Video Technology 31, 8 (2020), 3078–3092.

- Chen et al. (2021) Xuelei Chen, Pin Zhang, Lingwei Quan, Chao Yi, and Cunyue Lu. 2021. Underwater image enhancement based on deep learning and image formation model. arXiv preprint arXiv:2101.00991 (2021).

- Cheng et al. (2022) Xuelian Cheng, Huan Xiong, Deng-Ping Fan, Yiran Zhong, Mehrtash Harandi, Tom Drummond, and Zongyuan Ge. 2022. Implicit motion handling for video camouflaged object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 13864–13873.

- Chiang and Chen (2011) John Y Chiang and Ying-Ching Chen. 2011. Underwater image enhancement by wavelength compensation and dehazing. IEEE Transactions on Image Processing 21, 4 (2011), 1756–1769.

- Deng et al. (2022) Yingying Deng, Fan Tang, Weiming Dong, Chongyang Ma, Xingjia Pan, Lei Wang, and Changsheng Xu. 2022. StyTr2: Image Style Transfer with Transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 11326–11336.

- Dinh et al. (2014) Laurent Dinh, David Krueger, and Yoshua Bengio. 2014. NICE: Non-linear independent components estimation. arXiv preprint arXiv:1410.8516 (2014).

- Drews et al. (2013) Paul Drews, Erickson Nascimento, Filipe Moraes, Silvia Botelho, and Mario Campos. 2013. Transmission estimation in underwater single images. In Proceedings of the IEEE International Conference on Computer Vision Workshops. 825–830.

- Feng et al. (2021) Chengjian Feng, Yujie Zhong, Yu Gao, Matthew R Scott, and Weilin Huang. 2021. TOOD: Task-aligned one-stage object detection. In 2021 IEEE/CVF International Conference on Computer Vision (ICCV). IEEE Computer Society, 3490–3499.

- Ghani and Isa (2015) Ahmad Shahrizan Abdul Ghani and Nor Ashidi Mat Isa. 2015. Underwater image quality enhancement through integrated color model with Rayleigh distribution. Applied Soft Computing 27 (2015), 219–230.

- Girshick et al. (2014) Ross Girshick, Jeff Donahue, Trevor Darrell, and Jitendra Malik. 2014. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 580–587.

- Guo et al. (2020) Yong Guo, Jian Chen, Jingdong Wang, Qi Chen, Jiezhang Cao, Zeshuai Deng, Yanwu Xu, and Mingkui Tan. 2020. Closed-loop matters: Dual regression networks for single image super-resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 5407–5416.

- He et al. (2017) Kaiming He, Georgia Gkioxari, Piotr Dollár, and Ross Girshick. 2017. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision. 2961–2969.

- Huang et al. (2023) Shirui Huang, Keyan Wang, Huan Liu, Jun Chen, and Yunsong Li. 2023. Contrastive semi-supervised learning for underwater image restoration via reliable bank. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 18145–18155.

- Islam et al. (2020) Md Jahidul Islam, Youya Xia, and Junaed Sattar. 2020. Fast underwater image enhancement for improved visual perception. IEEE Robotics and Automation Letters 5, 2 (2020), 3227–3234.

- Jiang et al. (2022) Zhiying Jiang, Zhuoxiao Li, Shuzhou Yang, Xin Fan, and Risheng Liu. 2022. Target Oriented Perceptual Adversarial Fusion Network for Underwater Image Enhancement. IEEE Transactions on Circuits and Systems for Video Technology (2022).

- Jiang et al. (2023a) Zhiying Jiang, Risheng Liu, Shuzhou Yang, Zengxi Zhang, and Xin Fan. 2023a. Contrastive Learning Based Recursive Dynamic Multi-Scale Network for Image Deraining. arXiv preprint arXiv:2305.18092 (2023).

- Jiang et al. (2023b) Zhiying Jiang, Zengxi Zhang, Jinyuan Liu, Xin Fan, and Risheng Liu. 2023b. Modality-Invariant Representation for Infrared and Visible Image Registration. arXiv preprint arXiv:2304.05646 (2023).

- Johnson et al. (2016) Justin Johnson, Alexandre Alahi, and Li Fei-Fei. 2016. Perceptual losses for real-time style transfer and super-resolution. In European Conference on Computer Vision. Springer, 694–711.

- Kim and Lee (2020) Kang Kim and Hee Seok Lee. 2020. Probabilistic anchor assignment with IoU prediction for object detection. In European Conference on Computer Vision. Springer, 355–371.

- Kingma and Dhariwal (2018) Durk P Kingma and Prafulla Dhariwal. 2018. Glow: Generative flow with invertible 1x1 convolutions. Advances in Neural Information Processing Systems 31 (2018).

- Li et al. (2021a) Chongyi Li, Saeed Anwar, Junhui Hou, Runmin Cong, Chunle Guo, and Wenqi Ren. 2021a. Underwater image enhancement via medium transmission-guided multi-color space embedding. IEEE Transactions on Image Processing 30 (2021), 4985–5000.

- Li et al. (2019a) Chongyi Li, Chunle Guo, Wenqi Ren, Runmin Cong, Junhui Hou, Sam Kwong, and Dacheng Tao. 2019a. An underwater image enhancement benchmark dataset and beyond. IEEE Transactions on Image Processing 29 (2019), 4376–4389.

- Li et al. (2017) Chongyi Li, Jichang Guo, Chunle Guo, Runmin Cong, and Jiachang Gong. 2017. A hybrid method for underwater image correction. Pattern Recognition Letters 94 (2017), 62–67.

- Li et al. (2016) Chongyi Li, Jichang Quo, Yanwei Pang, Shanji Chen, and Jian Wang. 2016. Single underwater image restoration by blue-green channels dehazing and red channel correction. In 2016 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). IEEE, 1731–1735.

- Li et al. (2019b) Hanyu Li, Jingjing Li, and Wei Wang. 2019b. A fusion adversarial underwater image enhancement network with a public test dataset. arXiv preprint arXiv:1906.06819 (2019).

- Li et al. (2021b) Hongyu Li, Jia Li, Dong Zhao, and Long Xu. 2021b. DehazeFlow: Multi-scale Conditional Flow Network for Single Image Dehazing. In Proceedings of the 29th ACM International Conference on Multimedia. 2577–2585.

- Lin et al. (2020) Chuan Lin, Guangjie Han, Mohsen Guizani, Yuanguo Bi, Jiaxin Du, and Lei Shu. 2020. An SDN architecture for AUV-based underwater wireless networks to enable cooperative underwater search. IEEE Wireless Communications 27, 3 (2020), 132–139.

- Lin et al. (2017) Tsung-Yi Lin, Priya Goyal, Ross Girshick, Kaiming He, and Piotr Dollár. 2017. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision. 2980–2988.

- Liu et al. (2022a) Jinyuan Liu, Xin Fan, Zhanbo Huang, Guanyao Wu, Risheng Liu, Wei Zhong, and Zhongxuan Luo. 2022a. Target-aware dual adversarial learning and a multi-scenario multi-modality benchmark to fuse infrared and visible for object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 5802–5811.

- Liu et al. (2022c) Jinyuan Liu, Runjia Lin, Guanyao Wu, Risheng Liu, Zhongxuan Luo, and Xin Fan. 2022c. CoCoNet: Coupled Contrastive Learning Network with Multi-level Feature Ensemble for Multi-modality Image Fusion. arXiv preprint arXiv:2211.10960 (2022).

- Liu et al. (2023c) Jinyuan Liu, Guanyao Wu, Junsheng Luan, Zhiying Jiang, Risheng Liu, and Xin Fan. 2023c. HoLoCo: Holistic and local contrastive learning network for multi-exposure image fusion. Information Fusion 95 (2023), 237–249.

- Liu et al. (2020) Risheng Liu, Xin Fan, Ming Zhu, Minjun Hou, and Zhongxuan Luo. 2020. Real-world underwater enhancement: Challenges, benchmarks, and solutions under natural light. IEEE Transactions on Circuits and Systems for Video Technology 30, 12 (2020), 4861–4875.

- Liu et al. (2022b) Risheng Liu, Zhiying Jiang, Shuzhou Yang, and Xin Fan. 2022b. Twin adversarial contrastive learning for underwater image enhancement and beyond. IEEE Transactions on Image Processing 31 (2022), 4922–4936.

- Liu et al. (2021) Risheng Liu, Zhu Liu, Jinyuan Liu, and Xin Fan. 2021. Searching a hierarchically aggregated fusion architecture for fast multi-modality image fusion. In Proceedings of the 29th ACM International Conference on Multimedia. 1600–1608.

- Liu et al. (2023a) Risheng Liu, Zhu Liu, Jinyuan Liu, Xin Fan, and Zhongxuan Luo. 2023a. A Task-guided, Implicitly-searched and Meta-initialized Deep Model for Image Fusion. arXiv preprint arXiv:2305.15862 (2023).

- Liu et al. (2023b) Zhu Liu, Jinyuan Liu, Guanyao Wu, Long Ma, Xin Fan, and Risheng Liu. 2023b. Bi-level Dynamic Learning for Jointly Multi-modality Image Fusion and Beyond. arXiv preprint arXiv:2305.06720 (2023).

- Lugmayr et al. (2020) Andreas Lugmayr, Martin Danelljan, Luc Van Gool, and Radu Timofte. 2020. SRFlow: Learning the super-resolution space with normalizing flow. In European Conference on Computer Vision. Springer, 715–732.

- Ma et al. (2022) Long Ma, Tengyu Ma, Risheng Liu, Xin Fan, and Zhongxuan Luo. 2022. Toward Fast, Flexible, and Robust Low-Light Image Enhancement. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). 5637–5646.

- Mu et al. (2022) Pan Mu, Haotian Qian, and Cong Bai. 2022. Structure-Inferred Bi-level Model for Underwater Image Enhancement. In Proceedings of the 30th ACM International Conference on Multimedia. 2286–2295.

- Panetta et al. (2015) Karen Panetta, Chen Gao, and Sos Agaian. 2015. Human-visual-system-inspired underwater image quality measures. IEEE Journal of Oceanic Engineering 41, 3 (2015), 541–551.

- Peng et al. (2018) Yan-Tsung Peng, Keming Cao, and Pamela C Cosman. 2018. Generalization of the dark channel prior for single image restoration. IEEE Transactions on Image Processing 27, 6 (2018), 2856–2868.

- Prytula (2020) Slavko Prytula. 2020. Underwater Object Detection Dataset. https://public.roboflow.com/object-detection/aquarium.

- Redmon and Farhadi (2018) Joseph Redmon and Ali Farhadi. 2018. YOLOv3: An incremental improvement. arXiv preprint arXiv:1804.02767 (2018).

- Ren et al. (2015) Shaoqing Ren, Kaiming He, Ross Girshick, and Jian Sun. 2015. Faster R-CNN: Towards real-time object detection with region proposal networks. Advances in Neural Information Processing Systems 28 (2015).

- Rezatofighi et al. (2019) Hamid Rezatofighi, Nathan Tsoi, JunYoung Gwak, Amir Sadeghian, Ian Reid, and Silvio Savarese. 2019. Generalized intersection over union: A metric and a loss for bounding box regression. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 658–666.

- Simonyan and Zisserman (2014) Karen Simonyan and Andrew Zisserman. 2014. Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556 (2014).

- Sun et al. (2021) Peize Sun, Rufeng Zhang, Yi Jiang, Tao Kong, Chenfeng Xu, Wei Zhan, Masayoshi Tomizuka, Lei Li, Zehuan Yuan, Changhu Wang, et al. 2021. Sparse R-CNN: End-to-end object detection with learnable proposals. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 14454–14463.

- Wang et al. (2017) Yi Wang, Hui Liu, and Lap-Pui Chau. 2017. Single underwater image restoration using adaptive attenuation-curve prior. IEEE Transactions on Circuits and Systems I: Regular Papers 65, 3 (2017), 992–1002.

- Wang et al. (2022) Yufei Wang, Renjie Wan, Wenhan Yang, Haoliang Li, Lap-Pui Chau, and Alex Kot. 2022. Low-light image enhancement with normalizing flow. In Proceedings of the AAAI Conference on Artificial Intelligence, Vol. 36. 2604–2612.

- Wang et al. (2004) Zhou Wang, Alan C Bovik, Hamid R Sheikh, and Eero P Simoncelli. 2004. Image quality assessment: from error visibility to structural similarity. IEEE Transactions on Image Processing 13, 4 (2004), 600–612.

- Xie et al. (2022) Chenxi Xie, Changqun Xia, Mingcan Ma, Zhirui Zhao, Xiaowu Chen, and Jia Li. 2022. Pyramid Grafting Network for One-Stage High Resolution Saliency Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 11717–11726.

- Yang and Sowmya (2015) Miao Yang and Arcot Sowmya. 2015. An underwater color image quality evaluation metric. IEEE Transactions on Image Processing 24, 12 (2015), 6062–6071.

- Yang et al. (2023) Shuzhou Yang, Moxuan Ding, Yanmin Wu, Zihan Li, and Jian Zhang. 2023. Implicit Neural Representation for Cooperative Low-light Image Enhancement. arXiv preprint arXiv:2303.11722 (2023).

- Yoerger et al. (2021) Dana R Yoerger, Annette F Govindarajan, Jonathan C Howland, Joel K Llopiz, Peter H Wiebe, Molly Curran, Justin Fujii, Daniel Gomez-Ibanez, Kakani Katija, Bruce H Robison, et al. 2021. A hybrid underwater robot for multidisciplinary investigation of the ocean twilight zone. Science Robotics 6, 55 (2021), eabe1901.