Wavelet Based Periodic Autoregressive Moving Average Models

Abstract

This paper proposes a wavelet-based method for analysing periodic autoregressive moving average (PARMA) time series. Even though Fourier analysis provides an effective method for analysing periodic time series, it requires the estimation of a large number of Fourier parameters when the PARMA parameters do not vary smoothly. The wavelet-based analysis helps us to obtain a parsimonious model with a reduced number of parameters. We have illustrated this with simulated and actual data sets.

keywords:

Fourier methods; hypothesis testing; parsimony; periodic ARMA; wavelets

1 Introduction

Time series with seasonal or periodic patterns occur in many fields of application, such as economics, finance, demography, astronomy and meteorology. The commonly used seasonal autoregressive integrated moving average (SARIMA) models may not capture some of the important local features of such time series (see [14]). An alternative approach is to model these data as periodically stationary time series. A process $\{\tilde X_t\}$ is said to be periodically (weakly) stationary if its mean function $\mu_t = E\tilde X_t$ and its autocovariance functions $\gamma_t(h) = \mathrm{Cov}(\tilde X_t, \tilde X_{t+h})$, $h = 0, \pm 1, \pm 2, \ldots$, are periodic functions of $t$ with the same period $S$ (see [20]). A particular class of periodically stationary models is the periodic autoregressive moving average model of period $S$ with autoregressive order $p$ and moving average order $q$ [PARMA$_S(p,q)$], given in [6] as

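$$X_t - \sum_{j=1}^{p}\phi_t(j)X_{t-j} = \varepsilon_t + \sum_{j=1}^{q}\theta_t(j)\varepsilon_{t-j}, \qquad (1)$$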

where (i) $X_t = \tilde X_t - \mu_t$, (ii) $\varepsilon_t = \sigma_t\delta_t$, (iii) $\phi_t(j) = \phi_{t+kS}(j)$ and $\theta_t(j) = \theta_{t+kS}(j)$ for all $j$, and (iv) $\mu_t = \mu_{t+kS}$ and $\sigma_t = \sigma_{t+kS}$ for all integers $k$, where $\sigma_t^2$ is the variance of the mean zero random sequence $\{\varepsilon_t\}$. Thus, $\{\delta_t\}$ is an i.i.d. sequence of random variables with mean 0 and variance 1. Note that the PARMA model reduces to an ARMA model when $S = 1$. PARMA models have been applied in fields as diverse as economics ([21] and [9]), climatology ([13], [7] and [4]), signal processing [10] and hydrology ([25], [24], [6], [1] and [5]). However, PARMA models contain a large number of parameters. For example, a monthly series has period $S = 12$, so a PARMA$_{12}(p,q)$ model with seasonal means and seasonal innovation standard deviations has $12(p+q+2)$ parameters to be estimated. Including a large number of parameters often leads to overfitting. Hence, it is important to develop methods that yield parsimonious models with fewer parameters.

This article is organised as follows. In Section 2, the estimators of the PARMA parameters and several of their properties are described. The Fourier-PARMA model is presented in Section 3. Section 4 gives a brief introduction to wavelet analysis, with a special focus on the discrete wavelet transform. In Section 5, our proposed wavelet-PARMA model is presented. Section 6 contains a simulation study of the proposed wavelet-PARMA model. The applicability of the proposed model is demonstrated using real data in Section 7. Section 8 concludes the study. We have used results from various articles; for easy reference, they are collected in the Appendices.

2 Estimation of PARMA parameters

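It is assumed hereafter that model (1) admits a causal representation (see [4])

$$X_t = \sum_{j=0}^{\infty}\psi_t(j)\varepsilon_{t-j},$$

where $\psi_t(0) = 1$ and $\sum_{j=0}^{\infty}|\psi_t(j)| < \infty$ for all $t$. Note that $\psi_t(j) = \psi_{t+kS}(j)$ for all $j$. Also, $\varepsilon_t = \sigma_t\delta_t$ and $\sigma_t = \sigma_{t+kS}$, where $\{\delta_t\}$ is i.i.d.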

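Given data $\tilde X_0, \tilde X_1, \ldots, \tilde X_{NS-1}$, where $N$ is the number of cycles, the vector $\boldsymbol\mu = (\mu_0, \ldots, \mu_{S-1})'$ is estimated using

$$\hat\mu_i = \frac{1}{N}\sum_{j=0}^{N-1}\tilde X_{jS+i}, \qquad i = 0, \ldots, S-1. \qquad (2)$$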

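The sample autocovariance at lag $\ell$ for season $i$ is computed as

$$\hat\gamma_i(\ell) = \frac{1}{N}\sum_{j=0}^{N-1}\left(\tilde X_{jS+i} - \hat\mu_i\right)\left(\tilde X_{jS+i+\ell} - \hat\mu_{i+\ell}\right), \qquad (3)$$

where $\hat\mu$ is obtained from (2), with the season index $i+\ell$ taken modulo $S$, and whenever $jS+i+\ell \ge NS$, the corresponding term is set to zero. Let

$$\hat X^{(i)}_{i+k} = \sum_{j=1}^{k}\theta^{(i)}_{k,j}\left(X_{i+k-j} - \hat X^{(i)}_{i+k-j}\right)$$

denote the one-step predictor of $X_{i+k}$ based on $X_i, \ldots, X_{i+k-1}$ that minimises the mean square error $v_{k,i} = E\big(X_{i+k} - \hat X^{(i)}_{i+k}\big)^2$. The estimates $\hat\theta^{(i)}_{k,j}$ and $\hat v_{k,i}$ are obtained by substituting the sample autocovariances (3) into the recursions of the innovations algorithm for periodically stationary processes given in Theorem A1. Theorems A2 and A3 then show that $\hat\theta^{(\langle i-k\rangle)}_{k,j}$ and $\hat v_{k,\langle i-k\rangle}$ are consistent estimators of $\psi_i(j)$, for all $j$, and of $\sigma_i^2$, respectively. Here $\langle t\rangle = t - S\lfloor t/S\rfloor$ denotes the season of $t$, where $\lfloor\cdot\rfloor$ is the greatest integer function.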
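The sample moments (2) and (3), which are the inputs to the innovations algorithm, are simple to compute. The following is a minimal NumPy sketch, not the authors' code; the function names `periodic_mean` and `periodic_autocov` are hypothetical, and the series is assumed to start at season 0 and to contain $N$ complete cycles.

```python
import numpy as np

def periodic_mean(x, S):
    """Seasonal sample means, eq. (2): mu_hat[i] = (1/N) * sum_j x[j*S + i]."""
    x = np.asarray(x, dtype=float)
    N = len(x) // S                              # number of complete cycles
    return x[: N * S].reshape(N, S).mean(axis=0)

def periodic_autocov(x, S, i, lag):
    """Sample autocovariance gamma_hat_i(lag), eq. (3), for season i and lag >= 0.

    Terms whose second index j*S + i + lag lies beyond the sample are set to
    zero, while the divisor stays N, the number of cycles.
    """
    x = np.asarray(x, dtype=float)
    N = len(x) // S
    mu = periodic_mean(x, S)
    total = 0.0
    for j in range(N):
        t, u = j * S + i, j * S + i + lag
        if u < N * S:
            total += (x[t] - mu[i]) * (x[u] - mu[u % S])   # u % S is the season of u
    return total / N

x = [1, 2, 3, 4, 5, 6]                           # N = 3 cycles of period S = 2
print(periodic_mean(x, 2))                       # [3. 4.]
print(periodic_autocov(x, 2, 0, 1))              # 8/3, about 2.667
```

Keeping the divisor at $N$ even when some terms are dropped follows the convention stated for (3).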

To demonstrate the proposed ideas, we focus on two classes of PARMA models, described in the following subsections.

2.1 Periodic autoregressive model of order 1 (PAR(1))

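Consider the PAR$_S(1)$ model given by

$$X_t = \phi_t X_{t-1} + \varepsilon_t, \qquad (4)$$

where (i) $X_t = \tilde X_t - \mu_t$, (ii) $\varepsilon_t = \sigma_t\delta_t$, and (iii) $\phi_t = \phi_{t+kS}$, $\mu_t = \mu_{t+kS}$ and $\sigma_t = \sigma_{t+kS}$, where $\sigma_t^2$ is the variance of the mean zero normal random sequence $\{\varepsilon_t\}$. For model (4), Theorem A4 gives $\phi_t = \psi_t(1)$. Thus, $\hat\phi_t$ can be obtained from the consistent estimates of $\psi_t(1)$. Moreover, Theorem A5 shows that the estimator $\hat{\boldsymbol\phi}$ is asymptotically normal. Also, $\hat{\boldsymbol\mu}$ and the innovation-variance estimator are asymptotically normal, by Theorems A6 and A7, respectively.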
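For the PAR$_S(1)$ model, a single innovations step ($k = 1$) already targets $\phi_t$. Starting the recursion at season $t-1$ gives $\theta^{(\langle t-1\rangle)}_{1,1} = \gamma_{t-1}(1)/\gamma_{t-1}(0)$ and $v_{1,\langle t-1\rangle} = \gamma_t(0) - \phi_t^2\gamma_{t-1}(0)$. With the true autocovariances these equal $\phi_t$ and $\sigma_t^2$ exactly, because the best predictor of $X_t$ is $\phi_t X_{t-1}$. The sketch below simulates a zero-mean PAR$_4(1)$ series and applies this one-step estimator to the sample moments. The helper names and parameter values are illustrative assumptions. The paper itself runs more iterations.

```python
import numpy as np

def simulate_par1(phi, sigma, N, burn=50, seed=0):
    """Simulate a zero-mean PAR_S(1): X_t = phi_t X_{t-1} + sigma_t * delta_t, delta_t iid N(0, 1)."""
    rng = np.random.default_rng(seed)
    S = len(phi)
    n = (N + burn) * S
    delta = rng.standard_normal(n)
    x = np.zeros(n)
    for t in range(1, n):
        s = t % S                                     # season of time t
        x[t] = phi[s] * x[t - 1] + sigma[s] * delta[t]
    return x[burn * S:]                               # drop burn-in; still starts at season 0

def estimate_par1(x, S):
    """One innovations step (k = 1) applied to the sample moments (2)-(3)."""
    N = len(x) // S
    y = np.asarray(x[: N * S], dtype=float).reshape(N, S)
    z = (y - y.mean(axis=0)).ravel()                  # remove seasonal sample means
    g0 = np.array([np.sum(z[i::S] ** 2) / N for i in range(S)])      # gamma_i(0)
    lag1 = z[:-1] * z[1:]                             # products z_t * z_{t+1}
    g1 = np.array([np.sum(lag1[i::S]) / N for i in range(S)])       # gamma_i(1)
    prev = (np.arange(S) - 1) % S                     # season t - 1, modulo S
    phi_hat = g1[prev] / g0[prev]                     # theta_{1,1} started at season t - 1
    sigma2_hat = g0 - phi_hat ** 2 * g0[prev]         # v_{1,<t-1>}
    return phi_hat, sigma2_hat

phi_true = np.array([0.5, -0.3, 0.8, 0.2])
sigma_true = np.array([1.0, 1.5, 0.5, 1.0])
x = simulate_par1(phi_true, sigma_true, N=20000)
phi_hat, sigma2_hat = estimate_par1(x, S=4)
```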

2.2 PARMA(1,1)

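Consider the PARMA$_S(1,1)$ model given by

$$X_t = \phi_t X_{t-1} + \varepsilon_t + \theta_t\varepsilon_{t-1}, \qquad (5)$$

where (i) $X_t = \tilde X_t - \mu_t$, with $\phi_t$, $\theta_t$, $\mu_t$ and $\sigma_t$ periodic in $t$ with period $S$, (ii) $\varepsilon_t = \sigma_t\delta_t$, and $\{\delta_t\}$ is a sequence of i.i.d. normal random variables with mean 0 and variance 1. For model (5), Theorem A8 gives

$$\phi_t = \frac{\psi_t(2)}{\psi_{t-1}(1)}, \qquad (6)$$

$$\theta_t = \psi_t(1) - \phi_t. \qquad (7)$$

Thus, $\hat\phi_t$ and $\hat\theta_t$ are obtained by replacing each $\psi$ with its consistent estimate. The estimator $\hat{\boldsymbol\phi}$ is asymptotically normal, as given in Theorem A9. Theorem A10 shows that $\hat{\boldsymbol\theta}$ is also asymptotically normal.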
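Equations (6) and (7) invert the map from the PARMA$_S(1,1)$ parameters to the first two $\psi$-weights. In the sketch below we assume the sign convention $X_t = \phi_t X_{t-1} + \varepsilon_t + \theta_t\varepsilon_{t-1}$. Under this convention $\psi_t(1) = \phi_t + \theta_t$ and $\psi_t(2) = \phi_t\psi_{t-1}(1)$, so $\phi_t = \psi_t(2)/\psi_{t-1}(1)$ and $\theta_t = \psi_t(1) - \phi_t$. The function name and the parameter values are hypothetical. A round trip shows that the inversion recovers the parameters.

```python
import numpy as np

def parma11_from_psi(psi1, psi2):
    """Recover PARMA_S(1,1) parameters from psi_t(1) and psi_t(2) (assumed sign convention).

    phi_t = psi_t(2) / psi_{t-1}(1),  theta_t = psi_t(1) - phi_t,
    with the season t - 1 taken modulo S; psi_{t-1}(1) must be non-zero.
    """
    psi1, psi2 = np.asarray(psi1, dtype=float), np.asarray(psi2, dtype=float)
    phi = psi2 / np.roll(psi1, 1)        # np.roll(psi1, 1)[t] == psi1[t - 1 mod S]
    theta = psi1 - phi
    return phi, theta

# Forward map for X_t = phi_t X_{t-1} + eps_t + theta_t eps_{t-1}
phi_true = np.array([0.6, 0.7, 1.8, 0.5])
theta_true = np.array([0.2, 1.4, 0.5, 0.2])
psi1 = phi_true + theta_true                 # psi_t(1)
psi2 = phi_true * np.roll(psi1, 1)           # psi_t(2) = phi_t * psi_{t-1}(1)
phi_hat, theta_hat = parma11_from_psi(psi1, psi2)
```

In practice, `psi1` and `psi2` would be the innovations estimates $\hat\theta^{(\langle t-k\rangle)}_{k,1}$ and $\hat\theta^{(\langle t-k\rangle)}_{k,2}$.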

After estimating the PARMA parameters, our objective is to reduce the number of parameters while ensuring that the final model still captures the dependence structure adequately. Since the parameter functions are periodic, it is natural to use Fourier techniques to meet this objective.

3 Fourier-PARMA model

Representing a function in terms of a set of basis functions often provides insights about the function that are otherwise obscure. The choice of basis functions depends on the kind of information we are interested in. The analysis of periodic functions usually involves identifying the prominent frequencies present in the signal. For this, the function is represented as an infinite series of sinusoids, known as a Fourier series, which is possible for almost all functions encountered in practice. The magnitudes of the coefficients of the sines and cosines indicate the strength of the corresponding frequency components present in the process.

For a given vector , from [4], the periodic function has the representation

| (8) |

where and are Fourier coefficients and

For convenience, (8) is written as,

| (9) |

where and .

Let .

Then, we have, from [4],

| (10) |

where and are respectively given in equations (60), (62) and (61) of Theorem A11. and are unitary matrices. Using (10), we have, . Note that X can be retrieved from f by,

where the superscript $*$ denotes the conjugate transpose of a matrix. Thus, X and f are representations of the same entity. Hence, to obtain a parsimonious PARMA model, it is enough to represent the model using a reduced number of Fourier coefficients. Since PARMA parameters are often observed to vary smoothly over time, [6], [5], [23] and [4] identified significant Fourier coefficients via a hypothesis test based on the asymptotic distributions of the PARMA estimators. The significant Fourier coefficients are retained as they are, while the insignificant coefficients are set to zero, resulting in a parsimonious Fourier-PARMA model.

Although Fourier techniques have had some success, they often fail when bursts and other transient events occur, which is common in fields such as economics and climatology. This is because every observation enters the computation of every Fourier coefficient, so a transient event affects all the Fourier coefficients.
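A small numerical illustration of the point above follows. As a stand-in for the real sine/cosine representation of [4], the sketch uses NumPy's unitary DFT (`np.fft.fft` with `norm="ortho"`). This is a different but equivalent parametrisation. The transform is invertible and norm-preserving, in the same way as X and f above. A smoothly varying parameter vector needs only a few coefficients. A burst in a single season makes every coefficient non-zero. The vectors are illustrative assumptions.

```python
import numpy as np

def count_nonzero_fourier(x, tol=1e-10):
    """Number of non-negligible coefficients in the unitary DFT of x."""
    x = np.asarray(x, dtype=float)
    f = np.fft.fft(x, norm="ortho")                          # unitary transform
    assert np.allclose(np.fft.ifft(f, norm="ortho").real, x)   # x is recovered from f
    assert np.isclose(np.linalg.norm(f), np.linalg.norm(x))    # Parseval: same energy
    return int(np.sum(np.abs(f) > tol))

S = 12
t = np.arange(S)
smooth = 0.7 + 0.2 * np.cos(2 * np.pi * t / S)    # slowly varying parameter
burst = np.full(S, 0.7)
burst[4] = 1.4                                     # transient change in a single season
counts = {"smooth": count_nonzero_fourier(smooth), "burst": count_nonzero_fourier(burst)}
print(counts)                                      # {'smooth': 3, 'burst': 12}
```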

4 Wavelet Analysis

Wavelet techniques are an excellent alternative to Fourier methods. Applications of wavelets in statistics include the estimation of functions such as densities, regression functions and spectra, the analysis of long-memory processes, data compression, decorrelation, and the detection of structural breaks. The term "wavelet" means a small wave (see [22], [18] and [11]): a wavelet oscillates like a wave but lasts only for a short duration, unlike a sine wave, which extends indefinitely in both directions along the time axis. Wavelets are constructed to possess certain desirable properties. Many different wavelet functions and transforms are available in the literature. Here we consider only the orthogonal discrete wavelet transform (DWT), since approximation based on an orthogonal set is optimal in terms of mean square error, as indicated by the projection theorem (see [8]).

Fourier methods suffice when the frequencies of the signal do not change over time. However, Fourier techniques fail when different frequencies occur in different parts of the time axis. Hence, techniques are needed that provide both time resolution and frequency resolution. By the Heisenberg uncertainty principle, however, time and frequency cannot be localised simultaneously with arbitrary precision (see [15] and [26]), because there is a lower bound on the product of the time and frequency spreads. Wavelets work within this limit by using short time windows for high frequencies, giving good time resolution, and wider time windows for low frequencies, giving good frequency resolution. Thus, wavelets can pick out characteristics that are local in both time and frequency. Wavelets are functions of scale rather than frequency, where a scale can be loosely interpreted as a quantity inversely proportional to frequency. This makes the wavelet transform a powerful tool for studying non-stationary signals. For recent work on applications of wavelets to time series, see [16], [27] and [17].

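Let $\mathbf X = (X_0, \ldots, X_{n-1})'$ be a vector of length $n = 2^J$, and let $\mathcal W$ be the $n \times n$ orthogonal matrix used for performing the discrete wavelet transform (DWT). Then the DWT of $\mathbf X$ is given by

$$\mathbf W = \mathcal W\mathbf X. \qquad (11)$$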

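Here, $W_0$, the first element of $\mathbf W$, is the scaling coefficient, which is proportional to the average of all the data, i.e.,

$$W_0 = c\,\frac{1}{n}\sum_{t=0}^{n-1}X_t, \qquad (12)$$

where the proportionality constant $c$ depends on the particular wavelet employed. The remaining elements of $\mathbf W$ are wavelet coefficients, each associated with changes on a particular scale. For example, the Haar DWT of $\mathbf X$ is given by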

| (13) |

Note that the wavelet coefficients are localised in time. For example, the last wavelet coefficient involves only the observations $X_{n-2}$ and $X_{n-1}$. Since $\mathcal W$ is an orthogonal matrix, the original vector can easily be reconstructed via the inverse DWT

$$\mathbf X = \mathcal W'\mathbf W, \qquad (14)$$

where $\mathcal W'$ denotes the transpose of $\mathcal W$. From (14), $\mathbf X$ and $\mathbf W$ are representations of the same quantity, and hence it is enough to represent the parameters of the PARMA model in terms of their discrete wavelet transform coefficients.
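The Haar case can be made concrete by building $\mathcal W$ explicitly. In this sketch the row ordering is an assumption: the scaling coefficient comes first, followed by the wavelet coefficients from coarsest to finest scale. The sign convention (earlier block minus later block) is also an assumption. Both were chosen because, applied to the periodically extended $\hat\phi_t$ estimates of the simulation study (Section 6), they reproduce the reported Haar DWT coefficients up to rounding. The function name `haar_matrix` is hypothetical. For this transform, the constant in (12) is $c = \sqrt{n}$.

```python
import numpy as np

def haar_matrix(n):
    """Orthonormal full-depth Haar DWT matrix for n = 2**J.

    Row 0 is the scaling row (all entries 1/sqrt(n)); the remaining rows are
    wavelets ordered from the coarsest scale to the finest, each computing
    (earlier block - later block) / sqrt(block length).
    """
    J = int(round(np.log2(n)))
    if 2 ** J != n:
        raise ValueError("n must be a power of 2")
    rows = [np.full(n, 1.0 / np.sqrt(n))]
    for j in range(J - 1, -1, -1):              # half-width 2**j: coarse to fine
        w = 2 ** j
        for start in range(0, n, 2 * w):
            r = np.zeros(n)
            r[start:start + w] = 1.0
            r[start + w:start + 2 * w] = -1.0
            rows.append(r / np.sqrt(2 * w))
    return np.vstack(rows)

# phi-hat estimates from the simulation study (S = 12), extended periodically to n = 16
phi_hat = np.array([0.59, 0.73, 0.81, 0.77, 0.43, 0.72, 0.62, 1.04,
                    1.86, 1.83, 0.52, 0.68])
x = phi_hat[np.arange(16) % 12]
W = haar_matrix(16)
w = W @ x                                       # DWT, eq. (11)
x_back = W.T @ w                                # inverse DWT, eq. (14)
```

Note that the last row of `W` is non-zero only at positions 14 and 15. This matches the localisation property described above.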

Another advantage of the DWT, particularly relevant to our objective of obtaining parsimonious PARMA models, is that the DWT redistributes the energy (variability) contained in a sequence (see [26]). As a result, the essential information, which is spread throughout the original sequence, is concentrated in a few wavelet coefficients (see [19] and [26]). We therefore propose a wavelet-PARMA model that captures the dependence structure adequately by retaining only a few significant wavelet coefficients. We identify the significant wavelet coefficients by developing a hypothesis test, following the parallel approach given in [4] for Fourier coefficients, that uses the asymptotic distributions of the estimators of the PARMA parameters.
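To make the energy-concentration argument concrete, the sketch below compares how many non-zero coefficients the Haar DWT and the unitary DFT need for a parameter vector of length 16. The vector is flat except for a burst in two adjacent seasons. The vector and the `haar_matrix` helper, which repeats the construction used for (13) with an assumed coarse-to-fine ordering, are illustrative only. The Haar transform confines the burst to the few wavelets whose support contains it. The DFT spreads the burst over almost every frequency.

```python
import numpy as np

def haar_matrix(n):
    """Orthonormal full-depth Haar DWT matrix (scaling row first, then coarse-to-fine wavelets)."""
    J = int(round(np.log2(n)))
    rows = [np.full(n, 1.0 / np.sqrt(n))]
    for j in range(J - 1, -1, -1):
        w = 2 ** j
        for start in range(0, n, 2 * w):
            r = np.zeros(n)
            r[start:start + w], r[start + w:start + 2 * w] = 1.0, -1.0
            rows.append(r / np.sqrt(2 * w))
    return np.vstack(rows)

n = 16
x = np.full(n, 0.7)
x[8:10] = 1.8                                   # burst in two adjacent seasons
haar = haar_matrix(n) @ x
fourier = np.fft.fft(x, norm="ortho")
n_haar = int(np.sum(np.abs(haar) > 1e-10))      # scaling + 3 wavelet coefficients
n_fourier = int(np.sum(np.abs(fourier) > 1e-10))
print(n_haar, n_fourier)                        # 4 15
```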

5 Wavelet - PARMA model

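Suppose that an estimator $\hat{\boldsymbol\beta}$ of a PARMA parameter vector $\boldsymbol\beta = (\beta_0, \ldots, \beta_{S-1})'$ (for example, $\hat{\boldsymbol\phi}$ or $\hat{\boldsymbol\theta}$) satisfies

$$\hat{\boldsymbol\beta} \sim AN\left(\boldsymbol\beta, N^{-1}V\right). \qquad (15)$$

Using Theorem A12 with $B = \mathcal W$, we have

$$\mathcal W\hat{\boldsymbol\beta} \sim AN\left(\mathcal W\boldsymbol\beta, N^{-1}\mathcal W V\mathcal W'\right). \qquad (16)$$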

| (17) |

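The main idea is to identify the statistically significant wavelet coefficients of the PARMA estimator vectors using an appropriate test procedure, so that the remaining coefficients can be set to zero, yielding a parsimonious PARMA model. The null hypothesis of the test is $H_0: \beta_0 = \beta_1 = \cdots = \beta_{S-1}$. Under $H_0$, $\mathcal W\boldsymbol\beta = (c\,\beta_0, 0, \ldots, 0)'$, where the constant $c$ depends on the type of wavelet used. Since all elements of the parameter vectors are equal under $H_0$, the PARMA process reduces to a stationary ARMA process with $\phi_t(j) = \phi(j)$ for all $t$, and similarly for the other parameter vectors. Thus, the null hypothesis states that the model is stationary. From (16), under $H_0$, the wavelet coefficients $\hat W_m$ of $\hat{\mathbf W} = \mathcal W\hat{\boldsymbol\beta}$ satisfy

$$\hat W_m \sim AN\left(0,\; N^{-1}\left(\mathcal W V\mathcal W'\right)_{mm}\right), \qquad m = 1, \ldots, S-1. \qquad (18)$$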

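Here, we use the Bonferroni test procedure (see [12]) with an overall significance level $\alpha$ shared among the $S-1$ wavelet coefficients. For each $m = 1, \ldots, S-1$, we test $H_0: W_m = 0$ versus $H_1: W_m \neq 0$. The test statistic is

$$Z_m = \frac{\hat W_m}{\sqrt{N^{-1}\left(\mathcal W\hat V\mathcal W'\right)_{mm}}}, \qquad (19)$$

where $\hat V$ is $V$ with its elements replaced by their estimates.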

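Let $\alpha' = \alpha/(S-1)$, and let $z_{\alpha'/2}$ be the upper tail quantile of the standard normal distribution, i.e., $P(Z > z_{\alpha'/2}) = \alpha'/2$, where $Z \sim N(0,1)$. We reject the null hypothesis when $|Z_m| > z_{\alpha'/2}$. Whenever the null hypothesis is not rejected, the corresponding coefficient $\hat W_m$ is set to zero. Ideally, this yields a sparse vector in which most of the coefficients are 0, resulting in a parsimonious wavelet-PARMA model.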
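The whole test-and-threshold step can be sketched in a few lines. This is a sketch under stated assumptions, not the authors' implementation. It assumes $\hat{\boldsymbol\beta} \sim AN(\boldsymbol\beta, N^{-1}V)$ with $V$ already estimated. It uses a default $\alpha = 0.05$, which is an assumption. It always keeps the scaling coefficient $W_0$, which is not tested. The function name `wavelet_threshold` is hypothetical. The normal quantile comes from Python's standard-library `statistics.NormalDist`. The example uses the $4 \times 4$ Haar matrix with the coarse-to-fine ordering used elsewhere in this section.

```python
import numpy as np
from statistics import NormalDist

def wavelet_threshold(beta_hat, V, N, W, alpha=0.05):
    """Bonferroni test of W_1, ..., W_{n-1}; insignificant coefficients are set to zero.

    beta_hat : estimated parameter vector of length n (a power of 2)
    V        : asymptotic covariance, so that beta_hat ~ AN(beta, V / N)
    W        : orthogonal DWT matrix (n x n)
    Returns (sparse DWT coefficients, z-scores, parsimonious parameter vector).
    """
    w_hat = W @ beta_hat
    cov = W @ V @ W.T / N                         # covariance of W beta_hat, as in (16)
    z = w_hat / np.sqrt(np.diag(cov))             # test statistics (19)
    m = len(w_hat) - 1                            # number of tested coefficients
    crit = NormalDist().inv_cdf(1 - alpha / (2 * m))
    keep = np.abs(z) > crit
    keep[0] = True                                # the scaling coefficient is always kept
    w_sparse = np.where(keep, w_hat, 0.0)
    return w_sparse, z, W.T @ w_sparse            # inverse DWT (14) of the sparse vector

s = 1 / np.sqrt(2)
W4 = np.array([[0.5, 0.5, 0.5, 0.5],
               [0.5, 0.5, -0.5, -0.5],
               [s, -s, 0.0, 0.0],
               [0.0, 0.0, s, -s]])
w_sparse, z, beta_par = wavelet_threshold(np.array([0.51, 0.49, 1.5, 1.5]), np.eye(4), 400, W4)
```

In this example, the small difference between the first two seasons is judged insignificant. The parsimonious vector is therefore a two-level step.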

5.1 PAR(1) under

For model (4), under the null hypothesis, $\phi_t = \phi$ and $\sigma_t = \sigma$ for all $t$. In this case, the asymptotic variance-covariance matrix of the estimator in Theorem A6 becomes (see [4])

| (20) |

where and for ,

| (21) |

Since this matrix is symmetric, its remaining elements follow by symmetry. For hypothesis testing, its elements are replaced with their estimates.

Under $H_0$, the asymptotic variance-covariance matrix of the estimator in Theorem A7 is given by (see [4])

| (22) | ||||

| (23) |

and if , we have,

| (24) | ||||

| (25) |

Since this matrix is symmetric, its remaining elements follow by symmetry. For hypothesis testing, its elements are replaced with their estimates.

Under $H_0$, $\sigma_t = \sigma$ for all $t$, so Theorem A5 shows that the asymptotic variance-covariance matrix of $\hat{\boldsymbol\phi}$ is the identity matrix of order $S$, i.e., $I_S$.

5.2 PARMA(1,1) under

For model (5), under the null hypothesis, $\phi_t = \phi$ and $\theta_t = \theta$ for all $t$. Under $H_0$, the asymptotic variance-covariance matrix of $\hat{\boldsymbol\phi}$, given in Theorem A9, reduces to

| (26) |

where

| (27) | ||||

| (28) | ||||

| (29) | ||||

| (30) | ||||

| (31) | ||||

| (32) | ||||

| (33) | ||||

| (34) | ||||

| (35) |

Also, under $H_0$, the asymptotic variance-covariance matrix of $\hat{\boldsymbol\theta}$, given in Theorem A10, has the following form:

| (36) |

where , , are given in equations (27) to (30) , is given in (33) and is given in (35).

If $S$ is not a power of 2, each estimator vector is extended periodically to the smallest power of 2 exceeding $S$, say $n$ (for example, $n = 16$ when $S = 12$). The corresponding asymptotic variance-covariance matrices are also extended periodically, resulting in $n \times n$ matrices.
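The periodic extension amounts to indexing by season. The sketch below uses hypothetical helper names and extends a length-$S$ estimate and its $S \times S$ covariance matrix to length $n$. Position $a$ of the extended vector takes the value of season $a \bmod S$.

```python
import numpy as np

def next_pow2(S):
    """Smallest power of 2 that is >= S."""
    return 1 << (S - 1).bit_length()

def extend_periodically(beta, V):
    """Extend a length-S estimate and its S x S covariance periodically to length n = next_pow2(S)."""
    S = len(beta)
    idx = np.arange(next_pow2(S)) % S              # season of each extended position
    return np.asarray(beta)[idx], np.asarray(V)[np.ix_(idx, idx)]

beta_ext, V_ext = extend_periodically(np.arange(12.0), np.eye(12))
```

Because the extended positions repeat seasons, the extended covariance matrix has rank at most $S$. The test only uses the diagonal of $\mathcal W V\mathcal W'$.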

6 Simulation of wavelet-PARMA

The observations are simulated from the model,

| (37) |

where (i) , (ii) and is a sequence of normal random variables with mean 0 and variance 1. The parameter values and their corresponding estimates are given in Table 6.

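True values and estimates of the PARMA model parameters.

| Season | $\phi_t$ (true) | $\phi_t$ (estimate) | $\theta_t$ (true) | $\theta_t$ (estimate) |
|---|---|---|---|---|
| 0 | 0.67 | 0.59 | 0.2 | 0.06 |
| 1 | 0.7 | 0.73 | 0.23 | 0.29 |
| 2 | 0.69 | 0.81 | 0.22 | 0.30 |
| 3 | 0.68 | 0.77 | 0.21 | 0.24 |
| 4 | 0.67 | 0.43 | 1.43 | 1.22 |
| 5 | 0.68 | 0.72 | 1.44 | 1.46 |
| 6 | 0.69 | 0.62 | 0.46 | 0.40 |
| 7 | 0.68 | 1.04 | 0.47 | 0.81 |
| 8 | 1.83 | 1.86 | 0.23 | 0.28 |
| 9 | 1.84 | 1.83 | 0.24 | 0.25 |
| 10 | 0.53 | 0.52 | 0.21 | 0.21 |
| 11 | 0.52 | 0.68 | 0.23 | 0.37 |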

Since the period $S = 12$ is not a power of 2, each parameter vector was extended periodically to the next power of 2, i.e., 16. The number of cycles was taken to be $N = 500$.

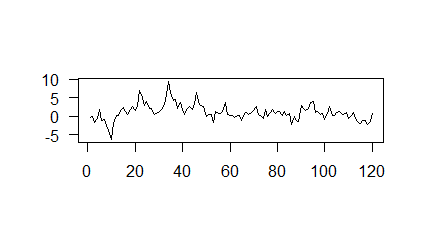

The plot of the generated observations is given in Figure 1.

7 iterations of the innovation algorithm was carried out to calculate the parameter estimates. The residuals are computed using the expression,

| (38) |

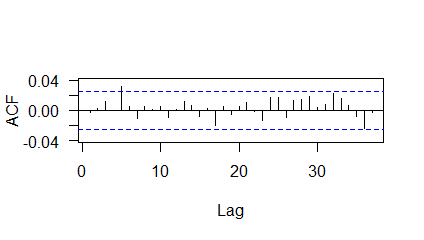

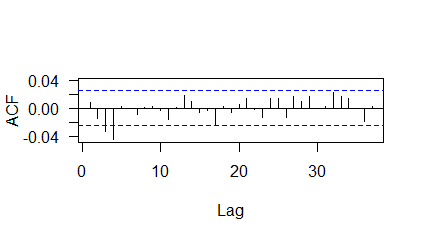

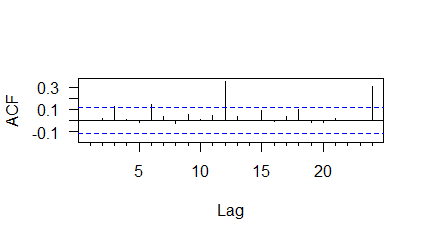

where the initial value is set as zero. The residual diagnostic plots are given in Figure 2.
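The residual recursion can be sketched as follows. It assumes the form $\hat\varepsilon_t = X_t - \hat\phi_t X_{t-1} - \hat\theta_t\hat\varepsilon_{t-1}$ with $\hat\varepsilon_0 = 0$, standardised by $\hat\sigma_t$. This form matches the sign convention $X_t = \phi_t X_{t-1} + \varepsilon_t + \theta_t\varepsilon_{t-1}$ and is an assumption. The function name is hypothetical. Setting all $\theta_t = 0$ gives the PAR(1) residuals used in Section 7.

```python
import numpy as np

def parma11_residuals(x, mu, phi, theta, sigma):
    """Standardised residuals for X_t = phi_t X_{t-1} + eps_t + theta_t eps_{t-1} (assumed form).

    x starts at season 0; mu, phi, theta and sigma are length-S seasonal vectors.
    The initial innovation estimate eps_hat_0 is set to zero, so residuals start at t = 1.
    """
    x = np.asarray(x, dtype=float)
    n = len(x)
    X = x - np.resize(mu, n)                          # remove the seasonal means
    eps = np.zeros(n)
    for t in range(1, n):
        s = t % len(phi)                              # season of time t
        eps[t] = X[t] - phi[s] * X[t - 1] - theta[s] * eps[t - 1]
    return (eps / np.resize(sigma, n))[1:]
```

If the data are generated with $X_0 = 0$ and $\varepsilon_0 = 0$, the recursion recovers the standardised innovations exactly.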

The Box-Pierce test results for the PARMA residuals are given in Table 6.

Box-Pierce test for PARMA residuals.

| Lags | 20 | 30 | 40 | 50 | 80 | 100 |
|---|---|---|---|---|---|---|
| p-value | 0.8844 | 0.8232 | 0.5431 | 0.706 | 0.8313 | 0.8898 |

The normality of the residuals was tested using the Kolmogorov-Smirnov test, which did not reject normality (p-value = 0.8586). Thus, the residuals appear to be uncorrelated and normally distributed.
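The Box-Pierce statistic used in these tables is $Q = n\sum_{k=1}^{h} r_k^2$, where $r_k$ is the lag-$k$ sample autocorrelation of the $n$ residuals. $Q$ is compared with a $\chi^2$ distribution with $h$ degrees of freedom. Some authors reduce the degrees of freedom by the number of fitted parameters, and the text does not say which choice was made. A minimal sketch with a hypothetical function name is shown below. The p-value could then be obtained, for example, with `scipy.stats.chi2.sf(Q, h)`.

```python
import numpy as np

def box_pierce(resid, h):
    """Box-Pierce statistic Q = n * sum_{k=1}^{h} r_k^2 for the residual sample ACF."""
    e = np.asarray(resid, dtype=float)
    e = e - e.mean()
    n = len(e)
    denom = np.dot(e, e)
    r = np.array([np.dot(e[: n - k], e[k:]) / denom for k in range(1, h + 1)])
    return n * np.sum(r ** 2)

Q = box_pierce([1, -1, 1, -1], 2)     # r_1 = -0.75, r_2 = 0.5, so Q = 4 * 0.8125
```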

The Fourier-PARMA model obtained using the method outlined in [4] is given by

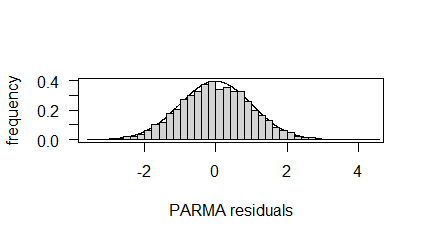

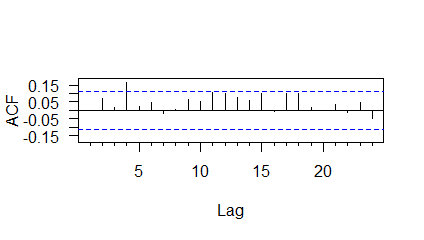

The ACF plot of residuals of the Fourier-PARMA model is given in Figure 3.

The results of Box-Pierce test are given in Table 6.

Box-Pierce test for Fourier-PARMA model. Lags 20 30 40 50 80 100 p-value 0.0003 0.00299 0.0084

Thus, as the ACF plot in Figure 3 and the Box-Pierce test results in Table 6 show, the Fourier-PARMA model does not adequately capture the dependence among the observations.

Haar DWT analysis of the $\hat\phi_t$ and $\hat\theta_t$ estimates. Statistically significant DWT coefficients are marked with *. The coefficients considered in the wavelet-PARMA model are indicated in bold.

| Coefficient | $\hat\phi_t$: DWT coefficient | $\hat\phi_t$: Z-score | $\hat\theta_t$: DWT coefficient | $\hat\theta_t$: Z-score |
|---|---|---|---|---|
| 0 | 3.37 | - | 1.69 | - |
| 1 | -0.52* | -5.30 | 0.69* | 6.12 |
| 2 | 0.03 | 0.21 | -1.06* | -6.60 |
| 3 | 0.71* | 5.09 | 0.08 | 0.48 |
| 4 | -0.13 | -0.94 | -0.10 | -0.63 |
| 5 | -0.26 | -1.85 | 0.73* | 4.80 |
| 6 | 1.25* | 8.97 | -0.02 | -0.16 |
| 7 | -0.13 | -0.94 | -0.10 | -0.63 |
| 8 | -0.10 | -0.72 | -0.16 | -1.21 |
| 9 | 0.03 | 0.21 | 0.05 | 0.36 |
| 10 | -0.21 | -1.49 | -0.17 | -1.27 |
| 11 | -0.30 | -2.16 | -0.29 | -2.15 |
| 12 | 0.02 | 0.15 | 0.02 | 0.14 |
| 13 | -0.11 | -0.80 | -0.11 | -0.85 |
| 14 | -0.10 | -0.72 | -0.16 | -1.21 |
| 15 | 0.03 | 0.21 | 0.05 | 0.36 |

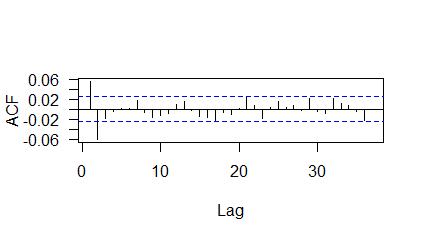

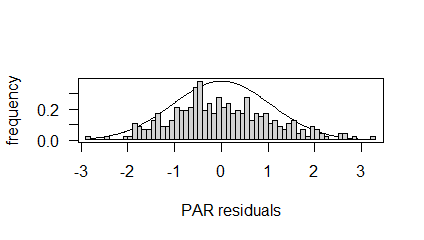

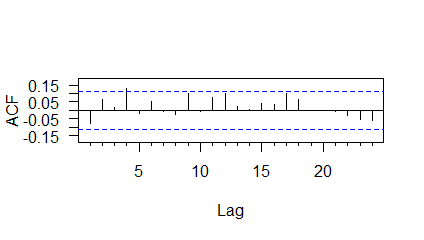

The DWT analysis was carried out using the Haar wavelet, which was chosen for the simulation because its structure is explicitly known. The results of the Haar DWT analysis are given in Table 6. Only 4 DWT coefficients of $\hat{\boldsymbol\phi}$ and 4 DWT coefficients of $\hat{\boldsymbol\theta}$ need to be incorporated into the wavelet-PARMA model, so the final model contains only 8 parameters, compared with 24 parameters in the original PARMA model. The ACF plot of the residuals of the wavelet-PARMA model is given in Figure 4.

The results of Box-Pierce test are given in Table 6.

Box-Pierce test for the wavelet-PARMA model.

| Lags | 20 | 30 | 40 | 50 | 80 | 100 |
|---|---|---|---|---|---|---|
| p-value | 0.0889 | 0.1274 | 0.0931 | 0.1923 | 0.4974 | 0.6524 |

All Box-Pierce p-values exceed 0.05, so there is no evidence of autocorrelation in the residuals of the wavelet-PARMA model. Hence, the wavelet-PARMA model with just 8 parameters captures the dependence structure adequately.

7 Data Analysis

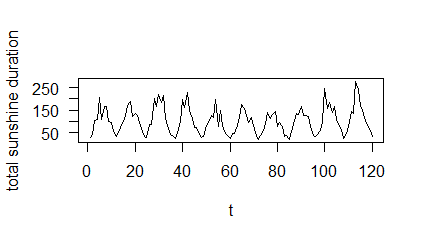

The UK Meteorological (MET) Office, established in 1854, is the government body responsible for analysing weather in the United Kingdom. It uses its findings for forecasting and for issuing warnings, and it provides data from 36 stations in the UK. For our analysis, we consider the total monthly sunshine duration, in hours, recorded at the Ballypatrick weather station for the years 1966-1990. These data are available from the Kaggle site https://www.kaggle.com/datasets/josephw20/uk-met-office-weather-data/code, where the total sunshine duration is given under the variable sun. The data are fitted using model (4), with period $S = 12$ and number of years (cycles) $N = 25$. The first 10 years are plotted in Figure 5. Two iterations of the innovations algorithm were found to be sufficient to capture the model dynamics. The residuals of the PAR model were computed using

$$\hat\delta_t = \frac{X_t - \hat\phi_t X_{t-1}}{\hat\sigma_t}, \qquad (39)$$

where $X_t = \tilde X_t - \hat\mu_t$. The PAR model diagnostic plots are given in Figure 6, and the results of the Box-Pierce test are given in Table 7. The normality of the residuals was tested using the Kolmogorov-Smirnov test, which did not reject normality (p-value = 0.319). Thus, the residuals appear to be uncorrelated and normally distributed.

Box-Pierce test for PAR residuals.

| Lags | 20 | 30 |
|---|---|---|
| p-value | 0.0528 | 0.0601 |

The Fourier-PAR model obtained using the method outlined in [4] is given by

The ACF plot of Fourier-PAR residuals is given in Figure 7.

The results of Box-Pierce test of Fourier-PAR residuals are given in Table 7.

Box-Pierce test of Fourier-PAR residuals. Lags 20 30 p-value

Thus, it is clear that the Fourier-PAR model has not been able to capture the dependency structure adequately.

The DWT analysis was carried out using the Daubechies least asymmetric wavelet with 7 vanishing moments, LA(7). This wavelet was chosen because the resulting wavelet-PAR model captured the dependence structure adequately. The LA(7) DWT results are given in Table 7. The ACF plot of the wavelet-PAR residuals is given in Figure 8, and the Box-Pierce test of the wavelet-PAR residuals is given in Table 7. These results show that the wavelet-PAR model captures the dependence structure adequately. Only 7 DWT coefficients of $\hat{\boldsymbol\mu}$, 1 DWT coefficient of $\hat{\boldsymbol\phi}$ and 5 DWT coefficients of $\hat{\boldsymbol\sigma}^2$ need to be incorporated into the wavelet-PAR model, so the final model contains only 13 parameters, compared with 36 parameters in the original PAR model.

LA(7) DWT analysis of the $\hat\mu_t$, $\hat\phi_t$ and $\hat\sigma_t^2$ estimates. Statistically significant DWT coefficients are marked with *. The coefficients considered in the wavelet-PAR model are indicated in bold.

| Coefficient | $\hat\mu_t$: DWT coefficient | $\hat\mu_t$: Z-score | $\hat\phi_t$: DWT coefficient | $\hat\phi_t$: Z-score | $\hat\sigma_t^2$: DWT coefficient | $\hat\sigma_t^2$: Z-score |
|---|---|---|---|---|---|---|
| 0 | 408.88 | - | 0.39 | - | 2608.33 | - |
| 1 | 112.69* | 16.91 | -0.19 | -0.95 | 1393.30* | 5.47 |
| 2 | 80.35* | 16.96 | -0.02 | -0.12 | 848.10* | 4.60 |
| 3 | -56.61* | -10.52 | 0.07 | 0.35 | -603.82 | -2.93 |
| 4 | 6.75 | 1.15 | -0.24 | -1.19 | 278.60 | 1.19 |
| 5 | -69.44* | -10.87 | -0.37 | -1.87 | -639.87 | -2.49 |
| 6 | 80.40* | 14.36 | -0.46 | -2.32 | 898.89* | 3.99 |
| 7 | -7.24 | -1.24 | 0.05 | 0.26 | -469.41 | -2.01 |
| 8 | -2.07 | -0.38 | -0.10 | -0.48 | 202.58 | 0.85 |
| 9 | -0.53 | -0.09 | -0.02 | -0.11 | -69.71 | -0.29 |
| 10 | 4.87 | 0.84 | -0.12 | -0.62 | 147.40 | 0.59 |
| 11 | -75.58* | -13.48 | 0.17 | 0.87 | -737.15 | -3.17 |
| 12 | 4.84 | 0.93 | -0.16 | -0.79 | 143.75 | 0.64 |
| 13 | -2.82 | -0.51 | 0.21 | 1.03 | 0.37 | 0.00 |
| 14 | 1.22 | 0.22 | -0.15 | -0.74 | 308.65 | 1.30 |
| 15 | -10.45 | -1.90 | 0.05 | 0.25 | -681.49 | -2.87 |

Box-Pierce test for wavelet-PAR residuals.

| Lags | 20 | 30 |
|---|---|---|
| p-value | 0.3159 | 0.4438 |

8 Conclusion

PARMA models are used extensively in fields such as economics, climatology, signal processing and hydrology. However, the large number of parameters in a PARMA model reduces its efficiency. Fourier methods for reducing the number of parameters already exist in the literature and have had some success when the parameters vary slowly. However, transient events are common in real-life applications, and wavelet techniques are particularly well suited to such situations. The efficiency of wavelet methods in reducing the number of parameters of PARMA models has been demonstrated in a simulation study, and their applicability has been illustrated using real data. This study opens new avenues for reducing the number of parameters in other classes of models that contain a large number of parameters.

Acknowledgement

Rhea Davis acknowledges the financial support of the University Grants Commission (UGC), India under the Savitribai Jyotirao Phule Fellowship for Single Girl Child (SJSGC) scheme.

Disclosure statement

The authors report no conflict of interest.

References

- [1] P.L. Anderson and M.M. Meerschaert, Modeling river flows with heavy tails, Water Resources Research 34 (1998), pp. 2271–2280.

- [2] P.L. Anderson and M.M. Meerschaert, Parameter estimation for periodically stationary time series, Journal of Time Series Analysis 26 (2005), pp. 489–518.

- [3] P.L. Anderson, M.M. Meerschaert, and A.V. Vecchia, Innovations algorithm for periodically stationary time series, Stochastic Processes and their Applications 83 (1999), pp. 149–169.

- [4] P.L. Anderson, F. Sabzikar, and M.M. Meerschaert, Parsimonious time series modeling for high frequency climate data, Journal of Time Series Analysis 42 (2021), pp. 442–470.

- [5] P.L. Anderson, Y.G. Tesfaye, and M.M. Meerschaert, Fourier-PARMA models and their application to river flows, Journal of Hydrologic Engineering 12 (2007), pp. 462–472.

- [6] P. Anderson and A. Vecchia, Asymptotic results for periodic autoregressive moving-average processes, Journal of Time Series Analysis 14 (1993), pp. 1–18.

- [7] P. Bloomfield, H.L. Hurd, and R.B. Lund, Periodic correlation in stratospheric ozone data, Journal of Time Series Analysis 15 (1994), pp. 127–150.

- [8] P.J. Brockwell and R.A. Davis, Time series: theory and methods, Springer Science & Business Media, 1991.

- [9] P.H. Franses and R. Paap, Periodic time series models, OUP Oxford, 2004.

- [10] W. Gardner and L. Franks, Characterization of cyclostationary random signal processes, IEEE Transactions on information theory 21 (1975), pp. 4–14.

- [11] W. Härdle, G. Kerkyacharian, D. Picard, and A. Tsybakov, Wavelets, approximation, and statistical applications, Vol. 129, Springer Science & Business Media, 2012.

- [12] D.C. Howell, Statistical methods for psychology, Cengage Learning, 2012.

- [13] R.H. Jones and W.M. Brelsford, Time series with periodic structure, Biometrika 54 (1967), pp. 403–408.

- [14] R. Lund and I. Basawa, Recursive prediction and likelihood evaluation for periodic ARMA models, Journal of Time Series Analysis 21 (2000), pp. 75–93.

- [15] S. Mallat, A wavelet tour of signal processing, Elsevier, 1999.

- [16] E.T. McGonigle, R. Killick, and M.A. Nunes, Trend locally stationary wavelet processes, Journal of Time Series Analysis 43 (2022), pp. 895–917.

- [17] H.A. Mohammadi, S. Ghofrani, and A. Nikseresht, Using empirical wavelet transform and high-order fuzzy cognitive maps for time series forecasting, Applied Soft Computing 135 (2023), p. 109990.

- [18] G.P. Nason, Wavelet methods in statistics with R, Springer, 2008.

- [19] R.T. Ogden, Essential wavelets for statistical applications and data analysis, Springer, 1997.

- [20] M. Pagano, On periodic and multiple autoregressions, The Annals of Statistics (1978), pp. 1310–1317.

- [21] E. Parzen and M. Pagano, An approach to modeling seasonally stationary time series, Journal of Econometrics 9 (1979), pp. 137–153.

- [22] D.B. Percival and A.T. Walden, Wavelet methods for time series analysis, Cambridge University Press, 2000.

- [23] Y.G. Tesfaye, P.L. Anderson, and M.M. Meerschaert, Asymptotic results for Fourier-PARMA time series, Journal of Time Series Analysis 32 (2011), pp. 157–174.

- [24] A. Vecchia, Maximum likelihood estimation for periodic autoregressive moving average models, Technometrics (1985), pp. 375–384.

- [25] A. Vecchia, Periodic autoregressive-moving average (PARMA) modeling with applications to water resources, JAWRA Journal of the American Water Resources Association 21 (1985), pp. 721–730.

- [26] B. Vidakovic, Statistical modeling by wavelets, John Wiley & Sons, 1999.

- [27] J. Wang, S. Shao, Y. Bai, J. Deng, and Y. Lin, Multiscale wavelet graph autoencoder for multivariate time-series anomaly detection, IEEE Transactions on Instrumentation and Measurement 72 (2022), pp. 1–11.

9 Appendices

In this section, we state the results used to analyse our models, which were proved under the following assumptions:

Let $\{\tilde X_t\}$ be a process defined by (1). Then:

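- B1. Finite fourth moment: $E\delta_t^4 < \infty$.

- B2. The model admits a causal representation

  $$X_t = \sum_{j=0}^{\infty}\psi_t(j)\varepsilon_{t-j},$$

  where $\psi_t(0) = 1$ and $\sum_{j=0}^{\infty}|\psi_t(j)| < \infty$ for all $t$. Note that $\psi_t(j) = \psi_{t+kS}(j)$ for all $j$. Also, $\varepsilon_t = \sigma_t\delta_t$ and $\sigma_t = \sigma_{t+kS}$, where $\{\delta_t\}$ is i.i.d.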

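- B3. The model satisfies an invertibility condition

  $$\varepsilon_t = \sum_{j=0}^{\infty}\pi_t(j)X_{t-j},$$

  where $\pi_t(0) = 1$ and $\sum_{j=0}^{\infty}|\pi_t(j)| < \infty$ for all $t$. Again, $\pi_t(j) = \pi_{t+kS}(j)$ for all $j$.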

-

B4.

The spectral density matrix of the equivalent vector ARMA process given by,

(40) where , and is the matrix with entry and for some we have,

for all in .

- B5. The number of iterations of the innovations algorithm satisfies , and as and .

- B6. The number of iterations of the innovations algorithm satisfies , and as and .

Theorem A1 (Innovation Algorithm for Periodically Stationary Processes, see [3]).

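If $\{X_t\}$ has zero mean and finite second moments, and the covariance matrix of $(X_i, \ldots, X_{i+k-1})'$ is nonsingular for each $k \ge 1$, then the one-step predictors $\hat X^{(i)}_{i+k}$ and their mean-square errors $v_{k,i}$ are given by

$$\hat X^{(i)}_{i} = 0, \qquad v_{0,i} = \gamma_i(0), \qquad \hat X^{(i)}_{i+k} = \sum_{j=1}^{k}\theta^{(i)}_{k,j}\left(X_{i+k-j} - \hat X^{(i)}_{i+k-j}\right),$$

and, for $k \ge 1$,

$$\theta^{(i)}_{k,k-\ell} = \left(v_{\ell,i}\right)^{-1}\left[\gamma_{i+\ell}(k-\ell) - \sum_{j=0}^{\ell-1}\theta^{(i)}_{\ell,\ell-j}\,\theta^{(i)}_{k,k-j}\,v_{j,i}\right], \qquad \ell = 0, 1, \ldots, k-1, \qquad (41)$$

$$v_{k,i} = \gamma_{i+k}(0) - \sum_{j=0}^{k-1}\left(\theta^{(i)}_{k,k-j}\right)^2 v_{j,i}, \qquad (42)$$

where (41) is solved recursively in the order $v_{0,i}$; $\theta^{(i)}_{1,1}$, $v_{1,i}$; $\theta^{(i)}_{2,2}$, $\theta^{(i)}_{2,1}$, $v_{2,i}$; $\theta^{(i)}_{3,3}$, $\theta^{(i)}_{3,2}$, $\theta^{(i)}_{3,1}$, $v_{3,i}$; and so on.

The estimates $\hat\theta^{(i)}_{k,j}$ and $\hat v_{k,i}$ of $\theta^{(i)}_{k,j}$ and $v_{k,i}$, respectively, are obtained by substituting the sample autocovariances (3).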
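The recursions (41) and (42) translate directly into code. The sketch below takes a periodic autocovariance function `gamma(t, h)` $= \mathrm{Cov}(X_t, X_{t+h})$. In practice the sample autocovariances (3) would be plugged in. Following Theorems A2 and A3, $\psi_i(j)$ and $\sigma_i^2$ are then estimated by starting the recursion at season $\langle i-k\rangle$ and reading off `theta[k][j - 1]` and `v[k]`. The function name `periodic_innovations` is hypothetical, and this is a plain, unoptimised rendering of the recursion.

```python
import numpy as np

def periodic_innovations(gamma, S, i, k):
    """Innovations algorithm (41)-(42) for a periodically stationary series started at season i.

    gamma(t, h) must return Cov(X_t, X_{t+h}) for season t in 0..S-1 and lag h >= 0.
    Returns (theta, v) with theta[n][j - 1] = theta_{n,j}^{(i)} for 1 <= j <= n <= k
    and v[n] = v_{n,i}.
    """
    v = np.zeros(k + 1)
    theta = [np.zeros(n) for n in range(k + 1)]
    v[0] = gamma(i % S, 0)
    for n in range(1, k + 1):
        for m in range(n):                            # (41): theta_{n, n-m}, m = 0, ..., n-1
            s = sum(theta[m][m - l - 1] * theta[n][n - l - 1] * v[l] for l in range(m))
            theta[n][n - m - 1] = (gamma((i + m) % S, n - m) - s) / v[m]
        # (42): mean-square error of the one-step predictor of X_{i+n}
        v[n] = gamma((i + n) % S, 0) - sum(theta[n][n - m - 1] ** 2 * v[m] for m in range(n))
    return theta, v

# Check on a stationary MA(1) (S = 1) with coefficient 0.5 and unit noise variance:
# gamma(0) = 1.25, gamma(1) = 0.5, gamma(h) = 0 for h >= 2.
ma1 = lambda t, h: {0: 1.25, 1: 0.5}.get(h, 0.0)
theta, v = periodic_innovations(ma1, S=1, i=0, k=40)
print(theta[40][0], v[40])      # approximately 0.5 and 1.0
```

For the MA(1) check, $\theta_{k,1}$ converges to the moving average coefficient and $v_k$ converges to the noise variance as $k$ grows. This mirrors the consistency results in Theorems A2 and A3.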

Theorem A2 (see [3]).

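Let $\{\tilde X_t\}$ be a process as defined in (1). Under assumptions B1-B5, for all $i$ and $j$, as $N \to \infty$,

$$\hat\theta^{(\langle i-k\rangle)}_{k,j} \xrightarrow{P} \psi_i(j). \qquad (43)$$

Here $\langle t\rangle = t - S\lfloor t/S\rfloor$, where $\lfloor\cdot\rfloor$ is the greatest integer function.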

Theorem A3 (see [3]).

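Let $\{\tilde X_t\}$ be a process as defined in (1). Under assumptions B1-B5, for all $i$, as $N \to \infty$,

$$\hat v_{k,\langle i-k\rangle} \xrightarrow{P} \sigma_i^2. \qquad (44)$$

Here $\langle t\rangle = t - S\lfloor t/S\rfloor$, where $\lfloor\cdot\rfloor$ is the greatest integer function.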

Theorem A6 (see [4]).

Theorem A7 (see [4]).

Let $\{\tilde X_t\}$ be a process as defined in (1). Under assumptions B1-B5, and if

| (47) |

we have,

| (48) |

where is given by,

and is given by,

with and .

Theorem A8 (see [23]).

Theorem A9 (see [23]).

Theorem A10 (see [23]).

Theorem A11 (see [4]).

For a given vector , the periodic function has the Fourier representation

| (58) |

where and are Fourier coefficients and

Let .

| (59) |

where and are respectively given by,

| (60) |

and

| (61) |

The th element of the is given by,

| (62) |

Theorem A12 (see [8]).

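If $\mathbf X_n$ is a random vector satisfying

$$\mathbf X_n \sim AN\left(\boldsymbol\mu_n, c_n^2\Sigma\right), \qquad (63)$$

where $\Sigma$ is a symmetric non-negative definite matrix and $c_n \to 0$, and $B$ is any non-zero matrix such that the matrices $B\Sigma B'$ have no zero diagonal elements, then

$$B\mathbf X_n \sim AN\left(B\boldsymbol\mu_n, c_n^2 B\Sigma B'\right). \qquad (64)$$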