Weighted model calibration with spatial conditional information

Abstract

Cost functions such as mean square error are often used in environmental model calibration. These treat observations as independent and equally important even though model residuals exhibit spatial dependence and additional observations near existing points do not provide as much information on the system as those elsewhere. To address this issue, we develop a method to derive calibration weights based on spatial conditional information. Using simulation experiments with Gaussian processes and the Tephra2 volcanic tephra dispersion model, we show that the additional accuracy and precision from weighted inference increases with the degree of observation clustering and spatial dependence present. To demonstrate real-world relevance, the methods are applied to tephra load observations from the 2014 eruption of the Kelud volcano in Indonesia.

keywords:

Model calibration; cost functions; weights; conditional information; volcanic tephra.1 Introduction

Cost functions and goodness-of-fit metrics quantify the level of agreement between observations and modelled quantities. These are commonly used in the environmental sciences to calibrate and evaluate models. In the context of urban cooling, for example, Zawadzka et al. (2021) calibrated the InVEST model by maximising the coefficient of determination (R2) between modelled and observed land surface temperatures. To model streamflow and sediment yields, Wang et al. (2014) calibrated the Agricultural Policy Environmental eXtender (APEX) model using an objective function combining the Nash-Sutcliffe model efficiency coefficient (NSE) and percent bias.

Despite the wide variety of cost functions and their ubiquitous use in environmental modelling, majority share a much overlooked but non-trivial assumption: the statistical independence of observed data. This means that after accounting for a deterministic trend via a process-based model or secondary variables, the remaining model residuals are seen as independent samples from some common error distribution. This assumption is implicit in the expression of the cost functions as a sum of error contributions from individual observations. Rabonza et al. (2022) show that common cost functions such as mean square error (MSE), chi square error and mean square logarithmic error (MSLE) are proportional to the negative log-likelihoods of independent Gaussian distributions. In the same way, mean absolute error and mean absolute percentage error are related to independent Laplace distributions.

Counter to this independence assumption, correlation is often observed in model residuals. In line with Tobler’s first law of geography (Tobler, 1970), model residuals from observations near to each other tend to be more similar than those further away. While proximity can be defined via time, space or any other measurement characteristic, we focus on proximity in space. Spatial correlation can arise due to an inherent property of the variable (for example, in physical dispersal and growth), external spatially structured environmental factors or the study design (Haining, 2001; Kissling & Carl, 2008; Gaspard et al., 2019). Spatial correlation in model residuals indicates that the spatial characteristics in the data are not well explained by the fitted model and is a sign of model inadequacy (see for example, Valcu & Kempenaers (2010)).

When spatial correlation is not accounted for in model calibration (as is often the case), there are implications on the validity of probabilistic results and the statistical tests which are used to select and/or evaluate the model. Random sampling in the presence of dependence leads to a subtle form of subsampling since observations within a certain distance tend to be similar and we are unlikely to sample the full range of possible values (Dale & Fortin, 2014). By erroneously assuming independence and our ability to cover the whole sample space, we assume that we have more information about the population than we actually do. This inflates our effective sample size for the given dataset, leading to underestimated standard errors of our derived estimates, inflated test statistics for hypothesis tests and increased chances of Type I error, i.e. false positives. Hence, failure to account for spatial correlation within a dataset can lead to suboptimal variable selection and model misspecification (Bahn et al., 2006; Dormann, 2007).

The effect of spatial correlation on variable selection and parameter estimation has been seen in the ecological literature. Through the reanalysis of ecological datasets, Dormann (2007) observed an average of 25% shifts in the estimated regression coefficients when they move from a non-spatial to a spatial model. However, this does not mean that regression coefficients from non-spatial models are biased. While neglecting spatial correlation leads to underestimated standard errors of our estimates for a given dataset, repeating the estimation over multiple datasets can give estimates which on average are equal to the true coefficient value. Beale et al. (2007) illustrate this for the case where the true model structure is an additive spatial error model (i.e. a linear model plus spatial error). Mathematically, it can be shown that both ordinary least squares and spatial generalised least squares (GLS) give unbiased regression coefficients. Instead of unbiasedness, the advantage of modelling spatially via GLS was the higher precision of parameter estimates in the presence of spatial correlation. This was observed through simulation experiments and is dependent on the type of spatial correlation present as well as the assumed spatial model (Kissling & Carl, 2008).

Many spatial models have been proposed to explicitly model spatial dependence. Examples include simultaneous autoregressive (SAR) models which encode spatial dependence through lags of the response and/or errors (Kühn, 2007), spatial eigenvector filtering (Kim & Shin, 2016), autocovariate models (Dormann, 2007) and generalised least squares (GLS) or spatial generalised linear mixed models (GLMM), where space treated as continuous instead of discrete. These models, however, do not share the same advantages of process-based models in terms of being directly related to the physics of the environmental phenomena.

In this paper, we propose a weighted calibration approach using spatial conditional information to account for spatial dependence in process-based models. Accounting for spatial correlation has been shown to be important for both model prediction and validation. For example, Rabonza et al. (2022) harnessed residual spatial correlation to generate more localised volcanic tephra estimates in line with observations. Unlike those obtained from the fitted process-based model which generally show long-range trends, these are more useful for damage assessments. In the context of model validation, multiple studies show that predictive performance can be overinflated when we do not accounting for spatial dependence Karasiak et al. (2022); Kattenborn et al. (2022); Ploton et al. (2020). By developing an approach to account for spatial correlation in model calibration, we contribute to the consideration of spatial correlation in every modelling step.

In Section 2, we present the weighted calibration approach. This involves weighted cost functions and the derivation of the calibration weights using spatial conditional information and variograms. In Section 3, we conduct simulation experiments using Gaussian processes to generate spatial dependence. Similar to Beale et al. (2007), we examine the benefits of accounting for spatial dependence through weighting in terms of bias and precision of parameter estimates. While Beale et al. (2007) focused on raster data and estimated the slope of a linear model in the presence of spatial correlation, we focus on point observations which are more typical of field surveys and estimate the mean of the Gaussian process. This is because process-based models will be used to determine deterministic long-range trends in place of the covariates in a linear model. By comparing estimates from weighted and unweighted inference as well as from a full spatial likelihood optimisation, we investigate the importance of weighting in the presence of observation clustering as well as different levels of spatial dependence. With these insights, we apply our methods to the calibration of a process-based model, the Tephra2 model for volcanic tephra dispersion in Section 4. Since the calibration weights depend on the fitted model, this involves iteratively reweighting the observations during the Tephra2 calibration process. We show that weighted calibration leads to significantly different parameter estimates and modelled tephra load surfaces for the 2014 eruption of the Kelud volcano. Through simulation experiments, we also show that the benefits of weighting in terms of bias and precision persist despite the strong interactions and correlations between the Tephra2 model parameters. In Section LABEL:sec:discussion, we discuss our results and their implications for more general environmental modelling. Finally, we point towards future directions for research.

2 Weighted model calibration

2.1 Weighted cost functions

Cost functions which are often used for model calibration can be expressed as:

| (1) |

where is the vector of observations and denotes their corresponding model estimates. The function describes the way the individual model residuals (the difference between the modelled and observed values) contribute to the cost function. In this formulation, since the cost function is a simple sum of these contributions from the observations, the observations are thought to provide equal and independent information. This assumption is implicit for mean square error (MSE) where and mean absolute error where .

In the case of MSE, the cost function is directly proportional to the negative log-likelihood of independent Gaussian random variables (less the scalar constant ):

| (2) |

Hence, minimising MSE is akin to maximising the likelihood. Here, the random variables are for some standard deviation .

Instead of independent Gaussian likelihoods, a full spatial likelihood can be used to express the spatial dependence in the model residuals and maximum likelihood estimation through for example, kriging can be used to estimate model parameters (Chiles & Delfiner, 1999). The likelihood, however, now takes the form of a multivariate Gaussian density involving the inverse and determinant of a spatial covariance matrix. For large datasets, exact analytical methods become infeasible, especially when we need to estimate multiple parameters as in the case of process-based models.

One way to approximate a dependent likelihood is to weight independent likelihoods according to some measure of their dependence (Varin et al., 2011). A weighted cost function can be expressed as:

| (3) |

where denotes a calibration weight related to spatial dependence. For example, weighted MSE can be defined as:

| (4) |

2.2 Quantifying spatial dependence with variograms

In kriging, the variogram is often used to describe spatial dependence. Let , the model residual corresponding to observation at site . The variogram is defined as half the expected square difference between model residuals at different locations:

| (5) |

There is a one-to-one relationship between the variogram and the spatial covariance:

| (6) |

Under the common assumptions of stationarity and isotropy, the variogram becomes a function of the distance between observations, where . An example is the exponential variogram:

| (7) |

where denotes the partial sill (the amount of spatially correlated variance), is a range parameter (controlling the rate of correlation decay with distance) and is the nugget variance (the amount of spatially independent variance or measurement error). Figure 1 shows two exponential variograms with the same nugget variance and partial sill but different range parameters. The larger range parameter leads to a slower decay in spatial correlation.

In our analyses, we will use Matérn variograms which commonly used in Spatial Statistics and which extend the exponential variogram towards greater flexibility in terms of the smoothness of the generated processes:

| (8) |

where denotes the Gamma function, is a smoothness parameter and is the modified Bessel function of the second kind.

2.3 Weights based on spatial conditional information

Next, we introduce the concept of spatial conditional information (CI). This is related to the full spatial likelihood which involves spatial covariance matrices related to variograms. Based on transformations of CIs which are chosen to obtain desirable properties, we develop a weighting scheme for model calibration. Inference results from the weighted calibration will be compared with unweighted calibration in later Sections.

2.3.1 Spatial conditional information

When there is spatial dependence, clustered observations provide repeated information on the process of interest because their values are likely to be very similar. To quantify the information gained or the amount of uncertainty reduced by sampling a process at an additional location, we can compute its CI. As defined in Equation (18) of Wellmann (2013), the CI of a process at its th location is defined as:

| (9) |

Here, denotes the random variable for the process at location ,

| (10) |

denotes the joint entropy for and is their joint distribution.

When we use the multivariate normal distribution to model as is done in a spatially dependent Gaussian process, we can calculate the joint entropy by(Ahmed & Gokhale, 1989):

| (11) |

where is the spatial covariance matrix. So, the CI of given is:

| (12) |

where denotes the covariance matrix for . Note that the CI is bounded from above by the univariate entropy and obtains this value when is independent of .

Since clustered observations provide repeated information, we propose to downweigh these in favour of observations which provide more information on the process at other locations. To this end, the observations’ CI values can be used to derive weights for weighted model calibration.

Note that since we are using a continuous Gaussian distribution to model the process , the conditional information values may be negative and hence difficult to normalise such that they sum up to the total number of observations as suggested by Jeong & Qiu (2017) in their weighted calibration scheme. In addition while our motivation for weighted calibration is similar, we use CI to quantify the amount of process uncertainty reduced by the additional observation, instead of computing the uncertainty of the observation given all the other data points as done by Jeong & Qiu (2017). This is because while the Jacobian they use to compute weights may be tractable in their context of systems biology, it can be difficult to calculate for complex process-based models.

2.3.2 Transforming the conditional information

Rather than using trying to normalise the CI for weights, we can transform the CI values. We propose to transform the CI so that the resulting weights satisfy these conditions: they are equal to for independent points and for observations which lie in the same location as other existing points. The transformation is performed in three steps:

-

Step 1

(13) -

Step 2

(14) -

Step 3

(15)

Here, denotes the ratio of the partial sill of the variogram to the nugget. From Step 1, we note that when th location is far away and can be seen as independent of , and its weight Step 2 converts to to account for the dependence of the former term on and . This also enables us to obtain the desired value of when the observations coincide at the same location. In this scenario, the spatial covariance matrix takes the form of:

| (16) |

where is the nugget variance. Using 2. on p.203 of of Harville (1997) with and , we have:

| (17) | ||||

as required.

For the multivariate normal distribution, has the interpretation of being the proportion of variance reduced by kriging with :

| (18) |

If we write

| (19) |

using (E.1) on p.204 of Harville (1997), we have:

| (20) |

where denotes the th entry of and denotes the (simple) kriging variance when we use to predict (Chiles & Delfiner, 1999).

So:

| (21) |

ie. the proportion of variance reduced by kriging with . Notice that when is independent of , because the kriging variance is equal to the sill.

To use the normalised CI or the weights defined in (15) for model calibration, we can proceed as follows:

-

1.

Assign equal weight to all data points.

-

2.

Optimise the parameters using an initial unweighted cost function.

-

3.

Check for spatial dependence in the model residuals and estimate the variogram if present.

-

4.

Compute the weights for each data point via (15).

-

5.

Use the weights in the next round of model calibration via the weighted cost functions.

If necessary, we can iterate the calibration and computation of weights until the weights converge as suggested by Jeong & Qiu (2017) in the context of weighted calibration for systems biology. However, in majority of the cases we analysed, the difference between weights computed in the first and second iterations were very small and no further iteration was required.

3 Simulation experiments using Gaussian processes

To test the effect of our proposed weighting scheme, we apply it to simulated datasets. Specifically, we simulate values from Gaussian processes to generate spatial dependence. To imitate the modelling framework for the tephra case study, the means of the processes are set to zero because deterministic long-range trends will be captured by the process-based model instead of a non-zero mean and/or regression term. In addition, we simulate over a spatial domain of to mimic 50 km by 50 km area covered by our tephra load dataset.

By comparing estimates of the mean obtained from minimising weighted and unweighted MSE for different simulation settings, we can examine the properties of weighted calibration. independent datasets are generated to evaluate each simulation setting and make comparisons. In their comparison of ordinary least squares (OLS) and generalised least squares (GLS) regression where spatial correlation was explicitly modelled, Beale et al. (2007) simulated spatially correlated data over a grid and showed that while OLS and GLS both gave an unbiased results for the slope of a linear model, GLS provided higher precision: the estimates exhibited a smaller spread about the true value. We are expecting to see similar results for our weighted calibration. In addition to examining how the benefit of weighting changes with the level of spatial dependence present in the data, we explore different sampling schemes to generate point observations which are more in line with what one would obtain in scientific field surveys.

3.1 Effect of sampling schemes

We consider both random spatial sampling and clustered sampling. In line with our tephra data, our base setting involves 80 observations for each simulation. Figure 2 shows the four sampling schemes we consider: 80 randomly chosen locations, 40 randomly chosen locations, 80 locations with relative positions fixed to be the same as that in the tephra load data from the 2014 Kelud eruption and 80 locations exhibiting higher clustering than our case study data. To generate the latter, we used k-means clustering to divide the original locations into five clusters (five being the number chosen by the elbow method) and halved the distance of each observation to its associated cluster centre.

To generate Gaussian process values with spatial dependence at the chosen locations, we use a Matérn variogram with nugget , sill and range parameter . These relative values are similar to what we obtain for the case study in Section 4.1. Note that the smoothness parameter is set to which is a common choice which allows for slightly smoother surfaces than an exponential and the default value in the R package R-INLA (R-INLA, 2022; Rue et al., 2009). We generate sets of simulated values per sampling scheme and estimate the process mean for each by optimising the full spatial likelihood (via the ‘likfit’ function of the geoR package) as well as weighted and unweighted MSE.

Figure 3 shows the box plots of the process mean estimates from the 40 and 80 randomly sampled locations for the three calibration methods. With less observations, there is higher bias and spread in the estimates as seen in the larger differences between the bold line denoting the median values from the dashed line denoting the true mean of zero as well as the longer widths of the box plots. While the WMSE calibration has slightly lower bias in the case with 40 observations than the other two methods, its benefit is hard to ascertain with 80 observations. This is likely because, by random sampling, we have a good representation of the study region and process values, and this is even more so with more observations considered.

Holding the number of observations fixed at 80, we examine the effect of clustering our observations. Figure 4 shows the box plots of the process mean estimates from the 80 locations corresponding to those of the Kelud case study and after increasing the extent of clustering by halving the distance of the observations to their cluster centres. Under increased clustering, we see that the bias of the unweighted calibration increases while the estimates from the weighted calibration remain unbiased. The precision of all three methods decrease with increased clustering but based on the width of the boxes, unweighted calibration is affected the most. The same conclusions can be drawn when we compare the box plots corresponding to the highly clustered observations to that for 80 randomly selected locations in Figure 3.

3.2 Extent of spatial dependence

From the previous section, we saw that weighted calibration is important for clustered observations. In this section, we vary the spatial dependence of the Gaussian process to see its effect on the mean estimates. We do this by increasing the proportion of the nugget variance to the sill from to (hence increasing random noise and reducing spatial dependence from the ‘Mid’/base case) and increasing the spatial range parameter from to (hence increasing dependence). In Figure 5, the former case is represented by ‘Low’ and the latter by ‘High’. Generally, we see that when we increase the spatial dependence in the Gaussian process, the precision of the mean estimates decreases (the boxes are wider). Nevertheless, the estimates from the weighted calibration remain relatively unbiased under Mid-High spatial dependence unlike those from the unweighted calibration and exhibit higher precision.

4 Weighted calibration of Tephra2

In Section 3, we saw that for the simulated Gaussian processes, the benefits of weighted calibration over unweighted calibration in terms of lower bias and higher precision increase with observation clustering and spatial dependence. Now, we apply our methods to the calibration of the Tephra2 dispersion model with tephra load data collected in the aftermath of the 2014 Kelud eruption. We show that there are non-negligible differences between the estimated source parameters from weighted and unweighted calibration, and use simulation experiments to further illustrate the lower bias and higher precision obtained from weighted calibration with this process-based model.

4.1 Application: 2014 eruption of the Kelud volcano

We conduct weighted and unweighted calibration of the Tephra2 model using the tephra load data from the 2014 Kelud eruption. Note that calibration was not conducted using a full spatial likelihood unlike in the simulation experiements for the Gaussian process in Section 3 because of the infeasibility of this approach for large environmental datasets. The fitted variogram for the model residuals from the unweighted calibration is shown in Figure 6. The increase from a nugget value of to the sill of indicates that there is spatial correlation present since the expected difference in residuals increases with increasing distance between observations.

We use the fitted variogram to construct the spatial covariance matrix required to compute the CI weights and conduct the weighted calibration. The computed weights are shown on a map in Figure 7. We see that clustered observations are given lower weights. Table 1 shows the parameter estimates from the weighted and unweighted calibration. We see that the difference in estimates ranges from -71.587% for fall time threshold to +59.295% for the standard deviation in particle diameter. Hence, the effect of accounting for spatial dependence through weighting is non-negligible. As expected, the difference in total mass ejected is relatively low at +3.364% as it is known to be the parameter which is most independent of the rest and easiest to estimate (ref from Seb?).

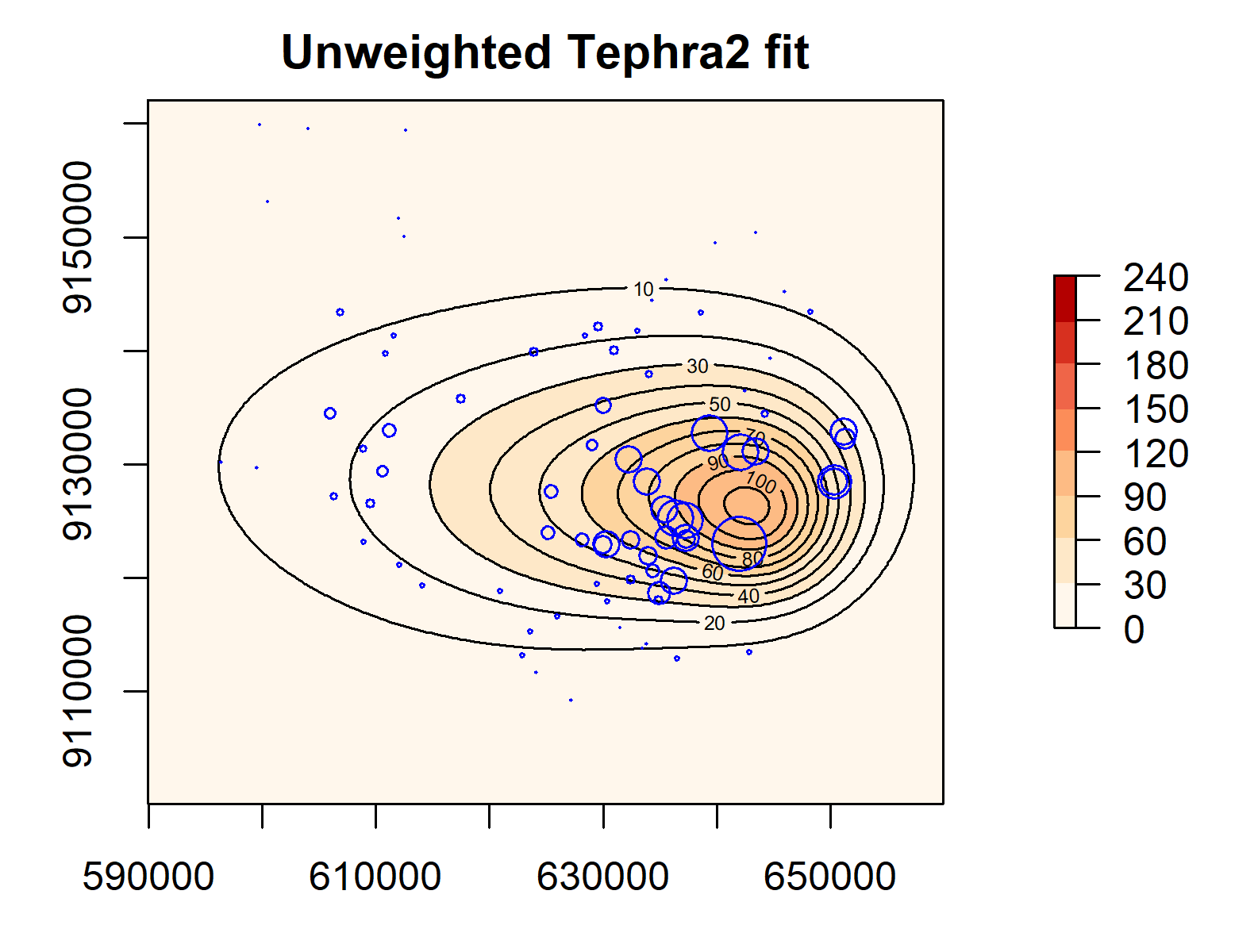

The difference in fitted parameters manifests in the estimated tephra load surface as illustrated in Figure 8. The contours from the weighted calibration, denoting areas of similar load values, are less elongated towards the left than those from the unweighted calibration. In addition, there is generally lower loads at distal and medial regions (between the 30-100 kg/m2 contours).

| Parameter | Range | Unweighted | Weighted | Difference |

| calibration | calibration | |||

| Max column height (km a.s.l.) | 15 - 23 | 22.718 | 17.966 | - 20.917% |

| Total mass ejected ( kg) | 0.1 - 10 | 6.61292 | 6.83535 | + 3.364% |

| Diffusion coefficient (m^2/s) | 0.0001 - 15000 | 10403 | 13823 | + 32.875% |

| Beta | 0.001 - 3.5 | 2.7677 | 1.25203 | - 54.763% |

| Fall time threshold (s) | 0.001 - 15000 | 11382 | 3234 | - 71.587% |

| Median grain size (phi) | -3 - 2.5 | 0.859525 | 0.877705 | + 2.115% |

| SD in particle diameter (phi) | 0.5 - 3 | 0.840127 | 1.33828 | + 59.295% |

4.2 Simulation experiments

With the estimated variogram parameters (nugget, partial sill and range), we can generate 100 sample tephra load datasets from the same distribution as the 2014 Kelud data by adding simulated residual data to the Tephra2 fitted values from weighted inference. This means that we treat the Tephra2 fit as a mean surface of tephra load and use the Gaussian process with the fitted variogram to generate spatially correlated residuals. Examples of two simulated tephra load datasets are shown in Figure 12 of the Appendix.

We perform weighted and unweighted calibration of the Tephra2 model for each of these 100 simulated datasets to examine the differences in the inferred parameters. Figure 9 shows the box plots of the parameter estimates from the two calibration methods and Table 2 summarises the results in terms of percentage median bias (the difference between the median estimate and the true parameter value expressed in terms of percentage of the latter) as well as percentage median absolute deviation, a measure of spread. From these, we see that weighted calibration clearly improves inference of certain parameters. The median bias for beta parameter and median grain size decreases from 23.86% to 11.94% and from 8.97% to 6.56% respectively. In addition, their median absolute deviation decreases from 61.93% to 55.15% and from 41.95% to 35.31% respectively. Apart from the diffusion coefficient (for which accuracy and precision seems unaffected) and the standard deviation in particle diameter (for which estimates slight increase in absolute bias), weighting based on spatial conditional information either improves accuracy or precision for the remaining Tephra2 parameters. The median absolute deviation of total mass ejected decreases from 30.61% to 25.37% while that for fall time threshold decreases from 83.23% to 65.60%. On the other hand, maximum column height sees a reduction in median bias from 7.46% to 5.75%. Note that the procedure of estimating parameters from data simulated from the fitted model can be used to construct parametric bootstrap confidence intervals for the parameters. In the cases where the median absolute deviation is lower for estimates from the weighted calibration, the corresponding confidence intervals will be narrower than those derived from unweighted calibration.

The unequal effects of weighting on the different parameters is likely due to their interactions in the physical model. Figure 10 shows that there are some high correlations between parameters estimates from both unweighted and weighted calibration. In particular, standard deviation in particle diameter (std_dev) is highly correlated to median grain size (median_size) and total mass ejected (tot_mass) and the beta parameter (beta_param) is highly correlated with the maximum column height (max_column_ht).

Figure 11 shows the maximum absolute difference in weights between consecutive calibration iterations. Using a convergence threshold of 0.1 to determine if additional iterations are required, 90 out of the 100 datasets had weights which converged after one round of weighting. Only 6 out of the 100 datasets required two rounds of weighting for the weights to converge while 4 out of the 100 datasets required three rounds.

| Parameter | % Median-bias | % Median abs. deviation | ||

|---|---|---|---|---|

| Unweighted | Weighted | Unweighted | Weighted | |

| Max column height | 7.46% | 5.75% | 22.95% | 22.47% |

| Total mass ejected | 11.38% | 10.80% | 30.61% | 25.47% |

| Diffusion coefficient | -2.93% | -2.68% | 16.01% | 15.43% |

| Beta | 23.86% | 11.94% | 61.93% | 55.14% |

| Fall time threshold | 29.11% | 28.39% | 83.23% | 65.60% |

| Median grain size | 8.97% | 6.56% | 41.95% | 35.31% |

| SD in particle diameter | 2.57% | -6.52% | 48.84% | 46.52% |

REFERENCES

- (1)

- Ahmed & Gokhale (1989) Ahmed, N. & Gokhale, D. (1989), ‘Entropy expressions and their estimators for multivariate distributions’, IEEE Transactions on Information Theory 35(3), 688–692.

- Bahn et al. (2006) Bahn, V., J. O’Connor, R. & B. Krohn, W. (2006), ‘Importance of spatial autocorrelation in modeling bird distributions at a continental scale’, Ecography 29(6), 835–844.

- Beale et al. (2007) Beale, C. M., Lennon, J. J., Elston, D. A., Brewer, M. J. & Yearsley, J. M. (2007), ‘Red herrings remain in geographical ecology: a reply to Hawkins et al.(2007)’, Ecography 30(6), 845–847.

- Chiles & Delfiner (1999) Chiles, J.-P. & Delfiner, P. (1999), Geostatistics: modeling spatial uncertainty, John Wiley & Sons, New York.

- Dale & Fortin (2014) Dale, M. R. & Fortin, M.-J. (2014), Spatial analysis: a guide for ecologists, Cambridge University Press.

- Dormann (2007) Dormann, C. F. (2007), ‘Effects of incorporating spatial autocorrelation into the analysis of species distribution data’, Global ecology and biogeography 16(2), 129–138.

- Gaspard et al. (2019) Gaspard, G., Kim, D. & Chun, Y. (2019), ‘Residual spatial autocorrelation in macroecological and biogeographical modeling: a review’, Journal of Ecology and Environment 43(1), 1–11.

-

Haining (2001)

Haining, R. (2001), Spatial sampling, in N. J. Smelser & P. B. Baltes, eds, ‘International Encyclopedia of the Social & Behavioral Sciences’, Pergamon, Oxford, pp. 14822–14827.

https://www.sciencedirect.com/science/article/pii/B0080430767025109 - Harville (1997) Harville, D. (1997), Matrix Algebra From a Statistician’s Perspective, Springer-Verlag, New York.

- Jeong (2019) Jeong, J. (2019), Experimental design and mathematical modeling in ODE-based systems biology, PhD thesis, Georgia Institute of Technology.

- Jeong & Qiu (2017) Jeong, J. & Qiu, P. (2017), The relative importance of data points in systems biology and parameter estimation, in ‘2017 IEEE International Conference on Bioinformatics and Biomedicine (BIBM)’, IEEE, pp. 367–373.

- Karasiak et al. (2022) Karasiak, N., Dejoux, J.-F., Monteil, C. & Sheeren, D. (2022), ‘Spatial dependence between training and test sets: another pitfall of classification accuracy assessment in remote sensing’, Machine Learning 111(7), 2715–2740.

- Kattenborn et al. (2022) Kattenborn, T., Schiefer, F., Frey, J., Feilhauer, H., Mahecha, M. D. & Dormann, C. F. (2022), ‘Spatially autocorrelated training and validation samples inflate performance assessment of convolutional neural networks’, ISPRS Open Journal of Photogrammetry and Remote Sensing 5, 100018.

- Kim & Shin (2016) Kim, D. & Shin, Y. H. (2016), ‘Spatial autocorrelation potentially indicates the degree of changes in the predictive power of environmental factors for plant diversity’, Ecological indicators 60, 1130–1141.

- Kissling & Carl (2008) Kissling, W. D. & Carl, G. (2008), ‘Spatial autocorrelation and the selection of simultaneous autoregressive models’, Global Ecology and Biogeography 17(1), 59–71.

- Kühn (2007) Kühn, I. (2007), ‘Incorporating spatial autocorrelation may invert observed patterns’, Diversity and Distributions 13(1), 66–69.

- Ploton et al. (2020) Ploton, P., Mortier, F., Réjou-Méchain, M., Barbier, N., Picard, N., Rossi, V., Dormann, C., Cornu, G., Viennois, G., Bayol, N. et al. (2020), ‘Spatial validation reveals poor predictive performance of large-scale ecological mapping models’, Nature communications 11(1), 1–11.

- R-INLA (2022) R-INLA (2022), ‘R-inla project’, https://www.r-inla.org. Accessed:2022-11-24.

- Rabonza et al. (2022) Rabonza, M., Nguyen, M., Biasse, S., Jenkins, S., Taisne, B. & Lallemant, D. (2022), ‘Inversion and forward estimation with process-based models: an investigation into cost functions, uncertainty-based weights and model-data fusion’. Under review.

- Rue et al. (2009) Rue, H., Martino, S. & Chopin, N. (2009), ‘Approximate Bayesian inference for latent Gaussian models by using integrated nested Laplace approximations’, Journal of the royal statistical society: Series b (statistical methodology) 71(2), 319–392.

- Tobler (1970) Tobler, W. R. (1970), ‘A computer movie simulating urban growth in the Detroit region’, Economic geography 46(sup1), 234–240.

- Valcu & Kempenaers (2010) Valcu, M. & Kempenaers, B. (2010), ‘Spatial autocorrelation: an overlooked concept in behavioral ecology’, Behavioral Ecology 21(5), 902–905.

- Varin et al. (2011) Varin, C., Reid, N. & Firth, D. (2011), ‘An overview of composite likelihood methods’, Statistica Sinica pp. 5–42.

- Wang et al. (2014) Wang, X., Yen, H., Liu, Q. & Liu, J. (2014), ‘An auto-calibration tool for the Agricultural Policy Environmental eXtender (APEX) model’, Transactions of the ASABE 57(4), 1087–1098.

- Wellmann (2013) Wellmann, J. (2013), ‘Information theory for correlation analysis and estimation of uncertainty reduction in maps and models’, Entropy 15(4), 1464–1485.

- Zawadzka et al. (2021) Zawadzka, J., Harris, J. A. & Corstanje, R. (2021), ‘Assessment of heat mitigation capacity of urban greenspaces with the use of InVEST urban cooling model, verified with day-time land surface temperature data’, Landscape and Urban Planning 214, 104163.

Appendix

Weighted calibration in systems biology

In the context of system biology, a similar problem is faced where limited experimental data is used to fit complex models and not all data points provide equal amounts of information on the system. To address this, Jeong & Qiu (2017) propose a calibration approach, where more informative data points were given higher weights. In their case, these were data points taken during the dynamic phase of the experiment rather than those taken during the steady state.

In their paper, Jeong & Qiu (2017) consider the weighted sum of squares as a cost function:

| (22) |

where represents the model parameters, the observation, the corresponding prediction and its allocated weight. Through a second-order Taylor expansion of centered around the optimal parameters and some simplifications, parameter uncertainty can be expressed in terms of the Fisher information matrix. In a similar way, the uncertainty of estimating one set of data points using another set of data points can be expressed in terms of their Fisher information matrices.

The iterative algorithm is as follows:

-

1.

Assign equal weight to all data points and use a random initial parameter setting.

-

2.

Optimise the parameters using an initial unweighted cost function.

-

3.

Compute Jacobian at the optimised parameter values.

-

4.

Compute the uncertainty of data point given all the other data points as:

(23) where is the number of parameters, where is the row of and is computed using all the other rows of . The term for which we compute trace approximates the derivatives of the other data points with respect to (Jeong 2019, p.42).

-

5.

Compute the weights by normalising the uncertainty values so that they sum up to the total number of data points .

-

6.

Use the weights in the next round of model calibration via the weighted cost function and use the previously estimated parameters as initial parameter settings.

-

7.

Iterate the calibration and and computing of weights until the weights converge.

Examples of simulated tephra data